Abstract

In this paper, a parameter estimation method for multi-component chirp signals in white Gaussian noise with unknown model order is proposed, based on the modified discrete chirp Fourier transform (MDCFT) and the population Monte Carlo (PMC) methodology. By exploiting the fact that the linear parameters of the Bayesian model can be integrated out, the method works with the posterior distribution of the nonlinear parameters only. The MDCFT is used to calculate the chirpogram of the observed data, in which clear peaks can be detected. The importance function (IF) is constructed from these peaks, and the PMC algorithm is employed to evaluate the posterior distribution. The proposed method can not only use the selected IF to generate samples that fit the target function in the parameter region of interest, but also use the samples and importance weights to update the IF adaptively. Simulation results indicate that the proposed method can realize joint Bayesian model selection and parameter estimation of multi-component chirp signals. Compared with two existing methods based on Monte Carlo methodology, the proposed method exhibits improved performance.

1 Introduction

Chirp signals are widely used in radar and communication applications [1, 2]. For example, the Doppler frequency of a moving object in a radar system is approximately proportional to the speed of the object, and the echo of an object under uniformly accelerated motion can be regarded as a chirp signal. The status and characteristics of a moving object are therefore reflected by the chirp signal parameters. The frequency and chirp rate are needed to obtain the speed and acceleration of the moving object, and the amplitudes indicate its size and reflection characteristics. Hence, making proper use of the limited observed data to estimate the chirp signal parameters accurately is of great importance.

Numerous researchers have explored the parameter estimation problem of chirp signals from different aspects. Traditional methods include algorithms based on the principle of maximum likelihood estimation (MLE) [3, 4], the discrete polynomial-phase transform (DPT) [5, 6], time–frequency transforms [7, 8], and sparse signal reconstruction [9–11]. Most existing methods are based on the MLE principle, and their estimation accuracy can approach the Cramér–Rao lower bound (CRLB). However, the computational load of such methods is large, and they cannot handle multi-component chirp signals. The DPT-based methods use the instantaneous phase or instantaneous frequency to perform parameter estimation in a simple and efficient manner, but they require a high signal-to-noise ratio (SNR). The idea behind the time–frequency transform is to convert the detection and estimation problem of chirp signals into a line search problem in the time–frequency plane and then simplify it into a two-dimensional peak search problem through the Radon or Hough transform. However, this approach is limited by the computational load, the time–frequency resolution, and cross-term interference. Sparse reconstruction is based on the idea that signals can be decomposed into a linear expansion of chirplets, with matching pursuit (MP) used to identify the chirplets in the signals [11]. The obtained chirplets can be used directly to estimate the chirp signal parameters. However, this method also has several limitations. First, in the MP process, one chirp component can be decomposed into multiple chirplets. Second, a huge dictionary is required, whose computational load makes the method impractical.

Researchers have applied Bayesian inference to the parameter estimation problem of multi-component signals [12–15]. A numerical method is typically used to evaluate the joint posterior distribution of the unknown parameters. Some researchers [14, 15] have proposed a solution based on the Markov chain Monte Carlo (MCMC) method. This method uses the chirpogram generated from the discrete chirp Fourier transform (DCFT) to select initial parameters. The MCMC method, combined with the random walk Metropolis–Hastings algorithm and the Gibbs sampler, is then used to calculate the posterior distribution of the unknown parameters. However, this method needs a burn-in period, during which the samples must be discarded. Moreover, the MCMC method is difficult to implement in parallel, which significantly reduces its practicability. Researchers [16] have also combined importance sampling (IS) with MLE to deal with the parameter estimation problem of multi-component chirp signals. The estimation accuracy of this method can be close to the CRLB, but its computational load is huge, and its estimation precision is affected by the “picket fence” effect.

Several methods are based on adaptive importance sampling (AIS). AIS, also called population Monte Carlo (PMC) [17, 18], is an adaptive learning method that selects and adjusts the importance function (IF) according to the collected information. As a result, samples are drawn around the true value with higher probability and an approximation to the target distribution can be obtained. In [17], a fixed number of preselected transition kernels were used and each of these kernels was assigned different weights at different iterations. In [19], the performance of the PMC method was improved through Rao–Blackwellization. A combination of the Gibbs sampler and PMC was proposed in [20]. This method first uses local maximum peaks in the chirpogram to construct the IF and then generates samples and uses resampling to prevent sample degeneracy. Finally, the method iterates the above process and estimates the parameters and target function of multi-component chirp signals.

The performance of the AIS method is closely related to the selection of the IF. An inaccurate IF does not necessarily lead to the failure of the method but will significantly degrade performance. For chirp signals, the DCFT has good detection performance. However, the detected signals need to satisfy specific conditions [21]; otherwise, the detection performance of the DCFT degrades significantly. Thus, this paper adopts the modified discrete chirp Fourier transform (MDCFT) [22–24] to detect peaks and construct IFs. The PMC is then used to estimate the parameters of multi-component chirp signals. In each PMC iteration, on the one hand, samples are drawn from the IF constructed in the previous iteration; on the other hand, the IF is updated using the samples and their weights, and the updated IF is used to draw samples in the next iteration. The whole iteration process can be stopped at any time. This method can estimate not only the signal parameters but also the posterior distribution of the unknown parameters. In addition, when the model order is unknown, this method can realize joint Bayesian model selection and parameter estimation of multi-component chirp signals.

The remainder of this paper is organized as follows: Section 2 describes the mathematical model of multi-component chirp signals and the posterior distribution in the Bayesian framework. In Sect. 3, the idea and procedure of the proposed method are analyzed, and the implementation steps and performance analysis are discussed. Section 4 verifies the performance of the proposed method through simulation experiments. Finally, Sect. 5 concludes the paper.

2 Problem description and theoretical background

2.1 Signal model

Let \({\varvec{y}}=\left[ {y\left[ 0 \right] ,y\left[ 1 \right] ,\ldots ,y\left[ {N-1} \right] } \right] ^{\mathrm{T}}\) denote the observed vector of \(N\) data samples. \({\varvec{y}}\) is generated from one of the following \(K\) models:

where \(n=0,\ldots ,N-1,\; K\) denotes the model order, and \(W_{k} \left[ n \right] =f_k n+\frac{s_k }{2}\left( {n-\frac{N-1}{2}} \right) ^{2}\). \(f_{k}, s_{k}\), and \(A_{k}\), respectively, denote the center frequency, chirp rate, and complex amplitude of the \(k\)th chirp component. \(\varepsilon \left[ n \right] \) denotes the additive white Gaussian noise with zero mean and unknown variance \(\sigma _\varepsilon ^{2}\).

Equation (1) can be written in the following matrix form, \({\varvec{y}}={\varvec{D}}\varvec{\alpha }+\varvec{\varepsilon }\):

where \(\varvec{\varepsilon } =\left[ {\varepsilon \left[ 0 \right] ,\varepsilon \left[ 1 \right] ,\ldots ,\varepsilon \left[ {N-1} \right] } \right] ^{\mathrm{T}}\) is a noise vector; \(\varvec{\alpha } =\left[ {A_{1} ,A_{2} ,\ldots ,A_{K} } \right] ^{\mathrm{T}}\) is a complex amplitude vector; and the \(N\times K\) matrix \({\varvec{D}}\) is defined as follows:

where \(n=0,\ldots ,N-1,\; k=1,\ldots ,K\).

The parameter estimation problem of multi-component chirp signals can be described as follows: Assuming that the parameter set \(\varvec{{\varTheta }} \triangleq \left[ {\varvec{\alpha }^{\mathrm{T}}\!,{\varvec{f}}^{\mathrm{T}}\!,{\varvec{s}}^{\mathrm{T}}\!,\sigma _{\varepsilon }^{2}\!,K} \right] ^{\mathrm{T}}\) is unknown, the objective is to estimate the model order \(K\), the center frequencies \({\varvec{f}}=\left[ {f_{1} ,f_{2} ,\ldots ,f_{K} } \right] ^{\mathrm{T}}\), and the chirp rates \({\varvec{s}}=\left[ {s_{1} ,s_{2} ,\ldots ,s_{K} } \right] ^{\mathrm{T}}\) from the data \({\varvec{y}}\).
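
The matrix form above can be illustrated with a short simulation. This is a minimal sketch, not the authors' code: it assumes that each column of \({\varvec{D}}\) is \(\exp \left( {j2\pi W_k \left[ n \right] } \right) \) (the display equations are not reproduced here, so the \(2\pi \) factor is an assumption), and the function names `chirp_matrix` and `simulate` are hypothetical.

```python
import numpy as np

def chirp_matrix(f, s, N):
    """Build the N x K matrix D, ASSUMING D[n, k] = exp(j*2*pi*W_k[n])
    with W_k[n] = f_k*n + (s_k/2)*(n - (N-1)/2)**2 as defined in the text."""
    n = np.arange(N)[:, None]                      # column of time indices
    f = np.asarray(f, dtype=float)[None, :]        # row of center frequencies
    s = np.asarray(s, dtype=float)[None, :]        # row of chirp rates
    W = f * n + 0.5 * s * (n - (N - 1) / 2.0) ** 2
    return np.exp(2j * np.pi * W)

def simulate(f, s, amps, noise_var, N, rng):
    """Return y = D @ alpha + eps with complex white Gaussian noise whose
    real and imaginary parts each have variance noise_var / 2."""
    D = chirp_matrix(f, s, N)
    eps = np.sqrt(noise_var / 2.0) * (
        rng.standard_normal(N) + 1j * rng.standard_normal(N)
    )
    return D @ np.asarray(amps, dtype=complex) + eps

rng = np.random.default_rng(0)
D = chirp_matrix([0.302, 0.328], [0.001, 0.002], 50)
y = simulate([0.302, 0.328], [0.001, 0.002], [1.0, 1.0], 0.1, 50, rng)
```

The parameter values are taken from the example in Sect. 4.1; the noise variance 0.1 corresponds to an SNR of 10 dB for unit amplitude.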

2.2 Bayesian model and aims

In the Bayesian model, data and signal parameters can be regarded as random variables. According to Eq. (2), the likelihood of \({\varvec{y}}\) is assumed as:

Using Bayesian methodology \(p\left( {\varvec{{\varTheta }} |{\varvec{y}}} \right) ={p\left( {{\varvec{y}}|\varvec{{\varTheta }} } \right) p\left( \varvec{{\varTheta }} \right) }/ {p\left( {\varvec{y}} \right) }\), the posterior distribution of the unknown parameter set \(\varvec{{\varTheta }}\) can be expressed as follows:

The Bayesian inference has to assume the prior of the unknown parameters reasonably and construct the following form [12, 13, 18]:

The amplitude vector \(\varvec{\alpha }\), center frequency vector \({\varvec{f}}\), and the chirp rate vector \({\varvec{s}}\) determine the components of each chirp. The amplitude vector \(\varvec{\alpha }\) and the additive noise level \(\sigma _{\varepsilon }^{2}\) are closely related since they both determine the amplitude of the observed signal as well as the SNR. Thus, \(\varvec{\alpha }\) is closely associated with \({\varvec{f}},{\varvec{s}},\sigma _\varepsilon ^{2}\), and \(K\). The prior of \(\varvec{\alpha }\) can be expressed as \(p\left( {\varvec{\alpha } |{\varvec{f}},{\varvec{s}},\sigma _\varepsilon ^{2} ,K} \right) \). This paper regards the center frequency and chirp rate of each chirp component as a set of parameters to draw samples. Considering their close correlation, the prior of \({\varvec{f}}\) and \({\varvec{s}}\) is expressed as \(p\left( {{\varvec{f}},{\varvec{s}}|K} \right) \).

Referring to the selected parameter priors in [12, 13, 18], the prior of each parameter is assumed as

where \(\delta ^{2}\) denotes the expected SNR of the observed signals; \(\varvec{\alpha }\) follows a zero-mean maximum entropy Gaussian distribution; \(N\left( {{\varvec{x}};{\varvec{m}}_{{\varvec{x}}} ,\varvec{{\varSigma }}_{\varvec{x}} } \right) \) indicates that \({\varvec{x}}\) obeys the multivariate Gaussian distribution with mean \({\varvec{m}}_{{\varvec{x}}}\) and covariance matrix \(\varvec{{\varSigma }}_{{\varvec{x}}}\); and \(\hbox {IG}\left( {x;\alpha ,\beta } \right) \) denotes that \(x\) obeys the inverse Gamma distribution with hyper-parameters \(\left( {\alpha ,\beta } \right) \). The prior probability of each model is assumed to be equal, so that \(p\left( K \right) =1/K\). The normalized center frequencies satisfy \(0<f_1 <f_2 <\cdots <f_K <{1}\), and the normalized chirp rates satisfy \(0\le s_k \le 2\). Therefore, we can obtain \(p\left( {{\varvec{f}},{\varvec{s}}|K} \right) =\left( {1/2} \right) ^{K}\).

Based on this assumption, we can derive the posterior distribution of \(K,\; {\varvec{f}}\), and \({\varvec{s}}\) given \({\varvec{y}}\) as follows (please refer to “Appendix 1” for the detailed derivation):

The posterior distribution of Eq. (8) is highly nonlinear and therefore cannot be expressed in a closed form. Section 3 will describe the feasibility of approximation to the posterior using the proposed method.

2.3 DCFT and MDCFT

This subsection describes the DCFT and MDCFT and explains the advantage of the latter over the former.

The DCFT for discrete chirp signals with length \(N\) is defined as

where \(k\) and \(l\), respectively, represent the frequency and chirp rate; \(W_{N} =\exp \left( {-j2\pi /N} \right) \); \(x\left( n \right) \) can be a chirp of the form \(x\left( n \right) =\exp \left[ {\frac{j2\pi }{N}\left( {k_0 n+l_0 n^{2}} \right) } \right] =W_N^{-\left( {k_0 n+l_0 n^{2}} \right) } \); \(k_0 \) and \(l_0\) are both integers; and \(0\le k_{0} ,l_{0} \le N-1\).

Reference [21] introduces the basic properties of \(X_c \left( {k,l} \right) \). Because the DCFT is a linear transform, it does not suffer from the cross-term interference of the Wigner–Ville distribution and can therefore deal with multi-component chirp signals: the multiple peaks in the DCFT domain correspond to the individual target signals. However, this method requires the signals to satisfy specific conditions [21, 25]. For example, \(N\) should be a prime number, and the chirp rate should be an integer (that is, its value should lie on a lattice point of the search grid). Otherwise, the peak in the chirpogram calculated by the DCFT will be unclear, which may result in a sharp decline in detection performance.

To address this shortcoming, this paper employs the MDCFT to perform peak detection. The MDCFT is defined as

where \(x^{{\prime }}\left( n \right) =W_N^{-\left( {k_0 n+\left( {l_0 /N} \right) n^{2}} \right) } \).

Compared with the DCFT, the MDCFT reduces the search step of the chirp rate by a factor of \(N\), and its estimation range becomes \(k^{{\prime }}\in \left( {0,1,\ldots ,N-1} \right) \) and \(l^{{\prime }}\in \left( {0,1/N,\ldots ,\left( {N-1} \right) /N} \right) \), that is, \(k^{{\prime }}=k\) and \(l^{{\prime }}=l/N\). Therefore, the MDCFT increases the estimation accuracy of the chirp rate, resolves adjacent peaks with a smaller spacing, and weakens the “picket fence” effect. In addition, the MDCFT places no restriction on the signal length.

In the parameter estimation problem of multi-component chirp signals, the frequency and chirp rate do not necessarily lie on the lattice points of the search grid, and the signal length is not necessarily prime. Thus, the MDCFT is more suitable for transforming the signal into the DCFT domain. Considering that the mathematical model adopted in this paper includes the center frequency and chirp rate, Eq. (10) is redefined as
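
To make the peak-detection idea concrete, the following sketch computes a chirpogram on a user-chosen grid of center frequencies and chirp rates. It is an illustration under stated assumptions rather than the paper's Eq. (11): the kernel is assumed to be the conjugate of the signal model phase \(W_k \left[ n \right] \), the \(1/N\) normalization is arbitrary, and the function name `chirpogram` and the grid ranges are hypothetical.

```python
import numpy as np

def chirpogram(y, f_grid, s_grid):
    """Squared magnitude of the correlation of y with unit-amplitude chirps
    exp(j*2*pi*(f*n + (s/2)*(n - (N-1)/2)**2)) for every (f, s) on the grid.
    Returns an array of shape (len(f_grid), len(s_grid))."""
    y = np.asarray(y, dtype=complex)
    N = y.size
    n = np.arange(N)
    centre = (N - 1) / 2.0
    # exp(-j*2*pi*f*n) for every candidate frequency, shape (F, N)
    freq_kernel = np.exp(-2j * np.pi * np.outer(f_grid, n))
    # exp(-j*pi*s*(n-centre)^2) for every candidate chirp rate, shape (S, N)
    rate_kernel = np.exp(-1j * np.pi * np.outer(s_grid, (n - centre) ** 2))
    X = freq_kernel @ (rate_kernel * y).T          # shape (F, S)
    return np.abs(X) ** 2 / N

# Noise-free single chirp placed exactly on a grid point (illustrative values).
N = 50
f_grid = np.linspace(0.0, 1.0, 100, endpoint=False)
s_grid = np.linspace(0.0, 0.01, 11)
f0, s0 = f_grid[30], s_grid[2]
n = np.arange(N)
y = np.exp(2j * np.pi * (f0 * n + 0.5 * s0 * (n - (N - 1) / 2.0) ** 2))
C = chirpogram(y, f_grid, s_grid)
peak = np.unravel_index(np.argmax(C), C.shape)     # indices of the largest peak
```

In a multi-component setting one would retain several of the largest local maxima of `C` rather than only the global one.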

2.4 Generic PMC methodology

PMC is an adaptive learning methodology that concurrently uses multiple IFs and adaptively selects and adjusts them during the iterations according to their performance. As a result, samples occur around the true value with higher probability. In the initial stage of the PMC method, choosing IFs that explore the full parameter space helps to identify the parameter region of interest rapidly. In the follow-up stage, choosing IFs with better local characteristics contributes to a finer search of the region adjacent to the true parameters.

The general procedure of the PMC is as follows [17, 18, 26].

We assume that \(p\left( \varvec{\theta } \right) \) is the target function, whereas \(q^{\left( t \right) }\left( \varvec{\theta } \right) \) is the IF in the \(t\)th iteration. When samples cannot be directly drawn from \(p\left( \varvec{\theta } \right) \), the alternative is to draw samples \(\varvec{\theta }^{\left( {i,t} \right) }\) from \(q^{\left( t \right) }\left( \varvec{\theta } \right) \), where \(\varvec{\theta }^{\left( {i,t} \right) }\) denotes the \(i\)th sample in the \(t\)th iteration. The importance weight of the sample is \({\tilde{\omega }}^{\left( {i,t} \right) }={p\left( {\varvec{\theta }^{\left( {i,t} \right) }} \right) }\big /{q^{\left( t \right) }\left( {\varvec{\theta }^{\left( {i,t} \right) }} \right) }\). Therefore, the random measure \(\chi =\left\{ {\varvec{\theta }^{\left( {i,t} \right) },\omega ^{\left( {i,t} \right) }} \right\} _{i=1}^{R}\) formed by the samples and importance weights can approximate the target function, where \(\omega ^{\left( {i,t} \right) }\) represents the normalized weight corresponding to sample \(\varvec{\theta }^{\left( {i,t} \right) }\) for \(i=1,\ldots ,R\), and \(R\) denotes the total number of samples.
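
The weighting step can be illustrated with a one-dimensional toy problem. This is a generic self-normalized importance sampling sketch, not the chirp posterior: the target (a Gaussian with mean 1 and standard deviation 0.5, known only up to a constant) and the proposal (a zero-mean Gaussian with standard deviation 2) are assumptions chosen for illustration.

```python
import numpy as np

def log_target(x):
    # Unnormalised log-density of the toy target N(1, 0.5^2).
    return -0.5 * ((x - 1.0) / 0.5) ** 2

def log_proposal(x, scale=2.0):
    # Log-density of the proposal N(0, scale^2).
    return -0.5 * (x / scale) ** 2 - np.log(scale * np.sqrt(2.0 * np.pi))

rng = np.random.default_rng(1)
R = 20000
x = rng.normal(0.0, 2.0, size=R)            # samples drawn from the proposal

log_w = log_target(x) - log_proposal(x)     # unnormalised log-weights p/q
w = np.exp(log_w - log_w.max())             # subtract the max for stability
w /= w.sum()                                # normalised weights

post_mean = np.sum(w * x)                   # weighted estimate of the target mean
ess = 1.0 / np.sum(w ** 2)                  # effective sample size
```

Working with log-weights avoids overflow when the target is sharply peaked, which is typical for posteriors over frequency parameters.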

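In the initial stage of the method, \(D\) IFs \(g\left( {\varvec{\theta };\varvec{\xi }_{1} } \right) ,\ldots , g\left( {\varvec{\theta };\varvec{\xi }_{D} } \right) \) are selected. The overall IF constructed in the \(t\)th iteration is the mixture \(q^{\left( t \right) }\left( \varvec{\theta } \right) =\sum \nolimits _{d=1}^D {\alpha _d^{\left( t \right) } g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) } \), which can be expressed as follows: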

where \(d=1,\ldots ,D\) and \(\sum \nolimits _{d=1}^D {\alpha _d^{\left( t \right) } } =1\); \(q^{\left( t \right) }\left( \varvec{\theta } \right) \) is a mixture of \(D\) IFs; \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) \) and \(\alpha _d^{\left( t \right) } \), respectively, represent the \(d\)th IF and its candidate probability in the \(t\)th iteration, that is, \(\alpha _d^{\left( t \right) } \) can be interpreted as the frequency of using the \(d\)th IF for sampling; \(\varvec{\xi }_d^{\left( t \right) } \) represents the construction parameters of function \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) \); and \(d^{\left( {i,t} \right) }\in \left\{ {1,\ldots ,D} \right\} \) denotes the index of the IF used for sampling \(\varvec{\theta }^{\left( {i,t} \right) }\) and obeys the multinomial distribution \(M\left( {\alpha _1^{\left( t \right) } ,\ldots ,\alpha _D^{\left( t \right) } } \right) \).

To evaluate the performance of the \(d\)th IF \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) \), the sum of weights corresponding to all the samples generated from the IF is utilized. \(q^{\left( t \right) }\left( \varvec{\theta } \right) \) is determined through all IFs and candidate probability. Therefore, the sum of the weights of samples generated in the true parameter region is large. The formula is as follows:

where \(\Pi _{g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) } \varvec{\theta }^{\left( {i,t-1} \right) }\) is an indicator function taking the value of 1 when sample \(\varvec{\theta }^{\left( {i,t-1} \right) }\) is generated from IF \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) \) and 0 otherwise.

\(R\) samples are drawn from the overall IF in each iteration. These samples and their weights are used to approximate the distribution of the random variables. When the number of iterations is sufficiently large, the method can accurately estimate the target distribution.
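
The generic procedure above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the paper's implementation: the component IFs are fixed one-dimensional Gaussians, only the candidate probabilities \(\alpha _d\) are adapted, and each \(\alpha _d\) is set to the sum of the normalized weights of the samples generated from the \(d\)th IF, as described in the text. The function name `pmc_mixture_weights` and all numerical values are hypothetical.

```python
import numpy as np

def normal_pdf(x, mean, sd):
    return np.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

def pmc_mixture_weights(means, sd, target_pdf, R=2000, T=10, seed=0):
    """Adapt the mixture probabilities alpha of D fixed Gaussian IFs over T iterations."""
    rng = np.random.default_rng(seed)
    D = len(means)
    alpha = np.full(D, 1.0 / D)                    # alpha_d^(0) = 1/D
    for _ in range(T):
        d = rng.choice(D, size=R, p=alpha)         # IF index of each sample
        x = rng.normal(means[d], sd)               # sample from the selected IF
        q = sum(alpha[j] * normal_pdf(x, means[j], sd) for j in range(D))
        w = target_pdf(x) / q                      # importance weights p/q
        w /= w.sum()                               # normalised weights
        # New alpha_d: total normalised weight of the samples drawn from IF d.
        alpha = np.bincount(d, weights=w, minlength=D)
        alpha /= alpha.sum()                       # guard against rounding drift
    return alpha

means = np.array([-3.0, 0.0, 3.0])
alpha = pmc_mixture_weights(means, 1.0, lambda x: normal_pdf(x, 3.0, 1.0))
```

In this toy setting the probability mass moves toward the component centered at 3, which coincides with the target, illustrating how poorly placed IFs are gradually abandoned.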

3 Parameter estimation method based on the MDCFT and the PMC

3.1 Idea of the proposed method

Researchers [16, 27] have applied the PMC method to the parameter estimation of multiple sinusoids. Building on that work, this paper addresses the problem of joint model selection and parameter estimation of multi-component chirp signals. To make the samples generated from the IFs occur around the true value with higher probability, the IFs are carefully chosen through the MDCFT and adaptively updated through the PMC.

According to the characteristics of multi-component chirp signals, this paper adopts the MDCFT for peak detection in the DCFT domain. Compared with the conventional DCFT, the MDCFT has the following advantages [24, 25]: (1) it reduces the “picket fence” effect, (2) it places no limit on the signal length, and (3) it reduces the number of mirror peaks [14] or local peaks and thus decreases the difficulty of peak detection. The proposed method then uses the detected peaks to construct IFs and employs the PMC to realize joint model selection and parameter estimation. In each PMC iteration, on the one hand, samples are drawn from the IFs constructed in the previous iteration; on the other hand, the samples and weights are used to update the IFs that will be used in the next iteration. The whole iteration process can be stopped at any time. This method can estimate not only the parameters but also the posterior distribution of the unknown parameters given the observed data.

3.2 Proposed method

3.2.1 Construction of IFs

According to Eq. (11), the MDCFT is used to transform the data into the chirpogram for peak detection. The detected peaks are ranked in descending order of amplitude. The \(L\) spectral peaks with the largest amplitudes are retained, and the parameter space of the \(l\)th peak is assumed to have a bivariate normal distribution, denoted \(\varphi \left( {\varvec{\theta }_l } \right) \), where \(\varvec{\theta }_l \triangleq \left( {f_l ,s_l } \right) \). \(f_l\) and \(s_l\), respectively, represent the center frequency and chirp rate of the \(l\)th peak. The constructed bivariate normal distribution is expressed as

where \(\varvec{\mu }_l\) and \(\varvec{{\varSigma }}_l\), respectively, denote the mean and covariance of parameter \(\varvec{\theta }_l\), with \(\varvec{{\varSigma }}_l ={\left( {{\begin{array}{ll} {\sigma _{f_l }^2 }&{} {\rho _l \sigma _{f_l } \sigma _{s_l } } \\ {\rho _l \sigma _{f_l } \sigma _{s_l } }&{} {\sigma _{s_l }^2 } \\ \end{array} }} \right) }\); and \(\sigma _{f_l }^{2}, \sigma _{s_l }^2\), and \(\rho _l\), respectively, denote the variance of \(f_l\), the variance of \(s_l\) in the Gaussian distribution, and the correlation coefficient between the two variables.
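
As a small illustration of this construction, the covariance matrix \(\varvec{{\varSigma }}_l\) can be assembled from the two variances and the correlation coefficient and then used to draw candidate \(\left( {f_l ,s_l } \right) \) pairs. The variances 0.01 and 0.001 and the correlation 0.1 are the initialization values quoted in Sect. 4; the peak location `mu` and the helper name `peak_covariance` are hypothetical.

```python
import numpy as np

def peak_covariance(var_f, var_s, rho):
    """2 x 2 covariance of (f_l, s_l) with off-diagonal rho * sigma_f * sigma_s."""
    cov_fs = rho * np.sqrt(var_f * var_s)
    return np.array([[var_f, cov_fs],
                     [cov_fs, var_s]])

Sigma = peak_covariance(0.01, 0.001, 0.1)
mu = np.array([0.302, 0.001])          # hypothetical detected peak (f_l, s_l)

rng = np.random.default_rng(0)
samples = rng.multivariate_normal(mu, Sigma, size=1000)   # rows are (f, s) draws
```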

If the parameters of the largest detected peaks were taken directly as the chirp signal parameters, a large quantization error could result. In addition, the accuracy of parameter estimation would be limited by the grid, and the model order could not be estimated. Thus, after obtaining the peaks through the MDCFT, the PMC is used to estimate the model order and signal parameters.

When initializing the method, \(D\) IFs \(g\left( {\varvec{\theta };\varvec{\xi }_1^{\left( 0 \right) } } \right) ,\ldots ,g\left( {\varvec{\theta };\varvec{\xi }_D^{\left( 0 \right) } } \right) \) are selected, where \(\varvec{\theta } \triangleq \left\{ {{\varvec{f}},{\varvec{s}}} \right\} \). Each IF is composed of the bivariate normal distributions of \(n\left( {n\le L} \right) \) different peaks in the DCFT domain, and the components of each IF are different. The \(d\)th IF can be expressed as the product of \(n\) bivariate Gaussian distributions as follows:

where \(\left\{ {c_1 ,\ldots ,c_{n} } \right\} \) denotes the indices of the peaks that constitute IF \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( 0 \right) } } \right) \), with \(1\le c_1 <\cdots <c_n \le L\) and \(n\ge 1\). \(\varvec{\xi }_d^{\left( 0 \right) } \) represents the means and covariances of all bivariate Gaussian distributions of IF \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( 0 \right) } } \right) \), that is, \(\varvec{\xi }_d^{\left( 0 \right) } =\left( {\varvec{\mu }_{c_1 }^{\left( 0 \right) } ,\varvec{{\varSigma }}_{c_1 }^{\left( 0 \right) } ,\ldots ,\varvec{\mu }_{c_n }^{\left( 0 \right) } ,\varvec{{\varSigma }}_{c_n }^{\left( 0 \right) } } \right) \). The overall IF constructed according to Eq. (12) can be expressed as \(q^{\left( 0 \right) }\left( \varvec{\theta } \right) =\sum \nolimits _{d=1}^D {\alpha _d^{\left( 0 \right) } g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( 0 \right) } } \right) } \), with \(\alpha _d^{\left( 0 \right) } =1/D\) for \(d=1,2,\ldots ,D\).

3.2.2 Update of IFs

According to the general flow of the PMC method in Sect. 2.4, \(g\left( {\varvec{\theta };\varvec{\xi }_d^{\left( t \right) } } \right) \) represents the \(d\)th IF in the \(t\)th iteration, which can be expressed as

where \(\varvec{\xi }_d^{\left( t \right) } =\left( {\varvec{\mu }_{c_1 }^{\left( t \right) } ,\varvec{{\varSigma }}_{c_1 }^{\left( t \right) } ,\ldots ,\varvec{\mu }_{c_n }^{\left( t \right) } ,\varvec{{\varSigma }}_{c_n }^{\left( t \right) } } \right) \). According to Eq. (12), the overall IF can be constructed as

The weight of the \(i\)th sample drawn from the IFs in the \(t\)th iteration is calculated as follows:

The IF parameters are updated as follows (please refer to [26, 28, 29]; the detailed derivation is given in “Appendix 2”):

where \(h_{d} \left( {\varvec{\theta }^{\left( {i,t} \right) };\alpha ^{\left( t \right) },\varvec{\xi }^{\left( t \right) }} \right) =\frac{\alpha _d^{\left( t \right) } g\left( {\varvec{\theta }^{\left( {i,t} \right) };\varvec{\xi }_d^{\left( t \right) } } \right) }{\sum \nolimits _{d^{\prime }=1}^D {\alpha _{d^{\prime }}^{\left( t \right) } g\left( {\varvec{\theta }^{\left( {i,t} \right) };\varvec{\xi }_{d^{\prime }}^{\left( t \right) } } \right) } }\), and \(\omega ^{\left( {i,t} \right) }\) denotes the normalized weight of the \(i\)th sample in the \(t\)th iteration.
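
The quantity \(h_d\) is the posterior probability that a given sample belongs to the \(d\)th IF. The sketch below computes it from a table of component densities and then forms a weighted update of the candidate probabilities. The update \(\alpha _d \leftarrow \sum _i \omega ^{\left( i \right) } h_d \left( {\varvec{\theta }^{\left( i \right) }} \right) \) is the usual mixture-PMC form and is an assumption here, since the paper's own update equations are not reproduced in this text; the function names and the numbers are hypothetical.

```python
import numpy as np

def responsibilities(alpha, G):
    """h[i, d] = alpha[d] * G[i, d] / sum_d' alpha[d'] * G[i, d'],
    where G[i, d] is the density of sample i under the d-th IF."""
    num = np.asarray(alpha)[None, :] * np.asarray(G)
    return num / num.sum(axis=1, keepdims=True)

def update_alpha(omega, h):
    """ASSUMED mixture-PMC update: weighted average of the responsibilities."""
    alpha_new = np.asarray(omega) @ h
    return alpha_new / alpha_new.sum()

# Toy numbers: 3 samples, 2 IFs.
G = np.array([[0.9, 0.1],
              [0.2, 0.8],
              [0.5, 0.5]])
alpha = np.array([0.5, 0.5])
omega = np.array([0.2, 0.3, 0.5])      # normalised importance weights

h = responsibilities(alpha, G)
alpha_new = update_alpha(omega, h)
```

The means and covariances of the IFs can be updated analogously as weighted averages with weights proportional to \(\omega ^{\left( i \right) } h_d\), again under the same assumption.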

As a typical AIS methodology, the PMC method has shown superior performance in solving high-dimensional problems, which has been proven theoretically and experimentally. In the PMC method, samples are drawn according to the importance weight in each iteration, such that the convergence problem is resolved [17, 18].

3.2.3 Joint model selection and parameter estimation

When the number of chirp components in the data is not known a priori, the idea in [18] is employed in this paper to realize joint model selection and parameter estimation. We assume that \(\varvec{\theta }\in S_{K}\), where \(S_{K}\) denotes the parameter space of model \(M_K\). The total parameter space \(\varvec{{\varOmega }}\) is defined as \(\varvec{{\varOmega }} \triangleq \cup _{K=0}^{K_{\max } } \left\{ K \right\} \times S_K\), where \(K_{\max }\) denotes the maximum number of chirp components. Adopting the Bayesian inference strategy, the marginal maximum a posteriori estimate of \(K\) is given by

where the indicator function \(\prod _{K} \left( {{\tilde{K}}} \right) \) takes the value of 1 when \({\tilde{K}}=K\) and is 0 otherwise.

\(R\) denotes the sample size. The samples \(\left\{ {\left( {K^{\left( i \right) },\varvec{\theta }^{\left( i \right) }} \right) } \right\} _{i=1}^R \) can be drawn from the IF \(q\left( \varvec{\theta } \right) \), and the normalized weights \(\omega ^{\left( i \right) }\) can be calculated. According to the principle of the IS approach, a Monte Carlo integration can be performed by drawing \(R\) samples from the IF to approximate the integral in Eq. (22) with the sample average [18]

As \(R\) goes to infinity, the result in Eq. (23) converges to the true posterior by the law of large numbers [29]. In the parameter estimation, the sum \(\lambda \) of the weights of the samples that lie close to the true values is usually large. When \(\lambda \) for an IF is less than a specific threshold (for example, \(10^{-2}\)), the IF is considered unreasonable and can be abandoned. Therefore, after several iterations, the IF with the largest \(\lambda \) can be determined, and its model order is taken as the estimate \({\hat{K}}\).
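
The Monte Carlo approximation of the model-order posterior can be sketched as follows: the posterior probability of each order is approximated by the total normalized weight of the samples carrying that order, and the estimate is the order with the largest total. The function name `map_model_order` and the toy samples are hypothetical.

```python
import numpy as np

def map_model_order(K_samples, weights, K_max):
    """Approximate p(K | y) by the summed normalised weights of the samples
    with model order K, and return the maximising order and the posterior."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    posterior = np.bincount(np.asarray(K_samples), weights=weights,
                            minlength=K_max + 1)
    return int(np.argmax(posterior)), posterior

# Toy example: five weighted samples with model orders 1, 2, or 3.
K_hat, post = map_model_order([1, 2, 2, 3, 2], [0.1, 0.3, 0.2, 0.1, 0.3], K_max=4)
```

Here the orders 1, 2, and 3 receive posterior mass 0.1, 0.8, and 0.1, so the estimate is \({\hat{K}}=2\).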

The \(t\)th iteration in the proposed method comprises two steps. Multinomial distribution \(M\left( {\alpha _1^{\left( t \right) } ,\ldots ,\alpha _D^{\left( t \right) } } \right) \) is used to denote the candidate probability of IFs, which is usually determined by the sum of all sample weights generated from some IF. One step is to select the IF according to \(M\left( {\alpha _1^{\left( t \right) } ,\ldots ,\alpha _D^{\left( t \right) } } \right) \), whereas the other is to draw samples from the selected IF.

If the model order is known a priori, the detection process above can be skipped and the signal parameters can be estimated directly using Eqs. (16)–(21). In the aforementioned model order estimation method, peaks are first detected in the chirpogram computed by the MDCFT, and the IFs are then constructed from them. The PMC method is used to estimate the model order and signal parameters at the same time. The performance of the method is closely related to IF selection. By exploiting the characteristics of chirp signals, the proposed method selects IFs efficiently, so that the generated samples occur around the true value with high probability. The method adapts to low SNR and achieves high estimation accuracy even with a small sample size. Therefore, the proposed method has efficient detection and parameter estimation performance.

3.3 Flow of the proposed method

Following the principles and implementation procedures described above, the flow chart of the MDCFT–PMC method is shown in Fig. 1.

The specific implementation process of the proposed method is shown in Table 1. The estimation process of the model order will be given as supplementary explanation.

4 Numerical results

To validate the parameter estimation performance of the MDCFT–PMC method for multi-component chirp signals, this section performs simulations with varying SNR and sample size and compares the results with those of the MCMC [12–14] and Gibbs–MPMC methods [17, 20]. This section also uses simulations to illustrate the convergence of the proposed method.

The simulation parameters are set as follows. In the Bayesian model of multi-component chirp signals, the hyper-parameters of the prior noise variance are \(\upsilon _0 =2,\gamma _0 >0\). The Gibbs–MPMC and MDCFT–PMC methods are both run for 10 iterations, and \(R\) samples are drawn in each iteration. At initialization, the variances of the center frequency and chirp rate of the IFs are 0.01 and 0.001, respectively, and the correlation coefficient between the two parameters is 0.1. The setting of the MCMC method is consistent with [12], and the length of the Markov chain is \(10\times R\) when comparing performance. Each simulation scenario is repeated \(W\) times. The parameter estimation accuracy of each method is evaluated by the mean square error of the center frequency and chirp rate estimates of each chirp component, which is defined as

where \(W\) denotes the number of trials in a given simulation scenario. \(\varvec{\theta }_k^{\left( w \right) } \) and \({\hat{{\varvec{\theta }}}}_k^{\left( w \right) } \), respectively, correspond to the true and estimated values of the chirp parameter of the \(k\)th component in the \(w\)th trial. Accordingly, the CRLB [30] used for performance comparison is likewise averaged over the chirp components.
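
A minimal sketch of such an error criterion for a single parameter of a single component is given below. It assumes the plain mean square error over the \(W\) trials; the exact normalization and the averaging over components used in the paper's definition are not reproduced here, and the numbers are illustrative only.

```python
import numpy as np

def mean_square_error(theta_true, theta_hat):
    """Average squared deviation between true and estimated values over W trials."""
    theta_true = np.asarray(theta_true, dtype=float)
    theta_hat = np.asarray(theta_hat, dtype=float)
    return float(np.mean((theta_true - theta_hat) ** 2))

# Three illustrative trials for a center frequency whose true value is 0.302.
mse = mean_square_error([0.302, 0.302, 0.302], [0.301, 0.303, 0.302])
```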

4.1 Example

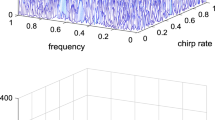

The implementation of the proposed method is illustrated with a typical simulation run. This subsection also compares the chirpograms obtained with the DCFT and MDCFT methods.

Similar to the simulation setting in [16, 20], two chirp components with SNR = 10 dB are assumed. The normalized center frequencies are 0.302 and 0.328; the normalized chirp rates are 0.001 and 0.002; the complex amplitudes are 1; the number of observed samples is 50; the SNR is calculated as \(\hbox {SNR}=10\log _{10}\left[ {A_1^{2} / \sigma _\varepsilon ^2 } \right] \); the observation noise with variance \(\sigma _\varepsilon ^2 \) obeys a complex Gaussian distribution; and its real and imaginary parts are independent and follow the Gaussian distribution \(N\left( {0,\sigma _\varepsilon ^2 /2} \right) \). In a single simulation experiment, the MDCFT search grid is \(100\times 100\), the PMC method is run for 10 iterations, and 1,000 samples are drawn in each iteration. The estimates of the center frequency and chirp rate of the two chirp components are shown in Fig. 2.
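
The SNR definition above fixes the noise variance once the amplitude is given, since \(\sigma _\varepsilon ^2 =A_1^2 /10^{\mathrm{SNR}/10}\). The short sketch below performs this conversion and generates complex noise with independent real and imaginary parts of variance \(\sigma _\varepsilon ^2 /2\); the helper names are hypothetical.

```python
import numpy as np

def noise_variance_from_snr(snr_db, amplitude=1.0):
    """Invert SNR = 10*log10(A^2 / sigma^2) for sigma^2."""
    return abs(amplitude) ** 2 / 10.0 ** (snr_db / 10.0)

def complex_white_noise(n_samples, noise_var, rng):
    """Complex Gaussian noise; real and imaginary parts are N(0, noise_var / 2)."""
    scale = np.sqrt(noise_var / 2.0)
    return scale * (rng.standard_normal(n_samples)
                    + 1j * rng.standard_normal(n_samples))

sigma2 = noise_variance_from_snr(10.0)                 # unit amplitude, 10 dB
noise = complex_white_noise(100_000, sigma2, np.random.default_rng(0))
empirical_var = float(np.mean(np.abs(noise) ** 2))     # should be close to sigma2
```

For unit amplitude and SNR = 10 dB this gives \(\sigma _\varepsilon ^2 =0.1\).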

Figure 2 shows that the MDCFT–PMC method generates samples around the true values with high probability. After relatively sharp initial fluctuations, the estimates of the center frequency and chirp rate stabilize around the true values and reach very high accuracy after 10 iterations. The iteration process of the MDCFT–PMC method can be interrupted at any time, and increasing the number of iterations can improve the parameter estimation accuracy.

Figure 3 shows the chirpograms of the signals calculated by the DCFT and MDCFT. The lattice is \(100\times 100\), so neither the center frequencies nor the chirp rates lie on lattice points. Figure 3 (left) shows that the chirpogram calculated by the DCFT is affected by the “picket fence” effect, and the true peaks are submerged among numerous peaks in the DCFT domain. Consequently, the detection accuracy of the peaks decreases. Figure 3 (right) shows that the MDCFT avoids the “picket fence” effect, so the correct peaks are easily detected.
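The “picket fence” effect can be illustrated with a generic chirpogram evaluated on two lattices. The sketch below is not the DCFT or MDCFT of [21, 22]; it simply correlates the data with chirp atoms on an arbitrary lattice, under the assumed phase convention \(2\pi (fn+sn^{2}/2)\). The coarse lattice, with spacing \(1/N\) in frequency and \(2/N\) in chirp rate, is a hypothetical stand-in for integer-bin sampling.

```python
import numpy as np

def chirpogram(y, freq_grid, rate_grid):
    """|sum_n y[n] exp(-j*2*pi*(f*n + 0.5*s*n^2))|^2 on a lattice.

    Returns an array of shape (len(freq_grid), len(rate_grid)).
    """
    n = np.arange(len(y))
    lin = np.exp(-2j * np.pi * np.outer(freq_grid, n))        # (F, N)
    quad = np.exp(-1j * np.pi * np.outer(rate_grid, n ** 2))  # (S, N)
    return np.abs((lin * y) @ quad.T) ** 2

# Noise-free single chirp whose parameters are off the coarse lattice.
N = 50
n = np.arange(N)
f0, s0 = 0.302, 0.001
y = np.exp(2j * np.pi * (f0 * n + 0.5 * s0 * n ** 2))

# Coarse lattice: the true parameters fall between lattice points.
coarse = chirpogram(y, np.arange(N) / N, 2 * np.arange(N) / N)

# Fine lattice around the region of interest.
freq_grid = np.linspace(0.25, 0.35, 101)
rate_grid = np.linspace(0.0, 0.004, 81)
fine = chirpogram(y, freq_grid, rate_grid)

i, j = np.unravel_index(np.argmax(fine), fine.shape)
f_hat, s_hat = freq_grid[i], rate_grid[j]
```

On the coarse lattice the energy of the chirp leaks across many lattice points and the largest value stays well below the matched value \(N^{2}\), whereas the fine lattice recovers a peak at the true parameters.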

Therefore, when the IFs of the PMC method are constructed from the peaks in the chirpogram, the DCFT chirpogram requires more peaks to be retained for the detection results to include the true peaks, which increases the processing difficulty and computational complexity of the subsequent steps. By contrast, the chirpogram calculated by the MDCFT markedly increases the detection rate of the true peaks, so that more reasonable IFs can be constructed from the detection results.

4.2 Estimation performance of the MDCFT–PMC method on the model order

The estimation performance of the MDCFT–PMC method for the model order of multi-component chirp signals is verified through simulation. The SNR varies over [\(-\)5, 10] dB, and the sample size varies over [600, 1,000] with a step of 200. The other simulation parameters are similar to those in Sect. 4.1. The simulation experiments are repeated 50 times. The accuracy of the model order estimated by the MDCFT–PMC method is shown in Fig. 4.

Figure 4 shows that the SNR threshold of the MDCFT–PMC method differs slightly across sample sizes. When the sample size is 1,000, the estimation accuracy reaches 95 % or more at \(-\)2 dB. When the sample size is 600 or 800, the estimation accuracy reaches 95 % at \(-\)1 dB. Increasing the sample size improves the accuracy of the model order estimate, makes the method more robust at low SNR, and helps it meet the requirements of subsequent processing.

4.3 Convergence of the MDCFT–PMC method

The MDCFT–PMC method adopts the PMC strategy and converges well, as illustrated by simulation experiments on a typical realization. The simulation in Sect. 4.1 was repeated 50 times, and the update speed of the center frequency and chirp rate over 10 iterations was computed as \({\sum \nolimits _{w=1}^W {{\left\| {{\hat{{\varvec{\theta }}}}_{k+1} -{\hat{{\varvec{\theta }}}}_k } \right\| _2 }\big /{\left\| {{ \hat{{\varvec{\theta }}}}_k } \right\| _2 }} }/W\), where the subscript of \({\hat{{\varvec{\theta }}}}\) indexes the iteration. Figure 5a corresponds to the center frequency parameters, whereas Fig. 5b corresponds to the chirp rate parameters.
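The update speed defined above can be computed from stored per-iteration estimates as in the following sketch. The array layout, the function name `relative_update`, and the synthetic, geometrically converging estimates are assumptions made for illustration; the iteration index is written `t` here to avoid a clash with the component index.

```python
import numpy as np

def relative_update(estimates):
    """Average relative change between consecutive iterations.

    estimates has shape (W, T, P): W runs, T iterations, P parameters.
    Returns an array of length T - 1 whose entry t is
    (1/W) * sum_w ||theta[t+1] - theta[t]||_2 / ||theta[t]||_2.
    """
    est = np.asarray(estimates, dtype=float)
    step = np.linalg.norm(est[:, 1:] - est[:, :-1], axis=-1)
    scale = np.linalg.norm(est[:, :-1], axis=-1)
    return np.mean(step / scale, axis=0)

# Synthetic estimates (assumed): the error halves at every iteration.
rng = np.random.default_rng(2)
W, T = 50, 10
truth = np.array([0.302, 0.328])
offsets = 0.01 * rng.standard_normal((W, 1, 2))
decay = 0.5 ** np.arange(T).reshape(1, T, 1)
estimates = truth + decay * offsets  # shape (W, T, 2)
speeds = relative_update(estimates)
```

A curve of `speeds` that falls quickly and then flattens corresponds to the kind of behavior reported in Fig. 5.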

Figure 5a, b shows that the MDCFT–PMC estimates of the center frequency and chirp rate converge well. Convergence is rapid at first: after four iterations, the sampled center frequency and chirp rate have largely converged, and in the subsequent iterations the update speed remains stable. During initialization, IFs covering a larger region of the parameter space tend to be selected, so sample generation is highly random. In the subsequent stage, the MDCFT–PMC method adaptively selects and adjusts the IFs at each iteration. In this manner, IFs with better local character are more likely to be selected, and the samples fall around the true values with greater probability.

4.4 Parameter estimation performance of the MDCFT–PMC method

The parameter estimation performance of the MDCFT–PMC method on multi-component chirp signals is verified. To exclude the influence of other factors, the model order of the chirp signals is assumed to be known. This section investigates the performance of the MDCFT–PMC method as the SNR and sample size vary and compares it with the classical MCMC and Gibbs–MPMC methods.

4.4.1 Performance comparison in noisy environment

To verify the robustness of the MDCFT–PMC method in a noisy environment, this subsection evaluates the parameter estimation performance of the proposed method through simulation experiments and compares it with the MCMC and Gibbs–MPMC methods. The SNR of the chirp signals is assumed to vary over [\(-\)2, 10] dB. The simulation experiments are repeated 100 times, and the other parameters are the same as those in Sect. 4.1. The parameter estimation performance for the center frequency and chirp rate of the MCMC, Gibbs–MPMC, and MDCFT–PMC methods is shown in Fig. 6.

Figure 6 shows that the three methods reach the CRLB at different SNR thresholds. The MCMC and Gibbs–MPMC methods adopt the DCFT to calculate the chirpogram and perform spectral peak detection. Compared with the MDCFT, the chirpogram calculated using the DCFT does not have evident peaks, which degrades the detection performance. The performance of the MCMC method depends on the initially selected proposal distribution; if this distribution is not well chosen, the performance degrades sharply. The Gibbs–MPMC method selects spectral peaks according to the chirpogram calculated using the DCFT and constructs the IF to generate samples, and resampling is adopted to approach the true parameters progressively. The performance of this method is easily affected by the SNR. The estimation accuracy of the center frequency and chirp rate of the MDCFT–PMC method reaches the CRLB at 0 dB, whereas that of the Gibbs–MPMC method reaches the CRLB at 2 dB. The SNR therefore has a greater effect on the Gibbs–MPMC method, and the proposed method has better parameter estimation performance at low SNR.

4.4.2 Performance comparison in case of changing sample size

A simulation with varying sample size is performed. Only the Gibbs–MPMC and MDCFT–PMC methods are considered. The sample size varies over [100, 1,400] with a step of 100. The simulation is repeated 100 times. The parameter estimation performance for the center frequency and chirp rate of the Gibbs–MPMC and MDCFT–PMC methods is shown in Fig. 7.

Figure 7 shows that the parameter estimation accuracy of the MDCFT–PMC method reaches the CRLB when the sample size is 400, whereas that of the Gibbs–MPMC method reaches the CRLB when the sample size is 700. In the Gibbs–MPMC method, the high-quality samples are those generated in the importance region of the parameters, close to the true values. In other words, a larger sample size gives a greater probability of covering the true values. Therefore, the performance of the Gibbs–MPMC method is significantly affected by the sample size. In the iteration process of the MDCFT–PMC method, the high-quality samples are used to update the IFs, which then generate the samples of the next iteration. In this way, the parameters and target function of multi-component chirp signals can be estimated even with a small sample size. In conclusion, the proposed method exhibits better parameter estimation performance when the sample size is small.

5 Conclusion

Building on previous work on the MDCFT and PMC methodologies, this paper applies these advances to the joint Bayesian model selection and parameter estimation of multi-component chirp signals in white Gaussian noise. To make the samples drawn from the IF fall around the true values with greater probability, this paper adopts the MDCFT to calculate the chirpogram and detect peaks in the DCFT domain, and constructs multiple IFs on the basis of these peaks. The PMC method is then used to update the IFs through an adaptive learning process. The method estimates not only the signal parameters but also the posterior distribution of the unknown parameters given the observed data.

The simulation results show that, compared with two existing methods based on the Monte Carlo concept, the proposed method converges well and performs better at low SNR or with a small sample size. The proposed method is also relevant to related problems in spectral analysis, wideband radar imaging, and other fields.

References

Djuric, P.M., Kay, S.M.: Parameter estimation of chirp signals. IEEE Trans. Acoust. Speech Signal Process. 38(12), 2118–2126 (1990)

Li, H.S., Djuric, P.M.: MMSE estimation of nonlinear parameters of multiple linear & quadratic chirps. IEEE Trans. Signal Process. 46(3), 796–800 (1998)

Abatzoglou, T.J.: Fast maximum likelihood joint estimation of frequency and frequency rate. In: IEEE International Conference on Aerospace Electronic Systems, Tokyo, Japan, April 7–11 (1986)

Besson, O., Ghogho, M., Swami, A.: Parameter estimation for random amplitude chirp signals. IEEE Trans. Signal Process. 47(12), 3208–3219 (1999)

Ikram, M.Z., Abed-Meraim, K., Hua, Y.: Iterative parameter estimation of multiple chirp signals. Electron. Lett. 33(8), 657–659 (1997)

Ikram, M.Z., Abed-Meraim, K., Hua, Y.: Estimating the parameters of chirp signals: an iterative approach. IEEE Trans. Signal Process. 46(12), 3436–3441 (1998)

Barbarossa, S.: Analysis of multicomponent LFM signals by a combined Wigner–Hough transform. IEEE Trans. Signal Process. 43(6), 1511–1515 (1995)

Guo, J., Zou, H., Yang, X., Liu, G.: Parameter estimation of multicomponent chirp signals via sparse representation. IEEE Trans. Aerosp. Electron. Syst. 47(3), 2261–2268 (2011)

Applebaum, L., Howard, S.D., Searle, S., Calderbank, R.: Chirp sensing codes: deterministic compressed sensing measurements for fast recovery. Appl. Comput. Harmon. Anal. 26(2), 283–290 (2009)

Millioz, F., Davies, M.: Sparse detection in the chirplet transform: application to FMCW radar signals. IEEE Trans. Signal Process. 60(6), 2800–2813 (2012)

Mallat, S.G., Zhang, Z.: Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41(12), 3397–3415 (1993)

Andrieu, C., Doucet, A.: Joint Bayesian model selection and estimation of noisy sinusoids via reversible jump MCMC. IEEE Trans. Signal Process. 47(10), 2667–2676 (1999)

Davy, M., Doncarli, C., Tourneret, J.Y.: Classification of chirp signals using hierarchical Bayesian learning and MCMC methods. IEEE Trans. Signal Process. 50(2), 377–388 (2002)

Lin, C.C., Djuric, P.M.: Estimation of chirp signals by MCMC. In: ICASSP ’00. Proceedings, June 5–9, pp. 265–268 (2000)

Lin, Y., Peng, Y., Wang, X.: Maximum likelihood parameter estimation of multiple chirp signals by a new Markov Chain Monte Carlo approach. In: IEEE Radar Conference Proceedings, Philadelphia, USA, Apr. 26–29, pp. 559–562 (2004)

Saha, S., Kay, S.M.: Maximum likelihood parameter estimation of superimposed chirps using Monte Carlo importance sampling. IEEE Trans. Signal Process. 50(2), 224–230 (2002)

Cappe, O., Guillin, A., Marin, J.M., Robert, C.P.: Population Monte Carlo. J. Comput. Graph. Stat. 13(4), 907–929 (2004)

Hong, M., Bugallo, M.F., Djuric, P.M.: Joint model selection and parameter estimation by population Monte Carlo simulation. IEEE J. Sel. Top. Signal. Process. 4(3), 526–539 (2010)

Bugallo, M.F., Hong, M., Djuric, P.M.: Marginalized population Monte Carlo. In: ICASSP ’09. Proceedings, Taipei, Taiwan, April 19–24, pp. 2925–2928 (2009)

Shen, B., Bugallo, M.F., Djuric, P.M.: Estimation of multimodal posterior distributions of chirp parameters with population Monte Carlo sampling. In: ICASSP ’12. Proceedings, Kyoto, Japan, March 25–30, pp. 3861–3864 (2012)

Xia, X.G.: Discrete chirp-Fourier transform and its application to chirp rate estimation. IEEE Trans. Signal Process. 48(11), 3122–3133 (2000)

Fan, P., Xia, X.G.: Two modified discrete chirp-Fourier transform schemes. Sci. China, Ser. F 44(5), 329–341 (2001)

Guo, X., Sun, H.B., Wang, S.L.: Comments on discrete chirp-Fourier transform and its application to chirp rate estimation. IEEE Trans. Signal Process. 50(12), 3115–3116 (2002)

Xia, X.G.: Response to comments on discrete chirp-Fourier transform and its application to chirp rate estimation. IEEE Trans. Signal Process. 50(12), 3116 (2002)

Wu, L., Wei, X.Z., Yang, D.G., Wang, H.Q., Li, X.: ISAR imaging of targets with complex motion based on discrete chirp Fourier transform for cubic chirps. IEEE Trans. Geosci. Remote Sens. 50(10), 4201–4212 (2012)

Wraith, D., Kilbinger, M., Benabed, K., Cappé, O., Cardoso, J.F., Fort, G., Prunet, S., Robert, C.P.: Estimation of cosmological parameters using adaptive importance sampling. Phys. Rev. D 80(2), 1511–1539 (2009)

Cheng, H., Zeng, D., Zhu, J., Tang, B.: Maximum likelihood estimation of co-channel multicomponent polynomial phase signals using importance sampling. Progress Electromagn. C 23, 111–122 (2011)

Kilbinger, M., Wraith, D., Robert, C.P., Benabed, K., Cappé, O., Cardoso, J.F., Fort, G., Prunet, S., Bouchet, F.R.: Bayesian model comparison in cosmology with population Monte Carlo. MNRAS 405(4), 2381–2390 (2010)

Douc, R., Guillin, A., Marin, J.M., Robert, C.P.: Convergence of adaptive mixtures of importance sampling schemes. Ann. Stat. 35(1), 420–448 (2007)

Friedlander, B., Francos, J.M.: Estimation of amplitude and phase parameters of multicomponent signals. IEEE Trans. Signal Process. 43(4), 917–926 (1995)

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments that helped improve this paper to its present form.

Appendices

Appendix 1

The posterior distribution of \(\left( {\varvec{\alpha }, {\varvec{f}},{\varvec{s}},\sigma _\varepsilon ^2 ,K} \right) \) can be expressed as follows according to the likelihood function of \({\varvec{y}}\) given in Eq. (3) and the priors of the parameters given in Eqs. (6) and (7):

Referring to the literature [12, 13, 18], the expression after integrating terms relevant to amplitudes \(\varvec{\alpha }\) in Eq. (25) is given as

where

Equation (26) contains a Gaussian function of \(\varvec{\alpha }\) and an inverse Gamma function of \(\sigma _\varepsilon ^2 \). After these two variables are integrated out of (26), the posterior distribution of \(K, {\varvec{f}}\), and \({\varvec{s}}\) given the observed data \({\varvec{y}}\) is

Appendix 2

We assume that \(p\left( \varvec{\theta } \right) \) is the target function; \(q^{\left( t \right) }\left( \varvec{\theta } \right) \) represents the IF in the \(t\)th iteration; and \(\varvec{\theta }\) represents the unknown parameters. When the PMC methodology is applied to the parameter estimation problem, it is important that the adopted IF approximates the target function well. Our objective is to minimize the Kullback–Leibler divergence, which can be expressed as \(K\left( {p|| q^{\left( t \right) }} \right) =\int {\log \left( {\frac{p\left( \varvec{\theta } \right) }{q^{\left( t \right) }\left( \varvec{\theta } \right) }} \right) p\left( \varvec{\theta } \right) \mathrm{d}\varvec{\theta }}\).

Assume \(h_d \left( {\varvec{\theta };\alpha ,\varvec{\xi }} \right) =\frac{\alpha _d g\left( {\varvec{\theta };\varvec{\xi }_d } \right) }{\sum \nolimits _{d=1}^D {\alpha _d g\left( {\varvec{\theta };\varvec{\xi }_d } \right) } }\). In the \(t\)th iteration, the intermediate quantity is constructed as

The derivation in [26, 28, 29] indicates that for any \(\alpha \) and \(\varvec{\xi }\), when the intermediate quantity (31) increases, the target function also increases. The maximizer of \(L^{\left( t \right) }\left( {\alpha ,\varvec{\xi }} \right) \) can be obtained in closed form. For the multivariate Gaussian distribution, the parameters are the mean vector and the covariance matrix. The intermediate quantity can be expressed as

up to terms that do not depend on \(\alpha ,\varvec{\xi }\).

When the above formula reaches its maximum, the following equations are satisfied:

In practice, the numerator and the denominator are integrals. Approximating them with the samples and importance weights of each iteration yields Eqs. (19)–(21).
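The sample-based approximation can be sketched as a single adaptation step for a Gaussian mixture IF, in the spirit of the adaptive mixture updates of [26, 28, 29]. Whether these updates coincide term by term with Eqs. (19)–(21) is an assumption. The function names `gauss_pdf` and `pmc_step`, the small diagonal jitter, and the toy Gaussian target (which is not the chirp posterior) are illustrative choices.

```python
import numpy as np

def gauss_pdf(x, mean, cov):
    """Density of N(mean, cov) evaluated at the rows of x."""
    diff = x - mean
    quad = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    norm = np.sqrt((2 * np.pi) ** x.shape[1] * np.linalg.det(cov))
    return np.exp(-0.5 * quad) / norm

def pmc_step(log_target, alphas, means, covs, n_samples, rng):
    """Draw from the mixture IF, weight the samples, update the IF."""
    n_comp, dim = means.shape
    # Sample a component label, then a Gaussian draw from that component.
    labels = rng.choice(n_comp, size=n_samples, p=alphas)
    chol = np.linalg.cholesky(covs)
    z = rng.standard_normal((n_samples, dim))
    theta = means[labels] + np.einsum("rij,rj->ri", chol[labels], z)
    # Mixture density and normalized importance weights.
    comp = np.stack(
        [alphas[d] * gauss_pdf(theta, means[d], covs[d]) for d in range(n_comp)],
        axis=1,
    )
    q = comp.sum(axis=1)
    log_w = log_target(theta) - np.log(q)
    w = np.exp(log_w - log_w.max())
    w /= w.sum()
    # Responsibilities h_d(theta) combined with the importance weights.
    wr = w[:, None] * (comp / q[:, None])
    new_alphas = wr.sum(axis=0)
    new_alphas /= new_alphas.sum()
    new_means, new_covs = means.copy(), covs.copy()
    for d in range(n_comp):
        if new_alphas[d] < 1e-12:
            continue  # component received no weight; keep its shape
        new_means[d] = wr[:, d] @ theta / new_alphas[d]
        diff = theta - new_means[d]
        new_covs[d] = (wr[:, d, None] * diff).T @ diff / new_alphas[d]
        new_covs[d] += 1e-9 * np.eye(dim)  # jitter for numerical stability
    return new_alphas, new_means, new_covs, theta, w

# Toy target (assumed): unnormalized isotropic Gaussian.
target_mean, target_std = np.array([0.3, 0.0]), 0.05

def log_target(theta):
    return -0.5 * np.sum(((theta - target_mean) / target_std) ** 2, axis=1)

rng = np.random.default_rng(0)
alphas = np.full(3, 1.0 / 3.0)
means = np.array([[0.2, 0.1], [0.4, -0.1], [0.3, 0.2]])
covs = np.stack([0.1 ** 2 * np.eye(2)] * 3)
for _ in range(10):
    alphas, means, covs, theta, w = pmc_step(
        log_target, alphas, means, covs, 1000, rng
    )
estimate = w @ theta  # self-normalized importance sampling estimate
```

The weighted average `estimate` uses the samples and weights of the last iteration; the mixture weights, means, and covariances returned by `pmc_step` would define the IF of the following iteration.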

Cite this article

Yang, P., Liu, Z. & Jiang, WL. Parameter estimation of multi-component chirp signals based on discrete chirp Fourier transform and population Monte Carlo. SIViP 9, 1137–1149 (2015). https://doi.org/10.1007/s11760-013-0552-0

DOI: https://doi.org/10.1007/s11760-013-0552-0