Abstract

A coating buildup model was developed to simulate the microstructure of a tantalum coating cold sprayed onto a copper substrate. First, the irregular tantalum powder was finely characterized in 3D using x-ray microtomography and dedicated image analysis algorithms, and the particles were grouped by shape into seven classes. Then, 3D finite element simulations of the impact of the observed particles were performed. Finally, a coating buildup model based on the results of these finite element impact simulations was developed. In its first version, this model is limited to 2D.

Introduction

Cold spray is a thermal spraying technique in which the sprayed powder adheres to the substrate through plastic deformation at particle impact (Ref 1). The powder and the substrate undergo only moderate heating, and their temperatures stay below the melting point. The process is increasingly attractive to several industries because of its additive manufacturing capabilities (Ref 2,3,4,5,6,7), beyond the more traditional coating applications that have been studied extensively over the last decades. The typical cold spray deposit has a characteristic microstructure (Ref 8) that reflects the buildup process, in which powder particles impinge on the substrate and eventually adhere to it. During each impact, both the particle and the substrate deform in a way that strongly depends on their material properties and on the impact conditions (Ref 9).

Modeling is a fundamental tool for understanding the phenomena involved in cold spray and has been used extensively to this end. For instance, it is the only known way to estimate certain quantities, such as the local temperature rise in the particle or the substrate, which have not been experimentally accessible so far. Most of the modeling work to date has focused on the idealized case of the perpendicular impact of a single spherical particle on a flat substrate, using finite element (FE) analysis. In 3D FE simulations, limited computational resources restrict the number of particles to the order of tens, so only the first layers of deposited particles can be simulated in this way. Other methods have been used to simulate the buildup of a large number of particles. For example, in Ref 10 the smoothed particle hydrodynamics (SPH) method allowed the simulation of multi-particle impacts, and in Ref 11 another method was developed to simulate the buildup of a large number of particles. The latter model was based on phenomenological considerations for particle deformation and did not show the resulting microstructure, being limited to the simulation of coating porosity.

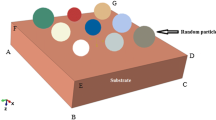

The present study aims at developing an original framework for modeling the cold spray process, with a particular focus on simulating the coating microstructure. The approach is morphological in several senses. Firstly, it focused on the morphology of the real powder, taking advantage of microtomography as a 3D imaging method. Then, the mechanical behavior of the powder particles was investigated by means of 3D FE impact simulations, again focusing on the changes in the shape of the particles and of the substrate. Finally, a buildup model was developed to reproduce the coating microstructure by simulation. This last modeling step used the results of FE simulations to account for the deformations at impact and aimed to reproduce the deposition of thousands of particles. The buildup model is still under development and is, for the moment, limited to the 2D case.

The modeling approach developed in this study focused on a tantalum coating on a copper substrate, but it can be applied to other metals, provided that the material model parameters are known for those materials. Even mixed powders could be approached with this method. The modeling efforts presented in this paper aim to bridge the gap between the idealized view of simulations and the complexity of the real world, in the hope that a solid modeling foundation will enable numerically guided optimization of substrate treatments and powder shaping for cold spray and other processes.

Materials and Process

The materials used here as a case study were copper for the substrate and tantalum for the sprayed powder. Nevertheless, the powder characterization methods and the models are applicable, with some adaptation, to other kinds of materials.

The tantalum powder was Amperit 151.065 (H.C. Starck, Munich, Germany), with a particle size distribution characterized by the following diameter quantiles: d10 = 9.70 µm, d50 = 21.45 µm and d90 = 42.80 µm. The powder was of the fused and crushed type, with the irregularly shaped particles typical of this production route. Substrates consisted of oxygen-free high thermal conductivity (OFHC) copper parallelepipeds of size 5 × 5 × 1 cm³. No particular surface preparation was applied to the as-machined substrates.

All sprayings were performed in a dedicated cold spray facility at the thermal spraying laboratory of CEA (French Alternative Energies and Atomic Energy Commission), Le Ripault (Ref 12), using helium as the main process gas. Spraying parameters were kept constant for all experiments: the main gas pressure was 12 bar, its temperature 200 °C, and the nozzle stand-off distance 10 mm. A tungsten carbide nozzle optimized by the method of characteristics (MOC) was used for all sprayings. A typical micrograph of the resulting microstructure, showing the deformed tantalum particles that constitute the coating, is presented in Fig. 1. To reveal part of the particle boundaries visible in the figure, the sample was etched for 5 s in a solution of 20 vol.% HNO3, 20 vol.% HF and 60 vol.% H2SO4.

In-flight particle velocities were measured during spraying by means of an ultra-fast camera system installed in the spray chamber, illustrated in Fig. 2. A flash illuminated the particles in flight, and two synchronized cameras took two images 100 ns apart. Image analysis tools detected each individual particle in the two successive images; knowing the time interval and measuring the distance traveled by the particle in that interval, its velocity could be estimated. To have fewer particles in each image, and thus make their identification easier, the sprayings were intentionally performed with a reduced powder feed rate. Moreover, a diaphragm (i.e., a metal sheet with a 2 mm diameter hole) was used to further reduce the flux of impacting particles, as required by the velocity measurement system. The diaphragm also selected only the particles passing near the center of the stream, which reduced the spread of their velocities. Size–velocity measurements are shown in Fig. 3. Even though only particles passing through the center of the stream were selected, the velocities within each size class still varied widely. This data set was used to assign velocities in the subsequent FE simulations.
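
The velocity estimate described above reduces to a displacement divided by the 100 ns interval. The Python sketch below assumes that particles have already been matched between the two frames and that positions are given in meters in the object plane; the function name and the example positions are hypothetical. Since only the displacement in the image plane is visible, the result is the in-plane component of the velocity.

```python
import numpy as np


def particle_speeds(pos_first, pos_second, dt=100e-9):
    """Speed (m/s) of each particle from its positions (m) in two frames dt seconds apart.

    Row i of pos_first and pos_second must describe the same particle: the matching
    of particles between the two images is assumed to have been done beforehand.
    Only the in-plane displacement is measured, so this is the in-plane speed.
    """
    disp = np.asarray(pos_second, dtype=float) - np.asarray(pos_first, dtype=float)
    return np.linalg.norm(disp, axis=1) / dt


# Two hypothetical matched particles: 60 µm along the jet axis, and a (30, 40) µm displacement
first = [[0.0, 0.0], [1e-3, 2e-4]]
second = [[60e-6, 0.0], [1e-3 + 30e-6, 2e-4 + 40e-6]]
speeds = particle_speeds(first, second)  # about 600 m/s and 500 m/s
```

With a 100 ns interval, a displacement of a few tens of micrometers already corresponds to several hundred m/s, which is the velocity range of interest here.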

Morphological Characterization of the Powder

As already mentioned, the production route of this powder resulted in a large variety of particle shapes, as shown in the SEM top view of the free powder in Fig. 4. Hence, particular attention was paid in this study to characterizing the powder morphology accurately. The aim of this part of the study was to build a 3D library of powder particles to be used in FE impact simulations.

Methodology

A sample of the tantalum powder was dispersed in a resin to produce a cylindrical sample of 1 × 1 × 3 mm³, which was then analyzed by x-ray microtomography (XMT) on the ID19 beamline of the European Synchrotron Radiation Facility (ESRF) in Grenoble. XMT is nowadays a well-established nondestructive 3D imaging technique, widely used in many scientific fields, including thermal spraying (Ref 13,14,15,16,17). A cross section of the 3D XMT image of the powder is shown in Fig. 5. The gray level of each voxel (the 3D analogue of a pixel) is proportional to the local x-ray absorption of the sample; thus, the particles appear white and the embedding resin black.

The 3D XMT image was processed with SMIL (Simple Morphological Image Library, Ref 18) in a Python environment. A marker-driven watershed algorithm (Ref 19) was used for the first segmentation, with markers obtained by simple thresholding, which identified the cores of the particles. Aggregates, i.e., particles too close to each other to be separated by this segmentation, could bias the subsequent shape analysis. A specific image processing procedure was therefore developed and applied to the data to split each aggregate into its constituents. This analysis was performed on one 3D image and led to a 3D particle library of about 15,000 objects, used in the subsequent models.

Given the large number of particles, a classification method based on shape criteria was adopted, grouping all particles into a finite set of shape classes. Besides being of interest in its own right, this method was meant to guide the sampling procedure needed to extract a set of particles statistically representative of the powder. The first step was the quantitative shape measurement of each individual particle. To this end, a series of morphological measurements was performed on the whole particle library, again using SMIL. Table 1 describes the chosen measures and their division into extensive (i.e., size-dependent) and intensive (size-independent) ones. Two parameters deserve further explanation: the normalized PAI (Principal Axes of Inertia) and the imbrication. The first, defined in Table 1, was taken from Ref 20; its value indicates whether the particle shape tends to be compact rather than elongated or flat. The second measures the convexity of an object along a given direction. Imbrication was introduced in Ref 21, where it was measured along the three independent directions in space; in contrast, in the present work it was measured along 20 isotropically distributed directions and the mean value was retained.
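
The principal axes of inertia of a segmented particle can be obtained from the second-moment tensor of its voxel coordinates, which has the same eigenvectors as the inertia tensor. The sketch below assumes a boolean 3D voxel array per particle and uses the ratio of the largest to the smallest eigenvalue as a hypothetical compactness indicator; the exact normalization of the PAI given in Table 1 (from Ref 20) is not reproduced here.

```python
import numpy as np


def principal_second_moments(mask):
    """Eigenvalues (ascending) and eigenvectors of the second-moment tensor of a voxelized particle.

    The eigenvectors (columns) are the principal axes of inertia of the particle.
    """
    coords = np.argwhere(mask).astype(float)        # (n_voxels, 3) voxel indices
    centered = coords - coords.mean(axis=0)
    tensor = centered.T @ centered / len(coords)     # covariance of voxel positions
    eigvals, eigvecs = np.linalg.eigh(tensor)        # symmetric matrix: ascending eigenvalues
    return eigvals, eigvecs


rod = np.zeros((24, 8, 8), dtype=bool)
rod[2:22, 2:6, 2:6] = True                           # an elongated 20 x 4 x 4 voxel particle
vals, axes = principal_second_moments(rod)
elongation = vals[-1] / vals[0]                      # 1 for a compact shape, large for elongated or flat
```

For the rod, the largest eigenvalue is associated with the long axis, whereas for a cube all three eigenvalues are equal and the ratio is 1.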

A first class of particles could be isolated directly, gathering the smallest and the almost spherical particles. For small particles (< 3 µm in at least one direction), whose size was close to the resolution limit of the applied XMT technique (voxel size of 0.85 µm³), the shape could not be determined precisely. They were nevertheless kept in the library to avoid biasing the particle size distribution, and, since their shape could not be determined, they were assumed to be nearly spherical. This assumption has no consequence on the study, since these particles represent a negligible fraction of the powder volume, as will be shown in the results.

The correlation between each pair of measures was checked over the whole library, separately for the size and shape parameters, as shown in Fig. 6. Pairwise diagrams show the degree of correlation between two variables: data points grouped along a straight line indicate a linear correlation, while other, nonlinear correlations appear as characteristic patterns. If, on the contrary, the points are scattered without any particular order, the two variables are uncorrelated. Fig. 6 shows that the size measures were highly correlated, whereas the shape measures were largely uncorrelated. Consequently, the whole set of shape measures was kept for the subsequent analysis.
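
A numerical counterpart of the pairwise diagrams is the correlation matrix of the measures. The sketch below assumes the measures are stored as columns of an (n_particles, n_measures) array and flags pairs whose Pearson coefficient exceeds a hypothetical threshold; the measure names and the synthetic data are illustrative. Pearson's coefficient only captures linear relations; a rank coefficient such as `scipy.stats.spearmanr` would also capture monotonic nonlinear ones.

```python
import numpy as np


def strongly_correlated_pairs(measures, names, threshold=0.9):
    """Pairs of columns of an (n_particles, n_measures) array whose Pearson |r| exceeds threshold."""
    r = np.corrcoef(measures, rowvar=False)          # n_measures x n_measures correlation matrix
    i, j = np.triu_indices_from(r, k=1)              # each unordered pair once
    keep = np.abs(r[i, j]) > threshold
    return [(names[a], names[b], float(r[a, b])) for a, b in zip(i[keep], j[keep])]


rng = np.random.default_rng(0)
d_eq = rng.uniform(5.0, 45.0, 500)                    # hypothetical equivalent diameters (µm)
feret = 1.3 * d_eq + rng.normal(0.0, 0.5, 500)        # a second size measure, nearly proportional
other = rng.normal(0.0, 1.0, 500)                     # an unrelated shape-like measure
pairs = strongly_correlated_pairs(np.column_stack([d_eq, feret, other]),
                                  ["d_eq", "feret_max", "other"])
```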

To reduce the number of parameters involved in the classification, principal component analysis (PCA) was applied separately to the size and shape parameters (see Ref 22 for a complete description of the method). For the size measures, as expected, a single principal component accounted for almost 95% of the total variance. For the shape measures, the first three principal components accounted for 90% of the total variance and were thus considered sufficient to describe the shape variability within the library. These three principal components were kept as the data set for the subsequent classification.
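
The PCA step and the choice of the number of retained components can be sketched with a singular value decomposition. This is an illustration, not the authors' code; in particular, standardizing each measure to unit variance is an assumption (it is usual when the measures have different units), and the synthetic data only mimic the two situations described above: strongly correlated size measures and weakly correlated shape measures.

```python
import numpy as np


def pca(measures, standardize=True):
    """PCA via SVD. Returns (scores, explained variance ratio, principal directions)."""
    X = np.asarray(measures, dtype=float)
    X = X - X.mean(axis=0)
    if standardize:                       # assumption: unit variance for measures with different units
        X = X / X.std(axis=0)
    _, s, vt = np.linalg.svd(X, full_matrices=False)
    explained = s**2 / np.sum(s**2)       # fraction of total variance per component, decreasing
    scores = X @ vt.T                     # particle coordinates in the PCA space
    return scores, explained, vt


def n_components(explained, fraction):
    """Smallest number of leading components whose cumulative explained variance reaches fraction."""
    cumulative = np.cumsum(explained)
    return int(min(np.searchsorted(cumulative, fraction) + 1, len(explained)))


rng = np.random.default_rng(1)
x = rng.normal(size=500)
sizes = np.column_stack([x, 2 * x + 0.01 * rng.normal(size=500), -x + 0.01 * rng.normal(size=500)])
shapes = rng.normal(size=(500, 3))        # three independent measures
_, size_ratio, _ = pca(sizes)
_, shape_ratio, _ = pca(shapes)
```

With these data, one component suffices for the size measures, while all three are needed to reach 90% for the independent shape-like measures.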

Cluster analysis is the natural framework for grouping particles into shape classes. Among the available techniques, K-means (an unsupervised clustering method) was chosen; a full description is given in Ref 23. The only parameter to be chosen by the user is K, the number of classes. Since the data had no native group structure, being almost homogeneously distributed in the PCA space, the choice of K was rather arbitrary: even the variations of the intra- and inter-group variance with K did not reveal an optimal value, so K was fixed at 6. Finally, a random sampling procedure was used to extract representative particles within each class for the subsequent impact simulations. Note that the final number of classes is seven when counting the class of small spherical particles, which was excluded from the clustering.
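
The clustering and sampling steps can be sketched in plain NumPy. The implementation below is Lloyd's K-means algorithm with k-means++ seeding, a common variant that is assumed here rather than taken from the paper; the function names, the seed values and the two-blob test data are hypothetical. The returned within-class sum of squares is the quantity whose variation with K can be examined when choosing K.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's K-means with k-means++ seeding. Returns (labels, centres, within-class sum of squares)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)

    def sq_dist(points, centres):
        return ((points[:, None, :] - centres[None, :, :]) ** 2).sum(axis=-1)

    # k-means++: each new centre is drawn with probability proportional to its squared distance
    centres = X[[rng.integers(len(X))]]
    while len(centres) < k:
        d2 = sq_dist(X, centres).min(axis=1)
        centres = np.vstack([centres, X[rng.choice(len(X), p=d2 / d2.sum())]])

    for _ in range(n_iter):
        labels = sq_dist(X, centres).argmin(axis=1)          # assignment step
        new = np.array([X[labels == c].mean(axis=0) if np.any(labels == c) else centres[c]
                        for c in range(k)])                   # update step (empty class keeps its centre)
        converged = np.allclose(new, centres)
        centres = new
        if converged:
            break
    labels = sq_dist(X, centres).argmin(axis=1)
    wcss = float(((X - centres[labels]) ** 2).sum())
    return labels, centres, wcss


def pick_representatives(labels, n_per_class, seed=0):
    """Randomly draw n_per_class particle indices from each class, without replacement."""
    rng = np.random.default_rng(seed)
    return {int(c): rng.choice(np.flatnonzero(labels == c), size=n_per_class, replace=False)
            for c in np.unique(labels)}


rng = np.random.default_rng(2)
pcs = np.vstack([rng.normal(0.0, 0.1, (100, 3)), rng.normal(10.0, 0.1, (100, 3))])
labels, centres, wcss = kmeans(pcs, k=2)
reps = pick_representatives(labels, n_per_class=10)
```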

Results and Discussion

The distribution of the particles among the shape classes, together with some representatives, is shown in Fig. 7. The two pie charts give, respectively, the number and the cumulative volume of particles in each class. The fact that some classes dominate in volume means that particle size was not homogeneous across classes. The same figure shows two particles for each class (except class 1). When looking at this image, the reader should keep in mind the limitations of a 2D representation: some particles appear very similar in shape while belonging to different classes. This can be an optical effect of the 2D representation of a 3D image, in which the foreground hides the back of the particle; the shape of the particles shown could only be fully judged in a 3D view. Nevertheless, it is plausible that some particles with very similar shapes belong to different classes. Here, the clustering is a structure imposed on the data rather than one naturally present in them, as can be inferred from Fig. 6: the data set appears as a dense, homogeneous cloud of points without any structural organization. Two particles belonging to neighboring classes and lying close to their common boundary (on opposite sides) are therefore expected to have very similar shapes, and there is no contradiction in this.

The choice of K was based on qualitative considerations and was a compromise between the homogeneity of shapes within each class and the simplicity of the description. A large K (i.e., many small classes) would result in a more complex description, with classes whose shapes are closer to each other. Moreover, more classes mean more boundaries between them, hence a larger total extent of internal boundaries and a larger number of particles lying close to them. Because particles close to an internal boundary share the characteristics of the neighboring classes, more classes would increase the overall uncertainty of the classification. Conversely, a small K (i.e., few large classes) would lead to a pronounced heterogeneity of shapes within each class, reducing the precision of the classification. Several values of K in the range 4 to 15 were tried and compared, and K = 6 was retained as the best compromise.

Finite Element Modeling

Description of the Model

Finite element simulations were developed to study the deformation field produced by the impacts. The physics behind the simulations does not differ from that of most modeling articles in the literature (e.g., among many others, Ref 24, 25). The Mie–Grüneisen equation of state (EOS), which describes the material state in the high-pressure domain and shock wave propagation, was used in its Hugoniot formulation:

\[ p - p_H = \Gamma \rho \, (E - E_H) \]

where \(p\) is the pressure, \(\rho\) the density, \(E\) the specific internal energy, \(\Gamma = \Gamma_0 \rho_0/\rho\), \(\eta = 1 - \rho_0/\rho\), \(p_H = \rho_0 c_0^2 \eta/(1 - s\eta)^2\) and \(E_H = p_H \eta/(2\rho_0)\). Besides the reference density \(\rho_0\), there are three independent parameters for each material in the EOS: \(\Gamma_0\), \(s\) and \(c_0\). Further details on the Mie–Grüneisen EOS can be found in Ref 26.
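
The Hugoniot-referenced form p = p_H + Γρ(E − E_H), with the definitions of η, p_H, E_H and Γ given above, can be evaluated directly. The sketch below is illustrative; the copper values in the example (ρ0 ≈ 8960 kg/m³, c0 ≈ 3940 m/s, s ≈ 1.49, Γ0 ≈ 2.0) are approximate values often quoted in the literature and are not necessarily those of Table 2.

```python
import numpy as np


def mie_gruneisen_pressure(rho, E, rho0, c0, s, gamma0):
    """Mie–Grüneisen pressure (Pa) in the linear Us–Up Hugoniot form.

    rho in kg/m^3, E specific internal energy in J/kg, c0 in m/s; s and gamma0 dimensionless.
    """
    rho = np.asarray(rho, dtype=float)
    eta = 1.0 - rho0 / rho                              # nominal volumetric compressive strain
    p_h = rho0 * c0**2 * eta / (1.0 - s * eta) ** 2     # Hugoniot pressure
    e_h = p_h * eta / (2.0 * rho0)                      # Hugoniot specific internal energy
    gamma = gamma0 * rho0 / rho
    return p_h + gamma * rho * (E - e_h)


rho0, c0, s, gamma0 = 8960.0, 3940.0, 1.49, 2.0         # approximate copper values, for illustration
p = mie_gruneisen_pressure(1.1 * rho0, 0.0, rho0, c0, s, gamma0)
```

Two limiting cases are easy to check: at the reference density the pressure reduces to Γ0ρ0E, and on the Hugoniot (E = E_H) it equals p_H.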

Several types of material models can be used to simulate the mechanical phenomena involved in cold spray (Ref 27, 28). The widely used Johnson–Cook model (Ref 29), an empirical representation of the yield stress, was selected because its material parameters are available in the literature. The model accounts for strain hardening, strain rate hardening and thermal softening. The yield stress is given as:

\[ \sigma_y = \left(A + B\varepsilon^{n}\right)\left(1 + C\ln\frac{\dot{\varepsilon}}{\dot{\varepsilon}_{0}}\right)\left[1 - \left(\frac{T - T_0}{T_m - T_0}\right)^{m}\right] \]

where A, B, C, n and m are material parameters, \(\varepsilon\) is the equivalent plastic strain, \(\dot{\varepsilon }\) the strain rate, \(\dot{\varepsilon }_{0}\) a reference strain rate, \(T\) the temperature, \(T_m\) the melting temperature of the material and \(T_0\) a reference temperature.
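
The Johnson–Cook flow stress can be evaluated as below. This is a sketch: the clipping of the rate term below the reference rate and of the homologous temperature below T0 is an assumed convention, and the reference temperature of 298 K is an assumption. The example uses A = 90 MPa, B = 292 MPa, n = 0.31, C = 0.025, m = 1.09 and Tm = 1356 K, widely quoted for OFHC copper from Johnson and Cook's original calibration; Table 2 may list different values.

```python
import numpy as np


def johnson_cook_stress(eps_p, eps_rate, T, A, B, n, C, m, Tm, eps_rate0=1.0, T0=298.0):
    """Johnson–Cook flow stress, in the units of A and B."""
    hardening = A + B * np.asarray(eps_p, dtype=float) ** n
    # Assumed convention: no rate term below the reference rate, no thermal term below T0
    rate = 1.0 + C * np.log(np.maximum(np.asarray(eps_rate, dtype=float) / eps_rate0, 1.0))
    t_star = np.clip((np.asarray(T, dtype=float) - T0) / (Tm - T0), 0.0, 1.0)
    return hardening * rate * (1.0 - t_star**m)


# Parameters widely quoted for OFHC copper (stresses in MPa); Table 2 may differ
cu = dict(A=90.0, B=292.0, n=0.31, C=0.025, m=1.09, Tm=1356.0)
sigma = johnson_cook_stress(0.5, 1e7, 400.0, **cu)
```

At zero plastic strain, reference rate and reference temperature the function returns A, and it vanishes at the melting temperature, as the formula requires.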

Heat conduction was included in the model, but heat transfer between the particle and the substrate was neglected to avoid introducing additional parameters that cannot be measured. The parameters for the two materials of this study, taken from the literature, are given in Table 2.

Friction was considered at the interface between the particle and the substrate. A modified Coulomb model was applied, i.e., the limit shear stress grows linearly with the normal contact pressure until an upper value is reached. Since earlier works reported a negligible effect of the friction coefficient (Ref 25), it was fixed at 0.5 in the present study. Adhesion was not considered, because it would have required additional unknown parameters and was not expected to have a major influence on the deformation field. The whole model was implemented in the commercial finite element software Abaqus/Explicit® in a full 3D configuration.
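
The modified Coulomb law can be written as τ_crit = min(μp, τ_max): the contact sticks while the shear stress stays below τ_crit. The sketch below uses μ = 0.5 as in the text; the upper limit τ_max is not specified in the paper, so the value in the example is hypothetical, as are the function names.

```python
import numpy as np


def critical_shear_stress(contact_pressure, mu=0.5, tau_max=np.inf):
    """Modified Coulomb law: tau_crit = min(mu * p, tau_max); no shear transfer without contact pressure."""
    p = np.maximum(np.asarray(contact_pressure, dtype=float), 0.0)
    return np.minimum(mu * p, tau_max)


def sticks(tau, contact_pressure, mu=0.5, tau_max=np.inf):
    """True where the interface sticks (|tau| below the critical value), False where it slips."""
    return np.abs(tau) < critical_shear_stress(contact_pressure, mu, tau_max)


tau_max_hyp = 30.0  # hypothetical upper limit, same units as the pressure
print(critical_shear_stress([40.0, 100.0], tau_max=tau_max_hyp))
```

The cap matters at high contact pressure: beyond p = τ_max/μ, the critical shear stress no longer grows with pressure.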

Among all the impact variables (i.e., the features that can differ from one impact to another and can thus influence the result), only a few were selected for a parametric study: the particle shape, the orientation at impact, the velocity and the substrate material. These variations are explained in the following. For each shape class, ten representatives were randomly chosen to define a set of particles representative of the whole powder.

To our knowledge, the orientation of irregular particles at impact has not been discussed in the literature. Some considerations can be made on the basis of fluid dynamics arguments regarding the forces acting on a rigid body of arbitrary shape in a fluid flow (Ref 30). Although equilibrium orientations may exist for bodies with particular symmetries, the existence of a stable orientation is not guaranteed for an irregular body in general. As a result, most particles are expected to oscillate in an irregular and hardly predictable way. Even without invoking particle-to-particle interactions before impact (i.e., in-flight collisions) and particle–nozzle wall interactions, which certainly add to the complexity of the problem, it can reasonably be stated that irregularly shaped particles do not, in general, have a defined orientation at impact. A deeper study of particle–fluid interaction could give more insight into this topic; for example, each particle is likely to have some probability distribution of orientations.

In the present study, four different impact orientations were tested for each particle. The size–velocity measurements described above were used to assign velocities to the particles as follows: for each impact configuration, three velocities, chosen statistically as a function of particle size on the basis of the data spread shown in Fig. 3, were tried. Finally, the substrate material was either copper (representative only of the first impacts of the coating buildup) or tantalum (representative of the remaining impacts, i.e., onto already deposited particles). The total number of simulations is thus: 7 classes × 10 representatives × 3 velocities × 4 orientations × 2 substrates = 1680.
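
The simulation matrix is the Cartesian product of the factors listed above, which is also the natural starting point for scripting the runs. In this sketch the factor labels and the job-name pattern are hypothetical; only the factor counts come from the text.

```python
from itertools import product

# Hypothetical labels for the factors of the parametric study
shape_classes = range(1, 8)                  # 7 shape classes
representatives = range(10)                  # 10 particles drawn per class
velocities = ("v_low", "v_mid", "v_high")    # 3 size-dependent velocities per configuration
orientations = range(4)                      # 4 orientations at impact
substrates = ("Cu", "Ta")

runs = [
    {"cls": c, "particle": p, "velocity": v, "orientation": o, "substrate": s}
    for c, p, v, o, s in product(shape_classes, representatives, velocities, orientations, substrates)
]
job_names = [f"c{r['cls']}_p{r['particle']}_{r['velocity']}_o{r['orientation']}_{r['substrate']}"
             for r in runs]
print(len(runs))  # 7 * 10 * 3 * 4 * 2 = 1680
```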

The mesh of each 3D particle was obtained in several steps. First, starting from the segmented 3D tomographic image discussed above, the commercial software Avizo (FEI, Hillsboro, OR, USA) was used to triangulate the particle surface and smooth it, in order to remove the staircase effect due to the voxels. Then, MeshGems-SurfOpt and MeshGems-Hexa, developed by Distene (Bruyères-le-Châtel, France), were used, respectively, to optimize the surface mesh and to convert the surface triangulation into a hexahedral (i.e., brick-like) volume mesh. Hexahedral elements were chosen because the Abaqus® ALE (Arbitrary Lagrangian–Eulerian) remeshing tool, described below, apparently could not handle tetrahedral meshes in 3D.

The substrate was modeled as a square cuboid divided into three parts for meshing purposes: the zone near the impact (with a fine mesh), a transition zone, and the part far from the impact (with a coarse mesh). Mesh deformation could be locally very large. To achieve numerical convergence with such large deformations, the adaptive meshing tool available in Abaqus was applied to a domain consisting of the particle and the finely meshed part of the substrate. This tool, ALE remeshing, regularizes the distorted mesh by moving the nodes inside the selected domain, which reduces the overall distortion; the solution is then mapped onto the new mesh with a procedure that guarantees energy conservation (Ref 31). Each such regularization pass is called a “mesh sweep.” The user can set the frequency f and the intensity i of remeshing: every f time increments, the software performs i mesh sweeps. The simulation was divided into two steps: the first covered the impact and most of the deformation, while the second contained the rest of the simulation, i.e., the less severe part of the deformation, with relaxation and rebound of the particle. Remeshing was needed only in the first step, when fast material deformation caused severe mesh distortion. It was deactivated in the second step to avoid any further influence of remeshing on the results: heavy use of ALE remeshing can introduce spurious mesh distortions and non-physical temperature changes during the simulation.
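
To make the notions of mesh sweep, frequency f and intensity i concrete, the sketch below uses a simple Laplacian smoothing sweep on a structured 2D mesh as an illustrative analogue. It is not the smoothing algorithm implemented in Abaqus, and it omits the remapping of the solution onto the new mesh; the function names and the schedule are hypothetical.

```python
import numpy as np


def smoothing_sweep(nodes):
    """One Jacobi Laplacian sweep on a structured 2D mesh of shape (ny, nx, 2):
    every interior node moves to the mean of its four neighbours; boundary nodes stay put."""
    new = nodes.copy()
    new[1:-1, 1:-1] = 0.25 * (nodes[:-2, 1:-1] + nodes[2:, 1:-1] + nodes[1:-1, :-2] + nodes[1:-1, 2:])
    return new


def remesh_if_due(nodes, increment, f, i):
    """Hypothetical schedule: every f increments, apply i sweeps."""
    if increment % f == 0:
        for _ in range(i):
            nodes = smoothing_sweep(nodes)
    return nodes


grid = np.stack(np.meshgrid(np.arange(5.0), np.arange(5.0), indexing="ij"), axis=-1)
distorted = grid.copy()
distorted[2, 2] += (0.4, 0.3)                # one badly placed interior node
smoothed = remesh_if_due(distorted, increment=10, f=5, i=1)
```

A uniform mesh is left unchanged by a sweep, while a displaced interior node is pulled back toward the centroid of its neighbors; repeated sweeps (larger i) spread the regularization further into the domain.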

An important issue linked with remeshing is the loss of correspondence between mesh nodes and material points. To study the displacement fields produced within the substrate at impact, the movement of the material points must be known. The Abaqus® “tracer particles” tool was used for this purpose. It follows the position of a number of material points during the simulation, this number being limited by the available memory. The material points coinciding at t = 0 with a chosen sample of nodes, located in the finely meshed portion of the substrate, were thus followed during the whole simulation, and their final coordinates were exported to a file. In this way, a discretized picture of the final displacement field was obtained and stored for later use in the buildup model. These techniques made it possible to simulate and export the deformation fields resulting from different impact conditions.

Results and Discussion

The results of the powder particle classification guided the choice of the impact simulations of real particles onto a flat substrate presented in this section. Ten representative particles from each class were randomly chosen for the impact simulations. Using the classification results ensured a better representativeness of the chosen set of particles, which helped limit the number of simulations. Nevertheless, this number (1680) was still too large to handle the simulations individually, i.e., to tune the parameters (mesh size, step times, remeshing parameters) manually for each one. Instead, an automatic procedure was set up using the scripting capabilities of Abaqus, so that a large number of simulations could be run. The drawback was reduced control over individual simulations, because some parameters, such as the mesh size, had to be fixed to the same value for every simulation. For this reason, only a small number of simulations were completed successfully; the rest failed due to excessive distortion of one or more elements. An analysis of the simulations is shown in Fig. 8, which gives the effect of the substrate material (left) and of the particle velocity (right). Concerning the material, tantalum particles impacted either a copper or a tantalum substrate. Impacts on copper had a lower success ratio than impacts on tantalum, because the overall deformation is larger, so the probability that an element undergoes excessive deformation is higher. The same argument holds for particle velocity: the fraction of successful simulations decreases as particle velocity increases, because a higher velocity results in larger deformation. A possible solution to the low success ratio could be a procedure for automatically tuning the meshing parameters and the duration of the time steps.
Another way of improving the simulation outcome could be a different modeling approach, e.g., Eulerian instead of Lagrangian, which is more promising for large deformations. Element deletion triggered by damage has also been shown to be an effective technique to avoid excessive mesh distortion (Ref 32); a drawback of this technique is that the mass of the model is not conserved.

An example of a real particle impact simulation on copper is shown in Fig. 9, for a particle velocity of 572 m s−1. The upper image shows part of the simulation domain in the initial state, before impact; the lower image shows a cross section of the deformed state. Copper is shown in lighter gray and tantalum in darker gray. As already pointed out in the literature (Ref 33, 34), both the substrate surface and the particle geometry have an important effect on the deformation behavior. For example, as shown in Ref 33, a disk-like particle impinging on the substrate with its flat side or with its edge can produce dramatically different results. In 3D, and for completely irregular particles, the variability of single impacts is much higher. Analyzing the impact outcome for all particle shapes and orientations was not among the aims of the present study, which is oriented toward the development of new methods. In particular, these FE simulations were designed to feed the coating buildup model presented hereafter, once it is extended to 3D. What can be said here is that the impact of a non-spherical particle onto a flat substrate, for a given pair of materials and in the velocity range of the cold spray process, is a complex phenomenon that depends on several key factors, such as particle velocity, shape and orientation. Qualitatively, the kinetic energy per unit contact area seems to be the parameter controlling the extent of deformation and penetration; more work is needed to establish a quantitative relationship between shape factors and deformation patterns.

The focus of this study was on particle morphology, and for the sake of simplicity, the substrate was idealized as flat. Nevertheless, it is well known that the morphology of the substrate surface plays a key role in the coating buildup, as well as in single particle impacts (Ref 34) and should be considered in future developments of FE analysis.

Buildup Model

Bases of the Modeling Approach

FE analysis is a powerful technique for modeling particle impacts, but when it comes to simulating the buildup of a whole coating, the CPU time and memory requirements become prohibitive because of the large number of particles involved. To overcome this limitation without giving up the physically meaningful results of FE analysis, a coating buildup model was developed. In this model, data from different FE simulations of single particle impacts are combined iteratively to simulate coating formation as a sequence of depositions of single powder particles. The buildup model was implemented entirely in Python, using the NumPy library (Ref 35) and the “interpolate” and “spatial” modules of SciPy (Ref 36). In the basic iterative algorithm, splats are added one by one to the substrate, which at the beginning of the simulation is a flat piece of copper. For the sake of simplicity, the model was developed in 2D, although an extension to 3D is planned. Accordingly, a series of 2D FE simulations of disk-like particles of different sizes and velocities was performed with the same modeling techniques as in 3D (in particular remeshing and tracer particles). This produced a data set of 2D discretized displacement fields, used as the basis of the 2D coating buildup model described below.

The data from single particle impact FE simulations consist of discretized displacement fields, i.e., the positions of the tracer particles before and after impact, as shown in Fig. 10. These two sets of points are in one-to-one correspondence and are hereafter called P and P′. They constitute the basic input of the coating buildup model. During coating formation, a particle impinges on a substrate composed of already deposited particles, and these particles, at least those near the impinging one, deform further as a consequence of the impact. For the purposes of the model, it is important to track the changes of particle boundaries inside the growing coating induced by each impact. To this aim, the discrete deformation fields are not sufficient and have to be extended to the continuum. This was achieved with an interpolation scheme based on Delaunay triangulation and a set of affine transformations, as follows. A Delaunay triangulation algorithm (from the SciPy bundle mentioned above) was applied to the initial tracer particle positions P. For each triangle ABC of the triangulation, the corresponding deformed triangle A′B′C′ is obtained from the final positions (deformed state) of the tracer particles. Since exactly one affine transformation maps a non-degenerate triangle ABC onto A′B′C′ (Ref 37), the positions of the vertices before and after deformation define a unique affine transformation \(\alpha_i\) for each triangle of the Delaunay triangulation. The collection of affine transformations \(\{\alpha_i\}\), which forms a continuous, piecewise affine transformation, maps every point of the triangulated substrate domain before impact onto the corresponding point after impact.
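
The Delaunay-plus-affine interpolation can be sketched with `scipy.spatial.Delaunay`. The function names are hypothetical, and treating points outside the convex hull of the tracers as undisplaced is an assumption of this sketch. Per triangle, the affine map is obtained by solving a 3 × 3 linear system; the result coincides with SciPy's `LinearNDInterpolator` built on (P, P′), which performs the same piecewise linear interpolation over a Delaunay triangulation.

```python
import numpy as np
from scipy.interpolate import LinearNDInterpolator
from scipy.spatial import Delaunay


def triangle_affine_maps(P, P_def, tri):
    """For each triangle, the 3x2 matrix M_i such that [x, y, 1] @ M_i = [x', y']."""
    maps = np.empty((len(tri.simplices), 3, 2))
    for i, simplex in enumerate(tri.simplices):
        src = np.column_stack([P[simplex], np.ones(3)])   # rows [x, y, 1] of vertices A, B, C
        maps[i] = np.linalg.solve(src, P_def[simplex])    # unique for a non-degenerate triangle
    return maps


def deform(Q, P, P_def):
    """Apply the piecewise affine map defined by tracer positions P -> P_def to points Q.

    Assumption: points outside the convex hull of P are returned unchanged (undisplaced).
    """
    Q = np.asarray(Q, dtype=float)
    tri = Delaunay(P)
    maps = triangle_affine_maps(P, P_def, tri)
    idx = tri.find_simplex(Q)                             # -1 outside the triangulation
    out = Q.copy()
    inside = idx >= 0
    Qh = np.column_stack([Q[inside], np.ones(inside.sum())])
    out[inside] = np.einsum("nk,nkj->nj", Qh, maps[idx[inside]])
    return out


rng = np.random.default_rng(3)
P = np.vstack([[[0, 0], [1, 0], [0, 1], [1, 1]], rng.uniform(0, 1, (60, 2))])
A = np.array([[1.1, 0.2], [-0.1, 0.9]])
t = np.array([0.05, -0.3])
P_def = P @ A.T + t              # a global affine "impact", so the exact answer is known
Q = rng.uniform(0.1, 0.9, (200, 2))
Q_def = deform(Q, P, P_def)
```

In the buildup model, Q would be the points describing particle boundaries already in the coating; a global affine test field is used here only because a piecewise affine map must reproduce it exactly.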

When looking at single-particle impacts, an important difference exists between the FE simulations and the coating buildup simulation. In the former, the substrate is a perfectly flat block of homogeneous material (copper or tantalum), while in the latter, except for the first impact, it is rough and inhomogeneous, being made of the initial copper substrate and the already deposited particles. Given the complexity of surface roughness, this effect was not included in the FE simulations, since it would have dramatically increased the already large number of simulations required. Future developments of the model will focus on this question. In particular, the effects of substrate roughness and of the presence of already deposited particles on the deformation pattern at impact will be directly included in the coating buildup model, with the help of a preliminary FE study. Some steps in this direction can already be found in Ref 34. To summarize, a strong working hypothesis was made for the present model, namely that substrate roughness has no significant effect on the displacement fields. To deal with coating roughness, data from FE simulations (impacts onto a flat substrate) were adapted to the free surface of the growing coating by means of the following transformation:
$$x' = x + x_{\mathrm{imp}}, \qquad y' = y + s\left(x + x_{\mathrm{imp}}\right) \qquad (3)$$
where x and y are the coordinates of a tracer particle in the FE simulation (with the origin at the first contact point between particle and substrate), $x_{\mathrm{imp}}$ is the x-coordinate of the impact point in the coordinate system of the coating buildup model, and s(x) is the y-coordinate of the free surface at x. The impact onto the rough surface is thus decomposed: the roughness existing before the impact is simply added to the result of the impact on a flat surface. An important drawback of this approach, compared with a full FE simulation of the coating buildup, is that the stress and strain states of the substrate and of the deposited particles are neglected. Future developments of the model will try to keep track of the evolution of stress and strain, although this will require some modifications of the present modeling approach.
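Placing an FE displacement field onto the rough free surface can be sketched as follows, under the assumption that the transformation takes the form x′ = x + x_imp and y′ = y + s(x′): a horizontal shift to the impact point followed by adding the local surface height. The free surface is assumed here to be stored as a single-valued polyline sampled at increasing abscissae and evaluated by linear interpolation with `numpy.interp`; the function name `place_on_surface` is hypothetical.

```python
import numpy as np


def place_on_surface(xy_fe, x_imp, surf_x, surf_y):
    """Map FE tracer coordinates onto the current free surface of the coating.

    xy_fe: (n, 2) FE coordinates, origin at the first particle/substrate contact.
    x_imp: impact abscissa in the coating buildup coordinate system.
    surf_x, surf_y: samples of the free surface s(x); surf_x must be increasing.
    Assumed transformation: x' = x + x_imp, y' = y + s(x').
    """
    xy_fe = np.asarray(xy_fe, dtype=float)
    x_new = xy_fe[:, 0] + x_imp
    # Linear interpolation of s; np.interp clamps to the end values outside
    # the sampled range of the surface.
    s = np.interp(x_new, surf_x, surf_y)
    return np.column_stack([x_new, xy_fe[:, 1] + s])
```

On a flat surface at height h this reduces to a rigid translation by (x_imp, h); on a rough surface each tracer is lifted by the local surface height, which is exactly the "roughness simply added" decomposition and also why pre-existing asperities are carried through the impact unchanged.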

The basic iterative algorithm, which is the core of the buildup model, is given stepwise below.

1. An impinging particle is selected from the particle library.

2. The impact conditions, namely particle velocity and substrate material, are assigned. These identify one particular displacement field among those obtained from the FE simulations performed beforehand.

3. The 2D FE simulation results are placed with respect to the current free surface of the coating (as given in Eq 3) and interpolated (Delaunay triangulation and affine transformations).

4. The substrate, consisting at this point of the initial substrate and the already deposited particles, is deformed as dictated by the interpolated deformation field.

5. The impinging particle itself is added (as taken from the deformed state in the FE simulations), and the list of particle boundaries of the coating is updated.

This procedure is then repeated iteratively for the next splats. The data structure stored at each step represents a complete picture of the microstructure. It consists of a dictionary (in the Python sense of the term) containing arrays of points (discretized splat boundaries), labeled to keep track of the neighborhood relationships between pairs of splats.
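The bookkeeping around the iterative algorithm can be sketched as follows. Everything in it is hypothetical: the library layout (a dictionary keyed by particle identifier, impact velocity and substrate material), the uniform random choice of particle, the snapping of a sampled velocity to the nearest simulated one, and the boundary dictionary keyed by ordered pairs of labels (splat identifiers, plus reserved labels for the initial substrate and the free surface). With this layout, step 4 amounts to mapping every stored boundary array through the interpolated deformation, and step 5 to inserting the boundaries of the new splat.

```python
import numpy as np

# Hypothetical reserved labels; deposited splats are numbered 1, 2, 3, ...
SUBSTRATE = 0      # initial copper block
FREE_SURFACE = -1  # coating/air interface


def select_impact(library, rng, velocity_sampler, substrate):
    """Steps 1-2: pick a particle and impact conditions, return the FE data.

    library: dict mapping (particle_id, velocity, substrate) to stored FE
        results, e.g. {"P": ..., "P_prime": ..., "splat": ...}.
    velocity_sampler: callable taking rng and returning a velocity in m/s.
    """
    particle_ids = sorted({key[0] for key in library})
    pid = particle_ids[rng.integers(len(particle_ids))]  # uniform choice
    target = velocity_sampler(rng)
    # Only a discrete set of velocities was simulated: take the nearest one.
    available = [v for (p, v, s) in library if p == pid and s == substrate]
    if not available:
        raise KeyError(f"no FE data for particle {pid} on {substrate}")
    v = min(available, key=lambda a: abs(a - target))
    key = (pid, v, substrate)
    return key, library[key]


def interface_key(i, j):
    """Order-independent key for the boundary shared by labels i and j."""
    return (min(i, j), max(i, j))


def apply_deformation(boundaries, deform_fn):
    """Step 4: move every stored boundary point with the deformation field.

    deform_fn maps an (n, 2) array of points to their deformed positions.
    """
    return {key: deform_fn(pts) for key, pts in boundaries.items()}


def add_splat(boundaries, new_id, interfaces):
    """Step 5: register the boundaries of a newly deposited splat (in place).

    interfaces maps a neighbour label to the (n, 2) points shared with it.
    Trimming the old free surface beneath the new splat is not handled here.
    """
    for neighbour, pts in interfaces.items():
        boundaries[interface_key(new_id, neighbour)] = np.asarray(pts, dtype=float)
    return boundaries


def neighbours(boundaries, label):
    """Labels that share at least one stored boundary with `label`."""
    return sorted(b if a == label else a for a, b in boundaries if label in (a, b))
```

Keying each boundary by the pair of labels it separates makes neighborhood queries a simple scan of the keys, at the cost of storing shared boundaries once per pair; a complete implementation would also have to recompute the free-surface entries after each deposition.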

Results and Discussion

Figure 11 shows the microstructures produced by the coating buildup model after 5, 10, 20 and 30 iterations. These results suggest that the model correctly reproduces the deformations induced by the first impacts. After a certain number of iterations, however, localized discontinuities of the free surface, hereafter called "spikes", accumulate unrealistically in certain zones of the coating. Physically, a particle impinging onto one of these spikes would flatten it, at least partially. In the model, instead, some spikes tend to survive particle impacts, resulting in unrealistic coating roughness and splat deformation. Spikes can thus be considered numerical artifacts, certainly caused by neglecting the effect of substrate roughness on single impacts. Moreover, the extreme roughness of the growing coating, induced by the spike formation mechanism, degrades the quality of the Delaunay triangulation, producing intersecting splat boundaries that would never occur in reality.

The results presented in this paper show that the proposed approach is valid and promising, but the working hypothesis of neglecting substrate roughness must be relaxed. This problem is currently under study. The coating buildup model, although not yet optimized, runs easily on a laptop PC because it requires relatively little memory and computational time. It should be mentioned, however, that the model was never tested for more than 120 iterations, because the roughness accumulation issue made it unusable beyond a few layers of deposited particles. Nevertheless, the model is at an early stage, and future developments should make it possible to simulate microstructures closer to reality and to increase the number of deposited particles.

Conclusion

The modeling approach presented in this paper aimed at developing a tool with a broad scope, ranging from the morphology of the initial powder to the microstructure of the final coating.

The originality of the work lies in several aspects. Firstly, the focus was on 3D, at least in the experimental characterization by microtomography and in the finite element modeling. Secondly, the finite element impact simulations were not applied to idealized (e.g., spherical) particles, but to "real" ones, as observed by microtomography. Finally, the idea of combining results from finite element modeling with a simpler morphological approach is very promising. Although this idea is at an initial stage of development, it nevertheless made it possible to perform a preliminary 2D simulation of the coating buildup. By virtue of the coupling with the finite element method, this approach maintains a strong link with the physical mechanical behavior at impact.

Outlook

The development of the coating buildup model is ongoing, addressing one by one the issues that limit its applicability. The final aim is a reliable tool capable of simulating the impact of thousands of particles. The pathway to this goal involves including roughness effects, extending the model to 3D and using impact simulations of real particles. The first step, i.e., including roughness effects, is expected to solve, or at least alleviate, several problems, in particular the accumulation of spikes. The impact of a particle onto a protruding part of the surface will then tend to smooth it. At the same time, valleys in the surface will have an enhanced tendency to be filled, so that the coating buildup will be more regular and the final microstructure closer to the real one. Concerning the extension to 3D, new interpolation techniques have to be developed for the displacement fields. This extension is expected to help close the gap between modeling and reality, allowing the exploration and understanding of interlocking and piling-up mechanisms, which cannot be captured in 2D simulations but certainly occur in reality.

The final model is intended for the optimization of process parameters (powders, gas pressure and temperature), so that the deposited material meets the required mechanical or functional properties.

Abbreviations

- FE: Finite elements
- SEM: Scanning electron microscopy
- ESRF: European Synchrotron Radiation Facility
- XMT: X-ray microtomography
- PAI: Principal axes of inertia
- PCA: Principal component analysis
- EOS: Equation of state
- ALE: Arbitrary Lagrangian–Eulerian

References

1. V.K. Champagne, Ed., The Cold Spray Material Deposition Process, Woodhead Publishing Limited, Cambridge, 2010

2. A. Vardelle, C. Moreau, J. Akedo et al., The 2016 Thermal Spray Roadmap, J. Therm. Spray Technol., 2016, 25(8), p 1376. doi:10.1007/s11666-016-0473-x

3. H. Assadi, H. Kreye, F. Gärtner, and T. Klassen, Cold Spraying—A Materials Perspective, Acta Mater., 2016, 116, p 382-407

4. G. Benenati and R. Lupoi, Development of a Deposition Strategy in Cold Spray for Additive Manufacturing to Minimize Residual Stresses, Proc. CIRP, 2016, 55, p 101-108

5. Y. Cormier, P. Dupuis, B. Jodoin, and A. Corbeil, Pyramidal Fin Arrays Performance Using Streamwise Anisotropic Materials by Cold Spray Additive Manufacturing, J. Therm. Spray Technol., 2016, 25(1-2), p 170-182

6. X. Wang et al., Characterization and Modeling of the Bonding Process in Cold Spray Additive Manufacturing, Addit. Manuf., 2015, 8, p 149-162

7. D. MacDonald, R. Fernández, F. Delloro et al., Cold Spraying of Armstrong Process Titanium Powder for Additive Manufacturing, J. Therm. Spray Technol., 2017, 26(4), p 598-609. doi:10.1007/s11666-016-0489-2

8. C. Lee and J. Kim, Microstructure of Kinetic Spray Coatings: A Review, J. Therm. Spray Technol., 2015, 24(4), p 592-608

9. G. Bae et al., General Aspects of Interface Bonding in Kinetic Sprayed Coatings, Acta Mater., 2008, 56, p 4858-4868

10. M. Saleh, V. Luzin, and K. Spencer, Analysis of the Residual Stress and Bonding Mechanism in the Cold Spray Technique Using Experimental and Numerical Methods, Surf. Coat. Technol., 2014, 252, p 15-28

11. A. Trinchi, Y.S. Yang, A. Tulloh et al., Copper Surface Coatings Formed by the Cold Spray Process: Simulations Based on Empirical and Phenomenological Data, J. Therm. Spray Technol., 2011, 20(5), p 986-991. doi:10.1007/s11666-011-9613-5

12. http://www.cea.fr/Pages/le-cea/les-centres-cea/le-ripault.aspx. Accessed 31 May 2017 (in French)

13. O. Amsellem, K. Madi, F. Borit et al., Two-Dimensional (2D) and Three-Dimensional (3D) Analyses of Plasma-Sprayed Alumina Microstructures for Finite-Element Simulation of Young's Modulus, J. Mater. Sci., 2008, 43(12), p 4091-4098. doi:10.1007/s10853-007-2239-9

14. S. Ahmadian, A. Browning, and E.H. Jordan, Three-Dimensional X-Ray Micro-Computed Tomography of Cracks in a Furnace Cycled Air Plasma Sprayed Thermal Barrier Coating, Scr. Mater., 2015, 97, p 13-16

15. M. Jeandin, H. Koivuluoto, and S. Vezzu, Chap. 4 "Coating Properties", Modern Cold Spray, 1st ed., J. Villafuerte, Ed., Springer, Berlin, 2015

16. G. Rolland et al., Laser-Induced Damage in Cold-Sprayed Composite Coatings, Surf. Coat. Technol., 2011, 205, p 4915-4927

17. O. Amsellem, F. Borit, D. Jeulin et al., Three-Dimensional Simulation of Porosity in Plasma-Sprayed Alumina Using Microtomography and Electrochemical Impedance Spectrometry for Finite Element Modeling of Properties, J. Therm. Spray Technol., 2012, 21(2), p 193. doi:10.1007/s11666-011-9687-0

18. https://smil.cmm.mines-paristech.fr. Accessed 21 Dec 2016

19. S. Beucher et al., The Watershed Transformation Applied to Image, 10th Pfefferkorn Conf. on Signal and Image Processing in Microscopy and Microanalysis, 16-19 Sept 1991, Cambridge, UK, Scanning Microscopy International, suppl. 6, 1992, p 299-314

20. E. Parra-Denis, C. Barat, D. Jeulin, and C. Ducottet, 3D Complex Shape Characterization by Statistical Analysis: Application to Aluminium Alloys, Mater. Charact., 2008, 59, p 338-343

21. L. Gillibert, C. Peyrega, D. Jeulin, V. Guipont, and M. Jeandin, 3D Multiscale Segmentation and Morphological Analysis of X-Ray Microtomography From Cold-Sprayed Coatings, J. Microsc., 2012, 248(2), p 187-199

22. I.T. Jolliffe, Principal Component Analysis, 2nd ed., Wiley, New York, 2002

23. B.S. Everitt, S. Landau, M. Leese, and D. Stahl, Cluster Analysis, 5th ed., Wiley, New York, 2011

24. R. Ghelichi, S. Bagherifard, M. Guagliano, and M. Verani, Numerical Simulation of Cold Spray Coating, Surf. Coat. Technol., 2011, 205, p 5294-5301

25. W.Y. Li, C.J. Li, and H. Liao, Modeling Aspects of High Velocity Impact of Particles in Cold Spraying by Explicit Finite Elements Analysis, Proceedings of the International Thermal Spray Conference, 2009, p 432-441

26. T. Antoun et al., Spall Fracture, Springer, New York, 2003

27. S.R. Chen and G.T. Gray, Constitutive Behaviour of Tantalum and Tantalum-Tungsten Alloys, Metall. Mater. Trans. A, 1996, 27(10), p 2994-3006

28. S. Rahmati and A. Ghaei, The Use of Particle/Substrate Material Models in Simulation of Cold-Gasdynamic-Spray Process, J. Therm. Spray Technol., 2014, 23(3), p 530-540

29. G.R. Johnson and W.H. Cook, A Constitutive Model and Data for Metals Subjected to Large Strains, High Strain Rates, and High Temperatures, Proceedings of the 7th International Symposium on Ballistics, 1983, p 541-547

30. R. Clift, J.R. Grace, and M.E. Weber, Bubbles, Drops, and Particles, Academic Press, New York, 1978

31. ABAQUS Documentation, Dassault Systèmes, Providence, RI, USA, 2015

32. B. Yildirim, S. Müftü, and A. Gouldstone, Modeling of High Velocity Impact of Spherical Particles, Wear, 2011, 270(9-10), p 703-713

33. S. Yin, P. He, H. Liao, and X. Wang, Deposition Features of Ti Coating Using Irregular Powders in Cold Spray, J. Therm. Spray Technol., 2014, 23(6), p 984

34. Q. Blochet et al., Influence of Spray Angle on Cold Spray with Al for the Repair of Aircraft Components, Thermal Spray 2014: Proceedings of the International Thermal Spray Conference, Barcelona, Spain, 2014

35. S. van der Walt, S.C. Colbert, and G. Varoquaux, The NumPy Array: A Structure for Efficient Numerical Computation, Comput. Sci. Eng., 2011, 13, p 22-30. doi:10.1109/MCSE.2011.37

36. E. Jones, T. Oliphant, P. Peterson et al., SciPy: Open Source Scientific Tools for Python, 2001, http://www.scipy.org. Accessed 21 Dec 2016

37. P.J. Ryan, Euclidean and Non-Euclidean Geometry, International Student Edition, Cambridge University Press, 2009

Acknowledgments

The authors are grateful to CEA DAM and to the Institute Carnot-M.I.N.E.S. for the financial support of this study.


Cite this article

Delloro, F., Jeandin, M., Jeulin, D. et al. A Morphological Approach to the Modeling of the Cold Spray Process. J Therm Spray Tech 26, 1838–1850 (2017). https://doi.org/10.1007/s11666-017-0624-8
