Abstract

Recent work has investigated various schemes for the attachment of free-floating grains in models of equiaxed solidification in multicomponent alloys. However, these models are deterministic in nature, and simply investigating their differences for a limited number of results would not constitute an adequate comparison of their predictions. Instead, the models are compared in the context of the uncertainty in the most important input parameters. This approach is especially important in light of the effort required to implement a new model. If the predictions are essentially the same, then either model will suffice, or one may be selected for ease of implementation, numerical robustness, or computational efficiency. If, however, the models are significantly different, then the most accurate should be selected. In order to investigate the effects of input uncertainty on the output of grain attachment models, the PRISM Uncertainty Quantification framework was employed. The three models investigated were a constant packing fraction (CPF) scheme, an average solid velocity method (AVM), and a continuum attachment approach. Comparisons were made between the CPF and AVM models to estimate the importance of the local velocity field and between the CPF and continuum models to determine the sensitivity of the macrosegregation to new parameters unique to the continuum model.

Introduction

In any model intended to represent some physical system, there are uncertainties in both the values of the appropriate input parameters and the assumptions used in constructing the model itself. These different sources of uncertainty may be broadly categorized into two types: epistemic and aleatoric.[1] Epistemic uncertainties, also called reducible uncertainties, may be theoretically reduced by gaining additional knowledge of a system. In many cases, different models are proposed for a specific physical phenomenon, each making use of different assumptions and representing the underlying governing equations in different ways; the choice among them is an epistemic uncertainty. For example, improved measurements of a material property, or experimental validation sufficient to support the use of one model type over another, reduce this kind of uncertainty. The second type is aleatoric, or irreducible, uncertainty. Aleatoric uncertainties are natural, random variations in inputs that cannot be reduced given more knowledge of the system. An example is the uncertainty in the value of a given input due to the error inherent to a particular measurement technique.

The practice of understanding how these different sources of uncertainty affect the output of a model is called uncertainty quantification (UQ). Due to the ever-present nature of input uncertainties, UQ has become increasingly common in fields such as heat transfer,[2] fluid mechanics,[3,4] and materials[1] modeling. To this point, however, UQ has gained only limited adoption in the solidification community.[5,6,7,8] Hardin et al.[5] used UQ for design optimization in a simple casting by predicting the probability of failure for a particular riser size, and iterating to reduce cost while minimizing casting defects. Fezi and Krane[6,9] used a non-intrusive UQ method to evaluate the uncertainty in a series of solidification models of increasing complexity, beginning with a one-dimensional wire casting model, and advancing to two-dimensional models for static castings and direct chill casting. These examples primarily considered the effect of aleatoric uncertainties (although Fezi and Krane[6] considered the effect of three different permeability models for the mushy zone). The purpose of the present work is to further demonstrate how UQ may be used within the solidification field to understand the present state of solidification modeling, to improve comparisons to experimental data, and to accelerate model development and guide corresponding experimental work. In particular, cases in which multiple possible models may be used for the same physical phenomenon are of interest. To this end, an example problem of static casting of a grain refined aluminum alloy is presented in which several different models for treating the attachment of free-floating grains have been proposed. The primary goal is to understand the effect of the epistemic uncertainty in the choice of various models on the resulting macrosegregation pattern. A method for quantifying uncertainty in solidification models is presented, as well as techniques for comparing outputs of the UQ process between models and approaches for visualizing the results in a manner that will enable comparisons to future experimental work.

Predicting macrosegregation in equiaxed solidification presents several challenges from a modeling standpoint. The motion of free-floating particles with respect to the bulk fluid flow, their coalescence into a rigid solid structure, and the permeability of the resulting solid network all affect the overall macrosegregation distribution and each necessitates careful consideration of both the model and its numerical representation. In particular, the shape of the interface between the slurry (containing free-floating grains) and rigid solid regions has a dramatic effect on the local fluid flow due to the damping effect of the dendrite permeability. The multi-phase and mixture formulations represent two distinct approaches to modeling equiaxed solidification, each with advantages and disadvantages. The multi-phase formulation solves governing conservation equations for each phase considered within the system. The simplest of these are two-phase models[10,11] that include only the solid and bulk liquid and generally assume a spherical grain morphology. In this case, dendrite coherency occurs at a volume fraction solid equal to the density of random close packed spheres (0.637).[12] Three-phase models[13,14] add a set of equations for the interdendritic liquid, allowing for the consideration of spherical grain envelopes that include solid dendrites and interdendritic liquid. In this case, dendrite coherency occurs when the grain envelope fraction within a numerical cell equals that of random close packed spheres, and this value relates to the solid fraction via a set of relationships describing the solid grain morphology inside the envelope.[15] Further extensions of this model type include both equiaxed and columnar grain morphologies and their associated interdendritic liquids, allowing for the prediction of the columnar-to-equiaxed transition.[16,17]

While flexible and detailed, the multi-phase formulation also incurs a significant computational expense due to the necessity of solving a separate set of conservation equations for each phase. The mixture approach avoids this problem by developing a set of governing equations written in terms of variables which are mixtures of contributions from the constituent phases.[18,19] In this way, only a single set of conservation equations is solved, and individual phase contributions are extracted using supplemental relationships when necessary. Vreeman et al.[20,21] formulated such a model for equiaxed solidification based on the work of Ni and Incropera.[22,23] Subsequent work compared macrosegregation predictions from this type of model to experimental measurements of an industrial scale direct chill casting, finding a favorable comparison for the case of a grain refined, fully equiaxed ingot.[24] These studies assumed that dendrite coalescence occurred at a specified volume fraction solid that was held constant and uniform throughout the domain, disregarding solid morphology and velocity in determining the dendrite coherency point. Instead, the selection of a packing fraction lower than that for random close-packed spheres implicitly defined an assumption about the grain morphology.

Within the framework of the mixture formulation of the governing conservation equations for heat and mass transport in solidification, previous studies[25,26,27] have proposed several models for grain attachment (also referred to as dendrite coherency or packing) and compared their predictions in simple example cases. This study seeks to expand on these comparisons using an uncertainty quantification (UQ) framework to understand the distribution of outputs that result from each of the attachment schemes and to determine whether they produce significantly different predictions.

The previously mentioned studies in equiaxed solidification modeling (for both formulations of the governing equations) assumed that dendrite coherency occurs at some solid or grain envelope volume fraction that is held constant and uniform over the domain. For the mixture formulation, Vusanovic and Krane[25] developed a method for including the effect of the local velocity field on grain attachment and implemented it in a model for horizontal direct chill casting. They presumed that the flow of solid grains toward a rigid interface caused a local depression in the packing fraction, while flow away from an interface increased the packing fraction. Their model worked by expressing the packing criterion as a function of the conditions of a control volume downstream from the cell of interest. In this way, rather than using the constant and uniform packing fraction proposed by Vreeman et al.,[20,21] the local packing fraction varied throughout the domain depending on the motion of the free-floating grains.

Plotkowski and Krane[26] more closely investigated the consequences of using velocity-based packing models in simulations of static castings. They found that attachment models used for mixture formulations of equiaxed solidification suffered from significant numerical artifacts resulting from the treatment of grain attachment phenomena as discrete events on the length scale of the numerical grid. In these models, each control volume may only contain fully free-floating or fully rigid solid with no intermediate states. When a cell switches from one state to the other, the sudden introduction of the permeability term for the rigid solid has a dramatic effect on the flow, causing changes in the local solute advection and erroneous macrosegregation predictions. In a subsequent study,[27] they proposed a continuum grain attachment model that significantly reduced the formation of these artifacts. This model managed the transition from free-floating to rigid solid within a cell so that changes in the governing equations occur smoothly over a specified range of solid fractions. The path that the system takes through the transition was controlled using a simple power-law weighting function that affects the relevant source terms in the momentum conservation equations.

Essentially, there are currently three types of grain attachment models to choose from within the mixture formulation: constant packing fraction, velocity based, and continuum. While there are some differences in the outcomes of each packing model (highlighted in References 26 and 27), there is uncertainty in which is the best representation of the physical behavior of the system. This is an epistemic uncertainty in that improved knowledge of the physical system through careful experimentation would theoretically allow for determination of the most accurate model. Unfortunately, validation data sufficient to select a particular attachment model are not currently available, but we must still ask the question: given the present knowledge of the system, is one model actually superior to the others? To approach this problem, a more fundamental question must first be answered: do the models actually produce different results? In answering these questions, specific areas of model development and experimental validation research will be identified.

This study will first present a general mixture model for equiaxed solidification capable of representing solid motion and each of the three grain attachment schemes followed by a description of the uncertainty quantification framework and methods for quantitatively comparing output distributions. Next, the results of each attachment scheme for a simple example problem will be presented and the main differences will be highlighted. Probability distributions for a variety of input parameters will then be imposed. Answers to the questions outlined above will be investigated by comparing the relevant output probability distributions. It should be noted that the purpose of this study is not to present a detailed analysis of the uncertainty for one particular case, but to demonstrate how these uncertainty quantification methods may be implemented for solidification models in general in order to guide future work in both model development and supporting experimentation. To that end, differences among the grain attachment models will be used as an example of a facet of solidification modeling that presently has a significant amount of uncertainty in both input parameters and choice of numerical methods.

Model Development

Solidification Model Description

The basis of the models used for this work is the mixture formulation proposed by Vreeman et al.,[20] written here for an axisymmetric domain, and solved using standard finite volume techniques[28] on a uniform, orthogonal, staggered grid. Mass continuity may be expressed as:

\[ \frac{\partial \rho}{\partial t} + \nabla \cdot \left( \rho \vec{V} \right) = 0 \qquad [1] \]

where \( \rho = g_{s} \rho_{s} + g_{l} \rho_{l} \) is the mixture density with solid and liquid densities, \( \rho_{i} \), weighted by volume fractions \( g_{i} \), and \( \vec{V} \) is the mixture velocity vector defined as \( \vec{V} = f_{s} \vec{V}_{s} + f_{l} \vec{V}_{l} \), where \( \vec{V}_{i} \) are the velocity vectors of the solid and liquid phases and \( f_{i} \) are the corresponding mass fractions. Phase densities are assumed constant with temperature and composition except where represented by the Boussinesq approximation, and in this work, \( \rho_{s} = \rho_{l} \) and so \( f_{i} = g_{i} \). However, both sets of variables, as well as separate solid and liquid densities, have been retained throughout in order to maintain generality in the model description. Buoyancy forces due to actual differences between solid and liquid density have been included using a representative density difference, \( \Delta \rho \ne 0 \).

Conservation of axial momentum is

where \( t \) is time, \( u \) the axial component of the mixture velocity, \( \mu_{l} \) the liquid dynamic viscosity, and \( P \) pressure. The final two terms represent source terms that apply to either the slurry of free-floating grains suspended in the liquid metal, or the rigid mush, respectively. The weighting function, \( F \), is used to activate each of these terms in the appropriate regions of the domain, and in the case of the continuum grain attachment model, to vary these terms continuously at the interface between the two regions. The source terms are defined as follows:

and

where \( \bar{\mu}_{s} \) is the average solid viscosity, \( \vec{V}_{s} \) the solid velocity vector with axial component \( u_{s} \), \( g \) the acceleration due to gravity, \( \beta_{T,s} \) the thermal expansion coefficient for the solid, \( T \) temperature, \( T_{0} \) a constant reference temperature, \( \beta_{S,s}^{i} \) the solutal expansion coefficient of component \( i \) in the solid, \( C_{s}^{i} \) the solid composition with respect to component \( i \), \( C_{0}^{i} \) the nominal composition of component \( i \), \( \beta_{T,l} \) the thermal expansion coefficient of the liquid, \( \beta_{S,l}^{i} \) the solutal expansion coefficient of component \( i \) in the liquid, \( C_{l}^{i} \) the composition of the liquid with respect to component \( i \), and \( K \) the permeability.

In the radial direction, momentum conservation is written as:

where the slurry and rigid source terms are again weighted using F, and are defined as:

and

The permeability is described using the Blake–Kozeny model:

where \( \lambda \) is the dendrite arm spacing.
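As an illustration of how the permeability enters the rigid-mush damping, the following minimal sketch evaluates a commonly used Blake–Kozeny form, \( K = \lambda^{2} (1 - g_{s})^{3} / (180\, g_{s}^{2}) \). The constant 180, the function name, and the treatment of \( g_{s} = 0 \) are assumptions made for the example and are not taken from the text above.

```python
import numpy as np

def blake_kozeny_permeability(g_s, arm_spacing, constant=180.0):
    """Permeability K [m^2] for volume fraction solid g_s.

    Assumed form: K = lambda^2 (1 - g_s)^3 / (constant * g_s^2).
    The default constant of 180 is an assumption of this sketch.
    """
    g_s = np.asarray(g_s, dtype=float)
    # K grows without bound as g_s -> 0 (no damping of the flow).
    with np.errstate(divide="ignore"):
        k = arm_spacing**2 * (1.0 - g_s) ** 3 / (constant * g_s**2)
    return np.where(g_s > 0.0, k, np.inf)

# Permeability drops steeply as the rigid solid network densifies.
K = blake_kozeny_permeability([0.15, 0.5, 0.9], arm_spacing=1.0e-4)
```

Because the damping term scales with \( 1/K \), the steep decrease of \( K \) with \( g_{s} \) is what makes the sudden activation of this term in a newly packed cell so disruptive to the local flow.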

The average solid viscosity, \( \bar{\mu}_{s} \), is calculated by numerical integration of Eq. [9][21,29]:

where \( g_{s,c} \) is the critical solid fraction used for the grain attachment models and

The solid velocity is calculated from Eqs. [2] and [5], the definition of mixture velocity, and Stokes’ law for spherical particles, which describes the combined effects of drag and buoyancy:

where P is the packed fraction, \( \mu_{m} \) the mean viscosity, and d a representative particle diameter, which, in the case of equiaxed solidification, may be interpreted as a representative free-floating grain size.

The conservation equations that govern energy and species are

and

where \( c \) is the constant-pressure specific heat, \( k \) the thermal conductivity, \( L_{f} \) the latent heat of fusion, and \( D \) the mass diffusivity. The SIMPLER algorithm is used for the momentum and continuity equations. The transient latent heat source term in the energy equation is linearized using the method of Voller and Swaminathan,[30] and the advection-like source terms in the species equations are discretized according to Vreeman and Incropera.[31] Based on the recommendations of Kumar et al.,[32] the permeability is calculated in the staggered velocity grids using a linear interpolation of the volume fraction solid from the main control volumes.

Grain Attachment Model Descriptions

In this study, three different grain attachment models are considered: two discrete models and one continuum model. The first of the discrete models applies a constant and uniform critical solid fraction, \( g_{s,c} \), to the domain. Any cell with a volume fraction solid that exceeds \( g_{s,c} \) is considered packed, under the condition that it has at least one packed neighbor. Here, the packing fraction throughout the domain, \( g_{s,p} \), is simply equal to the critical solid fraction \( g_{s,c} \). For reference, this scheme will be referred to as the constant packing fraction (CPF) model.
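The CPF rule can be sketched as a single sweep over the main control volumes. This is an illustrative sketch only: the function name, the use of four face neighbors, and the assumption that the caller seeds the first packed cells (for example along a cooled wall) are not specified in the text.

```python
import numpy as np

def cpf_update(g_s, packed, g_sc):
    """One sweep of the constant packing fraction (CPF) rule.

    g_s    : 2-D array, volume fraction solid in each main control volume
    packed : 2-D boolean array, True where the solid is already rigid
    g_sc   : critical solid fraction (constant and uniform)
    """
    g_s = np.asarray(g_s, dtype=float)
    packed = np.asarray(packed, dtype=bool)
    # Pad with False so that the domain boundary never counts as packed
    # (an assumption of this sketch).
    padded = np.pad(packed, 1, constant_values=False)
    has_packed_neighbor = (
        padded[:-2, 1:-1] | padded[2:, 1:-1] | padded[1:-1, :-2] | padded[1:-1, 2:]
    )
    # A cell packs if it exceeds g_sc and touches an already packed cell.
    return packed | ((g_s > g_sc) & has_packed_neighbor)

g_s = np.array([[0.20, 0.20, 0.10],
                [0.20, 0.05, 0.20]])
packed = np.array([[True, False, False],
                   [False, False, False]])
new_packed = cpf_update(g_s, packed, g_sc=0.15)
```

In the example, the cell at index (1, 2) exceeds the critical value but stays free-floating because none of its neighbors is packed, which is the behavior that forces the rigid interface to advance one cell at a time.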

The second discrete model was originally proposed by Vusanovic and Krane[25] and explored further by Plotkowski and Krane.[26] It is intended to account for the effect of the local velocity field on the likelihood of grain attachment by determining packing for a given control volume based upon the condition of a neighboring cell in the downstream direction. If the downstream cell has a volume fraction solid exceeding the critical value, \( g_{s,c} \), and is packed itself, then the solid within the cell of interest is converted from free-floating grains to a rigid structure. The downstream cell is determined by averaging the solid velocities (located at the cell faces) in each direction and using the larger of the two resulting vectors to indicate the downstream direction. This scheme will be referred to as the average velocity method (AVM). In this case, the local packing fraction for each cell varies throughout the domain. For comparison to the other attachment models, the average packing fraction of the domain, \( \bar{g}_{s,p} \), will be used. Plotkowski and Krane[26] accurately fit a quadratic function (\( R^{2} = 0.9989 \)) to the relationship between the average packing fraction and the critical solid fraction for the AVM model in the present system using simulation data over a range of critical values:
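One possible reading of the downstream-cell selection is sketched below for a single control volume. Several details are assumptions of the sketch rather than statements from the text: "larger" is interpreted as the larger magnitude of the two face-averaged components, ties go to the axial direction, array axis 0 is taken as axial and axis 1 as radial, and stagnant cells or cells whose downstream neighbor lies outside the domain do not pack.

```python
import numpy as np

def _sign(x):
    """Return -1, 0, or +1."""
    return (x > 0) - (x < 0)

def avm_downstream(us_faces, vs_faces):
    """Index offset (di, dj) to the downstream neighbor of one cell.

    us_faces : (u_minus, u_plus) axial solid velocities on the two axial faces
    vs_faces : (v_minus, v_plus) radial solid velocities on the two radial faces
    """
    u = 0.5 * (us_faces[0] + us_faces[1])  # face-averaged axial component
    v = 0.5 * (vs_faces[0] + vs_faces[1])  # face-averaged radial component
    if abs(u) >= abs(v):
        return (_sign(u), 0)
    return (0, _sign(v))

def avm_packs(i, j, g_s, packed, g_sc, us_faces, vs_faces):
    """True if cell (i, j) is converted to rigid solid under the AVM rule."""
    di, dj = avm_downstream(us_faces, vs_faces)
    ni, nj = i + di, j + dj
    n_i, n_j = np.shape(g_s)
    if (di, dj) == (0, 0) or not (0 <= ni < n_i and 0 <= nj < n_j):
        return False  # assumed treatment of stagnant cells and boundaries
    # The decision depends only on the state of the downstream cell.
    return bool(g_s[ni][nj] > g_sc and packed[ni][nj])
```

Note that, as described, the criterion inspects the downstream cell only, so the solid fraction at which the cell of interest packs differs from \( g_{s,c} \) and varies with the local flow.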

In these two discrete models, all of the solid within each main control volume is assumed to be either fully rigid or fully free-floating. Therefore, depending on the condition of a cell, P is set to a value of either zero or one, with no states in between. On the staggered velocity grids, because by definition solid cannot flow into or out of a fully rigid cell, the value of P in a staggered cell is one if either of the neighboring cells (in the direction of the momentum equation of interest) is packed, and equal to zero otherwise.

The continuum model used here[27] is similar to the CPF model in that cells become fully packed at a particular volume fraction solid that is constant and uniform throughout the domain. However, rather than \( P \) being a binary variable, it is allowed to vary continuously from zero to one over a finite range of solid fractions described by the packing range, \( \Delta g_{s} \), with the upper limit equal to \( g_{s,c} \). A linear relationship between the solid fraction and the packed fraction over the packing range is expressed as:

\[ P = \begin{cases} 0, & g_{s} \le g_{s,c} - \Delta g_{s} \\ \dfrac{g_{s} - \left( g_{s,c} - \Delta g_{s} \right)}{\Delta g_{s}}, & g_{s,c} - \Delta g_{s} < g_{s} < g_{s,c} \\ 1, & g_{s} \ge g_{s,c} \end{cases} \qquad [15] \]

where \( g_{s} \) is the volume fraction solid of a main control volume. Unlike the discrete models, here, since \( P \) is now a continuous variable, it may simply be interpolated for the staggered velocity grids.
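The linear ramp described above, rising from zero at \( g_{s,c} - \Delta g_{s} \) to one at \( g_{s,c} \), can be sketched as a clipped linear function, followed by an interpolation to the staggered faces. The function names are hypothetical, and the arithmetic mean of adjacent cells is an assumed form of the interpolation on a uniform grid.

```python
import numpy as np

def packed_fraction(g_s, g_sc, dg_s):
    """Packed fraction P: 0 below g_sc - dg_s, 1 above g_sc, linear in between."""
    g_s = np.asarray(g_s, dtype=float)
    return np.clip((g_s - (g_sc - dg_s)) / dg_s, 0.0, 1.0)

def face_packed_fraction(P):
    """Interpolate P to the faces between adjacent cells along axis 0.

    On a uniform grid, linear interpolation to the face midpoint reduces
    to the arithmetic mean of the two neighboring cell values.
    """
    P = np.asarray(P, dtype=float)
    return 0.5 * (P[:-1] + P[1:])

# Nominal continuum parameters used later in the text: g_sc = 0.15, dg_s = 0.05.
P_cells = packed_fraction([0.05, 0.10, 0.125, 0.15, 0.30], g_sc=0.15, dg_s=0.05)
P_faces = face_packed_fraction(P_cells)
```

With these inputs the cell values are approximately 0, 0, 0.5, 1, and 1, so the interface between slurry and rigid solid is spread over the packing range instead of jumping between cell faces.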

As described in Reference 27, due to the large difference in the order of magnitude of the rigid and slurry source terms in the momentum equations, a weighting function is used as a way of adjusting the behavior of the cells undergoing the transition from free-floating to rigid solid. A power-law form for this function is a simple way of adjusting the behavior while introducing only a single new parameter:

For values of the weighting exponent, \( n \), less than one, the transition region is weighted toward rigid solid behavior. For values greater than one, it is weighted toward the behavior of the slurry. In the discrete attachment models, the value of \( n \) does not affect the solution because \( P \) may not take on values between zero and one. The effect of the addition of these two new parameters (\( n \) and \( \Delta g_{s} \)) is investigated in Section III–D.
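A minimal sketch of a power-law weighting consistent with this behavior is given below. It assumes \( F = P^{n} \), with the rigid source term weighted by \( F \) and the slurry source term by \( 1 - F \); both the functional form and the blending are assumptions of the example, chosen because they reproduce the stated trends, and the function names are hypothetical.

```python
def weighting_function(P, n):
    """Assumed power-law weighting F = P**n, for 0 <= P <= 1 and n > 0."""
    return P ** n

def blended_source(P, n, slurry_term, rigid_term):
    """Blend the slurry and rigid momentum source terms (assumed blending)."""
    F = weighting_function(P, n)
    return (1.0 - F) * slurry_term + F * rigid_term

# Halfway through the packing range (P = 0.5):
F_rigid_biased = weighting_function(0.5, 0.5)   # n < 1 gives F > P
F_linear = weighting_function(0.5, 1.0)         # n = 1 gives F = P
F_slurry_biased = weighting_function(0.5, 2.0)  # n > 1 gives F < P
```

For the binary values \( P = 0 \) and \( P = 1 \), this form returns the same \( F \) for any positive \( n \), which matches the statement that the exponent has no effect on the discrete models.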

Model Limitations and Implications on Uncertainty Quantification

The rationale of the mixture formulation of the governing conservation equations is to minimize the computational expense required to express the relationships between the solid and liquid phases compared to multi-phase methods. The limitation of this approach is the simplification of many of the relevant microscale effects present during solidification. For example, a uniform free-floating particle size is assumed here, whereas in order to accurately predict the solid motion, the size distribution of the free-floating grains must be known for each computational cell. This information can only be obtained by accurately modeling the size distribution and transport phenomena of potential nucleation sites as well as the grain growth kinetics. Complex models that include some of these effects exist (e.g., References 33 and 34), but include still other assumptions. Furthermore, it is not clear that a more complex model necessarily produces more certain results. The inclusion of new phenomena requires the addition of new parameters, each of which has its own uncertainty that contributes to the overall output uncertainty in the model predictions, and also significantly increases the computational expense. Therefore, an honest assessment of the selected model assumptions is required to properly determine whether increased complexity and expense improves the model predictions.

The specific assumptions of the present model that will be used during the subsequent uncertainty quantification include the following:

(1) A constant and uniform free-floating grain size, affecting the solid velocity field through viscous drag

(2) A constant and uniform dendrite arm spacing, affecting the damping force exerted on the flow field by the rigid dendritic array within the packed region of the domain

(3) Parameters of each grain attachment model that control the coalescence of free-floating grains

(4) Constant and uniform thermophysical properties that are not functions of phase or temperature (except where expressed using the Boussinesq approximation)

Uncertainty Quantification Methodology

The PUQ (PRISM Uncertainty Quantification) framework is an alternative to direct Monte Carlo sampling for propagating uncertainty in computationally expensive models.[35] The fundamental idea of this code is to compute the complete model for a small number of specific cases, then to fit a lower order response surface to the results, from which output PDFs may be efficiently sampled. PUQ uses the Smolyak sparse grid algorithm to select a comparatively small number of model configurations based on the input probability distributions and fits polynomials to the resulting outputs using generalized polynomial chaos (gPC). The number of data points in the sparse grid corresponds to the order of the polynomial response function. A level 1 sparse grid fits a linear function for each of the input parameters and a level 2 fits a quadratic function, etc. The quality of fit of the response surface to the model data is quantified using the root mean squared error (RMSE). Latin hypercube sampling of the response surface is used to construct the output PDF. The first advantage of this approach is that it is non-intrusive, in that it does not require any changes in the underlying simulation tool in order to propagate uncertainty through the model. The second is a considerable savings in computation time because the model need only be evaluated at selected points based on the sparse grid algorithm and the sampling required for construction of the output distribution is performed exclusively on the inexpensive surrogate model. Further details of the use of PUQ to study uncertainty propagation in solidification models may be found in References 6, 7, and 9.
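The surrogate workflow described above (evaluate the full model at a few points, fit a low-order response surface, report the RMSE, then sample the surface) can be sketched with NumPy alone. This does not use the PUQ interface. The test function is a hypothetical stand-in for a full simulation, a 3 × 3 tensor grid replaces the Smolyak sparse grid, a least-squares quadratic replaces the gPC fit, and uniform input PDFs on normalized inputs are assumed.

```python
import numpy as np

def latin_hypercube(n_samples, n_dims, rng):
    """Unit-cube Latin hypercube: one sample per stratum in each dimension."""
    strata = np.column_stack([rng.permutation(n_samples) for _ in range(n_dims)])
    return (strata + rng.random((n_samples, n_dims))) / n_samples

def quadratic_basis(x):
    """Full second-order polynomial basis in two normalized inputs."""
    x1, x2 = x[:, 0], x[:, 1]
    return np.column_stack([np.ones_like(x1), x1, x2, x1**2, x1 * x2, x2**2])

def expensive_model(x):
    """Hypothetical stand-in for one full simulation (inputs scaled to [0, 1])."""
    return 0.02 + 0.03 * x[:, 0] ** 2 + 0.01 * x[:, 0] * x[:, 1]

# A small tensor grid stands in for the sparse grid of collocation points.
nodes = np.array([0.0, 0.5, 1.0])
train_x = np.array([[a, b] for a in nodes for b in nodes])
train_y = expensive_model(train_x)

# Least-squares fit of the response surface and its RMSE at the training points.
coeffs, *_ = np.linalg.lstsq(quadratic_basis(train_x), train_y, rcond=None)
rmse = np.sqrt(np.mean((quadratic_basis(train_x) @ coeffs - train_y) ** 2))

# Sample the inexpensive surrogate, not the model, to build the output PDF.
rng = np.random.default_rng(0)
samples = latin_hypercube(10_000, 2, rng)
surrogate_y = quadratic_basis(samples) @ coeffs
pdf, edges = np.histogram(surrogate_y, bins=40, density=True)
```

Here the full model is evaluated only nine times, while the ten thousand samples used for the histogram cost a single matrix product each, which is the source of the savings in computation time.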

The primary results from the uncertainty quantification are the probability distribution of output parameters and the sensitivities of the outputs to each of the inputs. The output PDFs are calculated by sampling from the response surface. The sensitivities are calculated using the elementary effects method.[6,36,37] In this approach, an elementary effect is the partial derivative of the output of interest with respect to a particular input as calculated between a neighboring pair of points in the sparse grid. The mean sensitivity, u*, has units of the output of interest (normalized by the PDF range for the input in question to allow comparisons between inputs), is positive, and calculated using an arithmetic average of the associated elementary effects. Any higher order effects caused by a non-linear output relative to that input, or interacting effects with other inputs, are characterized by the standard deviation of the elementary effects, σ.
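A simplified version of the elementary effects calculation is sketched below. It perturbs one input at a time from a set of base points instead of using neighboring sparse-grid points, takes the mean of the absolute elementary effects as the mean sensitivity (an assumption consistent with the statement that it is positive), and multiplies each finite-difference derivative by the input range so that the result has units of the output. The function name and step size are hypothetical.

```python
import numpy as np

def elementary_effects(model, base_points, ranges, rel_step=0.1):
    """Return (mean absolute effect, standard deviation) for each input.

    model       : callable taking a 1-D input vector and returning a scalar
    base_points : (n_points, n_inputs) array of starting points
    ranges      : width of the PDF range of each input
    rel_step    : step size as a fraction of the input range (assumed)
    """
    base_points = np.asarray(base_points, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    n_points, n_inputs = base_points.shape
    effects = np.empty((n_points, n_inputs))
    for k in range(n_inputs):
        step = np.zeros(n_inputs)
        step[k] = rel_step * ranges[k]
        for p in range(n_points):
            x = base_points[p]
            # Finite-difference derivative scaled by the input range.
            effects[p, k] = (model(x + step) - model(x)) / step[k] * ranges[k]
    return np.abs(effects).mean(axis=0), effects.std(axis=0)

# Linear in the first input, quadratic in the second.
mean_effect, spread = elementary_effects(
    lambda x: 3.0 * x[0] + x[1] ** 2,
    base_points=[[0.0, 0.0], [1.0, 1.0], [0.5, 2.0]],
    ranges=[2.0, 1.0],
)
```

In this example the first input has a constant effect and therefore zero spread, while the quadratic dependence on the second input produces a nonzero standard deviation, illustrating how σ flags non-linear or interacting behavior.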

For this study, it is of interest to quantitatively compare the output probability distributions for the various grain attachment models. To do so, the Bhattacharyya coefficient[38,39] will be used to describe the amount of overlap between two output distributions. For two continuous distributions \( p \) and \( q \), both with respect to an output variable \( x \), it is defined as:

\[ BC\left( p, q \right) = \int \sqrt{p(x)\, q(x)}\; dx \qquad [17] \]

The coefficient varies from zero to one, in which a value of one indicates two identical distributions, and a value of zero two distributions that do not overlap at all. The output distributions in the present study are not continuous since they consist of discrete bins of data. Additionally, these bins are not of equal size or location between different distributions. As a result, Eq. [17] is calculated by numerical integration over the two distributions, using a step size much smaller than either bin size and representing each bin as a constant value over its width.
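The numerical integration over two binned distributions with different bin edges can be sketched as follows, using the standard definition of the coefficient as the integral of \( \sqrt{p(x)\,q(x)} \). Each histogram is treated as a piecewise-constant PDF and integrated with the midpoint rule on a grid much finer than either bin width; the function names and the default number of steps are choices made for the example.

```python
import numpy as np

def histogram_pdf(x, edges, density):
    """Piecewise-constant PDF: density of the bin containing x, zero outside."""
    edges = np.asarray(edges, dtype=float)
    density = np.asarray(density, dtype=float)
    idx = np.searchsorted(edges, x, side="right") - 1
    inside = (idx >= 0) & (idx < len(density))
    return np.where(inside, density[np.clip(idx, 0, len(density) - 1)], 0.0)

def bhattacharyya(edges_p, dens_p, edges_q, dens_q, n_steps=200_000):
    """Bhattacharyya coefficient of two histograms with arbitrary bin edges."""
    lo = min(edges_p[0], edges_q[0])
    hi = max(edges_p[-1], edges_q[-1])
    dx = (hi - lo) / n_steps
    x = lo + (np.arange(n_steps) + 0.5) * dx  # midpoints of the fine grid
    p = histogram_pdf(x, edges_p, dens_p)
    q = histogram_pdf(x, edges_q, dens_q)
    return float(np.sum(np.sqrt(p * q)) * dx)

# Uniform on [0, 1] in two unequal bins versus uniform on [0.5, 1.5] in one bin.
bc_partial = bhattacharyya([0.0, 0.25, 1.0], [1.0, 1.0], [0.5, 1.5], [1.0])
```

The two example distributions overlap only on the interval from 0.5 to 1, where both densities equal one, so the coefficient is close to 0.5.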

This discussion of uncertainty quantification so far applies generally to any output of interest. To make the analysis specific to macrosegregation, it is necessary to identify an output metric that describes the composition field. Previous work[40] showed that a three-parameter Weibull distribution effectively characterizes the final composition distribution within the domain when weighted by volume. The volume distribution function for the composition \( C \) of a particular alloying element is given by:

\[ f(C) = \frac{\alpha}{\beta} \left( \frac{C - \gamma}{\beta} \right)^{\alpha - 1} \exp\left[ -\left( \frac{C - \gamma}{\beta} \right)^{\alpha} \right], \quad C \ge \gamma \qquad [18] \]

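where \( \alpha \) is the shape parameter, \( \beta \) the scale parameter, and \( \gamma \) the threshold value. The deviation, \( \sigma_{W} \), normalized by the nominal composition of the alloying element in question represents the spread of the volume distribution, and therefore, characterizes the magnitude of the overall macrosegregation. The normalized Weibull deviation, \( W \), is defined as:

\[ W = \frac{\sigma_{W}}{C_{0}} = \frac{\beta}{C_{0}} \sqrt{ \Gamma\left( 1 + \frac{2}{\alpha} \right) - \Gamma^{2}\left( 1 + \frac{1}{\alpha} \right) } \qquad [19] \]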

where \( C_{0} \) is the nominal composition of the alloying element in question and \( \Gamma \) is the gamma function. To understand the effect of input uncertainty on macrosegregation, the normalized Weibull deviation will be used as the primary model output. For a given set of input uncertainties, the three grain attachment models will be compared using the sensitivities of the normalized Weibull deviation to each of the inputs, and the output PDFs will be compared using the Bhattacharyya coefficient.
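Given fitted Weibull parameters, the normalized deviation follows directly from the standard deviation of a Weibull distribution, \( \sigma_{W} = \beta \sqrt{\Gamma(1 + 2/\alpha) - \Gamma^{2}(1 + 1/\alpha)} \), which does not depend on the threshold \( \gamma \). The sketch below assumes the parameters have already been obtained by fitting the volume-weighted composition data; the function name and the numerical values are illustrative only.

```python
from math import gamma, sqrt

def normalized_weibull_deviation(alpha, beta, c0):
    """Normalized Weibull deviation W = sigma_W / C0.

    alpha : shape parameter
    beta  : scale parameter (same units as the composition)
    c0    : nominal composition of the alloying element
    """
    sigma_w = beta * sqrt(gamma(1.0 + 2.0 / alpha) - gamma(1.0 + 1.0 / alpha) ** 2)
    return sigma_w / c0

# Illustrative values only, not fitted to any data from this work.
W_example = normalized_weibull_deviation(alpha=2.0, beta=0.005, c0=0.062)
```

A larger value of \( W \) indicates a wider spread of compositions about the nominal value, that is, more severe macrosegregation.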

Example System

A simple static casting will be used as a basis for comparison of the attachment models. AA7050, an industrially relevant alloy, was used for the simulations, the properties of which are given in Table I. Equilibrium thermodynamics are assumed. While the alloy contains many alloying elements, only the transport of Zn, Cu, and Mg is explicitly modeled. The fraction solid and solid and liquid compositions for each cell are calculated using an equation for the liquidus surface and partition coefficient for each element. These thermodynamic parameters were obtained from Thermo-Calc™ using the TCAL1 database.[41] The relevant partition coefficients are given in Table I, and the liquidus surface is given by:

where the temperature is in K, the first term is the melting point of pure aluminum, the second accounts for elemental additions not calculated by the model, and the three remaining terms correspond to weight fractions of Zn, Cu, and Mg, respectively.

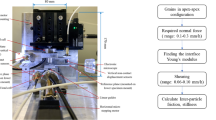

The domain in question (Figure 1) is axisymmetric, with heat transfer coefficient boundary conditions applied at the bottom and outer radius, with the top assumed insulated and symmetry applied at the centerline. The height and radius are both 15 cm, the initial temperature is 950 K (677 °C), and the ambient temperature is 300 K (27 °C). The nominal packing fraction value used for this case is \( g_{s,p} = 0.15 \). For the average velocity method, in which the packing fraction varies throughout the domain, previous work,[26] in developing Eq. [14], showed that a critical fraction solid of \( g_{s,c} = 0.175 \) yields an average packing fraction of 0.15.

Results and Discussion

Comparison of Grain Attachment Models

Before performing any uncertainty quantification, a brief comparison of the effects of the three attachment models on the macrosegregation patterns will be presented for the nominal values of the system parameters given in Table I and Figure 1. Figure 2 shows the Zn composition field, streamlines, and packed interface for two intermediate times and the final composition field for the three attachment models, all with a uniform 80 × 80 numerical grid. A weighting exponent \( n = 1 \) and a packing range of \( \Delta g_{s} = 0.05 \) are used for the continuum model. In all cases, because the partition coefficient for Zn is less than one (true for the three main alloying elements), the first solid to form at the outer radius is depleted. Negative thermosolutal buoyancy at the wall drives a flow cell in a clockwise direction. The magnitude of the flow is similar for the CPF and AVM schemes, but slightly slower for the continuum model due to a gradual introduction of the permeability term at solid fractions below \( g_{s,c} \). As the rigid solid advances upwards and to the left, the flow carries enriched liquid from the rigid interface into the bulk, replaced by liquid nearer the nominal composition. Solid is also advected in the flow cell, but the downward buoyancy of the dense solid particles resists the upward flow at the centerline, resulting in a depleted region caused by particle settling. This solid motion displaces an associated amount of enriched liquid, which eventually collects at the top of the domain and becomes the last solid to freeze.

Zn composition fields at intermediate times during the process for the discrete and continuous packing models. The dark line shows the interpolated P = 0.5 interface, and the lighter lines are streamlines varying from 0.02 to 0.22 kg/s in 0.02 kg/s increments. Simulations were performed with an 80 × 80 numerical grid

The general trends in the final composition fields are largely the same for the three attachment models. The largest differences are in the channel segregates that tend to form near the outer radius, as reported by Plotkowski and Krane[26,27] and found to be numerical artifacts of the treatment of the transition from free-floating to rigid solid. These channels are most severe for the CPF model. They are suppressed in the velocity-based model for this particular case, but have been found to reappear even for small changes in the input parameters, such as the boundary conditions. The continuum model largely suppresses these artifacts for a wide range of conditions due to the improved smoothness of the interface between the slurry and rigid solid regions.[27] The discrete models (CPF and AVM) force this boundary to lie on the faces of the control volumes and to advance one cell at a time, while the continuum model interpolates the interface, allowing it to move smoothly through the domain.

Another major difference between the three attachment models is the dependence of the predicted macrosegregation on the size of the numerical grid (Figure 3). The channel segregates in the CPF model appear regardless of the grid size and are always spaced on the length scale of the grid. The AVM model suppresses these channels for intermediate length scales (as shown in Figure 2) but they appear in the coarser grid and, to a lesser extent, in the finer grid as well. Alternatively, the shape of the composition field does not change dramatically with grid size for the continuum model, but rather, the features are better resolved as the grid is refined. Fezi et al.[40] showed that the grid dependence of solidification simulations can be quantified using the normalized Weibull deviation (Figure 4) which is a measure of the distribution of the composition in the ingot. This analysis quantifies the observations from the contour plots in Figure 3 in that the continuum model shows less dependence on grid size than either of the discrete models. The velocity-based model in particular shows a strange relationship between the number of cells and the normalized Weibull deviation caused by the changing appearance of the channel segregates. As a compromise between accuracy and computational expense, an 80 × 80 numerical grid was used for all subsequent simulations.
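As a rough illustration of the metric, the sketch below computes the standard deviation of a Weibull distribution from its shape and scale parameters and divides it by a nominal composition. The function name and all parameter values are hypothetical, the fitting of the distribution to a simulated composition field is omitted, and the exact definition used by Fezi et al.[40] may differ in detail.

```python
import math

def normalized_weibull_deviation(shape, scale, nominal):
    """Std. dev. of a Weibull distribution divided by a nominal composition.

    The location (third) parameter only shifts the distribution, so it does
    not enter the standard deviation and is not needed here.
    """
    g1 = math.gamma(1.0 + 1.0 / shape)
    g2 = math.gamma(1.0 + 2.0 / shape)
    sigma = scale * math.sqrt(g2 - g1 * g1)
    return sigma / nominal

# Hypothetical fitted parameters, for illustration only (not from the paper).
w = normalized_weibull_deviation(shape=2.0, scale=0.001, nominal=0.05)
```

A broader composition distribution gives a larger value, so a grid-independent solution would show this number leveling off as the grid is refined.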

Initial Uncertainty Quantification

In order to use PUQ to compare the grain attachment models, some decisions must be made on the inputs of interest and their probability distributions. Because the differences between the grain attachment models are the main concern of this study, inputs that directly relate to grain attachment are of the greatest interest. However, differences between the grain attachment models are negligible if uncertainties in the material properties and boundary conditions affect the result much more than the attachment models do. Unfortunately, the solidification model is too computationally expensive to consider a large number of parameters at once due to an increase in the number of data points required by the sparse grid algorithm. Therefore, the best approach is to first use a low level uncertainty analysis to determine the importance of the grain attachment inputs relative to material properties and boundary conditions, and then to reduce the number of inputs in order to perform higher level analyses.

Normal distributions were chosen to represent the uncertainties of all of the inputs because they only require two parameters (mean and standard deviation) and using mean values equal to those given in Table I and Figure 1 means that the most probable outcomes are those shown in Figure 2. The inputs that will be considered for level one analysis and the parameters of their probability distributions are given in Table I, divided into categories for grain attachment, material properties, and boundary conditions. The primary inputs that affect solid motion and grain attachment are the packing fraction, the average particle size, and the dendrite arm spacing. As emphasized in Section II–3, these parameters are assumed uniform and constant throughout the domain, but in reality, local difference in cooling conditions, composition, and the number and size of grain refiner particles, may cause them to vary widely. Therefore, fairly large standard deviations were selected for these input distributions (Table II). By comparison, the material properties are reasonably well understood. The epistemic uncertainty in this case comes from the assumption that they are not functions of temperature and phase. Therefore, a somewhat arbitrary choice was made to give these inputs standard deviations equal to five percent of the mean value. Similar uncertainties were applied to the boundary conditions as well. The probability distributions for all input parameters are summarized in Table II. For the purposes of this analysis, the weighting range and weighting exponent for the continuum model are assumed constant, where \( n = 1 \) and \( \Delta g_{s} = 0.05 \).

Due to the computational expense of using so many input uncertainty distributions, only a level one analysis was used, corresponding to linear response functions with respect to each input. The resulting sensitivities for each attachment model are shown in Figure 5, where the height of each bar represents the mean elementary effect of the normalized Weibull deviation for the final Zn composition field with respect to a given input, and the error bars indicate the standard deviation of the elementary effects. Based on these results for all three attachment schemes, the sensitivity of the simulations to parameters that directly influence free-floating solid and grain attachment is comparable to or much greater than the sensitivity to the material properties and boundary conditions. For subsequent analysis, in order to reduce the number of model evaluations for higher order response surfaces, the uncertainty in material properties and boundary conditions will be neglected, and only the input uncertainties for the packing fraction (\( g_{s,c} \)), particle size (\( d \)), and dendrite arm spacing (\( \lambda \)) will be used. Note that while the boundary condition at the right wall does contribute uncertainty to the macrosegregation predictions (though generally less than the grain attachment parameters), this is not a parameter subject to changes through refinement in the model formulation. So while it is acknowledged as an important factor to understand, it is neglected here to simplify the interpretation of the following results.

Sensitivities from level 1 analysis of the three attachment schemes for the input distributions given in Table II
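The bar heights and error bars described above can be pictured with a minimal one-at-a-time sketch of elementary effects. The sketch assumes a cheap stand-in function (`toy_model`, hypothetical) in place of the solidification simulation, and assumes effects are scaled by each input's standard deviation. It is not the procedure PUQ uses internally, which works from the fitted response surface.

```python
import random
import statistics

def elementary_effects(model, means, stds, n_base=50, step=0.5, seed=0):
    """One-at-a-time elementary effects, expressed per input standard deviation.

    Returns {input_index: (mean_effect, std_effect)}.
    """
    rng = random.Random(seed)
    effects = {i: [] for i in range(len(means))}
    for _ in range(n_base):
        # Random base point drawn from the assumed normal input distributions.
        x = [rng.gauss(m, s) for m, s in zip(means, stds)]
        f0 = model(x)
        for i, s in enumerate(stds):
            x_pert = list(x)
            x_pert[i] += step * s  # perturb one input only
            effects[i].append((model(x_pert) - f0) / step)
    return {i: (statistics.mean(v), statistics.pstdev(v)) for i, v in effects.items()}

# Hypothetical stand-in for the solidification model (NOT the real simulation).
def toy_model(x):
    return 3.0 * x[0] + 0.5 * x[1] + 0.1 * x[0] * x[2]

result = elementary_effects(toy_model, means=[1.0, 1.0, 1.0], stds=[0.2, 0.05, 0.05])
```

An input that enters only linearly (index 1 here) has a near-zero standard deviation of its effects, while interaction terms show up as a spread, which is what the error bars convey.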

Uncertainty Quantification in Discrete Attachment Models

The first comparison of interest is between the two discrete grain attachment models. The purpose of the AVM scheme proposed by Vusanovic and Krane[25] was to include the effect of the local velocity field on the likelihood of free-floating grains attaching to the rigid solid interface. However, it is of interest to determine how much of a difference the inclusion of this effect actually has on the macrosegregation prediction. If the solutions are very different, then those which more accurately reflect the physical system must be determined. If they are largely the same (for some set of input uncertainties), then one model might be selected over another for other considerations, such as numerical stability or computational expense.

By reducing the number of inputs to only those directly affecting the grain attachment model (packing fraction, particle size, and dendrite arm spacing), the uncertainty analysis could be increased to level three, significantly reducing the error in the fit of the response surface to the model data points collected from the sparse grid. The resulting probability distribution functions for the normalized Weibull deviation of the final Zn composition fields are shown in Figure 6. The two models have slightly different mean values (\( 10.5 \times 10^{-3} \) for the CPF model and \( 8.94 \times 10^{-3} \) wt. fr. Zn for the AVM) but mostly overlap. The Bhattacharyya coefficient for these two distributions is 0.871.
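For readers unfamiliar with the overlap metric, the Bhattacharyya coefficient of two densities \( p \) and \( q \) is \( \int \sqrt{p(x)\,q(x)}\,dx \), equal to one for identical distributions and zero for disjoint ones. The sketch below evaluates it numerically and, for the special case of two normal distributions, in closed form. The means are those quoted above, but the common standard deviation is a hypothetical value and the output distributions in Figure 6 are not necessarily normal, so the result should not be read as a reproduction of the reported coefficient.

```python
import math

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def bc_numeric(p, q, lo, hi, n=20000):
    """Midpoint-rule estimate of the integral of sqrt(p(x) q(x)) over [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * dx
        total += math.sqrt(p(x) * q(x))
    return total * dx

def bc_normal(mu1, s1, mu2, s2):
    """Closed-form Bhattacharyya coefficient for two normal distributions."""
    var_sum = s1 * s1 + s2 * s2
    return math.sqrt(2.0 * s1 * s2 / var_sum) * math.exp(-0.25 * (mu1 - mu2) ** 2 / var_sum)

# Means reported in the text; the standard deviation is HYPOTHETICAL.
SIGMA = 1.5e-3
bc_closed = bc_normal(10.5e-3, SIGMA, 8.94e-3, SIGMA)
bc_grid = bc_numeric(lambda x: normal_pdf(x, 10.5e-3, SIGMA),
                     lambda x: normal_pdf(x, 8.94e-3, SIGMA), 0.0, 20e-3)
```

The numerical form applies to any pair of densities, for example ones evaluated from a response surface, while the closed form is only a convenient check.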

Considering that these two attachment models yield substantially the same results for the given input uncertainties, an interesting question is how precisely these inputs must be known for the differences between the attachment models to become significant. To address this question, the standard deviations of the input distributions were multiplied by factors less than one, and the overlap in the output distributions was monitored as the input uncertainties were decreased. The Bhattacharyya coefficient (measuring the amount of overlap between the distributions) as a function of the assumed standard deviation multiplier is shown in Figure 7 along with examples of the output PDFs at selected points. As expected, when the input uncertainties are decreased, the overlap between the output PDFs also decreases. When the input standard deviations reach 10 pct of the original values given in Table II, there is no overlap between the output distributions. The consequence of this analysis is that, unless the inputs are known precisely enough that the output distributions no longer overlap significantly, the two models may be said to produce essentially the same results. In that case, selection of one model over the other can be based on concerns such as ease of implementation or numerical efficiency, rather than on physical accuracy. However, if the inputs are known that precisely and the two models are significantly different, then one must be selected over the other based on physical relevance.

The Bhattacharyya coefficient comparing the CPF and AVM schemes as a function of the width of the input uncertainty distributions (going from the deviations given in Table II toward zero), with insets showing the output probability distribution function for the normalized Weibull deviation for the final Zn composition fields for three different input uncertainty levels
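The trend in Figure 7 can be mimicked with a deliberately simplified sketch. It assumes each model's output is a linear function of a single normally distributed input, so each output is also normal and the closed-form overlap applies. The slopes and the input standard deviation are hypothetical and do not come from the simulations. Only the qualitative behavior is meant to carry over.

```python
import math

def bc_normal(mu1, s1, mu2, s2):
    """Closed-form Bhattacharyya coefficient for two normal distributions."""
    var_sum = s1 * s1 + s2 * s2
    return math.sqrt(2.0 * s1 * s2 / var_sum) * math.exp(-0.25 * (mu1 - mu2) ** 2 / var_sum)

# HYPOTHETICAL linear response surfaces y = y0 + slope * (x - x_mean) for two
# models that share one normally distributed input.
X_STD = 0.03
MODEL_A = {"y0": 10.5e-3, "slope": 0.05}
MODEL_B = {"y0": 8.94e-3, "slope": 0.04}

def overlap_for_multiplier(mult):
    """Overlap of the two output distributions when the input std is scaled by mult."""
    # A linear map of a normal input gives a normal output with std |slope| * std.
    sa = abs(MODEL_A["slope"]) * X_STD * mult
    sb = abs(MODEL_B["slope"]) * X_STD * mult
    return bc_normal(MODEL_A["y0"], sa, MODEL_B["y0"], sb)

sweep = [(m, overlap_for_multiplier(m)) for m in (1.0, 0.75, 0.5, 0.25, 0.1)]
for mult, bc in sweep:
    print(f"multiplier {mult:4.2f}  BC = {bc:.3e}")
```

Because the output means stay fixed while the widths shrink with the multiplier, the overlap falls monotonically toward zero, which is the mechanism behind the loss of overlap at small input deviations.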

A more traditional manner of comparing the results of the two discrete attachment models is to compare the means and standard deviations of the output distributions as a function of the input deviation multiplier (Figure 8). As the level of input uncertainty is reduced, the means of the models (particularly the AVM scheme) decrease slightly, while the standard deviations become much smaller. This manner of representing the data is a convenient way to compare to experiments that have their own set of uncertainty ranges.

There are essentially three different sources of the output uncertainty shown in Figures 6 through 8. The first is the lack of knowledge of the appropriate values of the critical solid fraction, dendrite arm spacing, and particle size. This source of error is significant, but reducible through improved experimentation. Second, any experimentation used to determine these inputs contains irreducible error in the limitations of the measurement technique, generally referred to as aleatoric uncertainty.

The last type of error is related to the model limitations in that both the CPF and AVM schemes assume that these inputs are constant and uniform over the domain. However, the critical fraction solid and dendrite arm spacing are related to the grain morphology, which is in turn a function of the local cooling conditions, solute field, and details of the grain refiner additions. The particle size is a representative average, but in reality, the free-floating grains nucleate at some size and then grow over time, resulting in a time varying distribution of sizes over the domain. If these spatial distributions are well known beforehand, then it is a simple extension of the model to allow these inputs to vary over the domain. However, using new sub-models to predict these distributions represents a significant effort and will introduce new input parameters that must then be determined in some way. The effort required to add new physical phenomena to the model requires the modeler to ask whether it will really improve the macrosegregation predictions. Thankfully, uncertainty quantification allows for insight into this question before expending the effort. If further experiments will allow for better determination of the spatial variation among these parameters for a particular situation, then using these distributions as input uncertainties may reduce the level of output uncertainty. If that level is still too high, then this result may be motivation to add new physics to the model. If the output uncertainty is within a tolerable limit, then the model may be used in its present condition.

Comparison of Continuum and Discrete Attachment Models

The other model comparison of interest is between the CPF and continuum attachment schemes. The primary question to be answered here is how the continuum model changes the macrosegregation predictions relative to the related discrete model. (In this case, the best comparison is not with the AVM scheme because the continuum model does not include the effect of the local velocity field.) The intention of the continuum attachment model is to reduce the occurrence of numerical artifacts that appear in the discrete models due to the effect of inaccurate geometric representations of the rigid solid interface on the local velocity field and solute advection (Figures 2 and 3). In doing so, however, the continuum model may have also significantly changed the overall macrosegregation prediction. If this is in fact the case, then experimental validation data are required to show that the continuum model is more physically accurate. However, if the results of the two models are largely similar, then the continuum model may be used freely in place of the CPF model and the benefits of limiting numerical artifacts gained.

Another concern, however, is that there is uncertainty in the model parameters that were introduced with the continuum scheme and that do not apply to the discrete attachment models, namely the weighting exponent and packing range. Two interesting problems arise from this complication. The first is that, in the absence of robust experimental validation data (to which those parameters might be calibrated), it is of interest to determine what input values yield the closest predictions to the CPF model, allowing a fair comparison of the effects of the other various inputs. The second is the determination of the sensitivity of the model to these new input parameters. If the model is very sensitive to them, then their calibration to experimental results (or the development of a new packing scheme) becomes a priority. If, however, the model is not overly sensitive to these parameters, then less precise knowledge of their values is acceptable.

The first task is to calibrate the new parameters in the continuum model by determining the values of the weighting exponent and packing range that yield output distributions with the greatest similarity to the CPF model. This goal can be accomplished by holding one of the inputs constant while the other is varied (though with zero input uncertainty) and then comparing the output distribution functions against the CPF model. First, the weighting exponent is varied while the weighting range is held constant (\( \Delta g_{s} = 0.05 \)). An example of three resulting distributions is shown in Figure 9, compared against the CPF model. For small values of the weighting exponent, the permeability term in the momentum equations is introduced quickly once the fraction solid of a cell crosses the threshold into the weighting range. The effect of the permeability term is to damp the flow at the edge of the rigid mush, effectively reducing the relative motion of solid and liquid of differing compositions and reducing the overall macrosegregation. High values of the weighting exponent introduce the permeability term slowly, tending to allow more flow at the rigid interface and increasing the macrosegregation. These trends are reflected in the distributions as shown in Figure 9 which translate to higher Weibull deviations with larger exponent values, while generally remaining similar in shape and width.

The effect of the weighting exponent relative to the CPF model can also be determined by plotting the mean and standard deviation of the Weibull deviation distributions against that of the CPF model and by calculating the Bhattacharyya coefficient between the continuum Weibull deviation distributions and the output of the CPF model. The results of both approaches are shown in Figure 10. Again, as the weighting exponent is increased, the mean of the Weibull deviation increases as well, although the standard deviation of the distribution stays relatively constant regardless of the exponent value. Figure 10(a) shows that several weighting exponents, ranging from about 1.4 to 1.7, give close agreement with the CPF model. The calculation of the Bhattacharyya coefficient (Figure 10(b)) agrees, and suggests that the best fit to the CPF model is for an exponent value of 1.5 (BC = 0.995).

Comparison of the output normalized Weibull deviation distributions for the continuum model for various values of the weighting exponent (n) and a constant weighting range (0.05) to the CPF model. (a) Mean normalized Weibull deviations for the continuum model with error bars for the standard deviation against the mean CPF value (solid line) and plus and minus one deviation (dotted lines). (b) The Bhattacharyya coefficient between each output distribution from the continuum model to the CPF model
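The calibration procedure described above (vary one parameter, compare each output distribution against the reference, keep the value with the largest overlap) can be sketched as follows. The sample generators are synthetic stand-ins, not simulation output: the candidate model's mean is simply assumed to rise linearly with the exponent and is constructed so that the best match falls at 1.5. The overlap is estimated from samples with a discrete, histogram-based form of the Bhattacharyya coefficient.

```python
import math
import random

def bc_from_samples(a, b, bins=40):
    """Discrete Bhattacharyya coefficient: sum over shared bins of sqrt(p_i * q_i)."""
    lo = min(min(a), min(b))
    hi = max(max(a), max(b))
    width = (hi - lo) / bins or 1.0  # guard against identical samples

    def hist(samples):
        counts = [0] * bins
        for v in samples:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        return [c / len(samples) for c in counts]

    return sum(math.sqrt(p * q) for p, q in zip(hist(a), hist(b)))

def calibrate(candidates, sample_model, reference):
    """Return (best_candidate, scores) using the largest overlap with the reference."""
    scores = {c: bc_from_samples(sample_model(c), reference) for c in candidates}
    return max(scores, key=scores.get), scores

# SYNTHETIC stand-ins: reference centered at 1.0, candidate mean = 0.4 + 0.4 * n.
rng = random.Random(1)
reference = [rng.gauss(1.0, 0.1) for _ in range(5000)]

def sample_model(n):
    return [rng.gauss(0.4 + 0.4 * n, 0.1) for _ in range(5000)]

best_n, scores = calibrate([1.0, 1.25, 1.5, 1.75, 2.0], sample_model, reference)
```

In practice each call to `sample_model` would be replaced by sampling the response surface fitted for that parameter value, which is far cheaper than rerunning the simulation.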

Similarly, the weighting exponent was then held constant (n = 1.5) and the weighting range was varied. The comparisons of the resulting Weibull deviation distributions are shown in Figure 11. It is obvious from these results that changes in the weighting range have a much smaller effect on the overall macrosegregation distribution than the weighting exponent does. For all values of the weighting range that were tested, the Bhattacharyya coefficient is above 0.94 (Figure 11(b)). The best match with the CPF model is when \( \Delta g_{s} = 0.05 \).

Comparison of the output normalized Weibull deviation distributions for the continuum model for various values of the weighting range (\( \Delta g_{s} \)) and a constant weighting exponent (1.5) to the CPF model. (a) Mean normalized Weibull deviations for the continuum model with error bars for the standard deviation against the mean CPF value (solid line) and plus and minus one deviation (dotted lines). (b) The Bhattacharyya coefficient between each output distribution from the continuum model to the CPF model

Now that the best mean values for the new inputs to the continuum attachment model have been determined, a better comparison of it to the discrete CPF model may be made. Clearly, if these inputs are held constant, the output of the continuum model is essentially identical to the discrete model. However, if the continuum model is overly sensitive to these parameters, any uncertainty in their value has the potential to negate any benefit derived from its differences with the discrete models. To test this, the input uncertainties shown in Table III are implemented into the continuum model. While the resulting sensitivities (Figure 12) are not completely negligible, they are smaller than the sensitivities of the model to the input parameters that are shared with the discrete models. These results show that the continuum attachment model may be implemented in place of a discrete model in order to reduce numerical artifacts and improve convergence without fear of significantly altering the model predictions as a function of the uncertainty in the values of the new parameters.

Guidance for Experimental Validation and Model Development

The results in Sections III.3 and III.4 may be used to guide future experimental efforts and model development. The sensitivity analysis shown in Figure 5 demonstrates the importance of the grain attachment models and the parameters that govern them. One method of refining the model predictions might be to sacrifice some computational expense in order to break down the assumptions made here about the uniformity of the grain size distribution, the packing fraction, and the dendrite arm spacing, and to replace these with physics based sub-models. In this case, however, similar to the continuum model compared to the CPF model, new model parameters are necessarily introduced. Therefore, a new uncertainty analysis must be performed in order to understand the impact of these new parameters.

Model development should correspond to experimental observations, the primary purpose of which is to obtain high-fidelity validation data. For the specific case of macrosegregation predictions, the best approach is composition measurements for simplified laboratory scale ingots including sufficient repetition of experiments in order to establish error bounds. Using a simple laboratory case (a simple static sand casting for example), it should also be possible to carefully measure the parameters required for input to the model. For example, inverse heat conduction methods[45] may be used to approximate thermal boundary conditions from thermocouple data, and post-mortem metallography may be used to determine the dendrite arm spacing and an upper limit for the free-floating grain size. Ideally, these experiments would also include more complex analyses of the dendrite coalescence point which requires either additional thermal measurements within the melt[46,47] or separate rheological measurements[48,49,50] for the alloy in question.

Conclusions

This study used the PUQ framework to quantify the epistemic uncertainty in macrosegregation predictions produced by the use of three different grain attachment schemes in a model for equiaxed solidification. The three models, namely, the constant packing fraction (CPF), average velocity method (AVM), and the continuum method, were successfully compared for a base level of input uncertainties in those parameters that most directly affect the attachment of free-floating grains and the resulting macrosegregation (the critical fraction solid, free-floating particle size, and dendrite arm spacing). The macrosegregation was characterized using the deviation of a three-parameter Weibull distribution, normalized by the nominal composition, and the output of the uncertainty quantification was a distribution of these values. The Bhattacharyya coefficient was used as a metric for comparing the overlap between distributions obtained from different attachment schemes. It was found that the inclusion of the velocity field in the AVM did not significantly impact the macrosegregation predictions relative to the CPF model. For this effect to become significant, experimental work is required to precisely define these input parameters. The continuum model was also found to produce largely similar results to the CPF model given appropriate calibration. More importantly, the introduction of new model parameters did not greatly affect the output uncertainty in the macrosegregation predictions. Based on these results, the continuum attachment model is recommended due to its decreased susceptibility to numerical artifacts.

A larger goal of this work was to provide an example of how uncertainty quantification can be used for comparison among various models and between models and experiments within the solidification community. The present grain attachment models primarily function as a case study for how this type of analysis may be used to evaluate the effect of model development, enable quantitative comparisons between modeling approaches as well as to experimental data, and weigh the constant tradeoff between computational expense and accuracy. Hopefully, future research will use these or similar techniques to target experimental and modeling efforts by fully understanding the impact of specific inputs.

References

[1] A. Chernatynskiy, S. R. Phillpot, and R. LeSar: Annu. Rev. Mater. Res., 2013, vol. 43, pp. 157–82.

[2] P. Marepalli, J. Y. Murthy, B. Qiu, and X. Ruan: J. Heat Transf., 2014, vol. 136, art no. 111301.

[3] P. J. Roache: Annu. Rev. Fluid Mech., 1997, vol. 29, pp. 123–60.

[4] H. N. Najm: Annu. Rev. Fluid Mech., 2009, vol. 41, pp. 35–52.

[5] R. A. Hardin, K. K. Choi, N. J. Gaul, and C. Beckermann: Int. J. Cast Met. Res., 2015, vol. 28, pp. 181–92.

[6] K. Fezi and M. J. M. Krane: IOP Conf. Ser. Mater. Sci. Eng., 2015, vol. 84, p. 12001.

[7] K. Fezi and M. J. M. Krane: Int. J. Cast Met. Res., 2016, Available Online.

[8] K. Fezi and M. J. M. Krane: J. Heat Transfer, 2016, In Press.

[9] K. Fezi and M. J. M. Krane: IOP Conf. Ser. Mater. Sci. Eng., 2016, vol. 143, pp. 407–14.

[10] J. Ni and C. Beckermann: Metall. Trans. B, 1991, vol. 22, pp. 349–61.

[11] A. V. Reddy and C. Beckermann: in Mater. Process. Comput. Age II, V. R. Voller, S. P. Marsh, and N. El-Kaddah, eds., 1995, pp. 89–102.

[12] G. D. Scott and D. M. Kilgour: J. Phys. D. Appl. Phys., 2002, vol. 2, pp. 863–66.

[13] C. Y. Wang and C. Beckermann: Metall. Mater. Trans. A, 1996, vol. 27, pp. 2754–64.

[14] C. Y. Wang and C. Beckermann: Metall. Mater. Trans. A, 1996, vol. 27, pp. 2765–83.

[15] C. Y. Wang and C. Beckermann: Metall. Trans. A, 1993, vol. 24, pp. 2787–2802.

[16] A. Ludwig and M. Wu: Metall. Mater. Trans. A, 2002, vol. 33, pp. 3673–83.

[17] M. Zaloznik and H. Combeau: Comput. Mater. Sci., 2010, vol. 48, pp. 1–10.

[18] W. D. Bennon and F. P. Incropera: Int. J. Heat Mass Transf., 1987, vol. 30, pp. 2171–87.

[19] W. D. Bennon and F. P. Incropera: Int. J. Heat Mass Transf., 1987, vol. 30, pp. 2161–70.

[20] C. J. Vreeman, M. J. M. Krane, and F. P. Incropera: Int. J. Heat Mass Transf., 2000, vol. 43, pp. 677–86.

[21] C. J. Vreeman and F. P. Incropera: Int. J. Heat Mass Transf., 2000, vol. 43, pp. 687–704.

[22] J. Ni and F. P. Incropera: Int. J. Heat Mass Transf., 1995, vol. 38, pp. 1271–84.

[23] J. Ni and F. P. Incropera: Int. J. Heat Mass Transf., 1995, vol. 38, pp. 1285–96.

[24] C. J. Vreeman, J. D. Schloz, and M. J. M. Krane: ASME J. Heat Transf., 2002, vol. 124, p. 947.

[25] I. Vusanovic and M. J. M. Krane: IOP Conf. Ser. Mater. Sci. Eng., 2012, vol. 27, pp. 1–6.

[26] A. Plotkowski and M. J. M. Krane: Appl. Math. Model., 2016, vol. 40, pp. 9212–27.

[27] A. Plotkowski and M. J. M. Krane: Comput. Mater. Sci., 2016, vol. 124, pp. 238–48.

[28] S. V. Patankar: Numerical Heat Transfer and Fluid Flow, McGraw-Hill, New York, NY, 1980.

[29] C. J. Vreeman: M.S. Thesis, Purdue University, 1997.

[30] V. R. Voller and C. R. Swaminathan: Numer. Heat Transf. Part B Fundam., 1991, vol. 19, pp. 175–89.

[31] C. J. Vreeman and F. P. Incropera: Numer. Heat Transf. Part B Fundam., 1999, vol. 36, pp. 1–14.

[32] A. Kumar, B. Dussoubs, M. Zaloznik, and H. Combeau: IOP Conf. Ser. Mater. Sci. Eng., 2012, vol. 27, pp. 1–8.

[33] M. Wu and A. Ludwig: Acta Mater., 2009, vol. 57, pp. 5621–31.

[34] M. Wu and A. Ludwig: Acta Mater., 2009, vol. 57, pp. 5632–44.

[35] M. Hunt, B. Haley, M. McLennan, M. Koslowski, J. Murthy, and A. Strachan: Comput. Phys. Commun., 2015, vol. 194, pp. 97–107.

[36] M. D. Morris: Technometrics, 1991, vol. 33, pp. 161–74.

[37] F. Campolongo, J. Cariboni, and A. Saltelli: Environ. Model. Softw., 2007, vol. 22, pp. 1509–18.

[38] A. Bhattacharyya: Bull. Calcutta Math. Soc., 1943, vol. 35, pp. 99–109.

[39] T. Kailath: IEEE Trans. Commun. Technol., 1967, vol. 15, pp. 52–60.

[40] K. Fezi, A. Plotkowski, and M. J. M. Krane: Metall. Mater. Trans. A, 2016, vol. 47, pp. 2940–51.

[41] J. O. Andersson, T. Helander, L. Höglund, P. Shi, and B. Sundman: Calphad Comput. Coupling Phase Diagrams Thermochem., 2002, vol. 26, pp. 273–312.

[42] M. Lalpoor, D. G. Eskin, D. Ruvalcaba, H. G. Fjær, A. Ten Cate, N. Ontijt, and L. Katgerman: Mater. Sci. Eng. B, 2011, vol. 528, pp. 2831–42.

[43] W. F. Gale: Smithells Metals Reference Book, 8th ed., Elsevier, New York, 2004.

[44] T. Iida and R. Guthrie: The Physical Properties of Liquid Metals, Clarendon Press, Oxford, 1988.

[45] A. Plotkowski and M. J. M. Krane: J. Heat Transfer, 2015, vol. 137, pp. 0313011–19.

[46] L. Arnberg, G. Chai, and L. Backerud: Mater. Sci. Eng. A, 1993, vol. 173, pp. 101–3.

[47] M. Malekan and S. G. Shabestari: Metall. Mater. Trans. A Phys. Metall. Mater. Sci., 2009, vol. 40, pp. 3196–3203.

[48] D. B. Spencer, R. Mehrabian, and M. C. Flemings: Metall. Trans., 1972, vol. 3, pp. 1925–32.

[49] R. J. Claxton: JOM, 1975, vol. 27, pp. 14–16.

[50] A. K. Dahle and D. H. StJohn: Acta Mater., 1999, vol. 47, pp. 31–41.

Acknowledgments

The authors thank Robert Wagstaff and Novelis Inc. for the financial gift that funded this work.

Manuscript submitted September 17, 2016.

Cite this article

Plotkowski, A., Krane, M.J.M. Quantification of Epistemic Uncertainty in Grain Attachment Models for Equiaxed Solidification. Metall Mater Trans B 48, 1636–1651 (2017). https://doi.org/10.1007/s11663-017-0933-9

DOI: https://doi.org/10.1007/s11663-017-0933-9