Abstract

In model-based clustering and classification, the cluster-weighted model is a convenient approach when the random vector of interest is constituted by a response variable \(Y\) and by a vector \({\varvec{X}}\) of \(p\) covariates. However, its applicability may be limited when \(p\) is high. To overcome this problem, this paper assumes a latent factor structure for \({\varvec{X}}\) in each mixture component, under Gaussian assumptions. This leads to the cluster-weighted factor analyzers (CWFA) model. By imposing constraints on the variance of \(Y\) and the covariance matrix of \({\varvec{X}}\), a novel family of sixteen CWFA models is introduced for model-based clustering and classification. The alternating expectation-conditional maximization algorithm, for maximum likelihood estimation of the parameters of all models in the family, is described; to initialize the algorithm, a 5-step hierarchical procedure is proposed, which uses the nested structures of the models within the family and thus guarantees the natural ranking among the sixteen likelihoods. Artificial and real data show that these models have very good clustering and classification performance and that the algorithm is able to recover the parameters very well.


1 Introduction

Mixture models have been used for clustering for at least fifty years (Wolfe 1963, 1970). Following the inception of the expectation-maximization (EM) algorithm (Dempster et al. 1977), parameter estimation became more manageable and applications of mixture models for clustering and classification became more common (see Titterington et al. 1985; McLachlan and Basford 1988 for examples). With the increasing availability of computational power, the popularity of mixture models has grown consistently since the mid-1990s, including notable work by Banfield and Raftery (1993), Celeux and Govaert (1995), Ghahramani and Hinton (1997), Tipping and Bishop (1999), McLachlan and Peel (2000a), Fraley and Raftery (2002), Dean et al. (2006), Bouveyron et al. (2007), McNicholas and Murphy (2008, 2010a), Karlis and Santourian (2009), Lin (2010), Scrucca (2010), Baek et al. (2010), Andrews et al. (2011), Browne et al. (2012), McNicholas and Subedi (2012), and Browne and McNicholas (2012), amongst others.

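Consider data \(({\varvec{x}},y)\) that are realizations of the pair \(({\varvec{X}},Y)\) defined on some space \(\Omega \), where \(Y \in \mathbb{R }\) is a response variable and \({\varvec{X}}\in \mathbb{R }^p\) is a vector of covariates. Suppose that \(\Omega \) can be partitioned into \(G\) groups, say \(\Omega _1, \ldots ,\Omega _G\). Let \(p\left( {\varvec{x}},y\right) \) be the joint density of \(({\varvec{X}},Y)\). In this paper, we shall consider a mixture model, with mixing weights \(\pi _g>0\) such that \(\sum _{g=1}^G\pi _g=1\), having density of the form

\[ p\left( {\varvec{x}},y;{\varvec{\theta }}\right) =\sum _{g=1}^G \pi _g\,\phi \left( y|{\varvec{x}};m({\varvec{x}};{{\varvec{\beta }}}_g),\sigma ^2_g\right) \phi \left( {\varvec{x}};{{\varvec{\mu }}}_g,{{\varvec{\Sigma }}}_g\right) , \qquad (1) \]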

where \(\phi ({\varvec{x}};\varvec{\mu }_g,{{\varvec{\Sigma }}}_g)\) denotes a \(p\)-variate Gaussian density with mean \({{\varvec{\mu }}}_g\) and covariance matrix \({{\varvec{\Sigma }}}_g\), and \(\phi (y|\varvec{x};m({\varvec{x}};{{\varvec{\beta }}}_g),\sigma ^2_g)\) denotes the (Gaussian) density of the conditional distribution of \(Y|{\varvec{x}}\) with mean \(m({\varvec{x}};{{\varvec{\beta }}}_g)=\beta _{0g} + {{\varvec{\beta }}}^{\prime }_{1g} {\varvec{x}}\), \(\beta _{0g} \in \mathbb{R }\) and \({{\varvec{\beta }}}_{1g}\in \mathbb{R }^p\), and variance \(\sigma ^2_g\). Model parameters are denoted by \({\varvec{\theta }}\). The density in (1) defines the linear Gaussian cluster-weighted model (see, e.g., Gershenfeld 1997; Schöner 2000). Quite recently, the cluster-weighted model (CWM) has been developed under more general assumptions: Ingrassia et al. (2012a) consider \(t\) distributions, Ingrassia et al. (2013) introduce a family of twelve parsimonious linear \(t\) CWMs for model-based clustering, and Ingrassia et al. (2012b) propose CWMs with categorical responses. Finally, Punzo (2012) introduces the polynomial Gaussian CWM as a flexible tool for clustering and classification.

In the mosaic of work around the use of mixture models for clustering and classification, CWMs have their place in applications with random covariates. Indeed, unlike finite mixtures of regressions (see, e.g., Leisch 2004; Frühwirth-Schnatter 2006), which are examples of mixture models with fixed covariates, the CWM allows for assignment dependence: the covariate distributions for each group \(\Omega _g\) can also be distinct. In clustering and classification terms, this means that \({\varvec{X}}\) can directly affect the clustering results, which is an advantage, for most applications, over the fixed covariates approach (Hennig 2000).

However, the applicability of model (1) in high-dimensional \({\varvec{X}}\)-spaces remains a challenge. In particular, the number of parameters for this model is \((G-1)+G(p+2)+G[p+p(p+1)/2]\), of which \(Gp\left( p+1\right) /2\) are used for the component (or group) covariance matrices \({{\varvec{\Sigma }}}_g\) of \({\varvec{X}}\), \(g=1,\ldots ,G\), and this increases quadratically with \(p\). To overcome this issue, we assume a latent Gaussian factor structure for \({\varvec{X}}\), in each mixture component, which leads to the factor regression model (FRM) of \(Y\) on \({\varvec{x}}\) (see West 2003; Wang et al. 2007, and Carvalho et al. 2008). The FRM assumes \({{\varvec{\Sigma }}}_g={{\varvec{\Lambda }}}_g{{\varvec{\Lambda }}}_g^{\prime }+{{\varvec{\Psi }}}_g\), where the loading matrix \({{\varvec{\Lambda }}}_g\) is a \(p\times q\) matrix of parameters, typically with \(q\ll p\), and the noise matrix \({{\varvec{\Psi }}}_g\) is a diagonal matrix. The adoption of this group covariance structure in (1) leads to the linear Gaussian cluster-weighted factor analyzers (CWFA) model, which is characterized by \(G\left[ pq-q\left( q-1\right) /2\right] +Gp\) parameters for the group covariance matrices. The CWFA model follows the principle of the general form of mixtures of factor analyzers regarding \({\varvec{X}}\). Mixtures of factor analyzers were introduced by Ghahramani and Hinton (1997) and further developed by Tipping and Bishop (1999) and McLachlan and Peel (2000b). More recent results have also been provided by Montanari and Viroli (2010, 2011).
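To make the saving concrete, the following minimal sketch evaluates the parameter counts quoted above. The function names and the example values \(G=2\), \(p=20\), \(q=5\) are illustrative assumptions, not taken from the paper.

```python
def cwm_total_params(G, p):
    """Total parameters of the linear Gaussian CWM in (1):
    (G-1) weights, G(p+2) regression terms, G[p + p(p+1)/2] for X."""
    return (G - 1) + G * (p + 2) + G * (p + p * (p + 1) // 2)


def full_covariance_params(G, p):
    """Parameters used by G unconstrained p x p covariance matrices."""
    return G * p * (p + 1) // 2


def factor_covariance_params(G, p, q):
    """Parameters used by G factor-structured covariance matrices:
    G[pq - q(q-1)/2] for the loadings plus Gp for the diagonal noise."""
    return G * (p * q - q * (q - 1) // 2) + G * p


# Hypothetical setting: two groups, twenty covariates, five factors.
print(full_covariance_params(2, 20))       # 420
print(factor_covariance_params(2, 20, 5))  # 220
```

In this hypothetical setting the factor structure roughly halves the number of covariance parameters, and the gap widens as \(p\) grows with \(q\) held fixed.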

Starting from the works of McNicholas and Murphy (2008), McNicholas (2010), Ingrassia et al. (2012b), and Ingrassia et al. (2013), a novel family of sixteen mixture models—obtained as special cases of the linear Gaussian CWFA by conveniently constraining the component variances of \(Y|{\varvec{x}}\) and \({\varvec{X}}\)—is introduced to facilitate parsimonious model-based clustering and classification in the defined paradigm. The novelty of this proposal is that it considers a family of models that use factor models in a regression context to effectively, and flexibly, reduce dimensionality.

The paper is organized as follows. Sect. 2 recalls the FRM; the linear Gaussian CWFA models are introduced in Sect. 3. Model fitting with the alternating expectation-conditional maximization (AECM) algorithm is presented in Sect. 4. Section 5 addresses computational details on some aspects of the AECM algorithm and discusses model selection and evaluation. Artificial and real data are considered in Sect. 6, and the paper concludes with discussion and suggestions for further work in Sect. 7.

2 The factor regression model

The factor analysis model (Spearman 1904; Bartlett 1953), for the \(p\)-dimensional variable \({\varvec{X}}\), postulates that

\[ {\varvec{X}}={{\varvec{\mu }}}+{{\varvec{\Lambda }}}{\varvec{U}}+{\varvec{e}}, \qquad (2) \]

where \({\varvec{U}}\sim N_q\left( {\varvec{0}}, {\varvec{I}}_q\right) \) is a \(q\)-dimensional \((q\ll p)\) vector of latent factors, \({{\varvec{\Lambda }}}\) is a \(p\times q\) matrix of factor loadings, and \({\varvec{e}}\sim N_p\left( {\varvec{0}}, {{\varvec{\Psi }}}\right) \), with \({{\varvec{\Psi }}}= \text{ diag}\left( \psi _1^2, \ldots , \psi _p^2\right) \), is independent of \({\varvec{U}}\). Then \({\varvec{X}}\sim N_p({{\varvec{\mu }}}, {{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}})\) and, conditional on \({\varvec{u}}\), \({\varvec{X}}|{\varvec{u}}\sim N_p\left( {{\varvec{\mu }}}+{{\varvec{\Lambda }}}{\varvec{u}},{{\varvec{\Psi }}}\right) \).

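Model (2) can be considered jointly with the standard (linear) regression model \(Y=\beta _0+{{\varvec{\beta }}}_1^{\prime }{\varvec{X}}+ \varepsilon \), leading to the factor regression model (FRM) (see West 2003; Wang et al. 2007, and Carvalho et al. 2008):

\[ \left\{ \begin{array}{l} Y=\beta _0+{{\varvec{\beta }}}_1^{\prime }{\varvec{X}}+\varepsilon \\ {\varvec{X}}={{\varvec{\mu }}}+{{\varvec{\Lambda }}}{\varvec{U}}+{\varvec{e}}, \end{array}\right. \]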

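where \(\varepsilon \sim N(0,\sigma ^2)\) is assumed to be independent of \({\varvec{U}}\) and \({\varvec{e}}\). The mean and variance of \(Y\) are given by

\[ \mathbb E \left( Y\right) =\beta _0+{{\varvec{\beta }}}^{\prime }_1{{\varvec{\mu }}} \quad \text{and}\quad \text{Var}\left( Y\right) ={{\varvec{\beta }}}^{\prime }_1\left( {{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}}\right) {{\varvec{\beta }}}_1+\sigma ^2, \]

respectively, and so \(Y \sim N\left( \beta _0 + {{\varvec{\beta }}}^{\prime }_1 {{\varvec{\mu }}}, {{\varvec{\beta }}}^{\prime }_1 \left( {{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime } + {{\varvec{\Psi }}}\right) {{\varvec{\beta }}}_1 + \sigma ^2\right) \).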
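As a small numerical check of these moments, the sketch below builds \({{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}}\) for a toy case with \(p=2\) and \(q=1\) and evaluates the mean and variance of \(Y\). All parameter values and function names are hypothetical and chosen only so that the arithmetic is easy to follow.

```python
def factor_covariance(Lam, psi):
    """Return Sigma = Lam Lam' + diag(psi) for a p x q loading matrix Lam
    (list of rows) and a length-p list psi of noise variances."""
    p = len(Lam)
    return [
        [sum(a * b for a, b in zip(Lam[i], Lam[j])) + (psi[i] if i == j else 0.0)
         for j in range(p)]
        for i in range(p)
    ]


def response_moments(beta0, beta1, mu, Lam, psi, sigma2):
    """Mean and variance of Y under the factor regression model."""
    Sigma = factor_covariance(Lam, psi)
    p = len(beta1)
    mean = beta0 + sum(b * m for b, m in zip(beta1, mu))
    Sigma_beta = [sum(Sigma[i][j] * beta1[j] for j in range(p)) for i in range(p)]
    var = sum(b * s for b, s in zip(beta1, Sigma_beta)) + sigma2
    return mean, var


# Hypothetical values: p = 2 covariates, q = 1 factor.
mean, var = response_moments(
    beta0=1.0, beta1=[1.0, -1.0], mu=[0.0, 3.0],
    Lam=[[1.0], [2.0]], psi=[0.5, 0.5], sigma2=0.25,
)
print(mean, var)  # -2.0 2.25
```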

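Consider the triplet \(\left( Y,{\varvec{X}}^{\prime },{\varvec{U}}^{\prime }\right) ^{\prime }\). Its mean is given by

\[ \mathbb E \left[ \begin{pmatrix} Y\\ {\varvec{X}}\\ {\varvec{U}}\end{pmatrix}\right] =\begin{pmatrix} \beta _0+{{\varvec{\beta }}}^{\prime }_1{{\varvec{\mu }}}\\ {{\varvec{\mu }}}\\ {\varvec{0}}\end{pmatrix}, \]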

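and because \(\text{ Cov}({\varvec{X}},Y)= ({{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}}){{\varvec{\beta }}}_1\) and \(\text{ Cov}({\varvec{U}},Y) = {{\varvec{\Lambda }}}^{\prime } {{\varvec{\beta }}}_1\), it results that

\[ \text{Cov}\left[ \begin{pmatrix} Y\\ {\varvec{X}}\\ {\varvec{U}}\end{pmatrix}\right] =\begin{pmatrix} {{\varvec{\beta }}}^{\prime }_1{{\varvec{\Sigma }}}{{\varvec{\beta }}}_1+\sigma ^2 &amp; {{\varvec{\beta }}}^{\prime }_1{{\varvec{\Sigma }}} &amp; {{\varvec{\beta }}}^{\prime }_1{{\varvec{\Lambda }}}\\ {{\varvec{\Sigma }}}{{\varvec{\beta }}}_1 &amp; {{\varvec{\Sigma }}} &amp; {{\varvec{\Lambda }}}\\ {{\varvec{\Lambda }}}^{\prime }{{\varvec{\beta }}}_1 &amp; {{\varvec{\Lambda }}}^{\prime } &amp; {\varvec{I}}_q\end{pmatrix}, \]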

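where \({{\varvec{\Sigma }}}={{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime } + {{\varvec{\Psi }}}\). Now, we can write the joint density of \(\left( Y,{\varvec{X}}^{\prime },{\varvec{U}}^{\prime }\right) ^{\prime }\) as

\[ p\left( y,{\varvec{x}},{\varvec{u}}\right) =\phi \left( y|{\varvec{x}},{\varvec{u}}\right) \phi \left( {\varvec{x}}|{\varvec{u}}\right) \phi \left( {\varvec{u}}\right) . \qquad (3) \]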

Here, the distribution and related parameters for both \({\varvec{X}}|{\varvec{u}}\) and \({\varvec{U}}\) are known. Thus, we only need to analyze the distribution of \(Y|{\varvec{x}},{\varvec{u}}\). Importantly, \(\mathbb E \left( Y|{\varvec{x}},{\varvec{u}}\right) = \mathbb E \left( Y|{\varvec{x}}\right) \) and \(\text{ Var}\left( Y|{\varvec{x}},{\varvec{u}}\right) = \text{ Var}(Y|{\varvec{x}})\), and so \(Y|{\varvec{x}},{\varvec{u}}\sim N\left( \beta _0 + {{\varvec{\beta }}}^{\prime }_1 {\varvec{x}}, \sigma ^2\right) \); mathematical details are given in Appendix A. This implies that \(\phi \left( y|{\varvec{x}},{\varvec{u}}\right) =\phi \left( y|{\varvec{x}}\right) \) and, therefore, \(Y\) is conditionally independent of \({\varvec{U}}\) given \({\varvec{X}}={\varvec{x}}\), so that (3) becomes

\[ p\left( y,{\varvec{x}},{\varvec{u}}\right) =\phi \left( y|{\varvec{x}}\right) \phi \left( {\varvec{x}}|{\varvec{u}}\right) \phi \left( {\varvec{u}}\right) . \qquad (4) \]

Similarly, \({\varvec{U}}|y,{\varvec{x}}\sim N\left( {{\varvec{\gamma }}}\left( {\varvec{x}}-{{\varvec{\mu }}}\right) ,{\varvec{I}}_q-{{\varvec{\gamma }}}{{\varvec{\Lambda }}}\right) \), where \({{\varvec{\gamma }}}={{\varvec{\Lambda }}}^{\prime }\left( {{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}}\right) ^{-1}\), and thus \({\varvec{U}}\) is conditionally independent of \(Y\) given \({\varvec{X}}={\varvec{x}}\). Therefore,

3 The modelling framework

3.1 The general model

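Assume that for each \(\Omega _g\), \(g=1, \ldots , G\), the pair \(({\varvec{X}},Y)\) satisfies a FRM, that is

\[ \left\{ \begin{array}{l} Y=\beta _{0g}+{{\varvec{\beta }}}_{1g}^{\prime }{\varvec{X}}+\varepsilon _g\\ {\varvec{X}}={{\varvec{\mu }}}_g+{{\varvec{\Lambda }}}_g{\varvec{U}}_g+{\varvec{e}}_g, \end{array}\right. \qquad (5) \]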

where \(\varvec{\Lambda }_g\) is a \(p\times q\) matrix of factor loadings, \({\varvec{U}}_g \sim N_q\left( \varvec{0},{\varvec{I}}_q\right) \) is the vector of factors, \({\varvec{e}}_g\sim N_p\left( \varvec{0},\varvec{\Psi }_g\right) \) are the errors, \(\varvec{\Psi }_g=\text{ diag}\left( \psi _{1g},\ldots ,\psi _{pg}\right) \), and \(\varepsilon _g \sim N(0,\sigma ^2_g)\). Then the linear Gaussian CWM in (1) can be extended in order to include the underlying factor structure (5) for \({\varvec{X}}\). In particular, by recalling that \(Y\) is conditionally independent of \({\varvec{U}}\) given \({\varvec{X}}={\varvec{x}}\) in the generic \(\Omega _g\), we get

\[ p\left( {\varvec{x}},y;{{\varvec{\theta }}}\right) =\sum _{g=1}^G \pi _g\,\phi \left( y|{\varvec{x}};m({\varvec{x}};{{\varvec{\beta }}}_g),\sigma ^2_g\right) \phi \left( {\varvec{x}};{{\varvec{\mu }}}_g,{{\varvec{\Lambda }}}_g{{\varvec{\Lambda }}}_g^{\prime }+{{\varvec{\Psi }}}_g\right) , \qquad (6) \]

where \({{\varvec{\theta }}}=\left\{ \pi _g,{{\varvec{\beta }}}_g,\sigma ^2_g,{{\varvec{\mu }}}_g,{{\varvec{\Lambda }}}_g,{{\varvec{\Psi }}}_g;g=1,\ldots ,G\right\} \). Model (6) is the linear Gaussian CWFA, which we shall refer to as the CWFA model herein.

3.2 Parsimonious versions of the model

To introduce parsimony, we extend the linear Gaussian CWFA after the fashion of McNicholas and Murphy (2008) by allowing constraints across groups on \(\sigma ^2_g\), \({{\varvec{\Lambda }}}_g\), and \({{\varvec{\Psi }}}_g\), and on whether \({{\varvec{\Psi }}}_g=\psi _g\varvec{I}_p\) (isotropic assumption). The full range of possible constraints provides a family of sixteen different parsimonious CWFAs (Table 1).

Here, models are identified by a sequence of four letters. The letters refer to whether or not the constraints \(\sigma ^2_g=\sigma ^2\), \({{\varvec{\Lambda }}}_g={{\varvec{\Lambda }}}\), \({{\varvec{\Psi }}}_g={{\varvec{\Psi }}}\), and \({{\varvec{\Psi }}}_g=\psi _g\varvec{I}_p\), respectively, are imposed. The constraints on the group covariances of \({\varvec{X}}\) are in the spirit of McNicholas and Murphy (2008), while those on the group variances of \(Y\) are borrowed from Ingrassia et al. (2013). Each letter is either C, if the corresponding constraint is applied, or U, if it is not. For example, model CUUC assumes equal \(Y\) variances between groups, unequal loading matrices, and unequal, but isotropic, noise.
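The four-letter naming scheme can be sketched in a few lines. The helper names and the plain-text constraint labels below are assumptions made for illustration.

```python
from itertools import product

# Order of the constraints encoded by the four letters (see text).
CONSTRAINTS = (
    "sigma2_g = sigma2",
    "Lambda_g = Lambda",
    "Psi_g = Psi",
    "Psi_g = psi_g * I_p",
)


def all_models():
    """Enumerate the sixteen model names, from CCCC to UUUU."""
    return ["".join(letters) for letters in product("CU", repeat=4)]


def decode(name):
    """Map a model name to {constraint: True if imposed (letter C)}."""
    return {c: letter == "C" for c, letter in zip(CONSTRAINTS, name)}


print(len(all_models()))  # 16
print(decode("CUUC"))
```

For CUUC, the decoded dictionary marks the first and fourth constraints as imposed, matching the description of equal \(Y\) variances and isotropic noise.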

3.3 Model-based classification

Suppose that \(m\) of the \(n\) observations in \(\mathcal S \) are labeled. Within the model-based classification framework, we use all of the \(n\) observations to estimate the parameters in (6); the fitted model classifies each of the \(n-m\) unlabeled observations through the corresponding maximum a posteriori probability (MAP). As a special case, if \(m=0\), we obtain the clustering scenario. Drawing on Hosmer Jr. (1973), Titterington et al. (1985, Section 4.3.3) show that knowing the labels of just a small proportion of observations a priori can lead to improved clustering performance.

Notationally, if the \(i\)th observation is labeled, denote with \(\widetilde{\varvec{z}}_i=\left( \widetilde{z}_{i1}, \ldots ,\widetilde{z}_{iG}\right) \) its component membership indicator. Then, arranging the data so that the first \(m\) observations are labeled, the complete-data likelihood becomes

where \(H\ge G\) (often, it is assumed that \(H=G\)). For notational convenience, in this paper we prefer to present the AECM algorithm in the model-based clustering paradigm (cf. Sect. 4). However, the extension to the model-based classification context is simply obtained by substituting the ‘dynamic’ (with respect to the iterations of the algorithm) \({\varvec{z}}_1,\ldots ,{\varvec{z}}_m\) with the ‘static’ \(\widetilde{{\varvec{z}}}_1,\ldots ,\widetilde{{\varvec{z}}}_m\).

3.4 On identifiability

No theoretical results are currently available on the identifiability of CWMs; however, because they can be seen as mixture models with random covariates, the results in Hennig (2000, Section 3, Model 2.a) can apply. With regard to the latent factor structure, the necessary conditions for the identifiability of factor analyzers are discussed by Bartholomew and Knott (1999).

4 Maximum likelihood estimation

4.1 The AECM algorithm

The AECM algorithm (Meng and van Dyk 1997) is used for fitting all models within the CWFA family defined in Sect. 3. The expectation-conditional maximization (ECM) algorithm proposed by Meng and Rubin (1993) replaces the M-step of the EM algorithm by a number of computationally simpler conditional maximization (CM) steps. The AECM algorithm is an extension of the ECM algorithm, where the specification of the complete data is allowed to be different on each CM step.

Let \(\mathcal S =\left\{ \left( {\varvec{x}}_i,y_i\right) ;i=1,\ldots ,n\right\} \) be a sample of size \(n\) from (6). In the EM framework, the generic observation \(\left( {\varvec{x}}_i,y_i\right) \) is viewed as being incomplete; its complete counterpart is given by \(\left( {\varvec{x}}_i,y_i,{\varvec{u}}_{ig},\varvec{z}_i\right) \), where \(\varvec{z}_i\) is the component-label vector in which \(z_{ig}=1\) if \(\left( {\varvec{x}}_i,y_i\right) \) comes from \(\Omega _g\) and \(z_{ig}=0\) otherwise. Then the complete-data likelihood, by considering the result in (4), can be written as

The idea of the AECM algorithm is to partition \({{\varvec{\theta }}}\), say \({{\varvec{\theta }}}=\left( {{\varvec{\theta }}}^{\prime }_1,{{\varvec{\theta }}}^{\prime }_2\right) ^{\prime }\), in such a way that the likelihood is easy to maximize for \({{\varvec{\theta }}}_1\) given \({{\varvec{\theta }}}_2\) and vice versa. For the application of the AECM algorithm to our CWFA family, one iteration consists of two cycles, with one E-step and one CM-step for each cycle. The two CM-steps correspond to the partition of \({{\varvec{\theta }}}\) into the two subvectors \({{\varvec{\theta }}}_1\) and \({{\varvec{\theta }}}_2\). Then, we can iterate between these two conditional maximizations until convergence. In the next two sections, we illustrate the two cycles for the UUUU model only. Details on the other models of the CWFA family are given in Appendix B.

4.2 First cycle

Here, \({{\varvec{\theta }}}_1=\left\{ \pi _g,{{\varvec{\beta }}}_g,{{\varvec{\mu }}}_g,\sigma ^2_g;g=1, \ldots , G\right\} \), where the missing data are the unobserved group labels \(\varvec{z}_i\), \(i=1,\ldots ,n\). The complete-data likelihood is

Consider the complete-data log-likelihood

where \(n_g = \displaystyle \sum _{i=1}^n z_{ig}\). Because \({{\varvec{\Sigma }}}_g = {{\varvec{\Lambda }}}_g{{\varvec{\Lambda }}}^{\prime }_g + {{\varvec{\Psi }}}_g\), we get

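The E-step on the first cycle of the \(\left( k+1\right) \)st iteration requires the calculation of \(Q_1\left( {{\varvec{\theta }}}_1; {{\varvec{\theta }}}^{\left( k\right) }\right) = \mathbb E _{{{\varvec{\theta }}}^{\left( k\right) }}\left[ l_{c} \left( {{\varvec{\theta }}}_1\right) |\mathcal S \right] \), which is the expected complete-data log-likelihood given the observed data and using the estimate \({{\varvec{\theta }}}^{\left( k\right) }\) from the \(k\)th iteration. In practice, it requires calculating \(\mathbb E _{{{\varvec{\theta }}}^{\left( k\right) }}\left[ Z_{ig}|\mathcal S \right] \); this step is achieved by replacing each \(z_{ig}\) by \(z_{ig}^{(k+1)}\), where

\[ z_{ig}^{(k+1)}=\frac{\pi _g^{(k)}\,\phi \left( y_i|{\varvec{x}}_i;m({\varvec{x}}_i;{{\varvec{\beta }}}_g^{(k)}),\sigma ^{2(k)}_g\right) \phi \left( {\varvec{x}}_i;{{\varvec{\mu }}}_g^{(k)},{{\varvec{\Lambda }}}_g^{(k)}{{\varvec{\Lambda }}}_g^{\prime (k)}+{{\varvec{\Psi }}}_g^{(k)}\right) }{p\left( {\varvec{x}}_i,y_i;{{\varvec{\theta }}}^{(k)}\right) }. \]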

For the M-step, the maximization of this complete-data log-likelihood yields

where \(n_g^{\left( k+1\right) }=\displaystyle \sum _{i=1}^n z_{ig}^{\left( k+1\right) }\). Following the notation in McLachlan and Peel (2000a), we set \({{\varvec{\theta }}}^{\left( k+1/2\right) }=\left\{ {{\varvec{\theta }}}_1^{\left( k+1\right) },{{\varvec{\theta }}}_2^{\left( k\right) }\right\} \).
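The E-step of the first cycle amounts to computing, for each observation, the posterior probability of each group under the current parameters. The following minimal sketch does this for a scalar covariate (\(p=1\)), so that the covariate density is univariate. The dictionary layout, function names, and parameter values are hypothetical, and this is not the authors' R implementation.

```python
import math


def normal_pdf(v, mean, var):
    """Univariate Gaussian density."""
    return math.exp(-0.5 * (v - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def e_step(data, params):
    """Posterior group probabilities z_ig for data = [(x, y), ...].

    Each entry of params describes one group with keys:
    pi (weight), beta0, beta1, sigma2 (regression), mu, s2 (covariate).
    """
    z = []
    for x, y in data:
        joint = [
            g["pi"]
            * normal_pdf(y, g["beta0"] + g["beta1"] * x, g["sigma2"])
            * normal_pdf(x, g["mu"], g["s2"])
            for g in params
        ]
        total = sum(joint)  # mixture density at (x, y); assumed non-zero
        z.append([j / total for j in joint])
    return z


def map_labels(z):
    """Maximum a posteriori group index for each observation."""
    return [max(range(len(row)), key=row.__getitem__) for row in z]


# Two hypothetical groups with opposite slopes and separated covariates.
params = [
    {"pi": 0.5, "beta0": 0.0, "beta1": 1.0, "sigma2": 1.0, "mu": -2.0, "s2": 1.0},
    {"pi": 0.5, "beta0": 0.0, "beta1": -1.0, "sigma2": 1.0, "mu": 2.0, "s2": 1.0},
]
z = e_step([(-2.0, -2.0), (2.0, -2.0)], params)
print(map_labels(z))  # [0, 1]
```

Because the covariate density enters the posterior, an observation can be assigned to a group on the basis of \({\varvec{x}}\) as well as of the regression fit, which is the assignment dependence discussed in Sect. 1.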

4.3 Second cycle

Here, \({{\varvec{\theta }}}_2=\left\{ {{\varvec{\Lambda }}}_g,{{\varvec{\Psi }}}_g;g=1, \ldots , G\right\} \), where the missing data are the unobserved group labels \(\varvec{z}_i\) and the latent factors \(\varvec{u}_{ig}\), \(i=1,\ldots ,n\), and \(g=1,\ldots ,G\). Therefore, the complete-data likelihood is

because \(Y\) is conditionally independent of \({\varvec{U}}\) given \({\varvec{X}}={\varvec{x}}\) and

Hence, the complete-data log-likelihood is

where we set

The E-step on the second cycle of the \(\left( k+1\right) \)st iteration requires the calculation of \(Q_2\left( {{\varvec{\theta }}}_2; {{\varvec{\theta }}}^{(k+1/2)}\right) = \mathbb E _{{{\varvec{\theta }}}^{(k+1/2)}} \left[ l_{c2} \left( {{\varvec{\theta }}}_2\right) | \mathcal S \right] \). Therefore, we must calculate the following conditional expectations: \(\mathbb E _{{{\varvec{\theta }}}^{(k+1/2)}} \left( Z_{ig} | \mathcal S \right) \), \(\mathbb E _{{{\varvec{\theta }}}^{(k+1/2)}} \left( Z_{ig} {\varvec{U}}_{ig} | \mathcal S \right) \), and \(\mathbb E _{{{\varvec{\theta }}}^{(k+1/2)}} \left( Z_{ig}{\varvec{U}}_{ig} {\varvec{U}}^{\prime }_{ig} |\mathcal S \right) \). Based on (2), these are given by

where

Thus, the \(g\)th term of the expected complete-data log-likelihood \(Q_2\left( {{\varvec{\theta }}}_2; {{\varvec{\theta }}}^{(k+1/2)}\right) \) becomes

where \(\text{ C}\left( {{\varvec{\theta }}}_1^{\left( k+1\right) }\right) \) denotes the terms in (9) that do not depend on \({{\varvec{\theta }}}_2\). Then (9) is maximized for \(\left\{ \hat{{{\varvec{\Lambda }}}}, \hat{{{\varvec{\Psi }}}}\right\} \), satisfying

Therefore,

From (10), we get

and substituting in (11) we get

which yields

Hence, the maximum likelihood estimates for \({{\varvec{\Lambda }}}\) and \({{\varvec{\Psi }}}\) are obtained by iteratively computing

where the superscript \(^{+}\) denotes the update estimate. Using (7) and (8), we get

4.4 Outline of the algorithm

In summary, the procedure can be described as follows. For a given initial guess \({{\varvec{\theta }}}^{\left( 0\right) }\), on the \(\left( k+1\right) \)st iteration, the algorithm carries out the following steps for \(g=1,\ldots ,G\):

1. Compute \(\pi ^{\left( k+1\right) }_g, {{\varvec{\mu }}}^{\left( k+1\right) }_g, {{\varvec{\beta }}}^{\left( k+1\right) }_g, \sigma ^{2\left( k+1\right) }_g\);

2. Set \({{\varvec{\Lambda }}}_g \leftarrow {{\varvec{\Lambda }}}^{\left( k\right) }_g\) and \({{\varvec{\Psi }}}_g\leftarrow {{\varvec{\Psi }}}^{\left( k\right) }_g\), and compute \({{\varvec{\gamma }}}_g\) and \({{\varvec{\Theta }}}_g\);

3. Repeat the following steps until convergence on \({{\varvec{\Lambda }}}_g\) and \({{\varvec{\Psi }}}_g\):

   (a) Set \({{\varvec{\Lambda }}}_g^{+} \leftarrow {\varvec{S}}^{\left( k+1\right) }_g {{\varvec{\gamma }}}^{\prime }_g {{\varvec{\Theta }}}_g^{-1}\) and \({{\varvec{\Psi }}}^{+}_g \leftarrow \text{ diag}\left\{ {\varvec{S}}^{\left( k+1\right) }_g - {{\varvec{\Lambda }}}_g^{+} {{\varvec{\gamma }}}_g{\varvec{S}}^{\left( k+1\right) }_g\right\} \);

   (b) Set \({{\varvec{\gamma }}}^{+}_g \leftarrow {{\varvec{\Lambda }}}^{\prime +}_g \left( {{\varvec{\Lambda }}}_g^{+} {{\varvec{\Lambda }}}^{\prime +}_g +{{\varvec{\Psi }}}^{+}_g\right) ^{-1}\) and \({{\varvec{\Theta }}}^{+}_g \leftarrow {\varvec{I}}_q-{{\varvec{\gamma }}}^{+}_g {{\varvec{\Lambda }}}^{+}_g+ {{\varvec{\gamma }}}^{+}_g {\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}^{\prime +}_g\);

   (c) Set \({{\varvec{\Lambda }}}_g \leftarrow {{\varvec{\Lambda }}}_g^{+}\), \({{\varvec{\Psi }}}_g \leftarrow {{\varvec{\Psi }}}_g^+\), \({{\varvec{\gamma }}}_g \leftarrow {{\varvec{\gamma }}}^{+}_g\), and \({{\varvec{\Theta }}}_g \leftarrow {{\varvec{\Theta }}}^{+}_g\);

4. Set \({{\varvec{\Lambda }}}_g^{(k+1)} \leftarrow {{\varvec{\Lambda }}}_g\) and \({{\varvec{\Psi }}}^{(k+1)}_{g} \leftarrow {{\varvec{\Psi }}}_g\).
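The inner loop of step 3 can be illustrated in the special case of a single factor (\(q=1\)) for one group, where \({{\varvec{\Theta }}}_g\) is a scalar and the inverse of \({{\varvec{\Lambda }}}_g{{\varvec{\Lambda }}}_g^{\prime }+{{\varvec{\Psi }}}_g\) is available in closed form through the Sherman-Morrison identity. Both the restriction to \(q=1\) and the use of that identity are simplifications introduced here so that the sketch needs no linear algebra library; the function name and the example values are hypothetical.

```python
def update_single_factor(S, lam, psi):
    """One pass of the loading/noise update for one group with q = 1.

    S   : p x p symmetric matrix (list of rows), the group scatter matrix
    lam : length-p list, the single column of the loading matrix
    psi : length-p list, the diagonal of the noise matrix
    """
    p = len(lam)
    # gamma = lam' (lam lam' + Psi)^{-1} = lam' Psi^{-1} / (1 + lam' Psi^{-1} lam)
    w = [lam[j] / psi[j] for j in range(p)]
    denom = 1.0 + sum(lam[j] * w[j] for j in range(p))
    gamma = [w[j] / denom for j in range(p)]
    # S gamma' (equal to the transpose of gamma S because S is symmetric)
    s_gamma = [sum(S[i][j] * gamma[j] for j in range(p)) for i in range(p)]
    # Theta = 1 - gamma lam + gamma S gamma'  (a scalar when q = 1)
    theta = (1.0
             - sum(gamma[j] * lam[j] for j in range(p))
             + sum(gamma[i] * s_gamma[i] for i in range(p)))
    lam_new = [s_gamma[i] / theta for i in range(p)]
    # diag(S - lam_new gamma S)
    psi_new = [S[i][i] - lam_new[i] * s_gamma[i] for i in range(p)]
    return lam_new, psi_new


# Sanity check: if S already equals lam lam' + Psi, the update is a fixed point.
lam = [1.0, 2.0, 0.5]
psi = [0.5, 1.0, 0.25]
S = [[lam[i] * lam[j] + (psi[i] if i == j else 0.0) for j in range(3)]
     for i in range(3)]
print(update_single_factor(S, lam, psi))
```

In the general case with \(q>1\), the same steps apply with \({{\varvec{\gamma }}}_g\) a \(q\times p\) matrix and \({{\varvec{\Theta }}}_g\) a \(q\times q\) matrix that must be inverted.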

4.5 AECM initialization: a 5-step procedure

The choice of starting values is a well-known and important issue in EM-based algorithms. The standard approach consists of selecting a value for \(\varvec{\theta }^{\left( 0\right) }\). An alternative method, more natural in the authors’ opinion, consists of choosing a value for \(\varvec{z}_i^{\left( 0\right) }\), \(i=1,\ldots ,n\) (see McLachlan and Peel 2000a, p. 54). Within this approach, and due to the hierarchical structure of the CWFA family of parsimonious models, we propose a 5-step hierarchical initialization procedure.

For a fixed number of groups \(G\), let \(\varvec{z}_i^{\left( 0\right) }\), \(i=1,\ldots ,n\), be the initial classification for the AECM algorithm, so that \(z_{ig}^{\left( 0\right) }\in \left\{ 0,1\right\} \) and \(\sum _gz_{ig}^{\left( 0\right) }=1\). The set \(\left\{ {\varvec{z}}_i^{\left( 0\right) };i=1, \ldots , n\right\} \) can be obtained either through some clustering procedure (here we consider the \(k\)-means method) or by random initialization, for example by sampling from a multinomial distribution with probabilities \(\left( 1/G,\ldots ,1/G\right) \). Then, at the first step of the procedure, the most constrained CCCC model is estimated from these starting values. At the second step, the resulting (AECM-estimated) \(\hat{z}_{ig}\) are taken as the starting group membership labels to initialize the AECM algorithm for the four models \(\left\{ \text{ UCCC}, \text{ CUCC}, \text{ CCUC}, \text{ CCCU}\right\} \) obtained by relaxing one of the four constraints. At the third step, the AECM algorithm for each of the six models \(\left\{ \text{ CCUU}, \text{ CUCU}, \text{ UCCU}, \text{ CUUC}, \text{ UCUC}, \text{ UUCC}\right\} \) with two constraints is initialized using the \(\hat{z}_{ig}\) from the model with the highest likelihood among the previous-step models from which it is obtained by relaxing one constraint. For example, to initialize CCUU we use the \(\hat{z}_{ig}\) from the model having the highest likelihood between CCCU and CCUC. In this fashion, the initialization procedure continues according to the scheme displayed in Fig. 1, until the least constrained model UUUU is estimated at the fifth step.
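The hierarchy behind the 5-step procedure can be sketched as follows: a model is fitted at step \(s\) if its name contains \(s-1\) letters U, and its candidate initializers are the previous-step models obtained by turning one U back into C. The function names and the log-likelihood values in the example are hypothetical.

```python
from itertools import product


def models_by_step():
    """Group the sixteen models by the step at which they are fitted."""
    steps = {s: [] for s in range(1, 6)}
    for letters in product("CU", repeat=4):
        name = "".join(letters)
        steps[name.count("U") + 1].append(name)
    return steps


def parents(name):
    """Previous-step models that yield `name` when one constraint is relaxed."""
    return [name[:i] + "C" + name[i + 1:] for i, ch in enumerate(name) if ch == "U"]


def pick_initializer(name, loglik):
    """Parent with the highest maximized log-likelihood.

    `loglik` maps already-fitted model names to log-likelihoods.
    Not applicable to CCCC, which has no parent and is started from
    k-means or random labels.
    """
    return max(parents(name), key=lambda m: loglik[m])


print([len(models_by_step()[s]) for s in range(1, 6)])  # [1, 4, 6, 4, 1]
print(parents("CCUU"))                                   # ['CCCU', 'CCUC']
# Hypothetical log-likelihoods for the two parents of CCUU.
print(pick_initializer("CCUU", {"CCCU": -10.0, "CCUC": -9.0}))  # CCUC
```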

For all of the models in the CWFA family, in analogy with McNicholas and Murphy (2008), the initial values for the elements of \(\varvec{\Lambda }_g\) and \(\varvec{\Psi }_g\) are generated from the eigen-decomposition of \(\varvec{S}_g\) as follows. The \(\varvec{S}_g\) are computed based on the values of \(z_{ig}^{\left( 0\right) }\). The eigen-decomposition of each \(\varvec{S}_g\) is obtained using the Householder reduction and the QL method (details given by Press et al. 1992). Then the initial values of the elements of \(\varvec{\Lambda }_g\) are set as \(\lambda _{ij}=\sqrt{d_j}\rho _{ij}\), where \(d_j\) is the \(j\)th largest eigenvalue of \(\varvec{S}_g\) and \(\rho _{ij}\) is the \(i\)th element of the eigenvector corresponding to the \(j\)th largest eigenvalue of \(\varvec{S}_g\), where \(i\in \left\{ 1,2,\ldots ,p\right\} \) and \(j\in \left\{ 1,2,\ldots ,q\right\} \). The \(\varvec{\Psi }_g\) are then initialized as \(\varvec{\Psi }_g=\text{ diag}\left( \varvec{S}_ g-\varvec{\Lambda }_g\varvec{\Lambda }_g^{\prime }\right) \).

4.6 Convergence criterion

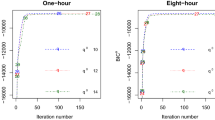

The Aitken acceleration procedure (Aitken 1926) is used to estimate the asymptotic maximum of the log-likelihood at each iteration of the AECM algorithm. Based on this estimate, a decision is made about whether the algorithm has reached convergence, i.e., whether the log-likelihood is sufficiently close to its estimated asymptotic value. The Aitken acceleration at iteration \(k\) is given by

\[ a^{\left( k\right) }=\frac{l^{\left( k+1\right) }-l^{\left( k\right) }}{l^{\left( k\right) }-l^{\left( k-1\right) }}, \]

where \(l^{\left( k+1\right) }\), \(l^{\left( k\right) }\), and \(l^{\left( k-1\right) }\) are the log-likelihood values from iterations \(k+1\), \(k\), and \(k-1\), respectively. Then, the asymptotic estimate of the log-likelihood at iteration \(k + 1\) is

\[ l_{\infty }^{\left( k+1\right) }=l^{\left( k\right) }+\frac{1}{1-a^{\left( k\right) }}\left( l^{\left( k+1\right) }-l^{\left( k\right) }\right) \]

(Böhning et al. 1994). In the analyses in Sect. 6, we stop our algorithms when \(l_{\infty }^{\left( k+1\right) }-l^{\left( k\right) }<\epsilon \) (Böhning et al. 1994; Lindsay 1995). Note that we use \(\epsilon =0.05\) for the analyses herein.
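A minimal sketch of this stopping rule is given below. It assumes the usual form of the Aitken acceleration, \(a^{(k)}=(l^{(k+1)}-l^{(k)})/(l^{(k)}-l^{(k-1)})\), and of the asymptotic estimate, \(l_{\infty }^{(k+1)}=l^{(k)}+(l^{(k+1)}-l^{(k)})/(1-a^{(k)})\). The function names and the log-likelihood values are hypothetical.

```python
def aitken_asymptote(l_prev, l_curr, l_next):
    """Estimated asymptotic log-likelihood from three consecutive values.

    Assumes l_curr != l_prev and an acceleration different from 1;
    a production implementation would guard against both cases.
    """
    a = (l_next - l_curr) / (l_curr - l_prev)
    return l_curr + (l_next - l_curr) / (1.0 - a)


def has_converged(l_prev, l_curr, l_next, eps=0.05):
    """Stop when the asymptotic estimate is within eps of l_curr."""
    return aitken_asymptote(l_prev, l_curr, l_next) - l_curr < eps


# Hypothetical log-likelihood sequences.
print(has_converged(-100.0, -95.0, -93.0))     # False: still far from the limit
print(has_converged(-100.0, -99.98, -99.97))   # True: increments are negligible
```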

5 Model selection and performance assessment

5.1 Model selection

The CWFA model, in addition to \({{\varvec{\theta }}}\), is also characterized by the number of latent factors \(q\) and by the number of mixture components \(G\). So far, these quantities have been treated as a priori fixed. Nevertheless, the estimation of these is required, for practical purposes, when choosing a relevant model.

For model-based clustering and classification, several model selection criteria are used, such as the Bayesian information criterion (BIC; Schwarz 1978), the integrated completed likelihood (ICL; Biernacki et al. 2000), and the Akaike information criterion (AIC; Sakamoto et al. 1983). Among these, the BIC is the most widely used in the literature and is given by

\[ \text{BIC}=2l(\hat{{{\varvec{\theta }}}})-\eta \ln n, \]

where \(l(\hat{{{\varvec{\theta }}}})\) is the maximized log-likelihood and \(\eta \) is the number of free parameters. This is the model selection criterion used in the analyses of Sect. 6.
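The selection rule can be sketched as follows, assuming the "larger is better" form \(\text{BIC}=2l(\hat{{{\varvec{\theta }}}})-\eta \ln n\), which is consistent with the model with the largest BIC being selected in Sect. 6. The function names, model labels, and numerical values are hypothetical.

```python
import math


def bic(loglik, n_params, n):
    """BIC in the form 2 * loglik - n_params * ln(n); larger is better."""
    return 2.0 * loglik - n_params * math.log(n)


def best_model(fits, n):
    """Name of the fitted model with the largest BIC.

    `fits` maps a model label to (maximized log-likelihood, free parameters).
    """
    return max(fits, key=lambda name: bic(*fits[name], n))


# Hypothetical fits: B has the higher likelihood but twice the parameters.
fits = {"A": (-100.0, 10), "B": (-98.0, 20)}
print(best_model(fits, n=100))  # A
```

The example shows the penalty at work: the small gain in log-likelihood of the larger model does not compensate for its ten additional parameters.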

5.2 Adjusted Rand index

Although the data analyses of Sect. 6 are mainly conducted as clustering examples, the true classifications are actually known for these data. In these examples, the adjusted Rand index (ARI; Hubert and Arabie 1985) is used to measure class agreement. The Rand index (RI; Rand 1971) is based on pairwise comparisons and is obtained by dividing the number of pairwise agreements (observations that should be in the same group and are, plus those that should not be in the same group and are not) by the total number of pairs. The ARI corrects the RI to account for agreement by chance: a value of ‘1’ indicates perfect agreement, ‘0’ is expected under random classification, and negative values indicate a classification that is worse than would be expected by guessing.
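For reference, the ARI can be computed from the contingency table of the two partitions with the standard Hubert and Arabie formula. The sketch below is one straightforward way to do so; the function name is an assumption, and returning 1 for degenerate inputs is a convention chosen here.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index between two labelings of the same observations."""
    n = len(labels_true)
    if n < 2:
        return 1.0  # convention for degenerate input
    cells = Counter(zip(labels_true, labels_pred))
    rows = Counter(labels_true)
    cols = Counter(labels_pred)
    sum_cells = sum(comb(c, 2) for c in cells.values())
    sum_rows = sum(comb(c, 2) for c in rows.values())
    sum_cols = sum(comb(c, 2) for c in cols.values())
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = 0.5 * (sum_rows + sum_cols)
    if max_index == expected:
        return 1.0  # both partitions are trivial
    return (sum_cells - expected) / (max_index - expected)


print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0 (labels permuted)
print(adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1]))  # negative: worse than chance
```

The first call illustrates that the index is invariant to a relabeling of the groups, which matters in clustering because group labels are arbitrary.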

6 Data analyses

This section presents the application of the CWFA family of models to both artificial and real data sets. Code for the AECM algorithm, described in this paper, was written in the R computing environment (R Development Core Team 2012).

6.1 Simulated data

6.1.1 Example 1

The first data set consists of a sample of size \(n=175\) drawn from model UUCU with \(G=2,\, n_1=75,\,n_2=100,\,p=5\), and \(q=2\) (see Fig. 2 for details). The parameters used for the simulation of the data are given in Table 2 (see Appendix C.1 for details on the covariance matrices \({{\varvec{\Sigma }}}_g\), \(g=1,\ldots ,G\)).

All 16 CWFA models were fitted to the data for \(G\in \{1,2,3\}\) and \(q\in \{1,2\}\), resulting in 96 different models. As noted above (Sect. 4.5), initialization of the \({\varvec{z}}_i\), \(i=1,\ldots ,n\), for the most constrained model (CCCC), and for each combination \(\left( G,q\right) \), was done using the \(k\)-means algorithm according to the kmeans function of the R package stats. The remaining 15 models, for each combination \(\left( G,q\right) \), were initialized using the 5-step hierarchical initialization procedure described in Sect. 4.5. The BIC values for all models were computed and the model with the largest BIC value was selected as the best. In this example, the model corresponding to the largest BIC value (\(-\)5,845.997) was a \(G=2\) component UUCU model with \(q=2\) latent factors, the same as the model used to generate the data. The selected model gave perfect classification and the estimated parameters were very close to the parameters used for data simulation (see Table 2 and Appendix C.1).

Figure 3 shows the BIC values of the top 10 models sorted in increasing order. The horizontal dotted line separates the models with a BIC value within 1% of the maximum (over all 96 models) BIC value (hereafter simply referred to as the ‘1% line’). As mentioned earlier, the model with the largest BIC was UUCU (with \(G=2\) and \(q=2\)). The subsequent two models, those above the 1% line, were UUUU with \(G=2\) and \(q=2\) (BIC equal to \(-\)5,867.006) and CUCU with \(G=2\) and \(q=2\) (BIC equal to \(-\)5,869.839). These two models are structurally very close to the true UUCU model and also yielded perfect classification. It should also be noted that most of the models with high BIC values have \(G=2\) and \(q=2\).
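The 1% line can be checked against the three BIC values reported above. The sketch below interprets "within 1% of the maximum" as \(\text{BIC}\ge \text{BIC}_{\max }-0.01\,|\text{BIC}_{\max }|\); this reading, like the function name, is an assumption.

```python
def within_one_percent(bics):
    """Model names whose BIC lies within 1% of the largest BIC, best first."""
    best = max(bics.values())
    cutoff = best - 0.01 * abs(best)
    kept = [name for name, value in bics.items() if value >= cutoff]
    return sorted(kept, key=lambda name: -bics[name])


# BIC values reported for Example 1 (all with G = 2 and q = 2).
example_1 = {"UUCU": -5845.997, "UUUU": -5867.006, "CUCU": -5869.839}
print(within_one_percent(example_1))  # ['UUCU', 'UUUU', 'CUCU']
```

Under this reading the cutoff is about \(-\)5,904.46, so all three reported models lie above the line.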

6.1.2 Example 2

For the second data set, a sample of size \(n=235\) was drawn from the CUUC model with \(G=3\) groups (with \(n_1=75\), \(n_2=100\), and \(n_3=60\)) and \(q=2\) latent factors (see Fig. 4).

All 16 CWFA models were fitted to the data for \(G\in \{1,2,3,4\}\) and \(q\in \{1,2\}\), resulting in 128 different models. The algorithm was initialized in the same way as for Example 1. The model with the highest BIC (\(-\)6,579.116) was CUUC with \(G=3\) and \(q=2\), resulting in perfect classification. The estimated parameters of this model were very close to the true ones (Table 3 and Appendix C.2).

The plot of BIC values is omitted for the sake of space. Besides the true model, we underline that the other three models above the 1% line are UUUC (BIC = \(-\)6,583.692), CUUU (BIC = \(-\)6,637.222), and UUUU (BIC = \(-\)6,641.798), all with \(G=3\) and \(q=2\). Thus, these models are congruent, with respect to the true one, in terms of \(G\) and \(q\). Moreover, they have a similar covariance structure to the true one (CUUC) and yielded perfect classification.

6.1.3 Example 3

A third simulated data set, of dimension \(p+1=11\), was generated from the CCUU model with \(G=2\) groups (with \(n_1=75\) and \(n_2=100\)) and \(q=4\) latent factors. All 16 CWFA models were fitted to the data for \(G\in \{1,2,3\}\) and \(q\in \{1,2,3,4,5\}\), resulting in 240 different models. The algorithm was initialized in the same way as for Example 1. The model with the highest BIC (\(-\)10,190.23) was CCUU with \(G=2\) and \(q=4\), resulting in perfect classification. The estimated parameters of this model were very close to the true ones (Table 4).

As before, the plot of BIC values is omitted for the sake of space. The next three models with the highest BIC were UCUU (BIC \(= -10,192.989, q=4\)), CCUU (BIC \(= -10,195.264, q=5\)), and UCUU (BIC \(= -10,198.027, q=5\)), all with \(G=2\). All of these models had two components and a constrained loading matrix, and yielded perfect classification.

6.1.4 Example 4

A fourth simulated data set, of dimension \(p+1=21\), was generated from the UUCC model with \(G=2\) groups (with \(n_1=120\) and \(n_2=100\)) and \(q=5\) latent factors.

All 16 CWFA models were fitted to the data for \(G\in \{1,2,3\}\) and \(q\in \{1,2,3,4,5,6\}\), resulting in 288 different models. The algorithm was initialized in the same way as for Example 1. The model with the highest BIC (\(-\)24,199.57) was UUCC with \(G=2\) and \(q=5\), resulting in perfect classification. The estimated parameters of this model were very close to the true ones (Table 5).
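The model counts quoted in these examples follow from crossing the 16 identifiers with the candidate values of \(G\) and \(q\). A minimal sketch of that enumeration is given below; the helper name `cwfa_grid` is hypothetical, and every combination is counted separately, as in the text.

```python
from itertools import product


def cwfa_grid(groups, factors):
    """Enumerate (identifier, G, q) triples for the 16-member CWFA family."""
    # Each of the four letters is U (unconstrained) or C (constrained).
    identifiers = ["".join(letters) for letters in product("UC", repeat=4)]
    return list(product(identifiers, groups, factors))


# Example 4: G in {1, 2, 3} and q in {1, ..., 6}.
grid = cwfa_grid(range(1, 4), range(1, 7))
print(len(grid))
```

The same helper reproduces the other counts in Sect. 6 when given the corresponding ranges for \(G\) and \(q\).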

The plot of BIC values is omitted for the sake of space. The next three models with the highest BIC were UUUC (BIC = \(-\)24,204.955), CUCC (BIC = \(-\)24,213.742), and CUUC (BIC = \(-\)24,219.126), all with \(G=2\) and \(q=5\).

6.2 The f.voles data set

In addition to the simulated data analyses of Sect. 6.1, the CWFA family was also applied to a real data set for both clustering and classification. The f.voles data set, detailed in Flury (1997, Table 5.3.7) and available in the Flury package for R, consists of measurements of female voles from two species, Microtus californicus and M. ochrogaster. The data consist of 86 observations for which we have a binary variable \(\mathsf{Species}\) denoting the species (\(45\) M. ochrogaster and \(41\) M. californicus), a variable \(\mathsf{Age}\) measured in days, and six remaining variables related to skull measurements. The names of the variables are the same as in the original analysis of this data set by Airoldi and Hoffmann (1984): \(\mathsf{L}_2=\text{condylo-incisive length}\), \(\mathsf{L}_9=\text{length of incisive foramen}\), \(\mathsf{L}_7=\text{alveolar length of upper molar tooth row}\), \(\mathsf{B}_3=\text{zygomatic width}\), \(\mathsf{B}_4=\text{interorbital width}\), and \(\mathsf{H}_1=\text{skull height}\). All of the variables related to the skull are measured in units of 0.1 mm.

The purpose of Airoldi and Hoffmann (1984) was to study age variation in M. californicus and M. ochrogaster and to predict age on the basis of the skull measurements. For our purpose, we assume that data are unlabelled with respect to \(\mathsf{Species}\) and that our interest is in evaluating clustering and classification using the CWFA family models as well as comparing the algorithm with some well-established mixture model-based techniques. Therefore, \(\mathsf{Age}\) can be considered the natural \(Y\) variable and the \(p=6\) skull measurements can be considered as the \({\varvec{X}}\) variable for the CWFA framework.

6.2.1 Clustering

All sixteen linear Gaussian CWFA models were fitted—assuming no known group memberships—for \(G\in \{2,\ldots ,5\}\) components and \(q\in \{1,2,3\}\) latent factors, resulting in a total of 192 different models. The model with the largest BIC value was CCCU with \(G=3\) and \(q=1\), with a BIC of \(-3{,}837.698\) and an ARI of 0.72. Table 6 displays the clustering results from this model.
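For readers who wish to reproduce this kind of agreement measure, the adjusted Rand index (ARI) of Hubert and Arabie (1985) can be computed from the contingency table of two partitions. The following is a minimal pure-Python sketch; the function name and the toy labels are illustrative assumptions and are not the f.voles data.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index between two partitions of the same observations."""
    n = len(labels_true)
    if n != len(labels_pred) or n < 2:
        raise ValueError("need two label vectors of equal length >= 2")
    cells = Counter(zip(labels_true, labels_pred))  # contingency table
    rows = Counter(labels_true)                     # row margins
    cols = Counter(labels_pred)                     # column margins
    sum_cells = sum(comb(c, 2) for c in cells.values())
    sum_rows = sum(comb(c, 2) for c in rows.values())
    sum_cols = sum(comb(c, 2) for c in cols.values())
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = 0.5 * (sum_rows + sum_cols)
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both partitions are trivial
    return (sum_cells - expected) / (max_index - expected)


# Toy illustration: two true groups, with the second split into two clusters.
truth = [0] * 4 + [1] * 4
found = [0] * 4 + [1, 1, 2, 2]
print(round(adjusted_rand_index(truth, found), 3))
```

The toy example illustrates why an ARI below 1 does not by itself imply confusion between the true groups: splitting one group into sub-clusters lowers the index even when no observation is assigned across groups.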

Table 6 also shows the clustering results of the following model-based clustering approaches applied to the vector \(({\varvec{X}},Y)\):

- PGMM: parsimonious latent Gaussian mixture models as described in McNicholas and Murphy (2008, 2010b), McNicholas (2010), and McNicholas et al. (2010), and estimated via the pgmmEM function of the R package pgmm (McNicholas et al. 2011);

- FMA: factor mixture analysis as described in Montanari and Viroli (2010, 2011), and implemented via the fma function of the R package FactMixtAnalysis;

- FMR: finite mixtures of linear Gaussian regressions as described, among many others, in DeSarbo and Cron (1988), and estimated via the stepFlexmix function of the R package flexmix (Leisch 2004);

- FMRC: finite mixtures of linear Gaussian regressions with concomitants as described in Grün and Leisch (2008) and estimated via the stepFlexmix function of the R package flexmix; and

- MCLUST: parsimonious mixtures of Gaussian distributions as described in Banfield and Raftery (1993), Celeux and Govaert (1995), and Fraley and Raftery (2002), and estimated via the Mclust function of the R package mclust (see Fraley et al. 2012, for details).

In all cases, we use the range \(G\in \{2,\ldots ,5\}\) for mixture components and values \(q\in \{1,2,3\}\) for the number of latent factors where relevant (i.e., PGMM and FMA). The best model is selected using the BIC. Finally, note that the pair \(({\varvec{X}},Y)\) is used as a unique input in MCLUST and FMA. Furthermore, as regards the former, all ten available covariance structures in the package mclust are considered while, ceteris paribus with the other approaches, no further covariates are considered for FMA. As seen from Table 6, M. californicus was classified correctly using the four approaches: CWFA, PGMM, FMA, and MCLUST. M. ochrogaster was classified into two sub-clusters using CWFA and PGMM while FMA and MCLUST classified it into one cluster. However, the CWFA approach had no misclassifications between the two species but PGMM, FMA, and MCLUST misclassified two M. ochrogaster as M. californicus. On these data, the other two approaches, FMR and FMRC, do not show a good clustering performance; poor results were obtained for FMR in particular.

For completeness, we give estimated parameters for the chosen CWFA model (CCCU, \(q=1\), \(G=3\)) in Table 7.

The plot of BIC values is omitted for the sake of space. In Table 8 we list the eight models which attained the largest BIC values. Notably, the first four models were characterized by \(G=3\) components and the subsequent four by \(G=2\).

Airoldi and Hoffmann (1984) mention that some unexplained geographic variation may exist among the voles. However, no covariate was available with such information. Hence, we opted for the scatter plot matrix to evaluate the presence of sub-clusters (see Fig. 5). Here, the scatter plot of the variables \(\mathsf B _3\) versus \(\mathsf B _4\) shows the presence of distinct sub-clusters for M. ochrogaster, which supports our results attained using CWFA modelling.

6.2.2 Classification

A subset of observations, consisting of 50 % of the data, was randomly selected and these observations were assumed to have known component membership. To allow for the unobserved sub-cluster noted in the clustering application of Sect. 6.2.1, we ran the algorithm for \(G=2,3\) and \(q=1,2,3\). The best model (CCUU with \(G=2\) and \(q=1\)) selected by the BIC (\(-\)3,843.482) gave perfect classification, as we can see from Table 9a.

We also ran the classification assuming that the data are actually comprised of three known groups. Therefore, using the classification observed by clustering, we also ran the classification algorithm with 50 % known (i.e., labelled) and 50 % unknown (i.e., unlabelled). To further allow for the unobserved sub-cluster, we ran the algorithm for \(G\in \{3,4\}\) and \(q\in \{1,2,3\}\). The model selected using the BIC was CCCU with \(G=3\) and \(q=1\), with a BIC value of \(-\)3,837.383. Even though the BIC value observed using the classification approach (with \(G=3\) known groups) was very close to the BIC value using clustering, the sub-clusters do not have precisely the same classification using the two approaches. This could be a consequence of the classification of borderline observations among the sub-clusters using maximum a posteriori probability. However, the BIC value for the classification using three known groups was higher than the BIC value using two known groups, which again suggests the presence of sub-clusters.
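The effect of maximum a posteriori (MAP) assignment on borderline observations can be illustrated with a small sketch. It assumes that posterior component probabilities have already been estimated, that labelled observations keep their known component, and that unlabelled ones are assigned to the component with the largest posterior probability. The function name and the toy posteriors are hypothetical and are not values from the paper.

```python
def map_classify(posteriors, known_labels):
    """Assign each observation to a component.

    posteriors   : list of rows, one per observation, of posterior
                   probabilities over the G components.
    known_labels : list with the known component index for labelled
                   observations and None for unlabelled ones.
    """
    if len(posteriors) != len(known_labels):
        raise ValueError("posteriors and known_labels must have equal length")
    assigned = []
    for probs, label in zip(posteriors, known_labels):
        if label is not None:
            assigned.append(label)  # labelled: membership is fixed
        else:
            # unlabelled: component with the largest posterior probability
            assigned.append(max(range(len(probs)), key=lambda g: probs[g]))
    return assigned


posteriors = [
    [0.98, 0.01, 0.01],
    [0.05, 0.50, 0.45],  # borderline between components 1 and 2
    [0.02, 0.08, 0.90],
    [0.10, 0.44, 0.46],  # borderline, falls on the other side
]
known = [0, None, 2, None]
print(map_classify(posteriors, known))
```

In the toy posteriors, the two unlabelled observations have nearly equal probabilities for two components, so a small change in the fitted model could move them from one sub-cluster to the other.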

7 Conclusions, discussion, and future work

In this paper, we introduced a novel family of 16 parsimonious CWFA models. They are linear Gaussian cluster-weighted models in which a latent factor structure is assumed for the explanatory random vector in each mixture component. The parsimonious versions are obtained by combining all of the constraints described in McNicholas and Murphy (2008) with one of the constraints illustrated in Ingrassia et al. (2013). Due to the introduction of a latent factor structure, the number of parameters is linear in the dimensionality, as opposed to the traditional linear Gaussian CWM, where the number of parameters grows quadratically; therefore, our approach is more suitable for modelling complex high-dimensional data.

The AECM algorithm was used for maximum likelihood estimation of the model parameters. Being based on the EM algorithm, it is very sensitive to starting values due to the presence of multiple local maxima. To overcome this problem, we proposed a 5-step hierarchical initialization procedure that utilizes the nested structures of the models within the CWFA family. Because these models have a hierarchical/nested structure, this initialization procedure guarantees a natural ranking on the likelihoods of the models in our family.

Using artificial and real data, we demonstrated that these models give very good clustering performance and that the AECM algorithms used were able to recover the parameters very well.

Also, while the BIC was able to identify the correct model in our simulations, the choice of a convenient model selection criterion for these models is still an open question. Some future work will be devoted to the search for good model selection criteria for these models. Finally, we assumed that the number of factors was the same across groups, which might be too restrictive. However, assuming otherwise also increases the number of models that need to be fitted, resulting in an additional computational burden. Approaches such as variational Bayes approximations might be useful for significantly reducing the number of models that need to be fitted.

References

Airoldi J, Hoffmann R (1984) Age variation in voles (Microtus californicus, M. ochrogaster) and its significance for systematic studies, Occasional papers of the Museum of Natural History, vol 111. University of Kansas, Lawrence

Aitken AC (1926) On Bernoulli’s numerical solution of algebraic equations. Proc Royal Soc Edinburgh 46:289–305

Andrews JL, McNicholas PD, Subedi S (2011) Model-based classification via mixtures of multivariate t-distributions. Comput Stat Data Anal 55(1):520–529

Baek J, McLachlan GJ, Flack LK (2010) Mixtures of factor analyzers with common factor loadings: applications to the clustering and visualization of high-dimensional data. IEEE Trans Pattern Anal Mach Intell 32:1298–1309

Banfield JD, Raftery AE (1993) Model-based Gaussian and non-Gaussian clustering. Biometrics 49(3):803–821

Bartholomew DJ, Knott M (1999) Latent variable models and factor analysis. In: Kendall’s library of statistics, vol 7, 2nd edn. Edward Arnold, London

Bartlett M (1953) Factor analysis in psychology as a statistician sees it. In: Uppsala symposium on psychological factor analysis, Number 3 in Nordisk Psykologi’s Monograph Series, Uppsala, Sweden, pp 23–34. Almquist and Wiksell, Uppsala

Biernacki C, Celeux G, Govaert G (2000) Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans Pattern Anal Mach Intell 22(7):719–725

Böhning D, Dietz E, Schaub R, Schlattmann P, Lindsay B (1994) The distribution of the likelihood ratio for mixtures of densities from the one-parameter exponential family. Annals Inst Stat Math 46(2):373–388

Bouveyron C, Girard S, Schmid C (2007) High-dimensional data clustering. Comput Stat Data Anal 52(1):502–519

Browne RP, McNicholas PD (2012) Model-based clustering, classification, and discriminant analysis of data with mixed type. J Stat Plann Infer 142(11):2976–2984

Browne RP, McNicholas PD, Sparling MD (2012) Model-based learning using a mixture of mixtures of Gaussian and uniform distributions. IEEE Trans Pattern Anal Mach Intell 34(4):814–817

Carvalho C, Chang J, Lucas J, Nevins J, Wang Q, West M (2008) High-dimensional sparse factor modeling: applications in gene expression genomics. J Am Stat Assoc 103(484):1438–1456

Celeux G, Govaert G (1995) Gaussian parsimonious clustering models. Pattern Recogn 28(5):781–793

Dean N, Murphy TB, Downey G (2006) Using unlabelled data to update classification rules with applications in food authenticity studies. J Royal Stat Soc Ser C 55(1):1–14

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J Royal Stat Soc Ser B 39(1):1–38

DeSarbo W, Cron W (1988) A maximum likelihood methodology for clusterwise linear regression. J Classif 5(2):249–282

Flury B (1997) A first course in multivariate statistics. Springer, New York

Fraley C, Raftery AE (2002) Model-based clustering, discriminant analysis, and density estimation. J Am Stat Assoc 97(458):611–631

Fraley C, Raftery AE, Murphy TB, Scrucca L (2012) mclust version 4 for R: normal mixture modeling for model-based clustering, classification, and density estimation. Technical report 597, Department of Statistics, University of Washington, Seattle, Washington, USA

Frühwirth-Schnatter S (2006) Finite mixture and Markov switching models. Springer, New York

Gershenfeld N (1997) Nonlinear inference and cluster-weighted modeling. Ann New York Acad Sci 808(1):18–24

Ghahramani Z, Hinton G (1997) The EM algorithm for mixtures of factor analyzers. Technical report CRG-TR-96-1, University of Toronto, Toronto

Grün B, Leisch F (2008) Flexmix version 2: finite mixtures with concomitant variables and varying and constant parameters. J Stat Softw 28(4):1–35

Hennig C (2000) Identifiablity of models for clusterwise linear regression. J Classif 17(2):273–296

Hosmer D Jr (1973) A comparison of iterative maximum likelihood estimates of the parameters of a mixture of two normal distributions under three different types of sample. Biometrics 29(4):761–770

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1):193–218

Ingrassia S, Minotti SC, Punzo A (2013) Model-based clustering via linear cluster-weighted models. Comput Stat Data Anal. doi:10.1016/j.csda.2013.02.012

Ingrassia S, Minotti SC, Punzo A, Vittadini G (2012a) Generalized linear cluster-weighted models. eprint arXiv: 1211.1171, http://arxiv.org/abs/1211.1171

Ingrassia S, Minotti SC, Vittadini G (2012b) Local statistical modeling via the cluster-weighted approach with elliptical distributions. J Classif 29(3):363–401

Karlis D, Santourian A (2009) Model-based clustering with non-elliptically contoured distributions. Stat Comput 19(1):73–83

Leisch F (2004) Flexmix: a general framework for finite mixture models and latent class regression in R. J Stat Softw 11(8):1–18

Lin T-I (2010) Robust mixture modeling using multivariate skew t distributions. Stat Comput 20:343–356

Lindsay BG (1995) Mixture models: theory, geometry and applications. In: NSF-CBMS regional conference series in probability and statistics, vol. 5. Institute of Mathematical Statistics, Hayward

McLachlan GJ, Basford KE (1988) Mixture models: inference and applications to clustering. Marcel Dekker, New York

McLachlan GJ, Peel D (2000a) Finite mixture models. Wiley, New York

McLachlan GJ, Peel D (2000b) Mixtures of factor analyzers. In: Proceedings of the seventeenth international conference on machine learning, pp 599–606. Morgan Kaufmann, San Francisco

McNicholas PD (2010) Model-based classification using latent Gaussian mixture models. J Stat Plann Infer 140(5):1175–1181

McNicholas PD, Jampani KR, McDaid AF, Murphy TB, Banks L (2011) PGMM: Parsimonious Gaussian Mixture Models. R package version 1.0.

McNicholas PD, Murphy TB (2008) Parsimonious Gaussian mixture models. Stat Comput 18(3):285–296

McNicholas PD, Murphy TB (2010a) Model-based clustering of longitudinal data. Can J Stat 38(1):153–168

McNicholas PD, Murphy TB (2010b) Model-based clustering of microarray expression data via latent Gaussian mixture models. Bioinformatics 26(21):2705–2712

McNicholas PD, Murphy TB, McDaid AF, Frost D (2010) Serial and parallel implementations of model-based clustering via parsimonious Gaussian mixture models. Comput Stat Data Anal 54(3):711–723

McNicholas PD, Subedi S (2012) Clustering gene expression time course data using mixtures of multivariate t-distributions. J Stat Plann Infer 142(5):1114–1127

Meng XL, Rubin DB (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80(2):267–278

Meng XL, van Dyk D (1997) The EM algorithm: an old folk-song sung to a fast new tune. J Royal Stat Soc Ser B (Stat Methodol) 59(3):511–567

Montanari A, Viroli C (2010) Heteroscedastic factor mixture analysis. Stat Modell 10(4):441–460

Montanari A, Viroli C (2011) Dimensionally reduced mixtures of regression models. J Stat Plann Infer 141(5):1744–1752

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (1992) Numerical recipes in C: the art of scientific computing, 2nd edn. Cambridge University Press, Cambridge

Punzo A (2012) Flexible mixture modeling with the polynomial Gaussian cluster-weighted model. eprint arXiv: 1207.0939, http://arxiv.org/abs/1207.0939

Rand W (1971) Objective criteria for the evaluation of clustering methods. J Am Stat Assoc 66(336):846–850

Sakamoto Y, Ishiguro M, Kitagawa G (1983) Akaike information criterion statistics. Reidel, Boston

Schöner B (2000) Probabilistic characterization and synthesis of complex data driven systems. Ph. D. thesis, MIT

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6(2):461–464

Scrucca L (2010) Dimension reduction for model-based clustering. Stat Comput 20(4):471–484

R Development Core Team (2012) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Spearman C (1904) The proof and measurement of association between two things. Am J Psychol 15(1):72–101

Tipping ME, Bishop CM (1999) Mixtures of probabilistic principal component analysers. Neural Comput 11(2):443–482

Titterington DM, Smith AFM, Makov UE (1985) Statistical analysis of finite mixture distributions. Wiley, New York

Wang Q, Carvalho C, Lucas J, West M (2007) BFRM: Bayesian factor regression modelling. Bull Int Soc Bayesian Anal 14(2):4–5

West M (2003) Bayesian factor regression models in the “large $p$, small $n$” paradigm. In: Bernardo J, Bayarri M, Berger J, Dawid A, Heckerman D, Smith A, West M (eds) Bayesian statistics, vol 7. Oxford University Press, Oxford, pp 723–732

Wolfe JH (1963) Object cluster analysis of social areas. Master’s thesis, University of California, Berkeley

Wolfe JH (1970) Pattern clustering by multivariate mixture analysis. Multivariate Behav Res 5(3):329–350

Woodbury MA (1950) Inverting modified matrices. Statistical Research Group, Memo. Rep. no. 42. Princeton University, Princeton

Acknowledgments

The authors sincerely thank the Associate Editor and the referees for helpful comments and valuable suggestions that have contributed to improving the quality of the manuscript. The work of Subedi and McNicholas was partly supported by an Early Researcher Award from the Ontario Ministry of Research and Innovation.


Appendices

Appendix A: The conditional distribution of \(Y|{\varvec{x}},{\varvec{u}}\)

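To compute the distribution of \(Y|{\varvec{x}},{\varvec{u}}\), we begin by recalling that if \({\varvec{Z}}\sim N_q\left( {\varvec{m}},{{\varvec{\Gamma }}}\right) \) is a random vector with values in \(\mathbb{R }^q\) and if \({\varvec{Z}}\) is partitioned as \({\varvec{Z}}=\left( {\varvec{Z}}^{\prime }_1,{\varvec{Z}}^{\prime }_2\right) ^{\prime }\), where \({\varvec{Z}}_1\) takes values in \(\mathbb{R }^{q_1}\) and \({\varvec{Z}}_2\) in \(\mathbb{R }^{q_2}=\mathbb{R }^{q-q_1}\), then we can write

$$\begin{aligned} {\varvec{m}} = \begin{pmatrix} {\varvec{m}}_1 \\ {\varvec{m}}_2 \end{pmatrix} \quad \text{and} \quad {{\varvec{\Gamma }}} = \begin{pmatrix} {{\varvec{\Gamma }}}_{11} &amp; {{\varvec{\Gamma }}}_{12} \\ {{\varvec{\Gamma }}}_{21} &amp; {{\varvec{\Gamma }}}_{22} \end{pmatrix}. \end{aligned}$$

Now, because \({\varvec{Z}}\) has a multivariate normal distribution, \({\varvec{Z}}_1|{\varvec{Z}}_2={\varvec{z}}_2\) and \({\varvec{Z}}_2\) are statistically independent with \({\varvec{Z}}_1|{\varvec{Z}}_2={\varvec{z}}_2 \sim N_{q_1}\left( {\varvec{m}}_{1|2}, {{\varvec{\Gamma }}}_{1|2}\right) \) and \({\varvec{Z}}_2 \sim N_{q_2}\left( {\varvec{m}}_2, {{\varvec{\Gamma }}}_{22}\right) \), where

$$\begin{aligned} {\varvec{m}}_{1|2} = {\varvec{m}}_1 + {{\varvec{\Gamma }}}_{12} {{\varvec{\Gamma }}}_{22}^{-1} \left( {\varvec{z}}_2 - {\varvec{m}}_2\right) \quad \text{and} \quad {{\varvec{\Gamma }}}_{1|2} = {{\varvec{\Gamma }}}_{11} - {{\varvec{\Gamma }}}_{12} {{\varvec{\Gamma }}}_{22}^{-1} {{\varvec{\Gamma }}}_{21}. \end{aligned}$$ (15)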

Therefore, setting \({\varvec{Z}}=\left( {\varvec{Z}}^{\prime }_1,{\varvec{Z}}^{\prime }_2\right) ^{\prime }\), where \({\varvec{Z}}^{\prime }_1=Y\) and \({\varvec{Z}}_2=\left( {\varvec{X}}^{\prime }, {\varvec{U}}^{\prime }\right) ^{\prime }\), gives \({\varvec{m}}_1= \beta _0 + {{\varvec{\beta }}}^{\prime }_1 {{\varvec{\mu }}}\) and \({\varvec{m}}_2 = \left( {{\varvec{\mu }}}^{\prime },{\varvec{0}}^{\prime }\right) ^{\prime }\), with the elements in \({{\varvec{\Gamma }}}\) given by

It follows that \(Y|{\varvec{x}},{\varvec{u}}\) is Gaussian with mean \({\varvec{m}}_{y|{\varvec{x}},{\varvec{u}}}= \mathbb E \left( Y|{\varvec{x}},{\varvec{u}}\right) \) and variance \(\sigma ^2_{y|{\varvec{x}},{\varvec{u}}}=\text{ Var}\left( Y|{\varvec{x}},{\varvec{u}}\right) \), in accordance with the formulae in (15). Because the inverse matrix of \({{\varvec{\Gamma }}}_{22}\) is required in (15), the following formula for the inverse of a partitioned matrix is utilized:

Again, writing \({{\varvec{\Sigma }}}={{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime } + {{\varvec{\Psi }}}\), we have

Moreover, according to the Woodbury identity (Woodbury 1950),

Now,

Finally, according to (15), we have
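The Woodbury identity invoked in this appendix can be checked numerically. The sketch below treats only the single-factor case \(q=1\) with diagonal \({{\varvec{\Psi }}}\), where the identity reduces to \({{\varvec{\Sigma }}}^{-1} = {{\varvec{\Psi }}}^{-1} - {{\varvec{\Psi }}}^{-1}{{\varvec{\lambda }}}{{\varvec{\lambda }}}^{\prime }{{\varvec{\Psi }}}^{-1}/(1+{{\varvec{\lambda }}}^{\prime }{{\varvec{\Psi }}}^{-1}{{\varvec{\lambda }}})\) for \({{\varvec{\Sigma }}}={{\varvec{\lambda }}}{{\varvec{\lambda }}}^{\prime }+{{\varvec{\Psi }}}\). The function names and the numerical values are illustrative assumptions; the general case replaces the scalar denominator by a \(q\times q\) inverse.

```python
def sigma_one_factor(lam, psi):
    """Sigma = lam lam' + diag(psi) for a single latent factor (q = 1)."""
    p = len(lam)
    return [[lam[i] * lam[j] + (psi[i] if i == j else 0.0) for j in range(p)]
            for i in range(p)]


def woodbury_inverse_one_factor(lam, psi):
    """Inverse of lam lam' + diag(psi) without inverting a p x p matrix."""
    p = len(lam)
    w = [lam[i] / psi[i] for i in range(p)]              # Psi^{-1} lam
    denom = 1.0 + sum(lam[i] * w[i] for i in range(p))   # 1 + lam' Psi^{-1} lam
    return [[(1.0 / psi[i] if i == j else 0.0) - w[i] * w[j] / denom
             for j in range(p)] for i in range(p)]


def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


lam = [1.0, 2.0, 0.5]   # hypothetical loadings (p = 3, q = 1)
psi = [0.5, 1.0, 2.0]   # hypothetical diagonal of Psi
product = matmul(sigma_one_factor(lam, psi), woodbury_inverse_one_factor(lam, psi))
max_error = max(abs(product[i][j] - (1.0 if i == j else 0.0))
                for i in range(3) for j in range(3))
print(max_error < 1e-12)
```

The practical appeal is that only a diagonal matrix and a \(q\times q\) quantity need to be inverted, rather than a full \(p\times p\) matrix.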

Appendix B: Details on the AECM algorithm for the parsimonious models

This appendix details the AECM algorithm for the models summarized in Table 1.

B.1 Constraint on the \(Y\) variable

In all of the models whose identifier starts with ‘C’, that is the models in which the error variance terms \(\sigma _g^2\) (of the response variable \(Y\)) are constrained to be equal across groups, i.e., \(\sigma _g^2 =\sigma ^2\) for \(g=1,\ldots ,G\), the common variance \(\sigma ^2\) at the \(\left( k+1\right) \)th iteration of the algorithm is computed as
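A sketch of such a common-variance update is given below. It assumes the usual M-step for a variance shared across components, namely the posterior-weighted squared residuals of the local linear regressions, pooled over groups and divided by \(n\). This form is an assumption made for illustration rather than a transcription of the paper's formula, and the function and argument names are hypothetical.

```python
def common_error_variance(y, x, z, beta0, beta1):
    """Pooled update of sigma^2 shared by all G components (assumed form).

    y     : list of n responses
    x     : list of n covariate vectors (each of length p)
    z     : n x G posterior probabilities of component membership
    beta0 : list of G intercepts
    beta1 : list of G slope vectors (each of length p)
    """
    n = len(y)
    total = 0.0
    for i in range(n):
        for g in range(len(beta0)):
            fitted = beta0[g] + sum(b * xi for b, xi in zip(beta1[g], x[i]))
            total += z[i][g] * (y[i] - fitted) ** 2
    return total / n


# Toy data: two observations, each assigned with certainty to one component.
sigma2 = common_error_variance(
    y=[1.0, 2.0],
    x=[[0.0], [1.0]],
    z=[[1.0, 0.0], [0.0, 1.0]],
    beta0=[0.0, 0.0],
    beta1=[[1.0], [1.0]],
)
print(sigma2)
```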

B.2 Constraints on the \({\varvec{X}}\) variable

With respect to the \({\varvec{X}}\) variable, as explained in Sect. 3.2, we considered the following constraints on \({{\varvec{\Sigma }}}_g={{\varvec{\Lambda }}}_g{{\varvec{\Lambda }}}_g^{\prime }+{{\varvec{\Psi }}}_g\): (i) equal loading matrices \({{\varvec{\Lambda }}}_g = {{\varvec{\Lambda }}}\), (ii) equal error variance \({{\varvec{\Psi }}}_g = {{\varvec{\Psi }}}\), and (iii) isotropic assumption: \({{\varvec{\Psi }}}_g = \psi _g {\varvec{I}}_p\). In such cases, the \(g\)th term of the expected complete-data log-likelihood \(Q_2\left( {{\varvec{\theta }}}_2; {{\varvec{\theta }}}^{(k+1/2)}\right) \), and then the estimates (12) and (13) in Sect. 4.3, are computed as follows.

B.2.1 Isotropic assumption: \({{\varvec{\Psi }}}_g=\psi _g{\varvec{I}}_p\)

In this case, Eq. (9) becomes

yielding

Then the estimated \(\psi _g\) is attained for \(\hat{\psi }_g\), satisfying

Thus, according to (12), for \({{\varvec{\Lambda }}}_g=\hat{{{\varvec{\Lambda }}}}_g = {\varvec{S}}^{\left( k+1\right) }_g {{\varvec{\gamma }}}^{\prime \left( k\right) }_g{{\varvec{\Theta }}}_g^{-1}\) we get \(\text{ tr}\left\{ {{\varvec{\Lambda }}}_g {{\varvec{\Theta }}}^{\left( k\right) }_g {{\varvec{\Lambda }}}_g^{\prime } \right\} = \text{ tr} \left\{ {{\varvec{\gamma }}}_g^{\left( k\right) }{\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\Lambda }}}_g \right\} \) and, finally, \(\hat{\psi }_g =\frac{1}{p}\text{ tr}\left\{ {{\varvec{S}}_g^{\left( k+1\right) } -\hat{{{\varvec{\Lambda }}}}_g}{{\varvec{\gamma }}}_g^{\left( k\right) } {\varvec{S}}_g^{\left( k+1\right) }\right\} .\) Thus,

with \({{\varvec{\Theta }}}_g^+\) computed according to (14).
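The closed form \(\hat{\psi }_g = (1/p)\,\text{tr}\{{\varvec{S}}_g - \hat{{{\varvec{\Lambda }}}}_g {{\varvec{\gamma }}}_g {\varvec{S}}_g\}\) stated above is straightforward to evaluate. The following pure-Python sketch does so for one component; the function names and the toy matrices are illustrative assumptions. In the toy input, \({{\varvec{\gamma }}}_g\) is the value implied by the loadings shown and a current \(\psi _g = 1\).

```python
def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def isotropic_psi(S, Lam, gamma):
    """psi_hat = (1/p) * tr(S - Lam gamma S) for one mixture component.

    S     : p x p weighted sample covariance matrix of the component
    Lam   : p x q updated loading matrix
    gamma : q x p matrix Lam'(Lam Lam' + psi I_p)^{-1} from the current iterate
    """
    p = len(S)
    lam_gamma_s = matmul(matmul(Lam, gamma), S)
    return sum(S[i][i] - lam_gamma_s[i][i] for i in range(p)) / p


S = [[2.0, 0.0], [0.0, 2.0]]   # toy covariance (p = 2)
Lam = [[1.0], [0.0]]           # toy loadings (q = 1)
gamma = [[0.5, 0.0]]           # Lam'(Lam Lam' + 1 * I_2)^{-1}
print(isotropic_psi(S, Lam, gamma))
```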

B.2.2 Equal error variance: \({{\varvec{\Psi }}}_g ={{\varvec{\Psi }}}\)

In this case, from Eq. (9), we have

yielding

Then the estimated \(\hat{{{\varvec{\Psi }}}}\) is obtained by satisfying

that is

which can be simplified as

with \(\displaystyle \sum _{g=1}^G n^{\left( k+1\right) }_g =n\). Again, according to (12), for \({{\varvec{\Lambda }}}_g=\hat{{{\varvec{\Lambda }}}}_g = {\varvec{S}}^{\left( k+1\right) }_g {{\varvec{\gamma }}}^{\prime \left( k\right) }_g{{\varvec{\Theta }}}_g^{-1}\) we get \(\hat{{{\varvec{\Lambda }}}}_g{{\varvec{\Theta }}}^{\left( k\right) }_g \hat{{{\varvec{\Lambda }}}}_g^{\prime } =\hat{{{\varvec{\Lambda }}}}_g {{\varvec{\gamma }}}_g^{\left( k\right) }{\varvec{S}}_g^{\left( k+1\right) } \) and, afterwards,

Thus,

where \({{\varvec{\Theta }}}_g^+\) is computed according to (14).

B.2.3 Equal loading matrices: \({{\varvec{\Lambda }}}_g={{\varvec{\Lambda }}}\)

In this case, Eq. (9) can be written as

yielding

Then the estimated \(\hat{{{\varvec{\Lambda }}}}\) is obtained by solving

with \({{\varvec{\gamma }}}^{\left( k\right) }_g ={{\varvec{\Lambda }}}^{^{\prime }\left( k\right) }\left( {{\varvec{\Lambda }}}^{\left( k\right) }{{\varvec{\Lambda }}}^{^{\prime }\left( k\right) }+{{\varvec{\Psi }}}^{\left( k\right) }_g\right) ^{-1}\). In this case, the loading matrix cannot be solved directly and must be solved in a row-by-row manner as suggested by McNicholas and Murphy (2008). Therefore,

where \(\lambda ^+_i\) is the \(i\)th row of the matrix \({{\varvec{\Lambda }}}^+\), \(\psi _{g\left( i\right) }\) is the \(i\)th diagonal element of \({{\varvec{\Psi }}}_g\), and \(\mathbf{r}_i\) represents the \(i\)th row of the matrix \(\displaystyle \sum \nolimits _{g=1}^G n_g^{\left( k+1\right) } {{\varvec{\Psi }}}_g^{-1} {\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}_g^{\prime \left( k\right) }\).

B.2.4 Further details

A further schematization is here given without considering the constraint on the \(Y\) variable. Thus, with reference to the model identifier, we will only refer to the last three letters.

- Models ending in UUU: no constraint is assumed.

- Models ending in UUC: \({{\varvec{\Psi }}}_g =\psi _g{\varvec{I}}_p\), where the parameter \(\psi _g\) is updated according to (16).

- Models ending in UCU: \({{\varvec{\Psi }}}_g ={{\varvec{\Psi }}}\), where the matrix \({{\varvec{\Psi }}}\) is updated according to (18).

- Models ending in UCC: \({{\varvec{\Psi }}}_g =\psi {\varvec{I}}_p\). By combining (16) and (18) we obtain

  $$\begin{aligned} \hat{\psi }&amp;= \frac{1}{p}\sum _{g=1}^G \frac{n_g^{\left( k+1\right) }}{n}\text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) } -\hat{{{\varvec{\Lambda }}}}_g{{\varvec{\gamma }}}_g^{\left( k\right) } {\varvec{S}}_g^{\left( k+1\right) }\right\} \\&amp;= \frac{1}{p}\sum _{g=1}^G \hat{\pi }_g^{\left( k+1\right) } \text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) } -\hat{{{\varvec{\Lambda }}}}_g{{\varvec{\gamma }}}_g^{\left( k\right) } {\varvec{S}}_g^{\left( k+1\right) }\right\} . \end{aligned}$$ (23)

  Thus, \(\psi ^+ = ({1}/{p})\sum _{g=1}^G \pi _g^{\left( k+1\right) } \text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) } - {{\varvec{\Lambda }}}^+_g {{\varvec{\gamma }}}_g {\varvec{S}}_g^{\left( k+1\right) }\right\} \) and \({{\varvec{\gamma }}}^+_g = {{\varvec{\Lambda }}}^{\prime +}_g \left( {{\varvec{\Lambda }}}^+_g {{\varvec{\Lambda }}}^{\prime +}_g+\psi ^+ {\varvec{I}}_p\right) ^{-1}\), with \({{\varvec{\Theta }}}_g^+\) computed according to (14).

- Models ending in CUU: \({{\varvec{\Lambda }}}_g ={{\varvec{\Lambda }}}\), where the matrix \({{\varvec{\Lambda }}}\) is updated according to (20). In this case, \({{\varvec{\Psi }}}_g\) is estimated directly from (11) and thus \({{\varvec{\Psi }}}^+_g =\text{diag}\left\{ {\varvec{S}}_g^{\left( k+1\right) }-2 {{\varvec{\Lambda }}}^+ {{\varvec{\gamma }}}_g {\varvec{S}}_g^{\left( k+1\right) } +{{\varvec{\Lambda }}}^+ {{\varvec{\Theta }}}_g {{\varvec{\Lambda }}}^{\prime +} \right\} \), with \({{\varvec{\gamma }}}_g^+\) and \({{\varvec{\Theta }}}^+_g\) computed according to (21) and (22), respectively.

- Models ending in CUC: \({{\varvec{\Lambda }}}_g ={{\varvec{\Lambda }}}\) and \({{\varvec{\Psi }}}_g =\psi _g{\varvec{I}}_p\). In this case, Equation (19), for \({{\varvec{\Psi }}}_g =\psi _g{\varvec{I}}_p\), yields

  $$\begin{aligned} \sum _{g=1}^G \frac{\partial Q_2 \left( {{\varvec{\Lambda }}}, \psi _g; {{\varvec{\theta }}}^{(k+1/2)}\right) }{\partial {{\varvec{\Lambda }}}}&amp;= \sum _{g=1}^G n_g^{\left( k+1\right) } \psi _g^{-1}{\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}_g^{\prime \left( k\right) } \\&amp;\quad - \sum _{g=1}^G n_g^{\left( k+1\right) } \psi _g^{-1} {{\varvec{\Lambda }}}{{\varvec{\Theta }}}^{\left( k\right) }_g = {\varvec{0}}, \end{aligned}$$

  and afterwards

  $$\begin{aligned} \hat{{{\varvec{\Lambda }}}} = \left( \sum _{g=1}^G \frac{n_g^{\left( k+1\right) }}{\psi _g} {\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}_g^{\prime \left( k\right) } \right) \left( \sum _{g=1}^G \frac{n_g^{\left( k+1\right) }}{\psi _g} {{\varvec{\Theta }}}_g^{\left( k\right) }\right) ^{-1}, \end{aligned}$$

  with \({{\varvec{\gamma }}}^{\left( k\right) }_g ={{\varvec{\Lambda }}}^{\prime \left( k\right) }\left( {{\varvec{\Lambda }}}^{\left( k\right) }{{\varvec{\Lambda }}}^{\prime \left( k\right) }+\psi ^{\left( k\right) }_g {\varvec{I}}_p\right) ^{-1}\). Moreover, from

  $$\begin{aligned} \frac{\partial Q_2\left( {{\varvec{\Lambda }}}, \psi _g; {{\varvec{\theta }}}^{(k+1/2)}\right) }{\partial \psi _g^{-1}}&amp;= \frac{p\, n_g^{\left( k+1\right) }}{2} \psi _g -\frac{n_g^{\left( k+1\right) }}{2} \left[ \text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) }\right\} -2\, \text{tr}\left\{ {\varvec{S}}_g^{\prime \left( k+1\right) } {{\varvec{\gamma }}}^{\prime \left( k\right) }_g {{\varvec{\Lambda }}}^{\prime }\right\} \right. \\&amp;\quad \left. + \text{tr}\left\{ {{\varvec{\Lambda }}}{{\varvec{\Theta }}}_g^{\left( k\right) } {{\varvec{\Lambda }}}^{\prime }\right\} \right] = 0 \end{aligned}$$

  we get \(\hat{\psi }_g =({1}/{p})\text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) }-2\hat{{{\varvec{\Lambda }}}} {{\varvec{\gamma }}}^{\left( k\right) }_g {\varvec{S}}_g^{\left( k+1\right) }+\hat{{{\varvec{\Lambda }}}} {{\varvec{\Theta }}}_g^{\left( k\right) }\hat{{{\varvec{\Lambda }}}}^{\prime }\right\} \). Thus,

  $$\begin{aligned} {{\varvec{\Lambda }}}^+&amp;= \left( \sum _{g=1}^G \frac{n_g^{\left( k+1\right) }}{\psi _g} {\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}_g^{\prime } \right) \left( \sum _{g=1}^G \frac{n_g^{\left( k+1\right) }}{\psi _g} {{\varvec{\Theta }}}_g\right) ^{-1}\\ \psi ^+_g&amp;= \frac{1}{p}\text{tr}\left\{ {\varvec{S}}_g^{\left( k+1\right) }-2\, {{\varvec{\Lambda }}}^+ {{\varvec{\gamma }}}_g {\varvec{S}}_g^{\left( k+1\right) }+ {{\varvec{\Lambda }}}^+ {{\varvec{\Theta }}}_g{{\varvec{\Lambda }}}^{\prime +} \right\} \\ {{\varvec{\gamma }}}^+_g&amp;= {{\varvec{\Lambda }}}^{\prime +}\left( {{\varvec{\Lambda }}}^+{{\varvec{\Lambda }}}^{\prime +} +\psi ^+_g {\varvec{I}}_p\right) ^{-1}, \end{aligned}$$

  with \({{\varvec{\Theta }}}_g^+\) computed according to (22).

-

Models ended by CCU: \({{\varvec{\Lambda }}}_g\!=\!{{\varvec{\Lambda }}}\) and \({{\varvec{\Psi }}}_g \!\!=\!{{\varvec{\Psi }}}\), so that \({{\varvec{\gamma }}}^{\left( k\right) } \!\!=\!{{\varvec{\Lambda }}}^{\prime \left( k\right) }\left( \!{{\varvec{\Lambda }}}^{\left( \!k\!\right) }{{\varvec{\Lambda }}}^{\left( \!k\!\right) }\!+\!{{\varvec{\Psi }}}^{\left( \!k\!\right) }\!\right) ^{-1}\). Setting \({{\varvec{\Psi }}}_g={{\varvec{\Psi }}}\) in (19), we get

$$\begin{aligned} \sum _{g=1}^G \frac{\partial Q_2\left( {{\varvec{\Lambda }}}, {{\varvec{\Psi }}}; {{\varvec{\theta }}}^{(k+1/2)}\right) }{\partial {{\varvec{\Lambda }}}}&= \sum _{g=1}^G n_g^{\left( k+1\right) } {{\varvec{\Psi }}}^{-1} \left[ {\varvec{S}}_g^{\left( k+1\right) } {{\varvec{\gamma }}}^{\prime \left( k\right) } - {{\varvec{\Lambda }}}{{\varvec{\Theta }}}^{\left( k\right) }_g \right] \\&= {{\varvec{\Psi }}}^{-1} \left[ {{\varvec{\gamma }}}^{\prime \left( k\right) } \sum _{g=1}^G n_g^{\left( k+1\right) } {\varvec{S}}_g^{\left( k+1\right) } - {{\varvec{\Lambda }}}\sum _{g=1}^G n_g^{\left( k+1\right) } {{\varvec{\Theta }}}^{\left( k\right) }_g \right] \\&= {{\varvec{\Psi }}}^{-1} \left[ {{\varvec{\gamma }}}^{\prime \left( k\right) } {\varvec{S}}^{\left( k+1\right) } - {{\varvec{\Lambda }}}{{\varvec{\Theta }}}^{\left( k\right) } \right] = {\varvec{0}}, \end{aligned}$$where \({\varvec{S}}^{\left( k+1\right) } = \sum _{g=1}^G \pi _g^{\left( k+1\right) } {\varvec{S}}_g^{\left( k+1\right) } \) and \({{\varvec{\Theta }}}^{\left( k\right) } =\sum _{g=1}^G \pi _g^{\left( k+1\right) } {{\varvec{\Theta }}}^{\left( k\right) }_g = {\varvec{I}}_q-{{\varvec{\gamma }}}^{\left( k\right) } {{\varvec{\Lambda }}}^{\left( k\right) }+ {{\varvec{\gamma }}}^{\left( k\right) } {\varvec{S}}^{\left( k+1\right) } {{\varvec{\gamma }}}^{\prime \left( k\right) }\). Thus,

$$\begin{aligned} \hat{{{\varvec{\Lambda }}}} ={\varvec{S}}^{\left( k+1\right) } {{\varvec{\gamma }}}^{\prime \left( k\right) } \left( {{\varvec{\Theta }}}^{\left( k\right) }\right) ^{-1}. \end{aligned}$$(24)

Moreover, setting \({{\varvec{\Lambda }}}_g={{\varvec{\Lambda }}}\) in (17), we get \(\hat{{{\varvec{\Psi }}}} = \text{ diag}\left\{ {\varvec{S}}^{\left( k+1\right) }-\hat{{{\varvec{\Lambda }}}}{{\varvec{\gamma }}}^{\left( k\right) } {\varvec{S}}^{\left( k+1\right) }\right\} \). Hence,

$$\begin{aligned} {{\varvec{\Lambda }}}^+&= {\varvec{S}}^{\left( k+1\right) } {{\varvec{\gamma }}}^{\prime } {{\varvec{\Theta }}}^{-1}\\ {{\varvec{\Psi }}}^+&= \text{ diag}\left\{ {\varvec{S}}^{\left( k+1\right) }- {{\varvec{\Lambda }}}^+ {{\varvec{\gamma }}}{\varvec{S}}^{\left( k+1\right) }\right\} \\ {{\varvec{\gamma }}}^+&= {{\varvec{\Lambda }}}^{\prime +}\left( {{\varvec{\Lambda }}}^+{{\varvec{\Lambda }}}^{\prime +} +{{\varvec{\Psi }}}^+\right) ^{-1}, \end{aligned}$$(25)

with \({{\varvec{\Theta }}}_g^+\) computed according to (22).

-

Models ending in CCC: \({{\varvec{\Lambda }}}_g ={{\varvec{\Lambda }}}\) and \({{\varvec{\Psi }}}_g =\psi {\varvec{I}}_p\), so that \({{\varvec{\gamma }}}^{\left( k\right) } ={{\varvec{\Lambda }}}^{\prime \left( k\right) }\left( {{\varvec{\Lambda }}}^{\left( k\right) }{{\varvec{\Lambda }}}^{\prime \left( k\right) }+\psi ^{\left( k\right) } {\varvec{I}}_p\right) ^{-1}\). Here, the estimated loading matrix is again (24), while the isotropic term obtained from (23) for \({{\varvec{\Lambda }}}_g={{\varvec{\Lambda }}}\) is \(\hat{\psi } = ({1}/{p})\text{ tr}\left\{ {\varvec{S}}^{\left( k+1\right) }- \hat{{{\varvec{\Lambda }}}}{{\varvec{\gamma }}}^{\left( k\right) }{\varvec{S}}^{\left( k+1\right) }\right\} \). Hence, \(\psi ^+ = ({1}/{p}) \text{ tr}\left\{ {\varvec{S}}^{\left( k+1\right) }- {{\varvec{\Lambda }}}^+ {{\varvec{\gamma }}}{\varvec{S}}^{\left( k+1\right) }\right\} \) and \({{\varvec{\gamma }}}^+ = {{\varvec{\Lambda }}}^{\prime +}\left( {{\varvec{\Lambda }}}^+{{\varvec{\Lambda }}}^{\prime +} + \psi ^+ {\varvec{I}}_p\right) ^{-1}\), with \({{\varvec{\Lambda }}}^+\) and \({{\varvec{\Theta }}}_g^+\) computed according to (25) and (22), respectively.
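The common-loading updates for the CCU and CCC cases can be illustrated numerically. The following is a minimal NumPy sketch, not the authors' implementation: the function names (`pooled_scatter`, `gamma_of`, `update_common`) are hypothetical, \({{\varvec{\Psi }}}\) is stored as a vector of diagonal entries, and the component scatter matrices \({\varvec{S}}_g^{\left( k+1\right) }\) and weights \(n_g^{\left( k+1\right) }\) are assumed to be available from the preceding steps of the algorithm.

```python
import numpy as np

def pooled_scatter(S_list, n_list):
    """S = sum_g pi_g S_g, with weights pi_g = n_g / n (assumed given)."""
    n = np.asarray(n_list, dtype=float)
    return sum(w * S for w, S in zip(n / n.sum(), S_list))

def gamma_of(Lam, psi_diag):
    """gamma = Lam' (Lam Lam' + Psi)^{-1}, a q x p matrix."""
    Sigma = Lam @ Lam.T + np.diag(psi_diag)
    # Sigma is symmetric, so (Sigma^{-1} Lam)' equals Lam' Sigma^{-1}.
    return np.linalg.solve(Sigma, Lam).T

def update_common(S, Lam, psi_diag, isotropic=False):
    """One update of (Lam, Psi, gamma): CCU by default, CCC if isotropic."""
    p, q = Lam.shape
    gamma = gamma_of(Lam, psi_diag)
    # Pooled Theta = I_q - gamma Lam + gamma S gamma'
    Theta = np.eye(q) - gamma @ Lam + gamma @ S @ gamma.T
    # Loading update, Eq. (24): S gamma' Theta^{-1}
    Lam_new = S @ gamma.T @ np.linalg.inv(Theta)
    # Diagonal of S - Lam+ gamma S
    resid = np.diag(S - Lam_new @ gamma @ S)
    # CCC averages the diagonal (trace / p); CCU keeps it entrywise.
    psi_new = np.full(p, resid.mean()) if isotropic else resid.copy()
    return Lam_new, psi_new, gamma_of(Lam_new, psi_new)

# Sanity check on synthetic inputs (all values below are illustrative):
# if S equals Lam0 Lam0' + Psi0 exactly, (Lam0, Psi0) is a fixed point.
rng = np.random.default_rng(1)
p, q = 5, 2
Lam0 = rng.normal(size=(p, q))
psi0 = rng.uniform(0.5, 1.5, size=p)
S_exact = Lam0 @ Lam0.T + np.diag(psi0)
S = pooled_scatter([S_exact, S_exact], [30, 70])
Lam1, psi1, gamma1 = update_common(S, Lam0, psi0)
```

Using `np.linalg.solve` for \({{\varvec{\gamma }}}\) avoids forming the \(p\times p\) inverse explicitly; for large \(p\), the Woodbury identity would be a natural alternative, though that choice is outside what this sketch shows.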

Appendix C: True and estimated covariance matrices of Sect. 6.1

Because the loading matrices are not unique, for the simulated data of Examples 1 and 2 we restrict attention to comparing, for each \(g=1,\ldots ,G\), the true and estimated covariance matrices.
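The non-uniqueness mentioned here can be checked directly: replacing \({{\varvec{\Lambda }}}\) by \({{\varvec{\Lambda }}}{\varvec{R}}\), for any orthogonal \(q\times q\) matrix \({\varvec{R}}\), leaves \({{\varvec{\Lambda }}}{{\varvec{\Lambda }}}^{\prime }+{{\varvec{\Psi }}}\) unchanged. The sketch below uses arbitrary illustrative values (the dimensions, seed, and rotation angle are assumptions, not taken from the examples of Sect. 6.1).

```python
import numpy as np

rng = np.random.default_rng(0)
p, q = 6, 2
Lam = rng.normal(size=(p, q))                   # illustrative loadings
Psi = np.diag(rng.uniform(0.5, 1.5, size=p))    # illustrative diagonal Psi

# A fixed 2 x 2 rotation (orthogonal) matrix with angle t
t = 0.7
R = np.array([[np.cos(t), -np.sin(t)],
              [np.sin(t),  np.cos(t)]])

Lam_rot = Lam @ R
Sigma = Lam @ Lam.T + Psi
Sigma_rot = Lam_rot @ Lam_rot.T + Psi

same_cov = np.allclose(Sigma, Sigma_rot)        # covariance is unchanged
same_loadings = np.allclose(Lam, Lam_rot)       # loadings themselves differ
print(same_cov, same_loadings)
```

The covariance matrices agree while the loadings do not, which is why a comparison at the level of covariance matrices is the meaningful one.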

C.1 Example 1


C.2 Example 2


Cite this article

Subedi, S., Punzo, A., Ingrassia, S. et al. Clustering and classification via cluster-weighted factor analyzers. Adv Data Anal Classif 7, 5–40 (2013). https://doi.org/10.1007/s11634-013-0124-8

DOI: https://doi.org/10.1007/s11634-013-0124-8