Abstract

The mixture of factor analyzers (MFA) model has emerged as a useful tool to perform dimensionality reduction and model-based clustering for heterogeneous data. In seeking the most appropriate number of factors (q) of an MFA model with the number of components (g) fixed a priori, a two-stage procedure is commonly implemented by first carrying out parameter estimation over a set of prespecified numbers of factors, and then selecting the best q according to certain penalized likelihood criteria. As the dimensionality of the data grows, such a procedure can become computationally prohibitive. To overcome this obstacle, we develop an automated learning scheme, called the automated MFA (AMFA) algorithm, to effectively merge parameter estimation and selection of q into a one-stage algorithm. The proposed AMFA procedure, which incurs a much lower computational cost, is also extended to accommodate missing values. Moreover, we explicitly derive the score vector and the empirical information matrix for calculating standard errors associated with the estimated parameters. The potential and applicability of the proposed method are demonstrated through a number of real datasets with genuine and synthetic missing values.

1 Introduction

The mixture of factor analyzers (MFA) model, initially introduced by Ghahramani and Hinton (1997), has attracted considerable attention over the past three decades and has been broadly applied in diverse fields; see the monograph by McLachlan and Peel (2000) for a comprehensive overview. The MFA combines the advantages of the Gaussian mixture model and factor analysis (FA) and is now regarded as a promising tool for simultaneously performing model-based clustering and linear dimensionality reduction. More precisely, it provides a global nonlinear approach to dimension reduction via the adoption of a factor-analytic representation for the covariance matrices of component distributions. Ghahramani and Beal (2000) presented a novel variational inference for a Bayesian treatment of MFA models. Ueda et al. (2000) proposed a split-and-merge expectation maximization (SMEM) algorithm for the MFA model and showed its real-world applications to image compression and handwritten digit recognition. In clustering microarray gene-expression profiles, McLachlan et al. (2002, 2003) illustrated the effectiveness of the MFA approach for reducing the dimension of the feature space.

A computationally feasible EM algorithm (Dempster et al. 1977) was suggested by Ghahramani and Hinton (1997) for fitting the MFA model. McLachlan et al. (2003) developed an alternating expectation conditional maximization (AECM) algorithm (Meng and van Dyk 1997) for fitting MFA and further investigated its practical use for modeling high-dimensional data. The convergence of AECM can be moderately faster than that of EM owing to the smaller amount of missing data in some CM-steps. Zhao and Yu (2008) further provided a much more efficient procedure, which is developed under an expectation conditional maximization (ECM; Meng and Rubin 1993) scheme by treating only the membership indicators as missing data. Its appealing efficiency can be attributed to the fact that the latent factors are not included in the complete-data space, while all estimators in the CM-steps still have closed forms.

The occurrence of missing data that may complicate data analysis is a ubiquitous problem in nearly all fields of scientific research. There exist many strategies for dealing with incomplete data under various missing-data mechanisms. Little and Rubin (2002) outlined a taxonomy of techniques for handling missing values. The maximum likelihood (ML) methods of imputing missing values under mixtures of multivariate normal distributions have been well studied, see, for example, Ghahramani and Jordan (1994) and Lin et al. (2006). For learning FA models with possibly missing values, Zhao and Shi (2014) proposed a novel automated factor analysis (AFA) algorithm that allows for determining the number of factors (q) in an automated manner.

In this paper, we establish a generalization of the AFA algorithm, called the automated MFA (AMFA) algorithm, which performs parameter estimation and determination of the number of factors (q) simultaneously for fitting the MFA with a known number of mixture components (g). When g is treated as unknown, the AMFA algorithm can also be applied to automatically determine an appropriate value of q for each value of g within a given range, while remaining much more efficient than the two-stage methods. AMFA can be substantially faster than the two-stage EM-based algorithms when the dimension of variables (p) or the proportion of missing information becomes high. Moreover, the proposed learning procedure can handle data with missing values under the assumption of a missing at random (MAR) mechanism. Notably, the two-stage ECM algorithm can be treated as a simplified case of AMFA without updating q. To facilitate implementation, two auxiliary permutation matrices that exactly extract the observed and missing portions of an individual are incorporated into the estimating procedure. The Hessian matrix of the MFA model with incomplete data is also explicitly derived for obtaining the asymptotic standard errors of parameter estimators.

The rest of the paper is organized as follows. Section 2 formulates an incomplete-data specification of MFA model and presents some of its essential properties. In Sect. 3, we briefly describe how to perform the EM and AECM algorithms for fitting MFA models under a MAR mechanism. In Sect. 4, we propose a one-stage AMFA algorithm for parameter estimation and automatic determination of q. In Sect. 5, we explicitly derive the Hessian matrix of the observed log-likelihood function for computing the asymptotic standard errors of the ML estimators. We illustrate the usefulness and ability of the proposed method in Sect. 6 through two real-data examples and two simulation studies. Section 7 offers some concluding remarks and highlights possible directions for further work. The detailed derivations of lengthy technical results are sketched in Online Supplementary Appendices.

2 MFA model with missing data

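The MFA model is a global nonlinear approach that postulates a finite mixture of g FA models for the representation of the data in a lower-dimensional subspace. Let \(\varvec{Y}_j\) denote a p-dimensional random vector of the jth individual for \(j=1,\ldots ,n\). In the MFA formulation, each observation \(\varvec{Y}_j\) is modeled as

$$\begin{aligned} \varvec{Y}_j=\varvec{\mu }_i+\varvec{A}_i\varvec{F}_{ij}+\varvec{\varepsilon }_{ij}\quad \hbox {with probability}~\pi _i~(i=1,\ldots ,g), \qquad (1) \end{aligned}$$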

where the \(\pi _i\)'s are the mixing proportions, which are constrained to be positive and sum to one, g is the number of mixture components, \(\varvec{\mu }_i\) is a \(p\times 1\) mean vector, \(\varvec{A}_i\) is a \(p\times q\) matrix of factor loadings, \(\varvec{F}_{ij}{\mathop {\sim }\limits ^{\mathrm{iid}}}\mathcal {N}_q({\varvec{0}},{\varvec{I}}_q)\) is a q-dimensional vector (\(q < p\)) of factors and \(\varvec{\varepsilon }_{ij}{\mathop {\sim }\limits ^{\mathrm{ind}}}\mathcal {N}_p(\varvec{0},\varvec{\varPsi }_i)\) is a p-dimensional vector of errors that is independent of \({\varvec{F}}_{ij}\). In addition, \(\varvec{I}_q\) is an identity matrix of size q, and \(\varvec{\varPsi }_i\) is a \(p\times p\) diagonal matrix with positive diagonal elements referred to as uniqueness variances.

Let \(\varvec{\varTheta }=\{\pi _i,\varvec{\theta }_i\}_{i=1}^g\) denote the full set of unknown parameters subject to \(\sum ^g_{i=1}\pi _i=1\), where \(\varvec{\theta }_i=\{\varvec{\mu }_i,\varvec{A}_i,\varvec{\varPsi }_i\}\) collects the unknown parameters within the ith component. Thus, under the MFA model defined in (1), the probability density function (pdf) of \(\varvec{Y}_j\) is

$$\begin{aligned} f(\varvec{y}_j;\varvec{\varTheta })=\sum _{i=1}^g\pi _i\phi _p(\varvec{y}_j;\varvec{\mu }_i,\varvec{\varSigma }_i), \end{aligned}$$

where \(\phi _p(\cdot ; \varvec{\mu },\varvec{\varSigma })\) indicates the pdf of \(\mathcal {N}_p(\varvec{\mu },\varvec{\varSigma })\) and \(\varvec{\varSigma }_i\) is the ith component covariance matrix taking the form of \(\varvec{\varSigma }_i=\varvec{A}_i\varvec{A}^{\top }_i+\varvec{\varPsi }_i\).
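As a minimal numerical sketch of the quantities just defined, the following snippet forms each component covariance \(\varvec{\varSigma }_i=\varvec{A}_i\varvec{A}^{\top }_i+\varvec{\varPsi }_i\) and evaluates the resulting Gaussian mixture density at a single point. The function names `gaussian_logpdf` and `mfa_density`, and the convention of passing each \(\varvec{\varPsi }_i\) as a vector of its diagonal elements, are illustrative assumptions rather than part of the original development.

```python
import numpy as np

def gaussian_logpdf(y, mu, sigma):
    """Log-density of N_p(mu, sigma) at y."""
    p = y.shape[0]
    diff = y - mu
    _, logdet = np.linalg.slogdet(sigma)
    quad = diff @ np.linalg.solve(sigma, diff)
    return -0.5 * (p * np.log(2.0 * np.pi) + logdet + quad)

def mfa_density(y, pis, mus, loadings, psis):
    """Mixture density sum_i pi_i * phi_p(y; mu_i, A_i A_i^T + Psi_i).

    psis[i] holds the diagonal of Psi_i as a 1-D array (assumed convention).
    """
    total = 0.0
    for pi_i, mu_i, A_i, psi_i in zip(pis, mus, loadings, psis):
        sigma_i = A_i @ A_i.T + np.diag(psi_i)  # factor-analytic covariance
        total += pi_i * np.exp(gaussian_logpdf(y, mu_i, sigma_i))
    return total
```

For high-dimensional data one would normally work on the log scale throughout; the plain exponential is kept here only for readability.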

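To handle data with possibly missing values, we introduce two indicator matrices that identify the observed and missing locations of each individual, denoted by \(\varvec{O}_j(p_j^\mathrm{o}\times p)\) and \(\varvec{M}_j((p-p_j^\mathrm{o})\times p)\), such that

$$\begin{aligned} \varvec{Y}_j^\mathrm{o}=\varvec{O}_j\varvec{Y}_j\quad \hbox {and}\quad \varvec{Y}_j^\mathrm{m}=\varvec{M}_j\varvec{Y}_j, \qquad (2) \end{aligned}$$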

where \(\varvec{Y}_j^\mathrm{o}(p_j^\mathrm{o}\times 1)\) and \(\varvec{Y}_j^\mathrm{m}((p-p_j^\mathrm{o})\times 1)\) are the observed and missing components of \(\varvec{Y}_j\), respectively. To identify the group from which individual \(\varvec{Y}_j\) originates, an unobservable allocation vector \(\varvec{Z}_j=\{Z_{1j},\ldots ,Z_{gj}\}\) is introduced, for \(j=1,\ldots ,n\), where \(Z_{ij}\in \{0,1\}\) are binary outcomes with constraint \(\sum _{i=1}^g Z_{ij}=1\). The role of \(\varvec{Z}_j\) is to encode which component generated \(\varvec{Y}_j\). That is, \(Z_{ij}=1\) if \(\varvec{Y}_j\) belongs to the ith group, and \(Z_{ij}=0\) otherwise. It follows that \(\varvec{Z}_j\sim {\mathcal M}(1,\varvec{\pi })\), a multinomial distribution with a single trial and prior probabilities \({\varvec{\pi }}=(\pi _1,\ldots ,\pi _g)\).
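To make the role of \(\varvec{O}_j\) and \(\varvec{M}_j\) concrete, the sketch below builds both matrices from a Boolean mask of observed coordinates by selecting rows of the identity matrix. The helper name `selection_matrices` and the mask-based interface are assumptions made for illustration only.

```python
import numpy as np

def selection_matrices(observed_mask):
    """Return (O_j, M_j) for one individual.

    O_j keeps the rows of I_p at observed positions and M_j keeps the
    rows at missing positions, so that O_j^T O_j + M_j^T M_j = I_p.
    """
    mask = np.asarray(observed_mask, dtype=bool)
    eye = np.eye(mask.size)
    return eye[mask], eye[~mask]

# Example: the second of three variables is missing.
O_j, M_j = selection_matrices([True, False, True])
y_j = np.array([1.0, 2.0, 3.0])   # complete vector, used only for illustration
y_obs = O_j @ y_j                 # extracts the observed part
y_mis = M_j @ y_j                 # extracts the missing part
```

In practice the missing entries are unknown; if they are stored as NaN, they should be replaced by an arbitrary finite filler before multiplying by \(\varvec{O}_j\), since NaN times zero is still NaN in floating-point arithmetic.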

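Incorporating missing information (2) into (1) leads to \(\varvec{Y}^\mathrm{o}_j\mid (Z_{ij}=1)\sim \mathcal {N}_{p^\mathrm{o}_j}(\varvec{\mu }^\mathrm{o}_{ij},\varvec{\varSigma }^\mathrm{oo}_{ij})\), where \(\varvec{\mu }^\mathrm{o}_{ij}=\varvec{O}_j\varvec{\mu }_i\) and \(\varvec{\varSigma }^\mathrm{oo}_{ij}=\varvec{O}_j\varvec{\varSigma }_i\varvec{O}^{\top }_j\). Therefore, the marginal pdf of \(\varvec{Y}^\mathrm{o}_j\) is given by

$$\begin{aligned} f(\varvec{y}_j^\mathrm{o};\varvec{\varTheta })=\sum _{i=1}^g\pi _i\phi _{p_j^\mathrm{o}}(\varvec{y}_j^\mathrm{o};\varvec{\mu }_{ij}^\mathrm{o},\varvec{\varSigma }_{ij}^\mathrm{oo}). \qquad (3) \end{aligned}$$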

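Furthermore, we obtain a hierarchical representation of \({\varvec{Y}}_j^\mathrm{o}\) under the MFA framework:

$$\begin{aligned} \varvec{Y}_j^\mathrm{o}\mid (\varvec{F}_{ij},Z_{ij}=1)&\sim \mathcal {N}_{p_j^\mathrm{o}}\big (\varvec{O}_j(\varvec{\mu }_i+\varvec{A}_i\varvec{F}_{ij}),\varvec{O}_j\varvec{\varPsi }_i\varvec{O}_j^{\top }\big ),\\ \varvec{F}_{ij}\mid (Z_{ij}=1)&\sim \mathcal {N}_q(\varvec{0},\varvec{I}_q),\\ \varvec{Z}_j&\sim {\mathcal M}(1,\varvec{\pi }). \qquad (4) \end{aligned}$$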

As a consequence, we can establish the following theorem, which is useful for the evaluation of some conditional expectations involved in the EM algorithm discussed in Sect. 3.

Theorem 1

Given the hierarchical representation of the MFA model specified by (4), we obtain the following conditional distributions:

(a) The conditional distribution of \(\varvec{Y}_j^\mathrm{m}\) given \(\varvec{y}_j^{\mathrm{o}}\), \(\varvec{F}_{ij}\) and \(Z_{ij}=1\) is

$$\begin{aligned} \varvec{Y}_j^\mathrm{m}\mid (\varvec{y}_j^\mathrm{o},\varvec{F}_{ij},Z_{ij}=1)\sim \mathcal {N}_{p-p_j^\mathrm{o}}(\varvec{\mu }_{ij}^\mathrm{m\cdot \mathrm o},\varvec{\varSigma }_{ij}^\mathrm{{mm}\cdot \mathrm o}), \end{aligned}$$where \(\varvec{\mu }_{ij}^\mathrm{m\cdot \mathrm o}=\varvec{M}_j\big \{\varvec{\mu }_i+\varvec{A}_i\varvec{F}_{ij}+\varvec{\varPsi }_i\varvec{C}_{ij}^\mathrm{{oo}}(\varvec{y}_j-\varvec{\mu }_i-\varvec{A}_i\varvec{F}_{ij})\big \}\) and \(\varvec{\varSigma }_{ij}^\mathrm{{mm}\cdot \mathrm o}=\varvec{M}_j(\varvec{I}_p-\varvec{\varPsi }_i\varvec{C}_{ij}^\mathrm{{oo}})\varvec{\varPsi }_i\varvec{M}_j^{\top }\) with \(\varvec{C}_{ij}^\mathrm{{oo}}=\varvec{O}_j^{\top } (\varvec{O}_j\varvec{\varPsi }_i\varvec{O}_j^{\top })^{-1}{\varvec{O}}_j\).

(b) The conditional distribution of \(\varvec{F}_{ij}\) given \(\varvec{y}_j^\mathrm{o}\) and \(Z_{ij}=1\) is

$$\begin{aligned} \varvec{F}_{ij}\mid (\varvec{y}_j^\mathrm{o},Z_{ij}=1)\sim \mathcal {N}_q\big (\varvec{A}_i^{\top }\varvec{S}_{ij}^\mathrm{{oo}}(\varvec{y}_j-\varvec{\mu }_i),\varvec{I}_q-\varvec{A}_i^{\top }\varvec{S}_{ij}^\mathrm{{oo}}\varvec{A}_i\big ), \end{aligned}$$where \(\varvec{S}_{ij}^\mathrm{{oo}}=\varvec{O}_j^{\top }(\varvec{O}_j\varvec{\varSigma }_i\varvec{O}_j^{\top })^{-1}\varvec{O}_j\).

(c) The conditional distribution of \(\varvec{Y}_j^\mathrm{m}\) given \(\varvec{y}_j^\mathrm{o}\) and \(Z_{ij}=1\) is

$$\begin{aligned} {\varvec{Y}}_j^\mathrm{m}\mid (\varvec{y}_j^\mathrm{o},Z_{ij}=1)\sim \mathcal {N}_{p-p_j^\mathrm{o}}(\varvec{\mu }_{2 \cdot 1},\varvec{\varSigma }_{22 \cdot 1}), \end{aligned}$$where \(\varvec{\mu }_{2 \cdot 1}=\varvec{M}_j\big \{\varvec{\mu }_i+\varvec{\varSigma }_i\varvec{S}_{ij}^\mathrm{{oo}}(\varvec{y}_j-\varvec{\mu }_i)\big \}\) and \(\varvec{\varSigma }_{22 \cdot 1}=\varvec{M}_j\big \{\varvec{I}_p-\varvec{\varSigma }_i\varvec{S}_{ij}^\mathrm{{oo}}\big \}\varvec{\varSigma }_i\varvec{M}^{\top }_j\).

(d) The conditional distribution of \(\varvec{F}_{ij}\) given \(\varvec{y}_j\) and \(Z_{ij}=1\) is

$$\begin{aligned} \varvec{F}_{ij}\mid (\varvec{y}_j,Z_{ij}=1)\sim \mathcal {N}_q\big (\varvec{A}_i^{\top }\varvec{\varSigma }^{-1}_{i}(\varvec{y}_j-\varvec{\mu }_i),\varvec{I}_q-\varvec{A}_i^{\top }\varvec{\varSigma }^{-1}_{i}\varvec{A}_i\big ). \end{aligned}$$

Proof

See Supplementary Appendix A. \(\square \)
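Part (b) of Theorem 1 can be checked numerically with a short sketch. The snippet below computes \(\varvec{S}_{ij}^\mathrm{{oo}}\) and the conditional mean and covariance of the factors for one individual and one component. The function names and the convention of supplying \(\varvec{y}_j\) with an arbitrary finite filler at the missing positions are assumptions for illustration; the filler has no effect because the corresponding columns of \(\varvec{S}_{ij}^\mathrm{{oo}}\) are zero.

```python
import numpy as np

def s_oo(sigma, O):
    """S^oo = O^T (O Sigma O^T)^{-1} O for one individual and component."""
    return O.T @ np.linalg.inv(O @ sigma @ O.T) @ O

def factor_posterior(y_filled, mu, A, psi, O):
    """Mean and covariance of F | (y^o, Z = 1), as in Theorem 1(b).

    y_filled: length-p vector; entries at missing positions may hold any
              finite filler value (assumed convention).
    psi:      diagonal of Psi as a 1-D array.
    """
    sigma = A @ A.T + np.diag(psi)
    S = s_oo(sigma, O)
    mean = A.T @ S @ (y_filled - mu)
    cov = np.eye(A.shape[1]) - A.T @ S @ A
    return mean, cov
```

When no entries are missing, \(\varvec{O}_j=\varvec{I}_p\) and the result coincides with part (d), since \(\varvec{S}_{ij}^\mathrm{{oo}}\) then reduces to \(\varvec{\varSigma }_i^{-1}\).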

We further establish the following corollary, which is useful for evaluating the Q-function in the EM algorithm discussed in Sect. 3.1.

Corollary 1

Given the conditional distributions in Theorem 1, we have

(a) \(E(\varvec{Y}_j\mid \varvec{y}^\mathrm{o}_j, Z_{ij}=1)=\varvec{\mu }_i+\varvec{\varSigma }_i\varvec{S}_{ij}^{\mathrm{oo}}(\varvec{y}_j-\varvec{\mu }_i),\)

(b) \(E\left\{ \varvec{M}_j^\top \mathrm{cov}(\varvec{Y}_j^\mathrm{m} \mid \varvec{y}^\mathrm{o}_j,\varvec{F}_{ij},Z_{ij}=1) \varvec{M}_j\mid \varvec{y}^\mathrm{o}_j, Z_{ij}=1\right\} =(\varvec{I}_p-\varvec{\varPsi }_i\varvec{C}_{ij}^{\mathrm{oo}})\varvec{\varPsi }_i\),

(c) \(\mathrm{cov}\left\{ \varvec{M}_j^\top E(\varvec{Y}_j^\mathrm{m} \mid \varvec{y}^\mathrm{o}_j,\varvec{F}_{ij},Z_{ij}=1)-\varvec{A}_i \varvec{F}_{ij}\mid \varvec{y}^\mathrm{o}_j, Z_{ij}=1\right\} =(\varvec{\varOmega }_{ij}-\varvec{A}_i)\varvec{\varPhi }_{ij}(\varvec{\varOmega }_{ij}-\varvec{A}_i)^\top \),

where \(\varvec{\varPhi }_{ij}=\mathrm{cov}(\varvec{F}_{ij}\mid \varvec{y}_j^\mathrm{o},Z_{ij}=1)= \varvec{I}_q-\varvec{A}_i^{\top }\varvec{S}_{ij}^\mathrm{{oo}}\varvec{A}_i\) and \(\varvec{\varOmega }_{ij}=\big (\varvec{I}_p-\varvec{\varPsi }_{i}\varvec{C}_{ij}^{\mathrm{oo}}\big )\varvec{A}_i\).

Proof

See Supplementary Appendix B. \(\square \)
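Corollary 1(a) is the formula used to impute a partially observed vector by its conditional mean. The following sketch evaluates \(\varvec{\mu }_i+\varvec{\varSigma }_i\varvec{S}_{ij}^{\mathrm{oo}}(\varvec{y}_j-\varvec{\mu }_i)\) for one individual and one component; the function name, the Boolean-mask interface and the use of a finite filler at missing positions are illustrative assumptions.

```python
import numpy as np

def impute_conditional_mean(y_filled, mu, A, psi, observed_mask):
    """E(Y | y^o, Z = 1) = mu + Sigma S^oo (y - mu), as in Corollary 1(a).

    Observed coordinates are returned unchanged; missing coordinates are
    replaced by their conditional expectations given the observed ones.
    """
    mask = np.asarray(observed_mask, dtype=bool)
    O = np.eye(mask.size)[mask]
    sigma = A @ A.T + np.diag(psi)
    S = O.T @ np.linalg.inv(O @ sigma @ O.T) @ O
    return mu + sigma @ S @ (y_filled - mu)

# Bivariate example with Sigma = [[2, 1], [1, 2]]: first entry observed.
A = np.array([[1.0], [1.0]])
psi = np.array([1.0, 1.0])
y_hat = impute_conditional_mean(np.array([2.0, 0.0]), np.zeros(2), A, psi,
                                [True, False])
```

Here the second coordinate is imputed by the usual regression formula \(\sigma _{21}\sigma _{11}^{-1}y_1\), which gives \((1/2)\times 2=1\).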

Furthermore, we have the following conditional expectations which are summarized in Corollary 2 and required for the development of the AECM algorithm described in Sect. 3.2.

Corollary 2

Given the hierarchical specification of (4), we can get

(a) \(\mathrm{cov}(\varvec{Y}_j\mid \varvec{y}_j^\mathrm{o},Z_{ij}=1)=(\varvec{I}_p-\varvec{\varSigma }_i{\varvec{S}}_{ij}^\mathrm{{oo}})\varvec{\varSigma }_{i}\),

(b) \(E\{\varvec{A}_i\varvec{F}_{ij}(\varvec{Y}_j-\varvec{\mu }_i)^\top \mid \varvec{y}^\mathrm{o}_j,Z_{ij}=1\}=\varvec{A}_i\varvec{\Gamma }_i^{\top }\varvec{V}_{ij}\),

(c) \(E(\varvec{F}_{ij}\varvec{F}_{ij}^\top \mid \varvec{y}^\mathrm{o}_j ,Z_{ij}=1)=\varvec{\Gamma }_i^{\top }\varvec{V}_{ij}\varvec{\Gamma }_i+\varvec{I}_q-\varvec{\Gamma }_i^{\top }\varvec{A}_i\),

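where \(\varvec{\Gamma }_i=\varvec{\varSigma }_i^{-1}\varvec{A}_i\) and

$$\begin{aligned} \varvec{V}_{ij}=E\{(\varvec{Y}_j-\varvec{\mu }_i)(\varvec{Y}_j-\varvec{\mu }_i)^\top \mid \varvec{y}^\mathrm{o}_j,Z_{ij}=1\}=\varvec{\varSigma }_i\varvec{S}_{ij}^\mathrm{{oo}}(\varvec{y}_j-\varvec{\mu }_i)(\varvec{y}_j-\varvec{\mu }_i)^\top \varvec{S}_{ij}^\mathrm{{oo}}\varvec{\varSigma }_i+(\varvec{I}_p-\varvec{\varSigma }_i\varvec{S}_{ij}^\mathrm{{oo}})\varvec{\varSigma }_i. \end{aligned}$$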

Proof

See Supplementary Appendix C. \(\square \)

3 ML estimation via the EM and AECM algorithms

3.1 The EM algorithm

The EM algorithm (Dempster et al. 1977) is a popular tool for carrying out ML estimation in a variety of incomplete-data problems. Each iteration of the EM algorithm is composed of two processes, alternating between an E-step in which the missing data are estimated by their conditional expectations, and an M-step which simultaneously maximizes the conditional expectation of complete-data log-likelihood function computed in the E-step with respect to all unknown parameters. The EM procedure is particularly useful when the M-step is computationally simpler than the maximization of the original likelihood.

We offer a feasible EM procedure for learning the MFA model with incomplete data. For notational convenience, we denote the allocation variables by \(\varvec{Z}=({\varvec{Z}}_1,\ldots ,{\varvec{Z}}_n)\), latent factors by \(\varvec{F}=\{{\varvec{F}}_{i1},\ldots ,\varvec{F}_{in}\}_{i=1}^g\) and missing portion of the data by \(\varvec{y}^\mathrm{m}=(\varvec{y}_1^\mathrm{m},\ldots ,\varvec{y}_n^\mathrm{m})\). Let \(\varvec{y}^\mathrm{o}=(\varvec{y}_1^\mathrm{o},\ldots ,\varvec{y}_n^\mathrm{o})\) be the observed portion of the data and \(\varvec{Y}_c^{[1]}=(\varvec{y},\varvec{F},\varvec{Z})\) be the complete data, where \(\varvec{y}=(\varvec{y}^\mathrm{o},\varvec{y}^\mathrm{m})\).

The log-likelihood function of \(\varvec{\varTheta }\) for complete data \(\varvec{Y}_c^{[1]}=(\varvec{y},\varvec{F},\varvec{Z})\), omitting additive constant terms, is

Let \(\hat{\varvec{\varTheta }}^{(k)}=\{\hat{\pi }^{(k)}_i,\hat{\varvec{\mu }}^{(k)}_i,\hat{\varvec{A}}^{(k)}_i,\hat{\varvec{\varPsi }}^{(k)}_i\}_{i=1}^g\) denote the estimates of \(\varvec{\varTheta }\) at the kth iteration. In the E-step, we compute the expected log-likelihood for the complete data (the so-called Q-function), where the expectation is taken with respect to the conditional distributions of the missing data \((\varvec{y}^\mathrm{m},\varvec{F},\varvec{Z})\) given the observed data \(\varvec{y}^\mathrm{o}\) and the current estimates of parameters \(\hat{\varvec{\varTheta }}^{(k)}\). In the M-step, we maximize the Q-function to compute the updated parameter estimates, say \(\hat{\varvec{\varTheta }}^{(k+1)}\). The detailed implementation of the proposed EM algorithm is summarized as follows:

E-step: Compute the following conditional expectations:

and \(\hat{\varvec{\varLambda }}_{ij}^{(k)}=E(\varvec{F}_{ij}\varvec{F}_{ij}^\top \mid \varvec{y}^\mathrm{o}_j,Z_{ij}=1,\hat{\varvec{\varTheta }}^{(k)}) =\hat{\varvec{F}}_{ij}^{(k)}\hat{\varvec{F}}_{ij}^{(k)\top }+\hat{\varvec{\varPhi }}_{ij}^{(k)}\), where \(\hat{\varvec{\mu }}_{ij}^{\mathrm{o}(k)}=\varvec{O}_j\hat{\varvec{\mu }}_i^{(k)}\), \(\hat{\varvec{\varSigma }}_{ij}^{\mathrm{oo}(k)}=\varvec{O}_j\hat{\varvec{\varSigma }}_i^{(k)}\varvec{O}_j^\top \) and \(\hat{\varvec{S}}_{ij}^{\mathrm{oo}(k)}=\varvec{O}_j^\top \big (\varvec{O}_j\hat{\varvec{\varSigma }}_i^{(k)}\varvec{O}_j^\top \big )^{-1}\varvec{O}_j\).

Therefore, the resulting Q-function is obtained as

where \(\hat{\varvec{C}}_{ij}^{\mathrm{oo}(k)}={\varvec{O}}_j^{\top }({\varvec{O}}_j\hat{\varvec{\varPsi }}_i^{(k)}{\varvec{O}}_j^{\top })^{-1}{\varvec{O}}_j\) and \(\hat{\varvec{\varOmega }}_{ij}^{(k)}=\big ({\varvec{I}}_p-\hat{\varvec{\varPsi }}_{i}^{(k)}\hat{\varvec{C}}_{ij}^{\mathrm{oo}(k)}\big )\hat{\varvec{A}}_i^{(k)}\).

M-step: Find \(\hat{\varvec{\varTheta }}^{(k+1)}\) by maximizing Q-function, leading to

where \(\hat{\varvec{\varUpsilon }}_{ij}^{(k)} =\big (\hat{\varvec{y}}_j^{(k)}-\hat{\varvec{\mu }_i}^{(k+1)}-\hat{{\varvec{A}}_i}^{(k+1)}\hat{\varvec{F}}_{ij}^{(k)}\big ) \big (\hat{\varvec{y}}_j^{(k)}-\hat{\varvec{\mu }_i}^{(k+1)}-\hat{{\varvec{A}}_i}^{(k+1)}\hat{\varvec{F}}_{ij}^{(k)}\big )^{\top } +({\varvec{I}}_p-\hat{{\varvec{\varPsi }}_i}^{(k)}\hat{\varvec{C}}_{ij}^{\mathrm{oo}(k)})\hat{\varvec{\varPsi }}_i^{(k)} +(\hat{\varvec{\varOmega }}_{ij}^{(k)}-\hat{\varvec{A}}_i^{(k+1)}\big )\hat{\varvec{\varPhi }}_{ij}^{(k)} \big (\hat{\varvec{\varOmega }}_{ij}^{(k)}-\hat{\varvec{A}}_i^{(k+1)}\big )^\top \).
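One ingredient of the E-step above is the posterior probability that individual j belongs to component i given only its observed entries. Under the marginal model \(\varvec{Y}^\mathrm{o}_j\mid (Z_{ij}=1)\sim \mathcal {N}_{p^\mathrm{o}_j}(\varvec{\mu }^\mathrm{o}_{ij},\varvec{\varSigma }^\mathrm{oo}_{ij})\), this probability is proportional to \(\pi _i\phi _{p^\mathrm{o}_j}(\varvec{y}^\mathrm{o}_j;\varvec{\mu }^\mathrm{o}_{ij},\varvec{\varSigma }^\mathrm{oo}_{ij})\) by Bayes' rule. The sketch below is one possible way to compute it; the function name, the mask-based indexing (equivalent to multiplying by \(\varvec{O}_j\)) and the log-sum-exp stabilization are implementation assumptions.

```python
import numpy as np

def responsibilities(y, observed_mask, pis, mus, loadings, psis):
    """Posterior membership probabilities for one individual.

    y may contain NaN at missing positions; only observed entries are used.
    psis[i] holds the diagonal of Psi_i as a 1-D array.
    """
    obs = np.asarray(observed_mask, dtype=bool)
    y_o = y[obs]
    p_o = int(obs.sum())
    log_w = []
    for pi_i, mu_i, A_i, psi_i in zip(pis, mus, loadings, psis):
        sigma_i = A_i @ A_i.T + np.diag(psi_i)
        sigma_oo = sigma_i[np.ix_(obs, obs)]      # O Sigma O^T
        diff = y_o - mu_i[obs]                    # y^o - O mu
        _, logdet = np.linalg.slogdet(sigma_oo)
        quad = diff @ np.linalg.solve(sigma_oo, diff)
        log_w.append(np.log(pi_i)
                     - 0.5 * (p_o * np.log(2.0 * np.pi) + logdet + quad))
    log_w = np.array(log_w)
    w = np.exp(log_w - log_w.max())               # subtract max for stability
    return w / w.sum()
```

Subtracting the largest log-weight before exponentiating avoids underflow when p is large, without changing the normalized result.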

3.2 The AECM algorithm

The AECM algorithm (Meng and van Dyk 1997) is a flexible extension of the ECM algorithm (Meng and Rubin 1993) in which the specification of the complete data on each CM-step is allowed to be different. With the adoption of AECM, each iteration consists of several cycles and each cycle has its own E-step and CM-steps. Indeed, two main advantages of AECM lie in its mathematical simplicity and lower computational cost, as compared with the EM algorithm derived in the preceding subsection.

To apply the AECM algorithm to the fitting of MFA models with missing values, we partition the unknown parameters as \({\varvec{\varTheta }}=\big ({\varvec{\varTheta }}_1,{\varvec{\varTheta }}_2\big )\), where \({\varvec{\varTheta }}_1=\{\pi _i,{\varvec{\mu }}_i\}_{i=1}^g\) and \({\varvec{\varTheta }}_2=\{{\varvec{A}}_i,{\varvec{\varPsi }}_i\}_{i=1}^g\). In the first cycle, given \({\varvec{\varTheta }}_2=\hat{{\varvec{\varTheta }}}^{(k)}_2\), the log-likelihood function of \({\varvec{\varTheta }}_1\) for complete data \({\varvec{Y}}_c^{[2]}=({\varvec{y}},{\varvec{Z}})\), excluding constant terms, takes the form of

The implementation of the proposed AECM algorithm, as detailed below, consists of one E-step followed by one CM-step in each of two cycles across iterations.

E-step of cycle 1: Compute the Q-function corresponding to (6) as follows:

where \(\hat{{\varvec{\varSigma }}}^{(k)}_i=\hat{{\varvec{A}}}^{(k)}_i\hat{{\varvec{A}}}^{(k)^\top }_i+\hat{\varvec{\varPsi }}^{(k)}_i\) and \(\hat{\varvec{y}}_{ij}^{(k)}\) is the same as (5) given in the E-step of EM.

CM-step of cycle 1: Find \(\hat{{\varvec{\varTheta }}}_1^{(k+1)}\) by maximizing (7). The resulting estimators are

in which \(\hat{\varvec{\mu }}_i^{(k+1)}\) has a simpler expression than that of EM.

In the second cycle, we take \(({\varvec{y}},{\varvec{F}},{\varvec{Z}})\) to be the complete data and estimate \({\varvec{\varTheta }}_2\) given \({\varvec{\varTheta }}_1=\hat{\varvec{\varTheta }}^{(k+1)}_1\). Hence, the complete-data log-likelihood function of \({\varvec{\varTheta }}_2\) for \({\varvec{Y}}_c^{[1]}=({\varvec{y}},{\varvec{F}},{\varvec{Z}})\), omitting terms irrelevant to \({\varvec{\varTheta }}_2\), is

E-step of cycle 2: Similarly, obtain the Q-function corresponding to (9), given by

where \(\hat{\varvec{\varGamma }}_i^{(k)}=\hat{\varvec{\varSigma }}_i^{{(k)}-1}\hat{\varvec{A}}_i^{(k)}\), \(\hat{\varvec{\zeta }}_{i}^{(k)}=\sum _{j=1}^n\hat{z}_{ij}^{(k)}({\varvec{I}}_q-\hat{\varvec{\varGamma }}_i^{(k)\top }\hat{\varvec{A}}_i^{(k)})/\sum _{j=1}^n\hat{z}_{ij}^{(k)}\) and

CM-step of cycle 2: Maximizing (10) over \({\varvec{A}}_i\) and \({\varvec{\varPsi }}_i\) gives

4 Automated learning of MFA with missing information

In the EM and AECM algorithms, the values of g and q are considered to be fixed and known. The most popular measure for model selection in mixture models is the Bayesian information criterion (BIC; Schwarz 1978) due to its satisfactory theoretical properties (Keribin 2000) and empirical performances (Fraley and Raftery 1998, 2002). The BIC is calculated as

where m is the number of parameters and \(\ell _{\max }\) is the maximized log-likelihood value. However, performing a grid search over a range of (g, q) pairs is typically time-consuming. To cope with this obstacle, we develop a faster learning procedure, called the AMFA algorithm for short, to determine the best q in MFA, although g is still assumed to be known. When \(g=1\), our procedure includes the one-stage AFA algorithm (Zhao and Shi 2014) as a special case.
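For comparison with the one-stage procedure developed below, the two-stage selection of q by BIC can be sketched as follows. Two conventions are assumed here and are not taken from the text: the BIC is written in the smaller-is-better form \(m\ln n-2\ell _{\max }\), and m is the usual count of free parameters in a g-component MFA with a common q, namely \((g-1)+g\{2p+pq-q(q-1)/2\}\). The maximized log-likelihood values in the example are made up purely to exercise the function.

```python
import math

def mfa_num_params(g, p, q):
    """Assumed free-parameter count: (g - 1) mixing proportions plus, per
    component, p means, p uniquenesses and pq - q(q - 1)/2 loadings."""
    return (g - 1) + g * (2 * p + p * q - q * (q - 1) // 2)

def bic(loglik_max, m, n):
    """BIC in the assumed smaller-is-better form m * ln(n) - 2 * l_max."""
    return m * math.log(n) - 2.0 * loglik_max

def two_stage_select_q(loglik_by_q, g, p, n):
    """Second stage: pick the q whose fitted model has the smallest BIC.

    loglik_by_q maps each candidate q to the maximized log-likelihood
    obtained from a separate first-stage fit.
    """
    scores = {q: bic(ll, mfa_num_params(g, p, q), n)
              for q, ll in loglik_by_q.items()}
    return min(scores, key=scores.get), scores

# Hypothetical first-stage results for g = 1, p = 10, n = 200.
best_q, scores = two_stage_select_q({1: -1200.0, 2: -1100.0, 3: -1098.0},
                                    g=1, p=10, n=200)
```

The cost of this approach lies in the first stage, which requires one complete model fit for every candidate q (and for every g when g is also unknown).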

Meng and Rubin (1993) have shown that the asymptotic convergence rate of an EM-type algorithm is inversely related to the amount of missing data. More precisely, the speeds of EM-type algorithms are governed by the fractions of observed information in the respective data-augmentation space. Zhao and Yu (2008) proposed a fast ECM algorithm for the MFA model without missing values under the smaller data augmentation \({\varvec{Y}}_c^{[2]}\) utilized in the first cycle of AECM.

From (6), unlike the AECM algorithm, we utilize the smaller complete data \({\varvec{Y}}_c^{[2]}=({\varvec{y}},{\varvec{Z}})\) to update \(\hat{{\varvec{A}}}^{(k)}_i\) and \(\hat{{\varvec{\varPsi }}}^{(k)}_i\) by maximizing

where \({\varvec{\varTheta }}_2=\{{\varvec{A}}_i,{\varvec{\varPsi }}_i\}_{i=1}^g\) and \(\hat{\varvec{V}}_{i}^{(k)}\) is the local covariance matrix as defined by (11). Let \(\tilde{{\varvec{A}}}_i\triangleq [\hat{{\varvec{\varPsi }}}_i^{(k)}]^{-1/2}{\varvec{A}}_i\) and \(\tilde{{\varvec{V}}}_i\triangleq [\hat{{\varvec{\varPsi }}}_i^{(k)}]^{-1/2}\hat{{\varvec{V}}}^{(k)}_i[\hat{{\varvec{\varPsi }}}_i^{(k)}]^{-1/2}\). To locally maximize (12) under the smaller data-augmentation space \(({\varvec{y}},{\varvec{Z}})\), it follows straightforwardly from Zhao and Yu (2008) that each \(\tilde{{\varvec{A}}}_i\) satisfies

Let \({\varvec{U}}_{iq_i}{\varvec{D}}_i{\varvec{R}}_i\) be the singular value decomposition of \(\tilde{{\varvec{A}}}_i\), where \({\varvec{U}}_{iq_i}\) is a \(p\times q_i\) matrix satisfying \({\varvec{U}}_{iq_i}^\top {\varvec{U}}_{iq_i}={\varvec{I}}_{q_i}\), \({\varvec{D}}_i=\hbox {Diag}(d_{i1},\ldots , d_{iq_i})\) is a diagonal matrix with elements \(d_{i1}\ge d_{i2}\ge \cdots \ge d_{iq_i}>0\), and \({\varvec{R}}_i\) is an arbitrarily chosen \(q_i\times q\) matrix satisfying \({\varvec{R}}_i{\varvec{R}}_i^\top ={\varvec{I}}_{q_i}\). Therefore, the decomposition of (13) is equivalent to

where \(\left( {\varvec{u}}_{i1},1+d_{i1}^2\right) ,\ldots ,\left( {\varvec{u}}_{iq_i},1+d_{iq_i}^2\right) \) are the corresponding eigenvector–eigenvalue pairs of \(\tilde{{\varvec{V}}}_i\).

Consider the decomposition for \(\tilde{{\varvec{V}}}_i={\varvec{U}}_i{\varvec{\varLambda }}_i{\varvec{U}}_i^\top \), where \({\varvec{U}}_i=\big [{\varvec{u}}_{i1}~\cdots ~{\varvec{u}}_{ip}\big ]\) is a \(p\times p\) orthogonal matrix and \({\varvec{\varLambda }}_i=\hbox {Diag}(\lambda _{i1},\ldots , \lambda _{ip})\). Using the facts of

it can be therefore established from (12) that

where \(\hat{n}_{i}^{(k)}=\sum _{j=1}^n\hat{z}_{ij}^{(k)}\). Obviously, the sum \(\sum _{i=1}^g \hat{n}_{i}^{(k)} \big (\ln |\hat{\varvec{\varPsi }}_i^{(k)}|+\sum _{r=1}^p\lambda _{ir}\big )\) does not depend on \(q_i\), and the function \(f(\lambda )=\ln \lambda -\lambda +1\) is negative and strictly decreasing over the interval \((1,\infty )\). Hence, the further an eigenvalue \(\lambda _{ir}\) exceeds one, the more the rth factor contributes to (14).

Following Zhao and Shi (2014), we incorporate the penalty term of BIC, say \(m(q)\ln (n)\), into (14) to aid the selection of q. Accordingly, the optimal q can be calculated as an integer solution satisfying the equation:

where \(q_{\max }\) is the greatest integer satisfying the following identifiability constraint (Ledermann 1937):
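A rough sketch of this selection step is given below under explicitly stated assumptions, since the exact form of the criterion may differ from the one used in the paper. The Ledermann bound is taken in its common non-strict form \((p-q)^2\ge p+q\); m(q) is assumed to be \((g-1)+g\{2p+pq-q(q-1)/2\}\); and the penalized objective is assumed to be the q-dependent part of the log-likelihood, \(-\frac{1}{2}\sum _i\hat{n}_i\sum _{r\le q,\,\lambda _{ir}>1}f(\lambda _{ir})\) with \(f(\lambda )=\ln \lambda -\lambda +1\), minus \(\frac{1}{2}m(q)\ln n\). The eigenvalues in the example are invented for illustration.

```python
import math

def ledermann_qmax(p):
    """Largest q < p with (p - q)^2 >= p + q (non-strict form, assumed)."""
    return max(q for q in range(p) if (p - q) ** 2 >= p + q)

def num_params(g, p, q):
    """Assumed free-parameter count m(q) for a g-component MFA."""
    return (g - 1) + g * (2 * p + p * q - q * (q - 1) // 2)

def select_q(eigvals, n_i, n, p):
    """Choose q in 1..q_max maximizing the assumed penalized criterion.

    eigvals[i]: eigenvalues of the scaled local covariance of component i,
                sorted in decreasing order.
    n_i[i]:     effective sample size of component i.
    """
    g = len(eigvals)
    best_q, best_val = None, -math.inf
    for q in range(1, ledermann_qmax(p) + 1):
        gain = 0.0
        for lam, ni in zip(eigvals, n_i):
            for r in range(q):
                if lam[r] > 1.0:  # only eigenvalues above one contribute
                    gain -= 0.5 * ni * (math.log(lam[r]) - lam[r] + 1.0)
        val = gain - 0.5 * num_params(g, p, q) * math.log(n)
        if val > best_val:
            best_q, best_val = q, val
    return best_q

# Hypothetical eigenvalues for g = 1, p = 10: two dominant directions.
lam = [[20.0, 15.0, 1.05, 1.01, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4]]
q_hat = select_q(lam, n_i=[200.0], n=200, p=10)
```

In this example the third and fourth eigenvalues barely exceed one, so their likelihood gain is far smaller than the additional penalty, and the criterion retains two factors.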

Letting \(q=\hat{q}^{(k+1)}\), it can be shown that the solution for \({\varvec{A}}_i\) that globally maximizes (12) is

where \({\varvec{\varLambda }}_{iq^{\prime }_i}=\hbox {Diag}(\lambda _{i1},\ldots , \lambda _{iq^{\prime }_i})\), for which \(q^{\prime }_i=q\) if \(\lambda _{iq}>1\); otherwise, \(q^{\prime }_i\) is set to be the unique integer satisfying \(\lambda _{iq^{\prime }_i}>1\ge \lambda _{iq^{\prime }_i+1}\). As such, \({\varvec{\varLambda }}_{iq^{\prime }_i}-{\varvec{I}}_{q^{\prime }_i}\) is guaranteed to be positive definite. For simplicity, the rotation matrix \({\varvec{R}}_i\) is chosen as the first \(q^{\prime }_i\) rows of \({\varvec{I}}_q\) to satisfy the requirement of \({\varvec{R}}_i{\varvec{R}}_i^{\top }={\varvec{I}}_{q^\prime _i}\) in our analysis.
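The loading update can be sketched from the singular value decomposition \(\tilde{{\varvec{A}}}_i={\varvec{U}}_{iq_i}{\varvec{D}}_i{\varvec{R}}_i\) with \(d_{ir}^2=\lambda _{ir}-1\), which suggests \({\varvec{A}}_i=[\hat{{\varvec{\varPsi }}}_i^{(k)}]^{1/2}{\varvec{U}}_{iq^{\prime }_i}({\varvec{\varLambda }}_{iq^{\prime }_i}-{\varvec{I}}_{q^{\prime }_i})^{1/2}{\varvec{R}}_i\). The snippet below implements this form for a single component; it is an illustration assembled from the surrounding definitions, and the function name and argument layout are assumptions.

```python
import numpy as np

def update_loadings(V, psi, q):
    """Loading update for one component.

    V:   p x p local covariance matrix.
    psi: current uniqueness variances (diagonal of Psi) as a 1-D array.
    q:   number of factors selected for this iteration.
    Returns a p x q matrix; columns beyond q' are zero, which corresponds
    to taking R as the first q' rows of I_q.
    """
    inv_sqrt = 1.0 / np.sqrt(psi)
    V_tilde = inv_sqrt[:, None] * V * inv_sqrt[None, :]   # Psi^-1/2 V Psi^-1/2
    lam, U = np.linalg.eigh(V_tilde)                       # ascending order
    order = np.argsort(lam)[::-1]                          # make it descending
    lam, U = lam[order], U[:, order]
    q_eff = int(min(q, np.sum(lam > 1.0)))                 # q' in the text
    D = np.sqrt(lam[:q_eff] - 1.0)
    A = np.zeros((V.shape[0], q))
    A[:, :q_eff] = np.sqrt(psi)[:, None] * U[:, :q_eff] * D[None, :]
    return A
```

A simple sanity check is that when the local covariance is exactly of the form \({\varvec{A}}_0{\varvec{A}}_0^\top +{\varvec{\varPsi }}\) with q factors, the update recovers \({\varvec{A}}_0{\varvec{A}}_0^\top \); the loadings themselves are only determined up to rotation.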

Next, we devise an element-wise scheme in a similar spirit to Zhao et al. (2008) for updating the component uniqueness variances sequentially. First, we define

Given \({\varvec{A}}_i=\hat{{\varvec{A}}}_i^{(k+1)}\) and \({\varvec{\varPsi }}_i=\hat{{\varvec{\varPsi }}}_{ir}^{(k)}\), Eq. (12) is obviously a function of \(\psi _{ir}\), so we simply denote it by \(\bar{\ell }(\psi _{ir})\). Letting \({\varvec{\varSigma }}_{ir}\triangleq \hat{{\varvec{\varPsi }}}_{ir}^{(k)}+\hat{{\varvec{A}}}_i^{(k+1)}\hat{{\varvec{A}}}_i^{(k+1)\top }\), maximization of \(\bar{\ell }(\psi _{ir})\) is equivalent to solving the following equation:

where \((\cdot )_{rr}\) indicates the (r, r)th entry of the matrix given in the parenthesis. Multiplying both sides by \([\hat{{\varvec{\varPsi }}}_{i}^{(k)}]^{-1/2}\), Eq. (18) can be equivalently rewritten as

where \(\tilde{{\varvec{\varSigma }}}_{ir}=[\hat{{\varvec{\varPsi }}}_i^{(k)}]^{-1/2}{\varvec{\varSigma }}_{ir}[\hat{{\varvec{\varPsi }}}_i^{(k)}]^{-1/2}\).

To calculate the inverse of \(\tilde{{\varvec{\varSigma }}}_{ir}\) easily, we adopt the following notation:

where \(\omega _{ir}=\psi _{ir}/\hat{\psi }_{ir}^{(k)}-1\) and \(\hat{\omega }_{ih}^{(k+1)}=\hat{\psi }_{ih}^{(k+1)}/\hat{\psi }_{ih}^{(k)}-1\). Combining the above definitions, we obtain

where \({\varvec{e}}_r\) is the rth column of \({\varvec{I}}_p\), for \(r=1,\ldots ,p\), and \({\varvec{B}}_{ir}=\sum _{h=1}^{r-1}\hat{\omega }_{ih}^{(k+1)}{\varvec{e}}_h{\varvec{e}}_h^\top +{\varvec{I}}_p+\tilde{{\varvec{A}}}_i\tilde{{\varvec{A}}}_i^\top \). Using the matrix inversion formula (Golub and Van Loan 1989), we obtain

where the last equality holds when \({\varvec{B}}_{ir}\) is a nonsingular matrix and \(1+\omega _{ir}{\varvec{e}}_r^\top {\varvec{B}}_{ir}^{-1}{\varvec{e}}_r\ne 0\).

Substituting (20) into (19) yields the following result:

where the right-hand side is a function of \(\omega _{ir}\). Because the denominator of (21) is greater than zero, equating the equation to zero gives the unique solution

The corresponding solution of \(\psi _{ir}\) is given by

However, the solution (23) is not guaranteed to be positive. To ensure that \({\varvec{\varPsi }}_i\) is positive definite, one common remedy is to choose a very small number \(\eta >0\) and require \(\psi _{ir}\ge \eta \). That is, we set \(\hat{\psi }_{ir}^{(k+1)}=\eta \) if \(\hat{\omega }_{ir}^{(k+1)}\le -1\). In this way, the uniqueness variances are guaranteed to be positive and \(\hat{\omega }^{(k+1)}_{ih}=(\hat{\psi }_{ih}^{(k+1)}/\hat{\psi }_{ih}^{(k)}-1)>-1\), for \(h=1,\ldots , r-1\). Thus, it is easy to see that \({\varvec{B}}_{ir}=\hbox {Diag}(\hat{\omega }_{i1}^{(k+1)}+1,\ldots ,\hat{\omega }_{i,r-1}^{(k+1)}+1,1,\ldots ,1)+\tilde{{\varvec{A}}}_i\tilde{{\varvec{A}}}_i^\top \) is positive definite and hence invertible. It is worth noting that the inverse of \({\varvec{B}}_{ir}\) can be easily calculated because \(1+\omega _{ir}{\varvec{e}}_r^\top {\varvec{B}}_{ir}^{-1}{\varvec{e}}_r >0\) whenever \(\omega _{ir}>-1\). This fact is justified in Proposition 2 of Zhao et al. (2008) for the single-factor analysis model.

Next, we discuss in more detail the unimodal property of \(\bar{\ell }(\psi _{ir})\). As we have shown previously,

From (23), we obtain \(\hat{\omega }_{ir}^{(k+1)}=\hat{\psi }_{ir}^{(k+1)}/\hat{\psi }_{ir}^{(k)}-1\) and \(\omega _{ir}=\psi _{ir}/\hat{\psi }_{ir}^{(k)}-1\). Now, if \(\hat{\psi }_{ir}^{(k+1)}>\psi _{ir}\), it implies that

or equivalently \({\varvec{e}}_r^\top {\varvec{B}}_{ir}^{-1}{\varvec{e}}_r+\omega _{ir}({\varvec{e}}_r^\top {\varvec{B}}_{ir}^{-1}{\varvec{e}}_r)^{2}-{\varvec{e}}_r^\top {\varvec{B}}_{ir}^{-1}\tilde{{\varvec{V}}}_i{\varvec{B}}_{ir}^{-1}{\varvec{e}}_r<0\). This will lead to \(\bar{\ell }^\prime (\psi _{ir})>0\), since \(\hat{\psi }_{ir}^{{(k)}}\) is always positive. Similarly, we can verify that \(\bar{\ell }^\prime (\psi _{ir})<0\) if \(\hat{\psi }_{ir}^{(k+1)}<\psi _{ir}\). Hence, we can conclude that \(\hat{\psi }_{ir}^{(k+1)}\) is a global maximizer of \(\bar{\ell }(\psi _{ir})\) at iteration \(k+1\).

In summary, the proposed AMFA algorithm proceeds as follows:

- E-step: Same as the E-step of cycle 1 of the AECM algorithm.

- CM-step 1: Compute \(\hat{\pi }_i^{(k+1)}\) and \(\hat{\varvec{\mu }}_i^{(k+1)}\) using the same estimators (8) as in the AECM algorithm.

- CM-step 2: Compute \(\hat{q}^{(k+1)}\) using Eq. (15).

- CM-step 3: Compute \(\hat{{\varvec{A}}}^{(k+1)}_i\) using Eq. (17).

- CM-step 4: Compute \(\hat{\psi }_{ir}^{(k+1)}\) using (23) for \(i=1,\ldots ,g\) and \(r=1,\ldots ,p\). To avoid an improper solution of \(\psi _{ir}\), the estimated value of \(\omega _{ir}\) in (22) can be rendered as

$$\begin{aligned} \hat{\omega }^{(k+1)}_{ir}=\max \left\{ \frac{{\varvec{b}}_{i,r}^{\top }\tilde{{\varvec{V}}}_i{\varvec{b}}_{i,r}-b_{i,rr}}{b_{i,rr}^{2}},~\frac{\eta }{\hat{\psi }^{(k)}_{ir}}-1\right\} , \end{aligned}$$where \({\varvec{b}}_{i,r}\) and \(b_{i,rr}\) denote the rth column and the (r, r)th element of \({\varvec{B}}_{ir}^{-1}\), respectively.

Notably, when CM-step 2 is skipped, the AMFA algorithm reduces to the ECM procedure (Zhao and Yu 2008) for a fixed q. As will be demonstrated in our illustrations, the AMFA algorithm is much more efficient than the two-stage approach implemented by the EM, AECM and ECM algorithms.
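CM-step 4 can be sketched for a single component as follows. The code uses the truncated solution for \(\hat{\omega }^{(k+1)}_{ir}\) stated in the summary, recovers \(\hat{\psi }_{ir}^{(k+1)}=(1+\hat{\omega }^{(k+1)}_{ir})\hat{\psi }_{ir}^{(k)}\) from the definition of \(\omega _{ir}\), and refreshes the inverse of \({\varvec{B}}_{ir}\) by a rank-one (Sherman and Morrison) update, using the fact that consecutive matrices \({\varvec{B}}_{ir}\) differ by \(\hat{\omega }^{(k+1)}_{ir}{\varvec{e}}_r{\varvec{e}}_r^\top \). The function name, argument layout and default value of \(\eta \) are assumptions for illustration.

```python
import numpy as np

def update_uniquenesses(V, A_new, psi_old, eta=1e-6):
    """Sequential update of the uniqueness variances of one component.

    V:       p x p local covariance matrix.
    A_new:   p x q loadings already updated in this iteration.
    psi_old: uniqueness variances from the previous iteration.
    eta:     small positive floor keeping every variance positive.
    """
    p = psi_old.size
    inv_sqrt = 1.0 / np.sqrt(psi_old)
    V_t = inv_sqrt[:, None] * V * inv_sqrt[None, :]    # scaled V
    A_t = inv_sqrt[:, None] * A_new                    # scaled loadings
    B_inv = np.linalg.inv(np.eye(p) + A_t @ A_t.T)     # inverse of B for r = 1
    psi_new = np.empty(p)
    for r in range(p):
        b_r = B_inv[:, r]
        b_rr = B_inv[r, r]
        omega = max((b_r @ V_t @ b_r - b_rr) / b_rr ** 2,
                    eta / psi_old[r] - 1.0)
        psi_new[r] = (1.0 + omega) * psi_old[r]
        # Rank-one update giving the inverse of B + omega * e_r e_r^T.
        B_inv = B_inv - omega * np.outer(b_r, b_r) / (1.0 + omega * b_rr)
    return psi_new
```

Only one full matrix inversion is needed per component; each of the p coordinate updates then costs a matrix-vector product and an outer product.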

5 Provision of standard error estimates

The sampling-based bootstrap technique (Efron and Tibshirani 1993) is a simple procedure for estimating the sampling distribution of an estimator of interest. Although conceptually simple, one major drawback of this approach is that it can be either computationally intractable or unrealistically time-consuming, especially for mixture models (Basford et al. 1997). We offer a simple and effective information-based method for obtaining the standard errors of the ML estimates of the parameters after convergence of the AMFA algorithm. Following the notation used in Boldea and Magnus (2009) and Montanari and Viroli (2011), we explicitly derive the score vector and the Hessian matrix of the MFA model with possible missing values. The standard errors of the parameter estimates can then be obtained from the inverse of the observed information matrix.

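From (3), the log-likelihood for the MFA model with incomplete data is

$$\begin{aligned} \ell ({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o})=\sum _{j=1}^n\ln \left( \sum _{i=1}^g\pi _i\phi ^\mathrm{o}_{ij}\right) ,\qquad (24) \end{aligned}$$

where \(\phi ^\mathrm{o}_{ij}=\phi _{p^\mathrm{o}_j}({\varvec{y}}^\mathrm{o}_j;{\varvec{\mu }}^\mathrm{o}_{ij},{\varvec{\varSigma }}^\mathrm{oo}_{ij})\).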

Let \({\varvec{f}}_i=\mathrm{vec}({\varvec{A}}_i)\) denote a \(pq\times 1\) column vector obtained by stacking all columns of \({\varvec{A}}_i\), and \({\varvec{\psi }}_i\) a \(p\times 1\) vector containing the main diagonal of \({\varvec{\varPsi }}_i\). Thus, there exists a unique \(p^2\times p\) duplication matrix \({\varvec{D}}\) such that \({\varvec{\psi }}_i={\varvec{D}}^{\top }\mathrm{vec}({\varvec{\varPsi }}_i)\). Moreover, it can be shown that the first and second derivatives of \(\ln \phi ^\mathrm{o}_{ij}\) are, respectively, given by

and

where \({\varvec{b}}_{ij}={\varvec{S}}_{ij}^\mathrm{oo}({{\varvec{y}}}_{j}-{\varvec{\mu }}_{i})\), \({{\varvec{B}}}_{ij}={\varvec{S}}_{ij}^\mathrm{oo}-{{\varvec{b}}}_{ij}{{\varvec{b}}}_{ij}^\top \) and \({\varvec{\varUpsilon }}_{ij}={\varvec{S}}_{ij}^\mathrm{oo}-2{{\varvec{B}}}_{ij}\). For the sake of concise representation, the following lemma presents the compact forms of (25) and (26) whose detailed proof is sketched in Supplementary Appendix D.

Lemma 1

The first two derivatives of \(\ln \phi ^\mathrm{o}_{ij}\) in MFA models that allow for missing values are given by

where

Proof

The proof is straightforward and hence omitted. \(\square \)

Following the same notation used in Boldea and Magnus (2009), we write \({\varvec{a}}_i={\varvec{e}}_i/\pi _i\), for \(i=1,\ldots , g-1\), where \({\varvec{e}}_i\) denotes the ith column of \({\varvec{I}}_{g-1}\), and \({\varvec{a}}_{g}=-{\varvec{1}}_{g-1}/\pi _g\) with \({\varvec{1}}_{g-1}\) being a \((g-1)\times 1\) vector of ones. The score vector is defined as the first derivative of (24), denoted by \({\varvec{s}}({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o})=\sum _{j=1}^n {\varvec{s}}_j({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o}_j)\), where

Using Lemma 1 and

we obtain the explicit expressions for the elements of \({\varvec{s}}_j({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o}_j)\), which contain \({\varvec{s}}^{\varvec{\pi }}_j=\sum _{i=1}^g\alpha ^\mathrm{o}_{ij}{\varvec{a}}_i=\bar{{\varvec{a}}}_j\) and \({\varvec{s}}^{i}_j=\alpha ^\mathrm{o}_{ij}{\varvec{c}}_{ij}\) for \(i=1,\ldots ,g\), where

$$\begin{aligned} \alpha ^\mathrm{o}_{ij}=\frac{\pi _i\phi ^\mathrm{o}_{ij}}{\sum _{h=1}^g\pi _h\phi ^\mathrm{o}_{hj}}\qquad (29) \end{aligned}$$

is the posterior probability that \({\varvec{y}}^\mathrm{o}_j\) belongs to the ith group.

Consequently, the second derivative of the log-likelihood function, called the Hessian matrix, is \({\varvec{H}}({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o})=\sum _{j=1}^n {\varvec{H}}_j({\varvec{\varTheta }}\mid {\varvec{y}}^\mathrm{o}_j)\), where

From (28), we can deduce

Using Lemma 1 in conjunction with (31), we establish the following theorem, which allows a direct calculation of the Hessian matrix defined in (30).

Theorem 2

The Hessian matrix in (30) is composed of the elements of

where \({\varvec{c}}_{ij}\) and \({\varvec{C}}_{ij}\) are defined as in (27), and \(\alpha ^\mathrm{o}_{ij}\) is given in (29).

Proof

The proof is straightforward and hence omitted. \(\square \)

It is well known that mixture models may suffer from the label-switching problem (Stephens 2000), so that the parameters are not identifiable. A common strategy for alleviating this problem is to impose a constraint that makes the components unique, e.g., \(\pi _1>\cdots >\pi _g\). Suppose that the mixture parameters are identifiable and have a bounded likelihood. Redner and Walker (1984) showed that the ML estimator \(\hat{{\varvec{\varTheta }}}\) converges in probability to the true value of \({\varvec{\varTheta }}\) and in distribution to a multivariate normal distribution with mean vector \({\varvec{\varTheta }}\) and covariance matrix equal to the inverse of the Fisher information matrix. That is, as \(n\rightarrow \infty \),

where \(m=\hbox {Dim}({\varvec{\varTheta }})\). Under suitable regularity conditions (Cramér 1946), the expected information matrix \(\mathcal {I}({\varvec{\varTheta }})\) can be approximated by

Thus, the large sample properties of (32) and (33) are useful for hypothesis testing and the construction of confidence intervals. In practice, standard errors of parameter estimates can be extracted from the square root of the diagonal elements of \(\mathcal {I}^{-1}_o(\hat{\varvec{\varTheta }})\).
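To make the last step concrete, the sketch below computes standard errors from a matrix of individual scores. It assumes that the observed information is approximated by the empirical (outer-product-of-scores) form \(\sum _{j=1}^n{\varvec{s}}_j{\varvec{s}}_j^\top \) evaluated at the ML estimate, which is the standard empirical information matrix. The function name `standard_errors` and the layout of `scores` (one row per observation, one column per parameter) are assumptions made for this example.

```python
import numpy as np

def standard_errors(scores):
    """Standard errors from per-observation score vectors (illustrative sketch).

    scores : (n, m) array whose j-th row stands for the individual score
             s_j evaluated at the ML estimate.
    Assumes the empirical information sum_j s_j s_j^T is nonsingular.
    """
    scores = np.asarray(scores, dtype=float)
    info = scores.T @ scores        # empirical information matrix (m x m)
    cov = np.linalg.inv(info)       # asymptotic covariance estimate
    return np.sqrt(np.diag(cov))    # square roots of the diagonal elements
```

For a poorly conditioned information matrix, solving a linear system or using a pseudo-inverse may be numerically safer than an explicit inverse.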

6 Numerical illustrations

6.1 Example 1: Ozone day detection data

Ozone is an allotrope of oxygen and is known to be much less stable than the diatomic allotrope O\(_2\). It is generated when a dioxygen molecule binds to an oxygen atom, the latter being released when dioxygen is decomposed by high-energy radiation such as ultraviolet light or by atmospheric electrical discharges. Most ozone resides in the stratosphere, where its concentration is highest between 20 km and 30 km above the earth’s surface. This region, called the ozone layer, absorbs ultraviolet (UV) radiation that is especially harmful to living creatures.

Our first example concerns the one-hour (1-h) and eight-hour (8-h) peak ground-level ozone data with genuine missing values, which were collected by Zhang and Fan (2008) from 1998 to 2004 in the Houston, Galveston and Brazoria (HGB) area of Texas, USA. Both datasets consist of more than 2500 instances with 72 continuous attributes containing various measures of air pollutants and meteorological information for the HGB area. In addition, there is a nominal variable whose label equals 1 for an ozone day and 0 for a normal day. In July 1997, the Environmental Protection Agency (EPA) announced a new 8-h standard of 0.08 parts per million (ppm) in place of the previous 1-h standard of 0.12 ppm. The two datasets differ in whether the peak pollution level is measured over 1 h or 8 h. Only a few percent of the instances are ozone days. Specifically, there are 73 (2.88%) ozone days versus 2463 normal days in the 1-h samples and 160 (6.31%) ozone days versus 2374 normal days in the 8-h samples. The data are publicly available from the UCI machine learning database repository (Frank and Asuncion 2010). These data contain many missing values. A more detailed account of the fractions of missing values is given in Table 1. The table shows that both datasets exhibit similar missing patterns across different percentage ranges. In addition, the normal days tend to have greater proportions of missing values than ozone days.

To compare the convergence behavior of the EM, AECM and ECM algorithms, we fit the MFA model to the 1-h and 8-h datasets. The number of components g is fixed at 2 to reflect the two intrinsic clusters (normal versus ozone days), whereas the number of factors q varies from 1 to 40, although in principle the maximum on the basis of (16) is 60. We restrict q in this way because the three algorithms may fail to converge, owing to over-fitting of the MFA model, when q exceeds 40. Table 2 compares, for each algorithm, the three models with the highest BIC\(^a=-\hbox {BIC}/2\) values, where a larger BIC\(^a\) value indicates a better-fitting model, together with the required CPU time. For both the 1-h and 8-h datasets, the optimal numbers of factors chosen by the three algorithms lie between 24 and 28. The ECM algorithm converges considerably faster than EM and AECM and attains higher BIC\(^a\) values. Even so, it takes nearly 2 machine hours to complete the learning. From a practical viewpoint, the running time of the two-stage procedures is too long.
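The upper limit of 60 quoted above can be checked numerically. Equation (16) is not reproduced in this section, so the sketch below assumes that it is the Ledermann (1937) bound, i.e., the largest q satisfying \((p-q)^2\ge p+q\). The function name `ledermann_max_factors` is hypothetical.

```python
import math

def ledermann_max_factors(p):
    """Largest integer q with (p - q)^2 >= p + q (Ledermann bound).

    Assumption: this is the bound referred to as Eq. (16) in the text.
    """
    return math.floor(p + (1 - math.sqrt(1 + 8 * p)) / 2)

print(ledermann_max_factors(72))  # ozone data, p = 72
```

Under this assumption the bound gives 60 for \(p=72\), and it also gives 18 for \(p=25\) and 4 for \(p=8\), matching the maxima used in the later examples.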

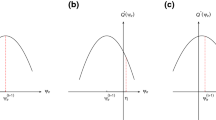

To speed up the learning process, we adopt the AMFA algorithm, which generally converges within 3 minutes (Table 2). Although different initial values \(\hat{q}^{(0)}\) may yield slightly different optimal q, they all end up with BIC\(^a\) values similar to those of ECM. Figure 1 displays the evolution of the BIC\(^a\) values against the number of iterations, starting from \(\hat{q}^{(0)}=10,~12\) and 14. The AMFA algorithm arrives at a final q between 24 and 28 (Table 2), in line with the two-stage algorithms, but it requires fewer iterations and less CPU time.

6.2 Example 2: Diabetes in Pima Indian women

Diabetes mellitus (DM), called diabetes for short, is a disease that occurs when a patient’s blood sugar remains at a high level for a long time. Symptoms of diabetes include frequent urination, increased thirst, increased hunger and decreased weight. There are three main types of diabetes, and any of them causes complications if left untreated. Type-1 DM, known as insulin-dependent diabetes mellitus (IDDM), results from autoimmune destruction of the \(\beta \)-cells, which causes a shortage of insulin. Type-2 DM, known as non-insulin-dependent diabetes mellitus (NIDDM), is a long-term metabolic disorder commonly attributed to obesity and lack of exercise. Gestational diabetes mellitus (GDM) is a condition in which women without diabetes have high blood sugar levels during pregnancy.

The second example, on which we demonstrate the usefulness of our methods, is the Pima Indian women data, publicly available at the UCI machine learning database repository (Frank and Asuncion 2010). The dataset comprises \(p=8\) attributes, including number of times pregnant (\(x_1\)), plasma glucose concentration (\(x_2\)), diastolic blood pressure in mmHg (\(x_3\)), triceps skinfold thickness in mm (\(x_4\)), 2-h serum insulin in \(\mu \)U/ml (\(x_5\)), body mass index (\(x_6\)), diabetes pedigree function (\(x_7\)) and age (\(x_8\)), for 268 diabetic and 500 non-diabetic female patients. A detailed description of the eight attributes and their numbers of missing units is given in Table 2 of Wang and Lin (2015). Overall, there are 652 unobserved measurements out of a total of \(8\times 768=6144\) measurements, leading to a missing rate of 10.61%.

Because there are two known clusters labeled ‘diabetic’ and ‘non-diabetic,’ we focus on the comparison under a two-component MFA model with q ranging from 1 to 4. We implement the EM, AECM, ECM and AMFA algorithms to fit MFA models to the data. Figure 2 displays the typical evolution of the BIC\(^a\)=\(-\hbox {BIC}/2\) values obtained by the four algorithms. As can be seen, all algorithms achieve nearly the same BIC\(^a\) values except for EM, which suffers from premature convergence for \(q=2\) and 3. Premature convergence means that the iterative process stagnates too early to reach the optimal value, or that successive log-likelihood values drop.

Table 3 shows the performance of the best chosen model along with the required CPU time obtained by running the three two-stage EM-based algorithms and the one-stage AMFA algorithm. As the table shows, the AECM, ECM and AMFA algorithms select the same number of factors \((q=3)\) and attain approximately the same BIC\(^a\) value. The EM algorithm offers an inferior solution \((q=4)\) due to premature convergence. The AMFA algorithm also takes the least CPU time to reach the optimal solution \((q=3)\). These empirical results provide evidence that the AMFA algorithm is more efficient than the two-stage algorithms.
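The second stage of a two-stage procedure, namely picking q by the largest BIC\(^a\), can be sketched as follows. The sketch assumes the usual convention \(\hbox {BIC}=m\ln n-2\ell _{\max }\) (Schwarz 1978), so that BIC\(^a=\ell _{\max }-(m/2)\ln n\), with m the number of free parameters. The function names and the numbers used in the usage lines are purely hypothetical and are not results from the paper.

```python
import math

def bic_a(loglik, n_params, n_obs):
    """BIC^a = -BIC/2, assuming BIC = n_params * ln(n_obs) - 2 * loglik."""
    return loglik - 0.5 * n_params * math.log(n_obs)

def select_q(logliks, n_params, n_obs):
    """Pick the number of factors with the largest BIC^a.

    logliks  : dict mapping q to the maximized log-likelihood of that fit
    n_params : dict mapping q to the number of free parameters
    """
    scores = {q: bic_a(ll, n_params[q], n_obs) for q, ll in logliks.items()}
    best_q = max(scores, key=scores.get)
    return best_q, scores

# Hypothetical inputs, for illustration only
best_q, scores = select_q({1: -110.0, 2: -90.0, 3: -89.0},
                          {1: 10, 2: 15, 3: 20}, n_obs=100)
```

Each entry of `logliks` requires a full model fit in the two-stage approach, which is the cost that a one-stage scheme avoids.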

Table 4 summarizes the resulting parameter estimates together with the associated standard errors obtained by inverting (33) for the chosen three-factor MFA model. The results indicate that the mean estimates of all eight variables are significantly less than zero for component 1 and greater than zero for component 2, suggesting that every variable contributes to the separation between the groups.

6.3 Example 3: Simulation based on cereal data with synthetic missing values

Our third example concerns the cereal data reported originally by Lattin et al. (2003). In these data, 116 participants appraised 12 cereals on \(p = 25\) attributes, including filling, salt, soggy and so on. Some respondents assessed more than one cereal, so that there are \(n = 235\) observations. Participants rated each attribute of the cereal brands on a five-point scale. Zhao and Shi (2014) implemented the AFA algorithm to fit a single-component MFA model to the cereal data and concluded that the most appropriate number of factors is \(q=4\).

We consider the fitting of the MFA models to the cereal data with g varying from 1 to 3 and q from 1 to \(q_\mathrm{max}=18\). Table 5 compares the performance of the AMFA and the three two-stage procedures for estimating 12 different MFA models, reporting the optimal choice of q, the final BIC\(^a\) value and the CPU time. As one can see, all algorithms give the same final ML solution, among which the AMFA demands substantially less CPU time (less than 1 s). It is also worth noting that the two-component MFA model with three factors provides the best fit. In contrast to previous studies, this suggests that the underlying distribution of the cereal data is multimodal rather than unimodal.

To study the computational efficiency of the AMFA algorithm relative to the two-stage procedures for learning MFA models in the presence of missing values, we generated artificial missing data by randomly deleting entries from the complete cereal data under three proportions of missingness: 5%, 10% and 20%. We focus on two-component MFA models, with g fixed at 2 on the basis of the best fit to the complete cereal data. Table 6 summarizes the results, including the CPU time, BIC\(^a\) values and the selected number of factors q, averaged over 100 Monte Carlo replications, together with their corresponding standard deviations. The results for EM are not included because it tends to converge prematurely, especially as the missing rate rises. The AECM and ECM procedures give similar BIC\(^a\) values, whereas the AMFA algorithm tends to yield slightly lower BIC\(^a\) scores. The numbers of factors selected by the three estimating procedures all decrease as the missing rate increases. In terms of computational efficiency, the AMFA algorithm is much faster than the two-stage procedures. Overall, the optimal values of q determined by the three procedures are quite close, while the AMFA algorithm is more reliable in that it yields lower standard deviations.
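A minimal sketch of how synthetic missing values of this kind could be generated is given below. The text only states that entries are deleted randomly, so the exact mechanism here is an assumption: a fixed number of cells, equal to the missing rate times the number of cells, is chosen uniformly at random without replacement and set to NaN. The function name `delete_at_random` is hypothetical.

```python
import numpy as np

def delete_at_random(Y, rate, rng):
    """Return a copy of Y with a fraction `rate` of cells set to NaN.

    Assumption: cells are chosen completely at random, without replacement.
    No check is made that every row keeps at least one observed value.
    """
    Y = np.asarray(Y, dtype=float)
    n, p = Y.shape
    n_missing = int(round(rate * n * p))
    idx = rng.choice(n * p, size=n_missing, replace=False)
    mask = np.zeros(n * p, dtype=bool)
    mask[idx] = True
    Y_miss = Y.copy()
    Y_miss[mask.reshape(n, p)] = np.nan
    return Y_miss

# Placeholder array with the dimensions of the cereal data (n = 235, p = 25)
rng = np.random.default_rng(0)
Y_complete = np.zeros((235, 25))
Y_20 = delete_at_random(Y_complete, 0.20, rng)
```

Repeating the call with fresh random draws would give the Monte Carlo replications for each missing rate.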

6.4 Example 4: Simulation based on artificial data

A simulation experiment is conducted to examine the performance of the EM, AECM, ECM and AMFA algorithms in recovering the true underlying parameter values when the number of components is correctly specified. We generate 500 Monte Carlo datasets with \(p=8\) attributes and sample sizes n=150 (small), 300 (moderate) and 600 (relatively large) from an MFA model with \(g=3\) components and \(q=3\) factors. The setup of true values of parameters is

where \(\mathrm{Unif}(p,q)\) denotes a \(p\times q\) matrix of random numbers drawn from a uniform distribution on the unit interval (0, 1), \({\varvec{1}}_8\) indicates a column vector of length 8 with all entries equal to 1, and \({\varvec{I}}_8\) denotes an \(8\times 8\) identity matrix.

For each simulated dataset, we compare the estimation accuracy and efficiency of the four considered algorithms on the fitting of the three-component MFA model with q ranging from 1 to \(q_{\max }=4\). To investigate the estimation accuracies, we report in Table 7 the average norm of the bias of parameters \(\{\pi _i,{\varvec{B}}_i,{\varvec{\varPsi }}_i\}_{i=1}^{3}\), since their true values are known, summarized over 500 replications along with the standard deviations in parentheses. For a specific parameter vector \(\varvec{\theta }\) of length d with true values \((\theta _1,\ldots ,\theta _d)\), the norm of the bias is defined as \(\{(\hat{\theta }^{(r)}_1-\theta _1)^2+\cdots +(\hat{\theta }^{(r)}_d-\theta _d)^2\}^{1/2}\), where \(\hat{\theta }^{(r)}_{s}\) is the estimate of \(\theta _{s}\), for \(s=1,\ldots ,d\), obtained at the rth replication. The final converged BIC values and consumed CPU time are also shown in the last two columns for the sake of comparison. From the numerical results listed in Table 7, the four algorithms produce very similar estimation accuracy and the AMFA algorithm demands the lowest computational cost in all cases. Notice that the EM algorithm is the slowest, while the AECM algorithm gives slightly lower accuracy in some cases. It is also noteworthy that the norm of the bias as well as the standard deviations for all parameter vectors become smaller as the sample size increases. This provides empirical evidence that the estimates obtained by the four estimating procedures have desirable asymptotic properties. Although the above simulation experiment is somewhat limited, it demonstrates that the AMFA algorithm can yield parameter estimates and converged log-likelihood values comparable to those of the two-stage methods, which may demand a prohibitively higher computational burden.
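The accuracy measure defined above can be computed as in the following sketch, which evaluates the norm of the bias for each replication and then its average and standard deviation across replications. The function names and the use of the sample standard deviation (`ddof=1`) are assumptions made for this example.

```python
import numpy as np

def bias_norms(estimates, truth):
    """Norm of the bias for each replication.

    estimates : (R, d) array, row r holding the estimate from replication r
    truth     : length-d array of true parameter values
    """
    estimates = np.asarray(estimates, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return np.sqrt(((estimates - truth) ** 2).sum(axis=1))

def summarize(estimates, truth):
    """Average norm of the bias and its standard deviation (ddof=1 assumed)."""
    norms = bias_norms(estimates, truth)
    return norms.mean(), norms.std(ddof=1)
```

For a matrix-valued parameter, the estimate would first be vectorized so that each replication occupies one row of `estimates`.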

7 Conclusion

We have devised a one-stage AMFA procedure that seamlessly integrates the selection of the number of factors into parameter estimation for fast learning of MFA models with possibly missing values. The efficiency of this automatic scheme stems from a smaller amount of missing information and from the quick determination of the factor dimension based on the eigendecomposition of local sample covariance matrices. Two auxiliary permutation matrices are incorporated into all considered estimating procedures, which eases algorithmic representation and computer coding. We have further explicitly derived the Hessian matrix of the MFA model with missing information; thereby, the asymptotic standard errors of the ML estimates can be directly obtained without having to resort to computationally intensive bootstrap methods (Efron and Tibshirani 1986). Experiments with real and synthetic examples reveal that the AMFA algorithm performs comparably to the two-stage methods, but the computational demands are much lower, particularly for data involving a higher proportion of missing outcomes.

The proposed one-stage approach is limited to fast learning of MFA models under a given number of components (g). Although the issue has been pointed out by many researchers, it remains an open question how to develop an automated algorithm for fast determination of the best pair (g, q) without incurring excessive computational cost. The BIC is adopted to choose the most plausible q because of its consistency in model selection. However, other criteria such as the integrated completed likelihood (ICL; Biernacki et al. 2000) or the approximate weight of evidence (AWE; Banfield and Raftery 1993) can also be used and might perform better than BIC in discovering the true numbers of factors or clusters. Nevertheless, all these criteria suffer from over-penalization in dealing with incomplete data when the rate of missingness gets large (Ibrahim et al. 2008). It would be a worthwhile future task to develop an improved procedure that takes the aforementioned issues into account. In the formulation of MFA, the number of local factors per component is assumed to be equal to guarantee global structural identifiability. However, such a restriction might cause an overlearning effect when the number of components (g) or factors (q) increases. To remedy this deficiency, an extension of our current approach that allows for automatic determination of varying dimensions of local factors \(\{q_i\}_{i=1}^g\) deserves further attention. Finally, it is of interest to extend the current approach to the mixture of t factor analyzers (McLachlan et al. 2007; Wang and Lin 2013) for modeling, in a more efficient manner, incomplete high-dimensional data that have heavy-tailed behavior.

References

Banfield JD, Raftery AE (1993) Model-based Gaussian and non-Gaussian clustering. Biometrics 49:803–821

Basford KE, Greenway DR, McLachlan GJ, Peel D (1997) Standard errors of fitted means under normal mixture models. Comput Stat 12:1–17

Biernacki C, Celeux G, Govaert G (2000) Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans Pattern Anal Mach Intell 22:719–725

Boldea O, Magnus JR (2009) Maximum likelihood estimation of the multivariate normal mixture model. J Am Stat Assoc 104:1539–1549

Cramér H (1946) Mathematical methods of statistics. Princeton University Press, Princeton

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm (with discussion). J Roy Stat Soc B 39:1–38

Efron B, Tibshirani R (1986) Bootstrap methods for standard errors, confidence intervals, and other measures of statistical accuracy. Stat Sci 1:54–75

Efron B, Tibshirani R (1993) An introduction to the bootstrap. Chapman & Hall, London

Fraley C, Raftery AE (1998) How many clusters? Which clustering method? Answers via model-based cluster analysis. Comput J 41:578–588

Fraley C, Raftery AE (2002) Model-based clustering, discriminant analysis, and density estimation. J Am Stat Assoc 97:611–631

Frank A, Asuncion A (2010) UCI machine learning repository. School of Information and Computer Science, University of California, Irvine, CA. http://archive.ics.uci.edu/ml

Ghahramani Z, Beal MJ (2000) Variational inference for Bayesian mixture of factor analysers. In: Solla S, Leen T, Muller K-R (eds) Advances in neural information processing systems 12. MIT Press, Cambridge, pp 449–455

Ghahramani Z, Jordan MI (1994) Supervised learning from incomplete data via an EM approach. In: Cowan JD, Tesauro G, Alspector J (eds) Advances in neural information processing systems, vol 6. Morgan Kaufmann, San Francisco, pp 120–127

Ghahramani Z, Hinton GE (1997) The EM algorithm for mixtures of factor analyzers. Technical report no. CRG-TR-96-1, University of Toronto

Golub GH, Van Loan CF (1989) Matrix computations, 2nd edn. Johns Hopkins University Press, Baltimore, MD

Ibrahim JG, Zhu H, Tang N (2008) Model selection criteria for missing data problems via the EM algorithm. J Am Stat Assoc 103:1648–1658

Keribin C (2000) Consistent estimation of the order of mixture models. Sankhya 62:49–66

Lattin J, Carroll JD, Green PE (2003) Analyzing multivariate data. Brooks/Cole, Pacific Grove, CA

Ledermann W (1937) On the rank of the reduced correlational matrix in multiple-factor analysis. Psychometrika 2:85–93

Lin TI, Lee JC, Ho HJ (2006) On fast supervised learning for normal mixture models with missing information. Pattern Recogn 39:1177–1187

Little RJA, Rubin DB (2002) Statistical analysis with missing data, 2nd edn. Wiley, New York

McLachlan GJ, Peel D (2000) Finite mixture models. Wiley, New York

McLachlan GJ, Bean RW, Peel D (2002) A mixture model-based approach to the clustering of microarray expression data. Bioinformatics 18:413–422

McLachlan GJ, Peel D, Bean RW (2003) Modelling high-dimensional data by mixtures of factor analyzers. Comput Stat Data Anal 41:379–388

McLachlan GJ, Bean RW, Jones LBT (2007) Extension of the mixture of factor analyzers model to incorporate the multivariate \(t\)-distribution. Comput Stat Data Anal 51:5327–5338

Meng XL, van Dyk D (1997) The EM algorithm—an old folk-song sung to a fast new tune. J Roy Stat Soc B 59:511–567

Meng XL, Rubin DB (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80:267–278

Montanari A, Viroli C (2011) Maximum likelihood estimation of mixtures of factor analyzers. Comput Stat Data Anal 55:2712–2723

Redner RA, Walker HF (1984) Mixture densities, maximum likelihood and the EM algorithm. SIAM Rev 26:195–239

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Stephens M (2000) Dealing with label switching in mixture models. J Roy Stat Soc B 62:795–809

Ueda N, Nakano R, Ghahramani Z, Hinton GE (2000) SMEM algorithm for mixture models. Neural Comput 12:2109–2128

Wang WL, Lin TI (2013) An efficient ECM algorithm for maximum likelihood estimation in mixtures of \(t\)-factor analyzers. Comput Stat 28:751–769

Wang WL, Lin TI (2015) Robust model-based clustering via mixtures of skew-\(t\) distributions with missing information. Adv Data Anal Classif 9:423–445

Zhang K, Fan W (2008) Forecasting skewed biased stochastic ozone days: analyses, solutions and beyond. Knowl Inf Syst 14:299–326

Zhao JH, Shi L (2014) Automated learning of factor analysis with complete and incomplete data. Comput Stat Data Anal 72:205–218

Zhao JH, Yu PLH (2008) Fast ML estimation for the mixture of factor analyzers via an ECM algorithm. IEEE Trans Neural Netw 19:1956–1961

Zhao JH, Yu PLH, Jiang Q (2008) ML estimation for factor analysis: EM or non-EM? Stat Comput 18:109–123

Acknowledgements

The authors gratefully acknowledge the editors and two anonymous referees for their comments and suggestions that greatly improved the quality of this paper. We are also grateful to Ms. Ying-Ting Lin for her assistance in initial simulations. This research was supported by the Ministry of Science and Technology of Taiwan under Grant Nos. 107-2628-M-035-001-MY3 and 107-2118-M-005-002-MY2.

Cite this article

Wang, WL., Lin, TI. Automated learning of mixtures of factor analysis models with missing information. TEST 29, 1098–1124 (2020). https://doi.org/10.1007/s11749-020-00702-6

Keywords

- Automated learning

- Factor analysis

- Maximum likelihood estimation

- Missing values

- Model selection

- One-stage algorithm