Abstract

In this paper, a conjugate gradient optimized regularized extreme learning machine (CG-RELM) is investigated to estimate the state of charge (SOC) of lithium batteries. By solving for the output weights of the RELM iteratively, the CG algorithm avoids the matrix inversion required by the least squares algorithm. In addition, the weight adjustment directions generated by the CG algorithm at different iterations are mutually conjugate, which gives the CG-RELM algorithm a high convergence speed. The simulation results verify that the investigated algorithm can effectively estimate the battery SOC on the dynamic stress test (DST) data set. The CG-RELM converges faster than the BP neural network, achieves higher estimation accuracy as the number of hidden layer neurons increases, and remains robust when applied to a data set with noise.


Introduction

With the development of power generation and energy storage technology, electric vehicles (EVs) have become a new direction for the automotive industry. Lithium batteries are ideal power sources for EVs due to their excellent performance [1, 2]. An essential link between batteries and EVs is the battery management system (BMS) [3, 4]. However, the most important parameter in the BMS, the state of charge (SOC), cannot be obtained by direct measurement [5]. Therefore, accurately estimating the SOC of lithium batteries has become a very important issue.

The SOC estimation methods

A number of SOC estimation methods have been proposed by researchers, which can be divided into the following categories.

1) The traditional methods

The traditional methods include the Coulomb counting (CC) method [6] and the open-circuit voltage (OCV) method [7]. The CC method obtains the SOC by integrating the battery current over time during charging or discharging. However, its estimation performance depends on an exact initial SOC and a highly accurate current sensor. Without them, errors accumulate over time and distort the final estimation result [7]. The OCV method obtains the SOC by looking it up on the SOC-OCV curve. However, the battery must rest in an open-circuit state for a long time before its OCV can be measured, so the method cannot meet the requirements of real-time estimation [8]. Furthermore, if the SOC-OCV curve obtained in advance is not accurate enough, the estimation performance of the OCV method degrades.

2) The Kalman filter-based methods

The Kalman filter-based methods estimate the battery SOC by combining an electrical circuit model (ECM) [9, 10] with an appropriate Kalman filter (KF), e.g., the extended Kalman filter (EKF) [11,12,13], the unscented Kalman filter (UKF) [14], the dual Kalman filter (DKF) [15], and the cubature Kalman filter (CKF) [16, 17], as well as other filters [18,19,20]. These strategies typically require accurate battery models to perform SOC estimation under varying conditions. In practice, the battery model contains a large number of parameters that are difficult to identify. In addition, the estimation results of the Kalman filter-based methods are accurate only when the noise is zero-mean Gaussian [21]. However, in a wide variety of applications, the noise is non-Gaussian.

3) The neural network-based methods

The neural network is an efficient recognition algorithm widely used in fault location [22, 23], classification [24], pattern recognition [25], and other fields. When applied to SOC estimation, the neural network-based methods infer the relationship between the SOC and the battery current, voltage, and other variables from a large amount of training data [26, 27]. These methods optimize the weights and biases through iterative training. The commonly used optimization algorithm is the gradient descent (GD) algorithm [28, 29]. However, during training with the GD algorithm, when the weights of some layers in the neural network change significantly, the weights of other layers usually remain almost unchanged and only change during further iterations. In other words, the GD algorithm is inherently unstable, so it takes a long time to update all the weights and biases of the network to an ideal state.
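Of the methods above, Coulomb counting is the simplest to state precisely, and a short sketch makes its sensitivity to the initial SOC concrete. The function name `coulomb_count`, the sign convention (discharge current positive), and the clamping to [0, 1] are assumptions of this illustration; the 2.9 Ah capacity matches the NCR18650PF cell used later in the experiments.

```python
def coulomb_count(soc0, currents_a, dt_s, capacity_ah):
    """Track SOC by integrating current over time.

    Assumed convention for this sketch: discharge current is positive,
    SOC is a fraction in [0, 1], and samples are dt_s seconds apart.
    """
    capacity_as = capacity_ah * 3600.0  # ampere-hours -> ampere-seconds
    soc = soc0
    trace = [soc]
    for current in currents_a:
        soc -= current * dt_s / capacity_as   # charge removed in this step
        soc = min(1.0, max(0.0, soc))         # keep SOC in the valid range
        trace.append(soc)
    return trace


# Half an hour at 2.9 A on a 2.9 Ah cell removes half of the capacity.
exact = coulomb_count(1.0, [2.9] * 1800, dt_s=1.0, capacity_ah=2.9)
# A wrong initial SOC is never corrected: the 0.1 offset persists to the end.
biased = coulomb_count(0.9, [2.9] * 1800, dt_s=1.0, capacity_ah=2.9)
```

Because the method only accumulates increments, any error in `soc0` or in the measured current is carried through every later sample, which is the cumulative-error problem noted for the CC method.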

The extreme learning machine

The extreme learning machine (ELM) was proposed by Huang et al. in 2004 [30]. It is a special single-hidden layer feedforward neural network (SLFN). In an ELM, the input weights and hidden layer biases are set randomly and remain unchanged during the training process. The output weights are calculated with the batch least squares algorithm [31]. Compared with a neural network trained by the GD algorithm, the ELM has simpler training steps and therefore trains faster. However, a potential disadvantage of the ELM is that its learning accuracy is improved by increasing the number of neurons in the hidden layer [32]. When dealing with complex problems, the ELM often becomes too large because of the numerous neurons; over-fitting may then occur, which reduces the generalization ability of the algorithm [33].

Huang et al. introduced a regularization term into the objective function of the standard ELM and obtained the regularized extreme learning machine (RELM) [34]. Correspondingly, when the RELM is trained with the least squares algorithm, the regularization coefficient enters the Moore-Penrose generalized inverse of the hidden layer output matrix. In this way, both the empirical risk and the structural risk of the model are reduced. Therefore, the generalization ability of the model is improved, and over-fitting is effectively prevented.

Owing to the excellent learning performance of the RELM, many RELM-based methods have been proposed and widely used in various fields. For example, an online sequential regularized extreme learning machine (OS-RELM) was proposed by Cosmo et al. for single image super resolution and achieved comparable or better results than some state-of-the-art methods [35]. Gumaei et al. combined a hybrid feature extraction method with a RELM for brain tumor classification and obtained superior classification results on brain images [36]. Weng et al. applied a RELM based on the BIC criterion and a genetic algorithm to iron ore price forecasting [37].

However, training the standard ELM and its variants requires calculating an inverse matrix, which has a high computational complexity [38]. Furthermore, a large number of intermediate matrices need to be stored while the inverse matrix is calculated, which occupies a large amount of computer memory.

Contributions

In this paper, a conjugate gradient optimized regularized extreme learning machine (CG-RELM) is proposed to estimate battery SOC. The main contributions of this study are as follows:

1) For a lithium battery, a CG-RELM model is established for SOC estimation with the measured voltage and current as the inputs, and the estimated SOC as the output of the model.

2) In the CG-RELM, the conjugate gradient (CG) algorithm is used to calculate the output weights. This not only avoids calculating the inverse matrix but also guarantees the high convergence speed of the algorithm.

3) In simulation, the dynamic stress test (DST) data set is used to train the CG-RELM, and the simulation results verify that the proposed algorithm can effectively estimate the battery SOC.

This paper is organized as follows. Section 2 illustrates the principle of the RELM. Section 3 introduces the principle of the CG algorithm. Section 4 investigates the CG algorithm optimized RELM for battery SOC estimation. Section 5 describes the experimental platform, performs the simulation, and analyzes the results of the CG-RELM model. The conclusion is given in Section 6.

The regularized extreme learning machine

The regularized extreme learning machine (RELM) is a kind of feedforward neural network with a single-hidden layer.

Notations:

- M, L, and N indicate the number of neurons in the input layer, the hidden layer, and the output layer, respectively.

- X and Y indicate the input matrix and the target output matrix of the network, respectively.

- O indicates the output matrix of the network.

- σ(·) indicates the Sigmoid activation function of the hidden layer.

- W1, b, and W2 indicate the input weight matrix, the bias vector, and the output weight matrix of the network.

Based on the above notation explanations, X and Y can be expressed as follows:

where S indicates the sample size.

W1, b, and W2 can be expressed as follows:

Then the output matrix of the hidden layer can be calculated as:

Finally, the output matrix of the network can be obtained:
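For reference, the quantities named above can be written out in one consistent layout. The sketch below assumes one sample per row, a choice made here so that the dimensions agree with the expression Η+ = (ΗTΗ)−1ΗT used for the output weights; the per-sample vectors \( {\boldsymbol{x}}_i \) and \( {\boldsymbol{y}}_i \) and the all-ones vector \( {\boldsymbol{1}}_S \) are symbols introduced only for this illustration.

\[
\begin{aligned}
\boldsymbol{X} &= {\left[{\boldsymbol{x}}_1,{\boldsymbol{x}}_2,\cdots, {\boldsymbol{x}}_S\right]}^{\mathrm{T}}\in {\mathbb{R}}^{S\times M}, &
\boldsymbol{Y} &= {\left[{\boldsymbol{y}}_1,{\boldsymbol{y}}_2,\cdots, {\boldsymbol{y}}_S\right]}^{\mathrm{T}}\in {\mathbb{R}}^{S\times N},\\
{\boldsymbol{W}}_1 &\in {\mathbb{R}}^{M\times L},\quad \boldsymbol{b}\in {\mathbb{R}}^{L}, &
{\boldsymbol{W}}_2 &\in {\mathbb{R}}^{L\times N},\\
\boldsymbol{H} &= \sigma \left(\boldsymbol{X}{\boldsymbol{W}}_1+{\boldsymbol{1}}_S{\boldsymbol{b}}^{\mathrm{T}}\right)\in {\mathbb{R}}^{S\times L}, &
\boldsymbol{O} &= \boldsymbol{H}{\boldsymbol{W}}_2\in {\mathbb{R}}^{S\times N}.
\end{aligned}
\]

With this layout, \( {\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H} \) is an L × L matrix, so its size grows with the number of hidden layer neurons rather than with the sample size.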

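According to the RELM algorithm, the input weight matrix (W1) and bias vector (b) are set randomly and stay unchanged during the training process. Therefore, the task of the RELM is to find an estimate \( {\boldsymbol{W}}_{2,\ast } \) that minimizes the error between the output (O) and the target output (Y) of the network, which can be expressed as:

\[ {\boldsymbol{W}}_{2,\ast }=\arg \underset{{\boldsymbol{W}}_2}{\min}\left\Vert \boldsymbol{H}{\boldsymbol{W}}_2-\boldsymbol{Y}\right\Vert \]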

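The traditional ELM algorithm calculates \( {\boldsymbol{W}}_{2,\ast } \) by using the batch least squares algorithm, as shown below:

\[ {\boldsymbol{W}}_{2,\ast }={\boldsymbol{H}}^{+}\boldsymbol{Y}\qquad (4) \]

where \( {\boldsymbol{H}}^{+}={\left({\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H}\right)}^{-1}{\boldsymbol{H}}^{\mathrm{T}} \) indicates the Moore-Penrose generalized inverse of H.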

However, when dealing with complex problems, the number of hidden layer neurons of the ELM is usually set very large in order to meet the accuracy requirement. This may lead to over-fitting, thereby reducing the generalization ability of the network. Moreover, when H does not have full column rank, \( {\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H} \) is singular (its determinant is 0), so its inverse does not exist and \( {\boldsymbol{W}}_{2,\ast } \) cannot be calculated according to (4).

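To solve the above problems, the RELM adds a positive constant λ (called the regularization coefficient) to each element on the diagonal of \( {\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H} \). Then, \( {\boldsymbol{W}}_{2,\ast } \) can be calculated as follows:

\[ {\boldsymbol{W}}_{2,\ast }={\left({\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H}+\lambda \boldsymbol{I}\right)}^{-1}{\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{Y}\qquad (5) \]

where I is the identity matrix of the same size as \( {\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H} \).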
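The regularized solution can be illustrated with a few lines of NumPy. This is a minimal sketch rather than the implementation used in the paper: the function names are hypothetical, samples are assumed to be stored one per row, and the linear system is solved directly instead of forming the inverse explicitly.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function used as the hidden layer activation."""
    return 1.0 / (1.0 + np.exp(-z))


def hidden_output(X, W1, b):
    """Hidden layer output H for inputs X (assumed layout: one sample per row)."""
    return sigmoid(X @ W1 + b)


def relm_output_weights(H, Y, lam):
    """Regularized least squares output weights (H^T H + lam I)^{-1} H^T Y."""
    n_hidden = H.shape[1]
    # Adding lam > 0 to the diagonal makes the matrix invertible even when
    # H does not have full column rank.
    return np.linalg.solve(H.T @ H + lam * np.eye(n_hidden), H.T @ Y)
```

The direct solve still costs on the order of L³ operations for L hidden neurons, which is the computational load that motivates replacing it with an iterative method.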

The conjugate gradient algorithm

The related work

Definition 3.1

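An n × n matrix A is positive definite if \( {\boldsymbol{z}}^{\mathrm{T}}\boldsymbol{Az}>0 \) for every n-dimensional column vector z ≠ 0, where \( {\boldsymbol{z}}^{\mathrm{T}} \) is the transpose of vector z.

Definition 3.2

An n × n matrix A is positive semi-definite if \( {\boldsymbol{z}}^{\mathrm{T}}\boldsymbol{Az}\ge 0 \) for every n-dimensional column vector z ≠ 0, where \( {\boldsymbol{z}}^{\mathrm{T}} \) is the transpose of vector z.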

Definition 3.3

Two vectors, r1 and r2, are conjugate with respect to A (called A-conjugate) if \( {\boldsymbol{r}}_1^{\mathrm{T}}{\boldsymbol{Ar}}_2=0 \), where A is an n × n symmetric positive definite matrix and r1, r2 ∈ ℝn.

Also, r1, r2, ⋯, rk ∈ ℝn are a set of conjugate directions of A if \( {\boldsymbol{r}}_i^{\mathrm{T}}{\boldsymbol{Ar}}_j=0 \) for i ≠ j, i, j = 1, 2, …, k.

Remark 3.1

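The notion of conjugacy is a generalization of orthogonality. When A is the identity matrix I, the condition \( {\boldsymbol{r}}_1^{\mathrm{T}}{\boldsymbol{Ar}}_2=0 \) reduces to \( {\boldsymbol{r}}_1^{\mathrm{T}}{\boldsymbol{r}}_2=0 \), so A-conjugate vectors are simply orthogonal vectors.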

Theorem 3.1

Suppose there is a linear system Ax = B, where A is the coefficient matrix, x is the solution vector, and B is the constant vector. If A is a symmetric positive definite matrix, then the solution of Ax = B is also the minimal point (denoted as x*) of the function f(x) shown below:
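In standard form, the quadratic function associated with Ax = B reads as follows (a sketch; the additive constant c is an assumption of this illustration and does not affect the minimal point):

\[ f\left(\boldsymbol{x}\right)=\frac{1}{2}{\boldsymbol{x}}^{\mathrm{T}}\boldsymbol{Ax}-{\boldsymbol{B}}^{\mathrm{T}}\boldsymbol{x}+c \]

Because A is symmetric positive definite, f(x) is strictly convex, so its only stationary point is its minimal point.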

Theorem 3.2

Suppose r1, r2, ⋯, rn ∈ ℝn are a set of A-conjugate vectors, where A is an n × n symmetric positive definite matrix. Taking any x1 ∈ ℝn as the initial point, a series of new points can be obtained by performing one-dimensional searches along the directions r1, r2, ⋯, rn in turn. The minimal point of the function f(x) in Theorem 3.1 is then reached in at most n iterations. This procedure is called the conjugate direction algorithm.

According to Theorems 3.1–3.2, if there is a set of A-conjugate direction vectors r1, r2, ⋯, rn ∈ ℝn, the solution of Ax = B can be obtained by the conjugate direction algorithm.

The principle of the conjugate gradient algorithm

The conjugate gradient (CG) algorithm [39], a kind of conjugate direction algorithm, generates A-conjugate direction vectors by using the gradient vectors of f(x). Then, the minimal point of f(x) can be calculated, which is also the solution of the linear equation Ax = B.

Remark 3.2

The gradient of the function f(x) can be obtained by calculating the partial derivative of f(x) with respect to x.

According to Theorem 3.1 and Remark 3.2, we have:

According to the CG algorithm, the direction vector at the kth iteration (rk) is obtained from that at the previous iteration (rk-1) and the gradient vector at the current iteration (gk), as shown below:

where βk is the correction coefficient.

According to Definition 3.3 and (8), βk in the CG algorithm needs to satisfy:

Then, we have the calculation equation of βk:

Definition 3.4

Define the learning rate at the kth iteration as αk. Then, the parameter updating equation of the CG algorithm is:

Definition 3.5

Define ek = x∗ − xk as the error vector at the kth iteration, and let rk-1 be the direction vector at the previous iteration. An exact one-dimensional search requires that ek and rk-1 be conjugate with respect to A; that is, \( {\boldsymbol{r}}_{k-1}^{\mathrm{T}}{\boldsymbol{Ae}}_k=0 \).

Then, we can get:

Further, the calculation of αk can be obtained:

Substituting (6) and (7) into (13), the simplified expression of αk can be obtained as follows:
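Collected in one place, the relations of this subsection read as follows. The block is a sketch derived from Definitions 3.3–3.5 under the conventions stated in the text (the direction is built from the negative gradient, and ek = x∗ − xk); it is a reconstruction from those definitions, so equation numbers are omitted.

\[
\begin{aligned}
{\boldsymbol{g}}_k &= \nabla f\left({\boldsymbol{x}}_k\right)={\boldsymbol{Ax}}_k-\boldsymbol{B},\qquad {\boldsymbol{Ax}}_{\ast }=\boldsymbol{B}\ \Rightarrow\ {\boldsymbol{Ae}}_k=-{\boldsymbol{g}}_k,\\
{\boldsymbol{r}}_k &= -{\boldsymbol{g}}_k+{\beta}_k{\boldsymbol{r}}_{k-1},\\
{\boldsymbol{r}}_{k-1}^{\mathrm{T}}{\boldsymbol{Ar}}_k=0\ &\Rightarrow\ {\beta}_k=\frac{{\boldsymbol{g}}_k^{\mathrm{T}}{\boldsymbol{Ar}}_{k-1}}{{\boldsymbol{r}}_{k-1}^{\mathrm{T}}{\boldsymbol{Ar}}_{k-1}},\\
{\boldsymbol{x}}_{k+1} &= {\boldsymbol{x}}_k+{\alpha}_k{\boldsymbol{r}}_k\ \Rightarrow\ {\boldsymbol{e}}_{k+1}={\boldsymbol{e}}_k-{\alpha}_k{\boldsymbol{r}}_k,\\
{\boldsymbol{r}}_k^{\mathrm{T}}{\boldsymbol{Ae}}_{k+1}=0\ &\Rightarrow\ {\alpha}_k=\frac{{\boldsymbol{r}}_k^{\mathrm{T}}{\boldsymbol{Ae}}_k}{{\boldsymbol{r}}_k^{\mathrm{T}}{\boldsymbol{Ar}}_k}=-\frac{{\boldsymbol{r}}_k^{\mathrm{T}}{\boldsymbol{g}}_k}{{\boldsymbol{r}}_k^{\mathrm{T}}{\boldsymbol{Ar}}_k}.
\end{aligned}
\]

The last form of αk needs only the current gradient and direction, so the unknown solution x∗ never has to be evaluated.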

The expression of βk in (10) can also be simplified, and there are many equations for simplification, such as the Polak-Ribière-Polyak (PRP) equation [40], the Fletcher-Reeves (FR) equation [41], the conjugate descent (CD) equation [42], the Liu-Storey (LS) equation [43], and the Dai-Yuan (DY) equation [44].

In this paper, the FR equation is selected. The advantage of the FR equation is that only the gradient vectors are used when calculating βk, and the coefficient matrix and the direction vectors are not needed.

The derivation of the FR equation is as follows:

According to Definition 3.5 and (11), when i < j, the error vector at the jth iteration can be expressed as follows:

Then, according to (6), (7), and (15), we have:

According to Definition 3.3 and Definition 3.5, we have: \( {\boldsymbol{r}}_i^{\mathrm{T}}{\boldsymbol{Ae}}_{i+1}=0 \) and \( {\boldsymbol{r}}_i^{\mathrm{T}}{\boldsymbol{Ar}}_k=0,k=i+1,\cdots, j-1 \). Then, we get:

Substituting (11) into (6) gives:

Then, we have:

Substituting (19) into (10) gives:

According to (8) and (17), we have:

Then, we get:

Therefore, we have:

Substituting (21)–(23) into (20), we obtain the FR equation:
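In its standard form, the FR equation and the orthogonality relations it rests on can be sketched as follows. This is the textbook derivation written in the notation of this section, under the assumption that the direction is rk = −gk + βkrk-1 and the step is obtained by exact one-dimensional search; it is not a transcription of the numbered steps.

\[
\begin{aligned}
{\boldsymbol{g}}_k &= {\boldsymbol{g}}_{k-1}+{\alpha}_{k-1}{\boldsymbol{Ar}}_{k-1}\ \Rightarrow\ {\boldsymbol{Ar}}_{k-1}=\frac{{\boldsymbol{g}}_k-{\boldsymbol{g}}_{k-1}}{{\alpha}_{k-1}},\\
{\boldsymbol{g}}_k^{\mathrm{T}}{\boldsymbol{r}}_{k-1} &= 0,\qquad {\boldsymbol{g}}_k^{\mathrm{T}}{\boldsymbol{g}}_{k-1}=0,\qquad {\boldsymbol{r}}_{k-1}^{\mathrm{T}}{\boldsymbol{g}}_{k-1}=-{\boldsymbol{g}}_{k-1}^{\mathrm{T}}{\boldsymbol{g}}_{k-1},\\
{\beta}_k &= \frac{{\boldsymbol{g}}_k^{\mathrm{T}}{\boldsymbol{Ar}}_{k-1}}{{\boldsymbol{r}}_{k-1}^{\mathrm{T}}{\boldsymbol{Ar}}_{k-1}}=\frac{{\boldsymbol{g}}_k^{\mathrm{T}}\left({\boldsymbol{g}}_k-{\boldsymbol{g}}_{k-1}\right)}{{\boldsymbol{r}}_{k-1}^{\mathrm{T}}\left({\boldsymbol{g}}_k-{\boldsymbol{g}}_{k-1}\right)}=\frac{{\boldsymbol{g}}_k^{\mathrm{T}}{\boldsymbol{g}}_k}{{\boldsymbol{g}}_{k-1}^{\mathrm{T}}{\boldsymbol{g}}_{k-1}}.
\end{aligned}
\]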

Choose the opposite gradient direction as the initial direction. Then, the CG algorithm can generate a set of A-conjugate direction vectors r1, r2, ⋯, rn ∈ ℝn according to the following equation:
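The recursion can be sketched in code: start from the negative gradient, then build each new direction from the new gradient and the previous direction with the FR coefficient. The function name `conjugate_gradient` and its parameters are hypothetical, and the stopping tolerance is an addition of this illustration; A is assumed to be symmetric positive definite.

```python
import numpy as np


def conjugate_gradient(A, B, x0=None, tol=1e-10, max_iter=None):
    """Solve A x = B for symmetric positive definite A (Fletcher-Reeves CG)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    n = B.shape[0]
    x = np.zeros(n) if x0 is None else np.asarray(x0, dtype=float).copy()
    # In exact arithmetic at most n iterations are needed (Theorem 3.2).
    max_iter = n if max_iter is None else max_iter

    g = A @ x - B   # gradient of f(x) = 0.5 x^T A x - B^T x
    r = -g          # initial direction: opposite gradient
    for _ in range(max_iter):
        if np.linalg.norm(g) <= tol:
            break
        Ar = A @ r
        alpha = -(r @ g) / (r @ Ar)        # exact one-dimensional search
        x = x + alpha * r
        g_new = g + alpha * Ar             # equals A @ x - B for the new x
        beta = (g_new @ g_new) / (g @ g)   # FR coefficient: gradients only
        r = -g_new + beta * r              # next A-conjugate direction
        g = g_new
    return x


# A 2 x 2 system is solved in two iterations.
x_star = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
```

Each iteration needs one matrix-vector product and a few inner products, and no inverse or factorization of A is ever formed.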

The CG-RELM algorithm for SOC estimation

The conjugate gradient optimized regularized extreme learning machine (CG-RELM) is used for battery SOC estimation by taking the measured voltage Vi and current Ii (i=1,2,...,S) as inputs and the estimated SOCi as the output. Therefore, the number of neurons in the input layer and the output layer is 2 and 1, respectively, and we have:

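Let \( \boldsymbol{\varPsi}={\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H}+\lambda \boldsymbol{I} \) and \( \boldsymbol{T}={\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{Y} \); then, Eq. (5) can be transformed into:

\[ \boldsymbol{\varPsi}{\boldsymbol{W}}_{2,\ast }=\boldsymbol{T} \]

Because \( {\boldsymbol{H}}^{\mathrm{T}}\boldsymbol{H} \) is symmetric positive semi-definite (Definition 3.2) and λ > 0, Ψ is a symmetric positive definite matrix (Definition 3.1). The linear equation \( \boldsymbol{\varPsi}{\boldsymbol{W}}_{2,\ast }=\boldsymbol{T} \) therefore has the same form as Ax = B. Thus, according to Theorems 3.1–3.2, its solution can be obtained by applying the CG algorithm with Ψ, \( {\boldsymbol{W}}_{2,\ast } \), and T in place of A, x, and B.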

The training steps of the CG-RELM algorithm are shown in Fig. 1.
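The training steps can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the random input weights and biases are drawn uniformly from [−1, 1], samples are stored one per row, and the output weights start from zero.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_cg_relm(X, y, n_hidden, lam, seed=0, tol=1e-10, max_iter=None):
    """Train a single-output RELM whose output weights are found by CG."""
    rng = np.random.default_rng(seed)
    # Random input weights and biases, fixed for the whole training process.
    W1 = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    H = sigmoid(X @ W1 + b)

    Psi = H.T @ H + lam * np.eye(n_hidden)  # symmetric positive definite
    T = H.T @ y

    w2 = np.zeros(n_hidden)
    g = Psi @ w2 - T                        # gradient at the initial weights
    r = -g                                  # initial direction
    # n_hidden iterations suffice in exact arithmetic; floating-point
    # round-off can require more, so max_iter is left adjustable.
    max_iter = n_hidden if max_iter is None else max_iter
    for _ in range(max_iter):
        if np.linalg.norm(g) <= tol:
            break
        Pr = Psi @ r
        alpha = -(r @ g) / (r @ Pr)
        w2 = w2 + alpha * r
        g_new = g + alpha * Pr
        beta = (g_new @ g_new) / (g @ g)    # FR coefficient
        r = -g_new + beta * r
        g = g_new
    return W1, b, w2


def predict(X, W1, b, w2):
    """Estimated SOC for inputs X (columns assumed: voltage, current)."""
    return sigmoid(X @ W1 + b) @ w2
```

For SOC estimation, `X` would hold the preprocessed voltage and current samples as two columns and `y` the corresponding SOC values.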

Experiment and simulation

Data sampling and preprocessing

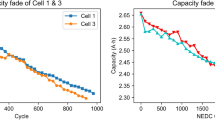

As shown in Fig. 2, the battery test platform consists of a battery tester (NEWARE CT-4008-5V12A-TB), a battery holder, NCR18650PF (2900 mAh) lithium batteries, and a computer. The battery specifications are listed in Table 1. Discharge tests are performed on this platform to sample data, which yields the dynamic stress test (DST) data set shown in Fig. 3.

First, we adopt the exponential moving average method to process the original voltage and current data sampled during the discharge process. The calculation formula is as follows:

where \( {y}_k^{\prime } \) represents the processed data, yk represents the original data, and η is a constant coefficient.

Then, we normalize the battery data to (−1, 1) to reduce the network calculation load and improve the training speed.
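The two preprocessing steps can be sketched as follows. Both functions are illustrations under assumptions: the smoothing convention (η weighting the previous smoothed value, with the first sample passed through unchanged) and the min-max form of the normalization are choices made here, and the function names are hypothetical.

```python
def exponential_moving_average(values, eta):
    """Smooth a sequence with an exponential moving average.

    Assumed convention: smoothed[k] = eta * smoothed[k-1] + (1 - eta) * values[k].
    """
    if not 0.0 <= eta < 1.0:
        raise ValueError("eta must lie in [0, 1)")
    smoothed = []
    previous = None
    for value in values:
        previous = value if previous is None else eta * previous + (1.0 - eta) * value
        smoothed.append(previous)
    return smoothed


def scale_to_unit_range(values):
    """Min-max scale a sequence so that its minimum maps to -1 and its maximum to 1."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # constant signal: no range to scale
    return [2.0 * (v - low) / (high - low) - 1.0 for v in values]


voltage = [4.1, 4.0, 3.7, 3.9, 3.6]
processed = scale_to_unit_range(exponential_moving_average(voltage, eta=0.5))
```

A larger η gives heavier smoothing at the cost of a slower response to genuine changes in the signal.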

Simulation

The mean squared error (MSE) is taken as the cost function, and the root mean squared error (RMSE), the mean absolute error (MAE), and the coefficient of determination (R²) are adopted to evaluate the estimation performance of the CG-RELM.
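For concreteness, the four metrics can be computed as in the sketch below, which uses their standard definitions; the function names are hypothetical.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


soc_true = [0.0, 0.5, 1.0]
soc_est = [0.1, 0.5, 0.9]
scores = (rmse(soc_true, soc_est), mae(soc_true, soc_est), r2(soc_true, soc_est))
```

Lower RMSE and MAE and an R² closer to 1 indicate a better estimate.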

Of all the processed data, 75% are randomly selected as the training set, and the rest form the test set.

(1) The number of hidden layer neurons (L) is set to 300, and the CG-RELM and the BP neural network (BP-NN) are each applied to estimate the SOC. The simulation results are shown in Fig. 4 and Table 2.

Table 2 The performance of different models

It can be seen that the CG-RELM has faster convergence speed and better estimation performance when compared with the BP-NN.

(2) The CG-RELM is applied with different numbers of hidden layer neurons (L = 200 and L = 600) to estimate the SOC. The simulation results are shown in Fig. 5 and Table 3.

It can be seen from the results that the CG-RELM has better estimation performance when L is larger.

(3) To test the robustness of the CG-RELM, noise is added to the original data, with a variance of 0.01 for the voltage data and 0.03 for the current data. The CG-RELM (L = 500) is then applied to the original and the noise-added data sets to estimate the SOC. The simulation results are shown in Fig. 6 and Table 4.

It can be seen from Fig. 6 and Table 4 that although the estimation error under the noise-added data has increased, the estimated SOCs are still within a satisfactory range.
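A noise-added data set of this kind can be produced as in the following sketch. The text specifies only the noise variances, so the zero-mean Gaussian distribution, the fixed seed, and the function name `add_gaussian_noise` are assumptions of this illustration.

```python
import math
import random


def add_gaussian_noise(values, variance, seed=0):
    """Return a copy of values with zero-mean Gaussian noise added (assumed noise model)."""
    rng = random.Random(seed)      # fixed seed keeps the experiment repeatable
    sigma = math.sqrt(variance)    # random.gauss takes a standard deviation
    return [v + rng.gauss(0.0, sigma) for v in values]


# Variances taken from the robustness test: 0.01 (voltage) and 0.03 (current).
voltage_noisy = add_gaussian_noise([3.7, 3.6, 3.8], variance=0.01, seed=1)
current_noisy = add_gaussian_noise([1.0, -0.5, 2.0], variance=0.03, seed=2)
```

Note that a variance of 0.01 corresponds to a standard deviation of 0.1, which is what the generator expects.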

Figures 4, 5, and 6 and Tables 2, 3, and 4 indicate that the CG-RELM has high accuracy and strong robustness.

Conclusion

A conjugate gradient optimized regularized extreme learning machine (CG-RELM) is investigated to estimate battery SOC. In this hybrid algorithm, the conjugate gradient (CG) algorithm is used to calculate the output weights of the regularized extreme learning machine (RELM), which is a special single-hidden layer feedforward neural network (SLFN) with fixed input weights and hidden layer biases. Solving for the output weights iteratively avoids the calculation load caused by the inverse matrix, and the weight adjustment directions generated by the CG algorithm at different iterations are mutually conjugate, which gives the CG-RELM a high convergence speed.

The simulation results verify that the CG-RELM can effectively estimate the battery SOC. (i) The convergence speed of the CG-RELM is faster when compared with the BP neural network. (ii) Increasing the number of hidden layer neurons can improve estimation precision. (iii) The algorithm shows high robustness when applied to the noise-added data set. This investigated method can also be applied to block-oriented systems with NN non-linear parts [45,46,47].

References

Zubi G, Dufo-Lopez R, Carvalho M, Pasaoglu G (2018) The lithium-ion battery: state of the art and future perspectives. Renewable and Sustainable Energy Reviews 89:292–308

Duan B, Li Z, Gu P, Zhou Z, Zhang C (2018) Evaluation of battery inconsistency based on information entropy. Journal of Energy Storage 16:160–166

Lu L, Han X, Li J, Hua J, Ouyang M (2013) A review on the key issues for lithium-ion battery management in electric vehicles. Journal of Power Sources 226:272–288

Liu K, Li K, Peng Q, Zhang C (2019) A brief review on key technologies in the battery management system of electric vehicles. Frontiers of Mechanical Engineering 14:47–64

Hong J, Wang Z, Chen W, Wang L, Qu C (2020) Online joint-prediction of multi-forward-step battery SOC using LSTM neural networks and multiple linear regression for real-world electric vehicles. Journal of Energy Storage 30: Art. no. 101459

Lipu M, Hannan M, Hussain A, Hoque M, Ker P, Saad M, Ayob A (2018) A review of state of health and remaining useful life estimation methods for lithium-ion battery in electric vehicles: challenges and recommendations. Journal of Cleaner Production 205:115–133

Li Z, Huang J, Liaw B, Zhang J (2017) On state-of-charge determination for lithium-ion batteries. Journal of Power Sources 348:281–301

Xiong R, Cao J, Yu Q, He H, Sun F (2018) Critical review on the battery state of charge estimation methods for electric vehicles. IEEE Access 6:1832–1843

He H, Xiong R, Fan J (2011) Evaluation of lithium-ion battery equivalent circuit models for state of charge estimation by an experimental approach. Energies 4(4):582–598

Duan B, Zhang Q, Geng F, Zhang C (2020) Remaining useful life prediction of lithium-ion battery based on extended Kalman particle filter. International Journal of Energy Research 44(3):1724–1734

Plett G (2004) Extended Kalman filtering for battery management systems of LiPB-based HEV battery packs: part 1. Background. Journal of Power Sources 134(2):252–261

Plett G (2004) Extended Kalman filtering for battery management systems of LiPB-based HEV battery packs: part 2. Modeling and identification. Journal of Power Sources 134(2):262–276

Plett G (2004) Extended Kalman filtering for battery management systems of LiPB-based HEV battery packs: part 3. State and parameter estimation. Journal of Power Sources 134(2):277–292

Sun F, Hu X, Zou Y, Li S (2011) Adaptive unscented Kalman filtering for state of charge estimation of a lithium-ion battery for electric vehicles. Energy 36(5):3531–3540

Li Y, Wang C, Gong J (2017) A multi-model probability SOC fusion estimation approach using an improved adaptive unscented Kalman filter technique. Energy 141:1402–1415

Zeng Z, Tian J, Li D, Tian Y (2018) An online state of charge estimation algorithm for lithium-ion batteries using an improved adaptive cubature Kalman filter. Energies 11(1): Art. no. 59

Cui X, Jing Z, Luo M, Guo Y, Qiao H (2018) A new method for state of charge estimation of lithium-ion batteries using square root cubature Kalman filter. Energies 11(1): Art. no. 209

Li W, Yang Y, Wang D, Yin S (2020) The multi-innovation extended Kalman filter algorithm for battery SOC estimation. Ionics 26(12):6145–6156

Chen J, Zhang Y, Zhu Q, Liu Y (2019) Aitken based modified Kalman filtering stochastic gradient algorithm for dual-rate nonlinear models. Journal of the Franklin Institute 356(8):4732–4746

Feng L, Ding J, Han Y (2020) Improved sliding mode based EKF for the SOC estimation of lithium-ion batteries. Ionics 26(3):2875–2288

Muhammad S, Rafique M, Li S, Shao Z, Wang Q, Guan N (2017) A robust algorithm for state-of-charge estimation with gain optimization. IEEE Transactions on Industrial Informatics 13(6):2983–2994

Karmacharya IM, Gokaraju R (2018) Fault location in ungrounded photovoltaic system using wavelets and ANN. IEEE Transactions on Power Delivery 33(2):549–559

Lan S, Chen M, Chen D (2019) A novel HVDC double-terminal non-synchronous fault location method based on convolutional neural network. IEEE Transactions on Power Delivery 34(3):848–857

Bagheri A, Gu IYH, Bollen MHJ, Balouji E (2018) A robust transform-domain deep convolutional network for voltage dip classification. IEEE Transactions on Power Delivery 33(6):2794–2802

Peng X, Yang F, Wang G, Wu Y, Li L, Li Z, Bhatti AA, Zhou C, Hepburn DM, Reid AJ, Judd MD, Siew WH (2019) A convolutional neural network-based deep learning methodology for recognition of partial discharge patterns from high-voltage cables. IEEE Transactions on Power Delivery 34(4):1460–1469

Chemali E, Kollmeyer P, Preindl M, Emadi A (2018) State-of-charge estimation of Li-ion batteries using deep neural networks: a machine learning approach. Journal of Power Sources 400:242–255

Jiao M, Wang D, Qiu J (2020) A GRU-RNN based momentum optimized algorithm for SOC estimation. Journal of Power Sources 459: Art. no. 228051.

Nakama T (2009) Theoretical analysis of batch and on-line training for gradient descent learning in neural networks. Neurocomputing 34(1-3):151–159

Gan M, Guan Y, Chen G, Chen C (2020) Recursive variable projection algorithm for a class of separable nonlinear models. IEEE Transactions on Neural Networks and Learning Systems (in press). https://doi.org/10.1109/TNNLS.2020.3026482

Huang G, Zhu Q, Siew C (2004) Extreme learning machine: a new learning scheme of feedforward neural networks. IEEE International Joint Conference on Neural Networks 1-4:985–990

Huang G, Zhu Q, Siew C (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Huang G, Li M, Chen L, Siew C (2008) Incremental extreme learning machine with fully complex hidden nodes. Neurocomputing 71(4-6):576–583

Luo X, Chang X, Ban X (2016) Regression and classification using extreme learning machine based on L-1-norm and L-2-norm. Neurocomputing 174:179–186

Huang G (2015) What are extreme learning machines? Filling the gap between Frank Rosenblatt’s dream and John von Neumann’s puzzle. Cognitive Computation 7(3):263–278

Cosmo D, Salles E (2019) Multiple sequential regularized extreme learning machines for single image super resolution. IEEE Signal Processing Letters 26(3):440–444

Gumaei A, Hassan M, Hassan M, Alelaiwi A, Fortino F (2019) A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification. IEEE Access 7:36266–36273

Weng F, Hou M, Zhang T, Yang Y, Wang Z, Sun H, Zhu H, Luo J (2018) Application of regularized extreme learning machine based on BIC criterion and genetic algorithm in iron ore price forecasting, 3rd International Conference on Modelling, Simulation and Applied Mathematics (MSAM), Shanghai, China, Jul 22-23, pp. 212-217

Li S, You Z, Guo H, Luo X, Zhao Z (2016) Inverse-free extreme learning machine with optimal information updating. IEEE Transactions on Cybernetics 46(5):1229–1241

Hestenes MR, Stiefel EL (1952) Methods of conjugate gradients for solving linear systems. Journal of Research of the National Bureau of Standards 49(6):409–436

Polyak BT (1969) The conjugate gradient method in extremal problems. USSR Computational Mathematics and Mathematical Physics 9(4):94–112

Fletcher R, Reeves CM (1964) Function minimization by conjugate gradients. The Computer Journal 7(2):149–154

Li M, Li D A modified conjugate-descent method and its global convergence. Pacific Journal of Optimization 8(2):247–259

Shi Z, Shen J (2007) Convergence of Liu-Storey conjugate gradient method. European Journal of Operational Research 182(2):552–560

Dai Y, Yuan Y (1999) A nonlinear conjugate gradient method with a strong global convergence property. SIAM Journal on Optimization 10(1):177–182

Wang D, Zhang S, Gan M, Qiu J (2020) A novel EM identification method for Hammerstein systems with missing output data. IEEE Transactions on Industrial Informatics 16(4):2500–2508

Wang D, Li L, Ji Y, Yan Y (2018) Model recovery for Hammerstein systems using the auxiliary model based orthogonal matching pursuit method. Applied Mathematical Modelling 54:537–550

Wang D, Yan Y, Liu Y, Ding J (2019) Model recovery for Hammerstein systems using the hierarchical orthogonal matching pursuit method. Journal of Computational and Applied Mathematics 345:135–145

Funding

This work was supported by the National Natural Science Foundation of China under Grant No. 61873138.


Cite this article

Jiao, M., Yang, Y., Wang, D. et al. The conjugate gradient optimized regularized extreme learning machine for estimating state of charge. Ionics 27, 4839–4848 (2021). https://doi.org/10.1007/s11581-021-04169-9


DOI: https://doi.org/10.1007/s11581-021-04169-9