Abstract

Autism Spectrum Disorder (ASD) is a neurodevelopmental disorder in which changes in brain connectivity are associated with autistic-like traits in some individuals. First-degree relatives of children with autism may show mild deficits in social interaction. The present study investigates electroencephalography (EEG) brain connectivity patterns of fathers who have children with autism while they perform a facial emotion labeling task. Fifteen biological fathers of children with a diagnosis of autism (Test Group) and fifteen fathers of neurotypical children with no personal or family history of autism (Control Group) participated in this study. The facial emotion labeling task was evaluated using a set of photos consisting of six categories (mild and extreme: anger, happiness, and sadness). The group Independent Component Analysis method was applied to the EEG data to extract neural sources. Dynamic causal connectivity of the neural source signals was estimated using a multivariate autoregressive model and quantified using Granger causality-based methods. Statistical analysis showed significant differences (p value < 0.01) between the two groups in the connectivity of neural sources during recognition of some emotions, with the most differences observed for the mild anger and mild sadness emotions. Short-range connectivity appeared in the Test Group, whereas long-range and interhemispheric connections were observed in the Control Group. Finally, it can be concluded that the Test Group showed abnormal activity and connectivity in the brain network for the processing of emotional faces compared to the Control Group. We conclude that neural source connectivity analysis in fathers may be considered a potential and promising biomarker of ASD.

Introduction

Nonverbal communication in human interactions conveys signals to others about an individual’s thinking, intentions, and feelings, and has been a crucial part of human communication (Black et al. 2017). Facial gestures play an important role in social interactions and help to reveal internal emotional and mental states. Problems in recognizing others’ facial expressions have been observed in children and adults diagnosed with ASD (American Psychiatric Association 2013).

Some relatives of individuals with ASD, especially first-degree relatives show milder traits of the ASD phenotype, referred to as the Broader Autism Phenotype (BAP) (Billeci et al. 2019; Cruz et al. 2013; Sucksmith et al. 2011; Tajmirriyahi et al. 2013). These sub-threshold characteristics are similar, although less severe, to those of ASD patients and they are more common in the parents of ASD individuals (Rubenstein et al. 2018). Only a few studies have considered facial expression recognition among first-degree relatives of people with autism (Hu et al. 2018; Kadak et al. 2014; Palermo et al. 2006; Wallace et al. 2010). In Palermo et al. (2006) emotion recognition abilities of parents of ASD individuals and the Control Group were analyzed behaviorally. Parents were asked to label five basic facial emotions (happiness, anger, sadness, surprise, and disgust), and the findings showed impaired results of Test Group for sadness or disgust (p value < 0.01). Another behavioral study reported poor performance of relatives of autism individuals in identifying facial emotions happiness and surprise by evaluating Emotion Recognition Test (p value < 0.05) (Kadak et al. 2014). The number of correct responses to the emotion recognition task was analyzed in Hu et al. (2018) and the results showed that the number of correct responses was significantly lower (p value < 0.05) for parents of ASD children in all emotions (fear, sadness, anger, disgust, happiness, and surprise). Wallace et al. analyzed the performance of ASD parents on the task of facial emotion recognition (Wallace et al. 2010). The results showed significantly worse recognition of disgust and fear in ASD parents than neurotypical adults (p value < 0.01). A few studies have analyzed facial expression recognition in parents of children with ASD using neuroimaging techniques (Baron-Cohen et al. 2006; Greimel et al. 2010; Yucel et al. 2015). 
The first study that evaluated parents of children with ASD through the fMRI technique used the “Reading the Mind in the Eyes” test to investigate if the parents had a similar atypical brain function observed in the ASD individuals. Results indicated that ASD parents illustrated atypical brain activity compared with healthy control (Baron-Cohen et al. 2006). Responding to emotions of others in the ASD fathers was explored in Greimel et al. (2010). ASD fathers showed abnormal brain activation in the fusiform gyrus and inferior frontal gyrus. Another fMRI study conducted in ASD parents examined neural substrates of face processing using an emotional matching paradigm. The authors reported that ASD parents had lower activation of right insula and higher activation of the fusiform gyrus and amygdala compared with healthy control (Yucel et al. 2015).

Brain connectivity alterations are associated with autistic symptoms in some individuals. The search for autism endophenotypes in brain connectivity is an emerging field, and a limited number of studies have addressed this issue (Billeci et al. 2016). Connectivity abnormalities in ASD parents were reported in a MEG study, evidently in language and visual areas (Buard et al. 2013). In Billeci et al. (2019), diffusion network analysis was applied to MRI to investigate network organizations in the ASD fathers. The results showed some brain regions that are crucial for social functioning in the autism group and ASD fathers, so it may help in clarifying the endophenotype of autism. Atypical white matter structure in siblings of individuals with ASD in Lisiecka et al. (2015) and altered functional connectivity in high-risk infants in Fields and Glazebrook (2017), Keehn et al. (2015), Orekhova et al. (2014) and Righi et al. (2014) have been reported in the literature but no study has previously investigated a brain connectivity endophenotype in parents using Electroencephalogram (EEG) signals.

This study aims to examine brain connectivity in the fathers of children with autism (Test Group) compared to the fathers of neurotypical children (Control Group) while they perform an emotion labeling task, using EEG signals. The hypothesis was that there would be an impairment in the recognition of basic facial expressions in the fathers of children with ASD, in comparison with the fathers of neurotypical children.

Materials and methods

Participants

Fifteen biological fathers (age = 40.60 ± 3.97 years) of children with an ASD diagnosis, who were screened by a pediatric neurologist/psychiatrist, participated in this study. Each father had one child meeting the criteria of the fifth edition of the Diagnostic and Statistical Manual of Mental Disorders [DSM-5 (American Psychiatric Association 2013)]. Participants had no history of psychiatric or neurological diseases such as depression, schizophrenia, and bipolar disorder. Fifteen fathers of neurotypical children (age = 37.33 ± 3.70 years) with no personal or family history of any neurodevelopmental disorder participated in the Control Group. Ethical approval was obtained from the local ethics committee in the university of medical sciences. All participants signed a consent form before starting the experiment in accordance with the Helsinki Declaration. After the registration step, all participants were requested to complete the Autism Spectrum Quotient (AQ), a questionnaire for quantifying ASD traits (Baron-Cohen et al. 2001). The average AQ score was 19.46 (SD = 4.77) for the Test Group and 14.2 (SD = 3.52) for the Control Group. Statistical analysis of the AQ score showed a significant main effect of group, with the Test Group scoring higher than the Control Group (p value = 0.0019). Demographic characteristics of study participants are summarized in Table 1.

Task and stimuli

A set of human emotional faces consisting of happy, sad, and angry expressions, each with two levels of emotional intensity (mild and extreme), was selected from the extended Cohn-Kanade (CK+) dataset (Lucey et al. 2010). Seventy volunteers evaluated the selected images and labeled the facial expressions and intensity levels. Finally, emotional faces with the highest percentage of agreement (above 80%) were selected for the task design. The contrast and brightness of these images were equalized using Photoshop CS6 (www.adobe.com). The black and white images (400 × 480 pixels) were placed in an oval frame to keep participants’ focus on the facial expression and to minimize the visibility of hair and ears.

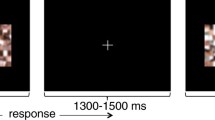

The emotional faces were presented for a duration of 3000 ms on a computer screen and immediately replaced by a black screen for 1000 ms. The task was developed in three blocks using version 3.0.14 of Psychtoolbox software (Brainard et al. 1997). Each block included 60 trials: six emotional faces (mild and extreme anger, sadness, and happiness) from each of five persons, each presented twice in random order. Participants were requested to indicate the depicted emotion category by pressing a key. A gamepad with three buttons, one for each expression, was used to label the emotions. The exemplar stimuli for the six emotional faces and the schematic representation of the employed task are illustrated in Fig. 1.
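The block structure described above can be sketched in a few lines. This is only an illustration of the trial arithmetic (3 emotions × 2 intensities × 5 persons × 2 presentations = 60 trials); the names `build_block`, `EMOTIONS`, and the integer person labels are hypothetical and are not taken from the authors' Psychtoolbox script.

```python
import random
from collections import Counter

EMOTIONS = ["anger", "happiness", "sadness"]
INTENSITIES = ["mild", "extreme"]
N_PERSONS = 5    # five persons posing each emotional face
N_REPEATS = 2    # each picture is shown twice within a block


def build_block(seed=None):
    """Return one block of (intensity, emotion, person) trials in random order."""
    rng = random.Random(seed)
    pictures = [
        (intensity, emotion, person)
        for emotion in EMOTIONS
        for intensity in INTENSITIES
        for person in range(N_PERSONS)
    ]
    trials = pictures * N_REPEATS
    rng.shuffle(trials)
    return trials


block = build_block(seed=0)
counts = Counter(block)   # number of presentations per picture
print(len(block))         # 60 trials per block
```

Each of the 30 distinct pictures appears exactly twice, so every emotion category contributes 10 trials per block.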

Participants were seated in a comfortable chair in a sound-attenuated room during EEG data acquisition. A monitor screen was placed at a distance of approximately 60 cm, and participants were allowed to adjust it to a suitable distance. At the beginning of each recording session, the task was explained to the participants and they were requested to complete a training stage to ensure they had understood how to use the gamepad and perform the task. They were asked to look at the center of the screen and to reduce blinks and eye movements as much as possible. Participants were instructed to answer by pressing the corresponding key immediately after they recognized the emotion. A two-minute rest period was provided at the end of each block.

EEG data acquisition

EEG signal was continuously recorded using 32 active electrodes situated on a standard cap according to the 10–20 system using a g.tec amplifier and digitized at 1200 Hz. Impedances of all active electrodes were kept below 10 kΩ throughout data acquisition. Three electrodes were used to record electrooculography (EOG) signals, two electrodes located outside the outer canthus of each eye and another below the right eye.

EEG analysis

The flowchart in Fig. 2 summarizes the procedure of this study. As shown in Fig. 2a, the EEG data of all participants are first preprocessed. Independent components are extracted from the temporally concatenated data, and “non-neural” components are then removed, as illustrated in Fig. 2b. The multivariate autoregressive (MVAR) representation of the EEG source signals is used to estimate the information flow and directional interactions between neural sources, as shown in Fig. 2c. Data are analyzed using EEGLAB (Delorme et al. 2011) and the SIFT toolbox (Mullen 2010). Finally, statistical analysis is used to compare the source connectivity of the two groups, as summarized in Fig. 2d.

Summary of EEG data analysis pipeline. a Steps of all preprocessing procedures. b Temporal group ICA: Concatenating EEG data of specified emotion corresponding to all participants of one group temporally and then applying ICA. Eliminating “non-neural” components like muscle activity, eye blink, line noise based on the power spectrum, time-trial plot, and scalp map. c Estimating the MVAR model of the data and then calculating connectivity measure (here GCI and gPDC) in different frequency bands. d Multiple comparisons of groups using the unbalanced ANOVA

Preprocessing

After re-referencing the data to the average of the two earlobe electrodes and removing the baseline, a low-pass filter at 80 Hz, a high-pass filter at 0.5 Hz, and a 50 Hz notch filter were applied. Each participant’s EEG data were checked separately, and stereotypical artifacts were removed. Independent component analysis (ICA) with the Infomax algorithm (Bell and Sejnowski 1995) was applied to reduce eye movement and blink artifacts. The data of the six emotions were epoched (pre-stimulus time = 500 ms, post-stimulus time = 1500 ms). Trials with improbable trend or amplitude values (more than 3 standard deviations from the average) were excluded from further analysis under expert supervision.
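The 3-standard-deviation criterion for trial exclusion can be illustrated as follows. This is a simplified sketch, assuming that each trial has already been reduced to a single summary value (for example a peak amplitude); the function name and the amplitude values are hypothetical, and the actual exclusion in the study was carried out under expert supervision.

```python
from statistics import mean, stdev


def reject_outlier_trials(values, n_sd=3.0):
    """Split trial indices into kept and rejected by distance from the mean."""
    mu = mean(values)
    sd = stdev(values)
    kept, rejected = [], []
    for idx, value in enumerate(values):
        if abs(value - mu) > n_sd * sd:
            rejected.append(idx)
        else:
            kept.append(idx)
    return kept, rejected


# Hypothetical per-trial amplitudes (microvolts); the last trial is an artifact
amplitudes = [10.0 + 0.1 * i for i in range(19)] + [100.0]
kept, rejected = reject_outlier_trials(amplitudes)
```

Note that with very few trials a single value can never exceed 3 sample standard deviations, so a criterion of this kind is only meaningful when enough trials are available.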

Group independent component analysis (gICA)

Because identifying and ordering similar components across individuals is a non-trivial problem, single-subject ICA is not well suited to a group of participants. The brain sources that are activated in different people performing the same task may be located in different regions and may also follow different cognitive strategies. In addition, differences in brain anatomy can lead to variations in the mixing of the sources recorded at the head surface (Huster et al. 2015). One proposed solution is group ICA (gICA), in which the data of all participants are concatenated and a single set of independent components is extracted. The individual data are then rebuilt based on the weights of the group-level components (Huster et al. 2015; Ponomarev et al. 2014).

Let \(x_{i}^{k}(t),i=1,\ldots,n\) be the EEG recordings of the k-th participant from n electrodes at the head surface and \({s_j}(t),j=1,\ldots,m\) be the source potentials recorded by the electrodes. The concatenated data \(X_{i}^{K}(t)=[x_{i}^{1},\ldots,x_{i}^{k},\ldots, x_{i}^{K}]\) are given by the temporal concatenation of the individual EEG data, with K being the number of participants included in the group analysis. The simplest mixture model in the linear instantaneous ICA method is \(X(t)={A_{Total}}S(t)\), where \({A_{Total}}\) (n × n) is an invertible mixing matrix (i.e. the columns of \({A_{Total}}\) denote the gIC topographies). To extract the source signals from the mixed signals, which is the purpose of blind source separation (BSS), the estimated sources \(\tilde {S}(t)\) are formulated as \(\tilde {S}(t)={W_{Total}}X(t)\), where \({W_{Total}}={A_{Total}}^{{ - 1}}\) is the un-mixing matrix (Kozhushko et al. 2018). The data of the participants in one group are concatenated as described above, and the concatenated data are used to estimate the \({W_{Total}}\) matrix. The EEG signal of each individual is then back-reconstructed. Since all participants in one group have common sources, this model naturally supports group-level inferences in analyzing a given facial emotion, and the sources are equivalent in group comparisons.
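The bookkeeping behind temporal concatenation and back-reconstruction can be sketched with toy data. The sketch below does not run ICA itself: the un-mixing matrix `W_total` is a hypothetical stand-in for the matrix that Infomax would estimate from the concatenated data, and the helper names are illustrative only.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def concatenate_in_time(subject_data):
    """Temporal concatenation: join each channel's samples across subjects."""
    n_channels = len(subject_data[0])
    return [
        [sample for subj in subject_data for sample in subj[ch]]
        for ch in range(n_channels)
    ]


# Two hypothetical participants, 2 channels each, with different lengths
x1 = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
x2 = [[4.0, 5.0], [1.0, 1.0]]
X = concatenate_in_time([x1, x2])     # 2 channels x 5 samples

# Hypothetical un-mixing matrix; in practice ICA estimates it from X
W_total = [[1.0, -1.0], [0.5, 0.5]]

S_group = matmul(W_total, X)                      # group-level source signals
S_subj = [matmul(W_total, x) for x in (x1, x2)]   # back-reconstructed per subject
```

Because the same `W_total` is applied to every participant, the per-subject source signals are simply the corresponding time segments of the group-level sources, which is what makes the components comparable across participants.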

Measuring time-varying information flow

MVAR modeling has proved to be a practical approach for connectivity analysis of biological signals, especially EEG signals (Afshani et al. 2019; Omidvarnia 2014). This process can model interactions between the time series of neural sources based on linear difference equations. The neural source time series are used as the input of the MVAR model in order to measure time-varying information flow. Several connectivity measures can be derived from the model; each evaluates different properties of the time series and has its own advantages and disadvantages (Koichi and Antonio 2014). In this study, two measures have been considered: the Granger Causality Index (GCI) and generalized Partial Directed Coherence (gPDC). GCI is a time-domain connectivity metric based on the Granger causality concept (Geweke 1982), and gPDC (Baccalá et al. 2007) combines the idea of PDC, to show the influenced effects, with that of the Directed Transfer Function (DTF), to show the influencing effects between two time series.

Granger causality (GC) states that if one stochastic process Xh(t) contains information in its past values that permits a more accurate prediction of Xi(t), then Xh(t) can be called causal to Xi(t) (Blinowska 2011). Assume Xi(t) is represented by a multivariate AR model using k previous values of all m time series with a prediction error \(u(t)\):
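One standard way to write this model, consistent with the definitions above, is given below; the coefficient symbol \(A_{ij}(l)\) and the lag index \(l\) are notational assumptions.

\[
X_i(t)=\sum_{j=1}^{m}\sum_{l=1}^{k} A_{ij}(l)\,X_j(t-l)+u(t)
\]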

To investigate the effect of the time series h on the time series i, the Xi(t) is rewritten after elimination of the time series h with the \(u^{\prime}(t)\) prediction error:
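Under the same assumed notation, the reduced model simply omits series h from the sum, with primed coefficients \(A^{\prime}_{ij}(l)\) denoting the re-estimated values:

\[
X_i(t)=\sum_{\substack{j=1\\ j\neq h}}^{m}\sum_{l=1}^{k} A^{\prime}_{ij}(l)\,X_j(t-l)+u^{\prime}(t)
\]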

If the variance of \(u^{\prime}(t)\), \(\delta^{2}(u^{\prime}(t))\), is larger than the variance of \(u(t)\), \(\delta^{2}(u(t))\), this indicates a causal interaction from Xh(t) to Xi(t).

Granger Causality Index (GCI)

Granger causality index (GCI) is defined based on the Granger causality definition. So, GCI from Xh(t) to Xi(t) is defined as,
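Using the two prediction-error variances introduced above, the usual log-ratio form of this index is as follows (the natural logarithm is the common convention and is assumed here):

\[
GCI_{x_h \to x_i}=\ln\frac{\delta^{2}(u^{\prime}(t))}{\delta^{2}(u(t))}
\]

The index is close to zero when removing Xh(t) does not worsen the prediction of Xi(t) and grows as the reduced model's error variance increases.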

The asymmetry in \(GC{I_{{x_h} \to {x_i}}}\) and \(GC{I_{{x_i} \to {x_h}}}\) shows the directionality of causality between Xh(t) and Xi(t).
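A small simulation can make this asymmetry concrete. The sketch below fits the full and reduced AR models by ordinary least squares and takes the log-ratio of their mean squared prediction errors. It is a stationary, pure-Python illustration on simulated data, not the time-varying estimation performed with the SIFT toolbox; the function names and the simulated coefficients are assumptions.

```python
import math
import random


def solve(G, b):
    """Solve G w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(G)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w


def residual_variance(target, predictors, order):
    """Mean squared error of a least-squares AR fit of target on lagged predictors."""
    rows, y = [], []
    for t in range(order, len(target)):
        rows.append([s[t - lag] for s in predictors for lag in range(1, order + 1)])
        y.append(target[t])
    d = len(rows[0])
    G = [[sum(r[a] * r[c] for r in rows) for c in range(d)] for a in range(d)]
    rhs = [sum(r[a] * yt for r, yt in zip(rows, y)) for a in range(d)]
    w = solve(G, rhs)
    errs = [yt - sum(wi * ri for wi, ri in zip(w, r)) for r, yt in zip(rows, y)]
    return sum(e * e for e in errs) / len(errs)


def gci(source, target, others=(), order=1):
    """GCI from source to target: ln(reduced-model variance / full-model variance)."""
    full = residual_variance(target, [target, source, *others], order)
    reduced = residual_variance(target, [target, *others], order)
    return math.log(reduced / full)


# Simulated pair in which x1 drives x2 but not the reverse
rng = random.Random(0)
x1, x2 = [0.0], [0.0]
for _ in range(2000):
    x1.append(0.5 * x1[-1] + rng.gauss(0, 1))
    x2.append(0.5 * x2[-1] + 0.8 * x1[-2] + rng.gauss(0, 1))

gci_1_to_2 = gci(x1, x2)   # clearly positive: x1 helps predict x2
gci_2_to_1 = gci(x2, x1)   # near zero: x2 adds little to predicting x1
```

The `others` argument holds the remaining series that stay in both models, which is what distinguishes the multivariate index from a purely pairwise one.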

Generalized partial directed coherence (gPDC)

The PDC was defined in the following form (Baccalá and Sameshima 2001):
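Written with the symbols explained in the sentence that follows, the definition can be sketched as below; the superscript \(H\), denoting the conjugate transpose, is notation assumed here.

\[
PDC_{ij}(f)=\frac{A_{ij}(f)}{\sqrt{a_j^{H}(f)\,a_j(f)}}
\]

Here \(PDC_{ij}(f)\) describes the influence of series j on series i at frequency f, normalized by the total outflow from series j.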

In this equation, \({A_{ij}}(f)\) is the Fourier transform of MVAR model coefficients \(A(t)\) and \({a_j}(f)\) is a column with index j of \(A(f)\) matrix.

As previously mentioned, gPDC is a combination of the idea of PDC and DTF between two time series (Baccalá et al. 2007). Generalized Partial Directed Coherence was defined by the formula:
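In the same notation, the generalized form weights each term by the prediction-error standard deviation of the receiving series. The symbol \(\sigma_r^{2}\), used here for the prediction-error variance of series r (the quantity written \(\delta^{2}(\cdot)\) above), and the summation index r are assumptions of this sketch.

\[
gPDC_{ij}(f)=\frac{\frac{1}{\sigma_i}A_{ij}(f)}{\sqrt{\sum_{r=1}^{m}\frac{1}{\sigma_r^{2}}A_{rj}(f)A_{rj}^{*}(f)}}
\]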

The normalization factor in the denominator of this equation is similar to the one employed in the DTF definition to show the influencing effects.
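The effect of this normalization can be checked numerically. The following sketch computes the gPDC magnitude at one frequency from a given set of MVAR coefficients. It assumes the common convention in which the frequency-domain matrix is the identity minus the Fourier transform of the lag coefficients; the function name, the example coefficients, and the noise variances are hypothetical.

```python
import cmath
import math


def gpdc(coeffs, noise_var, freq, fs):
    """Return the gPDC magnitude matrix at one frequency.

    coeffs[l - 1][i][j] is the MVAR coefficient of series j at lag l in the
    equation for series i; noise_var[i] is the prediction-error variance of
    series i. Entry [i][j] of the result is the influence of j on i.
    """
    m = len(noise_var)
    # Assumed convention: A(f) = I - sum_l A(l) * exp(-i * 2 * pi * f * l / fs)
    A_f = [[complex(1.0 if i == j else 0.0) for j in range(m)] for i in range(m)]
    for lag, A_l in enumerate(coeffs, start=1):
        phase = cmath.exp(-2j * math.pi * freq * lag / fs)
        for i in range(m):
            for j in range(m):
                A_f[i][j] -= A_l[i][j] * phase
    out = [[0.0] * m for _ in range(m)]
    for j in range(m):
        # Column-wise normalization, weighted by the inverse noise variances
        norm = math.sqrt(sum(abs(A_f[r][j]) ** 2 / noise_var[r] for r in range(m)))
        for i in range(m):
            out[i][j] = abs(A_f[i][j]) / math.sqrt(noise_var[i]) / norm
    return out


# Hypothetical first-order model: series 0 drives series 1, not the reverse
coeffs = [[[0.5, 0.0],
           [0.8, 0.5]]]
noise_var = [1.0, 4.0]
G = gpdc(coeffs, noise_var, freq=10.0, fs=1200.0)
```

In this example `G[0][1]` is exactly zero because series 1 has no coefficient in the equation for series 0, while `G[1][0]` is positive, and the squared entries of each column sum to one.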

Statistical analysis

In this study, an unbalanced analysis of variance (ANOVA) was employed to compare the groups and detect meaningful differences in the connectivity measures. An unbalanced ANOVA is the particular layout of ANOVA in which the numbers of observations in the groups are unequal. Welch’s test is a well-accepted method for this problem and, owing to its simplicity and accuracy, is commonly used in practical applications (Krishnamoorthy et al. 2007). This test is based on the Student’s t distribution and considers both the sample sizes and the variances of the samples. Facial expression was selected as the within-participants factor, group as the between-participants factor, and the connectivity measure as the dependent variable. Multiple comparisons were followed up with Games-Howell post-hoc analysis. The statistical significance level was set at a p value less than 0.05. To determine the important effects, partial eta squared was used as the effect size, and a value larger than 0.2 was considered a relatively high effect size.
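For two groups, Welch’s test reduces to a t statistic with Welch-Satterthwaite degrees of freedom, which can be computed from summary statistics alone. The sketch below reuses the AQ means and standard deviations from the Participants section purely as example input; the source does not state which test produced the reported AQ p value, and the function name is hypothetical.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1   # squared standard error of group 1
    v2 = sd2 ** 2 / n2   # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# AQ summary statistics (Test Group vs Control Group), used only as an example
t, df = welch_t(19.46, 4.77, 15, 14.2, 3.52, 15)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Unlike the pooled-variance t test, the degrees of freedom here depend on the two sample variances, so they are generally not an integer.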

Results

The effective brain connectivity was compared between the Test and Control groups within the frequency bands (\(\delta\): 0.1–4 Hz, \(\theta\): 4–8 Hz, \(\alpha\): 8–13 Hz, \(\beta\): 13–30 Hz and \(\gamma\): >30 Hz). The results consisted of two different analyses: first, testing group differences for the GCI connectivity measure, and second, for the gPDC connectivity measure, separately for each frequency band. The unbalanced ANOVA (emotion × group [6 × 2]) was applied to the connectivity measures in the five frequency bands to find possible differences in brain connectivity. With the connectivity measure as the dependent variable and group and emotion (mild and extreme anger, happiness, and sadness) as the independent variables, the analysis yielded significant differences (p values < 0.05) in the connectivity of the group ICs in some frequency bands. Where significant differences between groups were found for a given facial expression, an additional post-hoc test was applied. The results of the comparisons are presented in Tables 2 and 3 for GCI and gPDC, respectively. Only p values below the alpha significance level are reported.

According to the results presented in Tables 2 and 3, the mild anger and mild sadness emotion categories show the most apparent differences between the two groups. Thus, in this article, source-level brain connectivity has been studied in these two emotion categories. It should be noted that the magnitude of connectivity in the Control Group is greater than in the Test Group.

The gPDC is an upgraded version of PDC and a higher level connectivity measure in the frequency domain and seems to provide more reliable information than simpler metrics. Some limitations of other measures, such as sensitivity to the scale of signals, have been corrected in gPDC. So, the results are represented for this metric in the rest of the paper. The time-frequency grid representations of connectivity between neural sources are displayed in Figs. 3 and 4 for mild anger and mild sadness expressions, respectively. The corresponding gPDC expresses the dynamic causal connections between each sink and source. As shown in Figs. 3 and 4, different connectivity patterns appear in the neural source network at low frequencies (delta, theta, and alpha). Time-frequency representations qualitatively confirm the results presented in Table 3, in which the differences between the two groups were observed at low frequencies.

The gPDC connectivity for component dipoles and its changes over time are presented in Figs. 5, 6, 7 and 8. The neural sources are shown by nodes of different sizes and colors, with the magnitude of causal information outflow illustrated by color and the power illustrated by size. Larger nodes indicate larger power, and warmer colored nodes indicate larger information outflow. Cylinders with different diameters and colors display the gPDC connectivity between nodes. The direction of information flow is represented by the cylinder, and the flow magnitude is shown by color, with warmer colors for higher magnitude. Effective connectivity is integrated across 0.5–80 Hz and is represented in Figs. 5, 6, 7 and 8 for four time points throughout an epoch. As shown in Fig. 5, five components were selected for the mild anger/Control Group category, with their dipoles localized to the following regions: right and left inferior frontal gyrus (Brodmann 47), right associative visual cortex (Brodmann 19), left angular gyrus (Brodmann 39), and right somatosensory association cortex (Brodmann 7). As shown in Fig. 5, strong couplings are displayed between the right and left inferior frontal gyrus, between the visual cortex and inferior frontal gyrus in the right hemisphere, and additionally between the somatosensory and visual cortex over time. One advantage of this type of representation is that it includes causality information and how it changes over time. Figure 6 shows the corresponding result for four components selected for the mild anger/Test Group category, with their dipoles localized to the following regions: left anterior cingulate (Brodmann 24), right posterior cingulate (Brodmann 31), and right and left angular gyrus (Brodmann 39). In Fig. 6, some weak short-range connections (smaller than 30% of the maximum amplitude of connectivity) are observed among these four regions, among which the right posterior cingulate and the right angular gyrus have slightly stronger couplings (larger than 80% of the maximum amplitude of connectivity).

Figure 7 shows the connectivity between four components which were selected for the mild sadness/Control Group category, with their dipoles localized to the following regions: right posterior cingulate (Brodmann 31), left somatosensory association cortex (Brodmann 7), right superior frontal gyrus (Brodmann 10), and fusiform gyrus (Brodmann 37). As seen in Fig. 7, some long-range causal connections are revealed between the superior frontal gyrus in the right hemisphere and the fusiform gyrus in the left hemisphere. A short-range connection between the right posterior cingulate and left somatosensory cortex is observed too. In Fig. 8, five components were selected for the mild sadness/Test Group category, with their dipoles localized to the following regions: left motor cortex (Brodmann 6), left somatosensory association cortex (Brodmann 7), right and left angular gyrus (Brodmann 39), and right somatosensory association cortex (Brodmann 5). As shown in Fig. 8, some short-range couplings are displayed between the left and right somatosensory association cortex and also between the angular gyrus and somatosensory cortex in the right hemisphere.

Discussion

The purpose of the current paper was to examine brain connectivity in fathers of individuals with autism compared to fathers of children without autism while performing a facial emotion recognition task. Alongside other neuroimaging technologies, EEG offers additional utility for the investigation of connectivity because of its high temporal resolution. To minimize the signal processing confounds caused by volume conduction and to obtain a finer spatial resolution, one solution is to apply the connectivity analysis at the source level.

The current study indicates that effective connectivity can be evaluated in the time-frequency domain from multivariate neural source signals, and that it can be statistically assessed to compare two groups. The proposed method utilizes group independent component analysis, time-frequency representation of MVAR processes, and the concept of Granger causality, including the basic GCI measure and the generalized version of PDC. The connectivity measures yielded significant differences (p values < 0.05) in source-level brain connectivity in some frequency bands. Brain connectivity during recognition of the mild condition of each of the negative facial expressions (anger and sadness) demonstrated significant differences (p values < 0.05) between the two groups, and these differences are more pronounced at low frequencies. Similar behavior has been reported for people with autism in negative emotion processing. During the processing of facial expressions, individuals with autism focus more on others’ mouths to obtain information, instead of on both the mouth and eyes. This strategy results in the loss of vital information about the facial emotion (Ashwin et al. 2006). The absence of differences at high frequencies may be due to a compensatory factor at these frequencies, which results in brain connectivity similar to that of the Control Group. Brain connectivity of the Test Group while watching the faces with mild-negative emotions was mostly short-range and observed in posterior and central regions, while for the Control Group, long-range and interhemispheric connections appeared as well. It should be noted that many studies have reported long-range underconnectivity and local overconnectivity in ASD [for review, see (O’Reilly et al. 2017)]. Post-mortem immunocytochemistry, structural, and functional MRI studies have strongly supported this hypothesis. The results obtained in this paper are also in line with this hypothesis for ASD fathers. 
Considering the notion of BAP, it can be inferred that Test Group had different performance in identifying mild-negative expressions in comparison to Control Group.

The neural source connectivity networks associated with mild negative emotional conditions were depicted in Figs. 5, 6, 7 and 8. Dynamic causal interactions between neural sources and how they change over time were represented for one participant in each group. The brain areas involved and activated during the task and the connections between them were shown in the figures. Several areas of heightened causality, especially the parietal and central cortices, are detected, and in each figure these areas are partly active. The connectivity is short-range for the Test Group and observed in central and parietal regions, including the posterior cingulate, angular gyrus and somatosensory association cortex (Figs. 6, 8). For the Control Group, the long-range connections appear throughout the anterior and posterior regions of the brain, including the inferior and superior frontal gyrus, associative visual cortex, and fusiform gyrus (Figs. 5, 7). Also, unlike the Test Group, interhemispheric interactions are observed in the Control Group. The right and left inferior frontal gyrus, posterior cingulate, and somatosensory association cortex are involved in these interactions. The brain regions in the neural source network of the Control Group that show considerable connectivity and activity in response to emotional faces include the visual cortex, cingulate, and fusiform gyrus. These regions are parts of a neuronal path specialized for face processing and emotional perception (Kanwisher and Yovel 2006; Monteiro et al. 2017; Vissers et al. 2012). The activity of these regions is altered during exposure to visual stimuli, especially negative emotional stimuli, and provides more detailed processing of expressions (Etkin et al. 2011; Ousdal et al. 2014; Wegrzyn et al. 2015). The current study is one of the first to consider the information flow and causality between neural sources using the EEG signal of fathers of individuals with autism in a clinical population. 
The connectivity between sources was consistent with previous results reported in the literature, which have shown neural connectivity deficits in autism patients (Coben et al. 2014; Coben and Myers 2008; Pillai et al. 2017) and also consistent with the results of systematic review articles, which point to the poor performance of the autism individuals versus control in recognizing the emotion of anger and sadness (Black et al. 2017; Uljarevic and Hamilton 2013). The present paper also confirms the results reported in Yucel et al. (2015) that represented impaired emotional face processing regions in the parents of children with autism.

The main properties of the proposed method are summarized as follows. Studying brain connectivity at the level of independent brain sources increases the validity of the results because volume conduction is reduced. In addition, the group analysis of independent components makes it possible to infer results at the group level. The Granger-based metrics are derived from the causal MVAR model, which takes into account all previous interactions between neural sources. Therefore, they can show the causal influence of one neural source on another. Considering the causality between neural sources provides more realistic information about brain mechanisms. Such metrics can identify the direction of information flow and differentiate between direct and indirect interactions. By investigating the changes in the connectivity of component dipoles over time, one can see the influence of the neural sources on each other and how the strength of their connectivity changes, and also compare the results with another group to find possible differences.

Conclusion

The hypothesis of the present study was that fathers of individuals with autism, compared with Control Group, would illustrate abnormal neural connectivity patterns in face and emotion processing tasks. Based on our comprehensive study, the Test Group may show impaired brain connectivity during emotion labeling tasks compared to Control Group because of the significant difference in emotion recognition with Control Group. The proposed EEG analysis indicated different brain connectivity of two groups in the low-frequency band of EEG in recognition of mild facial emotions (p values < 0.01). Significant neural sources of the Test Group are in parietal and central cortices and the interactions are short-range.

On the other hand, the significant neural sources in the Control Group are localized in the anterior regions of the brain in addition to the central and parietal areas. Therefore, long-range and interhemispheric connectivity appears. Evaluating the sources identified in facial emotion recognition shows that the core face and emotion processing networks, such as the visual cortex, fusiform gyrus, and anterior and posterior cingulate, are better identified in the Control Group and show somewhat strong connections. The findings suggest that abnormalities of neural connectivity in the Test Group can affect the processing of emotional faces as a behavioral feature of the BAP. The neural source connectivity of fathers can therefore be considered a marker of ASD and may represent a cognitive endophenotype. The connectivity analysis of neural source signals of fathers may help the early diagnosis of autism. These fathers will be more aware of their child’s behavior before the age of six, which is known as the “golden time” for required therapies, and fathers-to-be will also be able to make a more informed decision. With further development in this research field, one can hope to derive heritable endophenotypes that reliably indicate and help diagnose autism, which can be promising for healthcare investors and families. Much work remains to be done to extend the proposed approach and apply it to larger samples of fathers and their children in order to assess brain connectivity.

References

Afshani F, Shalbaf A, Shalbaf R, Sleigh J (2019) Frontal–temporal functional connectivity of EEG signal by standardized permutation mutual information during anesthesia. Cogn Neurodyn 13(6):531–540

American Psychiatric Association (2013) Diagnostic and statistical manual of mental disorders (DSM-5®). American Psychiatric Pub. Accessed from http://www.dsm5.org/proposedrevision/Pages/SomaticSymptomDisorders.aspx

Ashwin C, Chapman E, Colle L, Baron-Cohen S (2006) Impaired recognition of negative basic emotions in autism: a test of the amygdala theory. Soc Neurosci 1(3–4):349–363

Baccalá LA, Sameshima K, Takahashi DY (2007) Generalized partial directed coherence. In: 2007 15th international conference on digital signal processing. IEEE, pp 163–166

Baccalá L, Sameshima K (2001) Partial directed coherence: a new concept in neural structure determination. Biol Cybern 84(6):463–474. https://doi.org/10.1007/PL00007990

Baron-Cohen S, Ring H, Chitnis X, Wheelwright S, Gregory L, Williams S, Bullmore E (2006) fMRI of parents of children with asperger syndrome: a pilot study. Brain Cogn 61(1):122–130. https://doi.org/10.1016/j.bandc.2005.12.011

Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E (2001) The autism-spectrum quotient (AQ): evidence from asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J Autism Dev Disord 31(1):5–17

Bell AJ, Sejnowski TJ (1995) An information-maximization approach to blind separation and blind deconvolution. Neural Comput 7(6):1129–1159

Billeci L, Calderoni S, Conti E, Gesi C, Carmassi C, Dell’Osso L, Guzzetta A (2016) The broad autism (endo)phenotype: neurostructural and neurofunctional correlates in parents of individuals with autism spectrum disorders. Front Neurosci. https://doi.org/10.3389/fnins.2016.00346

Black MH, Chen NTM, Iyer KK, Lipp OV, Bölte S, Falkmer M, et al (2017) Mechanisms of facial emotion recognition in autism spectrum disorders: insights from eye tracking and electroencephalography. Neurosci Biobehav Rev 80:488–515. https://doi.org/10.1016/j.neubiorev.2017.06.016

Billeci L, Calderoni S, Conti E, Lagomarsini A, Narzisi A, Gesi C, Muratori F (2019) Brain network organization correlates with autistic features in preschoolers with autism spectrum disorders and in their fathers: preliminary data from a DWI analysis. J Clin Med 8(4):487

Blinowska KJ (2011) Review of the methods of determination of directed connectivity from multichannel data. Med Biol Eng Comput 49(5):521–529. https://doi.org/10.1007/s11517-011-0739-x

Brainard DH (1997) The psychophysics toolbox. Spat Vis 10:433–436

Buard I, Rogers SJ, Hepburn S, Kronberg E, Rojas DC (2013) Altered oscillation patterns and connectivity during picture naming in autism. Front Hum Neurosci 7:742. https://doi.org/10.3389/fnhum.2013.00742

Coben R, Myers TE (2008) Connectivity theory of Autism: use of connectivity measures in assessing and treating autistic disorders. J Neurother 12:2–3

Coben R, Mohammad-Rezazadeh I, Cannon RL (2014) Using quantitative and analytic EEG methods in the understanding of connectivity in autism spectrum disorders: a theory of mixed over- and under-connectivity. Front Hum Neurosci 8:45. https://doi.org/10.3389/fnhum.2014.00045

Cruz LP, Camargos-Junior W, Rocha FL (2013) The broad autism phenotype in parents of individuals with autism: a systematic review of the literature. Trends Psychiatry Psychother 35(4):252–263. https://doi.org/10.1590/2237-6089-2013-0019

Delorme A, Mullen T, Kothe C, Akalin Acar Z, Bigdely-Shamlo N, Vankov A, Makeig S (2011) EEGLAB, SIFT, NFT, BCILAB, and ERICA: new tools for advanced EEG processing. Comput Intell Neurosci. https://doi.org/10.1155/2011/130714

Etkin A, Egner T, Kalisch R (2011) Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cognit Sci 15(2):85–93. https://doi.org/10.1016/j.tics.2010.11.004

Fields C, Glazebrook JF (2017) Disrupted development and imbalanced function in the global neuronal workspace: a positive-feedback mechanism for the emergence of ASD in early infancy. Cogn Neurodyn 11(1):1–21

Geweke J (1982) Measurement of linear dependence and feedback between multiple time series. J Am Stat Assoc 77(378):304–313

Greimel E, Schulte-Rüther M, Kircher T, Kamp-Becker I, Remschmidt H, Fink GR et al (2010) Neural mechanisms of empathy in adolescents with autism spectrum disorder and their fathers. NeuroImage 49(1):1055–1065. https://doi.org/10.1016/j.neuroimage.2009.07.057

Hu X, Yin L, Situ M, Guo K, Yang P, Zhang M, Huang Y (2018) Parents’ impaired emotion recognition abilities are related to children’s autistic symptoms in autism spectrum disorder. Neuropsychiatric Disease Treatment 14:2973

Huster RJ, Plis SM, Calhoun VD (2015) Group-level component analyses of EEG: validation and evaluation. Front Neurosci 9:1–14. https://doi.org/10.3389/fnins.2015.00254

Kadak MT, Demirel ÖF, Yavuz M, Demir T (2014) Recognition of emotional facial expressions and broad autism phenotype in parents of children diagnosed with autistic spectrum disorder. Compr Psychiatry 55(5):1146–1151. https://doi.org/10.1016/j.comppsych.2014.03.004

Kanwisher N, Yovel G (2006) The fusiform face area: a cortical region specialized for the perception of faces. Philos Trans R Soc B Biol Sci 361(1476):2109–2128. https://doi.org/10.1098/rstb.2006.1934

Keehn B, Vogel-Farley V, Tager‐Flusberg H, Nelson CA (2015) Atypical hemispheric specialization for faces in infants at risk for autism spectrum disorder. Autism Res 8(2):187–198

Koichi S, Antonio BL (2014) Methods in brain connectivity inference through multivariate time series analysis. CRC Press

Kozhushko NJ, Nagornova ZV, Evdokimov SA, Shemyakina NV, Ponomarev VA, Tereshchenko EP, Kropotov JD (2018) Specificity of spontaneous EEG associated with different levels of cognitive and communicative dysfunctions in children. Int J Psychophysiol 128:22–30. https://doi.org/10.1016/j.ijpsycho.2018.03.013

Krishnamoorthy K, Lu F, Mathew T (2007) A parametric bootstrap approach for ANOVA with unequal variances: Fixed and random models. Comput Stat Data Anal 51:5731–5742. https://doi.org/10.1016/j.csda.2006.09.039

Lisiecka DM, Holt R, Tait R, Ford M, Lai MC, Chura LR et al (2015) Developmental white matter microstructure in autism phenotype and corresponding endophenotype during adolescence. Transl Psychiatry 5(3):e529

Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I (2010) The extended Cohn–Kanade dataset (ck+): a complete dataset for action unit and emotion-specified expression. In: 2010 IEEE computer society conference on computer vision and pattern recognition workshops (CVPRW). IEEE, pp 94–101

Monteiro R, Simões M, Andrade J, Castelo Branco M (2017) Processing of facial expressions in Autism: a systematic review of EEG/ERP evidence. Rev J Autism Dev Disorders 4(4):255–276. https://doi.org/10.1007/s40489-017-0112-6

Mullen T (2010) Source Information Flow Toolbox (SIFT). Swartz Center Comput Neurosci, 1–69

Omidvarnia A (2014) Newborn EEG connectivity analysis using time-frequency signal processing techniques. The University of Queensland

O’Reilly C, Lewis JD, Elsabbagh M (2017) Is functional brain connectivity atypical in autism? A systematic review of EEG and MEG studies. PLoS ONE 12(5):1–28

Orekhova EV, Elsabbagh M, Jones EJH, Dawson G, Charman T, Johnson MH (2014) EEG hyper-connectivity in high-risk infants is associated with later autism. J Neurodev Disorders 6(1):40. https://doi.org/10.1186/1866-1955-6-40

Ousdal OT, Andreassen OA, Server A, Jensen J (2014) Increased amygdala and visual cortex activity and functional connectivity towards stimulus novelty is associated with state anxiety. PLoS ONE 9(4):1–8. https://doi.org/10.1371/journal.pone.0096146

Palermo MT, Pasqualetti P, Barbati G, Intelligente F, Rossini MP, Rossini PM (2006) Recognition of schematic facial displays of emotion in parents of children with autism. Autism 10(4):353–364. https://doi.org/10.1177/1362361306064431

Pillai AS, McAuliffe D, Lakshmanan BM, Mostofsky SH, Crone NE, Ewen JB (2017) Altered task-related modulation of long-range connectivity in children with autism. Autism Res. https://doi.org/10.1002/aur.1858

Ponomarev VA, Mueller A, Candrian G, Grin-Yatsenko VA, Kropotov JD (2014) Group Independent Component Analysis (gICA) and Current Source Density (CSD) in the study of EEG in ADHD adults. Clin Neurophysiol 125(1):83–97. https://doi.org/10.1016/j.clinph.2013.06.015

Righi G, Tierney AL, Tager-Flusberg H, Nelson CA (2014) Functional connectivity in the first year of life in infants at risk for autism spectrum disorder: an EEG study. PLoS ONE 9(8):1–8. https://doi.org/10.1371/journal.pone.0105176

Rubenstein E, Chawla D, Hill C (2018) Broader autism phenotype in parents of children with autism: a systematic review of percentage estimates. J Child Fam Stud 27(6):1705–1720. https://doi.org/10.1007/s10826-018-1026-3

Sucksmith E, Roth I, Hoekstra RA (2011) Autistic traits below the clinical threshold: re-examining the broader autism phenotype in the 21st century. Neuropsychol Rev 21(4):360–389. https://doi.org/10.1007/s11065-011-9183-9

Tajmirriyahi M, Nejati V, Pouretemad H, Sepehr RM (2013) Reading the mind in the face and voice in parents of children with Autism Spectrum Disorders. Res Autism Spectrum Disorders 7(12):1543–1550. https://doi.org/10.1016/j.rasd.2013.08.007

Uljarevic M, Hamilton A (2013) Recognition of emotions in autism: A formal meta-analysis. J Autism Dev Disord 43(7):1517–1526. https://doi.org/10.1007/s10803-012-1695-5

Vissers ME, Cohen MX, Geurts HM (2012) Brain connectivity and high functioning autism: a promising path of research that needs refined models, methodological convergence, and stronger behavioral links. Neurosci Biobehav Rev 36(1):604–625. https://doi.org/10.1016/j.neubiorev.2011.09.003

Wallace S, Sebastian C, Pellicano E, Parr J, Bailey A (2010) Face processing abilities in relatives of individuals with ASD. Autism Res 3(6):345–349. https://doi.org/10.1002/aur.161

Wegrzyn M, Riehle M, Labudda K, Woermann F, Baumgartner F, Pollmann S et al (2015) Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex 69:131–140. https://doi.org/10.1016/j.cortex.2015.05.003

Yucel GH, Belger A, Bizzell J, Parlier M, Adolphs R, Piven J (2015) Abnormal neural activation to faces in the parents of children with autism. Cereb Cortex 25(12):4653–4666. https://doi.org/10.1093/cercor/bhu147

Acknowledgements

We want to thank the National Brain Mapping Lab (NBML) for their contributions to data collection. This study has been supported by the Cognitive Science and Technology Council (Grant No. 3510).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Mehdizadehfar, V., Ghassemi, F., Fallah, A. et al. Brain connectivity analysis in fathers of children with autism. Cogn Neurodyn 14, 781–793 (2020). https://doi.org/10.1007/s11571-020-09625-2

DOI: https://doi.org/10.1007/s11571-020-09625-2