Abstract

Diabetic retinopathy (DR) is a leading cause of vision loss among diabetic patients in developed countries. Early detection of occurrence of DR can greatly help in effective treatment. Unfortunately, symptoms of DR do not show up till an advanced stage. To counter this, regular screening for DR is essential in diabetic patients. Due to lack of enough skilled medical professionals, this task can become tedious as the number of images to be screened becomes high with regular screening of diabetic patients. An automated DR screening system can help in early diagnosis without the need for a large number of medical professionals. To improve detection, several pattern recognition techniques are being developed. In our study, we used trace transforms to model a human visual system which would replicate the way a human observer views an image. To classify features extracted using this technique, we used support vector machine (SVM) with quadratic, polynomial, radial basis function kernels and probabilistic neural network (PNN). Genetic algorithm (GA) was used to fine tune classification parameters. We obtained an accuracy of 99.41 and 99.12 % with PNN–GA and SVM quadratic kernels, respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Diabetic retinopathy (DR) is a complication arising from the more common diabetes mellitus (DM) which is a cause of concern in the developed world. Though DM is an endocrine disorder, it has far reaching implications on the vision of a person with DR being a leading cause of visual impairment in the developed world [31]. The most common treatment of DR is through laser photocoagulation or through corticosteroid injections [31]. Early detection and treatment is absolutely essential to prevent vision loss in diabetic patients [27]. But early detection of DR is a difficult task since this would require regular screening of diabetic patients and that would translate into an increased load on the opthamologist in-charge of screening the patients. To offset this requirement for an increased load on opthamologist, research is in progress to automate the DR screening process. The other advantage of an automated detection system is the ability to screen large number of patients in a short time and more objectively than an observer-driven technique [21].

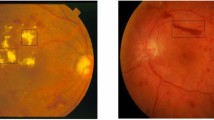

There are several signs to identify DR. Some of them include red lesions, such as microaneurysms, intraretinal microvascular abnormalities, and hemorrhages, and bright lesions such as hard exudates and soft exudates or cotton-wool spots [7]. These signs of DR can be used when there has to be automated detection of exudates in retinal images. In our study, we focus on classification of retinal images into normal and DR images. Hence, we consider the retinal images as a whole to study features which might be useful in classification of the images into the two classes. We do this since the goal of this study is to adapt and develop trace transform functionals, a relatively unused feature extraction technique for medical image analysis. From an exhaustive review of the literature, we are yet to find a significant work which uses trace transform for retinal image analysis. The closest study as ours can be seen in [11]. In this study, radon transforms were used for microaneurysm detection in [11]. This can be considered as a precursor to our work since trace transform is a generalization of radon transform.

In general, automated DR detection is done by identifying exudates, red lesions, and other commonly occurring symptoms mentioned previously. The most basic way to do this is gray-level thresholding [15, 22]. But the problem with thresholding is that the results are not consistent due to uneven illumination of the hard exudates [3]. Subsequently, to counter this effect, an algorithm based on edge detection and mixture models was tested by [3] to obtain a classification accuracy of 95 %. Other simple techniques for segmentation of lesions in fundus images for DR screening were developed by [16]. In this work, region growing, adaptive region growing, and Bayesian-based approaches were shown to attain an accuracy rate of 90 % for DR detection. Thresholding and shape features for microaneurysm and hemorrhage detection were used by [25] to obtain an accuracy rate of 95.65 % for classification of normal and abnormal retinal images. These examples show that it is possible to identify DR fundus images using simple techniques. But at times, due to variation in illumination and other image properties, it is necessary to go in for advanced techniques.

An assessment of neural network-based classifiers was done by [8] to study the efficacy of neural networks in identifying red lesions in retinal images. In this study, image and shape features were extracted to obtain a mean accuracy of 86 %. An ensemble based system with a combined algorithm of several machine learning techniques including pyramidal decomposition, edge detectors, and hough transforms was used by [24] for identifying macula and optic disk in retinal fundus images for DR screening. An exudate probability map and wavelet analysis were used by [10] to identify exudates in fundus images with an accuracy rate of 94 %. A color information-based feature dimension in combination with Fisher’s linear discriminant analysis was used by [26] to obtain an accuracy rate of 100 % for detection of hard exudates. This study shows that it is indeed possible to classify images with a perfect accuracy rate using image information in color images. A study based on Gaussian mixture models (GMM) and SVM to classify image shape and statistic features for microaneurysm detection was done by [1] with an accuracy rate of 99.53 %.

A study close to ours was performed by [23]. In this study, a multiple-instance learning framework was developed for identifying patterns in normal and DR retinal fundus images. An accuracy rate of 88.1 % was obtained in this study. Dictionary learning and sparse representation classifier (SRC) were used by [2] to detect microaneurysm and blood vessels in fundus images, with an accuracy of 84.67 %.

The quality of retinal images is important in any automated analysis technique for DR screening. For this, a traditional clustering algorithm was developed by [19] to verify the quality of color retina images. It is also important to note that the accuracy of any classification system for DR screening depends on the quality of retinal images due to the huge number of features and blood vessels in normal images which look very similar to exudates and other symptoms visible in DR images [5, 19]. In line with this, our work uses a contrast correction and enhancement technique discussed in the next section. The proposed system has been shown in Fig. 1. In our technique, followed by preprocessing, we use trace transform for feature extraction. In using trace transform, we develop application-specific functionals in order to effectively extract suitable features for DR fundus images. We then used SVM kernels and PNN to classify the features extracted using trace transform functionals.

2 Methods

2.1 Image database

The data used in this work were obtained from the Department of Opthamology, Kasturba Medical College, Manipal, India. The images were acquired using a TOPCON non-mydriatic retinal camera to provide a 3.1 megapixel retinal image. For the purpose of this study, 170 normal images and 170 DR images were used. Out of the 170 DR images, 23 were mild Non-proliferative DR (NPDR) images, 52 were moderate NPDR images, 30 were severe NPDR images, and 65 were Proliferative DR (PDR) images. The age group of patients considered for the study was 24–57 years.

2.2 Methods

Each of the processes followed in the study has been described below.

2.2.1 Preprocessing

The color scale RGB images were first converted into gray scale for efficient computation. Since the exudates are visible with more contrast in gray scale, this mode was preferred for further analysis. An equalization procedure was performed on the images to obtain a local contrast that is approximately equal at all image intensities. In this method, a neighborhood \(\wp\) of an image location \((x, y)\) is considered. The local contrast of this neighborhood is estimated as follows:

where \(c(x, y)\) is the estimated local contrast, \(f(x, y)\) is the image gray level at \((x, y)\) and \(median_{\wp }(x, y)\) is the median gray level within the neighborhood \(\wp\) of \((x,y).\) Equation (1) can be equated to a high-pass spatial filter. The local contrast provides a measure of the high-frequency image noise. The noise associated with each image gray level \(I\) can be measured by the local contrast standard deviation \(\sigma _{c}(I)\equiv \sigma \{c(I)\}\). The contrast enhancement function is then defined as follows:

while executing the contrast enhancement function, the gray scale is divided into several overlapping bins \(i=1,...,N\) where \(N\) is the number of bins. Interpolation of the estimated \(f_{ceq}(I_{i})\) values provides an estimate of the function \(f_{ceq}(I)\) for all image intensities \(I\). This method of contrast enhancement is a nonlinear gray level rescaling technique. This transfer function \(f_{ceq}(I_{i})\) is then normalized such that the total value adds up to 1, the same way it is done in the case of a probability density function.

2.2.2 Feature extraction using trace transform functionals

Trace Transform functionals [14] were used for feature extraction. Trace transforms are a generalization of the radon transform where the transform calculates functionals of the image function along lines criss-crossing its domain [14]. The trace transform works by transforming the original image into a mapped image, which is a 2D function, based on a set of parameters (\(\phi\), \(\rho\)) that characterizes each line criss-crossing its domain. To describe it in a simpler way, consider a fish tank with a single fish in it. For the purpose of this description, let us assume to be living in a two-dimensional plane, where we can view the fish tank from only one direction at a time with no knowledge about the information in other directions. So, when the fish inside the fish tank is viewed from the front, the fish would appear to be a conical-shaped object. When it is viewed from the sides, it would appear to be a streamlined flat object, and from the top, it would appear to be a straight line. A person living in a two-dimensional plane, who has viewed the fish from all the directions, would describe the fish with information gathered from the views obtained in all possible directions after accumulating and combining the information from several angles. Trace transform functions in the same way where the images are scanned by tracing lines in all angles starting from 0°, running until 359°. At each angle, the tracing lines obtain the pixel information of the image along the tracing line. This pixel information is then combined and calculated according to what are called as the trace functionals (T) defined in Table 1. This would result in a 2D image in the trace transform domain. An example of this 2D image obtained by accumulating the trace lines through T can be seen in Fig. 2a–d.

After a 2D image has been obtained in the trace transform domain, the same procedure of tracing the image is followed using diametric functionals (\(P\)) defined in the second column of Table 1. This would result in a 1D line containing condensed information from the 2D image obtained through \(T\). Followed by this, circus functionals (\(\Phi\)) are applied on the 1D line, to obtain a single feature defining the original image. This single feature is referred to as the triple feature of the image in consideration.

Mathematically, the trace transform can be defined as a function \(g\) definited on \(\Lambda\) with the help of \(T\) which is some functional of the image function, where \(T\) is the trace functional. If \(L(C_{1};\phi ,p,t)\) is a line in coordinate system \(C_{1}\), then [14]:

where \(F(C_{1};\phi ,p,t)\) means the values of the image function along the chosen line. This functional results in a two-dimensional function of the variables \(\phi\) and p and can be interpreted as another image defined on \(\Lambda\). Here, the triple feature \(\Pi\) is defined as [14]:

The choice of these three functionals is totally dependent on the engineer’s choice and can be varied according to the application and image properties in hand. The functionals are chosen in such a way that they are invariant to rotation, translation, and scaling. As long as the functionals chosen have these properties, the engineer is free to choose any mathematical function to define his image properties. In our study, the functionals used can be seen from Table 1 [6].

2.2.3 Feature selection

In any classification algorithm, one of the most important steps is choosing variables that contribute most to the classification task. It is also necessary to eliminate variables that do not contribute to the overall classification. Statistically speaking, it is essential to reduce a d-dimensional feature vector into a m-dimensional vector (\(m\le d\)) such that m represents the most effective set of feature measurements for the given problem [29]. In the current study, a forward feature selection technique with a Mahalanobis distance measure was used to rank the features. The procedure of finding the distance measure was done at each round of the ten-fold cross validation since a bias would be introduced if the same data are used for both feature selection and accuracy estimation. Out of a total of 840 features extracted (20*7*6) , 672 statistically significant features (16*7*6) ranked using Mahalanobis distance measure were used. Since the focus of our study was testing the efficacy of features extracted using trace transform, we used one of the most widely employed distance measure: Mahalanobis distance measure. We did not compare it with other distance measures as it would be a separate topic of study by itself.

With 16+7+6 functionals listed in Table 1, we can extract 16*7*6= 672 features. For this study, we used a total of 20*7*6 = 840 features. Since we found only the first 672 features to be statistically significant for use in classification, we neglect the trace functionals 17–20 during the final classification.

2.3 Classification

Classification was done using three support vector machine (SVM) kernels and probabilistic neural network (PNN). The accuracy of PNN was further improved using Genetic Algorithms.

2.3.1 Support vector machine

The SVM is a linear classifier which uses a kernel trick to classify features in a nonlinear space. The SVM simultaneously maximizes the distance between the patterns and the class separating hyper-plane for both the classes. It has higher generalization ability in the sense that it can classify unseen new data accurately. Generally, nonlinear patterns are not separable in the original feature space, and hence, a nonlinear kernel transformation is necessary [18]. If is the perpendicular vector to the class separating hyper-plane, the SVM optimization problem will be

where are the Lagrange multipliers under the constraint, \(\alpha _{i}\ge 0\) and \(k(x_{i},x_{j})\) is the kernel inner product to transform the features into high dimensional kernel space. The \(y_{i}\) and \(y_{j}\) are the targets of ith and jth pattern, respectively. There are numerous kernel functions, and the kernels chosen are highly application specific. In the current study, quadratic, polynomial, and radial basis function (RBF) kernels are used which are given in Eqs. (6, 7, 8), respectively.

where \(\sigma\) is the width parameter of RBF kernel.

2.3.2 Probabilistic neural network

It consists of three layers: input layer, pattern layer, and category layer. The input layer consists of as many numbers of nodes as the number of features [28]. The pattern layer consists of as many numbers of nodes equal to the number of samples in the training set. The category layer consists of as many numbers of nodes as the number of classes present in the data [4]. The connection weight between the input layer and pattern layer is obtained by normalizing the feature vector as,

Each sample comprising of feature vector at the input nodes is connected to only one node in the pattern layer. Each node in the pattern layer is connected to only one node in the category layer for which the sample belongs. During testing of a sample, the feature vector is normalized prior to the operation. The normalized feature vector is multiplied with the connection weights between input and pattern units to obtain the pattern node output as,

If the given pattern node is connected to the category node, then the response at the category node is incremented as,

Finally, the classification of test sample is performed as,

As seen from Eq. (11), the output at category node is a function of \(z_{k}\) and \(\sigma\), the spread parameter of the PNN. Depending on \(\sigma\), the output at the category node changes, thereby affecting the accuracy.

2.3.3 Optimization of PNN using genetic algorithms

Genetic algorithms (GA) are an evolutionary and population-based optimization method. In the present study, this method is used to find the consistent optimal spread of a PNN classifier such that it provides the highest discrimination between classes. A GA consists of coding and decoding of populations, fitness function evaluation, reproduction, crossover, mutation, and test for convergence of the algorithm. The GA initially codes the problem variables into binary-valued strings. Each such string has a relative importance toward the final optimization goal . The relative importance of each string is computed by evaluating the fitness function. Based on the fitness function, the fit strings (having higher fitness function value) are reproduced or multiplied in numbers and less fit solutions are discarded. These fit solutions are made to crossover [17].

A location (string position) is defined, and the string was cut into two pieces. When such two pieces belonging to different strings are combined with each other, the operation is called crossover. A few bits in the strings were flipped in the mutation operation, so that it would avoid convergence of the solution to a local optimum. The three operations, reproduction, crossover, and mutation were repeated until the fitness function becomes steady and will not be improved with further iterations. In the current study, a population size of 30 was chosen. In every generation (iteration), there would be 30 solutions. Initially, 30 solutions were randomly chosen. Out of these 30 solutions, the solutions leading higher accuracy ( high fitness function) were multiplied and the one leading low accuracy were discarded. The fit solutions were encoded into binary strings and reproduction, crossover and mutation were performed iteratively until all solutions in a generation were more or less same, and there would not be any further improvement over iterations. In the case of this implementation, the GA has a population size of 30. In the first generation, 30 different spread values are chosen randomly. In 52 generations, the GA converges. In the 52nd generation, all individual solutions will have same spread value, which is 0.984, obtained by the optimization process.

3 Results

The current work has provided good results for classification of normal and DR classes of fundus images. We have obtained accuracies comparable to popular works in the literature as discussed in the next section. We have obtained an accuracy of 99.41 and 99.12 % using PNN-GA and SVM-Quadratic, respectively. One of the reason for a high accuracy rate is due to the spread of the data which has a high between class-scatter and low within class-scatter. This results in good separability between the two classes. Thus, it is clear that apart from the discriminative capacity of the classifiers, the feature extraction technique works well in producing features with a good spread. From visual inspection of features, we find that the DR data have a wider spread than normal data. This is due to the fact that we have four different stages of abnormalities grouped into the same class.

PNN classifier, when used on our feature set, provided an accuracy which was lower than SVM kernels. Since the accuracy rates did not match with the feature vector spread and distribution, Genetic Algorithms, which is an optimization technique, was used to optimize the classifier parameters to improve classification accuracy. The optimization technique helped in improving the classification accuracy to a considerable extent as seen from Table 2.

GA is a population-based optimization technique. It chooses best solution from an ensemble of solutions. In our study, we have used a population size of 30. It means the GA finds 30 solutions in a generation/iteration, and out of them, it chooses fit solutions to multiply it in the next generations. The process is repeated many times until the algorithm converges. In the first generation, the GA finds a few solutions which are diverse and contain both fit and bad (unwanted) solutions. From this, it will choose fit solutions and multiply them using reproduction, crossover, and mutation operations. In the first generation, all fit and bad solutions will be present. From these, the GA will choose the fit solutions, and the process of reproduction, crossover, and mutation continues over generations until convergence. It is seen that most of the solutions are fit, with high accuracy. Since this accuracy is obtained over all the individuals in a generation, and such (similar) solutions were obtained in subsequent generations, the algorithm is inferred to be converged. Table 2 shows the classification accuracy of different classifiers for ten-folds. The optimum spread value of the PNN obtained after optimization is 0.984 for which a highest accuracy of 99.41 % is obtained. The results obtained using our technique has been provided in Fig. 3 and Table 2.

To validate the accuracy of results obtained using our in-house database, we replicated our experiments on an open database (MESSIDOR) [13] choosing the same number of images as we did with our in-house database. These images were chosen randomly using a random number generator. We performed the validation experiments with the same set of measurements and feature ranking techniques along with similar classifier settings. The results of this are provided in Fig. 4 and Table 3.

4 Discussion

A new set of functionals for use with trace transform has been proposed, keeping in mind the nature of images in hand. The functionals are new in a way that most of the mathematical definitions utilized for extracting features used in this study have not been tried before in other studies related to computer-aided diagnosis of DR. In this case, we have used normal and DR retinal images for a two-class classification problem. Since we tried to study various functionals to test the efficacy of the same with automated DR classification, we did not further subdivide DR classes into mild, moderate, proliferative, and severe classes. As a start, we have found that the technique works very well with a two-class problem. Also, we experimented with numerous functionals in all three stages of the trace transform. In Table 1, we have given only the functionals which provided us with good results. The development of functionals in itself is an exciting and challenging task since we have to choose functionals which best represent the images in consideration. Having said that, it is also imperative to note that the choosing of functionals is not a rigid task, and it is the researcher’s prerogative to choose the one which is a good descriptor of the sample in hand. By stating the functionals are not rigid, it is implied that the functionals presented in our study are not exhaustive, and the engineer is free to alter the functionals according to his needs as long as the functionals are invariant to rotation, translation, and scaling. With this in mind, the functionals developed in our work have shown to be very effective in discriminating the two classes.

Also, we have tried implementing a GA-based optimization technique which produced a visible improvement in classification accuracy for a PNN classifier. Since in the current work the emphasis has been more on the development of a new framework for feature extraction using trace transform functional, we opted to test only a few classifiers. This has not hindered the pattern recognition framework in any way since we have obtained good accuracy rates for all the classifiers considered. In fact, the accuracy rates obtained in the present work are one of the best in the literature as seen from Table 4.

An adaptation of extracting features through trace transform has been attempted for application in automated detection of DR images for a two-class classification problem. From a study of available literature, we find that our method has provided one of the best classification accuracies for the problem in hand. We believe that since the functionals used in trace transforms are not rigid, the usage of these functionals can be adapted and fine tuned or tweaked according to the engineer’s needs. Since trace transform functionals capture image information as a whole, we believe that it would be difficult to identify and differentiate lesions arising from other diseases, unless the lesion structure and texture are different from lesions of DR. Also, since this is an attempt to see whether trace transform functionals can indeed be used for identification of different classes of DR, we believe that this could be the future direction of our work to identify and differentiate between lesions from different classes and diseases. As a direct impact, the proposed methodology can be adapted and extended to other areas of medical image analysis as well. The features extracted using this technique, classified using SVM kernels, and PNN-GA provided a classification accuracy of 99.44 and 99.12 %, respectively.

With respect to processing time for the images, processing was done using a Core 2 Duo processor @2.4GhZ with a 4.0GB RAM. All processing was done using Matlab. The average time required to extract 840 features for one image was 12.06 min for a high-resolution 3.1 megapixel image. Since in real-time processing we would not require an image of such high resolution, the processing time can considerably reduce. Further reduction in time could be obtained by extracting only the 672 statistically significant features that have been identified in this study. Validation of our study was done by testing the same algorithm and workflow with 170 normal images and 170 DR images from an open database MESSIDOR [31] with good results being obtained for the same.

References

Akram MU, Khalid S, Khan SA (2013) Identification and classification of microaneurysms for early detection of diabetic retinopathy, Pattern Recognition, 46(1), 2013. ISSN 107–116:0031–3203. doi:10.1016/j.patcog.2012.07.002

Bob Zhang, Fakhri Karray, Qin Li, Lei Zhang (2012) Sparse representation classifier for microaneurysm detection and retinal blood vessel extraction. Inf Sci, Volume 200, 1 Oct 2012, Pages 78–90, ISSN 0020–0255. doi:10.1016/j.ins.2012.03.003

Clara IS, María G, Agustín M, María IL, Roberto H (2009) Retinal image analysis based on mixture models to detect hard exudates. Med Image Anal, 13(4): 650–658, ISSN 1361–8415. doi:10.1016/j.media.2009.05.005

Duda RO, Hart PE, Stork DG (2001) Pattern classification, 2nd edn. Wiley, USA

Fleming AD, Philip S, Goatman KA, Sharp PF, Olson JA (2012) Automated clarity assessment of retinal images using regionally based structural and statistical measures, Med Eng Phys, 34(7), 2012. ISSN 849–859:1350–4533. doi:10.1016/j.medengphy.2011.09.027

Ganesan K, Acharya UR, Chua CK, Lim CM, Abraham KT One-class classification of mammograms using trace transform functionals, instrumentation and measurement. IEEE Trans, vol.PP, no.99, pp. 1,1, 0, doi:10.1109/TIM.2013.2278562

García M, López MI, Álvarez D, Hornero R (2010) Assessment of four neural network based classifiers to automatically detect red lesions in retinal images. Med Eng Phys, 32(10). ISSN 1085–1093:1350–4533. doi:10.1016/j.medengphy.2010.07.014

García M, Sánchez CI, López MI, Abásolo D, Hornero R (2009) Neural network based detection of hard exudates in retinal images. Comput Method Prog Biomed, 93(1). ISSN 9–19:0169–2607. doi:10.1016/j.cmpb.2008.07.006

Gen M, Cheng R (1999) Genetic algorithms and engineering optimization. Vol. 7. Wiley-interscience

Giancardo L, Meriaudeau F, Karnowski TP, Li Y, Garg S, Tobin KW, Jr., Edward C (2012) Exudate-based diabetic macular edema detection in fundus images using publicly available datasets. Med Image Analysis, 16(1). ISSN 216–226:1361–8415. doi:10.1016/j.media.2011.07.004

Giancardo L, Meriaudeau F, Karnowski TP, Li Y, Tobin KW, Chaum E (2011) Microaneurysm detection with radon transform-based classification on retina images, Engineering in Medicine and Biology Society, EMBC, 2011 Annual International Conference of the IEEE, vol., no., pp. 5939,5942, 30 Aug 2011–3 Sept 2011. doi:10.1109/IEMBS.2011.6091562

Goldberg DE (1989) Genetic algorithms in search, optimization, and machine learning. Addison-Wesley Press, Cambridge

Kadyrov A , Petrou M The trace transform and its applications, School of Electronic Engineering, Informational Technology and Mathematics, University of Surrey, Guildford, GU27XH, UK

Kavitha D, Devi SS (2005) Automatic detection of optic disk and exudates in retinal images. In: Proceedings of the International Conference in Intelligent Sensing and Information Processing, Chennai, pp. 501–506

Köse Cemal, ŞU, İkibaş C, Erdöl H (2012) Simple methods for segmentation and measurement of diabetic retinopathy lesions in retinal fundus images. Comput Method Prog Biomed, 107(2). ISSN 274–293:0169–2607. doi:10.1016/j.cmpb.2011.06.007

Mookiah MRK, Rajendra Acharya U, Martis RJ, Chua CK, Lim CM, Ng EYK, Laude A (2013) Evolutionary algorithm based classifier parameter tuning for automatic diabetic retinopathy grading: a hybrid feature extraction approach, knowledge-based systems. 39, February 2013, pp. 9–22, ISSN 0950–7051, http://dx.doi.org/10.1016/j.knosys.2012.09.008

Muller KR, Mika S, Ratsch G, Tsuda K, Scholkopf B (2001) An introduction to kernel based learning algorithms. IEEE Trans Neural Netw 12(2):181–201

Niemeijer M, Abràmoff MD, van Ginneken B (2006) Image structure clustering for image quality verification of color retina images in diabetic retinopathy screening. Med Image Analysis, 10(6). ISSN 888–898:1361–8415. doi:10.1016/j.media.2006.09.006

Noronha K, Acharya UR, Kamath S, Bhandary SV, Nayak KP (2012) Decision support system for diabetic retinopathy using discrete wavelet transform, Proceedings of the Institution of Mechanical Engineers, Part H: J Eng Med, 227(3)

Patton N, Aslam TM, MacGillivray T, Deary IJ, Dhillon B, Eikelboom RH, Yogesan K, Constable IJ (2006) Retinal image analysis: concepts, applications and potential. Prog Retin Eye Res 25:99–127

Philips R, Forrester J, Sharp P (1993) Automated detection and quantification of retinal exudates. Graefes Arch Clin Exp Ophthalmol 231(2):90–94

Quellec G, Lamard M, Abràmoff MD, Decencière E, Lay B, Erginay A, Cochener B, Cazuguel G (2012) A multiple-instance learning framework for diabetic retinopathy screening. Med Image Analysis, 16(6), 2012. ISSN 1228–1240:1361–8415. doi:10.1016/j.media.2012.06.003

Qureshi RJ, Kovacs L, Harangi B, Nagy B, Peto T, Hajdu A (2012) Combining algorithms for automatic detection of optic disc and macula in fundus images. Comput Vis Image Underst 116(1). ISSN 138–145:1077–3142. doi:10.1016/j.cviu.2011.09.001

Saleh MD, Eswaran C (2012) An automated decision-support system for non-proliferative diabetic retinopathy disease based on MAs and HAs detection, Comput Method Prog Biomed 108(1). ISSN 186–196:0169–2607. doi:10.1016/j.cmpb.2012.03.004

Sánchez CI, Roberto H, López MI, Aboy M, Poza J, Abásolo D (2008) A novel automatic image processing algorithm for detection of hard exudates based on retinal image analysis. Med Eng Phys, 30(3), 2008. ISSN 350–357:1350–4533. doi:10.1016/j.medengphy.2007.04.010

Singer DE, Nathan DM, Fogel HA, Schachat AP (1992) Screening for diabetic retinopathy. Ann Int Med 116(8):660–671

Wang J, Downs T (2003) Tuning pattern classifier parameters using a genetic algorithm with an application in mobile robotics, evolutionary computation, 2003. CEC ’03. The 2003 Congress on, 1: 581, 586 Vol. 1, 8–12 Dec. 2003 doi:10.1109/CEC.2003.1299628

Webb AR (2002) Statistical pattern recognition. 2nd edition, Wiley, ISBN: 0470845147

Winder RJ, Morrow PJ, McRitchie IN, Bailie JR, Hart PM (2009) Algorithms for digital image processing in diabetic retinopathy. Comput Med Imaging Gr 33(8). ISSN 608–622:0895–6111. doi:10.1016/j.compmedimag.2009.06.003

World Health Organisation (2005) Prevention of blindness from diabetes mellitus: report of a WHO consultation in Geneva, Switzerland. WHO Library Cataloguing-in-Publication Data, Switzerland

Acknowledgments

The study was funded by the NHG CSCS/12006 grant.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ganesan, K., Martis, R.J., Acharya, U.R. et al. Computer-aided diabetic retinopathy detection using trace transforms on digital fundus images. Med Biol Eng Comput 52, 663–672 (2014). https://doi.org/10.1007/s11517-014-1167-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11517-014-1167-5