Abstract

Slope engineering is a complex nonlinear system. It is difficult to respond with a high level of precision and efficiency requirements for stability assessment using conventional theoretical analysis and numerical computation. An ensemble learning algorithm for solving highly nonlinear problems is introduced in this paper to study the stability of 444 slope cases. Different ensemble learning methods [AdaBoost, gradient boosting machine (GBM), bagging, extra trees (ET), random forest (RF), hist gradient boosting, voting and stacking] for slope stability assessment are studied and compared to make the best use of the large variety of existing statistical and ensemble learning methods collected. Six potential relevant indicators, \(\gamma\), C, \(\varphi\), \(\beta\), H and \(r_{u}\), are chosen as the prediction indicators. The tenfold CV method is used to improve the generalization ability of the classification models. By analysing the evaluation indicators AUC, accuracy, kappa value and log loss, the stacking model shows the best performance with the highest AUC (0.9452), accuracy (84.74%), kappa value (0.6910) and lowest log loss (0.3282), followed by ET, RF, GBM and bagging models. The analysis of engineering examples shows that the ensemble learning algorithm can deal with this relationship well and give accurate and reliable prediction results, which has good applicability for slope stability evaluation. Additionally, geotechnical material variables are found to be the most influential variables for slope stability prediction.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Due to the needs of economic development and the expansion of the scope of geotechnical engineering, as an essential environment for human survival, slopes are a crucial part of engineering construction, and research on their stability is constantly developing and advancing.

Slope stability evaluation is a crucial research aspect in slope engineering. However, the slope deformation and failure process is a very complex geological process. The factors that affect the slope stability are uncertain [27], so it is not easy to accurately evaluate slope stability with traditional theoretical analysis [13] and numerical calculations (such as the finite element method [20], discontinuous deformation analysis [60, 61, 70], virtual element method [33, 48] and phase-field [65,66,67]). Slope engineering is an uncertain, nonlinear, dynamic and open complex system. Its stability is comprehensively affected by geological and engineering factors with randomness, fuzziness, variability and other uncertain characteristics. There is a highly nonlinear relationship between slope stability and influencing factors. Therefore, the inevitable slope stability research trend is to break through traditional deterministic thoughts and fully consider the uncertainty caused by various slope factors. Slope stability analysis can be regarded as a pattern recognition task [59]. Based on the slope information database [51], some scholars use machine learning (ML) [35, 38, 50] to judge slope stability. Lu et al. [36] used an artificial neural network (ANN) [2] to predict the failure of circular slopes, which showed that the ANN has obvious advantages compared with the maximum likelihood estimation (MLE) technique. Samui [44] studied the application of a support vector machine (SVM) to predict slope stability. Sakellariou et al. [43] established a series of ANNs to predict the safety factor of the slope wedge failure mechanism and circular failure mechanism and evaluate their stability. Dickson et al. [9] introduced three ML algorithms to analyse the control of coastal cliff landslides. Yan et al. [57] studied the application of the Bayes discriminant analysis method in the stability prediction of open pit slopes. Zhou et al. [63] introduced six supervised learning methods to predict the stability of hard rock pillars, and the performance of these algorithms was compared and analysed. Cheng and Hoang [8] used a novel bee colony optimized support vector classifier to evaluate the slope collapse caused by a typhoon. Lee et al. [29] and Lin et al. [32] established a slope stability prediction model using an ANN. Based on ML, Hoang et al. [23] used the least squares support vector classification (LS-SVC) and firefly algorithm (FA) methods to study 168 slope cases. All the above artificial intelligence models can help us better understand the slope status, but each model has advantages and disadvantages. Due to the complexity of the problem, there are still some imperfections in these methods. Different algorithms have different sensitivities to the same type of data, and the classification accuracy of the same category under different algorithms will also vary. Each classifier has its limitations. The focus of slope stability evaluation research is the ML algorithm, which constantly looks for newer and more robust ML algorithms to establish slope stability evaluation models to achieve better prediction results. Therefore, it is necessary to find intelligent algorithms with high accuracy and strong applicability. Ensemble learning trains multiple algorithms to achieve complementary advantages and obtain better slope stability prediction results than the single algorithm.

Ensemble learning forms a more comprehensive 'strong learner' through the combination of multiple models. Ensemble learning can obtain more accurate prediction results, with better generalization performance and broader applications [10, 11]. Ensemble learning has been successfully applied to character recognition [12], medical diagnosis [62], facial recognition [21] and seismic wave classification [46]. Lin et al. [34] applied four supervised learning algorithms (random forest (RF), gravitational search algorithm (GSA), SVM and naive Bayesian) to slope stability evaluation. They carried out a comparative analysis to prove that the performance of RF and GSA is better than other algorithms. Based on an updated database of case histories, Zhou et al. [64] proposed a prediction method for slope stability analysis using the gradient boosting machine (GBM) method. Qi and Tang [42] used FA to adjust the superparameters and verified and discussed the feasibility of six comprehensive methods (logistic regression (LR), RF, decision trees (DT), GBM, multilayer perceptron neural network and SVM) in slope stability prediction. Qi and Tang [41] proposed a slope stability evaluation algorithm, adaptive boosted decision trees (ABDT), quadratic discriminant analysis (QDA), SVM, ANN, Gaussian process classification (GPC) and K‐nearest neighbours (KNN) as individual classifiers and a weighted majority voting method as the combined classifier. The results show that the hybrid ensemble method greatly improves slope stability prediction performance. Previous studies have shown that ensemble learning can provide a feasible tool for constructing a slope stability discrimination system, which allows the classification of slope stability. However, there is no comprehensive comparison of classifier ensemble algorithms over the prediction of slope stability. To improve the prediction accuracy of nonlinear slope behaviour and establish a simple model that can be widely promoted, it is necessary to continue exploring an ensemble algorithm that is more suitable for analysing nonlinear slope behaviour.

Because of this, the primary purpose of this paper is to compare the applicability of different classifier ensemble algorithms in slope stability analysis. To achieve this goal, we developed a research method for comparing the performance of different classifier ensemble algorithms in Scikit-learn [40], including bagging, RF, AdaBoost, GBM, stacking, voting and extra trees (ET) and hist gradient boosting classifier (HGB). These ensemble learning algorithms are specifically selected because they are increasingly used in engineering, but they have not been thoroughly compared with each other. In Sect. 2, the ensemble learning algorithm is briefly introduced, and Sect. 3 analyses the slope dataset. In Sect. 4, these methods are applied to classify slope stability, and the results are discussed according to performance criteria. The conclusion of this study is presented in the final section.

2 Ensemble learning methods

Ensemble learning [10] is a process of effective fusion to form a strong classifier based on individual learners (as shown in Fig. 1). According to the difference of the combination methods of base learners, ensemble learning can be divided into parallel topology structure (representing algorithm is bagging), serial topology structure (representing algorithm is boosting) and hybrid topology structure (representing algorithm is stacking). In this study, we consider several common ensemble learning algorithms in Scikit-learn. All these methods are increasingly being used and have efficient implementations; some of these methods have been used in slope classification [58, 64] with good results; the resulting model allows rapid slope stability prediction. The following is only a brief introduction to each ensemble classification algorithm. For a more in-depth discussion, please refer to the relevant literature [23, 24].

2.1 Bagging

Bagging [4] is one of the simplest and most efficient ensemble learning algorithms. Figure 2 shows the structure diagram of bagging. First, m training samples are extracted from the original training samples. After the T-round extraction, T training sets can be obtained, and then the T training sets are used to train each 'weak learner'. Finally, each 'weak learner' result is combined according to the average or voting method. Each time the training set is extracted, all the features can be used for training, or some features can be randomly selected. There is no dependency among the 'weak learners', and they are all connected in parallel. Bagging can not only uniformly sample datasets but also realize parallel computing. For this reason, learners based on the bagging framework have better generalization ability and higher computing speed.

2.2 Random forest

Random forest (RF) is a method based on bagging and decision trees (DTs) [19]. Unlike the traditional decision tree, RF randomly extracts n subsamples containing k attributes from the K attributes contained in N samples and then selects an optimal attribute from n subsamples for partitioning. RF combines the final prediction results based on the performance of multiple decision trees. RF can process high-dimensional data without feature selection and has strong adaptability to the dataset.

2.3 Boosting

Boosting [45] trains each 'weak learner' in an iterative manner. As shown in Fig. 3, 'weak learner 1' is trained on the original training set 1 and adjusts the training sample distribution according to its prediction results to obtain training set 2. Train on training set 2 to obtain 'weak learner 2'. The above process is repeated until the termination condition is satisfied, and the final prediction result is the weighted sum of the results of each base learner. Boosting is a forward distributed boosting algorithm. Each calculation step is affected by the previous step, and the weight of the miscalculated samples increases to improve the overall predictive ability of the algorithm model.

Adaptive boosting [17] (AdaBoost) is the most typical. AdaBoost can combine several low-precision classifiers and automatically adjust the sample weights according to the classification effect to synthesize a strong classifier with higher precision. AdaBoost is sensitive to outliers and noisy data.

2.4 Gradient boosting machine

The gradient boosting machine (GBM) [18] is a boosting algorithm. The GBM algorithm searches for weak classifiers along the gradient descent direction and trains an additive model to combine all weak classifiers. The loss function is used to express the fitting degree between the test set and training set. The lower the loss function is, the higher the prediction accuracy and the more reliable the result. GBM includes GBDT (gradient boosting decision tree), XGBoost (extreme gradient boosting), LightGBM (light gradient boosting machine) and CatBoost (categorical boosting).

Hist gradient boosting (HGB) [1], or histogram-based gradient boosting, is a boosting ensemble that uses feature histograms for fast and accurate selection of best splits. Light GBM inspires its implementation. HGB is more efficient than GBM in terms of memory consumption and processing speed.

2.5 Stacking

The stacking algorithm [53] has the advantages of simple structure, high performance and strong classification ability. The stacking structure diagram is shown in Fig. 4. The model of the first layer is called the base model, and the model of the second layer is called the meta-model. Using the predicted values of each model in the first layer as the input characteristics of the next layer, stacking has a stronger nonlinear expression ability than a single prediction model and can further reduce the generalization error. Based on the stacking algorithm, this paper uses GBM, RF, KNN, DT, SVC, LR, ET, bagging and Gaussian naive Bayes (GaussianNB) as the primary learners. LR is the secondary learner, and 10 times fivefold cross-training is used to avoid overfitting between the primary and second training models.

Stacking is a general method for achieving higher prediction accuracy by combining high-level models with low-level models. However, stacking generalization does not consider the data distribution and is suitable for standard datasets other than unbalanced data.

Bagging, boosting and stacking algorithms improve machine learning performance by merging multiple models. Bagging reduces model variance, boosting reduces model deviation and stacking improves model prediction results.

2.6 Voting

The voting ensemble (majority vote set) is a machine learning model set that combines the predictions of several other models. Voting prediction classification methods include hard voting and soft voting. The hard vote set summarizes the votes from the clear class labels of other models and predicts the class with the most votes. A soft voting set includes the sum of predicted probabilities of the class labels and predicting the class label with a greater probability addition. Regardless of the machine learning model, the voting ensemble can be used and considered a meta-model. The voting method is fast and straightforward. It only requires the prediction results of the established model on the test dataset without retraining.

2.7 Extra trees

Extra trees (ET) [47] and RF have a similar structure. Unlike RF, ET uses the entire dataset to train each decision tree to utilize all samples fully. In addition, when ET trains T decision trees, N input features are randomly selected to form T subdatasets. In node partitioning, the subdataset is selected at random by the partition threshold of each characteristic, and the feature with the best partition effect is selected as the optimal partition attribute according to the specified threshold. According to the theory of 'error-ambiguity decomposition', the greater the difference between 'weak learners' and the higher the prediction accuracy of each 'weak learner', the better the integration effect. The size of the ET decision tree is generally larger than that of RF, but the variance in the model is minor.

3 Database and variables

3.1 Parameter analysis

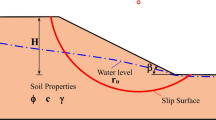

When establishing the classification model, the factors affecting slope stability are selected first. The principles of feature selection are as follows: (a) the meaningful features are selected from the given feature set to train the model to reduce the dimension disaster problem; (b) the complexity of the learning process is simplified by removing some features that are not related to the subsequent learning process. At present, gravity \(\gamma\), cohesion C, internal friction angle \(\varphi\), slope angle \(\beta\), slope height H and pore water ratio \(r_{u}\) have extensive use in slope stability prediction. According to the training, the results are good, and the data can easily be obtained [58]. It should be noted that according to engineering experience, the stability of the slope is also affected by other factors, such as the structure type of rock and soil, joints and the relationship between the joint surface and slope angle. However, it is difficult to obtain the values of these indicators accurately. The results indicated that slope stability could be well predicted without considering these indices [64].

3.2 Case data and preliminary analysis

From a performance point of view, measurements and comparisons have been made for the ensemble learning algorithm, 444 engineering examples of slopes were collected directly from the literature [5,6,7, 14,15,16, 22, 26, 30, 31, 36, 37, 49, 51, 54,55,56, 68, 69], and all the data are from these studies without any processing. A detailed description of the data is provided in Appendix. As shown in Fig. 5, the box diagram describes all the input features used in the slope stability prediction model. From these figures, the range and average distribution of all input features can be found directly. Except for \(\beta\), most variables have noncentre medians to the boxes. This indicates an asymmetric distribution of these variables. Additionally, except for \(\varphi\) and \(\beta\), there are several outliers in all input features.

There are two types of slope stability in the dataset: stable (S, 224 cases) and unstable (F, 220 cases). The variable correlation matrix is shown in Fig. 6. There is a pairwise relationship between the correlation coefficients of the variables related to slope stability. The red lines outside the diagonal are regression lines, which reflect the degree of linear correlation between variables. The diagonal of the figure is the marginal frequency distribution of each parameter. The red lines on the diagonal represent the empirical probability density curves. The numbers in the upper panels indicate the correlation between the two variables. The red stars (‘***’, ‘**’, ‘*;, ‘.’, ‘ ’) < = > p values (0, 0.001, 0.01, 0.05, 0.1, 1). As shown in Fig. 6, the correlation is rather poor with most variables (R < 0.5). There is a significant correlation between \(\gamma\) and \(\varphi\) (R = 0.73), \(\beta\) (R = 0.74), a significant correlation between \(\beta\) and \(\varphi\) (R = 0.70), and a moderate correlation between \(\gamma\) and H (R = 0.49). \(r_{u}\) is negatively correlated with \(\gamma\) (R = − 0.20) and \(\varphi\) (R = − 0.24).

Furthermore, it is evident that the dataset is widely distributed and that the distribution of the variable is nonsymmetrical. Principal component analysis (PCA) summarizes and visualizes the collected slope data and explains its variance–covariance structure. As shown in Table 1, the contribution rate of PC1 is the highest, 52.26%, and the contribution rate of PC6 is the lowest, only 3.65%. PCA allows us to visualize the classification capabilities of the slope dataset on a two-dimensional plane. According to the PCA results (as shown in Fig. 7a), the components of the first and second dimensions were visualized (Fig. 7b). As shown in Fig. 7b, the data distribution regions of the two types of slope states on the first two components are relatively close, with overlapping areas. In addition, some indicators with significant skewness will affect the classification results of slope stability, as shown in Fig. 1. To avoid the interference of large fluctuation data on the model performance, when the difference in the order of magnitude of each control factor is too large or the same control factor is discrete, it is necessary to standardize the sample data. This article uses the Quantile Transformer function for data normalization.

Ideally, for the sake of precise classification, each value of all features can only have one class label (stable or failure) in the diagram. Slope stability is classified according to different indicators as shown in Fig. 8. It can be observed from this figure that, in some events, the same indicator's value corresponds to both types of slope states. The possible reason is that the data are not linearly separable and it is difficult to define boundaries of feature values.

4 Development of slope stability assessment model

4.1 Data pre-processing

To form and control the performance of the classifiers, as shown in Fig. 9, a stratified and random sampling method is used to split the dataset into training datasets (90%) and test datasets (10%). The tenfold cross-validation (CV) and grid search method are adopted to obtain optimal parameters of the ensemble learning models. In the process of tenfold CV, the training is divided into random tenfold training, nine of which are trained by the ensemble learning model, and the remaining training is used as the verification set to verify the model's performance. The training and testing process is repeated 10 times, and 10 different subsets are used as testing sets. Therefore, the overall performance of the ensemble learning algorithm on the training set is calculated by simply averaging the 10 training results.

4.2 Model performance evaluation

For slope stability classification problems, receiver operating characteristic (ROC) curves [3], log loss, accuracy and kappa coefficients [28] are the main indicators for evaluating the performance of classifiers. The ROC curve is a good comprehensive evaluation index without considering the sample distribution, making a credible performance evaluation of the algorithm results. The area under the curve (AUC) is enclosed by the ROC curve and the coordinate axis. AUC represents the probability that a positive sample is more likely to be predicted as a positive class than a negative sample to be a positive sample, which is often used to measure the classification performance of a classifier on unbalanced data. Different AUC values reflect different classification effects [3, 25]: 0.900–1.00 represents outstanding discrimination, 0.800–0.900 represents excellent discrimination, and 0.700–0.800 represents acceptable discrimination.

Log loss is a performance metric used to evaluate the probability of members of a given class. The log loss can be given using Eq. 1.

where n represents the total number of samples and m is the number of types of slope stability. When sample i belongs to class j, \(y_{ij}\) is 1; otherwise, \(y_{ij}\) is 0. Furthermore, \(p_{ij}\) represents the probability of sample i belonging to class j. For a binary classification problem, the log loss function is as follows:

Accuracy is defined as the percentage of the number of samples correctly classified relative to the total number of samples selected for validation, and Eq. 3 calculates the precision. The accuracy can reflect the overall classification accuracy to a certain extent but cannot directly reflect the different classes' prediction results. The kappa coefficient is a comprehensive classifier evaluation index [63] mainly used to reflect the proportion of error reduction compared with random classification. Equation 4 is the expression of kappa.

where r represents the number of categories to be classified by the confusion matrix. zii is the value of row i and column i of the confusion matrix, the number of samples correctly classified; zi+ and z+i represent the sums of row i and column i, respectively, of the confusion matrix.

The distribution range of the kappa coefficient is [− 1,1], where − 1 represents the worst classification effect, 0 represents the classification performance of the classifier equal to the random classifier and 1 represents the best classification performance. Different kappa coefficients reflect different classification effects: 0.810–1.000 (perfect), 0.610–0.800 (substantial), 0.410–0.600 (moderate), 0.210–0.400 (poor), 0.000–0.200 (slight) and − 1.000–0.000 (total disagreement). Generally, 0.4 is taken as the threshold of the kappa coefficient [63].

4.3 Model development and parameter optimization

This paper studies the applicability of eight common ensemble learning algorithms (GBM, AdaBoost, bagging, ET, RF, HGB, voting and stacking) in slope stability classification. Most classifiers (GBM, AdaBoost, bagging, ET and RF) contain parameters that need to be adjusted. The ‘gridsearchcv’ function in sklearn performs a tuning parameter grid for many classification problems, which allows a single consistent environment to train each ensemble learning method and tune their related parameters. After evaluating the optimal parameters, the final slope stability prediction models are established using the entire training dataset. To optimize the critical parameters of each integrated training model and obtain the best discrimination performance, a tenfold reputation of CV is used to select the ‘optimal’ value of the adjustment parameter. As a binary classification problem, slope stability is a trade-off between sensitivity and specificity. AUC represents the model's ability to distinguish between positive (stable) and negative (failure) categories. Therefore, the AUC is used to evaluate the performance of the parameters in the repeated tenfold CV process. Table 2 shows the hyperparameter settings and prediction results of the ensemble learning algorithms in the current research.

5 Results and discussion

5.1 Discriminant results and performance analysis

To compare the performance of different classification algorithms, the KNN, LR, GaussianNB, MLPClassifier (MLPC) and SVM methods are also used for slope stability classification. The prediction results of the model are shown in Fig. 10. The AUC of SVM is 0.898, LR is 0.752, KNN is 0.934, GaussianNB is 0.695, MLPC is 0.839, RF is 0.967, GBM is 0.961, ET is 0.966, AdaBoost is 0.961, bagging is 0.965 and HGBC is 0.959. It is worth noting that the AUC determines the classifier's performance greatly, and 1.0 represents the ideal performance. The ROC curves of ensemble learning (RF, GBM, ET, AdaBoost, bagging and HGB) are slightly more approximated to the left and upper axes than the other models. Their AUC values were above 0.90, slightly higher than those of KNN, and significantly higher than those of SVM, LR and MLPC. It also shows that ensemble learning is more suitable for slope stability prediction, and GaussianNB has the lowest prediction performance. The AUC value of the ensemble learning model constructed by the average predicted value of SVM, LR, KNN, GaussianNB, MLPC, RF, GBM, ET, AdaBoost, bagging and HGB is 0.961. These facts convincingly demonstrate that ensemble learning has a good ability to predict slope stability.

According to the visual comparison, one can easily observe the performance of all the ensemble learning classification methods, as shown in Figs. 11 and 12. The boxplots in Fig. 11 report the performance of eight classifiers on a repeated tenfold CV procedure with average AUC, accuracy, log loss and kappa. Since these models use the same dataset, it is meaningful to analyse the differences between the calculated results. The distinction of the upper and lower quartiles in the stacking classifier is smaller than that of the other methods, indicating that the accuracy variance is relatively low in different iterations (see Fig. 11). Figure 12 shows that the average AUC of each ensemble classifier in slope stability prediction is between [0.8992–0.9452]. The stacking model has the highest AUC, with an AUC of 0.9452, followed by the GBM method (AUC = 0.9293), RF method (AUC = 0.9210) and ET method (AUC = 0.9180). According to the consistency scale, the AUCs of all modelling techniques used in the model evaluation test set are excellent. As shown in Fig. 11, the accuracy and kappa value of stacking have the closest quartile range. The quartile range of the ROC value of GBM is the closest, but there are some outliers. The average value of log loss of each ensemble classifier in slope stability prediction falls into [0.3282–0.6860]. The lowest log loss is the stacking model, and the log loss is 0.3282, followed by the RF method (log loss = 0.3776) and ET method (log loss = 0.3894). Smaller log loss is better, with 0 representing a perfect log loss. AdaBoost performed relatively worse, with a log loss of 0.6860.

The average accuracy of the ensemble classifiers in slope stability prediction falls into the range of [82.07–84.74%]. Obviously, the accuracy of the stacking model is the highest (accuracy = 84.74%), and then the ET (accuracy = 83.53%), bagging (accuracy = 83.07%) and GBM (accuracy = 82.81%) models rank successively. Voting achieves the lowest average accuracy (82.07%). For the eight ensemble learning techniques, the performance (in terms of average kappa) falls into the range of (0.6377–0.8474). The kappa values of all ensemble learning models are above 0.4. The stacking predictor achieves the highest kappa (0.6910), followed by ET, bagging and GBM, with average kappa rates of 0.6703, 0.6590 and 0.6545, respectively.

According to the concordance scale, the kappa of the training set and test set of eight ensemble learning classification techniques in model calibration data is medium to substantial. It is clear that the kappa and accuracy of each ensemble learning classification algorithm are basically the same, but kappa is usually smaller than accuracy. The reason is that kappa can more comprehensively reflect the error information of the model, while accuracy only considers the diagonal element error in the confusion matrix and ignores the element error on the nondiagonal line.

The results show that ensemble learning models have good performance in predicting slope stability, which is feasible and reliable. We can observe the following facts. Compared with a single classifier, the stacking ensemble classifier has a relatively higher average AUC (0.9452), average accuracy (84.74%) and kappa average (0.6910) and a relatively lower average log loss (0.3282) on the testing set, indicating that its prediction performance is relatively higher, but the calculation intensity is high, and it takes the longest time to train; stacking has better generalization performance than voting. This research indicates that using a classifier ensemble, rather than searching for the ideal single classifier, may be more helpful for slope stability prediction.

5.2 Relative variable impact

Determining the sensitivity of the factors affecting slope stability is very important for evaluating slope stability and the design of support structures. A relative importance score is used in this study, and this is based on optimal ensemble learning (GBM, ET and RF) to study the sensitivity. The methods are selected according to their excellent performance on the test set. The variable importance ranking is obtained by averaging the tenfold CV feature selection results of RF, ET and GBM. Figure 13 shows the normalized score of variable importance. \(\gamma\) (score = 0.2309), C (score = 0.2007) and \(\varphi\) (score = 0.2003) are the most sensitive factors to slope stability, which indicates the importance of slope material variables. The correlation matrix of the variables is consistent with these results (Fig. 6), the component matrix and scores, and the literature results [52]. Therefore, the values of the material \(\gamma\), C and \(\varphi\) in artificial slopes must be reasonably and accurately selected based on specific indoor and field tests. In treating landslides, geomaterials' cohesive force and internal friction angle should be improved [39]. The importance scores of \(\beta\) and H are 0.1449 and 0.1395, respectively, indicating that geometric variables also affect slope stability. Optimizing these two variables in practical design is a feasible method for ensuring slope stability. It can also be found that the sensitivity of \(r_{u}\) (0.0837) is not as good as that of the first five features.

6 Conclusions

Ensemble learning algorithms (GBM, AdaBoost, bagging, ET, RF, HGB, voting and stacking) are introduced in this study to study the stability of 444 slope cases. Six potential relevant indicators, \(\gamma\), C, \(\varphi\), \(\beta\), H and \(r_{u}\), serve as indicators for prediction, and the generalization ability of the classification models is improved using the tenfold CV method. According to the analyses, the following conclusions are presented:

-

1.

The ROC curves of ensemble learning algorithms are closer to the left and top axes than other algorithms. The AUC of ensemble learning models is more than 0.900, slightly higher than KNN, SVM, LR and MLPC. The AUC value of the ensemble learning model constructed by the average predicted value of SVM, LR, KNN, GaussianNB, MLPC, RF, GBM, ET, AdaBoost, bagging and HGBC is 0.961. Ensemble learning algorithms have an excellent ability to predict slope stability.

-

2.

The stacking model has a higher AUC, higher accuracy and kappa value, and lower log loss. It can be used as a valuable tool for predicting slope stability, especially in cases that very easily fail and classes with very low stability followed by ET, RF, GBM and bagging models. However, the stacking model has a large number of calculations and long training time, so it is necessary to combine the super learner function in practical applications to improve the computational efficiency.

-

3.

The importance scores of 0.2309, 0.2007 and 0.2003 obtained by the prediction variables more influence slope stability, of which are geotechnical material variables (\(\gamma\), C and \(\varphi\)).

-

4.

The relationship between slope stability and influencing factors is a high-dimensional complex nonlinear relationship, which is challenging to address by traditional modelling methods. The analysis of engineering examples shows that the ensemble learning algorithm can deal with this relationship well and achieve accurate and reliable prediction results, which has good applicability for slope stability evaluation. For future work, the introduction of samples and parameters that play an essential role can be added to develop an ensemble learning algorithm and improve its generalization and reliability, such as rainfall, earthquakes, human activities and other external or trigger factors.

References

Aljamaan H, Alazba A (2020) Software defect prediction using tree-based ensembles. In: Proceedings of the 16th ACM international conference on predictive models and data analytics in software engineering, pp 1–10

Anitescu C, Atroshchenko E, Alajlan N, Rabczuk T (2019) Artificial neural network methods for the solution of second-order boundary value problems. Comput Mater Contin 59(1):345–359

Bradley P (1997) The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recogn 30(7):1145–1159

Breiman L (1996) Bagging predictors machine learning. Mach Learn 24:123–140

Chakraborty A, Goswami D (2017) Prediction of slope stability using multiple linear regression (MLR) and artificial neural network (ANN). Arab J Geosci 10(17):385

Chen L, Peng Z, Chen W, Peng W, Wu Q (2009) Artificial neural network simulation on prediction of clay slope stability based on fuzzy controller. J Cent South Univ (Sci Technol) 40(5):1381–1387

Chen C, Xiao Z, Zhang G (2011) Stability assessment model for epimetamorphic rock slopes based on adaptive neuro-fuzzy inference system. Electron J Geotech Eng 16:93–107

Cheng M, Hoang ND (2015) Typhoon-induced slope collapse assessment using a novel bee colony optimized support vector classifier. Nat Hazards 78(3):1961–1978

Dickson M, Perry GLW (2016) Identifying the controls on coastal cliff landslides using machine-learning approaches. Environ Model Softw 76:117–127

Dietterich TG (1997) Machine learning research: four current directions. AI Mag 18(4):97–136

Dietterich TG (2000) An experimental comparison of three methods for constructing ensembles of decision trees: bagging, boosting, and randomization. Mach Learn 40(2):139–157

Drucker H, Schapire R, Simard P (1993) Boosting performance in neural networks. Int J Pattern Recogn Artif Intell 7(04):705–719

Duncan JM (1996) State of the art: limit equilibrium and finite-element analysis of slopes. J Geotech Eng 123(7):577–596

Fattahi H (2017) Prediction of slope stability using adaptive neuro-fuzzy inference system based on clustering methods. J Min Environ 8(2):163–177

Feng X, Hudson J (2004) The ways ahead for rock engineering design methodologies. Int J Rock Mech Min Sci 41(2):255–273

Feng X, Wang Y, Lu S (1995) Neural network estimation of slope stability. J Eng Geol 3(4):54–61

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1):119–139

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Gounaridis D, Koukoulas S (2016) Urban land cover thematic disaggregation, employing datasets from multiple sources and RandomForests modeling. Int J Appl Earth Observ Geoinf 51:1–10

Griffiths D, Lane P (1999) Slope stability analysis by finite elements. Geotechnique 49(3):387–403

Gutta S, Wechsler H (1996) Face recognition using hybrid classifier systems. In: Proceedings of international conference on neural networks (ICNN'96). IEEE.

He F, Wu S, Zhang Y, Bao H (2004) A neural network method for analyzing compass slope stability of the highway. Acta Geosici Sin 25(1):95–98

Hoang ND, Pham AD (2016) Hybrid artificial intelligence approach based on metaheuristic and machine learning for slope stability assessment: a multinational data analysis. Expert Syst Appl 46:60–68

Hong H, Liu J, Bui D, Pradhan B, Acharya TD, Pham B, Zhu A, Chen W, Ahmad B (2018) Landslide susceptibility mapping using J48 Decision Tree with AdaBoost, Bagging and Rotation Forest ensembles in the Guangchang area (China). CATENA 163:399–413

Hosmer J, Lemeshow S, Sturdivant RX (2013) Applied logistic regression. Wiley, New York

Jin L, Feng W, Zhang J (2004) Maximum likelihood estimation on safety coefficiefficients of rocky slope near dam of Fengtan project. Chin J Rock Mech Eng 23(11):1891–1894

Kang F, Li J, Ma Z (2013) An artificial bee colony algorithm for locating the critical slip surface in slope stability analysis. Eng Optim 45(2):207–223

Kuhn M, Johnson K (2013) Applied predictive modeling. Springer, Berlin

Lee T, Lin H, Lu Y (2009) Assessment of highway slope failure using neural networks. J Zhejiang Univ 10(1):101–108

Li J, Wang F (2010) Study on the forecasting models of slope stability under data mining. In: Earth and space 2010: engineering, science, construction, and operations in challenging environments, pp 765–776

Li W, Yang S, Chen E, Qiao J, Dai L (2006) Neural network method of analysis of natural slope failure due to underground mining in mountainous areas. Yantu Lixue (Rock Soil Mech) 27(9):1563–1566

Lin H, Chang S, Wu J, Juang H (2009) Neural network-based model for assessing failure potential of highway slopes in the Alishan, Taiwan Area: pre-and post-earthquake investigation. Eng Geol 104(3–4):280–289

Lin S, Zheng H, Jiang W, Li W, Sun G (2020) Investigation of the excavation of stony soil slopes using the virtual element method. Eng Anal Bound Elem 121:76–90

Lin Y, Zhou K, Li J (2018) Prediction of slope stability using four supervised learning methods. IEEE Access 6:31169–31179

Liu Z, Shao J, Xu W, Chen H, Zhang Y (2014) An extreme learning machine approach for slope stability evaluation and prediction. Nat Hazards 73(2):787–804

Lu P, Rosenbaum M (2003) Artificial neural networks and grey systems for the prediction of slope stability. Nat Hazards 30(3):383–398

Manouchehrian A, Gholamnejad J, Sharifzadeh M (2014) Development of a model for analysis of slope stability for circular mode failure using genetic algorithm. Environ Earth Sci 71(3):1267–1277

Marjanović M, Kovačević M, Bajat B, Voženílek V (2011) Landslide susceptibility assessment using SVM machine learning algorithm. Eng Geol 123(3):225–234

Michalowski LR (1995) Slope stability analysis: a kinematical approach. Geotechnique 45(2):283–293

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Qi C, Tang X (2018) A hybrid ensemble method for improved prediction of slope stability. Int J Numer Anal Methods Geomech 42(15):1823–1839

Qi C, Tang X (2018) Slope stability prediction using integrated metaheuristic and machine learning approaches: a comparative study. Comput Ind Eng 118:112–122

Sakellariou M, Ferentinou M (2005) A study of slope stability prediction using neural networks. Geotech Geol Eng 23(4):419–445

Samui P (2008) (2008) Slope stability analysis: a support vector machine approach. Environ Geol 56(2):255–267

Schapire RE, Singer Y (1999) Improved boosting algorithms using confidence-rated predictions. Mach Learn 37(3):297–336

Shimshoni Y, Intrator N (1998) Classification of seismic signals by integrating ensembles of neural networks. IEEE Trans Signal Process 46(5):1194–1201

Simm J, Abril I (2014) Extratrees: extremely randomized trees (ExtraTrees) method for classification and regression. R package version 1.0. 5.

Sun G, Lin S, Zheng H, Tan Y, Sui T (2020) The virtual element method strength reduction technique for the stability analysis of stony soil slopes. Comput Geotech 119:103349

Wang CH (2004) Study on prediction methods for high engineering slope. Master thesis

Wang L, Wu C, Tang L, Zhang W, Lacasse S, Liu H, Gao L (2020) Efficient reliability analysis of earth dam slope stability using extreme gradient boosting method. Acta Geotech 15(11):3135–3150

Wang H, Xu W, Xu R (2005) Slope stability evaluation using back propagation neural networks. Eng Geol 80(3–4):302–315

Wen S, La H, Wang C (2013) Analysis of influence factors of slope stability. Appl Mech Mater Trans Tech Publ 256:34–38

Wolpert DH (1992) Stacked generalization. Neural Netw 5(2):241–259

Xiao Z, Chen C, Ji Y (2011) Applying adaptive neuro-fuzzy inference system to stability assessment of reservoir slope. Bull Soil Water Conserv 31(5):186–190

Xu W, Shao J (1998) Artificial neural network analysis for the evaluation of slope stability. Application of numerical methods to geotechnical problems. Springer, Berlin, pp 665–672

Xu F, Xu W, Wang K (2009) Slope stability analysis using least square support vector machine optimized with ant colony algorithm. J Eng Geol 17(2):253–257

Yan X, Li X (2011) Bayes discriminant analysis method for predicting the stability of open pit slope. In: 2011 International conference on electric technology and civil engineering (ICETCE). IEEE, pp 147–150

Yun L, Keping Z, Jielin L (2018) Prediction of slope stability using four supervised learning methods. IEEE Access 6:31169–31179

Zhao H, Yin S, Ru Z (2012) Relevance vector machine applied to slope stability analysis. Int J Numer Anal Meth Geomech 36(5):643–652

Zheng F, Leung YF, Zhu J, Jiao Y (2019) Modified predictor-corrector solution approach for efficient discontinuous deformation analysis of jointed rock masses. Int J Numer Anal Meth Geomech 43(2):599–624

Zheng F, Zhuang X, Zheng H, Jiao Y, Timon R (2020) Kinetic analysis of polyhedral block system using an improved potential-based penalty function approach for explicit discontinuous deformation analysis. Appl Math Model 82:314–335

Zhou Z, Jiang Y, Yang Y, Chen S (2002) Lung cancer cell identification based on artificial neural network ensembles. Artif Intell Med 24(1):25–36

Zhou J, Li X, Mitri HS (2015) Comparative performance of six supervised learning methods for the development of models of hard rock pillar stability prediction. Nat Hazards 79(1):291–316

Zhou J, Li E, Yang S, Wang M, Mitri H (2019) Slope stability prediction for circular mode failure using gradient boosting machine approach based on an updated database of case histories. Saf Sci 118:505–518

Zhou S, Rabczuk T, Zhuang X (2018) Phase field modeling of quasi-static and dynamic crack propagation: COMSOL implementation and case studies. Adv Eng Softw 122:31–49

Zhou S, Zhuang X, Rabczuk T (2018) A phase-field modeling approach of fracture propagation in poroelastic media. Eng Geol 240:189–203

Zhou S, Zhuang X, Zhu H, Rabczuk T (2018) Phase field modeling of crack propagation, branching and coalescence in rocks. Theor Appl Fract Mech 96:174–192

Zhu C (2005) Analysis and evaluation of slope stability—taking yuanmo expressway slope as an example. Kunming University of Science and Technology

Zhu B, Zhou D, Chen S, Wang L (2011) Evaluation of slope stability by improved BP neural network with L-M method. West-China Explor Eng 10:21–24

Zhuang X, Zheng F, Zheng H, Jiao Y, Rabczuk T, Wriggers P (2021) A cover-based contact detection approach for irregular convex polygons in discontinuous deformation analysis. Int J Numer Anal Meth Geomech 45:208–233

Acknowledgements

Supported by Open Research Fund of the State Key Laboratory of Geomechanics and Geotechnical Engineering, Institute of Rock and Soil Mechanics, and Chinese Academy of Sciences (Z019008), the Natural Science Foundation of China (Grant Nos. 42107214, 11972043 and 11902134)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

About this article

Cite this article

Lin, S., Zheng, H., Han, B. et al. Comparative performance of eight ensemble learning approaches for the development of models of slope stability prediction. Acta Geotech. 17, 1477–1502 (2022). https://doi.org/10.1007/s11440-021-01440-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11440-021-01440-1