Abstract

Predictive models of student success in Massive Open Online Courses (MOOCs) are a critical component of effective content personalization and adaptive interventions. In this article we review the state of the art in predictive models of student success in MOOCs and present a categorization of MOOC research according to the predictors (features), prediction (outcomes), and underlying theoretical model. We critically survey work across each category, providing data on the raw data source, feature engineering, statistical model, evaluation method, prediction architecture, and other aspects of these experiments. Such a review is particularly useful given the rapid expansion of predictive modeling research in MOOCs since the emergence of major MOOC platforms in 2012. This survey reveals several key methodological gaps, which include extensive filtering of experimental subpopulations, ineffective student model evaluation, and the use of experimental data which would be unavailable for real-world student success prediction and intervention, which is the ultimate goal of such models. Finally, we highlight opportunities for future research, which include temporal modeling, research bridging predictive and explanatory student models, work which contributes to learning theory, and evaluating long-term learner success in MOOCs.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In their short history to date, Massive Open Online Courses (MOOCs) have simultaneously generated enthusiasm, participation, and controversy from both traditional and novel participants across the educational landscape. Trying to understand and improve enrollment, completion, and the overall learner experience has led to efforts to generate effective student models which can predict student dropout, completion, and learning in MOOCs. Despite the extensive attention devoted to such work by several related research communities and by the popular media, little overall synthesis of this work has been performed. We believe that such a synthesis is necessary, now more than ever, for several reasons.

Published predictive modeling research in MOOCs over time. MOOC research has expanded dramatically since 2012, but little overall synthesis of predictive modeling work has been published. Even less work has synthesized or critically evaluated the feature extraction, modeling, and methodology of prior research, as we do in the current work

First, MOOC research is at a critical stage in its development. An abundance of research has explored the phenomenon of MOOC dropout from several perspectives since the “year of the MOOC” in 2012 (Pappano 2012), as shown in Fig. 1. We survey \(n = 87\) such studies in this work. A clear synthesis of this research is necessary in order to explore where consensus has emerged across the research community, where there may be research gaps or unanswered questions, and what action needs to be taken as a result of both. If we fail to learn from the lessons of several years of MOOC analysis, MOOCs may fail to deliver on their promise for millions of learners around the globe.

Second, there is a need to evaluate not only the findings of such research, but also its methodology. Now that a body of research on student success prediction in MOOCs has accumulated, it is possible and appropriate to survey the techniques most commonly used. Such a critical survey allows us to disseminate consensus findings on effective techniques for student success prediction, to understand whether gaps exist, and to determine a future research agenda to address them. In particular, this issue is relevant to predictive modeling of student success in MOOCs because of the diverse communities that its practitioners are drawn from: education and the learning sciences, computer science, statistics and machine learning, behavioral science, and psychology researchers each bring different methods to the field. A methodological survey allows scientists to ensure that their knowledge is constructed on a strong methodological foundation, and to strengthen it where appropriate. In particular, this survey of predictive modeling allows for (a) the sharing of feature extraction and modeling approaches known to be effective, while also encouraging exploration into under-researched methods, and (b) sharing of overarching experimental protocols, such as prediction architectures and statistical evaluation techniques, which affect the inferences such modeling experiments produce. In this work, we provide detailed and novel data about the state of predictive student modeling in MOOCs for researchers interested in both (a) and (b).

Third, a critical promise of student success prediction in MOOCs has not yet been delivered on: the use of these predictions to actively improve learner outcomes and experiences through the operationalization of predictive models in MOOC platforms. We hope that this work can identify effective strategies for such tools to be used “in the wild” in active courses to achieve their oft-stated goal of impacting learner success in MOOCs. The implementation of live, real-time tools and personalized interventions stands to benefit from effective predictive modeling which can target and personalize interventions for those who need them most. Additionally, the implementation of predictive modeling as part of a MOOC has never been more practically achievable, as both the hardware and software required for user modeling in digital environments (such as MOOCs) have become increasingly accessible. The use of predictive models for adaptive user experiences more broadly has grown quite common, and is commonly executed at a massive scale (for example, prediction-based targeted advertising on the World Wide Web). A clear knowledge of the research consensus on effective predictive modeling methods in MOOCs will support the construction of such tools, effectively “closing the loop” of predictive modeling in MOOCs. We leave the development of the interventions based on these predictive models to future work.

In the work that follows, we address each of these three goals. In the remainder of this section, we provide the reader with a basic introduction to MOOCs and survey the state of the overall MOOC landscape to date. In Sect. 2, we dive deeper into the specific focus of this work by discussing student success prediction in MOOCs, introducing the task, the data typically available for its execution, and the basic procedure for the construction and evaluation of predictive models. Section 3 surveys prior work on predictive models of student success in MOOCs, including a detailed matrix of \(n = 87\) previous works on this topic in Appendix Table 7 (with abbreviations listed in Table 8). We synthesize the results of this survey in Sect. 4, highlighting overall trends and providing detailed data on the methodologies used across the sample of works surveyed. We discuss research gaps, methodological issues, and unanswered questions suggested by the literature survey in Sect. 5. Opportunities for future research suggested by our survey, as well as our interpretation of the direction of the field, are discussed in Sect. 6. We conclude in Sect. 7.

This work is part of a series on predictive models in MOOCs, and in future works we provide a discussion of techniques for model evaluation, and infrastructure for replication of machine learned models in MOOCs.

1.1 MOOCs: a novel educational and research context

Massive Open Online Courses are enticing, in part, because they are so different from many other forms of education. However, exactly what a MOOC is is itself the subject of some debate. We do not seek to fully resolve this debate here, but in this section, we detail several generally agreed-upon characteristics of MOOCs in order to build a working definition for use within this review.

We take MOOCs to have the following attributes:

Massive, open and online By definition, these are the attributes most closely associated with MOOCs. MOOCs are massive in that they typically have far more students than even the largest traditional classroom courses. This would include, at minimum, hundreds of learners for specialized courses to hundreds of thousands of learners for more general or popular courses. The instructional team tasked with supporting these learners is typically very small; therefore the student–teacher ratio in these courses is far higher than in traditional higher education or e-learning courses. MOOCs are open to all learners, often being both public and free. The two largest English-language MOOC providers, Coursera and edX, initially offered all courses free of cost, though business model changes have seen more barriers to taking free courses over time (though both platforms still offer financial aid programs, and at least partial access for unpaid learners in most courses). The openness of MOOCs is perhaps what makes them most exciting by providing access to high-quality educational experiences for all learners around the globe.Footnote 1 Finally, MOOCs are online—they are digital, internet-based courses, not in-person courses. Course materials, assignments, instructors, and peers are all accessed on the World Wide Web via a computer or other device with a web browser or a dedicated platform-specific application.

Low- or no-stakes Traditional higher education and e-learning courses are typically taken strictly for academic credit or other official certifications, often at a non-trivial financial cost to the participant, with implicit or explicit penalties for poor performance (e.g. low grades, loss of tuition without credit). In contrast, MOOCs provide the option to simply take the course independent of any certification, credit, or degree program, with no penalty for repeating or failing to complete the course. This gives MOOCs a particularly unique set of course participants who sometimes have little or no investment in completing a course, making the task of student success prediction (and, consequently, the task of student support based on these predictions) particularly challenging. Under this definition, paid and for-credit online courses are typically not considered MOOCs. Many other low- or no-stakes learning environments exist—such as textbooks and tutorials, museums, and other offline and online resources—but these environments do not share the other features of MOOCs.

Asynchronous The time scale for content consumption and participation in a MOOC tends towards the flexible, although the degree of this flexibility may vary. Many MOOCs are clearly divided into “modules,” often by week, which are released to learners over time. These courses often have clearly-defined start and end dates, with successful completion being contingent upon learners meeting specified criteria by the course end date. Within these time windows, however, learners were typically free to browse content and complete assignments in any order and at any time. A fully asynchronous model has recently become more common in MOOCs, where learners have access to all content on demand after entering a course, and can complete content at their own pace. We note that this model has coincided with the transition to a subscription-based, as opposed to course-based, pricing model on certain MOOC platforms.

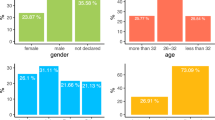

Heterogeneous As a direct consequence of many of these features, the population of learners in MOOCs is heterogeneous in terms of both demographics and intentions (Koller et al. 2013; Chuang and Ho 2016). Even as course populations skew toward college-educated males from industrialized countries, these course populations are still far more diverse than any of the other educational contexts superficially similar to MOOCs (Glass et al. 2016). The backgrounds of learners vary significantly, from graduate-level educated learners who are employed full-time in the subject area of the course, to students without a high school diploma. Learners vary in gender, age, nationality, and intent. The majority of MOOC students are located outside the United States and hold a bachelor’s degree (Chuang and Ho 2016), and there is also evidence that teachers are well-represented in course populations (Seaton et al. 2015; Chuang and Ho 2016). However, obtaining even basic demographic data on users is currently only available through on optional questionnaires with low response rates (Kizilcec and Halawa 2015; Whitehill et al. 2015; DeBoer et al. 2013). As a result, predictive models are often unable to utilize this data directly and instead need to draw directly on learner behavior, not demographics or reported intentions.

Together, these features of MOOCs define an educational environment that is sufficiently different from other well-studied environments—such as e-learning, on-campus higher education, or digital K-12 education—to justify the formation of a new and separate predictive modeling literature. As an illustrative example, consider a comparison of a “dropout” student (a non-completer) in a MOOC versus any of the traditional contexts mentioned above. One might reasonably expect different factors to contribute to dropout, different subpopulations to be most likely to drop out, and for learners to experience different consequences of dropping out, in a MOOC compared to other educational contexts. Indeed, DeBoer et al. (2014) argues for a broad reconceptualization of traditional student success metrics in MOOCs instead of the use of terms grounded in traditional education courses, such as the term “dropout;” Reich (2014) proposes “stopout” as a more appropriate term for this outcome.

As we will discuss below, there are also very different data sources available in MOOCs compared to other educational contexts: for example, MOOCs collect rich, granular behavioral data at a level that is unavailable in almost any other context. MOOCs are also characterized by a lack of complete and reliable historical or demographic data; in contrast, institutional course providers (such as brick-and-mortar schools) typically lack any readily-available behavioral data but have rich historical, demographic, and co-curricular data. These data sources are directly relevant to the predictive models which they are used to construct in each context. Again, this implies a material difference between predictive modeling in MOOCs and other educational environments.

Our goal in describing these features of MOOCs is not to argue for a particular conceptualization of MOOCs; it is simply intended to introduce the basic concept of a MOOC to readers, and to motivate the features of MOOCs used as the criteria for inclusion in the literature review in Sect. 3 below.

1.2 The state of the MOOC landscape

As of 2017, an estimated 81 million students have registered for or participated in at least one MOOC (Shah 2018). The five largest MOOC providers, according to self-reported enrollment numbers, are Coursera,Footnote 2 30 million registered users; edX,Footnote 3 14 million registered users; XuetangX,Footnote 4 9.3 million registered users; Udacity,Footnote 5 8 million registered users; and FutureLearn,Footnote 6 7.1 million registered users (Shah 2018). Enrollment continues to grow over time, but there is some indication that enrollments have begun to slow as platforms have transitioned to paid models and phased out various free certification options, and as the course population declines in size over repeated iterations of a course (Chuang and Ho 2016).

These impressive enrollment figures mask a well-known issue with the MOOC experience: around 90% of students who enroll in a MOOC fail to complete it (Jordan 2014). Given the lack of barriers to entry, massive course populations, and high student to teacher ratios in MOOCs, this may not be particularly surprising. As shown in Table 1, a majority of predictive modeling research in MOOCs has focused on dropout prediction. While the massive dropout rate may fail to account for student intentions (Koller et al. 2013), the best data indicates that slightly more than half of students intend to achieve a certificate of completion in a typical MOOC, and around 30% of these respondents achieve this certification (Chuang and Ho 2016). This low completion rate even among intended completers is still cause for concern. Effective predictive models can support several approaches to improving MOOC dropout rates.

As of 2017, MOOCs cover a variety of topics, with over 6850 courses offered by more than 700 universities across these platforms (Shah 2018). Coursera, for instance, offers more than 180 specializations (sequences of courses in a specific topic area, such as “Data Structures and Algorithms” or “Dynamic Public Speaking”). There are several full online graduate degrees offered on the platform, such as the Master of Business Administration iMBA program offered by the University of Illinois, Urbana-Champaign on Coursera. The edX platform offers pathways for learners into higher education, such that when a program (called a MicroMasters) is completed on the MOOC platform, learners are then provided with credit transfer if they subsequently enroll in a residential graduate program. The University of Arizona’s Global Freshman Academy provides the opportunity for students to complete their entire freshman year online. Regardless of platform, format, and structure, Computer Science courses continue to be the most popular courses on the platform, with science, history, business, and health courses also popular (Chuang and Ho 2016; Shah 2018; Whitehill et al. 2017).

2 Student success prediction in MOOCs

Before surveying the vast body of prior work on student success prediction in MOOCs, in this section we seek to clearly define and motivate the task. This framing is essential to the discussion below and to the conclusions we draw from this review.

2.1 Defining student success

Student success in a MOOC can be viewed from several different perspectives. Several outcomes have been used to measure and predict student success in MOOCs, including completion, certification, overall course grades, and exam grades, shown in Table 1. The task of discussing student success in MOOCs is particularly challenging due to the fact that we typically apply language and metrics adopted from traditional educational settings—i.e., dropout, achievement, participation, enrollment—that can mean different things, or seem incoherent, in the context of a MOOC (DeBoer et al. 2014).

In the context of this work, we define student success as encompassing a broad class of metrics which measure course completion, engagement, learning, or future achievement related to the content or goals of a MOOC. We believe that each of these broad categories suggests at least one kind of motivation participants in a MOOC might have for joining the course, but each alone is certainly inadequate to describe “success.” We review work which presents the results of a predictive model of any type of student success according to this definition.

Having several potential metrics to describe student success in MOOCs is useful for several reasons: (a) it allows us to capture metrics related to the diverse goals MOOC learners have, such as course completion, certification, career advancement, or subject mastery (Koller et al. 2013; Reich 2014; b) it reflects the lack of research consensus on how to measure student success in MOOCs (Perna et al. 2014; DeBoer et al. 2014); and (c) it allows us to test the robustness of models by potentially checking their ability to predict multiple different outcomes. While (c) has been an uncommon approach to date, we believe that this is an important avenue for future work [for one example, see Fei and Yeung (2015)].

Several metrics are used to measure student success in the works surveyed below. A collection of the most common metrics used for student success prediction and the frequency with which they occur in our literature review is shown in Table 1. For an examination of alternative long-term metrics of student success, see Wang (2017).

2.2 Why model student success in MOOCs?

Student success predictions are useful for a wide variety of tasks, and these models vary along three main dimensions relevant to these tasks (shown in Fig. 2). We identify three main reasons for developing predictive models of student success:

Three salient dimensions of predictive models in MOOCs. Models vary along all three dimensions, but there is no strict trade-off between any dimensions. We synthesize the state of MOOC research with respect to these dimensions, and highlight methodological gaps needed to improve predictive student models, in Sect. 5

Personalized support and interventions Identifying students likely to succeed (or not succeed) has the potential to improve the student experience by providing targeted and personalized interventions to those students predicted to need assistance. This is the stated motivation behind much of the work surveyed here, which often refer to these students as “at risk” learners (a term adopted from the broader educational literature). In particular, because of the massive student population in MOOCs relative to the size of the instructional support staff, clearly identifying struggling students is important to providing those students with targeted and timely support. Many of the “human” resources in MOOCs are quite scarce (i.e., instructor time), and predictive models can provide timely guidance on (a) identification of which students need these resources, and (b) intervention by predicting which resources can best support each at-risk student. While a teacher might be able to directly observe students in a traditional in-person higher education course, or even in a modestly sized e-learning course, such observation is not available to support MOOC instructors at scale, and predictive models can serve this purpose. Particularly when instructor time and resources are scarce, predictive models which can identify these students with high confidence and accuracy are required. Additionally, many interventions would be unnecessary or even detrimental to the learning of engaged or otherwise successful students.

In order to deliver personalized support and interventions, a predictive model must provide predictions which are both accurate and actionable. We refer to the dimension along which model predictive performance varies in its ability to relate student behavior or attributes to the outcome of interest as its accuracy. We discuss how to measure the quality of a model’s predictions in Sect. 5.2. Here, it suffices to say that accuracy is critical to the delivery of personalized interventions; a model which cannot correctly identify students at risk of dropout cannot effectively support interventions to prevent it. Furthermore, the predictions of such a model must also be actionable. That is, these predictions must enable targeted and timely interventions for supporting student success. We argue in Sect. 5 that there are problems with the actionability of most prior predictive modeling research in MOOCs due to their prediction architecture, which often cannot be implemented in actively running courses.

Adaptive content and learner pathways Predictive models in MOOCs stand to enable the delivery of course content and experiences in a way that optimizes for expected student success. Very little prior research has utilized adaptivity or true real-time intervention based on student success predictions of any form in MOOCs. Whitehill et al. (2015) utilizes dropout prediction to optimize learner response to a post-course survey (this work optimizes for data collection, not learner success), and He et al. (2015) describes a hypothetical intervention based on predicted dropout probabilities (but only implements the predictive model to support it, not the intervention itself). Kotsiantis et al. (2003) describes a predictive model-based support tool for a distance learning degree program of 354 students, a scale far smaller than most MOOCs. The work which most clearly demonstrates adaptive content and learner pathways of which the authors are aware is Pardos et al. (2017), which implements a real-time adaptive content model in an edX MOOC. However, this implementation is optimized for time-on-page, not student learning. The dearth of research on adaptive content and learner pathways supported by accurate, actionable models at scale is, at least in part, due to a lack of consensus on the most effective techniques for building predictive models in MOOCs, which we address through the current work.

Data understanding Predictive models can also be useful exploratory or explanatory tools that help understand the mechanisms behind the outcome of interest. Instead of strictly providing predictions to enable personalized interventions or adaptive content, predictive models can be tools to identify learner behaviors, learner attributes, and course attributes associated with success in MOOCs. These insights can drive improvements to the content, pedagogy, and platform, and contribute to our understanding of the underlying factors influencing student success in these contexts. They also contribute more directly to theory by providing a more detailed understanding of the complex relationships between predictors and outcomes discovered via predictive modeling. We describe this dimension of models as theory-building to highlight their usefulness in the formation of theories about these underlying factors. From this perspective, certain types of models are more useful than others: models with straightforward, interpretable parameters (such as linear or generalized linear models, which provide interpretable coefficients and p values; and decision trees, which generate human-readable decision rules) are far more useful for human understanding of the underlying relationship than those with many complex and interacting parameters (such as a multilayer neural network). Unfortunately, the latter are usually (although not always) more effective in making predictions in practice, so there is often a tradeoff between interpretability and predictive performance. Recent advances in making more complex models interpretable suggest that this tradeoff may be reduced in the future (e.g. Baehrens et al. 2010; Craven and Shavlik 1996; Ribeiro et al. 2016), but at present this “fidelity-interpretability tradeoff” is still a salient issue for predictive models in MOOCs (Nagrecha et al. 2017). This issue is further discussed in Sect. 6.2 below.

2.3 Data for student success prediction in MOOCs

In this subsection, we briefly describe the raw data available for student success prediction in MOOCs, including the common formats, schema, and types of behaviors and metrics collected. We provide data on the use of each raw data source across works surveyed in Sect. 4.

Student success prediction in MOOCs has attracted a great deal of enthusiasm in part because of the data available to researchers interested in studying MOOCs. Digital learning environments such as MOOCs provide rich, high-granularity data at a scale simply not available in traditional educational contexts. While this data varies slightly from platform to platform, because of the dominance of only a few large MOOC providers (most notably, Coursera and edX), the available datasets are remarkably consistent in practice. This is useful for several reasons: (a) enables the use of consistent feature extraction and modeling methods, even across platforms, which reduces both development and computation time; (b) it allows for direct replication of research across courses and even across platforms (Gardner et al. 2018).

Common data generated by MOOC platforms are discussed below. The frequency with which these data types were utilized across our literature survey is shown in Fig. 7.

2.3.1 Clickstream exports

Clickstream exports, also called server logs or clickstream logs, are typically records of every interaction with the server which hosts the course platform in JavaScript Object Notation (JSON) format. These interactions include every request to the web server hosting the course content, including each mouse click, page view, video play/pause/skip, question submission, forum post, etc. The same metadata is recorded for each interaction, and from this record, we can build detailed datasets at several levels of aggregation. An example of entries from a clickstream log is shown in Fig. 3; note the many detailed attributes recorded for each interaction. Clickstream exports are the most raw, high-granularity data available from MOOC platforms. However, this granularity also presents a challenge: raw clickstream data cannot be directly used as input for most predictive models; instead, “features”—attributes relevant to the outcome of interest—need to be manually extracted from the clickstream log. This is a labor-intensive process (we use the terms feature engineering and feature extraction interchangeably to refer to this process). Feature engineering appears more important to the effectiveness of predictive models than the statistical algorithm itself (see Sect. 3 for a more detailed discussion of the importance of feature engineering). Indeed, many of the works surveyed here introduce innovations only to the feature engineering method and adopt otherwise standard classification algorithms for predicting student success from clickstream data (e.g. Brooks et al. 2015a; Veeramachaneni et al. 2014).

Clickstream data also presents a challenge of scale. This data is often quite large (tens of gigabytes for a single course), due to its granular nature and the many individual interactions that take place over the duration of a MOOC. Any aggregation of individual user sessions or interactions requires manually parsing and aggregating data from the clickstream. Simply reading, processing, and extracting the features from such data can be computationally expensive.

2.3.2 Forum posts

A defining feature of most MOOCs is a set of thread-based discussion fora used for various tasks, including interactions directly related to course content and more general community-building and discussion. Different platforms implement discussion fora differently,Footnote 7 but across every major platform, the text of forum posts and a variety of metadata and related interactions (such as upvotes for questions or answers) are typically collected in a relational database, accessed via Structured Query Language (SQL). As shown in Fig. 7, forum post data is second only to clickstream data in terms of its use in predictive models of student success in MOOCs. This data is often used to extract (a) measures of engagement, by tracking users’ forum viewing patterns; (b) measures of mastery, understanding, or affect, generated by applying natural language processing to the raw text of forum posts; and (c) social network data by assembling graphs where various connections in the fora constitute edges. An illustration of a threaded discussion post in a Coursera course is shown in Fig. 4.

2.3.3 Assignments

Assignments are often used in MOOCs similar to the way they are used in residential or in-person courses, and data related to assignment submission is also often stored in a relational database. A variety of assignment types are used in MOOCs, including automatically graded assignments (such as multiple-choice assessments and small programming tasks), manually-graded assignments (such as data analysis reports or essays, which can be graded by both course instructors or, more commonly, peers in the course), in-video questions, interactive lab simulations, and programming assignments completed in external environments (e.g., Jupyter notebooks). Assignment data is typically limited to metadata (i.e., open date, due date) and assignment-level or (less commonly) question-level data about submissions or data about the content of submissions (such as text cohesion metrics of written work or syntactic analysis of submitted code). As Fig. 7 indicates, the use of assignment features is less common, likely due to a combination of (a) the low number of users who complete assignments in MOOCs, as a proportion of total registrants or participants, and (b) the substantial variation across courses in the way assignments are used.

2.3.4 Course metadata

Detailed information about the course and instructional materials are also typically recorded in MOOC platforms and retained for post-hoc analysis. This includes information about course modules, video lectures (length, title, module), and assignments (including quizzes, homework, essays, human-graded assignments, exams, etc.). Little research has actively explored the use of course metadata in predicting student success. The research which has evaluated such data, however, suggests that it may indeed impact factors such as learner persistence and engagement (e.g. Evans et al. 2016; Qiu et al. 2016).

2.3.5 Learner demographics

Most MOOC platforms also record information about learner demographics, when it is available. However, such information is typically collected via optional pre- and post-course surveys, which are subject to various response biases (Kizilcec and Halawa 2015). While this information is potentially interesting, its limited availability (and bias in the data that is available) has limited the research on demographics in MOOCs to date to a small number of studies which we survey in Sect. 3.8. Hansen and Reich (2015) explores using external datasets and IP address-based geolocation to fetch additional demographic data, but not for predictive student modeling.

2.4 Relation to other MOOC research

The predictive modeling research evaluated in this work is situated in the context of a much larger and broader body of MOOC-related research. Prior research on MOOCs has covered a broad variety of topics, including changes in learner discourse over time (Dowell et al. 2017), interventions to improve student completion (Kizilcec and Cohen 2017), demographics and participation rates and the relationship to course activity (Guo and Reinecke 2014), and student plagiarism and academic honesty issues (Alexandron et al. 2017). Additionally, the researchers addressing this topic, both in the predictive context and more broadly, come from a wide variety of academic perspectives, including learning theory, social and experimental psychology, computer science, statistics, economics, design, and linguistics.

Predictive modeling most often occurs in research contexts where the goal is either (a) data understanding (e.g., for learning theorists and psychologists with the aim of understanding the factors most closely associated with dropout) or (b) utilizing predictions as part of a larger learner support system which can be used to improve student experiences or outcomes (e.g., for instructional designers and platform architects). This distinction reflects a larger distinction between the “two cultures” of statistical modeling discussed in Sect. 6.2. We consider both types of work (those focused on modeling for understanding, and those modeling for prediction) in this survey, as both contribute to the goals of understanding and supporting MOOC learners.

3 Predictive models of student success in MOOCs: a feature, outcome, and model-based taxonomy

In this section, we survey prior research on predictive models of student success. We begin the review with an overview of our methodology and relevant categorizations, as well as our methodology and its motivation.

3.1 Categorization scheme

This section describes the categorization scheme used to organize the literature review presented in this work. The three components used in the categorization are also defined in Table 2.

3.1.1 Feature-outcome-model categorization

The works below are grouped into broad conceptual categories based on the the input features, the outcomes of the prediction, and the theoretical models used to motivate the work, when they are described. Generally, there is a strong association between these three components (i.e., experiments which use activity-based features most often predict an activity-based outcome, dropout, and are constructed to evaluate theories about learner behaviors; experiments using cognitive features most often predict a cognitive outcome, such as learning gains, and are supported by theories of cognition and learning). The strongest association is between the input features and the prediction outcome (as we will discuss in detail in Sect. 4.2.2). Theoretical motivations for predictive models are sometimes missing or left unstated (see Sect. 6.3 for further discussion), but when these models are present, they often also align with the input data and the outcome of interest. While we note that the feature-model-outcome correlations are imperfect and there is significant overlap between many groups, we believe that this provides both an effective categorization of prior MOOC research as well as a reasonable model of how this research is conducted (with a set of input data, an outcome of interest, and a theoretical model or question about what is driving associations between input predictors and the outcome). Where a work fits into multiple categories, we discuss it in each applicable category below.

This categorization is a novel contribution of the current work, and has not been previously applied to predictive modeling research in MOOCs, to the authors’ knowledge. Data describing the observed feature-outcome pairings across prior research also contributes insight regarding well-researched areas, and gaps or opportunities for future research. For example, Table 3 shows that only two works surveyed used performance-based features to predict course completion; further research in this area seems warranted.

Each of the broad model categories considered below has something important to offer predictive modeling efforts, but there are likely different underlying factors driving the predictive performance of student success in each category, which makes the separate discussion necessary. Similar feature-based groupings have been used or suggested in other works (e.g. Whitehill et al. 2015, 2017; Li et al. 2017; Liang et al. 2016).

3.1.2 Feature extraction as critical to predictive modeling in MOOCs

Feature extraction, in particular, emerged throughout our survey as a useful dimension on which to separate models, and an element of particular interest to predictive modeling researchers in MOOCs. It has been noted in several works that in addition to being perhaps the most difficult, feature extraction is also one of the most critical tasks in predictive models of student success (Li et al. 2016a; Robinson et al. 2016; Nagrecha et al. 2017).

For example, Li et al. (2017), citing Zhou et al. (2015), notes that “data preprocessing should be considered with more attention than learning algorithms”. Sharkey and Sanders (2014) claims that feature extraction is “arguably the most important step in the process of developing a predictive model.” Taylor et al. (2014b) state that “[w]e attribute success of our models to these variables (more than the models themselves)...any vague assumptions, quick and dirty data conditioning or preparation will create weak foundations for ones modeling and analyses,” emphasizing their feature extraction methods over their modeling techniques despite fitting over 70,000 models in this experiment. The same authors argue in Veeramachaneni et al. (2014) that “[h]uman intuition and insight defy complete automation and are integral part of the process” of predictive modeling in MOOCs; they find that the most predictive features are complex, often relational (requiring the linking of multiple data fields), and were discovered through expert knowledge of both context and content. Feature extraction is highlighted as one of the core components of the dropout prediction problem in Nagrecha et al. (2017), which notes that “the electronic nature of MOOC instruction makes capturing signals of student engagement extremely challenging, giving rise to proxy measures for various use-cases”—that is, the extraction of signal (useful features) from the electronic records of a MOOC is a key task in the pipeline of predictive model-building.

Therefore, we concluded that an effective categorization scheme for this review should highlight feature extraction techniques. The association between many feature extraction methods and the outcomes they are used to predict further “brightens the lines” of this categorization in many cases (such as with performance-based models, which are overwhelmingly used to predict academic performance as shown in Table 3).

3.1.3 Predictive performance evaluation

Despite the current survey’s emphasis on understanding predictive models of student success, we avoid categorizing the work surveyed based on their predictive results alone. This is because of large case-by-case variation in (a) the experimental subpopulations, which are different subgroups of different MOOC course populations, (b) the methodology and metrics for model evaluation, and (c) the outcome being predicted. These three factors are so divergent across the work surveyed that holding the performance of each experiment to the same standard would be more misleading than it would be useful, as we discuss below.

Limited prior research has investigated the issue of how using different types of experimental protocols in predictive modeling experiments might influence or bias the results. This work has demonstrated how different prediction architectures, for example, can influence the results of predictive modeling experiments in MOOCs (Boyer and Veeramachaneni 2015; Brooks et al. 2015a; Whitehill et al. 2017). We will discuss some of the methodological shortcomings that make conducting these comparisons so difficult in Sect. 5 below, including inconsistent experimental populations; ineffective model evaluation; unrealistic or impractical prediction architectures; inconsistent model performance metrics; and others. In another work, we present a sociotechnical platform designed to enable direct replication of predictive modeling results on the same MOOC datasets, which can ameliorate the issue of “apples-to-oranges” model comparison faced by readers to date (Gardner et al. 2018).

3.2 Survey methodology and criteria for inclusion

We intend this to be a relatively broad, inclusive literature survey. We include work which (a) involves an application of predictive modeling of student success, where student success is broadly construed according to one or more of the metrics listed in Table 1; (b) doing so in the context of a MOOC, or in a context sufficiently similar to be of interest to MOOC researchers; (c) which meet basic standards for quality research, including peer-reviewed work which contains sufficient description of their methods as to provide insight into the data and feature engineering, modeling, and experimental results. When a work was considered borderline on one or more of these criteria, we generally erred on the side of inclusion if it made a novel or relevant contribution to the literature. The literature surveyed was drawn from several top conferences and journals in the fields of learning analytics and educational data mining, computer science, web usage mining, and education, but was also collected from other sources (online searches, citations from other works surveyed).

We conducted a broad survey of existing research, hoping to unify work from a wide variety of disciplines which can be broadly considered predictive models. We evaluate work which studies environments that meet the definition of MOOCs described in Sect. 1.1 above. Where studies are excluded, it is typically because they did not evaluate what we considered to be MOOCs, or did not meet other criteria discussed in Sect. 3.

Several keywords were used to search prominent peer-reviewed conference, journal, and workshop proceedings in the fields of Learning Analytics and Educational Data Mining, including the Journal of Learning Analytics, the International Conference on Learning Analytics and Knowledge (LAK), Journal of Educational Data Mining , the International Conference on Educational Data Mining, the International Conference on Learning@Scale, the International Conference on Artificial Intelligence in Education, and the Journal of Artificial Intelligence in Education. Keywords used included “MOOC”, “predict”, “model”, and “dropout”. Additionally, we used the works cited in those works uncovered in our initial survey to ensure that we collected relevant work from the many other fields which have contributed research to predictive modeling in education, such as computer science, data mining, psychology, and educational theory. This review surveys work published in the year 2017 or earlier.

We note that select studies were still included despite not meeting individual components of this definition (for example, we do consider some work evaluating for-credit courses); in these cases we typically include such work either (a) for completeness due to the novelty or important contribution of the work, or (b) in order to err on the side of inclusion when the context of the course(s) under evaluation was not clear. We also note that the xMOOC phenomenon is not represented in our analysis, but this is in part because we were not able to identify any instances of xMOOCs being used with predictive student success models.

In particular, we also note that some work included in the review below might not have prediction as its stated aim. We believe, however, several such works are relevant to this review. “Predictive” modeling and modeling for data understanding are, as we discuss in Sect. 6.2, two sides of the same coin—both use statistical models of the data, which must capture relevant attributes and learn their relationship to an outcome of interest. While one work might construct a logistic regression model, for example, with the aim of understanding its parameters (e.g. Kizilcec and Halawa 2015), another might use the same modeling technique for a purely predictive goal (e.g. Whitehill et al. 2017). As such, techniques which are effective for one approach are often enlightening for the other as well. This is one of the core tensions of the “two cultures”—while black-box models often fit the data better, they are more difficult to interpret; while data models are often highly interpretable, they are often so at the cost of the quality of fit. We thus found that many experiments which might not have the stated aim of prediction were still of great interest to readers of this review. We do, however, attempt to distinguish between works which are purely exploratory or descriptive (where the stated goal is not predictive) throughout the survey that follows.

3.3 Activity-based models

Activity-based models use behavioral data, evaluate behavioral outcomes, or are grounded in theories of learner behavior for predictive modeling.

As shown in Fig. 5, models utilizing activity-based features and outcomes are overwhelmingly the most common in the work surveyed. This is so for several reasons: first, as demonstrated in Fig. 8, most of the works surveyed predict an engagement-based outcome related to dropout or course persistence. Activity features seem most appropriate for this type of prediction task (although more diverse feature sets may improve the quality and robustness of these models). Second, activity data are the most abundant and granular data available from MOOC platforms. Clickstream files (shown in Fig. 3) provide detailed interaction-level data about users’ engagement with the platform, and such granular data is simply not available for any of the other model categories we survey. Collecting a similar level of granularity for these other feature types would require far more sophisticated data collection practices, such as affect detectors or other sensors, which are impractical at MOOC scale. Third, activity features appear to provide reasonable predictive performance even in non-activity-based prediction tasks, such as in grade prediction (e.g., Brinton et al. 2015). Indeed, it is reasonable to expect behavior to be associated with non-behavioral outcomes (i.e., learning). However, we note that state-of-the-art predictive models generally combine feature types to achieve a complete, multidimensional view of learners (e.g. Taylor et al. 2014b).

Counts of works surveyed by feature type, which broadly represents the most common approaches in predictive models of student success in MOOCs. Activity-based feature sets are the most common, which primarily reflects the activity-based outcome (dropout) most commonly predicted in the works surveyed. Note that experiments considering multiple types of features were counted in all relevant categories. Each category is defined in a corresponding subsection of Sect. 3

The level of sophistication of the activity-based features in the works surveyed varies substantially, ranging from simple counting-based features (e.g. Kloft et al. 2014; Xing et al. 2016) to more complex features, including temporal indicators of increase/decrease (Veeramachaneni et al. 2014; Chen and Zhang 2017; Bote-Lorenzo and Gómez-Sánchez 2017), sequences (Balakrishnan and Coetzee 2013; Fei and Yeung 2015), and latent variable models (Sinha et al. 2014a; Ramesh et al. 2013, 2014; Qiu et al. 2016). Despite this variation, each of these typically uses the same underlying data source (clickstream, or a relational database consisting of extracted time-stamped clickstream events) and draws from a relatively small and consistent set of base features, including:

-

Page viewing, or visiting various course pages, such as video lecture viewing pages, assignment pages, or course progress pages;

-

Video interactions, such as play/pause/skip/change speed;

-

Forum posting or forum viewing (a more specific subset of page viewing which has received particular attention);

-

Content interactions, which can take a variety of forms depending on the course and which may include assignment attempts, programming activity, peer assignment review, or exam activity.

The relative consistency of the underlying activity-based feature sets and the few categories into which they can be distilled is largely a reflection of the consistency of the affordances available across the dominant MOOC platforms, particularly edX and Coursera: page viewing, video viewing, forum posting, and assignment submission were, until the introduction of relatively recent features such as interactive programming exercises, some of the only activities available to users of the platform, and the only activities recorded in Coursera clickstreams (Coursera 2013).

3.3.1 Counting-based activity features

Kloft et al. (2014) provided a foundational early predictive model, utilizing a Support Vector Machine (SVM) built on simple counting-based features extracted entirely from clickstream events. They find that high-level features related to activity (number of sessions, number of active days) were predictive of dropout during the first third of the course; measures of content interaction (wiki page views and homework submission page views) became more predictive in the middle third of the course; and navigation and general activity (number of requests, number of page views) were most predictive during the final third of the course. Kloft et al. use principal component analysis to demonstrate that successively generating a wider feature space by concatenating feature vectors for each subsequent week improves separability between dropout and non-dropout students by the final third of the course. This feature appending strategy has been widely adopted in predictive modeling in MOOCs (e.g. Xing et al. 2016), likely due to the nearly universal structuring of MOOCs into weekly modules. Kloft et al. (2014) only report the accuracy of their method, but based on the data they provide, the model’s predictions offer less than a 5% improvement on a majority-class prediction over the first 10 weeks of the course, when most dropouts occur (the challenge of evaluating this particular result using only accuracy highlights issues related to model evaluation and the lack of consistent metrics for reporting predictive results we discuss in Sect. 5). If predictive models are to be used to support early interventions in MOOCs, more accurate predictions are required.

Kloft et al’s results reinforce earlier findings from other digital education environments, such as Ramos and Yudko (2008), who argued in 2008 in the title of their work that “Hits” (not “Discussion Posts”) predict student success in online courses. This pre-MOOC study, conducted on an online university course, is notable for its finding that a simple count of page hits predicted student success (as measured by final course grade) better than either discussion posts or quiz scores, predicting between 7 and 26% of the variance in course grades. This finding has been reinforced in subsequent experiments evaluating behavioral features against other feature types in MOOCs (Crossley et al. 2016; Gardner and Brooks 2018).

3.3.2 Models utilizing early course activity

Several works attempt to address the need for early predictions of student success in a MOOC. Jiang et al. (2014b) offers a simple logistic regression classifier based only on week 1 behavior which effectively predicts certification in a MOOC offered to university students. This model uses only four predictors representing different aspects of student engagement in week 1 of the course (average quiz score, number of peer assessments completed, social network degree, and an indicator for being an incoming university student at the institution offering the course), again suggesting that a limited, but diverse, feature space can effectively predict MOOC student success. However, the fact that this model was trained and tested on only a single MOOC—one which may be particularly unique, because it was offered with an incentive (early enrollment in a university biology major) to current or prospective students at the hosting institution—means that further replication is needed to determine the extent to which these results are generalizable. We highlight similar issues with comparing different experimental populations in MOOC research in Sect. 5.

Other work which attempts to perform more fine-grained dropout prediction with the intention of performing early intervention includes Xing et al. (2016), which uses an ensemble of C4.5 tree and Bayesian Network models built on a set of counting-based engagement features and a Principle Component Analysis-based approach similar to Kloft et al. (2014). Baker et al. (2015) find that early access to course resources in an e-learning history course, including a course textbook and its integrated formative assessments, provides accurate predictions of success or failure within the first 2 weeks of the course. In a pair of works which use more sophisticated temporal features to aid in early dropout prediction, Ye and Biswas (2014) and Ye et al. (2015) find that fine-grained features related to either (a) temporal engagement with lecture quizzes or (b) the quantity of engagement with lecture quizzes improve models, but that once either (a) or (b) is included, adding the other provides no further performance gains. This result may suggest a plateau to the effectiveness of the features they evaluate, or it may highlight the need for more flexible modeling techniques to learn the complex patterns in rich, granular feature sets.

Stein and Allione (2014) evaluate learner behavior in a microeconomics MOOC. Stein and Allione find that early engagement—completing a quiz or a peer assessment exercise in the first week of a 9-week course—is a significant predictor of persistence in the course, even when controlling for other behaviors. They conclude that “the attrition pattern is not uniform among all enrollees, but rather there are distinct sub-groups of participants who reveal their type early on” (Stein and Allione 2014, p. 2). This suggests that students’ behavior early in the course might be particularly predictive of their final performance, which is a useful result for researchers or other stakeholders interested in obtaining accurate, early performance predictions.

A practical issue with “early warning” systems is that their predictions can change dramatically during the early stages of a course as the model predicts based on only small amounts of data. He et al. (2015) address this challenge by using a smoothed logistic regression model trained from a previous offering of a MOOC to make calibrated predictions on a future offering which where fluctuation of predicted dropout probabilities over time is minimized. This smoothing provides stable predictions of at-risk students for early intervention, a useful property for real-world implementation which allows the students tagged as “at-risk” to remain relatively stable over time.

3.3.3 Temporal and sequential activity models

An early approach to utilizing the temporal nature of activity data (by using a model which captures transition probabilities over time from a weekly feature set) is Balakrishnan and Coetzee (2013). This work uses a relatively small set of features (cumulative percentage of available lecture videos watched, number of threads viewed on the forum, number of posts made on the forum, number of times the course progress page was checked), compiled over each week of the course, to construct a Hidden Markov Model (HMM) to predict dropout. A particularly novel aspect of this work is the use of students’ checking of their course progress page as an input feature. A challenge present in all predictive models of student success in MOOCs is accounting for students’ diverse intentions (browsing, learning, completing, etc.). Balakrishnan and Koetzee introduce the course progress checking feature as an observable—and effective—proxy for an intention to complete: students who never check their course progress have a dropout rate of 20–40% at each week of the course, while students who check their progress four or more times have a dropout rate of less than 5% each week. This particular result suggests that finding observable proxies for student intentions is a tractable and useful problem for predictive models of student success in MOOCs.

In contrast to the simple feature appending approach used by e.g. Kloft et al. (2014), which shows variable (and only slight) improvement over weeks as data accumulates, more sophisticated temporal modeling approaches have demonstrated the ability to improve predictions more rapidly and consistently. Brooks et al. (2015a) examine how a higher-order time series method improves by exploring its incremental changes in performance with each additional day of MOOC data; they demonstrate rapid performance gains over the first 3 weeks of each MOOC evaluated. Fei and Yeung (2015) explore sequential models, including a Long Short-Term Memory neural network (LSTM), which takes sequences of weekly activity feature vectors as its input. Fei and Yeung demonstrate consistent improvement in these models’ performance as additional data is collected over course weeks, particularly over the initial weeks of a course. This model is directly compared to several others, outperforming (1) a Support Vector Machine [SVM; for reference to Kloft et al. (2014) but with a different basis kernel]; (2) two variants of Input–Output Hidden Markov Models [IOHMM; for reference to Balakrishnan and Coetzee (2013), which uses a different HMM variant]; and (3) logistic regression [compare to Jiang et al. (2014b), Veeramachaneni et al. (2014), Liang et al. (2016)].

The use of an LSTM by Fei and Yeung (2015) is a promising approach, but further replication across a larger sample of courses is needed. This work also demonstrates how challenging it can be to compare results across machine learned models when exact replication of experimental populations and method is not possible (for example, Fei and Yeung cannot compare their model by using the data from Balakrishnan and Coetzee (2013), nor can they perfectly reproduce the HMM model implementation from only the published description; we discuss this issue in Sect. 5), but their effort to provide these reference points is still useful.

Additionally, Fei and Yeung (2015) implement their model using three different definitions of dropout, which demonstrates the challenges of comparing predictive models of student success using published results (which often only vaguely describe outcome or feature definitions) and also suggests the robustness of their results. Wang and Chen (2016) evaluate a Nonlinear State Space Model (NSSM) in comparison to several other models, including an LSTM, and suggest that the NSSM achieves superior performance. We discuss the need for further comparative work in Sect. 6.

Furthermore, we note that LSTMs and any deep neural network architectures require a large amount of data in order to accurately estimate the large number of model parameters involved. As a result, the use of these models is only available when large sets of training data (thousands or millions of instances) are available. This also points to the need for large, shared benchmarking datasets in the educational predictive modeling community, such as those provided by the MOOC Replication Framework (MORF) (Gardner et al. 2018)Footnote 8 and DataStage.Footnote 9

Sinha et al. (2014b) use sequential activity features in combination with higher-order graphical features (which represent the richness, repetition, and activity/passivity of students’ interaction sequences) to predict dropout. They also conduct the useful comparison of whether using features from the current week only versus a students’ entire history improves performance, finding that the full history does not provide a significant improvement over current week only features. This result conflicts with Xing et al. (2016), which finds that historical features improve the quality and stability of predictions in a single course offered on Canvas, but Sinha et al. use a larger and perhaps more representative sample of MOOCs.

3.3.4 Latent variable modeling

Latent variable modeling has been commonly applied to predictive models of student success, because of its ability to infer complex relationships between predictors in a data-driven way.

Ramesh et al. (2013) apply Probabilistic Soft Logic (PSL) to a set of activity- and natural language-based features to model student performance. This work uses an expert-generated latent variable approach in which engagement is “modeled as a complex interaction of behavioral, linguistic and social cues” (p. 6). However, this particular method presents a potential barrier to practical implementation by utilizing only human-generated PSL rules. This is problematic for two reasons: (a) even experts may not be able to exhaustively identify the factors important to student success in MOOCs, particularly in a new course or a different domain (indeed, this is what motivates much of the work surveyed here), and (b) learning these features is itself the goal of data-driven predictive modeling. Manually defining latent engagement categories prevents truly data-driven discovery of latent user profiles or engagement types. Furthermore, by restricting the model to a small set of 5-7 features, this approach limits experimenters from learning about broader feature sets and their relationship to student success. Ramesh et al. (2014) expands on their approach by using the latent variable assignments from this PSL method as predictors in a survival model.

In a pair of works utilizing the same underlying feature set, Halawa et al. (2014) and Kizilcec and Halawa (2015) explore the use of learner activity features for predicting dropout in MOOCs. In the first of these works, Halawa et al. (2014) use a simple thresholding model to explore the use of counting-based learner activity features to predict dropout, theorizing that both observable learner activity and dropout are driven by latent, unobservable “persistence factors” which students possess to varying degrees. Halawa et al. show that this model is able to spot risk signals at least 2 weeks before dropout for over 60% of the students in their experimental population (students who joined in the first 10 days of the course and have viewed at least one video), suggesting that early dropout prediction may be tractable for this group. Kizilcec and Halawa (2015) applies this analysis to a sample of 20 MOOCs, utilizing the same feature set with a simple logistic regression model with similar findings.

3.3.5 Course metadata

There has been a limited amount of prior work on studying aspects of courses themselves which may be relevant to student activity within the courses. In a work notable for its comprehensive sample of MOOCs, Evans et al. (2016) examine a sample of 44 MOOCs and over 2 million learners, evaluating both student and course traits for association with engagement and persistence. Four findings are particularly relevant to student success prediction in MOOCs. First, early engagement (such as registering more than 4 weeks prior to course opening, or completing a pre-course survey) is the strongest predictor of completion. Second, the steep dropoff in engagement is “very strong and nearly universal” across the courses examined, which provides evidence supporting the implicit assumption of generalizability across courses in many other works. Third, the title of individual lectures are associated with differing levels of engagement, with titles containing the words “intro,” “overview,” and “welcome” having significantly higher rates of watching. Fourth, the first offering of a course has significantly higher rates of completion than subsequent offerings—an important finding with implications for the real-world deployment of models learned on data from previous courses.

Additionally, Qiu et al. (2016) evaluates the ways in which course subject interacts with learner demographics (i.e., gender) in predictive models. Qiu et al. find significant differences between the behavior of students in science MOOCs versus non-science MOOCs. However, Whitehill et al. (2017) find that models trained on data from many different domains are actually more accurate than models trained on courses from only the same field as the target course, so perhaps these cross-disciplinary differences in student behavior can be addressed by using sufficiently diverse training sets to construct student models.

3.3.6 Higher-order activity-based features

Other work, utilizing more complex feature types, has also begun to emerge in MOOC research. This includes explorations of higher-order n-gram representations of learner activity data, which has demonstrated promising predictive performance (e.g. Brooks et al. 2015a, b; Li et al. 2017). In activity-based n-gram models, features are assembled using counts of unique sequences of events or behaviors; these features are then used to construct supervised learning models. This allows for the construction of large feature spaces which capture complex temporal patterns, and the frequency with which they occur. These works operate under the (often explicit) assumptions that sequences of behavior, irrespective of the time gaps between them, contain richer information than individual events or counts of these events without considering the context of other neighboring events in time.

As discussed above, Sinha et al. (2014b) use n-gram features with a graphical model, and demonstrate that they can achieve reasonable predictive accuracy with only a single week of historical data.

We previously discussed Coleman et al. (2015), which applies topic modeling to sequences of learner data to learn “profiles” of MOOC learners based on their activity sequences (“shopping”, “disengaging”, and “completing”) . Each of these works and other sequence-based approaches discussed above (i.e., Balakrishnan and Coetzee 2013; Wang and Chen 2016) can be thought of as capturing a temporal element of MOOC data. We argue in Sect. 6.1 below that further work in this vein is needed.

As we discuss below, feature engineering (not predictive modeling algorithms) is the primary driver of improvements in predictive modeling in MOOCs to date; future work should continue to pursue higher-order or other unique feature engineering approaches which capture information relevant to student success.

3.3.7 Novel feature extraction and prediction architectures

In a series of works, Veeramachaneni et al. (2014), Taylor et al. (2014a, b) and Boyer and Veeramachaneni (2015) further demonstrate both the utility of effective feature engineering and how, when combined with effective statistical models, such methods yield performant student success predictors. These works use a combination of crowd-sourced feature extraction, automatic model tuning, and transfer learning to demonstrate several novel approaches to constructing activity-based models of student success in MOOCs.

Veeramachaneni et al. (2014) use crowd-sourced feature extraction, leveraging members of a MOOC to apply their human expertise and domain knowledge to define behavioral features for stopout prediction. The authors find that these crowd-proposed features are more complex and have better predictive performance than simpler author-proposed features for all four cohorts evaluated (passive collaborator, wiki contributor, forum contributor, and fully collaborative). This work utilizes a simple regularized logistic regression for the predictive model, again demonstrating that many effective predictive models of student success in MOOCs have relied on clever feature engineering, not sophisticated algorithms. The use of regularization common in MOOC research (see Sect. 4 for details) due to the large number of correlated predictors often present in student models.

Taylor et al. (2014b) applies the feature set from Veeramachaneni et al. (2014) to explore over 70,000 models using a self-optimizing machine learning system. However, the consideration of such a massive model space on only a single cohort of students virtually guarantees at least some success in prediction due to chance alone. Further validation and testing of the “best” models identified in this work are needed. In many ways, this work is an extreme example of a common approach where large model spaces are explored without utilizing effective statistical evaluation methods, resulting in performance data whose significance and generalizability is difficult to interpret.Footnote 10

Boyer and Veeramachaneni (2015) explore transfer learning using a subset of the feature set from these prior works. Boyer and Veeramachaneni (2015) is notable for its experimental treatment of how previous iterations of a MOOC can be used to predict on future iterations, which is how such models are used in practice. This setup addresses one challenge of model deployment in “live” courses, and provides initial data on effective transfer architectures for doing so. While many of the experimental results are inconclusive, Boyer and Veeramachaneni demonstrate two particularly important findings.

First, Boyer and Veeramachaneni find that a posteriori models—built retrospectively using the labeled data from the target course itself, which is the dominant experimental architecture used across our survey—presents “an optimistic estimate,” and that such models “struggle to achieve the same performance when transferred” (we discuss potential issues with a posteriori models, and their prevalence across the work reviewed, in Sect. 5.3). They conclude: “when developing stopout models for MOOCs for real time use, one must evaluate the performance of the model on successive offerings and report its performance” (emphasis from original) (Boyer and Veeramachaneni 2015, p. 8). This and other work (e.g. Brooks et al. 2015a; Evans et al. 2016; Whitehill et al. 2017) suggests that there is a great deal of work to do in replicating, re-evaluating, and exploring the generalizability of previous stopout prediction work performed using an a posteriori architecture. For one example of work which compares models evaluated both within and across courses, see Wang and Chen (2016), which presents evidence that the “penalty” for model transfer across courses might be minimal.

Second, Boyer and Veeramachaneni find that an in situ prediction architecture transfers well, achieving performance comparable to a model which considers a users’ entire history (which is not actually possible to obtain during an in-progress course). In situ architectures consider data and proxy labels from the same course to train a model (rather than true labels of future stopout, which are not known at the time of training/prediction in this realistic formulation of the task). This finding presents a possible approach to resolve the problems with using a posteriori modeling in practice, and is supported by other work (e.g. Whitehill et al. 2017).

In a different examination of model transfer, we surveyed two works (Vitiello et al. 2017b; Cocea and Weibelzahl 2007) which examine how models trained on one platform transfer to another (the former studies a MOOC environment; the latter a web-based e-Learning system). Both demonstrate that high-performing features are stable even for models trained across different platforms. This suggests that effective activity-based feature sets may transfer well across MOOC platforms (when the data they require is available from these platforms), but further research is required to verify this result.

Another innovative approach to representing and modeling activity sequences is presented in Zafra and Ventura (2012), where a multi-instance genetic algorithm is used to model “bags” of instances representing information about each students’ activity across various behavior and resource types. This algorithm is particularly unique in its ability to resolve missing-data issues with sparse features (such as forum posts) available only for a small subset of learners (Gardner and Brooks 2018): the multi-instance algorithm accepts bags of varying sizes to accommodate the unique subsets of activities displayed by each student. Zafra and Ventura’s experiment is conducted in the context of a set of e-learning courses offered via Moodle, but the authors argue that this approach is scalable and that it would be particularly useful for large online courses due to the heterogeneous student behavior patterns in these courses.

3.4 Discussion forum and text-based models

Discussion forum and text-based models use natural language data generated by learners and/or use linguistic theory as the basis of student models.

Threaded discussion fora are a prominent feature of every major MOOC platform and are widely used in most courses. Detailed analysis of the data from discussion fora provides the opportunity to study several dimensions of learner experience and engagement which are not detectable elsewhere. This includes a rich set of linguistic (measured by analysis of the textual content of forum posts), social (measured by the networks of posts and responses, or actions such as up/downvotes), and behavioral features not available purely from the evaluation of clickstream data. Gardner and Brooks (2018) argues that understanding the individual contributions that separate data sources make to predictive models is useful in determining whether scarce developer time ought to be dedicated to feature engineering, extraction, and modeling from those sources. This is particularly relevant to the complex data in discussion fora: extracting the features required to construct many of the models surveyed below can be time- and developer-intensive; it should only be done if the benefits (in terms of improved prediction or insight) justify these costs.

A foundational series of forum-based predictive work is that of Rosé, Wen, Yang, and collaborators (Rosé et al. 2014; Wen et al. 2014b; Yang et al. 2015), and particularly Yang et al. (2013). This series of work uses discussion forum data to identify the social environmental characteristics that are most conducive to persistence or sustained engagement in a MOOC. Yang et al. (2013) uses forum post data to explore the predictiveness of three types of features for forum posters in a single MOOC: cohort (the week in which a user joined the course), forum post (threads started, post length, content length), and social network (several metrics, including centrality, degree, authority, etc.). Of 16 variables considered in a variety of model specifications, Yang et al. find only three that are significant predictors for these students: being a member of cohort 1 (joining in the first week of the course), writing forum posts that are longer than average, and having a higher than average authority score are all associated with a lower probability of dropout. Rosé et al. (2014) adds subcommunity membership to this feature set; in this case, cohort 1 membership is still significant, but their finding on authority is reversed—with a “nearly 100% likelihood of dropout on the next time point for students who have an authority score on a week that is a standard deviation larger than average in comparison with students who have an average authority score” (p. 198). A Mixed Membership Stochastic Blockmodel (MMSB) is used to identify the subcommunities utilized as predictors. These results suggest that the social factors, and not the language, of discussion fora may be more effective predictors of dropout for students who post in the fora than the text of the post itself. Work by Wen et al. (2014b) and Yang et al. (2015) are discussed in Sect. 3.6 below.