Abstract

In this paper, we construct a novel algorithm for solving non-smooth composite optimization problems. By using inertial technique, we propose a modified proximal gradient algorithm with outer perturbations, and under standard mild conditions, we obtain strong convergence results for finding a solution of composite optimization problem. Based on bounded perturbation resilience, we present our proposed algorithm with the superiorization method and apply it to image recovery problem. Finally, we provide the numerical experiments to show efficiency of the proposed algorithm and comparison with previously known algorithms in signal recovery.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(\varGamma _{0}(H)\) be a class of convex, lower semicontinuous, and proper functions from a Hilbert space H to \(( \infty , + \infty ].\) The non-smooth composite optimization problem (shortly, NSCOP) is defined by

where \(\psi , \varphi \in \varGamma _{0}(H) \), \(\psi \) is differentiable, \(\varphi \) is not necessarily differentiable, and \(\nabla \psi \) is Lipschitz continuous on H. The NSCOP can be traced back to classical work of Bauschke and Combettes [1] and it also has a typical research fields in linear inverse problems [2]. This class of optimization problem covers a large number of applications in applied mathematics, robotics, medical imaging, financial engineering, machine learning, signal processing and resource allocation, see e.g. [3,4,5,6,7,8,9]. Due to recent boom in automations and machine learning, smart iterative algorithms are indispensable in the fields of artificial intelligence. This, however, has lead to an increase in demand for feasible and faster algorithms in which all the relevant components can be evaluated sufficiently quickly and at least time. One of the goals in the present paper is to seek to explore a novel and faster algorithm for solving NSCOP.

The important and well-known power tool for solving the problem (1) is proximal gradient algorithm. It is as an analogous tool for non-smooth, constrained, large-scale, or distributed versions of Newton’s method for solving unconstrained smooth optimization problems of modest size.

Assumption 1

[1]: (The existence of solution of NSCOP)

Let \(\varOmega \) be the set of all solutions of (1). If \( \psi (x) + \varphi (x) := \varPhi \) is coercive, that is,

Then \(\varOmega = Arg \min \varPhi \ne \emptyset . \) This means that \(\varPhi \) has a minimizer over H.

Assumption 2

[1]: (Uniqueness of Solution of Proximal Mappings)

Let \(\varphi \in \varGamma _{0}(H) ,\) then for each x, the function

In view of this, the proximal operator of \(\varphi \) of order \(\lambda >0\) is defined by

The proximal gradient algorithms (shortly, PGA) are used to split the contribution of the fuctions \( \psi \) and \( \varphi \), in particular, the gradient descent step determined by \(\psi \) [10, 11] and the proximal step induced by \(\varphi \) [12,13,14]. PGA is easy to implement and applicable in problems that involve large or high-dimensional datasets. Xu [15] submitted that \(x^{*}\) is a solution of the NSCOP (1) if and only if \(x^{*}\) solves the fixed point equation

He then established the weak convergence of PGA as follows:

where \(\lambda _{n} > 0\) is a step size. Recently, Guo and Cui [16] modified the PGA by using viscosity algorithm [17] as follows:

where \(\{\alpha _n\}\) is a sequence in [0, 1], \(f: H \rightarrow H \) is a \(\rho \in (0,1)\) contractive operator, \(L > 0\) a Lipschitz constant of \(\psi \) and \(e : H \rightarrow H \) represents a perturbation operator satisfying

They proved that the sequence \(\{ x_n \}\) generated by (5) converges strongly to a point \(x^{*}\in \varOmega \), where \(x^{*}\) is the unique solution of the variational inequality problem:

under the following conditions:

-

(C1)

\(0< a= \inf _{n} \lambda _{n} \le \lambda _{n} < \frac{2}{L}\) and \( \displaystyle \sum _{n=0}^\infty \Vert \lambda _{n+1}-\lambda _{n} \Vert < \infty \);

-

(C2)

\( \{\alpha _{n} \} \subset (0, 1)\) and satisfying \( \displaystyle \lim _{n\rightarrow \infty }\alpha _n=0 \);

-

(C3)

\( \displaystyle \sum _{n=0}^\infty \alpha _{n} = \infty \) and \( \displaystyle \sum _{n=0}^\infty \Vert \alpha _{n+1}-\alpha _{n} \Vert < \infty \).

The superiorization methodology (shortly, SM) was invented by Censor et al. [18] in attempt to check computation tractability of certain image recovery algorithms with limited computing resources. The SM is a heuristic approach with focus on time consumption [19, 20]. The base operation of SM requires that an algorithm which generates a convergent sequence of feasible solutions is bounded perturbation resilient, and that superiorization which uses perturbations proactively to get superior feasible solutions in the sense that the values of the objective function decrease is put in force. The technique is to find a point with a lower cost function value than other points through a simple algorithm is called the basic algorithm. This basic algorithm is usually pre-checked for perturbation resilience. The SM seems to be vast oversimplification, yet it represents a dramatic slight in approach to implementation of iterative algorithms. The SM has been investigated and studied by many researchers (see e.g. [6, 21,22,23]). Very recently, Guo and Cui showed that the sequence generated by (5) is perturbation resilient and prove strong convergence results which is also solution of variational inequality problem (6).

In optimization theory, the technique to increase efficiency of convergence rate, Polyak [24] pioneered the use of heavy ball method of the two-order time dynamical system which is a two-step iterative method for solving smooth convex minimization problem (i.e. a problem where all the constraints are convex functions, and the objective function is a smooth convex function). Later on, the development of this method was emphasized and used to increase the performance the convergence rate by Nesterov [25] as follows:

where \(\zeta _n \in [0,1)\) is an extrapolation factor, \(\lambda _n\) is a positive real sequence and \(\nabla f\) is the gradient of a smooth convex function f. The method is effective and converges faster since the vector \((x_{n} - x_{n-1}) \) acts as an impulsion term and \(\zeta _{n}\) serves as a speed regulator of the momentum term \(\zeta _{n} ( x_{n} - x_{n-1} )\) in (7). For more about inertial algorithms, we refer to [26,27,28,29,30,31,32] and the references therein.

Inspired and motivated by some contributions of authors mentioned above, we study a new method of approximation of solutions of the NSCOP (1) using a modified proximal gradient algorithm combined with inertial technique, and we then prove its strong convergence theorem of the sequence generated by the proposed algorithm under suitable conditions. Further, we verify that the proposed algorithm is of bounded perturbation resilient and apply it to image recovery problem. The effectiveness and the implementation of the proposed algorithm were illustrated by comparing it with previously known algorithms through numerical experiments in signal recovery.

The rest of this paper is organized as follows: basic notations, definitions and lemmas are recalled in Sect. 2. Our main results are presented in Sect. 3, that is, the inertial modified proximal gradient algorithm involving both perturbation and superiorization method are presented and some strong convergence results are proved. Next, Sect. 4 contains an application and numerical experiments to support our study. Finally, the conclusion of this research is given.

2 Preliminaries

The following notations, definitions and lemmas will play a crucial role in the sequel. Let \(\{ x_{n} \}\) be a sequence in Hilbert space H and \(x\in H.\)

-

(1)

\( x_{n} \rightarrow x\) means \( \{ x_{n} \} \) converges strongly to x;

-

(2)

\(x_{n}\rightharpoonup x\) means \( \{ x_{n} \} \) converges weekly to x;

-

(3)

A point z is said to be a cluster point of \(\{ x_{n} \}\) if there exists a subsequence \(\{ x_{n_j} \}\) which converges weekly to a point z. The set of all cluster points of \(\{ x_{n} \}\) is denoted by \(\omega _{W}(x_{n} ).\)

-

(4)

An operator \(T: H\rightarrow H\) is said to be \(\lambda \)-averaged if \(T= ( 1-\lambda )I + \lambda S\), where \(\lambda \in (0,1)\) and \(S: H \rightarrow H\) is nonexpansive;

-

(5)

\(A: H \rightarrow H\) is said to be v-inverse strongly monotone \((v-ism )\) with \(v > 0,\) if

$$\begin{aligned} \langle Ax-Ay, x-y \rangle \ge \Vert Ax-Ay \Vert ^{2}\qquad \forall x,y\in H; \end{aligned}$$ -

(6)

T is nonexpansive if and only if the complement \(I-T\) is \(\frac{1}{2}\)-ism [33];

-

(7)

If T is \(\nu \)-ism, then for \(\gamma >0,\) \(\gamma T\) is \(\frac{\nu }{\gamma }\)-ism;

-

(8)

T is averaged if and only if the complement \(I-T\) is \(\nu \)-ism for some \(\nu > \frac{1}{2}.\) Indeed, T is \(\alpha \)-averaged if and only if \(I-T\) is \(\frac{1}{2\alpha }\) -ism, for \(\alpha \in (0,1);\)

-

(9)

The composite of finitely many averaged operators is averaged. That is, if for each \(i=1,..., N,\) the operator \(T_{i}\) is averaged, then so is the composite operator \(T_{1}...T_{N}.\) In particular, if \(T_{1}\) is \(\alpha _{1}\)-averaged and \(T_{2}\) is \(\alpha _{2}\)-averaged, where \( \alpha _{1}, \alpha _{2}\in (0,1)\), then the composite \(T_{1}T_{2}\) is \(\alpha \)-averaged, where \(\alpha = \alpha _{1}+ \alpha _{2}-\alpha _{1}\alpha _{2}\).

Lemma 1

The following results are well known.

-

(i)

\(\Vert x+y \Vert ^{2} = \Vert x \Vert ^{2} + 2 \langle x, y \rangle + \Vert y \Vert ^{2},~~\forall ~x,y\in H\);

-

(ii)

\(\Vert x+y \Vert ^{2} \le \Vert x \Vert ^{2} + 2 \langle y, x+ y \rangle ,~~\forall ~x,y\in H\).

Lemma 2

[15] Let \( \varphi \in \varGamma _{0}(H) \), \( \lambda >0 \) and \( \mu >0.\) Then

Lemma 3

[34] Let \( \varphi \in \varGamma _{0}(H) \) and \( \lambda >0 \). Then the proximal operator \(\mathbf{prox} _{\lambda \varphi }\) is \(\frac{1}{2}\)-average. In particular, it is nonexpansive:

Lemma 4

[1] Let \(T: H \rightarrow H\) be a nonexpansive mapping with \(\text {Fix}~(T) \ne \emptyset \). If a sequence \(\{ x_{n} \}\) in H converges weekly to x and \( \{ (I-T)x_{n} \} \) converges strongly to y, then \((I-T)x=y,\) where \(\text {Fix}~(T)\) is a fixed point set of T.

Lemma 5

[21] Assume that \(\{ a_{n} \}\) is a sequence of nonnegative real numbers satisfying

where \(\{ \gamma _{n}\},\) \(\{ \delta _{n}\},\) and \(\{ \beta _{n}\}\) satisfy the conditions:

-

(i)

\(\{ \gamma _{n}\}\subset [0, 1],\qquad \sum\nolimits _{n=0}^{\infty } \gamma _{n}= \infty ;\)

-

(ii)

\(\displaystyle \underset{n \rightarrow \infty }{\limsup }\,\, \delta _n \le 0;\)

-

(iii)

\(\beta _{n} \ge 0\) and \( \sum\nolimits _{n=0}^{\infty } \beta _{n} < \infty \). Then \(\displaystyle \underset{n \rightarrow \infty }{\lim } a_n =0.\)

3 Main results

In this section, using the proximal mapping, we demonstrate a prove of a strong convergence theorem for finding a solution of the non-smooth convex composite optimization problem (1).

Assumption 3

-

(i)

\(f: H\rightarrow H \) is a \(\rho \)-contractive operator with \(\rho \in (0, 1)\);

-

(ii)

\(\psi : H\rightarrow (-\infty , \infty ] \) is proper, lower semicontinuous, convex and \(\nabla \psi \) is Lipschitz continuous with Lipschitz constant \(L >0\);

-

(iii)

\( \varphi : H\rightarrow (-\infty , \infty ] \) is proper, lower semicontinuous and convex;

-

(iv)

The set \(\varOmega \) of all solutions of problem 1 is nonempty.

Assumption 4

Suppose \(\{ \lambda _{n}\}\subset (0, \frac{2}{L}),\) \( \{\zeta _{n} \}\subset [0, 1)\) and \( \{\alpha _{n} \}\) is a sequence in (0, 1) satisfying the following conditions:

-

(B1)

\(0< a= \inf _{n} \lambda _{n} \le \lambda _{n} < \frac{2}{L}\) and \( \sum _{n=0}^\infty \Vert \lambda _{n+1}-\lambda _{n} \Vert < \infty \);

-

(B2)

\( \displaystyle \lim _{n\rightarrow \infty }\alpha _n=0 ,\) and \( \sum _{n=0}^\infty \alpha _{n} = \infty \)

-

(B3)

\( \displaystyle \underset{n \rightarrow \infty }{\lim } \frac{\zeta _{n}}{\alpha _{n}} \Vert x_{n}-x_{n-1} \Vert =0.\)

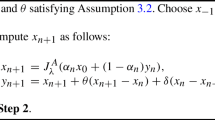

Algorithm 3.1 : Inertial viscosity proximal gradient algorithm with perturbation

Initialization: Take arbitrary \(x_{0}, x_{1}\in H,\) and set \(n=1.\) Choose sequences \(\{ \alpha _{n} \}\) and \(\{ \zeta _n \}\) such that the conditions in Assumption 4 hold.

Step 1: If \(\Vert x_{n}- x_{n-1}\Vert =0.\) STOP, \((x_{n})\) is a solution of NSCOP (1). Otherwise, go to Step 2.

Step 2: For a given current \(x_{n-1}\), \(x_{n}\) and \(\zeta _{n}.\) Compute \(\theta _{n}\) as

Step 3: Compute the next iterate \(( x_{n+1} ) \) as:

where \(e : H \rightarrow H\) is a perturbation operator satisfying the following condition

Last step: Update \(n:=n+1\) and go to Step1 .

If \(\zeta _{n}= 0\) in Algorithm 3.1, then we obtain the viscosity proximal gradient algorithm with perturbation (5) of Guo and Cui [16].

Definition 1

(Bounded Perturbation Resilience)

Let \(\{ x_{n} \}\) be a sequence generated by the algorithm

where A is an algorithmic operator from H into H. Then A is said to be bounded perturbation resilient if

for a bounded vector sequence \(\{ v_{n} \}\) and scalar \(\{ \beta _{n} \}\) such that \(\beta _{n} \ge 0\) \(\forall n\ge 0,\) and \( \sum \nolimits _{n=0}^{\infty } \beta _{n} < \infty .\) This operator A is called the basic algorithm.

Algorithm 3.2: Inertial viscosity proximal gradient algorithm with superiorization method

Initialization: Take arbitrary \(x_{0}, x_{1}\in H,\) and set \(n=1.\) Choose sequences \(\{ v_{n} \}\) and scalar \(\{ \beta _{n} \}\) as in Definition 1. Choose \(\{ \alpha _{n} \}\) and \(\{ \zeta _n \}\) such that the conditions in Assumption 4 hold.

Step 1: If \(\Vert x_{n}- x_{n-1} \Vert =0.\) STOP \((x_{n})\) is a solution of NSCOP (1). Otherwise, go to Step 2.

Step 2: For a given current \(x_{n-1}\), \(x_{n}\) and \(\zeta _{n}.\) Compute \(\theta _{n}\) as

Step 3: Compute the next iterate \(( x_{n+1} ) \) as:

Last step: Update \(n:=n+1\) and go to Step1.

Theorem 1

Assume that Assumption 3 holds. Then the sequence \(\{x_n\}\) generated by Algorithm 3.1 is bounded.

Proof

Step 1: We prove that \( \mathbf{prox} _{\lambda _{n} \varphi } \left( I- \lambda _{n} \nabla \psi \right) \) is nonexpansive for each n. Since \(\nabla \psi \) is L-Lipschitzian then \(\nabla \psi \) is \(\frac{1}{L}\)-ism which implies that \(\lambda _{n}\nabla \psi \) is \(\frac{1}{\lambda _{n}L}\)-ism and by (8), the complement \(\left( I- \lambda _{n} \nabla \psi \right) \) is \(\frac{\lambda _{n}L}{2}\)-average as \(\lambda _{n}\in (0, \frac{2}{L} ).\) This implies that \( \mathbf{prox} _{\lambda _{n} \varphi } \) is \(\frac{1}{2}\) -averaged. This further implies that the composition \( \mathbf{prox} _{\lambda _{n} \varphi } \left( I- \lambda _{n} \nabla \psi \right) \) is \(\frac{\lambda _{n}L + 2}{4}\)-averaged. Therefore the composition

for each n.

Step 2: We show that

Let \(x^*\in \varOmega ,\) and \(T_{n}= \mathbf{prox} _{\lambda _{n} \varphi } \left( I- \lambda _{n} \nabla \psi \right) .\) From Algorithm 3.1, we compute

By condition (B3) in Assumption 4. Then there exists a constant \(M_{1} \ge 0\) such that

Step 3: We show that

It follows from Algorithm 3.1, we compute

By mathematical induction, we derive relation (11). Using condition (9), we deduce that \(\{ x_{n} \}\) is bounded. \(\square \)

Theorem 2

Assume that Assumption 3 holds. Then the sequence \(\{x_n\}\) generated by Algorithm 3.1 converges strongly to a point \(x^{*}\in \displaystyle \varOmega ,\) satisfying

Proof

Step 1: We show that

By using Algorithm 3.1, we get

Step 2: We show that

We compute as follows:

Step 3: We show that

It follows from Algorithm 3.1, we compute

Since f is \(\rho \)-contractive, we obtain

Substituting (14) into (16), we obtain

Substituting (13) into (17), we obtain

where \( M_{2}= \sup _{n\in {\mathbb {N}}} \left\{ \Vert f(\theta _{n-1} ) \Vert + \Vert T_{n-1}\theta _{n-1} \Vert , \frac{\Vert \theta _{n-1}\Vert + \Vert T_{n-1} (\theta _{n-1}) \Vert }{a } \right\} .\) This implies

Taking \( \beta _{n} = M_{2} ( \mid \alpha _{n}-\alpha _{n-1} \mid + \mid \lambda _{n} - \lambda _{n-1} \mid ) + ( 1- \alpha _{n}( 1- \rho ) ) (\alpha _{n} + \alpha _{n-1} )M_{1} + \Vert e(\theta _{n}) \Vert + \Vert e(\theta _{n-1}) \Vert ,\) \( \gamma _{n}= \alpha _{n}( 1- \rho )\) and \( \delta _{n}= \frac{(-1) \mid \lambda _{n} - \lambda _{n-1} \mid ( \Vert \theta _{n-1}\Vert + \Vert T_{n-1} (\theta _{n-1}) \Vert ) }{a (1- \rho )} \) in equation (18), we have that

Applying Lemma 5 on (19), we deduce that \(\displaystyle \underset{n \rightarrow \infty }{\lim } \Vert x_{n+1}-x_{n} \Vert =0.\)

Step 4: We show that

We apply Lemma 3 to obtain

Letting \(\lambda _{n_{j}} \rightarrow \lambda \) as \(j \rightarrow \infty \) in (21), we derive (20).

Step 5: We show that

We compute

Since \(\{ x_{n} \}\) is bounded, then there exists a subsequence \(\{ x_{n_{j}} \}\) such that \(x_{n_{j}} \rightharpoonup z\) as \( j \rightarrow \infty .\) That is, z is a cluster point of \(\{ x_{n} \}\). Taking limit on both sides of (23) using (16), (20) and the hypothesis on \(\{ \alpha _{n}\}.\) We obtain (22). Since \(x_{n_{j}} \rightharpoonup z\) as \( j \rightarrow \infty \) and \( (I-T)x_{n_{j}} \rightarrow 0 ,\) then by Lemma 4, we have that \((I-T)z=0\) which implies that \(z\in \varOmega .\) Hence \(\omega _{W}(x_{n} ) \subset \varOmega .\)

Step 6: We show that

To see this, let \(\{ \theta _{n_{j}} \}\) be arbitrary subsequence of \(\{ \theta _{n} \}\) such that

Since \(\{ \theta _{n_{j}} \}\) is bounded, it has a weakly convergent subsequence. Without loss of generality, choose \(\{ \theta _{n_{j}} \}\) has the subsequence and assume that \(\theta _{n_{j}} \rightharpoonup u\in \varOmega .\) Then, by our hypothesis, we have

Hence,

Step 7: We show that

It follows from Algorithm 3.1, we compute

Applying Lemma 1, we get

Substituting (13) into (26) yields

where \(M_{ 3}= \sup _{n} \{ 2 \left( \alpha _{n} \Vert fx^{*}-x^{*} \Vert + \Vert \theta _{n+1}-x^{*} \Vert \right) \} < \infty \). Setting \(\gamma _{n}= \alpha _{n}( 1-\rho ^{2}),\) \(\beta _{n}= M_{ 3}\Vert e(\theta _{n} \Vert \) and \(\delta _{n}= \frac{2}{1-\rho ^{2}}\langle fx^{*}-x^{*} , \theta _{n+1}-x^{*} \rangle \). We have

Hence by Lemma 5, we get \( \displaystyle \underset{n \rightarrow \infty }{\lim } \Vert x_{n}- x^{*} \Vert =0.\) This completes the proof. \(\square \)

Theorem 3

Under the same condition of Theorem 2. Let \(\{ \beta _{n} \}\) and \(\{ v_{n} \}\) satisfy the assumption in Definition 1. Then Algorithm 3.1 is bounded perturbation resilient. That is, the sequence generated by Algorithm 3.2 converges strongly to \(x^{*}\in \varOmega .\)

Proof

Algorithm 3.2 can be rewritten as

where

We check that \( \sum\nolimits _{n=0}^\infty \Vert e(\theta _{n}) \Vert < + \infty .\) Applying Lemma 3, we get

This means that \( \displaystyle \sum _{n=0}^\infty \Vert e(\theta _{n}) \Vert < + \infty \). Therefore, the conclusion follows from Theorem 2. \(\square \)

Remark 1

Typically, the inertial scheme speeds up (boosts) the iterative sequence towards the solution set. It provides a major advantage over the execution time and the number of iterations for large-scale problems.

Remark 2

If \(\zeta _{n}= 0\) in Algorithm 3.2, then we obtain the modified proximal gradient algorithm with superiorization method of Guo and Cui [16] as follows:

Remark 3

[28] The condition (B3) is easily satisfied in numerical computation because the valued of \(||x_n-x_{n-1}||\) is known before choosing \(\zeta _n\). Indeed, the parameter \(\zeta _n\) can be chosen such that \(0 \le \zeta _n \le \bar{\zeta _n}\), where

where \(\{\tau _n\}\) is a positive sequence such that \(\tau _n =o(\alpha _n)\) and \(\zeta _n \in [0,\tau ]\subset [0,1)\).

3.1 Application to image recovery problems

In this section, we illustrate the relevance of our theorem with a linear inverse problem called LASSO (Least Absolute Shrinkage and Selection Operator) in compressed sensing (see in [35,36,37,38]).

Let \(A: H \rightarrow H\) be a bounded linear operator on a real Hilbert space. The image recovery model can be formulated as:

where \(\omega \) is an unknown noise vector, x is an unknown image, and b is the image (degraded observation) measurement. Assume problem (29) is consistent, then it can be recast as a least-squares problem:

where \(\mu > 0\) is a regularization parameter. We now apply our Theorem 3 to approximate the solutions of problem (30). Setting \(\psi (x)= \frac{1}{2}\Vert Ax-b \Vert ^{2}\), \(\varphi (x) = \mu \Vert x \Vert \). We see that both \(\varphi \) and \(\psi \) are convex, lower semi continuous and proper functions with \(\nabla \psi (x)= A^{*}( Ax-b)\) for each \(x\in H.\) Also \(\nabla \psi \) is Lipschitz continuous with \(L= \Vert A \Vert ^{2}.\) Indeed, the composite \( \psi (x) + \varphi (x) := \varPhi \) is coercive and the subdifferential of \(\varphi \) is

While

The conclusion of our results in Theorem 3 thus applies with suitable standard assumption on the sequence parameters.

4 Numerical experiments

In this section, we present the numerical results of proposed algorithm and compare the performance with algorithm (27) of Guo and Cui [16] in signal recovery. For clarity sake, we reformulate problem (30) in \(H={\mathbb {R}}^N\) as follows:

where \(A: {\mathbb {R}}^{N}\rightarrow {\mathbb {R}}^{ M}~(M< N)\) is a linear and bounded matrix operator and \(b\in {\mathbb {R}}^{M}\) is the observed or measured data with a noise \(\omega .\) The positive scalar \(\mu \) called the regularization parameter and \(x\in {\mathbb {R}}^{N}\) is a sparse vector with m nonzero components to be recovered. We claim that \(\psi (x)= \frac{1}{2}\Vert Ax-b \Vert _2^{2}\) and \(\varphi (x) = \mu \Vert x \Vert _1 \) be an indicator function with proximal map as

where \(s_{n}^{k}= \mathbf{sgn} (x_{n}^{k} ) \cdot \max \{ | x_{n}^{k}|- \mu , 0 \}\) for \(k=1, 2, \ldots , N.\) It is worth noting that the subdifferential \(\partial \varphi \) at \(x_n\) is

Then the bounded sequence \(\{ v_{n} \}\) defined by

and the summarizable positive real sequence \(\beta _n=\frac{1}{5n}\). We set \(f(x)=\frac{x}{2}\) and control parameters \(\alpha _{n} =\frac{1}{n+1}\), \(\lambda _{n} =\frac{0.3}{ \Vert A \Vert ^2}\), \(\mu =\frac{1}{L}\). Let \(\tau =0.5\), \(\tau _n=\frac{1}{n^2}\) for each n and define \(\zeta _n\) as in Remark 3

In experiment 1, the matrix \( A^{M\times N} \) entries are sampled independently from a normal distribution of zero mean and unit variance. The observation vector b is generated from a Gaussian noise with signal-to-noise ratio \(\text {SNR}=40\). The sparse vector x is generated from a uniform distribution in the interval \([-2, 2]\) with m nonzero elements. The initial point \(x_0, x_1\) is picked randomly. The restoration accuracy is measured by the mean square error as \(E_{n}=\frac{1}{N} \Vert x_{n}- x^{*} \Vert < 10^{-4},\) where \(x^{*}\) is the estimated signal of x. The numerical results are reported as follows:

Test 1: Set \(N=1024\), \(M=512\) and \(m=10\).

Test 2: Set \(N=512\), \(M=256\) and \(m=10\).

In experiment 2, the matrix \( A^{M\times N} \) entries are sampled independently from a Gaussian distribution of zero mean and unit variance. The observation vector b is generated from a Gaussian noise with signal-to-noise ratio \(\text {SNR}=50\). The vector b is generated from a uniformly distribution in the interval \([-2, 2]\) with m nonzero elements. The restoration accuracy is measured by the mean square error as \(E_{n}=\frac{1}{N} \Vert x_{n}- x^{*} \Vert < 10^{-4},\) where \(x^{*}\) is the estimated signal of x. The numerical results are reported as follows:

Test 3: Set \(N=1024\), \(M=512\) and \(m=35\).

Test 4: Set \(N=512\), \(M=256\) and \(m=35\).

From Figs. 1, 2, 4 and 5, it is guarantee that our algorithm can recover the original signal faster than Guo and Cui algorithm (28) in the compressed sensing. Moreover, Figs. 3 and 6 report that objective function values generated by Algorithm 3.2 decrease faster than Guo and Cui algorithm (28) in all of numerical tests.

5 Conclusions

In this article, we suggest an inertial viscosity proximal gradient algorithm which is proved effective to handle a non-smooth composite optimization problem with large datasets in real Hilbert spaces. We investigate its convergence and bounded perturbation resilience properties. Our results are demonstrated, and its performance is compared with Algorithm (28) of Guo and Cui [16] with respect to the signal recovery problem. The advantage of our proposed algorithm over some previous works is the reduction in the computational cost and the CPU time in computing numerical results. Numerical studies show that inertial effects usually improve the performance of the iterative sequence in terms of convergence in this context.

References

Bauschke HH, Combettes PL (2011) Convex analysis and monotone operator theory in Hilbert spaces. Springer, New York

Engl HW, Hanke M, Neubauer A (1996) Regularization of inverse problems. Kluwer academic, Dordrecht

Bach F, Jenatton R, Mairal J, Obozinski G (2012) Optimization with sparsity-inducing penalties. Found Trends Mach Learn 4(1):1–106

Beck A, Teboulle M (2009) Gradient-based algorithms with applications to signal-recovery problems. In: Palomar D, Eldar Y (eds) Convex optimization in signal processing and communications. Cambridge University Press, Cambridge, pp 42–88. https://doi.org/10.1017/CBO9780511804458.003

Figueiredo M, Nowak R (2003) An EM algorithm for wavelet-based image restoration. IEEE Trans Image Process 12(8):906–916

Nikazad T, Davidi R, Herman GT (2012) Accelerated perturbation-resilient block-iterative projection methods with application to image reconstruction. Inverse Probl 28(3):035005. https://doi.org/10.1088/0266-5611/28/3/035005

Helou ES, Zibetti MVW, Miqueles EX (2017) Superiorization of incremental optimization algorithms for statistical tomographic image reconstruction. Inverse Probl 33(4):044010. https://doi.org/10.1088/1361-6420/33/4/044010

Davidi R, Herman GT, Censor Y (2009) Perturbation-resilient block-iterative projection methods with application to image reconstruction from projections. Int Trans Oper Res 16(4):505–524

Combettes PL, Pesquet JC (2011) Proximal splitting methods in signal processing. In: Bauschke HH, Burachik RS, Combettes PL, Elser V, Russell Luke D, Wolkowicz H (eds) Fixed-point algorithms for inverse problems in science and engineering, vol 49. Springer, New York, pp 185–212

Dunn JC (1976) Convexity, monotonicity, and gradient processes in Hilbert space. J Math Anal Appl 53(1):145–158

Wang C, Xiu N (2000) Convergence of the gradient projection method for generalized convex minimization. Comput Optim Appl 16(2):111–120

Güler O (1991) On the convergence of the proximal algorithm for convex minimization. SIAM J Control Optim 29(2):403–419

Parikh N, Boyd S (2014) Proximal algorithm. Found Trends Optim 1(3):123–231

Rockafellar RT (1976) Monotone operators and the proximal point algorithm. SIAM J Control Optim 14(5):877–898

Xu HK (2014) Properties and iterative methods for the lasso and its variants. Chin Ann Math Ser B 35(3):501–518

Guo Y, Cui W (2018) Strong convergence and bounded perturbation resilience of a modified proximal gradient algorithm. J Inequal Appl. https://doi.org/10.1186/s13660-018-1695-x

Moudafi A (2000) Viscosity approximation methods for fixed-points problems. J Math Anal Appl 241(1):46–55

Censor Y, Davidi R, Herman GT (2010) Perturbation resilience and superiorization of iterative algorithms. Inverse Probl 26(6):065008. https://doi.org/10.1088/0266-5611/26/6/065008

Davidi R, Censor Y, Schulte RW, Geneser S, Xing L (2015) Feasibility-seeking and superiorization algorithm applied to inverse treatment planning in radiation therapy. Contemp Math 636:83–92

Schrapp MJ, Herman GT (2014) Data fusion in X-ray computed tomography using a superiorization approach. Rev Sci Instrum 85:053701. https://doi.org/10.1063/1.4872378

Xu HK (2017) Bounded perturbation resilience and superiorization techniques for the projected scaled gradient method. Inverse Probl 33(4):044008. https://doi.org/10.1088/1361-6420/33/4/044008

He H, Xu HK (2017) Perturbation resilience and superiorization methodology of averaged mappings. Inverse Probl 33(4):044007. https://doi.org/10.1088/1361-6420/33/4/044007

Censor Y, Zaslavski AJ (2013) Convergence and perturbation resilience of dynamic string-averaging projection methods. Comput Optim Appl 54(1):65–74

Polyak BT (1964) Some methods of speeding up the convergence of iterative methods. Ž Vyčisl Mat i Mat Fiz 4:1–17

Nesterov Y (1983) A method for solving the convex programming problem with convergence rate O(1/k2). Dokl. Akad. Nauk SSSR 269:543–547

Dang Y, Sun J, Xu H (2017) Inertial accelerated algorithms for solving a split feasibility problem. J Ind Manag Optim 13(3):1383–1394

Dong QL, Yuan HB, Cho YJ, Rassias ThM (2016) Modified inertial Mann algorithm and inertial CQ-algorithm for nonexpansive mappings. Optim Lett 12(1):87–102

Suantai S, Pholasa N, Cholamjiak P (2018) The modified inertial relaxed CQ algorithm for solving the split feasibility problems. J Ind Manag Optim 14:1595–1615

Cholamjiak W, Cholamjiak P, Suantai S (2018) An inertial forward–backward splitting method for solving inclusion problems in Hilbert spaces. J Fixed Point Theory Appl 20(1):42. https://doi.org/10.1007/s11784-018-0526-5

Kankam K, Pholasa N, Cholamjiak P (2019) On convergence and complexity of the modified forward–backward method involving new line searches for convex minimization. Math Methods Appl Sci 42(5):1352–1362

Suantai S, Pholasa N, Cholamjiak P (2019) Relaxed CQ algorithms involving the inertial technique for multiple-sets split feasibility problems. Rev R Acad Cienc Exactas Fís Nat Ser A Mat 113(2):1081–1099

Kesornprom S, Cholamjiak P (2019) Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 68(12):2365–2391

Byrne C (2004) A unified treatment of some algorithms in signal processing and image construction. Inverse Probl 20:103–120

Combettes PL, Wajs VR (2005) Signal recovery by proximal forward–backward splitting. Multiscale Model Simul 4(4):1168–1200

Abubakar AB, Kumam P, Awwal AM (2019) Global convergence via descent modified three-term conjugate gradient projection algorithm with applications to signal recovery. Results Appl Math. https://doi.org/10.1016/j.rinam.2019.100069

Abubakar AB, Kumam P, Awwal AM, Thounthong P (2019) A modified self-adaptive conjugate gradient method for solving convex constrained monotone nonlinear equations for signal recovery problems. Mathematics 7(8):693. https://doi.org/10.3390/math7080693

Padcharoen A, Kumam P, Chaipunya P, Shehu Y (2020) Convergence of inertial modified Krasnoselskii–Mann iteration with application to image recovery. Thai J Math 18(1):126–142

Cholamjiak P, Kankam K, Srinet P, Pholasa N (2020) A double forward–backward algorithm using linesearches for minimization problem. Thai J Math 18(1):63–76

Acknowledgements

This work was completed while the first author, Nuttapol Pakkaranang, was visiting North University Center at Baia Mare, Technical University of Cluj-Napoca on 24 Feburuary–24 May, 2019. He thanks Professor Vasile Berinde for their hospitality and support by ERAMUS+ project and also thanks the Thailand Research Fund (TRF) support through the Royal Golden Jubilee Ph.D. (RGJ-PHD) Program (Grant No. PHD/0205/2561). The second author, Poom Kumam, was supported by the Thailand Research Fund and the King Mongkut’s University of Technology Thonburi (KMUTT) under the TRF Research Scholar (Grant No. RSA6080047). The fourth author, Yusuf I. Suleiman, thanks King Mongkut’s University of Technology Thonburi (KMUTT) for visiting research 6 months (October 2018–March 2019). Furthermore, this project was supported by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), KMUTT.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Pakkaranang, N., Kumam, P., Berinde, V. et al. Superiorization methodology and perturbation resilience of inertial proximal gradient algorithm with application to signal recovery. J Supercomput 76, 9456–9477 (2020). https://doi.org/10.1007/s11227-020-03215-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-020-03215-z

Keywords

- Composite optimization problems

- Proximal gradient algorithm

- Inertial algorithm

- Superiorization methodology

- Bounded perturbation resilience