Abstract

The maximum likelihood estimation in the finite mixture of distributions setting is an ill-posed problem that is treatable, in practice, through the EM algorithm. However, the existence of spurious solutions (singularities and non-interesting local maximizers) makes difficult to find sensible mixture fits for non-expert practitioners. In this work, a constrained mixture fitting approach is presented with the aim of overcoming the troubles introduced by spurious solutions. Sound mathematical support is provided and, which is more relevant in practice, a feasible algorithm is also given. This algorithm allows for monitoring solutions in terms of the constant involved in the restrictions, which yields a natural way to discard spurious solutions and a valuable tool for data analysts.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Finite mixtures of distribution have been extensively applied in the statistical literature to model very different types of data (see, e.g., the monographies by Titterington et al. 1985 and McLachlan and Peel 2000). This wide use has been motivated by the existence of feasible algorithms, mainly based on variations of the expectation-maximization (EM) algorithm of Dempster et al. (1977). However, in practice, there are several difficulties which prevent the simple use for practitioners.

This paper focuses on the most extensively analyzed problem in mixture modeling. This deals with fitting a mixture of \(G\) normal components to a given data set \(\{x_1,\ldots ,x_n\}\) in \(\mathbb {R}^p\). Moreover, we will assume that the number of components \(G\) is fixed beforehand. Our framework is that of “Maximum Likelihood (ML)”, which makes us consider (log-)likelihoods as

where \(\varphi (\cdot ;\mu ,\Sigma )\) stands for the probability density function of the \(p\)-variate normal distribution with mean \(\mu \) and covariance matrix \(\Sigma \).

One of the main difficulties in this context is that the maximization of the log-likelihood (1) without any constraint is an ill-posed problem (Day 1969). It is known that \(\varphi (x_i;x_i,\Sigma _g)\) tends to infinity when \(\det (\Sigma _g)\) approximates 0, making the target function (1) unbounded. Moreover, there exist many non-interesting local maximizers of (1), which are often referred to as spurious solutions. The choice of meaningful local maximizers (avoiding singularities and spurious solutions) is thus an important, but complex, problem.

McLachlan and Peel (2000), after showing some illustrative examples of this problem, proposed monitoring the local maximizers of (1), obtained after the application of EM type algorithms and carefully evaluating them by resorting to appropriate statistical tools. Unfortunately, this evaluation is not an easy task for practitioners without enough statistical expertise as can be seen, for instance, in Sect. 5.2.

An alternative approach is based on considering different constrains on the \(\Sigma _g\) scatter matrices. Most of them are based on imposing constraints on the elements of the decomposition of the scatter matrices in the form

(see, e.g., Banfield and Raftery 1993 and Celeux and Diebolt 1995), where \(\lambda _g\) is the largest eigenvalue, \(D_g\) is the matrix of eigenvectors of \(\Sigma _g\) and \(A_g\) is a diagonal matrix. Considering the \(\lambda _g\), \(D_g\) and \(A_g\) as independent sets of parameters, the idea is to constrain them to be the same among the different mixture components or allow them to vary among mixture components.

Penalized maximum likelihood approaches were considered (see, e.g., Chen and Tan 2009 and Ciuperca et al. 2003) to overcome the problem of unboundedness of the likelihood, and Fraley and Raftery (2007) proposed a Bayesian regularization approach to address the problem of the spurious solutions.

Another possibility for transforming the maximization of (1) into a well-defined problem goes back to Hathaway (1985) [he also refereed to Dennis 1982, who, in turn, cited to Beale and Thompson (oral communications)]. In the univariate case, Hathaway’s approach is based on the maximization of (1) under the constraint

where \(c\ge 1\) is a fixed constant and \(\Sigma _g=\sigma _g^2\) are the variances of the univariate normal mixture components. Hathaway also outlined an extension to multivariate problems based on the eigenvalues of matrices \(\Sigma _j\Sigma _k^{-1}\), with \(1\le j \ne k \le G\). To the best of our knowledge, this extension has not been implemented in practical applications due to the non-existence of appropriate algorithms for carrying out the associated constrained maximization. In fact, Hathaway’s attempt to provide an algorithm for this goal, even in the univariate case, addressed a different (but not equivalent) problem through the constraints

These more feasible constraints were proposed as an alternative to constraints (2) in Hathaway (1983, 1986).

This paper addresses an easy extension of constraint (2), which allows for a computationally feasible algorithm. The approach is based on controlling the maximal ratio between scatter matrices eigenvalues as it has been already considered by the authors in a (robust) clustering framework (García-Escudero et al. 2008). However, our aim there was (robustly) to find clusters or groups in a data set instead of modeling it with a finite mixture. Although the two problems are clearly related, we are now using “mixture” likelihoods instead of (trimmed) “classification” likelihoods. In both approaches, a constant \(c\) serves to control the strength of the constraints on the eigenvalues.

Similar type of constraints on the eigenvalues of the scatter matrices have been also considered in Ingrassia and Rocci (2007). Gallegos and Ritter (2009a) considered other type of constraints on the \(\Sigma _j\) scatter matrices in (robust) clustering by resorting to the Löwner matrix ordering \((\preceq )\). To be more specific, they constrained the scatter matrices to satisfy \(\Sigma _j \succeq c^{-1}\Sigma _k\) for every \(j\) and \(k\). Gallegos and Ritter (2009b) applied this type of constraints to the mixture fitting problem.

The consideration of constraints in these problems must be supported and guided by a double perspective. On the one hand, it should be soundly justified from a mathematical point of view. On the other hand, its numerical implementation should be feasible at an affordable computational cost. Thus, the main contributions of this work are the results justifying the proposed approach from a theoretical point of view and the presentation of a feasible algorithm. Additionally, it is shown that this methodology not only yields a natural way to discard spurious solutions, but it also allows for monitoring solutions in terms of the constant involved in the restrictions. Hence, it can be considered as a valuable tool for data analysts.

Regarding the mathematical aspects of the problem, we will prove the existence and consistency of constrained solutions under very general assumptions. Hathaway (1986) provided existence and consistency results in the univariate case that, surprisingly, have not been properly extended to multivariate cases. In any case, even in the one-dimensional setup, our results are considerably more general due to the milder conditions imposed on the underlying distribution. For instance, our results cover not only the “perfect” normal mixture model (as is the case of Hathaway’s results), but also the more realistic assumption that the underlying model is “close” to a normal mixture model. A direct consequence of these results is that the proposed constraints lead to well-defined underlying theoretical or population problems. Without them, it is not perfectly clear the true goal we are pursuing when applying the EM algorithm. In other words, the “maximization” of the target function (1) without constraints results in an EM algorithm whose performance strongly depends on the probabilistic way that it is initialized (see, e.g., Maitra 2009).

Even though the considered constraints result in mathematically well justified problems, it is very important to develop feasible and fast enough algorithms for their practical implementation. With this in mind, the direct adaptation of the type of algorithm introduced in García-Escudero et al. (2008) is not satisfactory at all. This type of algorithm implies solving several complex optimization problems in each iteration of the algorithm, through Dykstra’s algorithm (Dykstra 1983). Instead of considering this type of algorithm, we propose adapting the algorithm in Fritz et al. (2013) to this mixture fitting problem. The proposed adaptation provides an efficient algorithm for solving the constrained maximization of (1).

As already commented, Gallegos and Ritter (2009b) considered constraints on the scatter matrices by resorting to the Löwner matrix orderings. However, a specific algorithm was not given to solve those problems for a fixed value of the constant \(c\). Instead, they proposed obtaining all local maxima of the (trimmed) likelihood and investigate the value of \(c\) needed in order that each solution fulfills one of these constraints.

Ingrassia and Rocci (2007) proposed algorithms trying to approximate the solution of Hathaway’s original constraints for the multivariate case. In this way, they suggested algorithms based on truncating the scatter matrices eigenvalues by using known lower and upper bounds on these eigenvalues. When no suitable external information is available for bounding them, they also considered a bound on the ratio of the eigenvalues as we do in this work (\(c\) must be replaced by \(c^{-1}\)). However, their algorithm for this last proposal did not directly maximize the likelihood as done in Step 2.2 of our algorithm. Their algorithm was based on obtaining iterative estimates of \(\eta \), which is a lower bound on the scatter matrices eigenvalues, to properly truncate the eigenvalues and thus, as the authors commented in their paper, the proposed algorithm is quite sensitive to the proper determination of an initial good choice \(\eta _0\) for parameter \(\eta \).

The outline of the work is as follows. We properly state the constrained problem and give mathematical properties that support its interest in Sect. 2. Section 3 is devoted to the description of the proposed algorithm. Section 4 presents a simple simulation study to show how the use of the proposed constraints avoids the detection of spurious solutions. In Sect. 5, we analyze some examples already considered in the literature. Throughout them, we illustrate how alternative mixture fits can be explored when moving the constant \(c\) defining the constraints. Finally, we conclude in Sect. 6 where some hints are also presented about how to explore these alternative mixture fits.

2 Problem statement and theoretical results

Let us denote by \(\{x_1,\ldots ,x_n\}\subset \mathbb {R}^p\) an i.i.d random sample from an underlying distribution \(P\). We could ideally assume that \(P\) is a mixture of \(G\) multivariate normal components but, in the presented results, only mild assumptions on the underlying distribution \(P\) will be required. Given this sample, the proposed approach is based on the maximization of the mixture log-likelihood given in (1) but with the additional:

(ER) eigenvalues-ratio constraint

for

with \(\lambda _l(\Sigma _g)\) being the eigenvalues of the \(\Sigma _g\) scatter matrices, with \(g=1,\ldots ,G\) and \(l=1,\ldots ,p\), and \(c\ge 1\) being a fixed constant.

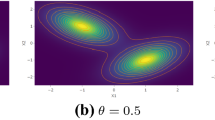

This type of constraints simultaneously controls differences between groups and departure for sphericity. Note that the relative length of the equidensity ellipsoids axes based on \(\varphi (\cdot ;\mu _g,\Sigma _g)\) is forced to be smaller than \(\sqrt{c}\) (Fig. 1). The smaller \(c\), the more similarly scattered and spherical the mixture components are. For instance, these ellipsoids reduce to balls with the same radius in the most constrained \(c=1\) case.

The previously stated empirical problem admits an underlying theoretical or population counterpart:

Constrained mixture-fitting problem Given a probability measure \(P\), maximize:

in terms of the parameters \(\theta \!=(\pi _1,\ldots ,\pi _G,\mu _1,\ldots ,\mu _G,\Sigma _1,\ldots ,\Sigma _G)\) corresponding to weights \( \pi _g \in [0,1]\), with \(\sum _{g=1}^{G}\pi _g=1\), location vectors \(\mu _g \in \mathbb {R}^p\) and symmetric positively definite \((p\times p)\)-matrices \(\Sigma _g\) satisfying the (ER) constraint for a fixed constant \(c\ge 1\). The set of \(\theta \) parameters obeying these conditions is denoted by \(\varTheta _c\).

If \(P_n\) stands for the empirical measure, \(P_n=(1/n)\sum _{i=1}^{n}\delta _{\{x_i\}}\), we recover the original empirical problem by replacing \(P\) by \(P_n\).

In this section, we give results guaranteeing the existence of both empirical and population problem solutions, together with a consistency result of the empirical solutions to the population one. These two results only require mild assumptions on the underlying distribution \(P\). Namely, we require \(P\) to have finite second moment, i.e. \(E_P[\Vert \cdot \Vert ^2]<\infty \), and to avoid that \(P\) is completely unappropriate for a mixture fitting approach by requesting:

- (PR) :

-

The distribution \(P\) is not concentrated on \(G\) points.

This condition trivially holds for absolutely continuous distributions and for empirical measures corresponding to large enough samples drawn from an absolutely continuous distribution.

We can state the following general existence result:

Proposition 1

If (PR) holds for distribution \(P\) and \(E_P[\Vert \cdot \Vert ^2]<\infty \), then there exists some \(\theta \in \varTheta _c\) such that the maximum of (3) under (ER) is achieved.

The following consistency result also holds under similar assumptions:

Proposition 2

Let us assume that (PR) holds for the distribution \(P\) with \(E_P[\Vert \cdot \Vert ^2]<\infty \) and \(\theta _0\) be the unique maximum of (3) under (ER). If \(\theta _n \in \varTheta _c\) denotes a sample version estimator based on the empirical measure \(P_n\), then \(\theta _n \rightarrow \theta _0\) almost surely.

Recall that the original problem without the (ER) constraint is an ill-posed problem and, thus, results like the previous ones are not possible.

The proofs of these results, which will be given in the Appendix, follow similar arguments as those given for the existence and consistency results of the TCLUST method in García-Escudero et al. (2008). However, a special mathematical treatment is now needed. For instance, the consistency result presented in García-Escudero et al. (2008) needed an absolutely continuous distribution \(P\) with strictly positive density function (in the boundary of the set including the non-trimmed part of the distribution). This condition was needed due to the “trimming” approach considered by the TCLUST methodology. On the other hand, the new results for mixtures do not longer need this assumption, but they need finite second order moments to control the tails of the mixture components. The tails of the distribution were not problematic when considering trimming.

With respect to the uniqueness condition, it can be guaranteed when \(P\) is a mixture of \(G\) normal components once we choose a large enough \(c\) such that its scatter matrices belong to the set \(\varTheta _c\). This would also imply the consistency toward the parameters of the mixture. Moreover, the uniqueness condition often holds for smaller values of \(c\). Unfortunately, stating general uniqueness results is not an easy task even in the most simple cases.

The presented approach is obviously not affine equivariant due to the type of constraints considered. Although the approach becomes closer to affine equivariance when considering large \(c\) values, it is always recommended to standardize the variables when very different measurement scales are involved.

Finally, we would like to comment that Hennig (2004) and Greselin and Ingrassia (2010) can also be seen as first steps toward the theoretical results presented here.

3 A feasible algorithm

In this section, we propose an algorithm that essentially follows the same scheme adopted by standard EM algorithms in mixture fitting. However, in this new algorithm, it is very important to update the parameters in the EM algorithm in such a way that the scatter matrices satisfy the required eigenvalues ratio constraint. The proposed algorithm may be described as follows:

-

1.

Initialization The procedure is initialized nstart times by selecting different \(\theta ^{(0)}=(\pi _1^{(0)},\ldots ,\pi _G^{(0)},\mu _1^{(0)},\ldots ,\) \(\mu _G^{(0)}, \Sigma _1^{(0)},\ldots ,\Sigma _G^{(0)})\). For this purpose, we propose randomly selecting \(G(p+1)\) observations and computing \(G\) mean centers \(\mu _g^{(0)}\) and \(G\) scatter matrices \(\Sigma _g^{(0)}\) from them. The cluster scatter matrix constraints (to be described in Step 2.2) are applied to these initial \(\Sigma _g^{(0)}\) scatter matrices, if needed. Weights \(\pi _1^{(0)},\ldots ,\pi _G^{(0)}\) in the interval \((0,1)\) and summing up to 1 are also randomly chosen.

-

2.

EM steps The following steps are alternatively executed until \(\Vert \theta ^{(l+1)}-\theta ^{(l)}\Vert \le \zeta \) for a small constant \(\zeta >0\) (after arranging properly the elements of \(\theta \) in a vector) or until a maximum number of iterations iter.max is reached.

-

2.1.

E-step We compute the posterior probabilities for all the observations by using the current \(\theta ^{(l)}\) as

$$\begin{aligned} \tau _g\left( x_i;\theta ^{(l)}\right) = \frac{\pi _g^{(l)}\varphi \left( x_i;\mu _g^{(l)},\Sigma _g^{(l)}\right) }{ \sum _{g=1}^G \pi _g^{(l)}\varphi \left( x_i;\mu _g^{(l)},\Sigma _g^{(l)}\right) }. \end{aligned}$$(4) -

2.2.

M-step We update the \(\theta ^{(l)}\) parameters as

$$\begin{aligned} \pi _g^{(l+1)}= \sum _{i=1}^n \tau _g\left( x_i;\theta ^{(l)}\right) \big /n \end{aligned}$$and

$$\begin{aligned} \mu _g^{(l+1)}= \sum _{i=1}^n \tau _g\left( x_i;\theta ^{(l)}\right) x_i \bigg / \sum _{i=1}^n \tau _g\left( x_i;\theta ^{(l)}\right) . \end{aligned}$$Updating the scatter estimates is more difficult given that the sample covariance matrices

$$\begin{aligned} T_g&= \sum _{i=1}^n \tau _g\left( x_i;\theta ^{(l)}\right) \left( x_i-\mu _g^{(l+1)}\right) \left( x_i-\mu _g^{(l+1)}\right) '\\&\bigg / \sum _{i=1}^n \tau _g\left( x_i;\theta ^{(l)}\right) \end{aligned}$$may not satisfy the required eigenvalues ratio constraint. In this case, the singular-value decomposition of \(T_g=U_g'D_g U_g\) is considered for each \(T_g\) matrix, with \(U_j\) being orthogonal matrices and \(D_g=\text {diag}(d_{g1},d_{g2},\ldots ,d_{gp})\) diagonal matrices. Let us define the truncated eigenvalues as

$$\begin{aligned}{}[d_{gl}]_m = \left\{ \begin{array}{ll} d_{gl}&{}\quad \text {if}\quad d_{gl}\in [ m,cm] \\ m&{} \quad \text {if}\quad d_{gl}<m \\ cm&{}\quad \text {if}\quad d_{gl}>cm \end{array} \right. , \end{aligned}$$(5)where \(m\) is some threshold value. The scatter matrices are finally updated as

$$\begin{aligned} \sum \nolimits _g^{(l+1)}=U_g'D_g^*U_g, \end{aligned}$$with \(D_g^*=\text {diag}\left( [d_{g1}]_{m_{\text {opt}}},[d_{g2}]_{m_{\text {opt}}},\ldots ,[d_{gp}]_{m_{\text {opt}}} \right) \) and \(m_{\text {opt}}\) minimizing the real valued function

$$\begin{aligned} m \mapsto \sum \limits _{g=1}^{G} \pi _g^{(l+1)} \sum \limits _{l=1}^{p}\left( \log \left( [d_{gl}]_m\right) +\frac{ d_{gl}}{[d_{gl}]_m}\right) . \end{aligned}$$(6)

-

2.1.

-

3.

Evaluate target function After applying the EM steps, the value of the target function (1) is computed for each random initialization. The set of parameters yielding the highest value of the target function is returned as the algorithm final output.

Remark 1

There is a closed form for obtaining \(m_{\text {opt}}\) just by evaluating \(2pG+1\) times the function appearing in (6). To do that, let us consider \(e_1 \le e_2 \le \cdots \le e_{2Gp}\) obtained by ordering the values

and consider any \(2pG+1\) values satisfying \(f_1<e_1\le f_2 \le e_2 \le \cdots \le f_{2Gp} \le e_{2Gp} < f_{2Gp+1}.\) Compute

for \(i=1,\ldots ,2Gp+1\), and choose \(m_{\text {opt}}\) as the value of \(m_i\) which yields the minimum value of (6).

In each M-step, the constrained maximization in (1) just needs to perform the minimization of the univariate function (6) instead of the minimization on \(G p\) parameters in expression (3.4) given in García-Escudero et al. (2008) under \(Gp(Gp-1)/2\) linear constraints. This original problem was computationally expensive even for moderately high values of \(G\) or \(p\). On the other hand, with this new algorithm, the computing times are not drastically increased with respect to other (unrestricted) EM mixture fitting algorithms. Apart from the obtention of the singular-value decompositions of the \(T_g\) matrices, only the evaluation of a real valued function \(2Gp+1\) times is needed in this modified M-step. Therefore, this is a quite affordable modification in terms of computing times with respect to standard EM algorithms.

The justification of Step 2.2 and Remark 1 follows exactly the same lines as in Fritz et al. (2013). Once the \(\tau _g(x_i;\theta ^{(l)})\) weights are fixed, the maximization of the likelihood done in the M-step essentially coincides with that of the “classification” likelihood in Fritz et al. (2013).

4 Simulation study

In this section, a simple simulation study is given to see how the constrained mixture fitting algorithm actually works. The simulation study is based on a random sample drawn from a distribution \(P\) which is made of two normal components in dimension \(p=2\) with density

Independent and identically distributed noise variables drawn from the standard normal distribution are added to the two dimensional data sets generated from (7) in order to obtain data sets in dimensions \(p=6\) and \(10\).

We compare the results of the proposed mixture fitting algorithm for different values of \(c\) when \(G=2\) is assumed as known. Namely, we consider \(c=1\), \(c=6\), \(c=100\) and \(c=10^{10}\). Note that the “true” scatter matrices eigenvalues ratio for this two-component mixture is equal to 6. The value \(c=1\) yields the most constrained case when we would be searching for mixture components with scatter matrices being the same diagonal matrix and with the same value in its diagonal. \(c=100\) can be seen as a “moderate” choice of \(c\) (we do not want the length of any ellipsoid axis to be \(\sqrt{100}=10\) times larger than other) and \(c=10^{10}\) means an (almost) unrestricted case where the algorithm does not force any constraint on the eigenvalues, but still avoids singularities.

In this simulation study, we will need a measure of how two mixture fits, and the classification derived from them, are close to each other. Given a fitted mixture \(\mathcal {M}\) with parameters \(\theta =(\pi _1,\pi _2,\mu _1,\mu _2,\Sigma _1,\Sigma _2)\), we define

or

We can, thus, measure the “discrepancy” between two mixtures \(\mathcal {M}_1\) and \(\mathcal {M}_2\) as

We use the notation \(\delta ^{\text {Classif}}(\mathcal {M}_1, \mathcal {M}_2)\) when considering \(z_i\) as defined in (8) and the notation \(\delta ^{\text {Mixt}}(\mathcal {M}_1, \mathcal {M}_2)\) when considering \(z_i\) as in (9).

Let us denote the mixture (7) which generated our data set by \(\mathcal {M}_0\). Given that \(\mathcal {M}_0\) is known, we can measure through these \(\delta \) discrepancies how close a fitted mixture is to the “true” underlying mixture \(\mathcal {M}_0\).

Table 1 shows the result of applying the presented algorithm with nstart \(=\) 1,000 random initializations, as those proposed in Sect. 3. The performance of the algorithm for different values of the constant \(c\) is evaluated through two criteria:

-

(a)

Concordance: The number of random initializations that ends up with mixtures \(\mathcal {M}\) such that \(\delta (\mathcal {M},\mathcal {M}_0)<0.2\) and \(\delta (\mathcal {M},\mathcal {M}_0)<0.1\). In other words, we are interested in the number of random initialization which lead to mixtures that essentially coincide with the “true” underlying mixture \(\mathcal {M}_0\).

-

(b)

Spuriousness: The number of random initializations that ends up with mixtures \(\mathcal {M}\) such that \(\delta (\mathcal {M},\mathcal {M}_0)\ge 0.2\) and \(\delta (\mathcal {M},\mathcal {M}_0)\ge 0.1\) and taking strictly larger values for the target function (1) than the value obtained for the “true” solution \(\mathcal {M}_0\). That is, they are spurious solutions which do not essentially coincide with the “true” underlying mixture \(\mathcal {M}_0\), but with higher values of the likelihood.

To read this table, we must take into account that Spurious ness = 0 and Concordance \(>\) 0 are really needed for a good performance of the algorithm. With Spuriousness = 0, we are avoiding the detection of spurious solutions that would be eventually preferred (due to the value of their likelihoods) to solutions closer to the “true” one. Concordance \(>\) 0 gives the algorithm some chance of detecting a mixture close to the “true” solution. Notice that, due to the definition of Spuriousness, the sum of Concordance and Spuriousness does not add to 1,000 in Table 1.

We can see in Table 1 that the small sample size \(n=100\) makes it easier for the algorithm to detect spurious solutions. The same happens with higher dimensions as \(p=10\). However, small or even moderate values of \(c\) serve to avoid the detection of these spurious solutions. Note that the consideration of \(c=1\) (smaller than the true eigenvalues ratio which was equal to 6) is not too detrimental. We can also see that the choice of small values of the constant \(c\) increases the chance of initializations ending up close to the “true” solution. On the contrary, the use of more unrestricted algorithms entails the detection of spurious solutions and makes harder the detection of solutions close to the true one, especially, in the higher dimensional cases (even when \(n=200\)).

5 Examples

This section is based in some examples presented in McLachlan and Peel (2000) to illustrate the difficulties that spurious solutions introduce in mixture fitting problems. We see how the proposed constrained mixture fitting approach can be successfully applied to handle these difficulties.

5.1 McLachlan and Peel’s “Synthetic Data Set 3”

This data set corresponds to Fig. 3.8 in McLachlan and Peel (2000). Since it is a simulated data set, the “true” cluster partition is known and shown in Fig. 2a. It is always interesting to monitor the restricted ML solutions for different values of the constant \(c\). Figure 2 shows some of the solutions obtained for different values of constant \(c\). In Fig. 2b–f the associated cluster partition derived from the posterior probabilities is used to summarize the mixture fitting results. As already commented, spurious local maximizers correspond to solutions including populations with “little practical use or real-world interpretation”. This is the case of the solution shown in Fig. 2f. Although that solution yields a likelihood value, which is higher than the one of the “true” solution, it is clear that the model is fitting a small local random pattern in the data, rather than a proper mixture component. The ratio between the maximum and the minimum eigenvalue is close to 1,000 for this spurious solution, while it is only around 3 for the “true” one.

In this example, we obtain cluster partitions close to the “true” solution when enforcing the relative size of the eigenvalues to be smaller than (approximately) \(c=200\). This \(c=200\) level for the constraints would imply that we are no allowing relative variabilities higher than \(\sqrt{200}\simeq 14\) times in the sense of standard deviations.

We can also see that no many essentially different solutions need to be examined in the proposed monitoring approach. In order to highlight this fact, we have plotted in Fig. 3 the value of the constant \(c\) against the obtained scatter matrices eigenvalues ratio from the solution that the proposed algorithm returns for this value of constant \(c\). Note that, of course, the obtained eigenvalue ratio is always smaller or equal than \(c\) but we can also see that many times the constraint is not needed to be “enforced” in the returned solution by the proposed algorithm once an upper bound on the eigenvalues ratio is posed. We say that the constraints are “enforced” by the algorithm if \(M_n/m_n=c\) in (ER).

5.2 “Iris Virginica” data set

The well-known Iris data set, originally collected by Anderson (1935) and first analyzed by Fisher (1936), is considered in this example. This four-dimensional \((p=4)\) data set was collected by Anderson with the aim of seeing whether there was “evidence of continuing evolution in any group of plants”. Thus, it is interesting to evaluate whether “virginica” species should be split into two subspecies or not. Hence, as in McLachlan and Peel (2000)’s Sect. 3.11, we focus on the 50 virginica iris data and fit a mixture of \(G=2\) normal components to them.

McLachlan and Peel (2000) listed 15 possible local ML maximizers together with different quantities summarizing aspects as the separation between clusters, the size of the smallest cluster and the determinants of the scatter matrices corresponding to these solutions. After analyzing this information, an expert statistician could surely choose the so-called “\(S_1\)” solution as the most sensible solution among them, even though this solution is not the one providing the largest likelihood. The cluster partition associated to this \(S_1\) solution is shown in Fig. 4 in the two first principal components.

Plot of the first two principal components of “virginica” data set and the “\(S_1\)” solution in McLachlan and Peel (2000)

Unfortunately, the careful examination of such (typically big) lists of local ML maximizers is not an easy task for non-expert users. Our proposal is to compute constrained ML mixture fits for a grid of \(c\) values and choose a sensible one among the associated constrained solutions. This list of constrained solutions could be even more simplified by considering only those solutions which are essentially different (we will outline this further simplification in Sect. 6). For this example, after setting the restrictions at different \(c\) values ranging from \(c=4\) to \(c=\) 1,000, we only get essentially the solution \(S_1\). In fact, the differences with that solution reduce to less than one observation when analyzing the associated cluster partitions based on maximum posterior probabilities.

In order to reinforce previous claims, we show in Fig. 5, a huge number of local ML maximizers obtained by running the proposed algorithm with a large \(c=10^{10}\) value. McLachlan and Peel (2000) found 51 local maxima with likelihoods greater than that corresponding to \(S_1\) out of 1,000 initializations when using the stochastic version of the EM algorithm (Celeux and Diebolt 1985). Since the proposed algorithm in Sect. 3 is able to visit many local maximizers, we find 787 local maximizers with higher likelihoods, than that corresponding to \(S_1\), when considering nstart \(=\) 50,000 and iter.max \(=100\). Thus, the number of local maxima to be explored is huge even in this very simple example. The values of the log-likelihood and the eigenvalues-ratio for these ML local maximizers are plotted in Fig. 5. The same values are plotted (enclosed by square symbols) for the constrained solutions obtained from a sequence of \(c\) values on an equispaced grid within \([1,10^8]\) in a logarithmical scale. The corresponding values for 13 out of the 15 solutions listed in McLachlan and Peel (2000) are also represented enclosed by circle symbols (2 of these solutions are not found among the here obtained 787 local maxima) and the preferred \(S_1\) solution is also highlighted.

Log-likelihoods and eigenvalues-ratios for several local ML maximizers in the “Iris Virginica” data set and for the constrained ML solutions (square). The considered sequence of \(c\) values is represented by using vertical lines. The 13 solutions listed in McLachlan and Peel (2000), including the “\(S_1\)” solution, are enclosed by circle symbols

As was previously commented, all the constrained solutions when \(c\in [4,10^{3}]\) are very close to solution \(S_1\) (with very similar log-likelihood values). In fact, there are two constrained ML solutions which have smaller eigenvalue ratios than their corresponding \(c\) values which essentially coincide with the \(S_1\) solution (by examining the values reported in Table 3.9 of McLachlan and Peel 2000). Values \(c\le 4\) are only desirable if the user is actually interested in rather homoscedastic solutions (no axes lengths larger than 2 times the others) and \(c>\) 1,000 leads to solutions likely to be considered as spurious since the lengths of the ellipsoid axis are very different. Thus, the practitioner, by choosing a moderate value of constant \(c\), would have obtained the same solution (or a very close solution) as that obtained by an expert statistician.

5.3 Galaxy data set

This data set corresponds to the velocities (in thousand of km/sec) of 82 galaxies in the Corona Borealis region, analyzed in Roeder (1990). This data set was also used in McLachlan and Peel (2000) to show that clusters having relative very small variances (seemingly spurious solutions) may be sometimes also considered as legitimate ones. Of course, this data set has a very small sample size to answer this question and this forces us to be cautious with our statements as McLachlan and Peel did.

Figure 6 shows the galaxy data set and the solution for this data set proposed by McLachlan and Peel (2000) with \(G=6\) components. The obtained parameters for this mixture of normals can be seen in Table 3.8 of that book. In Fig. 6, the two clusters suspicious of being due to a spurious local maximizer of the likelihood are labeled with letters “A” (component centered around 16.127) and “B” (centered around 26.978). Both components “A” and “B” account for \(2\%\) of the mixture distribution and their variances are 781 or 5,000 times, respectively, smaller than the variance of the most scattered component.

Histogram for the galaxy data set and the \(G=6\) normal mixture fitted in McLachlan and Peel (2000)

After examining Fig. 6, it is quite logical to wonder whether components “A” and “B” can be considered just as spurious local maximizers or they are legitimate ones.

Table 2 gives the restricted ML solutions for \(c=4\), 25, 100 and 200. The “A” component is detected for this wide range of \(c\) values and, therefore, “A” can be more clearly considered as a “legitimate” population. The “B” component is also detected when we set the \(c\) value to be greater than 100. A value \(c=100\) corresponds to allowing ten times more relative variability in the sense of standard deviation. Under the premise that this relative scatter variability was acceptable, the population “B” could be seen as a legitimate population too. McLachlan and Peel (1997) provided support for the \(G=6\) components solution and Richardson and Green (1997), following a Bayesian approach, concluded that the number of components \(G\) ranges from 5 to 7.

6 Discussion

We have presented a constrained ML mixture modeling approach. It is based on the traditional maximization of the likelihood, and constraining the ratio between the scatter matrices eigenvalues to be smaller than a fixed in advance constant \(c\). We have seen that this approach has nice theoretical properties (existence and consistency results) and a feasible algorithm has been presented for its practical implementation.

The practitioner has sometimes an initial idea of the maximum allowable difference between mixture component scatters. For instance, a small \(c\) must be fixed if components with very similar scatters are expected. In any case, after standardizing the data variables, we should always choose a small or moderate value of \(c\) just to avoid degeneracies of target function and the detection of non-interesting spurious solutions.

However, we think that more useful information can be obtained from a careful analysis of the fitted mixtures when moving parameter \(c\) in a controlled way. In fact, our experience tell us that no many essentially different solutions are needed to be examined when considering “sensible” values of \(c\) (see some examples of it in this work). This would lead to a very reduced list of candidate mixture fits to be carefully investigated. To give a more accurate picture of this idea, we propose using a grid \(\{c_l\}_{l=1}^L\) of values for the eigenvalues ratio constraint factor \(c\), ranging between \(c_1=1\) and a sensible upper bound \(c_L=c_{\text {max}}\) of this ratio. For instance, an equispaced grid in logarithmical scale may be used for this grid. For this sequence of \(c\) values, we obtain the associated sequence of constrained ML fitted mixtures \(\{\mathcal {M}_l\}_{l=1}^L\) and we can see how many “essentially” different solutions exist. In order to do that, we propose using the discrepancy measures \(\delta ^{\text {Classif}}\) or \(\delta ^{\text {Mixt}}\) introduced in Sect. 4 (they were introduced for the \(G=2\) case but they can be easily extended to higher number of components \(G\)). We say that two mixtures \(\mathcal {M}_i\) and \(\mathcal {M}_j\) are essentially the same when \(\delta (\mathcal {M}_i,\mathcal {M}_j)<\varepsilon \) for a fixed tolerance factor \(\varepsilon \), which can be easily interpreted.

Table 3 shows how many essentially different solutions can be found for the random samples used in Sect. 4 for the two discrepancy measures (\(\delta ^{\text {Classif}}\) and \(\delta ^{\text {Mixt}}\)) and different values of the tolerance factor \(\varepsilon \) (0.01, 0.05 and 0.1). We start from the constrained solutions obtained from 18 values of constant \(c\) taken in \([1,10^8]\) (namely, \(c=\{2^0,2^1,\ldots ,2^{9}, 10^{3}, 10^{4}, \ldots , 10^{10}\}\)). This table also includes the number of essentially different solutions when considering 5 random samples (instead of only one) from these mixtures enclosed in parentheses. We can see that the number of solutions is not very large, apart from the \(p=10\) and \(n=100\) cases where the sample size is not very large for the high dimension considered (in fact, a smaller number of essentially different solutions are found when considering no so large values of constant \(c\) and, thus, avoiding the detection of the most clear spurious solutions). In spite of this huge range of \(c\) values, we find 5, 4, and 3 essentially different solutions for the “Synthetic Data Set 3” in Sect. 5 when considering \(\varepsilon =0.01\), 0.05 and 0.1, respectively. Analogously, we have 8, 6, and 3 for the “Iris Virginica Data Set” and the same values of the tolerance factor \(\varepsilon \). The “true” solution and the \(S_1\) solution are always found in these smaller lists of solutions for these two examples. The discrepancy measure \(\delta ^{\text {Classif}}\) has been used, but similar numbers are obtained with \(\delta ^{\text {Mixt}}\).

Moreover, the solutions which are not “enforced” by the algorithm (i.e., those with \(M_n/m_n<c\)) are often the most interesting ones as shown in Sect. 5. Thus, within the list of essentially different solutions, we can obtain an even smaller list of “sensible” mixture fits by focusing only on the not “enforced” ones.

This type of monitoring approach requires solving several constrained ML problems and, thus, it is only affordable if we rely on efficient and fast enough algorithms as those presented in Sect. 3.

As it usually happens in the literature on mixture models, we have not considered the presence of contamination in the data set, contrary to what we have previously done and published. However, the proposed methodology could also be easily adapted to tackle the problem of robust estimation of mixture models, by defining trimmed ML approaches were a fraction \(\alpha \) of the data is allowed to be trimmed. For instance, the way that eigenvalues ratio constraints are enforced in the proposed algorithm may be easily incorporated to the trimmed likelihood mixture fitting method in Neykov et al. (2007) or to the robust improper ML estimator (RIMLE) introduced in Hennig (2004) (see, also, Coretto and Hennig 2010).

References

Anderson, E.: The irises of the Gaspe Peninsula. Bull. Am. Iris Soc. 59, 2–5 (1935)

Banfield, J.D., Raftery, A.E.: Model-based Gaussian and non-Gaussian clustering. Biometrics 49, 803–821 (1993)

Celeux, G., Govaert, G.: Gaussian parsimonious clustering models. Pattern Recognition 28, 781–793 (1995)

Celeux, G., Diebolt, J.: The SEM algorithm: a probabilistic teacher algorithm derived from the EM algorithm for the mixture problem. Comput. Stat. Quater. 2, 73–82 (1985)

Chen, J., Tan, X.: Inference for multivariate normal mixtures. J. Multivar. Anal. 100, 1367–1383 (2009)

Ciuperca, G., Ridolfi, A., Idier, J.: Penalized maximum likelihood estimator for normal mixtures. Scand. J. Stat. 30, 45–59 (2003)

Coretto, P., Hennig, C.: A simulations study to compare robust clustering methods based on mixtures. Adv. Data Anal. Classif. 4, 111–135 (2010)

Day, N.E.: Estimating the components of a mixture of two normal distributions. Biometrika 56, 463–474 (1969)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. 39, 1–38 (1977)

Dennis Jr, J.E.: Algorithms for nonlinear fitting. In: Powell, M.J.D. (ed.) Nonlinear Optimization 1981 (Proceeding of the NATO Advanced Research Institute held at Cambridge in July 1981). Academic Press, Cambridge (1982)

Dykstra, R.L.: An algorithm for restricted least squares regression. J. Am. Stat. Assoc. 78, 837–842 (1983)

Fisher, R.A.: The use of multiple measurements in taxonomic problems. Ann. Eugen. 7, 179–188 (1936)

Fraley, C., Raftery, A.E.: Bayesian regularization for normal mixture estimation and model-based clustering. J. Classif. 24, 155–181 (2007)

Fritz, H., García-Escudero, L.A., Mayo-Iscar, A.: A fast algorithm for robust constrained clustering. Comput. Stat. Data Anal. 61, 124–136 (2013)

Gallegos, M.T., Ritter, G.: Trimming algorithms for clustering contaminated grouped data and their robustness. Adv. Data Anal. Classif. 3, 135–167 (2009a)

Gallegos, M.T., Ritter, G.: Trimmed ML estimation of contaminated mixtures. Sankhya 71, 164–220 (2009b)

García-Escudero, L.A., Gordaliza, A., Matrán, C., Mayo-Iscar, A.: A general trimming approach to robust cluster analysis. Ann. Stat. 36, 1324–1345 (2008)

Greselin, F., Ingrassia, S.: Weakly homoscedastic constraints for mixtures of \(t\)-distributions. In: Fink, A., Lausen, B., Seidel, W., Ultsch, A. (eds.) Advances in Data Analysis, Data Handling and Business Intelligence, Part 3. Studies in Classification, Data Analysis, and Knowledge Organization, pp. 219–228. Springer, Berlin (2010)

Hathaway, R.J.: Constrained maximum-likelihood estimation for a mixture of \(m\) univariate normal distributions. Ph.D. Dissertation, Department of Mathematical Sciences, Rice University (1983)

Hathaway, R.J.: A constrained formulation of maximum likelihood estimation for normal mixture distributions. Ann. Stat. 13, 795–800 (1985)

Hathaway, R.J.: A constrained EM algorithm for univariate normal mixtures. J. Stat. Comput. Simul. 23, 211–230 (1986)

Hennig, C.: Breakdown points for maximum likelihood estimators of location-scale mixtures. Ann. Stat. 32, 1313–1340 (2004)

Ingrassia, S., Rocci, R.: Constrained monotone EM algorithms for finite mixture of multivariate Gaussians. Comput. Stat. Data Anal. 51, 5339–5351 (2007)

Maitra, R.: Initializing partition-optimization algorithms. IEEE/ACM Trans. Comput. Biol. Bioinf. 6, 144–157 (2009)

McLachlan, G., Peel, D.: Contribution to the discussion of paper by S. Richardson and P.J. Green. J. R. Stat. Soc. 59, 772–773 (1997)

McLachlan, G., Peel, D.: Finite Mixture Models. Wiley, New York (2000)

Neykov, N., Filzmoser, P., Dimova, R., Neytchev, P.: Robust fitting of mixtures using the trimmed likelihood estimator. Comput. Stat. Data Anal. 17, 299–308 (2007)

Richardson, S., Green, P.J.: On the Bayesian analysis of mixtures with an unknown number of components (with discussion). J. R. Stat. Soc. 59, 731–792 (1997)

Roeder, K.: Density estimation with confidence sets exemplified by superclusters and voids in galaxies. J. Am. Stat. Assoc. 85, 617–624 (1990)

Titterington, D.M., Smith, A.F., Makov, U.E.: Statistical Analysis of Finite Mixture Distributions. Wiley, New York (1985)

Van der Vaart, A.W., Wellner, J.A.: Weak Convergence and Empirical Processes. Wiley, New York (1996)

Acknowledgments

This research is partially supported by the Spanish Ministerio de Ciencia e Innovación, grant MTM2011-28657-C02-01 and by Consejería de Educación de la Junta de Castilla y León, grant VA212U13. The authors would like to thank the associated editor and two anonymous referees for many suggestions that greatly improved the paper.

Author information

Authors and Affiliations

Corresponding author

Appendix: Proofs of existence and consistency results

Appendix: Proofs of existence and consistency results

1.1 Existence

The proof of these results follow similar arguments as those applied in García-Escudero et al. (2008) but in a mixture framework instead of a clustering one. So, let us first introduce some common notation for the clustering and mixture problems. Given \(\theta =(\pi _1,\ldots ,\pi _G,\mu _1,\ldots ,\mu _G, \Sigma _1,\ldots ,\Sigma _G)\), let us define functions \(D_g(x;\theta )=\pi _g \varphi (x;\mu _g,\Sigma _g)\) and \(D(x;\theta )=\max \{D_1(x;\theta ),\ldots , D_G(x;\theta )\}.\) The mixture problem is defined through the maximization on \(\theta \in \varTheta _c\) of

while, on the other hand, the clustering problem (classification likelihood) is defined through the maximization on \(\theta \in \varTheta _c\) of

with \(z_g(x;\theta )=I\{x: D(x;\theta )=D_g(x;\theta )\}.\)

Let us consider a sequence \(\{\theta _n\}_{n=1}^{\infty }=\{(\pi _1^n,\ldots ,\pi _G^n,\mu _1^n,\ldots , \mu _G^n, \Sigma _1^n, \ldots ,\Sigma _G^n)\}_{n=1}^{\infty }\subset \varTheta _c\) such that

(the boundedness from below for (12) can be easily obtained just considering \(\pi _1=1\), \(\mu _1=0,\) \(\Sigma _1=I\) and the fact that \(P\) has finite second order moments). Since \([0,1]^k\) is a compact set, we can extract a subsequence from \(\{\theta _n\}_{n=1}^{\infty }\) (that will be denoted like the original one) such that

and satisfying for some \(k\in \{0,1,\ldots ,G\}\) (a relabelling could be needed) that

With respect to the scatter matrices, under (ER), we can also consider a further subsequence verifying one (and only one) of these possibilities:

or

Lemma 1

Given a sequence satisfying (12), if \(P\) satisfies (PR) and \(E_P[\Vert \cdot \Vert ^2]<\infty \), then only the convergence (15) is possible.

Proof

The proof is the same as in Lemma A.1 in García-Escudero et al. (2008) but we need an analogous of inequality (A.8) there to be applied in this untrimmed case. This result appears in Lemma 2 below. \(\square \)

Lemma 2

If \(P\) satisfies (PR) then there exists a constant \(h>0\) such that

Proof

Let \(B(y,\varepsilon )\) denote the ball centered at \(y\) with radius \(\varepsilon \). Since \(P\) is not concentrated on \(G\) points, we can choose \(G+1\) points \(y_1,\ldots ,y_{G+1}\) verifying \(P[B(y_g,\varepsilon )]>\delta (\varepsilon )>0\) for \(g=1,\ldots ,G+1\) with \(\delta (\varepsilon )\) only depending on \(\varepsilon \). If we take

and \(h= \delta (\varepsilon _0) \varepsilon _0^2\), we have

\(\square \)

Let us now go back to our original mixture fitting problem and let us assume again that:

(the boundedness from below in (18) again follows from considering \(\pi _1=1\), \(\mu _1=0,\) \(\Sigma _1=I\) and that \(P\) has finite second order moments). Displays (13)–(17) are also considered in this mixture fitting setup.

Lemma 3

Given the sequence satisfying (18), if \(P\) satisfies (PR) and \(E_P[\Vert \cdot \Vert ^2]<\infty \), then only the convergence (15) is possible.

Proof

The proof is trivial from Lemma 1 just taking into account the bound

\(\square \)

Lemma 4

Given a sequence satisfying (18) and assuming condition (PR) and \(E_P[\Vert \cdot \Vert ^2]<\infty \) for \(P\), if every \(\pi _g\) in (13) verifies \(\pi _g>0\) for \(g=1,\ldots ,G\), then \(k=G\) in (14).

Proof

If \(k=0\), we can easily see that \(L(\theta _n;P)\rightarrow -\infty .\) So, let us assume that \(0<k<G\). We will prove that

In order to do that, we can see that

Expression (21) follows from the application of the triangular inequality and (22) from the fact that \(M_n^{-1}\le m_n^{-1}\). Then, for fixed \(x\), the expression (22) tends to 0 due to (13) and that (14) makes \(\min _{g=k+1,\ldots ,G}\Vert \mu _1^n-\mu _g^n\Vert ^2\rightarrow \infty \). Moreover, (22) is uniformly dominated by a function \(k_1+k_2\Vert x\Vert ^2\) by using the elementary inequality \(\log (1+a\exp (x))\le x+\log (1+a)\) for \(x\ge 0\) together with the assumptions (13) and (14). Since \(E_P[\Vert \cdot \Vert ^2]<\infty \), the Lebesgue’s dominated convergence theorem finally proves (20). Note that the constraint \(M_n/m_n \le c\) has been also used for deriving the pointwise convergence and the uniform domination.

Taking into account (20) and if \(\theta ^*\) is the limit of the subsequence \(\{(\pi _1^n,\ldots ,\pi _k^n,\mu _1^n,\ldots , \mu _k^n, \Sigma _1^n, \ldots ,\Sigma _k^n)\}_{n=1}^{\infty }\), we have \( \lim _{n\rightarrow \infty } \sup L(\theta _n;P)= L(\theta ^*;P). \) As \(\sum _{j=1}^{k}\pi _j<1\), the proof ends by defining a subsequence \(\{\widetilde{\theta }_n\}_{n=1}^{\infty }=\{(\widetilde{\pi }_1^n,\ldots ,\widetilde{\pi }_G^n, \widetilde{\mu }_1^n,\ldots , \widetilde{\mu }_G^n, \widetilde{\Sigma }_1^n, \ldots ,\widetilde{\Sigma }_G^n)\}_{n=1}^{\infty }\) where

and

(\(\widetilde{\mu }_g^n\) and \(\widetilde{\Sigma }_g^n\) may be arbitrarily chosen when \(g>k\) only taking into account that the eigenvalues ratio constraint must be satisfied). We now have

This would lead to a contradiction with the optimality in (18) and we thus conclude \(k=G\). \(\square \)

Proof of Proposition 1

Taking into account previous lemmas, the proof is exactly the same as that of Proposition 2 in García-Escudero et al. (2008). Notice that if some weight \(\pi _g\) is equal to 0, then we can trivially choose some \(\mu _g\) and \(\Sigma _g\) such that \(\Vert \mu _g\Vert <\infty \) and such that \(\Sigma _g\) satisfies the eigenvalue-ratio constraint without changing (10). \(\square \)

1.2 Consistency

Given \(\{x_n\}_{n=1}^{\infty }\) an i.i.d. random sample from an underlying (unknown) probability distribution \(P\), let \(\{\theta _n\}_{n=1}^{\infty }=\{(\pi _1^n,\ldots ,\pi _k^n,\mu _1^n, \ldots , \mu _k^n,\Sigma _1^n,\ldots ,\Sigma _k^n)\}_{n=1}^{\infty }\subset \varTheta _c\) denote a sequence of empirical estimators obtained by solving the problem (3) for \(P\) being the sequence of empirical measures \(\{P_n\}_{n=1}^{\infty }\) with the eigenvalue-ratio constraint (ER) (notice that the index \(n\) now stands for the sample size).

First we prove that there exists a compact set \(K\subset \varTheta _c\) such that \(\theta _n \in K\) for \(n\) large enough, with probability 1.

Lemma 5

If \(P\) satisfies (PR) and \(E_P[\Vert \cdot \Vert ^2]<\infty \), then the minimum (resp. maximum) eigenvalue, \(m_n\) (resp. \(M_n\) ) of the matrices \(\Sigma _g^n\)’s can not verify \(m_n \rightarrow 0\) (resp. \(M_n \rightarrow \infty \) ).

Proof

The proof follows similar lines as that of Lemma 1 above by using again the bound (19). We also need a bound like in Lemma 2 but for the empirical measure. I.e., we need a constant \(h'>0\) such that

This constant can be obtained by a similar reasoning as that in the proof of Lemma 2 just taking into account that the class of the balls in \(\mathbb {R}^p\) is a Glivenko-Cantelli class. \(\square \)

Lemma 6

If (PR) holds for distribution \(P\) and \(E_P[\Vert \cdot \Vert ^2]<\infty \), then we can choose empirical centers \(\mu _g^n\)’s such that their norms are uniformly bounded with probability 1.

Proof

The proof of this result follows from applying a reasoning like that in the proof of Lemma 4. Notice that the same uniform convergence to 0 on \(x\) that was needed for proving (20) is applied here too. \(\square \)

In the following lemma, we will use the same notation and terminology as in Vaart and Wellner (1996).

Lemma 7

Given a compact set \(K\subset \varTheta _c\), the class of functions

is a Glivenko-Cantelli class when \(E_P[\Vert \cdot \Vert ^2]<\infty \).

Proof

Let us first consider

where \(B\) is a fixed compact set.

We have that the class of functions

with \(M_{p\times p}\) being positive-definite \(p\times p\) matrices, is a VC class because is a finite-dimensional vector space of measurable functions. Consequently, the class \(\big \{ \sum _{g=1}^G D_g(\cdot ;\theta ) \big \}\) is a VC-hull class. Applying Theorem 2.10.20 in Vaart and Wellner (1996) with \(\phi (x)=I_{B}(x)\log (x)\), we obtain that \(\mathcal {G}\) satisfies the uniform entropy condition. Since it is uniformly bounded, we have that \(\mathcal {G}\) is a Glivenko-Cantelli class.

We can also see that there exist constants \(a\) and \(b\) such that

Since \(K\) is a compact set, there exist constants \(m\) and \(M\) satisfying \(0<m \le \lambda _l(\Sigma _g) \le M < \infty \) for \(g=1,\ldots ,G\) and \(l=1,\ldots ,p\). For these constants, we have

Now take into account that \(\max _{g=1,\ldots ,G}\Vert \mu _g\Vert < \infty \) (recall that \(\theta \in K\) with \(K\) being a compact set). Thus, we see that \(\log (\sum _{g=1}^G D_g(x;\theta ))\ge a'\Vert x\Vert ^2+b'\). On the other hand, it is easy to see that \(\log (\sum _{g=1}^G D_g(x;\theta ))\le (2\pi )^{-p/2} m^{-p/2}\). Thus, a bound like (25) holds.

Finally, for every \(h\in \mathcal {H}\) and \(B\) a compact set on \(\mathbb {R}^p\), we have

The result follows from the fact that \(h(\cdot )I_B(\cdot ) \in \mathcal {G}\) and that \(E_P[\Vert \cdot \Vert ^2]<\infty \). \(\square \)

Proof of Proposition 2

Taking into account Lemma 7, the result follows from Corollary 3.2.3 in Vaart and Wellner (1996). Notice that Lemmas 5 and 6 are needed in order to guarantee the existence of a compact set \(K\) such that the sequence of empirical estimators satisfies \(\{\theta _n\}_{n=1}^{\infty }\subset K\).\(\square \)

Rights and permissions

About this article

Cite this article

García-Escudero, L.A., Gordaliza, A., Matrán, C. et al. Avoiding spurious local maximizers in mixture modeling. Stat Comput 25, 619–633 (2015). https://doi.org/10.1007/s11222-014-9455-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-014-9455-3