Abstract

We consider a discrete-time d-dimensional process \(\{{\varvec{X}}_n\}=\{(X_{1,n},X_{2,n},\ldots ,X_{d,n})\}\) on \({\mathbb {Z}}^d\) with a background process \(\{J_n\}\) on a countable set \(S_0\), where individual processes \(\{X_{i,n}\},i\in \{1,2,\ldots ,d\},\) are skip free. We assume that the joint process \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) is Markovian and that the transition probabilities of the d-dimensional process \(\{{\varvec{X}}_n\}\) vary according to the state of the background process \(\{J_n\}\). This modulation is assumed to be space homogeneous. We refer to this process as a d-dimensional skip-free Markov-modulated random walk. For \({\varvec{y}}, {\varvec{y}}'\in {\mathbb {Z}}_+^d\times S_0\), consider the process \(\{{\varvec{Y}}_n\}_{n\ge 0}\) starting from the state \({\varvec{y}}\) and let \({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}\) be the expected number of visits to the state \({\varvec{y}}'\) before the process leaves the nonnegative area \({\mathbb {Z}}_+^d\times S_0\) for the first time. For \({\varvec{y}}=({\varvec{x}},j)\in {\mathbb {Z}}_+^d\times S_0\), the measure \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}}'=({\varvec{x}}',j')\in {\mathbb {Z}}_+^d\times S_0)\) is called an occupation measure. Our primary aim is to obtain the asymptotic decay rate of the occupation measure as \({\varvec{x}}'\) goes to infinity in a given direction. We also obtain the convergence domain of the matrix moment generating function of the occupation measure.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For \(d\ge 1\), we consider a discrete-time d-dimensional process \(\{{\varvec{X}}_n\}=\{(X_{1,n},X_{2,n},\ldots ,X_{d,n})\}\) on \({\mathbb {Z}}^d\), where \({\mathbb {Z}}\) is the set of all integers, and a background process \(\{J_n\}\) on a countable set \(S_0=\{1,2,\ldots \}\). We assume that each individual process \(\{X_{i,n}\}\) is skip free, which means that its increments take values in \(\{-1,0,1\}\). Furthermore, we assume that the joint process \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) is Markovian and that the transition probabilities of the d-dimensional process \(\{{\varvec{X}}_n\}\) vary according to the state of the background process \(\{J_n\}\). This modulation is assumed to be space homogeneous. We refer to this process as a d-dimensional skip-free Markov-modulated random walk (MMRW for short). The state space of the d-dimensional MMRW is given by \({\mathbb {S}}={\mathbb {Z}}^d\times S_0\). It is also a d-dimensional Markov additive process (MA-process for short) [13], where \({\varvec{X}}_n\) is the additive part and \(J_n\) the background state. A discrete-time d-dimensional quasi-birth-and-death process [18] (QBD process for short) is a d-dimensional MMRW with reflecting boundaries, where the process \({\varvec{X}}_n\) is the level and \(J_n\) the phase. Stochastic models arising from various Markovian multiqueue models and queueing networks such as polling models and generalized Jackson networks with Markovian arrival processes and phase-type service processes can be represented as continuous-time multidimensional QBD processes (in the case of two-dimension, see, for example, [14, 18, 19]) and, by using the uniformization technique, they can be reduced to discrete-time multidimensional QBD processes. It is well known that, in general, the stationary distribution of a Markov chain can be represented in terms of its stationary probabilities on some boundary faces and its occupation measure. In the case of multidimensional QBD processes, such an occupation measure is given as that in the corresponding multidimensional MMRW. For this reason, we focus on multidimensional MMRWs and study their occupation measures, especially the asymptotic properties of the occupation measures. Here, we briefly explain that the skip-free assumption is not so restrictive. For a given \(k>1\), assume that, for \(i\in \{1,2,\ldots ,d\}\), \(X_{i,n}\) takes values in \(\{-k,-(k-1),\ldots ,0,1,\ldots ,k\}\). For \(i\in \{1,2,\ldots ,d\}\), let \({}^kX_{i,n}\) and \({}^kM_{i,n}\) be the quotient and remainder of \(X_{i,n}\) divided by k, respectively, where \({}^kX_{i,n}\in {\mathbb {Z}}\) and \(0\le {}^kM_{i,n} \le k-1\). Then, the process \(\{( {}^kX_{1,n},\ldots ,{}^kX_{d,n},({}^kM_{1,n},\ldots ,{}^kM_{d,n},J_n))\}\) becomes a d-dimensional MMRW with skip-free jumps, where \(({}^kX_{1,n},\ldots ,{}^kX_{d,n})\) is the level and \(({}^kM_{1,n},\ldots ,{}^kM_{d,n},J_n)\) the background state. This means that any multidimensional MMRW with bounded jumps can be reduced to a multidimensional MMRW with skip-free jumps.

Let \(P=\left( p_{({\varvec{x}},j),({\varvec{x}}',j')}; ({\varvec{x}},j),({\varvec{x}}',j')\in {\mathbb {S}}\right) \) be the transition probability matrix of a d-dimensional MMRW \(\{{\varvec{Y}}_n\}\), where \(p_{({\varvec{x}},j)({\varvec{x}}',j')}={\mathbb {P}}({\varvec{Y}}_1=({\varvec{x}}',j')\,|\,{\varvec{Y}}_0=({\varvec{x}},j))\). By the skip-free property, each element of P, say \(p_{({\varvec{x}},j)({\varvec{x}}',j')}\), is nonzero only if \({\varvec{x}}_1'-{\varvec{x}}_1\in \{-1,0,1\}^d\). By the property of space-homogeneity, for every \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}^d\), \({\varvec{i}}\in \{-1,0,1\}^d\) and \(j,j'\in S_0\), we have \(p_{({\varvec{x}},j),({\varvec{x}}+{\varvec{i}},j')}=p_{({\varvec{x}}',j),({\varvec{x}}'+{\varvec{i}},j')}\). Hence, the transition probability matrix P can be represented as a block matrix in terms of only the following blocks:

i.e., for \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}^d\), the block \(P_{{\varvec{x}},{\varvec{x}}'}=(p_{({\varvec{x}},j)({\varvec{x}}',j')}; \,j,j'\in S_0)\) is given as

where \({\mathbf {0}}\) and O are a vector and matrix of 0’s, respectively, whose dimensions are determined in context. Define a set \({\mathbb {S}}_+\) as \({\mathbb {S}}_+={\mathbb {Z}}_+^d\times S_0\), where \({\mathbb {Z}}_+\) is the set of all nonnegative integers, and let \(\tau \) be the stopping time at which the MMRW \(\{{\varvec{Y}}_n\}\) enters \({\mathbb {S}}{\setminus }{\mathbb {S}}_+\) for the first time, i.e.,

For \({\varvec{y}}=({\varvec{x}},j),{\varvec{y}}'=({\varvec{x}}',j')\in {\mathbb {S}}_+\), let \({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}\) be the expected number of visits to the state \({\varvec{y}}'\) before the process \(\{{\varvec{Y}}_n\}\) starting from the state \({\varvec{y}}\) enters \({\mathbb {S}}{\setminus }{\mathbb {S}}_+\) for the first time, i.e.,

where \(1(\cdot )\) is an indicator function. For \({\varvec{y}}\in {\mathbb {S}}_+\), the measure \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}}'\in {\mathbb {S}}_+)\) is called an occupation measure. Note that \({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}\) is the \(({\varvec{y}},{\varvec{y}}')\)-element of the fundamental matrix of the truncated substochastic matrix \(P_+\) given as \(P_+=\left( p_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}},{\varvec{y}}'\in {\mathbb {S}}_+\right) \), i.e., \({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'} = [{\tilde{P}}_+]_{{\varvec{y}},{\varvec{y}}'}\) and

where, for example, \(P_+^2=\left( p^{(2)}_{{\varvec{y}},{\varvec{y}}'}\right) \) is defined by \(p^{(2)}_{{\varvec{y}},{\varvec{y}}'} = \sum _{{\varvec{y}}''\in {\mathcal {S}}_+} p_{{\varvec{y}},{\varvec{y}}''}\,p_{{\varvec{y}}'',{\varvec{y}}'}\). \(P_+\) governs transitions of \(\{{\varvec{Y}}_n\}\) on \({\mathbb {S}}_+\). Our primary aim is to obtain the asymptotic decay rate of the occupation measure \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}}'=({\varvec{x}}',j')\in {\mathbb {S}}_+)\) as \({\varvec{x}}'\) goes to infinity in a given direction. This asymptotic decay rate gives a lower bound for the asymptotic decay rate of the stationary distribution in the corresponding multidimensional QBD process in the same direction. Such lower bounds have been obtained for some kinds of multidimensional reflected process without background states; for example, 0-partially chains in [3], also see comments on Conjecture 5.1 in [13]. With respect to multidimensional reflected processes with background states, such asymptotic decay rates of the stationary tail distributions in two-dimensional reflected processes have been discussed in [13, 14] by using Markov additive processes and large deviations. Note that the asymptotic decay rate of the stationary distribution in a two-dimensional QBD process with finite phase states in each coordinate direction has been obtained in [18, 19].

As mentioned above, the d-dimensional MMRW \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) is a d-dimensional MA-process, where the set of blocks, \(\{ A_{{\varvec{i}}}; {\varvec{i}}\in \{-1,0,1\}^d \}\), corresponds to the kernel of the MA-process. For \(\varvec{\theta }\in {\mathbb {R}}^d\), let \(A_*(\varvec{\theta })\) be the matrix moment generating function of one-step transition probabilities defined as

where \(\langle {\varvec{a}},{\varvec{b}}\rangle \) is the inner product of vectors \({\varvec{a}}\) and \({\varvec{b}}\). \(A_*(\varvec{\theta })\) is the Feynman–Kac operator [17] for the MA-process. For \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d\), define a matrix \(N_{{\varvec{x}},{\varvec{x}}'}\) as \(N_{{\varvec{x}},{\varvec{x}}'}=({\tilde{q}}_{({\varvec{x}},j),({\varvec{x}}',j')}; j,j'\in S_0)\) and \(N_{{\varvec{x}}}\) as \(N_{{\varvec{x}}}=(N_{{\varvec{x}},{\varvec{x}}''}; {\varvec{x}}''\in {\mathbb {Z}}_+^d)\). \({\tilde{P}}_+\) is represented as \({\tilde{P}}_+=(N_{{\varvec{x}},{\varvec{x}}'}; {\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d)\). For \({\varvec{x}}\in {\mathbb {Z}}_+^d\), let \(\varPhi _{{\varvec{x}}}(\varvec{\theta })\) be the matrix moment generating function of the occupation measure defined as

which satisfies, for \(j,j'\in S_0\),

For \({\varvec{x}}\in {\mathbb {Z}}_+^d\), define the convergence domain of the vector generating function \(\varPhi _{{\varvec{x}}}(\varvec{\theta })\) as

Define the point sets \(\varGamma \) and \({\mathcal {D}}\) as

where \(\mathrm{cp}(A)\) is the convergence parameter of the matrix A. In the following sections, we prove that, for any nonzero vector \({\varvec{c}}\in {\mathbb {Z}}_+^d\) and for every \(j,j'\in S_0\),

Furthermore, using this asymptotic formula, we also prove that, for any \({\varvec{x}}\in {\mathbb {Z}}_+^d\), \({\mathcal {D}}_{{\varvec{x}}}\) is given by \({\mathcal {D}}\).

In order to prove these results, we mainly use the matrix analytic method in queueing theory introduced by Marcel Neuts and developed in the literature; see, for example, [1, 10, 15, 16]. In the following section, instead of considering stochastic matrices with block tridiagonal structure, we deal with a nonnegative matrix with block tridiagonal structure, whose phase space is countably infinite. We give a simple formula representing the convergence parameter of the nonnegative block tridiagonal matrix, where the matrices corresponding to the rate matrix (R-matrix) and G-matrix in the matrix analytic method play an important role. Block tridiagonal stochastic matrices with a countable phase space have been studied in the literature and a lot of basic results obtained; see, for example, [2, 10, 23]. Those results also hold in the case of nonnegative block tridiagonal matrix with a countable phase space. However, some properties of the convergence parameters of the matrices corresponding to the rate matrix and G-matrix, which we want to use in this paper, have been given only in the case of finite phase space. We will prove such properties also hold in the case of infinite phase space.

The rest of the paper is organized as follows: In Sect. 2, we extend some results in the matrix analytic method. In Sect. 3, we introduce some assumptions and give some properties of MMRWs, including a sufficient condition for the occupation measure in a d-dimensional MMRW to be finite. In Sect. 4, we consider a kind of one-dimensional QBD process with a countable phase space and obtain an upper bound for the convergence parameter of the rate matrix in the QBD process. Using the upper bound, we obtain the asymptotic decay rate of the occupation measure and the convergence domain of the matrix moment generating function in Sect. 5. In the same section, we also consider a single-server polling model with limited services and give some numerical examples. The paper concludes with a remark on an asymptotic property of multidimensional QBD process in Sect. 6.

Notation for matrices For a matrix \(A=(a_{i,j})\), A is said to be a nonnegative matrix if every entry of A is nonnegative. The inequality \(A\ge 0\) is meant elementwise, i.e., \(a_{ij}\ge 0\) for every i and j. We denote by \([A]_{i,j}\) the (i, j)-entry of A, i.e., \([A]_{i,j}=a_{i,j}\). The transpose of a matrix A is denoted by \(A^\top \). The convergence parameter of a nonnegative matrix A with a finite or countable dimension is denoted by \(\mathrm{cp}(A)\), i.e., \(\mathrm{cp}(A) = \sup \{r\in {\mathbb {R}}_+; \sum _{n=0}^\infty r^n A^n<\infty \}\).

2 Nonnegative block tridiagonal matrices and their properties

Note that this section is described independently of the following sections. Our aim in the section is to give an expression for the convergence parameter of a nonnegative block tridiagonal matrix whose block size is countably infinite. The role of the Perron–Frobenius eigenvalue of a nonnegative matrix with a finite dimension is replaced with the reciprocal of the convergence parameter of a nonnegative matrix with a countable dimension. For this purpose, we follow results in the matrix analytic method in queueing theory [1, 10, 15, 16], especially, those in the case of infinite phase space [2, 10, 23], where we replace stochastic and substochastic block matrices with nonnegative block matrices.

Consider a block tridiagonal matrix Q defined as

where \(A_{-1}\), \(A_0\) and \(A_1\) are square matrices with a countable dimension, i.e., for \(k\in \{-1,0,1\}\), \(A_k=(a_{k,i,j}; i,j\in {\mathbb {Z}}_+)\). Hereafter, we adopt the policy of giving a minimal assumption in each place. First, we give the following condition.

Condition 2.1

(a0) Every entry of the matrix Q is nonnegative.

We always assume this condition, on which \(A_{-1}\), \(A_0\) and \(A_1\) are also elementwise nonnegative, i.e., every \(a_{k,i,j}\) is nonnegative. We define a matrix \(A_*\) as

We give the following conditions.

Condition 2.2

(a1) Both \(A_{-1}\) and \(A_1\) are nonzero matrices.

Condition 2.3

(a2) All powers of \(A_*\) are elementwise finite, i.e., for any \(n\in {\mathbb {Z}}_+\), \(A_*^n<\infty \), elementwise.

Here, we note that an elementwise-finite nonnegative matrix may have unbounded entries since the dimension of the matrix is countably infinite. Condition (a1) makes Q a true block tridiagonal matrix. Under condition (a2), all multiple products of \(A_{-1}\), \(A_0\) and \(A_1\) becomes finite elementwise, i.e., for any \(n\in {\mathbb {N}}\) and for any \({\varvec{i}}_{(n)}=(i_1,i_2,\ldots , i_n)\in \{-1,0,1\}^n\), \(A_{i_1} A_{i_2} \cdots A_{i_n}<\infty \), where associativity among matrices is preserved. Hence, for the triplet \(\{ A_{-1}, A_0, A_1\}\), we can define a matrix R corresponding to the rate matrix of a QBD process and a matrix G corresponding to the G-matrix. If \(\mathrm{cp}(A_*)<\infty \), discussions for Q may be reduced to probabilistic arguments. For example, if there exist an \(s>0\) and positive vector \({\varvec{v}}\) such that \(s A_* {\varvec{v}}\le {\varvec{v}}\), then \(\Delta _{{\varvec{v}}}^{-1} s A_* \Delta _{{\varvec{v}}}\) becomes stochastic or substochastic, where \(\Delta _{{\varvec{v}}}=\mathrm{diag}\, {\varvec{v}}\), and discussion for the triplet \(\{ A_{-1}, A_0, A_1\}\) can be replaced with that for \(\{ \Delta _{{\varvec{v}}}^{-1}s A_{-1}\Delta _{{\varvec{v}}}, \Delta _{{\varvec{v}}}^{-1}s A_0\Delta _{{\varvec{v}}}, \Delta _{{\varvec{v}}}^{-1}s A_1\Delta _{{\varvec{v}}} \}\). However, in order to make discussion simple, we directly treat \(\{ A_{-1}, A_0, A_1 \}\) and do not use probabilistic arguments.

Define the following sets of index sequences: for \(n\ge 1\) and for \(m\ge 1\),

where \({\varvec{i}}_{(n)}=(i_1,i_2,\ldots ,i_n)\) and \({\mathbb {N}}_{n-1}=\{1,2,\ldots ,n-1\}\). If \(A_*\) is stochastic, we can consider a QBD process \(\{(X_n,J_n)\}\) on the state space \({\mathbb {Z}}_+^2\) having the triplet \((A_{-1}, A_0, A_1)\) as the blocks of its transition probability block matrix, where \(X_n\) is the level and \(J_n\) the phase. In that QBD process, the set \({\mathscr {I}}_n \) corresponds to the set of all paths of the QBD process on which \(X_0=l>0\), \(X_k\ge l\) for \(k\in {\mathbb {N}}_{n-1}\) and \(X_n=l\), i.e., the level process visits state l at time n without entering states less than l before time n. The set \({\mathscr {I}}_{D,m,n}\) also corresponds to the set of all paths on which \(X_0=l>m\), \(X_k\ge l-m+1\) for \(k\in {\mathbb {N}}_{n-1}\) and \(X_n=l-m\), and \({\mathscr {I}}_{U,m,n}\) to that of all paths on which \(X_0=l>0\), \(X_k\ge l+1\) for \(k\in {\mathbb {N}}_{n-1}\) and \(X_n=l+m\).

For \(n\ge 1\), define \(Q_{0,0}^{(n)}\), \(D^{(n)}\) and \(U^{(n)}\) as

Under (a2), \(Q_{0,0}^{(n)}\), \(D^{(n)}\) and \(U^{(n)}\) are elementwise finite for every \(n\ge 1\). Define N, R and G as

where \(Q_{0,0}^{(0)}=I\). By direct calculation, we obtain the following expressions, which are well-known in the stochastic context [2, 10].

Lemma 2.1

Assume (a1) and (a2). Then, if N, G and R are elementwise finite, which means that the matrix series (2.1) defining them converge elementwise, they satisfy the following equations:

From (2.6), \(N\ge I\) and \(N\ge A_0 N+A_1 G N \ge A_0 +A_1 G\). Hence, if N is elementwise finite, then \(A_0 +A_1 G\) is also finite elementwise and we obtain

We will use Eq. (2.6) in this form. Here, we should note that much attention must be paid to matrix manipulation since the dimension of matrices are countably infinite; for example, see Appendix A of [22].

For \(\theta \in {\mathbb {R}}\), define a matrix function \(A_*(\theta )\) as

where \(A_*=A_*(0)\). This \(A_*(\theta )\) corresponds to a Feynman–Kac operator if the triplet \(\{A_{-1},A_0,A_1\}\) is a Markov additive kernel (see, for example, [17]). By direct calculation, we also obtain the following identity corresponding to the RG decomposition for a Markov additive process, which is also called a Wiener–Hopf factorization; see [2, 12] and references therein.

Lemma 2.2

Assume (a1) and (a2). If R, G and N are elementwise finite, we have, for \(\theta \in {\mathbb {R}}\),

where \(H = A_0+ A_1 G = A_0+ A_1 N A_{-1}\).

Consider the following matrix quadratic equations of X:

By Lemma 2.1, if R and G are elementwise finite, they are solutions to Eqs. (2.9) and (2.10), respectively.

Consider the following sequences of matrices:

Like the case of usual QBD processes, we can demonstrate that both the sequences \(\{X_n^{(1)}\}_{n\ge 0}\) and \(\{X_n^{(2)}\}_{n\ge 0}\) are nondecreasing and that if a nonnegative solution \(X^*\), which is elementwise finite, to Eq. (2.9) [resp. Eq. (2.10)] exists, then for any \(n\ge 0\), \(X^*\ge X_n^{(1)}\) (resp. \(X^*\ge X_n^{(2)}\)). Furthermore, letting \(R_n\) and \(G_n\) be defined as

we can also demonstrate that, for any \(n\ge 1\), \(R_n\le X_n^{(1)}\) and \(G_n\le X_n^{(2)}\) hold. Hence, we immediately obtain the following facts, which are also well-known in the stochastic context [10].

Lemma 2.3

Assume (a1) and (a2). If R and G are elementwise finite, they are the minimum nonnegative solutions to Eqs. (2.9) and (2.10), respectively. Furthermore, if \(\{X_n^{(1)}\}\) and \(\{X_n^{(2)}\}\) converge elementwise as n tends to infinity, R and G are elementwise finite and we have \(R=\lim _{n\rightarrow \infty } X_n^{(1)}\) and \(G=\lim _{n\rightarrow \infty } X_n^{(2)}\).

If \(A_*\) is irreducible, \(A_*(\theta )\) is also irreducible for any \(\theta \in {\mathbb {R}}\). We, therefore, give the following condition.

Condition 2.4

(a3) \(A_*\) is irreducible.

Let \(\chi (\theta )\) be the reciprocal of the convergence parameter of \(A_*(\theta )\), i.e., \(\chi (\theta )=\mathrm{cp}(A_*(\theta ))^{-1}\). We say that a positive function f(x) is log-convex in x if \(\log f(x)\) is convex in x. A log-convex function is also a convex function. Since every element of \(A_*(\theta )\) is log-convex in \(\theta \), we see, by Lemma A.1 in Appendix A, that \(\chi (\theta )\) satisfies the following property.

Lemma 2.4

Under (a1) through (a3), \(\chi (\theta )\) is log-convex in \(\theta \in {\mathbb {R}}\).

Let \(\gamma ^\dagger \) be the infimum of \(\chi (\theta )\), i.e.,

and define a set \({\bar{\varGamma }}\) as

By Lemma 2.4, if \(\gamma ^\dagger <1\) and \({\bar{\varGamma }}\) is bounded, then \({\bar{\varGamma }}\) is a line segment and there exist just two real solutions to equation \(\chi (\theta )=\mathrm{cp}(A_*(\theta ))^{-1}=1\). We denote the solutions by \({\underline{\theta }}\) and \({\bar{\theta }}\), where \({\underline{\theta }}<{\bar{\theta }}\). When \(\gamma ^\dagger =1\), we define \({\underline{\theta }}\) and \({\bar{\theta }}\) as \({\underline{\theta }}=\min \{\theta \in {\mathbb {R}}; \chi (\theta )=1\}\) and \({\bar{\theta }}=\max \{\theta \in {\mathbb {R}}; \chi (\theta )=1\}\), respectively. It is expected that \({\underline{\theta }}={\bar{\theta }}\) if \(\gamma ^\dagger =1\), but it is not obvious. If \(\gamma ^\dagger \le 1\) and \({\bar{\varGamma }}\) is bounded, there exists a \(\theta \in {\bar{\varGamma }}\) such that \(\gamma ^\dagger =\chi (\theta )\). We give the following condition.

Condition 2.5

(a4) \({\bar{\varGamma }}\) is bounded.

If \(A_{-1}\) (resp. \(A_1\)) is a zero matrix, every element of \(A_*(\theta )\) is monotone increasing (resp. decreasing) in \(\theta \) and \({\bar{\varGamma }}\) is unbounded. Hence, if \(\gamma ^\dagger \le 1\), condition (a4) implies (a1). The following proposition extends existing results in the case of finite phase space (see Lemma 2.3 of [6]) to in the case of infinite phase space.

Proposition 2.1

Assume (a2) through (a4).

-

(i)

If \(\gamma ^\dagger \le 1\), then R and G are elementwise finite.

-

(ii)

If R is elementwise finite and there exist a \(\theta _0\in {\mathbb {R}}\) and nonnegative nonzero vector \({\varvec{u}}\) such that \(e^{\theta _0} {\varvec{u}}^\top R={\varvec{u}}^\top \), then \(\gamma ^\dagger \le 1\).

-

(ii’)

If G is elementwise finite and there exist a \(\theta _0\in {\mathbb {R}}\) and nonnegative nonzero vector \({\varvec{v}}\) such that \(e^{\theta _0} G {\varvec{v}}={\varvec{v}}\), then \(\gamma ^\dagger \le 1\).

Proof

-

Statement (i). Assume \(\gamma ^\dagger \le 1\) and let \(\theta ^\dagger \) be a real number satisfying \(\chi (\theta ^\dagger )=\gamma ^\dagger \). Since \(A_*(\theta ^\dagger )\) is irreducible, by Lemma 1 and Theorem 1 of [20], there exists a positive vector \({\varvec{u}}\) satisfying \((\gamma ^\dagger )^{-1} {\varvec{u}}^\top A_*(\theta ^\dagger ) \le {\varvec{u}}^\top \). For this \({\varvec{u}}\), we obtain, by induction using (2.11), the inequality \(e^{\theta ^\dagger } {\varvec{u}}^\top X_n^{(1)} \le {\varvec{u}}^\top \) for any \(n\ge 0\). Hence, the sequence \(\{X_n^{(1)}\}\) is elementwise nondecreasing and bounded, and the limit of the sequence, which is the minimum nonnegative solution to Eq. (2.9), exists. Existence of the minimum nonnegative solution to Eq. (2.10) is analogously proved. As a result, by Lemma 2.3, both R and G are elementwise finite.

-

Statements (ii) and (ii’). Assume the conditions of Statement (ii). Then, we have

$$\begin{aligned} {\varvec{u}}^\top&= e^{\theta _0} {\varvec{u}}^\top R = e^{\theta _0} {\varvec{u}}^\top ( R^2 A_{-1}+R A_0+A_1 ) = {\varvec{u}}^\top A_*(\theta _0), \end{aligned}$$(2.13)and this leads us to \(\gamma ^\dagger \le \chi (\theta _0)=\mathrm{cp}(A_*(\theta _0))^{-1}\le 1\). Statement (ii’) can be proved analogously. \(\square \)

Remark 2.1

In statement (ii) of Proposition 2.1, if such \(\theta _0\) and \({\varvec{u}}\) exist, then, by (2.13) and irreducibility of \(A_*(\theta _0)\), we have \(\theta _0=\theta ^\dagger \) and \({\varvec{u}}\) is positive. An analogous result also holds for statement (ii’).

Remark 2.2

Consider the following nonnegative matrix P:

If the triplet \(\{A_{-1},A_0,A_1\}\) is a Markov additive kernel, this P corresponds to the transition probability matrix of a Markov additive process governed by the triplet. By Proposition B.1 in Appendix B, if such a P is irreducible, then \(\chi (\theta )\) is unbounded in both the directions of \(\theta \) and \({\bar{\varGamma }}\) is bounded.

The expressions for the convergence parameters of R and G in the following lemma are already known in the case of finite phase space (see Lemma 2.2 of [5] and Lemma 2.3 of [11]). The lemma asserts that the same expressions also hold in the case of infinite phase space.

Lemma 2.5

Assume (a2) through (a4). If \(\gamma ^\dagger \le 1\) and N is elementwise finite, then we have

Proof

Since \(\gamma ^\dagger \le 1\) and \({\bar{\varGamma }}\) is bounded, \({\bar{\theta }}\) and \({\underline{\theta }}\) exist and they are finite. Furthermore, R and G are elementwise finite. For a \(\theta \in {\mathbb {R}}\) such that \(\chi (\theta )\le 1\), let \({\varvec{u}}\) be a positive vector satisfying \({\varvec{u}}^\top A_*(\theta )\le {\varvec{u}}^\top \). Such a \({\varvec{u}}\) exists since \(A_*(\theta )\) is irreducible. As mentioned in the proof of Proposition 2.1, for \(X^{(1)}_n\) defined by (2.11), if \(\chi (\theta )\le 1\), then we have \(e^\theta {\varvec{u}}^\top X^{(1)}_n\le {\varvec{u}}^\top \) for any \(n\ge 0\) and this implies \(e^\theta {\varvec{u}}^\top R\le {\varvec{u}}^\top \). Analogously, if \(\chi (\theta )\le 1\), then there exists a positive vector \({\varvec{v}}\) satisfying \(A_*(\theta ){\varvec{v}}\le {\varvec{v}}\) and we have \(e^{-\theta } G {\varvec{v}}\le {\varvec{v}}\). Therefore, setting \(\theta \) at \({\bar{\theta }}\), we obtain \(e^{{\bar{\theta }}} {\varvec{u}}^\top R\le {\varvec{u}}^\top \), and setting \(\theta \) at \({\underline{\theta }}\), we obtain \(e^{-{\underline{\theta }}} G {\varvec{v}}\le {\varvec{v}}\). Since \({\varvec{u}}\) and \({\varvec{v}}\) are positive, this leads us to \(\mathrm{cp}(R)\ge e^{{\bar{\theta }}}\) and \(\mathrm{cp}(G)\ge e^{-{\underline{\theta }}}\).

Next, in order to prove \(\mathrm{cp}(R)\le e^{{\bar{\theta }}}\), we apply a technique similar to that used in the proof of Theorem 1 of [20]. Suppose \(\mathrm{cp}(R)>e^{{\bar{\theta }}}\). Then, there exists an \(\varepsilon >0\) such that

This \({\tilde{R}}({\bar{\theta }}+\varepsilon )\) satisfies \(e^{{\bar{\theta }}+\varepsilon } R {\tilde{R}}({\bar{\theta }}+\varepsilon ) = {\tilde{R}}({\bar{\theta }}+\varepsilon )-I \le {\tilde{R}}({\bar{\theta }}+\varepsilon )\). Hence, for \(j\in {\mathbb {Z}}_+\), letting \({\varvec{v}}_j\) be the j-th column vector of \({\tilde{R}}({\bar{\theta }}+\varepsilon )\), we have \(e^{{\bar{\theta }}+\varepsilon } R {\varvec{v}}_j \le {\varvec{v}}_j\). Furthermore, we have \(e^{{\bar{\theta }}+\varepsilon } R {\tilde{R}}({\bar{\theta }}+\varepsilon ) \ge e^{{\bar{\theta }}+\varepsilon } R \ge e^{{\bar{\theta }}+\varepsilon } X_1^{(1)} = e^{{\bar{\theta }}+\varepsilon } A_1\), and condition (a4) implies \(A_1\) is nonzero. Hence, for some \(j\in {\mathbb {Z}}_+\), both \(R{\varvec{v}}_j\) and \({\varvec{v}}_j\) are nonzero. Set \({\varvec{v}}\) at such a vector \({\varvec{v}}_j\). We have \(\mathrm{cp}(G)\ge e^{-{\underline{\theta }}}> e^{-({\bar{\theta }}+\varepsilon )}\). Hence, using (2.7) and (2.8), we obtain

where \({\varvec{y}}=(I-e^{-({\bar{\theta }}+\varepsilon )} G)^{-1} N {\varvec{v}}= \sum _{n=0}^\infty e^{-n ({\bar{\theta }}+\varepsilon )} G^n\,N {\varvec{v}}\ge N {\varvec{v}}\). Suppose \(N {\varvec{v}}={\mathbf {0}}\), then we have \(R {\varvec{v}}= A_1 N {\varvec{v}}= {\mathbf {0}}\) and this contradicts that \(R {\varvec{v}}\) is nonzero. Hence, \(N {\varvec{v}}\) is nonzero and \({\varvec{y}}\) is also nonzero and nonnegative. Since \(A_*({\bar{\theta }}+\varepsilon )\) is irreducible, the inequality \(A_*({\bar{\theta }}+\varepsilon ) {\varvec{y}}\le {\varvec{y}}\) implies that \({\varvec{y}}\) is positive and \(\mathrm{cp}(A_*({\bar{\theta }}+\varepsilon ))\ge 1\). This contradicts that \(\mathrm{cp}(A_*({\bar{\theta }}+\varepsilon ))=\chi ({\bar{\theta }}+\varepsilon )^{-1}<\chi ({\bar{\theta }})^{-1}=1\), and we obtain \(\mathrm{cp}(R)\le e^{{\bar{\theta }}}\). In a similar manner, we can also obtain \(\mathrm{cp}(G)\le e^{-{\underline{\theta }}}\), and this completes the proof. \(\square \)

Lemma 2.5 requires that N is elementwise finite, but it cannot easily be verified since finiteness of every entry of R and that of G does not always imply finiteness of every entry of N. We, therefore, introduce the following condition.

Condition 2.6

(a5) The nonnegative matrix Q is irreducible.

Condition (a5) implies (a1), (a3) and (a4), i.e., under conditions (a2) and (a5), \(A_{-1}\) and \(A_1\) are nonzero, \(A_*\) is irreducible and \({\bar{\varGamma }}\) is bounded. Let \({\tilde{Q}}\) be the fundamental matrix of Q, i.e., \({\tilde{Q}}=\sum _{n=0}^\infty Q^n\). For \(n\ge 0\), \(Q_{0,0}^{(n)}\) is the (0, 0)-block of \(Q^n\), and N is that of \({\tilde{Q}}\). Hence, we see that all the elements of N simultaneously converge or diverge, finiteness of every entry of R or that of G implies finiteness of every entry of N and if N is elementwise finite, it is positive. Furthermore, under (a2) and (a5), since R is given as \(R=A_1 N\) and N is positive, each row of R is zero or positive and we obtain the following proposition, which asserts that R behaves just like an irreducible matrix.

Proposition 2.2

Assume (a2) and (a5). If R is elementwise finite, then it always satisfies one of the following two statements:

-

(i)

There exists a positive vector \({\varvec{u}}\) such that \(e^{{\bar{\theta }}} {\varvec{u}}^\top R = {\varvec{u}}^\top \).

-

(ii)

\(\sum _{n=0}^\infty e^{{\bar{\theta }}n} R^n <\infty \), elementwise.

Since the proof of this proposition is elementary and lengthy, we put it in Appendix C. By applying the same technique as that used in the proof of Theorem 4.1 of [8], we also obtain the following result.

Corollary 2.1

Assume (a2) and (a5). For \(i,j\in {\mathbb {Z}}_+\), if every element in the i-th row of \(A_1\) is zero, we have \([R^n]_{i,j}=0\) for all \(n\ge 1\); otherwise, we have \([R^n]_{i,j}>0\) for all \(n\ge 1\) and

To make this paper self-contained, we give a proof of the corollary in Appendix C. By Theorem 2 of [20], if the number of nonzero elements of each row of \(A_*\) is finite, there always exists a positive vector \({\varvec{u}}\) satisfying \({\varvec{u}}^\top A_*({\bar{\theta }})={\varvec{u}}^\top \). Also, if the number of nonzero elements of each column of \(A_*\) is finite, there always exists a positive vector \({\varvec{v}}\) satisfying \(A_*({\underline{\theta }}){\varvec{v}}={\varvec{v}}\). To use this property, we give the following condition.

Condition 2.7

(a6) The number of positive elements of each row and column of \(A_*\) is finite.

It is obvious that (a6) implies (a2). Under (a6), we can refine Proposition 2.1, as follows.

Proposition 2.3

Assume (a5) and (a6). Then, \(\gamma ^\dagger \le 1\) if and only if R and G are elementwise finite.

Proof

By Proposition 2.1, if \(\gamma ^\dagger \le 1\), then both R and G are elementwise finite. We, therefore, prove the converse. Assume that R and G are elementwise finite. Then, N is also elementwise finite and, by Lemma 2.5, we have \(\mathrm{cp}(R)=e^{{\bar{\theta }}}\). First, consider case (i) of Proposition 2.2 and assume that there exists a positive vector \({\varvec{u}}\) such that \(e^{{\bar{\theta }}} {\varvec{u}}R = {\varvec{u}}\). Then, by statement (ii) of Proposition 2.1, we have \(\gamma ^\dagger \le 1\). Next, consider case (ii) of Proposition 2.2 and assume \(\sum _{n=0}^\infty e^{n {\bar{\theta }}} R^n <\infty \), elementwise. Then, we have \((I-e^{{\underline{\theta }}} R)^{-1}=\sum _{n=0}^\infty e^{n {\underline{\theta }}} R^n<\infty \) since \({\underline{\theta }}\le {\bar{\theta }}\). Hence, we obtain, from (2.7) and (2.8),

Under the assumptions of the proposition, there exists a positive vector \({\varvec{v}}\) satisfying \(A_*({\underline{\theta }}){\varvec{v}}={\varvec{v}}\) since \(\mathrm{cp}(A_*({\underline{\theta }}))=1\). Hence, from (2.17), we obtain, for this \({\varvec{v}}\), \(e^{-{\underline{\theta }}} G{\varvec{v}}={\varvec{v}}\), and by statement (ii’) of Proposition 2.1, we have \(\gamma ^\dagger \le 1\). This completes the proof. \(\square \)

Recall that \(\gamma ^\dagger \) is defined as \(\gamma ^\dagger =\inf _{\theta \in {\mathbb {R}}}\mathrm{cp}(A_*(\theta ))^{-1}\). Since, if Q is irreducible, all the elements of \({\tilde{Q}}=\sum _{n=0}^\infty Q^n\) simultaneously converge or diverge, we obtain, from Proposition 2.3, the following result.

Proposition 2.4

Assume (a5) and (a6). Then, \(\gamma ^\dagger \le 1\) if and only if \({\tilde{Q}}\) is elementwise finite.

Proof

Under the assumptions of the proposition, if \(\gamma ^\dagger \le 1\), then, by Proposition 2.3, R and G are elementwise finite. Since Q is irreducible, this implies that N is elementwise finite and \({\tilde{Q}}\) is also elementwise finite. On the other hand, if \({\tilde{Q}}\) is elementwise finite, then N is elementwise finite and R and G are also elementwise finite since the number of positive elements of each row of \(A_1\) and that of each column of \(A_{-1}\) are finite. Hence, by Proposition 2.3, we have \(\gamma ^\dagger \le 1\) and this completes the proof. \(\square \)

By this proposition, we obtain the main result of this section, as follows.

Lemma 2.6

Under (a5) and (a6), we have

and Q is \((\gamma ^\dagger )^{-1}\)-transient.

Proof

For \(\beta >0\), \(\beta Q\) is a nonnegative block tridiagonal matrix, whose block matrices are given by \(\beta A_{-1}\), \(\beta A_0\) and \(\beta A_1\). Hence, the assumptions of this lemma also hold for \(\beta Q\). Define \(\gamma (\beta )\) as

By Proposition 2.4, if \(\gamma (\beta ) = \beta \gamma ^\dagger \le 1\), then the fundamental matrix of \(\beta Q\), \(\widetilde{\beta Q}\), is finite elementwise and \(\mathrm{cp}(\beta Q)=\beta ^{-1} \mathrm{cp}(Q)\ge 1\). Hence, if \(\beta \le (\gamma ^\dagger )^{-1}\), then \(\mathrm{cp}(Q)\ge \beta \). Setting \(\beta \) at \((\gamma ^\dagger )^{-1}\), we obtain \(\mathrm{cp}(Q)\ge (\gamma ^\dagger )^{-1}\). Next we prove \(\mathrm{cp}(Q)\le (\gamma ^\dagger )^{-1}\). Suppose \(\mathrm{cp}(Q)> (\gamma ^\dagger )^{-1}\), then there exists an \(\varepsilon >0\) such that the fundamental matrix of \(((\gamma ^\dagger )^{-1}+\varepsilon ) Q\) is elementwise finite. By Proposition 2.4, this implies

and we obtain \(\gamma ^\dagger \le 0\). This contradicts \(\gamma ^\dagger > 0\), which is obtained from the irreducibility of \(A_*\). Hence, we obtain \(\mathrm{cp}(Q)\le (\gamma ^\dagger )^{-1}\). Setting \(\beta \) at \((\gamma ^\dagger )^{-1}\), we have \(\gamma (\beta )=\gamma ((\gamma ^\dagger )^{-1})\le 1\) and, by Proposition 2.4, the fundamental matrix of \(\beta Q=(\gamma ^\dagger )^{-1}Q\) is elementwise finite. This means Q is \((\gamma ^\dagger )^{-1}\)-transient. \(\square \)

Remark 2.3

Expression (2.18) is already known in the case of finite phase space; see Lemma 2.3 of [14]. This lemma asserts that it holds even in the case of infinite phase space under (a5) and (a6). Expression (2.18) is crucial in the analysis in the following sections.

Remark 2.4

For nonnegative block multidiagonal matrices, a property similar to Lemma 2.6 holds. In order to use expression (2.22), we demonstrate it in the case of block quintuple-diagonal matrix. The reblocking technique to reduce block banded matrices to block tridiagonal matrices was first introduced by [4] in a general setting. Let Q be a nonnegative block matrix defined as

where \(A_i,\,i\in \{-2,-1,0,1,2\}\), are nonnegative square matrices with a countable dimension. For \(\theta \in {\mathbb {R}}\), define a matrix function \(A_*(\theta )\) as

Then, assuming that Q is irreducible and the number of positive elements of each row and column of \(A_*(0)\) is finite, we can obtain

Here, we prove this equation. Define blocks \({\hat{A}}_i,\,i\in \{-1,0,1\}\), as

then Q is represented in block tridiagonal form by using these blocks. For \(\theta \in {\mathbb {R}}\), define a matrix function \({\hat{A}}_*(\theta )\) as

then, by Lemma 2.6, we have \(\mathrm{cp}(Q) = \sup _{\theta \in {\mathbb {R}}} \mathrm{cp}({\hat{A}}_*(\theta ))\). To prove Eq. (2.22), it, therefore, suffices to show that, for any \(\theta \in {\mathbb {R}}\),

For \(\theta \in {\mathbb {R}}\) and \(\alpha \in {\mathbb {R}}_+\), if \(\alpha {\varvec{x}}^\top A_*(\theta /2)\le {\varvec{x}}^\top \) for some \({\varvec{x}}>{\mathbf {0}}\), then, letting \({\hat{{\varvec{x}}}}^\top =({\varvec{x}}^\top , e^{-\theta /2} {\varvec{x}}^\top )\), we have \(\alpha {\hat{{\varvec{x}}}}^\top {\hat{A}}_*(\theta ) \le {\hat{{\varvec{x}}}}^\top \). On the other hand, if \(\alpha {\hat{{\varvec{x}}}}^\top {\hat{A}}_*(\theta ) \le {\hat{{\varvec{x}}}}^\top \) for some \({\hat{{\varvec{x}}}}^\top =({\hat{{\varvec{x}}}}_1^\top ,{\hat{{\varvec{x}}}}_2^\top )>{\mathbf {0}}^\top \), then letting \({\varvec{x}}={\hat{{\varvec{x}}}}_1+e^{\theta /2} {\hat{{\varvec{x}}}}_2\), we have \(\alpha {\varvec{x}}^\top A_*(\theta /2)\le {\varvec{x}}^\top \). As a result, we obtain Eq. (2.23).

3 Markov-modulated random walks: preliminaries

We give some assumptions and propositions for the d-dimensional MMRW \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) defined in Sect. 1. First, we assume the following condition.

Assumption 3.1

The d-dimensional MMRW \(\{{\varvec{Y}}_n\}\) is irreducible.

Under this assumption, for any \(\varvec{\theta }\in {\mathbb {R}}^d\), \(A_*(\varvec{\theta })\) is also irreducible. Denote \(A_*({\mathbf {0}})\) by \(A_*\), which is the transition probability matrix of the background process \(\{J_n\}\). In order to use the results in the previous section, we assume the following condition.

Assumption 3.2

The number of positive elements in every row and column of \(A_*\) is finite.

Define the mean increment vector \({\varvec{a}}=(a_1,a_2,\ldots ,a_d)\) as

We assume these limits exist with probability one. With respect to the occupation measure defined in Sect. 1, the following property holds.

Proposition 3.1

If there exists some \(i\in \{1,2,\ldots ,d\}\) such that \(a_i<0\), then, for any \({\varvec{y}}\in {\mathbb {S}}_+\), the occupation measure \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}}'\in {\mathbb {S}}_+)\) is finite, i.e.,

where \(\tau \) is the stopping time at which \(\{{\varvec{Y}}_n\}\) enters \({\mathbb {S}}{\setminus }{\mathbb {S}}_+\) for the first time.

Proof

Without loss of generality, we assume \(a_1<0\). Let \({\check{\tau }}\) be the stopping time at which \(X_{1,n}\) becomes less than 0 for the first time, i.e., \({\check{\tau }} = \inf \{n\ge 0; X_{1,n}<0 \}\). Since \(\{(x_1,x_2,\ldots ,x_d,j)\in {\mathbb {S}}; x_1<0 \} \subset {\mathbb {S}}{\setminus }{\mathbb {S}}_+\), we have \(\tau \le {\check{\tau }}\), and this implies that, for any \({\varvec{y}}\in {\mathbb {S}}_+\),

Next, we demonstrate that \({\mathbb {E}}({\check{\tau }}\,|\,{\varvec{Y}}_0={\varvec{y}})\) is finite. For \(i\in \{-1,0,1\}\), define a matrix \({\check{A}}_i\) as

and consider a one-dimensional QBD process \(\{{\check{{\varvec{Y}}}}_n\}=\{({\check{X}}_n,{\check{J}}_n)\}\) on \({\mathbb {Z}}_+\times S_0\), having \({\check{A}}_{-1}\), \({\check{A}}_0\) and \({\check{A}}_1\) as the transition probability blocks when \({\check{X}}_n>0\). We assume the transition probability blocks that govern transitions of the QBD process when \({\check{X}}_n=0\) are given appropriately. Define a stopping time \({\check{\tau }}^Q\) as \({\check{\tau }}^Q=\inf \{n\ge 0; {\check{X}}_n=0\}\). Since \(a_1\) is the mean increment of the QBD process when \({\check{X}}_n>0\), the assumption \(a_1<0\) implies that, for any \((x,j)\in {\mathbb {Z}}_+\times S_0\), \({\mathbb {E}}({\check{\tau }}^Q\,|\,{\check{Y}}_0=(x,j)) < \infty \). We, therefore, have that, for any \({\varvec{y}}=(x_1,x_2,\ldots ,x_d,j)\in {\mathbb {S}}_+\),

and this completes the proof. \(\square \)

Hereafter, we assume the following condition.

Assumption 3.3

For some \(i\in \{1,2,\ldots ,d\}\), \(a_i<0\).

Remark 3.1

If \(A_*\) is positive recurrent, the mean increment vector \({\varvec{a}}=(a_1,a_2,\ldots ,a_d)\) is given as

where \(\varvec{\pi }_*\) is the stationary distribution of \(A_*\) and \({\mathbf {1}}\) a column vector of 1’s whose dimension is determined in context.

We say that a positive function \(f({\varvec{x}})\) is log-convex in \({\varvec{x}}\in {\mathbb {R}}^d\) if \(\log f({\varvec{x}})\) is convex in \({\varvec{x}}\). A log-convex function is also a convex function. By Lemma A.1 in Appendix A, the following property holds.

Proposition 3.2

\(\mathrm{cp}(A_*(\varvec{\theta }))^{-1}\) is log-convex and hence convex in \(\varvec{\theta }\in {\mathbb {R}}^d\).

Let \({\bar{\varGamma }}\) be the closure of \(\varGamma \), i.e., \({\bar{\varGamma }}=\{ \varvec{\theta }\in {\mathbb {R}}^d; \mathrm{cp}(A_*(\varvec{\theta }))^{-1} \le 1\}\). By Proposition 3.2, \({\bar{\varGamma }}\) is a convex set. By Remark 2.2 and Proposition B.1 in Appendix B, the following property holds.

Proposition 3.3

\({\bar{\varGamma }}\) is bounded.

For \({\varvec{y}}=({\varvec{x}},j)\in {\mathbb {S}}_+\), we give an asymptotic inequality for the occupation measure \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}; {\varvec{y}}'\in {\mathbb {S}}_+)\). Under Assumption 3.3, the occupation measure is finite and \(({\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}/E(\tau \,|\,{\varvec{Y}}_0={\varvec{y}}); {\varvec{y}}'\in {\mathbb {S}}_+)\) becomes a probability measure. Let \({\varvec{Y}}=({\varvec{X}},J)\) be a random variable subject to the probability measure, i.e., \(P({\varvec{Y}}={\varvec{y}}')= {\tilde{q}}_{{\varvec{y}},{\varvec{y}}'}/E(\tau \,|\,{\varvec{Y}}_0={\varvec{y}})\) for \({\varvec{y}}'\in {\mathbb {S}}_+\). By Markov’s inequality, for \(\varvec{\theta }\in {\mathbb {R}}^d\) and for \({\varvec{c}}\in {\mathbb {R}}_+^d\) such that \({\varvec{c}}\ne {\mathbf {0}}\), we have, for \(k\ge 1\) and \(j'\in S_0\),

This implies that, for every \({\varvec{l}}\in {\mathbb {Z}}_+^d\),

where \(\lceil {\varvec{c}}\rceil =(\lceil c_1 \rceil ,\lceil c_2 \rceil ,\ldots ,\lceil c_d \rceil )\) and \(\lceil x \rceil \) is the smallest integer greater than or equal to x. Hence, considering the convergence domain of \(\varPhi _{{\varvec{x}}}(\varvec{\theta })\), we immediately obtain the following basic inequality.

Lemma 3.1

For any \({\varvec{c}}\in {\mathbb {Z}}_+^d\) such that \({\varvec{c}}\ne {\mathbf {0}}\) and for every \(({\varvec{x}},j)\in {\mathbb {S}}_+\), \(j'\in S_0\) and \({\varvec{l}}\in {\mathbb {Z}}_+^d\),

4 QBD representations for the MMRW

In this section, we make two kinds of one-dimensional QBD process with a countable phase space from the d-dimensional MMRW defined in Sect. 1 and obtain upper bounds for the convergence parameters of their rate matrices. These upper bounds will give lower bounds for the asymptotic decay rates of the occupation measure in the original MMRW.

4.1 QBD representation with level direction vector \({\mathbf {1}}\)

Let \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) be a d-dimensional MMRW. In order to use the results in Sect. 2, hereafter, we assume the following condition.

Assumption 4.1

\(P_+\) is irreducible.

Under this assumption, P is irreducible regardless of Assumption 3.1 and every element of \({\tilde{P}}_+\) is positive. Let \(\tau \) be the stopping time defined in Sect. 1, i.e., \(\tau =\inf \{n\ge 0; {\varvec{Y}}_n\in {\mathbb {S}}{\setminus }{\mathbb {S}}_+\}\). According to Example 4.2 of [13], define a one-dimensional absorbing QBD process \(\{{\hat{{\varvec{Y}}}}_n\}=\{({\hat{X}}_n,{\hat{{\varvec{J}}}}_n)\}\) as

where \(x\wedge y= \min \{x,y\}\), \({\hat{Z}}_{0,n}=\min \{i\in \{1,2,\ldots ,d\}; X_{i,\tau \wedge n}={\hat{X}}_n\}\),

and \({\hat{J}}_n=J_{\tau \wedge n}\). We restrict the state space of \(\{{\hat{{\varvec{Y}}}}_n\}\) to \({\mathbb {Z}}_+\times ({\mathbb {N}}_d\times {\mathbb {Z}}_+^{d-1}\times S_0)\), where \({\mathbb {N}}_d=\{1,2,\ldots ,d\}\). For \(k\in {\mathbb {Z}}_+\), the k-th level set of \(\{{\hat{{\varvec{Y}}}}_n\}\) is given by \({\mathbb {L}}_k=\{ ({\varvec{x}},j)=((x_1,x_2,\ldots ,x_d),j)\in {\mathbb {S}}_+; \min _{1\le i\le d} x_i = k \}\) which satisfies, for \(k\ge 0\),

This means that \(\{{\hat{{\varvec{Y}}}}_n\}\) is a QBD process with level direction vector \({\mathbf {1}}\). The transition probability matrix of \(\{{\hat{Y}}_n\}\) is given in block tridiagonal form as

We omit the specific description of \( {\hat{A}}_{-1}\), \({\hat{A}}_0\) and \({\hat{A}}_1\). Instead, in the case where \({\hat{Z}}_{0,n}=d\) and \({\hat{Z}}_{i,n}\ge 2\), \(i\in \{1,2,\ldots ,d-1\}\), we give their description in terms of \(A_{{\varvec{i}}_{(d)}},\,{\varvec{i}}_{(d)}=(i_1,i_2,\ldots ,i_d)\in \{-1,0,1\}^d\). For \(k\in \{-1,0,1\}\) and \({\varvec{i}}_{(d-1)}=(i_1,i_2,\ldots ,i_{d-1})\in {\mathbb {Z}}^{d-1}\), define a block \({\hat{A}}_{k,{\varvec{i}}_{(d-1)}}\) as

where we assume that \({\varvec{x}}_{(d-1)}=(x_1,x_2,\ldots ,x_{d-1})\ge 2\,{\mathbf {1}}\) and use the fact that the right-hand side does not depend on \({\varvec{x}}_{(d-1)}\) because of the space homogeneity of \(\{{\varvec{Y}}_n\}\). Since the level process \(\{{\varvec{X}}_n\}\) of the original MMRW is skip free in all directions, the block \({\hat{A}}_{k,{\varvec{i}}_{(d-1)}}\) is given as

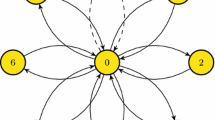

where, for positive integer l, we denote by \({\mathbf {1}}_l\) an l-dimensional vector of 1’s (see Fig. 1).

Recall that \({\tilde{P}}_+\) is the fundamental matrix of the substochastic matrix \(P_+\) and each row of \({\tilde{P}}_+\) is an occupation measure. For \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d\), the matrix \(N_{{\varvec{x}},{\varvec{x}}'}\) is given as \(N_{{\varvec{x}},{\varvec{x}}'}=({\tilde{q}}_{({\varvec{x}},j),({\varvec{x}}',j')}; j,j'\in S_0)\). In terms of \(N_{{\varvec{x}},{\varvec{x}}'}\), \({\tilde{P}}_+\) is represented as \({\tilde{P}}_+=(N_{{\varvec{x}},{\varvec{x}}'}; {\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d)\). We derive a matrix geometric representation for \({\tilde{P}}_+\) according to the QBD process \(\{{\hat{{\varvec{Y}}}}_n\}\). Under Assumption 3.3, the summation of each row of \({\tilde{P}}_+\) is finite and we obtain the following recursive formula for \({\tilde{P}}_+\):

Define \({\hat{N}}_0\) as \({\hat{N}}_0 = \big ( {\hat{N}}_{0,k}; k\in {\mathbb {Z}}_+ \big )\), where

Since \({\hat{N}}_0\) is a submatrix of \({\tilde{P}}_+\), we obtain from (4.4) that

where \(P_+\) in (4.4) is replaced with \({\hat{P}}\) and this \({\hat{P}}\) has the same block structure as \({\hat{N}}_0\). This equation leads us to

Let \({\hat{R}}\) be the rate matrix generated from the triplet \(\{{\hat{A}}_{-1},{\hat{A}}_0,{\hat{A}}_1\}\), which is the minimal nonnegative solution to the matrix quadratic equation

Then, the solution to Eq. (4.6) is given as

where we use the fact that \(\mathrm{cp}({\hat{A}}_0+{\hat{R}}{\hat{A}}_{-1})<1\) since \({\tilde{P}}_+\) is elementwise finite.

Next, we give an upper bound for \(\mathrm{cp}({\hat{R}})\), the convergence parameter of \({\hat{R}}\). For \(\theta \in {\mathbb {R}}\), define a matrix function \({\hat{A}}_*(\theta )\) as

Since \(P_+\) is irreducible and the number of positive elements of each row and column of \({\hat{A}}_*(0)\) is finite, we have, by Lemma 2.5,

We consider the relation between \(\mathrm{cp}(A_*(\varvec{\theta }))\) and \(\mathrm{cp}({\hat{A}}_*(\theta ))\). For \({\varvec{i}}_{(d-1)}\in {\mathbb {Z}}^{d-1}\), define a matrix function \({\hat{A}}_{*,{\varvec{i}}_{(d-1)}}(\theta )\) as

Further define a block matrix \({\hat{A}}_*^\dagger (\theta )\) as

where \({\hat{A}}_{*,{\varvec{x}}'_{(d-1)}-{\varvec{x}}_{(d-1)}}(\theta )= O\) if \({\varvec{x}}'_{(d-1)}-{\varvec{x}}_{(d-1)}\notin \{-2,-1,0,1,2\}^{d-1}\). The matrix \({\hat{A}}_*^\dagger (\theta )\) is a submatrix of \({\hat{A}}_*(\theta )\), obtained by restricting the state space \({\mathbb {N}}_d\times {\mathbb {Z}}_+^{d-1}\times S_0\) to \(\{d\}\times {\mathbb {Z}}_+^{d-1}\times S_0\). Hence, we have

Define a matrix function \({\hat{A}}_{*,*}(\theta ,\varvec{\theta }_{(d-1)})\) as

where \(\varvec{\theta }_{(d-1)}=(\theta _1,\theta _2,\ldots ,\theta _{d-1})\). From (4.3), we see that \({\hat{A}}_*^\dagger (\theta )\) is a multiple-block-quintuple-diagonal matrix and, applying Remark 2.4 to it repeatedly, we obtain

Furthermore, from (4.3), we have

Hence, we obtain the following proposition.

Proposition 4.1

Proof

From (4.10), (4.11) and (4.12), we obtain

This and (4.9) lead us to inequality (4.13). \(\square \)

4.2 QBD representation with level direction vector \({\varvec{c}}\)

Letting \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\) be a vector of positive integers, we consider another QBD representation of \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\), whose level direction vector is given by \({\varvec{c}}\). For \(k\in \{1,2,\ldots ,d\}\), denote by \({}^{{\varvec{c}}}X_{k,n}\) and \({}^{{\varvec{c}}}M_{k,n}\) the quotient and remainder of \(X_{k,n}\) divided by \(c_k\), respectively, i.e.,

where \({}^{{\varvec{c}}}X_{k,n}\in {\mathbb {Z}}\) and \({}^{{\varvec{c}}}M_{k,n}\in \{0,1,\ldots ,c_k-1\}\). Define a process \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\) as

where \({}^{{\varvec{c}}}{\varvec{X}}_n=({}^{{\varvec{c}}}X_{1,n},{}^{{\varvec{c}}}X_{2,n},\ldots ,{}^{{\varvec{c}}}X_{d,n})\) and \({}^{{\varvec{c}}}{\varvec{M}}_n=({}^{{\varvec{c}}}M_{1,n},{}^{{\varvec{c}}}M_{2,n},\ldots ,{}^{{\varvec{c}}}M_{d,n})\). The process \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\) is a d-dimensional MMRW with the background process \(\{({}^{{\varvec{c}}}{\varvec{M}}_n,J_n)\}\) and its state space is given by \({\mathbb {Z}}^d\times (\prod _{k=1}^d {\mathbb {Z}}_{0,c_k-1}\times S_0)\), where \({\mathbb {Z}}_{0,c_k-1}=\{0,1,\ldots ,c_k-1\}\). The transition probability matrix of \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\), denoted by \({}^{{\varvec{c}}}P\), has a multiple-tridiagonal block structure like P. Denote by \({}^{{\varvec{c}}}A_{{\varvec{i}}},\,{\varvec{i}}\in \{-1,0,1\}^d\), the nonzero blocks of \({}^{{\varvec{c}}}P\) and define a matrix function \({}^{{\varvec{c}}}A_*(\varvec{\theta })\) as

The following relation holds between \(A_*(\varvec{\theta })\) and \({}^{{\varvec{c}}}A_*(\varvec{\theta })\).

Proposition 4.2

For any vector \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\) of positive integers, we have

where \(\varvec{\theta }=(\theta _1,\theta _2,\ldots ,\theta _d)\) and \({\varvec{c}}\bullet \varvec{\theta }=(c_1\theta _1,c_2\theta _2,\ldots ,c_d\theta _d)\).

We use the following proposition for proving Proposition 4.2.

Proposition 4.3

Let \(C_{-1}\), \(C_0\) and \(C_1\) be \(m\times m\) nonnegative matrices, where m can be countably infinite, and define a matrix function \(C_*(\theta )\) as

Assume that, for any \(n\in {\mathbb {Z}}_+\), \(C_*(0)^n\) is finite elementwise and \(C_*(0)\) is irreducible. Let k be a positive integer and define a \(k\times k\) block matrix \(C^{[k]}(\theta )\) as

Then, we have \(\mathrm{cp}(C^{[k]}(k \theta ))=\mathrm{cp}(C_*(\theta ))\).

Proof

First, assume that, for a positive number \(\beta \) and measure \({\varvec{u}}\), \(\beta {\varvec{u}}C_*(\theta ) \le {\varvec{u}}\), and define a measure \({\varvec{u}}^{[k]}\) as

Then, we have \(\beta {\varvec{u}}^{[k]} C^{[k]}(k\theta ) \le {\varvec{u}}^{[k]}\) and, by Theorem 6.3 of [21], we obtain \(\mathrm{cp}(C_*(\theta )) \le \mathrm{cp}(C^{[k]}(k\theta ))\).

Next, assume that, for a positive number \(\beta \) and measure \({\varvec{u}}^{[k]}=\begin{pmatrix} {\varvec{u}}_1&{\varvec{u}}_2&\cdots&{\varvec{u}}_k \end{pmatrix}\), \(\beta {\varvec{u}}^{[k]} C^{[k]}(k\theta ) \le {\varvec{u}}^{[k]}\), and define a measure \({\varvec{u}}\) as

Further, define a nonnegative matrix \(V^{[k]}\) as

Then, we have \(\beta {\varvec{u}}^{[k]} C^{[k]}(k\theta ) V^{[k]} = \beta {\varvec{u}}C_*(\theta )\) and \({\varvec{u}}^{[k]} V^{[k]} = {\varvec{u}}\). Hence, we have \(\beta {\varvec{u}}C_*(\theta ) \le {\varvec{u}}\) and this implies \(\mathrm{cp}(C^{[k]}(k\theta )) \le \mathrm{cp}(C_*(\theta ))\). \(\square \)

Proof of Proposition 4.2

Let \(\varvec{\theta }=(\theta _1,\theta _2,\ldots ,\theta _d)\) be a d-dimensional vector in \({\mathbb {R}}^d\) and, for \(k\in \{1,2,\ldots ,d\}\), define \(\varvec{\theta }_{(k)}\) and \(\varvec{\theta }_{[k]}\) as \(\varvec{\theta }_{(k)}=(\theta _1,\theta _2,\ldots ,\theta _k)\) and \(\varvec{\theta }_{[k]}=(\theta _k,\theta _{k+1},\ldots ,\theta _d)\), respectively. We consider the multiple-block structure of \({}^{{\varvec{c}}}A_*(\varvec{\theta })\) according to \({\mathbb {Z}}_{0,c_1-1}\times {\mathbb {Z}}_{0,c_2-1}\times \cdots \times {\mathbb {Z}}_{0,c_d-1}\times S_0\), the state space of the background process of \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\). For \(k\in \{-1,0,1\}\), define \({}^{{\varvec{c}}}A_k^{[1]}(\varvec{\theta }_{[2]})\) as

where \({\varvec{i}}_{[2]}=(i_2,i_3,\ldots ,i_d)\). Due to the skip-free property of the original process, they are given in \(c_1\times c_1\) block form as

where each \(B^{[1]}_{i}(\varvec{\theta }_{[2]})\) is a matrix function of \(\varvec{\theta }_{[2]}\) and we use the fact that \({}^{{\varvec{c}}}M_{1,n}\) is the remainder of \(X_{1,n}\) divided by \(c_1\). Hence, \({}^{{\varvec{c}}}A_*(\varvec{\theta })\) is given in \(c_1\times c_1\) block form as

Define a matrix function \(B^{[1]}_*(\theta _1,\varvec{\theta }_{[2]})\) as

Then, by Proposition 4.3, we have

Analogously, for \(i_1\in \{-1,0,1\}\), \(B^{[1]}_{i_1}(\varvec{\theta }_{[2]})\) is represented in \(c_2\times c_2\) block form as

where each \(B^{[2]}_{i_1,i_2}(\varvec{\theta }_{[3]})\) is a matrix function of \(\varvec{\theta }_{[3]}\). Define a matrix function \(B^{[2]}_*(\theta _1,\theta _2,\varvec{\theta }_{[3]})\) as

Then, by Proposition 4.3, we obtain from (4.19) and (4.21) that

Repeating this procedure more \((d-3)\) times, we obtain

where

and \({\varvec{i}}_{(d-1)}=(i_1,i_2,\ldots ,i_{d-1})\). As a result, we have

where \({\varvec{c}}_{[k]}=(c_k,c_{k+1},\ldots ,c_d)\), and this completes the proof. \(\square \)

Next, we apply the results of the previous subsection to the d-dimensional MMRW \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\). Let \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) be a one-dimensional absorbing QBD process with level direction vector \({\mathbf {1}}\), generated from \(\{{}^{{\varvec{c}}}{\varvec{Y}}_n\}\). The process \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) is given by

where

and \(\tau \) is the stopping time at which the original MMRW \(\{{\varvec{Y}}_n\}\) enters \({\mathbb {S}}{\setminus }{\mathbb {S}}_+\) for the first time. We restrict the state space of \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) to \({\mathbb {Z}}_+\times ({\mathbb {N}}_d\times {\mathbb {Z}}_+^{d-1}\times \prod _{k=1}^d {\mathbb {Z}}_{0,c_k-1}\times S_0)\). For \(k\in {\mathbb {Z}}_+\), the k-th level set of \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) is given by

where \(\lfloor x \rfloor \) is the maximum integer less than or equal to x. The level sets satisfy, for \(k\ge 0\),

This means that \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) is a QBD process with level direction vector \({\varvec{c}}\). Let \({}^{{\varvec{c}}}{\hat{R}}\) be the rate matrix of the QBD process \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\). An upper bound for the convergence parameter of \({}^{{\varvec{c}}}{\hat{R}}\) is given as follows.

Lemma 4.1

Proof

By Propositions 4.1 and 4.2, we have

\(\square \)

5 Asymptotic properties of the occupation measure

In this section, we derive the asymptotic decay rate of the occupation measure in the d-dimensional MMRW \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\). We also obtain the convergence domain of the matrix moment generating function for the occupation measure.

5.1 Asymptotic decay rate in an arbitrary direction

Recall that, for \({\varvec{x}}\in {\mathbb {Z}}_+^d\), the convergence domain of the matrix moment generating function \(\varPhi _{{\varvec{x}}}(\varvec{\theta })\) is given as \({\mathcal {D}}_{{\varvec{x}}} = \text{ the } \text{ interior } \text{ of } \{\varvec{\theta }\in {\mathbb {R}}^d : \varPhi _{{\varvec{x}}}(\varvec{\theta })<\infty \}\). This domain does not depend on \({\varvec{x}}\), as follows.

Proposition 5.1

For every \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d\), \({\mathcal {D}}_{{\varvec{x}}}={\mathcal {D}}_{{\varvec{x}}'}\).

Proof

For every \({\varvec{x}},{\varvec{x}}'\in {\mathbb {Z}}_+^d\) and \(j\in S_0\), since \(P_+\) is irreducible, there exists \(n_0\ge 0\) such that \({\mathbb {P}}({\varvec{Y}}_{n_0}=({\varvec{x}}',j)\,|\,{\varvec{Y}}_0=({\varvec{x}},j))>0\). Using this \(n_0\), we obtain, for every \(j'\in S_0\),

where \(\tau \) is the stopping time given by \(\tau =\inf \{n\ge 0; {\varvec{Y}}_n\in {\mathbb {S}}{\setminus }{\mathbb {S}}_+\}\). This implies \({\mathcal {D}}_{{\varvec{x}}}\subset {\mathcal {D}}_{{\varvec{x}}'}\). Exchanging \({\varvec{x}}\) with \({\varvec{x}}'\), we obtain \({\mathcal {D}}_{{\varvec{x}}'}\subset {\mathcal {D}}_{{\varvec{x}}}\), and this completes the proof. \(\square \)

A relation between the point sets \(\varGamma \) and \({\mathcal {D}}_x\) is given as follows.

Proposition 5.2

For every \({\varvec{x}}\in {\mathbb {Z}}_+^d\), \(\varGamma \subset {\mathcal {D}}_{{\varvec{x}}}\) and hence \({\mathcal {D}}\subset {\mathcal {D}}_{{\varvec{x}}}\).

Proof

If \(\varvec{\theta }\in \varGamma \), then \(\mathrm{cp}(A_*(\varvec{\theta }))>1\) and we have \( \sum _{k=0}^\infty A_*(\varvec{\theta })^k<\infty \), elementwise. This gives that, for every \(j,j'\in S_0\),

and we have \(\varGamma \subset {\mathcal {D}}_{{\mathbf {0}}}\). Hence, by Proposition 5.1, we obtain the desired result. \(\square \)

Using Lemmas 3.1 and 4.1, we obtain the asymptotic decay rate of the occupation measure, as follows.

Theorem 5.1

For any positive vector \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\in {\mathbb {Z}}_+^d\), for every \({\varvec{x}}=(x_1,x_2,\ldots ,x_d)\in {\mathbb {Z}}_+^d\) such that \(\min _{1\le i\le d} x_i=0\), for every \({\varvec{l}}=(l_1,l_2,\ldots ,l_d)\in {\mathbb {Z}}_+^d\) such that \(\min _{1\le i\le d} l_i=0\) and for every \(j,j'\in S_0\),

Proof

By Lemma 3.1 and Proposition 5.2, we have, for any positive vector \({\varvec{c}}\in {\mathbb {Z}}_+^d\) and for every \(({\varvec{x}},j)\in {\mathbb {S}}_+\), \(j'\in S_0\) and \({\varvec{l}}\in {\mathbb {Z}}_+\),

Hence, in order to prove the theorem, it suffices to give the lower bound.

Consider the one-dimensional QBD process \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\) defined in the previous section. Applying Corollary 2.1 to the rate matrix \(\{{}^{{\varvec{c}}}{\hat{R}}\}\) of \(\{{}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n\}\), we obtain, for some \({\varvec{z}}''=(i'',{\varvec{x}}'',{\varvec{m}}'',j'')\in {\mathbb {N}}_d\times {\mathbb {Z}}_+^{d-1}\times \prod _{k=1}^d {\mathbb {Z}}_{0,c_k-1}\times S_0\) and every \({\varvec{z}}'=(i',{\varvec{x}}',{\varvec{m}}',j')\in {\mathbb {N}}_d\times {\mathbb {Z}}_+^{d-1}\times \prod _{k=1}^d {\mathbb {Z}}_{0,c_k-1}\times S_0\),

where \({\varvec{x}}'=(x_1',\ldots ,x_{d-1}'), {\varvec{x}}''=(x_1',\ldots ,x_{d-1}'')\in {\mathbb {Z}}_+^{d-1}\) and \({\varvec{m}}'=(m_1',\ldots ,m_d'), {\varvec{m}}''=(m_1'',\ldots ,m_d'')\in \prod _{k=1}^d {\mathbb {Z}}_{0,c_k-1}\). For \(k\ge 0\), \({}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n=(k,i',{\varvec{x}}',{\varvec{m}}',j')\) corresponds to \({\varvec{Y}}_n=(k{\varvec{c}}+{\varvec{c}}\bullet {\hat{{\varvec{x}}}}'+{\varvec{m}}',j')\), where \({\hat{{\varvec{x}}}}'=(x_1',\ldots ,x_{i'-1}',0,x_{i'}',\ldots ,x_{d-1}')\). Analogously, \({}^{{\varvec{c}}}{\hat{{\varvec{Y}}}}_n=(0,i'',{\varvec{x}}'',{\varvec{m}}'',j'')\) corresponds to \({\varvec{Y}}_n=({\varvec{c}}\bullet {\hat{{\varvec{x}}}}'' +{\varvec{m}}'',j'')\), where \({\hat{{\varvec{x}}}}''=(x_1'',\ldots ,x_{i''-1}'',0,x_{i''}'',\ldots ,x_{d-1}'')\). Hence, from (4.8), setting \({\varvec{l}}={\varvec{c}}\bullet {\hat{{\varvec{x}}}}' +{\varvec{m}}'\), we obtain, for every \({\varvec{x}}=(x_1,x_2,\ldots ,x_d)\in {\mathbb {Z}}_+^d\) such that \(\min _{1\le i\le d} x_i=0\) and for every \(j\in S_0\),

From (5.5), (5.6) and (4.27), setting \({\varvec{m}}'={\mathbf {0}}\), we obtain

and this completes the proof. \(\square \)

Corollary 5.1

The same result as Theorem 5.1 holds for every direction vector \({\varvec{c}}\in {\mathbb {Z}}_+^d\) such that \({\varvec{c}}\ne {\mathbf {0}}\).

Proof

Let \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) be a d-dimensional MMRW on the state space \({\mathbb {Z}}^d\times S_0\) and define an absorbing Markov chain \(\{{\hat{{\varvec{Y}}}}_n\}=\{({\hat{{\varvec{X}}}}_n,{\hat{J}}_n)\}\) as \({\hat{{\varvec{Y}}}}_n={\varvec{Y}}_{\tau \wedge n}\) for \(n\ge 0\), where \(\tau \) is the stopping time given by \(\tau =\inf \{n\ge 0; {\varvec{Y}}_n\in {\mathbb {S}}{\setminus }{\mathbb {S}}_+\}\). We assume that the state space of \(\{{\hat{{\varvec{Y}}}}_n\}\) is given by \({\mathbb {S}}_+\). If \(d=1\), the assertion of the corollary is trivial. Hence, we assume \(d\ge 2\) and set m in \(\{1,2,\ldots ,d-1\}\). Without loss of generality, we assume the direction vector \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\) satisfies \(c_i>0\) for \(i\in \{1,2,\ldots ,m\}\) and \(c_i=0\) for \(i\in \{m+1,m+2,\ldots ,d\}\). Consider an m-dimensional MMRW \(\{{\hat{{\varvec{Y}}}}_n^{(m)}\}=\{({\hat{X}}_1,\ldots ,{\hat{X}}_m,({\hat{X}}_{m+1},\ldots ,{\hat{X}}_d,{\hat{J}}_n))\}\), where \(({\hat{X}}_1,\ldots ,{\hat{X}}_m)\) is the level and \(({\hat{X}}_{m+1},\ldots ,{\hat{X}}_d,{\hat{J}}_n)\) the background state, and denote by \(A_{{\varvec{i}}}^{(m)},\,{\varvec{i}}\in \{-1,0,1\}^m\), its transition probability blocks. For \(\varvec{\theta }_{(m)}=(\theta _1,\ldots ,\theta _m)\in {\mathbb {R}}^m\), define a matrix function \(A_*^{(m)}(\varvec{\theta }_{(m)})\) as

Since \(\{{\varvec{Y}}_n\}\) is a MMRW, this \(A_*^{(m)}(\varvec{\theta }_{(m)})\) has a multiple tridiagonal structure and, applying Lemma 2.6 repeatedly, we obtain

where \(\varvec{\theta }_{[m+1]}=(\theta _{m+1},\ldots ,\theta _d)\) and \(A_*(\varvec{\theta })=A_*(\varvec{\theta }_{(m)},\varvec{\theta }_{[m+1]})\) is given by (1.3). Hence, applying Theorem 5.1 to \(\{{\hat{{\varvec{Y}}}}_n^{(m)}\}\), we obtain, for every \({\varvec{x}}_{(m)}=(x_1,\ldots ,x_m)\in {\mathbb {Z}}_+^m\) such that \(\min _{1\le i\le m} x_i=0\), for every \({\varvec{x}}_{[m+1]}=(x_{m+1},\ldots ,x_d)\in {\mathbb {Z}}_+^{d-m}\), for every \({\varvec{l}}_{(m)}=(l_1,\ldots ,l_m)\in {\mathbb {Z}}_+^m\) such that \(\min _{1\le i\le m} l_i=0\) and for every \({\varvec{l}}_{[m+1]}=(l_{[m+1]},\ldots ,l_d)\in {\mathbb {Z}}_+^{d-m}\),

where \({\varvec{c}}_{(m)}=(c_1,\ldots ,c_m)\) and we use the assumption that \((c_{m+1},\ldots ,c_d)={\mathbf {0}}\). \(\square \)

5.2 Convergence domain of the matrix moment generating function

From Proposition 5.2 and Theorem 5.1, we obtain the following result for the convergence domain.

Theorem 5.2

For every \({\varvec{x}}\in {\mathbb {Z}}_+^d\), \({\mathcal {D}}_{{\varvec{x}}}={\mathcal {D}}\).

Proof

We prove \({\mathcal {D}}_{{\mathbf {0}}}={\mathcal {D}}\). By Proposition 5.1, this implies \({\mathcal {D}}_{{\varvec{x}}}={\mathcal {D}}\) for every \({\varvec{x}}\in {\mathbb {Z}}_+^d\). Suppose \({\mathcal {D}}_{{\mathbf {0}}} {\setminus }{\mathcal {D}}\ne \emptyset \). Since \({\mathcal {D}}_{{\mathbf {0}}}\) is an open set and, by Proposition 5.2, we have \({\mathcal {D}}\subset {\mathcal {D}}_{{\mathbf {0}}}\), there exists a point \({\varvec{q}}\in {\mathcal {D}}_{{\mathbf {0}}}{\setminus }{\bar{{\mathcal {D}}}}\), where \({\bar{{\mathcal {D}}}}\) is the closure of \({\mathcal {D}}\). This \({\varvec{q}}\) satisfies \(\varPhi _{{\mathbf {0}}}({\varvec{q}})<\infty \). Since \({\bar{{\mathcal {D}}}}\) is a convex set, there exists a hyperplane \({\mathscr {H}}\) satisfying \({\varvec{q}}\in {\mathscr {H}}\) and \({\bar{{\mathcal {D}}}}\cap {\mathscr {H}}=\emptyset \) (see Fig. 2). Denote by \({\varvec{c}}\ge {\mathbf {0}}\) the normal vector of \({\mathscr {H}}\), where we assume \(\Vert {\varvec{c}}\Vert =1\). By the definition, \({\varvec{c}}\) satisfies

Let \({\varvec{c}}'\) be a vector of positive integers satisfying

This is possible because of (5.10) and the fact that \({\bar{{\mathcal {D}}}}\) is bounded in any positive direction. For this \({\varvec{c}}'\) and for \(j,j'\in S_0\), define a moment generating function \(\varphi _{{\varvec{c}}'}(\varvec{\theta })\) as

and a point \({}^{{\varvec{c}}'}\varvec{\theta }\) as \({}^{{\varvec{c}}'}\varvec{\theta }= \arg \max _{\varvec{\theta }\in {\bar{{\mathcal {D}}}}}\, \langle {\varvec{c}}',\varvec{\theta }\rangle \). By Theorem 5.1 and the Cauchy–Hadamard theorem, we see that the radius of convergence of the power series on the right-hand side of (5.12) is \(e^{\langle {\varvec{c}}',{}^{{\varvec{c}}'}\varvec{\theta }\rangle }\) and this implies that \(\varphi _{{\varvec{c}}}(\varvec{\theta })\) diverges if \(\langle {\varvec{c}}',\varvec{\theta }\rangle > \langle {\varvec{c}}',{}^{{\varvec{c}}'}\varvec{\theta }\rangle \). Hence, by (5.11), we have \(\varphi _{{\varvec{c}}'}({\varvec{q}})=\infty \). On the other hand, we obtain from the definition of \(\varphi _{{\varvec{c}}}(\varvec{\theta })\) that

This is a contradiction and, as a result, we obtain \({\mathcal {D}}_{{\mathbf {0}}}{\setminus }{\mathcal {D}}=\emptyset \). \(\square \)

5.3 Asymptotic decay rate of the marginal measure

Let \({\varvec{X}}\) be a vector of random variables subject to the stationary distribution of a multi-dimensional reflected random walk. The asymptotic decay rate of the marginal tail distribution in the form \({\mathbb {P}}(\langle {\varvec{c}},{\varvec{X}}\rangle >x)\) has been discussed in [12] (also see [9]), where \({\varvec{c}}\) is a direction vector. In this subsection, we consider this type of asymptotic decay rate for the occupation measure.

Let \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\) be a vector of mutually prime positive integers. We assume \(c_1=\min _{1\le i \le d} c_i\); in other cases such as \(c_2=\min _{1\le i \le d} c_i\), analogous results can be obtained. For \(k\ge 0\), define an index set \({\mathscr {I}}_k\) as

For \({\varvec{x}}\in {\mathbb {Z}}_+^d\), the matrix moment generating function \(\varPhi _{{\varvec{x}}}(\theta {\varvec{c}})\) is represented as

where \({\varvec{c}}_{[2]}=(c_2,c_3,\ldots ,c_d)\). By the Cauchy–Hadamard theorem, we obtain the following result.

Theorem 5.3

For any vector of mutually prime positive integers, \({\varvec{c}}=(c_1,c_2,\ldots ,c_d)\), such that \(c_1=\min _{1\le i\le d} c_i\) and for every \(({\varvec{x}},j)\in {\mathbb {S}}_+\) and \(j'\in S_0\),

In other cases, for example, \(c_2=\min _{1\le i\le d} c_i\), an analogous result holds.

5.4 Single-server polling model with limited services: an example

As a simple example, we consider a single-server polling model with three queues, in which first two queues (Q\(_1\) and Q\(_2\)) are served according to 1-limited service and the other queue (Q\(_3\)) according to K-limited service (see Fig. 3). We say that, for \(k\ge 1\), a queue is served according to k-limited service if the server serves at most k customers on a visit to that queue. The single server goes around the queues in order Q\(_1\), Q\(_2\), Q\(_3\), without switchover times. For \(i\in \{1,2,3\}\), customers arrive at Q\(_i\) according to a Poisson process with intensity \(\lambda _i\) and they receive exponential service with mean \(1/\mu _i\). We denote by \(\lambda \) the sum of the arrival rates, i.e., \(\lambda =\lambda _1+\lambda _2+\lambda _3\). For \(i\in \{1,2,3\}\), let \({\tilde{X}}_i(t)\) be the number of customers in Q\(_i\) at time t and denote by \({\tilde{{\varvec{X}}}}(t)=({\tilde{X}}_1(t),{\tilde{X}}_2(t),{\tilde{X}}_3(t))\) the vector of them. Let \({\tilde{J}}(t)\) be the server state indicating which customer is served at time t. Then, \(\{{\tilde{{\varvec{Y}}}}(t)\}=\{({\tilde{{\varvec{X}}}}(t),{\tilde{J}}(t))\}\) becomes a continuous-time three-dimensional QBD process. Let \(S_0\) be the set of server states, which is given as \(S_0=\{1,2,\ldots ,K,K+1,K+2\}\). When \({\tilde{{\varvec{X}}}}(t)>{\mathbf {0}}\), \({\tilde{J}}(t)=1\) means that the server is serving a customer in Q\(_1\) and \({\tilde{J}}(t)=2\) that it is serving a customer in Q\(_2\); for \(j\ge 3\), \({\tilde{J}}(t)=j\) means that it is serving the \((j-2)\)-th customer in Q\(_3\) on a visit to that queue. The nonzero transition rate blocks of \(\{{\tilde{{\varvec{Y}}}}(t)\}\) when \({\tilde{{\varvec{X}}}}(t)>{\mathbf {0}}\) are given as follows:

Let \(\{{\varvec{Y}}(t)\}=\{({\varvec{X}}(t),J(t))\}\) be a continuous-time three-dimensional MMRW on the state space \({\mathbb {Z}}^3\times S_0\), having \({\tilde{A}}_{{\varvec{i}}},\,{\varvec{i}}\in \{-1,0,1\}^3\), as the transition rate blocks. Let \(\{{\varvec{Y}}_n\}=\{({\varvec{X}}_n,J_n)\}\) be a discrete-time three-dimensional MMRW on the state space \({\mathbb {Z}}^3\times S_0\), generated from \(\{{\varvec{Y}}(t)\}\) by the uniformization technique. The transition probability blocks of \(\{{\varvec{Y}}_n\}\) are given by, for \({\varvec{i}}\in \{-1,0,1\}^3\),

where we set \(\nu =\lambda +\mu _1+\mu _2+\mu _3\). Applying Theorem 5.1 and Corollary 5.1 to this MMRW \(\{{\varvec{Y}}_n\}\), we obtain the asymptotic decay rate of the occupation measure, as described in Tables 1 and 2. In both the tables, the value of K varies from 1 to 20. Table 1 deals with a symmetric case, where all the arrival intensities are set at 0.25 and all the service rates are set at 1. Since Q\(_3\) is served according to K-limited service, the absolute value of the asymptotic decay rate in the case where \(c_3=1\) monotonically increases as the value of K increases. On the other hand, that in the case where \(c_3=0\) does not always vary monotonically, for example, in the case where \({\varvec{c}}=(1,1,0)\), the absolute value of the asymptotic decay rate decreases at first and then it increases. Table 2 deals with an asymmetric case, where the arrival intensity of Q\(_3\) is five times as large as those in Q\(_1\) and Q\(_2\), i.e., \(\mu _1=\mu _2=0.1\) and \(\mu _3=0.5\); all the service rates are set at 1. It can be seen from the table that the absolute values of the asymptotic decay rates for all the direction vectors are nearly balanced when K is greater than 5, which means that the absolute value of the asymptotic decay rate in the case where \({\varvec{c}}=(1,1,0)\) is close to that in the case where \({\varvec{c}}=(1,0,1)\) when K is set at 5; the absolute value of the asymptotic decay rate in the case where \({\varvec{c}}=(1,0,0)\) is close to that in the case where \({\varvec{c}}=(0,0,1)\) when K is set at 10.

6 Concluding remark

Using the results in the paper, we can obtain lower bounds for the asymptotic decay rates of the stationary distribution in a multi-dimensional QBD process. Let \(\{{\tilde{{\varvec{Y}}}}_n\}=\{({\tilde{{\varvec{X}}}}_n,{\tilde{J}}_n)\}\) be a d-dimensional QBD process on the state space \({\mathbb {S}}_+={\mathbb {Z}}_+^d\times S_0\), and assume that the blocks of transition probabilities when \({\tilde{{\varvec{X}}}}_n>{\mathbf {0}}\) are given by \(A_{{\varvec{i}}},{\varvec{i}}\in \{-1,0,1\}^d\). Assume that \(\{{\tilde{{\varvec{Y}}}}_n\}\) is irreducible and positive recurrent and denote by \(\varvec{\nu }=(\nu _{{\varvec{y}}},{\varvec{y}}\in {\mathbb {S}}_+)\) the stationary distribution of the QBD process. Furthermore, assume that the blocks \(A_{{\varvec{i}}},{\varvec{i}}\in \{-1,0,1\}^d,\) satisfy the property corresponding to Assumption 3.1. Then, by Theorem 5.1 and Corollary 5.1, for any vector \({\varvec{c}}\) of nonnegative integers such that \({\varvec{c}}\ne {\mathbf {0}}\) and for every \(j\in S_0\), a lower bound for the asymptotic decay rate of the stationary distribution in the QBD process in the direction specified by \({\varvec{c}}\) is given as follows:

where \(A_*(\varvec{\theta })=\sum _{{\varvec{i}}\in \{-1,0,1\}^d} e^{\langle {\varvec{i}},\varvec{\theta }\rangle } A_{{\varvec{i}}}\). Since the QBD process is a reflected Markov additive process, this inequality is an answer to Conjecture 5.1 of [13] in a case with background states.

References

Bini, D.A., Latouche, G., Meini, B.: Numerical Solution of Structured Markov Chains. Oxford University Press, Oxford (2005)

Bini, D.A., Massei, S., Meini, B., Robol, L.: On quadratic matrix equations with infinite size coefficients encountered in QBD stochastic processes. Numer. Linear Algebra Appl. 25, e2128 (2017)

Borovkov, A.A., Mogul’skiĭ, A.A.: Large deviations for Markov chains in the positive quadrant. Russ. Math. Surv. 56, 803–916 (2001)

Gail, H.R., Hantler, S.L., Taylor, B.A.: Solutions of the basic matrix equation for M/G/1 and G/M/1 type Markov chains. Commun. Stat. Stoch. Models 10(1), 1–43 (1994)

He, Q.-M., Li, H., Zhao, T.Q.: Light-tailed behavior in QBD processes with countably many phases. Stoch. Models 25, 50–75 (2009)

Kijima, M.: Quasi-stationary distributions of single-server phase-type queues. Math. Oper. Res. 18(2), 423–437 (1993)

Kingman, J.F.C.: A convexity property of positive matrixes. Q. J. Math. Oxf. (2) 12, 283–284 (1961)

Kobayashi, M., Miyazawa, M., Zhao, Y.Q.: Tail asymptotics of the occupation measure for a Markov additive process with an \(M/G/1\)-type background process. Stoch. Models 26, 463–486 (2010)

Kobayashi, M., Miyazawa, M.: Tail asymptotics of the stationary distribution of a two-dimensional reflecting random walk with unbounded upward jumps. Adv. Appl. Prob. 46, 365–399 (2014)

Latouche, G., Ramaswami, V.: Introduction to Matrix Analytic Methods in Stochastic Modeling. SIAM, Philadelphia (1999)

Miyazawa, M.: Tail decay rates in double QBD processes and related reflected random walks. Math. Oper. Res. 34(3), 547–575 (2009)

Miyazawa, M.: Light tail asymptotics in multidimensional reflecting processes for queueing networks. TOP 19(2), 233–299 (2011)

Miyazawa, M., Zwart, B.: Wiener-Hopf factorizations for a multidimensional Markov additive process and their applications to reflected processes. Stoch. Syst. 2(1), 67–114 (2012)

Miyazawa, M.: Superharmonic vector for a nonnegative matrix with QBD block structure and its application to a Markov modulated two dimensional reflecting process. Queueing Syst. 81, 1–48 (2015)

Neuts, M.F.: It Matrix-Geometric Solutions in Stochastic Models. Dover Publications, New York (1994)

Neuts, M.F.: Structured Stochastic Matrices of M/G/1 Type and Their Applications. Marcel Dekker, New York (1989)

Ney, P., Nummelin, E.: Markov additive processes I. Eigenvalue properties and limit theorems. Ann. Probab. 15(2), 561–592 (1987)

Ozawa, T.: Asymptotics for the stationary distribution in a discrete-time two-dimensional quasi-birth-and-death process. Queueing Syst. 74, 109–149 (2013)

Ozawa, T., Kobayashi, M.: Exact asymptotic formulae of the stationary distribution of a discrete-time two-dimensional QBD process. Queueing Syst. 90, 351–403 (2018)

Pruitt, W.E.: Eigenvalues of non-negative matrices. Ann. Math. Stat. 35, 1797–1800 (1964)

Seneta, E.: Non-negative Matrices and Markov Chains, Revised Printing. Springer, New York (2006)

Takahashi, Y., Fujimoto, K., Makimoto, N.: Geometric decay of the steady-state probabilities in a quasi-birth-and-death process with a countable number of phases. Stoch. Models 17(1), 1–24 (2001)

Tweedie, R.L.: Operator-geometric stationary distributions for Markov chains with applications to queueing models. Adv. Appl. Probab. 14, 368–391 (1982)

Acknowledgements

The author is grateful to anonymous referees for their valuable comments and suggestions to improve the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Convexity of the reciprocal of a convergence parameter

Let n be a positive integer and \({\varvec{x}}=(x_1,x_2,\ldots ,x_n)\in {\mathbb {R}}^n\). We say that a positive function \(f({\varvec{x}})\) is log-convex in \({\varvec{x}}\) if \(\log f({\varvec{x}})\) is convex in \({\varvec{x}}\), and denote by \({\mathfrak {S}}_n\) the class of all log-convex functions of n variables, together with the function identically zero. Note that, \({\mathfrak {S}}_n\) is closed under addition, multiplication, raising to any positive power, and the “\(\limsup \)” operation. Furthermore, a log-convex function is a convex function.

Let \(F({\varvec{x}})=(f_{ij}({\varvec{x}}),i,j\in {\mathbb {Z}}_+)\) be a matrix function each of whose elements belongs to the class \({\mathfrak {S}}_n\), i.e., for every \(i,j\in {\mathbb {Z}}_+\), \(f_{i,j}\in {\mathfrak {S}}_n\). In [7], it has been proved that when \(n=1\) and F(x) is a square matrix of a finite dimension, the maximum eigenvalue of F(x) is a log-convex function in x. Analogously, we obtain the following lemma.

Lemma A.1

For every \({\varvec{x}}\in {\mathbb {R}}^n\), assume that all powers of \(F({\varvec{x}})\) are finite elementwise and \(F({\varvec{x}})\) is irreducible. Then, the reciprocal of the convergence parameter of \(F({\varvec{x}})\), \(\mathrm{cp}(F({\varvec{x}}))^{-1}\), is log-convex in \({\varvec{x}}\) or identically zero.

Proof