Abstract

Markov modulation is versatile in generalization for making a simple stochastic model, which is often analytically tractable, more flexible in application. In this spirit, we modulate a two-dimensional reflecting skip-free random walk in such a way that its state transitions in the boundary faces and interior of a nonnegative integer quadrant are controlled by Markov chains. This Markov-modulated model is referred to as a 2d-QBD process according to Ozawa (Queueing Syst 74:109–149, 2013). We are interested in the tail asymptotics of its stationary distribution, which have been well studied when there is no Markov modulation. Ozawa studied this tail asymptotics problem, but his answer is not analytically tractable. We think this is because Markov modulation is so free to change a model even if the state space for Markov modulation is finite. Thus, some structure, say, extra conditions, would be needed to make the Markov modulation analytically tractable while minimizing its limitation in application. The aim of this paper is to investigate such structure for the tail asymptotic problem. For this, we study the existence of a right-subinvariant positive vector, called a superharmonic vector, of a nonnegative matrix with QBD block structure, where each block matrix is finite dimensional. We characterize this existence under a certain extra assumption. We apply this characterization to the 2d-QBD process and derive the tail decay rates of its marginal stationary distribution in an arbitrary direction. This solves the tail decay rate problem for a two-node generalized Jackson network, which has been open for many years.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Our primary interest is in methodology for deriving the tail asymptotics of the stationary distribution of a Markov-modulated two-dimensional reflecting random walk for queueing network applications, provided it is stable. This process has two components: front and background processes. We assume that the front process is a skip-free reflecting random walk on a nonnegative quarter plane of the lattice, and the background process has finitely many states. We are particularly interested in a two-node generalized Jackson network for its application.

According to Ozawa [36], we assume the following transition structure. The state space of the front process is composed of the inside of the quarter plane and three boundary faces: the origin, and the two half coordinate axes. Within each region, state transitions are homogeneous, that is, subject to a Markov-modulated random walk, but different regions may have different state transitions. Between pairs of the four regions, state transitions may also be different. See Fig. 1 in Sect. 3.1 for details. This Markov-modulated two-dimensional random walk is called a discrete-time 2d-QBD process, 2d-QBD process for short, in [36]. We adopt the same terminology. This process is flexible enough to handle many two-node queueing networks in continuous time through uniformization. The generalized Jackson network is such an example.

For the 2d-QBD process, we assume that it has a stationary distribution, and denote a random vector subject to it by \(({\varvec{L}}, J)\), where \({\varvec{L}}\) represents a random walk component taking values in \(\mathbb {R}_{+}^{2}\) while J represents a background state. For \(i=1,2\), we consider the tail asymptotics by logarithmic ratios of the stationary tail probabilities in the ith coordinate directions:

for each fixed \(\ell \ge 0\) and background state k, and those of the marginal stationary distribution in an arbitrary direction \({\varvec{c}} \equiv (c_{1}, c_{2})\):

It will be shown that those ratios converge to constants (Theorems 3.2, 3.3). They are negative, and their absolute values are called exponential decay rates. We demonstrate those tail asymptotic results for a two-node generalized Jackson network with Markov-modulated arrivals and phase-type service time distributions. This solves a problem which has been open for many years (see Sect. 4.2 for details).

Ozawa [36] studied the tail asymptotics in the coordinate directions, including (1.1). He showed that the method for a two-dimensional reflecting random walk studied by Miyazawa [27] is applicable with help of invariant vectors obtained by Li and Zhao [23]. We refer to this method as a QBD approach, which is composed of the following three key steps:

-

(1)

Formulate the 2d-QBD process as a one-dimensional QBD process with infinitely many background states, where one of the coordinate axes is taken as a level.

-

(2)

Find right- and left-invariant vectors of a nonnegative matrix with QBD block structure, which will be introduced shortly, and get upper and lower bounds of the tail decay rates.

-

(3)

Derive the tail decay rates, combining those results in the two directions.

Here, an infinite-dimensional square matrix is said to have QBD block structure if it is partitioned into blocks in such a way that each block is a square matrix of the same size except for the first row and first column blocks, the whole matrix is block tridiagonal, and each row of blocks is repeated and shifted except for the first two rows (see (2.4) for its definite form). In step (1), the blocks for the one-dimensional QBD are infinite dimensional, while, in step (2), those for the nonnegative matrix are finite dimensional.

A hard part of this QBD approach is in step (2). In [36] the invariant vectors are only obtained by numerically solving certain parametrized equations over a certain region of parameters. This much degrades applicability of the tail asymptotic results. For example, it is hard to get useful information from them for the tail asymptotics in the two-node generalized Jackson network (see, for example, [12, 15]). We think that this analytic intractability cannot be avoided because no structural condition is assumed for the Markov modulation. In applications, it may have certain structure. Thus, it is interesting to find conditions for the invariant vectors to be analytically tractable while minimizing limitations in application.

Another problem in [36] is complicated descriptions. They cannot be avoided because of the complicated modeling structure, but we easily get lost in computations. We think here simplification or certain abstraction is needed.

In addition to those two problems, the QBD approach is not so useful for studying the tail asymptotics in an arbitrary direction. For this, it is known that the stationary inequalities in terms of moment generating functions are useful in the case where there is no Markov modulation (for example, see [19, 28]). It is interesting to see whether this moment-generating function approach still works under Markov modulation.

We attack those three problems in this paper. We first consider the description problem and find a simpler matrix representation for a nonnegative matrix with QBD block structure. This representation is referred to as a canonical form. We then consider the problem in step (2).

For this, we relax the problem by considering a right-subinvariant positive vector, which is said to be superharmonic, instead of a right-invariant positive vector, which is said to be harmonic. It is known that the existence of a right-subinvariant vector is equivalent to that of a left-subinvariant nonnegative vector (for example, see [40]). When a nonnegative matrix is stochastic, a right-subinvariant vector can be viewed as a superharmonic function. Because of this fact, we use the terminology superharmonic vector. In the stochastic case, it obviously exists. When the matrix is substochastic and does not have the boundary blocks, this problem has been considered in studying a quasi-stationary distribution for QBD processes (see, for example, [17, 22, 23]).

In step (2), we do not assume any stochastic or substochastic condition for a nonnegative matrix with QBD block structure, which is crucial in our applications. As we will see, we can find necessary and sufficient conditions for such a matrix to have a superharmonic vector (see Theorem 2.1). The sufficiency is essentially due to Li and Zhao [23] and related to Bean et al. [2] (see Remarks 2.1, 2.2). However, this characterization is not useful in application as we already discussed. So, we will assume a certain extra condition to make our answer tractable. Under this extra assumption, we characterize the existence of a superharmonic vector using primitive data on the block matrices (Theorem 2.2).

This characterization enables us to derive the tail asymptotics of the stationary distribution in the coordinate directions for the 2d-QBD process. For the problem of the tail asymptotics in an arbitrary direction, we show that the moment-generating function approach can be extended for the Markov-modulated case. For this, we introduce a canonical form for the Markov-modulated two-dimensional random walk, which is similar to that for a nonnegative matrix with QBD block structure.

There has been a lot of work on tail asymptotic problems in queueing networks (see, for example, [28] and references therein). Most studies focus on two-dimensional reflecting processes or two-node queueing networks. The 2d-QBD process belongs to this class of models but allows them to have background processes with finitely many states. There is a huge gap between finite and infinite numbers of background states, but we hope that the present results will stimulate the study of higher dimensional tail asymptotic problems.

This paper is made up of five sections and appendices. Section 2 derives necessary and sufficient conditions for a nonnegative matrix with QBD block structure to have a right-subinvariant vector with and without extra assumptions. This result is applied to the 2d-QBD process, and the tail decay rates of its stationary distribution are derived in Sect. 3. The tail decay rates of the marginal stationary distribution in an arbitrary direction are obtained for the generalized Jackson network in Sect. 4. We finally give some concluding remarks in Sect. 5.

We summarize basic notation which will be used in this paper (Table 1).

For nonnegative square matrices \(T, T_{i}, T_{ij}\) with indices \(i, j \in \mathbb {Z}\) such that \(T_{i}\) and \(T_{ij}\) are null matrices except for finitely many i and j, we will use the following conventions (Table 2).

Here, the sizes of those matrices must be the same among those with the same type of indices, but they may be infinite. We also will use those matrices and related notation when the off-diagonal entries of \(T, T_{i}, T_{ij}\) are nonnegative.

2 Nonnegative matrices and QBD block structure

Let K be a nonnegative square matrix with infinite dimension. Throughout this section, we assume the following regularity condition:

-

(2a)

K is irreducible, that is, for each entry (i, j) of K, there is some \(n \ge 1\) such that the (i, j) entry of \(K^{n}\) is positive.

2.1 Superharmonic vector

In this subsection, we do not assume any assumption other than (2a) and introduce some basic notions. A positive column vector \({\varvec{y}}\) satisfying

is called a superharmonic vector of K, where the inequality of vectors is entry-wise. The condition (2.1) is equivalent to the existence of a positive row vector \({\varvec{x}}\) satisfying \({\varvec{x}} K \le {\varvec{x}}\). This \({\varvec{x}}\) is called a subinvariant vector. Instead of (2.1), if, for \(u > 0\),

then \({\varvec{y}}\) is called a u-superharmonic vector. We will not consider this vector, but most of our arguments are parallel to those for a superharmonic vector because \({\varvec{y}}\) of (2.2) is superharmonic for uK.

These conditions can be given in terms of the convergence parameter \(c_{p}(K)\) of K (see Table 2 for its definition). As shown in Chap. 5 of the book of Nummelin [34] (see also Chap. 6 of [38]),

or equivalently \(c_{p}(K) = \sup \{ u\ge 0; u {\varvec{x}} K \le {\varvec{x}} \text{ for } \text{ some } {\varvec{x}} > 0 \}\). Applying this fact to K, we have the following lemma. For completeness, we give its proof.

Lemma 2.1

A nonnegative matrix K satisfying (2a) has a superharmonic vector if and only if \(c_{p}(K) \ge 1\).

Proof

If K has a superharmonic vector, then we obviously have \(c_{p}(K) \ge 1\) by (2.3). Conversely, suppose \(c_{p}(K) \ge 1\). Then, by (2.3), for any \(u < 1\), we can find a positive vector \({\varvec{y}}(u)\) such that \(y_0(u) = 1\), all entries of y(u) are bounded by 1, and

Taking the limit infimum of both sides of the above inequality, and letting \({\varvec{y}} = \liminf _{u \uparrow 1} {\varvec{y}}(u)\), we have (2.1). Thus, K indeed has a superharmonic vector \({\varvec{y}}\). \(\square \)

The importance of Condition (2.1) lies in the fact that \(\Delta _{{\varvec{y}}}^{-1} K \Delta _{{\varvec{y}}}\) is substochastic, that is, K can be essentially considered as a substochastic matrix. This enables us to use probabilistic arguments for manipulating K in computations.

2.2 QBD block structure and its canonical form

We now assume further structure for K. Let \(m_{0}\) and m be arbitrarily given positive integers. Let \(A_{i}\) and \(B_{i}\) for \(i=-1,0,1\) be nonnegative matrices such that \(A_{i}\) for \(i=-1,0,1\) are \(m \times m\) matrices, \(B_{-1}\) is an \(m_{0} \times m\) matrix, \(B_{0}\) is an \(m_{0} \times m_{0}\) matrix and \(B_{1}\) is \(m \times m_{0}\) matrix. We assume that K has the following form:

If K is stochastic, then it is the transition matrix of a discrete-time QBD process. Thus, we refer to K of (2.4) as a nonnegative matrix with QBD block structure.

As we discussed in Sect. 1, we are primarily interested in tractable conditions for K to have a superharmonic vector. Denote this vector by \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\). That is, \({\varvec{y}}\) is positive and satisfies the following inequalities:

Although the QBD block structure is natural in applications, there are two extra equations (2.5) and (2.6) which involve the boundary blocks \(B_{i}\). Let us consider how to reduce them to one equation. From (2.5), \(B_{0}{\varvec{y}}_{0} \le {\varvec{y}}_{0}\) and \(B_{0}{\varvec{y}}_{0} \ne {\varvec{y}}_{0}\). Hence, if \(c_{p}(B_{0}) = 1\), then \({\varvec{y}}_{0}\) must be the left-invariant vector of \(B_{0}\) (see Theorem 6.2 of [38]), but this is impossible because \({\varvec{y}}_{1} > {\varvec{0}}\). Thus, we must have \(c_{p}(B_{0}) > 1\), and therefore \((I - B_{0})^{-1}\) is finite (see our convention (Table 2) for this inverse). Let

This suggests that we should define a matrix \(\overline{K}\) as

where \(C_{0}\) is defined by (2.8). Denote the principal matrix of K (equivalently, \(\overline{K}\)) obtained by removing the first row and column blocks by \(K_{+}\). Namely,

Lemma 2.2

(a) K has a superharmonic vector if and only if \(\overline{K}\) has a superharmonic vector. (b) \(\max (c_{p}(K), c_{p}(\overline{K})) \le c_{p}(K_{+})\). (c) If \(c_{p}(K) \ge 1\), then \(c_{p}(K) \le c_{p}(\overline{K}) \le c_{p}(K_{+})\).

Remark 2.1

A similar result for K and \(K_{+}\) is obtained in Bean et al. [2].

Proof

Assume that K has a superharmonic vector \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\). Then, we have seen that \(c_{p}(B_{0}) > 1\), and therefore \((I-B_{0})^{-1} < \infty \). Define \(\overline{{\varvec{y}}} \equiv (\overline{{\varvec{y}}}_{0}, \overline{{\varvec{y}}}_{1}, \ldots )^{\textsc {t}}\) by \(\overline{{\varvec{y}}}_{n} = {\varvec{y}}_{n+1}\) for \(n \ge 0\), and define \(C_{0}\) by (2.8). Then, from (2.9) , we have

This and (2.7) verify that \(\overline{{\varvec{y}}}\) is superharmonic for \(\overline{K}\). For the converse assume that \(\overline{K}\) is well defined and \(\overline{{\varvec{y}}} \equiv (\overline{{\varvec{y}}}_{0}, \overline{{\varvec{y}}}_{1}, \ldots )^{\textsc {t}}\) is superharmonic for \(\overline{K}\). Obviously, the finiteness of \(\overline{K}\) implies that \(B_{-1} (I - B_{0})^{-1} B_{1}\) is finite. Suppose that \(c_{p}(B_{0}) \le 1\). Then some principal submatrix of \((I - B_{0})^{-1}\) has divergent entries in every row and column of this submatrix. Denote a collection of all such principal matrices which are maximal in their size by \(\mathcal{P}_{0}\). Then, for all entries (i, j) of submatrices in \(\mathcal{P}_{0}\), we must have, for all \(n \ge 0\),

because of the finiteness of \(B_{-1} (I - B_{0})^{-1} B_{1}\). This contradicts the irreducibility (2a) of K. Hence, we have \(c_{p}(B_{0}) > 1\). Define \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\) as

where \((I - B_{0})^{-1} < \infty \) because of \(c_{p}(B_{0}) > 1\). Then, from (2.8), we have

and therefore the fact that \(C_{0} \overline{{\varvec{y}}}_{0} + A_{1} \overline{{\varvec{y}_{1}}} \le \overline{{\varvec{y}}}_{0}\) implies (2.6). Finally, the definition of \({\varvec{y}}_{0}\) implies (2.5) with equality, while the definition of \({\varvec{y}}_{n}\) for \(n \ge 1\) implies (2.7). Hence, \({\varvec{y}}\) is superharmonic for K. This proves (a). (b) is immediate from (2.3) since \(({\varvec{y}}_{1}, \ldots )^{\textsc {t}}\) is superharmonic for \(K_{+}\) if \(({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\) is superharmonic for K (or \(\overline{K}\)). For (c), recall that the canonical form of uK is denoted by \(\overline{uK}\) for \(u > 0\). If \(u \ge 1\), we can see that \(u \overline{K} \le \overline{uK}\), and therefore \(c_{p}(K) \le c_{p}(\overline{K})\). This and (b) conclude (c). \(\square \)

By this lemma, we can work on \(\overline{K}\) instead of K to find a superharmonic vector. It is notable that all block matrices of \(\overline{K}\) are \(m \times m\) square matrices and it has repeated row and column structure except for the first row and first column blocks. This greatly simplifies computations. We refer to \(\overline{K}\) as the canonical form of K.

In what follows, we will mainly work on the canonical form \(\overline{K}\) of K. For simplicity, we will use \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\) for a superharmonic vector of \(\overline{K}\).

2.3 Existence of a superharmonic vector

Suppose that \(\overline{K}\) of (2.10) has a superharmonic vector \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )^{\textsc {t}}\). That is,

In this section, we consider conditions for the existence of a superharmonic vector.

Letting \(C_{1} = A_{1}\), we recall matrix moment-generating functions for \(\{A_{i}\}\) and \(\{C_{i}\}\) (see Table 2):

From now on, we always assume a further irreducibility in addition to (2a).

-

(2b)

\(A_{*}(0)\) is irreducible.

Since \(A_{*}(\theta )\) and \(C_{*}(\theta )\) are nonnegative and finite-dimensional square matrices, they have Perron–Frobenius eigenvalues \(\gamma ^{(A_{*})}(\theta ) (\equiv \gamma _{\textsc {pf}}(A_{*}(\theta )))\) and \(\gamma ^{(C_{*})}(\theta ) (\equiv \gamma _{\textsc {pf}}(C_{*}(\theta )))\), respectively, and their right eigenvectors \({\varvec{h}}^{(A_{*})}(\theta )\) and \({\varvec{h}}^{(C_{*})}(\theta )\), respectively. That is,

where \(C_{*}(\theta )\) may not be irreducible, so we take a maximal eigenvalue among those which have positive right-invariant vectors. Thus, \({\varvec{h}}^{(A_{*})}(\theta )\) is positive, but \({\varvec{h}}^{(C_{*})}(\theta )\) is nonnegative with possibly zero entries. These eigenvectors are unique up to constant multipliers.

It is well known that \(\gamma ^{(A_{*})}(\theta )\) and \(\gamma ^{(C_{*})}(\theta )\) are convex functions of \(\theta \) (see, for example, Lemma 3.7 of [32]). Furthermore, their reciprocals are the convergence parameters of \(A_{*}(\theta )\) and \(C_{*}(\theta )\), respectively. It follows from the convexity of \(\gamma ^{(A_{*})}(\theta )\) and the fact that some entries of \(A_{*}(\theta )\) diverge as \(|\theta | \rightarrow \infty \) that

We introduce the following notation:

where \(\Gamma ^{(1d)}_{0} \not = \emptyset \) implies that \(C_{0}\) is finite, that is, \(c_{p}(B_{0}) > 1\). By (2.16), \(\Gamma ^{(1d)}_{+}\) is a bounded interval or the empty set.

In our arguments, we often change the repeated row of blocks of K and \(\overline{K}\) so that they are substochastic. For this, we introduce the following notation. For each \(\theta \in \mathbb {R}\) and \({\varvec{h}}^{(A_{*})}(\theta )\) determined by (2.14), let

where we recall that \(\Delta _{{\varvec{a}}}\) is the diagonal matrix whose diagonal entry is given by the same dimensional vector \({\varvec{a}}\). Let

Note that \({\widehat{A}}^{(\theta )} = \Delta _{{\varvec{h}}^{(A_{*})}(\theta )}^{-1} A_{*}(\theta ) \Delta _{{\varvec{h}}^{(A_{*})}(\theta )}\), and therefore \({\widehat{A}}^{(\theta )} {\varvec{1}} = \gamma ^{(A_{*})}(\theta ) {\varvec{1}}\).

The following lemma is the first step in characterizing \(c_{p}(K) \ge 1\).

Lemma 2.3

(a) \(c_{p}(K_{+}) \ge 1\) if and only if \(\Gamma ^{(1d)}_{+} \ne \emptyset \), and therefore \(c_{p}(K) \ge 1\) implies \(\Gamma ^{(1d)}_{+} \ne \emptyset \). (b) \(c_{p}(K_{+}) = (\min \{\gamma ^{A_{*}}(\theta ); \theta \in \mathbb {R}\})^{-1}\).

This lemma may be considered to be a straightforward extension of Theorem 2.1 of Kijima [17] from a substochastic to a nonnegative matrix. So, it may be proved similarly, but we give a different proof in Appendix 1. There are two reasons for this. First, it makes this paper self-contained. Second, we wish to demonstrate that it is hard to remove the finiteness of m on block matrices.

We now present necessary and sufficient conditions for \(\overline{K}\), equivalently K, to have a superharmonic vector.

Theorem 2.1

(a) If \(\Gamma ^{(1d)}_{+} \ne \emptyset \), then \(N \equiv (I-K_{+})^{-1}\) is finite, and therefore \(G_{-} \equiv N_{11} A_{-1}\) is well defined and finite, where \(N_{11}\) is the (1, 1)-entry of N. (b) \(c_{p}(\overline{K}) \ge 1\) holds if and only if \(\Gamma ^{(1d)}_{+} \ne \emptyset \) and

If equality holds in (2.17) then \(c_{p}(\overline{K}) = 1\).

Remark 2.2

The sufficiency in (b) is essentially obtained in Theorem 6 of [23], which is used in Sects. 3.4 and 3.5 of [36], where the eigenvalue \(\gamma _{\textsc {pf}}(C_{0} + A_{1} G_{-})\) corresponds to \(u_{0}(1)\) in [23]. We do not need the function \(u_{0}(\beta )\) there because we work on a nonnegative matrix, while substochasticity is assumed in [23].

Proof

(a) Assume that \(\theta \in \Gamma ^{(1d)}_{+} \ne \emptyset \). Then we can find a \(\theta _{1}\) such that \(\theta _{1} = \arg _{\theta } \min \{\theta \in \mathbb {R}; \gamma ^{A_{*}}(\theta ) = 1\}\). For this \(\theta _{1}\), let \({\varvec{y}}(\theta _{1}) = ({\varvec{h}}^{(A_{*})}(\theta _{1}), e^{\theta _{1}} {\varvec{h}}^{(A_{*})}(\theta _{1}), \ldots )^{\textsc {t}}\), and let

It is easy to see that \(\breve{K}_{+}^{(\theta _{1})}\) is strictly substochastic because the first row of blocks is defective. Hence, \((1-\breve{K}_{+}^{(\theta _{1})})^{-1}\) must be finite, and therefore \((I-K_{+})^{-1}\) is also finite. This proves (a).

(b) Assume that \(c_{p}(\overline{K}) \ge 1\). Then \(\Gamma ^{(1d)}_{+} \ne \emptyset \) by Lemma 2.3. Hence, \((I-K_{+})^{-1}\) is finite by (a). Because of \(c_{p}(\overline{K}) \ge 1\), \(\overline{K}\) has a superharmonic vector. We denote this vector by \({\varvec{y}} = \{{\varvec{y}}_{n}; n=0,1,\ldots \}^{\textsc {t}}\). Let \({\varvec{z}} = \{{\varvec{y}}_{n}; n=1,2,\ldots \}^{\textsc {t}}\). Then we have

It follows from the second equation that \( [(I - K_{+})^{-1}]_{11} A_{-1} {\varvec{y}}_{0} \le {\varvec{y}}_{1}\). Hence, substituting this into (2.18), we have

which is equivalent to (2.17). Conversely, assume (2.17) and \(\Gamma ^{(1d)}_{+} \ne \emptyset \). Then we have (2.20) for some \({\varvec{y}}_{0} > {\varvec{0}}\). Define \({\varvec{z}}\) as

Then we get (2.19) with equality, and (2.20) yields (2.18). Thus, we get the superharmonic vector \(({\varvec{y}}_{0}, {\varvec{z}})^{\textsc {t}}\) for \(\overline{K}\). This completes the proof. \(\square \)

Using the notation in the above proof, let \(\breve{N}^{(\theta _{1})} = (1-\breve{K}_{+}^{(\theta _{1})})^{-1}\), and let

Then \({\widehat{G}}^{(\theta _{1})}_{-}\) must be stochastic because it is a transition matrix for the background state when the random walk component hits one level down. Furthermore,

Hence, for \(m=1\), \(e^{-\theta _{1}} G_{-} = 1\), and therefore (2.17) is equivalent to

which agrees with \(\gamma ^{(C_{*})}(\theta _{1}) \le 1\). Hence, we have the following result.

Corollary 2.1

For \(m=1\), \(c_{p}(K) \ge 1\) if and only if \(\Gamma ^{(1d)}_{+} \cap \Gamma ^{(1d)}_{0} \ne \emptyset \).

This corollary is essentially the same as Theorem 3.1 of [27], so nothing is new technically. Here, we have an alternative proof. However, it is notable that K may have boundary blocks whose size is \(m_{0} \ge 1\) while \(m=1\).

For \(m \ge 2\), Theorem 2.1 is not so useful in application because it is hard to evaluate \(G_{-}\), and therefore, it is hard to verify (2.17). Ozawa [36] proposes computing the corresponding characteristics numerically. However, in its application for the 2d-QBD process, \(G_{-}\) is parametrized, and we need to compute it for some range of parameters. Thus, even numerical computations are intractable.

One may wonder how to replace (2.17) by a tractable condition. In view of the case of \(m=1\), one possible condition is that \(\gamma ^{(C_{*})}(\theta ) \le 1\) for some \(\theta \in \Gamma ^{(1d)}_{+}\), which is equivalent to (2.21) for general m. However, \(\gamma _{\textsc {pf}}\left( C_{0} + e^{\theta _{1}} A_{1}\right) \), which equals \(\gamma ^{(C_{*})}(\theta )\), is generally not identical to \(\gamma _{\textsc {pf}}\left( C_{0} + A_{1} G_{-} \right) \) (see Appendix 3). So far, we will not pursue the use of Theorem 2.1.

2.4 A tractable condition for application

We have considered conditions for \(c_{p}(K) \ge 1\) or, equivalently, \(c_{p}(\overline{K}) \ge 1\). For this problem, we here consider a specific superharmonic vector for \(\overline{K}\). For each \(\theta \in \mathbb {R}\) and \({\varvec{h}} \ge {\varvec{0}}\), define \({\varvec{y}}(\theta ) \equiv ({\varvec{y}}_{0}(\theta ), {\varvec{y}}_{1}(\theta ), \ldots )^{\textsc {t}}\) by

Then, \(\overline{K} {\varvec{y}}(\theta ) \le {\varvec{y}}(\theta )\) holds if and only if

These conditions hold for \({\varvec{y}}(\theta )\) of (2.22), so we only know that they are sufficient but may not be necessary. To fill this gap, we go back to K and consider its superharmonic vector, using (2.22) for off-boundary blocks. This suggests that we should replace (2.24) by the following assumption.

Assumption 2.1

For each \(\theta \in \Gamma ^{(1d)}_{+}\), there is an \(m_{0}\)-dimensional positive vector \({\varvec{h}}^{(0)}(\theta )\) and real numbers \(c_{0}(\theta ), c_{1}(\theta )\) such that one of \(c_{0}(\theta )\) or \(c_{1}(\theta )\) equals one, and

Remark 2.3

If \(c_{0}(\theta ) \le 1\) and \(c_{1}(\theta ) \le 1\), then (2.24) is equivalent to (2.25) and (2.26). However, it is unclear whether or not \(c_{0}(\theta ) = 1\) or \(c_{1}(\theta ) = 1\) implies (2.24). In particular, \(c_{1}(\theta ) = 1\) is the case that we need in our application to the generalized Jackson network. This will be affirmatively answered in Theorem 2.2.

Let

If \(\Gamma ^{(1d)}_{0+} \not = \emptyset \), \(C_{0}\) is finite, and therefore \(c_{p}(B_{0}) > 1\). \(\Gamma ^{(1e)}_{0+}\) is at most a two-point set. Note that \(\Gamma ^{(1d)}_{0+} \subset \Gamma ^{(1d)}_{0} \cap \Gamma ^{(1d)}_{+}\), but \(\Gamma ^{(1d)}_{0+} = \Gamma ^{(1d)}_{0} \cap \Gamma ^{(1d)}_{+}\) may not be true except for \(m=1\). We further note the following facts.

Lemma 2.4

If \(\Gamma ^{(1d)}_{0+} \not = \emptyset \), then \(\Gamma ^{(1d)}_{0+}\) is a bounded convex subset of \(\mathbb {R}\), and it can be written as the closed interval \([\theta ^{(A,C)}_{\min }, \theta ^{(A,C)}_{\max }]\), respectively, where

We prove this lemma in Appendix 2 because it is just technical. Based on these observations, we claim the following fact.

Theorem 2.2

For a nonnegative matrix K with QBD block structure, assume Conditions (2a) and (2b). (a) If \(\Gamma ^{(1d)}_{0+} \ne \emptyset \), then \(c_{p}(K) \ge 1\). (b) Under Assumption 2.1, \(c_{p}(K) \ge 1\) if and only if \(\Gamma ^{(1d)}_{0+} \ne \emptyset \), which can be replaced by \(\Gamma ^{(1e)}_{0+} \ne \emptyset \).

Proof

(a) We already know that \({\varvec{y}}(\theta )\) of (2.22) is a superharmonic vector of \(\overline{K}\) for \(\theta \in \Gamma ^{(1d)}_{0+}\). Thus, Lemma 2.2 implies (a).

(b) The sufficiency of \(\Gamma ^{(1d)}_{0+} \ne \emptyset \) is already proved in (a). To prove its necessity, we first note that \(\Gamma ^{(1e)}_{+}\) is not empty by Lemma 2.3. Hence, there is a \(\theta _{1}\) such that \(\theta _{1} = \min \{\theta \in \mathbb {R}; \gamma ^{(A_{*})}(\theta ) = 1\}\). For this \(\theta _{1}\), we show that (2.24) holds for \({\varvec{h}} = {\varvec{h}}^{(A_{*})}(\theta _{1})\). To facilitate Assumption 2.1, we work on K rather than \(\overline{K}\). Assume that a superharmonic \({\varvec{y}} \equiv ({\varvec{y}}_{0}, {\varvec{y}}_{1}, \ldots )\) exists for K. We define the transition probability matrix \(\breve{P}^{(\theta _{1})} \equiv \{\breve{P}^{(\theta _{1})}_{k\ell }; k, \ell \ge 0\}\) by

where \(\breve{P}^{(\theta _{1})}_{k \ell }\) is the null matrix for \((k,\ell )\) undefined. It is easy to see that \(\breve{P}^{(\theta _{1})}\) is a proper transition matrix with QBD structure by (2.25), (2.26) and \(\gamma ^{(A_{*})}(\theta _{1}) = 1\). Furthermore, as shown in Appendix 1, this random walk has the mean drift (6.2) with \(\theta _{1}\) instead of \(\theta _{0}\). Since the definition of \(\theta _{1}\) implies that \((\gamma ^{(\textsc {A})})'(\theta _{1}) = 0\), this Markov chain is null recurrent.

We next define \(\breve{{\varvec{y}}}^{(\theta _{1})}\) as

Then the 0th row block of \(\breve{P}^{(\theta _{1})} \breve{\varvec{y}}^{(\theta _{1})}\) is

and, similarly, the 1st row block is

Hence, \(K {\varvec{y}} \le {\varvec{y}}\) is equivalent to

where \(I_{0}\) is the \(m_{0}\)-dimensional identity matrix. We now prove that \(c_{0}(\theta _{1}) \le 1\) and \(c_{1}(\theta _{1}) \le 1\) using the assumption that either \(c_{0}(\theta _{1}) = 1\) or \(c_{1}(\theta _{1}) = 1\). First, we assume that \(c_{1}(\theta _{1}) = 1\), and rewrite (2.28) as

where \(I_{+}\) and \(\breve{P}^{(\theta _{1})}_{+}\) are the matrices obtained from I and \(\breve{P}^{(\theta _{1})}\), respectively, by deleting their first row and column blocks. Since \(\breve{P}^{(\theta _{1})}_{+}\) is strictly substochastic, \(I_{+} - \breve{P}^{(\theta _{1})}_{+}\) is invertible. We denote its inversion by U. Then

which yields that

where \(U_{11}\) is the (1, 1) block of U. Since \(\left( \breve{P}^{(\theta _{1})}_{00} + \breve{P}^{(\theta _{1})}_{01} U_{11} \breve{P}^{(\theta _{1})}_{10} \right) \) is a stochastic matrix by the null recurrence of \(\breve{P}^{(\theta _{1})}\), we must have that \(c_{0}(\theta _{1}) \le 1\). The case for \(c_{0}(\theta _{1}) = 1\) is similarly proved. Thus, the proof is completed in view of Remark 2.3. \(\square \)

2.5 The convergence parameter and u-invariant measure

We now turn to consider the invariant measure of K, which will be used in our application. Li and Zhao [23] have shown the existence of such invariant measures for uK for \(u > 0\) when K is substochastic. We will show that their results are easily adapted for a nonnegative matrix. For this, we first classify a nonnegative irreducible matrix T to be transient, null recurrent, or positive recurrent. T is said to be u-transient if

while it is said to be u-recurrent if this sum diverges. For u-recurrent T, there always exists a u-invariant measure, and T is said to be u-positive if the u-invariant measure has a finite total sum. Otherwise, it is said to be u-null. The book of Seneta [38] is a standard reference for these classifications.

Suppose that \(c_{p}(K) \ge 1\). We modify K to be substochastic. For this, recall that \(c_{p}(K) \ge 1\) is equivalent to the existence of a superharmonic vector of K, and that \(\Delta _{{\varvec{a}}}\) is the diagonal matrix whose diagonal entry is given by the vector \({\varvec{a}}\). Define \({\widehat{K}}\) for a superharmonic vector \({\varvec{y}}\) of K by

Then, \({\widehat{K}} {\varvec{1}} \le {\varvec{1}}\), that is, \({\widehat{K}}\) is substochastic. It is also easy to see that, for \(0 < u \le c_{p}(K)\), \({\widehat{{\varvec{x}}}}\) is a u-invariant measure of \({\widehat{K}}\) if and only if \({\widehat{{\varvec{x}}}} \Delta _{{\varvec{y}}}^{-1}\) is a u-invariant measure of K. Furthermore, the classifications for K are equivalent to those for \({\widehat{K}}\). Thus, the results of [23] can be stated in the following form.

Lemma 2.5

(Theorem A of Li and Zhao [23]) For a nonnegative irreducible matrix K with QBD block structure, let \(t = c_{p}(K)\), \(t_{+} = c_{p}(K_{+})\) and assume that \(t \ge 1\). Then, K is classified into either one of the following cases: (a) t-positive if \(t < t_{+}\), (b) t-null or t-transient if \(t = t_{+}\).

Remark 2.4

The t and \(t_{+}\) correspond to \(\alpha \) and \(\overline{\alpha }\) of [23], respectively. In Theorem A of [23], the case (b) is further classified to t-null and t-transient cases, but it requires the Perron–Frobenius eigenvalue of \(t (C_{0} + R(t) A_{-1})\) to be less than 1 for t-transient and to equal 1 for t-null, where R(t) is the minimal nonnegative solution X of the matrix equation:

In general, this eigenvalue is hard to get in closed form, so we will not use this finer classification. Similar but slightly different results are obtained in Theorem 16 of [2].

Lemma 2.6

(Theorems B and C of Li and Zhao [23]) For K satisfying the assumptions of Lemma 2.5, there exist u-invariant measures for \(0 < u \le t \equiv c_{p}(K)\). The form of these invariant measures varies according to three different types: (a1) \(u=t\) for t-recurrent, (a2) \(u = t\) for t-transient, and (b) \(u<t\).

Remark 2.5

By Lemma 2.5, K is t-null for (a1) if and only if \(t = t_{+}\).

3 Application to a 2d-QBD process

In this section we show how Theorem 2.2 can be applied to a tail asymptotic problem. We here consider a 2d-QBD process \(\{Z_{n}\} \equiv \{(L_{1n}, L_{2n}, J_{n})\}\) introduced by Ozawa [36], where \({\varvec{L}}_{n} \equiv (L_{1n}, L_{2n})\) is a random walk component taking values in \(\mathbb {Z}_{+}^{2}\) and \(\{J_{n}\}\) is a background process with finitely many states. It is assumed that \(\{Z_{n}\}\) is a discrete-time Markov chain. The tail decay rates of the stationary distribution of the 2d-QBD process have been studied in [36], but there remain some crucial problems unsolved as we argued in Sect. 1 and will detail in the next subsection. Furthermore, there is some ambiguity in the definition of Ozawa [36]’s 2d-QBD process. Thus, we first reconsider this definition and show that those problems on the tail asymptotics can be well studied using Assumption 2.1 and Theorem 2.2.

3.1 Two-dimensional QBD processes

We will largely change the notation of [36] to make clear assumptions. We partition the state space \(\mathcal{S}\) of \(Z_{n}\) so as to apply Lemma 2.2. Divide the lattice quarter plane \(\mathbb {Z}_{+}^{2}\) into four regions.

where \(\mathcal{U}_{i}\) for \(i=0,1,2\) and \(\mathcal{U}_{+}\) are said to be a boundary face and the interior, respectively. Then, the state space \(\mathcal{S}\) for \(Z_{n}\) is given by

where \(\mathcal{V}_{i}\) are finite sets of numbers such that their cardinality \(|\mathcal{V}_{i}|\) is given by \(m_{0} = |\mathcal{V}_{0}|\), \(m_{1} = |\mathcal{V}_{1}|\), \(m_{2} = |\mathcal{V}_{2}|\), \(m = |\mathcal{V}_{+}|\).

To define the transition probabilities of \(Z_{n}\), we further partition the state space as

On each of those sets, the transition probabilities of \(Z_{n}\) are assumed to be homogeneous. Namely, for \(s, s' \in \mathcal{A} \equiv \{0,1,+\}\), their matrices for background state transitions can be denoted by \(A^{(ss')}_{ij}\) for the transition from \(((\ell ,m),k) \in \mathcal{U}_{ss'}\) to \(((\ell +i,m+j),k') \in \mathcal{S}\). Furthermore, we assume that

Throughout the paper, we denote \(A^{(++)}_{ij}\) by \(A_{ij}\). This greatly simplifies the notation. See Fig. 1 for those partitions of the quarter plane \(\mathbb {Z}_{+}^{2}\) and the transition probability matrices.

Those assumptions on the transition probabilities are essentially the same as those introduced by Ozawa [36], while there is some minor flexibility in our assumption that \(A^{(11)}_{0(-1)}\) (\( A^{(11)}_{(-1)0}\)) may be different from \(A^{(+1)}_{0(-1)}\) (\(A^{(1+)}_{(-1)0}\), respectively), which are identical in [36]. Another difference is in that we have nine families of transition matrices while Ozawa [36] expresses them by four families, \(A^{(s)}_{ij}\) for \(s=0,1,2\), and \(A_{ij}\).

By the homogeneity and independence assumptions, we can define \(Z_{n+1}\) in terms of \(Z_{n}\) and independent increments as

where \({\varvec{X}}^{(ss')}_{n}(k)\) is the increment at time n when the random walk component is on \(\mathcal{U}_{ss'}\) and the background state is k. By the modeling assumption, \({\varvec{X}}^{(ss')}_{n}(k)\) is independent of \(Z_{\ell }\) for \(\ell \le n-1\) and \({\varvec{L}}_{n}\) for given \(s, s'\), and k.

The 2d-QBD process is a natural model for a two-node queueing network in various situations including a Markovian arrival process and phase-type service time distributions. Its stationary distribution is a key characteristic for performance evaluation, but is hard to get. This is even the case for a two-dimensional reflecting random walk, which does not have background states (for example, see [28]). Thus, recent interest has been directed to the tail asymptotics of the stationary distribution.

3.2 Markov additive kernel and stability

Recall that the 2d-QBD process is denoted by \(\{(L_{1n}, L_{2n}, J_{n}); n=0,1,\ldots \}\). To define the one-dimensional QBD process, for \(i =1,2\), let \(L^{(i)}_{n} = L_{in}\) and \({\varvec{J}}^{(i)}_{n} = (L_{(3-i)n}, J_{n})\), representing level and background state at time n, respectively. Thus, \(\{(L^{(i)}_{n}, {\varvec{J}}^{(i)}_{n}); n \ge 0\}\) is a one-dimensional QBD process for \(i=1,2\). Denote its transition matrix by \(P^{(i)}\). For example, \(P^{(1)}\) is given by

where, using \((-j)^{+} = \max (0,-j)\),

We next introduce the Markov additive process by removing the boundary at level 0 of the one-dimensional QBD process generated by \(P^{(1)}\) and denote its transition probability matrix by \(\underline{P}^{(1)}\). That is,

\(\underline{P}^{(2)}\) and \(Q^{(2)}_{i}\)’s are similarly defined, exchanging the coordinates. For \(i=1,2\), let

Then \(Q^{(i)}\) is stochastic. Let \({\varvec{\nu }}^{(i)} \equiv \left\{ {\varvec{\nu }}^{(i)}_{\ell }; \ell =0,1,\ldots \right\} \) be the left-invariant positive vector of \(Q^{(i)}\) when it exists, where \({\varvec{\nu }}^{(i)}_{\ell }\) is an \(m_{0}\)- and m-dimensional vector for \(\ell =0\) and \(\ell \ge 1\), respectively. Define

In order to discuss the stability of the 2d-QBD process, we define the mean drifts \(\mu ^{(i)}_{i}\) for each \(i =1,2\) as

as long as \(Q^{(i)}\) is positive recurrent, where the derivative of a matrix function is taken entry-wise. Let \(A = \sum _{j,k \in \mathbb {H}} A_{jk}\). Since A is stochastic and finite dimensional, it has a stationary distribution. We denote it by the row vector \({\varvec{\nu }}^{(+)}\). Define the mean drifts \(\mu _{1}\) and \(\mu _{2}\) as

Note that if \(\mu _{i} < 0\), then \(Q^{(i)}\) is positive recurrent because \(\mu _{i}\) is the mean drift at off-boundary states of the QBD process generated by \(Q^{(i)}\). We refer to the recent result due to Ozawa [36].

Lemma 3.1

(Theorem 5.1, Remark 5.1 of Ozawa [35]) The 2d-QBD process \(\{Z_{n}\}\) is positive recurrent if any one of the following three conditions holds:

-

(i)

If \(\mu _{1} < 0\) and \(\mu _{2} < 0\), then \(\mu ^{(1)}_{1} < 0\) and \(\mu ^{(2)}_{2} < 0\).

-

(ii)

If \(\mu _{1} \ge 0\) and \(\mu _{2} < 0\), then \(\mu ^{(1)}_{1} < 0\).

-

(iii)

If \(\mu _{1} < 0\) and \(\mu _{2} \ge 0\), then \(\mu ^{(2)}_{2} < 0\).

On the other hand, if \(\mu _{1} > 0\) and \(\mu _{2} > 0\), then the 2d-QBD process is transient. Hence, if \((\mu _{1}, \mu _{2}) \ne {\varvec{0}}\), then \(\{Z_{n}\}\) is positive recurrent if and only if one of the conditions (i)–(iii) holds.

Remark 3.1

The stability conditions of this lemma are exactly the same as those of the two-dimensional reflecting random walk on the lattice quarter plane of [10], which is called a double QBD process in [27] (see also [19]). This is not surprising because the stability is generally determined by the mean drifts of so-called induced Markov chains, which are generated by removing one of the boundary faces. However, its proof requires careful mathematical arguments, which have been done by Ozawa [36].

Throughout the paper, we assume that the 2d-QBD process has a stationary distribution, which is denoted by the row vector \({\varvec{\pi }} \equiv \{\pi ({\varvec{z}},k); ({\varvec{z}},k) \in \mathcal{S}\}\). Lemma 3.1 can be used to verify this stability assumption. However, it is not so useful in application because the signs of \(\mu ^{(1)}_{1}\) and \(\mu ^{(2)}_{2}\) are hard to get. Thus, we will not use Lemma 3.1 in our arguments. We will return to this issue later.

3.3 Tail asymptotics for the stationary distribution

Ozawa [36] studies the tail asymptotics of the stationary distribution of the 2d-QBD process in coordinate directions, assuming stability and some additional assumptions. His arguments are based on the sufficiency part of Theorem 2.1. As discussed at the end of Sect. 2.3, this is intractable for applications. We will consider the problem in a different way. In the first part of this section we derive upper and lower bounds for the tail decay rates using relatively easy conditions. We then assume an extra condition similar to Assumption 2.1, and derive the tail decay rate of the marginal stationary distribution in an arbitrary direction.

To describe the modeling primitives, we will use the following matrix moment-generating functions.

Similarly, \(A_{j*}(\theta _{2}), A_{j+}(\theta _{2}), A^{(1+)}_{(-1)*}(\theta _{2}), A^{(1+)}_{j+}(\theta _{2}), A^{(0+)}_{j*}(\theta _{2})\), and \(A^{(0+)}_{j+}(\theta _{2})\) are defined. Thus, we have many matrix moment-generating functions, but they are generated by the simple rule that subscripts \(*\) and \(+\) indicate taking the sums for indices in \(\mathbb {H} \equiv \{0, \pm 1\}\) and \(\{0,1\}\), respectively.

Similar to \(C_{0}\) of (2.15), we define the \(m \times m\) matrix generating functions:

where, for \(i=1,2\), \(c_{p}(A^{(i)}_{*0}(\theta _{i})) > 1\) is assumed as long as \(C^{(i)}_{**}({\varvec{\theta }})\) is used.

Similar to \(\Gamma ^{(1d)}_{0+}\) and \(\theta ^{(A,C)}_{\max }\) of (2.27), let, for \(i=1,2\),

We further need the following notation:

Recall that the Perron–Frobenius eigenvalue of \(A_{**}({\varvec{\theta }})\) is denoted by \(\gamma ^{(A_{**})}({\varvec{\theta }})\), which is finite because \(A_{**}({\varvec{\theta }})\) is a finite-dimensional matrix. Obviously, we have

We now define key points for \(i = 1,2\).

Using these points, we define the vector \({\varvec{\tau }}\) by

Note that \(\tau _{i}\) is finite because \(\Gamma ^{(2d)}_{i+}\) is a bounded set. It is notable that, in the definitions (3.7), the condition that \(A_{**}({\varvec{\theta }}) {\varvec{h}} \le {\varvec{h}}\) can be replaced by \(A_{**}({\varvec{\theta }}) {\varvec{h}} = {\varvec{h}}\) or, equivalently, \(\gamma ^{A_{**}}({\varvec{\theta }}) = 1\).

For \(i = 1,2\), define the function \(\overline{\xi }^{(i)}(\theta _{3-i})\) for \({\varvec{\theta }} = (\theta _{1}, \theta _{2})\) as

Obviously, \(\overline{\xi }^{(i)}(x)\) is a convex function because \(\Gamma ^{(2d)}_{i+}\) is a bounded set.

As in [18, 19], it is convenient to introduce the following classifications for \({\varvec{\tau }} \equiv (\tau _{1}, \tau _{2})\).

-

(Category I)

\(\theta ^{(1,{\Gamma })}_{2} < \theta ^{(2,{\Gamma })}_{2}\) and \(\theta ^{(2,{\Gamma })}_{1} < \theta ^{(1,{\Gamma })}_{1}\), for which \({\varvec{\tau }} = \left( \theta ^{(1,{\Gamma })}_{1}, \theta ^{(2,{\Gamma })}_{2}\right) \).

-

(Category II-1)

\(\theta ^{(1,{\Gamma })}_{2} \ge \theta ^{(2,{\Gamma })}_{2}\) and \(\theta ^{(2,{\Gamma })}_{1} < \theta ^{(1,{\Gamma })}_{1}\), for which \({\varvec{\tau }} =\, \left( \overline{\xi }^{(1)}(\theta ^{(2,{\Gamma })}_{2}), \theta ^{(2,{\Gamma })}_{2}\right) \).

-

(Category II-2)

\(\theta ^{(1,{\Gamma })}_{2} < \theta ^{(2,{\Gamma })}_{2}\) and \(\theta ^{(2,{\Gamma })}_{1} \ge \theta ^{(1,{\Gamma })}_{1}\), for which \({\varvec{\tau }} =\, \left( \theta ^{(1,{\Gamma })}_{1}, \overline{\xi }^{(2)}(\theta ^{(1,{\Gamma })}_{1})\right) \).

Since it is impossible that \(\theta ^{(1,{\Gamma })}_{2} \ge \theta ^{(2,{\Gamma })}_{2}\) and \(\theta ^{(2,{\Gamma })}_{1} \ge \theta ^{(1,{\Gamma })}_{1}\), these three categories completely cover all cases (for example, see Sect. 4 of [27]). These categories are crucial in our arguments as we shall see in Theorem 3.2 below.

We first derive upper bounds. Let \(\varphi \) be the moment-generating function of \({\varvec{L}}\). Namely, \(\varphi ({\varvec{\theta }}) = \mathbb {E}(e^{\langle {\varvec{L}}, {\varvec{\theta }} \rangle })\). Define its convergence domain as

We prove the following lemma in Appendix 4.

Lemma 3.2

Under the stability assumption,

Using this lemma, the following upper bound is obtained.

Theorem 3.1

Under the stability condition, we have, for each nonzero vector \({\varvec{c}} \ge {\varvec{0}}\),

This theorem is proved in Appendix 4. We next derive lower bounds. We first consider lower bounds concerning the random walk component in an arbitrary direction. For this, we consider the two-dimensional random walk modulated by \(\{A_{jk}; j,k \in \mathbb {H}\}\), which is denoted by \(\{({\varvec{Y}}_{n}, J_{n}); n \ge 1\}\). Similar to Lemma 7 of [19], we have the following fact, which is proved in Appendix 5.

Lemma 3.3

For each nonzero vector \({\varvec{c}} \ge {\varvec{0}}\),

and therefore \(\varphi ({\varvec{\theta }})\) is infinite for \({\varvec{\theta }} \not \in \overline{\Gamma }^{(2d)}_{\max }\), where \(\overline{\Gamma }^{(2d)}_{\max }\) is the closure of \(\Gamma ^{(2d)}_{\max }\).

Note that the upper bound in (3.9) is generally larger than the lower bound in (3.10). To get tighter lower bounds, we use the one-dimensional QBD formulation. For this, we require assumptions similar to Assumption 2.1.

Assumption 3.1

For each \({\varvec{\theta }} \in \mathbb {R}^{2}\) satisfying \(\gamma ^{(A_{**})}({\varvec{\theta }}) = 1\), for each \(i=1,2\), there is an \(m_{0}\)-dimensional positive vector \({\varvec{h}}^{(0i)}({\varvec{\theta }})\) and functions \(c^{(i)}_{0}({\varvec{\theta }})\) and \(c^{(i)}_{1}({\varvec{\theta }})\) such that one of \(c^{(i)}_{1}({\varvec{\theta }})\) or \(c^{(i)}_{2}({\varvec{\theta }})\) equals one, and

where \(*(ik) = *k\) for \(i=1\) and \(*(ik) = k*\) for \(i=2\). We recall that \({\varvec{h}}^{(A_{**})}({\varvec{\theta }})\) is the Perron–Frobenius eigenvector of \(A_{**}({\varvec{\theta }})\).

Theorem 3.2

Assume that the 2d-QBD process has a stationary distribution and Assumption 3.1. Then, we have the following facts for each \(i = 1,2\). For each \(\ell \ge 0\) and either \(k \in \mathcal{V}_{1}\) for \(\ell =0\) or \(k \in \mathcal{V}_{+}\) for \(\ell \ge 1\),

In particular, for Category I satisfying \(\tau _{i} < \theta ^{(i,\max )}_{i}\), there is a positive constant \(c^{(i)}_{\ell k}\) such that

Otherwise, for Category II-1 satisfying \(\tau _{i} < \theta ^{(i,{\Gamma })}_{i}\), there are positive constants \(\underline{d}^{(i)}_{\ell k}\) and \(\overline{d}^{(i)}_{\ell k}\) such that

This theorem will be proved in Appendix 6. Similar results without Assumption 3.1 are obtained as Theorem 4.1 in [36]. However, the method assumes other assumptions such as Assumption 3.1 of [36]. Furthermore, it requires a large amount of numerical work to compute \(\tau _{i}\).

Combining Theorems 3.1 and 3.2 and Lemma 3.3, we have the following tail asymptotics.

Theorem 3.3

Under the assumptions of Theorem 3.2, we have

and, for each nonzero vector \({\varvec{c}} \ge {\varvec{0}}\),

Proof

By Theorem 3.1, we already have the upper bound of the tail probability for (3.18). To consider the lower bound, let

Note that \({\varvec{\theta }}({\varvec{c}}) \le {\varvec{\tau }}\) by Theorem 3.1. We first assume that \({\varvec{\theta }}_{{\varvec{c}}} < {\varvec{\tau }}\). Then, by Theorem 3.1, \({\varvec{\theta }}({\varvec{c}})\in \partial \Gamma ^{(2d)}_{+}\), and therefore Lemma 3.3 leads to

Assume \({\varvec{\theta }}_{{\varvec{c}}} = {\varvec{\tau }}\). In this case, by Theorem 3.1, we have that \([{\varvec{\theta }}({\varvec{c}})]_{1} = \tau _{1}\) or \([{\varvec{\theta }}({\varvec{c}})]_{2} = \tau _{2}\); equivalently, \(c_{1} u_{{\varvec{c}}} = \tau _{1}\) or \(c_{2} u_{{\varvec{c}}} = \tau _{2}\). Since these two cases are symmetric, we only consider the case \(c_{1} u_{{\varvec{c}}} = \tau _{1}\). By Theorem 3.2 we have, for each fixed \(\ell \) and k,

Since \(c_{1} L_{1} + c_{2} L_{2} > n\) for \(L_{2} = \ell \) implies that \(c_{1} L_{1} > n - c_{2} \ell \), this yields

Thus, the limit supremum and the limit infimum are identical, and we get (3.18). \(\square \)

4 Two-node generalized Jackson network

In this section we consider a continuous-time Markov chain \(\{({\varvec{L}}(t),J(t))\}\) whose embedded transitions under uniformization constitute a discrete-time 2d-QBD process. We refer to it as a continuous-time 2d-QBD process. This process is convenient in queueing applications because they are often of continuous time. Since the stationary distribution is unchanged under uniformization, its tail asymptotics are also unchanged. Thus, it is routine to convert the asymptotic results obtained for the discrete-time 2d-QBD process to those for \(\{({\varvec{L}}(t),J(t))\}\). We summarize them for convenience of application.

4.1 Continuous-time formulation of a 2d-QBD process

As discussed above, we define a continuous-time 2d-QBD process \(\{({\varvec{L}}(t),J(t))\}\) by changing \(P^{(1)}\) (or \(P^{(2)}\)) to a transition rate matrix. Denote it by \({\tilde{P}}^{(1)}\) (or \({\tilde{P}}^{(2)}\)). That is, \({\tilde{P}}^{(i)}\) has the same block structure as that of \(P^{(i)}\) while \({\tilde{P}}^{(i)} {\varvec{1}} = {\varvec{0}}\) and all its diagonal entries are not positive. In what follows, continuous-time characteristics are indicated by tilde except for those concerning the stationary distribution, because the stationary distribution is unchanged. Among them, it is notable that \(I - A^{(i)}_{00}\) and \(I - A_{00}\) are replaced by \(-{\tilde{A}}^{(i)}_{00}\) and \(-{\tilde{A}}_{00}\), respectively, while \(A^{(i)}_{ij}\) and \(A_{ij}\) are replaced by \({\tilde{A}}^{(i)}_{ij}\) and \({\tilde{A}}_{ij}\) for \((i,j) \ne (0,0)\). Similarly, \(A^{(i)}_{jk}\), \(A_{**}({\varvec{\theta }})\) and \(C^{(i)}_{**}({\varvec{\theta }})\) are defined. For example, \(I - A^{(1)}_{*0}(\theta _{1})\) is replaced by \(- {\tilde{A}}^{(1)}_{*0}(\theta _{1})\), and therefore

as long as \((- {\tilde{A}}^{(1)}_{*0}(\theta _{1}))^{-1}\) exists and is nonnegative. \({\tilde{C}}^{(2)}_{**}({\varvec{\theta }})\) is similarly defined.

Suppose that we start with the continuous-time 2d-QBD process with primitive data \({\tilde{A}}^{(i)}_{jk}\). These data must satisfy

because of the continuous-time setting. Since the condition for the existence of a superharmonic vector \({\varvec{h}}\) for \(A_{**}({\varvec{\theta }})\) is changed to \({\tilde{A}}_{**}({\varvec{\theta }}) {\varvec{h}} \le {\varvec{0}}\), we define the following sets.

The following auxiliary notation will be convenient:

Using this notation, we define

and define the vector \({\tilde{{\varvec{\tau }}}}\) by

Remark 4.1

In the definition (4.5), we can replace \({\tilde{\Gamma }}^{(2d)}_{i+}\) by \({\tilde{\Gamma }}^{(2e)}_{i+}\) because \({\tilde{\Gamma }}^{(2d)}_{+}\) and \(\{ {\varvec{\theta }} \in \mathbb {R}^{2}; {\tilde{C}}^{(i)}_{**}({\varvec{\theta }}) {\varvec{h}} \le {\varvec{0}} \}\) are closed convex sets.

We also need

Let \({\tilde{\gamma }}^{{\tilde{A}}_{**}}({\varvec{\theta }})\) be the Perron–Frobenius eigenvalue of \({\tilde{A}}_{**}({\varvec{\theta }})\). A continuous-time version of Assumption 3.1 is given by

Assumption 4.1

For each \({\varvec{\theta }} \in \mathbb {R}^{2}\) satisfying \(\gamma ^{({\tilde{A}}_{**})}({\varvec{\theta }}) = 1\), for each \(i=1,2\), there is an \(m_{0}\)-dimensional positive vector \({\tilde{{\varvec{h}}}}^{(i0)}({\varvec{\theta }})\) and functions \({\tilde{c}}^{(i)}_{0}({\varvec{\theta }})\) and \({\tilde{c}}^{(i)}_{1}({\varvec{\theta }})\) such that one of \({\tilde{c}}^{(i)}_{0}({\varvec{\theta }})\) or \({\tilde{c}}^{(i)}_{1}({\varvec{\theta }})\) vanishes, and, for \(i=1\),

and, for \(i=2\),

We recall that \({\tilde{{\varvec{h}}}}^{({\tilde{A}}_{**})}({\varvec{\theta }})\) is the Perron–Frobenius eigenvector of \({\tilde{A}}_{**}({\varvec{\theta }})\).

Define the domain for the stationary distribution of \({\varvec{L}}\) as

where \({\varvec{L}}\) is a random vector subject to the stationary distribution of \({\varvec{L}}(t)\). It is easy to see that Theorems 3.1 and 3.3 can be combined and converted into the following continuous version.

Theorem 4.1

For a continuous-time 2d-QBD process satisfying the irreducibility and stability conditions, \({\tilde{\Gamma }}_{{\tilde{{\varvec{\tau }}}}} \subset \mathcal{D}\) and we have

and this inequality becomes equality with \(\mathcal{D} = {\tilde{\Gamma }}_{{\tilde{{\varvec{\tau }}}}}\) if Assumption 4.1 is satisfied.

4.2 Two-node generalized Jackson network with MAP arrivals and PH-service time distributions

As an example of the 2d-QBD process, we consider a two-node generalized Jackson network with a MAP arrival process and phase-type service time distributions. Obviously, this model can be formulated as a 2d-QBD process. We are interested to see how exogenous arrival processes and service time distributions influence the decay rates. This question has been partially answered for the tail decay rates of the marginal distributions of tandem queues with stationary or renewal inputs (for example, see [3, 14]). They basically use the technique for sample path large deviations, and no joint distributions have been studied for queue lengths at multiple nodes. For Markov-modulated arrivals and more general network topologies, there is seminal work by Takahashi and his colleagues [11, 12, 15, 16]. They started with numerical examinations and finally arrived at upper bounds for the stationary tail probabilities for the present generalized Jackson network in [16]. The author [26] conjectured the tail decay rates of the stationary distribution for a d-node generalized Jackson network with \(d \ge 2\) and renewal arrivals.

Thus, the question has not yet been satisfactorily answered particularly for a network with feedback routes. This motivates us to study the present decay rate problem. As we will see, the answer is relatively simple and naturally generalizes the tandem queue case. However, first we have to introduce yet more notation to describe the generalized Jackson network. This network has two nodes, which are numbered as 1 and 2. We make the following modeling assumptions.

-

(4a)

A customer which completes service at node i goes to node j with probability \(r_{ij}\) or leaves the network with probability \(1 - r_{ij}\) for \((i,j) = (1,2)\) or (2, 1), where \(r_{12} + r_{21} > 0\) and \(r_{12} r_{21} < 1\), which exclude obvious cases. This routing of customers is assumed to be independent of everything else.

-

(4b)

Exogenous customers arrive at node i subject to the Markovian arrival process with generator \({T}_{i}+{U}_{i}\), where \({U}_{i}\) generates arrivals. Here, \({T}_{i}\) and \({U}_{i}\) are finite square matrices of the same size for each \(i=1,2\).

-

(4c)

Node i has a single server, whose service times are independently and identically distributed subject to a phase-type distribution with \(({\varvec{\beta }}_{i}, {S}_{i})\), where \({\varvec{\beta }}_{i}\) is the row vector representing the initial phase distribution and \({S}_{i}\) is a transition rate matrix for internal state transitions. Here, \({S}_{i}\) is a finite square matrix, and \({\varvec{\beta }}_{i}\) has the same dimension as that of \({S}_{i}\) for each \(i=1,2\).

Let \({D}_{i} = (-{S}_{i} {\varvec{1}}) {\varvec{\beta }}_{i}\). Then \({S}_{i}+{D}_{i}\) is a generator for a continuous-time Markov chain which generates completion of service times with rate \({D}_{i}\). Since the service time distribution at node i has the phase-type distribution with \(({\varvec{\beta }}_{i}, {S}_{i})\), its moment-generating function \(g_{i}\) is given by

as long as \(\theta I_{i+2} + {S}_{i}\) is nonsingular (for example, see [21] in which the Laplace transform is used instead of the moment-generating function). Clearly, \(g_{i}(\theta )\) is a increasing function of \(\theta \) from \((-\infty , \theta _{0i})\) to \((0, \infty )\), where \(- \theta _{0i}\) is the Perron–Frobenius eigenvalue of \(S_{i}\).

Let \(L_{i}(t)\), \(J_{ia}(t)\), and \(J_{ib}(t)\) be the number of customers at node i, the background state for arrivals, and the phase of service in progress, respectively, at time t, where \(J_{ib}(t)\) is undefined if there is no customer in node i at time t. Then, it is not hard to see that \(\{({\varvec{L}}(t), {\varvec{J}}(t)); t \ge 0\}\) is a continuous-time Markov chain and considered as a 2d-QBD process, where \({\varvec{L}}(t) = (L_{1}(t), L_{2}(t))\) and \({\varvec{J}}(t) = (J_{1a}(t), J_{2a}(t), J_{1b}(t), J_{2b}(t))\), where \(J_{ib}(t)\) is removed from the components of \({\varvec{J}}(t)\) if it is undefined.

We first note the stability condition for this 2d-QBD process. Since, for node i, the mean exogenous arrival rate \(\lambda _{i}\) and the mean service rate \(\mu _{i}\) are given by

where \({\varvec{\nu }}_{i}\) is the stationary distribution of the Markov chain with generator \(T_{i} + U_{i}\), it is well known that the stability condition is given by

We assume this condition throughout in Sect. 4.2.

We next introduce point processes to count arriving and departing customers from each node. By \(N^{(a)}_{i}(t)\), we denote the number of exogenous arriving customers at node i during the time interval [0, t]. Then, it follows from (4b) (also the comment above (4.14)) that

We define a time-average cumulant moment-generating function \(\gamma ^{(ia)}\) as

It is not hard to see that \(\gamma ^{(ia)}(\theta )\) is the Perron–Frobenius eigenvalue of \({T}_{i} + e^{\theta } {U}_{i}\).

By \(N^{(d)}_{i}(t)\), we denote the number of departing customers from node i during the time interval [0, t] when the server at node i is always busy in this time interval. Let \(\Phi _{i}(n)\) be the number of customers who are routed to node \(3-i\) among n customers departing from node i. Obviously, it follows from (4a) that \(\Phi _{i}(n)\) is independent of \(N^{(d)}_{i}(t)\), and has the Bernoulli distribution with parameter \((n, r_{i(3-i)})\). Then,

where \(r_{i0} = 1 - r_{i(3-i)}\). Similar to \(\gamma ^{(ia)}\), we define a time-average cumulant moment-generating function \(\gamma ^{(id)}\) by

One can see that \(\gamma ^{(id)}({\varvec{\theta }})\) is the Perron–Frobenius eigenvalue of \({S}_{i} + e^{-\theta _{i}} (r_{i0} + e^{\theta _{3-i}} r_{i(3-i)}) {D}_{i}\).

One may expect that the decay rates for the generalized Jackson network are completely determined by the cumulants \(\gamma ^{(1a)}, \gamma ^{(2a)}, \gamma ^{(1d)}, \gamma ^{(2d)}\) since their conjugates are known to be rate functions for the Cramér type of large deviations. We will show that this is indeed the case. Let

We then have the following result.

Theorem 4.2

For the generalized Jackson network satisfying conditions (4a), (4b) and (4c), if the stability condition (4.14) holds then Assumption 4.1 is satisfied, and we have

Define \({\tilde{\tau }}\) and \({\tilde{\Gamma }}_{{\tilde{\tau }}}\) by (4.5) and (4.6). Then the domain \(\mathcal{D}\) for \({\varvec{L}}\) is given by \({\tilde{\Gamma }}_{{\tilde{{\varvec{\tau }}}}}\) and, for a nonzero vector \({\varvec{c}} \ge {\varvec{0}}\),

where \({\varvec{L}}\) is a random vector subject to the stationary distribution of \({\varvec{L}}(t)\).

Remark 4.2

As we will show at the end of Sect. 4.4, the condition that \(\gamma ^{(i)}({\varvec{\theta }}) \le 0\) in (4.17) can be replaced by \(e^{-\theta _{3-i}} (r_{(3-i)0} + e^{\theta _{i}} r_{(3-i)i}) \ge 1\).

Remark 4.3

For \({\varvec{c}} = (1,0), (0,1)\), Katou et al. [15] obtained the right-hand side of (4.18) as an upper bound for its left-hand side (see Theorem 4.1 there). Namely, they derived the inequality (4.12), which is conjectured to be tight in [26]. Theorem 4.2 shows that those upper bounds are indeed tight. Based on the results in [15], Katou et al. [16] derived upper bounds for the decay rate of the probability \(\mathbb {P}({\varvec{L}} = n {\varvec{c}} + {\varvec{d}})\) for positive vectors \({\varvec{c}}, {\varvec{d}}\) with integer entries as \(n \rightarrow \infty \) and numerically examined their tightness. This asymptotic is different from that in (4.18), so we cannot confirm its tightness by (4.18), but conjecture it to be true since similar asymptotics are known for a two-dimensional semimartingale reflecting Brownian motion (see [1, 9]).

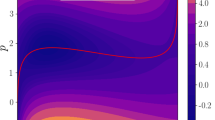

See Fig. 2 to see what the domain looks like.

4.3 Primitive data and matrix moment generation functions

In this section we describe transition rate matrices and their moment-generating functions in terms of the primitive data, \(T_{i}, U_{i}, S_{i}, {\varvec{\beta }}_{i}\), of the generalized Jackson network, and prove (4.16) and (4.17). They will be used to prove Theorem 4.2 in the next subsection.

To specify those matrices for the generalized Jackson network, we will use the Kronecker product \(\otimes \) and sum \(\oplus \), respectively, where \(\oplus \) is defined for square matrices A and B as

where \(I_{1}\) and \(I_{2}\) are the identity matrices with the same sizes as A and B, respectively. From this definition, it is easy to see that if A and B have right eigenvectors \({\varvec{h}}_{A}\) and \({\varvec{h}}_{A}\) with eigenvalues \(\gamma _{A}\) and \(\gamma _{B}\), respectively, then

We also will use this computation.

For transitions around the origin, we let

where other \({\tilde{A}}^{(0)}_{ij}\)’s not specified above are all null matrices. This convention for null matrices is used for all transition matrices. Around \(\mathcal{U}_{+0} \cup \mathcal{U}_{+1}\), that is, the 1st coordinate half axis except for the origin,

Similarly, around \(\mathcal{U}_{0+} \cup \mathcal{U}_{1+}\), that is, the 2nd coordinate half axis except for the origin,

For transitions within \(\mathcal{U}_{+}\), that is, the interior,

Thus, we have

Recall that \(\gamma ^{(ia)}(\theta _{i})\) is the Perron–Frobenius eigenvalue of \({T}_{i} + e^{\theta _{i}} {U}_{i}\). We denote its eigenvector by \({\varvec{h}}^{(ia)}(\theta _{i})\). Similarly, we denote the Perron–Frobenius eigenvalues and vectors of \({S}_{1} + (e^{-\theta _{1}} r_{10} + e^{-\theta _{1}+\theta _{2}} r_{12}) {D}_{1}\) and \({S}_{2} + (e^{-\theta _{2}} r_{20} + e^{\theta _{1}-\theta _{2}} r_{21}) {D}_{2}\) by \(\gamma ^{(1d)}({\varvec{\theta }})\) and \(\gamma ^{(2d)}({\varvec{\theta }})\), and \({\varvec{h}}^{(1d)}({\varvec{\theta }})\) and \({\varvec{h}}^{(1d)}({\varvec{\theta }})\), respectively. That is, they satisfy

Thus, recalling \(\gamma ^{(+)}({\varvec{\theta }})\) and letting

we have, by repeatedly applying (4.19),

Hence, recalling the definition (4.1) of \({\tilde{\Gamma }}^{(2d)}\), we have (4.16).

We next note that \(\gamma ^{(id)}\) can also be obtained from the moment-generating function \(g_{i}\) of the service time distribution at node i. For \(i=1,2\), let

Then it follows from (4.22) and (4.23) that

since \(D_{i} {\varvec{h}}^{(id)}({\varvec{\theta }}) = \langle {\varvec{\beta }}_{i}, {\varvec{h}}^{(id)}({\varvec{\theta }}) \rangle (-S_{i}{\varvec{1}})\). Hence, premultiplying \((\gamma ^{(id)}({\varvec{\theta }}) I - S_{i})^{-1}\), we have

Let us normalize \({\varvec{h}}^{(id)}({\varvec{\theta }})\) in such a way that

Then we have the following facts since \(g_{i}\) is nondecreasing.

Lemma 4.1

For \(i=1,2\), (a) under the normalization (4.24),

and therefore \(g_{i}(-\gamma ^{(id)}({\varvec{\theta }})) = t_{i}({\varvec{\theta }})^{-1}\), which yields \(\gamma ^{(id)}({\varvec{\theta }}) = - g_{i}^{-1}(t_{i}({\varvec{\theta }})^{-1})\), (b) \(\gamma ^{(id)}({\varvec{\theta }}) \ge 0\) if and only if \(t_{i}({\varvec{\theta }}) \ge 1\), which is equivalent to

(c) If \(U_{i} = (-T_{i} {\varvec{1}}) {\varvec{\alpha }}_{i}\) for probability vectors \({\varvec{\alpha }}_{i}\), that is, the arrival process at node i is the renewal process with interarrival distribution determined by the moment-generating function:

then \(\gamma ^{(ia)}(\theta _{i}) = - f_{i}^{-1}(e^{-\theta _{i}})\).

Remark 4.4

(a) and (c) are known (see, for example, Proposition 2 of [39] and Lemma 4.1 of [12]).

4.4 Proof of Theorem 4.2

We first verify Assumption 4.1 for \(i=1\) and \({\tilde{c}}^{(1)}_{1}({\varvec{\theta }}) = 0\), Namely, for \({\varvec{\theta }} \in \mathbb {R}^{2}\) satisfying \(\gamma ^{(+)}({\varvec{\theta }}) = 0\), that is,

we show that there are some \({\tilde{c}}^{(1)}_{0}({\varvec{\theta }})\) and \({\varvec{h}}^{(01)}({\varvec{\theta }}) > {\varvec{0}}\) such that

where

We further require the nonsingularity condition:

From (4.28), this holds if \({\tilde{c}}_{0}^{(1)}({\varvec{\theta }}) \le 0\).

Since \({\tilde{A}}_{**}({\varvec{\theta }}) {\varvec{h}}^{(+)}({\varvec{\theta }}) = {\varvec{0}}\) by (4.27), (4.29) is equivalent to

Note that \( {\tilde{A}}_{*(-1)}(\theta _{1})\) and \( {\tilde{A}}^{(1)}_{*(-1)}(\theta _{1})\) have a similar form, so we let

and guess that, for some scalar \(a({\varvec{\theta }})\),

We first verify (4.28). Since

we choose \(a({\varvec{\theta }}) = \langle {\varvec{\beta }}_{2}, {\varvec{h}}^{(2d)}({\varvec{\theta }}) \rangle \), which is \(t_{2}({\varvec{\theta }})^{-1}\) by (4.24). Then

Hence, we have (4.28) with

We next consider (4.31). Recall that \(D_{2} = (-S_{2}{\varvec{1}}) {\varvec{\beta }}_{2}\). Since

we have (4.31). Thus, we have verified Assumption 4.1, and therefore \({\varvec{\theta }} \in {\tilde{\Gamma }}^{(2e)}_{1+}\) is equivalent to \(\gamma ^{(+)}({\varvec{\theta }}) = 0\) and \(\gamma ^{(1)}({\varvec{\theta }}) \le 0\). Because arguments are symmetric for nodes 1 and 2, we can get similar results for node 2. Thus, Theorem 4.2 follows from Theorem 4.1 because of Remark 4.1.

We finally note that, for \({\varvec{\theta }} \in \mathbb {R}^{2}\) satisfying \(\gamma ^{(+)}({\varvec{\theta }}) = 0\), \(\gamma ^{(3-i)}({\varvec{\theta }}) \le 0\) is equivalent to \(\gamma ^{(id)}({\varvec{\theta }}) \ge 0\), which further is equivalent to (4.26). Hence, we have verified the claim in Remark 4.2.

5 Concluding remarks

We have studied the existence of a superharmonic vector for a nonnegative matrix with QBD block structure. We saw how this existence is useful for studying the tail asymptotics of the stationary distribution of a Markov-modulated two-dimensional reflecting random walk, called the 2d-QBD process. We have assumed that all blocks of the nonnegative matrix are finite dimensional. This is a crucial assumption, but we need to remove it for studying a higher dimensional reflecting random walk. This is a challenging problem. Probably, further structure is needed for the background process. For example, we may assume that each block matrix has again QBD block structure, which is satisfied by a reflecting random walk in any number of dimensions with Markov modulation. We think research in this direction would be useful.

Another issue is about the tail asymptotics for a generalized Jackson network. We have considered the two-node case. In this case, the tail decay rates are determined by time-average cumulant moment generating functions, \(\gamma ^{(a)}_{i}\) and \(\gamma ^{(d)}_{i}\) by Theorem 4.2. This suggests that more general arrival processes and more general routing mechanisms may lead to the decay rates in the same way. Some related issues have been recently considered for a single server queue in Sect. 2.4 of [30], but the network case has not yet been studied. So, it is also an open problem.

In a similar fashion, we may be able to consider a generalized Jackson network with more than two nodes. To make the problem specific, let us consider the k node cases for \(k \ge 2\). Let \(K = \{1,2,\ldots , k\}\), and let

Then, the sets similar to \({\tilde{\Gamma }}^{2e}_{i+}\) for \(k=2\) may be indexed by a nonempty subset A of K, and given by

These together with \({\tilde{\Gamma }}^{kd}_{+} = \left\{ {\varvec{\theta }} \in \mathbb {R}^{k}; \gamma ^{(+)}({\varvec{\theta }}) \le 0 \right\} \) would play the same role as in the two-dimensional case. That is, they would characterize the tail decay rates of the stationary distribution. We may generate those sets from

Thus, the characterization may be much simpler than that for a general k-dimensional random walk with Markov modulation. However, we do not know how to derive the decay rates from them for \(k \ge 3\) except for tandem type models in some simple situations (for example, see [3, 7]). This remains a very challenging problem (for example, see Sect. 6 of [28]).

We finally remark on the continuity of the decay rate for a sequence of the two-node generalized Jackson networks which weakly converges to the two-dimensional SRBM in heavy traffic. Under suitable scaling and appropriate conditions, such convergence is known not only for their processes but for their stationary distributions (see, for example, [6, 13]). Since the tail decay rates are known for this SRBM (see [8]), we can check whether the decay rate also converges to that of the SRBM. This topic is considered in [30].

References

Avram, F., Dai, J.G., Hasenbein, J.J.: Explicit solutions for variational problems in the quadrant. Queueing Syst. 37, 259–289 (2001)

Bean, N., Pollett, P., Taylor, P.: Quasistationary distributions for level-dependent quasi-birth-and-death processes. Stoch. Models 16, 511–541 (2000)

Bertsimas, D., Paschalidis, I., Tsitsiklis, J.N.: On the large deviations behaviour of acyclic networks of \({G/G/1}\) queues. Ann. Appl. Probab. 8, 1027–1069 (1998)

Borovkov, A.A., Mogul’skiĭ, A.A.: The second function of deviations and asymptotic problems of the reconstruction and attainment of a boundary for multidimensional random walks. Sib. Math. Zh. 37, 745–782 (1996). doi:10.1007/BF02104660

Borovkov, A.A., Mogul’skiĭ, A.A.: Large deviations for Markov chains in the positive quadrant. Russ. Math. Surv. 56, 803–916 (2001). doi:10.1070/RM2001v056n05ABEH000398

Budhiraja, A., Lee, C.: Stationary distribution convergence for generalized Jackson networks in heavy traffic. Math. Oper. Res. 34, 45–56 (2009)

Chang, C.-S.: Sample path large deviations and intree networks. Queueing Syst. 20, 7–36 (1995)

Dai, J.G., Miyazawa, M.: Reflecting Brownian motion in two dimensions: exact asymptotics for the stationary distribution. Stoch. Syst. 1, 146–208 (2011). doi:10.1214/10-SSY022

Dai, J.G., Miyazawa, M.: Stationary distribution of a two-dimensional SRBM: geometric views and boundary measures. Queueing Syst. 74, 181–217 (2013). doi:10.1007/s11134-012-9339-1

Fayolle, G., Iasnogorodski, R., Malyshev, V.: Random Walks in the Quarter-Plane: Algebraic Methods, Boundary Value Problems and Applications. Springer, New York (1999)

Fujimoto, K., Takahashi, Y.: Tail behavior of the steady-state distributions in two-stage tandem queues: numerical experiment and conjecture. J. Oper. Res. Soc. Jpn. 39, 525–540 (1996)

Fujimoto, K., Takahashi, Y., Makimoto, N.: Asymptotic properties of stationary distributions in two-stage tandem queueing systems. J. Oper. Res. Soc. Jpn. 41, 118–141 (1998)

Gamarnik, D., Zeevi, A.: Validity of heavy traffic steady-state approximation in generalized Jackson networks. Ann. Appl. Probab. 16, 56–90 (2006)

Ganesh, A., Anantharam, V.: Stationary tail probabilities in exponential server tandems with renewal arrivals. Queueing Syst. 22, 203–247 (1996)

Katou, K., Makimoto, N., Takahashi, Y.: Upper bound for the decay rate of the marginal queue-length distribution in a two-node Markovian queueing system. J. Oper. Res. 47, 314–338 (2004)

Katou, K., Makimoto, N., Takahashi, Y.: Upper bound for the decay rate of the joint queue-length distribution in a two-node Markovian queueing system. Queueing Syst. 58, 161–189 (2008)

Kijima, M.: Quasi-stationary distributions of single-server phase-type queues. Math. Oper. Res. 18, 423–437 (1993)

Kobayashi, M., Miyazawa, M.: Revisit to the tail asymptotics of the double QBD process: refinement and complete solutions for the coordinate and diagonal directions. In: Latouche, G., Squillante, M.S. (eds.) Matrix-Analytic Methods in Stochastic Models, pp. 147–181. Springer, New York (2012). arXiv:1201.3167

Kobayashi, M., Miyazawa, M.: Tail asymptotics of the stationary distribution of a two dimensional reflecting random walk with unbounded upward jumps. Adv. Appl. Probab. 46, 365–399 (2014)

Kobayashi, M., Miyazawa, M., Zhao, Y.Q.: Tail asymptotics of the occupation measure for a Markov additive process with an \(M/G/1\)-type background process. Stoch. Models 26, 463–486 (2010)

Latouche, G., Ramaswami, V.: Introduction to Matrix Analytic Methods in Stochastic Modeling. ASA-SIAM Series on Statistics and Applied Probability. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1999)

Li, Q.-L., Zhao, Y.Q.: A constructive method for finding \(\beta \)-invariant measures for transition matrices of M/G/1 type. In: Latouche, G., Taylor, P. (eds.) Matrix Analytic Methods: Theory and Application, pp. 237–263. World Scientific Publishing, Singapore (2002)

Li, Q.-L., Zhao, Y.Q.: \(\beta \)-invariant measures for transition matrices of GI/M/1 type. Stoch. Models 19, 201–233 (2003)

Li, H., Miyazawa, M., Zhao, Y.Q.: Geometric decay in a QBD process with countable background states with applications to a join-the-shortest-queue model. Stoch. Models 23, 413–438 (2007). doi:10.1080/15326340701471042

Markushevich, A.I.: Theory of Functions of a Complex Variable, vols. I–III. English ed. Chelsea Publishing Co., New York. Translated and edited by Richard A. Silverman (1977)

Miyazawa, M.: Conjectures on decay rates of tail probabilities in generalized Jackson and batch movement networks. Oper. Res. 46, 74–98 (2003)

Miyazawa, M.: Tail decay rates in double QBD processes and related reflected random walks. Math. Oper. Res. 34, 547–575 (2009). doi:10.1287/moor.1090.0375

Miyazawa, M.: Light tail asymptotics in multidimensional reflecting processes for queueing networks. TOP 19, 233–299 (2011)

Miyazawa, M.: Tail asymptotics of the stationary distribution for a two node generalized Jackson network. ACM SIGMETRICS Perform. Eval. Rev. 42, 70–72 (2014)

Miyazawa, M.: Diffusion approximation approximation for stationary analysis and their networks: a review. J. Oper. Res. Soc. Jpn. 58, 104–148 (2015)

Miyazawa, M., Zhao, Y.Q.: The stationary tail asymptotics in the \(GI/G/1\)-type queue with countably many background states. Adv. Appl. Probab. 36, 1231–1251 (2004). doi:10.1239/aap/1103662965

Miyazawa, M., Zwart, B.: Wiener–Hopf factorizations for a multidimensional Markov additive process and their applications to reflected processes. Stoch. Syst. 2, 67–114 (2012)

Ney, P., Nummelin, E.: Markov additive processes II. Large deviations. Ann. Probab. 15, 593–609 (1987)

Nummelin, E.: General Irreducible Markov Chains and Non-negative Operators. Cambridge University Press, London (1984)

Ozawa, T: Positive recurrence and transience of multidimensional skip-free Markov modulated reflecting random walks. (2012). arXiv:1208.3043

Ozawa, T.: Asymptotics for the stationary distribution in a discrete-time two-dimensional quasi-birth-and-death process. Queueing Syst. 74, 109–149 (2013)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, No. 28. Princeton University Press, Princeton (1970)

Seneta, E.: Non-negative Matrices and Markov Chains, 2nd edn. Springer, New York (1981)

Takahashi, Y.: Asymptotic exponentiality of the tail of the waiting-time distribution in a ph/ph/c queue. Adv. Appl. Probab. 13, 619–630 (1981)

Vere-Jones, D.: Ergodic properties of nonnegative matrices-I. Pac. J. Math. 22, 361–386 (1967)

Acknowledgments

The author is grateful to an anonymous referee for helpful suggestions to improve exposition. This work is supported by Japan Society for the Promotion of Science under Grant No. 24310115. A part of this work was presented at a workshop of Sigmetrics 2014 (see its Abstract [29]).

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Proof of Lemma 2.3