Abstract

We prove a functional central limit theorem for Markov additive arrival processes where the modulating Markov process has the transition rate matrix scaled up by \(n^{\alpha }\) (\(\alpha >0\)) and the mean and variance of the arrival process are scaled up by n. It is applied to an infinite-server queue and a fork–join network with a non-exchangeable synchronization constraint, where in both systems both the arrival and service processes are modulated by a Markov process. We prove functional central limit theorems for the queue length processes in these systems joint with the arrival and departure processes, and characterize the transient and stationary distributions of the limit processes. We also observe that the limit processes possess a stochastic decomposition property.


1 Introduction

Markov additive arrival processes (MAAPs) have been used to model the arrival processes of many stochastic systems, for example, telecommunication and service systems in random environments [2]. Their usefulness lies in capturing burstiness in the arrival processes, thus departing from the usual renewal-type assumptions. An MAAP is described by a pair (A, X), where A is the counting process of arrivals and X is a modulating Markov process. A popular model is the Markov modulated Poisson process (MMPP), which has been widely used to model a variety of stochastic systems [2, 25]. For a broad range of queues with MMPP input, analytical results have been derived, often employing the matrix computational approach. The main objective of this paper is to establish functional central limit theorems (FCLTs) for MAAPs, in particular for the counting process A, and to apply them to specific, practically relevant queueing systems.

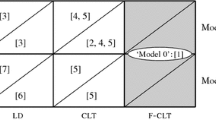

FCLTs for MMPPs have been studied in the literature under two types of scalings. In the first scaling, time is scaled up by a parameter n while space is scaled down by \(\sqrt{n}\), and thus the transition times of the modulating Markov process are implicitly accelerated by a factor n. Under this scaling, assuming that the modulating Markov process has a finite number of states and is irreducible, an FCLT can be proven for the scaled arrival process, where the limit process is a Brownian motion [reviewed in (2.1)–(2.5)]. This has been applied to prove heavy-traffic limits for single-server queueing (network) models; see, for example, [25, Ch. 9]. Under this same scaling, Steichen [23] considered an MAAP where the arrival process in each state can be non-Poisson, and proved an FCLT with a Brownian motion limit. That result was also applied to study some single-server queueing networks in [23].

In the second scaling, time is not scaled, but the arrival rates in each state are scaled up by n and the space is scaled down by \(\sqrt{n}\), while at the same time the transition rates of the modulating Markov process are scaled up by \(n^{\alpha }\) for some \(\alpha >0\). Under this scaling, an FCLT has recently been proved for the scaled arrival process in [1], where the limit process is a Brownian motion [reviewed in (2.6)–(2.8)]. This is then applied in [1] to prove an FCLT for the \(M/M/\infty \) queue with MMPP input. This scaling is useful in many-server systems, where the demand is relatively large but service times do not scale as the demand gets larger, and the modulating Markov process may speed up or slow down.
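As an illustration of this second scaling, the following minimal sketch simulates an MMPP whose arrival rates are multiplied by n and whose transition rates are multiplied by \(n^{\alpha }\), with time left unscaled. The function names `simulate_mmpp_count` and `scaled_parameters`, the two-state example rates, and the event-by-event construction with competing exponential clocks are illustrative assumptions and are not taken from [1].

```python
import random


def simulate_mmpp_count(lam, Q, pi, horizon, rng):
    """Number of arrivals on [0, horizon] of an MMPP with state-dependent
    Poisson rates `lam`, generator `Q`, and initial state drawn from `pi`."""
    states = range(len(lam))
    state = rng.choices(states, weights=pi)[0]
    t, count = 0.0, 0
    while True:
        out_rate = -Q[state][state]
        total = lam[state] + out_rate
        t += rng.expovariate(total)  # time of the next event (arrival or jump)
        if t > horizon:
            return count
        if rng.random() < lam[state] / total:
            count += 1  # the event is an arrival in the current state
            continue
        # Otherwise the modulating chain jumps to j != state w.p. q_ij / q_i.
        u, acc, nxt = rng.random() * out_rate, 0.0, state
        for j in states:
            if j == state:
                continue
            nxt = j
            acc += Q[state][j]
            if u < acc:
                break
        state = nxt


def scaled_parameters(lam, Q, n, alpha):
    """Second scaling: arrival rates times n, transition rates times n**alpha."""
    speed = n ** alpha
    return [n * l for l in lam], [[speed * q for q in row] for row in Q]


# Hypothetical two-state example (rates chosen only for illustration).
lam, Q, pi = [1.0, 4.0], [[-2.0, 2.0], [3.0, -3.0]], [0.6, 0.4]
n, alpha = 2000, 1.0
lam_n, Q_n = scaled_parameters(lam, Q, n, alpha)
count = simulate_mmpp_count(lam_n, Q_n, pi, 1.0, random.Random(0))
mean_rate = sum(p * l for p, l in zip(pi, lam))  # stationary arrival rate
centered = (count - n * mean_rate * 1.0) / n ** 0.5  # space scaled down by sqrt(n)
```

Repeating the last four lines over many independent seeds gives an empirical distribution of `centered`, whose variance can be compared with the variance coefficient of the Brownian limit.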

FCLTs for MAAPs. MMPPs, in which the Poisson arrival rate jumps between several values, significantly generalize the traditional Poisson setting. Nonetheless, in many applications the assumption of the input being locally Poisson is not adequate. To remedy this, we consider in this paper a general class of MAAPs, where the arrival process in each state can be a general stationary counting process, including renewal processes. We prove an FCLT for this class of MAAPs, in Theorem 2.1, under the second type of scaling and under three regimes of \(\alpha \) values, i.e., \(0< \alpha <1\), \(\alpha =1\), and \(\alpha >1\). The limit process is also a Brownian motion, whose variance coefficient compactly captures the variabilities in the interarrival times in each state as well as the variabilities in the modulating Markov process. We apply this FCLT to two queueing systems: a general infinite-server queue and a fork–join network with the non-exchangeable synchronization (NES) constraint.

General infinite-server queue. Several recent papers have studied infinite-server queues with MMPP input. Exact analysis and related approximations have been derived for specific infinite-server queues in random environments (Markov or semi-Markov modulated) in [3, 5, 9, 11, 12, 17, 19]. In [1], an \(M/M/\infty \) queue with MMPP input is studied, leading to an FCLT for the queue length process under the second type of scaling mentioned above. In [4], an \(M/GI/\infty \) queue is studied with MMPP input and general service times depending on the state of the modulating Markov process upon arrival; exact mean and variance formulas for the transient and stationary distributions of the queue length process are provided, and asymptotic results are obtained in the regime where the arrival rates are scaled up by n and the transition rates are scaled up by \(n^{1+ \epsilon }\) for some \(\epsilon >0\). Central limit theorems are proved in [6, 7] for the \(M/M/\infty \) queue with both the arrival and service processes modulated by a finite-state Markov process, where the arrival rates are scaled up by n.

In Sect. 3, we establish an FCLT for the queue length process joint with the arrival and departure processes in the \(G/G/\infty \) queue where both the arrival process and the service time distributions are modulated by a Markov process (applying Theorem 2.1), thus generalizing the existing literature substantially. The limiting queue length and departure processes are continuous Gaussian processes, of which we characterize the transient and steady-state distributions. We also derive a stochastic decomposition property: the variabilities of the arrival process and modulating Markov process are captured in one limit component, while those of the service process are captured in a second independent limit component.

Fork–join network with NES. In our second application, we consider a fork–join network with NES, where both the arrival process and the joint service time distributions of the parallel tasks of each job are modulated by a Markov process. In the network, each job is forked into a fixed number of parallel tasks, each of which is processed in a multiserver service station, and after service completion, each task will join a buffer associated with the service station, waiting for synchronization. The NES constraint requires that synchronization occurs only when all the tasks of the same job are completed. It is important to understand the joint dynamics of the service process as well as the waiting buffers for synchronization.

Heavy-traffic limits are proved for a single-class multiserver fork–join network with NES, in the underloaded quality-driven (QD) regime [14] and the critically loaded quality-and-efficiency driven (QED) regime [16]. The setup considered is such that the arrival process is general (satisfying an FCLT), whereas the service times of the parallel tasks form i.i.d. random vectors that can be correlated. In addition, in [15], an infinite-server fork–join network with NES in a renewal alternating environment (up–down cycles) is studied, where the service vectors of parallel tasks are correlated and the service processes are interrupted during the down periods.

In this paper we study a multiserver fork–join network with NES in the QD regime, where both the arrival and service processes are modulated by a Markov process. We apply our FCLT for the MAAP to obtain a multidimensional Gaussian limit process for the processes representing the number of tasks in service at each station and the number of tasks in the waiting buffer for synchronization associated with each station, jointly with the arrival process and the process representing the number of synchronized jobs. We characterize the transient and steady-state joint distributions of the limit queueing processes, as multivariate Gaussian distributions, and of the synchronized process, as a Gaussian distribution. We also observe a stochastic decomposition property similar to that of the infinite-server queue above, where the two independent limit components capture the variabilities of the arrival and modulating Markov processes and of the service processes separately.

1.1 Organization of the paper

The rest of the paper is organized as follows. We finish this section below with a summary of notation used in the paper. In Sect. 2 we present the general MAAP, review the existing FCLTs for MAAPs, and state the new FCLT under the second type of scaling. In Sect. 3 we apply the FCLT for the MAAP to a general infinite-server queueing model with both arrival and service times modulated by a Markov process. In Sect. 4, we apply the FCLT for the MAAP to a fork–join network with both arrival and service processes being modulated by a Markov process. The proofs of these results are presented in Sect. 5. We make some concluding remarks in Sect. 6.

1.2 Notation

The following notation will be used throughout the paper. \({\mathbb R}\) and \({\mathbb R}_{+}\) (\({\mathbb R}^d\) and \({\mathbb R}^d_{+}\), respectively) denote the sets of real and non-negative real numbers (d-dimensional vectors, respectively, \(d\ge 2\)). For \(a,b\in {\mathbb R}\), we denote \(a\wedge b:=\min (a,b)\). For any \(x\in {\mathbb R}_{+}\), \(\lfloor x \rfloor \) denotes the largest integer no greater than x. We use a bold letter to denote a vector, for example, \({\mathbf x}:=(x_1, \ldots ,x_N)\in {\mathbb R}^N\). \({\mathbf 1}(A)\) denotes the indicator function of a set A. For two real-valued functions f and g, we write \(f(x)=O(g(x))\) if \(\limsup _{x\rightarrow \infty }|f(x)/g(x)|<\infty \).

All random variables and processes are defined on a common probability space \((\Omega ,{\mathcal {F}},P)\). For any two complete separable metric spaces \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\), we denote by \({\mathcal {S}}_1\times {\mathcal {S}}_2\) their product space, endowed with the maximum metric, i.e., the maximum of the two metrics on \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\). \({\mathcal {S}}^k\) denotes the k-fold product space of any complete separable metric space \({\mathcal {S}}\) with the maximum metric for \(k\in {\mathbb N}\). For a complete separable metric space \({\mathcal {S}}\), \({\mathbb D}([0,\infty ),{\mathcal {S}})\) denotes the space of all \({\mathcal {S}}\)-valued càdlàg functions on \([0,\infty )\), and is endowed with the Skorohod \(J_1\) topology (see, for example, [8, 10, 25]). Denote \({\mathbb D}\equiv {\mathbb D}([0,\infty ),{\mathbb R})\). The space \({\mathbb D}([0,\infty ),{\mathbb D})\), denoted by \({\mathbb D}_{{\mathbb D}}\), is endowed with the Skorohod \(J_1\) topology, that is, both the inner and the outer \({\mathbb D}\) spaces are endowed with the Skorohod \(J_1\) topology. Let \({\mathbb D}([0,\infty )^k,{\mathbb R})\equiv {\mathbb D}_k\) denote the space of all “continuous from above with limits from below” real-valued functions on \([0,\infty )^k\) with the generalized Skorohod \(J_1\) topology [18, 24] for \(k\ge 2\). Weak convergence of probability measures \(\mu _n\) to \(\mu \) will be denoted by \(\mu _n\Rightarrow \mu \).

2 An FCLT for Markov additive arrival processes

Consider a Markov additive arrival process (A, X). The process \(X= \{X(t): t\ge 0\}\) is a finite-state irreducible stationary Markov process with state space \({\mathcal {S}}= \{1, \ldots ,I\}\) and transition rate matrix \(Q = (q_{ij})_{i,j =1, \ldots ,I}\). The process \(A= \{A(t): t\ge 0\}\) is a counting process modulated by the Markov process X, defined as follows. Let \(\pi = (\pi _1, \ldots ,\pi _I)\) be the stationary distribution of the Markov process X. We assume that the process starts in stationarity at time 0.

We introduce some auxiliary notation. Let \(\Pi \) be the matrix with each row equal to the steady-state vector \(\pi \), and let \(P(t) = (P_{ij}(t))_{i,j=1, \ldots ,I}\) be the transition matrix, that is, \(P_{ij}(t) := P(X(t)=j|X(0) = i)\) for each \(t \ge 0\). Let \(Z = (Z_{ij})_{i,j= 1, \ldots ,I}\) be the fundamental matrix, given by

\[ Z := \int _0^{\infty } \bigl (P(t) - \Pi \bigr )\,dt. \]

It holds that \(Z = (\Pi - Q)^{-1} - \Pi \).
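The identity \(Z = (\Pi - Q)^{-1} - \Pi \) gives a direct way to compute the fundamental matrix from the rate matrix. The following is a minimal sketch in exact rational arithmetic; the helper names `invert`, `stationary`, and `fundamental_matrix` and the two-state example are assumptions made for illustration. The stationary vector is obtained from \(\pi = {\mathbf 1}^{\top }(E - Q)^{-1}\), with E the all-ones matrix, a standard device for irreducible chains.

```python
from fractions import Fraction


def invert(M):
    """Gauss-Jordan inverse of a small nonsingular square matrix (list of lists).
    Raises StopIteration if no pivot is found, i.e. if M is singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def stationary(Q):
    """Stationary distribution pi solving pi Q = 0, sum(pi) = 1."""
    n = len(Q)
    inv = invert([[1 - Q[i][j] for j in range(n)] for i in range(n)])  # (E - Q)^{-1}
    return [sum(inv[i][j] for i in range(n)) for j in range(n)]        # 1^T (E - Q)^{-1}


def fundamental_matrix(Q):
    """Z = (Pi - Q)^{-1} - Pi, where every row of Pi equals pi."""
    n = len(Q)
    pi = stationary(Q)
    inv = invert([[pi[j] - Q[i][j] for j in range(n)] for i in range(n)])
    return [[inv[i][j] - pi[j] for j in range(n)] for i in range(n)]


# Hypothetical two-state chain with rates a = 2 (1 -> 2) and b = 3 (2 -> 1).
Q = [[-2, 2], [3, -3]]
pi = stationary(Q)
Z = fundamental_matrix(Q)
```

For a two-state chain with rates a and b, the result agrees with the closed form \(Z = (a+b)^{-2}\begin{pmatrix} a & -a \\ -b & b \end{pmatrix}\).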

When the process A is an MMPP, that is, arrivals follow a Poisson process with rate \(\lambda _i\) when \(X=i\), \(i\in {\mathcal {S}}\), FCLTs have been proved for the process A under two different scalings. In the first scaling introduced in Sect. 1, time is scaled up by n and space is scaled down by \(\sqrt{n}\), and the diffusion-scaled process \(\tilde{A}^n= \{\tilde{A}^n(t): t\ge 0\}\) is defined by

By Theorem 2.3.4 in [26], one can show that

where \(\tilde{A} = \{\tilde{A}(t): t\ge 0\}\) is a driftless Brownian motion with variance coefficient

with

and

See also the discussion in Example 9.6.2 in [25]. Note that under this scaling, the transition rates of the modulating Markov process are scaled up by n.

In the second scaling introduced in Sect. 1, time is not scaled, but the arrival rates \(\lambda _i\) are scaled up by n and the transition rate matrix is scaled up by \(n^{\alpha }\) for \(\alpha >0\). Namely, we consider a sequence of processes \((A^n, X^n)\) indexed by n, and denote the corresponding quantities with a superscript n. Assume that \(\lambda ^n_i/n\rightarrow \lambda _i>0\) for \(i \in {\mathcal {S}}\) as \(n\rightarrow \infty \) and \(Q^n = n^{\alpha } Q\) for some \(\alpha > 0\). Note that the stationary distribution of \(X^n\) remains \(\pi \) for every n. Define the diffusion-scaled process \(\hat{A}^n = \{\hat{A}^n(t): t\ge 0\}\) by

Then it is shown in [1] that

where the limit process \(\hat{A} = \{\hat{A}(t): t\ge 0\}\) is a driftless Brownian motion with variance coefficient

with \(\bar{\lambda }\) and \(\bar{\beta }\) being defined in (2.4) and (2.5), respectively.
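To make the two ingredients \(\bar{\lambda }\) and \(\bar{\beta }\) concrete, the sketch below evaluates them for a two-state MMPP. It assumes the standard forms \(\bar{\lambda } = \sum _i \pi _i \lambda _i\) and \(\bar{\beta } = 2\sum _i\sum _j \pi _i \lambda _i Z_{ij} \lambda _j\) (the asymptotic variance of the cumulative rate process \(\int _0^t \lambda _{X(s)}\,ds\)); these forms, the function names, and the numerical rates are assumptions for illustration.

```python
from fractions import Fraction as F


def mean_rate(pi, lam):
    """Assumed form of lambda-bar: stationary average of the arrival rates."""
    return sum(p * l for p, l in zip(pi, lam))


def modulation_variance(pi, Z, lam):
    """Assumed form of beta-bar: 2 * sum_i sum_j pi_i lam_i Z_ij lam_j."""
    n = len(lam)
    return 2 * sum(pi[i] * lam[i] * Z[i][j] * lam[j]
                   for i in range(n) for j in range(n))


# Hypothetical two-state chain: rate a from state 1 to 2, rate b from 2 to 1.
a, b = F(2), F(3)
pi = [b / (a + b), a / (a + b)]
Z = [[a / (a + b) ** 2, -a / (a + b) ** 2],
     [-b / (a + b) ** 2, b / (a + b) ** 2]]
lam = [F(1), F(4)]

lam_bar = mean_rate(pi, lam)
beta_bar = modulation_variance(pi, Z, lam)
# Known two-state closed form: 2ab(lam_1 - lam_2)^2 / (a + b)^3.
closed_form = 2 * a * b * (lam[0] - lam[1]) ** 2 / (a + b) ** 3
```

Note that \(\bar{\beta }\) vanishes when all \(\lambda _i\) coincide, in which case the modulation adds no variability to the arrival stream.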

Remark 2.1

When \(\alpha =1\), the limit processes under both scalings in fact coincide, as the arrival process and the modulating Markov process are sped up at the same rate. When \(\alpha >1\), the modulating Markov process is sped up at a faster rate than the arrival process in each state, and thus the variability in the limit comes only from the Poisson processes with the spatial scaling \(n^{-1/2}\). When \(0<\alpha <1\), the modulating Markov process is sped up at a slower rate than the arrival process in each state, and thus the variability in the limit comes only from the modulating Markov process with the spatial scaling \(n^{-(1-\alpha /2)}\).
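The three regimes of Remark 2.1 can be summarized in a small lookup. This is a sketch only: the function names are hypothetical, and the exponent \(\delta \) stands for the spatial scaling \(n^{-\delta }\) described in the remark.

```python
def spatial_exponent(alpha):
    """Exponent delta of the spatial scaling n**(-delta) in Remark 2.1."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    return 0.5 if alpha >= 1 else 1 - alpha / 2


def variability_sources(alpha):
    """Return (arrivals_contribute, modulation_contributes) to the limit."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    return (alpha >= 1, alpha <= 1)


for alpha in (0.5, 1, 2):
    print(alpha, spatial_exponent(alpha), variability_sources(alpha))
```

Only at \(\alpha =1\) do both sources of variability survive in the limit, which is why the limits under the two scalings coincide there.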

In this paper, we consider the second type of scaling and prove an FCLT for the diffusion-scaled processes \(\hat{A}^n\) when the process \(A^n\) is a general counting process, for example a renewal process, in each state of \(X^n\).

Let \(\tau _k^n\) be the \(k^\mathrm{th}\) jump time of \(X^n\) for \(k=1,2, 3, \ldots \) and \(\tau _0^n\equiv 0\). For each \(i \in {\mathcal {S}}\), define

and

Assume that \(\pmb \lambda ^n = (\lambda ^n_1, \ldots ,\lambda ^n_I)\) and \(\pmb \nu ^n= (\nu ^n_1, \ldots ,\nu ^n_I)\) are positive vectors. Note that when the process \(A^n\) is Poisson in each state of \(X^n\), we have \(\lambda ^n_i = \nu ^n_i\) for \(i=1, \ldots ,I\). Note also that if the arrival process is renewal in each state, then \(\nu ^n_i= \lambda ^n_i (c^n_{a,i})^2\), where \(c_{a,i}^n\) is the coefficient of variation (CV) of the interarrival times when the Markov process \(X^n\) is in state i.

Then, we can write, for each \(t\ge 0,\)

and

We make the following assumption on the parameters.

Assumption 1

The parameters \(\pmb \lambda ^n\) and \(\pmb \nu ^n\) satisfy

The transition rate matrix \(Q^n = n^{\alpha } Q\) for some \(\alpha >0 \).

We now state the main result of this section. Its proof, as well as the proofs of all results presented in Sects. 3 and 4, is provided in Sect. 5.

Theorem 2.1

Under Assumption 1, for the diffusion-scaled process \(\hat{A}^n\) in (2.6), (2.7) holds, where the limit process \(\hat{A}\) is a driftless Brownian motion with variance coefficient

with \(\bar{\beta }\) being defined in (2.5) and

Remark 2.2

If the process \(A^n\) is renewal whenever the modulating Markov process \(X^n\) is in state i, for each \(i=1, \ldots ,I\), then \(\nu _i = \lambda _i c_{a,i}^2\) and we obtain \(\bar{\nu } = \sum _{i=1}^I \pi _i \lambda _i c_{a,i}^2\), where \(\lambda _i\) is the limiting arrival rate and \(c_{a,i}\) is the limiting CV of the interarrival times in state i.
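A short numerical sketch of Remark 2.2 follows. It computes \(\bar{\nu }\) in the renewal case and then combines it with \(\bar{\beta }\) across the three regimes, assuming the variance coefficient takes the form \(\sigma ^2(\alpha ) = \bar{\nu }\,{\mathbf 1}(\alpha \ge 1) + \bar{\beta }\,{\mathbf 1}(\alpha \le 1)\), which is how the regimes of Remark 2.1 and the proof outline in Sect. 5.1 read. That form, the function names, and the example values (exponential interarrival times in state 1, Erlang-4 in state 2, so squared CVs 1 and 1/4) are assumptions for illustration.

```python
from fractions import Fraction as F


def nu_bar(pi, lam, cv2):
    """nu-bar = sum_i pi_i * lam_i * c_{a,i}^2 for renewal arrivals in each state."""
    return sum(p * l * c for p, l, c in zip(pi, lam, cv2))


def sigma2(alpha, nu, beta):
    """Assumed form: nu * 1(alpha >= 1) + beta * 1(alpha <= 1)."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    arrivals = nu if alpha >= 1 else 0
    modulation = beta if alpha <= 1 else 0
    return arrivals + modulation


pi = [F(3, 5), F(2, 5)]      # hypothetical stationary distribution
lam = [F(1), F(4)]           # hypothetical limiting arrival rates
cv2 = [F(1), F(1, 4)]        # squared CVs: exponential, Erlang-4
beta = F(108, 125)           # hypothetical value of beta-bar for this chain

nu = nu_bar(pi, lam, cv2)
values = {alpha: sigma2(alpha, nu, beta) for alpha in (F(1, 2), 1, 2)}
```

With all squared CVs equal to 1 the arrivals are Poisson in each state and `nu_bar` reduces to the mean rate \(\sum _i \pi _i\lambda _i\), consistent with \(\lambda ^n_i = \nu ^n_i\) in the MMPP case.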

3 Application to infinite-server queues

In this section we apply the FCLT for the MAAP, Theorem 2.1, to \(G/GI/\infty \) queues with Markov modulated arrival and service processes. It is shown in [13] that an FCLT for the number of customers/jobs in a \(G/GI/\infty \) queue holds, provided that the arrival process satisfies an FCLT and the service times are i.i.d. with a general distribution. Our FCLT below extends the existing results in [1] and [4] by allowing a more general arrival process: in each state of the underlying Markov process, the arrival process can be a general stationary point process, including a renewal process. It also establishes the joint convergence of the arrival, queue length, and departure processes (rather than of the queue length alone). The limiting queue length process is a continuous Gaussian process and possesses a stochastic decomposition property. Our results also generalize those of [13] to \(G/G/\infty \) queues in a Markov random environment.

Consider a sequence of \(G/G/\infty \) queues modulated by a Markov process \(X^n\), behaving as described in Sect. 2. The arrival process \(A^n\) is an MAAP with \(\tau ^n_k\) denoting the arrival time of job k, \(k\ge 1\). The service times \(\{\eta _{k,i}:k\ge 1\}\) are i.i.d. with a general distribution \(F_i\) (independent of n) when the underlying Markov process \(X^n\) is in state i upon the customer’s arrival. Namely, we assume that the service time distribution of a customer is determined at the epoch of the arrival time, according to the state of the underlying Markov process \(X^n\). We also assume that conditional on the modulating Markov process \(X^n\), the arrival and service processes are independent, and that the system starts empty. Let \(F^c_i := 1- F_i\), \(i=1,\ldots ,I\). Let \(Q^n= \{Q^n(t): t\ge 0\}\) be the queue length process describing the evolution of the number of customers in the system. Let \(D^n = \{D^n(t):t\ge 0\}\) be the departure process counting the number of completed jobs. We have the following balance equation:

\[ Q^n(t) = A^n(t) - D^n(t), \quad t\ge 0. \]

Define the diffusion-scaled processes \(\hat{Q}^n= \{\hat{Q}^n(t): t\ge 0\}\) and \(\hat{D}^n=\{\hat{D}^n(t):t\ge 0\}\) by

where

Theorem 3.1

For the sequence of \(G/GI/\infty \) models with Markov modulated arrival and service processes described above,

where \(\hat{A}\) is the arrival limit defined in Theorem 2.1, the process \(\hat{D}=\{\hat{D}(t):t\ge 0\}\) is defined by \(\hat{D}(t):=\hat{A}(t) - \hat{Q}(t),t\ge 0\), and

The limit process \(\hat{Q}_1 = \{\hat{Q}_1(t): t\ge 0\}\) is a continuous Gaussian process, defined by

where W is a standard Brownian motion and \(\sigma ^2(\alpha )\) is defined in (2.13). The limit process \(\hat{Q}_2 = \{\hat{Q}_2(t): t\ge 0\}\) is a continuous Gaussian process defined by

where the processes \(\hat{K}_i = \{\hat{K}_i(s,x): s,x\ge 0\},i=1, \ldots ,I\), are independent Kiefer processes with mean 0 and covariance function

for each \(i=1, \ldots ,I\). The processes W and \(\hat{K}_i,i=1, \ldots ,I\), are independent, and thus so are the processes \(\hat{Q}_1\) and \(\hat{Q}_2\).

Here the integrals in (3.3) are defined in the mean-square sense following [13]; see the precise definition in (5.35)–(5.36).

Remark 3.1

We remark that there is a stochastic decomposition property in the limit process, as shown in the independence of \(\hat{Q}_1\) and \(\hat{Q}_2\). Note that the corresponding prelimit processes are evidently dependent because of the modulating Markov process. The limit process \(\hat{Q}_1\) captures the variabilities resulting from the arrival process, as well as those resulting from the modulating Markov process. The limit process \(\hat{Q}_2\) captures the variabilities from the service process, while, perhaps surprisingly, it is not affected by the variabilities of the modulating Markov process other than the steady-state distribution \(\pi \). This is also shown in the following characterization of the limit processes.

Corollary 3.1

Under the assumptions of Theorem 3.1, the limit process \(\hat{Q}\) is Gaussian, with mean 0 and covariance function

for \(t, u\ge 0\), where \(\bar{\beta }\) and \(\sigma ^2(\alpha )\) are defined in (2.5) and (2.13), respectively. Its stationary distribution has variance

with \(m_{s,i}\) being the mean service time associated with \(F_i\). In addition, the limit process \(\hat{D}\) is Gaussian, with mean 0 and covariance function

for \(t, u\ge 0\), and \( \lim _{t\rightarrow \infty } t^{-1}Var(\hat{D}(t)) = \sigma ^2(\alpha )\).

Remark 3.2

When \(F_i,i=1,\ldots ,I\), are identical, our results establish an FCLT for \(G/GI/\infty \) queues with an MAAP and i.i.d. service times. Moreover, when the arrival process is an MMPP and the service times are exponential with rate \(\mu \) (independent of the modulating Markov process), our results reduce to those in [1] for \(M/M/\infty \) queues.
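The bookkeeping behind the arrival, queue length, and departure processes of this section can be sketched on a single sample path. The function below is a hypothetical helper, not part of the paper: it takes arrival epochs and the service time attached to each job at arrival, for a system that starts empty with infinitely many servers, and returns the three counts at time t.

```python
def infinite_server_counts(arrivals, services, t):
    """Return (A(t), Q(t), D(t)) for an infinite-server queue that starts empty.

    arrivals[k] is the arrival epoch of job k and services[k] its service time;
    with infinitely many servers, job k occupies a server on
    [arrivals[k], arrivals[k] + services[k]).
    """
    arrived = sum(1 for a in arrivals if a <= t)
    in_system = sum(1 for a, s in zip(arrivals, services) if a <= t < a + s)
    departed = sum(1 for a, s in zip(arrivals, services) if a + s <= t)
    return arrived, in_system, departed


# Hypothetical sample path with three jobs.
arrivals = [0.5, 1.0, 2.0]
services = [1.0, 3.0, 0.5]
A_t, Q_t, D_t = infinite_server_counts(arrivals, services, 2.2)
```

Every job that has arrived by time t is either still in service or has departed, so the counts satisfy \(A(t) = Q(t) + D(t)\) on every path, matching the relation \(\hat{D}(t)=\hat{A}(t) - \hat{Q}(t)\) in the limit.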

4 Application to fork–join networks

In this section we apply the FCLT for the MAAP to a many-server fork–join network with the non-exchangeable synchronization (NES) constraint, where both the arrival process and the joint service time distribution of the parallel tasks are modulated by a Markov process.

Consider a sequence of many-server fork–join networks with NES indexed by n and let \(n\rightarrow \infty \). We assume that the systems are operating in the QD regime, which is asymptotically equivalent to systems with infinite-server service stations. There is a single class of customers. Let the arrival process \(A^n\) be an MAAP as described in Sect. 2. Let \(\pmb \eta ^{\ell ,i} = (\eta ^{\ell ,i}_1, \ldots ,\eta ^{\ell ,i}_K)\) be the service times that customer \(\ell \) brings in for the K parallel tasks when the underlying Markov process \(X^n\) is in state i at the epoch of arrival. Assume that the service times \(\{\pmb \eta ^{\ell ,i}: \ell \ge 1\}\) are i.i.d. with a continuous joint distribution function \(F^{(i)}\) and marginals \(F^{(i)}_{k}\), for \(k=1, \ldots ,K\) and \(i=1, \ldots ,I\). Let \(F^{(i)}_{j,k}\) be the joint distribution of the service times of parallel tasks j, k for \(j, k=1, \ldots ,K\) and \(i=1, \ldots ,I\). Let \(F^{(i)}_\mathrm{m}\) be the distribution of the maximum of the service times \(\eta ^{\ell ,i}_1, \ldots ,\eta ^{\ell ,i}_K\), i.e., \(F^{(i)}_\mathrm{m}(x) = P(\eta ^{1,i}_j \le x,\ \forall j)\) for \(x\ge 0\). Denote \(G^{(i)}_k := 1- F^{(i)}_k\) for \(k=1, \ldots ,K\), and \(G^{(i)}_\mathrm{m} := 1 - F^{(i)}_\mathrm{m}\), for \(i=1, \ldots ,I\).

Let \({\varvec{Q}}^n=(Q^n_1, \ldots ,Q^n_K)\) be the numbers of tasks in service at service stations \(k=1, \ldots ,K\). Let \({\varvec{Y}}^n=(Y^n_1, \ldots , Y^n_K)\) be the numbers of tasks that have completed service and are waiting for synchronization in the buffers associated with service stations \(k=1, \ldots ,K\). Let \(S^n=\{S^n(t):t\ge 0\}\) be the process counting the number of synchronized jobs. Define the diffusion-scaled processes \(\hat{{\varvec{Q}}}^n = (\hat{Q}^n_1, \ldots ,\hat{Q}^n_K)\), \(\hat{{\varvec{Y}}}^n = (\hat{Y}^n_1, \ldots , \hat{Y}^n_K)\), and \(\hat{S}^n\) by

and

where

and

Theorem 4.1

For the fork–join networks with NES and Markov modulated arrival and service processes described above, \((\hat{A}^n,\hat{{\varvec{Q}}}^n, \hat{{\varvec{Y}}}^n, \hat{S}^n) \Rightarrow (\hat{A},\hat{{\varvec{Q}}}, \hat{{\varvec{Y}}},\hat{S})\) in \({\mathbb D}^{2K+2}\) as \(n\rightarrow \infty \), where \(\hat{A}\) is the arrival limit defined in Theorem 2.1, \(\hat{{\varvec{Q}}} = (\hat{Q}_1, \ldots ,\hat{Q}_K),\hat{{\varvec{Y}}} = (\hat{Y}_1, \ldots ,\hat{Y}_K)\), and \(\hat{S}\) are defined as follows:

The limit processes \(\hat{Q}_{k,1} = \{\hat{Q}_{k,1}(t): t \ge 0\},\hat{Y}_{k,1} = \{\hat{Y}_{k,1}(t): t \ge 0\}\), and \(\hat{S}_1=\{\hat{S}_1(t):t\ge 0\}\) are continuous Gaussian processes defined by

where W is a standard Brownian motion and \(\sigma ^2(\alpha )\) is the variance coefficient defined in Theorem 2.1. The limit processes \(\hat{Q}_{k,2} = \{\hat{Q}_{k,2}(t): t \ge 0\}\), \(\hat{Y}_{k,2} = \{\hat{Y}_{k,2}(t): t \ge 0\}\), and \(\hat{S}_2=\{\hat{S}_2(t):t\ge 0\}\) are continuous Gaussian processes defined by

where \(\hat{{\varvec{K}}}_i(s, {\mathbf x})\), \(i=1, \ldots ,I\), are independent multiparameter Kiefer processes (Gaussian random fields) with mean 0 and covariance \(Cov(\hat{{\varvec{K}}}_i(s, {\mathbf x}), \hat{{\varvec{K}}}_i(t, {\mathbf y})) = (s \wedge t) (F^{(i)}({\mathbf x}\wedge {\mathbf y}) - F^{(i)}({\mathbf x}) F^{(i)}({\mathbf y}))\) for \(s, t \ge 0\) and \({\mathbf x}, {\mathbf y}\in {\mathbb R}^{K}_+\). The integrals in \(\hat{Q}_{k,2}(t)\), \(\hat{Y}_{k,2}(t)\), and \(\hat{S}_2(t)\) are defined in the mean-square sense. The Brownian motion W is independent of \(\hat{{\varvec{K}}}_i\), \(i=1, \ldots ,I\), and thus \(\hat{Q}_{k,1}\) and \(\hat{Q}_{j,2}\) are independent, and so are \(\hat{Y}_{k,1}\) and \(\hat{Y}_{j,2}\), for each \(k, j=1, \ldots ,K\). \(\hat{S}_1\) and \(\hat{S}_2\) are also independent.
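The Kiefer covariance \((s \wedge t) (F^{(i)}({\mathbf x}\wedge {\mathbf y}) - F^{(i)}({\mathbf x}) F^{(i)}({\mathbf y}))\) is straightforward to evaluate once a joint distribution function is fixed. The sketch below does so for one modulating state, with \({\mathbf x}\wedge {\mathbf y}\) taken componentwise. As a simplifying assumption made only for this example, the K task service times are independent exponentials, whereas the theorem allows correlated service vectors; the function names are hypothetical.

```python
import math


def joint_cdf_independent_exp(rates):
    """Joint CDF of independent exponential service times (illustrative only)."""
    def cdf(x):
        return math.prod(1 - math.exp(-r * xi) for r, xi in zip(rates, x))
    return cdf


def kiefer_cov(cdf, s, x, t, y):
    """Cov(K(s, x), K(t, y)) = min(s, t) * (F(x ^ y) - F(x) F(y)),
    where x ^ y is the componentwise minimum."""
    xy_min = [min(a, b) for a, b in zip(x, y)]
    return min(s, t) * (cdf(xy_min) - cdf(x) * cdf(y))


cdf = joint_cdf_independent_exp([1.0, 2.0])   # K = 2 tasks, hypothetical rates
x, y = [0.5, 1.0], [1.5, 0.25]
cov_xy = kiefer_cov(cdf, 1.0, x, 2.0, y)
var_x = kiefer_cov(cdf, 2.0, x, 2.0, x)       # equals 2 * F(x) * (1 - F(x))
```

At coinciding arguments the covariance reduces to \(s\,F({\mathbf x})(1-F({\mathbf x}))\), the variance of a Brownian bridge evaluated at \(F({\mathbf x})\) and run for time s.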

Remark 4.1

We remark that there is also a stochastic decomposition property (analogous to the one we have seen for the infinite-server queueing model). The variabilities in the arrival process and the Markov process are captured in \(\hat{Q}_{k,1},\hat{Y}_{k,1}\), and \(\hat{S}_1\) for each k, while the variabilities in the service process are captured in \(\hat{Q}_{k,2},\hat{Y}_{k,2}\), and \(\hat{S}_2\).
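The pathwise meaning of the processes \(Q^n_k\), \(Y^n_k\), and \(S^n\) under the NES constraint can be sketched as follows. The helper is hypothetical and assumes infinitely many servers at each station (the QD regime is asymptotically equivalent to this), so each task starts service on arrival; a job is synchronized once its slowest task has finished.

```python
def fork_join_counts(arrivals, service_vectors, t):
    """Return (Q, Y, S) at time t for a fork-join network with NES.

    Q[k]: tasks in service at station k,
    Y[k]: completed tasks waiting in buffer k for their sibling tasks,
    S   : jobs whose tasks have all completed (synchronized jobs).
    """
    K = len(service_vectors[0])
    in_service, waiting, synchronized = [0] * K, [0] * K, 0
    for a, eta in zip(arrivals, service_vectors):
        if a > t:
            continue                      # job has not arrived yet
        if a + max(eta) <= t:
            synchronized += 1             # all K tasks of this job are done
            continue
        for k, e in enumerate(eta):
            if t < a + e:
                in_service[k] += 1        # task k still being served
            else:
                waiting[k] += 1           # task k done, job not yet synchronized
    return in_service, waiting, synchronized


# Hypothetical sample path: two jobs, K = 2 parallel tasks each.
arrivals = [0.0, 1.0]
service_vectors = [(1.0, 3.0), (0.5, 0.25)]
Q_t, Y_t, S_t = fork_join_counts(arrivals, service_vectors, 2.0)
```

By construction, \(Q_k(t) + Y_k(t) + S(t)\) equals the number of arrivals by time t for every station k, which is a convenient sanity check on any simulation of the network.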

Corollary 4.1

Under the assumptions of Theorem 4.1, the limit process \((\hat{{\varvec{Q}}}, \hat{{\varvec{Y}}})\) is a multidimensional Gaussian process with mean zero and covariance functions, for \(j,k =1, \ldots ,K,t,t'\ge 0\),

where

with \(\sigma ^2(\alpha )\) being defined in (2.13), and, for \(j=1, \ldots ,K\) and \(x,y\ge 0\), \(F_{j,\mathrm m}(x,y):=F({\mathbf z})\) for \({\mathbf z}\in {\mathbb R}^K_{+}\) satisfying \(z_j = x\wedge y\) and \(z_{j'} = y\) for \(j'\ne j\).

In addition, the limit process \(\hat{S}\) is a continuous Gaussian process with mean zero and covariance function, for \(t,t'\ge 0\),

where

with \(\sigma ^2(\alpha )\) being defined in (2.13), and \(\lim _{t\rightarrow \infty } t^{-1} Var(\hat{S}(t)) = \sigma ^2(\alpha )\).

5 Proofs

5.1 Proof of Theorem 2.1

In this section we prove Theorem 2.1. First of all, we write the process \(\hat{A}^n\) as

where

and

We now focus on proving the convergence of \(\hat{A}^n_1\). Without loss of generality, we pick state 1 as the reference state. Let \(\tilde{T}^n_0\) be the first time that \(X^n(t)\) reaches state 1 from the initial state, and \(T_k^n\) be the \((k+1)^\mathrm{th}\) jump time of \(X^n(t)\) reaching state 1 (i.e., the \(k^\mathrm{th}\) excursion time). Define a counting process associated with the sequence \(\{T^n_k: k=1, 2, \ldots \}\):

Then we can decompose the process \(\hat{A}^n_1\) into three processes:

where

We will prove the convergence of the three processes \(\hat{A}^n_{1,1},\hat{A}^n_{1,2}\), and \(\hat{A}^n_{1,3}\) in the following lemmas.

Before doing so, we present some properties of the process \(N^n\) and the sequence \(\{T^n_k: k=0, 1, 2, \ldots \}\). Let

for \(k=1,2, \ldots \). Then \(\{\breve{T}^n_k: k=1,2, \ldots \}\) forms an i.i.d. sequence of random variables. Let \(\gamma ^n:= E[\breve{T}^n_1]\). It is evident that \(\gamma ^n <\infty \) and there exists \(\gamma >0\) such that \(\gamma ^n = n^{-\alpha } \gamma \), since \(X^n\) has transition rate matrix \(Q^n = n^{\alpha } Q\). Thus, it follows from the FLLN for delayed renewal processes that

where \(e(t)\equiv t\) for \(t\ge 0\).
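The scaling \(\gamma ^n = n^{-\alpha }\gamma \) can be checked numerically. The sketch below assumes that \(\breve{T}^n_k\) is the time between successive entries of \(X^n\) into the reference state, and uses the standard fact that for an irreducible finite-state chain this mean recurrence time equals \(1/(\pi _1 q_1)\) with \(q_1 = -q_{11}\). The function name and the two-state rates are hypothetical.

```python
from fractions import Fraction as F


def mean_cycle_length(Q, pi, ref=0):
    """Mean time between successive entries into state `ref`: 1 / (pi_ref * q_ref)."""
    return 1 / (pi[ref] * -Q[ref][ref])


# Hypothetical two-state chain; pi is its stationary distribution.
Q = [[F(-2), F(2)], [F(3), F(-3)]]
pi = [F(3, 5), F(2, 5)]
gamma = mean_cycle_length(Q, pi)          # 1/2 + 1/3: one sojourn in each state

speed = 16                                 # stands for n**alpha, e.g. n = 256, alpha = 1/2
Q_n = [[speed * q for q in row] for row in Q]
gamma_n = mean_cycle_length(Q_n, pi)       # pi is unchanged by the speed-up
```

Since the speed-up multiplies every rate by the same factor, \(\pi \) is unchanged and only \(q_1\) is rescaled, which is exactly the relation \(\gamma ^n = n^{-\alpha }\gamma \) used in the proof.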

Lemma 5.1

For any \(\epsilon >0\) and fixed \(T>0\),

Proof

It suffices to show that

By (5.5), we obtain the following upper bound:

By Assumption 1, we have that \(\frac{1}{n} \max _{i \in {\mathcal {S}}} \lambda ^n_i \rightarrow \max _{i \in {\mathcal {S}}} \lambda _i < \infty \) as \(n\rightarrow \infty \). Since \(Q^n= n^{\alpha } Q\), it is evident that \(E[\tilde{T}^n_0] = O(1/n^{\alpha })\) (see, for example, [21, pp. 256–257]). Thus, it follows that

and we have proved (5.10). \(\square \)

Lemma 5.2

For any \(\epsilon >0\) and fixed \(T>0\),

Proof

For each \(k=1,2, 3, \ldots \), define

To prove (5.12), it suffices to prove that

By (5.13) and conditioning, we obtain that

where the convergence follows from Assumption 1 and \(E[\breve{T}^n_1] = n^{-\alpha } \gamma \). Thus, the lemma is proved. \(\square \)

Lemma 5.3

in \({\mathbb D}\) as \(n \rightarrow \infty \), where the limit process \(\hat{A}_1\) is a driftless Brownian motion with variance coefficient \(\bar{\nu }\) defined in (2.14).

To prove this lemma, we need the following lemma, whose proof follows from a direct generalization of Theorem 2.7 in [22].

Lemma 5.4

Let \(\{\xi _{n,i}: i \ge 1\}\) be an i.i.d. sequence for each n and \(U_n(t):= \sum _{i=1}^{\lfloor n^{\alpha } t \rfloor } \xi _{n,i} \) for each \(t\ge 0\) and any \(\alpha >0\). Then \(U_n \Rightarrow U\) in \({\mathbb D}\) as \(n \rightarrow \infty \), where U is a stochastic process with stationary independent increments, if and only if \(U_n(t) \Rightarrow U(t)\) in \({\mathbb R}\) for each t as \(n\rightarrow \infty \).

Proof of Lemma 5.3

Define a process \(\breve{A}^n_{1,2} = \{\breve{A}^n_{1,2}(t): t\ge 0\}\) by

where \(\breve{A}^n_k\) is defined in (5.13). We first show that, for each \(t\ge 0\),

in \({\mathbb R}\) as \(n\rightarrow \infty \), where \(\breve{A}(t)\) has a normal distribution with mean 0 and variance \(\bar{\nu } t\), with \(\bar{\nu }\) defined in (2.14). This follows from applying the CLT for doubly indexed sequences by noting that the summation terms in (5.16) are i.i.d. for each given n. It suffices to show that, as \(n\rightarrow \infty \),

By conditioning, we obtain

Under the assumption on the underlying Markov process \(X^n\), we obtain that for each \(i=1, \ldots ,I\) and \(t\ge 0\),

Since \(E[\breve{T}^n_1] = n^{-\alpha } \gamma \), we obtain that as \(n\rightarrow \infty \),

Thus, we have proved (5.17). By Lemma 5.4, we obtain that

in \({\mathbb D}\) as \(n\rightarrow \infty \). Now by (5.8), Theorem 11.4.5 of [25], and the continuous mapping theorem, we can conclude the convergence in (5.15). \(\square \)

Completing the Proof of Theorem 2.1

Recall the representation of the process \(\hat{A}^n\) in (5.1)–(5.3) and (5.4)–(5.7). By Lemmas 5.1 and 5.2, we obtain that \(\hat{A}^n_{1,1} \Rightarrow 0\) and \(\hat{A}^n_{1,3} \Rightarrow 0\) as \(n \rightarrow \infty \), respectively. By Lemma 5.3, we obtain that (i) \(\hat{A}^n_{1,2} \Rightarrow \hat{A}_1\) in \({\mathbb D}\) as \(n\rightarrow \infty \), when \(\delta = 1/2\) and \(\alpha \ge 1\), where \(\hat{A}_1\) is a driftless Brownian motion with variance parameter \(\bar{\nu }\), and (ii) \(\hat{A}^n_{1,2} \Rightarrow 0\) in \({\mathbb D}\) as \(n\rightarrow \infty \), when \(\delta = 1- \alpha /2\) and \(0<\alpha <1\).

By Proposition 3.2 in [1], we obtain that

in \({\mathbb D}\) as \(n\rightarrow \infty \), where the limit process \(\hat{A}_2 = \{\hat{A}_2(t): t\ge 0\}\) is a Brownian motion with mean 0 and variance coefficient \(\bar{\beta }\). Here, the Brownian motion \(\hat{A}_1\) is independent of \(\hat{A}_2\). Thus the proof is complete. \(\square \)

5.2 Proofs for applications to infinite-server queues

Proof of Theorem 3.1

We first note that the process \(Q^n\) can be written as

From this, we obtain the following representation for the diffusion-scaled process \(\hat{Q}^n\): \(\hat{Q}^n(t) = \hat{Q}^n_1(t) + \hat{Q}^n_2(t)\) for \( t\ge 0,\) where

and

We next prove the convergences of \(\hat{Q}^n_1\) and \(\hat{Q}^n_2\).

To prove the convergence of \(\hat{Q}^n_1\), we show that

Note that

where \(\hat{A}\) is the limit process of the arrivals \(\hat{A}^n\) as given in Theorem 2.1. The convergence to zero of the first term on the right-hand side of (5.28) follows from the convergence \(\hat{A}^n \Rightarrow \hat{A}\) in Theorem 2.1. To prove the convergence of the second term in (5.28), we first observe that the process \(\hat{Q}^n_{1,2} = \{\hat{Q}^n_{1,2}(t): t\ge 0 \}\) defined by

is a Markov process. It is easy to check that the generators of the processes \(\hat{Q}^n_{1,2}\) converge to zero. Thus, by [10, Ch. IV, Thm. 2.5], we obtain the convergence of the second term in (5.28). To show the joint convergence

by endowing the product space with the maximum metric we see that the convergence of \(\hat{A}^n\) by assumption and \(\hat{Q}^n_1\) in (5.27), as well as the continuity of their limits \(\hat{A}\) and \(\hat{Q}_1\), imply that (5.29) holds.

Next we will show the convergence of \(\hat{Q}^n_2\). Define the sequential empirical processes \(\hat{K}^n_i =\{\hat{K}^n_i(t,x): t,x\ge 0\}\) by

for each \(i=1, \ldots ,I\). By [13, Lemma 3.1] and the independence of \(\hat{K}^n_i,i=1, \ldots ,I\), we know

where \(\hat{K}_i,i=1, \ldots ,I\), are independent Kiefer processes defined in Theorem 3.1. We let \(A^n_i=\{A^n_i(t): t\ge 0\}\) be the process counting the number of arrivals whose service type is i, i.e.,

where \(k_0:=0\) and \(\tau ^n_0 :=0\), for \(i=1, \ldots ,I\). Define the fluid-scaled processes \(\bar{A}^n_i:= n^{-1} A^n_i\) for each \(i=1, \ldots ,I\). Thus, Theorem 2.1 directly implies the functional weak law of large numbers (FWLLN) for \(A^n_i\), i.e.,

We can rewrite (5.26) as

where the processes \(\hat{Q}^n_{2,i}=\{\hat{Q}^n_{2,i}(t): t\ge 0\}\) are defined by

Tightness of the processes \(\{\hat{Q}^n_{2,i}: n\ge 1\}\) in \({\mathbb D}\) follows directly from the tightness of the corresponding processes for the \(G/GI/\infty \) queues in [13], for \(i=1, \ldots ,I\). Thus, the processes \(\{\hat{Q}^n_2:n\ge 1\}\) are tight.
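To make the sequential empirical processes used in this part of the proof more concrete, the following Python sketch evaluates a process of the standard form \(n^{-1/2}\sum _{k=1}^{\lfloor nt \rfloor } ({\mathbf 1}(\eta _k\le x) - F(x))\) on a finite sample. This form is the one commonly used in the \(G/GI/\infty \) literature and is assumed here for illustration; the precise definition of \(\hat{K}^n_i\) is the one given in the paper. The function name and its arguments are hypothetical.

```python
import math


def sequential_empirical(samples, cdf, t, x):
    """Evaluate n^{-1/2} * sum_{k <= floor(n t)} (1(samples[k] <= x) - F(x)).

    Assumed standard form; n is taken to be len(samples) and t should lie in [0, 1].
    `cdf` is the service-time distribution function F.
    """
    n = len(samples)
    m = math.floor(n * t)                      # number of terms used up to "time" t
    centered = ((1.0 if s <= x else 0.0) - cdf(x) for s in samples[:m])
    return sum(centered) / math.sqrt(n)


if __name__ == "__main__":
    uniform_cdf = lambda x: min(max(x, 0.0), 1.0)   # F for Uniform(0, 1) samples
    data = [0.1, 0.6, 0.3, 0.9]
    print(sequential_empirical(data, uniform_cdf, 0.25, 0.5))
```

For i.i.d. samples with distribution function F, the variance of this quantity is \((\lfloor nt \rfloor /n) F(x)(1-F(x))\), which matches the variance \(tF(x)(1-F(x))\) of a Kiefer process evaluated at \((t,F(x))\) as \(n\rightarrow \infty \).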

We now focus on proving the joint convergence of finite-dimensional distributions of \(\hat{Q}^n_1\) and \(\hat{Q}^n_2\). We only need to show the case \(\delta = 1/2\), since otherwise the limit \(\hat{Q}_2\) vanishes. Define the process \(\hat{Q}_{2,i}=\{\hat{Q}_{2,i}(t): t\ge 0\}\) by

for \(t\ge 0\) and \(i=1, \ldots ,I\). The integral \(\hat{Q}_{2,i}\) in (5.35) is understood as a mean-square integral. Specifically, we define

where l.i.m. denotes the mean-square limit, that is,

and

with \({\mathbf 1}_{l,t}(\cdot ,\cdot )\) defined by

with the points \(0=s^l_1<s^l_2<\cdots <s^l_l = t\) being chosen so that \(\max _{1\le j\le l}|s^l_j - s^l_{j-1}| \rightarrow 0\) as \(l\rightarrow \infty \), and for \(a_1\le a_2,b_1\le b_2\), and \(i=1, \ldots ,I\),

We define the additional processes \(\hat{Q}^n_{2,i,l}=\{\hat{Q}^n_{2,i,l}(t): t\ge 0\}\) and \(\breve{Q}^n_{2,i,l}=\{\breve{Q}^n_{2,i,l}(t): t\ge 0\}\) by

where \(\Delta _{\hat{K}^n_i}\) is defined similarly to \(\Delta _{\hat{K}_i}\) in (5.37) with \(\hat{K}_i\) replaced by \(\hat{K}^n_i\). By the weak convergence of \(\hat{K}^n_i\) to \(\hat{K}_i\) in \({\mathbb D}_{\mathbb D}\) as \(n\rightarrow \infty \), we easily obtain that, for \(i=1, \ldots ,I\),

where \(\xrightarrow {f.d.d.}\) stands for convergence in finite-dimensional distributions. By noting that \(\breve{Q}^n_{2,i,l},i=1, \ldots ,I\), and \(A^n\) are mutually independent, together with (5.29), we have

In order to establish the joint convergence of \(\hat{A}^n,\hat{Q}^n_1\), and \(\hat{Q}^n_{2,i}\) in finite-dimensional distributions, \(i=1, \ldots ,I\), i.e.,

it is sufficient to show the following: for any \(T>0\) and \(\epsilon >0\),

and, for \(t>0\) and \(\epsilon >0\),

We can easily obtain (5.39) from (5.30) and (5.32), as well as the continuity of \(\hat{K}_i,i=1, \ldots ,I\). Following the proof of [13, Lemma 5.3], we immediately see that (5.40) also holds. Therefore, we have shown (5.38). By the continuous mapping theorem, together with (5.33) and \(\delta = 1/2\), we further have

Since \(\{\hat{Q}^n_1:n\ge 1\}\) and \(\{\hat{Q}^n_2:n\ge 1\}\) are tight as previously shown, we have established the weak convergence of \(\hat{Q}^n\) jointly with \(\hat{A}^n\) when \(\delta = 1/2\) and \(\alpha \ge 1\). Furthermore, by (3.1) and (3.2), together with the continuous mapping theorem, we obtain the joint weak convergence of \((\hat{A}^n, \hat{Q}^n, \hat{D}^n)\). The case \(\delta =1-\alpha /2\) and \(0<\alpha <1\) can be obtained analogously by noting that \(\hat{Q}^n_2\) converges to zero as \(n\rightarrow \infty \). Therefore, the proof of Theorem 3.1 is complete. \(\square \)
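The counting identities behind this argument can be illustrated on a single sample path. The Python sketch below assumes the standard infinite-server bookkeeping for a system that starts empty: a customer arriving at time \(\tau _k\) with service time \(\eta _k\) is in service at time t if \(\tau _k \le t < \tau _k + \eta _k\), and arrivals up to t split into customers in service and departures. The empty initial state, the function name and its arguments are assumptions for illustration only.

```python
def infinite_server_counts(arrival_times, service_times, t):
    """Return (A(t), Q(t), D(t)) for an infinite-server system that starts empty.

    A(t): arrivals in [0, t]; Q(t): customers still in service at time t;
    D(t): departures in [0, t]. By construction A(t) = Q(t) + D(t).
    """
    # Keep only customers that have arrived by time t.
    arrived = [(a, s) for a, s in zip(arrival_times, service_times) if a <= t]
    # A customer is in service at t if its departure epoch a + s lies strictly after t.
    in_service = sum(1 for a, s in arrived if a + s > t)
    departed = len(arrived) - in_service
    return len(arrived), in_service, departed


if __name__ == "__main__":
    print(infinite_server_counts([0.5, 1.0, 2.0], [1.0, 3.0, 0.5], 2.2))
```

In this example the first customer has left by time 2.2 while the other two are still in service, so the triple is (3, 2, 1).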

Proof of Corollary 3.1

The covariance functions of \(\hat{Q}\) can be obtained similarly to [13, Lemma 5.1], in combination with the Itô isometry and the fact that the Kiefer processes \(\hat{K}_i\), \(i=1, \ldots ,I\), and the arrival limit \(\hat{A}\) are mutually independent. The covariance functions of \(\hat{D}\) can be derived similarly. We omit the details here for brevity. \(\square \)

5.3 Proofs for applications to fork–join networks

Proof Sketch of Theorem 4.1

We first note that the processes \(Q^n_k,Y^n_k\), and \(S^n\) can be represented as

Then we can obtain the representations for the diffusion-scaled processes \(\hat{Q}^n_k,\hat{Y}^n_k\), and \(\hat{S}^n\) as follows:

where the processes \(A^n_i,i=1, \ldots ,I\), are defined in (5.31), and the multiparameter sequential empirical processes \(\hat{{\varvec{K}}}^n_i=\{\hat{{\varvec{K}}}^n_i(t,{\mathbf x}): t\ge 0, {\mathbf x}\in {\mathbb R}^K_{+}\}\) are defined by

The weak convergence of the first terms in (5.41)–(5.43) follows analogously from the proof for (5.25) in Theorem 3.1. Note from [14, Thm. 3.1] and the independence of \(\hat{{\varvec{K}}}^n_i,i=1, \ldots ,I\), that

where \(\hat{{\varvec{K}}}_i,i=1, \ldots ,I\), are independent generalized Kiefer processes with covariance functions given in Theorem 4.1. By an argument similar to the proof of Theorem 3.1 and [14, Sect. 6.2], we can also show the weak convergence of the second terms in (5.41), (5.42) and (5.43), as well as the joint convergence to \((\hat{A},\hat{{\varvec{Q}}},\hat{{\varvec{Y}}},\hat{S})\). The details are omitted for brevity. \(\square \)

Proof of Corollary 4.1

The covariance functions of \(\hat{Q}_j(t)\) and \(\hat{Y}_k(t')\), and of \(\hat{S}(t)\) and \(\hat{S}(t')\), for \(j,k=1, \ldots ,K\) and \(t,t'\ge 0\), can be derived analogously to [14, Thm. 3.4], using the fact that the generalized Kiefer processes \({\hat{\varvec{K}}}_i\), \(i=1, \ldots ,I\), are independent. We omit the details for brevity. \(\square \)

6 Concluding remarks

We have studied a large class of MAAPs that can capture more burstiness and variability than MMPPs. Under mild conditions on the parameters, we have established an FCLT for the MAAPs. The FCLT is applied to non-Markovian infinite-server systems and fork–join networks with NES. It can also be applied similarly to obtain two-parameter heavy-traffic limits for infinite-server systems as in [20]. The FCLT can potentially be applied to study large-scale service systems in Markov random environments, for example, queueing networks in which all stations are modulated by the same Markov process. The results can also be used to study resource allocation and system design problems for such queueing and network models. It may also be interesting to study (sample-path) large deviation problems for queueing systems with MAAPs.

References

1. Anderson, D., Blom, J., Mandjes, M., Thorsdottir, H., de Turck, K.: A functional central limit theorem for a Markov-modulated infinite-server queue. Methodol. Comput. Appl. Probab. 18(1), 153–168 (2016)

2. Asmussen, S.: Applied Probability and Queues, 2nd edn. Springer, Berlin (2003)

3. Baykal-Gursoy, M., Xiao, W.: Stochastic decomposition in \(M/M/\infty \) queues with Markov modulated service rates. Queueing Syst. 48(1), 75–88 (2004)

4. Blom, J., Kella, O., Mandjes, M., Thorsdottir, H.: Markov-modulated infinite-server queues with general service times. Queueing Syst. 76(4), 403–424 (2014)

5. Blom, J., Mandjes, M., Thorsdottir, H.: Time-scaling limits for Markov-modulated infinite-server queues. Stoch. Models 29(1), 112–127 (2013)

6. Blom, J., de Turck, K., Mandjes, M.: Analysis of Markov-modulated infinite-server queues in the central-limit regime. Probab. Eng. Inf. Sci. 29(3), 433–459 (2015)

7. Blom, J., de Turck, K., Mandjes, M.: Functional central limit theorems for Markov-modulated infinite-server systems. Math. Methods Oper. Res. 83(3), 351–372 (2016)

8. Billingsley, P.: Convergence of Probability Measures. Wiley, New York (2009)

9. D’Auria, B.: Stochastic decomposition of the \(M/G/\infty \) queue in a random environment. Oper. Res. Lett. 35(6), 805–812 (2007)

10. Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wiley, New York (2009)

11. Falin, G.: The \(M/M/\infty \) queue in a random environment. Queueing Syst. 58, 65–76 (2008)

12. Keilson, J., Servi, L.: The matrix \(M/M/\infty \) system: retrial models and Markov modulated sources. Adv. Appl. Probab. 25, 453–471 (1993)

13. Krichagina, E.V., Puhalskii, A.A.: A heavy-traffic analysis of a closed queueing system with a \(GI/\infty \) service center. Queueing Syst. 25(1–4), 235–280 (1997)

14. Lu, H., Pang, G.: Gaussian limits for a fork-join network with non-exchangeable synchronization in heavy traffic. Math. Oper. Res. 41(2), 560–595 (2015a)

15. Lu, H., Pang, G.: Heavy-traffic limits for an infinite-server fork-join network with dependent and disruptive services. Submitted (2015b)

16. Lu, H., Pang, G.: Heavy-traffic limits for a fork-join network in the Halfin-Whitt regime. Submitted (2015c)

17. Nazarov, A., Baymeeva, G.: The \(M/G/\infty \) queue in a random environment. In: Dudin, A. et al. (eds.) ITMM 2014, CCIS 487, pp. 312–324 (2014)

18. Neuhaus, G.: On weak convergence of stochastic processes with multidimensional time parameter. Ann. Math. Stat. 42(4), 1285–1295 (1971)

19. O’Cinneide, C., Purdue, P.: The \(M/M/\infty \) queue in a random environment. J. Appl. Probab. 23(1), 175–184 (1986)

20. Pang, G., Whitt, W.: Two-parameter heavy-traffic limits for infinite-server queues. Queueing Syst. 65(4), 325–364 (2010)

21. Ross, S.M.: Stochastic Processes, 2nd edn. Wiley, New York (1996)

22. Skorohod, A.V.: Limit theorems for stochastic processes with independent increments. Theory Probab. Appl. 2, 138–171 (1957)

23. Steichen, J.L.: A functional central limit theorem for Markov additive processes with an application to the closed Lu-Kumar network. Stoch. Models 17(4), 459–489 (2001)

24. Straf, M.L.: Weak convergence of stochastic processes with several parameters. In: Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability 2, pp. 187–221 (1972)

25. Whitt, W.: Stochastic-Process Limits. An Introduction to Stochastic-Process Limits and Their Applications to Queues. Springer, Berlin (2002)

26. Whitt, W.: Stochastic-Process Limits. An Introduction to Stochastic-Process Limits and Their Applications to Queues, Online Supplement (2002)

Acknowledgments

Hongyuan Lu and Guodong Pang acknowledge the support from the NSF Grant CMMI-1538149. Michel Mandjes acknowledges the support from Gravitation project NETWORKS, Grant number 024.002.003, funded by the Netherlands Organization for Scientific Research (NWO).

Cite this article

Lu, H., Pang, G. & Mandjes, M. A functional central limit theorem for Markov additive arrival processes and its applications to queueing systems. Queueing Syst 84, 381–406 (2016). https://doi.org/10.1007/s11134-016-9496-8

DOI: https://doi.org/10.1007/s11134-016-9496-8

Keywords

- Markov additive arrival process

- Functional central limit theorem

- Infinite-server queues

- Fork–join networks with non-exchangeable synchronization

- Gaussian limits

- Stochastic decomposition