Abstract

This paper analyzes several aspects of the Markov-modulated infinite-server queue. In the system considered (i) particles arrive according to a Poisson process with rate \(\lambda _i\) when an external Markov process (“background process”) is in state \(i\), (ii) service times are drawn from a distribution with distribution function \(F_i(\cdot )\) when the state of the background process (as seen at arrival) is \(i\), (iii) there are infinitely many servers. We start by setting up explicit formulas for the mean and variance of the number of particles in the system at time \(t\ge 0\), given the system started empty. The special case of exponential service times is studied in detail, resulting in a recursive scheme to compute the moments of the number of particles at an exponentially distributed time, as well as their steady-state counterparts. Then we consider an asymptotic regime in which the arrival rates are sped up by a factor \(N\), and the transition times by a factor \(N^{1+\varepsilon }\) (for some \(\varepsilon >0\)). Under this scaling it turns out that the number of customers at time \(t\ge 0\) obeys a central limit theorem; the convergence of the finite-dimensional distributions is proven.


1 Introduction

Owing to its wide applicability and its attractive mathematical features, the infinite-server queue has been intensively studied. Such a system describes units of work, e.g., particles or customers, arriving at a resource, that stay present for some random duration that is independent of other customers (in that there is no waiting). In the special case that these customers arrive according to a Poisson process with rate \(\lambda \), and the sojourn times are i.i.d. random variables with finite mean \(1/\mu \)—a system commonly referred to as the M/G/\(\infty \) queue—it is known that the stationary number of particles in the system has a Poisson distribution with mean \(\lambda /\mu \). Also the transient behavior of such an M/G/\(\infty \) queue is well understood; e.g. [24, p. 355].

When relaxing the model assumptions mentioned above, several interesting variants arise. In one branch of the literature, for instance, attention has been paid to the case of renewal (rather than Poisson) arrivals [10, 11]. In the present paper, however, we consider a variant in which we introduce some sort of “burstiness” in the arrivals and service times, using the concept of Markov modulation. This means that both the arrival process and the service-time distributions are driven by an external Markov process (“background process”), in the following manner. Let \(X(t)\) denote an irreducible continuous-time Markov process defined on a finite state space \(\{1,\ldots ,d\}\). When \(X(t)=i\), then the (Poissonian) arrival rate at time \(t\) equals \(\lambda _i\), where \({\varvec{\lambda }}\equiv (\lambda _1,\ldots ,\lambda _d)\) is a vector with nonnegative entries. In addition, it is assumed that the time a particle remains in the system, the service time, has some general distribution with distribution function \(F_i(\cdot )\) that depends on the state of the background process as seen upon arrival by the particle.

The resulting model could be called a Markov-modulated M/G/\(\infty \) queue, or an infinite-server queue in a Markov-modulated environment. This type of system is relevant in a broad variety of application domains, ranging from telecommunication networks to biology. The rationale behind using this model in a communication networks setting is that the arrival rate and service times of customers may vary during the day, or on shorter timescales. In the biological context, one could think of mRNA strings being transcribed and degraded in a cell, where these transcriptions typically tend to occur in a clustered fashion; the proposed model captures the key characteristics of this mechanism well, as argued in [23].

A variety of results exist on Markov-modulated single- and many-server queues, whereas the literature on their infinite-server counterpart is, surprisingly, considerably scarcer. In the case of a single server, the key result is that the stationary distribution of the number of customers is of matrix-geometric form [17], so this system can be viewed as a “matrix generalization” of the normal M/M/1 queue where the stationary distribution is scalar-geometric. In [20] the stationary distribution for the case of infinitely many servers is considered; the results are in terms of the factorial moments of the number of customers. More particularly, it is shown that the corresponding distribution is not of matrix-Poisson type; in other words: this system is not the “matrix generalization” of the M/M/\(\infty \), which has a scalar-Poisson distribution. A somewhat more general model that includes retrials has been studied in [13].

The case of Markov-modulated renewal (rather than Poisson) arrivals, but exponential service times, is covered in [18]. Related results can be found in [16] as well, where special attention is paid to the autocorrelations in infinite-server systems of various types. Steady-state results for the infinite-server queue with modulated service rates have been derived in [2]. Falin [8] furthermore considers the simultaneous modulation of arrival and service rates and finds the mean number of customers in steady-state.

It should also be noted that introducing burstiness using a Markovian background process is by no means the only way to incorporate a nonhomogeneous arrival rate. Willmot and Drekic [25] apply bulk arrivals with a random bulk size, whereas Economou and Fakinos [7] study arrivals generated by a compound Poisson process, both to find the transient distribution of the number of customers in the system using a generating functions based approach.

D’Auria [5] studies the same model as we do in the present paper. Among several other results, he finds a recursion for the factorial moments of the stationary number of particles in the system. A key observation in his analysis is that the number of particles present has, in stationarity, a Poisson distribution with random parameter. Fralix and Adan [9] focus on the situation that the service times have specific phase-type distributions. In Hellings et al. [12] it was shown that if the transition times of the background process are sped up by a factor \(N\), then the arrival process tends (as \(N\rightarrow \infty \)) to a Poisson process; the queue under consideration then essentially behaves as an M/G/\(\infty \) system.

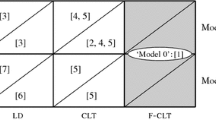

While the above results focus on Markov-modulated infinite-server queues in stationarity, there are considerably fewer results on the associated transient behavior. In [3], we studied both the transient and stationary behavior of a model similar to the one studied in the present paper, viz. the one with exponential service times and a Markovian background process with deterministic transition times. The main focus of [3] lies on specific time scalings. In the first scaling, just the background process’ transition times are sped up by a factor \(N\); then it turns out that the distribution of the resulting queueing system converges to that of an appropriate M/M/\(\infty \) queue (which has, in steady-state, a Poisson distribution). In the second scaling, the background process jumps at a faster rate than the arrival process: the arrival rates are scaled by a factor \(N\) and the transition times by a factor \(N^{1+\varepsilon }\) for some \(\varepsilon >0\). Under this scaling a central limit result was proven, for both the transient and stationary distribution.

The main contributions of our paper are the following. In the first place we develop in Sect. 2 expressions for the transient mean and variance for the number of particles in the system at time \(t\ge 0\). For exponential service times the resulting expressions simplify considerably. In Sect. 3 we exclusively consider the special case of exponential service times: we develop a differential equation that describes the moment generating function of the number of particles in the system, and show how this differential equation facilitates the computation of moments (at an exponentially distributed time epoch, as well as in steady-state). This section also includes a recursive scheme to compute the higher moments. Section 4 considers one of the scalings studied in [3]: the arrival rates \(\lambda _i\) are replaced by \(N\lambda _i\), while the transition times of the background Markov process are sped up by a factor \(N^{1+\varepsilon }\), for some \(\varepsilon >0\), where \(N\) grows large. The objective is to prove a central limit theorem for the number of particles in the system in a finite-dimensional setting, that is, at multiple points in time. The result is established by first setting up a system of differential equations for the number of particles in the system at multiple points in time in the non-scaled system, then applying the scaling, and then deriving (using Taylor approximations) a limiting differential equation (as \(N\rightarrow \infty \)) which eventually provides us with the claimed multivariate central limit theorem. Finally, Sect. 5 contains examples demonstrating analytically and numerically the results from Sects. 3 and 4.

2 General results

In full detail, the model can be described as follows. Consider an irreducible continuous-time Markov process \(X(t)\) on a finite state space \(\{1,\ldots ,d\}\), with \(d\in \mathbb{N }\). \(X(t)\), often referred to as the background process, has a transition rate matrix given by \(Q=\left( q_{ij}\right) _{i,j=1}^d\). The steady-state distribution of \(X(t)\) is given by \({\varvec{\pi }}\), being a \(d\)-dimensional vector with non-negative entries summing to 1, solving \({\varvec{\pi }}Q={\varvec{0}}\). Define \(q_i:=-q_{ii}=\sum _{j\ne i}q_{ij}\).

Now consider the embedded discrete-time Markov chain that corresponds to the jump epochs of \(X(t)\). It has a probability transition matrix \(P=\left( p_{ij}\right) _{i,j=1}^d\), with diagonal elements equalling 0 and \(p_{ij}:= q_{ij}/q_i.\) Let \(\hat{\varvec{\pi }}\) be the stationary probability vector at the jump epochs of \(X(t)\); it solves (after normalization to 1) the linear system \(\hat{\varvec{\pi }}D_Q^{-1}Q={\varvec{0}}\), with \(D_Q := \mathrm{diag}\{{\varvec{q}}\}\). The time spent by \(X(t)\) in state \(i\), denoted \(T_i\), is referred to as the transition time; \(T_i\) has an exponential distribution with mean \(1/q_i\). There is the following obvious relation between \({\varvec{\pi }}\) and \(\hat{\varvec{\pi }}\):

\[\pi _i=\frac{\hat{\pi }_i/q_i}{\sum _{j=1}^d\hat{\pi }_j/q_j},\qquad i=1,\ldots ,d.\]

While the process \(X(t)\) is in state \(i\), particles arrive according to a Poisson process with rate \(\lambda _i\ge 0\), for \(i=1,\ldots ,d\). The service times are assumed to be i.i.d. samples distributed as a random variable \(B_i\) with mean \(1/\mu _i\) if the client was generated when the background process was in state \(i\); the corresponding distribution function is \(F_i(x):=\mathbb{P }(B_i\le x)\), with \(x\ge 0.\) The service times are independent of the background process \(X(t)\) and the arrival process. The system we consider is an infinite-server queue, meaning that each particle stays in the system just for the duration of its service time (that is, there is no waiting). In the rest of this section we focus on analyzing the probabilistic properties of the number of particles in the system at given points in time, starting empty. It is assumed that the background process is in stationarity at time \(0\).
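The relation between the time-stationary vector \({\varvec{\pi }}\) and the jump-epoch vector \(\hat{\varvec{\pi }}\) (namely \(\pi _i\) proportional to \(\hat{\pi }_i/q_i\)) can be checked on a small instance. The following is a minimal sketch using exact rational arithmetic; the three-state generator and the helper names `solve` and `stationary` are illustrative assumptions, not part of the model above.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination; exact for Fraction entries."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

def stationary(G):
    """Row vector x with x G = 0 and sum(x) = 1, for a generator-like matrix G."""
    d = len(G)
    G = [[Fraction(x) for x in row] for row in G]
    # Balance equations for columns 0..d-2, plus the normalization equation.
    A = [[G[i][j] for i in range(d)] for j in range(d - 1)]
    A.append([Fraction(1)] * d)
    b = [Fraction(0)] * (d - 1) + [Fraction(1)]
    return solve(A, b)

# Hypothetical three-state generator (rates chosen for illustration only).
Q = [[-2, 2, 0],
     [1, -3, 2],
     [0, 4, -4]]
q = [-Q[i][i] for i in range(3)]
pi = stationary(Q)

# D_Q^{-1} Q = P - I, so its stationary vector is that of the jump chain.
jump = [[Fraction(Q[i][j], q[i]) for j in range(3)] for i in range(3)]
pi_hat = stationary(jump)

# Recover pi from pi_hat: weight each state by its mean sojourn time 1/q_i.
weights = [pi_hat[i] / q[i] for i in range(3)]
pi_from_hat = [w / sum(weights) for w in weights]
print(pi, pi_hat, pi_from_hat)
```

Exact fractions are used so that the two routes to \({\varvec{\pi }}\) can be compared with equality rather than with a numerical tolerance.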

We start by considering a model that differs somewhat from the one introduced above; its relation with our model will become clear soon. Consider an M/G/\(\infty \) queue with (i) a nonhomogeneous arrival process with rate function \(\lambda (\cdot )\) (such that the Poissonian arrival rate is \(\lambda (s)\) at time \(s\)), and (ii) a time-dependent distribution function \(F(s,\cdot )\) (to be interpreted as follows: a customer that arrives at time \(s\) leaves before time \(s+t\) with probability \(F(s,t)\)). Observe that, conditional on the event that there are \(n\) arrivals by time \(t\), the joint distribution of the arrival times is that of the order statistics taken from \(n\) independent random variables with density

\[\frac{\lambda (s)}{\Lambda (t)},\qquad 0\le s\le t,\]

where \(\Lambda (t)=\int _0^t\lambda (s)\mathrm{d}s\). It now follows that if \(M(t)\) is the number of particles in the system at time \(t\), starting with an empty system, then with \(\bar{F}(\cdot ):=1-F(\cdot )\) we find that \(M(t)\) has a Poisson distribution:

\[M(t)\sim \mathrm{Pois}\left( \int _0^t\lambda (s)\,\bar{F}(s,t-s)\,\mathrm{d}s\right) ,\]

and we note for later that

After this general observation, we return to the initial context. Whereas we so far assumed that the input rate function and service-time distribution function were deterministic, we now assume that they are stochastic. More specifically, we use \(\lambda _{i}\) for the arrival rate when the background process \(X(\cdot )\) is in state \(i\), and \(F_{i}(\cdot )\) for the distribution function of particles arriving while the background process is in the state \(i\).

By conditioning on the sample path of the background process, say \(X(s)=f(s)\), we find that \(M(t)\) is Poisson distributed with parameter \(\int _0^t\bar{F}_{f(t-s)}(s)\lambda _{f(t-s)}\mathrm{d}s\). Then by unconditioning, i.e., averaging over all paths \(f(\cdot )\) of the background process, its probability generating function (pgf) equals the moment generating function (mgf) of its random parameter, evaluated at \((z-1)\):

\[\mathbb{E }\,z^{M(t)}=\mathbb{E }\exp \left( (z-1)\int _0^t\bar{F}_{X(t-s)}(s)\,\lambda _{X(t-s)}\,\mathrm{d}s\right) ;\]

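see for example [5, p. 226]. Recalling that \(X(\cdot )\) is assumed to be stationary, we have the distributional equality \(\{X(t+u)|\ u\in \mathbb{R }\}\stackrel{{\hbox {d}}}{=} \{X(u)|\ u\in \mathbb R \}\), so that

\[\mathbb{E }\,z^{M(t)}=\mathbb{E }\exp \left( (z-1)\int _0^t\bar{F}_{X(-s)}(s)\,\lambda _{X(-s)}\,\mathrm{d}s\right) ,\]

or, denoting by \(\hat{X}(\cdot )\) the time-reversed version of \(X(\cdot )\), with \(a_i(s):=\lambda _i\bar{F}_i(s)\),

\[\mathbb{E }\,z^{M(t)}=\mathbb{E }\exp \left( (z-1)\int _0^ta_{\hat{X}(s)}(s)\,\mathrm{d}s\right) .\]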

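This probability generating function allows us to analyze the mean and variance of \(M(t).\) It is immediate that the mean of \(M(t)\) equals, cf. [21, Thm. 2.1],

\[\mathbb{E }M(t)=\sum _{i=1}^d\pi _i\int _0^ta_i(s)\,\mathrm{d}s=\sum _{i=1}^d\pi _i\lambda _i\int _0^t\bar{F}_i(s)\,\mathrm{d}s.\]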

This evidently converges to \(\sum _{i=1}^d \pi _i\varrho _i\) as \(t\rightarrow \infty \), where \(\varrho _i:=\lambda _i\int _0^\infty \bar{F}_i(s)\mathrm{d}s\) is the traffic intensity when in state \(i\).
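As an illustration, the transient mean \(\sum _i\pi _i\lambda _i\int _0^t\bar{F}_i(s)\mathrm{d}s\) can be evaluated numerically for arbitrary survival functions and compared with its limit \(\sum _i\pi _i\varrho _i\). The sketch below assumes a hypothetical two-state background process with exponential service times, for which the integral is also available in closed form; the function name `transient_mean` and all parameter values are illustrative.

```python
import math

def transient_mean(pi, lam, survival, t, steps=20000):
    """sum_i pi_i * lam_i * int_0^t survival_i(s) ds, via the midpoint rule."""
    h = t / steps
    total = 0.0
    for p, l, sf in zip(pi, lam, survival):
        integral = h * sum(sf((k + 0.5) * h) for k in range(steps))
        total += p * l * integral
    return total

# Hypothetical two-state example: transition rates q1, q2 out of states 1, 2.
q1, q2 = 1.0, 3.0
pi = [q2 / (q1 + q2), q1 / (q1 + q2)]
lam = [2.0, 5.0]
mu = [1.0, 0.5]
# Exponential service times: survival function exp(-mu_i * s).
survival = [lambda s, m=m: math.exp(-m * s) for m in mu]

t = 4.0
numeric = transient_mean(pi, lam, survival, t)
closed = sum(p * l / m * (1 - math.exp(-m * t)) for p, l, m in zip(pi, lam, mu))
limit = sum(p * l / m for p, l, m in zip(pi, lam, mu))  # sum_i pi_i * rho_i
print(numeric, closed, limit)
```

Passing the survival functions as callables keeps the sketch usable for non-exponential service times, in which case only the numerical branch applies.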

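The variance can be computed as well, as follows. We start with the standard equality (commonly known as the “law of total variance”)

\[\mathbb{V }\mathrm{ar}\,M(t)=\mathbb{E }\left[ \mathbb{V }\mathrm{ar}(M(t)|\hat{X})\right] +\mathbb{V }\mathrm{ar}\left[ \mathbb{E }(M(t)|\hat{X})\right] .\]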

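First notice that \(\mathbb{V }{\mathrm{ar}}(M(t)|\hat{X})=\mathbb{E }(M(t)|\hat{X})=\int _0^ta_{\hat{X}(s)}(s)\mathrm{d}s\) because \((M(t)\,|\,\hat{X})\) has a Poisson distribution (as was noted above). Hence,

\[\mathbb{E }\left[ \mathbb{V }\mathrm{ar}(M(t)|\hat{X})\right] =\mathbb{E }M(t)=\sum _{i=1}^d\pi _i\int _0^ta_i(s)\,\mathrm{d}s.\]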

The only quantity that remains to be computed is now \(\mathbb{V }{\mathrm{ar}}[\mathbb{E }(M(t)|\hat{X})]\). That is done as follows:

where for \(u<s\)

We now make the expressions more explicit for the case where \(t\) tends to \(\infty \). With \(D_\pi =\mathrm{diag}\{{\varvec{\pi }}\}\), the matrices \(Q\) and \(\hat{Q}=D_\pi ^{-1} Q^\mathrm{T}D_\pi \) are the transition rate matrices of \(X\) and \(\hat{X}\), respectively. Let us define the matrix \(\Sigma (s)=(\sigma _{ij}(s))_{i,j=1}^d\) through

Letting \(t\rightarrow \infty \), we obtain

When the service-time distributions are exponential, that is, \(\bar{F}_i(t)=e^{-\mu _i t}\), so that \(a_i(t)=\lambda _ie^{-\mu _i t}\), we have

We summarize (some of) our findings.

Proposition 1

The transient mean of the number of particles is

whereas the stationary variance is

provided that the system started empty.

We finish this section by performing some explicit calculations for the case that \(X\) is reversible and the service times are exponential; later on we further focus on the situation of \(d=2.\) Due to the reversibility, \(\pi _iq_{ij}=\pi _j q_{ji}\) for all \(i,j\in \{1,\ldots ,d\}\). As a consequence \(D_\pi Q = Q^\mathrm{T}D_\pi \), so that the matrix

is symmetric, and can be written as \(G(-\Delta )G^\mathrm{T}\), where \(G\) is a (real-valued) orthogonal matrix, and \(\Delta =\mathrm{diag}\{{\varvec{\delta }}\}\) is a (real-valued) diagonal matrix (note that, owing to the irreducibility of the background process, all but one of the entries of \({\varvec{\delta }}\) are strictly positive). It follows that

and therefore

It now follows that

is symmetric, and hence for each \(i,j\in \{1,\ldots ,d\}\) we can write \(\sigma _{ij}(s) = \sum _{k=1}^d c_{ijk} e^{-\delta _k s}-\pi _i\pi _j.\) As a consequence,

In the case of \(d=2\), we have \(\pi _1= q_2/\bar{q}=1-\pi _2\), with \(\bar{q} :=q_1+q_2.\) It is readily verified that \(\delta _1=0\) and \(\delta _2=\bar{q}.\) It requires a standard computation to verify that

and also

Elementary calculus now yields that (3) equals

3 Exponential service times

In this section we analyze the special case of exponential service times in greater detail. We set up a system of differential equations for the moment generating function of the transient number of particles in the system. Then this is used to determine the mean and higher moments after an exponential amount of time.

We start this section with some preliminaries and additional notation. Here and in the remaining sections we denote by \(M_i(t)\) the number of particles in the system at time \(t\), conditional on the background process being in state \(i\) at time 0. It is evident that \(M_i(t)\) can be written as the sum of two independent components: the number of particles still present at time \(t\) out of the original population of size \(x_0\) (in the sequel denoted by \(\check{M}(t)\)), increased by the number of particles that arrived in \((0,t]\) and are still present at time \(t\) (in the sequel denoted by \(\bar{M}_i(t)\) in case the background process is in state \(i\) at time \(0\)).

Due to the assumption that the service times are exponentially distributed, there are positive numbers \(\mu _i\) (for \(i=1,\ldots ,d\)) such that \(\bar{F}_i(t) = e^{-\mu _i t}.\) In the case that the \(\mu _i\) are identical (say equal to \(\mu >0\)), \(\check{M}(t)\) follows a binomial distribution with parameters \(x_0\) and \(e^{-\mu t}\). In the case that the \(\mu _i\) are not identical, we need to know the number \(x_{0,i}\) of particles present at time 0 that were generated while the background process was in state \(i\). The resulting (independent) random variables \(\check{M}_i(t)\) follow binomial distributions with parameters \(x_{0,i}\) and \(e^{-\mu _i t}\); indeed, \(\check{M}(t)=\sum _{i}\check{M}_i(t).\)
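To make the contribution of the initial population concrete, the distribution of \(\check{M}(t)\) can be computed as a convolution of independent binomial distributions. The sketch below does this for a hypothetical initial population with two particle types; the names `survivors_pmf` and `convolve` and the numerical values are assumptions made for illustration.

```python
import math

def survivors_pmf(x0, mu, t):
    """pmf of the number of survivors at time t among x0 initial particles,
    each independently still present with probability exp(-mu * t)."""
    p = math.exp(-mu * t)
    return [math.comb(x0, k) * p**k * (1 - p)**(x0 - k) for k in range(x0 + 1)]

def convolve(f, g):
    """pmf of the sum of two independent nonnegative integer random variables."""
    out = [0.0] * (len(f) + len(g) - 1)
    for a, fa in enumerate(f):
        for b, gb in enumerate(g):
            out[a + b] += fa * gb
    return out

# Hypothetical initial population: 3 particles of type 1 and 2 of type 2.
x0 = [3, 2]
mu = [1.0, 0.5]
t = 0.7
pmf = convolve(survivors_pmf(x0[0], mu[0], t), survivors_pmf(x0[1], mu[1], t))
mean = sum(k * pk for k, pk in enumerate(pmf))
print(pmf, mean)
```

The mean of the resulting distribution should agree with \(\sum _i x_{0,i}e^{-\mu _i t}\), which offers a quick sanity check.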

Given these observations we concentrate in the remainder of this section on the more complicated component of \(M(t)\), that is \(\bar{M}_i(t)\).

3.1 Differential equation

Recall that we write, for ease of notation, \(q_i:=1/\mathbb{E }T_i\), and \(q_{ij}:= p_{ij}q_i\) (where \(i\not = j\)), with \(q_{ii}=-q_i\). The main quantity in this subsection is the moment generating function of \(\bar{M}_i(t)\):

\[\Lambda _i(\vartheta ,t):=\mathbb{E }\,e^{\vartheta \bar{M}_i(t)}.\]

Consider a small time period \(\Delta t\), and focus on all terms of magnitude \(O(\Delta t)\) or larger. In our continuous-time Markov setting, the background process has either zero jumps (with probability \(1-q_i\Delta t+o(\Delta t)\)), or a jump to state \(j\ne i\) (with probability \(q_{ij}\Delta t + o(\Delta t)\)); the probability of more than one transition is \(o(\Delta t)\) (see for instance [19, Thm. 2.8.2]).

Note that

here \(p_i(\vartheta ,t)\) is the mgf of a random variable distributed on \(\{0,1\}\), indicating whether a particle arriving in the time period \((0, \Delta t)\) is still present at \(t\). It is seen that the value 1 occurs with probability

Hence, \(p_i(\vartheta ,t) = 1 + e^{-\mu _i t} \left( e^{\vartheta } - 1\right) + O\left( \Delta t\right) \), and thus

Now \(q_i = \sum _{j\ne i} q_{ij}\) yields

where the derivative is with respect to \(t\). We have found the following system of differential equations.

Proposition 2

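The mgfs \( \Lambda _i(\vartheta ,t)\) satisfy

\[\Lambda _i'(\vartheta ,t)=\lambda _i\left( e^{\vartheta }-1\right) e^{-\mu _i t}\,\Lambda _i(\vartheta ,t)+\sum _{j=1}^dq_{ij}\Lambda _j(\vartheta ,t),\qquad i=1,\ldots ,d.\]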

Now define \(\psi _i(\alpha ,\vartheta ) :=\int _0^\infty \alpha e^{-\alpha t} \Lambda _i(\vartheta ,t) \mathrm{d}t\). Then, by integrating,

We thus obtain

cf. [1, Eq. (4.6), Cor. 1] in the one-dimensional case and [15, Thm. 3] in the network case for equations that resemble (5) for Markov-modulated shot-noise models. These may be viewed as continuous state-space analogs or weak limits of the infinite-server queue (see [14] regarding a general framework that includes both for the network version in the non-modulated case).
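The system of differential equations of Prop. 2 can be checked numerically at the level of means. The sketch below assumes that the system has the form \(\Lambda _i'=\lambda _i(e^{\vartheta }-1)e^{-\mu _i t}\Lambda _i+\sum _jq_{ij}\Lambda _j\), which is the form implied by the derivation via \(p_i(\vartheta ,t)\); differentiating with respect to \(\vartheta \) at \(\vartheta =0\) then gives \(m_i'(t)=\sum _jq_{ij}m_j(t)+\lambda _ie^{-\mu _i t}\) for \(m_i(t):=\mathbb{E }\bar{M}_i(t)\), with \(m_i(0)=0\). Averaging over \({\varvec{\pi }}\) should reproduce the stationary-start mean \(\sum _i\pi _i(\lambda _i/\mu _i)(1-e^{-\mu _i t})\). The two-state parameters and function names are illustrative assumptions.

```python
import math

def mean_rhs(t, m, Q, lam, mu):
    """Right-hand side of m_i'(t) = sum_j q_ij m_j(t) + lam_i * exp(-mu_i t)."""
    d = len(m)
    return [sum(Q[i][j] * m[j] for j in range(d)) + lam[i] * math.exp(-mu[i] * t)
            for i in range(d)]

def integrate_means(Q, lam, mu, t_end, steps=2000):
    """Classical fourth-order Runge-Kutta scheme, starting from an empty system."""
    d = len(lam)
    h = t_end / steps
    m = [0.0] * d
    t = 0.0
    for _ in range(steps):
        k1 = mean_rhs(t, m, Q, lam, mu)
        k2 = mean_rhs(t + h / 2, [m[i] + h / 2 * k1[i] for i in range(d)], Q, lam, mu)
        k3 = mean_rhs(t + h / 2, [m[i] + h / 2 * k2[i] for i in range(d)], Q, lam, mu)
        k4 = mean_rhs(t + h, [m[i] + h * k3[i] for i in range(d)], Q, lam, mu)
        m = [m[i] + h / 6 * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i]) for i in range(d)]
        t += h
    return m

# Hypothetical two-state example.
q1, q2 = 1.0, 3.0
Q = [[-q1, q1], [q2, -q2]]
pi = [q2 / (q1 + q2), q1 / (q1 + q2)]
lam = [2.0, 5.0]
mu = [1.0, 0.5]
t_end = 4.0

m = integrate_means(Q, lam, mu, t_end)          # means given X(0) = i
averaged = sum(p * mi for p, mi in zip(pi, m))  # background in equilibrium at 0
closed = sum(p * l / u * (1 - math.exp(-u * t_end)) for p, l, u in zip(pi, lam, mu))
print(m, averaged, closed)
```

The individual components \(m_i(t)\) depend on the initial state, whereas their \({\varvec{\pi }}\)-weighted average does not involve \(Q\) at all, since \({\varvec{\pi }}Q={\varvec{0}}\).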

3.2 Mean

To compute \(\mathbb{E }\bar{M}_i(\tau _\alpha )\), with \(\tau _\alpha \sim \exp (\alpha )\), we differentiate Eq. (6) with respect to \(\vartheta \) and let \(\vartheta \downarrow 0\), thus obtaining

or

Now consider the special case that the background process is in equilibrium at time \(0\). It turns out that the expressions simplify significantly. We have, due to (7), using the fact that \(\sum _i\pi _iq_{ij}=0\),

Laplace inversion yields

in line with (1). Now consider steady-state behavior, that is, we let \(\alpha \downarrow 0.\) From the above, we obtain an expression that could as well have been found by applying Little’s law:

3.3 Higher moments

A second differentiation of (6) yields

In other words, once we know the \( \mathbb{E }\bar{M}_i(\tau _{\alpha })\) for all \(\alpha >0\), we can compute the associated second moment as well.

Along the same lines,

As a consequence, these higher moments (at exponentially distributed epochs) can be recursively determined. Again there is a simplification if the background process is in equilibrium at time \(0\). Then we have the equation

For the steady-state we obtain, cf. [1],

4 Asymptotic normality for general service times

In this section we consider our Markov-modulated infinite-server system, but, as opposed to the setting discussed in the previous section, now with generally distributed service times. The main result is a central limit theorem (for \(N\rightarrow \infty \)) under the scaling \(q_{ij}\mapsto N^{1+\varepsilon }q_{ij}\) and \(\lambda _i\mapsto N\lambda _i\); here \(\varepsilon >0\). The intuitive idea behind this scaling is that the state of the background process moves at a faster time scale than the arrival processes (so that the arrival process is effectively a single Poisson process as \(N\rightarrow \infty \)), while this arrival process is sped up by a factor \(N\) (so that a central limit regime kicks in).

Remark 1

We already observed that the number \(\check{M}_i^{(N)}(t)\) of particles still present at time \(t\), out of the initial population of size \(Nx_0\) and that arrived while the background process was in state \(i\), is not affected by the evolution of the background process, as the departure rate has been determined upon arrival. Specializing to the case of exponential service times (with mean \(\mu _i^{-1}\) if the particle under consideration had entered while the background process was in state \(i\)), the corresponding random variables have independent binomial distributions with parameters \(Nx_{0,i}\) and \(e^{-\mu _i t}\). Here \(Nx_{0,i}\) denotes the number of particles present at time \(0\) that arrived while the background process was in state \(i\). Therefore, as \(N \rightarrow \infty \)

For other service-time distributions the quantities \(e^{-\mu _i t}\) have to be replaced by the appropriate survival probability associated with the residual lifetime of a particle that is present at time 0 and that had arrived while the background process was in \(i\).

In light of the above, it suffices to focus on establishing a central limit theorem for the number of particles that arrived in \((0,t]\) that are still present at time \(t\). Let, in line with earlier definitions, this number be denoted by \(\bar{M}_i^{(N)}(t)\) in case the modulating process is in state \(i\) at time 0.\(\Diamond \)

One of the leading intuitions of this section is that, due to the fact that the timescale of the background process is faster than that of the arrival process, we can essentially replace our Markov-modulated infinite-server system, as \(N\rightarrow \infty \), by an M/G/\(\infty \) queue. This effectively means that, irrespective of the initial state \(i\), \(\bar{M}^{(N)}_i(t)\) can be approximated by a Poisson distribution with parameter \(N\varrho _t\). The candidate for \(\varrho _t\) can be easily identified using the theory of Sect. 2:

\[\varrho _t:=\sum _{i=1}^d\pi _i\lambda _i\int _0^t\bar{F}_i(s)\,\mathrm{d}s.\]

Let us now focus on identifying a candidate for the limiting covariance between \(\bar{M}^{(N)}_i(t)\) and \(\bar{M}^{(N)}_i(t+u)\); this is a rather elementary computation that we include for the sake of completeness. Let \(N(t)\) be the number present in an M/G/\(\infty \) queue that started off empty at time \(0\); the arrival rate is \(\lambda \) and the distribution function of the service times is denoted by \(F(\cdot )\). In this system it is possible to compute the covariance between \(N(t)\) and \(N(t+u)\) explicitly in terms of the arrival rate and the distribution function \(F(\cdot )\) of the service times. Realize that \(N(t+u)\) can be written as the sum of the particles that were already present at time \(t\) and that are still present at time \(t+u\) (which we denote by \(N_t(t+u)\)), and the ones that have arrived in \((t,t+u]\) and that are still present at time \(t+u\). The latter quantity being independent of \(N(t)\), we have

It thus suffices to compute the quantity \(\mathbb{C }{\mathrm{ov}}(N(t),N_t(t+u))\). To this end, define

the first of these quantities can be interpreted as the probability that an arbitrary particle that has arrived in \([0,t)\) has already left the system at time \(t\), the second as the probability that it is still present at time \(t\) but not at \(t+u\) anymore, and the third as the probability that it is still present at time \(t+u\). It now follows that

which turns out to equal (after some elementary computations) \(q^\mathrm{C}\,\lambda t+ q^\mathrm{C}(1-q^\mathrm{A})\lambda ^2 t^2.\) As \(\mathbb{E }N(t) = (1-q^\mathrm{A})\lambda t\) and \(\mathbb{E }N_t(t+u)=q^\mathrm{C}\,\lambda t\), it follows that

\[\mathbb{C }{\mathrm{ov}}(N(t),N_t(t+u))=q^\mathrm{C}\,\lambda t.\]

This computation provides us with the candidate for the central limit result in the case of general service times. Define in this context, for \(t_1\le t_2\),

(while if \(t_2<t_1\) we put \(c_{t_1,t_2}=c_{t_2,t_1}\)).
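The covariance computation for the M/G/\(\infty \) queue can be illustrated numerically. The sketch below evaluates the three class probabilities (already departed at \(t\), present at \(t\) but not at \(t+u\), still present at \(t+u\)) for a particle arriving at a uniformly distributed epoch in \([0,t)\), and then combines the mixed moment \(q^\mathrm{C}\lambda t+q^\mathrm{C}(1-q^\mathrm{A})\lambda ^2t^2\) with the two means. The exponential service-time distribution, the parameter values, and the function name `class_probabilities` are illustrative assumptions.

```python
import math

def class_probabilities(survival, t, u, steps=20000):
    """Midpoint-rule values of (q_A, q_B, q_C) for a particle whose arrival
    epoch is uniform on [0, t): gone by t, present at t but gone by t + u,
    still present at t + u."""
    h = t / steps
    # The age at time t of such a particle is uniform on (0, t].
    at_t = sum(survival((k + 0.5) * h) for k in range(steps)) * h / t
    at_tu = sum(survival((k + 0.5) * h + u) for k in range(steps)) * h / t
    return 1 - at_t, at_t - at_tu, at_tu

# Hypothetical parameters; exponential service times with rate mu.
lam, mu, t, u = 3.0, 1.5, 2.0, 0.5
survival = lambda s: math.exp(-mu * s)
qA, qB, qC = class_probabilities(survival, t, u)

mean_N_t = (1 - qA) * lam * t                            # E N(t)
mean_N_t_tu = qC * lam * t                               # E N_t(t+u)
mixed = qC * lam * t + qC * (1 - qA) * (lam * t) ** 2    # E[N(t) N_t(t+u)]
cov = mixed - mean_N_t * mean_N_t_tu

# Closed form of q_C * lam * t for exponential service times.
closed = lam / mu * math.exp(-mu * u) * (1 - math.exp(-mu * t))
print(cov, closed)
```

For exponential service times the covariance decays like \(e^{-\mu u}\) in the lag \(u\), which is the correlation structure one would expect from an Ornstein-Uhlenbeck type limit.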

The following result covers the asymptotic multivariate normality. In the proof we consider the bivariate case (time epochs \(t\) and \(t+u\)), but the extension to a general dimension (time epochs \(t_1\) up to \(t_K\) with, without loss of generality, \(t_1\le \ldots \le t_K\)) is straightforward and essentially a matter of careful bookkeeping.

Theorem 1

For any \({\varvec{\alpha }}\in {\mathbb{R }}^K\) and \({\varvec{t}}\in {\mathbb{R }}^K\) (with \(t_1\le \ldots \le t_K\)), and general service times, as \(N\rightarrow \infty \),

with

This theorem shows convergence of the finite-dimensional distributions to a multivariate Normal distribution. A next step would be to prove convergence at the process level, viz. convergence of

to a Gaussian process with a specific correlation structure. Such a result has been proven for the regular (that is, non-modulated) M/M/\(\infty \) queue in which the Poisson arrival rate is scaled by \(N\); the limiting process is then an Ornstein-Uhlenbeck process—see, for example [22]. The proofs of such weak convergence results typically consist of three steps: single-dimensional convergence, finite-dimensional convergence, and a tightness argument, where the tightness step tends to be relatively complicated. In our setup (that is, the Markov-modulated M/G/\(\infty \) queue) we have proven the first two steps; the third step (tightness) is beyond the scope of this paper.

We prove Thm. 1 for the case of \(K=2\), with \(t_1=t\) and \(t_2=t+u\) (for \(t,u\ge 0\)); higher dimensions can be dealt with fully analogously but these require substantially more administration. Our starting point is to set up a system of differential equations for the non-scaled process. This system is derived in the very same way as the differential equations for the univariate exponential case (see Prop. 2). Define, for fixed scalars \(\alpha _1,\alpha _2\), and for \(u\ge 0\) given,

In addition, let

Proposition 3

The mgfs \(\Lambda _i(\vartheta ,t)\) satisfy

where \(\bar{p}_i(\vartheta ,t):=\lambda _i (p_i(\vartheta ,t)-1).\)

Proof

Let \(I_i(t)\) be the indicator function of the event that a particle arriving in \((0,\Delta t]\) (while the background process was in state \(i\)) is still in the system at time \(t\), and consider the random variable \(\alpha _1 I_i(t)+\alpha _2 I_i(t+u)\). Similarly to what we did earlier in this section, \(\alpha _1 I_i(t)+\alpha _2 I_i(t+u)\) can be split into three contributions; one corresponding to the event that a particle that arrived in \((0,{\Delta }t]\) has already left the system at time \(t\), one corresponding to the event that it is still present at time \(t\) but not anymore at time \(t+u\), and finally one corresponding to the event that it is still present at time \(t+u\). With some standard calculus it is readily obtained that

This means that we obtain

Now subtracting \(\Lambda _i(\vartheta ,t-\Delta t)\) from both sides, dividing by \(\Delta t\), and letting \(\Delta t\downarrow 0\) leads to the desired system of differential equations.\(\square \)

Proof of Thm. 1. Now we are ready to prove the bivariate asymptotic normality for the case of general service times. The idea behind the proof is to (i) start off with the differential equations for the non-scaled system as derived in Prop. 3; (ii) incorporate the scaling in the differential equations, and apply the centering and normalization corresponding to the central limit regime; (iii) use Taylor expansions (for large \(N\)); (iv) obtain a limiting differential equation (as \(N\rightarrow \infty \)). This limiting differential equation finally yields the claimed central limit theorem.

We first “center” the random variable \(\alpha _1 \bar{M}_i^{(N)}(t)+\alpha _2 \bar{M}_i^{(N)}(t+u)\); to this end we subtract \(N\varrho (t,u)\) from this random variable, with

and \(\varrho _t\) defined as in Eq. (8). At this point we impose the scaling, that is, we replace \(q_{ij}\) by \(N^{1+\varepsilon } q_{ij}\), and \(\lambda _i\) by \(N\lambda _i\). With these parameters, we now study the appropriately centered and scaled random variable

where we suppress the argument \(u\) in \(\varrho (t,u)\) (as \(u\) is held fixed throughout the proof). It means that we study the “centered and scaled mgf”

where, due to Prop. 3, \(\Lambda _i(\vartheta /\sqrt{N},t)\) satisfies

Realize that, as a straightforward application of the chain rule,

Upon combining the above, we find a relation which is completely in terms of the centered/scaled mgf \(\tilde{\Lambda }_i^{(N)}(\vartheta ,t)\):

We now study the solution of this system of differential equations for \({N}\) large by “Tayloring” the function \(\bar{p}_i(\vartheta /\sqrt{N},t)\) with respect to \({N}\). It is an elementary exercise to check that

with

We thus obtain the differential equation

or in self-evident matrix/vector notation,

Now premultiply this equation by the so-called fundamental matrix \(\mathcal F :=(\Pi -Q)^{-1}\), where \(\Pi :={\varvec{1}}{\varvec{\pi }}^\mathrm{T}\). It holds that \(\Pi ^2=\Pi \), \(\mathcal F \Pi =\Pi \mathcal F =\Pi \), and \(Q\mathcal F =\mathcal F Q=\Pi -I\); for these properties and more background on fundamental matrices and deviation matrices see, e.g., [4]. We then obtain

Iterating this identity once, we obtain

Now premultiply the equation by \(d\,{{\varvec{\pi }}^\mathrm{T} = }{\varvec{1}}^\mathrm{T}\Pi \). Recalling the identity \(\Pi \mathcal F =\Pi \) and noting that it follows from the definition of \(\varrho (t)\) that

all terms of \(O(N^\alpha )\) with \(\alpha > 0\) cancel. For \(\lim _{N\rightarrow \infty }{\varvec{\pi }}^\mathrm{T} \tilde{\varvec{\Lambda }}^{(N)}(\vartheta ,t)=:\tilde{\Lambda }(\vartheta ,t)\) we thus obtain the following differential equation:

Using the technique of separation of variables, it follows that

or

for some function \({\kappa }(\vartheta ,u)\) that is independent of \(t\). Now note that this expression should not depend on \(\alpha _1\) if \(t=0\). In addition, if we insert \(u=0\), then \(\alpha _1\) and \(\alpha _2\) should appear in the expression as \(\alpha _1+\alpha _2\). This enables us to identify \({\kappa }(\vartheta ,u)\). We eventually obtain

as desired. We have proven the claimed convergence.

Remark 2

The central limit theorem does not carry over to the case \(\varepsilon \in (-1,0]\), since then the term of order \(N^{1-2\varepsilon }\) cannot be neglected relative to the term of order \(N^{1-\varepsilon }\). As a result, for these values of \(\varepsilon \) the variance featuring in the central limit theorem will contain the fundamental matrix \(\mathcal F \).\(\Diamond \)

5 Examples

5.1 Two-state model

In this example we consider the case \(d=2\), and exponential sojourn times of the background process, that is, the time spent in state \(i\) is exponential with mean \(1/q_i\in (0,\infty )\). From \(\mathbb{E }\bar{\varvec{M}}(\tau _\alpha ) = (A(\alpha ))^{-1} {\varvec{\varphi }}(\alpha )\) we obtain for the mean number in the system after an exponential time with mean \(1/\alpha \) (ignoring the effect of an initial population)

When sending \(\alpha \) to \(\infty \), we indeed obtain that \(\mathbb{E }\bar{M}_i(\tau _\infty )=0\); when sending \(\alpha \) to \(0\), the resulting formula is consistent with the long-term mean number in the system, as found earlier. Replacing \(q_i\) by \(Nq_i\) (for \(i=1,2\)), we obtain that both components of \(\mathbb{E }\bar{\varvec{M}}(\tau _\alpha )\) converge (as \(N\rightarrow \infty \)) to

which, for \(\mu _1=\mu _2\), is in line with the findings in [12].

We now focus on computing the second moment; for ease of exposition we consider the stationary case. From Sect. 3.3, we have

which becomes after sending \(\alpha \) to \(0\),

obviously, \(\pi _1=1-\pi _2=q_2/(q_1+q_2)\).

We now find a lower bound on the variance of the stationary number of particles in the system. Restricting ourselves to the case \(\mu _i\equiv \mu \) for all \(i=1,\ldots ,d\), elementary computations yield, with \(r_i:=\lambda _i/\mu \) and \(q:=q_1+q_2\),

We now claim that, with \(R\) denoting the stationary mean \(\pi _1r_1+\pi _2r_2\), the stationary variance is larger than this \(R\), or equivalently

with equality only if \(\lambda _1=\lambda _2\). This can be shown as follows. Writing \(r_1 = a r_2\), the above claim reduces to verifying that, for all \(a\in (0,\infty )\),

with equality only if \(a=1\); here

Observe that \(f_1>\pi _1\), so that the left-hand side of (13) has a minimum. Now realize that \(f_1-\pi _1 = -(f_2-\pi _2)\) and \(g_2-\pi _2=-(g_1-\pi _1)\). As a result, (13) reduces to

which, due to \((f_1-\pi _1)\pi _1=(g_2-\pi _2)\pi _2\) can be rewritten as \((f_1-\pi _1)\pi _1(a-1)^2\ge 0.\) Claim (12) thus follows. We conclude that \(\mathbb{V }{\mathrm{ar}}\bar{M}(\infty ) \ge \mathbb{E }\bar{M}(\infty ),\) with equality if and only if \(\lambda _1=\lambda _2\).
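The inequality \(\mathbb{V }\mathrm{ar}\bar{M}(\infty ) \ge \mathbb{E }\bar{M}(\infty )\) can also be checked numerically. The sketch below does not use the displayed formulas of this section; instead it assumes the standard stationary balance equations for the partial moments \(e_i=\mathbb{E }[\bar{M}\,1\{J=i\}]\) and \(f_i=\mathbb{E }[\bar{M}(\bar{M}-1)\,1\{J=i\}]\) of a two-state Markov-modulated M/M/\(\infty \) queue with common service rate \(\mu \). The function names and the symbols \(e_i\), \(f_i\), \(J\) are ours.

```python
# Stationary mean and variance of a two-state Markov-modulated M/M/inf queue
# with common service rate mu, via moment balance equations (an assumption of
# this sketch, not the paper's displayed formulas):
#   (mu  I - Q^T) e = (lam_i * pi_i)_i,      e_i = E[M 1{J=i}]
#   (2mu I - Q^T) f = (2 * lam_i * e_i)_i,   f_i = E[M(M-1) 1{J=i}]

def solve2(m, rhs):
    """Solve a 2x2 linear system m x = rhs by Cramer's rule."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [(rhs[0] * d - b * rhs[1]) / det, (a * rhs[1] - c * rhs[0]) / det]

def stationary_mean_var(lam, mu, q):
    q1, q2 = q
    pi = [q2 / (q1 + q2), q1 / (q1 + q2)]   # stationary law of the background
    QT = [[-q1, q2], [q1, -q2]]             # transpose of the generator Q

    def shifted(s):
        # The matrix s*I - Q^T
        return [[s - QT[0][0], -QT[0][1]], [-QT[1][0], s - QT[1][1]]]

    e = solve2(shifted(mu), [lam[0] * pi[0], lam[1] * pi[1]])
    f = solve2(shifted(2 * mu), [2 * lam[0] * e[0], 2 * lam[1] * e[1]])
    mean = sum(e)
    var = sum(f) + mean - mean ** 2         # Var = E[M(M-1)] + E[M] - (E[M])^2
    return mean, var

# Illustrative parameters (the unscaled values of Sect. 5.2)
mean, var = stationary_mean_var((1.0, 2.0), 1.0, (1.0, 3.0))
print(mean, var, var - mean)

# Equal arrival rates: the excess variance should vanish
mean_eq, var_eq = stationary_mean_var((2.0, 2.0), 1.0, (1.0, 3.0))
print(mean_eq, var_eq)
```

For \({\varvec{\lambda }}=(1,2)\), \(\mu =1\) and \({\varvec{q}}=(1,3)\) this yields a mean of 1.25 and a variance of approximately 1.2875, so the variance strictly exceeds the mean; with \(\lambda _1=\lambda _2\) the two coincide, in agreement with the claim.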

This result can be intuitively understood. As argued before, \(\bar{M}(\infty )\) is distributed as a Poisson random variable with a random parameter. We showed with an elementary argument in the introduction of [12] that this entails that \(\mathbb{V }\mathrm{ar}\bar{M}(\infty ) \ge \mathbb{E }\bar{M}(\infty );\) informally, this says that Markov modulation increases the variability of the stationary distribution. We have now shown that for \(d=2\) this inequality is in fact strict, unless the \(\lambda _i\) match (and equal, say, \(\lambda \)). In that case the queue is just an M/M/\(\infty \) system, which has the Poisson(\(\lambda /\mu \)) distribution as its equilibrium distribution, for which mean and variance coincide (and have the value \(\lambda /\mu \)). In other words, for \(d=2\) there is no other way to obtain a Poisson stationary distribution than letting all \(\lambda _i\) be equal.
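The mixed-Poisson argument mentioned above can be spelled out with the law of total variance. Writing \(\Phi \) for the random Poisson parameter (our notation, introduced only for this illustration), so that \(\bar{M}(\infty )\) given \(\Phi \) is Poisson with mean \(\Phi \), one has

\[
\mathbb{E }\bar{M}(\infty ) = \mathbb{E }\,\mathbb{E }[\bar{M}(\infty )\mid \Phi ] = \mathbb{E }\Phi ,
\]

\[
\mathbb{V }\mathrm{ar}\,\bar{M}(\infty ) = \mathbb{E }\,\mathbb{V }\mathrm{ar}(\bar{M}(\infty )\mid \Phi ) + \mathbb{V }\mathrm{ar}\,\mathbb{E }[\bar{M}(\infty )\mid \Phi ] = \mathbb{E }\Phi + \mathbb{V }\mathrm{ar}\,\Phi \ge \mathbb{E }\bar{M}(\infty ),
\]

with equality if and only if \(\Phi \) is almost surely constant.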

5.2 Computational results

We include computational results demonstrating the converging behavior of the two-state scaled process in one dimension (i.e., \(K=1\) in Thm. 1). Unscaled, the parameters are \({\varvec{\lambda }} = (1,2), {\varvec{\mu }} = (1,1)\), and \({\varvec{q}}=( 1, 3)\). Depicted in Fig. 1 is the limiting behavior of Eq. (9) assuming exponential service times, obtained by solving the scaled version of the differential equation (5) with the mgf parameter \(\vartheta =0.5\) and \(\varepsilon = 0.5\). The corresponding limiting curve from Eq. (11) is plotted as well. As in the case with deterministic transition times [3], we observe log-linear convergence, with the solution curve closely following the limiting curve for \(N=1000\). Varying the parameters results in the same convergence behavior.

References

Asmussen, S., Kella, O.: Rate modulation in dams and ruin problems. J. Appl. Probab. 33, 523–535 (1996)

Baykal-Gursoy, M., Xiao, W.: Stochastic decomposition in M/M/\(\infty \) queues with Markov-modulated service rates. Queueing Syst. 48, 75–88 (2004)

Blom, J., Mandjes, M., Thorsdottir, H.: Time-scaling limits for Markov-modulated infinite-server queues. Stoch. Models 29, 112–127 (2012)

Coolen-Schrijner, P., van Doorn, E.: The deviation matrix of a continuous-time Markov chain. Probab. Eng. Inform. Sci. 16, 351–366 (2002)

D’Auria, B.: M/M/\(\infty \) queues in semi-Markovian random environment. Queueing Syst. 58, 221–237 (2008)

Dembo, A., Zeitouni, O.: Large Deviations Techniques and Applications, 2nd edn. Springer, New York (1998)

Economou, A., Fakinos, D.: The infinite server queue with arrivals generated by a non-homogeneous compound Poisson process and heterogeneous customers. Commun. Stat.: Stoch. Models 15, 993–1002 (1999)

Falin, G.: The M/M/\(\infty \) queue in random environment. Queueing Syst. 58, 65–76 (2008)

Fralix, B., Adan, I.: An infinite-server queue influenced by a semi-Markovian environment. Queueing Syst. 61, 65–84 (2009)

Glynn, P.: Large deviations for the infinite server queue in heavy traffic. Inst. Math. Appl. 71, 387–394 (1995)

Glynn, P., Whitt, W.: A new view of the heavy-traffic limit theorem for infinite-server queues. Adv. Appl. Probab. 23, 188–209 (1991)

Hellings, T., Mandjes, M., Blom, J.: Semi-Markov-modulated infinite-server queues: approximations by time-scaling. Stoch. Models 28, 452–477 (2012)

Keilson, J., Servi, L.: The matrix M/M/\(\infty \) system: retrial models and Markov modulated sources. Adv. Appl. Probab. 25, 453–471 (1993)

Kella, O., Whitt, W.: Linear stochastic fluid networks. J. Appl. Probab. 36, 244–260 (1999)

Kella, O., Stadje, W.: Markov-modulated linear fluid networks with Markov additive input. J. Appl. Probab. 39, 413–420 (2002)

Liu, L., Templeton, J.: Autocorrelations in infinite server batch arrival queues. Queueing Syst. 14, 313–337 (1993)

Neuts, M.: Matrix-Geometric Solutions in Stochastic Models: An Algorithmic Approach. Johns Hopkins University Press, Baltimore (1981)

Neuts, M., Chen, S.: The infinite server queue with semi-Markovian arrivals and negative exponential services. J. Appl. Probab. 9, 178–184 (1972)

Norris, J.: Markov Chains. Cambridge University Press, Cambridge (1997)

O’Cinneide, C., Purdue, P.: The M/M/\(\infty \) queue in a random environment. J. Appl. Probab. 23, 175–184 (1986)

Purdue, P., Linton, D.: An infinite-server queue subject to an extraneous phase process and related models. J. Appl. Probab. 18, 236–244 (1981)

Robert, P.: Stochastic Networks and Queues. Springer, Berlin (2003)

Schwabe, A., Rybakova, K., Bruggeman, F.: Transcription stochasticity of complex gene regulation models. Biophysical J. 103, 1152–1161 (2012)

Whitt, W.: Stochastic-Process Limits. Springer, New York (2001)

Willmot, G.E., Drekic, S.: Time-dependent analysis of some infinite server queues with bulk Poisson arrivals. INFOR 47, 297–303 (2009)

Acknowledgments

The authors would like to thank Koen de Turck (Ghent University) for helpful discussions. O. Kella is partially supported by The Vigevani Chair in Statistics. M. Mandjes is also with Eurandom (Eindhoven University of Technology, the Netherlands). Part of this work was done while M. Mandjes was visiting The Hebrew University

Cite this article

Blom, J., Kella, O., Mandjes, M. et al. Markov-modulated infinite-server queues with general service times. Queueing Syst 76, 403–424 (2014). https://doi.org/10.1007/s11134-013-9368-4

DOI: https://doi.org/10.1007/s11134-013-9368-4

Keywords

- Markov-modulated Poisson process

- General service times

- Queues

- Infinite-server systems

- Markov modulation

- Laplace transforms

- Fluid and diffusion scaling