Abstract

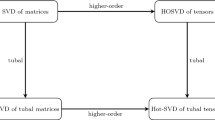

In this paper, we mainly develop the well-known vector and matrix polynomial extrapolation methods in tensor framework. To this end, some new products between tensors are defined and the concept of positive definitiveness is extended for tensors corresponding to T-product. Furthermore, we discuss on the solution of least-squares problem associated with a tensor equation using Tensor Singular Value Decomposition (TSVD). Motivated by the effectiveness of some proposed vector extrapolation methods in earlier papers, we describe how an extrapolation technique can be also implemented on the sequence of tensors produced by truncated TSVD (TTSVD) for solving possibly ill-posed tensor equations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the last few years, several iterative methods have been proposed for solving large and sparse linear and nonlinear systems of equations. When an iterative process converges slowly, the extrapolation methods are required to obtain rapid convergence. The purpose of vector extrapolation methods is to transform a sequence of vector or matrices generated by some process to a new one that converges faster than the initial sequence. The well-known extrapolation methods can be classified into two categories: the polynomial methods that include the minimal polynomial extrapolation (MPE) method of Cabay and Jackson [5], the modified minimal polynomial extrapolation (MMPE) method of Sidi, Ford, and Smith [27], the reduced rank extrapolation (RRE) method of Eddy [22] and Mesina [24], Brezinski [4] and Pugatchev [26]; and the 𝜖-type algorithms including the topological 𝜖-algorithm of Brezinski [4] and the vector 𝜖-algorithm of Wynn [30]. Efficient implementations of some of these extrapolation methods have been proposed by Sidi [28] for the RRE and MPE methods using QR decomposition, while Jbilou and Sadok [12] give an efficient implementation of the MMPE based on a LU decomposition with pivoting strategy. It was also shown that when applied to linearly generated vector sequences, RRE and TEA methods are mathematically equivalent to GMRES and Lanczos methods, respectively. Those results were also extended to the block and global cases dealing with matrix sequences; see [11, 14]. Our aim in this paper is to define the analogue of these vector and matrix extrapolation methods to the tensor framework.

Basically, in the present paper, we develop some tensor extrapolation methods namely, the Tensor RRE (TRRE), the Tensor MPE (TMPE), the Tensor MMPE (TMMPE), and the Tensor Topological 𝜖-Algorithm (TTEA). We define new products and establish some of their properties. As an application, it is shown that these new tensor extrapolation methods can be applied to sequences obtained by truncation of the Tensor Singular Value Decomposition (TSVD) to solve tensor discrete ill-posed problems.

The remainder of this paper is organized as follows. Before ending this section, we recall some fundamental concepts in tensor framework. In Section 2, we give notations, some basic definitions, and properties related to tensors. Moreover, we introduce the concept of positive definiteness for tensors with respect to T-product; some new products are also defined between tensors and their properties are analyzed. In Section 3, we introduce the tensor versions of the vector polynomial extrapolation methods namely the Tensor Reduced Rank Extrapolation (TRRE), the Tensor Minimal Polynomial Extrapolation (TMPE), the Tensor Modified Minimal Polynomial Extrapolation (TMMPE), and the Tensor Topological 𝜖-Algorithm (TTEA). Section 4 describes the TSVD and its the truncated or low rank version. In addition, as an application, the TRRE method is applied to the sequence produced by truncated TSVD for solving linear discrete tensor ill-posed problems. Some numerical experiments are reported in Section 5 for the mentioned application. Concluding remarks are given in Section 6.

Preliminaries

A tensor is a multidimensional array of data. The number of indices of a tensor is called modes or ways. Notice that a scalar can be regarded as a zero mode tensor, first mode tensors are vectors and matrices are second mode tensor. For a given N-mode tensor \( \mathcal {X}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}{\ldots } \times n_{N}}\), the notation \(x_{i_{1},\ldots ,i_{N}}\) (with 1 ≤ ij ≤ nj and j = 1,…N) stand for the element \(\left (i_{1},\ldots ,i_{N} \right ) \) of the tensor \(\mathcal {X}\). The norm of a tensor \(\mathcal {X}\in \mathbb {R}^{n_{1}\times n_{2}\times {\cdots } \times n_{\ell }}\) is specified by:

Corresponding to a given tensor \( \mathcal {X}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}{\ldots } \times n_{N}}\), the notation:

denotes a tensor in \(\mathbb {R}^{n_{1}\times n_{2}\times n_{3}{\ldots } \times n_{N-1}}\) which is obtained by fixing the last index and is called frontal slice. Fibers are the higher order analogue of matrix rows and columns. A fiber is defined by fixing all the indexes except one. A matrix column is a mode-1 fiber and a matrix row is a mode-2 fiber. Third-order tensors have column, row, and tube fibers. An element \(c\in \mathbb {R}^{1\times 1 \times n}\) is called a tubal-scalar of length n [17]. More details are found in [16, 19].

2 Definitions and new tensor products

The current section is concerned with two main parts. In the first part, we recall definitions and properties related to T-product. Furthermore, we develop the definition of positive definiteness for tensors and establish some basic results. The second part deals with presenting some new products between tensors which can be used for simplifying the algebraic computations of the main results.

2.1 Definitions and properties

In this part, we briefly review some concepts and notations related to the T-product; see [3, 8, 17, 18] for more details.

Definition 1

The T-product (∗) between two tensors \(\mathcal {X} \in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}} \) and \(\mathcal {Y} \in \mathbb {R}^{n_{2}\times m_{2}\times n_{3}} \) is an n1 × m2 × n3 tensor given by:

where

here for i = 1,…,n3, Xi and Yi are frontal slices of the tensors \(\mathcal {X}\) and \(\mathcal {Y}\), respectively.

The n1 × n1 × n3 identity tensor \(\mathcal {I}_{n_{1} n_{1} n_{3}}\) is the tensor whose first frontal slice is the n1 × n1 identity matrix, and whose other frontal slices are all zeros, that is:

where \(I_{n_{1}n_{1}}\) is the identity matrix.

In the special case in which n1 = 1, the identity tensor is a tubal-scalar and denoted by e, in other words MatVec(e) = (1,0,0…,0)T.

Definition 2

We have the following definitions:

-

1.

An n1 × n1 × n3 tensor \(\mathcal {A}\) is invertible, if there exists a tensor \({\mathscr{B}}\) of order n1 × n1 × n3 such that:

$$\mathcal{A}\ast \mathcal{B}=\mathcal{I}_{n_{1} n_{1} n_{3}} \qquad \text{and}\qquad \mathcal{B}\ast \mathcal{A}=\mathcal{I}_{n_{1} n_{1} n_{3}}$$It is clear that \(\mathcal {A}\) is invertible if and only if \(\text {bcirc}(\mathcal {A})\) is invertible (see [25]).

-

2.

If \(\mathcal {A} \) is an n1 × n2 × n3 tensor, then \(\mathcal {A}^{T}\) is the n2 × n1 × n3 tensor obtained by transposing each of the front-back frontal slices and then reversing the order of transposed frontal slices 2 through n3. It should be commented that one may define \(\mathcal {A}^{T}\) as the tensor satisfying \(\text {bcirc}(\mathcal {A})^{T}=\text {bcirc}(\mathcal {A}^{T}):\)

Example 1

If \(\mathcal {A} \in \mathbb {R}^{n_{1}\times n_{2}\times 5}\) and its frontal slices are given by the n1 × n2 matrices A1,A2,A3,A4,A5, then:

Remark 1

As pointed out earlier, the tensor \(\mathcal {A}\) of order m × m × n is invertible if \(\text {bcirc}(\mathcal {A})\) is invertible. It is equivalent to say that \(\mathcal {A}\) is invertible if \(\mathcal {A} \ast \mathcal {X}=\mathcal {O}\) implies \(\mathcal {X}=\mathcal {O}\) where \(\mathcal {X}\in \mathbb {R}^{m\times 1 \times n}\) and \(\mathcal {O}\) is zero tensor.

Here, we define the notion of positive definiteness for tensors in term of T-product which can be seen as a natural extension of the same concept for matrices.

Definition 3

The tensor \(\mathcal {A}\in \mathbb {R}^{m\times m \times n_{}}\) is said to be positive (semi) definite if

for all nonzero tensors \(\mathcal {X}\in \mathbb {R}^{m\times 1 \times n}\).

Here, we comment that it is not difficult to verify that the summation of positive definite tensors is positive definite.

Remark 2

In view of Remark 1, it is immediate to see that every positive definite tensor is invertible. It can be also verified that the inverse of a positive definite tensor is also positive definite. For any tensor \(B\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) and arbitrary nonzero tensor \(\mathcal {X}\in \mathbb {R}^{n_{2}\times 1 \times n_{3}}\), it can be seen that:

in which the last equality follows from [18]. As a result, the tensor \({\mathscr{B}}^{T}\ast {\mathscr{B}}\) is positive semi-definite. Evidently, for any scalar 𝜖 > 0:

This shows that the tensor \({\mathscr{B}}^{T}\ast {\mathscr{B}}+\epsilon ~ \mathcal {I}_{n_{2}n_{2}n_{3}}\) is positive definite.

Definition 4

Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\). Then, the tensor \( \mathcal {X}\in \mathbb {R}^{n_{2}\times n_{1} \times n_{3}}\) satisfying the following four conditions:

(a) | \(\mathcal {A}\ast \mathcal {X} \ast \mathcal {A}=\mathcal {A}\) | (b) | \(\mathcal {X}\ast \mathcal {A} \ast \mathcal {X}=\mathcal {X}\), | |

(c) | \((\mathcal {A} \ast \mathcal {X})^{T}=\mathcal {A} \ast \mathcal {X}\), | (d) | \((\mathcal {X}\ast \mathcal {A})^{T} = \mathcal {X}\ast \mathcal {A}\). |

is called the Moore-Penrose inverse of \(\mathcal {A}\) and denoted by \(\mathcal {A}^{\dagger }\).

In view of [18, Lemmas 3.3 and 3.16] and by using straightforward computations, one can easily observe that conditions (a)-(d) determine \(\mathcal {A}^{\dagger }\) uniquely. Here, we further comment that if \(\mathcal {A}\) is invertible, then \(\mathcal {A}^{\dagger } =\mathcal {A}^{-1}\).

For \(\mathcal {X},\mathcal {Y}\), two tensors in \(\mathbb {R}^{n_{1}\times 1\times n_{3}}\), the T-scalar product \(\left \langle {.,.} \right \rangle \) is a bilinear form defined by:

Let \(\mathcal {X}_{1},\ldots , \mathcal {X}_{\ell }\) a collection of ℓ third tensors in \(\mathbb {R}^{n_{1}\times 1\times n_{3}}\), if

where αi is a non-zero scalar, then the set \(\mathcal {X}_{1},\ldots , \mathcal {X}_{\ell }\) is said to be an orthogonal collection of tensors. The collection is called orthonormal if αi = 1, i = 1,…,l.

Definition 5

An n × n × ℓ real-values tensor \(\mathcal {Q}\) is said to be orthogonal if \(\mathcal {Q}^{T} \ast \mathcal {Q} =\mathcal {Q} \ast \mathcal {Q}^{T} =\mathcal {I}_{nn\ell }\).

We end this part with the following proposition.

Proposition 1

Suppose that \(\mathcal {A}\) and \({\mathscr{B}}\) are two tensors of order n1 × n2 × n3. Then

Proof

Let \(\widetilde {\mathcal {I}}_{\tau }\) be an n2 × 1 × n3 tensor whose all frontal slices are zero except its first frontal being the τ-th column of the n2 × n2 identity matrix. Then, we have:

Notice also that:

From [18, Lemma 3.3], it is known that:

which completes the proof. □

We end this part by the following simple remark which is helpful in the sequel for more clarification.

Remark 3

In what follows, we may need to solve a system of tensor equations in the following form:

where \(\mathcal {X}_{1}, \mathcal {X}_{2},\ldots ,\mathcal {X}_{k}\) are the unknown tensors to be determined. The above system is uniquely solvable if the following system of equations has only the trivial solution:

where \(\mathcal {O}\) stands for the zero tensor. When the diagonal tensors \(\mathcal {A}_{11}, \mathcal {A}_{22},\ldots ,\mathcal {A}_{kk}\) are invertible, we can easily conclude that (2) has a unique solution in the following two special cases:

-

\(\mathcal {A}_{ij}=0\) for j > i,

-

\(\mathcal {A}_{ij}=0\) for j < i,

for i,j = 1,2,…,k.

2.2 New tensor products

In order to simplify derivation of generalized extrapolation methods in tensor format, we need to define new tensor products.

Definition 6

Let \(\mathcal {A}\) and \({\mathscr{B}}\) be 4-mode tensors with frontal slices \(\mathcal {A}_{i}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) and \({\mathscr{B}}_{j}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) for i = 1,2,…,ℓ and j = 1,2,…,k, respectively. The product \(\mathcal {A} \diamondsuit {\mathscr{B}} \) is defined as a 5-mode tensor of order n2 × n2 × n3 × k × ℓ defined as follows:

for i = 1,2,…,ℓ and j = 1,2,…,k. In the case where k = 1, i.e. \({\mathscr{B}}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\), \(\mathcal {A} \diamondsuit {\mathscr{B}} \) is a 4-mode tenor whose i-th frontal slice is given by \(\mathcal {A}_{i}^{T} \ast {\mathscr{B}}\) for i = 1,2,…,ℓ.

Here, we comment that the ♢ product can be seen as a generalization of ◇-product between two matrices given in [2]. In the sequel, we further present an alternative product called ⋆-product which can be seen as an extension of the ∗-product between a set of matrices and vectors; see [13] for more details.

Definition 7

Let \(\mathcal {A}\) be a 5-mode tensor of order n2 × n1 × n3 × k × ℓ and \({\mathscr{B}}\) be a 4-mode tensor with frontal slices \({\mathscr{B}}_{1},\ldots ,{\mathscr{B}}_{k}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\). The product \(\mathcal {A} \star {\mathscr{B}}\) is defined as a 4-mode tensor of order n2 × n2 × n3 × ℓ such that:

Evidently, a 4-mode tensor of order n2 × n1 × n3 × k can be considered as an 5-mode tensor of order n2 × n1 × n3 × k × 1. As a result, if \(\mathcal {A}\) is a 4-mode tensor of order n2 × n1 × n3 × k, i.e., ℓ = 1, then \(\mathcal {A} \star {\mathscr{B}}\in \mathbb {R}^{n_{2} \times n_{2} \times n_{3}}\) defined by \(\mathcal {A} \star {\mathscr{B}}= {\sum }_{\eta =1}^{k} \mathcal {A}_{\eta } \ast {\mathscr{B}}_{\eta }\) where \(\mathcal {A}_{i}\) stands for the i-th frontal slice of \(\mathcal {A}\) for i = 1,2,…,k.

In the main results, it is worth to define a notion of the left inverse of a 5-mode tensors dealing with ∗, ⋆, and ♢ products. To this end, we define the \(\bar {\star }\)-product between two 5-mode tensors which can be seen as an extension of ⋆ product.

Definition 8

Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3} \times k \times \ell }\) and \({\mathscr{B}}\in \mathbb {R}^{n_{2}\times n_{1} \times n_{3} \times k \times \ell }\), the product \(\mathcal {A}~ \bar {\star }~ {\mathscr{B}}\) is a 5-mode tensor of order n1 × n1 × n3 × k × k such that for τ,η = 1,2,…,k,

Definition 9

The tensor \({\mathscr{B}}^{+}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3} \times k \times \ell }\) is called a left inverse of \({\mathscr{B}}\in \mathbb {R}^{n_{2}\times n_{1} \times n_{3} \times k \times \ell }\), if

for τ,η = 1,2,…,k. Here, \(\mathcal {O} \) is the 3-mode zero tensor of order n1 × n1 × n3.

Now, we establish a proposition which reveals the relation between the two proposed products ⋆ and \(\bar {\star }\).

Proposition 2

Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3} \times k \times k}\), \({\mathscr{B}}\in \mathbb {R}^{n_{2}\times n_{1} \times n_{3} \times k \times k}\), and \(\mathcal {Y} \in \mathbb {R}^{n_{1} \times n_{2} \times n_{3} \times k}\). Then, the following relation holds:

Here, \(\mathcal {A}^{*}\) stands for an 5-mode tensor of order n1 × n2 × n3 × k × k associated with \(\mathcal {A}\) where \(\mathcal {A}^{*}_{:::ij}=\mathcal {A}_{:::ji}\) for 1 ≤ i,j ≤ k.

Proof

It is clear that both sides of the above relation are 4-mode tensors of order n1 × n2 × n3 × k. To prove the assertion, we show that the frontal slices of both sides are equal. Let 1 ≤ z ≤ k; we can observe that:

The result follows immediately from the above computations. □

Remark 4

Notice that the system (2) in Remark 3 can be rewritten in the following form:

where \(\mathcal {A}\) is 5-mode tensor such that \(\mathcal {A}_{:::ji}=\mathcal {A}_{ij}\) and \(\mathcal {X},{\mathscr{B}}\) are 4-mode tensors with frontal slices \(\mathcal {X}_{i},{\mathscr{B}}_{i}\) for i,j = 1,2,…,k. In theoretical point of view, it turns out that the solution of above equation can be uniquely derived if the left inverse of \(\mathcal {A}\) (uniquely) exists. In fact if \(\mathcal {C}=\mathcal {A}^{+}\) then \(\mathcal {C}^{*}\star (\mathcal {A} \star \alpha ) =\mathcal {C}^{*}\star {\mathscr{B}}\). Now, from the previous proposition, we get \(\mathcal {X} =\mathcal {C}^{*}\star {\mathscr{B}}\).

We end this section by a proposition which can be established by using straightforward algebraic computations.

Proposition 3

Let \(\mathcal {A},{\mathscr{B}},\mathcal {C}\in {\mathbb R}^{n_{1}\times s \times n_{3}\times \ell }\) and \(\mathcal {D}\in {\mathbb R}^{s\times n_{2} \times n_{3}}\). The following statements hold:

-

1.

\( (\mathcal {A}+{\mathscr{B}})\diamondsuit \mathcal {C} = \mathcal {A}\diamondsuit \mathcal {C} +{\mathscr{B}}\diamondsuit \mathcal {C}\)

-

2.

\(\mathcal {A}\diamondsuit ({\mathscr{B}}+ \mathcal {C}) = \mathcal {A}\diamondsuit {\mathscr{B}} +\mathcal {A}\diamondsuit \mathcal {C}\)

-

3.

\((\mathcal {A}\diamondsuit {\mathscr{B}})\star \mathcal {D}=\mathcal {A} \diamondsuit ({\mathscr{B}} \star \mathcal {D})\).

3 Extrapolation methods based on tensor formats

In this section, we define new tensor extrapolation methods. In the first part, we present in the tensor polynomial-type extrapolation methods by using the new tensor products introduced in the preceding section. In the second part, a tensor topological 𝜖-algorithm is developed. We notice that when we are dealing with vectors, all these new methods reduce to the classical vector extrapolation methods.

3.1 Tensor polynomial-type extrapolation methods

Corresponding to a given sequence of tensors (Sn) in \(\mathbb {R}^{n_{1}\times n_{2} \times n_{3} }\), we consider the transformation \(({T}_{k}^{(n)})\) defined by:

where the 4-mode \({\mathcal {G}}_{k,n}\) with frontal slices \({G}_{i}(n) \in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) is given for i = 1,…,k. The 4-mode tensor \(\alpha ^{}_{k}\) is unknown and its frontal slices are denoted by \(\alpha _{i}^{(n)} \in \mathbb {R}^{n_{2}\times n_{2}\times n_{3}}\) for i = 1,…,k. As the vector and matrix cases, in extrapolation methods, we aim to determine the unknown tensors. To this end, we use the transformation \(\widetilde {T}_{k}^{(n)}\) obtained from \({T}_{k}^{(n)}\) as follows:

here, the 4-mode \({\mathcal {G}}_{k,n+1}\) has frontal slices \( {G}_{i}(n+1) \in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) for i = 1,…,k. Let Δ denote the forward difference operator on the index n defined as ΔSn = Sn+ 1 − Sn and \({\varDelta } {\mathcal {G}}_{k,n} \) stand for the 4-mode tensor whose frontal slices are given by ΔGi(n) = Gi(n + 1) − Gi(n) for i = 1,…,k. The generalized residual of \({T}_{k}^{(n)}\) is represented by \({R}({T}_{k}^{(n)})\) defined as follows:

For an arbitrary given set of tensors \({Y}_{1}^{(n)}, \ldots ,{Y}_{k}^{(n)}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3} }\), let \(\widetilde {\mathbf {H}}_{k,n} \) and \(\widetilde {\mathbf {L}}_{k,n}\) denote the subspaces generated by ΔG1(n),…,ΔGk(n) and \({Y}_{1}^{(n)}, \ldots ,{Y}_{k}^{(n)}\) respectively. We comment that subspace generated by ΔG1(n),…,ΔGk(n) is the subspace generated with respect to the multiplication ⋆ . Evidently, we have

and the unknown tensors \(\alpha _{i}^{(n)}\) are determined by imposing the following condition,

Let \({\mathscr{L}}_{k,n}\) be a 4-mode tensor with frontal slices \({Y}_{1}^{(n)}, \ldots ,{Y}_{k}^{(n)}\), the above orthogonality condition can be equivalently expressed by

where \(\mathcal {O}\) is a zero tensor of order n2 × n2 × n3 × k. Therefore, from Proposition 3, we have:

In fact the unknown tensor αk can be seen as the solution of thee following tensor equation:

The choices of sequence of tensors G1(n),…,Gk(n) and \({Y}_{1}^{(n)},\ldots ,{Y}_{k}^{(n)}\) determine the type of the tensor polynomial extrapolation method. In fact, for all these polynomial-type methods, the auxiliary sequence of tensors is given by Gi(n) = ΔSn+i− 1 for i = 1,…,k (n ≥ 0). The following choices for \({Y}_{1}^{(n)},\ldots , {Y}_{k}^{(n)}\) can be used:

where the operator Δ2 refers to the second forward difference with respect to the index n defined as:

for i = 1,…,k. The approximation \({T}_{k}^{(n)}\) produced by TMPE, TRRE, and TMMPE can be also expressed as follows:

and the unknown tensors \(\gamma _{0}^{(k)},\gamma _{1}^{(k)},\ldots ,\gamma _{k}^{(k)}\) are determined by imposing the following condition:

such that \(\gamma _{k}^{(k)}\) is assumed to be invertible. Here, \(\mathcal {O}_{n_{2}n_{2}n_{3}}\in {\mathbb R}^{n_{2}\times n_{2} \times n_{3}} \) is the tensor with all entries equal to 0, \(\eta _{i,j}^{(n)}= ({Y}_{i+1}^{(n)})^{T} \ast {\varDelta } {S}_{n+j}\).

From now on and for simplification, we set n = 0. The system of (7) is given in the following form:

Let \({\beta }_{i}^{(k)} = {\gamma }_{i}^{(k)} \ast ({\gamma }_{k}^{(k)})^{-1} \) where \(({\gamma }_{k}^{(k)})^{-1} \) is the inverse of \({\gamma }_{k}^{(k)}\), i.e., \({\gamma }_{k}^{(k)}\ast ({\gamma }_{k}^{(k)})^{-1}=\mathcal {I}_{n_{2}n_{2}n_{3}}\), for 0 ≤ l ≤ k. The system of (8) can be rewritten as follows:

The above system can be transformed in the following form:

where βk is the 4-mode tensor with the k frontal slices \({ \beta }^{(k)}_{0},\ldots ,{ \beta }^{(k)}_{k-1}\) and \(\mathcal {V}_{k}\) is a 4-mode tensor whose i-th frontal slice is given by ΔSi− 1 for i = 1,2,…,k. We consider the case that (10) has unique solution (see Remark 3). Notice that from the first relation in (8), we get:

Therefore, setting \({\beta }_{k}^{(k)} =\mathcal {I}_{n_{2}n_{2}n_{3}},\) we conclude that the inverse of \({{\sum }_{l=0}^{k} {\beta }_{l}^{(k)} }\) exists when \(\gamma _{k}^{(k)}\) is assumed to be invertible. It is not difficult to verify that:

Having γ0,γ1,…,γk computed, we set:

Setting \({T}_{k}= {T}_{k}^{(0)}\), we get:

where Vj = ΔSj the (j + 1)-th frontal slice of \(\mathcal {V}_{k}\) for j = 0,…,k − 1 and αk is a 4-mode tensor with frontal slices \({\\alpha}_{0}^{(k)},\ldots ,{\alpha }_{k-1}^{(k)}\). To determine \(\gamma ^{(k)}_{i}\) for i = 0,1,…,k, we first we need to compute β(k) by solving system of (10). Using (4), (12), and (13), the generalized residual R(Tk) can be also seen as follows:

in which γk and \(\mathcal {V}_{k+1}\) are 4-mode tensors with whose i-th frontal slices are respectively given by \({\gamma }_{i-1}^{(k)}\) and Vi− 1 for i = 1,2,…,k + 1.

3.2 The tensor toplogical 𝜖- transformation

For vector sequences, Brezinski [4] proposed the well-known topological 𝜖-algorithm (TEA) which is a generalization of the scalar 𝜖-algorithm [30] known as a technique for transforming slowly convergent or divergent sequences. In this section, we briefly see how to extend this idea in tensor framework and define the Tensor Toplogical 𝜖-Transformation (TTET). The following results can be regarded as the generalization of discussions in [11, Subsection 3.2] to the tensor framework.

Let (Sn) be a given sequence of tensors in \(\mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\). We consider approximations \({E}_{k}({S}_{n})={E}_{k}^{(n)}\) of the limit or the anti-limit of the of sequence \(({S}_{n})_{n\in \mathbb {N}} \) such that:

where \({\beta }_{i}^{(n)}\in \mathbb {R}^{n_{2}\times n_{2} \times n_{3} }\) are the unknown tensors to be determined for i = 1…,k. We set:

where \(\widetilde {{E}}_{k,0}^{(n)}={E}_{k}^{(n)}\). Let \( \widetilde {R}_{j}(\bar {E}^{(n)}_{k})\) denote the j-th generalized residual tensor, i.e.:

Let \({Y}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) be a given third-order tensor. The coefficients \({\beta }_{i}^{(n)}\) in (15) are computed such that:

where \(\mathcal {O}\in \mathbb {R}^{n_{2}\times n_{2}\times n_{3}}\). The above conditions result in the following system of equations:

In the case that the above system is uniquely solvable, we can obtain \({E}_{k}^{(n)}\).

4 Application of tensor extrapolation methods to solve ill-posed tensor problems

The main purpose of this section is to adopt the idea used by Jbilou et al. [15] for solving a class of ill-posed tensor equations. To this end, first, we need to give a brief overview on some basic concepts related to tensor SVD (TSVD) and its truncated version [18] based on the T-product of tensors.

4.1 TTSVD: truncated tensor singular value decomposition

Truncating the SVD of a matrix can lead to an efficient approximation for its Moore–Penrose inverse which is helpful for solving least-squares problem. More precisely, the truncated version consumes less space of storage when the rank of matrix is not very large. This inspired Miao et al. [25] to extend the theory of TSVD [18] to truncated TSVD (TTSVD).

In this part, first, we review some existing results for TSVD and TTSVD. Then, an explicit form is obtained for the minimum norm solution of least-squares problem with respect to the T-product. In the sequel, an F-diagonal tensor refers to a third-order tensor whose all frontal slices are diagonal.

The following theorem is proved in [18] where the existence of TSVD is established by construction.

Theorem 1

[18] Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}}\) be a real valued tensor, then there exists orthogonal tensors \(\mathcal {U}\in \mathbb {R}^{n_{1}\times n_{1}\times n_{3}} \) and \(\mathcal {V}\in \mathbb {R}^{n_{2}\times n_{2}\times n_{3}} \) such that

in which \(\mathcal {S}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}} \) is an F-diagonal tensor.

The notion of TTSVD of \(\mathcal {A}\) was used in [18, 25]. In particular, the following result was proved in [25, Corollary 6].

Corollary 1

[25] Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) be a real valued tensor and

where \(\mathcal {U}_{(k)}\in \mathbb {R}^{n_{1}\times k\times n_{3}} \) and \(\mathcal {V}_{(k)}\in \mathbb {R}^{n_{2}\times k\times n_{3}} \) are unitary tensors extracted from TSVD of \(\mathcal {A}\). The Moore–Penrose inverse of tensor \(\mathcal {A}_{k}\) is given by:

where \(\mathcal {S}_{k}\in \mathbb {R}^{k\times k\times n_{3}} \) is a F-diagonal tensor and k < min(n1,n2) is called the tubal-rank of \(\mathcal {A}\) based on the T-product.

The expression (17) can be regarded the rank-k approximation of the tensor \(\mathcal {A}\), denoted by \(\mathcal {A}\approx \mathcal {A}_{k}\). We comment that the decomposition (17) is also called the tensor compact SVD (T-CSVD); see [25] for instance.

The TTSVD of \(\mathcal {A}\) can be expressed as follows:

where \(\bar {U}_{j}=\mathcal {U}_{(k)}(:,j,:) \in \mathbb {R}^{n_{1}\times 1\times n_{3}} \), \(\bar {V}_{j}=\mathcal {V}_{(k)}(:,j,:)\in \mathbb {R}^{n_{2}\times 1\times n_{3}}\) and \(d_{j}=\mathcal {S}(j,j,:)\in \mathbb {R}^{1\times 1\times n_{3}} \) for j = 1,2,…,k.

Remark 5

Let \(\mathcal {A}=\mathcal {U}\ast \mathcal {S} \ast \mathcal {V}^{T}\) be the TSVD of \(\mathcal {A}\). In view of Proposition 1, we can see that:

Therefore, we have \(\bar {U}_{i}^{T} \ast \bar {U}_{j}\) and \(\bar {V}_{i}^{T} \ast \bar {V}_{j}\) are zero tubal-scalar of length n3 for i≠j; \(\bar {U}_{i}^{T} \ast \bar {U}_{i}=\textbf {e}\) and \(\bar {V}_{i}^{T} \ast \bar {V}_{i}= \textbf {e}.\)

The following theorem reveals that \(\mathcal {A}_{k}\) is an optimal approximation of a tensor; see [18] for the proof.

Theorem 2

Let the TSVD of \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) be given by \(\mathcal {A}=\mathcal {U} \ast \mathcal {S} \ast \mathcal {V}^{T}\) and for \(k < \min \limits (n_{1},n_{2})\), the tensor \(\mathcal {A}_{k}\) given by (18) satisfies:

where \(M=\{\mathcal {C}=\mathcal {X}\ast \mathcal {Y}~|~\mathcal {X}\in \mathbb {R}^{n_{1}\times k\times n_{3}}, \mathcal {Y}\in \mathbb {R}^{k\times n_{2}\times n_{3}}\}\).

In view of Theorem 2, the Moore-Penrose inverse of tensor \(\mathcal {A}\) is efficiently estimated on \(\widetilde {M}=\{\mathcal {C}=\mathcal {X}\ast \mathcal {Y}~|~\mathcal {X}\in \mathbb {R}^{n_{2}\times k\times n_{3}}, \mathcal {Y}\in \mathbb {R}^{k\times n_{1}\times n_{3}}\}\) by

in which tubal-scalar \({d}_{j}^{\dagger }\in \mathbb {R}^{1\times 1 \times n_{3}}\) stands for the (j,j,:) entry of \(\mathcal {S}_{(k)}^{\dagger }\).

The following theorem has a key role in deriving the results of the next section.

Theorem 3

Assume that \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2} \times n_{3}}\) and \({\mathscr{B}}\in \mathbb {R}^{n_{1}\times s\times n_{3}}\). If \(\hat {\mathcal {X}}=\mathcal {A}^{\dagger } \ast {\mathscr{B}}\), then

Moreover, for any \(\tilde {\mathcal {X}} \in \mathbb {R}^{n_{1}\times s\times n_{3}}\) such that \(\tilde {\mathcal {X}}\ne \hat {\mathcal {X}} \) and \(\|\mathcal {A}\ast \hat {\mathcal {X}} -{\mathscr{B}}\|=\|\mathcal {A}\ast \tilde {\mathcal {X}} -{\mathscr{B}}\|\), then \(\|\hat {\mathcal {X}}\| < \|\tilde {\mathcal {X}}\|\).

Proof

Let \(A=\mathcal {U}\ast \mathcal {S} \ast \mathcal {V}^{T}\) be the TSVD of \(\mathcal {A}\). From [18, Lemma 3.19], it can be seen that

By some straightforward computations, we have:

Setting \(\mathcal {Z}=\mathcal {V}^{T} \ast \mathcal {X}\) and \(\mathcal {W}=\mathcal {U}^{T} \ast {\mathscr{B}}\), we get:

where ∥⋅∥F is the well-known Frobenius matrix norm, the notation ⊗ stands for the Kronecker product and the matrix \(F_{n_{3}}\) is the discrete Fourier matrix of size n3 × n3 defined by (see [6]):

in which \(\omega =e^{-2\pi \textbf {i} /n_{3}}\) is the primitive n3–th root of unity in which \(\textbf {i}=\sqrt {-1}\) and F∗ denotes the conjugate transpose of F.

For simplicity, we set \(Z=(F_{n_{3}}^{*}\otimes I_{n_{2}})\text {MatVec} (\mathcal {Z})\) and \(W=(F_{n_{3}}^{*}\otimes I_{n_{1}})\text {MatVec} (\mathcal {W})\); hence, (21) can be rewritten as follows:

Notice that

From [18, 25], it is known that there exist diagonal (rectangular) matrices \({\varSigma }_{1},{\varSigma }_{2},\ldots ,{\varSigma }_{n_{3}}\) such that:

and

From the above computations, we have:

It is well-known from the literature that the minimum Frobenius norm solution of ∥Σ Z − W∥F over \(\mathbb {R}^{n_{2}n_{3}\times s}\) is given by \(\hat {Z}={\varSigma }^{\dagger } W\), i.e.:

Let \(\hat {\mathcal {X}}\) be the tensor such that

It is immediate to deduce the following equality:

which is equivalent to say that \(\mathcal {V}^{T}\ast \hat {\mathcal {X}}=\mathcal {S}^{\dagger }\ast \mathcal {U}^{T}\ast {\mathscr{B}}\). Finally, the result follows from (22). □

Similar to the TSVD [18], the TTSVD can be obtained using the fast Fourier transform. Notice that circulant matrices are diagonalizable via the normalized DFT. This fact was used for proving Theorem 1 and deriving the Matlab pseudocode for TSVD; see Algorithm 1 for more details. Here, we further use this fact and present a Matlab pseudocode for computing TTSVD and the corresponding approximation of Moore-Penrose inverse in Algorithm 2. Note that the Matlab function pinv(.) reduces to inv(.) when the diagonal matrix S in Step 2 of the algorithm is nonsingular.

4.2 Tensor extrapolation methods applied to TTSVD sequences in ill-posed problems

Let \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) be given and consider its TSVD, i.e., let us apply Algorithm 1 for \(\mathcal {A}\). In case the matrix \(\text {bcirc}(\mathcal {A})\) has too many singular values being close to zero, the tensor \(\mathcal {A}\) is called ill-determined rank.

In this subsection, we discuss solutions of tensor equations in the following form:

where the ill-determined rank tensor \(\mathcal {A}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) and right-hand side \({{\mathscr{B}}}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) are given and \(\mathcal {X}\in \mathbb {R}^{n_{2}\times n_{2}\times n_{3} }\) is an unknown tensor to be determined. We assume that the right-hand side \({{\mathscr{B}}} \in \mathbb {R}^{n_{1}\times n_{2}\times n_{3} }\) is contaminated by an error \(\mathcal {E}\), i.e., \( {{\mathscr{B}}}={ \widetilde {{\mathscr{B}}}}+\mathcal {E} \) where \(\widetilde {{\mathscr{B}}}\) denotes the unknown error-free right-hand side. We are interested in determining an accurate approximation of the minimum norm (least-squares) solution of (23). From Theorem 3, it is known that the exact solution is given by:

Systems of tensors (23) with a tensor of ill-determined rank often are referred to as linear discrete tensor ill-posed problems. They arise in several areas in science and engineering, such as the restoration of color and multispectral images [1, 20, 29], and blind source separation [21], when one seeks to determine the cause of an observed effect.

In the matrix case, when the size of the rank deficient matrix A is moderate, using the truncated SVD is a popular method for computing an approximation for the (least-squares) solution of (in)consistent linear system of equations Ax = b. The approach replaces the matrix A† by a low-rank approximation; see, e.g., Golub and Van Loan [7] or Hansen [9]. Following the same idea, we approximate the exact solution by using the expression (19) and available right-hand side \({{\mathscr{B}}}\), i.e., \(\widetilde {\mathcal {X}}\approx \mathcal {A}_{}^{\dagger }\ast {{\mathscr{B}}}\). Basically, we take \(\widetilde {\mathcal {X}}_{k}\approx \widetilde {\mathcal {X}}\) in which:

where \(\bar {U}_{j}=\mathcal {U}_{(k)}(:,j,:) \in \mathbb {R}^{n_{1}\times 1\times n_{3}} \), \(\bar {V}_{j}=\mathcal {V}_{(k)}(:,j,:)\in \mathbb {R}^{n_{2}\times 1\times n_{3}}\) and \(d_{j}=\mathcal {S}(j,j,:)\in \mathbb {R}^{1\times 1\times n_{3}} \) are determined by Algorithm 2 for j = 1,2,…,k. For the matrix case, Jbilou et al. [14] proposed the application of the RRE to the sequence of vectors generated by the truncated SVD. In the remainder of this paper, we follow the same idea and consider the application of TRRE to the sequence of tensors generated by the truncated TSVD (TTSVD). To do so, we set:

in which \(\ell =\min \limits (n_{1},n_{2})\) and (Sk)k≥ 0 is the tensor sequence generated by the TTSVD. Thus,

where \(\delta _{j}= {d}_{j}^{\dagger }\ast \bar {U}_{j}^{T} \ast {\widetilde {{\mathscr{B}}}}\) and S0 is set to be a zero tensor of order n2 × n2 × n3. It can be observed that:

We assume here that \({\delta }_{k}^{T}\ast \delta _{k}\) is invertible and notice that if \({\delta }_{k}^{T}\ast \delta _{k}\) is zero, then we can delete the corresponding member from the sequence (24) and compute the next one by keeping the same index notation. To overcome the cases \({\delta }_{k}^{T}\ast \delta _{k}\) is numerically non invertible, we can use a small shift \(\epsilon \mathcal {I}\) (say 𝜖 = 1e − 8) on the positive semi-definite tensor \({\delta }_{k}^{T}\ast \delta _{k}\); see Remark 2.

Let \({\varDelta }{\mathcal {S}_{k}}\) and \({\varDelta }^{2}{\mathcal {S}_{k}}\) be 4-mode tensors whose i-th frontal slices are given by ΔSi− 1 and \({\varDelta }^{2} {S}_{i-1}\) for i = 1,2,…,k, respectively, for i = 1,2,…,k.

Notice that \({\varDelta }^{2} {S}_{j-1}= \bar { V}_{j+1}\ast \delta _{j+1} -\bar { V}_{j}\ast \delta _{j}\) for j = 1,2,…,k. Hence,

Using Remark 5, we can deduce that the non-zero frontal slices of \({\varDelta }^{2}\tilde {\mathcal {S}_{k}} \diamondsuit {\varDelta } {\mathcal {S}_{k}}\) are given by:

and

Straightforward computations together with Remark 5 show that the frontal slices of the 4-mode tensor \(({\varDelta }^{2} {\mathcal {S}_{k}} \diamondsuit {\varDelta } {S}_{k})\) are equal to zero except the last frontal slice being equal to \(\delta _{k+1}^{T} \ast \delta _{k+1}\). For notational simplicity, we define \({\varTheta }_{i+1}=\delta _{i+1}^{T}\ast \delta _{i+1}\) for i = 0,…,k. In summary, we need to solve the following tensor equation:

where βk is a 4-mode tensor with frontal slices \({\beta }_{0}^{(k)},{\beta }_{1}^{(k)},\ldots ,{\beta }_{k-1}^{(k)}\). Or equivalently, we need to find the solution of the following system of tensor equations:

here \(\mathcal {O}\) stands for zero tensor of order n2 × n2 × n3. It is immediate to see that

is a solution of (26). Evidently, we have:

where \({\beta }_{k}^{(k)}=\mathcal {I}_{n_{2}n_{2}n_{3}}\). Note that \({\sum }_{i=0}^{k} {\varTheta }_{i+1}^{-1}\) is the summation of positive definite tensors which implies its invertibility. In fact, we have:

We comment here that the assumption made on the invertibility of \({\gamma }_{k}^{(k)}\) in Section 3.1 is implicitly satisfied here.

By the discussions in Section 3.1, from (11), we can derive \({\gamma }_{j}^{(k)}\) for j = 0,1,…,k − 1 noticing that \({\gamma }_{k}^{(k)}=\mathcal {I}_{n_{2}n_{2}n_{3}}-{\sum }_{i=0}^{k-1}\gamma _{i}^{(k)}\). More precisely, we have:

Having \({\gamma }_{0}^{(k)} ,{\gamma }_{1}^{(k)} ,\ldots ,{\gamma }_{k}^{(k)} \) obtained, we can further compute \({\alpha }_{i}^{(k)}\) for i = 0,1,…,k − 1 by (12).

Finally, the extrapolated third tensor can be written as follows:

where \({\varDelta } {\mathcal {S}_{k}}\) and \(\alpha _{}^{(k)} \) are 4-mode tensors whose j-th frontal slices are receptively given by \({\varDelta } {S}_{j-1}={S}_{j}-{S}_{j-1}= \bar {V}_{j}\ast \delta _{j}\) and \({\alpha }_{j-1}^{(k)}\) for j = 1,2,…,k.

The generalized residual can be written in the following form:

where γ(k) is a 4-mode tensor with frontal slices \({\gamma }_{0}^{(k)},{\gamma }_{2}^{(k)},\ldots ,{\gamma }_{k}^{(k)}\). By Remark 5, one can derive:

Notice that Θi+ 1 is symmetric, i.e., \({\varTheta }_{i+1}={\varTheta }_{i+1}^{T}\). It can be verified that the inverse of a symmetric tensor is also a symmetric tensor. From (27) and (29), we can observe:

Now, we can deduce that (see the proof of [18, Lemma 3.19] for more details):

For ill-posed problems, the value of ∥R(Tk)∥ decreases when k increases and is sufficiently small. However, the norm of R(Tk) may increase with k. Hence, similar to [15], we may need to simultaneously exploit an alternative stopping criterion when the problem is ill-posed. To this end, we can stop iterations once either ∥R(Tk)∥ or

is smaller than a prescribed tolerance. Using Remark 5 and some computations, one may simplify the above relation exploiting the following relations,

and

We end this part by summarizing the above discussions in Algorithm 3.

5 Numerical experiments

In this part, we consider a test problem to numerically compare the performance of Algorithm 3 with TTSVD for solving ill-conditioned problems. All computations are carried out using MATLAB with machine epsilon about 10− 16.

The desired solution \(\widetilde {\mathcal {X}}\) is chosen as a tensor with all entries being equal to 1. The error-free right-hand side is given by \(\widetilde {{\mathscr{B}}}=\mathcal {A} \ast \widetilde {\mathcal {X}}\) and the associated error-contaminated right-hand side \({\mathscr{B}}=\widetilde {{\mathscr{B}}}+\mathcal {E}\) where the error-tensor \(\mathcal {E}\) has normally distributed entries with zero mean and is normalized to correspond to a specific noise-level:

For all the experiments, we reported the relative error norms:

corresponding to TTSVD and TRRE-TSVD, respectively.

We used the Regularization Tools package by Hansen [10] to define the frontal slices A1 = shaw, A2 = baart, and A3 = foxgood of the tensor \(\mathcal {A}\). The computed condition numbers of the frontal slices of \(\mathcal {A}\) are κ(A1) = 1.25 ⋅ 1020 and κ(A2) = 1.5 ⋅ 1018, and κ(A3) = 7 ⋅ 1019 where \(\kappa (A_{i})=\Vert A_{i}\Vert _{2} \Vert A_{i}^{-1} \Vert _{2}\). In Fig. 1, we plotted, in a logarithmic scale, the singular values of the first frontal slice of the tensor \(\mathcal {S}\) such that \(\mathcal {A}=\mathcal {U} \ast \mathcal {S} \ast \mathcal {V}^{T}\) is the TSVD of the tensor \(\mathcal {A}\).

As seen, in Fig. 1, only a small number of those singular values are not close to 0 which means that the problem is very ill-conditioned. The computed tubal rank (the number of non-zero elements in the first frontal slices of \(\mathcal {S}\)) is \({\text rank}_{t}(\mathcal {A})=32\); see [23] for more details on the definition of a tubal rank.

We set n1 = n2 = 400, n3 = 3, and chose ν = 10− 2,10− 3. The obtained relative error norms corresponding to approximate solutions computed by TRRE-TTSVD and TTSVD are reported in Tables 1 and 2.

We observed numerically that after iteration k = 10 the error norm for TTSVD begins to increase while the one for TRRE-TTSVD stagnates and this is the same phenomena observed in the vector case.

6 Conclusion

We proposed in this work some tensor extrapolation methods. The first class contains the tensor extrapolation polynomial-type methods while for the second class, we introduced the tensor topological 𝜖-transformation. These techniques can be regarded as generalizations of the well-known vector and matrix extrapolation methods. Besides, we introduced some new products between two tensors which can simplify the derivation of extrapolation methods based on tensor format. Some theoretical results were also established including the properties of introduced tensor products and an expression for the minimum norm least-square solution of a tensor equation. Finally, the proposed techniques were applied on the sequence of tensors corresponding to the truncated tensor singular value decomposition which can be used for solving tensor ill-posed problems.

References

Bentbib, A.H., El Guide, M., Jbilou, K., Onunwor, E., Reichel, L.: Solution methods for linear discrete ill-posed problems for color image restoration. BIT Numer. Math. 58, 555–578 (2018)

Bouyouli, R., Jbilou, K., Sadaka, R., Sadok, H.: Convergence properties of some block Krylov subspace methods for multiple linear systems. J. Comput. Appl. Math. 196, 498–511 (2006)

Braman, K.: Third-order tensors as linear operators on a space of matrices. Linear Algebra Appl. 433, 1241–1253 (2010)

Brezinski, C.: Généralisation de la transformation de Shanks, de la table de padéé et l’epsilon algorithm. Calcolo 12, 317–360 (1975)

Cabay, S., Jackson, L.W.: A polynomial extrapolation method for finding limits and antilimits for vector sequences. SIAM J. Numer. Anal. 13, 734–752 (1976)

Chan, R., Jin, X.: An Introduction to Iterative Toeplitz Solvers. SIAM, Philadelphia (2007)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd ed. Johns Hopkins University Press, Baltimore (1996)

Hao, N., Kilmer, M.E., Braman, K., Hoover, R.C.: Facial recognition using tensor-tensor decompositions. SIAM J. Imaging Sci. 6, 437–463 (2013)

Hansen, P.C.: Rank-Deficient And discrete Ill-Posed problems. SIAM, Philadelphia (1998)

Hansen, P.C.: Regularization tools version 4.0 for MATLAB 7.3. Numer. Algorithms 46, 189–194 (2007)

Jbilou, K., Messaoudi, A.: Block extrapolation methods with applications. Appl. Numer. Math. 106, 154–164 (2016)

Jbilou, K., Sadok, H.: LU-Implementation of the modified minimal polynomial extrapolation method. IMA J. Numer. Anal. 19, 549–561 (1999)

Jbilou, K., Messaoudi, A., Sadok, H.: Global FOM and GMRES algorithms for matrix equations. Appl. Numer. Math. 31, 49–63 (1999)

Jbilou, K., Sadok, H.: Matrix polynomial and epsilon-type extrapolation methods with applications. Numer. Algorithms 68, 107–119 (2015)

Jbilou, K., Reichel, L., Sadok, H.: Vector extrapolation enhanced TSVD for linear discrete ill-posed problems. Numer. Algorithms 51, 195–208 (2009)

Kalyani, M.N., Beik, F.P.A., Jbilou, K.: On global iterative schemes based on Hessenberg process for (ill-posed) Sylvester tensor equations. J. Comput. Appl. Math. 373, 112216 (2020)

Kilmer, M.E., Braman, K., Hao, N., Hoover, R.C.: Third-order tensors as operators on matrices: a theoretical and computational framework with applications in imaging. SIAM J. Matrix Anal. Appl. 34, 148–172 (2013)

Kilmer, M.E., Martin, C.D.: Factorization strategies for third-order tensors. Linear Algebra Appl. 435, 641–658 (2011)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Li, F., Ng, M.K., Plemmons, R.J.: Coupled segmentation and denoising/deblurring for hyperspectral material identification. Numer. Lin. Alg. Appl. 19, 153–173 (2012)

Li, N., Navasca, C., Glenn, C.: Iterative methods for symmetric outer product tensor decomposition. Electron. Trans. Numer. Anal. 44, 124–139 (2015)

Long, Z., Liu, Y, Chen, L., Zhu, C.: Low rank tensor completion for multiway visual data. Sig. Proc. 155, 301–316 (2019)

Lu, C., Feng, J., Chen, Y., Liu, W., Yan, S.: Tensor robust principal component analysis with a new tensor nuclear norm. arXiv:1804.03728 (2019)

Mesina, M.: Convergence acceleration for the iterative solution of x = Ax + f. Comput. Meth. Appl. Mech. Engrg. 10, 165–173 (1977)

Miao, Y., Qi, L., Wei, Y.: Generalized Tensor Function via the Tensor Singular Value Decomposition based on the T-Product. Lin. Alg. Appl. 590, 258–303 (2020)

Pugatchev, B.P.: Acceleration of the convergence of iterative processes and a method for solving systems of nonlinear equations. USSR Comp. Math. Phys. 17, 199–207 (1977)

Sidi, A., Ford, W.F., Smith, D.A.: Acceleration of convergence of vector sequences. SIAM J. Numer. Anal. 23, 178–196 (1986)

Sidi, A.: Convergence and stability of minimal polynomial and reduced rank extrapolation algorithms. SIAM J. Numer. Anal. 23, 197–209 (1986)

Signoretto, M., Van de Plas, R., De Moor, B., Suykens, J.A.K.: Tensor versus matrix completion: a comparison with application to spectral data. IEEE Signal Process. Lett. 18, 403–406 (2011)

Wynn, P.: Acceleration technique for iterated vector and matrix problems. Math. Comp. 16, 301–322 (1962)

Acknowledgments

The authors would like to express their sincere thanks to the anonymous referee for his/her useful comments which improved the quality of paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Beik, F.P.A., Ichi, A.E., Jbilou, K. et al. Tensor extrapolation methods with applications. Numer Algor 87, 1421–1444 (2021). https://doi.org/10.1007/s11075-020-01013-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-01013-5