Abstract

Accelerating slowly convergent sequences is one of the main purposes of extrapolation methods. In this paper, we present a new tensor polynomial extrapolation method, which is based on a modified minimisation problem and some ideas leading to the recent Tensor Global Minimal Extrapolation Method (TG-MPE). We discuss the application of our method to fixed-point iterative process. An efficient algorithm via the higher order Singular Value Decomposition (HOSVD) is proposed for its implementation. The numerical tests show clearly the effectiveness and performance of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Accelerating slowly convergent sequences is one of the primary objectives of extrapolation methods. A common source of such sequences, for example, is the numerical solution of systems of linear or nonlinear equations using fixed-point iterative methods or sequences generated by quadrature techniques for integral computation. Typically, these sequences exhibit slow convergence towards the desired fixed point. As a result, to attain a satisfactory approximation of the limits of these sequences with a prescribed level of accuracy, a substantial number of iterations is necessary. This, in turn, results in a considerable computational burden.

Formally, the principle of extrapolation methods consists of transforming the terms of a certain slowly convergent sequence \((s_n)\) into a new sequence \((t_n)\) that converges more rapidly to the same limit. In fact, each extrapolation method employs a specific transformation T. For a given number \(k+1\) of elements from the basic sequence, the transformation T produces a new term \(t_n^{(k)}\) as: \(t_n^{(k)}=T(s_n,s_{n+1},\ldots ,s_{n+k})\) such that, under certain assumptions, \(\dfrac{\Vert t_n^{(k)}-s\Vert }{\Vert s_n -s\Vert }\underset{n\rightarrow \infty }{\longrightarrow }\ 0\) where s is the limit of \((s_n)\) and \(\Vert .\Vert \) is a suitable norm. As demonstrated in [12], it is worth noting that a universal transformation that accelerates the convergence of all sequences cannot exist. Actually, each transformation is only able to accelerate the convergence of a limited class of sequences. This implies that it will always be important to discover and study new transformations.

For scalars, vector and matrix sequences, many extrapolation methods have been introduced, see for example [7, 27] and the references therein. As for the tensor case that interests us in this work, extrapolation methods have been recently proposed. See for instance [2, 6, 10, 11, 16,17,18, 20, 22, 24, 25]. The primary reason for the interest in generalizing extrapolation methods to tensor sequences stems from the significant roles they have played in various disciplines, such as color and multispectral image and video processing [28], psychometrics, chemometrics, biomedical signal processing, higher-order statistics (HOS), and econometrics [1, 14]. Consequently, it becomes highly intriguing to search for appropriate transformations that expedite the convergence of tensor sequences.

In this paper, our aim is to introduce a new extrapolation method based on the combination of Higher-Order Singular Value Decomposition (HOSVD) [13], and the tensor global minimal polynomial extrapolation method (TG-MPE) [16]. A numerically stable algorithm is proposed for the implementation of the proposed method.

The outline of this paper is organized as follows. In Sect. 2, we provide the necessary definitions, notations, and some results required for this work. In Sect. 3, after a brief overview of the tensor global MPE (TG-MPE) method [16], we develop the new HOSVD-TMPE (Higher-Order Singular Value Decomposition method which is based on Tensor Minimal Polynomial Extrapolation). Section 4 outlines the algorithm for implementing this method. We establish error analysis results in Sect. 5, and in Sect. 6, we present numerical experiments that confirm the effectiveness of the proposed method.

2 Basic definitions

This section reviews some definitions and proprieties of tensors and uses notations defined by [19]. A tensor is a multidimensional array of data. The elements of a tensor are referred by using multiple indices. The required number of indices defines the order of a tensor. For a given (N-order) tensor \({\mathcal {A}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) we use the notation

The values \({\mathcal {A}}_{i_{1}i_{2}...i_{n-1}i_n i_{n+1}...i_N}\) are the entries of \({\mathcal {A}}.\)

Definition 1

[19] Given a tensor \({\mathcal {A}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) and a matrix \(M \in {\mathbb {R}}^{J_{n}\times I_{n}} \), the n-mode product of \({\mathcal {A}}\) by M, denoted by \({\mathcal {A}}\times _{n} M\), is the \((I_{1} \times I_{2}...\times I_{n-1}\times J_n \times I_{n+1}\times \cdots \times I_{N})\)-tensor defined by

The n-mode product of the tensor \({\mathcal {A}}\) by a vector \(w\in {\mathbb {R}}^{I_{n}}\), denoted by \( {\mathcal {A}}\bar{\times }_{n}w\), is the subtensor of order \((I_{1} \times I_{2}...\times I_{n-1}\times I_{n+1}\times \cdots \times I_{N})\) defined as

Definition 2

(n-mode unfolding matrix) For a given tensor \({\mathcal {A}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) and \(1\le n\le N\), the n-mode unfolding matrix of \({\mathcal {A}}\), is the (\(I_n\times I_{1}I_{2}\cdots I_{n-1} I_{n+1}\cdots I_{N}\)) matrix, denoted by \({\mathcal {A}}_{(n)}\) such that

where \(j=1+\sum _{k=1k\ne n}^{N}(i_k-1)L_k\) with \(L_k=\prod _{m=1m\ne n}^{k-1}I_m\). The columns of \({\mathcal {A}}_{(n)}\) are called the mode-n fibres of \({\mathcal {A}}.\)

Definition 3

Let \({\mathcal {A}}\) and \({\mathcal {B}}\) two tensors of the same size \(I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}\), the (Frobenius) inner product of \({\mathcal {A}}\) and \({\mathcal {B}}\) is the scalar defined as

Proposition 1

[13] For \({\mathcal {A}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\), \(M_1\in {\mathbb {R}}^{J_{n}\times I_{n}}, M_3\in {\mathbb {R}}^{J_{p}\times I_{N}}\) and \(M_2\in {\mathbb {R}}^{K_{n}\times J_{n}}\) (\(n\ne p\)), we have the following properties

-

1.

\(({\mathcal {A}} \times _n M_1)\times _p M_3=({\mathcal {A}} \times _p M_3)\times _n M_1={\mathcal {A}} \times _n M_1\times _p M_3.\)

-

2.

\(({\mathcal {A}} \times _n M_1)\times _n M_2 ={\mathcal {A}} \times _n(M_2M_1)\).

-

3.

If \(M_1\) is orthogonal, then \(\parallel {\mathcal {A}} \times _n M_1\parallel _{F}=\parallel {\mathcal {A}}\parallel _{F}.\)

-

4.

If \(x\in {\mathbb {R}}^{I_n} \) and \(J_{n}=I_n\) then \(({\mathcal {A}} \times _n M_1)\bar{\times }_n x ={\mathcal {A}} \bar{\times }_n(M_{1}^{T}x)\).

-

5.

For \(x\in {\mathbb {R}}^{I_n}\), \({\mathcal {A}} \bar{\times }_n x= {\mathcal {A}} \times _n x^{T}\).

Definition 4

[16]. The Einstein product of two tensors \({\mathcal {A}} \in {\mathbb {R}}^{I_{1} \times \ldots \times I_{N} \times J_{1} \times \cdots \times J_{M}}\) and \({\mathcal {B}} \in {\mathbb {R}}^{J_{1} \times \cdots \times J_{M} \times K_{1} \times \cdots \times K_{L}}\), is the \(I_{1} \times \ldots \times I_{N} \times K_{1} \times \cdots \times K_{L}\) tensor denoted by \({\mathcal {A}} *_{M} {\mathcal {B}}\) whose entries are given by

Definition 5

[16]. The tensor product denoted \(\boxdot ^{(N+1)}\) of two \((N+1)\)-mode tensors \({\mathcal {A}}=\left[ {\mathcal {A}}_{1}, {\mathcal {A}}_{2},\ldots ,{\mathcal {A}}_{m}\right] \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}\times m}\) and \({\mathcal {B}}=\left[ {\mathcal {B}}_{1}, {\mathcal {B}}_{2},\ldots ,{\mathcal {B}}_{n}\right] \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N} \times n}\), where \({\mathcal {A}}_{i}\in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) and \({\mathcal {B}}_{i}\in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\), is the \(m\times n\) matrix whose (i, j) entries, \(i=1,\ldots ,m \) and \(j=1,\ldots ,n,\) is given by

Proposition 2

Let \({\mathcal {A}}\), \({\mathcal {B}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}\times I_{N+1}}\), \({\mathcal {C}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) and \(x\in {\mathbb {R}}^{I_{N+1}}\). Then we have

-

1.

\(({\mathcal {A}}\boxdot ^{(N+1)}{\mathcal {B}})^{T}={\mathcal {B}}\boxdot ^{(N+1)}{\mathcal {A}}\)

-

2.

\(({\mathcal {A}}\boxdot ^{(N+1)}{\mathcal {B}})x={\mathcal {A}}\boxdot ^{(N+1)}({\mathcal {B}}\bar{\times }_{(N+1)}x).\)

-

3.

\(\langle ({\mathcal {A}}\boxdot ^{N+1}{\mathcal {B}})x,x\rangle =\langle {\mathcal {B}}\bar{\times }_{(N+1)}x,{\mathcal {A}}\bar{\times }_{(N+1)}x\rangle .\)

-

4.

\(\langle {\mathcal {C}},{\mathcal {A}}\bar{\times }_{(N+1)}x\rangle =({\mathcal {C}}\boxdot ^{(N+1)}{\mathcal {A}})x\)

Proof

By definitions of the involved tensor products. \(\square \)

3 The HOSVD-TMPE method

First of all, let us recall the Tensor Global Minimal Extrapolation Method (TG-MPE) proposed in [16] that is the starting point of this work.

Let \({\mathcal {S}}_{0},{\mathcal {S}}_{1},{\mathcal {S}}_{2}...\) be a given sequence of real tensors of the same dimension \({I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) and set

Define for some fixed n and k, the \(I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}\times I_{N+1}\) tensor \({\mathbb {D}}_{k}^{(n)}\) by

such that the \(i^{th}\) frontal slice, obtained by fixing the last index at i, is \(\left[ {\mathbb {D}}^{(n)}_{k}\right] _{:,:,\ldots ,:,i}={\mathcal {D}}_{n+i}\), \(0\le i\le k\).

The TG-MPE method is based on the solution, for \(\bar{\alpha }^{(k)}=\left( \alpha ^{(k)}_{0},\alpha ^{(k)}_{1},\ldots ,\alpha ^{(k)}_{k-1}\right) \in {\mathbb {R}}^{k}\), of the problem

for which the authors adopt a tensor QR based approach to obtain the solution (see [16] for details).

Once \(\alpha ^{(k)}_{0},\alpha ^{(k)}_{1},\ldots ,\alpha ^{(k)}_{k-1}\) have been determined, set \(\alpha ^{(k)}_{k}=1\). Provided that \(\displaystyle \sum _{i=0}^{k}\alpha ^{(k)}_{i}\ne 0\), compute the coefficients \(\delta ^{(k)}_{0}, \delta ^{(k)}_{1},\ldots ,\delta ^{(k)}_{k}\) as

Finally we set

as an approximation to the limit of the sequence \(({\mathcal {S}}_n).\)

Now, let us outline the approach we followed in developing our proposed method.

First, using assertion 1 of Proposition 2, we rewrite the problem in (1) as follows

The next proposition allows us to transform the above problem to an equivalent minimisation one.

Proposition 3

The equation (4) is equivalent to the minimization least squares tensor problem

Proof

We set \(g(x)=\parallel {\mathbb {D}}_{k-1}^{(n)}\bar{\times }_{(N+1)}x+{\mathcal {D}}_{n+k}\parallel _F^{2}\). For a vector \(h\in {\mathbb {R}}^{k}\) and a non zero scalar t, we have

Therefore, the gradient of g at x is as \(\bigtriangledown g(x)={\mathbb {D}}_{k-1}^{(n)}\boxdot ^{N+1}({\mathbb {D}}_{k-1}^{(n)}\bar{\times }_{(N+1)}x+{\mathcal {D}}_{n+k})\). It is easy to check that the matrix \({\mathbb {D}}_{k-1}^{(n)}\boxdot ^{N+1}{\mathbb {D}}_{k-1}^{(n)}\) is positive semi-definite, which guarantees the convexity of g. As a result

\(\square \)

Therefore, the problem in (4) is equivalent to

which can be expressed as the following constrained problem

Rewriting the basic problem as a constrained minimization problem is indeed the core concept of this work. Specifically, for our proposed method (HOSVD-TMPE), we have replaced the constraint \(\alpha ^{(k)}_{k}=1\) in (7) with the new constraint \(\parallel \alpha ^{(k)}\parallel _2=1\), resulting in the new constrained minimization problem

Again, with \(\alpha ^{(k)}_{0},\alpha ^{(k)}_{1},\ldots ,\alpha ^{(k)}_{k}\) determined and provided \(\sum _{i=0}^{k}\alpha ^{(k)}_{i}\ne 0\), we compute similarly the scalars \(\delta ^{(k)}_{0}, \delta ^{(k)}_{1},\ldots ,\delta ^{(k)}_{k}\) as in (2) and the new sequence term \({\mathcal {T}}_{k}^{(n)}\) as in (3).

Remark 1

The purpose of this new constraint choice is not to obtain a unit solution, as it may seem, since we can achieve that simply through the normalization of the result obtained by TG-MPE. Instead, as will be demonstrated, it enables us to easily characterize the desired approximate solution and develop an efficient algorithm for its determination.

The next theorem recalls the HOSVD decomposition [13], which is a highly valuable tool for establishing the main result in this work.

Theorem 4

(HOSVD) [13] Let \({\mathcal {A}}\) be a real tensor of dimension \(I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}\). Then \({\mathcal {A}}\) can be decomposed as

where \(V^{(n)}=\left[ v_{1}^{(n)}v_{2}^{(n)}...v_{I_n}^{(n)}\right] \) is a unitary \(I_{n}\times I_{n}\) matrix \((1\le n\le N)\), \(\Sigma \) is a real tensor of the same dimension as \({\mathcal {A}}\) and the subtensors \(\Sigma _{i_{n}=m}\) \((1\le m\le I_{n})\) obtained by fixing the \(n^{th}\) index to m, have the following properties

-

All-orthogonality \(\langle \Sigma _{i_{n}=m},\Sigma _{i_{n}=m'}\rangle =0 \hspace{0.4cm} for\hspace{0.2cm} m\ne m'.\)

-

\(\parallel \Sigma _{i_{n}=1}\parallel _{F}\geqslant \parallel \Sigma _{i_{n}=2}\parallel _{F}\geqslant \cdots \parallel \Sigma _{i_{n}=I_{n}}\parallel _{F}\geqslant 0\) for all possible values of n.

The quantity \(\parallel \Sigma _{i_{n}=i}\parallel _{F}\) is called the \(i^{th}\) n-mode singular value of \({\mathcal {A}}\), it is symbolized by \(\sigma _{i}^{(n)}\); and the vector \(v_{i}^{(n)}\) is the \(i^{th}\) n-mode singular vector.

In the following main result, we provide a characterization of the solution \(\alpha ^{(k)}\) of the minimisation problem (8).

Theorem 5

Let \(\sigma _{1}^{(N+1)},\sigma _{2}^{(N+1)},\ldots ,\sigma _{k+1}^{(N+1)}\) be the \((N+1)\)-mode singular values of \({\mathbb {D}}_{k}^{(n)}\) ordered as in

and let \(\lbrace v_{i}^{(N+1)}\rbrace _{i=1}^{k+1}\) be the corresponding \((N+1)\)-mode singular vectors obtained from the HOSVD,

If the smallest \((N+1)\)-mode singular value \(\sigma _{k+1}^{(N+1)}\) of \({\mathbb {D}}_{k}^{(n)}\), is simple, in the sense that \(\sigma _{k}^{(N+1)}\ne \sigma _{k+1}^{(N+1)} \), then the solution \(\alpha ^{(k)} \) to the minimization problem (8) is unique (up to a multiplicative constant \(\rho \), \(\mid \rho \mid =1)\) and is given as \(\alpha ^{(k)}=v_{k+1}^{(N+1)}. \)

Proof

First, let us show that

In fact, by Assertion 4 of Proposition 1, we have

Since \(V^{(N+1)}\) is unitary, one has \( (V^{(N+1)})^{T}v_{k+1}^{(N+1)}=(0,0,\ldots ,1)^{T}=e_{k+1}\in {\mathbb {R}}^{k+1}. \) Therefore, using Assertion 1 and 5 of Proposition 1,

As a result, using Assertion 3 of Proposition 1, we get

Now, let \(\beta \) be an arbitrary vector in \({\mathbb {R}}^{k+1}\) such that \(\Vert \beta \Vert _2=1\). Since \( V^{(N+1)}=\left[ v_{1}^{(N+1)}v_{2}^{(N+1)}...v_{I_N+1}^{(N+1)}\right] \) is a unitary matrix in \({\mathbb {R}}^{(k+1)\times (k+1)}\), then there exist scalars \(\beta _{1},\ldots ,\beta _{k+1}\) such that

Taking into account the orthogonality of \(\lbrace \Sigma _{i}^{(N+1)}\rbrace _{i=1,\ldots , k+1}\), and the fact that \(\sigma _{k+1}^{(N+1)}\) is the smallest \((N+1)\)-mode singular value, we can easily establish that

Therefore

which leads to

\(\square \)

4 Implementation of HOSVD-TMPE

As demonstrated in the previous section, determining the sequence term \({\mathcal {T}}{n}^{(k)}\) in (3) requires the computation of \(v{k+1}\), the \((k+1)^{th}\) vector of the \((N+1)\)-mode matrix \(V^{(N+1)}\) corresponding to the smallest \((N+1)\)-mode singular value \(\sigma _{k+1}^{(N+1)}\) from the decomposition (10). Obtaining the matrix \(V^{(N+1)}\) directly through HOSVD applied to \({\mathbb {D}}{k}^{(n)}\) can be expensive. However, in this case, we employ a less computationally intensive method to obtain the matrix \(V^{(N+1)}\) and consequently, the vector \(v{k+1}^{(N+1)}\). To begin, let us introduce the following lemma, which was proven in [3].

Lemma 1

[3] Let \({\mathcal {A}}\) be a real tensor of dimension \(I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N},\) and consider its HOSVD decomposition as

Then a matrix representation of (11) can be obtained, for \(n\in \left\{ 1,\cdots ,N\right\} \), as follows

where \(\otimes \) stands for the Kronecker product of matrices.

Proposition 6

For each \(n\in \left\{ 1,\ldots ,N\right\} \) we have:

Proof

We use (12) and the orthogonality of the matrices \(V^{(n)}\), 1\(\le n\le N\). \(\square \)

The decomposition (13) is, in fact, the spectral decomposition of the symmetric matrix \({\mathcal {A}}_{(n)}{\mathcal {A}}_{(n)}^{T}\). Therefore, to get the n-mode matrix \(V^{(n)}\) appearing the HOSVD-decomposition of the tensor \({\mathcal {A}}\), we can compute it throughout the eigenvalue decomposition (EVD) of the \(I_n \times I_n\) matrix \({\mathcal {A}}_{(n)}{\mathcal {A}}_{(n)}^{T}\).

Now, we adopt this process to determine the matrix \(V^{(N+1)}\) in (10). We first compute the unfolding matrix \(({\mathbb {D}}_{k}^{(n)})_{(N+1)}\) and the matrix \(M=(\mathbb { D}_{k}^{(n)})_{(N+1)}({\mathbb {D}}_{k}^{(n)})_{(N+1)}^{T}\) of size \((k+1)\times (k+1)\). Therefore, applying the EVD decomposition on M allows us to obtain the matrix \(V^{(N+1)}\) ( we have \(M=V^{(N+1)}\Sigma (V^{(N+1)})^T\)). Then, the \((k+1)^{th}\) vector (solution \(\alpha ^{(k)}\)) \(v_{k+1}=V^{(N+1)}e_{k+1}\) where \(e_{k+1}=(0,0,\ldots ,1)^{T}\in {\mathbb {R}}^{k+1}\).

Remark 2

In practice, the integer k is not typically large. Therefore, applying the Eigenvalue Decomposition EVD to the symmetric positive semi definite \((k+1)\times (k+1)\) matrix \(M=(\mathbb { D}_{k}^{(n)})_{(N+1)}({\mathbb {D}}_{k}^{(n)})_{(N+1)}^{T}\) is less computationally expensive compared to performing the HOSVD decomposition of the tensor \({\mathbb {D}}_{k}^{(n)}.\)

After obtaining the solution \(\alpha ^{(k)}\), we used (2) and (3) for computing the extrapolated tensor \({\mathcal {T}}^{(k)}_{n}\). We summarize the different steps of the implementation of HOSVD-TMPE in Algorithm 1.

It is clear that Algorithm 1 outlines only the computation of a single extrapolated tensor \({\mathcal {T}}^{(k)}_{n}\) (with fixed values for n and k), and does not provide any information about the sequence \(({\mathcal {T}}^{(k)}_{n})_{n}\) as n varies. To address this aspect, in the experimental section of this work, we employ two distinct schemes following the manner in which the sequence is generated.

In case where the sequence \(({\mathcal {S}}_{n})\) is produced by a some fixed-point process

we apply our proposed algorithm 1 in the restarted acceleration scheme explained in [10, 11]. That is, starting from an iterate \({\mathcal {S}}_{n}\), we generate via the fixed-point process 14 a sequence of iterates \(\left\{ {\mathcal {S}}_{n}\right\} _{i=0}^{k+1}\) and then use Algorithm 1 to produce \( {\mathcal {T}}^{(k)}_{n}\). Next, we use \({\mathcal {F}}({\mathcal {T}}^{(k)}_{n})\) as the starting point of (14) and the process continues. In the absence of a specific convergence measurement tool, it is generally acceptable to stop computations after a fixed number of iterations \(iter_{max}\) or when the relative residual error

is less than a given threshold \(\epsilon \).

We summarized that in Algorithm 2. The error in Step 7 can either represent the relative residual res in (15) or some specific error depending on the studied problem.

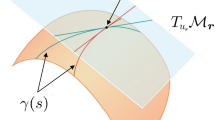

Now, in cases where the sequence is not of a fixed-point type or when we lack information about its production process, we can apply our method in the acceleration scheme (see Fig. 1) as follows: we apply the extrapolation on the first \(k+2\) iterates to produce the first term \({\mathcal {T}}^{(k)}_{0}\). Next, we start from the second iterate \({\mathcal {S}}_{1}\) and consider a set of \(k+2\) iterates to produce the second term \({\mathcal {T}}^{(k)}_{1}\) via extrapolation and this process continues. The following figure illustrates this acceleration scheme.

Now, through the following result, we reveal a class of sequences on which the application of HOSVD-TMPE produces, just from a certain finite number of terms and in one time, the sought limit \({\mathcal {S}}\). Such property is known as finite termination property, see [27].

Theorem 7

Let \({\mathcal {S}}\) be the limit of the tensor sequence \(({\mathcal {S}}_{n})\) and let \({\mathcal {E}}_{n}={\mathcal {S}}_{n}-{\mathcal {S}}\) and \({\mathcal {D}}_{n}={\mathcal {S}}_{n+1}-{\mathcal {S}}_{n}\). Assume that there exists a fixed number k such that, for all \(n=0,1,\ldots \)

and the tensors \(\lbrace {\mathcal {D}}_{0},{\mathcal {D}}_{1},\ldots ,{\mathcal {D}}_{k-1}\rbrace \) are linearly independent. Then the tensor \({\mathcal {T}}^{(n)}_k\) obtained by applying HOSVD-TMPE to the \(k+2\) tensors \({\mathcal {S}}_{n},{\mathcal {S}}_{n+1},\ldots ,{\mathcal {S}}_{n+k+1},\) satisfies \({\mathcal {T}}^{(n)}_k={\mathcal {S}}\) for all \(n=0,1,\ldots \).

Proof

Let us first show by induction on n the linear independence of \( {\mathcal {D}}_{n},{\mathcal {D}}_{n+1},\ldots ,\) \({\mathcal {D}}_{n+k-1}\). For \(n=0\), it is true by the hypothesis. Assume now that that \( {\mathcal {D}}_{n},{\mathcal {D}}_{n+1},\ldots ,{\mathcal {D}}_{n+k-1}\) are linearly independent, and show that so is for \( {\mathcal {D}}_{n+1},{\mathcal {D}}_{n+2},\) \(\ldots ,{\mathcal {D}}_{n+k}\). From (16), we get

Since \(\alpha ^{(k)}_k\ne 0\), let us set \(\alpha ^{(k)}_k=-1\). Then (17) becomes

To show that \({\mathcal {D}}_{n+1},{\mathcal {D}}_{n+2},\ldots ,{\mathcal {D}}_{n+k}\) are independent, assume that there exist scalars \(\beta _{1},\beta _{2},\ldots ,\beta _{k}\) such that

Substituting (18) into (19) and rearranging terms leads to

The induction hypothesis implies that

Since \(\alpha ^{(k)}_{0}\ne 0\), it follows that

which implies the linear independence of \({\mathcal {D}}_{n+1},{\mathcal {D}}_{n+2},\ldots ,{\mathcal {D}}_{n+k}\).

On the other hand, using (16), we obtain

from which it follows that

Therefore, setting \( \check{\delta }^{(k)}_i=\dfrac{\alpha ^{(k)}_i}{\sum _{i=0}^{k}\alpha ^{(k)}_i}\), gives

Again from (16), we have

The linear independence of \(\lbrace {\mathcal {D}}_{n},{\mathcal {D}}_{n+1},\ldots ,{\mathcal {D}}_{n+k-1}\rbrace \) implies that the problem \({\mathbb {D}}_{k}^{(n)}\times _{(N+1)}\alpha ^{(k)}=0\) along with \(\Vert \alpha ^{(k)}\Vert =1\) has a unique solution (up to a multiplicative constant \(\rho \), \(\mid \rho \mid =1)\). That is, the scalars \(\check{\delta }^{(k)}_i\) in (21) are the same as \(\delta ^{(k)}_i\) in \( {\mathcal {T}}^{(k)}_{n}=\sum _{i=0}^{k}\delta ^{(k)}_i {\mathcal {S}}_{n+i}\) resulting from HOSVD-TMPE, and as a result \( {\mathcal {T}}^{(k)}_{n}={\mathcal {S}}.\) \(\square \)

5 Error analysis

In this section, we consider a convergent tensor sequence \(({\mathcal {S}}_n)\) with limit \({\mathcal {S}}\) obtained via the fixed-point process (14):

where \({\mathcal {S}}_{0}\) is a given tensor and \({\mathcal {F}}\) is assumed to be a differentiable function from \({\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}}\) into \( {\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}}\). We associate with the iterate \({\mathcal {S}}_{n}\), the residual error given as:

The limit \({\mathcal {S}}\) satisfies the equations \({\mathcal {S}}={\mathcal {F}}({\mathcal {S}})\) and \({\mathcal {R}}({\mathcal {S}})=0.\)

As examples of \({\mathcal {F}}\), we consider the following cases:

-

1.

\({\mathcal {F}}\) is linear:

-

\({\mathcal {F}}({\mathcal {X}})={\mathcal {X}}\times _{N}A+{\mathcal {B}},\) with \({\mathcal {B}}\in {\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}}\) and \(A\in {\mathbb {R}}^{I_{N}\times I_{N}}\) such that \((I-A)\) is non-singular.

-

\({\mathcal {F}}({\mathcal {X}})= {\mathcal {A}}*_{N}{\mathcal {X}}+{\mathcal {B}}\) with \({\mathcal {B}}\in {\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}}\) and \({\mathcal {A}}\in {\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}\times I_{1}\times I_{2}\times \cdots \times I_{N}}\) and \(*_{N}\) stands for the Einstein product (Definition 4).

-

-

2.

\({\mathcal {F}}\) is not linear: \({\mathcal {F}}({\mathcal {X}})= {\mathcal {X}}^{T}*_{N}{\mathcal {A}}*_{N}{\mathcal {X}}+{\mathcal {X}}^{T}*_{N}{\mathcal {C}}\quad {\text {with}} \quad {\mathcal {A}}, {\mathcal {C}}\in {\mathbb {R}}^{I_{1}\times I_{2}\times \cdots \times I_{N}\times I_{1}\times I_{2}\times \cdots \times I_{N}}\). Notice that in this case, one has

$$\begin{aligned} {\mathcal {F}}({\mathcal {X}})=\dfrac{\partial {\mathcal {F}}}{\partial {\mathcal {X}}}({\mathcal {S}})*_{N}({\mathcal {X}}-{\mathcal {S}})+ {\mathcal {F}}({\mathcal {S}})+\textbf{O}(\parallel {\mathcal {X}}-{\mathcal {S}}\parallel _{F}^{2}) \quad \text {as}\quad {\mathcal {X}}\longrightarrow {\mathcal {S}} \end{aligned}$$where the tensor \(\dfrac{\partial {\mathcal {F}}}{\partial {\mathcal {X}}}({\mathcal {S}})={\mathcal {A}}*_{N}{\mathcal {S}}-{\mathcal {C}}\) is the gradient of \({\mathcal {F}}\) at \({\mathcal {S}}\). Thus for a large n, the sequence \(({\mathcal {S}}_n)\) can be generated as follows

$$\begin{aligned} {\mathcal {S}}_{n+1}={\mathcal {F}}({\mathcal {S}}_n)\approx \dfrac{\partial {\mathcal {F}}}{\partial {\mathcal {X}}}({\mathcal {S}})*_{N}({\mathcal {S}}_n-{\mathcal {S}})+ {\mathcal {F}}({\mathcal {S}}). \end{aligned}$$(23)That is, the sequence \(({\mathcal {S}}_n)\) behaves as if it were being generated by approximate linear system of the form \(({\mathcal {I}}-{\mathcal {P}})*_{N}{\mathcal {X}}\approx {\mathcal {B}}\) through

$$\begin{aligned} {\mathcal {X}}_{n+1}={\mathcal {P}}*_{N}{\mathcal {X}}_n+{\mathcal {B}} \end{aligned}$$with \({\mathcal {P}}=\dfrac{\partial {\mathcal {F}}}{\partial {\mathcal {X}}}({\mathcal {S}})\) and \({\mathcal {B}}=({\mathcal {I}}-{\mathcal {P}})*_{N}{\mathcal {S}}.\)

Coming back to the general case, assume that \({\mathcal {F}}\) has a unique fixed point denoted by \({\mathcal {S}}.\) Applying HOSVD-TMPE on \(({\mathcal {S}}_n)\), the residual \( {\mathcal {R}}({\mathcal {T}}^{(k)}_{n})={\mathcal {T}}^{(k)}_{n+1}-{\mathcal {T}}^{(k)}_{n} \) can be expressed as

where ’\(\cong \)’ represents exact equality ’\(=\)’ when the sequence \(({\mathcal {S}}_{n})\) is generated linearly, while, it is just an approximation ’\(\approx \)’ when \({\mathcal {F}}\) is non-linear.

Assume that \(({\mathcal {S}}_{n})\) is generated linearly, the subsequent theorem demonstrates that the computation of \(\Vert {\mathcal {R}}({\mathcal {T}}^{(k)}_{n})\Vert _{F}\) can be achieved without incurring any additional computational expense, relying solely on the quantities derived from our algorithm, and without the need to directly compute \({\mathcal {R}}({\mathcal {T}}^{(k)}_{n})\). The result remains true for the nonlinear case when replacing \(=\) with \(\approx \).

Theorem 8

The residual \({\mathcal {R}}({\mathcal {T}}^{(k)}_{n})\) in (24) satisfies

where \(\sigma _{k+1}^{(N+1)}\) is the smallest \((N+1)\)-mode singular value of \({\mathbb {D}}_{k}^{(n)}.\)

Proof

Using the relation (2), it follows that

with \(\alpha ^{(k)}=\left( \alpha ^{(k)}_0,\alpha ^{(k)}_2,\ldots ,\alpha ^{(k)}_k \right) ^T\) is the solution of (8). Then, using Theorem 5, we obtain

\(\square \)

6 Numerical experiments

In this section, we will illustrate, via some numerical experiments, the effectiveness of our proposed method (HOSVD-TMPE). We compared it in the examples below, in addition to the basic sequences (Basic-Iterations), with the TG-MPE method and the Nesterov’s transformation [23] defined by

where \(\lbrace \eta _{n}\rbrace \) is the scalar sequence given by \(\eta _{n+1} =\dfrac{\sqrt{4(\eta _{n})^{2}+1)+1}}{2}\) with \(\eta _{1}=1.\)

For Examples 1, 2, and 4, we implemented the HOSVD-TMPE and TG-MPE methods using the restarted acceleration scheme outlined in Algorithm 2. Since the exact solution is known, we employed the relative error

to illustrate the behaviour of the produced sequences.

For Example 3, we adopted the acceleration scheme outlined in Fig. 1, utilizing the residual error-norm

to assess the convergence behaviour of the methods.

In addition, in Example 4, which addresses image restoration problems, we used the PSNR (Peak Signal-to-Noise Ratio) to assess the quality of the restored images. Furthermore, in this specific example, we evaluated the convergence rates of TG-MPE and HOSVD-TMPE by considering the ratio \(\frac{\parallel {\mathcal {T}}_{n}^{method}-{\mathcal {S}}\parallel _{F}}{\parallel {\mathcal {S}}_{n}-{\mathcal {S}}\parallel _{F}}.\)

As shown in Algorithm 2, the iteration process is terminated as soon as the norm of the used error is less than predefined \(\epsilon \), or the number of iterations reaches a given number \(iter_{max}\). We carried out the computations using MATLAB 2023a in HP computer running Windows 11 with Intel Core i7 and 16 GB RAM.

6.1 Example 1

We consider tensor sequence \(({{\mathcal {S}}_{n}})\) with \({{\mathcal {S}}_{n}}\in {\mathbb {R}}^{d\times d \times d}\), obtained from the linear iterative scheme

\(A=V\Sigma V^{T}\in {\mathbb {R}}^{d\times d}\) where V is a random orthogonal matrix, and \(\Sigma \) is a diagonal matrix such that \( \Sigma (i,i)=1-0.95\times \frac{i}{d}\), \(i=1,..,d\). The tensor \({\mathcal {B}}\) is such that the limit (exact solution) is the tensor \({\mathcal {S}}\) with all elements equal to one:

Fig. 2 illustrates the relative error-norm versus the iteration index n for both the Basic iteration and Nesterov process, TG-MPE, and our proposed method HOSVD-TMPE with \(k=3\), considering different dimensions (\(d=25\) and 40). We set \(\epsilon \) to \(10^{-14}\). The curves clearly reveal the acceleration effect of our method, showcasing its superiority compared to TG-MPE and Nesterov.

6.2 Example 2

In this example, we consider the tensor sequence \(({\mathcal {S}}_{n})\) from \({\mathbb {R}}^{N\times N \times N}\) obtained by the following linear iterative scheme

with \({\mathcal {A}}\in {\mathbb {R}}^{N\times N\times N\times N\times N\times N}\) such that

The tensor right-hand \({\mathcal {B}}\) is such that the limit \({\mathcal {S}}\) is the tensor whose all elements equal to one, and is given by

Fig. 3 illustrates the behavior of the relative error-norm for the basic sequence, the Nesterov process, TG-MPE, and our method HOSVD-TMPE (\(k=3\)) across different dimensions (\(N=10\) and \(N=15\)). We set \(\epsilon \) to \(10^{-13}\) as a stopping criterion. It is evident that the application of TG-MPE and HOSVD-TMPE results in much faster convergence compared to Basic Iterations and the Nesterov process. As observed in the previous example, the HOSVD-TMPE method the HOSVD-TMPE method exhibits rapid convergence.

6.3 Example 3

In this example, we consider the partial differential equation

The central finite difference discretization of (28) leads to the following tensor equation with Einstein product (see Definition 4)

with \({\mathcal {A}}=(\alpha _{ijkl})\in {\mathbb {R}}^{N\times N\times N\times N}\) and \({\mathcal {B}}=(\beta _{ij}) \in {\mathbb {R}}^{N\times N}\) such that

where \(\otimes \) denotes the Kronecker product, ivec is the index mapping function defined as follows: For given integers \(j_1\), \(j_2\) and dimensions \(N_1\), \(N_2\), the integer \(ivec([i, j], [N_1, N_2])\) is given by

\(F = tridiag(1,-4,1)\) and \( E = [e_{ij}]\) are tridiagonal and diagonal matrices of order N and \( b = (b_i) \in {{\mathbb {R}}}^{N^2}\), defined by

\(b_t= \left\{ \begin{aligned} -\sin \frac{3t}{N+1}\pi \hspace{1.2cm}&\text {if}\hspace{0.2cm}t=vN, \hspace{0.2cm} v=1,\ldots ,N, \\ -\sin \frac{3t}{N+1}\pi \cos \frac{t}{N+1}\pi \hspace{0.2cm}&\text {if} \hspace{0.2cm}t= (N - 1)N + w,\hspace{0.2cm} w=1,\ldots ,N-1,\\ -\sin \frac{3N}{N+1}\pi (1 + \cos \frac{N}{N+1}\pi )&\hspace{0.2cm}\text {if} \hspace{0.2cm}t=N^2, \\ \hspace{-2cm}0 \hspace{1.7cm}&\text {otherwise}. \end{aligned} \right. \)

We solve (29) using the following iterative scheme [29]:

with \({\mathcal {P}}_{0}={\mathcal {R}}_{0}={\mathcal {B}}-{\mathcal {A}}*_2 {\mathcal {X}}_{0}\) where \({\mathcal {X}}_{0}\) is an initial tensor guess.

In Table 1, we present the iteration number n, the residual error-norm \({\mathcal {R}}e({\mathcal {T}}_{n}^{(k)})=\parallel {\mathcal {B}}-{\mathcal {A}}*_2 {\mathcal {T}}_{n}^{(k)}\parallel _{F}\), and the CPU time for the four methods for different dimensions \(N=10, 40\) and 100. Note that the stopping criterion \(\epsilon \) is adjusted according to the dimension N ( \(\epsilon =10^{-16}\) for \(N=10\), \(\epsilon =10^{-8}\) for \(N=40\) and \(\epsilon =10^{-2}\) for \(N=100\) ). The results highlight the acceleration effect of our proposed method (HOSVD-TMPE). Across various dimensions (\(N=10, 40,\) and 100), both the number of iterations and the associated CPU time for HOSVD-TMPE and TG-MPE are lower compared to those obtained with the initial sequence (Basic Iteration) and the Nesterov process. We can also note the effectiveness of HOSVD-TMPE in comparison to TG-MPE method, particularly when considering the case of \(N=100\).

In Fig. 4, we have plotted the residual error \({\mathcal {R}}e\) versus the iteration number n for four methods: Basic Iterations, Nesterov, TG-MPE, and HOSVD-TMPE, considering two different values of dimensions, \(N=30\) (left) and \(N=50\) (right). Iterations are terminated when the residual \({\mathcal {R}}e\) reaches \(10^{-8}\). The acceleration effect of HOSVD-TMPE is evident, showcasing its competitiveness with TG-MPE for both \(N=30\) and \(N=50\). They exhibit nearly similar behaviors, with a slight advantage observed for HOSVD-TMPE.

The residual error \({\mathcal {R}}e\) for dimensions \(N=30\) (left) and \(N=50\) (right) for the Basic-iteration (30), Nesterov, TG-MPE and HOSVD-TMPE (Example 3)

6.4 Example 4

Image inpainting is the process of reconstructing missing regions in an image. This task is crucial in computer vision and serves as an essential functionality in numerous imaging and graphics applications; for more details, refer to [28].

The tensor total variation is one of the techniques employed for this purpose. In this example, we address the regularization tensor-least squares problem

where \({\mathcal {P}}_\textsf{E}\) is the tensor projection on \({\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\), such that for \({\mathcal {X}} \in {\mathbb {R}}^{I_{1}\times I_{2}\times I_{3}\times \cdots \times I_{N}}\) the entries of \({\mathcal {P}}_\textsf{E}({\mathcal {X}})\) are given as

with \(\textsf{E}=\lbrace (i_{1},i_{2},...,i_{N}):{\mathcal {X}}_{{i_{1},i_{2},...,i_{N}}}\) observed \(\rbrace \) (see [8]).Let \({\mathcal {X}}^{exact}\) be the original image, and the tensor \({\mathcal {B}}\) represents its text-added version. Utilizing the tensorial double proximal gradient algorithm (TDPG) proposed in [4], we iteratively solve the problem (31).

For the first experiment, we consider the image ’coloredChips.png’ from the MATLAB R2020a collection, with dimensions of (\(150 \times 190 \times 3\)). We corrupted it by adding text, as shown in Fig. 5.

Fig. 6 displays the restored images using TDPG, TG-MPE, and the proposed method (HOSVD-TMPE). It is evident that our proposed method successfully removed nearly all the added text, a result not achieved by the basic iteration TDPG or the TG-MPE method.

Table 2 presents the iteration number, relative error-norm, recovered image PSNR, and the required CPU time for TDPG (Basic Iterations), TG-MPE, and HOSVD-TMPE. We use \(\epsilon =5 \times 10^{-2}\) as a stopping criterion for computations. The results obtained confirm the superiority of our proposed method in terms of PSNR and relative error-norm, as well as in terms of CPU time.

Figure 7 displays the relative error-norm (on the left), the rate of acceleration (in the middle), and PSNR (on the right) versus the iteration index for the three methods: TDPG, TG-MPE, and HOSVD-TMPE. Compared to TDPG (Basic Iterations) and TG-MPE, the curves confirm that our proposed algorithm is more effective.

Now, let us consider another color image obtained from ’sherlock.jpg’ in the MATLAB R2020a collection. We corrupted it by adding a scribble, as illustrated in Fig. 8.

Figure 9 illustrates the restored images obtained using TDPG, TG-MPE, and the proposed algorithm (HOSVD-TMPE). It is evident that our proposed method and TG-MPE yield nearly identical results, with a slight advantage observed for HOSVD-TMPE. Compared to the basic iterative TDPG method, both HOSVD-TMPE and TG-MPE methods have effectively removed most of the superimposed scribble.

Table 3 presents various values, including the iteration number, relative error, recovered image PSNR, and required CPU time for TDPG (Basic Iterations), TG-MPE, and HOSVD-TMPE. For the size \(150 \times 160 \times 3\), we stopped computations when the relative error was less than \(\epsilon =8 \times 10^{-2}\), while for the size \((325 \times 300 \times 3)\), we set \(\epsilon =10^{-1}\). The obtained results confirm the advantage of our proposed method in terms of PSNR, relative error, as well as the required number of iterations and CPU time.

The curves depicted in Fig. 10 illustrate the superior performance of our proposed method compared to TG-MPE. It is evident that HOSVD-TMPE excels in terms of PSNR, relative error-norm, and convergence rate, highlighting its effectiveness

7 Conclusion

The acceleration of slowly convergent sequences is an interesting purpose of extrapolation methods. In this paper, we introduced a new tensor extrapolation method for tensor sequences, namely HOSVD-TMPE. We presented an efficient algorithm that is based on high-order singular value decomposition for implementing the proposed extrapolation method. The presented numerical tests show the performance and effectiveness of the algorithm. Additionally, we provide some applications in color image restoration and completion.

Change history

08 July 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10092-024-00601-4

References

Amblard, P.O., Gaeta, M., Lacoume, J.L.: Statistics for complex variables and signals-Part I: variables. Signal Process. 53, 1–13 (1996)

Beik, F.P.A., El Ichi, A., Jbilou, K., Sadaka, R.: Tensor extrapolation methods with applications. Numer. Algor. 87, 1421–1444 (2021)

Bellman, R.: Introduction to matrix analysis. SIAM (1970)

Benchettou, O., Bentbib, A.H., Bouhamidi, A.: An accelerated tensorial double proximal Gradient Method for Total variaitional regularization problem. J. Optim. Theory Appl. 198, 111–134 (2023)

Bentbib, A.H., Khouia, A., Sadok, H.: The LSQR method for solving tensor least-squares problems. Electron. Trans. Numer. Anal. 55, 92–111 (2021)

Bentbib, A.H., Jbilou, K., Tahiri, R., Bentbib, A.H., Jbilou, K., Tahiri, R.: N-mode minimal tensor extrapolation methods. Numer. Algor. 95, 665–691 (2024)

Brezinski, C., Redivo-Zaglia, M.: Extrapolation methods: theory and practice. Elsevier (2013)

Candes, E.J., Tao, T.: The power of convex relaxation: near-optimal matrix completion. IEEE Trans. Inf. Theory. 56, 2053–2080 (2010)

Chen, J., Saad, Y.: On the tensor SVD and the optimal low rank orthogonal approximation of tensors. SIAM J. Matrix Anal. Appl. 30, 1709–1734 (2009)

Cipolla, S., Redivo-Zaglia, M., Tudisco, F.: Extrapolation methods for fixed? Point multilinear PageRank computations. Numer. Linear Algebra Appl. 27, e2280 (2020)

Cipolla, S., Redivo-Zaglia, M., Tudisco, F.: Shifted and extrapolated power methods for tensor \(\ell ^ p \)-eigenpairs. Electron. Trans. Numer. Anal. 53, 1–27 (2020)

Delahaye, J.P., Germain-Bonne, B.: The set of logarithmically convergent sequences cannot be accelerated. SIAM J. Numer. Anal. 19, 840–844 (1982)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal Appl. 21, 1253–1278 (2000)

Duminil, S., Sadok, H., Silvester, D.: Fast solvers for discretized Navier–Stokes problems using vector extrapolation. Numer Algor. 66, 89–104 (2014)

El Guide, M., El Ichi, A., Jbilou, K., Beik, F.P.A.: Tensor Krylov subspace methods via the Einstein product with applications to image and video processing. Appl. Numer. Math. 181, 347–363 (2022)

El Ichi, A., Jbilou, K., Sadaka, R.: Tensor global extrapolation methods using the \(n\)-mode and the Einstein products. Mathematics. 8, 1298 (2020)

He, H., Xi, Y., Ho, J. C.: Accelerated SGD for tensor decomposition of sparse count data. Int. Conf. Data Mining Works, pp 284-291 (2020). https://doi.org/10.1109/ICDMW51313.2020.00047

He, H., Xi, Y., Ho, J. C.: Fast and accurate tensor decomposition without a high performance computing machine. IEEE Int. Conf. Big Data, pp 163-170 (2020). https://doi.org/10.1109/BigData50022.2020.9378111

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Lai, F., Li, W., Peng, X., Chen, Y.: Anderson accelerated fixed-point iteration for multilinear PageRank. Numer. Linear Algebra Appl. 30, e2499 (2023)

Liang, M.L., Zheng, B., Zhao, R.J.: Tensor inversion and its application to the tensor equations with Einstein product. Linear Multilinear Algebra. 67, 843–870 (2019)

Liu, D., Liu, X.: Restarted nonnegativity preserving tensor splitting methods via relaxed anderson acceleration for solving multi-linear systems (2022). arXiv preprint arXiv:2211.10857

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140, 125–161 (2013)

Pollock, S., Shroff, R.: Accelerating the Computation of Tensor \(Z\)-eigenvalues (2023). arXiv preprint arXiv:2307.11908

Schosser, J.: Tensor extrapolation: an adaptation to data sets with missing entries. J. Big Data. 9, 26 (2022)

Scieur, D., d’Aspremont, A., Bach, F.: Regularized nonlinear acceleration. Math. Program. 179, 47–83 (2020)

Sidi, A.: Vector extrapolation methods with applications. SIAM (2017)

Sridevi, G., Srinivas-Kumar, S.: Image inpainting based on fractional order nonlinear diffusion for image reconstruction. Int. J. Circ. Syst. Signal Process. 38, 3802–3817 (2019)

Wang, Q.W., Xu, X.: Iterative algorithms for solving some tensor equations. Linear Multilinear Algebra. 67, 1325–1349 (2019)

Yuan, L., Zhao, Q., Gui, L., Cao, J.: High-order tensor completion via gradient-based optimization under tensor train format. Signal Process. Image Commun. 73, 53–61 (2019)

Acknowledgements

Authors thank the anonymous referee for his very careful review of the paper and for his comments, corrections and suggestions that improved the paper.

The work was partially supported by the Moroccan Ministry of Higher Education, Scientific Research and Innovation and the OCP Foundation through the APRD research program.

Author information

Authors and Affiliations

Contributions

The third author conceived of the presented idea, developed the theory and performed the computations. The first and the second authors verified the analytical methods and supervised the findings of this work. All authors discussed the results and contributed to the final manuscript.

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bentbib, A.H., Jbilou, K. & Tahiri, R. Hosvd-tmpe: an extrapolation method for multidimensional sequences. Calcolo 61, 27 (2024). https://doi.org/10.1007/s10092-024-00582-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10092-024-00582-4