Abstract

We use the physics-informed neural network (PINN) to solve for a variety of femtosecond optical soliton solutions of the high-order nonlinear Schrödinger equation (HNLSE), including one-soliton, two-soliton, rogue wave, W-soliton and M-soliton solutions. The prediction errors for the one-soliton, W-soliton and M-soliton solutions are smaller than those for the two-soliton and rogue wave solutions, and the prediction error gradually increases with the prediction distance. The unknown physical parameters of the HNLSE are identified using rogue wave solutions as the data set. The neural network is optimized with respect to three factors: the number of layers, the number of neurons per layer, and the number of sampling points. Compared with previous research, our error is greatly reduced. The method is not intended to replace traditional numerical methods, but we hope it opens up new ideas.


1 Introduction

With the development of plasma physics, optical fiber communication and other disciplines, nonlinear problems have attracted increasing attention [1,2,3]. These nonlinear phenomena can be described by nonlinear partial differential equations (NPDEs), and solving the NPDEs reveals the nature of the underlying phenomena [4, 5]. The nonlinear Schrödinger equation (NLSE) describes the propagation of optical solitons in optical fibers. In recent years, ultrashort pulse lasers have become a popular research direction. For femtosecond pulses propagating in optical fibers, however, the standard NLSE is insufficient: higher-order effects such as third-order dispersion (TOD) and nonlinear response effects play a crucial role in the propagation of such ultrashort pulses. To account for these effects, the high-order nonlinear Schrödinger equation (HNLSE) was proposed [6]. Recently, neural networks have also been used to study the NLSE and other partial differential equations [7,8,9] and to obtain multiple forms of solutions [10, 11]. However, these methods have not yet been extended to optical soliton excitations of the HNLSE.

In recent decades, rapid advances in data processing and computing power have promoted the application of deep learning to data mining tasks such as face recognition, machine translation, biomedical analysis, traffic prediction, and autonomous driving [12,13,14]. However, acquiring data remains a major challenge, and extracting information effectively and accurately when part of the data is missing is an urgent and difficult task [15, 16]. At the same time, because sample data are scarce and the methods lack robustness, results obtained by traditional maximum likelihood methods are often unreliable. In fact, it seems infeasible to draw conclusions about physical laws from input and output data with maximum likelihood methods alone, especially for high-dimensional problems [15, 17]. Researchers speculate that one of the main reasons is the lack of prior knowledge about the physical system. Therefore, many researchers have tried to combine maximum likelihood algorithms with known physical laws to improve the accuracy of the unknown solutions of physical models [18, 19].

Recently, Raissi et al. fully integrated information about the physical system into the neural network and used maximum likelihood techniques to build a physics-constrained deep learning method [20, 21], called the physics-informed neural network (PINN), which has since been improved in several ways [22, 23]. The PINN is suitable not only for the forward problem of NPDEs but also for the inverse problem [24, 25]. It can obtain very accurate solutions from relatively little data and is robust [26]. Moreover, because the physical information is expressed by differential equations, the predicted solutions have a clear physical meaning [27]. This paper proposes a computationally efficient data-driven algorithm to derive solutions of more complex NPDEs. The general form of the HNLSE is as follows [28]

where \(Q(t,x)\) in Eq. (1) is the complex envelope, \(x\) is the delay time and \(t\) is the longitudinal propagation distance. The real parameters \(\uplambda _{1}\), \(\uplambda _{2}\), \(\uplambda _{3}\), \(\uplambda _{4}\) and \(\uplambda _{5}\) are related, respectively, to group velocity dispersion, Kerr nonlinearity, TOD, self-steepening, and the self-frequency shift arising from stimulated Raman scattering. For picosecond optical pulses, \(\uplambda _{3}\), \(\uplambda _{4}\) and \(\uplambda _{5}\) in Eq. (1) can be set to zero, and the HNLSE reduces to the standard NLSE. For pulses shorter than 100 fs, \(\uplambda _{3}\), \(\uplambda _{4}\) and \(\uplambda _{5}\) can no longer be neglected.
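For reference, one commonly used way of writing the HNLSE that matches the roles of \(\uplambda _{1}\)–\(\uplambda _{5}\) listed above is shown below. The placement of the factors of \(i\) and the signs are our assumption and may differ from the authors' Eq. (1). Setting \(\uplambda _{3} = \uplambda _{4} = \uplambda _{5} = 0\) recovers the standard NLSE, which is consistent with the picosecond limit described above.

```latex
% Assumed form of the HNLSE (conventions may differ from Eq. (1))
\begin{aligned}
& i Q_{t} + \lambda_{1} Q_{xx} + \lambda_{2} |Q|^{2} Q
  + i \left[ \lambda_{3} Q_{xxx} + \lambda_{4} \left( |Q|^{2} Q \right)_{x}
  + \lambda_{5}\, Q \left( |Q|^{2} \right)_{x} \right] = 0, \\
& \lambda_{3} = \lambda_{4} = \lambda_{5} = 0 \;\Longrightarrow\;
  i Q_{t} + \lambda_{1} Q_{xx} + \lambda_{2} |Q|^{2} Q = 0 .
\end{aligned}
```

Under this assumed form, the parameter sets used in Sects. 3.1–3.3 all satisfy \(\uplambda _{2} = 2\uplambda _{1}\), \(\uplambda _{4} = 6\uplambda _{3}\) and \(\uplambda _{5} = - \uplambda _{4}\). This places them in the integrable Hirota case.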

The main contributions of this paper are as follows. (i) The HNLSE is studied with the PINN for the first time. (ii) Five femtosecond optical soliton excitations of the HNLSE are learned by the PINN: one-soliton, two-soliton, rogue wave, W-soliton and M-soliton solutions. The resulting errors are smaller than in previous research [26]. (iii) For the inverse problem, the structure of the neural network is optimized with respect to three factors: the number of layers, the number of neurons per layer, and the number of sampling points. These aspects were not considered in previous work.

The rest of this paper is organized as follows. In Sect. 2, we introduce the PINN method. In Sect. 3, we obtain data-driven one-soliton, two-soliton, rogue wave, W-soliton and M-soliton solutions of the HNLSE. In Sect. 4, the parameters of the high-order model are discovered by training the PINN on a rogue wave data set. Conclusions are given in Sect. 5.

2 PINN method

Neural networks are universal function approximators: with enough neurons they can approximate any continuous function on a bounded domain to arbitrary accuracy. They can therefore be applied directly to nonlinear problems without prior assumptions, linearization, or local time stepping. In this paper, an improved PINN method is used to reconstruct the dynamics of the HNLSE, and the parameters of the HNLSE are obtained in a data-driven way. The general form of a (1 + 1)-dimensional complex NPDE is as follows

where \(N\) is a combination of linear and nonlinear terms in \(Q\). Equation (1) serves as the underlying physical constraint. It gives rise to a multilayer feedforward neural network \(\tilde{Q}(t,x)\) and a PINN \(f(t,x)\) that share parameters (scaling factors, weights, and biases). The shared parameters are learned by minimizing a mean square error (MSE) with two parts: the misfit of \(\tilde{Q}(t,x)\) on the initial and boundary data, and the residual of the PINN \(f(t,x)\). Since \(Q(t,x)\) is complex valued, we separate it into its real part \(g(t,x)\) and imaginary part \(h(t,x)\). From Eq. (2), we get

The predicted solution \(\hat{Q}(t,x)\), with modulus \(|\hat{Q}| = \sqrt {g^{2} + h^{2} }\), is embedded in the PINN through Eqs. (3) and (4). These equations act as physical constraints that prevent the neural network from overfitting and give the predicted solution a clear physical interpretation. The loss function \(\Gamma\) has the following form

in which

Here, the initial and boundary data for \(Q(t,x)\) are \(\left\{ {t_{g}^{l} ,x_{g}^{l} ,g^{l} } \right\}_{l = 1}^{{N_{q} }}\) and \(\left\{ {t_{h}^{l} ,x_{h}^{l} ,h^{l} } \right\}_{l = 1}^{{N_{q} }}\). Similarly, the collocation points of \(f_{g} (t,x)\) and \(f_{h} (t,x)\) are \(\left\{ {t_{{f_{g} }}^{j} ,x_{{f_{g} }}^{j} } \right\}_{j = 1}^{{N_{f} }}\) and \(\left\{ {t_{{f_{h} }}^{j} ,x_{{f_{h} }}^{j} } \right\}_{j = 1}^{{N_{f} }}\). In this paper, \(N_{q} = 100\) and \(N_{f} = 10000\). The loss function in Eq. (5) is minimized with the Adam optimizer, which combines the advantages of AdaGrad and RMSProp and has become one of the mainstream optimizers. For the activation function we use a hyperbolic tangent with a trainable slope. This activation has been reported to learn better, especially in the early stage of training, and to improve both convergence speed and accuracy [29]. Its form is \(\tanh (na^{k} {\mathcal{L}}(z^{{k - 1}} ))\), where \(n \ge 1\) is a predefined scaling factor, \(a^{k} \in {\mathbb{R}}\) is the slope of the activation function in layer \(k\), and \({\mathcal{L}}(z^{{k - 1}} )\) is the output of layer \(k - 1\) of the neural network. In this article, \(n = 20\) and the slope is initialized as \(a = 0.05\), so that \(na = 1\) at the start of training.
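The sketch below shows the activation and the loss just described. It assumes Eq. (5) has the standard PINN form of [20]: a data term over the \(N_{q}\) initial and boundary points plus a residual term over the \(N_{f}\) collocation points. The function names are hypothetical. The network itself, automatic differentiation and the Adam updates are omitted, and plain Python lists stand in for tensors.

```python
import math


def adaptive_tanh(z, a, n=20):
    """Activation tanh(n * a * z) of one layer.

    z: pre-activation L(z^{k-1}) coming from layer k - 1
    a: slope a^k of this layer (trainable in the PINN; a plain float here)
    n: predefined scaling factor n >= 1
    With n = 20 and the initial a = 0.05, n * a = 1, i.e. ordinary tanh.
    """
    return math.tanh(n * a * z)


def mean_square(values):
    return sum(v * v for v in values) / len(values)


def pinn_loss(g_pred, g_data, h_pred, h_data, f_g, f_h):
    """Assumed form of the loss Gamma in Eq. (5).

    g_pred/h_pred: network outputs (real/imaginary parts) at the N_q initial
        and boundary points; g_data/h_data: the corresponding exact data.
    f_g/f_h: real/imaginary parts of the PDE residual at the N_f
        collocation points.
    """
    mse_q = (mean_square([p - d for p, d in zip(g_pred, g_data)])
             + mean_square([p - d for p, d in zip(h_pred, h_data)]))
    mse_f = mean_square(f_g) + mean_square(f_h)
    return mse_q + mse_f
```

In a full implementation, Adam would train the slopes \(a^{k}\) together with the weights and biases, so \(na^{k}\) can move away from 1 during training.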

3 Data-driven optical soliton solutions

In this section, we use the PINN to learn five femtosecond optical soliton excitations of the HNLSE: one-soliton, two-soliton, rogue wave, W-soliton and M-soliton solutions.

3.1 One-soliton solution

We take the parameters \(\uplambda _{1} = 0.5\), \(\uplambda _{2} = 1\), \(\uplambda _{3} = - 0.18\), \(\uplambda _{4} = - 1.08\) and \(\uplambda _{5} = 1.08\) in Eq. (1). The exact one-soliton solution is then as follows [30]

To apply the PINN, we take the initial condition

and the Dirichlet–Neumann periodic boundary condition

The data set is generated by the pseudo-spectral method on the space–time region \((x,t) \in [ - 15,15] \times [0,5]\), and the exact one-soliton solution is discretized into \([256 \times 201]\) data points. The initial and periodic boundary data are obtained by Latin hypercube sampling [31]. The training set for the nine-layer network (an input layer, seven hidden layers and an output layer) has two parts. The first is \(N_{q} = 100\) points randomly sampled from the initial data given by Eq. (11) and the periodic boundary data given by Eq. (12). The second is \(N_{f} = 10000\) collocation points for the PINN \(f(t,x)\) given by Eq. (1). The MSE loss function given by Eq. (5) is learned by a PINN with seven hidden layers of 30 neurons each. After \(4000\) iterations, the network achieves a relative \(L_{2}\) error, \(L_{2} = \left\| {\hat{Q}(t,x) - Q(t,x)} \right\|_{2} /\left\| {Q(t,x)} \right\|_{2}\), of \(9.125253 \times 10^{ - 3}\) in about \(5545.7248\) seconds.
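The sampling step and the error measure above can be sketched in pure Python as follows (the function names are ours). The sampler splits each axis into \(n\) equal strata, as in Latin hypercube sampling [31]. The error function computes the relative \(L_{2}\) error over all grid points. The collocation box of this subsection is used only as an example.

```python
import math
import random


def latin_hypercube(n, bounds, seed=0):
    """Draw n points in a box by Latin hypercube sampling.

    Each axis (lo, hi) is split into n equal strata. Every stratum is used
    exactly once per axis, at a uniform random position inside it, and the
    strata are paired across axes by independent random permutations.
    """
    rng = random.Random(seed)
    columns = []
    for lo, hi in bounds:
        strata = list(range(n))
        rng.shuffle(strata)
        columns.append([lo + (hi - lo) * (k + rng.random()) / n for k in strata])
    return list(zip(*columns))


def relative_l2_error(pred, exact):
    """||Q_hat - Q||_2 / ||Q||_2 over all points; values may be complex."""
    num = math.sqrt(sum(abs(p - e) ** 2 for p, e in zip(pred, exact)))
    den = math.sqrt(sum(abs(e) ** 2 for e in exact))
    return num / den


# Example: N_f = 10000 points with (x, t) in [-15, 15] x [0, 5] (Sect. 3.1 box).
collocation = latin_hypercube(10000, [(-15.0, 15.0), (0.0, 5.0)])
```

Compared with plain uniform random sampling, stratifying each axis guarantees that no part of the \(x\) or \(t\) range is left without points.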

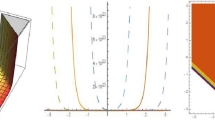

Figure 1 shows the reconstructed one-soliton space–time dynamics. Figure 1a shows the exact modulus \(|Q(t,x)| = \sqrt {g^{2} (t,x) + h^{2} (t,x)}\) together with the initial and boundary sampling points. Figure 1b shows the reconstructed space–time dynamics of the one-soliton of the HNLSE. In Fig. 1c, we compare the predicted and exact solutions at three distances, \(t = 1.5,2.5,4\). Figure 1d shows the error \(Er = \left| {\hat{Q}(t,x) - Q(t,x)} \right|\) between the predicted solution \(\hat{Q}(t,x)\) and the exact solution \(Q(t,x)\).

One-soliton solution \(Q(t,x)\): a Exact one-soliton solution \(\left| {Q(t,x)} \right|\) with the boundary and initial sampling points depicted by the cross symbol; b Reconstructed one-soliton space–time dynamics; c Comparison between exact and predicted solutions at three distances, with the red hollow dots as the predicted values and the blue solid lines as the exact values; d Three-dimensional stereogram of error. (Color figure online)
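The sketch below makes the residual \(f = f_{g} + if_{h}\) concrete. It evaluates the residual by central finite differences instead of automatic differentiation, and it assumes Eq. (1) has the form \(iQ_{t} + \uplambda _{1} Q_{xx} + \uplambda _{2} |Q|^{2} Q + i[\uplambda _{3} Q_{xxx} + \uplambda _{4} (|Q|^{2} Q)_{x} + \uplambda _{5} Q(|Q|^{2} )_{x} ] = 0\). The parameters of Sect. 3.1 satisfy \(\uplambda _{2} = 2\uplambda _{1}\), \(\uplambda _{4} = 6\uplambda _{3}\) and \(\uplambda _{5} = - \uplambda _{4}\). With these values, direct substitution shows that \(Q = A\,{\text{sech}}[A(x - \uplambda _{3} A^{2} t)]e^{{i\uplambda _{1} A^{2} t}}\) solves that form for any amplitude \(A\). We use this simple soliton only as an illustration; it is not necessarily the exact solution from [30] used for training.

```python
import cmath
import math


def bright_soliton(x, t, lam1, lam3, amp=1.0):
    """Illustrative soliton Q = A sech(A(x - lam3*A^2*t)) * exp(i*lam1*A^2*t)."""
    xi = amp * (x - lam3 * amp ** 2 * t)
    return amp / math.cosh(xi) * cmath.exp(1j * lam1 * amp ** 2 * t)


def hnlse_residual(q, x, t, lam, h=1e-3):
    """Return (f_g, f_h), the real and imaginary parts of the assumed residual
    f = iQ_t + l1 Q_xx + l2 |Q|^2 Q + i[l3 Q_xxx + l4 (|Q|^2 Q)_x + l5 Q (|Q|^2)_x],
    with every derivative replaced by a central finite difference of step h."""
    lam1, lam2, lam3, lam4, lam5 = lam

    def d_dx(func):
        # Central difference in x of a function of x, evaluated at x.
        return (func(x + h) - func(x - h)) / (2 * h)

    q0 = q(x, t)
    q_t = (q(x, t + h) - q(x, t - h)) / (2 * h)
    q_xx = (q(x + h, t) - 2 * q0 + q(x - h, t)) / h ** 2
    q_xxx = (q(x + 2 * h, t) - 2 * q(x + h, t)
             + 2 * q(x - h, t) - q(x - 2 * h, t)) / (2 * h ** 3)
    steepening = d_dx(lambda s: abs(q(s, t)) ** 2 * q(s, t))  # (|Q|^2 Q)_x
    shift = q0 * d_dx(lambda s: abs(q(s, t)) ** 2)            # Q (|Q|^2)_x
    f = (1j * q_t + lam1 * q_xx + lam2 * abs(q0) ** 2 * q0
         + 1j * (lam3 * q_xxx + lam4 * steepening + lam5 * shift))
    return f.real, f.imag


# One-soliton parameters of Sect. 3.1: lambda_1, ..., lambda_5.
LAM = (0.5, 1.0, -0.18, -1.08, 1.08)
q_exact = lambda x, t: bright_soliton(x, t, LAM[0], LAM[2])
f_g, f_h = hnlse_residual(q_exact, x=0.5, t=1.0, lam=LAM)
print(f"f_g = {f_g:.2e}, f_h = {f_h:.2e}")
```

For this soliton, both \(f_{g}\) and \(f_{h}\) are close to zero, limited only by the finite-difference error. Reversing the soliton velocity instead gives a clearly nonzero residual. This residual is the quantity that the PINN drives toward zero at the collocation points.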

3.2 Two-soliton solution

Here, we consider a two-soliton interaction and take the parameters \(\uplambda _{1} = 0.5\), \(\uplambda _{2} = 1\), \(\uplambda _{3} = \frac{1}{30}\), \(\uplambda _{4} = 0.2\) and \(\uplambda _{5} = - 0.2\) in Eq. (1). The exact two-soliton solution is given in Ref. [32] as follows

On the region \(x \in \left[ { - 10,10} \right], \, t \in \left[ { - 5,5} \right]\), we take the initial condition

and the Dirichlet–Neumann periodic boundary condition

The data set is generated by the pseudo-spectral method on the space–time region \((x,t) \in [ - 10,10] \times [ - 5,5]\), and the exact two-soliton solution is discretized into \([512 \times 401]\) data points. Following the same procedure as for the one-soliton, after \(4000\) iterations the network achieves a relative \(L_{2}\) error of \(1.633679 \times 10^{ - 2}\) in about \(11801.0978\) seconds.

Figure 2 shows the reconstructed two-soliton space–time dynamics. Figure 2a shows the exact solution together with the initial and boundary sampling points. Figure 2b shows the reconstructed space–time dynamics of the two solitons of the HNLSE. In Fig. 2c, we compare the predicted and exact solutions at three distances, \(t = - 2.5,0,2.5\). Figure 2d shows the error \(Er = \left| {\hat{Q}(t,x) - Q(t,x)} \right|\) between the predicted and exact solutions. The density plot shows that the optimized PINN predictions are accurate over the whole space–time domain.

Two-soliton solution \(Q(t,x)\): a Exact two-soliton solution \(\left| {Q(t,x)} \right|\) with the boundary and initial sampling points; b Reconstructed two-soliton space–time dynamics; c Comparison between exact and predicted solutions at three distances, with the red hollow dots as the predicted values and the blue solid lines as the exact values; d The error density plot. (Color figure online)

3.3 Rogue wave solution

We take the parameters \(\uplambda _{1} = 0.5\), \(\uplambda _{2} = 1\), \(\uplambda _{3} = 0.1\), \(\uplambda _{4} = 0.6\) and \(\uplambda _{5} = - 0.6\). The exact rogue wave solution of Eq. (1) is then as follows [30]

which gives the initial condition

with the Dirichlet–Neumann periodic boundary condition

The data set is generated by the pseudo-spectral method on the space–time region \((x,t) \in [ - 2,2] \times [ - 1.5,1.5]\), and the rogue wave solution is discretized into \([513 \times 401]\) data points. Following the same procedure as for the one-soliton, after \(4000\) iterations the network achieves a relative \(L_{2}\) error of \(2.555952 \times 10^{ - 2}\) in about \(47319.1462\) seconds.

Figure 3 shows the reconstructed space–time dynamics of the rogue wave. Figure 3a shows the exact solution together with the initial and boundary sampling points. Figure 3b shows the reconstructed space–time dynamics of the rogue wave of the HNLSE. In Fig. 3c, we compare the predicted and exact solutions at three distances, \(t = - 0.375,0,0.375\). Figure 3d shows the error \(Er = \left| {\hat{Q}(t,x) - Q(t,x)} \right|\) between the predicted and exact solutions. The error stays below \(2 \times 10^{ - 1}\), so the predictions are relatively accurate.

Rogue wave solution \(Q(t,x)\): a Exact solution \(\left| {Q(t,x)} \right|\) with the boundary and initial sampling points; b Reconstructed spatiotemporal dynamics of the rogue wave solution; c Comparison between the exact and predicted solutions at three distances, with the red hollow dots as the predicted values and the blue solid lines as the exact values; d The three-dimensional stereogram of error. (Color figure online)

3.4 W-soliton solution

We take the parameters \(\uplambda _{1} = 0\), \(\uplambda _{2} = 0.1\), \(\uplambda _{3} = 0\), \(\uplambda _{4} = 0.01\) and \(\uplambda _{5} = - 0.015\) in Eq. (1). The exact W-soliton solution is then as follows [33]

with the initial conditions

and the periodic boundary condition

The data set is generated by the pseudo-spectral method on the space–time region \((x,t) \in [ - 10,10] \times [0,5]\), and the W-soliton solution is discretized into \([256 \times 201]\) data points. Following the same procedure as for the one-soliton, the MSE loss function given by Eq. (5) is learned by a PINN with seven hidden layers of 40 neurons each. After \(4000\) iterations, the network achieves a relative \(L_{2}\) error of \(9.128569 \times 10^{ - 3}\) in about \(3826.5934\) seconds.

Figure 4 shows the reconstructed space–time dynamics of the W-soliton. Figure 4a shows the exact solution together with the initial and boundary sampling points. Figure 4b shows the reconstructed space–time dynamics of the W-soliton of the HNLSE. In Fig. 4c, we compare the predicted and exact solutions at three distances, \(t = 0.55,2.5,4.5\). Figure 4d shows that the error between the predicted and exact solutions is very small, so the prediction is accurate over the whole space–time domain.

W-soliton solution \(Q(t,x)\): a Exact solution \(\left| {Q(t,x)} \right|\) with the boundary and initial sampling points; b Reconstructed W-soliton space–time dynamics; c Comparison between the exact and predicted solutions at three distances, with the red hollow dots as the predicted values and the blue solid lines as the exact values; d The three-dimensional stereogram of error. (Color figure online)

3.5 M-soliton solution

We take the parameters \(\uplambda _{1} = 0\),\(\uplambda _{2} = 2\),\(\uplambda _{3} = 0\),\(\uplambda _{4} = 0.0247\) and \(\uplambda _{5} = - 0.03705\) in Eq. (1), and the exact solution of M-soliton is as follows [34]

To apply the PINN, we consider the initial condition

and the Dirichlet–Neumann periodic boundary condition

Following the same procedure as for the W-soliton, after \(4000\) iterations the network achieves a relative \(L_{2}\) error of \(6.015803 \times 10^{ - 3}\) in about \(7924.9628\) seconds. Figure 5 shows the reconstructed space–time dynamics of the M-soliton. Figure 5a shows the exact solution together with the initial and boundary sampling points. Figure 5b shows the reconstructed space–time dynamics of the M-soliton of the HNLSE. In Fig. 5c, we compare the predicted and exact solutions at three distances, \(t = 0.55,2.5,4.5\). Figure 5d shows the density plot of the error between the predicted and exact solutions. The plot indicates that the optimized PINN predictions are very accurate over the whole domain.

M-soliton solution \(Q(t,x)\): a Exact solution \(\left| {Q(t,x)} \right|\) with the boundary and initial sampling points; b Reconstructed M-soliton space–time dynamics; c Comparison between the exact and predicted solutions at three distances, with the red hollow dots as the predicted values and the blue solid lines as the exact values; d The error density plot. (Color figure online)

4 Data-driven parameter discovery of HNLSE

In this section, we consider data-driven parameter discovery for the HNLSE using the PINN. Mathematically, many authors have studied the completely integrable case of Eq. (1), known as the Hirota equation [30]. This equation is as follows

where the pulse envelope is \(Q = u(x,t) + iv(x,t)\), with real part \(u\) and imaginary part \(v\). The coefficients \(\uplambda _{1}\), \(\uplambda _{2}\), \(\uplambda _{3}\) and \(\uplambda _{4}\) are unknown parameters to be determined by training the PINN.
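We assume the higher-order terms of Eq. (1) are written as \(i[\uplambda _{3} Q_{xxx} + \uplambda _{4} (|Q|^{2} Q)_{x} + \uplambda _{5} Q(|Q|^{2} )_{x} ]\); this is our assumption about its conventions. Under that assumption, the Hirota case follows by expanding the two derivative nonlinearities:

```latex
\begin{aligned}
\left(|Q|^{2}Q\right)_{x} &= 2|Q|^{2}Q_{x} + Q^{2}Q_{x}^{*}, \\
Q\left(|Q|^{2}\right)_{x} &= |Q|^{2}Q_{x} + Q^{2}Q_{x}^{*}, \\
\lambda_{4}\left(|Q|^{2}Q\right)_{x} + \lambda_{5}\,Q\left(|Q|^{2}\right)_{x}
  &= \lambda_{4}|Q|^{2}Q_{x} \qquad (\lambda_{5} = -\lambda_{4}), \\
&\Longrightarrow\; iQ_{t} + \lambda_{1}\left(Q_{xx} + 2|Q|^{2}Q\right)
  + i\lambda_{3}\left(Q_{xxx} + 6|Q|^{2}Q_{x}\right) = 0
  \qquad (\lambda_{2} = 2\lambda_{1},\ \lambda_{4} = 6\lambda_{3}).
\end{aligned}
```

With \(\uplambda _{1} = 0.5\) and \(\uplambda _{3} = 0.1\), this gives \(\uplambda _{2} = 1\) and a coefficient \(6\uplambda _{3} = 0.6\) for \(i|Q|^{2} Q_{x}\). These match the values used below if Eq. (25) is read as \(iQ_{t} + \uplambda _{1} Q_{xx} + \uplambda _{2} |Q|^{2} Q + i(\uplambda _{3} Q_{xxx} + \uplambda _{4} |Q|^{2} Q_{x} ) = 0\).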

The network \(f(x,t)\) is defined as

and \(f(x,t)\) is split into real and imaginary parts, \(f(x,t) = f_{u} (x,t) + if_{v} (x,t)\), so that

This yields a multi-output neural network whose outputs are the real and imaginary parts of \(f(x,t)\). The unknown parameters in the PINN are learned by minimizing the MSE loss function

Following Ref. [26], we use the rogue wave solution as the data set. The exact rogue wave solution of the HNLSE (25) with parameters \(\uplambda _{1} = 0.5\), \(\uplambda _{2} = 1\), \(\uplambda _{3} = 0.1\) and \(\uplambda _{4} = 0.6\) is computed by the pseudo-spectral method on the space–time region \((x,t) \in [ - 8,8] \times [ - 2,2]\) and discretized into \([256 \times 201]\) data points.
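The unknown coefficients enter the residual linearly, which is why they can be identified from data at all. The sketch below illustrates this with a deliberately simpler technique than the PINN: a plain (non-sparse) linear least-squares fit of finite-difference derivatives, in the spirit of the regression approach of [25]. It makes two assumptions. First, Eq. (25) reads \(iQ_{t} + \uplambda _{1} Q_{xx} + \uplambda _{2} |Q|^{2} Q + i(\uplambda _{3} Q_{xxx} + \uplambda _{4} |Q|^{2} Q_{x} ) = 0\). Second, the data come from a bright soliton \(Q = {\text{sech}}(x - \uplambda _{3} t)e^{{i\uplambda _{1} t}}\) rather than the rogue wave, because the soliton has a short closed form. This soliton solves the assumed equation when \(\uplambda _{2} = 2\uplambda _{1}\) and \(\uplambda _{4} = 6\uplambda _{3}\). The sketch uses clean data only; unlike the PINN, finite differences would amplify noise.

```python
import cmath
import math

# lambda_1..lambda_4 used in Sect. 4 (Hirota case: l2 = 2*l1, l4 = 6*l3).
LAM_TRUE = (0.5, 1.0, 0.1, 0.6)


def soliton(x, t, lam=LAM_TRUE):
    """Illustrative bright soliton sech(x - l3*t) * exp(i*l1*t) of the assumed Eq. (25)."""
    l1, _, l3, _ = lam
    return cmath.exp(1j * l1 * t) / math.cosh(x - l3 * t)


def library_row(q, x, t, h=1e-3):
    """Candidate terms [Q_xx, |Q|^2 Q, i Q_xxx, i |Q|^2 Q_x] and target -i Q_t at (x, t)."""
    q0 = q(x, t)
    q_t = (q(x, t + h) - q(x, t - h)) / (2 * h)
    q_x = (q(x + h, t) - q(x - h, t)) / (2 * h)
    q_xx = (q(x + h, t) - 2 * q0 + q(x - h, t)) / h ** 2
    q_xxx = (q(x + 2 * h, t) - 2 * q(x + h, t)
             + 2 * q(x - h, t) - q(x - 2 * h, t)) / (2 * h ** 3)
    terms = [q_xx, abs(q0) ** 2 * q0, 1j * q_xxx, 1j * abs(q0) ** 2 * q_x]
    return terms, -1j * q_t


def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def identify(q, xs, ts):
    """Real least squares for (l1, l2, l3, l4): each complex residual equation
    contributes one row for its real part and one for its imaginary part."""
    rows, rhs = [], []
    for x in xs:
        for t in ts:
            terms, target = library_row(q, x, t)
            rows.append([z.real for z in terms])
            rhs.append(target.real)
            rows.append([z.imag for z in terms])
            rhs.append(target.imag)
    k = len(rows[0])
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    proj = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(k)]
    return solve(normal, proj)


xs = [-4.0 + 0.5 * k for k in range(17)]
ts = [-1.0, 0.0, 1.0]
print(identify(soliton, xs, ts))  # approximately [0.5, 1.0, 0.1, 0.6]
```

The PINN replaces the finite differences with derivatives of a trained network. This is what lets it cope with scattered and noisy samples.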

To learn the unknown parameters \(\uplambda _{1}\), \(\uplambda _{2}\), \(\uplambda _{3}\) and \(\uplambda _{4}\) with the PINN, \(N_{f} = 5000\) points are randomly selected from the sampling points. A deep neural network with six hidden layers of 50 neurons each is used to learn the PINN \(f(x,t)\). Tables 1, 2 and 3 give the training results for the unknown parameters in different situations, with the training error in the last column. In Table 1, \(\uplambda _{3}\) and \(\uplambda _{4}\) are fixed at 0.1 and 0.6 in the neural network; in Table 2, only \(\uplambda _{4}\) is fixed at 0.6. The PINN identifies the unknown parameters of the HNLSE with high accuracy even when the sampling points are corrupted by 1% uncorrelated noise. As the number of parameters to be trained increases, the error gradually increases, but the results remain robust.
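The noise model is not specified above. A common convention in PINN examples, used in the publicly available examples accompanying [20], adds zero-mean Gaussian noise whose standard deviation is a fixed fraction of the standard deviation of the clean data. The sketch below assumes that convention (the function names are hypothetical) and applies it separately to the real and imaginary parts of \(Q\).

```python
import random
import statistics


def add_relative_noise(values, level=0.01, seed=0):
    """Add zero-mean Gaussian noise with standard deviation level * std(values).

    Under this (assumed) convention, level = 0.01 corresponds to "1% noise".
    """
    rng = random.Random(seed)
    sigma = level * statistics.pstdev(values)
    return [v + rng.gauss(0.0, sigma) for v in values]


def add_relative_noise_complex(q_values, level=0.01, seed=0):
    """Corrupt complex samples Q = u + iv by perturbing u and v independently."""
    u = add_relative_noise([q.real for q in q_values], level, seed)
    v = add_relative_noise([q.imag for q in q_values], level, seed + 1)
    return [complex(a, b) for a, b in zip(u, v)]
```

Scaling the noise by the data's own spread keeps "1%" meaningful regardless of the amplitude of the solution.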

With only a small number of sampling points, our main purpose is to identify the important physical parameters of the model while further reducing the error. However, the optimization we found for the HNLSE may not carry over to other models, so a more general rule is needed. This will be our next research direction.

To analyze further how to reduce the training error, we systematically studied the effect of the total number of sampling points, the number of neurons and the number of hidden layers. We used the controlled-variable method, varying one factor at a time while keeping the others fixed, to find a good network structure. The results are shown in Figs. 6 and 7. The training results remain stable as the number of neurons changes. Based on the results for the four unknown parameters and the computational cost, we find that 50 neurons per layer is a suitable choice. In Fig. 7, we vary the number of randomly selected sampling points and the number of hidden layers, and find that \(N_{f} = 5000\) sampling points and 6 hidden layers are a better choice. The time costs of the network structures in Figs. 6, 7a and 7b are given in Tables 4, 5 and 6. The time cost increases with the number of neurons, sampling points and layers, because a more complex network requires more computation. Absolute times depend on the hardware, but the growth trend should not. Compared with previous work on data-driven parameter discovery [20, 26], our error is greatly reduced. Similar training results can be obtained by using bright solitons and other exact solutions as data sets.
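The controlled-variable search described above can be written as a one-factor-at-a-time loop. In this sketch, `evaluate` is a hypothetical callable that would train a PINN for a given configuration and return, for example, the parameter-identification error. The baseline matches the structure reported above, but the candidate grid is hypothetical.

```python
def one_factor_at_a_time(baseline, candidates, evaluate):
    """Vary one hyperparameter at a time around `baseline` (controlled-variable
    method) and record the score of every configuration tried.

    baseline:   dict of hyperparameters
    candidates: dict mapping a hyperparameter name to the values to try
    evaluate:   hypothetical callable(config) -> error, e.g. train a PINN and
                return the relative error of the identified parameters
    """
    results = {}
    for name, values in candidates.items():
        for value in values:
            config = dict(baseline, **{name: value})  # all other factors fixed
            results[(name, value)] = evaluate(config)
    return results


def best_per_factor(results):
    """Return, for each hyperparameter, the value with the smallest error."""
    best = {}
    for (name, value), error in results.items():
        if name not in best or error < best[name][1]:
            best[name] = (value, error)
    return {name: value for name, (value, _) in best.items()}


# Reported structure as the baseline; the candidate values are hypothetical.
BASELINE = {"hidden_layers": 6, "neurons": 50, "n_f": 5000}
CANDIDATES = {
    "hidden_layers": [4, 5, 6, 7, 8],
    "neurons": [30, 40, 50, 60],
    "n_f": [1000, 3000, 5000, 7000],
}
```

A loop like this only explores the neighbours of the baseline, so it can establish a locally good choice but not a global optimum. In practice one would also record the wall-clock time of each run, since the reported choice balances error against cost.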

Of course, our network structure is only locally optimal. We do not know whether further increasing the three variables (the total number of sampling points, the number of neurons and the number of hidden layers) would give better results. We hope to find a general rule in future research.

5 Conclusion

In conclusion, we have studied the one-soliton, two-soliton, rogue wave, W-soliton and M-soliton solutions of the HNLSE with the multilayer PINN deep learning method under different initial and periodic boundary conditions. In particular, for the W-soliton and M-soliton, the relative \(L_{2}\) errors of the predictions are of order \(10^{ - 3}\) (\(9.128569 \times 10^{ - 3}\) and \(6.015803 \times 10^{ - 3}\)), and training is fast. The results show that training is more effective for simple solitons. In Figs. 4d and 5d, the error gradually increases with the learning distance. This is because the training data are sampled only at the initial and boundary points, so the discrepancy between the prediction and the exact solution grows with distance from the initial data.

In addition, we studied data-driven parameter discovery for the HNLSE based on the rogue wave solution, adding noise to the data to assess the robustness of the neural network. The prediction error increases with the number of unknown parameters. These changes remain within a controllable range, which indicates that the PINN performs well on this problem. We optimized the network structure with respect to three factors: the number of layers, the number of neurons, and the number of sampling points. Compared with previous studies [26], our error is greatly reduced.

In this work, we used a data-driven algorithm to predict solutions of the HNLSE and the physical parameters of the model. We intend to apply neural networks to optics, for example to predict the dynamical behavior of solitons and for model predictive control. The present work is a first step. We hope to obtain partial soliton image data experimentally and use it to predict the propagation of solitons.

References

Peregrine, D.H.: Water waves, nonlinear Schrödinger equations and their solutions. J. Aust. Math. Soc. Ser. B 25, 16–43 (1983)

Ablowitz, M.J., Clarkson, P.A.: Solitons, Nonlinear Evolution Equations and Inverse Scattering. Cambridge University Press, Cambridge (1992)

Han, H.B., Li, H.J., Dai, C.Q.: Wick-type stochastic multi-soliton and soliton molecule solutions in the framework of nonlinear Schrödinger equation. Appl. Math. Lett. 120, 107302 (2021)

Li, P.F., Li, R.J., Dai, C.Q.: Existence, symmetry breaking bifurcation and stability of two-dimensional optical solitons supported by fractional diffraction. Opt. Express 29, 3193–3209 (2021)

Wang, B.H., Wang, Y.Y., Dai, C.Q., Chen, Y.X.: Dynamical characteristic of analytical fractional solitons for the space-time fractional Fokas-Lenells equation. Alex. Eng. J. 59, 4699–4707 (2020)

Kodama, Y., Hasegawa, A.: Nonlinear pulse propagation in a monomode dielectric guide. IEEE J. Quantum Electron. 23, 510–515 (1987)

Marcucci, G., Pierangeli, D., Conti, C.: Theory of neuromorphic computing by waves: machine learning by rogue waves, dispersive shocks, and solitons. Phys. Rev. Lett. 125, 093901 (2020)

Bar-Sinai, Y., Hoyer, S., Hickey, J., et al.: Learning data driven discretizations for partial differential equations. PNAS 116, 15344–15349 (2019)

Zhang, R.F., Bilige, S.: Bilinear neural network method to obtain the exact analytical solutions of nonlinear partial differential equations and its application to p-gBKP equation. Nonlinear Dyn 95, 3041–3048 (2019)

Zhang, R.F., Bilige, S., et al.: Bright-dark solitons and interaction phenomenon for p-gBKP equation by using bilinear neural network method. Phys. Scr. 96, 025224 (2021)

Zhang, R.F., Li, M.C., et al.: Generalized lump solutions, classical lump solutions and rogue waves of the (2+1)-dimensional Caudrey-Dodd-Gibbon-Kotera-Sawada-like equation. Appl. Math. Comput. 403, 126201 (2021)

Urbaniak, I., Wolter, M.: Quality assessment of compressed and resized medical images based on pattern recognition using a convolutional neural network. Commun. Nonlin. Sci. Numer. Simul. 95, 105582 (2021)

Ge, C.P., Liu, Z., Fang, L.M., et al.: A hybrid fuzzy convolutional neural network based mechanism for photovoltaic cell defect detection with electroluminescence images. IEEE Trans. Parallel Distrib. Syst. 32, 1653–1664 (2021)

Alipanahi, B., Delong, A., Weirauch, M.T., Frey, B.J.: Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 33, 831–838 (2015)

Duda, R.O., Hart, P.E., Stork, D.G.: Pattern Classification. Springer-Verlag, New York (2000)

Lake, B.M., Salakhutdinov, R., Tenenbaum, J.B.: Human-level concept learning through probabilistic program induction. Science 350, 1332–1338 (2015)

Johri, P., Verma, J.K., Paul, S. (eds.): Applications of Machine Learning. Springer, Singapore (2020)

Lagaris, I.E., Likas, A., Fotiadis, D.I.: Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw 9, 987–1000 (1998)

Liu, Y., Xu, Y., Ma, J.: Synchronization and spatial patterns in a light-dependent neural network. Commun. Nonlin. Sci. Numer. Simul. 89, 105297 (2020)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019)

Raissi, M., Karniadakis, G.E.: Hidden physics models: machine learning of nonlinear partial differential equations. J. Comput. Phys. 357, 125–141 (2018)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Numerical Gaussian processes for time-dependent and non-linear partial differential equations. SIAM J. Sci. Comput. 40, A172–A198 (2017)

Raissi, M., Yazdani, A., Karniadakis, G.E.: Hidden fluid mechanics: learning velocity and pressure fields from flow visualizations. Science 367, 1026–1030 (2020)

Jagtap, A.D., Kharazmi, E., Karniadakis, G.E.: Conservative physics-informed neural networks on discrete domains for conservation laws: applications to forward and inverse problems. Comput. Methods Appl. Mech. Eng. 365, 113028 (2020)

Rudy, S.H., Brunton, S.L., Proctor, J.L., Kutz, J.N.: Data-driven discovery of partial differential equations. Sci. Adv. 3, e1602614 (2017)

Wang, L., Yan, Z.Y.: Data-driven rogue waves and parameter discovery in the defocusing NLS equation with a potential using the PINN deep learning. Phys. Lett. A 404, 127408 (2021)

Pu, J.C., Li, J., Chen, Y.: Soliton, breather and rogue wave solutions for solving the nonlinear Schrödinger equation using a deep learning method with physical constraints. Chin. Phys. B (2021). https://doi.org/10.1088/1674-1056/abd7e3

Xu, Z.Y., Li, L., Li, Z.H., Zhou, G.S.: Modulation instability and solitons on a cw background in an optical fiber with higher-order effects. Phys. Rev. E 67, 026603 (2003)

Jagtap, A.D., Kawaguchi, K., Karniadakis, G.E.: Locally adaptive activation functions with slope recovery term for deep and physics-informed neural networks. Proc. R. Soc. A 476, 2239 (2020)

Tao, Y.S., He, J.S.: Multisolitons, breathers, and rogue waves for the Hirota equation generated by the Darboux transformation. Phys. Rev. E 85, 026601 (2012)

Stein, M.L.: Large sample properties of simulations using latin hypercube sampling. Technometrics 29, 143–151 (1987)

Xu, Z.Y., Li, L., Li, Z.H., Zhou, G.S.: Soliton interaction under the influence of higher-order effects. Opt. Commun. 210, 375–384 (2002)

Li, Z.H., Li, L., Tian, H.P., Zhou, G.S.: New types of solitary wave solutions for the higher order nonlinear Schrödinger equation. Phys. Rev. Lett. 84, 18 (1999)

Tian, J.P., Tian, H.P., Li, Z.H., Kang, L.S., Zhou, G.S.: An inter-modulated solitary wave solution for the higher order nonlinear Schrödinger equation. Phys. Scr. 67, 325–328 (2003)

Acknowledgements

This work is supported by the Zhejiang Provincial Natural Science Foundation of China (Grant No. LR20A050001), the National Natural Science Foundation of China (Grant Nos. 12075210 and 11874324) and the Scientific Research and Developed Fund of Zhejiang A&F University (Grant No. 2021FR0009).


Ethics declarations

Conflict of interest

The authors have declared that no conflict of interest exists.

Ethical Standards

This research does not involve human participants and/or animals.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Y. Fang and G. Z. Wu contributed equally to this work.


Cite this article

Fang, Y., Wu, GZ., Wang, YY. et al. Data-driven femtosecond optical soliton excitations and parameters discovery of the high-order NLSE using the PINN. Nonlinear Dyn 105, 603–616 (2021). https://doi.org/10.1007/s11071-021-06550-9


DOI: https://doi.org/10.1007/s11071-021-06550-9