Abstract

The edge node selection problem in edge computing is a typical multi-criteria group decision-making problem. In this paper, we put forward an ELECTRE II method with the probabilistic linguistic information to handle the edge node selection problem. First, a novel distance measure is developed for probabilistic linguistic term sets (PLTSs) and an entropy measure is devised to measure the uncertainty degree of PLTSs. Based on the score value and entropy, a novel method is put forward to compare two PLTSs. Next, a weight-determining method for criteria based on multiple correlation coefficient and a weight-determining method for experts based on entropy theory are proposed. After that, a novel probabilistic linguistic ELECTRE II method is put forward to deal with the edge node selection problem. Comparison with previous methods is provided to verify the superiority of our method.

1 Introduction

In the last 10 years, cloud computing, which offers elastic computing and storage resources on a purchase-on-demand basis, has dominated the IT (Information Technology) market [1]. Many companies have released their own cloud computing services, which help small and medium enterprises reduce the high cost of expanding and maintaining IT infrastructure. For example, Amazon has launched Amazon Web Services, which provides users with a set of cloud computing services including elastic computing, storage, databases, and applications. To reduce costs and focus better on their business [2], many enterprises choose to migrate their applications from traditional computer systems to cloud computing platforms. Once connected to a cloud computing platform, mobile users upload their requests to it for processing.

However, with the rapid development of the Internet of Things (IoT), the data generated by mobile users are growing explosively. It is expected that 26 billion IoT devices will be churning out data and that the average amount of data produced per person per day will reach 1.5 GB by 2020 [3]. Cloud computing platforms cannot satisfy the computing requirements of handling such explosively growing data. Moreover, the transmission of huge volumes of data between cloud computing centers and mobile users can cause network congestion, since cloud computing centers are usually far away from mobile users. This congestion can lead to high network latency, which cannot meet the real-time response requirements of time-sensitive applications. For example, live-streaming and game applications need real-time responses to ensure a high quality of experience (QoE) for mobile users. Edge computing, as a supplement to cloud computing, takes advantage of edge nodes at the edge of the network to handle part of the requests from mobile users and provide real-time responses. Edge computing can thus help to alleviate the computing pressure on cloud computing platforms [4]. When mobile users enter an edge computing network, they should choose an appropriate edge node to handle their requests. However, there are usually several available edge nodes to choose from. Key factors such as the amount of available computing resources, the security level, and the network bandwidth should be considered. Moreover, many mobile users may take part in the assessment of edge nodes. Hence, the edge node selection problem in edge computing can be considered a typical multi-criteria group decision-making (MCGDM) problem.

Due to the complexity of decision-making problems, people usually cannot use crisp values to express their preference information [5]. Sometimes, decision makers (DMs) prefer to utilize qualitative terms rather than quantitative values to assess objects [6,7,8,9,10,11]. To model such qualitative assessment information, Zadeh [12] put forward a fuzzy linguistic approach. A virtual linguistic model [13] was devised to redefine the syntax and semantics of linguistic computational models. In some cases, DMs may hesitate among several different linguistic terms when they assess the alternatives. Therefore, Rodriguez et al. [14] proposed the hesitant fuzzy linguistic term set (HFLTS) to capture the hesitancy degree under the linguistic environment. For example, when the performance of the motor of a car is evaluated, the DM may hesitate between the linguistic terms “medium” and “bad,” and then, the evaluation information is modeled as an HFLTS {“bad”, “medium”}. However, in some cases, the DM may prefer the linguistic term “medium” to the linguistic term “bad,” namely the probabilities of these two linguistic terms are different [15]. The HFLTS cannot model this kind of complex qualitative information. To overcome this defect, Pang et al. [16] proposed the probabilistic linguistic term set (PLTS), which allows DMs to give their preference information as a set of several linguistic terms associated with probabilities.

The concept of PLTS has attracted much attention from researchers and scholars. They mainly focused on the comparison methods [17], entropy measures [18], and operation laws [19]. Pan et al. [20] combined the PLTS with ELECTRE II method and proposed a probabilistic linguistic ELECTRE II method to handle the therapeutic schedule evaluation problem. As a supplement to it, we propose a novel score-entropy-based ELECTRE II method for PLTSs in this study and then apply it to handle the edge node selection problem in the edge computing network. Our contributions can be summarized as follows:

(1) We first develop a function to obtain PLTS vectors from PLTSs and then propose a novel distance measure for PLTSs based on PLTS vectors.

(2) An information entropy is put forward to measure the uncertainty degrees of PLTSs. Combining the score function with the information entropy, a novel comparison method is developed to compare PLTSs.

(3) The concept of multiple correlation coefficient (MCC) is utilized to compute the weights of criteria for MCGDM problems with probabilistic linguistic information. In addition, a weight-determining method based on entropy theory is developed to calculate the weights of DMs.

(4) The comparison method and the weight-determining methods are combined with the ELECTRE II method to develop a novel probabilistic linguistic ELECTRE II method. Then, this method is utilized to handle the edge node selection problem in the edge computing network.

The remainder of this paper is organized as follows: Section 2 presents some basic knowledge about the linguistic term set and PLTSs. In Sect. 3, a novel distance measure and a comparison method of PLTSs are presented. A novel probabilistic linguistic ELECTRE II method is developed in Sect. 4. Section 5 gives a demonstrative example concerning the edge node selection problem to illustrate the process of the proposed probabilistic linguistic ELECTRE II method. Comparative analysis is provided in Sect. 6, and the study ends with some conclusions in Sect. 7.

2 Preliminaries

2.1 The linguistic term set

The linguistic term set (LTS), which is also referred to as the linguistic evaluation scale, is an essential tool for linguistic decision making. It is composed of finite and totally ordered linguistic terms, which can be mathematically denoted as \(S_1 =\left\{ {s_\alpha | \alpha =0,1,\ldots , \tau } \right\} \) [21], where \(\tau \) denotes a positive integer. The subscript of each linguistic term in \(S_1\) is discrete, which would lead to the information loss during the computation processes of linguistic terms. To solve this issue, Xu [22] extended the discrete LTS to the continuous LTS \(\overline{S_1 } =\left\{ {s_\alpha |\alpha \in \left[ {0,q} \right] } \right\} \) with \(q(q>\tau )\). This continuous LTS \(\overline{S_1}\) satisfies: (1) \(s_a \oplus s_b =s_{a+b}\), \(s_a, s_b \in \bar{{S}}_1\); (2) \(\lambda s_a =s_{\lambda a}\), \(\lambda \in [0,1]\).

For an LTS \(S_1 =\left\{ s_0 =very\;small,\;s_1 =small,\;s_2 =moderate,\;s_3 =big,\;s_4 =very\;big \right\} \), we can get \(s_1 \oplus s_2 =s_3\), which implies that the sum of linguistic terms “small” and “moderate” is equal to the linguistic term “big”. Obviously, it does not make any sense. To avoid this defect, Xu [23] defined a subscript-symmetric LTS as \(S_2 =\{s_\alpha | \alpha =-\tau , \ldots , 0,\ldots , \tau \}\), where \(s_{-\tau }\) and \(s_{\tau }\) denote the lower and upper values of the linguistic terms in \(S_2\), respectively. The linguistic terms in \(S_2\) satisfy: (1) If \(a>b\), then \(s_a >s_b\); (2) there is a negation operator: \(neg\left( {s_a } \right) =s_{-a}\).

2.2 Concepts of probabilistic linguistic term set

The HFLTS is an important tool to model the hesitancy under the linguistic setting [24]. Liao et al. [25] presented the mathematical form of the HFLTS on \(S_2\) as \(H_S =\left\{ \left. \langle x,h_s \left( x \right) \rangle \right| x\in X \right\} \), where a hesitant fuzzy linguistic element (HFLE) \(h_s \left( x \right) =\{s_k \left( x \right) |s_k \left( x \right) \in S_2, k=1,2,\ldots , \# h_s \}\) is a set of some possible linguistic terms in \(S_2\). In each HFLE, the linguistic terms have equal weight or probability. In real applications, DMs may prefer one linguistic term to another one when providing evaluation information, namely the linguistic terms should have different weights or probabilities. To extend the modeling capability of HFLTSs, Pang et al. [16] developed the definition of PLTSs to associate each linguistic term with a probability value.

Definition 1

[16] Let \(S_1 =\left\{ {s_0, \ldots , s_\tau } \right\} \) be a subscript-asymmetric LTS, and then, the mathematical form of a PLTS can be expressed as

$$L\left( p \right) =\left\{ \left. L^{\left( k \right) }\left( p^{\left( k \right) } \right) \right| L^{\left( k \right) }\in S_1,\; p^{\left( k \right) }\ge 0,\; k=1,2,\ldots ,\# L\left( p \right) ,\; \sum _{k=1}^{\# L\left( p \right) } p^{\left( k \right) } \le 1 \right\}$$

where the element \(L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) \) is composed of the \(k\hbox {th}\) linguistic term \(L^{\left( k \right) }\) and its probability \(p^{\left( k \right) }\), and the term \(\# L\left( p \right) \) denotes the number of elements in \(L\left( p \right) \). The linguistic terms \(L^{\left( k \right) }, k=1,2,\ldots ,\# L\left( p \right) \) in \(L\left( p \right) \) are arranged in ascending order.

From Definition 1, it can be noted that the sum of probabilities in a PLTS may be less than 1, namely \({\mathop {\sum }\nolimits _{k=1}^{\# L\left( p \right) }} {p^{\left( k \right) }} <1\). This implies that some DMs give up rating the object. For example, ten consumers are called to assess the performance of a computer. Three consumers say that it is “good,” five consumers say that it is “medium,” and the other two consumers do not give any evaluation information. Then, the evaluation information regarding the performance of this computer can be expressed as a PLTS \(\{``\hbox {good''} (0.3), ``\hbox {medium''} (0.5)\}\), where the sum of probabilities is equal to 0.8.

To avoid the deficiency of the LTS \(S_1\), Zhang et al. [26] proposed the definition of PLTS based on the subscript-symmetric LTS \(S_2\). When the sum of probabilities of the linguistic terms in a PLTS is less than 1, \(L\left( p \right) =\left\{ {\left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| L^{\left( k \right) }\in S_2, \;\mathop \sum \nolimits _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }} \le 1} \right\} \) is normalized to \(L^{N}\left( p \right) =\left\{ \left. {L^{\left( k \right) }\left( {p^{N\left( k \right) }} \right) } \right| L^{\left( k \right) }\in S_2,\mathop \sum \nolimits _{k=1}^{\# L\left( p \right) } {p^{N\left( k \right) }} =1 \right\} \) with \(p^{N\left( k \right) }={p^{\left( k \right) }}/{\mathop \sum \nolimits _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }}}\) for each k [16]. Let \(L\left( p \right) =\left\{ \left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| k=1,2,...,\# L\left( p \right) \right\} \) denote a PLTS, then the score function of \(L\left( p \right) \) is defined as \(E\left( {L\left( p \right) } \right) =s_{\bar{{\gamma }}}\), where \(\bar{{\gamma }}={\sum _{k=1}^{\# L\left( p \right) } {\gamma ^{\left( k \right) }} p^{\left( k \right) }}/{\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }}}\) and \(\gamma ^{\left( k \right) }\) is the subscript of linguistic term \(L^{\left( k \right) }\) [16].
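The normalization and the score function can be illustrated with a minimal Python sketch. A PLTS is represented here as a list of (subscript, probability) pairs, which is an illustrative choice rather than notation from [16]; the subscripts assigned to “medium” and “good” are likewise hypothetical, and the helper names are our own.

```python
from fractions import Fraction

# A PLTS is modelled as a list of (subscript, probability) pairs in ascending
# order of subscript. Hypothetical subscripts: "medium" -> s_0, "good" -> s_1.
ratings = [(0, Fraction(5, 10)), (1, Fraction(3, 10))]


def normalize(plts):
    """Rescale the probabilities so that they sum to 1: p^N(k) = p(k) / sum of p."""
    total = sum(p for _, p in plts)
    return [(gamma, p / total) for gamma, p in plts]


def score_subscript(plts):
    """Subscript of the score E(L(p)): the probability-weighted mean subscript."""
    total = sum(p for _, p in plts)
    return sum(gamma * p for gamma, p in plts) / total


normalized = normalize(ratings)
gamma_bar = score_subscript(ratings)
```

For the consumer example, the normalized probabilities are 5/8 for “medium” and 3/8 for “good”, and the score subscript is 3/8. Because the score function already divides by the sum of probabilities, it gives the same value before and after normalization.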

For two PLTSs \(L_1 \left( p \right) =\{L_1^{\left( k \right) } \left( {p_1^{\left( k \right) } } \right) |k=1,2,\ldots , \# L_1 \left( p \right) \}\) and \(L_2 \left( p \right) =\left\{ \left. {L_2^{\left( k \right) } \left( {p_2^{\left( k \right) } } \right) } \right| k=1,2,...,\# L_2 \left( p \right) \right\} \), they usually have different numbers of elements. Pang et al. [16] made them have the same number of elements using the following method. If \(\# L_1 \left( p \right) >\# L_2 \left( p \right) \), then \(\# L_1 \left( p \right) -\# L_2 \left( p \right) \) linguistic terms are added into \(L_2 \left( p \right) \) so that the numbers of elements in \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \) are equal. The added linguistic terms are the smallest one in \(L_2 \left( p \right) \), and the probabilities of these added linguistic terms are zero.
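The padding rule described above can be sketched as follows. The list-of-pairs representation and the function name `pad_to_same_length` are illustrative assumptions, and the second PLTS in the example is hypothetical.

```python
def pad_to_same_length(l1, l2):
    """Return sorted copies of two PLTSs padded to the same number of elements.

    The shorter PLTS receives copies of its own smallest linguistic term,
    each with probability 0, as described in the text.
    """
    l1, l2 = sorted(l1), sorted(l2)
    shorter = l1 if len(l1) < len(l2) else l2
    missing = abs(len(l1) - len(l2))
    if missing:
        smallest = shorter[0][0]
        # Insert at the front so that the ascending order of subscripts is kept.
        shorter[:0] = [(smallest, 0.0)] * missing
    return l1, l2


first = [(0, 0.2), (1, 0.6), (2, 0.2)]
second = [(1, 0.5), (2, 0.5)]  # hypothetical PLTS with fewer elements
padded_first, padded_second = pad_to_same_length(first, second)
```

Since the added terms carry zero probability, the score of the padded PLTS is the same as that of the original one.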

To fuse PLTSs in the decision-making processes, Pang et al. [16] developed two aggregation operators.

Definition 2

[16] Let \(L_i \left( p \right) =\left\{ L_i^{\left( k \right) } \left( {p_i^{\left( k \right) } } \right) |k=1,2,\ldots , \# L_i (p) \right\} \left( {i=1,2,\ldots , n} \right) \) denote n PLTSs with \(L_i^{\left( k \right) }\) and \(p_i^{\left( k \right) }\) being the \(k\hbox {th}\) linguistic term and its probability in the \(i\hbox {th}\) PLTS \(L_i \left( p \right) \). \(w=\left( {w_1, w_2, \ldots , w_n} \right) ^{T}\) is the weight vector of PLTSs that satisfies \(w_i \in \left[ {0,1} \right] \) and \(\sum _{i=1}^{n} w_i =1\). Then, the probabilistic linguistic weighted averaging (PLWA) operator is defined as:

$$PLWA\left( L_1 \left( p \right) ,L_2 \left( p \right) ,\ldots ,L_n \left( p \right) \right) =w_1 L_1 \left( p \right) \oplus w_2 L_2 \left( p \right) \oplus \cdots \oplus w_n L_n \left( p \right)$$

Pang et al. [16] defined the distance between two PLTSs as follows:

Definition 3

[16] Let \(L_1 \left( p \right) =\left\{ L_1^{\left( k \right) } \left( {p_1^{\left( k \right) } } \right) |k=1,2,...,\# L_1 \left( p \right) \right\} \) and \(L_2 \left( p \right) =\left\{ L_2^{\left( k \right) } \left( {p_2^{\left( k \right) } } \right) |k=1,2,...,\# L_2 \left( p \right) \right\} \) be two PLTSs with \(\# L_1 \left( p \right) =\# L_2 \left( p \right) \); then, the distance between these two PLTSs can be computed as

$$d\left( L_1 \left( p \right) ,L_2 \left( p \right) \right) =\sqrt{\sum _{k=1}^{\# L_1 \left( p \right) } \left( p_1^{\left( k \right) } r_1^{\left( k \right) } -p_2^{\left( k \right) } r_2^{\left( k \right) } \right) ^{2} \Big/ \# L_1 \left( p \right) } \quad (3)$$

where \(r_1^{\left( k \right) }\) and \(r_2^{\left( k \right) }\) are the subscripts of the linguistic terms \(L_1^{\left( k \right) }\) and \(L_2^{\left( k \right) }\).

Suppose that \(L_1 \left( p \right) =\left\{ {s_0 \left( {0.2} \right) ,s_1 \left( {0.6} \right) ,s_2 \left( {0.2} \right) } \right\} \) and \(L_2 \left( p \right) =\left\{ {s_0 \left( {0.6} \right) ,s_2 \left( {0.3} \right) ,s_4 \left( {0.1} \right) } \right\} \) are two PLTSs based on the LTS \(S_2 =\left\{ s_{-4}, s_{-3}, s_{-2}, s_{-1}, s_0, s_1, s_2, s_3, s_4 \right\} \). According to Eq. (3), the distance between them is computed as \(d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) =0\). Obviously, \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \) are not equal and the distance between them cannot be 0. Hence, the above distance measure is not reasonable.
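This counterexample can be checked numerically. The sketch below assumes that the distance of Pang et al. [16] has the form \(\sqrt{\sum _k (p_1^{(k)} r_1^{(k)} -p_2^{(k)} r_2^{(k)})^{2}/\# L_1 (p)}\); the list-of-pairs representation and the name `pang_distance` are illustrative.

```python
import math


def pang_distance(l1, l2):
    """Distance between two PLTSs of equal length, each given as a list of
    (subscript, probability) pairs in ascending order of subscript."""
    if len(l1) != len(l2):
        raise ValueError("pad the PLTSs to the same length first")
    squared = sum((p1 * r1 - p2 * r2) ** 2 for (r1, p1), (r2, p2) in zip(l1, l2))
    return math.sqrt(squared / len(l1))


L1 = [(0, 0.2), (1, 0.6), (2, 0.2)]
L2 = [(0, 0.6), (2, 0.3), (4, 0.1)]
d = pang_distance(L1, L2)
```

Each pair of products \(p^{(k)} r^{(k)}\) coincides (0 and 0, 0.6 and 0.6, 0.4 and 0.4), so the computed distance vanishes even though the two PLTSs differ.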

3 New distance measure and comparison method for PLTSs

Based on the transformation function for PLTSs [27, 28], we develop a function, which can map the PLTSs into a high-dimensional space and obtain the PLTS vectors with the same length as follows.

Definition 4

Given a PLTS \(L\left( p \right) =\left\{ \left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| k=1,2,...,\# L\left( p \right) \right\} \) with \(L^{\left( k \right) }\in S_2 =\left\{ \left. {s_\alpha } \right| \alpha =-\tau , ...,0,...,\tau \right\} \), all the linguistic terms in \(S_2\) except the ones in \(L\left( p \right) \) are added to \(L\left( p \right) \) and the added linguistic terms are assigned with the probability of 0. All the elements in \(L\left( p \right) \) are ordered according to the subscripts of linguistic terms from \(-\tau \) to \(\tau \). Then, \(L\left( p \right) \) can be mapped into a vector \(L\left( p \right) ^{T}=\left( {\delta _1, \delta _2, ...,\delta _\theta , ...,\delta _{2\tau +1} } \right) ^{T}\) using the following function:

$$\delta _\theta =\frac{\gamma ^{\left( \theta \right) }+\tau }{2\tau }\times p^{\left( \theta \right) },\quad \theta =1,2,\ldots ,2\tau +1$$

where \(\gamma ^{(\theta )}\) means the subscript of the linguistic term \(L^{(\theta )}\) in \(L\left( p \right) \).

Given a subscript-symmetric LTS \(S_2 =\left\{ \left. {s_\alpha } \right| \alpha =-\tau , ...,0,...,\tau \right\} \) and two PLTSs \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \), the distance between \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \) can be defined as

$$d\left( L_1 \left( p \right) ,L_2 \left( p \right) \right) =\sqrt{\sum _{\theta _1 =\theta _2 =1}^{2\tau +1} \left( \delta _{\theta _1 } -\delta _{\theta _2 } \right) ^{2}} \quad (4)$$

where \(L_1 \left( p \right) ^{T}=\left( {\delta _1, \delta _2, ...,\delta _{\theta _1 }, ...,\delta _{2\tau +1} } \right) ^{T}\) and \(L_2 \left( p \right) ^{T}=\left( {\delta _1, \delta _2, ...,\delta _{\theta _2 }, ...,\delta _{2\tau +1} } \right) ^{T}\) are the PLTS vectors of PLTSs \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \), respectively.

Property 1

The proposed distance between \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \) satisfies: (1) \(0\le d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) \le 1\); (2) \(d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) =d\left( {L_2 \left( p \right) ,L_1 \left( p \right) } \right) \); (3) \(d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) =0\), if and only if \(L_1 \left( p \right) =L_2 \left( p \right) \).

Proof

(1) According to Eq. (4), we have

$$\begin{aligned} 0&\le d\left( L_1 \left( p \right) ,L_2 \left( p \right) \right) \\ &=\sqrt{\sum _{\theta _1 =\theta _2 =1}^{2\tau +1} \left( \delta _{\theta _1 } -\delta _{\theta _2 } \right) ^{2}}\\ &\le \max \left( \sqrt{\sum _{\theta _1 =1}^{2\tau +1} \left( \delta _{\theta _1 } \right) ^{2}},\sqrt{\sum _{\theta _2 =1}^{2\tau +1} \left( \delta _{\theta _2 } \right) ^{2}} \right) \\ &\le \max \left( \sqrt{\left( \sum _{\theta _1 =1}^{2\tau +1} \delta _{\theta _1 } \right) ^{2}},\sqrt{\left( \sum _{\theta _2 =1}^{2\tau +1} \delta _{\theta _2 } \right) ^{2}} \right) \\ &=\max \left( \sum _{\theta _1 =1}^{2\tau +1} \delta _{\theta _1 }, \sum _{\theta _2 =1}^{2\tau +1} \delta _{\theta _2 } \right) \\ &=\max \left( \sum _{\theta _1 =1}^{2\tau +1} \left( \frac{\gamma ^{\left( \theta _1 \right) }+\tau }{2\tau }\times p^{\left( \theta _1 \right) } \right) ,\sum _{\theta _2 =1}^{2\tau +1} \left( \frac{\gamma ^{\left( \theta _2 \right) }+\tau }{2\tau }\times p^{\left( \theta _2 \right) } \right) \right) \\ &\le \max \left( \sum _{\theta _1 =1}^{2\tau +1} p^{\left( \theta _1 \right) }, \sum _{\theta _2 =1}^{2\tau +1} p^{\left( \theta _2 \right) } \right) =1 \end{aligned}$$

Hence, \(0\le d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) \le 1\).

(2) It is straightforward.

(3) \(d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) =0\Rightarrow \sqrt{\sum _{\theta _1 =\theta _2 =1}^{2\tau +1} {\left( {\delta _{\theta _1 } -\delta _{\theta _2 } } \right) ^{2}} }=0\Rightarrow \delta _{\theta _1 } =\delta _{\theta _2 } \Rightarrow L_1 \left( p \right) =L_2 \left( p \right) \), which completes the proof.

\(\square \)

The PLTS vectors of the PLTSs \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \) from the example in Sect. 2.2 are \(L_1 \left( p \right) ^{T}=\left( {0,0,0,0,\frac{1}{10},\frac{3}{8},\frac{3}{20},0,0} \right) ^{T}\) and \(L_2 \left( p \right) ^{T}=\left( {0,0,0,0,\frac{3}{10},0,\frac{9}{40},0,\frac{1}{10}} \right) ^{T}\). Using Eq. (4), we have \(d\left( {L_1 \left( p \right) ,L_2 \left( p \right) } \right) =0.4430\). Unlike the distance in Definition 3, the distance defined in Eq. (4) distinguishes these two PLTSs and is therefore more reasonable.
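The vectors and the distance in this example can be reproduced with a short Python sketch. It assumes the mapping \(\delta _\theta =\frac{\gamma ^{(\theta )}+\tau }{2\tau }\times p^{(\theta )}\) used in the proof of Property 1 and the Euclidean form of Eq. (4); the function names and the list-of-pairs representation are illustrative.

```python
import math


def plts_vector(plts, tau):
    """Map a PLTS, given as (subscript, probability) pairs, to its PLTS vector
    of length 2 * tau + 1. Terms of S_2 absent from the PLTS get probability 0."""
    probs = dict(plts)
    return [(gamma + tau) / (2 * tau) * probs.get(gamma, 0.0)
            for gamma in range(-tau, tau + 1)]


def vector_distance(l1, l2, tau):
    """Euclidean distance between the PLTS vectors of two PLTSs."""
    v1, v2 = plts_vector(l1, tau), plts_vector(l2, tau)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(v1, v2)))


tau = 4
L1 = [(0, 0.2), (1, 0.6), (2, 0.2)]
L2 = [(0, 0.6), (2, 0.3), (4, 0.1)]
v1 = plts_vector(L1, tau)
d = vector_distance(L1, L2, tau)
```

Here `v1` has the nonzero entries 1/10, 3/8, and 3/20 at the positions of \(s_0\), \(s_1\), and \(s_2\), and `d` is approximately 0.4430, in agreement with the values reported above.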

When the score function values of two PLTSs are equal, the information entropy is utilized to measure the uncertainty degree of each PLTS.

Definition 5

Let \(L\left( p \right) =\left\{ \left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| k=1,2,...,\# L\left( p \right) \right\} \) denote a PLTS, and then, the information entropy of \(L\left( p \right) \) is defined as \(\mu \left( {L\left( p \right) } \right) =-\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }\log _2 p^{\left( k \right) }}\).

Theorem 1

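For a PLTS \(L\left( p \right) =\left\{ \left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| k=1,2,...,\# L\left( p \right) \right\} \), if \(p^{\left( 1 \right) }=p^{\left( 2 \right) }=\cdots =p^{\left( {\# L\left( p \right) } \right) }=\frac{1}{\# L\left( p \right) }\), then \(L\left( p \right) \) has the maximum entropy, i.e., \(-\log _2 \frac{1}{\# L\left( p \right) }=\log _2 \# L\left( p \right) \).

Proof

In a PLTS, the probabilities of the linguistic terms satisfy that \(\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }} =1\). Introduce a Lagrange multiplier \(\lambda \) and construct a Lagrange function as

$$F\left( p^{\left( 1 \right) },\ldots ,p^{\left( \# L\left( p \right) \right) },\lambda \right) =-\sum _{k=1}^{\# L\left( p \right) } p^{\left( k \right) }\log _2 p^{\left( k \right) } +\lambda \left( \sum _{k=1}^{\# L\left( p \right) } p^{\left( k \right) } -1 \right)$$

Then, we take the partial derivatives with respect to \(p^{\left( k \right) }\) and \(\lambda \) and set them to zero as

$$\frac{\partial F}{\partial p^{\left( k \right) }}=-\log _2 p^{\left( k \right) } -\frac{1}{\ln 2}+\lambda =0,\quad k=1,2,\ldots ,\# L\left( p \right) ;\qquad \frac{\partial F}{\partial \lambda }=\sum _{k=1}^{\# L\left( p \right) } p^{\left( k \right) } -1=0$$

The first set of equations gives the same value for every \(p^{\left( k \right) }\), and the constraint then yields \(p^{\left( 1 \right) }=p^{\left( 2 \right) }=\cdots =p^{\left( {\# L\left( p \right) } \right) }=\frac{1}{\# L\left( p \right) }\). Hence, the maximum value of \(\mu \left( {L\left( p \right) } \right) \) is \(-\log _2 \frac{1}{\# L\left( p \right) }\). The higher the entropy of a PLTS is, the higher its uncertainty degree is.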

Based on the score function and entropy, a method is devised to compare two PLTSs. For \(L_1 \left( p \right) \) and \(L_2 \left( p \right) \), if \(E\left( {L_1 \left( p \right) } \right) >E\left( {L_2 \left( p \right) } \right) \), then \(L_1 \left( p \right) \succ L_2 \left( p \right) \). If \(E\left( {L_1 \left( p \right) } \right) =E\left( {L_2 \left( p \right) } \right) \), then if \(\mu \left( {L_1 \left( p \right) } \right) >\mu \left( {L_2 \left( p \right) } \right) \), \(L_1 \left( p \right) \prec L_2 \left( p \right) \); if \(\mu \left( {L_1 \left( p \right) } \right) =\mu \left( {L_2 \left( p \right) } \right) \), then \(L_1 \left( p \right) \sim L_2 \left( p \right) \).
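The comparison rule can be sketched in Python as follows. The representation of a PLTS as (subscript, probability) pairs, the function names, the numerical tolerance, and the two example PLTSs are illustrative assumptions; the case \(E\left( {L_1 \left( p \right) } \right) <E\left( {L_2 \left( p \right) } \right) \) is handled symmetrically.

```python
import math


def score_subscript(plts):
    """Subscript of the score function E(L(p))."""
    total = sum(p for _, p in plts)
    return sum(gamma * p for gamma, p in plts) / total


def entropy(plts):
    """Information entropy of a PLTS; terms with zero probability contribute 0."""
    return -sum(p * math.log2(p) for _, p in plts if p > 0)


def compare(l1, l2, tol=1e-12):
    """Return 1 if l1 is preferred, -1 if l2 is preferred, 0 if indifferent."""
    e1, e2 = score_subscript(l1), score_subscript(l2)
    if abs(e1 - e2) > tol:
        return 1 if e1 > e2 else -1
    u1, u2 = entropy(l1), entropy(l2)
    if abs(u1 - u2) <= tol:
        return 0
    # Equal scores: the PLTS with the lower entropy (less uncertainty) wins.
    return -1 if u1 > u2 else 1


certain = [(0, 1.0)]                # hypothetical PLTS {s_0(1)}
hesitant = [(-1, 0.5), (1, 0.5)]    # hypothetical PLTS {s_-1(0.5), s_1(0.5)}
result = compare(certain, hesitant)
uniform_entropy = entropy([(-1, 0.25), (0, 0.25), (1, 0.25), (2, 0.25)])
```

Both example PLTSs have a score subscript of 0, but the second one has an entropy of 1 bit while the first has none, so the first is preferred. The uniform four-term PLTS attains the maximum entropy \(\log _2 4=2\).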

4 A novel probabilistic linguistic ELECTRE II method for MCGDM problems

In this section, we combine the score function and entropy of PLTSs to develop a novel probabilistic linguistic ELECTRE II method. We also propose two methods to determine the weight vectors for criteria and DMs.

4.1 Problem description

Consider a multi-criteria group decision-making (MCGDM) problem that consists of m alternatives, denoted as \(X=\left\{ {x_1, x_2, \ldots x_m } \right\} \), and n criteria expressed as \(A=\left\{ {a_1, a_2, \ldots , a_n } \right\} \). The weight vector of criteria is \(w=\left( {w_1, w_2, \ldots , w_n } \right) \), which satisfies that \(w_j \ge 0\) and \(\sum _{j=1}^n {w_j } =1\). A group of decision makers, denoted as \(DM=\left\{ {dm_1, dm_2, \ldots , dm_u } \right\} \), are invited to take part in this MCGDM process, and the weight vector of them is \(e=\left( {e_1, e_2, \ldots , e_u } \right) \), satisfying that \(e_\varphi \ge 0\) and \(\sum _{\varphi =1}^u {e_\varphi } =1\). Because of the complexity and uncertainty of real MCGDM problems, the weight information of criteria and DMs is assumed to be completely unknown. The DMs choose the linguistic terms from the LTS \(S_2 =\left\{ {\left. {s_\alpha } \right| \alpha =-\tau , \ldots , 0,\ldots , \tau } \right\} \) to express their evaluation information over each alternative \(x_i\) with respect to each criterion \(a_j\). The linguistic decision matrix given by the DM \(dm_\varphi \) is

$$R_\varphi =\left[ r_{ij}^\varphi \right] _{m\times n} =\begin{bmatrix} r_{11}^\varphi & \cdots & r_{1n}^\varphi \\ \vdots & \ddots & \vdots \\ r_{m1}^\varphi & \cdots & r_{mn}^\varphi \end{bmatrix}$$

Wu et al. [29] put forward a method to form the collective evaluation information for a group of DMs. If the linguistic decision matrix provided by the DM \(dm_\varphi \) is \(R_\varphi =\left[ {r_{ij}^\varphi } \right] _{m\times n}\), then the group decision matrix, namely the probabilistic linguistic decision matrix (PLDM) of this group of DMs, can be obtained as

where \(\nu _{ij}^\varphi \) is the probability of \(s_{ij}^{\left( l \right) }\) in \(r_{ij}^\varphi \) and \(\nu _{ij}^\varphi =\left\{ {{\begin{array}{l} {1,\;if\;s_{ij}^{\left( l \right) } \in r_{ij}^\varphi } \\ {0,\;if\;s_{ij}^{\left( l \right) } \notin r_{ij}^\varphi } \\ \end{array} }} \right. \). If the weights are not given, we can assume that \(e_\varphi =1/u\), \(\varphi =1,2,\ldots , u\).

Example 1

Suppose that three DMs are called to evaluate a third-party reverse logistics provider and their opinions are expressed as HFLTSs \(H_1 =\left\{ {s_1 } \right\} \), \(H_2 =\left\{ {s_2 } \right\} \), and \(H_3 =\left\{ {s_1 } \right\} \). Let \(e=\left( {1/3,1/3,1/3} \right) \) denote the weight vector of DMs, and then, the collective evaluation information of the group of DMs can be aggregated as \(L_G \left( p \right) =\left\{ {s_1 \left( {2/3} \right) ,s_2 \left( {1/3} \right) } \right\} \).
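Example 1 can be reproduced with a short sketch. It covers only the simple case in which each DM gives a single linguistic term, and it assumes that the probability of a term equals the sum of the weights of the DMs who chose it; this is consistent with the example but is not a reproduction of the general method in [29]. The function name is hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def aggregate_single_terms(subscripts, weights):
    """Fuse single-term opinions into a PLTS of (subscript, probability) pairs.

    subscripts[phi] is the subscript of the term chosen by DM phi and
    weights[phi] is that DM's weight.
    """
    probabilities = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for subscript, weight in zip(subscripts, weights):
        probabilities[subscript] += weight
    return sorted(probabilities.items())


opinions = [1, 2, 1]             # H_1 = {s_1}, H_2 = {s_2}, H_3 = {s_1}
weights = [Fraction(1, 3)] * 3   # e = (1/3, 1/3, 1/3)
collective = aggregate_single_terms(opinions, weights)
```

The result is the pair list for \(\left\{ {s_1 \left( {2/3} \right) ,s_2 \left( {1/3} \right) } \right\} \), matching the collective evaluation in Example 1.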

4.2 A weight-determining method for criteria based on multiple correlation coefficient

The multiple correlation coefficient (MCC) [30, 31] is an important indicator of the degree of correlation between one dependent variable and a set of independent variables. The larger the MCC is, the stronger the correlation between the dependent variable and the group of independent variables. In this section, we use the MCC to measure the correlation degree between each criterion and the other criteria, and the weight vector of the criteria is computed on this basis.
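As a rough illustration of this idea, the following sketch regresses one column of a numeric matrix on the remaining columns by ordinary least squares with an intercept and returns the ratio of the regression sum of squares to the total sum of squares. That ratio is assumed here as the form of the coefficient; the toy matrix, the function names, and the pure-Python linear solver are all illustrative.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def correlation_with_others(matrix, j):
    """Regress column j on the other columns plus an intercept and return the
    ratio of explained to total variation of column j."""
    m = len(matrix)
    y = [row[j] for row in matrix]
    design = [[1.0] + [v for c, v in enumerate(row) if c != j] for row in matrix]
    k = len(design[0])
    # Normal equations (X^T X) beta = X^T y.
    xtx = [[sum(design[i][a] * design[i][b] for i in range(m)) for b in range(k)]
           for a in range(k)]
    xty = [sum(design[i][a] * y[i] for i in range(m)) for a in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(coef * x for coef, x in zip(beta, row)) for row in design]
    mean = sum(y) / m
    return sum((f - mean) ** 2 for f in fitted) / sum((v - mean) ** 2 for v in y)


# Toy data: five alternatives, three criteria; the third column is the sum of
# the first two, so it is fully explained by the other criteria.
toy = [[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [3.0, 4.0, 7.0],
       [4.0, 3.0, 7.0], [5.0, 6.0, 11.0]]
rho_third = correlation_with_others(toy, 2)
```

In this toy matrix the third criterion is an exact linear combination of the other two, so its coefficient is numerically 1. The sketch needs more alternatives than regression parameters and a non-constant target column; otherwise the normal equations are singular or the denominator vanishes.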

To facilitate the calculation, the PLDM is divided into \(\# L_{ij} \left( p \right) \) parts. The \(k\hbox {th}\) part of the PLDM is denoted as \(R^{k}=\left[ {\xi _{ij}^{\left( k \right) } } \right] _{m\times n}\), which is named the \(k\hbox {th}\) expected value decision matrix, where \(\xi _{ij}^{\left( k \right) } =\gamma _{ij}^{\left( k \right) } \times p_{ij}^{\left( k \right) }\) and \(\gamma _{ij}^{\left( k \right) }\) is the subscript of the linguistic term \(L_{ij}^{\left( k \right) }\). Assume that the criterion \(a_j\) is linearly related to the other ones, and then, the multiple linear regression can be obtained as

$$\xi _j^{\left( k \right) } =\beta _0 +\beta _1 \xi _1^{\left( k \right) } +\cdots +\beta _{j-1} \xi _{j-1}^{\left( k \right) } +\beta _{j+1} \xi _{j+1}^{\left( k \right) } +\cdots +\beta _n \xi _n^{\left( k \right) }$$

To obtain the parameter vector \(\beta =\left( \beta _0, \beta _1, \ldots , \beta _{j-1}, \beta _{j+1}, \ldots , \beta _n \right) ^{T}\), the least square method is utilized to calculate its estimated value \(\hat{{\beta }}=\left( {\left( {\Delta ^{\left( k \right) }} \right) ^{T}\Delta ^{\left( k \right) }} \right) ^{-1}\left( {\Delta ^{\left( k \right) }} \right) ^{T}\xi _j^{\left( k \right) } \), where \(\Delta ^{\left( k \right) }=\left( 1,\xi _1^{\left( k \right) }, \ldots , \xi _{j-1}^{\left( k \right) }, \xi _{j+1}^{\left( k \right) }, \ldots , \xi _n^{\left( k \right) } \right) \) and \(\xi _{j-1}^{\left( k \right) } =\left( {\xi _{1\left( {j-1} \right) }^{\left( k \right) }, \xi _{2\left( {j-1} \right) }^{\left( k \right) }, \ldots , \xi _{m\left( {j-1} \right) }^{\left( k \right) } } \right) \). Let \(\beta =\hat{{\beta }}\), and then, an empirical regression equation with known parameters is obtained as \(\hat{{\xi }}_j^{\left( k \right) } =\beta _0 +\beta _1 \xi _1^{\left( k \right) } +\cdots +\beta _{j-1} \xi _{j-1}^{\left( k \right) } +\beta _{j+1} \xi _{j+1}^{\left( k \right) } +\cdots +\beta _n \xi _n^{\left( k \right) }\). Thus, the multiple correlation coefficient of criterion \(a_j\) in the kth expected value decision matrix is defined as \(\rho _j^{\left( k \right) } =\frac{\sum _{i=1}^m {\left( {\hat{{\xi }}_{ij}^{\left( k \right) } -\bar{{\xi }}_{ij}^{\left( k \right) } } \right) ^{2}} }{\sum _{i=1}^m {\left( {\xi _{ij}^{\left( k \right) } -\bar{{\xi }}_{ij}^{\left( k \right) } } \right) ^{2}} }\), where \(\bar{{\xi }}_{ij}^{\left( k \right) }\) denotes the average value of \(\xi _{ij}^{\left( k \right) }\). If the value of multiple correlation coefficient \(\rho _j^{\left( k \right) }\) approaches 0, then it means that the criterion \(a_j\) can be easy to be replaced by other criteria in the kth expected value decision matrix \(R^{k}\). Hence, the weight of criterion \(a_j\) is defined as

4.3 A weight-determining method based on entropy theory for DMs

In this subsection, a weight-determining method based on entropy theory is put forward to derive the weight vector of DMs.

We utilize the LWA operator [32] to aggregate the criterion values of each alternative \(x_i\) provided by the \(\varphi \hbox {th}\) DM as \(V\left( {x_i^\varphi } \right) \) and the PLWA operator to fuse the collective criterion values of each alternative \(x_i\) as \(V\left( {x_i } \right) \). Let \(V_\varphi =\left( {V\left( {x_1^\varphi } \right) ,V\left( {x_2^\varphi } \right) ,\ldots , V\left( {x_m^\varphi } \right) } \right) ^{T}\) and \(GV=\left( {E\left( {V\left( {x_1 } \right) } \right) ,E\left( {V\left( {x_2 } \right) } \right) ,\ldots , E\left( {V\left( {x_m } \right) } \right) } \right) ^{T}\), and then, the deviation degree of evaluation information between the DM \(dm_\varphi \) and the group of DMs is defined as \(D_\varphi =\sqrt{\sum _{i=1}^m {\left( {V\left( {x_i^\varphi } \right) -E\left( {V\left( {x_i } \right) } \right) } \right) ^{2}} }\), where \(E\left( {V\left( {x_i } \right) } \right) \) denotes the average value of elements in \(V\left( {x_i } \right) \). Hence, when the deviation degree is used, the weight of the DM \(dm_\varphi \) is given as \(e_d^\varphi ={1\bigg /{D_\varphi }}\Bigg /{\sum _\varphi ^u {D_\varphi }}\).

If h DMs provide their evaluation information as \(H_\varphi =\left\{ {\left. {s_\varphi ^{\left( k \right) } } \right| s_\varphi ^{\left( k \right) } \in S_2, k=1,2,\ldots , \# H_\varphi } \right\} \left( \varphi =1,2,\ldots , h \right) \) and \(u-h\) DMs do not give the evaluation information, then the entropy value of the DM \(dm_\varphi \) can be defined as \(Entropy\left( {dm_\varphi } \right) =-\sum _{i=1}^m {f_i^\varphi } \log _2 f_i^\varphi \), where \(f_i^\varphi ={B\left( {x_i^\varphi } \right) }\bigg /{\sum _{i=1}^m {B\left( {x_i^\varphi } \right) }}\) with \(B\left( {x_i^\varphi } \right) =\frac{I\left( {V\left( {x_i^\varphi } \right) } \right) +\tau }{2\tau }\) and the term \(I\left( {V\left( {x_i^\varphi } \right) } \right) \) is the subscript of the linguistic term \(V\left( {x_i^\varphi } \right) \). When \(f_i^\varphi =0\), the term \(f_i^\varphi \log _2 f_i^\varphi \) is taken to be 0. When \(f_1^\varphi =f_2^\varphi =\cdots =f_m^\varphi \), the entropy value of the DM \(dm_\varphi \) reaches its maximum, which is \(\max \left( {Entropy\left( {dm_\varphi } \right) } \right) =\log _2 m\). Hence, when the entropy value is used, the weight of the DM \(dm_\varphi \) is \(e_e^\varphi =\frac{1-{E}'\left( {dm_\varphi } \right) }{u-\sum _{\varphi =1}^u {{E}'\left( {dm_\varphi } \right) }}\), where \({E}'\left( {dm_\varphi } \right) =\frac{Entropy\left( {dm_\varphi } \right) }{\log _2 m}\).
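The entropy-based weights can be sketched in Python as follows. The input is assumed to be, for each DM, the list of subscripts \(I\left( {V\left( {x_i^\varphi } \right) } \right) \) of the aggregated values of the m alternatives; the function name and the numbers in the example are hypothetical.

```python
import math


def entropy_weights(subscripts, tau):
    """Entropy-based weights e_e of the DMs.

    subscripts[phi][i] is the subscript of V(x_i^phi), a value in [-tau, tau].
    """
    m = len(subscripts[0])
    normalized_entropies = []
    for row in subscripts:
        b = [(s + tau) / (2 * tau) for s in row]   # B(x_i^phi) in [0, 1]
        total = sum(b)
        f = [value / total for value in b]         # f_i^phi sums to 1
        # Terms with f = 0 contribute nothing (0 * log 0 is taken as 0).
        ent = -sum(x * math.log2(x) for x in f if x > 0)
        normalized_entropies.append(ent / math.log2(m))
    u = len(subscripts)
    denominator = u - sum(normalized_entropies)
    return [(1 - e) / denominator for e in normalized_entropies]


# Two hypothetical DMs rating three alternatives on S_2 with tau = 4.
dm_values = [[4, 0, -4], [2, 0, -2]]
e_e = entropy_weights(dm_values, tau=4)
```

The first DM separates the alternatives more sharply, so the corresponding entropy is lower and the weight is higher. The sketch does not guard against the degenerate cases in which a DM assigns \(s_{-\tau }\) to every alternative or all DMs have maximum entropy, since both lead to a zero denominator.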

Based on the above two measurements for determining the weight vector of DMs, a linear weighting function is used to adjust the weight of each DM as \({e}'_\varphi =ae_d^\varphi +\left( {1-a} \right) e_e^\varphi \), where \(\varphi =1,2,\ldots u\).

According to Eq. (5), when the weight vector e of DMs is changed to \({e}'\), then the PLDM of the group of DMs is changed to \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n}\). Hence, the deviation degree between these two PLDMs can be calculated as \(D_g \left( {L,{L}'} \right) =\sqrt{\sum _{i=1}^m {\left( {E\left( {V\left( {x_i } \right) } \right) -E\left( {{V}'\left( {x_i } \right) } \right) } \right) ^{2}} }\), where \(V\left( {x_i } \right) =PLWA\left( {L_{i1} \left( p \right) ,L_{i2} \left( p \right) ,\ldots L_{in} \left( p \right) } \right) \) and \({V}'\left( {x_i } \right) =PLWA\left( {{L}'_{i1} \left( p \right) ,{L}'_{i2} \left( p \right) ,\ldots {L}'_{in} \left( p \right) } \right) \).

An algorithm is developed to adjust the weight vector of DMs as follows.

Algorithm 1

Input: u linguistic decision matrices \(R_\varphi =\left[ {r_{ij}^\varphi } \right] _{m\times n} \left( {\varphi =1,2,\ldots , u} \right) \) given by DMs, the initial weight vector of DMs denoted as \(e=\left( {e_1, e_2, \ldots , e_u } \right) \), the convergence parameter \(a\in [0,1]\), the threshold l of the deviation degree between two PLDMs.

Output: The PLDM \(L=\left[ {L_{ij} \left( p \right) } \right] _{m\times n}\), the adjusted weight vector of DMs denoted as \(e=\left( {e_1, e_2, \ldots , e_u } \right) \).

Step 1. Use the LWA operator to aggregate the criterion values of each alternative in each linguistic decision matrix \(R_\varphi \) as \(V\left( {x_i^{\varphi }} \right) =LWA\left( {r_{i1}^\varphi , r_{i2}^\varphi , \ldots , r_{in}^\varphi } \right) =r_{i1}^\varphi w_1 \oplus r_{i2}^\varphi w_2 \oplus \cdots \oplus r_{in}^\varphi w_n\), where \(w=\left( {w_1, w_2, \ldots , w_n } \right) \) denotes the weight vector of criteria.

Step 2. According to Eq. (5), the initial PLDM \(L=\left[ {L_{ij} \left( p \right) } \right] _{m\times n}\) can be obtained.

Step 3. Compute the hybrid weight vector of DMs.

Step 4. Utilizing Eq. (5) and the hybrid weight vector \({e}'=\left( {{e}'_1, {e}'_2, \ldots , {e}'_u } \right) \), a new PLDM \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n}\) can be obtained.

Step 5. Compute the deviation degree between these two PLDMs \(L=\left[ {L_{ij} \left( p \right) } \right] _{m\times n}\) and \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n}\) as \(D_g \left( {L,{L}'} \right) =\sqrt{\sum _{i=1}^m {\left( {E\left( {V\left( {x_i } \right) } \right) -E\left( {{V}'\left( {x_i } \right) } \right) } \right) ^{2}}}\).

Step 6. If \(D_g \left( {L,{L}'} \right) \le l\), then end the algorithm; otherwise, let \(L={L}'\) and \(e={e}'\), then turn to Step 3.
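The control flow of Algorithm 1 can be sketched in Python as follows. This is only an outline under stated assumptions: `hybrid_step` and `alternative_scores` are hypothetical callables that stand in for Step 3 and for the combination of Eq. (5) with the PLWA operator, neither of which is reproduced here, and `max_iter` is an added safeguard that does not appear in the algorithm.

```python
import math

def deviation_degree(scores_old, scores_new):
    """D_g(L, L'): Euclidean distance between the per-alternative scores
    E(V(x_i)) and E(V'(x_i))."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(scores_old, scores_new)))

def adjust_weights(e, hybrid_step, alternative_scores, l, max_iter=100):
    """Iterate Steps 3-6 until the deviation degree drops to l or below.

    hybrid_step(e) -> e'            (hypothetical stand-in for Step 3)
    alternative_scores(e) -> list   (hypothetical stand-in for Eq. (5) + PLWA)
    Following the literal wording of Step 6, e is replaced by e' only when
    the deviation still exceeds the threshold l.
    """
    scores = alternative_scores(e)
    for _ in range(max_iter):
        e_new = hybrid_step(e)                      # Step 3
        scores_new = alternative_scores(e_new)      # Step 4
        if deviation_degree(scores, scores_new) <= l:   # Steps 5 and 6
            break
        e, scores = e_new, scores_new
    return e
```

Passing the two steps in as callables keeps the loop independent of how the PLDM is built, so the stopping rule can be checked in isolation.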

4.4 Score-entropy-based ELECTRE II method

Based on the novel comparison method presented in Sect. 3, a novel score-entropy-based ELECTRE II method is proposed to handle MCGDM problems with probabilistic linguistic information.

For a set of alternatives A, four kinds of binary relations among alternatives can be obtained: 1) iPj means that “i is strictly preferred to j ”; 2) iIj means that “i is indifferent to j ”; 3) iWj means that “i is weakly preferred to j ”; 4) iCj means that “i is not comparable to j”. Each outranking approach is built on the notions of concordance and discordance, which describe the reasons for and against an outranking relation [33,34,35]: 1) to verify an outranking relation iSj, a sufficient majority of criteria should support this assertion (concordance); 2) when the concordance condition is satisfied, none of the criteria in the minority should oppose this assertion too strongly (non-discordance). The assertion iSj is validated only when both conditions hold at the same time. The probabilistic linguistic concordance set (PLCS) of the alternatives \(x_i\) and \(x_j\), expressed as \(J_{c_{ij} } =\left\{ {\left. k \right| L_{ik} \left( p \right) \succ L_{jk} \left( p \right) } \right\} \), consists of the criteria where the satisfactory degree of alternative \(x_i\) is superior to that of alternative \(x_j\). It can be divided into: the strong PLCS \(J_{sc_{ij}}\), medium PLCS \(J_{mc_{ij}}\), and weak PLCS \(J_{wc_{ij}}\):

where J is the set of criteria that satisfy the condition or outranking relation.

These three PLCSs have different degrees that capture the intensity that alternative \(x_i\) is superior to alternative \(x_j\). The difference between \(J_{sc_{ij}}\) and \(J_{mc_{ij}}\) results from the information entropy. A lower value of information entropy implies that the evaluation information of the DMs has a higher consistency degree. Hence, \(J_{sc_{ij}}\) is more concordant than \(J_{mc_{ij}}\). Compared to the information entropy, the score function has a more important role when determining the outrank relations among alternatives. Thus, \(J_{mc_{ij}}\) containing the criteria with higher score function values is more concordant than \(J_{wc_{ij}}\).
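One way to make this partition concrete is the Python sketch below. The exact membership conditions are an assumption inferred from the discussion above, not a restatement of Eqs. (7)–(9): a criterion is treated as strongly concordant when \(x_i\) has a higher score and a lower entropy, medium when it has a higher score but not a lower entropy, and weak when the scores are equal and its entropy is lower. The function name `concordance_sets` is hypothetical.

```python
def concordance_sets(score_i, entropy_i, score_j, entropy_j):
    """Split criteria indices into (strong, medium, weak) concordance sets.

    score_*[k] and entropy_*[k] are the score and entropy values of the two
    alternatives under criterion k. The three conditions below are an
    assumed reading of the strong / medium / weak PLCS.
    Scores are compared with ==, so near-equal floats may need rounding first.
    """
    strong, medium, weak = [], [], []
    for k, (si, ei, sj, ej) in enumerate(
            zip(score_i, entropy_i, score_j, entropy_j)):
        if si > sj and ei < ej:
            strong.append(k)      # better score and more consistent
        elif si > sj:
            medium.append(k)      # better score only
        elif si == sj and ei < ej:
            weak.append(k)        # same score, more consistent
    return strong, medium, weak
```

Under the same assumption, the discordance sets follow by swapping the roles of the two alternatives.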

Similarly, the probabilistic linguistic discordance set (PLDS) \(J_{d_{ij}}\) consists of the criteria where the satisfaction degree of alternative \(x_i\) is inferior to that of alternative \(x_j\). It is also divided into: the strong PLDS \(J_{sd_{ij}}\), medium PLDS \(J_{md_{ij}}\), and weak PLDS \(J_{wd_{ij}}\):

Finally, the probabilistic linguistic indifference set \(J_{ij}^=\) of alternatives \(x_i\) and \(x_j\) is defined as:

According to the PLCS and PLDS on each pair of alternatives \(x_i\) and \(x_j\), the probabilistic linguistic concordance index (PLCI) of PLCS can be computed as

where \(c_{ij}\) denotes the PLCI. \(w_k\) is the weight of criterion \(a_k\). \(\omega _{sc}\), \(\omega _{mc}\), \(\omega _{wc}\), and \(\omega _{ij}^=\) are the attitude weights of strong PLCS, medium PLCS, weak PLCS, and probabilistic linguistic indifferent set. The PLCI \(c_{ij}\) denotes the relative importance of alternative \(x_i\) over alternative \(x_j\), and it satisfies \(0\le c_{ij} \le 1\). Then, the probabilistic linguistic concordance matrix C can be constructed using all the PLCIs of pairs of alternatives as:

The probabilistic linguistic discordance index (PLDI) indicates the relative inferiority of alternative \(x_i\) to alternative \(x_j\), which can be defined as

where \(d_{ij}\) denotes the PLDI of alternative \(x_i\) over alternative \(x_j\). A larger \(d_{ij}\) argues more strongly against the assertion that “the alternative \(x_i\) is at least as good as the alternative \(x_j\)”. \(\omega _{sd}\), \(\omega _{md}\), and \(\omega _{wd}\) denote the attitude weights of the strong PLDS, medium PLDS, and weak PLDS. \(d\left( {L_{ik} \left( p \right) ,L_{jk} \left( p \right) } \right) \) denotes the distance between \(x_i\) and \(x_j\) under the \(k\)th criterion, which can be measured using Eq. (4).
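For orientation, the two indices can be sketched in Python as below. The functional forms are assumptions modeled on the usual ELECTRE II construction (an attitude-weighted sum of criterion weights for concordance, and a ratio of maximum weighted distances for discordance); they are not a transcription of Eqs. (14) and (15). The names `concordance_index` and `discordance_index` and the dictionary keys are hypothetical, and the distances are taken as given inputs because Eq. (4) is not reproduced here.

```python
def concordance_index(w, sets, attitude):
    """Assumed form of c_ij: attitude-weighted sum of criterion weights.

    w        : list of criterion weights w_k
    sets     : dict with keys 'sc', 'mc', 'wc', 'eq' -> lists of criterion indices
    attitude : dict with the same keys -> attitude weights
    """
    total = sum(attitude[name] * sum(w[k] for k in sets[name])
                for name in ("sc", "mc", "wc", "eq"))
    return total / sum(w)   # division is a no-op when the weights sum to 1

def discordance_index(w, dist, sets, attitude):
    """Assumed form of d_ij: largest attitude-weighted distance over the
    discordance sets, relative to the largest weighted distance overall.

    dist[k] is d(L_ik(p), L_jk(p)) under criterion k.
    sets / attitude use the keys 'sd', 'md', 'wd'.
    """
    denom = max(w[k] * dist[k] for k in range(len(w)))
    if denom == 0:
        return 0.0          # the two alternatives coincide on every criterion
    weighted = [attitude[name] * w[k] * dist[k]
                for name in ("sd", "md", "wd") for k in sets[name]]
    return max(weighted, default=0.0) / denom
```

With attitude weights no larger than 1, both sketched indices stay within \([0,1]\), which agrees with the stated range of \(c_{ij}\).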

Similarly, all the PLDIs of alternatives can form the probabilistic linguistic discordance matrix D as

When the concordance and discordance sets are defined, it is easy to devise the ranking procedure of ELECTRE II. The ranking procedure constructs the concordance Boolean matrix and discordance Boolean matrix using the thresholds that are computed as the average of the elements in the probabilistic linguistic concordance matrix and probabilistic linguistic discordance matrix. Finally, a global matrix can be defined to confirm the outranking relation about each pair of alternatives.

Definition 6

Let \(C=\left[ {c_{ij} } \right] _{m\times m}\) be the probabilistic linguistic concordance matrix, then the threshold \(\bar{{c}}=\sum _{i=1}^m {\sum _{j=1}^m {{c_{ij} }\bigg /{m\left( {m-1} \right) }} }\), and the probabilistic linguistic concordance Boolean matrix E is obtained as

where the element \(e_{ij}\) has a binary value of 0 or 1 and when (1) \(c_{ij} \ge \bar{{c}}\Rightarrow e_{ij} =1\); (2) \(c_{ij} <\bar{{c}}\Rightarrow e_{ij} =0\). If \(e_{ij} =1\), then we assume that alternative \(x_i\) dominates alternative \(x_j\) in concordant perspective.

Definition 7

Let \(D=\left[ {d_{ij} } \right] _{m\times m} \) be the probabilistic linguistic discordance matrix, then the threshold \(\bar{{d}}=\sum _{i=1}^m {\sum _{j=1}^m {{d_{ij} }\bigg /{m\left( {m-1} \right) }} }\), and the probabilistic linguistic discordance Boolean matrix Q is obtained as

where the element \(q_{ij}\) has the binary value of 0 or 1 and when (1) \(d_{ij} <\bar{{d}}\Rightarrow q_{ij} =1\); (2) \(d_{ij} \ge \bar{{d}}\Rightarrow q_{ij} =0\).

Let E and Q be the probabilistic linguistic concordance Boolean matrix and probabilistic linguistic discordance Boolean matrix, respectively. Then, the global matrix M is defined by multiplying the matrices E and Q component-wise, so that its element is \(m_{ij} =e_{ij} \times q_{ij}\).
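Definitions 6 and 7 and the component-wise product can be illustrated with a short Python sketch. It assumes that the diagonal entries of C and D carry no information and are skipped, which is consistent with dividing by \(m\left( {m-1} \right) \); the function names are hypothetical.

```python
def average_threshold(matrix):
    """Average of the off-diagonal entries, i.e. sum / (m * (m - 1))."""
    m = len(matrix)
    total = sum(matrix[i][j] for i in range(m) for j in range(m) if i != j)
    return total / (m * (m - 1))

def boolean_matrices(C, D):
    """Concordance Boolean matrix E and discordance Boolean matrix Q."""
    m = len(C)
    c_bar, d_bar = average_threshold(C), average_threshold(D)
    # e_ij = 1 when c_ij >= c_bar; q_ij = 1 when d_ij < d_bar (diagonal left at 0)
    E = [[int(i != j and C[i][j] >= c_bar) for j in range(m)] for i in range(m)]
    Q = [[int(i != j and D[i][j] < d_bar) for j in range(m)] for i in range(m)]
    return E, Q

def global_matrix(E, Q):
    """Component-wise product m_ij = e_ij * q_ij."""
    return [[e * q for e, q in zip(row_e, row_q)] for row_e, row_q in zip(E, Q)]
```

An entry \(m_{ij}=1\) therefore requires both above-average concordance and below-average discordance for the ordered pair \(\left( {x_i, x_j } \right) \).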

According to graph theory, the relationships included in the global matrix can be described using a digraph \(G=\left( {V,A} \right) \) where V is a set of vertices \(\left\{ {v_1, v_2, \ldots , v_m } \right\} \) and A is the set of arcs associated with the vertices. Each vertex stands for an alternative and each directed arc stands for an outranking relation. In such a graph, a directed arc from vertex \(v_i\) to vertex \(v_j\) means that alternative \(x_i\) outperforms alternative \(x_j\). If there is no arc between \(x_i\) and \(x_j\), then the two alternatives are incomparable.

Liao et al. [36] utilized the averaging values of the probabilistic linguistic concordance matrix C and discordance matrix D as the thresholds for constructing the probabilistic linguistic concordance Boolean matrix E and discordance Boolean matrix Q. Then, the values in the symmetric positions of the global matrix M are not always complementary, which may fail to compare two alternatives. To solve this issue, we improve the method that was proposed by Liao et al. [36].

Let M be the global matrix obtained by multiplying the matrices E and Q component-wise, whose element is \(m_{ij} =e_{ij} \times q_{ij}\). If \(m_{ij} =1\) and \(m_{ji} =0\), then alternative \(x_i\) is strictly superior to alternative \(x_j\). If \(m_{ij} =0\) and \(m_{ji} =0\), or \(m_{ij} =1\) and \(m_{ji} =1\), then the elements in the matrices E and Q satisfy \(\left\{ {\begin{array}{l} m_{ij} =e_{ij} \times q_{ij} =0 \\ m_{ji} =e_{ji} \times q_{ji} =0 \\ \end{array}} \right. \) or \(\left\{ {\begin{array}{l} m_{ij} =e_{ij} \times q_{ij} =1 \\ m_{ji} =e_{ji} \times q_{ji} =1 \\ \end{array}} \right. \), respectively. When a graph is drawn to depict the relationships among the alternatives using the global matrix M, there is then no arc between \(x_i\) and \(x_j\) since they are incomparable. In this case, we recompute the two entries with \(e_{ij}^*\) and \(q_{ij}^*\), in which \(e_{ij}^*=1\) when \(c_{ij} >c_{ji}\) and \(q_{ij}^*=1\) when \(d_{ij} <d_{ji}\). When \(\left\{ {\begin{array}{l} m_{ij} =e_{ij}^*\times q_{ij}^*=1 \\ m_{ji} =e_{ji}^*\times q_{ji}^*=0 \\ \end{array}} \right. \), we set \(m_{ij} =1\) and \(m_{ji} =0\), which means that alternative \(x_i\) weakly excels alternative \(x_j\).
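The update rule for non-complementary positions can be sketched in Python as follows. This is a minimal illustration of the pairwise rule stated above; the function name `resolve_incomparable` is hypothetical, and the sketch takes \(e_{ij}^*\) and \(q_{ij}^*\) to be 0 whenever the stated condition for 1 does not hold.

```python
def resolve_incomparable(M, C, D):
    """Recompute symmetric positions of M whose entries are not complementary.

    For every pair (i, j) with m_ij == m_ji, the entries are replaced by
    e*_ij * q*_ij, where e*_ij = 1 when c_ij > c_ji and q*_ij = 1 when
    d_ij < d_ji. A pair can remain (0, 0) if concordance and discordance
    point in opposite directions.
    """
    m = len(M)
    R = [row[:] for row in M]                      # leave the input untouched
    for i in range(m):
        for j in range(i + 1, m):
            if M[i][j] == M[j][i]:                 # (0, 0) or (1, 1)
                for a, b in ((i, j), (j, i)):
                    e_star = int(C[a][b] > C[b][a])
                    q_star = int(D[a][b] < D[b][a])
                    R[a][b] = e_star * q_star
    return R
```

Entries set to 1 by this step correspond to the weak outranking arcs, while entries that were already complementary keep their strong outranking meaning.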

4.5 The decision-making procedure

According to the above analysis, the decision-making procedure of our proposed score-entropy-based ELECTRE II method can be summarized as follows:

Step 1. Use Eq. (5) to fuse the linguistic decision matrices provided by the DMs \(dm_\varphi ( \varphi =1,2,\ldots , u )\) and obtain the group decision matrix \(L=\left[ {L_{ij} \left( p \right) } \right] _{m\times n}\) of this group of DMs.

Step 2. Use Eq. (6) to calculate the weight vector \(w=\left( {w_1, w_2, \ldots w_n } \right) ^{T}\) of criteria.

Step 3. Use Algorithm 1 with the weight vector \(w=\left( {w_1, w_2, \ldots w_n } \right) ^{T}\) of criteria to calculate the weight vector \(e=\left( {e_1, e_2, \ldots , e_u } \right) ^{T}\) of the DMs \(DM=\left\{ {dm_1, dm_2, \ldots , dm_u } \right\} \) and get a new PLDM \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n}\).

Step 4. Set the attitude weights of probabilistic linguistic concordance, discordance, and indifference sets, which are denoted as \(\omega _{sc}\), \(\omega _{mc}\), \(\omega _{wc}\), \(\omega _{sd}\), \(\omega _{md}\), \(\omega _{wd}\), and \(\omega _{ij}^=\), respectively.

Step 5. Compute the score function value and entropy value of the PLDM \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n}\) as

$$E\left( {{L}'_{ij} \left( p \right) } \right) = s_{{\sum _{k=1}^{\# L\left( p \right) } {\gamma _{ij}^{\left( k \right) } } p^{\left( k \right) }}/{\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }}}} \tag{16}$$

$$\mu \left( {{L}'_{ij} \left( p \right) } \right) = -\sum \limits _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }\log _2 p^{\left( k \right) }} \tag{17}$$

where \(\gamma _{ij}^{\left( k \right) }\) denotes the subscript of the \(k\)th linguistic term in \({L}'_{ij} \left( p \right) \) and \(p^{\left( k \right) }\) is its probability.
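Eqs. (16) and (17) translate directly into code. The following Python sketch assumes that a PLTS is stored as a list of `(subscript, probability)` pairs, which is a representation chosen here for illustration; `score` returns the subscript of the linguistic term \(E\left( {L\left( p \right) } \right) \) rather than the term itself.

```python
import math

def score(plts):
    """Subscript of the score E(L(p)) in Eq. (16): probability-weighted
    mean of the term subscripts."""
    total_p = sum(p for _, p in plts)
    return sum(g * p for g, p in plts) / total_p

def entropy(plts):
    """Entropy mu(L(p)) in Eq. (17); terms with zero probability add nothing."""
    return -sum(p * math.log2(p) for _, p in plts if p > 0)
```

For instance, a PLTS that places probability 0.5 on \(s_1\) and 0.5 on \(s_3\) has a score subscript of 2 and an entropy of 1, whereas the single term \(s_2\) with probability 1 has the same score but zero entropy.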

Step 6. Use Eqs. (7)–(9) to obtain the strong PLCS, medium PLCS, and weak PLCS.

Use Eqs. (10)–(12) to obtain the strong PLDS, medium PLDS, and weak PLDS and Eq. (13) to obtain the probabilistic linguistic indifference set.

Step 7. Calculate the PLCIs of pairs of alternatives by Eq. (14) to construct the probabilistic linguistic concordance matrix C and then also calculate the PLDIs of pairs of alternatives by Eq. (15) to construct the probabilistic linguistic discordance matrix D.

Step 8. Use Definitions 6 and 7 to obtain the probabilistic linguistic concordance Boolean matrix E and the probabilistic linguistic discordance Boolean matrix Q, respectively.

Step 9. Compute the global matrix M and then draw the outranking graph.

Locate the symmetrical positions in the global matrix M where the elements are not complementary. Then the elements in the same positions of the matrices E and Q are updated and the outranking graph is redrawn.

To illustrate the group decision-making process with probabilistic linguistic information, we summarize its procedure in Fig. 1.

5 Demonstrative example: edge node selection

How to choose an appropriate edge node to process the requests from mobile users is a very important problem in the edge computing network [37, 38]. The edge nodes are usually assessed using seven factors: the workload \(A_1\), availability \(A_2\), reliability \(A_3\), network latency \(A_4\), quality of experience (QoE) \(A_5\), resource utilization \(A_6\), and security level \(A_7\). Assume that there are five edge nodes to be evaluated, which are denoted as \(x_1\), \(x_2\), \(x_3\), \(x_4\), \(x_5\). Let \(S=\left\{ {s_\tau |\tau =-4,\ldots , 0,\ldots 4} \right\} \) denote an LTS, and four experts \(\left\{ {dm_1, dm_2, dm_3, dm_4 } \right\} \) are invited to use the LTS to express their preferences for these five alternatives with respect to these seven criteria. Then, we construct the linguistic decision matrices \(R_\varphi =\left[ {r_{ij}^\varphi } \right] _{m\times n}\) as follows:

The initial weight vector of these experts is set to \(e=\left( {e_1, e_2, e_3, e_4 } \right) ^{T}=\left( {0.25,0.25,0.25,0.25} \right) ^{T}\).

Step 1. Use Eq. (5) to aggregate the above linguistic decision matrices and then construct the PLDM \(L=\left[ {L_{ij} \left( p \right) } \right] _{m\times n} \) as (Table 1):

Step 2. Use Eq. (6) to compute the weight vector of criteria as

Step 3. Utilize Algorithm 1 with the parameters \(a=0.5\) and \(l=0.01\) to calculate the weight vector of experts as \(e=\left( {0.2746,0.1876,0.1943,0.3435} \right) ^{T}\) and obtain a new PLDM \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n} \) as (Table 2)

Step 4. Initialize the attitude weights of all the probabilistic linguistic concordance, discordance, and indifference sets as \(\omega =\left( \omega _{sc}, \omega _{mc}, \omega _{wc}, \omega _{sd}, \omega _{md}, \omega _{wd}, \omega _{ij}^= \right) =\left( {1,0.9,0.8,1,0.9,0.8,0.7} \right) \).

Step 5. Compute the score function values and entropy values of the elements included in the PLDM \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n} \) as shown in Table 3.

Step 6. Obtain the strong PLCS, medium PLCS, weak PLCS, denoted as \(J_{sc} \), \(J_{mc} \), and \(J_{wc} \), as

Obtain the strong PLDS, medium PLDS, weak PLDS, denoted as \(J_{sd} \), \(J_{md} \), \(J_{wd} \), as

and obtain the probabilistic linguistic indifference set as

Step 7. Calculate the PLCI of each pair of alternatives and then construct the probabilistic linguistic concordance matrix C as

Then, we calculate the PLDI of each pair of alternatives and then construct the probabilistic linguistic discordance matrix D as

The concordance threshold is \(\bar{{c}}=0.4727\) and the discordance threshold is \(\bar{{d}}=0.3495\).

Step 8. Use Definitions 6 and 7 to obtain the probabilistic linguistic concordance Boolean matrix E and probabilistic linguistic discordance Boolean matrix Q as

Step 9. Compute the global matrix M by multiplying the matrices E and Q as

and draw the strong outranking graph as

As depicted in Fig. 2, a directed arc labeled S indicates a strong outranking relation between two alternatives.

Locate the symmetrical positions of the global matrix M, where the elements are not complementary, and mark them using the symbol of “*”, then we have

The elements in the same positions of the matrices E and Q are updated as

Then, the final global matrix M can be obtained as

and the final outranking graph is redrawn as

As depicted in Fig. 3, a directed arc labeled W indicates a weak outranking relation between two alternatives.

6 Comparative analysis

To verify the superiority of our proposed score-entropy-based ELECTRE II method, we compare it with three existing methods: the score-deviation-based ELECTRE II method proposed by Liao et al. [36] to process hesitant fuzzy linguistic term sets, the classical ELECTRE II method [39], and the PL-ELECTRE II method [20], which uses the possibility degree.

6.1 Comparison with the score-deviation-based ELECTRE II method

The score-deviation-based ELECTRE II method in [36] uses a different comparison method from that in our proposed score-entropy-based ELECTRE II method to compare two PLTSs.

Let \(L\left( p \right) =\left\{ {\left. {L^{\left( k \right) }\left( {p^{\left( k \right) }} \right) } \right| k=1,2,...,\# L\left( p \right) } \right\} \) denote a PLTS, and then, the deviation degree of \(L\left( p \right) \) in [36] is computed as \(D\left( {L\left( p \right) } \right) =\left( \sum _{k=1}^{\# L\left( p \right) } \left( p^{\left( k \right) }\left( {\gamma ^{\left( k \right) }-\bar{{\gamma }}} \right) \right) ^{2} \right) ^{\frac{1}{2}}\bigg /{\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }} }\), where \(\bar{{\gamma }}=\sum _{k=1}^{\# L\left( p \right) } {\gamma ^{\left( k \right) }} p^{\left( k \right) }\bigg /{\sum _{k=1}^{\# L\left( p \right) } {p^{\left( k \right) }} }\) and \(\gamma ^{\left( k \right) }\) denotes the subscript of linguistic term \(L^{\left( k \right) }\). If \(E\left( {L_1 \left( p \right) } \right) >E\left( {L_2 \left( p \right) } \right) \), then \(L_1 \left( p \right) \succ L_2 \left( p \right) \). If \(E\left( {L_1 \left( p \right) } \right) =E\left( {L_2 \left( p \right) } \right) \), then their deviation degrees should be further compared. If \(D\left( {L_1 \left( p \right) } \right) >D\left( {L_2 \left( p \right) } \right) \), then \(L_1 \left( p \right) \prec L_2 \left( p \right) \). If \(D\left( {L_1 \left( p \right) } \right) =D\left( {L_2 \left( p \right) } \right) \), then \(L_1 \left( p \right) \sim L_2 \left( p \right) \).
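The score-deviation comparison rule of [36] described above can be sketched in Python as follows. A PLTS is again assumed to be stored as a list of `(subscript, probability)` pairs; the function names and the tolerance `tol` used for testing equality of floating-point values are assumptions added for illustration, and the mirror cases of the stated rules are filled in by symmetry.

```python
import math

def score(plts):
    """Probability-weighted mean subscript (gamma bar)."""
    total_p = sum(p for _, p in plts)
    return sum(g * p for g, p in plts) / total_p

def deviation(plts):
    """Deviation degree D(L(p)) as given in the text."""
    total_p = sum(p for _, p in plts)
    mean = score(plts)
    return math.sqrt(sum((p * (g - mean)) ** 2 for g, p in plts)) / total_p

def compare(l1, l2, tol=1e-12):
    """Return 1 if l1 is superior, -1 if l1 is inferior, 0 if indifferent."""
    e1, e2 = score(l1), score(l2)
    if abs(e1 - e2) > tol:
        return 1 if e1 > e2 else -1      # the higher score wins
    d1, d2 = deviation(l1), deviation(l2)
    if abs(d1 - d2) > tol:
        return -1 if d1 > d2 else 1      # equal scores: smaller deviation wins
    return 0
```

The only difference from the score-entropy comparison is the tie-breaker: the deviation degree depends on the term subscripts, whereas the entropy depends on the probabilities alone.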

The score-deviation-based ELECTRE II method is utilized to solve the example concerning the edge node selection and its decision-making procedure is listed as follows:

Steps 1–4. Steps 1 to 4 are the same as those in Sect. 5.

Step 5. Compute the score function value \(E\left( {{L}'_{ij} \left( p \right) } \right) \) and deviation degree \(D\left( {{L}'_{ij} \left( p \right) } \right) \) of each PLTS in the probabilistic linguistic decision matrix \({L}'=\left[ {{L}'_{ij} \left( p \right) } \right] _{m\times n} \), and the results are listed in Table 4.

Step 6. According to the comparison method presented in [36], the strong PLCS \(\bar{{J}}_{sc} \), medium PLCS \(\bar{{J}}_{mc} \), and weak PLCS \(\bar{{J}}_{wc} \) can be obtained as

The strong PLDS \(\bar{{J}}_{sd} \), medium PLDS \(\bar{{J}}_{md} \), and weak PLDS \(\bar{{J}}_{wd} \) can be obtained as

The probabilistic linguistic indifference set can be obtained as

Step 7. Compute the PLCI of each pair of alternatives and then construct the probabilistic linguistic concordance matrix \(C_2 \) as:

and the concordance threshold \(\bar{{C}}_2 =0.4743\).

Calculate the PLDI of each pair of alternatives and construct the probabilistic linguistic discordance matrix \(D_2 \) as:

and the discordance threshold \(\bar{{D}}_2 =0.3764\).

Step 8. Obtain the probabilistic linguistic concordance Boolean matrix \(E_2 \) and probabilistic linguistic discordance Boolean matrix \(Q_2 \) using the concordance threshold \(\bar{{C}}_2 =0.4743\) and discordance threshold \(\bar{{D}}_2 =0.3764\). Then, we have

Step 9. Compute the global matrix \(M_2 \) as

and draw the outranking graph as

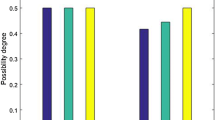

As shown in Fig. 4, a directed arc labeled S indicates a strong outranking relationship between two alternatives. However, the score-deviation-based ELECTRE II method cannot determine the relationship between alternatives \(x_4 \) and \(x_5 \) or the relationship between \(x_3 \) and \(x_4 \), whereas our proposed score-entropy-based ELECTRE II method can determine both.

6.2 Comparison with the classical ELECTRE II method

In this section, our proposed score-entropy-based ELECTRE II method is compared with the classical ELECTRE II method [39]. We define the concepts of the strong relationship \(O_F \) and the weak relationship \(O_f \) by the indices of concordance and discordance levels.

Let \(c^{-}\), \(c^{0}\), and \(c^{+}\) denote three concordance levels with \(0<c^{-}<c^{0}<c^{+}<1\) and \(d^{0}\) and \(d^{+}\) be two discordance levels with \(0<d^{0}<d^{+}<1\). Then, the strong outranking relation in the graph implies that alternative \(x_i \) strongly outranks alternative \(x_k\), denoted as \(x_i O_F x_k \), if

and the weak outranking relation in the graph implies that alternative \(x_i \) weakly outranks alternative \(x_k\), denoted as \(x_i O_f x_k\), if

Here, the concordance levels \(\left( {c^{-},c^{0},c^{+}} \right) \) are set to \(\left( {0.5,0.6,0.7} \right) \) and the discordance levels \(\left( {d^{0},d^{+}} \right) \) are set to \(\left( {0.6,0.8} \right) \). We draw the strong outranking graph and the weak outranking graph as shown in Fig. 5.
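A Python sketch of the level-based test is given below. It assumes the conditions as they are commonly stated for the classical ELECTRE II method: a strong relation requires \(c_{ik} \ge c_{ki}\) together with either \(c_{ik} \ge c^{+}\) and \(d_{ik} \le d^{+}\), or \(c_{ik} \ge c^{0}\) and \(d_{ik} \le d^{0}\); a weak relation requires \(c_{ik} \ge c_{ki}\), \(c_{ik} \ge c^{-}\), and \(d_{ik} \le d^{+}\). This form is an assumption and may differ in detail from the conditions used in this comparison; the function name `classical_relation` is hypothetical, and the default levels are the values quoted above.

```python
def classical_relation(c_ik, c_ki, d_ik,
                       c_levels=(0.5, 0.6, 0.7), d_levels=(0.6, 0.8)):
    """Classify the ordered pair (x_i, x_k) as 'strong', 'weak', or None.

    c_levels = (c_minus, c_zero, c_plus), d_levels = (d_zero, d_plus).
    The conditions follow the commonly stated ELECTRE II form (assumed).
    """
    c_minus, c_zero, c_plus = c_levels
    d_zero, d_plus = d_levels
    if c_ik < c_ki:
        return None                       # x_i must be at least as concordant
    if (c_ik >= c_plus and d_ik <= d_plus) or \
       (c_ik >= c_zero and d_ik <= d_zero):
        return "strong"                   # x_i O_F x_k
    if c_ik >= c_minus and d_ik <= d_plus:
        return "weak"                     # x_i O_f x_k
    return None
```

Unlike the average-based thresholds of Definitions 6 and 7, these levels are fixed by the analyst, so the resulting graphs depend on the chosen values.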

The values of \({v}'\left( x \right) \) and \({v}''\left( x \right) \) are listed in Table 5, where \({v}'\left( x \right) \) is the direct ranking and \({v}''\left( x \right) \) denotes the reverse ranking.

According to the value of \(\frac{{v}'\left( x \right) +{v}''\left( x \right) }{2}\), the final ranking is obtained as

The above ranking is contained in Fig. 3. However, the outranking graph presented in Fig. 3 can offer more information about the relationships among alternatives.

6.3 Comparison with the PL-ELECTRE II method

In this section, comparisons between our proposed score-entropy-based ELECTRE II method and the PL-ELECTRE II method in [20] are conducted. The PL-ELECTRE II method uses the possibility degree to construct the probabilistic linguistic concordance sets and the probabilistic linguistic discordance sets. Our proposed score-entropy-based ELECTRE II method is utilized to process the example presented in [20] and the outranking graph can be constructed as:

As shown in Fig. 6, the outranking graph of our proposed score-entropy-based ELECTRE II method is different from that in [20] since Pan et al. [20] introduced the concept of possibility degree to construct the probabilistic linguistic concordance sets and probabilistic linguistic discordance sets.

The differences between our proposed score-entropy-based ELECTRE II method and PL-ELECTRE II presented in [20] are summarized in Table 6.

From Table 6, it can be seen that our proposed score-entropy-based ELECTRE II method shows some differences from the PL-ELECTRE II method that is presented in [20]. Our proposed score-entropy-based ELECTRE II method uses the comparison method to compare PLTSs, while the PL-ELECTRE II method uses the possibility degree. When the weights of criteria are calculated, our proposed score-entropy-based ELECTRE II method uses an objective method, namely the multiple correlation coefficient, which considers the correlation degree between each criterion and other criteria. The PL-ELECTRE II method in [20] uses a subjective method, in which the relative importance of one criterion over another one is evaluated by the DMs, and then, a Chi-square method is utilized to obtain the weight vector of criteria. The weight vector of DMs is given directly in the PL-ELECTRE II method [20], while it is determined using the entropy theory in our proposed score-entropy-based ELECTRE II method.

7 Conclusions

In this paper, we proposed a novel score-entropy-based ELECTRE II method to process the edge node selection problem with the evaluation information of PLTSs. We first defined a novel distance measure for PLTSs and developed a novel comparison method based on the score function and information entropy of PLTSs. We also utilized the multiple correlation coefficient to calculate the weight vector of criteria and introduced the entropy theory to calculate the weight vector of the DMs. Based on the weight-determining method for criteria and the weight-determining method for DMs as well as the comparison method, a novel score-entropy-based ELECTRE II method was put forward and its decision-making procedure was given. Finally, a demonstrative example was provided to illustrate the implementation process of our proposed score-entropy-based ELECTRE II method and it was compared with existing decision-making methods.

In the future, we plan to establish a two-layer index system to evaluate edge nodes from the viewpoint of quality of service and introduce the analytic hierarchy process to handle edge node selection problems.

References

1. Yan, Z., Yu, X., Ding, W.: Context-aware verifiable cloud computing. IEEE Access 5, 2211–2227 (2017)

2. Li, C.T., Lee, C.W., Shen, J.J.: An extended chaotic maps-based keyword search scheme over encrypted data resist outside and inside keyword guessing attacks in cloud storage services. Nonlinear Dyn. 80(3), 1601–1611 (2015)

3. Xiong, J.B., Ren, J., Chen, L., Yao, Z.Q., Lin, M.W., Wu, D.P., Niu, B.: Enhancing privacy and availability for data clustering in intelligent electrical service of IoT. IEEE IoT J. (2018). https://doi.org/10.1109/JIOT.2018.2842773

4. Laghari, A.A., He, H., Khan, A., Kumar, N., Kharel, P.: Quality of experience framework for cloud computing (QoC). IEEE Access 6, 64876–64890 (2018)

5. Abdullah, S., Amin, N.U.: Analysis of S-box image encryption based on generalized fuzzy soft expert set. Nonlinear Dyn. 79(3), 1679–1692 (2014)

6. He, Y., Guo, H., Jin, M., Ren, P.: A linguistic entropy weight method and its application in linguistic multi-attribute group decision making. Nonlinear Dyn. 84(1), 399–404 (2016)

7. Qu, J.J., Ji, Z.J., Lin, C., Yu, H.S.: Fast consensus seeking on networks with antagonistic interactions. Complexity (2018). Article ID 7831317

8. Xi, J.X., Wang, C., Liu, H., Wang, Z.: Dynamic output feedback guaranteed-cost synchronization for multiagent networks with given cost budgets. IEEE Access 6, 28923–28935 (2018)

9. Ji, Z.J., Yu, H.S.: A new perspective to graphical characterization of multiagent controllability. IEEE Trans. Cybern. 47(6), 1471–1483 (2017)

10. Xi, J.X., Wang, C., Liu, H., Wang, L.: Completely distributed guaranteed-performance consensualization for high-order multiagent systems with switching topologies. IEEE Trans. Syst. Man Cybern.: Syst. (2018). https://doi.org/10.1109/TSMC.2018.2852277

11. Sun, K.K., Mou, S.S., Qiu, J.B., Wang, T., Gao, H.J.: Adaptive fuzzy control for non-triangular structural stochastic switched nonlinear systems with full state constraints. IEEE Trans. Fuzzy Syst. (2018). https://doi.org/10.1109/TFUZZ.2018.2883374

12. Zadeh, L.A.: The concept of a linguistic variable and its application to approximate reasoning—I. Inf. Sci. 8(3), 199–249 (1975)

13. Xu, Z.S., Wang, H.: On the syntax and semantics of virtual linguistic terms for information fusion in decision making. Inf. Fusion 34, 43–48 (2017)

14. Rodriguez, R.M., Martinez, L., Herrera, F.: Hesitant fuzzy linguistic term sets for decision making. IEEE Trans. Fuzzy Syst. 20(1), 109–119 (2012)

15. Lin, M.W., Xu, Z.S., Zhai, Y.L., Yao, Z.Q.: Multi-criterion group decision-making under probabilistic uncertain linguistic environment. J. Oper. Res. Soc. 69(2), 157–170 (2018)

16. Pang, Q., Wang, H., Xu, Z.S.: Probabilistic linguistic term sets in multi-criterion group decision making. Inf. Sci. 369, 128–143 (2016)

17. Bai, C., Zhang, R., Qian, L., Wu, Y.: Comparisons of probabilistic linguistic term sets for multi-criteria decision making. Knowl.-Based Syst. 119, 284–291 (2017)

18. Liu, H., Jiang, L., Xu, Z.S.: Entropy measures of probabilistic linguistic term sets. Int. J. Comput. Intell. Syst. 11(1), 45–57 (2018)

19. Liu, P., Teng, F.: Some Muirhead mean operators for probabilistic linguistic term sets and their applications to multiple criterion decision-making. Appl. Soft Comput. 68, 396–431 (2018)

20. Pan, L., Ren, P.J., Xu, Z.S.: Therapeutic schedule evaluation for brain-metastasized non-small cell lung cancer with a probabilistic linguistic ELECTRE II method. Int. J. Environ. Res. Public Health 15, 1799 (2018). https://doi.org/10.3390/ijerph15091799

21. Xu, Z.S.: A method based on linguistic aggregation operators for group decision making with linguistic preference relations. Inf. Sci. 166(1–4), 19–30 (2004)

22. Xu, Z.S.: Multi-period multi-criterion group decision-making under linguistic assessments. Int. J. Gener. Syst. 38(8), 823–850 (2009)

23. Xu, Z.S.: Linguistic decision making: theory and methods. Springer, Berlin (2012)

24. Gou, X.J., Xu, Z.S.: Novel basic operational laws for linguistic terms, hesitant fuzzy linguistic term sets and probabilistic linguistic term sets. Inf. Sci. 372, 407–427 (2016)

25. Liao, H.C., Xu, Z.S., Zeng, X.J., Merigo, J.M.: Qualitative decision making with correlation coefficients of hesitant fuzzy linguistic term sets. Knowl.-Based Syst. 76, 127–138 (2015)

26. Zhang, Y.X., Xu, Z.S., Wang, H., Liao, H.: Consistency-based risk assessment with probabilistic linguistic preference relation. Appl. Soft Comput. 49, 817–833 (2016)

27. Liao, H.C., Xu, Z.S.: Approaches to manage hesitant fuzzy linguistic information based on the cosine distance and similarity measures for HFLTSs and their application in qualitative decision making. Expert Syst. Appl. 42(12), 5328–5336 (2015)

28. Bai, C., Zhang, R., Shen, S., Huang, C., Fan, X.: Interval-valued probabilistic linguistic term sets in multi-criteria group decision making. Int. J. Intell. Syst. 33(6), 1301–1321 (2018)

29. Wu, X.L., Liao, H.C., Xu, Z.S., Hafezalkotob, A., Herrera, F.: Probabilistic linguistic MULTIMOORA: a multicriteria decision making method based on the probabilistic linguistic expectation function and the improved Borda rule. IEEE Trans. Fuzzy Syst. 26(6), 3688–3702 (2018)

30. Zebende, G.F., da Silva Filho, A.M.: Detrended multiple cross-correlation coefficient. Phys. A: Stat. Mech. Appl. 510, 91–97 (2018)

31. Qiu, J.B., Sun, K.K., Wang, T., Gao, H.J.: Observer-based fuzzy adaptive event-triggered control for pure-feedback nonlinear systems with prescribed performance. IEEE Trans. Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2019.2895560

32. Wu, X.L., Liao, H.C.: An approach to quality function deployment based on probabilistic linguistic term sets and ORESTE method for multi-expert multi-criteria decision making. Inf. Fusion 43, 13–26 (2018)

33. Roy, B.: ELECTRE III: Un algorithme de classements fondé sur une représentation floue des préférences en présence de critères multiples. Cahiers du Centre d’Etudes de Recherche Opérationnelle 20(1), 3–24 (1978)

34. Chen, N., Xu, Z.S.: Hesitant fuzzy ELECTRE II approach: a new way to handle multi-criteria decision making problems. Inf. Sci. 292, 175–197 (2015)

35. Wan, S.P., Xu, G.L., Dong, J.Y.: Supplier selection using ANP and ELECTRE II in interval 2-tuple linguistic environment. Inf. Sci. 385–386, 19–38 (2017)

36. Liao, H.C., Yang, L.Y., Xu, Z.S.: Two new approaches based on ELECTRE II to solve the multiple criteria decision making problems with hesitant fuzzy linguistic term sets. Appl. Soft Comput. 63, 223–234 (2018)

37. Lin, M., Chen, R., Lin, L., Li, X., Huang, J.: Buffer-aware data migration scheme for hybrid storage systems. IEEE Access 6, 47646–47656 (2018)

38. Lin, B., Guo, W.Z., Xiong, N.X., Chen, G.L., Vasilakos, A.V., Zhang, H.: A pretreatment workflow scheduling approach for big data applications in multi-cloud environments. IEEE Trans. Netw. Serv. Manag. 13(3), 581–594 (2016)

39. Duckstein, L., Gershon, M.: Multicriterion analysis of a vegetation management problem using ELECTRE II. Appl. Math. Model. 7, 254–261 (1983)

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos. 61872086, 71771156, and Distinguished Young Scientific Research Talents Plan in Universities of Fujian Province (2017).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Lin, M., Chen, Z., Liao, H. et al. ELECTRE II method to deal with probabilistic linguistic term sets and its application to edge computing. Nonlinear Dyn 96, 2125–2143 (2019). https://doi.org/10.1007/s11071-019-04910-0

DOI: https://doi.org/10.1007/s11071-019-04910-0