Abstract

Statistical analysis of stochastic dynamical systems is of considerable importance for engineers as well as scientists. Engineering applications require approximate statistical methods with a trade-off between accuracy and simplicity. Most exact and approximate methods available in the literature to study stochastic differential equations (SDEs) are best suited for linear or weakly nonlinear systems. When a system is highly nonlinear, e.g., a system with multiple equilibria, the accuracy of conventional methods degrades. This problem is addressed in this article, and a novel method is introduced for statistical analysis of special types of essentially nonlinear SDEs. In particular, second-order dynamical systems with nonlinear stiffness and additive random excitations are considered. The proposed approximate method can estimate second-order moments of the state vector (namely position and velocity), not only in the case of white noise excitation, but also when the excitation is a correlated noise. To illustrate the efficiency, a second-order dynamical system with bistable Duffing-type nonlinearity is considered as the case study. Results of the proposed method are compared with the Gaussian moment closure approximation for two types of colored noise excitations, one with first-order dynamics and the other with second-order dynamics. In the absence of exact closed-form solutions, Monte Carlo simulations are used as the reference solution. Results indicate that the proposed method gives accurate approximations for the mean square value of position, for which the Gaussian moment closure method cannot provide good estimates. On the other hand, both methods provide acceptably accurate estimates of the mean square value of velocity. Such nonlinear SDEs arise especially in energy-harvesting applications, when the ambient vibration can be modeled as a wideband random excitation. In such conditions, linear energy harvesters are no longer optimal designs, and nonlinear broadband harvesting techniques are expected to perform much better.

1 Introduction

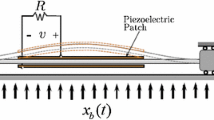

A differential equation that is forced by an irregular process such as a Wiener process or Brownian motion is called a stochastic differential equation (SDE) [1]. Earthquakes, winds and ocean waves are important examples of stochastic excitations [2]. Stochastic differential equations arise in many engineering applications such as filtering problems, fluid mechanical turbulence and random vibration [3]. SDEs are of particular interest in the analysis of vibration energy harvesters, which are designed to harvest energy from ambient random vibrations [4, 5].

For the analytical study of SDEs, several well-known techniques have been proposed in the literature to extract statistical properties of the stochastic system, such as the probability density function (PDF) of the system response, its spectral density and/or the first-, second- and higher-order moments of the state variables [6,7,8]. For linear stochastic systems with additive (white or colored) random excitations, statistical analysis is straightforward and well documented [2, 6]. Difficulties arise for nonlinear SDEs, for which the available approximate analytical methods differ in difficulty and accuracy [9]. Available methods include, but are not limited to, statistical and equivalent linearization [9,10,11,12], moment closure [13,14,15,16,17], stochastic averaging [18,19,20,21] and approximate solution of the Fokker–Planck equation [22,23,24,25].

For the analytical study of nonlinear SDEs, it matters whether the random excitation is white or colored. Analytical methods are highly limited in the case of nonwhite excitations. Among the available techniques, linearization and stochastic averaging are applicable to SDEs with both white and colored excitations, while closure methods and PDF approximation methods can be applied only when the excitation is white [7, 26]. However, in many cases, especially when the system is highly nonlinear, the linearization and stochastic averaging methods cannot predict the statistical behavior of the system correctly [27].

It is common practice to treat a colored noise as a filtered white noise, i.e., the colored Gaussian noise is assumed to be the output of a linear system (called the noise dynamics) excited by white Gaussian noise. The state vector of the noise dynamics is then appended to the fundamental state vector of the system [28]. In this way, an SDE with colored noise excitation is converted to an SDE with white noise excitation, at the cost of a larger state space, and a wider variety of analytical methods can be applied to it.
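The filtered-white-noise idea can be illustrated with the simplest noise dynamics, a first-order filter that produces exponentially correlated (Ornstein–Uhlenbeck) noise. The sketch below is illustrative only: the function name `simulate_ou`, the normalization \(\tau \,\mathrm{d}u=-u\,\mathrm{d}t+\sqrt{2D_0 }\,\mathrm{d}W\) and the parameter values are assumptions made here, not taken from the article.

```python
import numpy as np


def simulate_ou(tau, d0, dt, n_steps, seed=0):
    """Sample path of an OU process, assuming tau*du = -u*dt + sqrt(2*d0)*dW.

    The normalization is an assumption of this sketch; under it the
    stationary variance is d0 / tau.
    """
    rng = np.random.default_rng(seed)
    var = d0 / tau                            # stationary variance
    decay = np.exp(-dt / tau)                 # exact one-step decay factor
    kick = np.sqrt(var * (1.0 - decay ** 2))  # exact one-step noise amplitude
    u = np.empty(n_steps)
    u[0] = rng.normal(0.0, np.sqrt(var))      # start in the stationary state
    xi = rng.standard_normal(n_steps - 1)     # the underlying white noise
    for k in range(n_steps - 1):
        u[k + 1] = decay * u[k] + kick * xi[k]
    return u
```

Under the assumed normalization, a long sample path should approximately reproduce the stationary variance \(D_0 /\tau \) and an autocorrelation that decays as \(\exp \left( -\left| t \right| /\tau \right) \).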

The moment closure technique is one of the most popular approximate methods for the study of nonlinear SDEs with white noise excitation. This technique is based on moment equations. The moment equations for a nonlinear SDE form an infinite hierarchy of equations. These equations can be algebraic or differential depending on whether the SDE is stationary or nonstationary. The moment closure method truncates the moment equations for the system at an arbitrary order n. Then, based on a closure scheme (often the cumulant-neglect closure [15]), moments of order greater than n which appear in the truncated equations are expressed in terms of lower-order moments, so as to achieve a closed set of algebraic or differential equations. The most important and simplest case \(n=2\) is equivalent to assuming a Gaussian shape for the joint PDF of the state vector. This Gaussian moment closure method truncates moment equations at second order and expresses third- and higher-order moments in terms of moments up to the second order, resulting in a closed set of equations for the first- and second-order moments [8, 14, 26].
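As a concrete instance of the closure step, the relations below are the standard Gaussian identities on which the case \(n=2\) relies. The symbols \(\mu \) and \(\sigma ^2\) (mean and variance of a scalar state \(x\)) are introduced here only for illustration.

```latex
% Scalar Gaussian variable with mean \mu and variance \sigma^2:
\langle x^3\rangle = \mu^3 + 3\mu\sigma^2, \qquad
\langle x^4\rangle = \mu^4 + 6\mu^2\sigma^2 + 3\sigma^4 .
% Zero-mean jointly Gaussian variables (Isserlis' theorem):
\langle x_i x_j x_k x_l\rangle
  = \langle x_i x_j\rangle\langle x_k x_l\rangle
  + \langle x_i x_k\rangle\langle x_j x_l\rangle
  + \langle x_i x_l\rangle\langle x_j x_k\rangle .
```

For a zero-mean state this gives \(\langle x^4\rangle =3\langle x^2\rangle ^2\), which is how a fourth-order moment generated by a cubic stiffness term can be closed in terms of second-order moments.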

For highly nonlinear systems, Gaussian approximation may give erroneous estimations [29]. To increase the accuracy, moment closure methods with higher truncation orders can be applied. For example, one can truncate the moment equations at \(n=4\) and write fifth- and higher-order moments appearing in the equations in terms of lower-order ones [30, 31]. This approach, however, greatly increases the number of coupled moment equations, which makes the set of equations harder to solve and raises problems concerning existence and uniqueness of the solution [26, 29]. The problem is more severe in the case of colored noise excitation, when the noise dynamics is augmented to the state vector of the system. Other approximate methods, such as stochastic averaging, have similar shortcomings for highly nonlinear systems.
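The growth in the number of moment equations can be made concrete by counting the distinct moments up to a given order. The helper below is a small counting sketch; the name `n_moments` is hypothetical, and it counts monomials of total degree 1 to `order` in `dim` state variables, which is a combinatorial fact rather than anything specific to the article.

```python
from math import comb


def n_moments(dim: int, order: int) -> int:
    """Number of distinct moments of total order 1..order for a dim-dimensional state."""
    # Monomials of total degree <= order in dim variables number C(order + dim, dim);
    # subtract one for the trivial zeroth-order moment.
    return comb(order + dim, dim) - 1


if __name__ == "__main__":
    # dim = 2: oscillator alone; dim = 3 or 4: augmented with first- or
    # second-order noise dynamics.
    for dim in (2, 3, 4):
        for order in (2, 4):
            print(f"dim={dim}, order={order}: {n_moments(dim, order)} moments")
```

With a two-dimensional state, truncation at order 2 involves 5 moments and truncation at order 4 involves 14; augmenting the state with a second-order noise model (four state variables) raises the order-4 count to 69.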

Another approach is to find an approximate solution of the Fokker–Planck (FP) equation. In stationary conditions, the FP equation is a partial differential equation defined on the whole state space of the SDE. An exact solution of the FP equation exists only for special types of SDEs with white noise excitation [26]. For other types of SDEs, finding an approximate analytical solution is nontrivial and the procedure is case dependent. The FP equation can also be solved numerically, for example with the finite element (FE) method [32], but the numerical solution is challenging and computationally expensive, especially for high-dimensional state spaces [33, 34].

In this article, a novel method is proposed to approximately solve the FP equation and calculate the required moments, applicable to special types of highly nonlinear SDEs. The proposed method is applied to a second-order system with highly nonlinear (bistable) stiffness and colored noise excitation. For this special system, in the case of white noise excitation, the FP equation has an exact analytical solution, which is given in the literature [26]. But when the excitation is nonwhite and the noise dynamics is augmented to the system model, no analytical solution is available for the statistical behavior of the system. In this situation, the proposed method provides good approximations for both the mean square displacement and the mean square velocity of the oscillations, whereas the Gaussian approximation performs well only in the mean square velocity estimation.

The problem statement in this paper is mainly adapted from the energy-harvesting literature. It is important to design and analyze nonlinear energy-harvesting devices that can harvest vibrational energy from broadband or narrow-band random vibrations in the environment. However, apart from the Gaussian approximation method, no simple and accurate analytical tool is available to analyze the performance of such an energy harvester, especially when the excitation is a colored noise and the system is highly nonlinear. Yet the design of a vibration energy harvester is greatly facilitated when an approximate measure of performance is at hand. In other words, an approximate analytical solution for the statistical properties of the system can assist optimal and robust design.

This paper is organized as follows. Section 2 briefly describes the system model in the state-space form. The FP equation corresponding to this system is developed in Sect. 3. In Sect. 4, the Gaussian approximation method is applied to the system to illustrate its strengths and weaknesses for highly nonlinear systems. The proposed method is introduced in Sect. 5, followed by two case studies in Sects. 6 and 7. Finally, some concluding remarks are given in Sect. 8.

2 System model

Consider a mass–spring–damper system with nonlinear stiffness and random excitation. The governing differential equation is therefore a nonlinear SDE with the following state-space representation:

where \(x_1 \) and \(x_2 \) are the nondimensional displacement and velocity and \(f\left( {x_1 } \right) \) is an odd function of \(x_1 \). For the special case of the Duffing oscillator [35], \(f\left( {x_1 } \right) =k_1 x_1 +k_3 x_1^3 \) with \(k_3 >0\). The linear stiffness \(k_1 \) can be positive, zero or negative. The spring force will have a single stable equilibrium at the origin if \(k_1 \) is nonnegative. On the other hand, for negative linear stiffness \(k_1 <0\), the system shows a bistable behavior with two off-center stable equilibria at \(x_1 =\pm \,\sqrt{\left| {k_1 /k_3 } \right| }\) and one unstable equilibrium at the origin.
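The equilibrium structure described above can be checked with a few lines of code. This is a minimal sketch: the function name `duffing_equilibria` is hypothetical, and stability is judged from the slope of the spring force \(f^{\prime } \left( {x_1 } \right) =k_1 +3k_3 x_1^2 \), i.e., from whether the equilibrium is a minimum of the potential.

```python
import math


def duffing_equilibria(k1: float, k3: float):
    """Equilibria of f(x) = k1*x + k3*x**3 (k3 > 0) as (position, is_stable) pairs."""
    if k3 <= 0:
        raise ValueError("this sketch assumes k3 > 0")
    roots = [0.0]
    if k1 < 0:
        r = math.sqrt(-k1 / k3)  # off-center equilibria at +/- sqrt(|k1/k3|)
        roots = [-r, 0.0, r]
    result = []
    for x in roots:
        slope = k1 + 3.0 * k3 * x * x  # f'(x), the local stiffness
        # slope == 0 only occurs at the origin for k1 == 0, where the quartic
        # potential still has a minimum, so it is counted as stable.
        result.append((x, slope >= 0.0))
    return result
```

For example, `duffing_equilibria(-1.0, 1.0)` returns the two stable wells at \(\pm 1\) and the unstable origin, which is the bistable configuration used in the case studies.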

The input signal \(\nu \left( t \right) \) in (1) is a zero-mean random excitation, which is assumed to be Gaussian, but not necessarily a white noise. Instead, \(\nu \left( t \right) \) is modeled as the output of an n-dimensional linear SDE, with white noise excitation:

In this model, \(\mathbf{w}\left( t \right) \) is an n-dimensional vector of Gaussian white noise excitations and the n-by-n symmetric positive-definite matrix \({{\varvec{D}}}\) defines the amplitude of the excitation. Furthermore, \({{\varvec{L}}}\) is an invertible square matrix and \({{\varvec{b}}}\) is a column vector. In the one-dimensional case with \(b=1\), \(L=\tau \) and \(D=D_0 \), \(\nu \left( t \right) \) reduces to an Ornstein–Uhlenbeck (exponentially correlated) noise with correlation time \(\tau \):

It should be noted that the power spectral density of the excitation \(\nu \left( t \right) \) is related to the vector \({{\varvec{b}}}\) and the matrices \({{\varvec{L}}}\) and \({{\varvec{D}}}\):

3 Fokker–Planck equation

Consider a stochastic differential equation with additive and/or multiplicative Gaussian white noise excitation in the state-space form:

where \({{\varvec{x}}}\) is the \(n\times 1\) state vector, \({{\varvec{a}}}\left( {{{\varvec{x}}},t} \right) \) is the drift vector, \({\varvec{\xi }} \) is a vector of Wiener processes and \({{\varvec{g}}}\left( {{{\varvec{x}}},t} \right) \) is called the diffusion matrix. The Fokker–Planck–Kolmogorov (FPK) equation for the PDF \(p\left( {{{\varvec{x}}},t} \right) \) corresponding to this system is written as (Einstein notation is used) [33, 36]:

If neither the drift vector \({{\varvec{a}}}\left( {{{\varvec{x}}},t} \right) \) nor the diffusion matrix \({{\varvec{g}}}\left( {{{\varvec{x}}},t} \right) \) depends explicitly on time, the PDF \(p\left( {{{\varvec{x}}},t} \right) \) gradually converges to its steady-state value and the FPK equation can be written for the stationary system. Furthermore, for an SDE with only additive excitations, the FPK equation simplifies further, since the diffusion matrix \({{\varvec{g}}}\) does not depend on the state vector \({{\varvec{x}}}\):
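For reference, the FPK equation described in this section can be written in its standard Itô form. The block below is a textbook statement under the assumption of unit-intensity Wiener increments; the article's own numbered equations may differ in notation or normalization.

```latex
% SDE: d\mathbf{x} = \mathbf{a}(\mathbf{x},t)\,dt + \mathbf{g}(\mathbf{x},t)\,d\boldsymbol{\xi}
% General FPK equation (Einstein summation over repeated indices):
\frac{\partial p}{\partial t}
  = -\frac{\partial}{\partial x_i}\bigl[a_i\, p\bigr]
    + \frac{1}{2}\,\frac{\partial^2}{\partial x_i\,\partial x_j}\bigl[g_{ik}\, g_{jk}\, p\bigr]
% Stationary case with additive noise (constant \mathbf{g}):
0 = -\frac{\partial}{\partial x_i}\bigl[a_i(\mathbf{x})\, p(\mathbf{x})\bigr]
    + \frac{1}{2}\, g_{ik}\, g_{jk}\,
      \frac{\partial^2 p(\mathbf{x})}{\partial x_i\,\partial x_j}
```

In the additive case the diffusion coefficients move outside the derivatives, which is the simplification referred to above.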

The dynamical system (1) along with the noise dynamics (2) forms an \(\left( {n+2} \right) \)-dimensional system of stochastic differential equations in the state-space form, with Gaussian white noise excitation. Let \({{\varvec{x}}}=\left( {x_1 ,x_2 } \right) \), and define \(p\left( {{{\varvec{x,u}}}} \right) \) as the joint PDF of the \(\left( {n+2} \right) \)-dimensional state vector. The stationary FPK equation can be expanded for this system as follows:

where \(\nabla _\mathbf{u} \) is the gradient operator w.r.t. the vector \({{\varvec{u}}}\) and is a column vector equal to \(\left[ {\partial _{u_1 } ,\partial _{u_2 } ,\ldots ,\partial _{u_n } } \right] ^{\mathrm {T}}\).

4 Gaussian approximation

For a linear system, i.e., when the stiffness function \(f\left( {x_1 } \right) \) is linear, the joint PDF \(p\left( {{{\varvec{x,u}}}} \right) \) is Gaussian in all state variables \({{\varvec{x}}}\) and \({{\varvec{u}}}\). This is not exactly true for a nonlinear SDE, but one may approximately assume a Gaussian shape for the joint PDF and try to find the best fit for the parameters.

Consider the stationary FPK equation (7) corresponding to the SDE (5) with n state variables. Gaussian approximation assumes the PDF \(p\left( {{\varvec{x}}} \right) \) to have a Gaussian shape in the following form:

This shape for \(p\left( {{\varvec{x}}} \right) \) is equivalent to assuming third and higher-order cumulants vanish. Substituting (9) in (7) and taking first- and second-order moments of the equation result in a complete set of algebraic equations for the unknown vector \({\varvec{\mu }} \) and the unknown symmetric matrix \(\Sigma \).

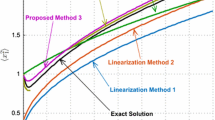

To verify the accuracy of the Gaussian approximation method, we apply it to the second-order system (1) with Duffing-type spring forces \(f\left( {x_1 } \right) =k_1 x_1 +k_3 x_1^3 \) and white noise excitation, for which the corresponding FPK equation has a closed-form solution [26]. The dynamical system (1) is mono-stable for \(k_1 \ge 0\) and bistable for \(k_1 <0\). The mono-stable stiffness can reasonably be approximated by an equivalent linear one, while the bistable stiffness is known to be “highly nonlinear.” Accordingly, although the Gaussian approximation provides appropriate estimates of the statistical properties of the mono-stable system (Fig. 1), it incurs large errors in the analysis of the bistable system (Fig. 2) when compared to the true values obtained from the analytical solution of the FPK equation. Specifically, the Gaussian approximation estimates \(\langle x_1^2\rangle \) (i.e., the expected value of \(x_1^2\)) inaccurately.
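The comparison described above can be reproduced numerically under explicit assumptions. The sketch below assumes the system has the form \(\dot{x}_1 =x_2 \), \(\dot{x}_2 =-\gamma x_2 -f\left( {x_1 } \right) +w\left( t \right) \) with white noise of intensity \(\langle w\left( t \right) w\left( {t^{\prime }} \right) \rangle =2D\delta \left( {t-t^{\prime }} \right) \); this form, the symbol \(D\) and both function names are assumptions of the sketch and may differ from the article's normalization. Under these assumptions the exact stationary PDF of \(x_1 \) is proportional to \(\exp \left( -\gamma U\left( {x_1 } \right) /D \right) \), and the zero-mean Gaussian closure gives \(k_1 \sigma ^2+3k_3 \sigma ^4=D/\gamma \) for \(\sigma ^2=\langle x_1^2\rangle \).

```python
import numpy as np


def exact_msq_x1(k1, k3, d_over_gamma, half_width=6.0, n_grid=20001):
    """<x1^2> from p(x1) ~ exp(-U(x1) * gamma / D), U = k1 x^2/2 + k3 x^4/4."""
    x = np.linspace(-half_width, half_width, n_grid)
    potential = 0.5 * k1 * x ** 2 + 0.25 * k3 * x ** 4
    # Shift by the minimum so the largest weight is 1 (avoids overflow).
    weight = np.exp(-(potential - potential.min()) / d_over_gamma)
    # Uniform grid: the grid spacing cancels in the ratio of sums.
    return float(np.sum(x ** 2 * weight) / np.sum(weight))


def gaussian_msq_x1(k1, k3, d_over_gamma):
    """Zero-mean Gaussian closure: positive root of 3 k3 s^2 + k1 s - D/gamma = 0."""
    return float((-k1 + np.sqrt(k1 ** 2 + 12.0 * k3 * d_over_gamma)) / (6.0 * k3))


if __name__ == "__main__":
    for k1 in (1.0, -1.0):  # mono-stable, then bistable
        print(k1, exact_msq_x1(k1, 1.0, 0.05), gaussian_msq_x1(k1, 1.0, 0.05))
```

With \(k_3 =1\) and a small noise level, the two values agree closely for \(k_1 =1\), whereas for \(k_1 =-\,1\) the Gaussian closure places \(\langle x_1^2\rangle \) well below the exact value, which sits near the squared well position.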

The Gaussian approximation method can be applied in the Fourier space as well. One can take an n-dimensional Fourier transform of the FPK equation (7) and obtain the governing differential equation for the characteristic function \(P\left( {\varvec{\omega }} \right) \) corresponding to the PDF \(p\left( {{\varvec{x}}} \right) \), which is defined as \(P\left( {\varvec{\omega }} \right) ={{\mathcal {F}}}_x \left[ {p\left( {{\varvec{x}}} \right) } \right] \). This procedure is possible only if all the drift components \(a_i \left( {{\varvec{x}}} \right) \) are polynomials in the components of the state vector \({{\varvec{x}}}\). This condition is necessary for \(P\left( {\varvec{\omega }} \right) \) to be extractable from the Fourier transform of (7). The characteristic function of the assumed Gaussian PDF (9) is also Gaussian.

Substituting (10) in the Fourier transform of (7) leads to an algebraic equation in \({\varvec{\omega }} \) for which the unknowns are the n-by-1 vector \({\varvec{\mu }} \) and the n-by-n symmetric matrix \({\varvec{\Sigma }} \). In the Fourier space, to apply the Gaussian closure, it is not required to calculate the first- and second-order moments; instead, one can simply separate different orders of \(\omega _i \), eliminate third- and higher-order terms and set the coefficients of lower orders of \(\omega _i \) equal to zero. This results in a set of algebraic equations that can be solved for \({\varvec{\mu }} \) and \({\varvec{\Sigma }} \). Note that the solution of this method is the same as that of the standard Gaussian approximation method; the difference is in the simplicity of application.

A combination of these two methods is also possible. Instead of taking a full-state (n-dimensional) Fourier transform, one can take a partial-state Fourier transform and then calculate the moments for the remaining state variables. This hybrid procedure is utilized in the proposed method.

5 The proposed method

The Gaussian approximation method yields simple and relatively accurate approximations for weakly nonlinear systems, but large errors appear for strongly nonlinear systems. More accurate approximations may be achieved by moving from Gaussian to non-Gaussian closure schemes, but this greatly increases the complexity of the moment equations, especially for nonwhite excitations, when the noise state variables are augmented to the system state space. In this article, a novel quasi-Gaussian method is proposed that balances accuracy and simplicity.

Consider the FPK equation (8) which corresponds to the second-order dynamical system (1) with noise dynamics (2). For this system, \(p\left( {{{\varvec{x,u}}}} \right) \) defines the \(\left( {n+2} \right) \)-dimensional joint PDF. Also define \(p\left( {{\varvec{x}}} \right) \) as the joint PDF of the fundamental state vector \({{\varvec{x}}}=\left( {x_1 ,x_2 } \right) \). In practice, \(p\left( {{\varvec{x}}} \right) \) is of more interest than \(p\left( {{{\varvec{x,u}}}} \right) \). By definition, \(p\left( {{\varvec{x}}} \right) \) is the integral of \(p\left( {{{\varvec{x,u}}}} \right) \) over the whole \({{\varvec{u}}}\in \mathbb {R}^{n}\). Alternatively, one may calculate the n-dimensional Fourier transform \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) of \(p\left( {{{\varvec{x,u}}}} \right) \) with respect to \({{\varvec{u}}}\) and set \({\varvec{\omega }} =\mathbf{0 }\) to reach the desired joint PDF \(p\left( {{\varvec{x}}} \right) \):
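The relation between the marginal PDF and the partial Fourier transform can be written out explicitly. The sign convention of the exponent below is an assumption (the article's convention is not visible here), but the identity at \({\varvec{\omega }} =\mathbf{0 }\) holds for either sign.

```latex
% Partial Fourier transform over the noise states only (sign convention assumed):
P(\mathbf{x},\boldsymbol{\omega})
  = \int_{\mathbb{R}^{n}} p(\mathbf{x},\mathbf{u})\,
    e^{-\mathrm{i}\,\boldsymbol{\omega}^{\mathrm{T}}\mathbf{u}}\,\mathrm{d}\mathbf{u}
% Setting \boldsymbol{\omega} = \mathbf{0} integrates out \mathbf{u}:
p(\mathbf{x})
  = \int_{\mathbb{R}^{n}} p(\mathbf{x},\mathbf{u})\,\mathrm{d}\mathbf{u}
  = P(\mathbf{x},\boldsymbol{\omega})\Big|_{\boldsymbol{\omega}=\mathbf{0}}
```

Working with \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) therefore gives direct access to the marginal PDF of the fundamental states without an explicit integration over the noise states.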

In the Gaussian approximation method, \(p\left( {{{\varvec{x,u}}}} \right) \) is assumed to be Gaussian w.r.t all state variables \({{\varvec{x}}}\) and \({{\varvec{u}}}\). Then, \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) will also be Gaussian w.r.t \({\varvec{\omega }} \) as well as \({{\varvec{x}}}\). Finally, one can check that \(p\left( {{\varvec{x}}} \right) \) has a Gaussian shape in both \(x_1 \) and \(x_2 \).

A modification of the Gaussian approximation method can, however, improve the accuracy of the estimation of \(\langle x_1^2\rangle \) while preserving the adequate performance of the Gaussian approximation for other statistical properties, the most important of which is \(\langle x_2^2\rangle \).

In the proposed “quasi-Gaussian” method, we assume \(p\left( {{{\varvec{x,u}}}} \right) \) to be Gaussian w.r.t the noise state vector \({{\varvec{u}}}\), but not necessarily in the fundamental state vector \({{\varvec{x}}}\). Equivalently, \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) is assumed to be Gaussian w.r.t \({\varvec{\omega }} \) but not necessarily w.r.t \({{\varvec{x}}}\):

where \(\phi \left( {{\varvec{x}}} \right) \), \({{\varvec{q}}}\left( {{\varvec{x}}} \right) \) and \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \) are real scalar, vector and matrix functions of \({{\varvec{x}}}\), respectively, and \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \) is symmetric. Then, one can simply check that \(p\left( {{\varvec{x}}} \right) =\exp \left( {-\,\phi \left( {{\varvec{x}}} \right) } \right) \). The governing differential equation for \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) can be derived by taking the Fourier transform of Eq. (8):

Now, one can substitute the assumed shape (12) for \(P\left( {{{\varvec{x}}},{\varvec{\omega }} } \right) \) in (13). The result is an approximate partial differential equation in which the unknown functions are \(\phi \left( {{\varvec{x}}} \right) \), \({{\varvec{q}}}\left( {{\varvec{x}}} \right) \) and \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \), and the vector \({\varvec{\omega }} \) explicitly appears in the equation. This PDE cannot be satisfied for all values of \({\varvec{\omega }} \). Instead, following the second-order moment closure technique, one can write the Taylor expansion of the equation w.r.t. \({\varvec{\omega }} \) up to the second order and set the coefficients of \({\varvec{\omega }}^{0}\), \({\varvec{\omega }}^{1}\) and \({\varvec{\omega }}^{2}\) equal to zero (here \({\varvec{\omega }}^{n}\) is an abbreviated notation for \(\mathop \prod \nolimits _i \omega _i^{n_i } \) with \(n=\mathop \sum \nolimits _i n_i \)). This yields the following set of differential equations:

This set consists of one scalar, one vector and one matrix differential equation for the scalar, vector and matrix unknown functions \(\phi \left( {{\varvec{x}}} \right) \), \({{\varvec{q}}}\left( {{\varvec{x}}} \right) \) and \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \), respectively. The third equation in (14) states that the left-hand side of the equation has to be an anti-symmetric matrix. Since \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \) is symmetric, the number of equations matches the number of unknowns. However, finding the exact solution of (14) does not appear to be simpler than solving the original differential equation (13). Through further assumptions and approximations, the system (14) reduces to much simpler, solvable equations.

Assume the joint PDF to be Gaussian w.r.t \(x_{2}\) as well. This restricts the three functions \(\phi \left( {{\varvec{x}}} \right) \), \({{\varvec{q}}}\left( {{\varvec{x}}} \right) \) and \({{\varvec{Q}}}\left( {{\varvec{x}}} \right) \) to be of the following forms:

Substituting (15) in (14), three ordinary differential equations are obtained for six unknown functions, all of which are functions of the single variable \(x_1 \). Furthermore, all three equations are polynomials in \(x_2 \). These three differential equations cannot be satisfied for all values of \(x_2 \) and must be solved in an approximate manner. Consistent with the assumption that the PDF is Gaussian w.r.t. \(x_2 \) as well as \({\varvec{\omega }} \), moments of the equations w.r.t. \(x_2 \) up to the second order are set to zero. To this end, since the first (scalar) equation in (14) corresponds to \({\varvec{\omega }}^{0}\), its moments of the zeroth, first and second orders are set to zero. Denoting by \(\mathrm {eqn}\) the left-hand side of the first equation in (14), the zeroth-, first- and second-order moments are \(\mathop \int \nolimits _{-\infty }^\infty x_2^n \, \mathrm {eqn} \, \hbox {d}x_2 \), with n equal to zero, one and two, respectively. Similarly, since the second (vector) equation in (14) corresponds to \({\varvec{\omega }}^{1}\), its zeroth- and first-order moments are set to zero. For the third (matrix) equation in (14), only the zeroth-order moment is set to zero because it corresponds to \({\varvec{\omega }}^{2}\). This results in six ordinary differential equations for the six unknown functions \(\phi _0 \left( {x_1 } \right) \), \(\phi _1 \left( {x_1 } \right) \), \(\phi _2 \left( {x_1 } \right) \), \({{\varvec{q}}}_0 \left( {x_1 } \right) \), \({{\varvec{q}}}_1 \left( {x_1 } \right) \) and \({{\varvec{Q}}}_0 \left( {x_1 } \right) \).

Note that \(\phi _0^{\prime } \left( {x_1 } \right) \) denotes the derivative of \(\phi _0 \left( {x_1 } \right) \) w.r.t. \(x_1 \). The same notation is used for \(\phi _1^{\prime } \left( {x_1 } \right) \), \(\phi _2^{\prime } \left( {x_1 } \right) \), \({{\varvec{q}}}_0^{\prime } \left( {x_1 } \right) \) and \({{\varvec{q}}}_1^{\prime } \left( {x_1 } \right) \). In brief, we have assumed the joint PDF \(p\left( {{{\varvec{x,u}}}} \right) \) to be Gaussian w.r.t \({{\varvec{u}}}\) as well as \(x_2 \). The proposed method and the Gaussian approximation method differ from this point on. If one further assumes the PDF to also be Gaussian w.r.t \(x_1 \), the result will be identical to the Gaussian approximation method discussed in Sect. 4. For the PDF to be Gaussian w.r.t \(x_1 \), the unknown functions \({{\varvec{Q}}}_0 \left( {x_1 } \right) \), \({{\varvec{q}}}_1 \left( {x_1 } \right) \) and \(\phi _2 \left( {x_1 } \right) \) must be constants, \({{\varvec{q}}}_0 \left( {x_1 } \right) \) and \(\phi _1 \left( {x_1 } \right) \) must be multiples of \(x_1 \), and \(\phi _0 \left( {x_1 } \right) \) must be a multiple of \(x_1^2 \). Then, examining Eqs. (16) shows that \(\phi _1 \left( {x_1 } \right) =0\), which implies statistical independence of \(x_1 \) and \(x_2 \). After taking suitable moments w.r.t \(x_1 \) according to the Gaussian closure scheme, the system (16) reduces to a set of algebraic equations for the unknown parameters which can be solved either analytically or numerically.

However, the Gaussian approximation gives erroneous estimates of some statistical properties of the system, especially the second-order moment \(\langle x_1^2\rangle \). In fact, the largest distortion of the joint PDF from the Gaussian shape appears in the \(x_1 \) direction. One can check that, under the aforementioned assumptions, the behavior of the joint PDF \(p\left( {{{\varvec{x,u}}}} \right) \) along the \(x_1 \) axis is mainly controlled by the unknown function \(\phi _0 \left( {x_1 } \right) \). Therefore, a slight modification of the Gaussian approximation may give much better estimates of the desired second-order moment \(\langle x_1^2\rangle \). We therefore retain all the aforementioned Gaussian assumptions on the six unknown functions, except the assumption on \(\phi _0 \left( {x_1 } \right) \). The third equation in (16) is then used to completely define \(\phi _0 \left( {x_1 } \right) \), and the other five equations form a complete set of equations for the five remaining unknowns.

Let \(\phi _2 \left( {x_1 } \right) =\phi _2 \), \({{\varvec{q}}}_1 \left( {x_1 } \right) ={{\varvec{q}}}_1 \) and \({{\varvec{Q}}}_0 \left( {x_1 } \right) ={{\varvec{Q}}}_0 \) all be constant functions. Also assume \({{\varvec{q}}}_0 \left( {x_1 } \right) ={\bar{{{\varvec{q}}}}}_0 x_1 \) and \(\phi _1 \left( {x_1 } \right) ={\bar{\phi }}_1 x_1 \) as in the Gaussian closure technique. Then, applying the Gaussian closure technique to the six equations in (16) except the third one, the following set of algebraic equations is obtained and has to be solved for \(\phi _2 \), \({\bar{{{\varvec{q}}}}}_0 \), \({{\varvec{q}}}_1 \) and \({{\varvec{Q}}}_0 \) (note that \(\bar{\phi }_1\) is zero).

The third equation in (16) can then be solved for \(\phi _0 \left( {x_1 } \right) \) as:

where \(U\left( {x_1 } \right) \) is the potential function corresponding to \(f\left( {x_1 } \right) \), i.e., \(f\left( {x_1 } \right) =\hbox {d}U\left( {x_1 } \right) /\hbox {d}x_1 \). Borrowing from the statistical linearization method, the term \(\langle x_1 f\left( {x_1 } \right) \rangle /\langle x_1^2\rangle \) can be called the equivalent linear stiffness, denoted by \(k_{eq} \), and can be calculated as follows:

Therefore, the numerical value of \(k_{eq} \) can be calculated by an iterative procedure.
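One way such an iteration could be organized is as a fixed-point loop on \(k_{eq} =\langle x_1 f\left( {x_1 } \right) \rangle /\langle x_1^2\rangle \), with the averages taken over \(p\left( {x_1 } \right) \propto \exp \left( -\phi _0 \left( {x_1 } \right) \right) \). The sketch below is only a skeleton: it assumes \(\phi _0 \left( {x_1 } \right) =c\left( {k_{eq} } \right) U\left( {x_1 } \right) \), and the coefficient returned by `placeholder_coeff` is a hypothetical stand-in chosen to reduce to \(\gamma /D_0 \) at \(\tau =0\). It is not the article's expression for \(\phi _0 \), and the names `solve_keq` and `placeholder_coeff` are likewise assumptions.

```python
import numpy as np


def solve_keq(f, potential, coeff, k_init=1.0, tol=1e-10, max_iter=200,
              half_width=6.0, n_grid=20001):
    """Fixed-point iteration k <- <x f(x)> / <x^2> with p(x) ~ exp(-coeff(k) * U(x))."""
    x = np.linspace(-half_width, half_width, n_grid)
    u = potential(x)
    u = u - u.min()  # constant shift; cancels in the ratio below
    fx = f(x)
    k = k_init
    for _ in range(max_iter):
        weight = np.exp(-coeff(k) * u)  # unnormalized p(x1) for the current k
        k_new = float(np.sum(x * fx * weight) / np.sum(x * x * weight))
        if abs(k_new - k) < tol:
            return k_new
        k = k_new
    raise RuntimeError("fixed-point iteration did not converge")


def placeholder_coeff(gamma, d0, tau):
    """HYPOTHETICAL dependence of phi_0 on k_eq, used only to exercise the loop."""
    return lambda k: (gamma / d0) * (1.0 + gamma * tau + k * tau ** 2) / (1.0 + gamma * tau)
```

For a linear stiffness \(f\left( {x_1 } \right) =k\, x_1 \) the loop returns \(k\) after one update regardless of the coefficient, which is a convenient sanity check before substituting the actual expression for \(\phi _0 \left( {x_1 } \right) \).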

Two different noise models are investigated in the following sections to demonstrate the performance of the proposed method. Note that in the case of white noise excitation, the proposed method gives the exact solution for the joint PDF and hence for the desired second-order moments \(\langle x_1^2\rangle \) and \(\langle x_2^2\rangle \).

6 Case study I: noise with first-order dynamics

As a special case, consider the model (3) for the random excitation with first-order dynamics (Fig. 3). For this system \(L=\tau \), \(b=1\) and \(D=D_0 \) as presented in Eq. (3). The solution for the unknown function \(\phi _0 \left( {x_1 } \right) \) and the unknown constant \(\phi _2 \) according to the proposed method are as follows:

In particular, at \(\tau =0\), the solution reduces to the well-known probability density function for a second-order SDE with nonlinear stiffness and white noise excitation. On the other hand, for linear stiffness \(f\left( {x_1 } \right) =k\, x_1 \), the solution coincides with the exact Gaussian solution.

The results for \(\tau =0.1\) and \(\gamma =0.04\) and a bistable Duffing-type stiffness \(f\left( {x_1 } \right) =k_1 x_1 +k_3 x_1^3 \) with \(k_1 =-\,1\) and \(k_3 =1\) are shown in Fig. 4. In this figure, the results from the Gaussian approximation and the proposed method are compared with the Monte Carlo simulations. The estimates of the mean square velocity \(\langle x_2^2\rangle \) have similar accuracy in both methods. However, the proposed method gives much more accurate approximations of the mean square displacement \(\langle x_1^2\rangle \) than the Gaussian method.
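A Monte Carlo reference of the kind used in this comparison can be sketched as follows. The code assumes the system form \(\dot{x}_1 =x_2 \), \(\dot{x}_2 =-\gamma x_2 -k_1 x_1 -k_3 x_1^3 +\nu \) and the noise normalization \(\tau \,\mathrm{d}\nu =-\nu \,\mathrm{d}t+\sqrt{2D_0 }\,\mathrm{d}W\); both are assumptions of this sketch, as are the function name, the integration scheme (semi-implicit Euler for the oscillator, exact update for the noise) and the default numerical settings.

```python
import numpy as np


def monte_carlo_moments(k1, k3, gamma, tau, d0, dt=0.01, t_end=200.0,
                        t_burn=50.0, n_paths=400, seed=0):
    """Estimate (<x1^2>, <x2^2>) for a Duffing oscillator driven by OU noise."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_end / dt))
    n_burn = int(round(t_burn / dt))
    decay = np.exp(-dt / tau)                        # exact OU decay per step
    kick = np.sqrt((d0 / tau) * (1.0 - decay ** 2))  # exact OU noise per step
    x1 = np.zeros(n_paths)
    x2 = np.zeros(n_paths)
    nu = rng.normal(0.0, np.sqrt(d0 / tau), n_paths)  # stationary initial noise
    sum_x1_sq = 0.0
    sum_x2_sq = 0.0
    for step in range(n_steps):
        # Semi-implicit Euler: update velocity first, then position with the
        # new velocity, which limits spurious energy drift at small damping.
        x2 += (-gamma * x2 - k1 * x1 - k3 * x1 ** 3 + nu) * dt
        x1 += x2 * dt
        nu = decay * nu + kick * rng.standard_normal(n_paths)
        if step >= n_burn:  # discard the transient before averaging
            sum_x1_sq += np.mean(x1 ** 2)
            sum_x2_sq += np.mean(x2 ** 2)
    n_avg = n_steps - n_burn
    return float(sum_x1_sq / n_avg), float(sum_x2_sq / n_avg)
```

For the lightly damped bistable case (\(\gamma =0.04\)), the relaxation time is long, so `t_end` and `t_burn` would need to be considerably larger than the defaults. A linear stiffness, for which the second-order moments are known in closed form, is a useful check of the implementation.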

7 Case study II: noise with second-order dynamics

Many environmental excitations are band-limited. Band-limited excitations can be approximately modeled as white noise signals passed through a second-order filter. Mathematically, the model is characterized by the noise center frequency \(\omega _\nu \), the damping ratio \(\zeta _\nu \) and the amplitude of the white noise \(D_0 \). Then, the parameters in the state-space model (2) for the excitation are as follows:
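The band-limited character of such a noise model can be seen from its power spectral density. The sketch below assumes a unit-DC-gain filter \(\ddot{\nu }+2\zeta _\nu \omega _\nu \dot{\nu }+\omega _\nu ^2 \nu =\omega _\nu ^2 w\left( t \right) \) driven by white noise with two-sided spectral level \(2D_0 \). The filter normalization and the function names are assumptions and may differ from the state-space parameters used in the article.

```python
import numpy as np


def second_order_noise_psd(omega, omega_nu, zeta_nu, d0):
    """PSD of white noise (level 2*d0, assumed) passed through a second-order filter."""
    gain_sq = omega_nu ** 4 / (
        (omega_nu ** 2 - omega ** 2) ** 2 + (2.0 * zeta_nu * omega_nu * omega) ** 2
    )
    return 2.0 * d0 * gain_sq


def psd_peak_frequency(omega_nu, zeta_nu):
    """Frequency at which the PSD peaks; valid for zeta_nu < 1/sqrt(2)."""
    return omega_nu * np.sqrt(1.0 - 2.0 * zeta_nu ** 2)


if __name__ == "__main__":
    omega = np.linspace(0.0, 3.0, 3001)
    for omega_nu, zeta_nu in ((1.0, 0.2), (1.5, 0.1)):
        psd = second_order_noise_psd(omega, omega_nu, zeta_nu, d0=1.0)
        print(omega_nu, zeta_nu, omega[np.argmax(psd)], psd_peak_frequency(omega_nu, zeta_nu))
```

For small damping ratios the peak lies just below the center frequency \(\omega _\nu \), and the bandwidth narrows as \(\zeta _\nu \) decreases.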

Defining \(\gamma _\nu =2\zeta _\nu \omega _\nu \), the solution for \(\phi _2 \) and \(\phi _0 \left( {x_1 } \right) \) is as follows:

One can check that for linear stiffness \(f\left( {x_1 } \right) =k\, x_1 \), this solution will be the same as the exact Gaussian solution.

The results for \(\omega _\nu =1.0,\, \, \zeta _\nu =0.2\) and \(\omega _\nu =1.5,\, \, \zeta _\nu =0.1\) are presented in Figs. 5 and 6, respectively. The mechanical parameters \(k_1 \), \(k_3 \) and \(\gamma \) are the same as in the first case study. Again, the results from the Gaussian approximation and the proposed method are compared with the Monte Carlo simulations. In both figures, the proposed method estimates \(\langle x_1^2\rangle \) considerably more accurately than the Gaussian approximation method. For \(\langle x_2^2\rangle \), the proposed method is no more accurate than the Gaussian approximation method. In fact, the proposed method modifies the Gaussian approximation method so as to deal with the essential nonlinearity in the \(x_1 \) direction and provide better approximations for \(\langle x_1^2\rangle \), for which the Gaussian approximation gives erroneous estimates. The joint PDF of the system in the \(x_2 \) direction, on the other hand, is close to Gaussian, so the Gaussian approximation already gives good estimates of moments in this direction and the proposed method does not improve on them.

8 Conclusion

A quasi-Gaussian moment closure method was introduced in this paper. The method was applied to a Duffing oscillator with highly nonlinear (bistable) stiffness and a small damping coefficient, subject to colored noise excitation. Random excitations with both first-order and second-order dynamics were considered. The results show improved accuracy for the proposed method in estimating the mean square displacement compared to the Gaussian approximation method.

In this paper, the proposed method was applied only to a special type of SDE, namely the Duffing-type oscillator excited by colored Gaussian noise with first- and second-order dynamics. However, the formulation in Eqs. (17) and (18) is general enough to be used for any shape of the stiffness function and any order of the noise dynamics.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Makarem, H., Pishkenari, H.N. & Vossoughi, G.R. A quasi-Gaussian approximation method for the Duffing oscillator with colored additive random excitation. Nonlinear Dyn 96, 825–835 (2019). https://doi.org/10.1007/s11071-019-04824-x
