Abstract

The fractional-order derivative is a powerful and promising concept for describing many physical phenomena owing to its heredity/memory feature. This paper aims to establish a general methodology for parameter identification of nonlinear fractional-order systems based on time domain response data and sensitivity analysis. The development of the enhanced response sensitivity approach is threefold. Firstly, a computational scheme based on the Adams-type discretization and the Newmark-\(\beta \) method is presented to obtain the numerical solution of nonlinear fractional-order systems. Thereafter, a hybrid strategy is developed for the sensitivity analysis, in which the sensitivity to the fractional-order parameters is obtained by finite difference calculation, while the sensitivity to the other parameters is obtained by direct differentiation. Secondly, the trust-region constraint is incorporated into the response sensitivity approach, and as a result, weak convergence is achieved. Thirdly, the optimal choice of the weight matrix within the framework of the response sensitivity approach is derived by minimizing the identification error, and the inverse of the measurement error covariance is found to be the optimal weight matrix. Numerical examples are conducted to demonstrate the feasibility and efficiency of the present approach for parameter identification of nonlinear fractional-order systems, and to confirm the improvement in identification accuracy brought about by the optimal weight matrix.


1 Introduction

Fractional-order derivatives are the theoretical foundation of fractional-order dynamical systems and have a history as long as that of calculus [1]. Compared with integer-order derivatives, fractional-order derivatives are much more complex in both definition and numerical calculation. Nevertheless, owing to their simple form in modeling and their heredity/memory feature [2, 3], fractional-order derivatives have been widely used in many scientific and engineering systems, such as viscoelastic systems [4], electrode–electrolyte polarization [5], signal processing [6], quantitative finance [7] and electromagnetic waves [8]. Before such fractional-order systems can be analyzed and designed, their parameters, including the fractional-order parameters and the other system parameters, must be calibrated or identified; this is the goal of this work.

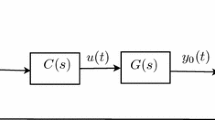

Classically, identifying or calibrating the parameters of fractional-order systems from measured response data is an inverse problem. Following the general idea, the parameter identification problem can be modeled as a nonlinear least-squares optimization problem whose objective function is the weighted sum of squares of the misfit between the measured and calculated response data. Over the years, two main categories of methods have been developed to solve this optimization problem: meta-heuristic algorithms and gradient-based methods.

Meta-heuristic algorithms, such as the genetic algorithm [9, 10], the artificial bee colony algorithm [11], the hybrid stochastic fractal search algorithm [12] and the particle swarm optimization algorithm [13], have been widely used in many optimization problems, including parameter identification of fractional-order systems [12, 13]. In such algorithms, only forward problems need to be solved and no sensitivity analysis is involved [12], which makes the whole identification procedure very simple. Moreover, these algorithms do not require a carefully chosen initial guess, and the global minimum can often be reached. However, owing to their random search nature, meta-heuristic algorithms typically require a considerable number of iterations and therefore converge slowly. For parameter identification of fractional-order systems in particular, slow convergence leads to prohibitively high computational cost, because even a single forward problem is costly to solve.

In contrast, much faster convergence is often achieved by gradient-based methods, which involve the gradient or, more specifically, sensitivity analysis. Nevertheless, owing to the complexity of the sensitivity analysis, few publications address parameter identification of fractional-order systems using gradient-based methods. On the one hand, for linear fractional-order systems, the frequency response function describes the system much more simply than the dynamic equations do, so frequency response data were often preferred for establishing the objective function [14, 15]. Based on this objective function, Poinot and Trigeassou [14] proposed using the Levenberg–Marquardt algorithm [16], which requires only first-order sensitivity analysis, to obtain the solution; Leyden and Goodwine [15] directly applied the sequential quadratic programming algorithm to identify the parameters by simply calling the fmincon function in MATLAB. On the other hand, for nonlinear fractional-order systems, only time domain response data can be used. To this end, Mani et al. [17] defined the objective function as the least squares of the error in the dynamical equation and, in view of the complexity of the fractional-order derivative term, developed a two-step iteration algorithm to identify the parameters. Specifically, each iteration involves two steps: in the first step, the parameters other than the fractional-order parameters are quickly determined from the measured data and the assumed fractional-order parameters, while in the second step, the fractional-order parameters are estimated through a gradient search procedure. However, the two-step algorithm requires all state variables to be measured and requires the system to depend linearly on the parameters other than the fractional-order parameters.
As a consequence, new gradient-based methods for parameter identification of general nonlinear fractional-order systems with partial time domain measurements are still in demand.

In this paper, the focus is on establishing a general methodology for parameter identification of nonlinear fractional-order systems. Before doing so, some properties of nonlinear fractional-order systems are summarized below:

-

For nonlinear systems, the Laplace/Fourier transform cannot be applied as simply and straightforwardly as for linear systems. Thus, time domain response data rather than frequency response data should be used for parameter identification.

-

For nonlinear fractional-order systems, the forward problem must be solved numerically at considerable computational cost; therefore, gradient-based methods rather than meta-heuristic algorithms are preferred for parameter identification.

-

The fractional-order derivative term is much more complex than integer-order derivatives; therefore, special attention should be paid to the sensitivity analysis with respect to the fractional-order parameters when using gradient-based methods.

In view of these properties, the enhanced response sensitivity approach, originally proposed and developed by Lu and his co-workers [18, 19] for damage identification, is found to be suitable for parameter identification of nonlinear fractional-order systems, for the following reasons:

-

The enhanced response sensitivity approach belongs to the class of gradient-based methods and has been shown to converge quickly in damage identification [19].

-

Unlike the Newton method, which involves second-order sensitivity, the enhanced response sensitivity approach requires only first-order sensitivity analysis, which makes it simpler and computationally cheaper than the Newton method.

-

The enhanced response sensitivity approach has been proved to be weakly convergent for parameter identification problems [19].

In fact, the enhanced response sensitivity approach has been successfully used for parameter identification of chaotic/hyperchaotic systems [20] and nonlinear hysteretic systems [21], which suggests that it is a feasible tool for parameter identification of nonlinear fractional-order systems. The main contributions of this work are threefold:

-

Firstly, a new and practical hybrid strategy for sensitivity analysis of nonlinear fractional-order systems is developed, in which the sensitivity to the fractional-order parameters is calculated by finite differences, while the sensitivity to the other parameters is obtained by direct differentiation.

-

Secondly, a generic framework for parameter identification of nonlinear fractional-order systems is established based on the sensitivity analysis. This framework applies to general linear/nonlinear fractional-order systems and to arbitrary types of measurements, e.g., partial time domain measurements.

-

Thirdly, the optimal choice of the weight matrix for general nonlinear parameter identification, namely the inverse of the measurement error covariance, is derived theoretically by minimizing the expected squared identification error.

The remainder of this paper is structured as follows. In Sect. 2, a numerical scheme based on the Adams-type discretization and the Newmark-\(\beta \) method is presented for the numerical solution of nonlinear fractional-order systems. In Sect. 3, the identification of the parameters from time domain response data by the enhanced response sensitivity approach is elaborated; a hybrid strategy is developed for the sensitivity analysis, and the optimal choice of the weight matrix is derived theoretically. Numerical examples are studied in Sect. 4, and conclusions are drawn in Sect. 5.

2 Formulation of forward problem

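Consider a typical nonlinear fractional-order system over the time interval [0, T] whose governing equation is

$$\begin{aligned} {\left\{ \begin{array}{ll} {\varvec{M}}\ddot{{\varvec{q}}}+{\varvec{C D}}^{{\varvec{\alpha }}}{\varvec{q}}+{\varvec{K}}{\varvec{q}}+{\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )={\varvec{P}}(t), \quad t\in [0,T]\\ {\varvec{q}}(0)={\varvec{q}}_0, \quad \dot{{\varvec{q}}}(0)=\dot{{\varvec{q}}}_0 \end{array}\right. } \end{aligned}$$(1)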

where

-

\({\varvec{M}}, {\varvec{C}}, {\varvec{K}}\) and \({\varvec{\xi }}\) represent the mass matrix, the damping matrix, the stiffness matrix and the parameters in the nonlinear term \({\varvec{F\big (\xi , q, \dot{q}\big )}}\), respectively, and \({\varvec{P}}(t)\) denotes the external load vector;

-

\({\varvec{q}}=[q_1;q_2; \ldots ;q_N],\dot{{\varvec{q}}},\ddot{{\varvec{q}}}\) are the displacements, velocities and accelerations, respectively, and \({\varvec{q}}_0,\dot{{\varvec{q}}}_0\) are the initial displacements and velocities;

-

\({\varvec{\alpha }}=[\alpha _1;\alpha _2; \ldots ;\alpha _l]\) are the fractional-order parameters and \({\varvec{D^{\alpha }q}}\) is the fractional-order derivative vector, which has been shown to have a damping effect. Herein, the Caputo fractional-order derivative is used, so that for \(0<\alpha <1\), \(D^{\alpha }q\) is defined as

$$\begin{aligned} D^{\alpha }q=\frac{1}{\varGamma (1-\alpha )}\int \nolimits _0^t \frac{\dot{q}(\tau )}{(t-\tau )^{\alpha }} \mathrm {d} \tau . \end{aligned}$$(2)
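Because the kernel \((t-\tau )^{-\alpha }\) in Eq. (2) is weakly singular, Eq. (2) is usually evaluated by product integration: the velocity is approximated piecewise and the kernel is integrated exactly over each time step. The Python sketch below illustrates this idea on a uniform grid, assuming a velocity that is constant on each step and equal to the average of its end values. This particular rule and the function name `caputo_product_rule` are illustrative choices and not necessarily the Adams-type coefficients \(c_{i,n}\) used in the paper. The usage lines compare the result with the exact value \(D^{\alpha }t^2=2t^{2-\alpha }/\varGamma (3-\alpha )\).

```python
import math

import numpy as np


def caputo_product_rule(v, dt, alpha):
    """Approximate the Caputo derivative D^alpha q(t_n), 0 < alpha < 1.

    v[i] holds the velocity qdot(t_i) on the uniform grid t_i = i * dt.
    On each step [t_{i-1}, t_i] the velocity is replaced by the average
    (v[i-1] + v[i]) / 2, and the kernel (t_n - tau)^(-alpha) is integrated
    exactly. Returns D with D[n] ~ D^alpha q(t_n) and D[0] = 0.
    """
    n_steps = len(v) - 1
    j = np.arange(n_steps, dtype=float)
    # b_j: exact kernel integral over a step lying j steps before t_n (scaled)
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)
    coef = dt ** (1.0 - alpha) / math.gamma(2.0 - alpha)
    vbar = 0.5 * (v[:-1] + v[1:])  # vbar[i-1]: average velocity on step i
    D = np.zeros(n_steps + 1)
    for n in range(1, n_steps + 1):
        # step i (1..n) carries weight b_{n-i}
        D[n] = coef * np.dot(b[:n][::-1], vbar[:n])
    return D


# Usage: q(t) = t^2, so qdot = 2t and D^alpha q = 2 t^(2-alpha) / Gamma(3-alpha)
t = np.linspace(0.0, 2.0, 2001)
alpha = 0.5
D = caputo_product_rule(2.0 * t, t[1] - t[0], alpha)
exact = 2.0 * t ** (2.0 - alpha) / math.gamma(3.0 - alpha)
print(abs(D[-1] - exact[-1]))
```

Collecting the weights that multiply each \(\dot{q}_i\) turns such a rule into the form \(\sum _i c_{i,n}\dot{q}_i\) used in Sect. 2.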

For parameter identification of system (1) in this paper, the parameters \({\varvec{\chi }}=[\chi _1;\chi _2; \ldots ;\chi _m] \in \mathcal {P}\), with \(\mathcal {P}\) being the admissible parameter space, can contain the fractional-order parameters \({\varvec{\alpha }}\), the nonlinear term parameters \({\varvec{\xi }}\), the parameters in \({\varvec{M,C,K}}\) and even those in the load \({\varvec{P}}(t)\). Owing to the complex definition of the fractional-order derivative in Eq. (2), the fractional-order parameters \({\varvec{\alpha }}\) require particular attention in parameter identification; therefore, the parameters are partitioned as \({\varvec{\chi }}=[\hat{{\varvec{\chi }}};{\varvec{\alpha }}]\), where \(\hat{{\varvec{\chi }}}\) collects the parameters other than the fractional-order parameters.

Remark 1

Some nonlinear fractional-order systems are given here as examples. The simplest nonlinear fractional-order system in the form of Eq. (1) is the Duffing oscillator [17], which has a nonlinearity in the stiffness, that is,

In addition, the van der Pol oscillator is a self-excited system with nonlinearity in the damping, leading to

Combining the above two, the forced van der Pol–Duffing oscillator is obtained:

In the above oscillators, the fractional-order derivative term appears in the damping; nevertheless, it can also appear in the acceleration term, e.g., in the extended van der Pol oscillator

It is noteworthy that only the Duffing oscillator fits the form (1) in this paper; nevertheless, analysis and parameter identification of the other forms of nonlinear fractional-order systems, e.g., (4)–(6), can be carried out likewise.

For the nonlinear fractional-order system (1), numerical methods are needed to obtain an approximate solution. To do so, the time interval is first discretized by the nodes \(0=t_0<t_1< \cdots <t_l=T\), and then \({\varvec{q}}_n={\varvec{q}}(t_n),\dot{{\varvec{q}}}_n=\dot{{\varvec{q}}}(t_n), \ddot{{\varvec{q}}}_n=\ddot{{\varvec{q}}}(t_n)\) are derived successively from \({\varvec{q}}_{n-1},\dot{{\varvec{q}}}_{n-1},\ddot{{\varvec{q}}}_{n-1}\). Following the general idea of the Newmark-\(\beta \) method, one has

where \(\beta \) and \(\gamma \) are the two parameters of the Newmark-\(\beta \) method and \(\Delta t=t_n-t_{n-1}\) is the time step size. One difficulty in the numerical solution of nonlinear fractional-order systems lies in the numerical treatment of the fractional-order derivative term \(D^{\alpha }q\) in Eq. (2), for which an Adams-type discretization scheme is commonly used:

where \(\dot{q}_i=\dot{q}(t_i)\) and \(c_{i,n}\) is the quadrature coefficient with the following form

To close the system, Eq. (1) is additionally enforced at time \(t_n\), that is,

Combining Eqs. (7), (8) and (10) leads to a nonlinear equation in \({\varvec{q_n}}\), which can be solved by the Newton–Raphson iteration method. It should be pointed out that this strategy requires a suitable initial guess for \({\varvec{q_n}}\). As an alternative to the Newton–Raphson iteration method, the nonlinear term \({\varvec{F}}\big ({\varvec{\xi }}, {\varvec{q}}_n, \dot{{\varvec{q}}}_n\big )\) can be linearized by Taylor expansion, i.e.,

Then, using (11), \({\varvec{q_n}}\) is obtained by directly solving a linear equation. The Taylor expansion makes the solution easy to obtain; however, the accuracy may be substantially degraded, so the time step size must be sufficiently small.
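To make the time stepping concrete, the Python sketch below solves the SDOF fractional Duffing equation \(m\ddot{q}+\mu D^{\alpha }q+k_1q+k_2q^3=p(t)\) with the Newmark-\(\beta \) update, a product-integration rule for \(D^{\alpha }q\) and Newton–Raphson iteration on the unknown acceleration \(\ddot{q}_n\) (equivalent to iterating on \(q_n\), since Newmark relates the two linearly). The quadrature rule, which averages the velocity over each step, is an assumption standing in for the paper's coefficients \(c_{i,n}\); the function and argument names are hypothetical.

```python
import math

import numpy as np


def solve_fractional_duffing(m, mu, k1, k2, alpha, load, q0, v0, T, dt,
                             beta=0.25, gamma=0.5, tol=1e-12, max_newton=50):
    """Sketch: m*a + mu*D^alpha q + k1*q + k2*q**3 = load(t), 0 < alpha < 1."""
    n_steps = int(round(T / dt))
    t = dt * np.arange(n_steps + 1)
    q, v, a = np.zeros(n_steps + 1), np.zeros(n_steps + 1), np.zeros(n_steps + 1)
    q[0], v[0] = q0, v0
    # The Caputo derivative vanishes at t = 0, so Eq. (1) gives a_0 directly
    a[0] = (load(0.0) - k1 * q0 - k2 * q0 ** 3) / m
    j = np.arange(n_steps, dtype=float)
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)   # kernel weights
    coef = dt ** (1.0 - alpha) / math.gamma(2.0 - alpha)
    w_n = 0.5 * coef * b[0]          # weight of the unknown v_n in D^alpha q(t_n)
    for n in range(1, n_steps + 1):
        # Memory (history) part of D^alpha q(t_n): all terms except w_n * v_n
        vbar = 0.5 * (v[:n - 1] + v[1:n])                 # steps 1..n-1
        hist = coef * np.dot(b[n - 1:0:-1], vbar) + w_n * v[n - 1]
        # Newmark predictors (terms that do not involve a_n)
        q_pred = q[n - 1] + dt * v[n - 1] + (0.5 - beta) * dt ** 2 * a[n - 1]
        v_pred = v[n - 1] + (1.0 - gamma) * dt * a[n - 1]
        p_n = load(t[n])
        an = a[n - 1]                                     # initial guess
        for _ in range(max_newton):                       # Newton-Raphson on a_n
            qn = q_pred + beta * dt ** 2 * an
            vn = v_pred + gamma * dt * an
            res = m * an + mu * (hist + w_n * vn) + k1 * qn + k2 * qn ** 3 - p_n
            jac = (m + mu * w_n * gamma * dt
                   + (k1 + 3.0 * k2 * qn ** 2) * beta * dt ** 2)
            step = res / jac
            an -= step
            if abs(step) <= tol * (1.0 + abs(an)):
                break
        a[n] = an
        q[n] = q_pred + beta * dt ** 2 * an
        v[n] = v_pred + gamma * dt * an
    return t, q, v, a
```

The memory sum makes every step cost O(n), so a forward solve over N steps costs O(N²) operations, which is one reason why forward solves dominate the cost of identification. With the load of Example 1 in Sect. 4.1 (mass term written as \(-m\omega ^2\sin (\omega t)\)), the computed \(q(t)\) can be compared directly with \(\sin (\omega t)\).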

Remark 2

The above numerical solution assumes \(0<\alpha <1\) for the fractional-order parameter; if \(1<\alpha <2\), the fractional derivative \(D^{\alpha }q\) takes another form,

and the numerical solution can then be obtained likewise by replacing Eq. (8) with the following Adams-type discretization scheme

where the quadrature coefficient \(d_{i,n}\) has the following form

3 Parameter identification of nonlinear fractional-order systems

3.1 Inverse problem formulation

In the inverse problem, the parameters \({\varvec{\chi }}\) are identified from measured time domain response data, which may contain displacement and/or acceleration responses. Assume that the measured time domain response data at the sampling time nodes \(0=t_0<t_1< \cdots <t_l=T\) are collected as

where \(\hat{q}_j(t_i),\hat{\ddot{q}}_k(t_i)\) denote the measured jth displacement and kth acceleration at time \(t_i\), and \(S_q,S_{\ddot{q}}\) collect all measured displacement and acceleration DOFs. From the forward problem (1) and its numerical solution procedure, the solution is an implicit function of time and the parameters, i.e., \({\varvec{q}}={\varvec{q}}(t,{\varvec{\chi }})\). Accordingly, the calculated data corresponding to the measured data, obtained from the governing Eq. (1) under given parameters \({\varvec{\chi }}\), are also an implicit function of \({\varvec{\chi }}\):

With the above preparation, the parameter identification problem can be stated as follows: determine the parameters \({\varvec{\chi }} \in \mathcal {P}\) that minimize the misfit between the measured data (15) and the calculated data (16), or, mathematically, find the solution \({\varvec{\chi }}^* \in \mathcal {P}\) of the following weighted nonlinear least-squares optimization problem,

where \(\hbar ({\varvec{\chi }})\) is the objective function, \(||(\cdot )||_{{\varvec{W}}}=\sqrt{(\cdot )^T{{\varvec{W}}}(\cdot )}\) and \({\varvec{W}}\) is a user-defined positive definite weight matrix. Problem (17) is a typical nonlinear optimization problem, and it is solved here by the enhanced response sensitivity approach [19], which has been shown to be suitable for this kind of problem. To this end, the following three aspects are clarified for completeness:

1. First, the sensitivity analysis of the nonlinear fractional-order system (1) is conducted to obtain the sensitivity matrix.

2. Next, the trust-region constraint is introduced along with the sensitivity matrix to obtain the solution \({\varvec{\chi }}^*\) and to enhance the convergence property.

3. Finally, based on the above solution, the optimal choice of the weight matrix \({\varvec{W}}\) is derived to minimize the expected squared error of the parameters identified from noisy response data.

These three aspects are analyzed in detail in Sects. 3.2, 3.3 and 3.4, respectively.

3.2 Time domain sensitivity analysis

Sensitivity analysis is indispensable for solving the inverse problem (17) by gradient-based methods, including the enhanced response sensitivity approach. In this paper, the response sensitivities with respect to the parameters

are obtained through a hybrid strategy, as follows:

-

For the sensitivity to the fractional-order parameters \({\alpha }_i \in {\varvec{\alpha }} \subset {\varvec{\chi }}\), direct differentiation of the fractional-order derivative term \(D^{\alpha _i} q\) with respect to \(\alpha _i\) is very complex and would incur prohibitively high computational cost. Instead, a finite difference scheme is used herein to approximate the sensitivity to the fractional-order parameters. Specifically, let \({\varvec{\alpha }}_{\epsilon ,i}\) denote the parameters obtained by a small perturbation of \({\varvec{\alpha }}\) in which \({\alpha }_i \in {\varvec{\alpha }}\) is perturbed to \({\alpha }_i+\epsilon \in {\varvec{\alpha }}_{\epsilon ,i}\) while the others remain unchanged. Then, the sensitivity is obtained by the central difference

$$\begin{aligned} \frac{\partial {\varvec{q}}}{\partial {\alpha }_i}= & {} \frac{{\varvec{q}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{\epsilon ,i}]) -{\varvec{q}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{-\epsilon ,i}])}{2\epsilon },\nonumber \\ \frac{\partial \ddot{{\varvec{q}}}}{\partial {\alpha }_i}= & {} \frac{\ddot{{\varvec{q}}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{\epsilon ,i}])-\ddot{{\varvec{q}}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{-\epsilon ,i}])}{2\epsilon } \end{aligned}$$(19)

where \(\epsilon \) is a sufficiently small positive number and \({\varvec{q}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{\epsilon ,i}]),\ddot{{\varvec{q}}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{\epsilon ,i}])\) (resp. \({\varvec{q}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{-\epsilon ,i}])\), \(\ddot{{\varvec{q}}}(t,[\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{-\epsilon ,i}])\)) are obtained by solving the forward problem (1) under the parameters \([\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{\epsilon ,i}]\) (resp. \([\hat{{\varvec{\chi }}};{\varvec{\alpha }}_{-\epsilon ,i}]\)). In this paper, \(\epsilon =0.001\) is used.

-

For the sensitivity to the other parameters \(\chi _i \in \hat{{\varvec{\chi }}} \subset {\varvec{\chi }}\), direct differentiation of Eq. (1) with respect to \(\chi _i\) leads to

$$\begin{aligned} {\left\{ \begin{array}{ll} \begin{aligned} &{} {\varvec{M}}\frac{\partial \ddot{{\varvec{q}}}}{\partial \chi _i}+{\varvec{C D}}^{{\varvec{\alpha }}}\frac{\partial {\varvec{q}}}{\partial \chi _i}+{\varvec{K}}\frac{\partial {\varvec{q}}}{\partial \chi _i}+\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial \dot{{\varvec{q}}}} \frac{\partial \dot{{\varvec{q}}}}{\partial \chi _i}\\ &{}\quad +\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial {\varvec{q}}} \frac{\partial {\varvec{q}}}{\partial \chi _i} \\ &{} \quad =\frac{\partial {\varvec{P}}(t)}{\partial \chi _i} -\bigg (\frac{\partial {\varvec{M}}}{\partial \chi _i}\ddot{{\varvec{q}}}+\frac{\partial {\varvec{C}}}{\partial \chi _i}{\varvec{D^{\alpha }q}}+\frac{\partial {\varvec{K}}}{\partial \chi _i} {\varvec{q}}\\ &{}\qquad +\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial \chi _i}\bigg ) \\ &{} \frac{\partial {\varvec{q}}}{\partial \chi _i}(0)={\varvec{0}}, \frac{\partial \dot{{\varvec{q}}}}{\partial \chi _i}(0)={\varvec{0}} \end{aligned} \end{array}\right. } \end{aligned}$$(20)

where \(\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial \dot{{\varvec{q}}}}=[\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial \dot{{q}}_1}, \ldots ,\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial \dot{{q}}_N}]\) and \(\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial {{\varvec{q}}}}=[\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial {{q}}_1}, \ldots ,\frac{\partial {\varvec{F}}\big ({\varvec{\xi , q, \dot{q}}}\big )}{\partial {{q}}_N}]\). Sensitivity Eq. (20) is again a fractional-order differential equation; it is linear in the sensitivities, with coefficients that depend on the response \({\varvec{q}}(t)\), and it can be solved in the same way as described in Sect. 2.
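The hybrid strategy amounts to assembling the sensitivity matrix column by column, using Eq. (19) for the fractional orders and Eq. (20) for the rest. In the Python sketch below, `response` (one forward solve returning the calculated data vector) and `direct_columns` (columns obtained, e.g., by integrating Eq. (20)) are hypothetical callbacks; only the central difference (19) is implemented explicitly. Each fractional-order parameter costs two extra forward solves.

```python
import numpy as np


def hybrid_sensitivity(response, direct_columns, chi, frac_idx, eps=1e-3):
    """Assemble S = dR/dchi column by column (sketch of the hybrid strategy).

    response(chi)       -> calculated data vector R(chi), i.e. one forward solve
    direct_columns(chi) -> {i: dR/dchi_i} for the non-fractional parameters
    frac_idx            -> positions of the fractional orders alpha_i in chi
    """
    chi = np.asarray(chi, dtype=float)
    n_data = np.asarray(response(chi)).size      # nominal forward solve
    S = np.zeros((n_data, chi.size))
    direct = direct_columns(chi)
    for i in range(chi.size):
        if i in frac_idx:
            e = np.zeros_like(chi)
            e[i] = eps
            # central difference of Eq. (19): two extra forward solves
            S[:, i] = (response(chi + e) - response(chi - e)) / (2.0 * eps)
        else:
            S[:, i] = direct[i]                   # direct differentiation
    return S


# Toy check: R = c * t**a, where a plays the role of a fractional order
t = np.linspace(0.5, 2.0, 10)
S = hybrid_sensitivity(lambda c: c[0] * t ** c[1],
                       lambda c: {0: t ** c[1]},
                       [1.5, 0.4], frac_idx=[1])
```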

With the above sensitivities, the sensitivity matrix \({\varvec{S}}({{\varvec{\chi }}})\) corresponding to the calculated data \({\varvec{R}}({\varvec{\chi }})\) is assembled as

In what follows, the sensitivity matrix \({\varvec{S}}({{\varvec{\chi }}})\) is shown to play a central role in parameter identification by the enhanced response sensitivity approach.

3.3 Enhanced response sensitivity approach

The parameter identification problem (17) is a nonlinear, non-quadratic optimization problem and therefore has to be solved iteratively by gradient-based methods, whose general procedure reads: given the initial parameters \({\varvec{\chi }}^{(0)}\), the following update is applied successively for \(k=1,2, \ldots \),

until convergence. The key to the iterative solution procedure is to find quickly a reasonable update \(\delta {\varvec{\chi }}\) from arbitrary known parameters \(\bar{{\varvec{\chi }}} \in \mathcal {P}\) such that \(\hbar (\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\) is as small as possible. A common way is to linearize the nonlinear residual \(\hat{{\varvec{R}}}-{\varvec{R}}(\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\) at \(\bar{{\varvec{\chi }}}\) so that the original nonlinear least-squares objective function \(\hbar (\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\) is approximately transformed into a linear least-squares objective function \(\hat{\hbar }(\delta {\varvec{\chi }},\bar{{\varvec{\chi }}})\) [16, 19], i.e.,

where the sensitivity matrix \({\varvec{S}} (\bar{{\varvec{\chi }}})\) has been defined in Eq. (21).

The approximate linear least-squares problem (23) can be solved easily; however, the update \(\delta {\varvec{\chi }}\) must be small enough for the linearization to remain valid, i.e., for \(\hat{\hbar }(\delta {\varvec{\chi }},\bar{{\varvec{\chi }}})\) to agree well with \(\hbar (\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\); this is known as the trust-region constraint. To measure how well \(\hat{\hbar }(\delta {\varvec{\chi }},\bar{{\varvec{\chi }}})\) agrees with \(\hbar (\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\), an agreement indicator is defined

Good agreement usually requires that the following agreement condition be satisfied:

Since \(\hat{\hbar }(0,\bar{{\varvec{\chi }}})\ge \hat{\hbar }(\delta {{\varvec{\chi }}},\bar{{\varvec{\chi }}})\), the agreement condition guarantees that the update \(\delta {\varvec{\chi }}\) is always a descent step, i.e., \(\hbar (\bar{{\varvec{\chi }}})\ge \hbar (\bar{{\varvec{\chi }}}+\delta {\varvec{\chi }})\). In summary, the trust-region constraint is stated mathematically as: find a sufficiently small update \(\delta {\varvec{\chi }}\) such that the agreement condition (25) is satisfied.

The trust-region constraint is usually handled by solving an inequality-constrained optimization problem [16, 22], which is cumbersome and costly. Fortunately, Lu and Wang [19] have proved that the trust-region constraint is equivalent to Tikhonov regularization with a proper regularization parameter. Specifically, incorporating Tikhonov regularization into the approximate objective function \(\hat{\hbar }(\delta {\varvec{\chi }},\bar{{\varvec{\chi }}})\) results in

where \(\lambda \ge 0\) is the regularization parameter, \(||\cdot ||\) is the usual \(\ell ^2\)-norm for vectors and \(\mathbf {I}\) denotes the identity matrix. Tikhonov regularization can handle the trust-region constraint because, under the condition \(\big \Vert {\varvec{S}}^T(\bar{{\varvec{\chi }}}){\varvec{W}}\delta {\varvec{R}}(\bar{{\varvec{\chi }}})\big \Vert \ne 0\), there holds [19]

The result (27) indicates that the agreement condition can always be satisfied for a sufficiently large regularization parameter \(\lambda \). In other words, there exists a critical regularization parameter \(\lambda _\mathrm{cr}\) such that the agreement condition is satisfied for all \(\lambda \ge \lambda _\mathrm{cr}\). A practical way to determine a proper regularization parameter \(\lambda \) that satisfies the trust-region constraint can be found in [19, 21]; with it, the proper update \(\delta {\varvec{\chi }}_{\lambda }\) based on the known parameters \(\bar{{\varvec{\chi }}} \in \mathcal {P}\) is obtained. Along these lines, the iterative solution procedure (22) can proceed; its algorithmic details are listed in Table 1, and it has been proved that the approach is weakly convergent [19], i.e.,
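The iteration (22) with Tikhonov-regularized updates and an agreement check can be sketched in Python as a Levenberg–Marquardt-type loop; the regularized update solves \(({\varvec{S}}^T{\varvec{W}}{\varvec{S}}+\lambda \mathbf {I})\delta {\varvec{\chi }}={\varvec{S}}^T{\varvec{W}}(\hat{{\varvec{R}}}-{\varvec{R}})\). This is a minimal sketch under stated assumptions, not the algorithm of Table 1: the agreement indicator is assumed to be the ratio of the actual to the predicted reduction of the objective, while the starting value of \(\lambda \), the rule of multiplying it by \(\sigma \) until the agreement condition holds, and the stopping rule on the relative step size are hypothetical stand-ins for the procedure of [19, 21]. The arguments `tol`, `sigma`, `w_cr`, `n_it` and `n_tr` mirror the algorithmic parameters \(tol, \sigma , \varpi _\mathrm{cr}, Nit, Ntr\) listed in Sect. 4.

```python
import numpy as np


def enhanced_response_sensitivity(residual, sensitivity, chi0, W, tol=1e-6,
                                  sigma=2.0, w_cr=0.5, n_it=1000, n_tr=20):
    """Sketch of the iteration with Tikhonov-regularized (trust-region) updates.

    residual(chi)    -> R_hat - R(chi)
    sensitivity(chi) -> S(chi) = dR/dchi
    """
    chi = np.asarray(chi0, dtype=float).copy()
    eye = np.eye(chi.size)
    for _ in range(n_it):
        r = residual(chi)
        S = sensitivity(chi)
        h0 = r @ W @ r                           # hbar(chi) = hhat(0, chi)
        A, g = S.T @ W @ S, S.T @ W @ r
        lam = 1e-3 * np.trace(A) / chi.size      # hypothetical starting lambda
        accepted = False
        for _ in range(n_tr):
            d = np.linalg.solve(A + lam * eye, g)  # regularized update
            r_lin = r - S @ d                      # linearized residual
            predicted = h0 - r_lin @ W @ r_lin     # hhat(0) - hhat(d)
            r_new = residual(chi + d)
            actual = h0 - r_new @ W @ r_new        # hbar(chi) - hbar(chi + d)
            # assumed agreement condition: actual / predicted >= w_cr
            if predicted > 0.0 and actual >= w_cr * predicted:
                accepted = True
                break
            lam *= sigma                           # stronger regularization
        if not accepted:
            break                                  # no acceptable step found
        chi = chi + d
        if np.linalg.norm(d) <= tol * np.linalg.norm(chi):
            break
    return chi


# Usage on a toy problem: fit c0 * exp(-c1 t) to noise-free data
t = np.linspace(0.0, 5.0, 50)
y = 2.0 * np.exp(-0.5 * t)
chi_hat = enhanced_response_sensitivity(
    lambda c: y - c[0] * np.exp(-c[1] * t),
    lambda c: np.column_stack([np.exp(-c[1] * t), -c[0] * t * np.exp(-c[1] * t)]),
    [1.0, 1.0], np.eye(t.size))
```

Increasing \(\lambda \) shortens the step, which is how the sketch enforces the trust-region constraint without solving an inequality-constrained subproblem.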

3.4 Optimal choice of weight matrix

Section 3.3 presents the enhanced response sensitivity approach for parameter identification of the nonlinear fractional-order system (1) given the measured data \(\hat{{\varvec{R}}}\) and the user-defined weight matrix \({\varvec{W}}\); however, how to choose the weight matrix \({\varvec{W}}\) well remains open and is investigated in the following.

Let \({\varvec{\chi }}^\mathrm{ex}\) be the exact parameters and assume that the measured data contain small perturbations (measurement noise), i.e.,

where \(\varvec{\upvarepsilon }\) denotes the measurement noise vector, which follows a zero-mean Gaussian distribution with positive definite covariance \({\varvec{Q}}=\mathbb {E}[\varvec{\upvarepsilon }\varvec{\upvarepsilon }^T]\), with \(\mathbb {E}[\cdot ]\) being the expectation operator. Then, in view of the weak convergence (28), the identified solution \({\varvec{\chi }}^*\) (see (17)) can reasonably be expected to lie in the vicinity of \({\varvec{\chi }}^\mathrm{ex}\), i.e., \(||{\varvec{\chi }}^*-{\varvec{\chi }}^\mathrm{ex}||\) is sufficiently small. Hence, the objective function \(\hbar ({\varvec{\chi }})\) for all \({\varvec{\chi }}\) in the vicinity of \({\varvec{\chi }}^\mathrm{ex}\) can be approximated by Taylor expansion as follows

where \({\varvec{S}}={\varvec{S}}({\varvec{\chi }}^\mathrm{ex})\) is the sensitivity matrix at the exact parameters. Considering the definition of the identified solution in (17) and the approximate form of \(\hbar ({\varvec{\chi }})\) in (30), it follows that

By Eq. (31), the identification error is also a random vector with approximately zero mean if the measurement error \(\varvec{\upvarepsilon }\) has zero mean. Hence, the optimal weight matrix \({\varvec{W}}\) should minimize the expected squared identification error \(\mathbb {E}[({\varvec{\chi }}^*-{\varvec{\chi }}^\mathrm{ex})^T({\varvec{\chi }}^*-{\varvec{\chi }}^\mathrm{ex})]\). To proceed, note that \(({\varvec{\chi }}^*-{\varvec{\chi }}^\mathrm{ex})^T({\varvec{\chi }}^*-{\varvec{\chi }}^\mathrm{ex})\) is a scalar, so that the following holds

and then, by Eq. (31), one has

where \(\hbox {tr}(\cdot )\) denotes the trace of a matrix and \({\varvec{Q}}=\mathbb {E}[\varvec{\upvarepsilon }\varvec{\upvarepsilon }^T]\) has been used. Finally, with the result (33), the optimal weight matrix problem is stated as:

The following theorem derives the optimal weight matrix by solving problem (34).

Theorem 1

The optimal weight matrix that minimizes \(F({\varvec{W}}):=\hbox {tr}({\varvec{L_W}} {\varvec{Q}} {\varvec{L_W}}^T )\) is

and then, there is

Proof

The optimization problem is solved with respect to the auxiliary matrix \({\varvec{L_W}}\) rather than directly with respect to the weight matrix \({\varvec{W}}\). First, the definition of \({\varvec{L_W}}\) in Eq. (31) essentially introduces an additional equality constraint,

so that the optimization problem over \({\varvec{L_W}}\) becomes an equality-constrained optimization problem, which can be solved by the Lagrange multiplier method. Next, to minimize \(F({\varvec{W}})\) under the constraint (37), a Lagrange function is defined

where \({\varvec{\Lambda }}\) is the Lagrange multiplier matrix enforcing the constraint (37). The stationarity condition of \(\ell ({\varvec{L_W}},{\varvec{\Lambda }})\) with respect to \({\varvec{L_W}}\) gives

Combining Eqs. (39) and (37) yields

Then, substituting Eq. (40) into Eq. (39) gives

Finally, the conclusions (35) and (36) follow naturally from Eq. (41) and the definition of \({\varvec{L_W}}\) in Eq. (31).
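Theorem 1 is closely related to the Gauss–Markov (generalized least-squares) result and can be checked numerically. The Python sketch below assumes that the linearized identification error of Eq. (31) is \({\varvec{L_W}}\varvec{\upvarepsilon }\) with \({\varvec{L_W}}=({\varvec{S}}^T{\varvec{W}}{\varvec{S}})^{-1}{\varvec{S}}^T{\varvec{W}}\), a form inferred from the proof since Eq. (31) is not reproduced here; the sensitivity matrix and covariance are random stand-ins. It compares \(F({\varvec{W}})\) for \({\varvec{W}}={\varvec{Q}}^{-1}\), for a scaled version \(c{\varvec{Q}}^{-1}\), for \({\varvec{W}}=\mathbf {I}\), and against a Monte Carlo estimate of the expected squared error.

```python
import numpy as np


def error_trace(S, W, Q):
    """F(W) = tr(L_W Q L_W^T), assuming L_W = (S^T W S)^{-1} S^T W."""
    L = np.linalg.solve(S.T @ W @ S, S.T @ W)
    return np.trace(L @ Q @ L.T)


rng = np.random.default_rng(0)
n_data, n_par = 40, 3
S = rng.standard_normal((n_data, n_par))         # stand-in sensitivity matrix
B = rng.standard_normal((n_data, n_data))
Q = B @ B.T / n_data + 0.1 * np.eye(n_data)      # stand-in SPD noise covariance
Q_inv = np.linalg.inv(Q)

F_opt = error_trace(S, Q_inv, Q)                         # W = Q^{-1}
F_closed = np.trace(np.linalg.inv(S.T @ Q_inv @ S))      # tr((S^T Q^{-1} S)^{-1})
F_scaled = error_trace(S, 5.0 * Q_inv, Q)                # W = c Q^{-1}, same L_W
F_identity = error_trace(S, np.eye(n_data), Q)           # unweighted least squares

# Monte Carlo estimate of E[(chi* - chi_ex)^T (chi* - chi_ex)] for W = Q^{-1}
L_opt = np.linalg.solve(S.T @ Q_inv @ S, S.T @ Q_inv)
noise = np.linalg.cholesky(Q) @ rng.standard_normal((n_data, 20000))
F_mc = np.mean(np.sum((L_opt @ noise) ** 2, axis=0))
print(F_opt, F_closed, F_scaled, F_identity, F_mc)
```

Because any positive multiple \(c{\varvec{Q}}^{-1}\) yields the same \({\varvec{L_W}}\), the scalar \(c\) does not affect the identification error.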

Remark 3

Theorem 1 reveals that, for the nonlinear least-squares optimization problem, the optimal weight matrix is the inverse of the measurement error covariance; a similar conclusion has been reported in [25] for linear problems. To illustrate, assume that the measured displacements \(\hat{q}_j(t_i), i=1, \ldots ,l\) and the measured accelerations \(\hat{\ddot{q}}_k(t_i), i=1, \ldots ,l\) are perturbed by mutually uncorrelated noise with variances \(\sigma _{q_j}^2\) and \(\sigma _{\ddot{q}_k}^2\), respectively. Then, under the optimal weight matrix (35) with \(c=1\), the objective function (17) simply reduces to

By Eq. (42), the optimal weight matrix (35) is seen to be well justified, for two reasons. On the one hand, it normalizes the residuals: a change of units, e.g., from 1 m to 1000 mm, no longer affects the objective function, because the terms \(\frac{\hat{q}_j(t_i)-q_j(t_i,{\varvec{\chi }})}{\sigma _{q_j}}, \frac{\hat{\ddot{q}}_k(t_i)-\ddot{q}_k(t_i,{\varvec{\chi }})}{\sigma _{\ddot{q}_k}}\) are dimensionless. On the other hand, since the optimal weight is inversely proportional to the measurement error variance, larger errors \(\sigma _{q_j},\sigma _{\ddot{q}_k}\) reasonably receive less weight.
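With uncorrelated channels, the optimal weighting amounts to dividing each channel's residuals by its noise standard deviation. The small Python sketch below (hypothetical function name and data) illustrates the unit-invariance argument: expressing the displacement channel in millimetres instead of metres, with its \(\sigma \) converted accordingly, leaves the objective unchanged.

```python
import numpy as np


def normalized_objective(measured, calculated, sigmas):
    """Objective with W = Q^{-1} for uncorrelated channels: residuals of each
    channel are divided by that channel's noise standard deviation."""
    total = 0.0
    for y_hat, y, s in zip(measured, calculated, sigmas):
        total += float(np.sum(((np.asarray(y_hat) - np.asarray(y)) / s) ** 2))
    return total


t = np.linspace(0.0, 1.0, 11)
q_cal, a_cal = np.sin(t), -np.sin(t)        # hypothetical calculated channels
q_mea, a_mea = q_cal + 0.01, a_cal - 0.2    # hypothetical measured channels
J_m = normalized_objective([q_mea, a_mea], [q_cal, a_cal], [0.01, 0.2])
# Same displacement channel in mm, with its sigma also in mm
J_mm = normalized_objective([1e3 * q_mea, a_mea], [1e3 * q_cal, a_cal], [10.0, 0.2])
```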

4 Numerical examples

In this section, three examples are studied to verify the feasibility and efficiency of the present enhanced response sensitivity approach for parameter identification of nonlinear fractional-order systems: an SDOF (single-degree-of-freedom) fractional-order Duffing system with an analytical solution, an SDOF Duffing system with two fractional-order parameters, and an MDOF (multiple-degree-of-freedom) van der Pol–Duffing system. The measured data are obtained from numerical simulation by adding measurement noise as follows

where \(e_v\) denotes the measurement noise level, \({\varvec{r}}_\mathrm{and}\) is a random vector of the same size as \({\varvec{v}}_\mathrm{cal}\) whose entries follow the standard normal distribution, \(\text {std}(\cdot )\) denotes the standard deviation of a vector, and \({\varvec{v}}_\mathrm{cal}\) is the response data calculated directly from Eq. (1), with \({\varvec{v}}_\mathrm{cal}=[{q}_j(t_1),{q}_j(t_2),\dots ,{q}_j(t_l)]^T\) for the jth displacement measurement and \({\varvec{v}}_\mathrm{cal}=[{\ddot{q}}_k(t_1),{\ddot{q}}_k(t_2),\dots ,{\ddot{q}}_k(t_l)]^T\) for the kth acceleration measurement. With the measured data, the algorithm in Table 1 is applied to solve the parameter identification problem, with the algorithmic parameters \(tol=1\times 10^{-6}, \sigma =2, \varpi _\mathrm{cr}=0.5, Nit=1000, Ntr=20\). The remaining algorithmic parameters, namely the initial parameters \({\varvec{\chi }}^{(0)}\) and the user-defined weight matrix \({\varvec{W}}\), are specified in each example.
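A minimal Python sketch of the noise model follows. It assumes that Eq. (43) has the common form \({\varvec{v}}_\mathrm{mea}={\varvec{v}}_\mathrm{cal}+e_v\,\text {std}({\varvec{v}}_\mathrm{cal})\,{\varvec{r}}_\mathrm{and}\) implied by the definitions above. The name \({\varvec{v}}_\mathrm{mea}\), the helper `add_measurement_noise`, the use of the sample standard deviation (`ddof=1`) and the test signal are all illustrative assumptions. The sketch also shows why, for a single measured channel, every sample has the same error variance \((e_v\,\text {std}({\varvec{v}}_\mathrm{cal}))^2\), so that \({\varvec{Q}}\) is a scalar multiple of the identity.

```python
import numpy as np

def add_measurement_noise(v_cal, e_v, rng):
    """Hypothetical helper: v_mea = v_cal + e_v * std(v_cal) * r_and (assumed form of Eq. (43))."""
    v_cal = np.asarray(v_cal, dtype=float)
    r_and = rng.standard_normal(v_cal.shape)          # entries ~ N(0, 1), same size as v_cal
    return v_cal + e_v * np.std(v_cal, ddof=1) * r_and

rng = np.random.default_rng(1)
t = np.linspace(0.0, 20.0, 2001)       # [0, 20] sampled at 100 Hz
acc_cal = -4.0 * np.sin(2.0 * t)       # illustrative noise-free acceleration history
acc_mea = add_measurement_noise(acc_cal, e_v=0.05, rng=rng)

# Every sample of this channel carries noise with the same standard deviation,
# so its covariance block is sigma**2 times the identity.
sigma = 0.05 * np.std(acc_cal, ddof=1)
print(sigma, np.std(acc_mea - acc_cal))
```

Because the noise scale is tied to each channel's own standard deviation, channels with larger responses receive proportionally larger noise, which is what makes \({\varvec{Q}}\) differ from a multiple of \(\mathbf {I}\) once displacement and acceleration are measured together.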

4.1 Example 1: SDOF fractional-order Duffing system with analytical solution

Consider a fractional-order Duffing system with the cubic nonlinearity [refer to Eq. (3)] and the following governing equation

Suppose that the system admits the following analytical solution (\(\omega =2\))

To obtain this solution, the load is taken as \(p(t)=-m\omega ^2\sin (\omega t)+k_1\sin (\omega t)+k_2\sin ^3(\omega t)+\omega \mu t^{1-\alpha }\sum \nolimits _{k=0}^{\infty }\frac{(-1)^k(\omega t)^{2k}}{\varGamma (2k+2-\alpha )}\), and the initial states are \(q_0=0,\dot{q}_0=2\). The mass is fixed at \(m=1\), and the parameters to be identified in this example are \({\varvec{\chi }}=[\mu ,k_1,k_2,\alpha ]\), with the exact values set to \({\varvec{\chi }}^\mathrm{ex}=[0.8,1.2,1.5,0.5]\). The forward problem is solved by the numerical method described in Sect. 2 with the Newmark parameters \(\beta =0.25, \gamma =0.5\), and the numerical solution is compared with the analytical solution (45) over the time interval \([0,T=20]\) in Fig. 1. The numerical solution agrees well with the analytical solution, indicating that the numerical scheme of Sect. 2 is effective for solving the nonlinear fractional-order system. Furthermore, the sensitivity analysis can be performed following Sect. 3.2; the sensitivities of the displacement and the acceleration to the four parameters, evaluated at the exact parameters \({\varvec{\chi }}^\mathrm{ex}\), are displayed in Figs. 2 and 3, respectively.
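The series term in \(p(t)\) is \(\mu \) times the order-\(\alpha \) derivative of \(\sin (\omega t)\), obtained termwise from \(D^\alpha t^n=\varGamma (n+1)t^{n-\alpha }/\varGamma (n+1-\alpha )\). For \(0<\alpha <1\) this is the Caputo derivative, which here coincides with the Riemann–Liouville one because \(\sin (0)=0\). The Python sketch below checks the series numerically against the standard L1 finite-difference approximation of the Caputo derivative. The L1 scheme serves only as an independent check and is not the Adams-type discretization of Sect. 2. The function names and the truncation at 40 terms are assumptions. The truncated series is evaluated only at moderate \(\omega t\), because at large \(\omega t\) its alternating terms cancel catastrophically in floating point.

```python
import math
import numpy as np

def caputo_sin_series(t, omega, alpha, n_terms=40):
    """Order-alpha derivative of sin(omega*t) from the truncated power series used in p(t)."""
    s = sum((-1) ** k * (omega * t) ** (2 * k) / math.gamma(2 * k + 2 - alpha)
            for k in range(n_terms))
    return omega * t ** (1 - alpha) * s

def caputo_l1(f, t, alpha, n_steps=4000):
    """L1 finite-difference approximation of the Caputo derivative of f at time t (0 < alpha < 1)."""
    h = t / n_steps
    df = np.diff(f(np.linspace(0.0, t, n_steps + 1)))   # increments f(t_{m+1}) - f(t_m)
    j = np.arange(n_steps)
    b = (j + 1) ** (1 - alpha) - j ** (1 - alpha)         # L1 weights b_j
    # b_0 multiplies the most recent increment, hence the reversed increments
    return h ** (-alpha) / math.gamma(2 - alpha) * np.sum(b * df[::-1])

omega, alpha = 2.0, 0.5      # Example 1 values (omega = 2, exact alpha = 0.5)
for t in (1.0, 2.0):
    series = caputo_sin_series(t, omega, alpha)
    l1 = caputo_l1(lambda x: np.sin(omega * x), t, alpha)
    print(f"t = {t}: series = {series:.6f}, L1 = {l1:.6f}")
```

The L1 scheme converges at rate \(O(h^{2-\alpha })\) for smooth inputs, so the two columns should agree to several decimal places at this step size.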

Next, to assess the performance of the present parameter identification approach, eight cases I–VIII, listed in Table 2, are considered for identifying the parameters \({\varvec{\chi }}\). These cases explore in detail the effects of the initial parameters \({\varvec{\chi }}^{(0)}\), the measured quantity (acceleration \(\ddot{q}\) or displacement q), the noise level \(e_v\) and the weight matrix \({\varvec{W}}\). Specifically, \({\varvec{W}}=\mathbf {I}\) denotes the conventional identity weight matrix, while \({\varvec{W}}={\varvec{W}}^\mathrm{opt}\) indicates that the optimal weight matrix (35), \({\varvec{W}}^\mathrm{opt}={\varvec{Q}}^{-1}\), is used. Note that when only one of the two quantities \(\ddot{q},q\) is measured and the noise is added by means of Eq. (43), the identity matrix coincides with the optimal weight matrix up to a scalar factor, i.e., \({\varvec{W}}^\mathrm{opt}=\mathbf {I}\); otherwise, the two generally differ. The acceleration or displacement response data are measured over the time interval [0, 20] at a sampling rate of 100 Hz. Applying the algorithm in Table 1, the parameters are identified, and the detailed results are summarized in Table 3, where the relative error used throughout this paper is defined as

with \(\chi _i^{*},\chi _i^\mathrm{ex}\) being the identified and exact values of the ith parameter, respectively. The 'Iter #' column in Table 3 gives the number of iterations the enhanced response sensitivity approach takes to satisfy the convergence criterion \(\big ||{\varvec{\chi }}^{(k)}-{\varvec{\chi }}^{(k-1)} \big ||/\big ||{\varvec{\chi }}^{(k)}\big ||\le tol=1\times 10^{-6}\).
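The two quantities used to report the results can be sketched in Python as follows. The sketch assumes that the relative error takes the usual form \(|\chi _i^{*}-\chi _i^\mathrm{ex}|/|\chi _i^\mathrm{ex}|\times 100\%\) and that \(||\cdot ||\) in the stopping rule is the Euclidean norm. The function names and the identified vector in the usage lines are hypothetical; only \({\varvec{\chi }}^\mathrm{ex}\) is taken from Example 1.

```python
import numpy as np

def relative_errors(chi_identified, chi_exact):
    """Relative error of each parameter, in percent (assumed form |chi* - chi_ex| / |chi_ex|)."""
    chi_identified = np.asarray(chi_identified, dtype=float)
    chi_exact = np.asarray(chi_exact, dtype=float)
    return 100.0 * np.abs(chi_identified - chi_exact) / np.abs(chi_exact)

def has_converged(chi_k, chi_km1, tol=1e-6):
    """Stopping rule ||chi^(k) - chi^(k-1)|| / ||chi^(k)|| <= tol, Euclidean norm assumed."""
    chi_k, chi_km1 = np.asarray(chi_k, dtype=float), np.asarray(chi_km1, dtype=float)
    return np.linalg.norm(chi_k - chi_km1) / np.linalg.norm(chi_k) <= tol

chi_ex = [0.8, 1.2, 1.5, 0.5]            # exact [mu, k1, k2, alpha] of Example 1
chi_star = [0.84, 1.23, 1.47, 0.51]      # hypothetical identified values
print(relative_errors(chi_star, chi_ex))  # approximately 5, 2.5, 2 and 2 percent
print(has_converged(chi_star, chi_star))  # True
```

Because the stopping rule is relative to \(||{\varvec{\chi }}^{(k)}||\), parameters of very different magnitudes (e.g. \(k_4=10\) and \(\alpha _1=0.6\) in Example 2) contribute unequally to it, which is one reason the per-parameter relative errors are reported separately.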

Firstly, Cases I–III use acceleration data with different levels of measurement noise to show the effect of noise on parameter identification; the results are also shown graphically in Fig. 4. Without measurement noise, the parameters are identified almost exactly, while the relative errors increase with the noise level \(e_v\) (see also Cases I–III of Table 3), and more iterations are needed for convergence at higher noise levels. At the 5% noise level of Case III, the maximum relative error reaches 11.35%; nevertheless, the parameters are still reasonably identified, since the absolute errors for \(\mu \) and \(\alpha \) are merely 0.086 and 0.056.

Secondly, Cases III–V use three different sets of initial parameters \({\varvec{\chi }}^{(0)}\) to check whether the present approach is sensitive to the initial choice of the parameters for the nonlinear fractional-order system. The results in Table 3 show that the three sets of initial parameters lead to almost the same identified values, indicating that the present approach is largely insensitive to the initial parameters and tolerates a reasonable range of them. To visualize the convergence of the identification procedure in the three cases, the evolution of the parameters over the iterations is plotted in Fig. 5. Although more than 100 iterations are required to reach the tolerance \(tol=1\times 10^{-6}\) in Cases III–V (Table 3), Fig. 5 shows that acceptably good identification results are already obtained within 50 iterations, which confirms the convergence of the present approach.

Thirdly, Cases III, VI and VII measure different quantities to examine their effect on parameter identification. Besides the results in Table 3, the identified parameters of the three cases are depicted in Fig. 6. The parameters are well identified in all three cases, which use the acceleration data, the displacement data and the hybrid data, respectively. The displacement data yield almost the same accuracy as the acceleration data, while the hybrid data clearly lead to more accurate identification; this is expected, because more data generally lead to better identification.

Finally, Cases VII and VIII investigate the effect of the optimal weight matrix. The identification results obtained with the identity weight matrix and with the optimal weight matrix \({\varvec{W}}^\mathrm{opt}\) are listed in Table 3. The maximum relative error under the optimal weight matrix is 2.55%, only slightly more than half of the maximum relative error of 4.41% under the identity weight matrix. Moreover, fewer iterations are required for convergence under the optimal weight matrix. These results confirm the improvement in identification accuracy brought by the optimal weight matrix, as anticipated in Sect. 3.4.
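The accuracy gain observed with the optimal weight matrix is consistent with a classical result for linear least squares. When two data channels have very different noise levels, weighting by the inverse noise variance (generalized least squares) gives a smaller estimation error than equal weighting. The Monte Carlo sketch below illustrates this in a linear setting only. The random design matrix stands in for a sensitivity matrix, and the parameter values, noise levels and helper `weighted_lstsq` are hypothetical rather than taken from Example 1.

```python
import numpy as np

def weighted_lstsq(A, y, w):
    """Solve min (y - A x)^T diag(w) (y - A x) through the weighted normal equations."""
    Aw = A * w[:, None]                  # diag(w) @ A without forming the diagonal matrix
    return np.linalg.solve(A.T @ Aw, Aw.T @ y)

rng = np.random.default_rng(2)
theta_true = np.array([1.5, -0.7])       # hypothetical parameters
n, sig_a, sig_b = 100, 0.1, 2.0          # two channels with very different noise levels
A = rng.standard_normal((2 * n, 2))      # stacked design (sensitivity-like) matrix
sig = np.r_[np.full(n, sig_a), np.full(n, sig_b)]

err_identity, err_optimal = [], []
for _ in range(500):
    y = A @ theta_true + sig * rng.standard_normal(2 * n)
    theta_I = weighted_lstsq(A, y, np.ones(2 * n))        # W = I
    theta_opt = weighted_lstsq(A, y, 1.0 / sig ** 2)      # W = Q^{-1}
    err_identity.append(np.sum((theta_I - theta_true) ** 2))
    err_optimal.append(np.sum((theta_opt - theta_true) ** 2))

print(np.mean(err_identity), np.mean(err_optimal))
```

If each iteration of a sensitivity-based scheme solves a weighted linearized least-squares problem of this kind, the linear result is indicative of, though it does not prove, the behaviour in the nonlinear case.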

4.2 Example 2: SDOF Duffing system with two fractional-order parameters

In some physical phenomena, multiple fractional-order operators may be needed to describe the complex behavior. In this example, an SDOF Duffing system with two fractional-order derivative operators is considered and its governing equation is given as follows

where the initial displacement and velocity of the system are \(q_0=0,\dot{q}_0=1\). Herein, the mass and the load frequency are fixed at \(m=1,\omega =1.5\), while the remaining parameters are to be identified, i.e., \({\varvec{\chi }}=[k_1, k_2, k_3, k_4, k_5, f, \alpha _1, \alpha _2]\). The exact values of the parameters in this example are \(\alpha _1=0.6,\alpha _2=1.4,k_1=0.8, k_2=0.6, k_3=1, k_4=10, k_5=15\) and \(f=5\), i.e., \({\varvec{\chi }}^\mathrm{ex}=[0.8, 0.6, 1, 10, 15, 5, 0.6, 1.4]\), where \(\alpha _1 \in (0,1)\) and \(\alpha _2 \in (1,2)\) correspond to two different types of fractional-order derivatives. The numerical solutions for the displacement and the acceleration, obtained by the method of Sect. 2, are depicted in Fig. 7a. The solution is nearly periodic with a period of about \(1.33\pi \), which agrees well with the forcing period \(2\pi /\omega =4\pi /3\); this also explains why the load frequency \(\omega \) is often regarded as known a priori, since it can be read from the response. For the subsequent parameter identification, the measurement data are obtained from the simulated results in Fig. 7a by adding measurement noise as in Eq. (43); the resulting response data at the noise level \(e_v=3\%\) are displayed in Fig. 7b. The response data are measured over the time interval \([0,T=20]\) at a sampling rate of 100 Hz. Since sensitivity analysis is an integral part of the present approach, the sensitivities of the displacement and acceleration responses to the parameters are shown in Figs. 8 and 9, respectively, to give a qualitative picture.

For parameter identification of the nonlinear fractional-order system, six cases concerning different measurement data, initial parameters and weight matrices are studied in this example, as presented in Table 4. Applying the algorithm in Table 1, the parameters are identified, and the detailed results are listed in Table 5. Note again that when only one of the two quantities \(\ddot{q},q\) is measured, the identity weight matrix \(\mathbf {I}\) coincides with the optimal weight matrix, whereas when both quantities are measured, the two matrices differ.

Firstly, Cases I–III measure different quantities to show the effect of the measurement type on parameter identification. The initial fractional-order parameters \(\alpha _1^{(0)}=0.5,\alpha _2^{(0)}=1.5\) are chosen at the midpoints of the corresponding intervals \(\alpha _1 \in (0,1)\) and \(\alpha _2 \in (1,2)\). The results in Table 5 show that the parameters are well identified in all three cases. Specifically, the maximum relative error is 2.31% with the acceleration data and 3.64% with the displacement data, indicating that the acceleration data lead to better identification than the displacement data. When both the displacement and acceleration data are used, the maximum relative error is 2.14%, slightly less than with the acceleration data alone; under the identity weight matrix, the improvement from the hybrid data is thus only marginal.

Secondly, Cases I, IV and V use different initial parameters. As the results in Table 5 show, almost equally good identification is reached in all three cases. Since the initial parameters in these cases are fairly far from the exact parameters, a reasonably wide range of initial parameters can be used for parameter identification. This again shows that the present approach is insensitive to the initial choice of the parameters.

Finally, Cases III and VI use two different weight matrices, namely the conventional identity weight matrix and the optimal weight matrix \({\varvec{W}}^\mathrm{opt}={\varvec{Q}}^{-1}\), to examine the effect of the weight matrix on parameter identification with the hybrid data. Almost all relative errors under the optimal weight matrix are smaller than those under the identity weight matrix; that is, the optimal weight matrix improves the identification accuracy.

4.3 Example 3: MDOF fractional-order van der Pol–Duffing system

In this example, a two-DOF fractional-order van der Pol–Duffing system is considered to assess the feasibility and performance of the present approach for parameter identification of MDOF fractional-order systems. Its governing equation is given as follows

where the initial states are \(q_{10}=1,\dot{q}_{10}=\dot{q}_{20}=q_{20}=0\) and the frequency of the external load is fixed at \(\omega =1.5\). The fractional-order parameters are assumed to satisfy \(\alpha _1 \in (0,1)\) and \(\alpha _2 \in (1,2)\), so that two different types of fractional-order derivatives are involved. The remaining parameters are to be identified, i.e., \({\varvec{\chi }}=[k_1, \mu _1, \epsilon _1, \delta _1, k_2, \mu _2, \epsilon _2, \delta _2, f, \alpha _1, \alpha _2]\). The exact parameters are set to \(k_1=1.6, \mu _1=2, \epsilon _1=1, \delta _1=0.75, k_2=0.8, \mu _2=2.3, \epsilon _2=0.8, \delta _2=1.2, f=5\), \(\alpha _1=0.6\) and \(\alpha _2=1.4\) (with \(\omega =1.5\) fixed), i.e., \({\varvec{\chi }}^\mathrm{ex}=[1.6, 2, 1, 0.75, 0.8, 2.3, 0.8, 1.2, 5, 0.6, 1.4]\). The numerical solution of this system, obtained by the method in Sect. 2, is shown in Fig. 10. The sensitivity analysis can likewise be carried out along the lines of Sect. 3.2, and the sensitivities of the accelerations to the parameters are plotted in Fig. 11. The measured data are recorded over the time interval \([0,T=15]\) at a sampling rate of 100 Hz, and the measurement noise level for this example is fixed at \(e_v=3\%\).

For parameter identification, five cases concerning different types of measurements and different initial parameters (see Table 6) are considered. Since the optimal weight matrix gave better identification results than the conventional identity weight matrix in the previous two examples, the optimal weight matrix \({\varvec{W}}^\mathrm{opt}={\varvec{Q}}^{-1}\) is used in this example. The initial guesses \(\alpha _1^{(0)}\) and \(\alpha _2^{(0)}\) are taken at the midpoints of the admissible intervals, i.e., \(\alpha _1^{(0)} =0.5\in (0,1) \) and \(\alpha _2^{(0)}=1.5 \in (1,2)\). Again, the parameters are identified with the algorithm in Table 1, and the detailed results are listed in Table 7. Fewer than 50 iterations are needed for convergence in all cases, which confirms the convergence of the enhanced response sensitivity approach.

On the one hand, Cases I–III use different measured data to determine which type of data is preferable for parameter identification. According to Table 7, when only the second acceleration is measured (Case I), the maximum relative error reaches 5.20%, whereas when both accelerations are measured (Case II), it is reduced to 1.05%; the improvement is attributable to the additional data. In Case III, where the first displacement and the second acceleration are measured, the maximum relative error is 2.84%, which lies between the 1.05% of Case II and the 5.20% of Case I. Since Case III differs from Case II only in that the first acceleration is replaced by the first displacement, this indicates that the acceleration data lead to more accurate identification than the displacement data. In summary, measuring both accelerations gives the best identification accuracy.

On the other hand, the effect of the initial parameters \({\varvec{\chi }}^{(0)}\) on parameter identification is explored in Cases II, IV and V, which use the three choices of initial parameters presented in Table 6. Satisfactory results are obtained, and the identified parameters are almost the same in the three cases. Again, the present approach is shown to be insensitive to the initial parameters.

5 Conclusions

An enhanced response sensitivity approach has been established for parameter identification of nonlinear fractional-order systems using time domain response data. For sensitivity analysis, a hybrid scheme was developed in which the sensitivity to the fractional-order parameters is obtained by finite difference calculation, while the sensitivity to the other parameters is obtained by direct differentiation. The trust-region constraint was then introduced to enhance the response sensitivity approach. Furthermore, the optimal weight matrix for the enhanced response sensitivity approach was derived theoretically as the inverse of the measurement error covariance matrix. Three numerical examples have been conducted, and the results show that

- The present approach admits a wide range of initial parameters while still identifying the parameters well; in other words, it is insensitive to the initial parameters.
- The present approach gives reasonably good identification of the parameters even at a 5% measurement noise level.
- The proposed optimal weight matrix improves the identification accuracy.
- The convergence of the present approach is confirmed in all examples.
- The present approach works well for parameter identification of both SDOF and MDOF nonlinear fractional-order systems.

These results suggest that the present enhanced response sensitivity approach is an effective tool for parameter identification of nonlinear fractional-order systems.

References

Machado, J.T., Galhano, A.M., Trujillo, J.J.: On development of fractional calculus during the last fifty years. Scientometrics 98(1), 577–582 (2014)

Cuadrado-Laborde, C., Poveda-Wong, L., et al.: Analogous photonic fractional signal processing. Prog. Opt. 63, 93–178 (2018)

Liu, L., Zheng, L., Zhang, X.: Fractional anomalous diffusion with Cattaneo–Christov flux effects in a comb-like structure. Appl. Math. Model. 40(13–14), 6663–6675 (2016)

Muller, S., Kastner, M., Brummund, J., et al.: On the numerical handling of fractional viscoelastic material models in a FE analysis. Comput. Mech. 51(6), 999–1012 (2013)

Ichise, M., Nagayanagi, Y., Kojima, T.: An analog simulation of non-integer order transfer functions for analysis of electrode processes. J. Electroanal. Chem. Interfacial Electrochem. 33(2), 253–265 (1971)

Yuan, L., Agrawal, O.P.: A numerical scheme for dynamic systems containing fractional derivatives. J. Vib. Acoust. 124(2), 321–324 (2002)

Laskin, N.: Fractional market dynamics. Physica A 287(3), 482–492 (2000)

Zhang, K., Li, D.: Electromagnetic Theory for Microwaves and Optoelectronics. Springer, New York (2013)

Dizqah, A.M., Maheri, A., Busawon, K.: An accurate method for the PV model identification based on a genetic algorithm and the interior-point method. Renew. Energy 72(2), 212–222 (2014)

Arikoglu, A.: A new fractional order derivative model for linearly viscoelastic materials and parameter identification via genetic algorithms. Rheol. Acta 53(3), 219–233 (2014)

Wei, H., Yu, Y., Gu, W.: Parameter estimation of fractional-order arbitrary dimensional hyperchaotic systems via a hybrid adaptive artificial bee colony algorithm with simulated annealing algorithm. Eng. Appl. Artif. Intell. 68, 172–191 (2018)

Lin, J., Wang, Z.J.: Parameter identification for fractional-order chaotic systems using a hybrid stochastic fractal search algorithm. Nonlinear Dyn. 90, 1243–1255 (2017)

Yuan, L.G., Yang, Q.C.: Parameter identification and synchronization of fractional-order chaotic systems. Commun. Nonlinear Sci. Numer. Simul. 17, 305–316 (2012)

Poinot, T., Trigeassou, J.C.: Identification of fractional systems using an output-error technique. Nonlinear Dyn. 38, 133–154 (2004)

Leyden, K., Goodwine, B.: Fractional-order system identification for health monitoring. Nonlinear Dyn. (2018). https://doi.org/10.1007/s11071-018-4128-y

Moré, J.J.: The Levenberg–Marquardt algorithm: implementation and theory. In: Watson, G.A. (ed.) Numerical Analysis. Lecture Notes in Mathematics, vol. 630, pp. 105–116. Springer, Berlin (1978)

Mani, A.K., Narayanan, M.D., Sen, M.: Parameter identification of fractional-order nonlinear systems. Nonlinear Dyn. (2018). https://doi.org/10.1007/s11071-018-4238-6

Lu, Z.R., Law, S.S.: Features of dynamic response sensitivity and its application in damage detection. J. Sound Vib. 303, 305–329 (2007)

Lu, Z.R., Wang, L.: An enhanced response sensitivity approach for structural damage identification: convergence and performance. Int. J. Numer. Methods Eng. 111, 1231–1251 (2017)

Wang, L., Liu, J., Lu, Z.R.: Incremental response sensitivity approach for parameter identification of chaotic and hyperchaotic systems. Nonlinear Dyn. 89, 153–167 (2017)

Lu, Z.R., Yao, R., Wang, L., Liu, J.K.: Identification of nonlinear hysteretic parameters by enhanced response sensitivity approach. Int. J. Non Linear Mech. 96, 1–11 (2017)

Bakir, P.G., Reynders, E., De Roeck, G.: Sensitivity-based finite element model updating using constrained optimization with a trust region algorithm. J. Sound Vib. 305, 211–225 (2007)

Hansen, P.C.: Analysis of discrete ill-posed problems by means of the L-curve. SIAM Rev. 34(4), 561–580 (1992)

Hansen, P.C.: Regularization tools: a Matlab package for analysis and solution of discrete ill-posed problems. Numer. Algorithms 6(1), 1–35 (1994)

Brownlee, K.A.: Statistical Theory and Methodology in Science and Engineering. Wiley, Hoboken (1965)

Acknowledgements

This investigation was supported by the National Natural Science Foundation of China (Nos. 11572356 and 11702336), Guangdong Province Natural Science Foundation (No. 2017A030313007), Guangdong Province Science and Technology Program (No. 2016A020223006) and the Fundamental Research Funds of the Central Universities (Nos. 17lgjc42 and 17lgpy54).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Lu, ZR., Liu, G., Liu, J. et al. Parameter identification of nonlinear fractional-order systems by enhanced response sensitivity approach. Nonlinear Dyn 95, 1495–1512 (2019). https://doi.org/10.1007/s11071-018-4640-0

DOI: https://doi.org/10.1007/s11071-018-4640-0