Abstract

An identification method for fractional order models with time delay is presented. The proposed method, based on output error optimization, simultaneously estimates the model orders, coefficients and time delay from a single noisy step response. Analytical expressions for the logarithmic derivatives of the step input are derived to evaluate the Jacobian and the Hessian required by Newton’s optimization algorithm. A simplified initialization procedure is also outlined that assumes an integer initial order and uses coefficients estimated from an integer order model as the initial guess. Simulation results are presented to demonstrate the efficacy of the proposed approach. The convergence of Newton’s method and of the Gauss–Newton scheme is also studied in simulation. Identification results from noisy step response data for time delay models with different structures are presented.


1 Introduction

Fractional order model identification faces an additional challenge because the model orders must also be estimated over a large search space. If there is a time delay, the parameter estimation problem becomes even more complicated because the delay enters the model equation nonlinearly. Several approaches have been adopted in the literature to overcome these issues. Victor et al. [40] used a two-stage algorithm that estimates the orders by optimization and the model coefficients by solving a least-squares problem. Narang et al. [25] used a similar approach for time delay models. There are only a few methods for the simultaneous estimation of all parameters of fractional order models [36]. However, these methods are not applicable to the step input, although the characteristics of the step responses of fractional order models have been studied in the literature [22, 35, 37]. To the best of the author’s knowledge, no reported method simultaneously estimates the orders, coefficients and delay from the step response. Ahmed [1] presented a method for estimating the coefficients and the delay, but only for known fractional orders.

In this article, an output error approach for the simultaneous estimation of model orders, coefficients and time delay is proposed using the Newton and the Gauss–Newton optimization algorithms. The optimization approach requires the output sensitivity functions, which for fractional order models include the logarithmic derivatives of the input signal. Victor and Malti [39] and Victor et al. [40] noted that simulating the logarithmic derivative is not trivial and used a numerical approach to calculate the sensitivity functions. The contribution of this article is twofold. It presents analytical expressions for the logarithmic derivatives of the step input signal. It also derives analytical expressions for the Jacobian and the Hessian required by Newton’s algorithm. The main strength of the algorithm is its ability to identify all the parameters simultaneously.

The output error approach is the most commonly used methodology for fractional order model identification [10, 17, 31, 39, 40]. Other approaches include:

- an extension [18] of the so-called simplified refined instrumental variable algorithm [41];
- the use of the state variable filter [8];
- fractional Laguerre basis function modeling [3];
- frequency domain identification [26];
- ARX model development using fractional order and orthonormal basis filters [23];
- an integral-based approach [34].

The identification of fractional order nonlinear systems has also been addressed in the literature [19, 33]. The time delay adds an extra degree of complexity to any identification method because it appears nonlinearly. Narang et al. [25] extended the linear filter method [2] to fractional order model identification; their method iteratively estimates the model parameters and the delay. Tavakoli-Kakhki and Tavazoei [36] and Yuan et al. [42] also considered time delay estimation.

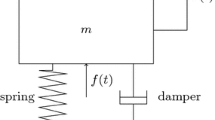

The motivation for addressing the fractional order identification problem comes from the advantages of fractional order models [4, 20] and from their use in real-life applications. Reported applications include:

- thermal processes [12];
- processes involving diffusive mass transfer [5];
- the Archimedes wave swing [38];
- vibration suppression [24];
- health monitoring [15];
- other engineering applications, see, e.g., [22, 40].

Another motivation is the use of fractional order controllers. Examples include the TID controller [16], the \(PI^{\lambda }D^{\mu }\) controller [30], the CRONE controller [29] and the lead-lag compensator [32]. Since their introduction, fractional order representations of processes and controllers have been gaining more and more attention.

The remainder of the article is organized as follows. Section 2 outlines the proposed methodology, including the optimization algorithm, the expressions for the logarithmic derivatives and the initialization procedure. Section 3 presents simulation results, along with the simulation method for fractional order differentiation and integration. Section 4 gives concluding remarks.

2 Mathematical Formulations

The proposed identification method is outlined in this section. The method is based on the output error optimization. Analytical expressions for logarithmic derivatives of the step input required to evaluate the Jacobian and the Hessian are also presented.

For convenience in presentation, both time and Laplace domain expressions are used interchangeably. The Laplace domain expressions are presented in terms of ‘s’ while an equivalent symbol ‘p’ is used to represent the derivative operator in the time domain. Considering that step response methods are typically used for models with parsimonious structures, mathematical derivations are provided for two structures as in (1) and (2). We will refer to these two structures as Class I and Class II models, respectively, throughout the manuscript. Although the method is demonstrated using these two structures, the same approach can be followed for a general structure with more parameters.
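The two structures can be written consistently with three things used later: the parameter vectors in Sects. 2.1.1 and 2.1.2, the denominator \(D(p)\) defined there, and the equivalent form \(G(s)=K/(\tau s^{\alpha }+1)\) used in Sect. 3.2. The form below is a reconstruction from those definitions, not a verbatim copy of (1) and (2). It assumes a denominator with unit leading coefficient and a pure delay factor.

\[
\text{Class I:}\quad G(s,\varvec{\theta })=\frac{b}{s^{\alpha }+a}\,e^{-\delta s},
\qquad
\text{Class II:}\quad G(s,\varvec{\theta })=\frac{b}{s^{\alpha _2}+a_1 s^{\alpha _1}+a_0}\,e^{-\delta s}
\]

With \(\alpha =1\) and \((\alpha _2,\alpha _1)=(2,1)\), these reduce to the first- and second-order integer models that serve as initial guesses in Sect. 2.3.1.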

2.1 Parameter Estimation

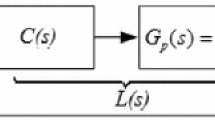

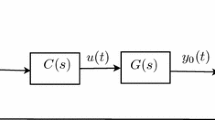

For a single input single output system, the relation between the input and the output can be expressed using the following Laplace domain expression.

where Y(s) and U(s) are the output and the input, respectively. \(G(s,\varvec{\theta })\) is the model transfer function, \(\varvec{\theta } =[\theta _{1}, \ldots , \theta _{n}]\) is the parameter vector and n is the total number of parameters. E(s) represents the noise in the output measurements. For a fractional order model, the parameter vector contains the coefficients of the numerator and denominator polynomials, the time delay and the fractional orders of the derivatives. An identification algorithm aims to estimate \(\varvec{\theta }\) from a set of time domain measurements \([u(t_k)\;y(t_k)]\), \(k=1,2,\ldots ,N\), where N is the number of available data points. The output error approach estimates \(\varvec{\theta }\) by minimizing a norm of the errors between the measured and model outputs.

An equivalent time domain expression for the error is given by

Lower case letters denote the time domain variables. Using the notation \(e_k = e(t_k,\varvec{\theta })\), the output error algorithm aims to minimize the following objective function

Several approaches can be taken to solve the optimization problem. We follow Newton’s algorithm to estimate all the parameters simultaneously. In this approach, the parameter estimate at iteration step \(i+1\) is given by

where \(g(\varvec{\theta })\) is the gradient of \(f(\varvec{\theta })\) given by

with \(\varvec{A}\) being the Jacobian matrix.

The columns of \(\varvec{A}\) are the first derivative vectors \(\nabla e_k\) of the components of \(\varvec{e}\), i.e.,

where \(\theta _j\) is the j-th element of \(\varvec{\theta }\). The Hessian \(\varvec{H}\) is defined as

Evaluating the Hessian requires

The Hessian is therefore given by

where \(\varvec{A^T}\) is the transpose of \(\varvec{A}\), and

The main disadvantage of Newton’s method is that it requires formulae for evaluating the second derivative matrix. However, closely related methods use only first derivatives. One is the finite difference Newton method. It estimates \(\varvec{H}(\varvec{\theta }^i)\) by taking increments in each coordinate direction and differencing the resulting gradient vectors [11]. Another family is the quasi-Newton methods. These approximate \([\varvec{H}(\varvec{\theta })]^{-1}\) by a symmetric positive definite matrix that is updated as the iteration proceeds.

The second term in (13) has the errors \(e_k\) as multipliers. These are reasonably small, especially as the iteration approaches the optimum. This motivates the approximation

Thus, the basic Newton method becomes the Gauss–Newton method when (15) is used. Accordingly, the estimate of \(\varvec{\theta }\) at the \((i+1)\)-th iteration step is given by

The basic Newton method and the Gauss–Newton method may both be unsuitable in many cases, because \(\varvec{H}\) may not be positive definite when \(\varvec{\theta }^i\) is far from the solution. Moreover, convergence may not occur even when \(\varvec{H}\) is positive definite [11, 21]. To avoid the latter case, Newton’s method can be implemented with a line search, in which the Newton correction generates a search direction [11]. Following this approach, (7) is modified as

The Gauss–Newton solution can be modified similarly. The advantages and disadvantages of Newton’s method and the Gauss–Newton method have been widely addressed in the literature [11]. An advantage of the Gauss–Newton method is that the second derivative matrix is approximated using only first-order derivatives. On the other hand, the Gauss–Newton method is equivalent to making a linear approximation of the residuals. Hence it works well only when either the residuals or the degree of nonlinearity is small [11]. A detailed study of the applicability of these two approaches is beyond the scope of this article, but observations from extensive simulations are presented in Sect. 3.
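For reference, the quantities above take the standard least-squares forms below. This assumes the objective is the plain sum of squared output errors; a factor of 1/2 in (9) would only rescale \(g\) and \(\varvec{H}\). Here \(\varvec{A}=[\nabla e_1\;\cdots \;\nabla e_N]\) is \(n\times N\), \(\varvec{R_k}=\nabla ^2 e_k\), and \(\lambda \) is a step factor, with \(\lambda =1\) giving the basic iterations:

\[
\begin{aligned}
f(\varvec{\theta }) &= \sum _{k=1}^{N} e_k^2, \qquad g(\varvec{\theta }) = 2\varvec{A}\varvec{e}, \qquad \varvec{H}(\varvec{\theta }) = 2\Big(\varvec{A}\varvec{A^T} + \sum _{k=1}^{N} e_k \varvec{R_k}\Big),\\
\text{Newton:}\quad \varvec{\theta }^{i+1} &= \varvec{\theta }^{i} - \lambda \,[\varvec{H}(\varvec{\theta }^{i})]^{-1} g(\varvec{\theta }^{i}),\\
\text{Gauss–Newton:}\quad \varvec{\theta }^{i+1} &= \varvec{\theta }^{i} - \lambda \,(\varvec{A}\varvec{A^T})^{-1}\varvec{A}\varvec{e}.
\end{aligned}
\]

The Gauss–Newton line follows from dropping the \(e_k\varvec{R_k}\) terms, so the factor 2 in \(\varvec{H}\) and \(g\) cancels.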

The optimization follows a standard procedure. Starting from an initial guess of the parameters, the Jacobian and the Hessian are evaluated and the parameters are updated iteratively until convergence. The following subsections give the analytical expressions for the matrices required to evaluate the Jacobian and the Hessian.
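The update rules can be made concrete with a minimal Python/NumPy sketch on a deliberately simple, hypothetical problem. It fits \(y=K(1-e^{-t/\tau })\), an integer order model with no delay, so that the first and second derivatives of \(e_k\) can be written by hand. It is not the fractional order Jacobian of this article. The function names `errors_and_derivatives`, `cost` and `fit` are illustrative. The backtracking rule (halve the step until the cost decreases) is one simple line search chosen for this sketch. The Newton branch falls back to the Gauss–Newton matrix whenever the full Hessian is not positive definite.

```python
import numpy as np


def toy_model(theta, t):
    """Hypothetical integer-order step response y = K (1 - exp(-t / tau))."""
    K, tau = theta
    return K * (1.0 - np.exp(-t / tau))


def errors_and_derivatives(theta, t, y):
    """Output errors e_k = y_k - yhat_k, their gradients and Hessian entries."""
    K, tau = theta
    ex = np.exp(-t / tau)
    e = y - K * (1.0 - ex)
    # Row k holds the gradient of e_k, so this N x n array is A^T in the text.
    At = np.column_stack([-(1.0 - ex), K * ex * t / tau**2])
    # Entries of the symmetric 2 x 2 matrices R_k (second derivatives of e_k).
    r_KK = np.zeros_like(t)
    r_Ktau = ex * t / tau**2
    r_tautau = K * ex * (t**2 / tau**4 - 2.0 * t / tau**3)
    return e, At, (r_KK, r_Ktau, r_tautau)


def cost(theta, t, y):
    """Sum of squared output errors; inf if the trial point overflows."""
    with np.errstate(over="ignore", invalid="ignore"):
        e = y - toy_model(theta, t)
        val = float(e @ e)
    return val if np.isfinite(val) else np.inf


def fit(theta0, t, y, method="gauss-newton", max_iter=100):
    """Newton or Gauss-Newton iterations with a backtracking line search."""
    theta = np.asarray(theta0, dtype=float)
    f = cost(theta, t, y)
    for it in range(1, max_iter + 1):
        e, At, (r11, r12, r22) = errors_and_derivatives(theta, t, y)
        H = At.T @ At  # Gauss-Newton matrix A A^T (common factor 2 cancels)
        if method == "newton":
            R = np.array([[r11 @ e, r12 @ e], [r12 @ e, r22 @ e]])
            try:
                np.linalg.cholesky(H + R)  # full Hessian only if positive definite
                H = H + R
            except np.linalg.LinAlgError:
                pass  # otherwise keep the Gauss-Newton approximation
        p = -np.linalg.solve(H, At.T @ e)  # search direction
        lam = 1.0
        while lam > 1e-8:  # halve the step until the cost decreases
            trial = theta + lam * p
            f_trial = cost(trial, t, y)
            if f_trial < f:
                break
            lam *= 0.5
        else:
            return theta, it  # no further decrease possible
        theta, f = trial, f_trial
        if np.linalg.norm(lam * p) < 1e-10 * (1.0 + np.linalg.norm(theta)):
            return theta, it
    return theta, max_iter


t = 0.1 * np.arange(1000)      # 1000 samples, sampling interval 0.1
y = toy_model((1.2, 20.0), t)  # noise-free data with K = 1.2, tau = 20
theta_gn, it_gn = fit((1.0, 10.0), t, y, "gauss-newton")
theta_nt, it_nt = fit((1.0, 10.0), t, y, "newton")
print("Gauss-Newton:", theta_gn, it_gn)
print("Newton:      ", theta_nt, it_nt)
```

On this noise-free toy problem both variants should recover \(K=1.2\) and \(\tau =20\). The residual term \(\sum e_k\varvec{R_k}\) vanishes at the solution, which is why the two schemes behave alike near convergence.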

2.1.1 Class I Model

The parameter vector for the Class I model in (1) is \(\varvec{\theta }=[a\;b\;\delta \;\alpha ]^T\). The column elements of the Jacobian can then be expressed in the Laplace domain as

An equivalent time domain expression is given by

Here, \(\ln (s)\) and \(\ln (p)\) are logarithms of the derivative operator, expressed in the Laplace and time domain, respectively. The logarithmic operation is on the operator s or p. Similarly, the matrix \(\varvec{R_k}\) can be obtained in the time domain as

2.1.2 Class II Model

For the Class II model in (2), the parameter vector is \(\varvec{\theta }=[a_1\;a_0\;b\; \delta \;\alpha _2\;\alpha _1]^T\). Denoting \(D(p)=p^{\alpha _2}+a_1 p^{\alpha _1}+a_0\), the elements of \(\varvec{A}\) and \(\varvec{R_k}\) for the Class II model can be expressed as

Evaluating \(\varvec{A}\) and \(\varvec{R_k}\) requires \(\ln (p)u(t_i)\) as well as \(\ln (p)\ln (p)u(t_i)\). Victor et al. [40] suggested estimating the Jacobian numerically because the logarithm of the derivative operator is not trivial to simulate. Here, analytical expressions are used instead to evaluate the logarithmic derivatives of the input signal.
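Differentiating the model structures directly shows where the logarithmic operator enters. The structures are taken here as \(G=b\,e^{-\delta s}/(s^{\alpha }+a)\) for Class I and \(G=b\,e^{-\delta s}/D(s)\) with \(D(s)=s^{\alpha _2}+a_1 s^{\alpha _1}+a_0\) for Class II. Assuming \(e=y-\hat{y}\), each sensitivity is \(\partial e/\partial \theta _j=-(\partial G/\partial \theta _j)\,u\). For Class I:

\[
\begin{aligned}
\frac{\partial G}{\partial a} &= -\frac{b\,e^{-\delta s}}{(s^{\alpha }+a)^{2}}, &
\frac{\partial G}{\partial b} &= \frac{e^{-\delta s}}{s^{\alpha }+a}, &
\frac{\partial G}{\partial \delta } &= -\frac{b\,s\,e^{-\delta s}}{s^{\alpha }+a}, &
\frac{\partial G}{\partial \alpha } &= -\frac{b\,s^{\alpha }\ln (s)\,e^{-\delta s}}{(s^{\alpha }+a)^{2}},
\end{aligned}
\]

and for Class II:

\[
\begin{aligned}
\frac{\partial G}{\partial a_1} &= -\frac{b\,s^{\alpha _1}e^{-\delta s}}{D(s)^{2}}, &
\frac{\partial G}{\partial a_0} &= -\frac{b\,e^{-\delta s}}{D(s)^{2}}, &
\frac{\partial G}{\partial b} &= \frac{e^{-\delta s}}{D(s)},\\
\frac{\partial G}{\partial \delta } &= -\frac{b\,s\,e^{-\delta s}}{D(s)}, &
\frac{\partial G}{\partial \alpha _2} &= -\frac{b\,s^{\alpha _2}\ln (s)\,e^{-\delta s}}{D(s)^{2}}, &
\frac{\partial G}{\partial \alpha _1} &= -\frac{a_1 b\,s^{\alpha _1}\ln (s)\,e^{-\delta s}}{D(s)^{2}}.
\end{aligned}
\]

Only the order derivatives contain \(\ln (s)\), which corresponds to \(\ln (p)\) in the time domain. Differentiating them once more produces \(\ln (s)\ln (s)\) terms in \(\varvec{R_k}\). This is why both \(\ln (p)u\) and \(\ln (p)\ln (p)u\) are needed.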

2.2 Evaluation of the Logarithmic Derivative

The above methodology applies to any input type. Since this article is concerned with the step input, analytical expressions are derived for the logarithmic derivatives of the step, expressed as in (23),

where h is the size of the step input and \(\varOmega (T)\) is the unit step function defined as

The log derivative, \(\ln (p)\) of a constant, c, is expressed [4, 14] as

where \(\gamma \) is the Euler–Mascheroni constant [27] given by

The Euler–Mascheroni constant is approximately \(\gamma \approx 0.57721\). Following the above expressions, \(\ln (p)\) of a step signal can be obtained as

Evaluating \(\varvec{R_k}\) also requires \(\ln (p) \ln (p)\), which can be obtained from

Babusci and Dattoli [4] derived the logarithmic derivative of the logarithmic function as

where \(\zeta \) is the Riemann zeta function (the special case \(\zeta (s,1)\) of the Hurwitz zeta function) [27], given as

From (30), \(\zeta (2)\) can be obtained as [13]

Using (28) and (29), \(\ln (p) \ln (p)\) for the step input signal can be obtained as
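The step-input results can be checked numerically under one common interpretation, which is an assumption of this sketch. With lower terminal 0, \(p^{\nu }[h\varOmega (t)]=h\,t^{-\nu }/\varGamma (1-\nu )\) for \(t>0\), and \(\ln (p)\) acts as \(\partial /\partial \nu \) evaluated at \(\nu =0\). Differentiating once gives \(-h(\ln t+\gamma )\), because \(\varGamma '(1)=-\gamma \). Differentiating twice gives \(h[(\ln t+\gamma )^2-\zeta (2)]\) with \(\zeta (2)=\pi ^2/6\). The sketch compares these closed forms with central finite differences in \(\nu \). The function names are illustrative.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler–Mascheroni constant


def log_derivative_step(t, h=1.0):
    """ln(p) applied to a step of size h, evaluated at t > 0."""
    return -h * (math.log(t) + EULER_GAMMA)


def log2_derivative_step(t, h=1.0):
    """ln(p) ln(p) applied to a step of size h at t > 0, with zeta(2) = pi^2 / 6."""
    return h * ((math.log(t) + EULER_GAMMA) ** 2 - math.pi**2 / 6.0)


def frac_derivative_step(nu, t, h=1.0):
    """p^nu applied to a step of size h (lower terminal 0): h t^(-nu) / Gamma(1 - nu)."""
    return h * t ** (-nu) / math.gamma(1.0 - nu)


def finite_difference_check(t, h=1.0, d=1e-4):
    """Central differences in nu at nu = 0 approximate ln(p) and ln(p) ln(p)."""
    f_plus = frac_derivative_step(d, t, h)
    f_zero = frac_derivative_step(0.0, t, h)
    f_minus = frac_derivative_step(-d, t, h)
    first = (f_plus - f_minus) / (2.0 * d)
    second = (f_plus - 2.0 * f_zero + f_minus) / d**2
    return first, second


for t in (0.1, 1.0, 10.0, 50.0):
    first, second = finite_difference_check(t)
    print(f"t={t:5.1f}  ln(p): {log_derivative_step(t):+.6f} vs {first:+.6f}  "
          f"ln(p)ln(p): {log2_derivative_step(t):+.6f} vs {second:+.6f}")
```

Both expressions are singular at \(t=0\), so in a sampled implementation they would be evaluated only for \(t_k>0\).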

2.3 Implementation Issues

2.3.1 Initialization

Initialization plays an important role and poses a significant challenge for optimization schemes. In the proposed methodology, the model orders, coefficients and delay must all be initialized.

- Orders: we propose initializing the algorithm with integer values. An order of 1 is used as the initial guess for a Class I model, and a second-order model is used for Class II models.
- Coefficients: we propose estimating an integer order model using a conventional identification method. In this article, the integral equation approach [9] is used, assuming a model without delay; the procedure is detailed below.
- Time delay: the initial guess can be obtained from the step response. We suggest using a small initial value.

To describe the integral equation approach, consider an example differential equation representing the input–output relation of a process.

Here, \(\nu \) is the numerator coefficient and \(\mu _1\) and \(\mu _0\) are the denominator coefficients in the second-order model. The relation can be presented in the equation error form as

The equation is then integrated to get

where, for any variable y(t), \(y^{[j]}(t)\) denotes its j-th order repeated integral over the interval from 0 to t. The estimation equation (35) can be rearranged into a form suitable for a least-squares solution

where

Equation (36) can be written for \(t = t_{1}, t_{2} \ldots t_N\) and combined to give the estimation equation

with

The parameter vector \(\varvec{\vartheta }\) is then obtained as the solution of the least-squares equation as
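A minimal NumPy sketch of this least-squares step for the second-order example follows. It assumes the example equation has the form \(\ddot{y}+\mu _1\dot{y}+\mu _0 y=\nu u\) with zero initial conditions. The repeated integrals are computed with the trapezoidal rule; this rule and the function names are choices made for the sketch, not taken from [9]. Integrating twice gives \(y=-\mu _1 y^{[1]}-\mu _0 y^{[2]}+\nu u^{[2]}\), so each sample contributes one row of the regressor matrix.

```python
import numpy as np


def running_integral(x, dt):
    """Trapezoidal integral of x from 0 to each t_k (zero at t_0 = 0)."""
    return np.concatenate(([0.0], np.cumsum(0.5 * (x[1:] + x[:-1]) * dt)))


def integral_equation_ls(u, y, dt):
    """Least-squares estimate of [mu1, mu0, nu] from sampled u and y.

    Assumes y'' + mu1*y' + mu0*y = nu*u with zero initial conditions, so that
    y = -mu1*y^[1] - mu0*y^[2] + nu*u^[2] holds at every sample.
    """
    y1 = running_integral(y, dt)
    y2 = running_integral(y1, dt)
    u2 = running_integral(running_integral(u, dt), dt)
    Phi = np.column_stack([-y1, -y2, u2])  # one row per sample t_k
    theta, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return theta


# Noise-free test data: y'' + 3y' + 2y = 2u, unit step, y(t) = 1 - 2e^{-t} + e^{-2t}
dt = 0.01
t = dt * np.arange(1001)
u = np.ones_like(t)
y = 1.0 - 2.0 * np.exp(-t) + np.exp(-2.0 * t)
mu1, mu0, nu = integral_equation_ls(u, y, dt)
print(mu1, mu0, nu)  # close to 3, 2, 2
```

For the Class I initialization, the same idea applies with a single integration of \(\dot{y}+a y=b u\), giving \(y=-a\,y^{[1]}+b\,u^{[1]}\).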

2.4 Overall Algorithm

The overall methodology is summarized in Algorithm 1, taking the Class II model as an example. The same algorithm applies to models with other structures when the appropriate equations are used.

3 Simulation Results

3.1 Simulation Environment

For general fractional derivatives and integrals of a function \(\omega (t)\), the Grünwald–Letnikov (GL) definition (41) is commonly used; see, e.g., [28].

Here, \(t_0\) and t are the limits of the operator, \(\eta \) is the step size and \(\rho \) is the order. An order \(\rho >0\) denotes differentiation and \(\rho <0\) denotes integration. Also, \(\lfloor \cdot \rfloor \) denotes the integer part, and

with \(\varGamma (\cdot )\) being Euler’s Gamma function.

For numerical computation, a revised version of (41) presented in [6] is used, where

where \(w_j^{(\rho )}\) can be evaluated recursively from

In this work, MATLAB is used to perform the numerical calculations. The step input is assumed to be noise free. The step responses generated from the corresponding fractional order models are corrupted with white Gaussian noise. Monte Carlo simulations (MCS) are performed by changing the ‘seed’ of the noise signal. The noise-to-signal ratio (NSR) is defined as the ratio of the noise variance to the signal variance. The results presented in this section are from 100 MCS. The reported parameter values are the means of the 100 estimates, given together with their standard deviations. The model structures are assumed to be known.
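The GL computation and the noisy data generation described above can be sketched in Python/NumPy; the article itself uses MATLAB. The sketch makes several assumptions:

- the coefficient recursion is \(w_0^{(\rho )}=1\), \(w_j^{(\rho )}=\big(1-\frac{\rho +1}{j}\big)w_{j-1}^{(\rho )}\);
- initial conditions are zero;
- the Class I model \(p^{\alpha }y+ay=b\,u(t-\delta )\) is solved with an implicit GL scheme;
- the delay is rounded to a whole number of samples.

The Class I form and all function names are illustrative.

```python
import numpy as np


def gl_weights(rho, n):
    """GL coefficients w_j = (-1)^j C(rho, j) via w_0 = 1, w_j = (1 - (rho + 1)/j) w_{j-1}."""
    w = np.empty(n)
    w[0] = 1.0
    for j in range(1, n):
        w[j] = (1.0 - (rho + 1.0) / j) * w[j - 1]
    return w


def gl_operator(omega, rho, eta):
    """Approximate D^rho of omega sampled at t_k = t0 + k*eta (rho < 0: integral).

    Samples before t0 are taken as zero, so D[k] = eta^-rho * sum_j w_j omega[k-j].
    """
    n = len(omega)
    return eta ** (-rho) * np.convolve(gl_weights(rho, n), omega)[:n]


def simulate_class1(a, b, delta, alpha, eta, n, h=1.0):
    """Step response of p^alpha y + a y = b u(t - delta) with zero initial conditions.

    Implicit GL scheme: eta^-alpha * sum_j w_j y_{k-j} + a y_k = b u_k.
    """
    w = gl_weights(alpha, n)
    c = eta ** (-alpha)
    u = np.zeros(n)
    u[int(round(delta / eta)):] = h  # delayed step of size h
    y = np.zeros(n)
    for k in range(n):
        memory = c * np.dot(w[1:k + 1], y[k - 1::-1]) if k > 0 else 0.0
        y[k] = (b * u[k] - memory) / (c + a)
    return y


def add_noise(y, nsr, rng):
    """Add white Gaussian noise with var(noise) / var(y) = nsr."""
    return y + rng.normal(0.0, np.sqrt(nsr * np.var(y)), size=y.shape)


eta = 0.1
y_true = simulate_class1(a=0.05, b=0.06, delta=7.0, alpha=0.7, eta=eta, n=1000)
rng = np.random.default_rng(seed=1)  # changing the seed gives another MCS run
y_meas = add_noise(y_true, nsr=0.1, rng=rng)
```

With \(\alpha =1\), the implicit scheme reduces to the backward Euler method for \(\dot{y}+ay=bu\). The memory term grows with k, so the loop costs \(O(N^2)\) operations.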

3.2 Convergence of Iteration Schemes

The previous section outlines both Newton’s algorithm and the Gauss–Newton algorithm. Convergence issues with both approaches have been discussed in the optimization literature [7, 11]. In this section, a set of simulation results is presented to show the convergence of the two schemes. The following Class I model is used for this purpose.

A set of models with different values of \(\alpha \) is used. For model (46), the true parameter vector is \(\varvec{\theta }^T=[a\;b\;\delta \;\alpha ]=[0.05\;0.06\;7\;\alpha ]\). In the equivalent form \(G(s)=\frac{K}{\tau s^{\alpha } + 1}\), the parameter vector is \([\tau \;K\;\delta \;\alpha ] =[20\;1.2\;7\;\alpha ]\). Although the estimated coefficients are a and b, they are reported as \(\tau \) and K for numerical convenience and ease of interpretation. Each case uses 1000 data points with a sampling interval of 0.1, and the input is a unit step. The parameters are initialized as follows:

- the order is set to 1;
- the coefficients are set to the estimates of an integer order model identified using the integral equation approach;
- the delay is set to 1.

\(\lambda \) is set to 0.5.
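The two parameterizations are related by simple arithmetic. Assuming model (46) has the Class I form with delay factor \(e^{-\delta s}\):

\[
\frac{b}{s^{\alpha }+a}\,e^{-\delta s}=\frac{b/a}{(1/a)\,s^{\alpha }+1}\,e^{-\delta s}
\quad \Rightarrow \quad
\tau =\frac{1}{a}=\frac{1}{0.05}=20,\qquad K=\frac{b}{a}=\frac{0.06}{0.05}=1.2 .
\]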

Figure 1 shows the trajectories of all the parameters and of the error from initialization to convergence. Newton’s algorithm produced smooth trajectories for all the parameters. The Gauss–Newton algorithm, however, required fewer iterations for responses without overshoot. For responses with overshoot (\(\alpha >1\)), both algorithms required almost the same number of iterations.

The above results are obtained using noise-free data. To demonstrate the performance of the two schemes on noisy data, Table 1 shows results for data with an NSR of \(10\%\). Mean values of 100 MCS are presented along with the corresponding standard deviations for model (46) with \(\alpha =1.7\).

These results show that both algorithms perform comparably in terms of the quality of the estimates. The convergence rate is \(100\%\) for both algorithms with an initial delay guess of 1. The convergence rate is defined as the percentage of the 100 MCS runs for which the algorithm converged. To compare the two algorithms in terms of convergence rate, Fig. 2 presents results for model (46) with \(\alpha =1.7\), which represents a step response with overshoot. As seen from the figure, Newton’s algorithm achieved a convergence rate at or close to \(100\%\) for a wide range of initial delay guesses, from 0.1 to 13. For the Gauss–Newton approach, on the other hand, the convergence rate was close to \(100\%\) only for initial delays from 1 to 4. Similar data were generated for a model with \(\alpha =0.7\), which represents a step response without overshoot. In this case, the convergence rates are at or near \(100\%\) for initial delays from 1 to 12 for both algorithms. However, Newton’s algorithm required twice as many iterations as the Gauss–Newton algorithm.

Fig. 2 Convergence rate of Newton’s and the Gauss–Newton algorithms for different initial delays, for model (46) with \(\alpha =1.7\)

Based on extensive simulation results, the following remarks can be made:

- With a suitable initial guess of the delay and the initial assumption of an integer order model, both schemes provide satisfactory estimates of the parameters.
- For responses with overshoot, Newton’s algorithm converges for a wider range of initial delay guesses than the Gauss–Newton scheme.
- For responses without overshoot, the Gauss–Newton algorithm requires fewer iterations.

Based on these observations, the Gauss–Newton method is used for the following studies.

3.3 Effect of Data Quality

Two important quality measures for sampled data, namely the length of data and the noise-to-signal ratio are considered to study the effects of data on parameter estimates.

Table 2 shows the identified parameters and their standard deviations for different data lengths. The NSR is \(10\%\) in all these cases. The results show that satisfactory estimates are obtained for the Class I model with data lengths as short as 100. As expected, the estimates are more consistent for larger data sets. The required number of iterations also decreased slightly as the data length increased.

Figure 3 shows the effect of noise on parameter estimates. A total of 1000 data points are used for all cases. The results show satisfactory performance of the algorithm in terms of mean and standard deviation for NSR as high as \(50\%\).

Fig. 3 Effect of noise on parameter estimates for model (46) with \(\alpha =1.4\)

3.4 Identification of Different Class I Models

Table 3 lists the identification results for a set of Class I models with different orders. The parameters presented are the means of 100 MCS, with the corresponding standard deviations in parentheses. Each case uses an NSR of \(10\%\) and 1000 data points, and the convergence rate is \(100\%\) in every case. The number of iterations varies between 11 and 18, with fewer iterations required for higher values of \(\alpha \). The initial guesses are:

- a time delay of 1 for all cases;
- an integer order of 1;
- coefficients obtained assuming an integer order model.

The mean values of the estimated parameters match the corresponding true values quite well, and the standard deviations are reasonable for an NSR of \(10\%\).

3.5 Identification of Class II Models

Table 4 shows the identification results for a set of Class II models with both integer and fractional orders. For each case, 2000 data points are used, and the data are corrupted with noise at an NSR of \(10\%\). An initial delay guess of 1 and integer orders \([\alpha _2\;\alpha _1]=[2\;1]\) are used for initialization. The convergence rate is \(100\%\) in all cases. The obtained results are quite satisfactory in terms of the means and standard deviations.

4 Concluding Remarks

The step input had not previously been used for the simultaneous estimation of the orders, coefficients and delay of fractional order models. Moreover, no existing optimization method for fractional order identification evaluates analytically the logarithmic derivatives of the input signal, which are required for the Jacobian and the Hessian. This article provides analytical expressions for these logarithmic derivatives for the step input, and for the Jacobian and the Hessian. The resulting optimization scheme therefore does not need to resort to numerical approximations. A simplified initialization procedure is also presented that effectively requires a choice of the delay only, and convergence is observed over a wide range of initial delay guesses. The orders are initially assumed to be integers, and the initial coefficients are obtained assuming an integer order model. Simulation results show the robustness of the optimization scheme in the presence of noise. Satisfactory results are obtained for different models with data lengths as short as 100 and NSRs as high as \(50\%\). The required number of iteration steps is in the range 10–20. The quality of the parameter estimates and the small number of iterations required demonstrate the performance and efficiency of the algorithm.

References

S. Ahmed, Parameter and delay estimation of fractional order models from step response, in 9th IFAC Symposium on Advanced Control of Chemical Processes, Whistler, BC, Canada (2015), pp. 942–947

S. Ahmed, B. Huang, S.L. Shah, Parameter and delay estimation of continuous-time models using a linear filter. J. Process Control 16(4), 323–331 (2006)

M. Aoun, R. Malti, F. Levron, A. Oustaloup, Synthesis of fractional Laguerre basis for system approximation. Automatica 43, 1640–1648 (2007)

D. Babusci, G. Dattoli, On the logarithm of the derivative operator. arXiv e-prints (2011)

A. Benchellal, T. Poinot, C. Trigeassou, Approximation and identification of diffusive interfaces by fractional systems. Signal Process. 86(10), 2712–2727 (2006)

Y. Chen, I. Petras, D. Xue, Fractional order control—A tutorial, in 2009 American Control Conference, St. Louis, USA (2009), pp. 1397–1411

E.K. Chong, S.H. Zak, An Introduction to Optimization (Wiley, New York, 1996)

O. Cois, A. Oustaloup, T. Poinot, J. Battaglia, Fractional state variable filter for system identification by fractional model, in European Control Conference, Porto, Portugal (2001)

J.E. Diamessis, A new method for determining the parameters of physical systems, in Proceedings of the IEEE (1965), pp. 205–206

S.M. Fahim, S. Ahmed, S.A. Imtiaz, Fractional order model identification using the sinusoidal input. ISA Trans. 83, 35–41 (2018). https://doi.org/10.1016/j.isatra.2018.09.009

R. Fletcher, Practical Methods of Optimization. Vol. 1: Unconstrained Optimization (Wiley, New York, 1980)

J.D. Gabano, T. Poinot, H. Kanoun, Identification of a thermal system using continuous linear parameter-varying fractional modelling. IET Control Theory Appl. 5(7), 889–899 (2011)

E.V. Haynsworth, K. Goldberg, Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, chap. Bernoulli and Euler Polynomials–Riemann Zeta Function, U.S. Department of Commerce, NIST, Washington DC (1972) pp. 803–820

J. Hines, Operator mathematics II. Math. Mag. 28(4), 199–207 (1955)

K. Leyden, B. Goodwine, Fractional-order system identification for health monitoring. Nonlinear Dyn. 92(3), 1317–1334 (2018)

B. Lurie, Three-parameter tunable tilt-integral-derivative (TID) controller. US Patent 5371670 (1994)

R. Malti, S. Victor, A. Oustaloup, Advances in system identification using fractional models. J. Comput. Nonlinear Dyn. 3(2), 0214011–7 (2008)

R. Malti, S. Victor, A. Oustaloup, H. Garnier, An optimal instrumental variable method for continuous-time fractional order model identification, in Proceedings 17th IFAC World Congress, Seoul, Korea (2008), pp. 14379–14384

A.K. Mani, M.D. Narayanan, M. Sen, Parametric identification of fractional-order nonlinear systems. Nonlinear Dyn. 93(2), 945–960 (2018)

R. Mansouri, M. Bettayeb, S. Djennoune, Approximation of high order integer systems by fractional order reduced parameter models. Math. Comput. Model. 51, 53–62 (2010)

W.F. Mascarenhas, Newton iterates can converge to non-stationary points. Math. Program. Ser. A 112, 327–334 (2008)

C. Monje, Y. Chen, B. Vinagre, D. Xue, V. Feliu-Batlle, Fractional-order Systems and Controls (Springer, London, 2010)

M. Muddu, A. Narang, S. Patwardhan, Development of ARX models for predictive control using fractional order and orthonormal basis filter parameterization. Ind. Eng. Chem. Res. 48(19), 8966–8979 (2009)

C.I. Muresan, S. Folea, I.R. Birs, C. Ionescu, A novel fractional-order model and controller for vibration suppression in flexible smart beam. Nonlinear Dyn. 93(2), 525–541 (2018). https://doi.org/10.1007/s11071-018-4207-0

A. Narang, S.L. Shah, T. Chen, Continuous-time model identification of fractional-order models with time delays. IET Control Theory Appl. 15(5), 900–912 (2010)

P. Nazarian, M. Haeri, M.S. Tavazoei, Identifiability of fractional-order systems using input output frequency contents. ISA Trans. 49, 207–214 (2010)

K. Oldham, J. Myland, J. Spanier, An Atlas of Functions, 2nd edn. (Springer, New York, 2000)

K. Oldham, J. Spanier, The Fractional Calculus (Academic Press, New York, 1974)

A. Oustaloup, X. Moreau, M. Nouillant, The CRONE suspension. Control Eng. Pract. 4(8), 1101–1108 (1996)

I. Podlubny, Fractional order systems and \( {PI}^{\lambda } {D}^{\mu }\) controllers. IEEE Trans. Autom. Control 44(1), 208–214 (1999)

T. Poinot, J.C. Trigeassou, Identification of fractional systems using an output-error technique. Nonlinear Dyn. 38(1), 133–154 (2004)

H. Raynaud, A. Zergaïnoh, State-space representation for fractional order controllers. Automatica 36, 1017–1021 (2000)

L. Sersour, T. Djamah, M. Bettayeb, Nonlinear system identification of fractional Wiener models. Nonlinear Dyn. 92(4), 1493–1505 (2018). https://doi.org/10.1007/s11071-018-4142-0

M. Tavakoli-Kakhki, M. Haeri, M. Tavazoei, Simple fractional order model structures and their applications in control system design. Eur. J. Control 16(6), 680–694 (2010)

M. Tavakoli-Kakhki, M. Haeri, M.S. Tavazoei, Over- and under-convergent step responses in fractional-order transfer functions. Trans. Inst. Meas. Control 32(4), 376–394 (2010)

M. Tavakoli-Kakhki, M. Tavazoei, Estimation of the order and parameters of fractional order models from a noisy step response data. J. Dyn. Syst. Meas. Control 136(3), 0310201–6 (2014)

M.S. Tavazoei, Overshoot in the step response of fractional-order control systems. J. Process Control 22, 90–94 (2012)

D. Valerio, M.D. Ortigueira, J.S. da Costa, Identifying a transfer function from a frequency response. J. Comput. Nonlinear Dyn. 3(2), 0212071–7 (2008)

S. Victor, R. Malti, Model order identification for fractional-order models, in European Control Conference, Zurich, Switzerland (2013), pp. 3470–3475

S. Victor, R. Malti, H. Garnier, A. Oustaloup, Parameter and differential order estimation of fractional-order models. Automatica 49, 926–935 (2013)

P.C. Young, Optimal IV identification and estimation of continuous-time TF models, in IFAC World Congress, Barcelona, Spain (2002), pp. 337–358

L. Yuan, Q. Yang, C. Zeng, Chaos detection and parameter identification in fractional-order chaotic systems with delay. Nonlinear Dyn. 73(1), 439–448 (2013)

Acknowledgements

The author acknowledges financial support from the Research and Development Corporation (RDC) of Newfoundland and Labrador and the Natural Sciences and Engineering Research Council (NSERC) of Canada.


Ethics declarations

Conflicts of interest

The author declares that there is no conflict of interest.

Human Participants

The study does not involve human participants or animals. The manuscript has not been published previously, in part or in full.


About this article

Cite this article

Ahmed, S. Step Response-Based Identification of Fractional Order Time Delay Models. Circuits Syst Signal Process 39, 3858–3874 (2020). https://doi.org/10.1007/s00034-020-01344-7


DOI: https://doi.org/10.1007/s00034-020-01344-7