Abstract

Autapses are a special class of synapses in which a neuron's axon, instead of contacting the dendrites of other neurons, forms synapses onto its own cell body. The output signal of the neuron is thus fed back to itself, allowing its firing behavior to be self-tuned. Autapses can adjust the firing precision of a neuron and regulate the synchronization of neuronal systems. In this paper, we investigated the information capacity and energy efficiency of a Hodgkin–Huxley neuron during noisy signal transmission regulated by a delayed inhibitory chemical autapse, for different feedback strengths and delay times. We found that information transmission, coding efficiency, and energy efficiency are maximized when the delay time is half of the input signal period. This maximum becomes more pronounced as the inhibitory strength of the autapse increases. We therefore propose that the inhibitory autaptic structure can serve as a mechanism for energy-efficient neural information processing.


1 Introduction

Neurons are the basic units of neural systems; information is transmitted from one neuron to other neurons in the form of action potentials (APs) via synapses. Conduction is unidirectional: APs can only be transmitted from the axon of a neuron to the dendrites or cell bodies of other neurons and cannot be transmitted in the opposite direction, because neurotransmitters are released only at the axon terminal of the presynaptic neuron. However, anatomical experiments have found that some neurons in the brain have a special structure in which the axon terminal connects not to the dendrites of other neurons but to the neuron itself. Neurons with this type of structure are called autaptic neurons. Autaptic neurons are found in a variety of brain regions, for example, the rat hippocampus [1], pyramidal cells [2], the visual cortex of cats [3, 4], and the substantia nigra and white matter [5]. In the cerebral cortex, a class of inhibitory neurons called “fast-spiking cells” forms abundant autapses. Because they provide self-limiting feedback, inhibitory autapses are the more common type. In contrast, excitatory autapses generate positive feedback that can lead to runaway excitation and epileptic events, which is clearly not a desirable brain state [6]. The arrival of APs at synapses causes neurotransmitters to be released into the synaptic cleft, resulting in depolarization or hyperpolarization of the postsynaptic membrane potential. Accordingly, autapses can be divided into two categories [7, 8]: excitatory and inhibitory.

Recent experiments have shown that the autaptic structure plays an important role in the regulation of brain function [9]. In a physiological experiment, Saada et al. [10] found that the positive feedback caused by excitatory autapses can drive the B31/B32 neurons of Aplysia into persistent activity, which these neurons need in order to initiate and maintain components of feeding behavior. Thus, through self-adjustment in some areas, the autaptic structure contributes to controlling the continuous operation of a nervous system [11, 12]. Other studies have revealed the effects of the autaptic structure on neuronal dynamics [13,14,15,16,17], bifurcations and enhancement of neuronal firing induced by negative feedback from an inhibitory autapse [18, 19], and the influence of ion channel noise on autaptic neurons [20]. For example, in fast-spiking interneurons, autaptic inhibition influences the AP threshold, input–output gain, firing patterns, and multistability regions under different parameter settings [21]. The inhibitory chemical autapse can maintain the precision of a neuron's firing sequence and thereby control the synchronization of neuronal APs [22]. Autaptic self-feedback adjusts synchronization transitions in Newman–Watts neuronal networks with time delays [23,24,25] and mediates the propagation of weak rhythmic activity across small-world neuronal networks [26, 27]. Stochastic resonance occurring at the beat frequency has been studied in neural systems at both the single-neuron and the population level [28]. Multiple synchronous behaviors in inhibitory coupled neurons can be controlled by the time delay and coupling strength [29], and autapses also regulate irregular firing behavior [30]. Depending on the type of autapse, a single neuron can generate irregular firings, and autaptic innervation can tame and modulate neural dynamics. Beyond single neurons, information transmission and autapse-induced synchronization have been studied in coupled neuronal networks [31,32,33].

In the nervous system, information processing costs an enormous amount of metabolic energy [34, 35]. More than \(70\%\) of the cortical energy budget is used directly for neural signal processing within cortical circuits at the subcellular level [36], for example, for opening and closing ion channels during APs and for the neurotransmitter release underlying synaptic transmission [37, 38]. This huge energy consumption suggests that the brain must operate energy efficiently, i.e., it should process as much information as possible at the lowest energy cost. It has been argued that maximizing the ratio of coding capacity to energy cost is one of the key principles shaping the evolution of nervous systems under selective pressure [39, 40]. Over the past decades, many studies have revealed strategies that neural systems employ to operate energy efficiently, including optimizing ion channel kinetics [41, 42], developing a warm body temperature to minimize the energy cost of single APs [43, 44], optimizing the number of channels on a single neuron and the number of neurons in a network [45,46,47], releasing neurotransmitters with low probability at synapses [48, 49], and representing information with sparse spikes [50,51,52].

In this paper, we study the information transmission capacity and energy efficiency of a single Hodgkin–Huxley (HH) neuron with an inhibitory chemical autapse. Our simulation results show that, at a given autaptic coupling strength, the effective information transmitted, the coding efficiency, and the energy efficiency of the system are all maximized when the delay time of the autapse is half of the input signal period. In addition, at a given delay time, the stronger the autaptic coupling, the more pronounced the enhancement of information transmission and energy efficiency.

The paper is organized as follows. Sect. 2 describes the chemical autaptic HH neuron model and introduces the methods for calculating the information entropy and the energy efficiency. In Sect. 3, we first demonstrate the firing dynamics of the HH neuron with excitatory and inhibitory autapses and then focus on the information capacity and energy cost of the HH neuron with inhibitory autapses, showing how the autaptic strength and the delay time influence information transmission and energy efficiency. Conclusions are drawn in Sect. 4.

2 Model and methods

2.1 The chemical autaptic HH model

The chemical autaptic HH neuron model is as follows:

where V is the membrane potential of the neuron, C is the membrane capacitance, \(I_{\mathrm{ext}}\) is the external stimulus current, and \(I_{\mathrm{asy}}\) is the autaptic current. m, h and n are the gating variables of the sodium and potassium channels. \(\alpha _{i} (V)\) and \(\beta _{i} (V)\) (\(i=m,h,n\)) are the voltage-dependent opening and closing rates, respectively, which read

The explicit form of the autaptic current \(I_{\mathrm{asy}}\) is defined as follows:

where \(g_{\mathrm{asy}}\) is the maximal conductance and \(E_{\mathrm{A}}\) is the reversal potential of the autapse. \(t_{\mathrm{spikes}}\) is the presynaptic spike time, and \(\tau \) is the delay time caused by the propagation of the AP along the axon from the initiation zone to the synapse [53]. The sum runs over the train of presynaptic spikes occurring at \(t_{\mathrm{spikes}}\). The dynamics of the postsynaptic conductance are as follows:

where \(\tau _{d}\) and \(\tau _{r}\) are the decay and rise time constants of this function, respectively, and determine the duration of the synaptic response.
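The delayed autaptic current can be sketched in Python under stated assumptions. The sketch assumes a difference-of-exponentials conductance with rise time \(\tau _{r}\) and decay time \(\tau _{d}\), normalized to a peak of 1, and a current \(I_{\mathrm{asy}} = -g_{\mathrm{asy}} \sum s(t - t_{\mathrm{spikes}} - \tau )(V - E_{\mathrm{A}})\) added to the right-hand side of the voltage equation. The function names, the normalization, the sign convention and the example time constants are hypothetical and may differ from the paper's exact form.

```python
import math

import numpy as np


def conductance_kernel(t, tau_r, tau_d):
    """Assumed difference-of-exponentials kernel s(t), peak normalised to 1.

    Requires tau_d > tau_r. Negative lags are clamped to 0, where s(0) = 0,
    so the kernel is zero before the delayed spike reaches the autapse."""
    t = np.maximum(np.asarray(t, dtype=float), 0.0)
    t_peak = tau_r * tau_d / (tau_d - tau_r) * math.log(tau_d / tau_r)
    norm = math.exp(-t_peak / tau_d) - math.exp(-t_peak / tau_r)
    return (np.exp(-t / tau_d) - np.exp(-t / tau_r)) / norm


def autaptic_current(t, v, spike_times, g_asy, e_a, tau, tau_r, tau_d):
    """I_asy(t) = -g_asy * sum_k s(t - t_k - tau) * (V - E_A).

    Positive values depolarise; with E_A below V the current is inhibitory."""
    if len(spike_times) == 0:
        return 0.0
    lag = t - np.asarray(spike_times, dtype=float) - tau
    return float(-g_asy * conductance_kernel(lag, tau_r, tau_d).sum() * (v - e_a))


# Hypothetical time constants tau_r = 0.5 ms, tau_d = 3 ms; one spike at 10 ms,
# delay tau = 35 ms, evaluated at the conductance peak with V = -65 mV.
t_peak = 0.5 * 3.0 / 2.5 * math.log(6.0)
t_eval = 10.0 + 35.0 + t_peak
print(autaptic_current(t_eval, -65.0, [10.0], 1.0, -80.0, 35.0, 0.5, 3.0))  # about -15 (inhibitory)
print(autaptic_current(t_eval, -65.0, [10.0], 1.0, 0.0, 35.0, 0.5, 3.0))    # about +65 (excitatory)
```

The same function covers both modes discussed in Sect. 3.1: only the reversal potential \(E_{\mathrm{A}}\) changes the sign of the current.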

The external stimulus current \(I_{\mathrm{ext}}\) is a train of pulse-like current stimuli whose onset times follow a Poisson process with a mean inter-pulse interval of 50 ms:

where \(I^{j}(t)\) is the single pulse current in the following form:

where j indexes the pulses, \(I_{0}\) is the intensity of the pulse currents, with \(I_{0}= 10~\upmu \mathrm{A}\), \(t_{s}^{j}\) is the time at which the released neurotransmitter reaches the postsynaptic membrane (the start time of a pulse), \(t_{c}^{j}\) is the pulse off time (the end time of a pulse, \(t_{c}^{j}=t_{s}^{j}+\varDelta t\), \(\varDelta t=8\) ms), and the time constant \(\sigma \) is 2 ms.
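The stimulus can be generated as in the following Python sketch, which is hypothetical in its pulse shape. Onset times \(t_{s}^{j}\) are drawn from a homogeneous Poisson process (exponentially distributed gaps with a 50 ms mean), and each pulse is assumed to be an 8 ms rectangular pulse whose rising and falling edges relax with the time constant \(\sigma = 2\) ms. Only \(I_{0}\), \(\varDelta t\), \(\sigma \) and the 50 ms mean come from the text; the function names and the edge shape are assumptions.

```python
import numpy as np


def poisson_onsets(duration, mean_interval, rng):
    """Pulse onset times (ms) of a homogeneous Poisson process."""
    onsets = []
    t = rng.exponential(mean_interval)
    while t < duration:
        onsets.append(t)
        t += rng.exponential(mean_interval)
    return np.array(onsets)


def pulse_current(t, onsets, i0=10.0, width=8.0, sigma=2.0):
    """Sum of hypothetical smoothed rectangular pulses at time t (ms).

    Each pulse rises as 1 - exp(-(t - t_s)/sigma) on [t_s, t_c], with
    t_c = t_s + width, then decays as exp(-(t - t_c)/sigma)."""
    total = 0.0
    for t_s in onsets:
        t_c = t_s + width
        if t_s <= t <= t_c:
            total += i0 * (1.0 - np.exp(-(t - t_s) / sigma))
        elif t > t_c:
            total += i0 * (1.0 - np.exp(-width / sigma)) * np.exp(-(t - t_c) / sigma)
    return total


# Usage: 1 s of stimulus sampled every 0.1 ms.
onsets = poisson_onsets(1000.0, 50.0, np.random.default_rng(0))
trace = [pulse_current(t, onsets) for t in np.arange(0.0, 1000.0, 0.1)]
```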

A zero-mean Gaussian white noise \(\xi (t)\) is also added to Eq. (1) to mimic the background activity from other neurons,

where D is the noise density, set to \(D=2\) by default.

The stochastic equations were solved using the forward Euler method with a time step of 0.01 ms. The parameters used in the calculations are listed in Table 1.
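A single integration step can be sketched in Python as follows. The sketch assumes the standard textbook HH rate functions and parameters (resting potential near −65 mV, \(g_{\mathrm{Na}}=120\), \(g_{\mathrm{K}}=36\), \(g_{\mathrm{L}}=0.3~\mathrm{mS/cm}^{2}\), \(C=1~\upmu \mathrm{F/cm}^{2}\)), which may differ from the forms and Table 1 values used in the paper. It performs one forward-Euler (Euler–Maruyama) step with the white noise entering the voltage equation only; the noise scaling assumes \(\langle \xi (t)\xi (t')\rangle = 2D\delta (t-t')\), a convention the text does not state. All function names are hypothetical.

```python
import math

import numpy as np

# Assumed textbook HH parameters (not necessarily the paper's Table 1).
C_M = 1.0                              # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials, mV


def _vtrap(x, y):
    """x / (exp(x/y) - 1), with the removable singularity at x = 0 handled."""
    if abs(x / y) < 1e-6:
        return y * (1.0 - x / (2.0 * y))
    return x / math.expm1(x / y)


def rates(v):
    """Opening (alpha) and closing (beta) rates in 1/ms for m, h and n."""
    a_m = 0.1 * _vtrap(-(v + 40.0), 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _vtrap(-(v + 55.0), 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return (a_m, b_m), (a_h, b_h), (a_n, b_n)


def steady_state(v):
    """Steady-state gating values x_inf = alpha / (alpha + beta)."""
    return tuple(a / (a + b) for a, b in rates(v))


def derivatives(state, i_ext, i_asy):
    """Deterministic right-hand side for state = [V, m, h, n]."""
    v, m, h, n = state
    (a_m, b_m), (a_h, b_h), (a_n, b_n) = rates(v)
    i_ion = (G_NA * m**3 * h * (v - E_NA)
             + G_K * n**4 * (v - E_K)
             + G_L * (v - E_L))
    return np.array([
        (-i_ion + i_ext + i_asy) / C_M,
        a_m * (1.0 - m) - b_m * m,
        a_h * (1.0 - h) - b_h * h,
        a_n * (1.0 - n) - b_n * n,
    ])


def euler_maruyama_step(state, dt, i_ext, i_asy, noise_d, rng):
    """Forward-Euler step with additive white noise on V only."""
    new_state = state + dt * derivatives(state, i_ext, i_asy)
    new_state[0] += math.sqrt(2.0 * noise_d * dt) * rng.standard_normal() / C_M
    return new_state


# Usage: 100 ms of noisy, unstimulated dynamics with dt = 0.01 ms and D = 2.
rng = np.random.default_rng(0)
state = np.array([-65.0, *steady_state(-65.0)])
for _ in range(10_000):
    state = euler_maruyama_step(state, 0.01, 0.0, 0.0, 2.0, rng)
```

In a full simulation, `i_ext` and `i_asy` would be recomputed at every step from the pulse train and the delayed autaptic current.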

2.2 Methods of the information entropy and energy efficiency

To quantify the information transmission ability of the autaptic HH neuron model, we use the “direct method” proposed by Strong et al. [54] to measure the information entropy rate of the spike train generated by the neuron. First, the spike train is divided into time bins of length \(\varDelta t\) and discretized into a binary string composed of the elements “0” (no AP) and “1” (AP). Next, the binary sequence is scanned with a sliding time window T and converted into a sequence of “words” of length k (\(k=\frac{T}{\varDelta t}\)). Finally, after obtaining the probability \(P_{i}\) of the i-th word, the total entropy rate is calculated as

\[H_{\mathrm{total}} = -\frac{1}{T}\sum _{i} P_{i}\log _{2}P_{i}.\]

The time-dependent word probability distribution at a given time is estimated over all repeated presentations of the stimulus [54]. At each time t, we calculate the entropy of the words and then average these entropies over all times,

\[H_{\mathrm{noise}} = \left< -\frac{1}{T}\sum _{i} P_{i}(t)\log _{2}P_{i}(t) \right> _{t},\]

where \(< \ldots >_{t}\) denotes the average over all times t.

The average information rate that the neuron's spike trains encode about the stimulus is then calculated as the difference between \(H_{\mathrm{total}}\) and \(H_{\mathrm{noise}}\),

\[I = H_{\mathrm{total}} - H_{\mathrm{noise}}.\]

The coding efficiency is defined as the ratio of the mutual information rate I to the total entropy rate \(H_{\mathrm{total}}\), i.e., \(I/H_{\mathrm{total}}\).

The coding efficiency ranges between zero and one. If all of the spike train structure is utilized to encode information regarding the stimulus, then the noise entropy is zero, and the efficiency measure equals one; on the contrary, if the spike train carries no information regarding the stimulus, then the coding efficiency is zero.
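The direct-method quantities \(H_{\mathrm{total}}\), \(H_{\mathrm{noise}}\), I and the coding efficiency can be estimated from repeated trials as in the Python sketch below. It assumes the responses are already binarized (one row per repeated presentation of the same stimulus, one column per bin), estimates \(H_{\mathrm{total}}\) from the word distribution pooled over all trials and times (a common variant of the procedure in [54]), estimates \(H_{\mathrm{noise}}\) from the across-trial word distribution at each time, and omits the extrapolation to infinite data size and word length used by Strong et al. The helper names are hypothetical.

```python
from collections import Counter

import numpy as np


def binarize(spike_times, duration, bin_width):
    """1 in every bin of width bin_width that contains at least one spike."""
    n_bins = int(round(duration / bin_width))
    binary = np.zeros(n_bins, dtype=np.int8)
    idx = (np.asarray(spike_times, dtype=float) / bin_width).astype(int)
    binary[idx[(idx >= 0) & (idx < n_bins)]] = 1
    return binary


def word_entropy(counter):
    """Shannon entropy (bits) of an empirical word distribution."""
    counts = np.array(list(counter.values()), dtype=float)
    p = counts / counts.sum()
    return float(np.sum(p * np.log2(1.0 / p)))


def direct_method(trials, k, bin_width_s):
    """Return (H_total, H_noise, I, coding efficiency); rates in bits/s.

    trials: 2-D 0/1 array, shape (n_trials, n_bins), same stimulus each row.
    k: word length in bins, so the word duration is T = k * bin_width_s."""
    trials = np.asarray(trials)
    word_time = k * bin_width_s
    n_words = trials.shape[1] - k + 1
    pooled = Counter()
    per_time = [Counter() for _ in range(n_words)]
    for row in trials:
        for t in range(n_words):
            word = tuple(row[t:t + k])  # sliding window of k bins
            pooled[word] += 1
            per_time[t][word] += 1
    h_total = word_entropy(pooled) / word_time
    h_noise = float(np.mean([word_entropy(c) for c in per_time])) / word_time
    info = h_total - h_noise
    efficiency = info / h_total if h_total > 0 else 0.0
    return h_total, h_noise, info, efficiency


# Identical trials have no trial-to-trial variability: H_noise = 0, efficiency = 1.
pattern = np.array([0, 1] * 50, dtype=np.int8)
print(direct_method(np.tile(pattern, (5, 1)), k=2, bin_width_s=0.001))
```

Because the pooled word distribution is the average of the per-time distributions, the concavity of entropy guarantees \(H_{\mathrm{noise}} \le H_{\mathrm{total}}\) in this estimator, so the coding efficiency stays between zero and one.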

Generating APs requires an energy supply, which is provided by hydrolyzing ATP molecules to restore the ion concentrations inside and outside the cell. Normally, the energy cost is estimated as the total ATP consumption, obtained by counting the Na\(^{+}\) ions entering the cell [55]. However, the energy cost can also be estimated from the equivalent electrical circuit of the HH model, an approach argued to be more accurate than the Na\(^{+}\) counting method. The energy consumption rate of the HH neuron is then calculated following [56].
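For comparison, the Na\(^{+}\)-counting estimate mentioned above can be sketched in Python; this is not the equivalent-circuit method that the paper adopts. The sketch assumes an HH-style Na\(^{+}\) current density trace in \(\upmu \mathrm{A/cm}^{2}\) (inward current negative), a membrane area in \(\mathrm{cm}^{2}\), and the standard stoichiometry of the Na\(^{+}\)/K\(^{+}\)-ATPase, which extrudes 3 Na\(^{+}\) ions per ATP hydrolyzed. The function name is hypothetical.

```python
import numpy as np

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
NA_PER_ATP = 3  # Na+/K+-ATPase: 3 Na+ pumped out per ATP hydrolysed


def atp_from_sodium_current(i_na, dt_ms, area_cm2):
    """Estimate the ATP molecules needed to pump back the Na+ that entered.

    i_na: Na+ current density trace in uA/cm^2 (inward current negative).
    dt_ms: sample spacing in ms; area_cm2: membrane area in cm^2."""
    inward = np.minimum(np.asarray(i_na, dtype=float), 0.0)  # ignore outward samples
    charge_c = -inward.sum() * dt_ms * area_cm2 * 1e-9  # 1 uA * 1 ms = 1e-9 C
    n_sodium = charge_c / ELEMENTARY_CHARGE
    return n_sodium / NA_PER_ATP


# Usage: a constant 1 uA/cm^2 inward Na+ current for 1 ms through 1e-5 cm^2.
print(f"{atp_from_sodium_current([-1.0] * 100, 0.01, 1e-5):.3e}")  # prints 2.081e+04
```

This count ignores Na\(^{+}\) entry that overlaps with K\(^{+}\) efflux, one reason the equivalent-circuit approach is argued to be more accurate.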

Because each AP leads to one synaptic event at the autapse, we assume that each synaptic event costs \(\alpha \) times the energy \(E_{\mathrm{sgl}}\) of one AP. \(E_{\mathrm{sgl}}\) is estimated by integrating Eq. (20) over the duration of one AP. Thus, the energy cost per second of a spike train can be estimated as follows:

where E is E(t) integrated over the entire period of the spike train, n is the number of spikes in the spike train, and \({<}...{>}_{t}\) denotes the average over the entire period of the spike train.
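One reading of this estimate is sketched below in Python, together with the energy efficiency defined next (mutual information rate divided by energy cost per second). The sketch assumes that the neuronal part of the cost is the time-averaged energy consumption rate and that each of the n spikes adds one synaptic event costing \(\alpha E_{\mathrm{sgl}}\). The power trace would come from the HH energy equation of [56]; here it is simply an input array, and all names are hypothetical.

```python
import numpy as np


def single_spike_energy(power_trace, dt_s, start, stop):
    """E_sgl: integrate the power over the sample window [start, stop) of one AP."""
    return float(np.sum(np.asarray(power_trace, dtype=float)[start:stop]) * dt_s)


def energy_cost_per_second(power_trace, dt_s, n_spikes, alpha, e_sgl):
    """Assumed form: <dE/dt>_t + alpha * E_sgl * (spikes per second).

    power_trace: energy consumption rate sampled every dt_s seconds."""
    power_trace = np.asarray(power_trace, dtype=float)
    duration = power_trace.size * dt_s
    neuronal = power_trace.mean()                     # time-averaged rate
    synaptic = alpha * e_sgl * n_spikes / duration    # autaptic events
    return float(neuronal + synaptic)


def energy_efficiency(info_rate, cost_per_second):
    """Information per unit energy: mutual information rate / cost per second."""
    return info_rate / cost_per_second


# Usage: constant power 2 over 1 s, 10 spikes, alpha = 0.5, E_sgl = 4.
cost = energy_cost_per_second(np.full(1000, 2.0), 1e-3, 10, 0.5, 4.0)
print(cost)  # prints 22.0
```

With this form, a larger \(\alpha \) weights the spike count more heavily, which is consistent with the larger contrast in energy cost reported for larger \(\alpha \) in Sect. 3.3.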

Finally, the energy efficiency, which measures how much information the system encodes in the spike train per unit of energy consumed, is defined as the ratio of the mutual information rate I to the energy cost per second.

3 Results and discussion

In the following, we vary the delay time \(\tau \) of the autapse between 0 and 60 ms (a range that includes the 50 ms mean inter-pulse interval of the input) and the coupling strength \(g_{\mathrm{asy}}\) between 0 and \(3~\mathrm{mS/cm}^{2}\), and calculate the corresponding information capacity and energy cost to investigate how the delay time and coupling strength affect the energy efficiency of autaptic neurons.

3.1 Dynamical behaviors of the chemical autapse HH model

First, we set the reversal potential of the autapse to \(E_{\mathrm{A}}=0\) mV so that it induces excitatory autaptic currents. As shown in Fig. 1a, b, the APs evoked by the input (spikes at \(t=100\) ms and \(t=500\) ms, green line in Fig. 1a) generate excitatory autaptic currents at approximately \(t=400\) ms and \(t=800\) ms, given the delay time \(\tau =300\) ms (Fig. 1b). These excitatory currents evoke two further APs at \(t=400\) ms and \(t=800\) ms (blue line in Fig. 1a). In turn, the AP induced by the autaptic current at \(t=400\) ms evokes another AP at \(t=700\) ms (Fig. 1a). This positive feedback therefore keeps increasing the spike rate until the refractory period of the neuron limits the number of spikes per unit time. In contrast, for the inhibitory autapse with reversal potential \(E_{\mathrm{A}}=-80\) mV (Fig. 1c, d), the input-evoked APs (Fig. 1c) generate inhibitory autaptic currents (Fig. 1d) that hyperpolarize the membrane potential. At first sight, therefore, this negative feedback appears unable to play a constructive role in neural information processing.
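The timing argument for the excitatory case can be checked with a deliberately idealized Python sketch. It assumes that every spike evokes exactly one further spike \(\tau \) later, ignoring refractoriness, noise and any failure of the autaptic current to reach threshold; the function name is hypothetical. With inputs at 100 and 500 ms and \(\tau =300\) ms, it reproduces the spikes at 400, 700 and 800 ms described above and shows how each input spike seeds a chain of delayed echoes.

```python
def excitatory_cascade(input_spikes, tau, t_max):
    """Spike times (ms) when each spike re-triggers one spike tau ms later.

    Idealised: no refractory period, no noise, every autaptic event fires."""
    spikes = set()
    pending = [t for t in input_spikes if t <= t_max]
    while pending:
        t = pending.pop()
        if t in spikes:
            continue
        spikes.add(t)
        if t + tau <= t_max:
            pending.append(t + tau)  # delayed autaptic echo of this spike
    return sorted(spikes)


print(excitatory_cascade([100, 500], tau=300, t_max=1000))
# prints [100, 400, 500, 700, 800, 1000]
```

In the HH model, the refractory period eventually caps this growth, which is why the spike rate saturates rather than diverging.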

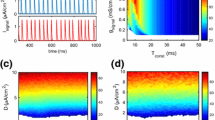

However, when background noise is taken into account, the noise can induce spontaneous firings. As shown in the raster plot of 200 neurons receiving the same inputs (Fig. 2), spontaneous APs occur between the input-induced APs. We then calculated the firing probability of the spontaneous APs within the mean inter-pulse interval and found that, when the delayed autapse is added (Fig. 2c, d), there are fewer spontaneous APs, because some of them are suppressed by the inhibitory autaptic currents; moreover, the distribution of the spontaneous APs is narrower than without the autapse (Fig. 2a, b). The narrower distribution implies less variability in the firing sequences of the autaptic neurons; the underlying mechanism is explained below.

Fig. 1 The response of the chemical autaptic HH model to square-wave stimulus currents. Panels a and b show the membrane potential and the autaptic current, respectively, of the neuron in the excitatory autaptic mode, with autaptic strength \(g_{\mathrm{asy}}=0.3~\mathrm{mS/cm}^{2}\). Panels c and d show the membrane potential and the autaptic current, respectively, of the neuron in the inhibitory autaptic mode, with \(g_{\mathrm{asy}}=1~\mathrm{mS/cm}^{2}\). In all panels, the intensity of the external stimulus current is \(I_{0}=10~\upmu \)A and the autaptic delay time is \(\tau =300\) ms. (Color figure online)

Fig. 2 Firing raster of the inhibitory chemical autaptic HH model driven by pulse stimulus currents, and the firing probability of the spontaneous APs within the mean inter-pulse interval. a Firings of 200 neurons and b the firing probability for autaptic coupling strength \(g_{\mathrm{asy}}=0~\mathrm{mS/cm}^{2}\) and delay time \(\tau =0\) ms. c Firings of 200 neurons and d the firing probability for \(g_{\mathrm{asy}}=3~\mathrm{mS/cm}^{2}\) and \(\tau =35\) ms. In all panels, the intensity of the external stimulus current is \(I_{0}=10~\upmu \)A and the noise density is \(D=2\).

3.2 Information transmission

As demonstrated above, the inhibitory autaptic current induced by the spikes in response to external signal will act on the neuron itself. This negative feedback loop will finally have an impact on the firing dynamics, and thus the information transmission of the neuron.

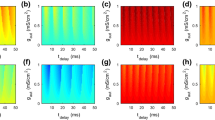

The dependence of the total entropy rate on the autaptic strength and the delay time is shown in Fig. 3. The total entropy rate is largest when the autaptic strength is zero, i.e., when no autaptic current acts on the neuron. Once the inhibitory autaptic current takes effect, it reduces the excitability of the neuron. However, even in the presence of the autaptic current, there are two cases in which the inhibitory effect is masked by the APs themselves. In the first case, the delay time is very short, so the inhibitory current induced by an AP acts on that same AP almost immediately. In the second case, the delay \(\tau \) is approximately 35 ms, so there is a high probability that the inhibitory current acts on the AP induced by the next pulse input. In both cases, the inhibitory current arrives after the membrane potential has crossed the threshold and before the refractory period has ended, when the membrane potential is not disturbed by further inputs. Therefore, the total entropy rate is almost the same as without the autapse.

When the autaptic strength is nonzero, the total entropy rate exhibits two minima, one around \(\tau = 18\) ms and the other around \(\tau = 50\) ms. Both minima deepen as the autaptic strength increases, because the autapse then generates a stronger inhibitory current. The first minimum, at approximately \(\tau = 18\) ms, is mainly caused by the suppression of spontaneous firings by the inhibitory autaptic current. After the refractory period of an AP evoked by a pulse input ends, noise alone can evoke spontaneous firings. In principle, these spontaneous APs can fire at any time, with equal probability, in the interval between two input-evoked APs. An input-evoked AP can therefore suppress, via its inhibitory autaptic current, a spontaneous AP that would occur shortly after it, provided that the delay time matches the interval between the two APs. However, a spontaneous AP cannot be suppressed by an autapse with a longer delay, because by the time the inhibitory current arrives, the membrane potential has already crossed the threshold. Therefore, between the end of the refractory period of the input-evoked AP and the onset of the autaptic current, there is a time window in which spontaneous APs can occur. The longer the delay time, the wider this window and the more spontaneous APs can occur. As a result, the total entropy rate increases again as the delay time increases. Increasing the delay time further causes the total entropy rate to drop again, around \(\tau = 50\) ms. This second minimum occurs mainly because the inhibitory current suppresses the subsequent input-evoked APs when the inter-pulse intervals are less than the mean value of 50 ms.

As discussed above, the inhibitory autaptic current suppresses the noise-evoked spontaneous firings in the interval between two inputs, thus reducing the trial-to-trial variability of the spike trains. We therefore expect the noise entropy rate to decrease in this range of delay times, as seen in Fig. 4. Finally, the mutual information rate I, which is the difference between the total entropy rate and the noise entropy rate, exhibits a maximum when the delay time is half of the mean inter-pulse interval of the inputs (Fig. 5). Note that introducing the inhibitory autaptic current enhances the mutual information rate relative to the case without autapse, and increasing the autaptic strength increases it further. As discussed above, the mismatch between the refractory period and the delay time of the autapse leaves a narrow time window in which noise-induced APs can be generated. Spontaneous APs generated in this narrow window are relatively reliable, so the spike trains vary much less than in the case without autapse. In other words, the inhibitory autapse makes the noise-induced APs fire in a consistent manner, effectively turning them into second responses to the pulse inputs. This resembles the way an excitatory autapse induces additional APs; the difference in our case is that the second AP is evoked by noise, and the autapse only regulates these spontaneous firings.

Because the additional information gained by regulating the spontaneous firings with the inhibitory autaptic current does not require additional spikes, the autapse also increases the average information carried by each spike, defined as the ratio of the mutual information rate to the mean firing rate of the spike train (Fig. 6). Moreover, when the delay time is half of the inter-pulse interval, the relatively high total entropy rate and low noise entropy rate yield a maximum of the coding efficiency (Fig. 7).

3.3 Energy consumption and energy efficiency

In this subsection, we calculated the energy cost of the HH neuron with the inhibitory autapse during the information transmission described above, according to Eq. (21). First, we fixed the autaptic strength at \(g_{\mathrm{asy}}=3~\mathrm{mS/cm}^{2}\) to investigate how \(\alpha \), the ratio of the energy cost of one synaptic event to that of one AP, affects the dependence of the energy cost on the delay time. Figure 8 shows that, for all values of \(\alpha \) considered, the energy cost depends on the delay time in the same way as the total entropy rate does, with one minimum at \(\tau =18\) ms and another at \(\tau =45\) ms. In addition, a larger value of \(\alpha \) yields a larger contrast between the maximal and minimal values of the energy cost. Next, the total energy cost is plotted as a function of the delay time and the autaptic strength in Fig. 9. Its dependence on both parameters mirrors that of the total entropy rate, because a higher total entropy rate implies a higher spike rate and thus a higher energy cost.

Note that the energy efficiency, defined as the ratio between the mutual information rate and the energy cost per second (Fig. 10), is maximized when the delay time is half of the mean inter-pulse interval. This result implies that, at this delay time, the inhibitory autapse enables the neuron to transmit information not only with maximal capacity (as discussed above) but also in an energy-efficient manner, i.e., with the least metabolic energy per unit of transmitted information. Moreover, with this inhibitory autapse, the information rate and the energy efficiency are maximized simultaneously, whereas for other parameters, such as temperature or the number of ion channels, the values that optimize information and energy efficiency differ, so the two cannot be maximized simultaneously.

4 Conclusions

In this paper, we investigated the effects of the inhibitory autapse on the information transmission and energy efficiency of the HH neuron in response to pulse train inputs. We found that when the delay time is approximately half of the mean inter-pulse interval of the input pulse train, the inhibitory autaptic current acting on the neuron itself makes the spontaneous APs fire in a more ordered way, resulting in spike trains that are more informative (as measured by the mutual information rate) than those of the neuron without autapse. Meanwhile, the energy efficiency is also maximized around this optimal autaptic delay time.

Neural information is carried by APs and transmitted from one neuron to others through synapses [57, 58]. In some neurons, however, synapses are formed onto the neurons themselves, creating positive or negative feedback loops [59,60,61]. Many studies have shown that this autaptic feedback can play a constructive role in regulating the firing rhythm of neurons or neuronal networks. In this paper, we used information theory to further elucidate the constructive role of inhibitory autapses in neuronal information transmission. Interestingly, the inhibitory autaptic feedback regulates the noise-induced spontaneous firings, so that these spontaneous APs also carry information about the inputs. It remains unknown, however, which features of the inputs are encoded in these spontaneous firings and how the neural system uses the information they carry.

The processing of neural information requires a huge amount of metabolic energy, which acts as a selective pressure on many aspects of neural design and function. In this work, we also investigated how the inhibitory autapse influences the energy efficiency of neuronal information transmission. We found that there exists an optimal autaptic delay time at which the energy efficiency is maximized. This efficient energy usage results from two effects of the autapse: (1) fewer spontaneous APs, which carry no information about the inputs but waste energy, and (2) more information, because the spontaneous firings are better regulated via negative feedback. These results suggest that inhibitory autaptic feedback could serve as a mechanism for transmitting neural information efficiently.

In conclusion, we studied the effects of the inhibitory autapse on the information transmission and energy efficiency of neurons in a noisy environment. We found that there exists an optimal autaptic delay time (approximately half of the mean inter-pulse interval of the input) at which the neuron transmits information not only with maximal capacity but also with the highest energy efficiency. The underlying mechanism is the more ordered spontaneous firing induced by the inhibitory autaptic current; in other words, the negative feedback of the autapse enables spontaneous firings to carry more information about the inputs. Future work will focus on how neuronal systems decode the information carried by these spontaneous firings. Our work elucidates the role of the inhibitory autaptic current in energy-efficient information processing in neuronal systems and may help to further understand the basic design principles [62] of neural systems.

References

Bekkers, J.M., Stevens, C.F.: Excitatory and inhibitory autaptic currents in isolated hippocampal neurons maintained in cell culture. Proc. Nat. Acad. Sci. U. S. A. 88(17), 7834 (1991)

Cobb, S.R., Halasy, K., Vida, I., et al.: Synaptic effects of identified interneurons innervating both interneurons and pyramidal cells in the rat hippocampus. Neuroscience 79(3), 629–648 (1997)

Tamás, G., Buhl, E.H., Somogyi, P.: Massive autaptic self-innervation of GABAergic neurons in cat visual cortex. J. Neurosci. 17(16), 6352–6364 (1997)

Bekkers, J.M.: Synaptic transmission: functional autapses in the cortex. Curr. Biol. 13(11), 433 (2003)

Karabelas, A.B., Purpura, D.P.: Evidence for autapses in the substantia nigra. Brain Res. 200(200), 467–473 (1980)

Ikeda, K., Bekkers, J.M.: Autapses. Curr. Biol. 16(9), R308–R308 (2006)

Bekkers, J.M.: Synaptic transmission: excitatory autapses find a function? Curr. Biol. 19(7), 296–8 (2009)

Bacci, A., Huguenard, J.R.: Enhancement of spike-timing precision by autaptic transmission in neocortical inhibitory interneurons. Neuron 49(1), 119 (2006)

Wang, H., Sun, Y., Li, Y., et al.: Influence of autapse on mode-locking structure of a Hodgkin–Huxley neuron under sinusoidal stimulus. J. Theor. Biol. 358(23), 25–30 (2014)

Saada, R., Miller, N., Hurwitz, I., et al.: Autaptic excitation elicits persistent activity and a plateau potential in a neuron of known behavioral function. Curr. Biol. 19(6), 479–484 (2009)

Boussa, S., Pasquier, J., Leboulenger, F., et al.: Exploring modulation of action potential firing by artificial graft of fast GABAergic autaptic afferences in hypophyseal neuroendocrine melanotrope cells. J. Physiol. Paris 104(1), 99–106 (2009)

Bushell, T.J., Chong, C.L., Shigemoto, R., et al.: Modulation of synaptic transmission and differential localisation of mGlus in cultured hippocampal autapses. Neuropharmacology 38(10), 1553–1567 (1999)

Wang, H., Chen, Y.: Response of autaptic Hodgkin–Huxley neuron with noise to subthreshold sinusoidal signals. Phys. A Stat. Mech. Appl. 462, 321–329 (2016)

Qin, H.X., Ma, J., Jin, W.Y., et al.: Dynamics of electric activities in neuron and neurons of network induced by autapses. Sci. China Technol. Sci. 57(5), 936–946 (2014)

Li, Y., Schmid, G., Hanggi, P., et al.: Spontaneous spiking in an autaptic Hodgkin–Huxley setup. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 82(1), 061907 (2010)

Wang, H., Ma, J., Chen, Y., et al.: Effect of an autapse on the firing pattern transition in a bursting neuron. Commun. Nonlinear Sci. Numer. Simul. 19(9), 3242–3254 (2014)

Wang, H., Chen, Y.: Firing dynamics of an autaptic neuron. Chin. Phys. B 24(12), 53–64 (2015)

Zhao, Z., Jia, B., Gu, H.: Bifurcations and enhancement of neuronal firing induced by negative feedback. Nonlinear Dyn. 86(3), 1–12 (2016)

Zhao, Z., Gu, H.: Transitions between classes of neuronal excitability and bifurcations induced by autapse. Sci. Rep. 7(1), 1 (2017)

Fox, R.F., Gatland, I.R., Roy, R., et al.: Fast, accurate algorithm for numerical simulation of exponentially correlated colored noise. Phys. Rev. A 38(11), 5938 (1988)

Guo, D., Chen, M., Perc, M., et al.: Firing regulation of fast-spiking interneurons by autaptic inhibition. EPL 114(3), 30001 (2016)

Rusin, C.G., Johnson, S.E., Kapur, J., et al.: Engineering the synchronization of neuron action potentials using global time-delayed feedback stimulation. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 84(2), 066202 (2011)

Wang, Q., Gong, Y., Wu, Y.: Autaptic self-feedback-induced synchronization transitions in Newman-Watts neuronal network with time delays. Eur. Phys. J. B 88(4), 103 (2015)

Gong, Y., Wang, B., Xie, H.: Spike-timing-dependent plasticity enhanced synchronization transitions induced by autapses in adaptive Newman-Watts neuronal networks. Bio Syst. 150, 132–137 (2016)

Wang, B., Gong, Y., Xie, H., et al.: Optimal autaptic and synaptic delays enhanced synchronization transitions induced by each other in Newman-Watts neuronal networks. Chaos Solitons Fractals 91, 372–378 (2016)

Yilmaz, E., Baysal, V., Ozer, M., et al.: Autaptic pacemaker mediated propagation of weak rhythmic activity across small-world neuronal networks. Phys. A Stat. Mech. Appl. 444, 538–546 (2016)

Ao, X., Hanggi, P., Schmid, G.: In-phase and anti-phase synchronization in noisy Hodgkin–Huxley neurons. Math. Biosci. 245(1), 49–55 (2013)

Guo, D., Perc, M., Zhang, Y., et al.: Frequency-difference dependent stochastic resonance in neural systems. Phys. Rev. E 96(2), 022415 (2017)

Gu, H., Zhao, Z.: Dynamics of time delay-induced multiple synchronous behaviors in inhibitory coupled neurons. PLoS ONE 10(9), e0138593 (2015)

Guo, D., Wu, S., Chen, M., et al.: Regulation of irregular neuronal firing by autaptic transmission. Sci. Rep. 6, 26096 (2016)

Ma, J., Song, X., Jin, W., et al.: Autapse-induced synchronization in a coupled neuronal network. Chaos Solitons Fractals 80, 31–38 (2015)

Tang, J., Luo, J.M., Ma, J.: Information transmission in a neuron-astrocyte coupled model. PLoS ONE 8(11), e80324 (2013)

Ma, J., Song, X., Tang, J., et al.: Wave emitting and propagation induced by autapse in a forward feedback neuronal network. Neurocomputing 167, 378–389 (2015)

Kety, S.S.: The general metabolism of the brain in vivo. Metab. Nerv. Syst. 46(1), 221–237 (1957)

Bear, M.F., Connors, B.W.: Neuroscience: exploring the brain. J. Child Fam. Stud. 5(3), 377–379 (2007)

Howarth, C., Gleeson, P., Attwell, D.: Updated energy budgets for neural computation in the neocortex and cerebellum. J. Cereb. Blood Flow Metab. 32(7), 1222 (2012)

Valente, P., Castroflorio, E., Rossi, P., et al.: PRRT2 is a key component of the Ca²⁺-dependent neurotransmitter release machinery. Cell Rep. 15(1), 117 (2016)

Laughlin, S.B., de Ruyter van Steveninck, R.R., Anderson, J.C.: The metabolic cost of neural information. Nat. Neurosci. 1(1), 36–41 (1998)

Attwell, D., Laughlin, S.B.: An energy budget for signaling in the grey matter of the brain. J. Cereb. Blood Flow Metab. 21(10), 1133–1145 (2001)

Niven, J.E., Laughlin, S.B.: Energy limitation as a selective pressure on the evolution of sensory systems. J. Exper. Biol. 211(11), 1792–1804 (2008)

Alle, H., Roth, A., Geiger, J.R.: Energy-efficient action potentials in hippocampal mossy fibers. Science 325(5946), 1405–1408 (2009)

Schmidt, H.C., Bischofberger, J.: Fast sodium channel gating supports localized and efficient axonal action potential initiation. J. Neurosci. 30(30), 10233–10242 (2010)

Yu, Y., Hill, A.P., McCormick, D.A.: Warm body temperature facilitates energy efficient cortical action potentials. PLoS Comput. Biol. 8(4), e1002456 (2012)

Wang, L.F., Jia, F., Liu, X.Z., et al.: Temperature effects on information capacity and energy efficiency of Hodgkin–Huxley neuron. Chin. Phys. Lett. 32(10), 166–169 (2015)

Schreiber, S., Machens, C.K., Herz, A.V., Laughlin, S.B.: Energy-efficient coding with discrete stochastic events. Neural Comput. 14(6), 1323–1346 (2002)

Yu, L., Liu, L.: Optimal size of stochastic Hodgkin–Huxley neuronal systems for maximal energy efficiency in coding pulse signals. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 89(3), 032725 (2014)

Yu, L., Zhang, C., Liu, L., Yu, Y.: Energy-efficient population coding constrains network size of a neuronal array system. Sci. Rep. 6, 19369 (2016)

Harris, J.J., Jolivet, R., Attwell, D.: Synaptic energy use and supply. Neuron 75(5), 762–777 (2012)

Levy, W.B., Baxter, R.A.: Energy efficient neuronal computation via quantal synaptic failures. J. Neurosci. 22(11), 4746–4755 (2002)

Yu, Y., Migliore, M., Hines, M.L., Shepherd, G.M.: Sparse coding and lateral inhibition arising from balanced and unbalanced dendrodendritic excitation and inhibition. J. Neurosci. 34(41), 13701–13713 (2014)

Olshausen, B.A., Field, D.J.: Sparse coding of sensory inputs. Curr. Opin. Neurobiol. 14(4), 481–487 (2004)

Lorincz, A., Palotai, Z., Szirtes, G.: Efficient sparse coding in early sensory processing: lessons from signal recovery. PLoS Comput. Biol. 8(3), e1002372 (2012)

Vicente, R., Gollo, L.L., Mirasso, C.R., et al.: Dynamical relaying can yield zero time lag neuronal synchrony despite long conduction delays. Proc. Nat. Acad. Sci. U. S. A. 105(44), 17157–17162 (2008)

Strong, S.P., Koberle, R., et al.: Entropy and information in neural spike trains. Phys. Rev. Lett. 80(1), 197–200 (1998)

Ju, H., Hines, M.L., Yu, Y.: Cable energy function of cortical axons. Sci. Rep. 6, 1 (2016)

Moujahid, A., D'Anjou, A., Torrealdea, F.J., et al.: Energy and information in Hodgkin–Huxley neurons. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 83(3), 031912 (2011)

Shilnikov, A.: Complete dynamical analysis of a neuron model. Nonlinear Dyn. 68(3), 305–328 (2012)

Xu, X., Luo, J.W.: Dynamical model and control of a small-world network with memory. Nonlinear Dyn. 73(3), 1659–1669 (2013)

Wang, L., Zeng, Y.: Control of bursting behavior in neurons by autaptic modulation. Neurol. Sci. 34(11), 1977–1984 (2013)

Bekkers, J.M.: Neurophysiology: are autapses prodigal synapses? Curr. Biol. 8(2), 52–55 (1998)

Sengupta, B., Tozzi, A., Cooray, G.K., et al.: Towards a neuronal gauge theory. PLoS Biol. 14(3), e1002400 (2016)

Friston, K.: The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11(2), 127 (2010)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 11105062 and 11564034) and the Fundamental Research Funds for the Central Universities (No. lzujbky-2015-119).

Cite this article

Yue, Y., Liu, L., Liu, Y. et al. Dynamical response, information transition and energy dependence in a neuron model driven by autapse. Nonlinear Dyn 90, 2893–2902 (2017). https://doi.org/10.1007/s11071-017-3850-1

DOI: https://doi.org/10.1007/s11071-017-3850-1