Abstract

The first objective of this paper is to study the Darboux integrability of the polynomial differential system

$$\begin{aligned} \dot{x}=y, \quad \dot{y}=z, \quad \dot{z}=-y+3y^2-x^2-xz+a, \end{aligned}$$

and the second one is to show that for \(a>0\) sufficiently small this model exhibits two small amplitude periodic solutions that bifurcate from a zero-Hopf equilibrium point located at the origin of coordinates when \(a=0\). This polynomial differential system, introduced by Chen and Wang (Nonlinear Dyn 71:429–436, 2013), is relevant because it is the first system in \(\mathbb {R}^3\) exhibiting chaotic motion without having equilibria.


1 Introduction and statement of the main result

In the qualitative theory of differential equations, it is important to know whether a given differential system is chaotic. A natural question is whether a chaotic system can exist without equilibrium points. Chen and Wang [21] answered this question by introducing the following polynomial differential system in \(\mathbb {R}^3\)

$$\begin{aligned} \dot{x}=y, \quad \dot{y}=z, \quad \dot{z}=-y+3y^2-x^2-xz+a, \end{aligned}\tag{1}$$

where the dot denotes the derivative with respect to the time \(t\) and \(a\in \mathbb {R}\) is a parameter. They observe three cases:

- When \(a>0\), system (1) has two equilibria \((\pm \sqrt{a},0,0)\).
- When \(a=0\), the two equilibria collide at the origin \((0,0,0)\).
- When \(a<0\), system (1) has no equilibria but still generates a chaotic attractor.

See [21] for more details. The Chen–Wang differential system [21] is relevant because, as its authors claimed, it seems to be the first example of a differential system in \(\mathbb {R}^3\) that exhibits chaotic motion and has no equilibria.
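For experimentation, the right-hand side of system (1) and its equilibria can be encoded in a few lines of Python. This assumes system (1) in the form \(\dot x=y\), \(\dot y=z\), \(\dot z=-y+3y^2-x^2-xz+a\). The function names are our own. The equilibria solve \(y=z=0\) and \(a-x^2=0\), which reproduces the three cases \(a>0\), \(a=0\) and \(a<0\) described above.

```python
import math

def chen_wang_field(x, y, z, a):
    """Right-hand side of the Chen-Wang system (1)."""
    return (y, z, -y + 3 * y**2 - x**2 - x * z + a)

def equilibria(a):
    """Equilibria of system (1): y = z = 0 and x**2 = a."""
    if a > 0:
        r = math.sqrt(a)
        return [(r, 0.0, 0.0), (-r, 0.0, 0.0)]
    if a == 0:
        return [(0.0, 0.0, 0.0)]
    return []  # a < 0: no equilibria

for a in (0.25, 0.0, -1.0):
    pts = equilibria(a)
    # The vector field should vanish (up to rounding) at every equilibrium found.
    print(a, pts, [chen_wang_field(*p, a) for p in pts])
```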

The first objective of this paper is to study the integrability of system (1). We recall that the existence of a first integral for a differential system in \(\mathbb {R}^3\) reduces its study by one dimension; this is the main reason to look for first integrals. Moreover, among first integrals, the simplest ones are the so-called Darboux first integrals. For more information about this kind of first integral, see for instance [8, 12–14] and Sect. 2.1.

The second objective is to study the zero-Hopf bifurcation exhibited by the polynomial differential system (1). Up to now, the main tool for studying a zero-Hopf bifurcation has been to transform the system into the normal form of a zero-Hopf bifurcation; references on this are given later in this introduction. Our analysis of the zero-Hopf bifurcation is different: we study it directly using the averaging theory. See Sect. 2.2 for a summary of the results of this theory needed in this paper.

Let \(U\) be an open and dense subset of \(\mathbb R^3\). We say that a non-locally constant \(C^1\) function \(H :U \rightarrow \mathbb R\) is a first integral of system (1) on \(U\) if \(H(x(t), y(t),z(t))\) is constant for all values of \(t\) for which \((x(t),y(t),z(t))\) is a solution of system (1) contained in \(U\). Obviously, \(H\) is a first integral of system (1) if and only if

$$\begin{aligned} y\frac{\partial H}{\partial x}+z\frac{\partial H}{\partial y}+\left( -y+3y^2-x^2-xz+a\right) \frac{\partial H}{\partial z}=0 \end{aligned}$$

for all \((x,y,z)\in U\).

The first main result of this paper is:

Theorem 1

The following statements hold.

(a) System (1) has neither invariant algebraic surfaces nor polynomial first integrals.

(b) All the exponential factors of system (1) are \(\exp (x)\), \(\exp (y)\) and \(\exp (a_1x+a_2y)\) with \(a_1,a_2\in \mathbb {C}\), that is, products of complex powers of these two. Moreover, the cofactors of \(\exp (x)\) and \(\exp (y)\) are \(y\) and \(z\), respectively.

(c) System (1) has no Darboux first integrals.

Theorem 1 is proved in Sect. 3. See Sect. 2 for the definitions of invariant algebraic surface, exponential factor and Darboux first integral.

The second main objective of this paper is to show that, for \(a>0\) sufficiently small, system (1) exhibits two small amplitude periodic solutions that bifurcate from a zero-Hopf equilibrium point located at the origin of coordinates when \(a=0\).

We recall that an equilibrium point of a \(3\)-dimensional autonomous differential system is a zero-Hopf equilibrium if it has a zero real eigenvalue and a pair of purely imaginary eigenvalues. Generically, a zero-Hopf bifurcation is a two-parameter unfolding (or family) of a \(3\)-dimensional autonomous differential system with a zero-Hopf equilibrium. The unfolding can exhibit different topological types of dynamics in a small neighborhood of this isolated equilibrium as the two parameters vary in a small neighborhood of the origin. This theory of zero-Hopf bifurcation has been analyzed by Guckenheimer, Han, Holmes, Kuznetsov, Marsden and Scheurle in [9, 10, 15, 16, 19]. In particular, they show that some complicated invariant sets of the unfolding can bifurcate from the isolated zero-Hopf equilibrium under suitable conditions. Hence, in some cases, a zero-Hopf bifurcation can imply a local birth of “chaos”; see for instance the articles [2–4, 7, 19] of Baldomá and Seara, Broer and Vegter, Champneys and Kirk, and Scheurle and Marsden.

Note that the differential system (1) depends on only one parameter, so it cannot exhibit a complete unfolding of a zero-Hopf bifurcation. To study the zero-Hopf bifurcation of system (1), we shall use the averaging theory in a way similar to that used in [5] by Castellanos, Llibre and Quilantán.

In the next result, we characterize when the equilibrium points of system (1) are zero-Hopf equilibria.

Proposition 1

The differential system (1) has a unique zero-Hopf equilibrium, located at the origin of coordinates when \(a=0\).

The second main result of this paper characterizes the Hopf bifurcations of system (1). For a precise definition of a classical Hopf bifurcation in \(\mathbb {R}^3\), where a pair of complex conjugate eigenvalues crosses the imaginary axis and the third real eigenvalue is not zero, see for instance [17].

Theorem 2

The following statements hold for the differential system (1).

-

(a)

This system has no classical Hopf bifurcations.

-

(b)

This system has a zero-Hopf bifurcation at the equilibrium point localized at the origin of coordinates when \(a=0\) producing two small periodic solutions for \(a>0\) sufficiently small of the form

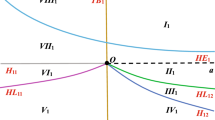

$$\begin{aligned}&x(t)= \pm \sqrt{a}+ O(a), \quad y(t)= O(a), \\&z(t)= O(a). \end{aligned}$$Both periodic solutions have two invariant manifolds, one stable and one unstable, each of them formed by two cylinders. See Fig. 1 for the zero-Hopf periodic solution with initial conditions near \((\sqrt{a},a,a)\) with \(a=1/10{,}000\). The other Hopf periodic orbit is symmetric of this under the symmetry \((x,y,z,t)\rightarrow (-x,y,-z,-t)\) which leaves the differential system (1) invariant.
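The symmetry in Theorem 2 means that if \(\varphi (t)\) solves (1), then so does \(S\varphi (-t)\), with \(S(x,y,z)=(-x,y,-z)\). By the chain rule, this holds exactly when \(F(Sp)=-SF(p)\) for every point \(p\), where \(F\) is the right-hand side of (1). The Python sketch below spot-checks this identity at random points. The function names are our own.

```python
import random

def field(p, a):
    """Right-hand side F of system (1) at p = (x, y, z)."""
    x, y, z = p
    return (y, z, -y + 3 * y**2 - x**2 - x * z + a)

def S(p):
    """The involution (x, y, z) -> (-x, y, -z)."""
    x, y, z = p
    return (-x, y, -z)

def reversibility_defect(p, a):
    """max |F(S p) - (-S F(p))|; it vanishes identically iff t -> S phi(-t)
    maps solutions of (1) to solutions."""
    lhs = field(S(p), a)
    rhs = tuple(-c for c in S(field(p, a)))
    return max(abs(u - v) for u, v in zip(lhs, rhs))

random.seed(0)
worst = max(
    reversibility_defect(tuple(random.uniform(-2, 2) for _ in range(3)), 0.37)
    for _ in range(1000)
)
print(worst)
```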

The paper is organized as follows:

- In Sect. 2, we present the basic definitions and results needed to prove Theorems 1 and 2.
- In Sect. 3, we prove Theorem 1.
- In Sect. 4, we present the proofs of Proposition 1 and Theorem 2.

2 Preliminaries

2.1 Darboux theory of integrability

As usual, \(\mathbb C[x,y,z]\) denotes the ring of polynomial functions in the variables \(x,y\) and \(z\). Given \(f \in \mathbb C[x, y,z]\setminus \mathbb {C}\), we say that the surface \(f(x,y,z)=0\) is an invariant algebraic surface of system (1) if there exists \(k \in \mathbb C[x, y,z]\) such that

$$\begin{aligned} y\frac{\partial f}{\partial x}+z\frac{\partial f}{\partial y}+\left( -y+3y^2-x^2-xz+a\right) \frac{\partial f}{\partial z}=kf. \end{aligned}\tag{2}$$

The polynomial \(k\) is called the cofactor of the invariant algebraic surface \(f=0\). Since system (1) has degree \(2\), \(k\) has degree at most \(1\). When \(k=0\), \(f\) is a polynomial first integral. When a real polynomial differential system has a complex invariant algebraic surface, it also has its conjugate. It is important to consider the complex invariant algebraic surfaces of real polynomial differential systems because they sometimes force the real integrability of the system.

Let \(f,g \in \mathbb C[x,y,z]\) and assume that \(f\) and \(g\) are relatively prime in the ring \(\mathbb C[x,y,z]\), or that \(g=1\). Then the function \(E=\exp (f/g)\not \in \mathbb {C}\) is called an exponential factor of system (1) if, for some polynomial \(L \in \mathbb C[x,y,z]\) of degree at most \(1\), we have

$$\begin{aligned} y\frac{\partial E}{\partial x}+z\frac{\partial E}{\partial y}+\left( -y+3y^2-x^2-xz+a\right) \frac{\partial E}{\partial z}=LE. \end{aligned}\tag{3}$$

As before, we say that \(L\) is the cofactor of the exponential factor \(\exp {(f/g)}\). In the definition of exponential factor, since \(f,g \in \mathbb C[x,y,z]\), the exponential factor is in general a complex function. Again, when a real polynomial differential system has a complex exponential factor, it also has its conjugate, and both are important for the existence of real first integrals of the system. Exponential factors are related to the multiplicity of the invariant algebraic surfaces; for more details see [6], Chapter 8 of [11], and [13, 14].

A first integral is called a Darboux first integral if it is a first integral of the form

$$\begin{aligned} f_1^{\lambda _1}\cdots f_p^{\lambda _p}F_1^{\mu _1}\cdots F_q^{\mu _q}, \end{aligned}$$

where \(f_i=0\) are invariant algebraic surfaces of system (1) for \(i=1,\ldots ,p\), \(F_j\) are exponential factors of system (1) for \(j= 1, \ldots , q\), and \(\lambda _i, \mu _j \in \mathbb C\).

The next result, proved in [11], explains how to find Darboux first integrals.

Proposition 2

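Suppose that a polynomial system (1) of degree \(m\) admits \(p\) invariant algebraic surfaces \(f_i = 0\) with cofactors \(k_i\) for \(i = 1, \ldots ,p\) and \(q\) exponential factors \(\exp (g_j/h_j)\) with cofactors \(L_j\) for \(j = 1,\ldots ,q\). Then there exist \(\lambda _i\) and \(\mu _j \in \mathbb C\), not all zero, such that

$$\begin{aligned} \sum _{i=1}^{p}\lambda _ik_i+\sum _{j=1}^{q}\mu _jL_j=0 \end{aligned}\tag{4}$$

if and only if the function

$$\begin{aligned} f_1^{\lambda _1}\cdots f_p^{\lambda _p}\left( \exp (g_1/h_1)\right) ^{\mu _1}\cdots \left( \exp (g_q/h_q)\right) ^{\mu _q} \end{aligned}$$

is a Darboux first integral of system (1).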

The following result, whose proof is given in [13, 14], will be useful to prove statement (b) of Theorem 1.

Lemma 1

The following statements hold.

(a) If \(\exp (f/g)\) is an exponential factor for the polynomial differential system (1) and \(g\) is not a constant polynomial, then \(g=0\) is an invariant algebraic surface.

(b) Exponential factors of the form \(\exp (f)\), with \(f\) a polynomial, may also occur; they come from the multiplicity of the invariant plane at infinity.

2.2 Averaging theory

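We also present a result from the averaging theory that we shall need for proving Theorem 2. For a general introduction to the averaging theory, see the book of Sanders, Verhulst and Murdock [18].

We consider the initial value problems

$$\begin{aligned} \dot{\mathbf {x}}=\varepsilon \mathbf {F_1}(t,\mathbf {x})+\varepsilon ^2\mathbf {F_2}(t,\mathbf {x},\varepsilon ), \quad \mathbf {x}(0)=\mathbf {x}_0, \end{aligned}\tag{5}$$

and

$$\begin{aligned} \dot{\mathbf {y}}=\varepsilon g(\mathbf {y}), \quad \mathbf {y}(0)=\mathbf {x}_0, \end{aligned}\tag{6}$$

with \(\mathbf {x}\), \(\mathbf {y}\) and \(\mathbf {x}_0 \) in some open subset \(\Omega \) of \({\mathbb {R}^n}\), \(t\in [0,\infty )\), \(\varepsilon \in (0,\varepsilon _0]\). We assume that \(\mathbf {F_1}\) and \(\mathbf {F_2}\) are periodic of period \(T\) in the variable \(t\), and we set

$$\begin{aligned} g(\mathbf {y})=\frac{1}{T}\int _0^T\mathbf {F_1}(t,\mathbf {y})\,dt. \end{aligned}\tag{7}$$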
We will also use the notation \(D_\mathbf {x}g\) for all the first derivatives of \(g\), and \(D_\mathbf {xx}g\) for all the second derivatives of \(g\).

For a proof of the next result, see [20].

Theorem 3

Assume that \(F_1\), \(D_\mathbf {x}F_1\), \(D_\mathbf {xx}F_1\) and \(D_\mathbf {x}F_2\) are continuous and bounded by a constant independent of \(\varepsilon \) in \([0,\infty )\times \Omega \times (0,\varepsilon _0]\), and that \(\mathbf {y}(t)\in \Omega \) for \(t\in [0,1/\varepsilon ]\). Then the following statements hold:

1. For \(t\in [0,1/\varepsilon ]\), we have \(\mathbf {x}(t)- \mathbf {y}(t)= O(\varepsilon )\) as \(\varepsilon \rightarrow 0\).

2. If \(p \ne 0\) is a singular point of system (6) such that

$$\begin{aligned} \det D_\mathbf {y}g(p) \ne 0, \end{aligned}\tag{8}$$

then there exists a periodic solution \(\mathbf {x}(t,\varepsilon )\) of period \(T\) for system (5) which is close to \(p\) and such that \(\mathbf {x}(0,\varepsilon )-p= O(\varepsilon )\) as \(\varepsilon \rightarrow 0\).

3. The stability of the periodic solution \(\mathbf {x}(t, \varepsilon )\) is given by the stability of the singular point \(p\) of the averaged system (6).
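Statements 2 and 3 of Theorem 3 can be illustrated on a case where everything is explicit. The Python sketch below uses a textbook example that is not system (1): the van der Pol oscillator \(\ddot{x}+x=\varepsilon (1-x^2)\dot{x}\). In the polar coordinates \(x=r\cos \theta \), \(\dot{x}=-r\sin \theta \), with \(\theta \) as independent variable, it takes the form (5) to first order in \(\varepsilon \), with \(n=1\), \(T=2\pi \) and \(F_1(\theta ,r)=r\sin ^2\theta \,(1-r^2\cos ^2\theta )\). The averaged function (7) is \(g(r)=r/2-r^3/8\), whose simple zero is \(p=2\). Since \(g'(2)=-1\ne 0\), condition (8) holds. Since \(g'(2)<0\), the corresponding periodic solution (the limit cycle of amplitude close to 2) is stable. The helper names are our own.

```python
import math

def F1(theta, r):
    """First-order term of the (hypothetical example) van der Pol amplitude equation."""
    return r * math.sin(theta) ** 2 * (1 - r**2 * math.cos(theta) ** 2)

def averaged(r, n=64, period=2 * math.pi):
    """g(r) = (1/T) * integral_0^T F1(t, r) dt via the uniform rectangle rule,
    which is exact (up to rounding) for trigonometric polynomials of degree < n."""
    h = period / n
    return sum(F1(k * h, r) for k in range(n)) / n

def newton_zero(g, r0, tol=1e-12, h=1e-6, max_iter=50):
    """Newton's method with a central-difference derivative."""
    r = r0
    for _ in range(max_iter):
        dg = (g(r + h) - g(r - h)) / (2 * h)
        step = g(r) / dg
        r -= step
        if abs(step) < tol:
            break
    return r

p = newton_zero(averaged, 1.5)
# One-dimensional "Jacobian" D_y g(p) from condition (8), by central differences.
jac = (averaged(p + 1e-6) - averaged(p - 1e-6)) / 2e-6
print(p, jac)
```

The zero \(p\) and the derivative printed here should agree with the closed-form values \(p=2\) and \(g'(2)=-1\).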

3 Proof of Theorem 1

To prove Theorem 1(a), we state and prove two auxiliary results. As usual, we denote by \(\mathbb N\) the set of positive integers.

Lemma 2

If \(h=0\) is an invariant algebraic surface of system (1) with nonzero cofactor \(k\), then \(k = k_0- m x\) for some \(k_0 \in \mathbb C\) and \(m \in \mathbb N \cup \{0\}\).

Proof

Let \(h=0\) be an invariant algebraic surface of system (1) with nonzero cofactor \(k\). Then \(k = k_0 + k_1 x + k_2 y + k_3 z\) for some \(k_0, k_1, k_2, k_3 \in \mathbb C\). Let \(n\) be the degree of \(h\). We write \(h\) as the sum of its homogeneous parts, \(h = \sum _{i=0}^{n} h_i\), where each \(h_i\) is a homogeneous polynomial of degree \(i\). Without loss of generality, we can assume that \(h_n \ne 0\) and \(n \ge 1\).

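Computing the terms of degree \(n + 1\) in (2), we get

$$\begin{aligned} \left( 3y^2-x^2-xz\right) \frac{\partial h_n}{\partial z}=\left( k_1x+k_2y+k_3z\right) h_n. \end{aligned}$$

Solving this linear partial differential equation, we get

$$\begin{aligned} h_n=C_n(x,y)\left( 3y^2-x^2-xz\right) ^{p(x,y)}\exp \left( -\frac{k_3z}{x}\right) , \end{aligned}$$

where \(C_n\) is an arbitrary function in the variables \(x\) and \(y\), and \(p(x,y)=-k_1+k_3- \dfrac{k_2 y}{x}- \dfrac{3 k_3 y^2}{x^2}\). Since \(h_n\) must be a homogeneous polynomial, we must have \(k_3=k_2=0\) and \(k_1=-m\) with \(m \in \mathbb N \cup \{0\}\). This concludes the proof of the lemma. \(\square \)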
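The integration behind the formula for \(h_n\) can be spelled out as follows. This is our own reconstruction of the omitted computation. It uses the notation \(u=3y^2-x^2-xz\), so that \(\partial u/\partial z=-x\) and \(z=(3y^2-x^2-u)/x\):

$$\begin{aligned} \frac{\partial \ln h_n}{\partial z}&=\frac{k_1x+k_2y+k_3z}{u}=\frac{1}{u}\left( k_1x+k_2y+\frac{k_3(3y^2-x^2)}{x}\right) -\frac{k_3}{x},\\ \ln h_n&=-\left( k_1+\frac{k_2y}{x}+\frac{k_3(3y^2-x^2)}{x^2}\right) \ln u-\frac{k_3z}{x}+\ln C_n(x,y)\\&=p(x,y)\ln u-\frac{k_3z}{x}+\ln C_n(x,y). \end{aligned}$$

Hence \(h_n=C_n(x,y)\,u^{p(x,y)}e^{-k_3z/x}\). For fixed \(x\ne 0\) and \(y\), a polynomial in \(z\) cannot contain the factor \(e^{-k_3z/x}\) unless \(k_3=0\). Then \(p=-k_1-k_2y/x\) must be a nonnegative integer on an open set of \((x,y)\), hence constant, which forces \(k_2=0\) and \(k_1=-m\).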

Lemma 3

Let \(g=g(x,y)\) be a homogeneous polynomial of degree \(n\) with \(n\ge 1\) satisfying

Then, \(g=0\).

Proof

We write the homogeneous polynomial of degree \(n\) as \(g=g(x,y)= \sum _{i=0}^{n} a_i x^i y^{n-i}\) with \(a_i \in \mathbb C\). Using that \(g\) satisfies (9) we get

Computing the coefficients of \(x^j y^{n+1-j}\), for \(0 \le j \le n+1\) in Eq. (10), we get

for \(1 \le i \le n-2\).

Taking into account that

it follows recursively from (11) that \(a_i=0\) for all \(0\le i\le n\), so \(g=0\). \(\square \)

Proof of Theorem 1(a)

Let \(f=0\) be an invariant algebraic surface of degree \(n\ge 1\) of system (1) with cofactor \(k(x,y,z)=k_0 + k_1 x +k_2 y + k_3 z\). It follows from Lemma 2 that \(k=k(x,y,z)=k_0 - m x\), with \(m \in \mathbb N \cup \{0\}\). We write \(f\) as sum of its homogeneous parts as \(f=\sum _{i=0}^{n} f_i\) where \(f_i=f_i(x,y,z)\) is a homogeneous polynomial of degree \(i\).

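Computing the terms of degree \(n + 1\) in (2), we get

$$\begin{aligned} \left( 3y^2-x^2-xz\right) \frac{\partial f_n}{\partial z}=-mxf_n. \end{aligned}$$

Solving this linear partial differential equation, we get

$$\begin{aligned} f_n=g(x,y)\left( 3y^2-x^2-xz\right) ^m, \end{aligned}\tag{12}$$

where \(g=g(x,y)\) is a homogeneous polynomial of degree \(n-2m\) in the variables \(x\) and \(y\).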

Assume first \(m=0\). Computing the terms of degree \(n\) in (2), we obtain

Solving (13), we have

where \(K\) is an arbitrary function in the variables \(x\) and \(y\). Since \(f_{n-1}\) must be a homogeneous polynomial of degree \(n-1\) we must have

It follows from Lemma 3 that \(g=0\), and then from (12) that \(f_n=0\). This contradicts the fact that \(f\) has degree \(n\ge 1\), since \(f=0\) is an invariant algebraic surface. So \(m>0\).

To simplify the computations, we introduce the weight change of variables

with \(\mu \in \mathbb R{\setminus }\{0\}\). Then, system (1) becomes

where the prime denotes derivative with respect to the variable \(T\).

Set

where \(F_i\) is the weight homogeneous part with weight degree \(n-i\) of \(F\) and \(n\) is the weight degree of \(F\) with weight exponents \(s=(0,-1,-1)\). We also set \(K(X,Y,Z)=k(X, \mu ^{-1}Y, \mu ^{-1}Z)= k_0 - m X\).

From the definition of an invariant algebraic surface, we have

Equating in (15) the terms with \(\mu ^0\), we get

where \(F_0\) is a weight homogeneous polynomial of degree \(n\).

Solving (16) we readily obtain, by direct computation, that

where \(G\) is an arbitrary function in the variables \(Y\) and \(Z\). Since \(F_0\) must be a polynomial and \(m> 0\) we must have \(F_0=0\). This implies that \(F=0\) is not an invariant algebraic surface of system (14); consequently, \(f=0\) is not an invariant algebraic surface of system (1). This completes the proof of Theorem 1(a). \(\square \)
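The form of the top-degree part used in the proof above, \(f_n=g(x,y)(3y^2-x^2-xz)^m\), can be sanity-checked numerically. It should satisfy \((3y^2-x^2-xz)\,\partial f_n/\partial z=-mxf_n\), which is the degree \(n+1\) part of (2) for a cofactor \(k_0-mx\). The sketch below uses a hypothetical choice of \(g\) and central differences in \(z\). It checks this identity only, not the uniqueness argument.

```python
import random

def u(x, y, z):
    """The quadratic polynomial 3y^2 - x^2 - xz from the third equation of (1)."""
    return 3 * y**2 - x**2 - x * z

def g(x, y):
    """Hypothetical homogeneous polynomial of degree 2 (any choice would do)."""
    return x**2 - 5 * x * y + 2 * y**2

def f_top(x, y, z, m):
    """Candidate top-degree part f_n = g(x, y) * u^m."""
    return g(x, y) * u(x, y, z) ** m

def relative_residual(x, y, z, m, h=1e-5):
    """|u * df_n/dz + m x f_n| relative to the size of its terms; df_n/dz by central differences."""
    dfdz = (f_top(x, y, z + h, m) - f_top(x, y, z - h, m)) / (2 * h)
    lhs, rhs = u(x, y, z) * dfdz, -m * x * f_top(x, y, z, m)
    return abs(lhs - rhs) / (abs(lhs) + abs(rhs) + 1.0)

random.seed(1)
worst = max(
    relative_residual(*(random.uniform(-1, 1) for _ in range(3)), m)
    for m in range(4)
    for _ in range(200)
)
print(worst)
```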

Proof of Theorem 1(b)

Let \(E=\exp (f/g)\notin \mathbb {C}\) be an exponential factor of system (1) with cofactor \(L=L_0 + L_1 x + L_2 y + L_3 z\), where \(f,g \in \mathbb C[x,y,z]\) with \((f,g)=1\). From Theorem 1(a) and Lemma 1, \(E=\exp (f)\) with \(f=f(x,y,z) \in \mathbb C[x,y,z]\setminus \mathbb C\).

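It follows from Eq. (3) that \(f\) satisfies

$$\begin{aligned} y\frac{\partial f}{\partial x}+z\frac{\partial f}{\partial y}+\left( -y+3y^2-x^2-xz+a\right) \frac{\partial f}{\partial z}=L_0+L_1x+L_2y+L_3z, \end{aligned}\tag{17}$$

where we have simplified the common factor \(\exp (f)\).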

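We write \(f=\sum _{i=0}^n f_i(x,y,z)\), where \(f_i\) is a homogeneous polynomial of degree \(i\). Assume \(n>1\). Computing the terms of degree \(n+1\) in (17), we obtain

$$\begin{aligned} \left( 3y^2-x^2-xz\right) \frac{\partial f_n}{\partial z}=0. \end{aligned}$$

Solving it and using that \(f_n\) is a homogeneous polynomial of degree \(n\), we get \(f_n(x,y,z)=g_n(x,y)\), where \(g_n(x,y)\) is a homogeneous polynomial of degree \(n\). Computing the terms of degree \(n\) in (17), we obtain

$$\begin{aligned} y\frac{\partial g_n}{\partial x}+z\frac{\partial g_n}{\partial y}+\left( 3y^2-x^2-xz\right) \frac{\partial f_{n-1}}{\partial z}=0. \end{aligned}\tag{18}$$

Solving (18), we get

$$\begin{aligned} f_{n-1}=\frac{xy\,\dfrac{\partial g_n}{\partial x}+\left( 3y^2-x^2\right) \dfrac{\partial g_n}{\partial y}}{x^2}\,\log \left( 3y^2-x^2-xz\right) -\frac{3y^2-x^2-xz}{x^2}\,\frac{\partial g_n}{\partial y}+g_{n-1}(x,y), \end{aligned}$$

where \(g_{n-1}(x,y)\) is an arbitrary function in the variables \(x\) and \(y\). Since \(f_{n-1}\) must be a homogeneous polynomial of degree \(n-1\), we must have

$$\begin{aligned} xy\frac{\partial g_n}{\partial x}+\left( 3y^2-x^2\right) \frac{\partial g_n}{\partial y}=0, \end{aligned}$$

which yields

$$\begin{aligned} g_n=G\left( \frac{2y^2-x^2}{x^6}\right) , \end{aligned}$$

where \(G\) is an arbitrary function of one variable. Taking into account that \(g_n\) must be a homogeneous polynomial of degree \(n\), we get \(g_n=0\). This implies that \(f_n=0\), a contradiction. Hence \(n=1\).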
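In the proof of Theorem 1(b), the logarithmic term in \(f_{n-1}\) disappears only if \(xy\,\partial g_n/\partial x+(3y^2-x^2)\,\partial g_n/\partial y=0\). The characteristic curves \(dx/(xy)=dy/(3y^2-x^2)\) of this equation have the invariant \(I=(2y^2-x^2)/x^6\). Indeed, substituting \(y=vx\) gives \(x\,dv/dx=(2v^2-1)/v\), so \(g_n=G(I)\). Since \(I\) is homogeneous of degree \(-4\), no nonzero homogeneous polynomial of positive degree has this form. The Python sketch below is our own check, not from the paper. Away from \(x=0\), it verifies numerically that \(I\) is annihilated by the operator, while, for example, \(x^2\) is not.

```python
import random

def invariant(x, y):
    """Candidate characteristic invariant I = (2y^2 - x^2) / x^6 (requires x != 0)."""
    return (2 * y**2 - x**2) / x**6

def transport(F, x, y, h=1e-6):
    """Return (x y F_x + (3y^2 - x^2) F_y, scale), with partials by central differences."""
    Fx = (F(x + h, y) - F(x - h, y)) / (2 * h)
    Fy = (F(x, y + h) - F(x, y - h)) / (2 * h)
    first, second = x * y * Fx, (3 * y**2 - x**2) * Fy
    return first + second, abs(first) + abs(second) + 1.0

random.seed(2)
worst = 0.0
for _ in range(500):
    x, y = random.uniform(0.5, 2.0), random.uniform(-2.0, 2.0)
    value, scale = transport(invariant, x, y)
    worst = max(worst, abs(value) / scale)
print(worst)
```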

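We can therefore write \(f=a_1 x + a_2 y + a_3 z\) with \(a_i \in \mathbb {C}\). A constant term in \(f\) can be dropped, because it only multiplies \(E\) by a nonzero constant. Imposing that \(f\) satisfies (17), the quadratic terms force \(a_3=0\), so \(f=a_1 x + a_2 y\) with cofactor \(a_1 y+a_2 z\). This concludes the proof of Theorem 1(b). \(\square \)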
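The cofactor computations in Theorem 1(b) are finite polynomial identities, so they can be checked exactly. For a polynomial \(f\), \(E=\exp (f)\) satisfies \(X(E)=X(f)E\). Hence the cofactor of \(\exp (f)\) is \(X(f)=yf_x+zf_y+(-y+3y^2-x^2-xz+a)f_z\).

The sketch below implements sparse polynomials over the rationals with Python's `fractions` module. This is a representation chosen only for illustration, and the parameter \(a\) is fixed to a sample rational value. It confirms the following:

- \(X(x)=y\) and \(X(y)=z\).
- \(X(a_1x+a_2y)=a_1y+a_2z\).
- \(X(z)\) has degree 2, which is why \(a_3\) must vanish.

```python
from fractions import Fraction

# A polynomial in x, y, z is a dict mapping exponent tuples (i, j, k),
# standing for x^i y^j z^k, to nonzero Fraction coefficients.

def poly(*terms):
    """Build a polynomial from (coefficient, (i, j, k)) pairs."""
    out = {}
    for c, e in terms:
        out[e] = out.get(e, 0) + Fraction(c)
    return {e: c for e, c in out.items() if c != 0}

def add(p, q):
    out = dict(p)
    for e, c in q.items():
        out[e] = out.get(e, 0) + c
    return {e: c for e, c in out.items() if c != 0}

def mul(p, q):
    out = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(s + t for s, t in zip(e1, e2))
            out[e] = out.get(e, 0) + c1 * c2
    return {e: c for e, c in out.items() if c != 0}

def diff(p, var):
    """Partial derivative with respect to variable index var (0 = x, 1 = y, 2 = z)."""
    out = {}
    for e, c in p.items():
        if e[var] > 0:
            lowered = list(e)
            lowered[var] -= 1
            out[tuple(lowered)] = c * e[var]
    return out

def lie(f, a):
    """X(f) = y f_x + z f_y + (-y + 3y^2 - x^2 - xz + a) f_z for system (1)."""
    P = poly((1, (0, 1, 0)))
    Q = poly((1, (0, 0, 1)))
    R = poly((-1, (0, 1, 0)), (3, (0, 2, 0)), (-1, (2, 0, 0)), (-1, (1, 0, 1)), (a, (0, 0, 0)))
    return add(add(mul(P, diff(f, 0)), mul(Q, diff(f, 1))), mul(R, diff(f, 2)))

a = Fraction(7, 3)  # sample parameter value; the cofactor identities do not depend on it
X, Y, Z = poly((1, (1, 0, 0))), poly((1, (0, 1, 0))), poly((1, (0, 0, 1)))
print(lie(X, a) == Y, lie(Y, a) == Z)   # cofactors of exp(x) and exp(y)
print(max(sum(e) for e in lie(Z, a)))   # total degree of X(z)
```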

Proof of Theorem 1(c)

It follows from Proposition 2 and statements (a) and (b) of Theorem 1 that, if system (1) has a Darboux first integral, then there exist \(\mu _1,\mu _2 \in \mathbb C\), not both zero, such that (4) holds, that is, such that

$$\begin{aligned} \mu _1y+\mu _2z=0. \end{aligned}$$

This forces \(\mu _1=\mu _2=0\), a contradiction. Hence system (1) has no Darboux first integrals, and the proof of Theorem 1(c) is complete. \(\square \)
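Proposition 2 turns the search for Darboux first integrals into linear algebra on the cofactors. Write each cofactor as its coefficient vector in the basis \((1,x,y,z)\). A nontrivial relation (4) exists exactly when these vectors are linearly dependent. Every exponential factor of system (1) is \(\exp (a_1x+a_2y)=\exp (x)^{a_1}\exp (y)^{a_2}\), so it suffices to use the cofactors \(y\) and \(z\) of \(\exp (x)\) and \(\exp (y)\). Below is a minimal rank computation over the rationals, using our own helper rather than a library routine.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by Gauss-Jordan elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [u - factor * v for u, v in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

# Cofactors as coefficient vectors in the basis (1, x, y, z).
cofactors = {"exp(x)": [0, 0, 1, 0], "exp(y)": [0, 0, 0, 1]}
rows = list(cofactors.values())
# A nontrivial relation mu_1 L_1 + mu_2 L_2 = 0 exists iff rank < number of factors.
has_darboux_candidate = rank(rows) < len(rows)
print(rank(rows), has_darboux_candidate)
```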

4 Zero-Hopf bifurcation

In this section, we prove Proposition 1 and Theorem 2.

Proof of Proposition 1

System (1) has the two equilibrium points \(e_\pm =(\pm \sqrt{a},0,0)\) when \(a>0\), which collide at the origin when \(a=0\). The proof consists of computing directly the eigenvalues at each equilibrium point. The characteristic polynomial of the linear part of system (1) at the equilibrium point \(e_\pm \) is

$$\begin{aligned} p(\lambda )=\lambda ^3\pm \sqrt{a}\,\lambda ^2+\lambda \pm 2\sqrt{a}, \end{aligned}$$

where the upper (respectively lower) signs correspond to \(e_+\) (respectively \(e_-\)). Since \(p(\lambda )\) is a real polynomial of degree 3, it has either one real zero and two complex conjugate ones, or three real zeros counted with multiplicity. For both signs, the discriminant of \(p(\lambda )\) equals \(-8a^2-71a-4\), which is negative for every \(a\ge 0\). Hence \(p(\lambda )\) has a unique real root; see the Appendix for more details.

Imposing the condition

$$\begin{aligned} p(\lambda )=(\lambda -\rho )(\lambda - \varepsilon - i\beta )(\lambda - \varepsilon + i\beta ), \end{aligned}$$

with \(\rho ,\varepsilon ,\beta \in \mathbb {R}\) and \(\beta >0\), we obtain a system of three equations. They correspond to the coefficients of the terms of degree \(0, 1\) and \(2\) in \(\lambda \) of the polynomial \(p(\lambda )-(\lambda -\rho )(\lambda - \varepsilon - i\beta )(\lambda - \varepsilon + i\beta )\). This system has only two solutions in the variables \((a,\beta ,\rho )\), which are

and

Clearly, at \(\varepsilon =0\), only the first solution is well defined and gives \((a,\beta ,\rho )=(0,1,0)\). Hence, there is a unique zero-Hopf equilibrium point when \(a=0\) at the origin of coordinates with eigenvalues \(0\) and \(\pm i\). This completes the proof of Proposition 1. \(\square \)
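The claims in the proof of Proposition 1 can be reproduced numerically with the Python sketch below (helper names are our own). It carries out four checks:

- It builds \(\lambda I-J\) at \(e_\pm =(\pm \sqrt{a},0,0)\) from the Jacobian \(J\) of system (1).
- It compares the determinant of \(\lambda I-J\) with \(p(\lambda )=\lambda ^3\pm \sqrt{a}\,\lambda ^2+\lambda \pm 2\sqrt{a}\).
- It evaluates the cubic discriminant recalled in the Appendix, which for both signs equals \(-8a^2-71a-4<0\) when \(a\ge 0\).
- It checks that for \(a=0\) the values \(\lambda =0\) and \(\lambda =\pm i\) are roots.

```python
import math

def char_poly(a, sign):
    """Coefficients (A, B, C, D) of p(lam) = lam^3 + B lam^2 + C lam + D at the
    equilibrium (sign*sqrt(a), 0, 0) of system (1); requires a >= 0."""
    s = sign * math.sqrt(a)
    return (1.0, s, 1.0, 2.0 * s)

def p_at(lam, a, sign):
    """Evaluate p(lam); lam may be complex."""
    A, B, C, D = char_poly(a, sign)
    return A * lam**3 + B * lam**2 + C * lam + D

def char_matrix(lam, a, sign):
    """lam*I - J, where J is the Jacobian of system (1) at (sign*sqrt(a), 0, 0)."""
    x, y, z = sign * math.sqrt(a), 0.0, 0.0
    J = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [-2 * x - z, -1 + 6 * y, -x]]
    return [[(lam if i == j else 0.0) - J[i][j] for j in range(3)] for i in range(3)]

def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def discriminant(A, B, C, D):
    """Discriminant of A t^3 + B t^2 + C t + D (formula recalled in the Appendix)."""
    return 18*A*B*C*D - 4*B**3*D + B**2*C**2 - 4*A*C**3 - 27*A**2*D**2

for a in (0.0, 0.5, 2.0):
    for sign in (1, -1):
        print(a, sign, discriminant(*char_poly(a, sign)), -8*a**2 - 71*a - 4)
```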

Proof of Theorem 2

It was proved in Proposition 1 that, when \(a=0\), the origin is a zero-Hopf equilibrium point. We want to study whether some periodic orbits bifurcate from this equilibrium when the parameter \(a\) varies. For this, we shall use the first-order averaging theory described in Sect. 2.2 (see Theorem 3). Applying this theory to find the periodic solutions of a differential system requires three main steps.

Step 1. By convenient changes of variables, we must write the differential system (1) as a differential system that is periodic in its independent variable and depends on a small parameter, as in the normal form (5) of the averaging theory. Finding these changes of variables is sometimes the most difficult step.

Step 2. We must compute explicitly the integral (7) associated with the periodic differential system. This reduces the problem of finding periodic solutions to that of finding the zeros of a function \(g(\mathbf {y})\); see (7).

Step 3. We must compute explicitly the zeros of this function, in order to obtain periodic solutions of the initial differential system (1).

To find the changes of variables for Step 1 and write system (1) in the normal form of the averaging theory, we first write the linear part at the origin of system (1) when \(a=0\) in its real Jordan normal form, i.e., in the form

To do this, we apply the linear change of variables

In the new variables \((u,v,w)\), the differential system (1) becomes

Now we write the differential system (19) in cylindrical coordinates \((r,\theta , w)\) by means of the change of variables

and system (19) becomes

Now, we do a rescaling of the variables through the change of coordinates

After this rescaling, system (20) becomes

This system can be written as

where

Using the notation of the averaging theory described in Sect. 2, we have that if we take \(t=\theta \), \(T=2 \pi \), \(\varepsilon =\sqrt{a}\), \(\mathbf{x}=(R, W)^{T}\) and

it is immediate to check that the differential system (22) is written in the normal form (5) for applying the averaging theory and that it satisfies the assumptions of Theorem 3. This completes the step 1.

Now, we compute the integral in (7) with \(\mathbf{y}= (R,W)^{T}\), and denoting

we obtain

So the step 2 is done.

The system \(g_{11}(R,W)=g_{12}(R,W)=0\) has exactly two real solutions, \((R,W)= (0,\pm 2)\). The Jacobian (8) is

and, evaluated at the solutions \((R,W)=(0,\pm 2)\), it takes the value \(-1\ne 0\). Then, by Theorem 3, for any \(a>0\) sufficiently small, system (21) has a periodic solution \(\mathbf{x}(t,\varepsilon )=(R(\theta ,a), W(\theta , a))\) such that \((R(0, a), W(0,a))\) tends to \((0,\pm 2)\) when \(a\) tends to zero. The eigenvalues of the Jacobian matrix are \(2\) and \(-1/2\) at the solution \((0,- 2)\), and \(-2\) and \(1/2\) at the solution \((0,2)\). This shows that both periodic orbits are unstable. Each has a stable manifold and an unstable manifold, both formed by two cylinders.

Going back to the differential system (20), we get that such a system for \(a>0\) sufficiently small has two periodic solutions of period approximately \(2\pi \) of the form

For the differential system (19), these two periodic solutions correspond to two periodic solutions, of period also close to \(2\pi \), of the form

for \(a>0\) sufficiently small. Finally, we get for the differential system (1) the two periodic solutions

of period near \(2\pi \) when \(a>0\) is sufficiently small. Clearly, these periodic orbits tend to the origin of coordinates when \(a\) tends to zero. Therefore, they are small amplitude periodic solutions bifurcating from the zero-Hopf equilibrium point. This concludes the proof of Theorem 2. \(\square \)

References

[1] Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. National Bureau of Standards Applied Mathematics Series, vol. 55 (1964)

[2] Baldomá, I., Seara, T.M.: Breakdown of heteroclinic orbits for some analytic unfoldings of the Hopf-zero singularity. J. Nonlinear Sci. 16, 543–582 (2006)

[3] Baldomá, I., Seara, T.M.: The inner equation for generic analytic unfoldings of the Hopf-zero singularity. Discrete Contin. Dyn. Syst. Ser. B 10, 232–347 (2008)

[4] Broer, H.W., Vegter, G.: Subordinate Šil'nikov bifurcations near some singularities of vector fields having low codimension. Ergod. Theory Dyn. Syst. 4, 509–525 (1984)

[5] Castellanos, V., Llibre, J., Quilantán, I.: Simultaneous periodic orbits bifurcating from two zero-Hopf equilibria in a tritrophic food chain model. J. Appl. Math. Phys. 1, 31–38 (2013)

[6] Christopher, C., Llibre, J., Pereira, J.V.: Multiplicity of invariant algebraic curves in polynomial vector fields. Pac. J. Math. 229, 63–117 (2007)

[7] Champneys, A.R., Kirk, V.: The entwined wiggling of homoclinic curves emerging from saddle-node/Hopf instabilities. Phys. D Nonlinear Phenom. 195, 77–105 (2004)

[8] Darboux, G.: Mémoire sur les équations différentielles algébriques du premier ordre et du premier degré (Mélanges). Bull. Sci. Math. 2ème série 2, 60–96; 123–144; 151–200 (1878)

[9] Guckenheimer, J.: On a codimension two bifurcation. In: Dynamical Systems and Turbulence, Warwick (Coventry, 1979/1980), Lecture Notes in Math., vol. 898, pp. 99–142. Springer, Berlin (1981)

[10] Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields. Applied Mathematical Sciences, vol. 42. Springer, Berlin (2002)

[11] Dumortier, F., Llibre, J., Artés, J.C.: Qualitative Theory of Planar Differential Systems. Springer, New York (2006)

[12] Jouanolou, J.P.: Équations de Pfaff algébriques. Lecture Notes in Mathematics, vol. 708. Springer, Berlin (1979)

[13] Llibre, J., Zhang, X.: Darboux theory of integrability in \(\mathbb{C}^n\) taking into account the multiplicity. J. Differ. Equ. 246, 541–551 (2009)

[14] Llibre, J., Zhang, X.: Darboux theory of integrability for polynomial vector fields in \(\mathbb{R}^n\) taking into account the multiplicity at infinity. Bull. Sci. Math. 133, 765–778 (2009)

[15] Han, M.: Existence of periodic orbits and invariant tori in codimension two bifurcations of three-dimensional systems. J. Syst. Sci. Math. Sci. 18, 403–409 (1998)

[16] Kuznetsov, Yu.A.: Elements of Applied Bifurcation Theory, 3rd edn. Applied Mathematical Sciences, vol. 112. Springer, New York (2004)

[17] Marsden, J.E., McCracken, M.: The Hopf Bifurcation and Its Applications. In: Chernoff, P., Childs, G., Chow, S., Dorroh, J.R., Guckenheimer, J., Howard, L., Kopell, N., Lanford, O., Mallet-Paret, J., Oster, G., Ruiz, O., Schecter, S., Schmidt, D., Smale, S. (eds.) Applied Mathematical Sciences, vol. 19. Springer-Verlag, New York (1976)

[18] Sanders, J.A., Verhulst, F., Murdock, J.: Averaging Methods in Nonlinear Dynamical Systems, 2nd edn. Applied Mathematical Sciences, vol. 59. Springer, New York (2007)

[19] Scheurle, J., Marsden, J.: Bifurcation to quasi-periodic tori in the interaction of steady state and Hopf bifurcations. SIAM J. Math. Anal. 15, 1055–1074 (1984)

[20] Verhulst, F.: Nonlinear Differential Equations and Dynamical Systems. Springer, Berlin (1991)

[21] Wang, X., Chen, G.: Constructing a chaotic system with any number of equilibria. Nonlinear Dyn. 71, 429–436 (2013)

Acknowledgments

The first author is partially supported by MINECO/FEDER Grants MTM2008-03437 and MTM2013-40998-P, AGAUR Grant Number 2014SGR-568, an ICREA Academia, the Grants FP7-PEOPLE-2012-IRSES 318999 and 316338, and FEDER-UNAB-10-4E-378. The first two authors are also supported by the joint project FP7-PEOPLE-2012-IRSES number 316338 and by CAPES Grant Number 88881.030454/2013-01 from the program CSF-PVE. The third author is supported by Portuguese National Funds through FCT - Fundação para a Ciência e a Tecnologia within the project PEst-OE/EEI/LA0009/2013 (CAMGSD).


Appendix: Roots of a cubic polynomial

We recall that the discriminant \(\Delta \) of the polynomial \(ax^3+bx^2+cx+d\) is

$$\begin{aligned} \Delta =18abcd-4b^3d+b^2c^2-4ac^3-27a^2d^2. \end{aligned}$$

For real coefficients with \(a\ne 0\), it is known that:

- If \(\Delta > 0\), then the equation has three distinct real roots.

- If \(\Delta = 0\), then the equation has a multiple root and all its roots are real.

- If \(\Delta < 0\), then the equation has one real root and two non-real complex conjugate roots.

For more details see [1].


Cite this article

Llibre, J., Oliveira, R.D.S. & Valls, C. On the integrability and the zero-Hopf bifurcation of a Chen–Wang differential system. Nonlinear Dyn 80, 353–361 (2015). https://doi.org/10.1007/s11071-014-1873-4
