Abstract

This paper addresses the passivity problem for uncertain neural networks with both discrete and distributed time-varying delays. The parameter uncertainties are assumed to be norm-bounded. By constructing an augmented Lyapunov–Krasovskii functional and utilizing zero equalities, improved passivity criteria for the networks are derived in terms of linear matrix inequalities (LMIs) via new approaches. Three numerical examples demonstrate that the proposed criteria enlarge the feasible region.


1 Introduction

Neural networks have been extensively applied in many areas such as moving-image reconstruction, signal processing, pattern recognition, associative memory, and fixed-point computation. Because these applications depend heavily on the dynamic behavior of the equilibrium points, stability analysis of the networks is an important prerequisite [1–5]. Moreover, owing to the finite speed of information processing in network implementations, time delays occur in many neural networks, and it is well known that time delays often cause undesirable dynamic behaviors such as oscillation and instability. Delay-dependent stability and stabilization criteria for neural networks with time delays have therefore received more attention than delay-independent ones, because they exploit information on the size of the delays and thereby reduce conservatism; see [6–23] and the references therein.

In practice, signal propagation is sometimes instantaneous and can be modeled with discrete delays, but it may also be distributed over a certain time period, in which case distributed delays should be incorporated in the model. Hence, neural network models often possess both discrete and distributed delays [24]. In this regard, the stability of cellular neural networks with discrete and distributed delays has been investigated in [25–28]. Furthermore, norm-bounded parametric uncertainties, which can affect the stability of systems, are considered in [26–28].

On the other hand, in many scientific and engineering problems, stability issues are linked to the theory of dissipative systems, which postulates that the energy dissipated inside a dynamic system is less than the energy supplied by external sources [29]. Based on the concept of energy, passivity is a property of dynamical systems that describes the energy flow through the system; it is also an input/output characterization and is related to the Lyapunov method. In nonlinear control, the concept of dissipativity was first introduced by Willems [30] in the form of an inequality involving a supply rate and a storage function. The main idea of passivity theory is that the passive properties of a system can keep the system internally stable.

In this regard, the passivity problem for uncertain neural networks with both discrete and distributed time-varying delays has been considered in [31–38]. Chen et al. [31] investigated the passivity problem of such networks by utilizing free-weighting matrices and the LMI framework. In [32], improved delay-dependent passivity criteria for the networks were proposed. Xu et al. [33] studied passivity analysis for neural networks with both time-varying delays and norm-bounded parameter uncertainties. In [34], improved passivity criteria for stochastic neural networks with interval time-varying delays and norm-bounded parameter uncertainties were proposed via an improved approximation method. In [35], new delay-dependent passivity conditions for delayed neural networks were derived for two types of time-varying delays. Recently, by including more state information in augmented vectors, an augmented Lyapunov–Krasovskii functional was utilized in [36] to derive passivity criteria for uncertain neural networks with time-varying delays. Song and Cao [37] investigated the passivity problem for a class of uncertain neural networks with leakage delay and time-varying delays by employing the Newton–Leibniz formula and the free-weighting matrix method. Very recently, in [38], by constructing a novel Lyapunov–Krasovskii functional that includes a tuning parameter in the time-varying delays and introducing some proper free-weighting matrices, new passivity conditions were developed to guarantee the passivity performance of neural networks with both discrete and distributed time-varying delays. However, there is still room for further improvement in enlarging the feasible regions of passivity criteria.

Motivated by the above discussion, this paper addresses the problem of delay-dependent passivity for uncertain neural networks with both discrete and distributed time-varying delays. The parameter uncertainties are assumed to be norm-bounded. The main contribution of this paper lies in two aspects:

1. Unlike the method of [38], no tuning parameter in the time-varying delay is used. Instead, a newly constructed Lyapunov–Krasovskii functional that takes more state information into account is proposed. Then, inspired by [39–41], a passivity condition for neural networks with both discrete and distributed time-varying delays and parameter uncertainties is derived in terms of LMIs, which will be given in Theorem 1.

2. A novel approach that partitions the range of the activation function divided by the state into m subintervals will be proposed. Through three numerical examples, it will be shown that the maximum delay bounds guaranteeing the passivity of the considered neural networks increase as the partitioning number m gets larger.

Based on the result of Theorem 1, a passivity criterion for uncertain neural networks with only discrete time-varying delays will be proposed in Theorem 2. Finally, three numerical examples are included to show the effectiveness of the proposed methods.

Notation

\(\mathbb{R}^{n}\) is the n-dimensional Euclidean space, and \(\mathbb{R}^{m \times n}\) denotes the set of all m×n real matrices. For symmetric matrices X and Y, X>Y (respectively, X≥Y) means that the matrix X−Y is positive definite (respectively, positive semidefinite). \(X^{\perp}\) denotes a basis for the null space of X. \(I_{n}\), \(0_{n}\), and \(0_{m\cdot n}\) denote the n×n identity matrix, the n×n zero matrix, and the m×n zero matrix, respectively. ∥⋅∥ refers to the Euclidean vector norm or the induced matrix norm. \(\operatorname{diag} \{ \cdots\}\) denotes a block diagonal matrix. For a square matrix S, \(\operatorname{sym}\{ S\}\) denotes the sum of S and its transpose \(S^{T}\), i.e., \(\operatorname{sym}\{S\}=S+S^{T}\). ⋆ represents the elements below the main diagonal of a symmetric matrix.

2 Problem statements

Consider the following uncertain neural networks with both discrete and distributed time-varying delays:

where n denotes the number of neurons in the network, \(x(t)=[x_{1}(t),\ldots,x_{n}(t)]^{T}\in\mathbb{R}^{n}\) is the neuron state vector, \(y(t)\in\mathbb{R}^{n}\) is the output vector, \(f(x(\cdot))=[f_{1}(x_{1}(\cdot)),\ldots,f_{n}(x_{n}(\cdot))]^{T}\in\mathbb{R}^{n}\) denotes the neuron activation function vector, \(u(t)\in\mathbb{R}^{n}\) is the input vector, \(A=\operatorname{diag}\{a_{1},\ldots,a_{n}\}\in\mathbb{R}^{n\times n}\) is a positive diagonal matrix, \(W_{i}\in\mathbb{R}^{n\times n}\ (i=0,1,2)\) are the interconnection weight matrices, \(C_{i} \in\mathbb{R}^{n\times n}\ (i=1,2)\) are known constant matrices, and \(\Delta A(t)\) and \(\Delta W_{i}(t)\ (i=0,1,2)\) are the parameter uncertainties of the form

where F(t) is the time-varying nonlinear function satisfying

The delays h(t) and τ(t) are time-varying delays satisfying

where \(h_{U}\), \(h_{D}\), and \(\tau_{U}\) are known positive scalars.

It is assumed that the neuron activation functions satisfy the following condition.

Assumption 1

[42] The neuron activation functions \(f_{i}(\cdot)\), \(i=1,\ldots,n\), are continuous, bounded, and satisfy

where \(k^{+}_{i}\) and \(k^{-}_{i}\) are constants.

Remark 1

In Assumption 1, \(k^{+}_{i}\) and \(k^{-}_{i}\) are allowed to be positive, negative, or zero. As mentioned in [14], Assumption 1 describes the class of globally Lipschitz continuous and monotonically nondecreasing activation functions when \(k^{-}_{i}=0\) and \(k^{+}_{i}>0\). It also describes the class of globally Lipschitz continuous and monotonically increasing activation functions when \(k^{+}_{i}>k^{-}_{i}>0\).

For passivity analysis, system (1) can be rewritten as

The objective of this paper is to investigate delay-dependent passivity conditions for system (4). Before deriving our main results, we state the following definition and lemmas.

Definition 1

The system (1) is called passive if there exists a scalar γ≥0 such that

for all \(t_{p}\geq0\) and for all solutions of (1) with \(x(0)=0\).

Lemma 1

[43] Let \(\zeta\in\mathbb{R}^{n}\), \(\varPhi=\varPhi^{T}\in\mathbb{R}^{n \times n}\), and \(\varUpsilon\in\mathbb{R}^{m \times n}\) such that \(\operatorname{rank}(\varUpsilon) < n\). The following statements are equivalent:

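(i) \(\zeta^{T}\varPhi\zeta<0\) for all \(\zeta\neq0\) such that \(\varUpsilon\zeta=0\);

(ii) \((\varUpsilon^{\perp})^{T}\varPhi\varUpsilon^{\perp}<0\).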
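Lemma 1 is a form of Finsler's lemma: negativity of \(\varPhi\) only has to hold on the null space of \(\varUpsilon\). The sketch below, which assumes NumPy and uses hypothetical helper names, builds \(\varUpsilon^{\perp}\) from an SVD and tests statement (ii), then samples statement (i), on a small example in which \(\varPhi\) is indefinite on \(\mathbb{R}^{3}\) but negative definite on the null space of \(\varUpsilon\).

```python
import numpy as np


def null_space_basis(Y, tol=1e-10):
    """Orthonormal columns spanning the null space of Y (the role of Upsilon^perp)."""
    _, s, vh = np.linalg.svd(Y)                  # full_matrices=True, so vh is n x n
    rank = int(np.sum(s > tol * max(1.0, s.max())))
    return vh[rank:].T


def projected_condition(Phi, Y):
    """Return (Upsilon^perp)^T Phi Upsilon^perp and whether it is negative definite."""
    Yp = null_space_basis(Y)
    reduced = Yp.T @ Phi @ Yp
    return reduced, bool(np.linalg.eigvalsh(reduced).max() < 0.0)


# Phi is indefinite on R^3 but negative definite on {zeta : zeta_3 = 0}.
Phi = np.diag([-1.0, -2.0, 3.0])
reduced, ok = projected_condition(Phi, np.array([[0.0, 0.0, 1.0]]))
print(ok)  # statement (ii) holds for this Upsilon

# Statement (i), sampled: zeta^T Phi zeta < 0 for random zeta with Upsilon zeta = 0.
rng = np.random.default_rng(1)
Yp = null_space_basis(np.array([[0.0, 0.0, 1.0]]))
samples = rng.standard_normal((1000, Yp.shape[1])) @ Yp.T
quad = np.einsum("ki,ij,kj->k", samples, Phi, samples)
print(bool(np.all(quad < 0.0)))

# With a constraint that leaves zeta_3 free, (ii) fails.
_, ok2 = projected_condition(Phi, np.array([[1.0, 0.0, 0.0]]))
print(ok2)
```

In Theorem 1 the same projection is what turns the constrained condition on \(\zeta(t)\) into an LMI in which \(\varUpsilon^{\perp}\) is a fixed numerical matrix.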

3 Main results

In this section, new passivity criteria for network (4) will be proposed. For simplicity of matrix representation, \(e_{i}\in\mathbb{R}^{18n \times n}\ (i=1,2,\ldots,18)\) are defined as block entry matrices (for example, \(e_{2}=[0_{n},\,I_{n},\,0_{n\cdot16n}]^{T}\)). Several matrices are defined as

Then the main result is given by the following theorem.

Theorem 1

For given positive scalars \(h_{U}\), \(h_{D}\), \(\tau_{U}\), a positive integer m, and diagonal matrices \(K^{-}=\operatorname{diag}\{k_{1}^{-},\ldots,k_{n}^{-}\}\) and \(K^{+}=\operatorname{diag}\{k_{1}^{+},\ldots,k_{n}^{+}\}\), system (4) is passive for \(0\leq h(t)\leq h_{U}\), \(\dot{h}(t)\leq h_{D}\), and \(0\leq\tau(t)\leq\tau_{U}\) if there exist positive scalars ε and γ, positive diagonal matrices \(\varLambda_{a}=\operatorname{diag}\{\lambda _{a1},\ldots,\lambda_{an}\}\ (a=1,2)\), \(\Delta_{a}=\operatorname{diag}\{ \delta_{a1},\ldots,\delta_{an}\}\ (a=1,2)\), \(H_{b}=\operatorname{diag}\{h_{1}^{b},\ldots,h_{n}^{b}\}\ (b=1,2,\ldots,3m)\), and \(\tilde{H}_{b}= \operatorname{diag}\{\tilde{h}_{1}^{b},\ldots,\tilde {h}_{n}^{b}\}\ (b=1,2,\ldots,m)\), positive definite matrices \(R\in\mathbb{R}^{5n\times5n}\), \(N\in\mathbb {R}^{3n\times3n}\), \(M\in\mathbb{R}^{2n\times2n}\), \(G\in\mathbb {R}^{2n\times2n}\), \(Q_{1}\in\mathbb{R}^{3n\times3n}\), \(Q_{2}\in\mathbb {R}^{n\times n}\), and \(Q_{3}\in\mathbb{R}^{n\times n}\), any symmetric matrices \(P_{1}\in\mathbb{R}^{n\times n}\) and \(P_{2}\in\mathbb {R}^{n\times n}\), and any matrices \(S_{1}\in\mathbb{R}^{3n\times3n}\) and \(S_{2}\in\mathbb{R}^{n\times n}\) satisfying the following LMIs:

where \(\varUpsilon\), \(\bar{P}_{1}\), \(\bar{P}_{2}\), \(\varPsi\), and \(\Theta_{j}\) are defined in (6).
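The block entry matrices \(e_{i}\) introduced at the beginning of this section select the i-th n-dimensional block of an 18-block augmented vector, so \(e_{i}^{T}\zeta(t)\) is the i-th block of \(\zeta(t)\). A minimal NumPy sketch (the helper name `block_entry` is hypothetical) builds them and checks the example \(e_{2}\):

```python
import numpy as np


def block_entry(i, num_blocks, n):
    """e_i in R^{(num_blocks*n) x n}: I_n in block row i (1-indexed), zeros elsewhere."""
    if not 1 <= i <= num_blocks:
        raise ValueError("block index out of range")
    e = np.zeros((num_blocks * n, n))
    e[(i - 1) * n : i * n, :] = np.eye(n)
    return e


n = 2
e2 = block_entry(2, 18, n)
# e_2^T should equal [0_n, I_n, 0_{n x 16n}].
expected = np.hstack([np.zeros((n, n)), np.eye(n), np.zeros((n, 16 * n))])

zeta = np.arange(18 * n, dtype=float)   # stand-in for the augmented vector
print(e2.T @ zeta)                      # [2. 3.], the 2nd block of zeta
```

Theorem 2 uses the same construction with 14 blocks instead of 18.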

Proof

Consider the following Lyapunov–Krasovskii functional candidate as

where

The time derivatives of \(V_{1}\), \(V_{2}\), and \(V_{3}\) are calculated as

Inspired by the work of [40], by adding the following two zero equalities with any symmetric matrices \(P_{1}\) and \(P_{2}\):

into the time derivative of \(V_{4}\) and using Jensen’s inequality [44], we get
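Jensen's integral inequality [44] states that, for \(R>0\) and an integrable vector function \(\omega\), \(\left(\int_{a}^{b}\omega(s)\,ds\right)^{T}R\left(\int_{a}^{b}\omega(s)\,ds\right)\leq(b-a)\int_{a}^{b}\omega^{T}(s)R\,\omega(s)\,ds\). The NumPy sketch below checks a rectangle-rule discretization of both sides, which satisfies the same bound exactly for any \(R\geq0\) by convexity; the function name and test signal are illustrative assumptions, not taken from the paper.

```python
import numpy as np


def jensen_sides(omega, R, a, b):
    """Rectangle-rule versions of both sides of Jensen's inequality.

    omega: array of shape (N, n), samples of omega(s) on N equal steps of [a, b].
    Returns (lhs, rhs) with lhs = (int omega)^T R (int omega) and
    rhs = (b - a) * int omega^T R omega.
    """
    N = omega.shape[0]
    ds = (b - a) / N
    integral = ds * omega.sum(axis=0)
    lhs = integral @ R @ integral
    rhs = (b - a) * ds * np.einsum("ki,ij,kj->", omega, R, omega)
    return lhs, rhs


a, b, N = 0.0, 2.0, 4000
s = a + (b - a) * np.arange(N) / N                   # left endpoints
omega = np.column_stack([np.sin(3.0 * s), np.exp(-s)])
R = np.array([[2.0, 0.5], [0.5, 1.0]])               # R > 0
lhs, rhs = jensen_sides(omega, R, a, b)
print(lhs <= rhs)

# Equality holds when omega is constant on [a, b].
lhs_c, rhs_c = jensen_sides(np.ones((N, 2)), R, a, b)
print(np.isclose(lhs_c, rhs_c))
```

The equality case shows why the bound is tight for slowly varying signals and loose otherwise, which is part of the conservatism that the zero equalities and the reciprocally convex step try to recover.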

If inequality (8) holds, then the two inequalities \(Q_{1} +\bar{P}_{1} \geq 0\) and \(Q_{1} +\bar{P}_{2} \geq0\) are satisfied. Thus, \(\dot{V}_{4}\) can be estimated as

where \(\alpha(t)=1-h(t)h_{U}^{-1}\) and \(\beta(t)=1-\tau(t)\tau _{U}^{-1}\), which satisfy \(0<\alpha(t)<1\) and \(0<\beta(t)<1\) when \(0<h(t)<h_{U}\) and \(0<\tau(t)<\tau_{U}\), respectively. Then, by the reciprocally convex approach [41], if the LMIs (8) and (9) are satisfied, the following inequalities hold for any matrices \(S_{1}\) and \(S_{2}\):
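In its two-term form, the reciprocally convex bound of [41] states that for \(0<\alpha<1\), \(R>0\), and any S with \(\begin{bmatrix}R&S\\ \star&R\end{bmatrix}\geq0\), \(\frac{1}{\alpha}x^{T}Rx+\frac{1}{1-\alpha}y^{T}Ry\geq\begin{bmatrix}x\\y\end{bmatrix}^{T}\begin{bmatrix}R&S\\ \star&R\end{bmatrix}\begin{bmatrix}x\\y\end{bmatrix}\). The NumPy sketch below checks this on random data. S is built as \(R^{1/2}CR^{1/2}\) with \(\|C\|_{2}=0.9\), which is an assumed, convenient way to make the block matrix positive semidefinite; it is not how S is chosen in the paper, where S is a free LMI variable.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3

B = rng.standard_normal((n, n))
R = B @ B.T + n * np.eye(n)                       # R > 0

# S = R^{1/2} C R^{1/2} with ||C||_2 = 0.9 keeps [[R, S], [S^T, R]] >= 0.
w, V = np.linalg.eigh(R)
R_half = V @ np.diag(np.sqrt(w)) @ V.T
C = rng.standard_normal((n, n))
C *= 0.9 / np.linalg.norm(C, 2)
S = R_half @ C @ R_half
block = np.block([[R, S], [S.T, R]])
min_eig = np.linalg.eigvalsh(block).min()


def reciprocal_convex_gap(x, y, alpha):
    """LHS minus RHS of the reciprocally convex bound; should be >= 0."""
    lhs = x @ R @ x / alpha + y @ R @ y / (1.0 - alpha)
    z = np.concatenate([x, y])
    return lhs - z @ block @ z


gaps = [
    reciprocal_convex_gap(rng.standard_normal(n), rng.standard_normal(n),
                          rng.uniform(0.01, 0.99))
    for _ in range(1000)
]
print(min_eig >= -1e-10, min(gaps) >= -1e-9)  # True True
```

The point of the bound is that the right-hand side no longer depends on \(\alpha(t)\), so a single LMI covers every delay value in the interval.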

Also, when \(h(t)=0\) or \(h(t)=h_{U}\), and when \(\tau(t)=0\) or \(\tau(t)=\tau_{U}\), we get

and

respectively.

Thus, from (17) and (18), the following inequality still holds:

Here, if inequality (8) holds, then \(\dot{V}_{4}\) can be further bounded as

Lastly, an upper bound of \(\dot{V}_{5}\) can be obtained as

where Lemma 2 in [36] was utilized in the above inequality.

Since the inequality \(p^{T}(t)p(t)\leq q^{T}(t)q(t)\) holds from (2) and (4), the following inequality holds for any positive scalar ε:

Choosing v=0 in (3), we divide the range of (3) into m subintervals. Each subinterval of the range of (3) can be described as

where m is a positive integer, and each condition is equivalent to

From the conditions above, the following inequalities hold for any positive diagonal matrices \(H_{3(j-1)+l}=\operatorname{diag} \{h_{1}^{3(j-1)+l},\ldots ,h_{n}^{3(j-1)+l} \}\) and \(\tilde{H}_{j}=\operatorname{diag} \{\tilde{h}_{1}^{j},\ldots ,\tilde{h}_{n}^{j} \}\), where \(j=1,2,\ldots,m\) and \(l=1,2,3\).

Case (j) for Range (j) with j=1,2,…,m:

From (11)–(23) and by applying the S-procedure [45], an upper bound of \(\dot {V}-2y^{T}(t)u(t)-\gamma u^{T}(t)u(t)\) can be obtained as

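By Lemma 1, \(\zeta^{T}(t)(\varPsi+\Theta_{j})\zeta(t)<0\) for all \(\zeta(t)\neq0\) with \(\varUpsilon\zeta(t)=0_{n\times1}\) is equivalent to \((\varUpsilon^{\perp})^{T}(\varPsi+\Theta_{j})\varUpsilon^{\perp}<0\). Therefore, if LMIs (7), (8), and (9) hold, then \((\varUpsilon^{\perp})^{T}(\varPsi+\Theta_{j})\varUpsilon^{\perp}<0\) holds, which means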

By integrating (25) with respect to t over the time period from 0 to \(t_{p}\), we have

for \(x(0)=0\). Since \(V(x(0))=0\) and \(V(x(t_{p}))\geq0\), inequality (5) in Definition 1 holds. This implies that the neural network (1) is passive in the sense of Definition 1. This completes the proof. □
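The final step of the proof can be written out explicitly. Assuming that (25) reads \(\dot{V}(t)-2y^{T}(t)u(t)-\gamma u^{T}(t)u(t)<0\) (its display was lost in extraction, so this form is inferred from the bound stated in Case (j)), integrating from 0 to \(t_{p}\) gives

```latex
\begin{aligned}
V(x(t_p)) - V(x(0))
  &\le 2\int_{0}^{t_p} y^{T}(s)u(s)\,ds + \gamma\int_{0}^{t_p} u^{T}(s)u(s)\,ds,\\
V(x(0)) = 0,\; V(x(t_p)) \ge 0
  &\;\Longrightarrow\;
  2\int_{0}^{t_p} y^{T}(s)u(s)\,ds \ge -\gamma\int_{0}^{t_p} u^{T}(s)u(s)\,ds ,
\end{aligned}
```

which is the standard passivity inequality; we assume (5) in Definition 1 takes this form.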

Remark 2

Unlike the method of [38], the augmented vector ζ(t) used here includes state vectors such as \(f(x(t-\tau_{U}))\) and \(x(t-\tau_{U})\). These state vectors have not been used as elements of the augmented vector ζ(t) in other literature, which is the main difference between Theorem 1 and existing methods. Correspondingly, in (23), terms such as \(-2\sum^{n}_{i=1}\tilde{h}_{i}^{j} [f_{i} (x_{i}(t-\tau_{U})) -(k_{i}^{-}+\frac {j-1}{m}(k_{i}^{+}-k_{i}^{-}))x_{i} (t-\tau_{U})][f_{i} (x_{i}(t-\tau _{U}))-(k_{i}^{-}+\frac{j}{m}(k_{i}^{+}-k_{i}^{-})) x_{i} (t-\tau_{U})]\) are utilized for the first time.

Remark 3

Recently, the reciprocally convex optimization technique for reducing the conservatism of stability criteria for systems with time-varying delays was proposed in [41]. Motivated by this work, the method of [41] was utilized in (15)–(19) to obtain upper bounds of terms such as

and

Remark 4

In (14), two zero equalities inspired by [40] are proposed and utilized in Theorem 1 to reduce the conservatism of the criterion. As presented in (14), the terms \(x^{T}(t)(h_{U} P_{1})x(t)-x^{T}(t-h(t))(h_{U} P_{1})x(t-h(t))\) and \(x^{T}(t-h(t))(h_{U} P_{2})x(t-h(t))-x^{T}(t-h_{U})(h_{U} P_{2})x(t-h_{U})\) enlarge the feasible region of the passivity criterion. Furthermore, as shown in (15), the two integral terms \(- 2h_{U} \int^{t}_{t-h(t)}\dot{x}^{T}(s) P_{1} x(s)\,ds\) and \(- 2h_{U} \int^{t-h(t)}_{t-h_{U}}\dot{x}^{T}(s) P_{2} x(s)\,ds\) in (14) are merged into the integral terms \(- h_{U} \int^{t}_{t-h(t)}\mu^{T}(s) Q_{1} \mu(s)\,ds\) and \(- h_{U} \int^{t-h(t)}_{t-h_{U}}\mu^{T}(s) Q_{1} \mu(s)\,ds\), which cause conservatism in the passivity criterion.
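Reading the first zero equality in (14) from the terms listed in this remark, it states \(0=h_{U}\left[x^{T}(t)P_{1}x(t)-x^{T}(t-h(t))P_{1}x(t-h(t))\right]-2h_{U}\int_{t-h(t)}^{t}\dot{x}^{T}(s)P_{1}x(s)\,ds\), which follows from \(\frac{d}{ds}\left[x^{T}(s)Px(s)\right]=2\dot{x}^{T}(s)Px(s)\) for symmetric P; the second is the same identity with \(P_{2}\) on \([t-h_{U},t-h(t)]\). Because \(P_{1}\) and \(P_{2}\) need not be positive definite, these terms add free variables without changing \(\dot{V}\). The NumPy sketch below checks the identity numerically; the trajectory, matrices, and delay values are hypothetical.

```python
import numpy as np


def x(s):
    """Hypothetical smooth state trajectory in R^2."""
    return np.array([np.sin(s), np.cos(2.0 * s)])


def xdot(s):
    return np.array([np.cos(s), -2.0 * np.sin(2.0 * s)])


def simpson(f, a, b, N=2000):
    """Composite Simpson rule for a scalar integrand; N must be even."""
    s = np.linspace(a, b, N + 1)
    vals = np.array([f(si) for si in s])
    h = (b - a) / N
    return h / 3.0 * (vals[0] + vals[-1]
                      + 4.0 * vals[1:-1:2].sum() + 2.0 * vals[2:-1:2].sum())


def zero_equality_residual(P, lo, hi, h_U):
    """h_U[x(hi)'P x(hi) - x(lo)'P x(lo)] - 2 h_U int_lo^hi xdot'P x ds (should be ~0)."""
    boundary = h_U * (x(hi) @ P @ x(hi) - x(lo) @ P @ x(lo))
    integral = simpson(lambda s: xdot(s) @ P @ x(s), lo, hi)
    return boundary - 2.0 * h_U * integral


t, h_t, h_U = 3.0, 0.7, 1.2                    # hypothetical values, 0 <= h(t) <= h_U
P1 = np.array([[1.0, 0.4], [0.4, -0.5]])       # symmetric, indefinite
P2 = np.array([[-2.0, 0.3], [0.3, 0.8]])
r1 = zero_equality_residual(P1, t - h_t, t, h_U)
r2 = zero_equality_residual(P2, t - h_U, t - h_t, h_U)
print(abs(r1) < 1e-8, abs(r2) < 1e-8)  # True True
```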

Remark 5

Inspired by the fact that the stability and performance of neural networks are related to the choice of activation functions [46], the range of the term \(k^{-}_{i} \leq\frac{f_{i} (u)}{u} \leq k^{+}_{i}\) is divided into m subintervals: \(k^{-}_{i} \leq\frac{f_{i} (u)}{u} \leq k^{-}_{i} + \frac{1}{m}(k^{+}_{i}-k^{-}_{i})\), \(k^{-}_{i} + \frac{1}{m}(k^{+}_{i}-k^{-}_{i}) \leq\frac{f_{i} (u)}{u} \leq k^{-}_{i} + \frac{2}{m}(k^{+}_{i}-k^{-}_{i}),\ldots, k^{-}_{i} + \frac{m-2}{m}(k^{+}_{i}-k^{-}_{i}) \leq\frac{f_{i} (u)}{u} \leq k^{-}_{i} + \frac{m-1}{m}(k^{+}_{i}-k^{-}_{i})\), and \(k^{-}_{i} + \frac{m-1}{m}(k^{+}_{i}-k^{-}_{i}) \leq\frac{f_{i} (u)}{u} \leq k^{+}_{i} \). By choosing u as x(t), x(t−h(t)), \(x(t-h_{U})\), and \(x(t-\tau_{U})\), the inequalities (23) are utilized in Theorem 1. This idea has not previously been applied to passivity analysis of uncertain neural networks with mixed time-varying delays. Its advantage is that the feasible region of the passivity criterion can be enlarged as the partitioning number m increases. It should be pointed out that, unlike the delay-partitioning approach, the augmented vector is not changed; however, the number of decision variables grows as m increases. Through three numerical examples, it will be shown that the feasible region of the passivity criterion in Theorem 1 can be significantly enlarged as m increases.
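For \(u\neq0\), each subinterval condition \(k^{-}_{i}+\frac{j-1}{m}(k^{+}_{i}-k^{-}_{i})\leq f_{i}(u)/u\leq k^{-}_{i}+\frac{j}{m}(k^{+}_{i}-k^{-}_{i})\) is equivalent to the sector product \([f_{i}(u)-(k^{-}_{i}+\frac{j-1}{m}(k^{+}_{i}-k^{-}_{i}))u][f_{i}(u)-(k^{-}_{i}+\frac{j}{m}(k^{+}_{i}-k^{-}_{i}))u]\leq0\), the factor that appears in Remark 2 (setting v=0 in (3) presumes \(f_{i}(0)=0\)). The NumPy sketch below uses tanh as a hypothetical activation in the sector [0, 1] with m=4, locates the active subinterval for sampled u, and checks that product. Only one case j is active for a given u, which is why Theorem 1 requires the LMI for every \(j=1,\ldots,m\).

```python
import numpy as np


def subinterval_bounds(k_minus, k_plus, m):
    """Bounds (k^- + (j-1)/m (k^+ - k^-), k^- + j/m (k^+ - k^-)) for j = 1..m."""
    step = (k_plus - k_minus) / m
    return [(k_minus + (j - 1) * step, k_minus + j * step) for j in range(1, m + 1)]


def active_subinterval(ratio, bounds):
    """1-based index j of the first subinterval containing f(u)/u."""
    for j, (lo, hi) in enumerate(bounds, start=1):
        if lo <= ratio <= hi:
            return j
    raise ValueError("f(u)/u lies outside [k^-, k^+]")


# Hypothetical activation f = tanh, which satisfies the sector [0, 1].
bounds = subinterval_bounds(0.0, 1.0, m=4)
u = np.concatenate([np.linspace(-5.0, -0.1, 50), np.linspace(0.1, 5.0, 50)])
f = np.tanh(u)

products = []
for ui, fi in zip(u, f):
    j = active_subinterval(fi / ui, bounds)
    lo, hi = bounds[j - 1]
    # Case (j): (f - lo*u)(f - hi*u) <= 0, compare the product in Remark 2.
    products.append((fi - lo * ui) * (fi - hi * ui))

print(bounds)  # [(0.0, 0.25), (0.25, 0.5), (0.5, 0.75), (0.75, 1.0)]
print(max(products) <= 1e-12)
```

A finer partition narrows each sector, so each case-j constraint is tighter than the single global sector condition of Assumption 1.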

As a special case, network (4) without distributed delays can be rewritten as

A passivity criterion for network (29) can be obtained by a method similar to the proof of Theorem 1; this result is given in Theorem 2. For simplicity of matrix representation, \(e_{i}\in\mathbb{R}^{14n \times n}\ (i=1,\ldots,14)\) are defined as block entry matrices (for example, \(e_{2}=[0_{n},\,I_{n},\,0_{n\cdot12n}]^{T}\)). Before introducing this result, several matrices are defined as:

where \(\bar{P}_{1}\), \(\bar{P}_{2}\), \(\Pi_{a}\ (a=3,\ldots,6)\), and \(\Phi_{2}\) are defined in (6).

Now, we have the following theorem.

Theorem 2

For given positive scalars \(h_{U}\) and \(h_{D}\), a positive integer m, and diagonal matrices \(K^{-}= \operatorname{diag}\{k_{1}^{-},\ldots,k_{n}^{-}\}\) and \(K^{+}=\operatorname{diag}\{k_{1}^{+},\ldots,k_{n}^{+}\}\), system (29) is passive for \(0\leq h(t)\leq h_{U}\) and \(\dot{h}(t)\leq h_{D}\) if there exist positive scalars ε and γ, positive diagonal matrices \(\varLambda_{a}=\operatorname{diag}\{\lambda _{a1},\ldots,\lambda_{an}\}\ (a=1,2)\), \(\Delta_{a}=\operatorname{diag}\{ \delta_{a1},\ldots,\delta_{an}\}\ (a=1,2)\), and \(H_{b}=\operatorname{diag}\{h_{1}^{b},\ldots,h_{n}^{b}\}\ (b=1,2,\ldots,3m)\), positive definite matrices \(R\in\mathbb{R}^{4n\times4n}\), \(N\in\mathbb {R}^{3n\times3n}\), \(M\in\mathbb{R}^{2n\times2n}\), \(G\in\mathbb {R}^{2n\times2n}\), \(Q_{1}\in\mathbb{R}^{3n\times3n}\), and \(Q_{3}\in\mathbb {R}^{n\times n}\), any symmetric matrices \(P_{a}\in\mathbb{R}^{n\times n}\ (a=1,2)\), and any matrix \(S_{1}\in\mathbb{R}^{3n\times3n}\) satisfying the following LMIs together with (8):

where \(\hat{\varUpsilon}\) and \(\hat{\varPsi}\) are defined in (30), and \(\bar{P}_{a}\ (a=1,2)\) and \(\Theta_{1j}\) are defined in (6).

Proof

Consider the following Lyapunov–Krasovskii functional candidate as

where

The rest of the proof follows directly from that of Theorem 1 and is omitted. □

4 Numerical examples

In this section, three numerical examples illustrate the effectiveness of the proposed criteria. In the examples, MATLAB, YALMIP 3.0, and SeDuMi 1.3 are used to solve the LMI problems.
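Remark 5 notes that the number of decision variables grows with m. As a rough tally of the size of these LMI problems (our own count, not reported in the paper), the hypothetical helper below counts the scalar unknowns in Theorem 1 as listed: k(k+1)/2 for each k×k symmetric matrix, n for each n×n diagonal matrix, and k² for each full k×k matrix. Under this count, each increment of m adds 4n scalars (three new \(H_{b}\) and one new \(\tilde{H}_{b}\)).

```python
def sym(k):
    """Free entries of a k x k symmetric matrix."""
    return k * (k + 1) // 2


def theorem1_decision_variables(n, m):
    """Scalar unknowns in Theorem 1, counted from its variable list (hypothetical tally)."""
    count = 2                     # epsilon, gamma
    count += 4 * n                # Lambda_1, Lambda_2, Delta_1, Delta_2 (diagonal)
    count += 3 * m * n            # H_b, b = 1..3m (diagonal)
    count += m * n                # tilde H_b, b = 1..m (diagonal)
    count += sym(5 * n)           # R
    count += sym(3 * n)           # N
    count += 2 * sym(2 * n)       # M, G
    count += sym(3 * n)           # Q_1
    count += 2 * sym(n)           # Q_2, Q_3
    count += 2 * sym(n)           # P_1, P_2 (symmetric, sign-indefinite)
    count += (3 * n) ** 2         # S_1 (full)
    count += n ** 2               # S_2 (full)
    return count


print([theorem1_decision_variables(2, m) for m in (1, 2, 3)])  # [187, 195, 203]
```

The growth in m is linear and small relative to the fixed cost of R, N, Q_1, and S_1, which is consistent with the remark that partitioning the activation sector does not change the augmented vector.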

Example 1

Consider the neural networks (1) with

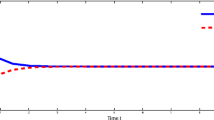

Tables 1 and 2 list the upper bounds of the time delay for the above system. Theorem 1 provides larger delay bounds than previous results, and the maximum delay bounds grow as the partitioning number m increases. This indicates that the proposed passivity conditions relax the delay constraint required for passivity. To confirm one of the results in Table 2 (\(h_{D}=0.1\), \(h_{U}=0.7174\), \(\tau_{U}=0.5070\)), a simulation result with \(x(0)=[-1,-0.5]^{T}\), \(h(t)=0.7174\sin^{2}(0.13t)\), \(\tau(t)=0.5070\sin^{2}(t)\), \(u(t)=0.1\sin(2\pi t)\), and \(\Delta A(t)=\Delta W_{0} (t)=\Delta W_{1} (t) = \Delta W_{2} (t)=0.2 \operatorname{diag} \{\sin(t), \sin(t)\}\) is given in Fig. 1. Figure 1 shows that system (1) with the above parameters is passive in the sense of Definition 1.
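As a sanity check of the simulation setup, the delay profiles used for Fig. 1 should satisfy the hypotheses of Theorem 1 with the Table 2 values: \(0\leq h(t)\leq h_{U}\), \(\dot{h}(t)\leq h_{D}\), and \(0\leq\tau(t)\leq\tau_{U}\). Since \(\dot{h}(t)=0.7174\cdot0.13\sin(0.26t)\), its maximum is 0.093262, below \(h_{D}=0.1\). The NumPy snippet below checks this on a sampled grid; it does not reproduce Fig. 1, because the system matrices appear only in the image above.

```python
import numpy as np

t = np.linspace(0.0, 200.0, 200001)

# Example 1 delays (from the text): h(t) = 0.7174 sin^2(0.13 t), tau(t) = 0.5070 sin^2(t).
h_U, h_D, tau_U = 0.7174, 0.1, 0.5070
h = h_U * np.sin(0.13 * t) ** 2
h_dot = h_U * 0.13 * np.sin(0.26 * t)     # d/dt sin^2(w t) = w sin(2 w t)
tau = tau_U * np.sin(t) ** 2

print(h.min() >= 0.0, h.max() <= h_U + 1e-12)
print(h_dot.max() <= h_D)                 # analytic maximum: 0.7174 * 0.13 = 0.093262
print(tau.min() >= 0.0, tau.max() <= tau_U + 1e-12)

# Cross-check the analytic derivative against a finite difference.
fd = np.gradient(h, t)
print(np.max(np.abs(fd - h_dot)) < 1e-4)
```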

Example 2

Consider the neural networks (29) with

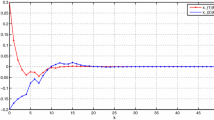

In Table 3, the upper bounds of the time delay guaranteeing passivity are compared with previous results. Table 3 shows that the maximum delay bounds guaranteeing the passivity of the above neural networks are larger than those in the other literature listed there. To confirm one of the results in Table 3 (\(h_{D}=0.5\), \(h_{U}=1.1590\)), a simulation result with \(x(0)=[1,-1]^{T}\), \(h(t)=1.1590\sin^{2}(0.43t)\), \(u(t)=1\), \(\Delta A(t)=0.01 \operatorname{diag} \{\sin(t), \sin(t)\}\), \(\Delta W_{0} (t)= 0.02 \operatorname{diag} \{\sin(t), \sin (t)\}\), and \(\Delta W_{1} (t) =0.03 \operatorname{diag} \{\sin(t), \sin (t)\}\) is shown in Fig. 2. Figure 2 confirms that the neural networks (29) with the above parameters, for \(0\leq h(t)\leq1.1590\) and \(\dot{h}(t) \leq0.5\), are passive in the sense of Definition 1.
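Similarly, the delay used for Fig. 2 satisfies the hypotheses of Theorem 2 with \(h_{U}=1.1590\) and \(h_{D}=0.5\), as a one-line computation shows:

```latex
\begin{aligned}
0 \le h(t) &= 1.1590\sin^{2}(0.43t) \le 1.1590 = h_{U},\\
\dot{h}(t) &= 1.1590\cdot 0.43\,\sin(0.86t) \le 0.49837 < 0.5 = h_{D}.
\end{aligned}
```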

Example 3

Consider the neural networks (29) with

When \(h_{D}=0.5\), the upper bounds of the time delay guaranteeing the passivity of the above neural networks obtained in [33] and [35] were 0.7230 and 1.3752, respectively. Applying Theorem 2 with m=2 yields an upper bound of 35.3121, which is much larger than those in [33] and [35]. When \(h_{D}\) is unknown, the upper bounds obtained in [33] and [35] were 0.6791 and 1.3027, respectively, whereas Theorem 2 with m=2 gives 3.9715. Moreover, the upper bounds obtained by Theorem 2 with m=1 and m=3 for different \(h_{D}\) are listed in Table 4. The results in Table 4 confirm that Theorem 2 gives larger delay bounds than the methods of [33] and [35]. To confirm one of the results in Table 4 (\(h_{D}\) unknown, \(h_{U}=4.7368\)), a simulation result with \(x(0)=[0.5,1]^{T}\), \(h(t)=4.7368|\sin(t)|\), and \(u(t)=0.1\sin(2\pi t)\) is given in Fig. 3. Figure 3 also verifies that the neural networks (29) with the above parameters, with \(h_{U}=4.7368\) and unknown \(h_{D}\), are passive in the sense of Definition 1.

5 Conclusion

In this paper, improved passivity criteria for uncertain neural networks with both discrete and distributed time-varying delays have been proposed. To derive less conservative results, a suitable Lyapunov–Krasovskii functional and decomposed conditions on the activation function divided by the state have been utilized to enlarge the feasible region of the passivity criteria. Three numerical examples have been given to show the effectiveness of the proposed methods. Future work will focus on passivity analysis and passification of various neural networks, such as fuzzy neural networks and static neural networks. Furthermore, new passivity analysis for discrete-time neural networks with time-varying delays will be investigated.

References

[1] Ensari, T., Arik, S.: Global stability of a class of neural networks with time-varying delay. IEEE Trans. Circuits Syst. II 52, 126–130 (2005)

[2] Xu, S., Lam, J., Ho, D.W.C.: Novel global robust stability criteria for interval neural networks with multiple time-varying delays. Phys. Lett. A 342, 322–330 (2005)

[3] Ma, Q., Xu, S., Zou, Y., Shi, G.: Synchronization of stochastic chaotic neural networks with reaction–diffusion terms. Nonlinear Dyn. 67, 2183–2196 (2012)

[4] Balasubramaniam, P., Vembarasan, V.: Synchronization of recurrent neural networks with mixed time-delays via output coupling with delayed feedback. Nonlinear Dyn. 70, 677–691 (2012)

[5] Faydasicok, O., Arik, S.: Robust stability analysis of a class of neural networks with discrete time delays. Neural Netw. 29–30, 52–59 (2012)

[6] Kwon, O.M., Park, J.H.: New delay-dependent robust stability criterion for uncertain neural networks with time-varying delays. Appl. Math. Comput. 205, 417–427 (2008)

[7] Xu, S., Lam, J.: A survey of linear matrix inequality techniques in stability analysis of delay systems. Int. J. Syst. Sci. 39, 1095–1113 (2008)

[8] Balasubramaniam, P., Lakshmanan, S.: Delay-range dependent stability criteria for neural networks with Markovian jumping parameters. Nonlinear Anal. Hybrid Syst. 3, 749–756 (2009)

[9] Wang, G., Cao, J., Liang, J.: Exponential stability in the mean square for stochastic neural networks with mixed time-delays and Markovian jumping parameters. Nonlinear Dyn. 57, 209–218 (2009)

[10] Balasubramaniam, P., Lakshmanan, S., Rakkiyappan, R.: Delay-interval dependent robust stability criteria for stochastic neural networks with linear fractional uncertainties. Neurocomputing 72, 3675–3682 (2009)

[11] Kwon, O.M., Park, J.H.: Improved delay-dependent stability criterion for neural networks with time-varying delays. Phys. Lett. A 373, 529–535 (2009)

[12] Tian, J., Xie, X.: New asymptotic stability criteria for neural networks with time-varying delay. Phys. Lett. A 374, 938–943 (2010)

[13] Tian, J., Zhong, S.: Improved delay-dependent stability criterion for neural networks with time-varying delay. Appl. Math. Comput. 217, 10278–10288 (2011)

[14] Li, T., Zheng, W.X., Lin, C.: Delay-slope-dependent stability results of recurrent neural networks. IEEE Trans. Neural Netw. 22, 2138–2143 (2011)

[15] Mathiyalagan, K., Sakthivel, R., Marshal Anthoni, S.: Exponential stability result for discrete-time stochastic fuzzy uncertain neural networks. Phys. Lett. A 376, 901–912 (2012)

[16] Mathiyalagan, K., Sakthivel, R., Marshal Anthoni, S.: New robust exponential stability results for discrete-time switched fuzzy neural networks with time delays. Comput. Math. Appl. 64, 2926–2938 (2012)

[17] Sakthivel, R., Mathiyalagan, K., Marshal Anthoni, S.: Design of a passification controller for uncertain fuzzy Hopfield neural networks with time-varying delays. Phys. Scr. 84, 045024 (2011)

[18] Sakthivel, R., Arunkumar, A., Mathiyalagan, K., Marshal Anthoni, S.: Robust passivity analysis of fuzzy Cohen–Grossberg BAM neural networks with time-varying delays. Appl. Math. Comput. 218, 3799–3899 (2011)

[19] Mathiyalagan, K., Sakthivel, R., Marshal Anthoni, S.: New robust passivity criteria for stochastic fuzzy BAM neural networks with time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 17, 1392–1407 (2012)

[20] Mathiyalagan, K., Sakthivel, R., Marshal Anthoni, S.: New robust passivity criteria for discrete-time genetic regulatory networks with Markovian jumping parameters. Can. J. Phys. 90, 107–118 (2012)

[21] Wu, Z.G., Shi, P., Su, H., Chu, J.: Stability and dissipativity analysis of static neural networks with time delay. IEEE Trans. Neural Netw. 23, 199–210 (2012)

[22] Chen, H.: Improved stability criteria for neural networks with two additive time-varying delay components. Circuits Syst. Signal Process. doi:10.1007/s00034-013-9555-x

[23] Chen, H., Zhu, C., Hu, P., Zhang, Y.: Delayed-state-feedback exponential stabilization for uncertain Markovian jump systems with mode-dependent time-varying state delays. Nonlinear Dyn. 69, 1023–1039 (2012)

[24] Ruan, S., Filfil, R.S.: Dynamics of a two-neuron system with discrete and distributed delays. Physica D 191, 323–342 (2004)

[25] Park, J.H.: A delay-dependent asymptotic stability criterion of cellular neural networks with time-varying discrete and distributed delays. Chaos Solitons Fractals 33, 436–442 (2007)

[26] Park, J.H.: On global stability criterion for neural networks with discrete and distributed delays. Chaos Solitons Fractals 30, 897–902 (2006)

[27] Lien, C.-H., Chung, L.-Y.: Global asymptotic stability for cellular neural networks with discrete and distributed time-varying delays. Chaos Solitons Fractals 34, 1213–1219 (2007)

[28] Park, J.H.: An analysis of global robust stability of uncertain cellular neural networks with discrete and distributed delays. Chaos Solitons Fractals 32, 800–807 (2007)

[29] Park, J.H.: Further results on passivity analysis of delayed cellular neural networks. Chaos Solitons Fractals 34, 1546–1551 (2007)

[30] Willems, J.C.: Dissipative dynamical systems. Arch. Ration. Mech. Anal. 45, 321–393 (1972)

[31] Chen, B., Li, H., Lin, C., Zhou, Q.: Passivity analysis for uncertain neural networks with discrete and distributed time-varying delays. Phys. Lett. A 373, 1242–1248 (2009)

[32] Chen, Y., Li, W., Bi, W.: Improved results on passivity analysis of uncertain neural networks with time-varying discrete and distributed delays. Neural Process. Lett. 30, 155–169 (2009)

[33] Xu, S., Zheng, W.X., Zou, Y.: Passivity analysis of neural networks with time-varying delays. IEEE Trans. Circuits Syst. II 56, 325–329 (2009)

[34] Fu, J., Zhang, H., Ma, T., Zhang, Q.: On passivity analysis for stochastic neural networks with interval time-varying delay. Neurocomputing 73, 795–801 (2010)

[35] Zeng, H.-B., He, Y., Wu, M., Xiao, S.P.: Passivity analysis for neural networks with a time-varying delay. Neurocomputing 74, 730–734 (2011)

[36] Kwon, O.M., Lee, S.M., Park, J.H.: On improved passivity criteria of uncertain neural networks with time-varying delays. Nonlinear Dyn. 67, 1261–1271 (2012)

[37] Song, Q., Cao, J.: Passivity of uncertain neural networks with both leakage delay and time-varying delay. Nonlinear Dyn. 67, 1695–1707 (2012)

[38] Li, H., Lam, J., Cheung, K.C.: Passivity criteria for continuous-time neural networks with mixed time-varying delays. Appl. Math. Comput. 218, 11062–11074 (2012)

[39] Ariba, Y., Gouaisbaut, F.: An augmented model for robust stability analysis of time-varying delay systems. Int. J. Control 82, 1616–1626 (2009)

[40] Kim, S.H., Park, P., Jeong, C.K.: Robust H∞ stabilisation of networks control systems with packet analyser. IET Control Theory Appl. 4, 1828–1837 (2010)

[41] Park, P., Ko, J.W., Jeong, C.K.: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235–238 (2011)

[42] Liu, Y., Wang, Z., Liu, X.: Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw. 19, 667–675 (2006)

[43] de Oliveira, M.C., Skelton, R.E.: Stability Tests for Constrained Linear Systems, pp. 241–257. Springer, Berlin (2001)

[44] Gu, K.: An integral inequality in the stability problem of time-delay systems. In: Proceedings of the 39th IEEE Conference on Decision and Control, December, Sydney, Australia, pp. 2805–2810 (2000)

[45] Boyd, S., El Ghaoui, L., Feron, E., Balakrishnan, V.: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

[46] Morita, M.: Associative memory with nonmonotone dynamics. Neural Netw. 6, 115–126 (1993)

Acknowledgements

This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science, and Technology (2012-0000479), and by a grant of the Korea Healthcare Technology R & D Project, Ministry of Health & Welfare, Republic of Korea (A100054).


Cite this article

Kwon, O.M., Park, M.J., Park, J.H. et al. Passivity analysis of uncertain neural networks with mixed time-varying delays. Nonlinear Dyn 73, 2175–2189 (2013). https://doi.org/10.1007/s11071-013-0932-6


DOI: https://doi.org/10.1007/s11071-013-0932-6