Abstract

The uniaxial compressive strength and static Young’s modulus are among the key design parameters typically used in geotechnical engineering projects. In this paper, three artificial intelligence techniques, namely the local linear neuro-fuzzy (LLNF) technique, artificial neural network (ANN) and the hybrid cuckoo optimization algorithm-artificial neural network (COA-ANN), were used to estimate the uniaxial compressive strength and the static Young’s modulus of limestone. For this purpose, 115 limestone samples were subjected to the tests of uniaxial compressive strength, ultrasonic velocity, and physical properties (density and porosity) tests. From the laboratory results obtained, the values of the P-wave velocity, density, porosity and dynamic Poisson’s ratio were tested as the model input parameters to determine the best input configuration for estimating the uniaxial compressive strength and the static Young’s modulus. Different models with different input combinations were practiced, and the models with the highest estimation accuracy are reported here. Performance evaluation was carried out using three criteria including coefficient of determination, variance accounted for, and normalized mean-square error. Evaluating the correlation coefficients and error criteria resulting from the three methods used demonstrates the superiority of LLNF method to ANN and COA-ANN methods. The developed ANN models display lower correlation coefficients and higher amount of error compared to the other models. However, using cuckoo optimization algorithm has led to significant improvement in accuracy and precision of estimations carried out by ANN and has improved its efficiency. Results have confirmed that the employed hybrid method outperforms in estimating untrained data (test data) compared to the LLNF and ANN methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Proper assessment of the uniaxial compressive strength (UCS) and the static Young’s modulus (Es) of rocks is an essential prerequisite for designing dams and tunnels, excavation in rock bodies, slope stability analysis, wellbore stability analysis, prediction of optimum drilling mud pressure, and evaluation of settlement of rocky foundations (Fjar et al. 2008; Alemdag et al. 2015). The strength and elastic characteristics of a rock material are heavily influenced by its type, mineralogical composition, texture and structure, grain size and shape, porosity, anisotropy, pore water, temperature and weathering (Vutukuri et al. 1974; Ali et al. 2014; Zhang 2017). The compressive strength and elastic parameters of a rock material can be directly obtained from laboratory tests. In this approach, the rock material must be subjected to uniaxial and triaxial loads until it fails under induced stresses. These tests have been standardized by the American Society of Testing and Materials (ASTM) (1984) and by the International Society for Rock Mechanics (ISRM) (2007).

Laboratory tests are fairly straightforward and reliable, but they are expensive and time-consuming. Besides, collecting high-quality samples from weathered, weak and extensively fractured rocks can be difficult (Kilic and Teymen 2008; Moradian and Behnia 2009; Yurdakul and Akdas 2013; Kahraman et al. 2017; Sharma et al. 2017). These problems can be circumvented by the use of indirect method, whereby the strength and elastic modulus of rock can be expressed as a function of simple characteristics such as lithological and mineralogical parameters, P-wave velocity, point load index, Schmidt hammer rebound number, density, and porosity (Kahraman 2001; Yasar and Erdogan 2004; Shalabi et al. 2007; Dincer et al. 2008; Mishra and Basu 2013; Ozturk and Altinpinar 2017; Ghafoori et al. 2018).

The UCS and Es values can also be estimated by empirical relationships derived via simple or nonlinear regressions. Nevertheless, these empirical relationships can yield highly diverse values even for the same type of rock (Zhang 2017). In addition, according to Meulenkamp and Grima (1999), one of the major flaws of statistical relationships is estimating average values, which perhaps can lead to overestimating the low values of UCS, and vice versa. Another disadvantage of statistical relationships is their inability to predict highly nonlinear phenomena (Baykasoglu et al. 2004). Besides regression methods, soft computing techniques including artificial neural networks (ANN), fuzzy logic systems (FLS), adaptive neuro-fuzzy interface system (ANFIS), and optimization algorithms have recently found widespread applications in geotechnical engineering. The impetus for this approach arises from the uncertain and error-prone nature of the data used in Earth sciences and the decent ability of computational intelligence techniques to deal with this type of data. A comprehensive review of recent applications of computational intelligence techniques in predicting rock strength and deformation parameters has concluded that computational intelligence can serve as a powerful tool for estimating various rock properties (Aminzadeh and Brouwer 2006; Briševac et al. 2016). One of the soft computing tools that has rapidly found extensive applications in various disciplines and especially in geotechnical engineering is the ANN (Goh 2001). The appeal of ANN can be attributed to its desirable data analysis such as the ability to model nonlinear data, high parallelism, robustness, fault tolerance, and the ability to learn from the available data and then handle vague and imprecise information by generalizing the results. Meulenkamp and Grima (1999) modeled the uniaxial strength obtained through testing 194 rock samples and found that estimations of ANN are more accurate than those of the regression method. In a study by Singh et al. (2001), UCS, tensile strength and axial point load strength were simultaneously modeled using certain basic parameters of the tested rocks. Tiryaki (2008) modeled the UCS of quartz based on its effective porosity and dry density using ANN and regression tree techniques. Yilmaz and Yuksek (2009) utilized ANN to predict the UCS and elastic modulus of gypsum based on point load strength, effective porosity, Schmidt hammer rebound number, and slake durability index. Dehghan et al. (2010) took advantage of regression analysis and ANN to model the UCS and elastic modulus of travertine using index properties (e.g., P-wave velocity, point load index, porosity, and Schmidt hammer rebound number) measured in 30 samples. In their study, the results of a feed-forward ANN were found to be far more reliable than those of the regression method. Yagiz et al. (2012) employed an ANN and nonlinear regression to develop a model for estimating the UCS and elastic modulus of carbonate rocks based on their characteristics. Yesiloglu-Gultekin et al. (2013a) used a variety of methods including multiple regression, an ANN and an adaptive neuro-fuzzy system to develop a number of models for estimating the UCS of six types of granite from different regions of Turkey. In another study by Yesiloglu-Gultekin et al. (2013b), the UCS of granite blocks was modeled using regression methods and the adaptive neuro-fuzzy system. In this study, rock mineralogy was assumed as a strength control factor. The results of the study showed that both the nonlinear regression method and the adaptive neuro-fuzzy system had an acceptable UCS estimation capability. In a study by Majidi and Rezaei (2013), an ANN and multivariate regression analysis were used to model the UCS of rocks surrounding a roadway. In their model, rock type, Schmidt hardness, density, and porosity were used as model input, and UCS was assumed as model output. The results of this study showed the superiority of an ANN over multivariate regression analysis. Ali et al. (2014) used various methods including multivariate regression, fuzzy inference, and an ANN to model the UCS of banded amphibolite rocks based on micro-fabric characteristics such as grain size, form factor, and quartz content. Torabi-Kaveh et al. (2015) used the results of the tests conducted on 105 samples of Asmari limestone collected from two different dam sites to model and predict the UCS and elastic modulus of carbonate rocks and to evaluate the ability of linear regression, nonlinear regression and an ANN in this respect. Ultimately, they concluded that ANN-based models provide far better estimations than regression methods. Tonnizam Mohamad et al. (2015) employed the ANN and the hybrid method of particle swarm optimization with ANN (PSO-ANN) techniques in order to predict the UCS of soft rocks and concluded that the optimization algorithm could improve the performance of ANN. Jahed Armaghani et al. (2016) employed various modeling methods such as simple, linear, nonlinear regression, a standalone ANN and integrated imperialist competitive algorithms with ANN (ICA-ANN) to develop a number of models for predicting the UCS of sandstone. Their results showed the superiority of the ICA-ANN hybrid technique over the other tested methods. The literature comprises numerous studies that have focused on predicting the uniaxial compressive strength of rocks using artificial intelligence methods (Table 1).

As it was explained, previous studies have concentrated on estimating the UCS through regression, ANN, and ANFIS methods and have less focused on models for predicting the elastic modulus of rocks. The present study, however, provides new models for predicting the UCS and static Young’s modulus of limestone based on sonic velocity, density, porosity and Poisson’s ratio. For this purpose, local linear neuro-fuzzy and cuckoo optimization algorithm-ANN methods were employed.

Methods

Neuro-fuzzy system

Neuro-fuzzy computing is a hybrid modeling method. Fuzzy systems are often designed by employing a group of experts to formulate expert knowledge into fuzzy rules. One way of creating fuzzy rules is to derive them from the available data. Combining the learning capabilities of ANNs (Jang et al. 1997) with the knowledge representation capabilities of fuzzy logic has led to the advent of neuro-fuzzy systems capable of deriving fuzzy rules from training datasets. Typically, neuro-fuzzy systems are designed with an architectural feature similar to that of ANNs and are trained using similar training methods.

LLNF models may be given a mathematical description. A typical LLNF model splits a complex modeling problem into a large number of smaller and simpler sub-problems that can be individually solved by a linear model. The LLNF model is a neuro-fuzzy network with one hidden layer and a linear neuron in the output layer. Each neuron consists of a local linear model (LLM) and a validity function ϕi (u), specifying a range in which LLM is valid. The output of each LLM is given by:

where \( \underline{u} = \left[ {u_{1}^{{}} u_{2} \cdots u_{l} } \right]^{\text{T}} \) is the input vector, l is the number of input dimension, and wil and yi are the LLM parameter and the output for the ith neuron, respectively. The output of the model with K neurons is given by:

where \( \varphi_{i} (\underline{u} ) \) is the validity function of the \( i_{\text{th}} \) neuron for the input vector u. The model output is, therefore, the weighted sum of LLM outputs. The validity function of each neuron is a value within the interval [0, 1] and represents the validity of the solution of the LLM related to that neuron. Being a multiplier of the LLM output, this value can be used to adjust the contribution of each neuron to the final output. Validity functions are typically formulated as normalized Gaussian functions. In the case of orthogonal Gaussians, the validity function is expressed as:

where μi can be obtained from the following relationship:

In the above relationship, c is the center, and σij is the standard deviation of the Gaussian functions.

Local optimization of the parameters of each LLM can be easily done with the help of the least squares method, that is, by creating a weight matrix and a regression matrix from the input and then applying the least squares method to the matrices. The regression matrix \( X \) for \( N \) measured data samples is expressed as:

where:

The local estimates of the parameters and the output of each model are calculated by Eq. 7:

where \( \alpha \) is a regularization parameter for avoiding any near singularity of the matrix \( X^{T} X \).

This model can be optimized by several different methods, but simplicity and clarity of tree-based methods can make them particularly suitable for this purpose (Nelles 2001).

Local Linear Model Tree (LOLIMOT) Learning Algorithm

A local linear model tree (LOLIMOT) is an incremental learning method based on a partition of input space (Nelles 2001). In each iteration of this algorithm, a new rule or a local linear model is added to the model, the validity function corresponding to the actual partitioning is computed, and the corresponding rules are optimized using the locally weighted least squares method. In this way, the algorithm can prevent time-consuming nonlinear optimizations. A LOLIMOT algorithm consists of five steps, briefly explained below (Nelles 2001):

-

Step 1 Create an initial model by considering the entire input space as one LLM while assuming that K = 1 and φi(u) = 1 (K = the number of LLMs, φi(u) = the validity function).

-

Step 2 Find the worst LLM: Calculate the local loss function to find the worst-performing LLM using the root mean-square error (RMSR) measure.

-

Step 3 Check all the divisions of LLM: Select the worst LLM for further improvement, and split the hyper-rectangle of this new LLM into two halves. Test all the divisions in all the dimensions. For each division, follow the below steps for dimensions 1 to l:

-

a)

Create multidimensional membership degrees for both hyper-rectangles.

-

b)

Recreate all validity functions.

-

c)

Locally estimate the parameters of both new LLMs.

-

d)

Calculate the error function of the current model.

-

Step 4 Select and apply the best division by selecting the best alternative among the l dimensions checked in Step 3 (i.e., the alternative leading to greatest error reduction) and applying the validity function formed in Step 3-a and the LLMs optimized in Step 3-c to the model. Increase the number of LLMs from K to K + 1.

-

Step 5 Test the convergence. Stop if the termination condition is met; otherwise, go to Step 2 and iterate the operation.

Artificial Neural Network

Different ANN models are distinguished by their three characteristics, namely transfer function, network architecture and learning rules. Every neural network works in three steps including learning, generalization, and operation (Haykin 1999). In the first step, a neural network categorizes and learns the patterns in the input data. In the second step, the neural network improves its ability to find acceptable responses to the inputs that have not been existing in the leaning set. In the third step, the neural network fulfills the purpose for which it has been designed, that is, estimation of new data. Multi-layer perceptron (MLP) is among the most popular and widely used variants of artificial neural networks. This network consists of an input layer, an output layer, and one or more hidden layers between these two. The task of hidden layers is to enhance the ability of the network to model complex functions. The optimal number of hidden layers can be determined by trial and error. Each layer of this network contains multiple neurons, the number of which varies depending on the application. Each neuron receives one or more inputs, which it processes using a transfer function (linear, sigmoid, logistic-sigmoid) to produce an output signal. The signal is then sent to subsequent neurons. The mentioned processing in a typical neuron consists of multiplying each input by a corresponding weight, summing the answer with a value known as bias, and using the result as the input of the transfer function to produce the output signal. The most significant step in developing a neural network is learning (also known as training). The neural network can be trained through several methods, the most notable of which is the error back propagation algorithm. The error back propagation algorithm creates a set of outputs using randomly selected weights, determines the deviation of the predicted outputs from the real values, and then distributes the error among the weights using a back-forward approach. This weight modification process continues until the error becomes smaller than the desired value, and the network reaches a stable condition (Haykin 1999).

Cuckoo Optimization Algorithm

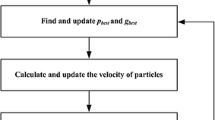

The cuckoo optimization algorithm (COA) is an optimization method inspired by the life of cuckoo birds. The COA was first introduced by Yang and Deb (2010), but it was further developed by Rajabioun (2011). This algorithm is one of the most powerful optimization techniques with particularly good ability to determine global optima. Like other evolutionary algorithms, the COA starts its operation with an initial population (here, a population of cuckoos). This population of cuckoos produces a number of eggs laid in the nest of other bird species, known as hosts. Those cuckoo eggs that are more similar to the host eggs have a better chance of hatching and growing to mature cuckoos; otherwise, they will be identified and destroyed by the host. The number of eggs that grow to maturity represents the suitability of the host nests in a habitat. The more eggs survive in a given habitat, the greater profit will be gained from that habitat. Thus, the term that the COA seeks to optimize is the location where the greatest numbers of eggs can survive. In other words, cuckoos seek the habitat most appropriate for egg-laying to maximize their offspring’s rate of survival. The cuckoo eggs that survive grow to maturity and form a new population of cuckoos, which will migrate toward the best (or most profitable) habitat among all alternatives, but they will not be able to move very far away from their current habitat. The maximum migration distance is limited by an egg-laying radius. This egg-laying radius is computed based on the number of eggs that each cuckoo lays and the bird’s distance from the best-found habitat. Consequently, new cuckoos start to randomly lay eggs in the nest within the computed egg-laying radius, and this process is repeated until the best egg-laying location (i.e., the habitat with the highest profits) is found. This location is the place to which the majority of cuckoos converge.

To solve an optimization problem with the COA, the problem variables must be organized into a matrix called habitat. For an Nvar-dimensional optimization problem, the habitat is an array of \( 1 \times N_{\text{var}} \) representing the current position of cuckoos defined as follows:

The profit of the current habitat is obtained by calculating the profit function fp for that habitat:

The COA is a profit maximization algorithm. Therefore, minimization problems are analyzed using the following function:

An optimization procedure starts by creating a habitat matrix of the size \( N_{\text{opt}} \times N_{\text{var}} \) and then assigning a random number of eggs to each of these habitats. In nature, a cuckoo lays from 5 to 20 eggs. These numbers are, therefore, typically used as the lower and upper bounds of egg-laying per cuckoo per iteration. As mentioned above, cuckoos lay their eggs within a maximum radius from their current position, known as egg-laying radius (ELR). In an optimization problem, every variable has an upper limit (Varhi) and a lower limit (Varlow), which define ELR as follows:

where α is a variable for adjusting the maximum value of ELR. Every cuckoo randomly lays some eggs in the host nests located within its ELR. Once all the cuckoos have laid their eggs, some of the eggs that are less similar to host eggs are detected and destroyed by the host bird. In mathematical terms, a percent of cuckoo eggs (usually 10%) with the lowest profit function value are discarded, but other eggs hatch and grow to form a new population. Figure 1 shows the flowchart of the COA algorithm.

Performance Evaluation

The performance of the developed models was evaluated by three criteria including coefficient of determination (R2), normalized mean-square error (NMSE), and variance accounted for (VAF). These criteria are defined as follows:

In the above relationships, \( N \) is the number of samples, \( P_{i} \) and \( M_{i} \) are the predicted and measured output values, \( \overline{P} \) and \( \overline{M} \) are the means of the predicted and measured output values, and \( {\text{Var}} \) is the variance. In an ideal model, R2 = 1, VAF = 100, and NMSE = 0.

Rock Characteristics and Testing Procedure

The samples examined in this study were the cylindrical core samples of limestone collected from nine exploratory boreholes drilled through the limestone of the Dalan Formation. These boreholes are located near the hydropower cavern of the Roudbar pumped storage power plant project, Lorestan province, Iran (Figs. 2 and 3).

Samples were prepared according to the method suggested by ISRM (2007). During the sampling practice, caution was taken to get rock samples without layering to avoid any anisotropic effect in measurements. Ultimately, 115 high-quality samples were prepared. The prepared samples had diameters ranging from 54 to 82 mm and the length-to-diameter (L/D) ratios between 2 and 3. Although the sample diameters range from 54 to 82 mm, they comply with the suggestion of Fairhurst and Hudson (1999) that for UCS, test samples should have at least 54 mm diameter and L/D ratio between 2 and 3. Samples were subjected to a non-destructive ultrasonic test, and their P-wave velocity was measured using a pundit tool. The P and S-wave velocities were determined by measuring the travel time and the distance between the transmitter and the receiver. In reality, wave travel time is the sum of the real travel time (inside the sample) and the time delay due to the presence of electronic components, transducers, and bonds. Thus, prior to measuring the travel time, the time delays in the P and S-wave measurements were separately determined separately using a standard sample or a face-to-face method (Starzec 1999). Figure 4 shows a schematic diagram of the ultrasonic equipment. To improve the signal-to-noise ratio, a constant stress of 10 Newton per square centimeter was applied along the axial direction (ISRM 2007). In addition, an ultrasonic couplant was used to improve the contact between the sample and the transducer.

After ultrasonic testing, the porosity and density of all the 115 samples were measured in the laboratory. Subsequently, all the 115 samples were subjected to UCS tests and their static Young’s modulus were determined. The failure modes from the UCS tests showed predominantly axial splitting mode and in limited number of cases shear failure mode. Figure 5 shows examples of failure modes observed in some of the limestone samples loaded in the UCS test. The basic statistics of the results obtained from these tests are presented in Table 2. The results of laboratory experiments span a range of variations for either mechanical or physical parameters. This can be attributed to natural variations including existence of microstructures in samples collected from deep boreholes drilled from surface toward position of designed underground structures in the Dalan formation. As the significant correlation between independent variables can undermine the multivariate modeling efficiency, multicollinearity between input parameters including P-wave velocity, density, porosity and the dynamic Poisson’s ratio was checked prior to the main analysis. In this study, variance inflation factor (VIF) was used to investigate the presence of collinearity between independent variables; VIF values greater than 10 were attributed to the existence of multicollinearity (Gunset 1983). According to Table 2, it can be concluded that no multicollinearity existed between input variables.

Model Implementation and Results

LLNF

By developing a MATLAB code, the LLNF model was implemented to estimate the UCS parameters and the static Young’s modulus. Different combinations of P-wave velocity, Poisson’s ratio, density, and porosity were tested as the model input to estimate the UCS and static Young’s modulus of the limestone rock. The ordinary least squares (OLS) (Billings et al. 1998) method was employed to achieve the best model for every combination of inputs. The linear neuro-fuzzy model was trained by the LOLIMOT. As mentioned above, the LOLIMOT splits the input space in the axis-orthogonal direction into a number of hyper-rectangles (here, the division ratio was ½), and fits a Gaussian validity function to the resulting hyper-rectangles. The center of these Gaussian validity functions is the center of the hyper-rectangles, and their standard deviation is one-third of the hyper-rectangle extension (\( \alpha = \frac{1}{3} \)). To develop and test the models, 70% of the data was randomly selected and assigned to the learning subset to be used for network training, and 30% was assigned to the testing subset to be reserved for performance evaluation. The UCS performance of the model was analyzed by combinations of one to four parameters used as model input. In this part of the study, four analyses with four configurations were performed for estimating the UCS. As mentioned above, the best input for each combination was determined by the OLS algorithm. The impact of the number of neurons on the results was evaluated by repeating the analyses with different numbers of neurons. The results of the developed UCS estimation models are presented in Table 3.

The same process was repeated for the Es using different combinations of the inputs. The results of the best models obtained for this purpose are presented in Table 4.

According to Tables 3 and 4, the developed models showed reasonable accuracy in estimating the UCS and Es of the limestone, but the UCS estimation models outperformed the models dedicated to estimating the Es. This difference might be due to the weaker correlation between this parameter and the input variables.

As further observed, the calculated static indexes for the training and testing subsets proved to be so close together, denoting the appropriacy of the modeling process and the resulting models. Moreover, the best VAF and NMSE values for the UCS estimation were observed in the analysis A1, where the variables \( \rho ,\upsilon ,V_{p} ,n \) were used as model inputs. Also, the best predicting model for Es has resulted from A2 examination in which \( \rho ,\,\upsilon \,{\text{and}}\,V_{p} \) are introduced as input parameters. The weakest model in this respect was found to be the one with only one input in the analysis A4. It is notable that, except for the analysis A4, all the estimations of the UCS and Es had the errors of less than 3 and 4%, respectively, which reflects the good modeling capability of the employed method. Figures 6 and 7 depict the best results emerging from LLNF method in the estimation of UCS and Es that correspond to A1 and A2 analyses, respectively. As it can be seen, all the points, including those pertaining to the trained and untrained cases, are almost completely located within a narrow range around the angle bisector.

ANN

A MATLAB code was used for performing ANN mode. A feed-forward neural network with back propagation (BP) algorithm was utilized for this research (Haykin 1999). The tangent sigmoid (tansig) was utilized between the input layer and hidden layer, whereas the linear transfer function (purelin) was used between the hidden layer and output layer. Learning of ANN model by means of BP algorithm is an iterative optimization process in which the error between the predicted and measured data, MSE (mean-squared error, equation below) is minimized by adjusting the weight and bias appropriately (Haykin 1999):

where \( P_{i} \) and \( M_{i} \) are the predicted and measured output, respectively, and N is the total data number. The learning process is repeated until the error between the predicted and measured data meets the threshold error.

There are many variations of BP algorithms for learning neural network. The Levenberg–Marquardt (LM) algorithm with back propagation was employed as the ANN models’ learning algorithm because of its superior efficiency in learning compared to other gradient descent methods (Haykin 1999). To avoid over-fitting, input and output parameters were normalized between uniform range of [− 1, 1] according to the following equation:

where \( x_{\text{norm}}^{{}} \) is the normalized value of the input, \( x \) is the real value of the input, \( x_{\hbox{min} } \) is the minimum value, and \( x_{\hbox{max} } \) is the maximum value of the input. Once the network was constructed, different combinations of parameters were used as input parameters in modeling. The optimal results from the neural network model have been presented in Tables 5 and 6. As can be seen, the best result for both the UCS and Es parameters estimate has been achieved from A1 analysis where the combination of the \( \rho ,^{{}} \upsilon ,^{{}} V_{p} ,^{{}} {\text{and}}_{{}} n \) parameters have been used. Also, the weakest model for both the UCS and Es parameters is the model which is based on one input only. However, examining the correlation coefficient and error value shows that the models developed by the neural network have reasonable accuracy in estimating the values of the target parameters. Figures 8 and 9 (analysis A2) show that, for most cases in the learning and testing subsets, the UCS and the Es of limestone were well estimated by the ANN models. Comparison between the results of this section with those of LLNF method makes it clear that precision of ANN method is less than LLNF method.

COA-ANN

In this part of the study, a cuckoo search algorithm was used to optimize the input space for an ANN. The ANN used in this study is a multi-layered perceptron whose weights and biases are optimized by the COA in the learning process. Typically, an ANN suffers from certain flaws, such as low speed and convergence to local minima, which can be remedied by the proper use of the COA. Using a MATLAB code, a COA-ANN hybrid model was implemented to estimate the UCS and the Es. The model inputs and their combination were coded as described in the previous section. The COA-specific parameters used in the modeling included the maximum number of cuckoos (20), the maximum number of eggs (4), and the minimum number of eggs (2). The ELR was initially considered to be 2, but it declined at the rate of 0.99. This decline rate was chosen to limit the egg-laying space as the algorithm converged to a specific area and, thus, to determine the global optimum point more accurately.

The results of the COA-ANN hybrid models with the best estimations of the UCS and the Es are presented in Tables 7 and 8.

The obtained statistics point to the good estimation ability of the hybrid method was used in this study. The best estimation of the UCS was observed in the analysis A1, where the three variables \( \rho ,\;\upsilon ,\;V_{p} \;{\text{and}}\;n \) served as model inputs. Also, the best estimation of Es is related to the combination of \( \rho ,^{{}} \upsilon ,^{{}} {\text{and}}_{{}} V_{p} \) parameters in A2 analysis. The worst estimations, however, were observed in the model developed by only one parameter. Figures 10 and 11 display the results of A1 and A2 analysis, respectively. The resulting diagrams clearly demonstrate developed models are well-trained and are able to predict untrained data. Comparison between the results of COA-ANN and ANN models reveals that the COA optimization algorithm has been able to improve ANN performance. The coefficient of variation for COA-ANN model has increased noticeably and its error is decreased in comparison with ANN demonstrating the applicability of COA-ANN used in this research.

Conclusions

Based on the results obtained in this study, it can be concluded that the proposed local linear neuro-fuzzy and hybrid cuckoo optimization algorithm-artificial neural network models can estimate the uniaxial compressive strength and the static Young’s modulus of limestone with great precision and are, therefore, highly practical for this purpose. It was revealed that, among the tested parameters, density, P-wave velocity, and Poisson’s ratio were the most important variables for estimating the uniaxial compressive strength and the static Young’s modulus of limestone. Moreover, the model developed based on a combination of these three parameters showed a superior estimation performance. The comparison between the three methods showed that the local linear neuro-fuzzy model presented a generally better estimation capability than artificial neural network and hybrid cuckoo optimization algorithm-artificial neural network, but the last method resulted in better estimations with untrained data. The correlation coefficients and the amount of errors associated with different models show that artificial neural network holds the lowest precision among the three methods. However, by applying cuckoo optimization algorithm, its performance has been improved. Consequently, both the local linear neuro-fuzzy and the cuckoo optimization algorithm-artificial neural network models proved to be adequately capable of solving nonlinear problems, and consequently they could also be utilized to solve other complex engineering problems.

References

Alemdag, S., Gurocak, Z., & Gokceoglu, C. (2015). A simple regression-based approach to estimate deformation modulus of rock masses. Journal of African Earth Sciences, 110, 75–80.

Ali, E., Guang, W., & Ibrahim, A. (2014). Empirical relations between compressive strength and microfabric properties of amphibolites using multivariate regression, fuzzy inference and neural networks: A comparative study. Engineering Geology, 183(9), 230–240.

Aminzadeh, F., & Brouwer, F. (2006). Integrating neural network and fuzzy logic for improved reservoir property prediction and prospect ranking. SEG Technical expanded abstracts, doi, 10(1190/1), 2369863.

ASTM (American Society for Testing and Materials). (1984). Annual book of ASTM standards 4.08. ASTM, Philadelphia, PA.

Baykasoglu, A., Dereli, T., & Tanis, S. (2004). Prediction of cement strength using soft computing techniques. Cement and Concrete Research, 34(11), 2083–2090.

Billings, S., Korenberg, M., & Chen, S. (1998). Identification of nonlinear output-affine systems using an orthogonal least-squares algorithm. International Journal of System Science, 19(8), 1559–1568.

Briševac, Z., Hrženjak, P., & Buljan, R. (2016). Models for estimating uniaxial compressive strength and elastic modulus. Građevinar, 68(1), 19–28. https://doi.org/10.14256/JCE.1431.2015.

Ceryan, N., Okkan, U., & Kesimal, A. (2012). Prediction of unconfined compressive strength of carbonate rocks using artificial neural networks. Environmental Earth Sciences, 68(3), 807–819.

Dehghan, S., Sattari, G. H., Chehreh, C. S., & Aliabadi, M. A. (2010). Prediction of unconfined compressive strength and modulus of elasticity for Travertine samples using regression and artificial neural networks. Mining Science and Technology (China), 20(1), 41–46. https://doi.org/10.1016/S1674-5264(09)60158-7.

Dincer, I., Acar, A., & Ural, S. (2008). Estimation of strength and deformation properties of quaternary Caliche deposits. Bulletin of Engineering Geology and the Environment, 67(3), 353–366.

Fairhurst, C. E., & Hudson, J. A. (1999). Draft ISRM suggested method for the complete stress-strain curve for intact rock in uniaxial compression. International Journal of Rock Mechanics and Mining Sciences, 36(3), 279–289.

Fjar, E., Holt, R. M., Raaen, A. M., Risnes, R., & Horsrud, P. (2008). Petroleum related rock mechanics (Vol. 53, pp. 325–327). New York: Elsevier.

Garrett, J. H. (1994). Where and why artificial neural networks are applicable in civil engineering. Journal of Computing in Civil Engineering, 8(2), 129–130.

Ghafoori, M., Rastegarnia, A., & Lashkaripour, G. R. (2018). Estimation of static parameters based on dynamical and physical properties in limestone rocks. Journal of African Earth Sciences, 137, 22–31.

Goh, A. T. C. (2001). Neural network applications in geotechnical engineering. Scientia Iranica, 8(1), 1–9.

Gunset, R. F. (1983). Regression analysis with multicollinear predictor variables: definition, detection, and effects. Communications in Statistics, 12(19), 2217–2260.

Haykin, S. (1999). Neural networks (2nd ed.). Englewood Cliffs, NJ: Prentice-Hall.

ISRM. (2007). The complete ISRM suggested methods for rock characterization, testing and monitoring: 1974-2006. In R. Ulusay & J. A. Hudson (Eds.), Suggested methods prepared by the commission on testing methods. Ankara: International Society for Rock Mechanics, ISRM Turkish National Group.

Jahed Armaghani, D., Amin, M. F. M., Yagiz, S., Shirani Faradonbeh, R., & Asnida Abdullah, R. (2016). Prediction of the uniaxial compressive strength of sandstone using various modeling techniques. International Journal of Rock Mechanics and Mining Sciences, 85, 174–186.

Jang, J., Sun, C., & Mizutani, E. (1997). Fuzzy-neuro and soft computing: a computational approach to learning and machine intelligence. Upper saddle river, New Jersey: Prentice hall.

Kahraman, S. (2001). Evaluation of simple methods for assessing the uniaxial compressive strength of rock. International Journal of Rock Mechanics and Mining Sciences, 38(7), 981–994.

Kahraman, S., Fener, M., & Gunaydin, O. (2017). Estimating the uniaxial compressive strength of pyroclastic rocks from the slake durability index. Bulletin of Engineering Geology and the Environment, 76(3), 1107–1115.

Kilic, A., & Teymen, A. (2008). Determination of mechanical properties of rocks using simple methods. Bulletin of Engineering Geology and the Environment. https://doi.org/10.1007/s10064-008-0128-3.

Majdi, A., & Rezaei, M. (2013). Prediction of unconfined compressive strength of rock surrounding a roadway using artificial neural network. Neural Computing and Applications, 23(2), 381–389.

Maji, V. B., & Sitharam, T. G. (2008). Prediction of elastic modulus of jointed rock mass using artificial neural network. Geotechnical and Geological Engineering, 26(4), 443–452.

Meulenkamp, F., & Grima, M. A. (1999). Application of neural network for the prediction of the unconfined compressive strength (UCS) from Equotip hardness. International Journal of Rock Mechanics and Mining Sciences, 36(1), 29–33.

Mishra, D. A., & Basu, A. (2013). Estimation of uniaxial compressive strength of rock materials by index tests using regression analysis and fuzzy inference system. Engineering Geology, 160(27), 54–68.

Monjezi, M., Khoshalan, H. A., & Razifard, M. (2012). A neuro-genetic network for predicting uniaxial compressive strength of rocks. Geotechnical and Geological Engineering, 30(4), 1053–1062.

Moradian, Z. A., & Behnia, M. (2009). Predicting the uniaxial compressive strength and static Young’s modulus of intact sedimentary rocks using the ultrasonic test. International Journal of Geomechanics, 9(1), 14–19.

Nelles, O. (2001). Nonlinear system identification. Berlin: Springer.

Ozturk, H., & Altinpinar, M. (2017). The estimation of uniaxial compressive strength conversion factor of trona and interbeds from point load tests and numerical modeling. Journal of African Earth Sciences. https://doi.org/10.1016/j.jafrearsci.2017.04.015.

Rajabioun, R. (2011). Cuckoo optimization algorithm. Applied Soft Computing, 11(8), 5508–5518.

Shalabi, F., Cording, E. J., & Al-Hattamleh, O. H. (2007). Estimation of rock engineering properties using hardness tests. Engineering Geology, 90(3–4), 138–147.

Sharma, L. K., Vishal, V., & Singh, T. N. (2017). Developing novel models using neural networks and fuzzy systems for the prediction of strength of rocks from key geomechanical properties. Journal of Measurement, 102, 158–169.

Singh, V. K., Singh, D., & Singh, T. N. (2001). Prediction of strength properties of some schistose rocks from petrographic properties using artificial neural networks. International Journal of Rock Mechanics and Mining Sciences, 38(2), 269–284.

Starzec, P. (1999). Dynamic elastic properties of crystalline rocks from south-west Sweden. International Journal of Rock Mechanics and Mining Sciences, 36(2), 265–272.

Tiryaki, B. (2008). Predicting intact rock strength for mechanical excavation using multivariate statistics, artificial neural networks, and regression trees. Engineering Geology, 99(1–2), 51–60.

Tonnizam Mohamad, E., Jahed Armaghani, D., Momeni, E., & Alavi Nezhad Khalil Abad, S. V. (2015). Prediction of the unconfined compressive strength of soft rocks: a PSO- based ANN approach. Bulletin of Engineering Geology and the Environment, 74(3), 745–757.

Torabi-Kaveh, M., Naseri, F., Saneie, S., & Sarshari, B. (2015). Application of artificial neural networks and multivariate statistics to predict UCS and E using physical properties of Asmari limestones. Arabian Journal of Geosciences, 8(5), 2889–2897.

Vutukuri, V. S., Lama, R. D., & Saluja, S. S. (1974). Handbook on mechanical properties of rocks-testing techniques and results—volume I (pp. 32–61). Germany: Trans Tech Publications.

Yagiz, S., Sezer, E. A., & Gokceoglu, C. (2012). Artificial neural networks and nonlinear regression techniques to assess the influence of slake durability cycles on the prediction of uniaxial compressive strength and modulus of elasticity for carbonate rocks. International Journal for Numerical and Analytical Methods in Geomechanics, 36, 1636–1650.

Yang, X.-S., & Deb, S. (2010). Engineering optimization by cuckoo search. International Journal of Mathematical Modelling and Numerical Optimisation, 1(14), 330–343.

Yasar, E., & Erdogan, Y. (2004). Estimation of rock physico mechanical properties using hardness methods. Engineering Geology, 71, 281–288.

Yesiloglu-Gultekin, N., Gokceoglu, C., & Sezer, E. A. (2013a). Prediction of uniaxial compressive strength of granitic rocks by various nonlinear tools and comparison of their performances. International Journal of Rock Mechanics and Mining Sciences, 62, 113–122.

Yesiloglu-Gultekin, N., Sezer, E. A., Gokceoglu, C., & Bayhan, H. (2013b). An application of adaptive neuro fuzzy inference system for estimating the uniaxial compressive strength of certain granitic rocks from their mineral contents. Expert System with Applications, 40(3), 921–928.

Yilmaz, L., & Yuksek, G. (2009). Prediction of the strength and elasticity modulus of gypsum using multiple regression, ANN, and ANFIS models. International Journal of Rock Mechanics and Mining Sciences, 46(4), 803–810.

Yurdakul, M., & Akdas, H. (2013). Modeling uniaxial compressive strength of building stones using non-destructive test results as neural networks input parameters. Construction and Building Materials, 47, 1010–1019.

Zhang, L. (2017). Engineering properties of rocks (2nd ed., pp. 251–271). New York: Elsevier.

Acknowledgments

We would like to thank two anonymous reviewers and the Editor-in-Chief of Natural Resources Research, Dr. John Carranza, for their valuable and constructive comments that greatly helped to improve the quality of this paper.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Mokhtari, M., Behnia, M. Comparison of LLNF, ANN, and COA-ANN Techniques in Modeling the Uniaxial Compressive Strength and Static Young’s Modulus of Limestone of the Dalan Formation. Nat Resour Res 28, 223–239 (2019). https://doi.org/10.1007/s11053-018-9383-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11053-018-9383-6