Abstract

Uniaxial compressive strength (UCS) is considered one of the most important parameters in the design of rock structures. Determining this parameter requires the preparation of rock samples, which is costly and time consuming. Moreover, discrepancies between laboratory test results are often observed. To overcome these drawbacks of the traditional method of measuring UCS, this paper develops predictive models based on a neuro-genetic approach and on multivariable regression analysis to predict the compressive strength of different rock types. The coefficient of determination (R²) and the mean square error (MSE) were calculated to compare the efficiency of the models. The neuro-genetic model was found to be significantly more accurate than the regression model: R² and MSE were 95.89 % and 0.0045 for the neuro-genetic model, and 77.4 % and 1.61 for the regression model. According to a sensitivity analysis of the neuro-genetic model, the Schmidt rebound number is the most influential parameter in predicting UCS.

1 Introduction

The uniaxial compressive strength (UCS) of rocks is a key parameter in the design of surface and underground rock structures (Bieniawski 1974). It is determined directly in the laboratory according to the methods of the American Society for Testing and Materials (ASTM) and the International Society for Rock Mechanics (ISRM). Direct determination requires prepared rock samples, which is expensive and time consuming, and for some weak rocks sampling is almost impossible. To overcome these problems, indirect methods have been developed, for example empirical models and models based on artificial intelligence (AI). In empirical models, predictive functions are usually derived by traditional regression analysis from simple tests such as the Schmidt rebound number, point load test, impact strength, and sound velocity (Fener et al. 2005; Singh et al. 2001). Many researchers have used such models in the past. However, their accuracy is rather low, which may be attributed to linearity assumptions (Singh et al. 1983; Haramy and DeMarco 1985; O’Rourke 1989; Garret 1994; Huang and Wanstedt 1998). Given these shortcomings of the empirical methods, artificial neural network (ANN) models, a branch of artificial intelligence, may be used instead to predict UCS. Many investigators have applied ANNs in rock mechanics and mining, and particularly to predict UCS (Nie and Zhang 1994; Huang 1999; Cevik et al. 2011; Atici 2011).

In this study, a new ANN model was developed to predict UCS. The distinguishing feature of this so-called neuro-genetic model is that the network parameters (number of neurons in the hidden layers, learning rate, and momentum) are optimized with a genetic algorithm (GA). It should be noted that this is the first application of this kind for predicting UCS.

2 UCS of Rocks: Measurement and Prediction Methods

UCS is the highest stress that a rock specimen can carry when a load is applied axially to the ends of a cylindrical specimen. The UCS test allows rocks to be compared and gives some indication of rock behavior under more complex stress systems (Bell 2005). As mentioned above, the ASTM and ISRM methods are direct laboratory methods for measuring this parameter. Such measurements can be time consuming and expensive and require carefully prepared rock samples. Therefore, researchers have recently used several indirect ways to predict UCS, including the point load test, Schmidt hammer test, shore hardness test, porosity, and sonic velocity. A large number of empirical relationships between the point load index and UCS have been published. Broch and Franklin (1972) reported that UCS is about 24 times the point load index. Bieniawski (1975) proposed a factor of approximately 23, and ISRM (1985) suggested a range of 20–25. Kahraman (2001) presented a comprehensive list of such relationships in a laboratory study. Yilmaz (2009) applied the core strangle test (CST) instead of the point load test to estimate UCS for different rock types and found CST to be more efficient than the point load test for this purpose. Kayabali and Selcuk (2010) proposed a new and practical index test, the nail penetration test (NPT), to estimate the UCS of intact rocks as an alternative to the point load test (PLT). Further studies have been carried out by Russell and Wood (2009) and by Basu and Kamran (2010).
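Each of the point-load relationships quoted above is a one-line linear rule, UCS ≈ k × I_s. The sketch below tabulates the estimate that each cited factor gives. It assumes the point load index is already in MPa (and, as is usual for such factors, size-corrected). The function and dictionary names are hypothetical.

```python
# Factors k in UCS ≈ k * Is quoted in the text, stored as (low, high);
# a single published value is repeated as both bounds.
POINT_LOAD_FACTORS = {
    "Broch and Franklin (1972)": (24.0, 24.0),
    "Bieniawski (1975)": (23.0, 23.0),
    "ISRM (1985)": (20.0, 25.0),
}


def ucs_from_point_load(is_mpa):
    """Return {source: (low, high)} UCS estimates in MPa for a point load index in MPa."""
    if is_mpa <= 0:
        raise ValueError("point load index must be positive")
    return {src: (lo * is_mpa, hi * is_mpa) for src, (lo, hi) in POINT_LOAD_FACTORS.items()}


for source, (low, high) in ucs_from_point_load(4.0).items():
    print(f"{source}: {low:.0f} to {high:.0f} MPa")
```

For I_s = 4 MPa the three factors give 96 MPa, 92 MPa, and 80 to 100 MPa. The spread shows how much the choice of factor alone changes the estimate.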

The shore hardness test has also been used to evaluate and compare the hardness of rocks. These studies showed that the relationships between shore hardness and UCS are weaker than those obtained with the Schmidt hammer (Yasar and Erdogan 2004; Altindag and Guney 2005). Another method for estimating UCS is the block punch index (BPI) test. However, this test can only be performed on very thin specimens, which is considered a deficiency of the method (Ulusay et al. 2001).

Many researchers have used ultrasonic velocity to predict rock strength, measuring velocities parallel and perpendicular to the planes of weakness of anisotropic rocks (Vasconcelos et al. 2007; Sharma and Singh 2008; Vasconcelos et al. 2008; Khaksar et al. 2009; Moradian and Behnia 2009; Vishnu et al. 2010; Kelessidis 2011; Rigopoulos et al. 2011). Shalabi et al. (2007) showed that the relationships obtained from this test were weaker than those obtained from the Schmidt hammer and shore hardness tests.

Some researchers have studied the effect of petrographic characteristics (e.g. grain size, grain shape, type and amount of cement, and packing density) on the compressive strength of concrete and rocks (Ulusay et al. 1994; Hale and Shakoor 2003). Meddah et al. (2010) found that the compressive strength of concrete increases with the maximum size of the coarse aggregate. Zhang et al. (2011) studied the scale effect on intact rock strength using particle flow modeling.

According to Demirdag et al. (2010), physical properties of rock materials such as porosity, unit volume weight, and Schmidt hardness have significant effects on the dynamic mechanical behavior of rock samples. Under both quasi-static and dynamic loading conditions, the compressive strength of the samples increased with increasing unit volume weight and Schmidt hardness and with decreasing porosity.

Moreover, Bell (1978) concluded that the strength of sandstone increases as packing density increases. Doberenier and De Freitas (1986) confirmed that weak sandstones are generally characterized by a low packing density. Porosity also has an important effect on the mechanical properties of rocks: Dube and Singh (1972) showed that strength properties decrease as porosity increases.

The Schmidt rebound number (SRN) has been widely used to predict UCS. The Schmidt hammer is a portable test tool that imparts a known amount of energy to the rock through a spring-loaded plunger. Two types of hammer, L-type and N-type, are used to measure SRN. Studies have indicated that the N-type should be used for rocks with UCS > 20 MPa (Sheorey et al. 1984). However, both types have been used to predict the strength of various rock types (Katz et al. 2000; Kahraman et al. 2002; Aydin and Basu 2005; Porto and Hurlimann 2009).

Moh’d (2009) pointed out that the compressive strength of the studied samples is positively related to density and sonic velocity. It is inversely related to permeability, modified saturation, and total and other porosity types. According to Moh’d (2009), dry density, being the easiest parameter to measure in the laboratory or field, can be used to predict the compressive strength of rocks.

Among the methods for measuring and predicting UCS discussed above, the Schmidt rebound number, porosity, and density are convenient and inexpensive tests that can be used to estimate the UCS of various rock types. For this purpose, this study employs a neural network optimized by a genetic algorithm, with these parameters as inputs. Most UCS prediction models in the literature have been developed by simple or multivariate linear or nonlinear regression analysis using a limited number of data and parameters. If newly available data differ from the original data, the form of the fitted equation must be updated. By contrast, a trained ANN can conveniently be retrained and adapted to new data (Lee 2003). Atici (2011) used a back-propagation neural network with the Levenberg–Marquardt algorithm to predict the strength of mineral admixture concrete. The inputs were blast-furnace slag, mixed age, rebound number, and ultrasonic pulse velocity, and the output was UCS. The ANN model proved more accurate than multivariable regression analysis. Selver et al. (2008) predicted the UCS of brecciated rock specimens using neural networks and different learning models. Cevik et al. (2011) used neural network modeling to predict the UCS of some clay-bearing rocks. These studies showed the superiority of ANNs over traditional prediction models. In all these networks, the architectural parameters (number of neurons in the hidden layers, learning rate, and momentum coefficient) were obtained by trial and error. In this paper, they are determined by a genetic algorithm optimization process.

3 Neuro-Genetic Hybridization

3.1 ANN

An artificial neural network can be defined as an information-processing system inspired by biological neural networks. This type of network was first introduced by Mc Culloch and Pitts (1988). An ANN is fundamentally composed of several fully interconnected layers: an input layer, an output layer, and one or more hidden layers. The number of hidden layers depends on the complexity of the problem, and two hidden layers are normally recommended for more complex problems to increase prediction capability. Each layer contains a number of simple information-processing units called neurons. The numbers of input and output neurons equal the numbers of input and output variables of the problem. The number of neurons in the hidden layer(s), however, depends on the unknown interrelationship between the input and output variables. The neurons in each layer are connected to the neurons of the next layer through weighted connections, so that each connection weight multiplies the signal transmitted from the preceding layer. Every neuron outside the input layer has a bias and a transfer function. The bias, sometimes referred to as the temperature of a neuron, acts like a weight on an input with a constant value of 1. It is applied in the transfer function to distinguish between neurons. The type of transfer function is chosen according to the purpose of the network, and neurons are activated by simple transfer functions, which are usually nonlinear. In the activation process, the weighted sum of each neuron's input signals plus its bias is passed through the transfer function to determine the neuron's output signal.
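The forward pass described above can be sketched in pure Python. The sketch builds a fully connected network with the 3-9-5-1 topology this study arrives at: three inputs, hidden layers of 9 and 5 neurons, and one output. The logistic sigmoid transfer function and the random initial weights in (-0.5, 0.5) are assumptions, and the helper names are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Logistic transfer function, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """Random weights[j][i] from input i to neuron j, plus one bias per neuron."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, biases


def layer_forward(x, weights, biases):
    # Each neuron: weighted sum of incoming signals plus its bias, then the transfer function.
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, biases)]


def forward(x, layers):
    """Propagate an input vector from the input layer to the output layer."""
    signal = list(x)
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


rng = random.Random(0)
sizes = [3, 9, 5, 1]  # inputs, hidden layer 1, hidden layer 2, output
layers = [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
print(forward([0.4, 0.2, 0.7], layers))  # normalized density, porosity, SRN (made-up values)
```

Because the sigmoid output lies in (0, 1), the prediction is on the normalized scale and must be mapped back to real units. Giving a neuron bias b gives the same result as appending a constant input of 1 with weight b, which is the sense in which a bias "acts like a weight".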

Different types of algorithms can be used to train neural networks. The back-propagation algorithm is an efficient learning procedure that is especially suitable for prediction problems. Training requires a sufficient number of sample datasets to achieve the intended results. Each dataset is a training pair of inputs and the corresponding output. For each pair, processing starts at the input layer and proceeds to the output layer (feedforward). The output is then compared with the measured actual value. The difference, or error, is propagated back through the network (back propagation) to update the weights and biases. This process is repeated for all training pairs. Training ends when the network error converges to a minimum threshold. The error is usually measured by a cost function such as the mean square error (MSE).
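The training cycle just described (feedforward, error, back propagation, weight update) can be sketched for a network with a single hidden layer. The sketch makes several assumptions: per-sample (online) updates, sigmoid neurons, a squared-error cost, and a momentum term that adds a fraction of the previous weight change. The function name and the toy dataset are made up and are not the study's data.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, n_hidden=4, lr=0.5, momentum=0.5, epochs=2000, seed=1):
    """Online back-propagation with momentum for a one-hidden-layer sigmoid network.

    data: list of (inputs, target) pairs, targets scaled to (0, 1).
    Returns ((w1, w2), mse_per_epoch).
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Each weight row ends with a bias, i.e. a weight on a constant input of 1.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    dw1 = [[0.0] * (n_in + 1) for _ in range(n_hidden)]  # previous weight changes
    dw2 = [0.0] * (n_hidden + 1)
    history = []
    for _ in range(epochs):
        sq_err = 0.0
        for x, t in data:
            # Feedforward pass.
            xb = list(x) + [1.0]
            h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w1]
            hb = h + [1.0]
            y = sigmoid(sum(w * v for w, v in zip(w2, hb)))
            err = t - y
            sq_err += err * err
            # Back propagation: local error gradients (deltas), computed before any update.
            delta_out = err * y * (1.0 - y)
            delta_hid = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(n_hidden)]
            # Update = learning rate * gradient step + momentum * previous update.
            for j in range(n_hidden + 1):
                dw2[j] = lr * delta_out * hb[j] + momentum * dw2[j]
                w2[j] += dw2[j]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    dw1[j][i] = lr * delta_hid[j] * xb[i] + momentum * dw1[j][i]
                    w1[j][i] += dw1[j][i]
        history.append(sq_err / len(data))  # MSE accumulated over the epoch
    return (w1, w2), history


# Toy, made-up data: two normalized inputs, target 0.1 + 0.8 * x1 * x2.
data = [((a / 4, b / 4), 0.1 + 0.8 * (a / 4) * (b / 4)) for a in range(5) for b in range(5)]
_, history = train(data, epochs=500)
print(f"MSE first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
```

In practice, the loop would stop once the MSE falls below a chosen threshold rather than after a fixed number of epochs.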

The efficiency of an ANN model is strongly influenced by its topology, learning rate, and momentum. With too few neurons in the hidden layer(s), training cannot be performed properly, meaning that the network does not recognize the relationship between the input and output variables. With too many neurons, training time and computation increase, and the network may memorize the training data because of overfitting. Therefore, every effort should be made to build the simplest network topology that gives tolerable errors. A learning rate (the speed of weight adjustment) that is too small prolongs training, whereas one that is too large may prevent convergence. Finally, an unsuitable momentum can cause the model to become trapped in a local minimum, making the network inaccurate.
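The effect of the learning rate and momentum can be seen on the simplest possible cost surface, f(w) = w², whose minimum is at w = 0. This illustrative sketch uses gradient descent with a classical momentum term, and all values are arbitrary. A very small learning rate converges slowly, a moderate one converges quickly, and one that is too large diverges, while momentum speeds up the slow case. A one-dimensional quadratic has no local minima, so the sketch does not show the trapping effect mentioned above.

```python
def descend(lr, momentum=0.0, steps=50, w0=1.0):
    """Minimize f(w) = w**2 (gradient 2w) by gradient descent with momentum.

    Returns the final w; the minimum is at w = 0.
    """
    w, velocity = w0, 0.0
    for _ in range(steps):
        grad = 2.0 * w
        velocity = momentum * velocity - lr * grad  # momentum keeps part of the last step
        w += velocity
    return w


for lr, mu in [(0.01, 0.0), (0.4, 0.0), (1.1, 0.0), (0.01, 0.9)]:
    print(f"lr={lr}, momentum={mu}: |w| after 50 steps = {abs(descend(lr, mu)):.3g}")
```

Without momentum, each step multiplies w by (1 - 2·lr). Hence lr = 0.01 leaves |w| ≈ 0.36 after 50 steps, lr = 0.4 shrinks it by a factor of 5 per step, and lr = 1.1 makes |w| grow by 20 % per step.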

In a conventional ANN, all of these parameters are determined by trial and error. This is a tedious and time-consuming process that may not yield the optimal model. To overcome this shortcoming, a genetic algorithm can be combined with the neural network, and the resulting so-called neuro-genetic network can be used effectively.

3.2 GA

Holland (1975) developed the first GA for optimization problems, based on Charles Darwin’s theory of natural evolution in On the Origin of Species. The approach is efficient when the search space is large. The basic procedure of a GA is as follows:

First, a population (set) of chromosomes, each a sequence of genes, is initialized randomly. Each chromosome is a possible solution to the problem, not necessarily the best one. Normally, the genes of each chromosome are encoded as a string of binary bits (the genotype). Second, the fitness of each decoded chromosome (the phenotype) in the initial population is evaluated with an objective function. To create a new, evolved population, a reproduction process with the genetic operators “selection”, “crossover” (recombination), and “mutation” is applied. In “selection”, two parent chromosomes are chosen at random by a method such as the roulette wheel or tournament method. The selection criterion is the fitness of each chromosome, which is determined from a fitness function such as the mean square error (MSE); for MSE, a lower error means a fitter chromosome. The fitter a chromosome, the higher its chance of being selected. In “crossover”, the parents from the selection step are used to probabilistically produce new chromosomes by swapping parts of them. The aim is to generate better chromosomes from parts of the parents. The crossover probability describes how often crossover is performed. If it is 0 %, the new generation is made from exact copies of chromosomes from the old population, although this does not mean that the new generation is the same as the old one. If it is 100 %, all of the offspring are produced by crossover. It is usually better, however, to let part of the old population survive into the next generation. Finally, “mutation” produces new versions of some of the chromosomes (individuals) by flipping randomly chosen bits of their genes to form a new string. Mutation helps prevent the GA from falling into local optima.
Mutation is also applied probabilistically: the mutation probability determines how often parts of a chromosome are mutated. If the mutation probability is 0 %, nothing is changed and the offspring are taken directly from crossover. If it is 100 %, the whole chromosome is changed. Mutation should not occur too often, because GA convergence would then become very difficult or even impossible. Reproduction continues until a selected stopping criterion, such as a maximum number of generations or a maximum evolution time, is satisfied. For example, the number of generated populations can be used to stop the process. To obtain accurate results, a sufficient number of generations should be run. Finally, the GA returns the best solution, which is the chromosome with the maximum fitness (Goldberg 1989; Sivanandam and Deepa 2008).
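The GA steps described above can be sketched as a minimal binary GA. The following are all illustrative choices, not the configuration used in this study:

- the toy objective (maximize the number of 1 bits, often called OneMax);
- fitness-proportionate roulette selection;
- one-point crossover and bit-flip mutation;
- an elitism rule that copies the best chromosome unchanged into the next generation, as one simple way of letting part of the old population survive.

```python
import random


def onemax(bits):
    """Toy fitness: the number of 1 bits (higher is fitter)."""
    return sum(bits)


def roulette(pop, fits, rng):
    """Fitness-proportionate (roulette wheel) selection of one parent."""
    total = sum(fits)
    if total == 0:
        return rng.choice(pop)
    pick = rng.uniform(0, total)
    running = 0.0
    for chrom, f in zip(pop, fits):
        running += f
        if running >= pick:
            return chrom
    return pop[-1]


def crossover(a, b, pc, rng):
    """One-point crossover with probability pc; otherwise copy the parents."""
    if rng.random() < pc:
        cut = rng.randint(1, len(a) - 1)
        return a[:cut] + b[cut:], b[:cut] + a[cut:]
    return a[:], b[:]


def mutate(bits, pm, rng):
    """Flip each bit independently with probability pm."""
    return [1 - g if rng.random() < pm else g for g in bits]


def run_ga(n_bits=20, pop_size=30, pc=0.7, pm=0.01, generations=40, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):  # stopping criterion: maximum number of generations
        fits = [onemax(c) for c in pop]
        best = max(pop, key=onemax)
        history.append(onemax(best))
        new_pop = [best[:]]  # elitism: the best chromosome survives unchanged
        while len(new_pop) < pop_size:
            c1, c2 = crossover(roulette(pop, fits, rng), roulette(pop, fits, rng), pc, rng)
            new_pop.extend([mutate(c1, pm, rng), mutate(c2, pm, rng)])
        pop = new_pop[:pop_size]
    return max(pop, key=onemax), history


best, history = run_ga()
print(f"best fitness: first generation {history[0]}, final {onemax(best)} of 20")
```

Because of elitism, the best fitness never decreases from one generation to the next.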

3.3 Combination of ANN and GA

A GA can be used to design an optimal neural network, and this combination of GA and ANN is called a neuro-genetic network. First, an initial population of neural networks is created randomly, each with its own parameters (number of neurons in the hidden layers, learning rate, and momentum). Second, each network is trained and evaluated to determine its fitness. Third, the operators “selection”, “crossover”, and “mutation” are applied to create a new, evolved population, which is evaluated in the same manner. This process is repeated until the maximum number of generations or the maximum evolution time is reached. Figure 1 illustrates how this study optimized the neural network parameters with the GA. Many investigators (Fogel et al. 1990; Bornholdt and Graudenz 1992) have used evolutionary algorithms to train and optimize feedforward neural networks. Similar applications have been reported for generalized regression neural networks (Hansem and Meservy 1996) and Hopfield neural networks (Lin et al. 1995).

4 Datasets

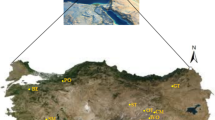

In this study, 93 samples of different rock types were collected and tested to determine their density, porosity, Schmidt rebound number, and UCS. The rock types were sandstone, limestone, dolomite, granite, chalk, gneiss, siltstone, tuff, gypsum, olivine, granodiorite, slate, schist, conglomerate, quartzite, gabbro, and amphibolite. An N-type Schmidt hammer was used according to the methods suggested by ISRM (1981), and in all tests the hammer was held vertically downwards. The tests can also be conducted in the field if the ISRM suggested methods are followed (Kahraman et al. 2002). UCS was determined with a universal testing machine. Density, porosity, and Schmidt rebound number were used as input parameters to predict UCS as the output parameter. A summary of the laboratory test results is given in Table 1.

5 Regression Analysis

Regression analysis can be used to establish a mathematical model of the relationship between independent and dependent variables (Jennrich 1995). Multivariable regression analysis gives more realistic results when many variables are involved. Many researchers have reported its application to mining-related problems (Alveraz Grima and Babuska 1999). Using the statistical software SPSS 16 and the database collected in the laboratory, a regression model (Eq. 1) was developed to predict UCS from the input parameters (density, porosity, and Schmidt rebound number).

From this equation, the coefficient of determination (R²) and the mean square error (MSE) were calculated as 0.774 and 1.61, respectively.
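A linear multivariable regression of UCS on density, porosity, and Schmidt rebound number, the kind of model described above, can be fitted by ordinary least squares. The sketch below solves the normal equations by Gaussian elimination in pure Python. The linear form, the function names, and the synthetic sample values are assumptions for illustration only. They are not the study's data or its fitted coefficients.

```python
def fit_linear(X, y):
    """Ordinary least squares for y ≈ b0 + b1*x1 + ... + bk*xk.

    X: list of rows of predictor values; y: list of responses.
    Returns [b0, b1, ..., bk].
    """
    A = [[1.0] + list(row) for row in X]  # design matrix with an intercept column
    p = len(A[0])
    # Normal equations: (A^T A) beta = A^T y, stored as an augmented matrix.
    ata = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * yi for r, yi in zip(A, y)) for i in range(p)]
    M = [row + [rhs] for row, rhs in zip(ata, aty)]
    # Forward elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, p):
            factor = M[r][col] / M[col][col]
            for c in range(col, p + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution.
    beta = [0.0] * p
    for i in reversed(range(p)):
        beta[i] = (M[i][p] - sum(M[i][j] * beta[j] for j in range(i + 1, p))) / M[i][i]
    return beta


def predict(beta, row):
    return beta[0] + sum(b * x for b, x in zip(beta[1:], row))


# Synthetic rows (density, porosity, SRN) generated from a known linear rule.
X = [(2.5, 5.0, 40.0), (2.6, 7.0, 35.0), (2.7, 2.0, 55.0),
     (2.4, 4.0, 50.0), (2.65, 8.0, 45.0), (2.55, 1.0, 30.0)]
y = [5 + 10 * d - 2 * p + 1.5 * s for d, p, s in X]
beta = fit_linear(X, y)
print([round(b, 6) for b in beta], predict(beta, (2.6, 4.0, 48.0)))
```

Because the synthetic responses follow an exact linear rule, the fit recovers the coefficients (5, 10, -2, 1.5). With real laboratory data, a linear form cannot capture nonlinear relationships, which is the limitation attributed to regression models above.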

6 Neuro-Genetic Based Analysis

The GA was used to determine the ANN parameters and hence the optimal network architecture. The optimization was performed with NeuroSolutions for Excel, Release 5.05, a software package produced by NeuroDimension, Inc. This software requires the data to be normalized to the range (0, 1), which is done with Eq. (2):

$$Var_n = \frac{Var_i - Var_{min}}{Var_{max} - Var_{min}} \qquad (2)$$

where $Var_n$ is the normalized value, $Var_i$ is the real value, and $Var_{min}$ and $Var_{max}$ are the minimum and maximum real values, respectively.
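The min-max normalization Var_n = (Var_i − Var_min)/(Var_max − Var_min) can be sketched as below. Strictly, this maps the minimum to 0 and the maximum to 1. Returning (Var_min, Var_max) lets predictions be converted back to real units. The function names and example values are hypothetical.

```python
def min_max_normalize(values):
    """Scale values with (v - vmin) / (vmax - vmin).

    Returns the scaled list and (vmin, vmax), so predictions can be mapped back.
    """
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        raise ValueError("cannot normalize a constant column")
    return [(v - vmin) / (vmax - vmin) for v in values], (vmin, vmax)


def denormalize(scaled, bounds):
    """Invert the scaling: v = s * (vmax - vmin) + vmin."""
    vmin, vmax = bounds
    return [s * (vmax - vmin) + vmin for s in scaled]


ucs_mpa = [20, 60, 100, 180]  # made-up UCS values
scaled, bounds = min_max_normalize(ucs_mpa)
print(scaled, denormalize(scaled, bounds))
```

A common practice is to take the minimum and maximum from the training data and reuse them for the cross-validation and test data, so that all subsets share one scale.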

Next, the database was randomly divided into three groups: training (60 %), cross-validation (15 %), and testing (25 %). A feedforward back-propagation neural network with two hidden layers was found suitable for this study, and its parameters were optimized with the GA. First, an initial population is generated. Each chromosome contains three genes (the number of neurons in the hidden layers, the momentum, and the learning rate). The number of hidden neurons and the training parameters are represented by haploid chromosomes whose genes are binary numbers. Each gene consists of a few bits, which together determine the length of the chromosome. NeuroSolutions determines the chromosome lengths automatically. To generate the initial population, bounds must be defined for each component of the chromosomes. The bounds for the number of hidden neurons, the learning rate, and the momentum were set to (1, 30), (0, 1), and (0, 1), respectively. Once the bounds are set, the software randomly selects a value within them for each component and thus produces the initial population automatically.
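The mapping from a binary chromosome (genotype) to network parameters (phenotype) within the bounds above can be sketched as follows. NeuroSolutions chooses the gene lengths automatically, so the 5-bit and 10-bit lengths here are hypothetical. The sketch also assumes one neuron-count gene per hidden layer, so that two layer sizes (such as 9 and 5) can both be encoded. The gene names and the decode function are illustrative.

```python
import random

# (name, number of bits, lower bound, upper bound, integer-valued?)
# Bounds follow the text; bit counts and one gene per hidden layer are hypothetical.
GENES = [
    ("hidden_1", 5, 1, 30, True),
    ("hidden_2", 5, 1, 30, True),
    ("learning_rate", 10, 0.0, 1.0, False),
    ("momentum", 10, 0.0, 1.0, False),
]
CHROMOSOME_LENGTH = sum(gene[1] for gene in GENES)


def decode(bits):
    """Decode a binary chromosome (genotype) into network parameters (phenotype)."""
    if len(bits) != CHROMOSOME_LENGTH:
        raise ValueError(f"expected {CHROMOSOME_LENGTH} bits, got {len(bits)}")
    params, pos = {}, 0
    for name, n_bits, low, high, is_int in GENES:
        chunk = bits[pos:pos + n_bits]
        pos += n_bits
        value = int("".join(str(b) for b in chunk), 2)      # gene read as an unsigned integer
        x = low + (high - low) * value / (2 ** n_bits - 1)  # scaled linearly into [low, high]
        params[name] = round(x) if is_int else x
    return params


# A random chromosome always decodes to values inside the bounds.
rng = random.Random(7)
chromosome = [rng.randint(0, 1) for _ in range(CHROMOSOME_LENGTH)]
print(decode(chromosome))
```

An all-zero chromosome decodes to the lower bounds and an all-one chromosome to the upper bounds. Drawing random bit strings is therefore one way to produce an initial population within the defined limits.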

Network performance is evaluated with the mean square error (MSE), defined in Eq. (3). The errors for the training datasets were computed, and the network with the smallest MSE was considered optimal (Niculescu 2003).

$$MSE = \frac{1}{n}\sum_{i=1}^{n}\left(O_i - T_i\right)^2 \qquad (3)$$

where $O_i$ is the desired output for training or cross-validation datum $i$, $T_i$ is the network output for training or cross-validation datum $i$, and $n$ is the number of data.
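The MSE (the mean of (O_i − T_i)² over n data) and the coefficient of determination can be computed as below. Here R² is taken as 1 − SS_res/SS_tot, which is one common definition and an assumption in this sketch. Some studies instead report the squared correlation between measured and predicted values, which coincides with it for an ordinary least-squares fit with an intercept but not in general. The function names are hypothetical.

```python
def mse(desired, predicted):
    """Mean square error: the mean of (O_i - T_i)**2 over n data."""
    if not desired or len(desired) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    return sum((o - t) ** 2 for o, t in zip(desired, predicted)) / len(desired)


def r_squared(desired, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = sum(desired) / len(desired)
    ss_tot = sum((o - mean) ** 2 for o in desired)
    ss_res = sum((o - t) ** 2 for o, t in zip(desired, predicted))
    return 1.0 - ss_res / ss_tot


print(mse([1, 2, 3, 4], [1, 2, 3, 5]), r_squared([1, 2, 3, 4], [1, 2, 3, 5]))
print(mse([10, 20, 30, 40], [10, 20, 30, 50]), r_squared([10, 20, 30, 40], [10, 20, 30, 50]))
```

Multiplying every value by 10 multiplies the MSE by 100 but leaves R² unchanged. MSE values are therefore only directly comparable when computed on the same scale, for example both on normalized data or both in MPa.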

Before the GA can be run, its parameters (population size, stopping criterion, “selection”, “crossover”, and “mutation”) must be set. These are normally chosen by trial and error and/or from previous experience. Following this procedure, the population size and the stopping criterion (maximum number of generations) were set to 40 and 30, respectively. Reproduction of new chromosomes begins with “selection”, which was performed with a rank-based roulette wheel method. Chromosomes are sorted according to their relative fitness and assigned ranks scaled between 0 and 1, with the best and worst chromosomes at opposite ends of the scale. After ranking, the chromosomes are placed into the intermediate population (Kim et al. 2004). The crossover and mutation operators are then applied to this population. In this study, two-point crossover and uniform mutation were used, with probabilities of 0.7 and 0.01, respectively (Table 2). New generations are created until the stopping criterion is satisfied. Figure 2 shows the average and best MSE over successive generations. Finally, the best chromosome in the last generation is taken as the optimum solution of the problem.
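The two operators named above, rank-based roulette selection and two-point crossover, can be sketched as follows. Linear ranking is an assumption: chromosomes are ranked by MSE (lower is fitter), and the worst gets weight 1 and the best weight N. NeuroSolutions' exact ranking scheme is not reproduced. The function names are hypothetical, and mutation is left out to keep the sketch short.

```python
import random


def rank_select(pop, mse_values, rng):
    """Rank-based roulette wheel: rank by MSE (lower is fitter); linear weights
    give the worst chromosome weight 1 and the best weight N."""
    order = sorted(range(len(pop)), key=lambda i: mse_values[i], reverse=True)  # worst first
    weights = list(range(1, len(pop) + 1))
    return pop[rng.choices(order, weights=weights, k=1)[0]]


def two_point_crossover(a, b, pc, rng):
    """With probability pc, swap the segment between two random cut points."""
    if rng.random() >= pc or len(a) < 3:
        return a[:], b[:]
    i, j = sorted(rng.sample(range(1, len(a)), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]


rng = random.Random(0)
pop = ["a", "b", "c", "d"]
mses = [0.4, 0.1, 0.3, 0.2]  # made-up MSEs: "b" is fittest, "a" is worst
picks = [rank_select(pop, mses, rng) for _ in range(4000)]
print({c: picks.count(c) for c in pop})
print(two_point_crossover([0] * 10, [1] * 10, 0.7, rng))
```

Ranking makes selection depend only on the order of the MSE values, not their magnitudes. This keeps one chromosome with a much smaller error from dominating the wheel.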

Table 3 shows the details of generation no. 23, which gave the best solution. The GA found the optimum numbers of neurons in the first and second hidden layers to be 9 and 5, respectively. The other network parameters optimized by the GA, the learning rate and momentum, were 0.66 and 0.53, respectively (Fig. 3).

The performance of the proposed model was evaluated on the datasets reserved for testing. The coefficient of determination between measured and predicted UCS was 0.96, which shows the superiority of the neuro-genetic network over the conventional statistical method (Fig. 4).

7 Sensitivity Analysis

Sensitivity analysis is a method for extracting the cause and effect relationship between the inputs and outputs of the network. The network learning is disabled during this operation such that the network weights are not affected. The basic idea is that the inputs to the network are shifted slightly and the corresponding change in the output is reported either as a percentage or a raw difference. Figure 5 illustrates the sensitivity analysis results.
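The perturbation idea above can be sketched as follows. Each input is moved by ±delta around its mean while the other inputs stay at their means, and the output change per unit input change is reported. This central-difference variant, the value of delta, and the linear stand-in model are assumptions. In the study, the frozen trained network would take the stand-in's place, and the toy coefficients are not the paper's results.

```python
def sensitivity(model, data, delta=0.1):
    """Output change per unit input change when each input is shifted by +/- delta
    around its mean, with the other inputs held at their means."""
    n_inputs = len(data[0])
    means = [sum(row[k] for row in data) / len(data) for k in range(n_inputs)]
    scores = []
    for k in range(n_inputs):
        up, down = list(means), list(means)
        up[k] += delta
        down[k] -= delta
        scores.append(abs(model(up) - model(down)) / (2 * delta))
    return scores


def toy_model(x):
    """Hypothetical stand-in for a trained, frozen network (normalized inputs)."""
    density, porosity, srn = x
    return 0.3 * density - 0.1 * porosity + 0.6 * srn


data = [(0.2, 0.7, 0.4), (0.5, 0.3, 0.6), (0.8, 0.1, 0.9)]  # made-up normalized inputs
scores = sensitivity(toy_model, data)
names = ["density", "porosity", "Schmidt rebound number"]
print(dict(zip(names, scores)), "most sensitive:", max(zip(scores, names))[1])
```

For a linear model, the scores equal the absolute coefficients. For a nonlinear network, they also depend on delta and on the operating point (here the input means).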

8 Conclusion

In this paper, a hybrid neuro-genetic network was implemented to predict the uniaxial compressive strength of rocks. The neural network parameters, namely the number of neurons in the hidden layers, the learning rate, and the momentum coefficient, were optimized by a genetic algorithm. The GA used two-point crossover and uniform mutation with probabilities of 0.7 and 0.01, respectively. Finding the optimum model with this method is faster and more convenient than with classic networks based on trial and error. The GA optimization gave 9 neurons in the first hidden layer and 5 in the second, with a learning rate of 0.66 and a momentum of 0.53. The results showed that this hybrid network estimates UCS robustly from the easily obtained parameters Schmidt rebound number, density, and porosity, avoiding the time-consuming and costly direct test. The advantage of the method over conventional regression analysis was also confirmed by comparing R² and MSE for the two approaches. The neuro-genetic model performed significantly better, with R² and MSE of 0.9589 and 0.0045, respectively, whereas the regression model gave 0.774 and 1.61, respectively. The rather poor performance of the regression model may be attributed to its linearity assumption. Finally, the sensitivity analysis revealed that the Schmidt rebound number is the input to which the predicted UCS is most sensitive, which is consistent with previous experience.

References

Altindag R, Guney A (2005) Effect of the specimen size on the determination of consistent shore hardness values. Int J Rock Mech Min Sci 42:153–160

Alveraz Grima M, Babuska R (1999) Fuzzy model for the prediction of unconfined compressive strength of rock samples. Int J Rock Mech Min Sci 36:339–349

Atici U (2011) Prediction of the strength of mineral admixture concrete using multivariable regression analysis and an artificial neural network. Expert Sys Appl 38:9609–9618

Aydin A, Basu A (2005) The Schmidt hammer in rock material characterization. Eng Geol 81:1–14

Basu A, Kamran M (2010) Point load test on schistose rocks and its applicability in predicting uniaxial compressive strength. Int J Rock Mech Min Sci 47:823–828

Bell FG (1978) The physical and mechanical properties of the fell sandstones, Northumberland, England. Eng Geol 12:1–29

Bell FG (2005) Rock properties and their assessment. In: Selley RC, Cocks LRM, Plimer IR (eds) Encyclopedia of geology. Elsevier, Amsterdam, pp 566–580

Bieniawski ZT (1974) Estimating the strength of rock materials. J S Afr Inst Min Metall 74:312–320

Bieniawski ZT (1975) Point load test in geotechnical practice. Eng Geol 9(1):1–11

Bornholdt S, Graudenz D (1992) General asymmetric neural networks and structure design by genetic algorithms. Neural Netw 5:327–334

Broch E, Franklin JA (1972) Point-load strength test. Int J Rock Mech Min Sci 9(6):241–246

Cevik A, Sezer EA, Cabalar AF, Gokceoglu C (2011) Modeling of the uniaxial compressive strength of some clay-bearing rocks using neural network. Appl Soft Comput 11:2587–2594

Demirdag S, Tufekci K, Kayacan R, Yavuz H, Altindag R (2010) Dynamic mechanical behavior of some carbonate rocks. Int J Rock Mech Min Sci 47:307–312

Doberenier L, DeFreitas MH (1986) Geotechnical properties of weak sandstones. Geotechnique 36:79–94

Dube AK, Singh B (1972) Effect of humidity on tensile strength of sandstone. J Mines Met Fuels 20(1):8–10

Fener M, Kahraman S, Bilgil A, Gunaydin O (2005) A comparative evaluation of indirect methods to estimate the compressive strength of rocks. Rock Mech Rock Eng 38(4):329–343

Fogel DB, Fogel LJ, Porto VW (1990) Evolving neural networks. Biol Cybern 63:487–493

Garret JHJ (1994) Where and why artificial neural networks are applicable in civil engineering. J Comput Civil Eng 8(2):129–130

Goldberg DE (1989) Genetic algorithms in search, optimization and machine learning. Addison-Wesley

Hale PA, Shakoor A (2003) A laboratory investigation of the effects of cyclic heating and cooling, wetting and drying, and freezing and thawing on the compressive strength of selected sandstones. Environ Eng Geosci 9(2):117–130

Hansem J, Meservy R (1996) Learning experiments with genetic optimization of a generalized regression and neural network. Decis Support Syst 18:317–325

Haramy KY, DeMarco MJ (1985) Use of Schmidt hammer for rock and coal testing. In: 26th US Symposium on Rock Mechanics, 26–28 June, Rapid City. Balkema, Rotterdam, pp 549–555

Holland J (1975) Adaptation in Natural and Artificial Systems. University of Michigan Press, Ann Arbor

Huang Y (1999) Application of artificial neural networks to predictions of aggregate quality parameters. Int J Rock Mech Min Sci 36:551–561

Huang Y, Wanstedt S (1998) The introduction of neural network system and its applications in rock engineering. Eng Geol 49:253–260

ISRM (1985) Suggested method for determining point-load strength. Int J Rock Mech Min Sci 22:53–60

Jennrich RI (1995) An introduction to computational statistics-regression analysis. Prentice-Hall, Englewood Cliffs, NJ

Kahraman S (2001) Evaluation of simple methods for assessing the uniaxial compressive strength of rock. Int J Rock Mech Min Sci 38:981–994

Kahraman S, Fener M, Gunaydin O (2002) Predicting the Schmidt hammer values of in situ intact rock from core samples values. Int J Rock Mech Min Sci 39(3):395–399

Katz O, Reches Z, Roegiers JC (2000) Evaluation of mechanical rock properties using a Schmidt Hammer. Int J Rock Mech Min Sci 37(4):723–728

Kayabali K, Selcuk L (2010) Nail penetration test for determining the uniaxial compressive strength of rock. Int J Rock Mech Min Sci 47:265–271

Kelessidis VC (2011) Rock drillability prediction from in situ determined unconfined compressive strength of rock. J S Afr Inst Min Metall 111:429–436

Khaksar A, Taylor PG, Fang Z, Kayes T, Salazar A, Rahman K (2009) Rock strength from core and logs: where we stand and ways to go. Paper SPE 121972, presented at the EUROPEC/EAGE Annual Conference and Exhibition, Amsterdam

Kim GH, Yoon JE, An SH, Cho HH, Kanga KI (2004) Neural network model incorporating a genetic algorithm in estimating construction costs. Build Environ 39:1333–1340

Lee S (2003) Prediction of concrete strength using artificial neural networks. Eng Struct 25:849–857

Lin S, Punch W, Goodman ED (1995) A hybrid model utilizing genetic algorithms and Hopfield neural networks for function optimization. In: Proceedings of the Sixth International Conference on Genetic Algorithms. Morgan Kaufmann, San Francisco

Mc Culloch WS, Pitts W (1988) A Logical Calculus of the Ideas Immanent in Nervous Activity. Bull Math Biophys 5:115–133

Meddah MS, Zitouni S, Belaabes S (2010) Effect of content and particle size distribution of coarse aggregate on the compressive strength of concrete. Constr Build Mater 24:505–512

Moradian Z, Behnia M (2009) Predicting the uniaxial compressive strength and static Young’s modulus of intact sedimentary rocks using the ultrasonic test. Int J Geomech :14–19

Niculescu SP (2003) Artificial neural networks and genetic algorithms in QSAR. J Mol Struct 622:71–83

Nie X, Zhang Q (1994) Prediction of rock mechanical behavior by artificial neural network. A comparison with traditional method. IV CSMR, Integral Approach to Applied Rock Mechanics. Santiago

O’Rourke JE (1989) Rock index properties for geoengineering in underground development. Min Eng 106–110

Porto DR, Hurlimann M (2009) A comparison of different indirect techniques to evaluate volcanic intact rock strength. Rock Mech Rock Eng 42:931–938

Rigopoulos I, Tsikouras B, Pomonis P, Hatzipanagiotou K (2011) Microcracks in ultrabasic rocks under uniaxial compressive stress. Eng Geol 117:104–113

Russell AR, Wood DM (2009) Point load tests and strength measurements for brittle spheres. Int J Rock Mech Min Sci 46:272–280

Selver MA, Ardali E, Onal O, Akay O (2008) Predicting Uniaxial Compressive Strengths of Brecciated Rock Specimens using Neural Networks and Different Learning Models. IEEE. doi:10.1109/ISCIS.2008.4717937

Shalabi FI, Cording EJ, Al-Hattamleh OH (2007) Estimation of rock engineering properties using hardness tests. Eng Geol 90:138–147

Sharma PK, Singh TN (2008) A correlation between P-wave velocity, impact strength index, slake durability index and uniaxial compressive strength. Bull Eng Geol Environ 67:17–22

Sheorey PR, Barat D, Das MN, Mukherjee KP, Singh B (1984) Schmidt hammer rebound data for estimation of large scale in situ coal strength. Int J Rock Mech Min Sci Geomech Abstr 21:39–42

Singh RN, Hassani FP, Elkington PAS (1983) The application of strength and deformation index testing to the stability assessment of coal measures excavations. Proceedings of the 24th US Symposium on Rock Mech, Texas A&M University, AEG Balkema, Rotterdam, pp 599–609

Singh VK, Singh D, Singh TN (2001) Prediction of strength properties of some schistose rocks from petrographic properties using artificial neural networks. Int J Rock Mech Min Sci 38:269–284

Sivanandam SN, Deepa SN (2008) Introduction to Genetic Algorithms. Springer, Berlin, Heidelberg

Ulusay R, Tureli K, Ider MH (1994) Prediction of engineering properties of a selected litharenite sandstone from its petrographic characteristics using correlation and multivariate statistical techniques. Eng Geol 38(2):135–157

Ulusay R, Gokceoglu C, Sulukcu S (2001) Draft ISRM suggested method for determining block punch strength index (BPI). Int J Rock Mech Min Sci 38:1113–1119

Vasconcelos G, Lourenco PB, Alves CSA, Pamplona J (2007) Prediction of the mechanical properties of granites by ultrasonic pulse velocity and Schmidt hammer hardness. Proceedings, 10th North American Masonry Conference, pp 981–991

Vasconcelos G, Lourenco PB, Alves CSA, Pamplona J (2008) Ultrasonic evaluation of the physical and mechanical properties of granites. Ultrasonics 48:453–466

Vishnu CS, Mamtani MA, Basu A (2010) AMS, ultrasonic P-wave velocity and rock strength analysis in quartzites devoid of mesoscopic foliations—implications for rock mechanics studies. Tectonophysics 494:191–200

Yasar E, Erdogan Y (2004) Estimation of rock physicomechanical properties using hardness methods. Eng Geol 71:281–288

Yilmaz I (2009) A new testing method for indirect determination of the unconfined compressive strength of rocks. Int J Rock Mech Min Sci 46:1349–1357

Zhang Q, Zhu H, Zhang L, Ding X (2011) Study of scale effect on intact rock strength using particle flow modeling. Int J Rock Mech Min Sci 48:1320–1328

Cite this article

Monjezi, M., Amini Khoshalan, H. & Razifard, M. A Neuro-Genetic Network for Predicting Uniaxial Compressive Strength of Rocks. Geotech Geol Eng 30, 1053–1062 (2012). https://doi.org/10.1007/s10706-012-9510-9

DOI: https://doi.org/10.1007/s10706-012-9510-9