Abstract

In this article, we propose a mixed-noise removal model which incorporates with a nonsmooth and nonconvex regularizer. To solve this model, a multistage convex relaxation method is used to deal with the optimization problem due to the nonconvex regularizer. Besides, we adopt the number of iteration steps as the termination condition of the proposed algorithm and select the optimal parameters for the model by a genetic algorithm. Several experiments on classic images with different level noises indicate that the robustness, running time, ISNR (Improvement in Signalto-Noise ratio) and PSNR (Peak Signal to Noise Ratio) of our model are better than those of other three models, and the proposed model can retain the local information of the image to obtain the optimal quantitative metrics and visual quality of the restored images.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

With the rapid development of computer technology, images are widely used in each field, especially in remote sensing and medical imaging. However, the images are usually polluted by all kinds of noises in the transmission. Due to the poor characteristics of noisy image, we cannot directly use noisy image in practical image applications (such as image classification, super-resolution, image enhancement and recognition). Therefore, image noise removal is an indispensable step in many practical applications, and an active topic in computer low-level vision. Formally speaking, image noise removal is an inverse problem which reconstructs the original image as much as possible from its polluted one, and the problem has been extensively studied in recent decades [8, 11, 18, 21,22,23, 25, 34, 39, 41,42,43,44,45, 47, 48, 68, 70]. Image denosing can be modeled as follows,

Here, u denotes the origin image and Ω is a bounded open domain with a compact Lipschitz boundary. The observed image f : Ω → R is partitioned into a true image and additive noise n. A can either be an identity operator or a blurring operator. In practical applications, two types of noises are often encountered during image transmission and image acquisition: white Gaussian noise (GN) and impulse noise (IN). Commonly, GN is brought up into images due to the thermal motion in camera, while IN is generally caused by the damaged pixels in camera transmission and sensors [6].

In recent decades, various techniques are proposed for image denoising, such as the methods in [63, 71, 74] for GN, the methods for IN removal [41, 44, 68, 70], and Poisson noise [28, 73]. Although those algorithms have powerful accomplishment for single noise reduction, they don’t perform well in mixed noise denoising because different noises have different distributions.

In most previous works, Maximum a posteriori (MAP) estimation approach is often used for image denosing algorithms. We can formalize the problem as follows,

Here, the observed image f(x), and the original image u(x) are two random variables for each x ∈Ω. Therefore, we can apply Bayes rule, and then obtain,

Normally, the first term captures the noise-data distribution which is often called data fidelity term, and the second term denotes the prior information of origin image which is usually named regular term. In practical applications, the L2-norm is used to fit the GN distribution, and L1-norm data fidelity term is employed to simulate IN distribution, respectively [41, 44, 63, 68, 70, 71, 74]. Consequently, based on Bayes method, GN can be removed successfully by solving the following optimization problem,

here, f ∈ RN, u ∈ RN, λ, and R(u) refer to noisy image, clean image, weight parameter, and the regularization term, respectively. Similarly, IN can be removed by solving the following minimization problem,

With the above derivations for the GN or IN image, now we can derive a model that links both types of noises. If we set up a multi-noise likelihood, according to Bayes’ Theorem, and then we can obtain,

here, μ1 and μ2 are also weight parameters.

Minimization problem (6) is a template of many variational optimization models. And in the past years, the variational optimization model for image processing is an active topic in computer vision all the time. Those models mainly consist of two parts: data-fidelity and regularization term. Regularization term can maintain the smoothness of the objective function and recover a clean image with sharp edges and fine image detail information. Model with regularization term is also called variational regularization [62], which have been applied to both statistical learning and variational workframe problems [4, 26, 30,31,32, 46], and if it is a convex regularization model, a unique solution of optimization problem exists. However, if it is a nonconvex regularization one, the solution of optimization problem is not necessarily unique. Recently, nonconvex regularization has become a popular method because it can restore high-quality images with well-kept detail information of local characteristics and edges. Therefore, Some sholars explored the theoretical solution and numerical solution in [53], [31, 32, 50, 55, 59], respectively. Since the nonconvex and nonsmooth regularization is a powerful method, there is still exploration space for improvement of performance in computer vison, especially for image noise reduction. Subsequently, in this paper, we introduce an efficient image mixed noised removal algorithm with multi-fidelity terms based on nonsmooth and nonconvex optimization.

Although a theoretical solution to the minimization problem with a nonconvex and nonsmooth regularization has not yet been proposed, many numerical algorithms, such as iteratively reweighted least squares algorithms [19, 31, 54, 66], half-quadratic algorithms [2, 14, 65], and graduated nonconvexity algorithms (GNC) [5, 55, 56], have been proposed. However, the GNC method, which accommodates the minimizer of nonsmooth and nonconvex potentials, requires considerable computational time. The iteratively reweighted (IR) method [15] was proposed to track the sparsity properties of the regularization comprehensive sensing problems, which has been introduced to process the image restoration problems [35, 57]. In consequence, in this paper, a nonsmooth and nonconvex regularization method is used to solve the image restoration problem. Meanwhile, due to the nonconvex regularization, we adopt a multistage nonconvex relaxation method [72] that gave a more better solution than the standard convex relaxation solution.

Apart from variational optimization models, the most often-used mixed noise removal approaches are based on the “detecting then filtering” strategy. Trilateral filter [29] combined a gradient bilateral filter and an intensity bilateral filter with a pyramidbased method to limit filter window. Nonlocal mean (NLM) [8, 9] filter averages all pixels in the image to remove image noise and the filter can also be set as a regular term in variational optimization models. Recently, with development of compressive sensing technology, sparse representation has become a powerful tool in various computer vision assignments. Some scientific researchers used the detection trick for mixed noise removal based on the sparse representation model. Tao et al. [64] have done a lot of precious works, and they used a powerful manifold ranking and optimization algorithm-based matrix factorization method for the saliency detection.

With the widespred appllication of deep learning, several discriminative learning methods have been developed to learning image prior models in the context of truncated inference procedure. For example, Chen et al. [16, 17, 27] proposed a trainable nonlinear reaction diffusion model, which learnt a modified fields of experts [61] image prior by unfolding a fixed number of gradient descent inference steps. And He et al. [37] proposed a pulse-coupled neural network (PCNN)-based algorithm to underwater image enhancement scheme for robotic visual systems. The experimental results shown that the enhanced result improved the color and contrast of the source image and enhanced the details and edges of darker regions, which made a great contribution for deep-sea exploration tasks.

In this paper, we propose an efficient mixed GN and IN removal approach based on the L1 and L2 data-fidelity terms. Moreover, we also introduce a nonsmooth and nonconvex regularization term for solving the image restoration problem. Additionally, we use the multistage convex relaxation method in order to deal with the nonconvex regularization, which provides a good performance solution. Experimental results of simulated noisy images illustrate that our method outperforms current state-of-the-art mixed-noise removal methods.

2 Preliminaries

2.1 Alternating direction method of multipliers

In this subsection, the theories underpinning the proposed image mixed noise removal algorithm are introduced. Firstly, the Alternating direction method of multipliers (ADMM) will be explained. Therefore, now we consider the following structured constrained convex optimization problem, called the Alternating Direction Method [7, 26, 36],

here, \({\theta _{1}}\left (x \right ):{R^{{n_{1}}}} \to R\), \({\theta _{2}}\left (x \right ):{R^{{n_{2}}}} \to R \) are convex functions (but not necessary smooth). \(A \in {R^{m \times {n_{1}}}}\), \(B \in {R^{m \times {n_{2}}}}\) and b ∈ Rm, \(X \in {R^{{n_{1}}}}\), and \( Y \in {R^{{n_{2}}}}\) are given closed convex sets. Let λ be the Lagrangian multiplier for the linear constraints Ax + By = b in (7). Then, the augmented Lagrangian function is as follows,

which is defined on X × Y × Rm. Thirdly, let (x∗, y∗, z∗) be a saddle point of the Lagrangian function. So, (x∗, y∗, z∗) ∈Ω, and it satisfies,

here, Ω = X × Y × Rm. By defining

and 𝜃 (u) = 𝜃1 (x) + 𝜃2 (y). The first-order optimal condition (9) can be written in a compact form as,

Note that the mapping F is monotone. We use Ω∗ to denote the solution set of the variational inequality (11). For convenience, we use the notations, namely,

For a given λk, uk+ 1 = (xk+ 1, yk+ 1) is the solution of the following problem,

The multiplier update is defined by,

For a given (yk, λk), xk+ 1 is the solution of the following problem,

Using operator separation technique for λk, xk+ 1, and yk+ 1 respectively, we have the solution of the following problem,

and

Note that (15) and (16) are equivalent to (18) and (19), respectively.

2.2 The multi-stage convex relaxation method

In this subsection, because the multistage convex relaxation method will be used, so we first introduce it as following. From the multi-stage convex relaxation method first proposed in [72], we have the following optimization problem,

where g0 (w) is convex in w and each gi (w) is nonconvex. The convex/concave duality that was first defined in [72] can solve this optimization problem efficiently.

Let \({h_{i}}\left (w \right ):{R^{d}} \to {{\Omega }_{i}} \subset {R^{{d_{i}}}}\) be a vector function with range Ωi, and assume that there is a function \({\bar g_{i}}\), which is defined on Ωi, such that,

Suppose that exists hi such that the function \({\bar g_{i}}\left ({{z_{i}}} \right )\) is concave on zi ∈Ωi. Then, the function gi (w) can be rewritten as,

Using the concave duality [60], if \( g_{i}^{*}\left ({{v_{i}}} \right ) \) is the concave dual of \({\bar g_{i}}\left ({{z_{i}}} \right )\), we define,

The minimum of (22) can be obtained by solving the following equation,

Secondly, we present a simple convex relaxation of (20),

Therefore, we can summarize the multistage convex relation algorithm as Algorithm 1.

3 The related work

Numerous previous methods have been developed for image denoising in the past decades, and most of these works can be classified as two types methods, namely, deep learning Neural Network based method and regularization optimization based method.

3.1 Deep neural networks for image denoising

Several deep neural networks have been attempted to handle image noise reduction and image enhancement. Scientific scholars used sparse representation (SR) to deal with various image computer vision and image processing assignments in the past few years. In [69], the authors used sparse denoising auto-encoders technique to handle GN removal and obtained satisfactory results compared to K-SVD [25]. The multilayer perceptron (MLP) was victoriously used for image noise reduction in [10]. Convolutional neural networks (CNNs) for image noise removal has been used by Kingma and Ba [40], which have achieved comparable results. In [16] a trainable nonlinear reaction diffusion (TNRD) model was proposed which can be expressed as a feed-forward deep network by unfolding a fixed number of gradient descent inference steps. In [27] a trainable nonlinear reaction diffusion (TNRD) model for passion noise is proposed and achieves promising performance. In [37] pulse-coupled neural network (PCNN)-based image enhancement and color transfer algorithms are combined to enhance the underwater image, which make some contribution for deep-sea exploration businesses. Kai Zhang and Lei Zhang et. [75] used residual learning and batch normalization to speed up the training process as well as boost the denoising performance. To the best of our knowledge, it remains uninvestigated to develop CNN for general image denoising.

3.2 Regularization optimization for image noise reduction

Traditional regularization optimization is still an active method in image processing and computer vision tasks, and most of these works use different regularization terms about the prior information to constraint image u. If an observed image u is corrupted with GN only, the noise distribution can be fitted successfully by the L2-norm of the data fidelity term, thus we can solve the following optimization problem to remove advantageously the GN,

here, R (u) is a regular function. If we formulate the Gaussian model as a MAP estimation, we can obtain the following general form,

here, p (u) = R (u). Usually, we assume that u follows a Gibbs prior [3],

here, ϕ is a nonnegative function. Let ϕ (u (x)) = ∥∇u (x)∥1, ∇u (x) = (∇xu,∇yu) is the gradient of u, and ∥∇u (x)∥1 is the total variation (TV) of u in [62].

The TV-L1 model [13] is obtained as a variant of the ROF model by replacing the squared-norm in the data fidelity term by the robust-norm. Although this was only a slight change, the TV-L1 model has some potential advantages over the ROF model. For example, 1) the TV-L1 model contrasts in variant; and 2) the TV-L1 model is much more effective in removing strong impulse noise (e.g., salt- and pepper noise). Because the Mumford-Shah (MS) model [49] is non-convex, therefore, it is difficult to solve the functional minimizer. To explore the true implicit solution of the MS function, Cai et al. [12] proposed the following equivalence model,

here, γ1 and α are positive weight parameters.

Because the TV-L1 model is more effective than the MS model at removing strong impulse noise (e.g., salt-and pepper noise), the L1-data fidelity term ∥f − Au∥1 plays an important role in the processing. The L1 data fidelity term has been extensively studied in [1, 51, 52]. Some researchers proposed the following model which is based on the TV-L1 model to remove strong impulse noise.

To remove both GN and IN [38], a combined L1-L2 data fidelity terms were suggested by Shi, who combined (28) and (29) to obtain [43].

here, α > 0, γ1 ≥ 0, and γ2 ≥ 0 are parameters that balance the data fidelity terms and the regularization terms.

Currently, the patch-based nonlocal low rank regularization was shown to have better performance than the nuclear norm regularization in image reconstruction [22, 24, 34, 39, 41, 42]. Because the rank minimization problem is the key subproblem in patch-based nonlocal rank regularization methods, and it can be approximately solved by minimizing the following optimization problem [24].

here, u ∈ Rn×n is a symmetric positive semidefinite matrix, I is the identity matrix. In [58], Osher et al. proposed a novel low dimensional manifold model (LDMM), not only applied it to image noise reduction, but also achieved successful accomplishment. Tao et al. [64] used Manifold Ranking-Based Matrix Factorization (MRMF) for Saliency Detection. In the paper, they proved that the MRMF has good generalizability, and developed an efficient optimization algorithm based on the Nesterov method for Saliency Detection.

4 Proposed nonconvex image denoising model and algorithms

Based on the Shi-Model [43], we propose the following image denosing model,

Using the Gibbs prior \(p\left (u \right ) = \exp \left ({ - \gamma \int \limits _{\Omega } {\phi \left ({u\left (x \right )} \right )} } \right )dx\), Shi let ϕ (u (x)) = ∥∇u (x)∥1, which resulted in [16]. To improve the image denoising effect, we define the Gibbs prior nonconvex regularizer as \(\int {\phi \left ({\left | {\nabla u} \right |} \right )} dx\), here, ϕ is a nonconvex function, namely,

for p ≥ 1.

Enven though the existence and uniqueness of solution of nonconvex regularizing optimization is still an open problem, which can preserve the more detail information of image during image processing. Therefore, we propose the following model (based on the Shi-model),

here, the Gibbs prior \(p\left (u \right ) = \exp \left ({ - \gamma \int \limits _{\Omega } {\phi \left ({u\left (x \right )} \right )} } \right )dx = \int {\phi \left ({\left | {\nabla u} \right |} \right )} dx\), and ϕ is the nonconvex function defined in (34).

In the following subsections, we present the optimization algorithm to solve the proposed model (35), which is based on a variable splitting technique [67]. To deal with the nonconvex regularizer, a multistage convex relaxation method is used.

We exploit the algorithmic framework of the variable splitting technique [67] to solve the unconstrained problem was described by (35). To obtain variable splitting, we introduce the auxiliary variables h and d under the constraints h = f − Au, ∇u = d subject to

Next, we exploit the algorithmic framework of the Split Bregman Iteration Method (SBIM) [33] to solve (36). The additional details of the SBIM algorithm are as follows,

here,

To solve the minimization problem (37), an alternative iteration algorithm is used. Specifically, the solution of minimization problem (uk+ 1, hk+ 1, dk+ 1) are defined as follows,

It is noteworthy that the alternative (SBIM) is equivalent to the alternative ADMM [7] when ϕ is convex.

4.1 u-subproblem

Due to (41), the optimal value of uk+ 1 must satisfy the following Euler-Lagrange equation,

We can use the Gauss-Seidel method to solve this equation as in [3]. Equation (44) may also be solved efficiently in the discrete Fourier domain if we assume periodic boundary conditions. If we define \(\mathcal {F}\left (u \right )\) as the Fourier transform of u, the close-form solution of (44) can be written as follows,

4.2 h-subproblem

Similarly, due to the minimization problem of (42), and we can get its approximate solution by the following shrinkage operator,

here, shrink (s,t) is the shrinkage operator [67], and which was defined at each point α ∈ [0,1]2 by

4.3 d-subproblem

Moreover, resembling to the u-subproblem, we can obtain dk+ 1 by solving the following nonconvex minimization problem,

here, ϕ is the nonconvex regularizer in (36), and \(s = \nabla {u^{k + 1}} + {b_{2}^{k}}\). To handle the nonconvex term, we apply the multistage convex relaxation technique, which was described above.Consequently, we directly use the result in [3]. Specifically, the solution of (48) can be easily obtained as follows,

In this paper, we decompose the difficult optimization problem (37) into three subproblems (the u, h, and d-subproblems) based on the SBIM. In addition, all the subproblems have fast and accurate techniques for obtaining the solutions. For example, the u-subproblem can be solved efficiently by using the FFT technique or Gauss-Seidel iteration method. Furthermore, the h-subproblem and d-subproblem can be resolved successfully through applying the shrinkage operator. Eventually, the optimization procedure is summarized in Algorithm 2.

5 Experimental results

In this section, we present the results of our application of a nonconvex and nonsmooth mixed noise removal algorithm to the image restoration problem. Firstly, we employ a genetic algorithm aims to select the optimal parameters of the model. Secondly, we compare the noise removal effects of other models on multiple different levels noise images.

Because the proposed model with regularizer is nonconvex and nonsmooth, therefore, we could not give analysis convergence of the algorithm with an increasing number of iteration steps (Fig. 1b). Accordingly, we use the number of iterations of the algorithm as our termination condition. Additionally, we calculate the relationship between the number of algorithm iteration steps and the optimal ISNR on 100 images and chose to terminate with 16 iterations (Fig. 1a).

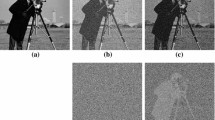

Moreover, we compare the models TVL1 [13], LRTM [20] and SHI [43] by applying them to several images, e.g., Parrots, Monarch, Boats, Cameraman, House and Lena (Fig. 2). Furthermore, we compare the models’ ISNR and PSNR values after the image was denoised. The peak signal to noise ratio (PSNR) and improvement signal to noise ratio (ISNR) were chosen as the quantitative measures of image quality.

We utilize the improvement in the signal-to-noise ratio (ISNR) and the Peak Signal to Noise Ratio (PSNR) to evaluate the effectiveness of all methods for removing the noise, and the two metrics can be defined as follows,

here, u∗∈ Rm×n is the clean image, \(\hat u \in {R^{m \times n}}\) is the restored image, and \(\tilde u \in {R^{m \times n}}\) is the contaminated image. Note that a high value (ISNR and PSNR) indicates a better restored result.

5.1 Parameter selection

There are a total of 5 required parameters in our algorithm, and there are two methods with which to select them. In the first method, the parameters were chosen by their experience. In the second method, one fixes the values of one or more of the parameters and varies the values of other parameters. We used a genetic algorithm to find the best parameters. In the proposed model, the parameters (a,b,c, and d) should be satisfied the following conditions, namely, a > 0, b < 0, and d > 0. Our goal is to choose one of the best points in the parameter space. Therefore, we chose our parameters to maximize the average of the ISNR and PSNR values on the training set P, and such that the objective function of the genetic algorithm is defined by,

here, pi is an image in training set, and a, b, c, and d are the model parameters that need to be selected. M represents the proposed model, and O is the objective function of genetic algorithm.

In the genetic algorithm, the number of chromosomes is 20, the heritability is 0.85, and the number of reproduction generation is 400. The following figure is the result of the parameter selection with 0.1 Gauss noise (Fig. 3).

According to the result of the operation, we choose the optimal parameters as follows, a = 0.01, b = 0.20,... It needs to be explained that in the following experiments, we used this same method to select the optimal parameters for different types of noise.

5.2 Comparison of pure noise experiments

In this subsection, we compare the denoising effects of the different models on pure noise images. Moreover, we compare Gauss noise with salt and pepper noise with the noise levels set at 0.01, 0.1, and 0.4, respectively. The denoising ISNR and PSNR results of the four models for different images are shown in Tables 1 and 2. The GN0.01 denoising visualization effect of Parrots, Monarch, Boats, Cameraman, House and Lena have been shown in Fig. 4. And The SP0.1 denoising visualization effect has been shown in Fig. 5.

The results in Tables 1 and 2 illustrate that our proposed model algorithm is superior to other models in terms of: running time, indices of Gaussian and salt-and-pepper noise images, and the local visualization quality after restoration.

5.3 Comparison of mixed noise experiments

In this subsection, in order to verify the robustness of the proposed model, we perform an experimental comparison on images with mixed noise. Where the mixed noise is a mixture of Gaussian noise and salt and pepper noise, the noise levels are GN0.01 + SP0.01, GN0.01+SP0.1, GN0.1+SP0.01, and GN0.1+SP0.1, respectively. The experimental results are shown in Table 3, and the effective images before and after denoising of the different models are shown in Fig. 5.

It can be seen from Table 3 that the proposed model algorithm is superior to the other three models in terms of running time and indexes on images with mixed noise, and the quality of local visualization after restoration is better than that of other models (Fig. 6).

6 Conclusion

In this paper, we proposed a nonconvex and nonsmooth regular image mixed noise removal algorithm based on the Shi model by solving the nonconvex minimization problem. we use the multistage nonconvex relaxation technique, which aims to deal with the nonconvex term. Furthermore, we employ a genetic algorithm to select the optimal parameters for the model and set the number of iteration steps as the termination condition for the algorithm. Several, experiments on different images with different noise levels illustrate that the model’s robustness, running time, ISNR and PSNR are better than the other three models. Additionally, our algorithm can maintain the local information of the images with better visual quality.

References

Alliney S (1997) A property of the minimum vectors of a regularizing functional defined by means of the absolute norm. IEEE Trans Signal Process 45(4):913–917

Aubert G, Kornprobst P (2006) Mathematical problems in image processing: partial differential equations and the calculus of variations, vol. 147. Springer Science & Business Media

Aubert G, Aujol J-F (2008) A variational approach to removing multiplicative noise. SIAM J Appl Math 68(4):925–946

Bar L, Chan TF, Chung G, Jung M, Kiryati N, Mohieddine R, Sochen N, Vese LA (2011) Mumford and shah model and its applications to image segmentation andimage restoration. In: Handbook of mathematical methods in imaging. Springer, pp 1095–1157

Blake A, Zisserman A (1987) Visual reconstruction. MIT Press, Cambridge

Bovik AC (2010) Handbook of image and video processing. Academic, New York

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J et al (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends®; Machine Learn 3(1):1– 122

Buades A, Coll B, Morel J-M (2005) A non-local algorithm for image denoising. In: IEEE computer society conference on computer vision and pattern recognition, 2005. CVPR 2005, vol 2. IEEE, pp 60–65

Buades A, Coll B, Morel J-M (2005) A review of image denoising algorithms, with a new one. Multiscale Model Simul 4(2):490–530

Burger HC, Schuler CJ, Harmeling S (2012) Image denoising: can plain neural networks compete with bm3d?. In: 2012 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, pp 2392–2399

Cai J-F, Chan RH, Nikolova M (2008) Two-phase approach for deblurring images corrupted by impulse plus gaussian noise. Inverse Probl Imaging 2(2):187–204

Cai X, Chan R, Zeng T (2013) A two-stage image segmentation method using a convex variant of the mumford–shah model and thresholding. SIAM J Imag Sci 6(1):368–390

Chan TF, Esedoglu S (2005) Aspects of total variation regularized l 1 function approximation. J SIAM Appl Math 65(5):1817–1837

Charbonnier P, Blanc-Féraud L, Aubert G, Barlaud M (1997) Deterministic edge-preserving regularization in computed imaging. IEEE Trans Image Process 6 (2):298–311

Chartrand R, Yin W (2008) Iterative reweighted algorithms for compressive sensing. Tech. Rep.

Chen Y, Pock T (2017) Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans Pattern Anal Mach Intell 39 (6):1256–1272

Chen Y, Yu W, Pock T (2015) On learning optimized reaction diffusion processes for effective image restoration. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5261–5269

Dabov K, Foi A, Katkovnik V, Egiazarian K (2007) Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Trans Image Process 16(8):2080–2095

Daubechies I, DeVore R, Fornasier M, Güntürk CS (2010) Iteratively reweighted least squares minimization for sparse recovery. Commun Pure Appl Math: A Journal Issued by the Courant Institute of Mathematical Sciences 63(1):1–38

Deng L, Zhu H, Li Y, Yang Z (2018) A low-rank tensor model for hyperspectral image sparse noise removal. IEEE Access 6:62120–62127

Dong W, Li X, Zhang L, Shi G (2011) Sparsity-based image denoising via dictionary learning and structural clustering. In: 2011 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, pp 457–464

Dong W, Shi G, Li X (2013) Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans Image Process 22(2):700–711

Dong W, Zhang L, Shi G, Li X (2013) Nonlocally centralized sparse representation for image restoration. IEEE Trans Image Process 22(4):1620–1630

Dong W, Shi G, Li X, Ma Y, Huang F (2014) Compressive sensing via nonlocal low-rank regularization. IEEE Trans Image Process 23(8):3618–3632

Elad M, Aharon M (2006) Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans Image Process 15(12):3736–3745

Esser E (2009) Applications of lagrangian-based alternating direction methods and connections to split bregman. CAM Report 9:31

Feng W, Qiao P, Chen Y (2018) Fast and accurate poisson denoising with trainable nonlinear diffusion. IEEE Trans Cybern 48(6):1708–1719

Figueiredo MA, Bioucas-Dias JM (2010) Restoration of poissonian images using alternating direction optimization. IEEE Trans Image Process 19(12):3133–3145

Garnett R, Huegerich T, Chui C, He W (2005) A universal noise removal algorithm with an impulse detector. IEEE Trans Image Process 14(11):1747–1754

Geman S, Geman D (1984) Stochastic relaxation, gibbs distributions, and the bayesian restoration of images. IEEE Trans Pattern Anal Mach Intell 6:721–741

Geman D, Reynolds G (1992) Constrained restoration and the recovery of discontinuities. IEEE Trans Pattern Anal Mach Intell 3:367–383

Geman D, Yang C (1995) Nonlinear image recovery with half-quadratic regularization. IEEE Trans Image Process 4(7):932–946

Goldstein T, Osher S (2009) The split bregman method for l1-regularized problems. SIAM J Imag Sci 2(2):323–343

Gu S, Zhang L, Zuo W, Feng X (2014) Weighted nuclear norm minimization with application to image denoising. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2862–2869

Han Y, Feng X-C, Baciu G, Wang W-W (2013) Nonconvex sparse regularizer based speckle noise removal. Pattern Recognit 46(3):989–1001

He B, Liao L-Z, Han D, Yang H (2002) A new inexact alternating directions method for monotone variational inequalities. Math Program 92(1):103–118

He K, Wang R, Tao D, Cheng J, Liu W (2018) Color transfer pulse-coupled neural networks for underwater robotic visual systems. IEEE Access 6:32850–32860

Hintermüller M, Langer A (2012) Subspace correction methods for a class of non-smooth and non-additive convex variational problems in image processing

Huang T, Dong W, Xie X, Shi G, Bai X (2017) Mixed noise removal via laplacian scale mixture modeling and nonlocal low-rank approximation. IEEE Trans Image Process 26(7):3171–3186

Jain V, Seung S (2009) Natural image denoising with convolutional networks. In: Advances in neural information processing systems, pp 769–776

Ji H, Huang S, Shen Z, Xu Y (2011) Robust video restoration by joint sparse and low rank matrix approximation. SIAM J Imag Sci 4(4):1122–1142

Ji H, Liu C, Shen Z, Xu Y (2010) Robust video denoising using low rank matrix completion

Jia T, Shi Y, Zhu Y, Wang L (2016) An image restoration model combining mixed L1/L2 fidelity terms. J Vis Commun Image Represent 38:461–473

Jiang J, Zhang L, Yang J (2014) Mixed noise removal by weighted encoding with sparse nonlocal regularization. IEEE Trans Image Process 23(6):2651–2662

Jung M, Kang M (2015) Efficient nonsmooth nonconvex optimization for image restoration and segmentation. J Sci Comput 62(2):336–370

Li SZ (1994) Markov random field models in computer vision. In: European conference on computer vision. Springer, pp 361–370

Liu L, Chen L, Chen CP, Tang YY et al (2017) Weighted joint sparse representation for removing mixed noise in image. IEEE Trans Cybern 47(3):600–611

Mairal J, Bach F, Ponce J, Sapiro G, Zisserman A (2009) Non-local sparse models for image restoration. In: 2009 IEEE 12th international conference on computer vision. IEEE, pp 2272–2279

Mumford D, Shah J (1989) Optimal approximations by piecewise smooth functions and associated variational problems. Commun Pure Appl Math 42(5):577–685

Nikolova M (1999) Markovian reconstruction using a gnc approach. IEEE Trans Image Process 8(9):1204–1220

Nikolova M (2002) Minimizers of cost-functions involving nonsmooth data-fidelity terms. application to the processing of outliers. SIAM J Numer Anal 40(3):965–994

Nikolova M (2004) A variational approach to remove outliers and impulse noise. J Math Imaging Vision 20(1–2):99–120

Nikolova M (2005) Analysis of the recovery of edges in images and signals by minimizing nonconvex regularized least-squares. Multiscale Model Simul 4(3):960–991

Nikolova M, Chan RH (2007) The equivalence of half-quadratic minimization and the gradient linearization iteration. IEEE Trans Image Process 16(6):1623–1627

Nikolova M, Ng MK, Zhang S, Ching W-K (2008) Efficient reconstruction of piecewise constant images using nonsmooth nonconvex minimization. SIAM J Imag Sci 1(1):2–25

Nikolova M, Ng MK, Tam C-P (2010) Fast nonconvex nonsmooth minimization methods for image restoration and reconstruction. IEEE Trans Image Process 19 (12):3073–3088

Ochs P, Dosovitskiy A, Brox T, Pock T (2013) An iterated l1 algorithm for non-smooth non-convex optimization in computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1759–1766

Osher S, Shi Z, Zhu W (2017) Low dimensional manifold model for image processing. SIAM J Imag Sci 10(4):1669–1690

Robini MC, Lachal A, Magnin IE (2007) A stochastic continuation approach to piecewise constant reconstruction. IEEE Trans Image Process 16(10):2576–2589

Rockafellar R (1997) Convex analysis, Princeton University Press, Princeton, 1970. MATH Google Scholar

Roth S, Black MJ (2009) Fields of experts. Int J Comput Vis 82(2):205–229

Rudin LI, Osher S, Fatemi E (1992) Nonlinear total variation based noise removal algorithms. Physica D: Nonlinear Phenomena 60(1–4):259–268

Shao L, Yan R, Li X, Liu Y (2014) From heuristic optimization to dictionary learning: a review and comprehensive comparison of image denoising algorithms. IEEE Trans Cybern 44(7):1001–1013

Tao D, Cheng J, Song M, Lin X (2016) Manifold ranking-based matrix factorization for saliency detection. IEEE Trans Neural Netw Learn Syst 27(6):1122–1134

Teboul S, Blanc-Feraud L, Aubert G, Barlaud M (1998) Variational approach for edge-preserving regularization using coupled pdes. IEEE Trans Image Process 7(3):387–397

Vogel CR, Oman ME (1998) Fast, robust total variation-based reconstruction of noisy, blurred images. IEEE Trans Image Process 7(6):813–824

Wang Y, Yang J, Yin W, Zhang Y (2008) A new alternating minimization algorithm for total variation image reconstruction. SIAM J Imag Sci 1(3):248–272

Xiao Y, Zeng T, Yu J, Ng MK (2011) Restoration of images corrupted by mixed gaussian-impulse noise via l1–l0 minimization. Pattern Recognit 44(8):1708–1720

Xie J, Xu L, Chen E (2012) Image denoising and inpainting with deep neural networks. In: Advances in neural information processing systems, pp 341–349

Yan M (2013) Restoration of images corrupted by impulse noise and mixed gaussian impulse noise using blind inpainting. SIAM J Imag Sci 6(3):1227–1245

Yan R, Shao L, Liu Y (2013) Nonlocal hierarchical dictionary learning using wavelets for image denoising. IEEE Trans Image Process 22(12):4689–4698

Zhang T (2010) Analysis of multi-stage convex relaxation for sparse regularization. J Mach Learn Res 11:1081–1107

Zhang X, Lu Y, Chan T (2012) A novel sparsity reconstruction method from poisson data for 3d bioluminescence tomography. J Sci Comput 50(3):519–535

Zhang H, Yang J, Zhang Y, Huang TS (2013) Image and video restorations via nonlocal kernel regression. IEEE Trans Cybern 43(3):1035–1046

Zhang K, Zuo W, Chen Y, Meng D, Zhang L (2017) Beyond a gaussian denoiser: residual learning of deep cnn for image denoising. IEEE Trans Image Process 26(7):3142–3155

Acknowledgments

Funding were provided by the Natural Science Foundation of China under Grant NO.61361126011, No. 90912006; the Special Project of Informatization of Chinese Academy of Sciences in “the Twelfth Five-Year Plan” under Grant No. XXH12504-1-06, Science and Technology Service Network Initiative, CAS, (STS Plan); he IT integrated service platform of Sichuan Wolong Natural Reserve, under Grant No. Y82E01; The National R&D Infrastructure and Facility Development Program of China, “Fundamental Science Data Sharing Platform” (DKA2018-12-02-XX); Supported by the Strategic Priority Research Program of the Chinese Academy of Sciences, Grant No. XDA19060205; the Special Project of Informatization of Chinese Academy of Sciences (XXH13505-03-205); the Special Project of Informatization of Chinese Academy of Sciences (XXH13506-305); the Special Project of Informatization of Chinese Academy of Sciences (XXH13506-303); Supported by Around Five Top Priorities of “One-Three-Five” Strategic Planning, CNIC(Grant No. CNIC_PY-1408); Supported by Around Five Top Priorities of “One-Three-Five” Strategic Planning, CNIC(Grant No. CNIC_PY-1409) The authors wish to gratefully thank all anoymous reriewers who provided insightful and helpful comments.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, C., Li, Y., Zhao, Z. et al. A mixed noise removal algorithm based on multi-fidelity modeling with nonsmooth and nonconvex regularization. Multimed Tools Appl 78, 23117–23140 (2019). https://doi.org/10.1007/s11042-019-7625-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-019-7625-1