Abstract

Forest landscape modeling encompasses many core principles of landscape ecology: spatial resolution and extent, spatially explicit local and regional context, disturbance dynamics, integration of human activity, and explicit links to management and policy. Models of forest change inform land managers about strategies to adapt to the effects of an altered or changing environment across large, forested landscapes. Despite past successes, major challenges remain for landscape ecologists representing the dynamics of complex systems with a computer model, particularly given climate change. Here, I review major modeling challenges unique to climate change and suggest paths forward as climate change increasingly becomes a focus of landscape modeling efforts.


Introduction

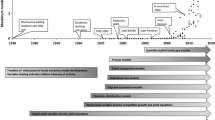

Forest landscape modeling has been a pillar of landscape ecology (Baker 1989; Scheller and Mladenoff 2007; Gustafson 2013). Forest landscape modeling engages many core principles of landscape ecology: spatial resolution and extent, spatially explicit local and regional context, disturbance dynamics, integration of human activity, and explicit links to management and policy. Forest landscape modeling has also improved dramatically over time. Among the improvements, model spatial and temporal resolution have increased considerably, as have the number and complexity of the ecological processes incorporated. For example, 15 years ago a study typically included only one or two processes (fire, wind, or harvesting). Today, we can access large libraries of processes from which to choose.

Perhaps rightly so, then, many landscape and forest ecologists view forest landscape modeling as a success story. These models inform land managers about strategies to adapt to the effects of an altered or changing environment across large, forested landscapes.

Despite our successes, major challenges remain for landscape ecologists representing complex systems within a computer model, particularly given climate change. Some challenges are not unique to climate change, but are inherent to any attempt to capture the dynamics of highly complex systems. For example, many (or most?) ecological systems are constantly in flux (‘non-stationary’) due to human pressures on landscapes (including climate change) and, as a result, environmental changes outpace ecological resilience. Indeed, these changes are happening faster than our capacity to measure, model, and assess them. Other universal challenges include non-linear dynamics, higher-order interactions among processes, and validating the myriad components.

Some challenges are unique to climate change; these are detailed below. Though I draw from my experience as a forest ecologist, most issues are applicable to other ecosystems. Uncertainty is a common theme throughout. For example, climate uncertainty and the need to manage for restoration under climate change complicate forecasting for the Everglades (Obeysekera et al. 2015). In agricultural systems, the introduction and spread of novel pests and pathogens synchronous with climate change may be the largest source of uncertainty (Rosenzweig et al. 2001). I present these challenges in roughly the order of activities in a typical study that seeks to project forest landscape changes.

Defining forecasting goals

Our biggest challenge may be defining our goals. Is landscape forecasting a reasonable goal, considering the large climatic uncertainties and the high rate of change? Can we learn about the future fast enough to positively influence policy choices?

Goals for forecasting forest response to climate change typically include broad assessments of potential futures (‘How will community composition change if the climate changes by 5 °C?’) and reflect the information needs of forest managers (‘How should forest managers alter their harvesting practices if the climate changes?’) (Millar et al. 2007; Puettmann et al. 2013). Goals can also include testable hypotheses, though this is less common. Good hypotheses address possible shifts in community composition (e.g., dominance by r-selected tree species), the relative importance of different drivers (e.g., comparing the effects of climate vs. disturbance vs. human actions), or the relative importance of different demographic processes (e.g., mortality vs. establishment, colonization vs. competition) under climate change (Xu et al. 2012).

Goals determine the compromises among accuracy, realism, and generality that can be achieved by any particular model (Levins 1966). Within forest modeling, realism is often equated with fine-scale mechanistic detail. Examples include ecophysiological representation of photosynthesis (Aber and Federer 1992) and flame length as a function of sub-canopy micro-climate (Rothermal 1972). Such ‘realism’ may be superficially desirable but often incurs a high parameterization cost (‘realism requires more reality’, as my PhD advisor David Mladenoff always said). Extensive parameterization limits spatial extent and may necessitate the exclusion of critical processes that operate at larger spatial scales, thereby self-limiting ‘realism’. Thus, determining an appropriate degree of process complexity and of realism or mechanistic detail in a forest landscape model is non-trivial, and the rationale for the tradeoff should be discussed.

Improved forecasting accuracy is often an implicit goal. But researchers must also strive for forecasts that improve our understanding of the drivers of change. Regression or machine learning algorithms (‘statistical models’) may produce highly accurate near-term (< 10 years into the future) predictions, but do not illuminate the underlying processes. For example, we could model how forests have changed in the past using machine learning with relatively high accuracy without advancing our understanding of why they changed.

Elucidating universal patterns or rules (‘generality’) is another common goal. Landscape managers typically seek high local accuracy, while landscape ecologists seek universal patterns. But generality may be unnecessary. Ultimately, the research questions should drive choices about generality. Do we seek generality for generality’s sake? Unless specific goals or hypotheses require generality (a cross-landscape comparison, for example), local accuracy with limited generality may be acceptable. On the other hand, if an implicit goal is to save resources (i.e., funding), using a general model is also acceptable, but the tradeoff should be acknowledged.

Model formulation

Model formulation is the process of developing a conceptual model, independent of the construction or implementation of the model. Model formulation includes important decisions about which ecological or social processes to include, and the mathematical or logical rules that determine model behavior.

As we enter an era of “no-analog” forest ecosystems (Williams et al. 2007), species dynamics will be substantially altered, reducing the accuracy of models formulated from current or recent historical relationships. For example, the relationship between climate and forests is generally lagged, or non-equilibrium, because trees have long life-spans; today’s forests reflect the climate when they were established (Overpeck et al. 1990). Statistical models derived from equilibrium assumptions will therefore become less robust under climate change. When forecasting longer-term (> 20 years) change, statistical models will lose accuracy because they do not incorporate changes to the data-generating processes (Evans 2012).
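The loss of accuracy under a changed data-generating process can be illustrated with a toy example. Everything in the sketch below is hypothetical: the `growth` function, its 13 °C threshold, and the noise level are invented for illustration and are not drawn from any forest dataset. A straight line is fitted by ordinary least squares to ‘historical’ temperatures that never cross the threshold, then used to predict a warmer ‘future’ in which the process has shifted regime.

```python
import random
import statistics


def growth(temp, threshold=13.0):
    """Hypothetical process: growth rises with temperature up to a threshold,
    then declines (e.g., drought stress), a regime absent from the historical data."""
    if temp <= threshold:
        return 2.0 * temp
    return 2.0 * threshold - 1.5 * (temp - threshold)


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def rmse(pred, obs):
    """Root-mean-square error between predictions and observations."""
    return (sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)) ** 0.5


def demo(seed=42):
    """Fit on historical data, evaluate on a warmer future.
    Returns (in-sample RMSE, out-of-sample RMSE, fitted slope)."""
    rng = random.Random(seed)
    hist_t = [rng.uniform(5.0, 12.0) for _ in range(200)]
    fut_t = [rng.uniform(14.0, 18.0) for _ in range(200)]
    hist_y = [growth(t) + rng.gauss(0.0, 0.5) for t in hist_t]
    fut_y = [growth(t) + rng.gauss(0.0, 0.5) for t in fut_t]
    slope, intercept = fit_line(hist_t, hist_y)
    in_sample = rmse([intercept + slope * t for t in hist_t], hist_y)
    out_of_sample = rmse([intercept + slope * t for t in fut_t], fut_y)
    return in_sample, out_of_sample, slope
```

The in-sample error stays near the noise level, whereas the out-of-sample error is many times larger, because nothing in the historical data reveals the threshold.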

By comparison, a process-oriented approach may better capture the emergent dynamics expected with climate change. A process-oriented approach resolves an ecosystem into its constituent components and their interactions. For example, instead of modeling succession as species and structural change correlated with time, a process-oriented model could simulate regeneration, competition, facilitation, and dispersal; these individual processes would interact and succession would emerge from the contribution of each process. Such an approach could incorporate climate change effects (particularly via regeneration) and subsequent species dynamics, improving our understanding of succession and adaptive management (Millar et al. 2007; Nitschke and Innes 2008; Puettmann et al. 2013). The evolution of our conceptual models highlights how model formulation contributes to uncertainty (Higgins et al. 2003).
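To make the idea concrete, here is a deliberately tiny, hypothetical process-oriented model. Nothing in it comes from any published forest landscape model: the three species, their traits, the Gaussian climate-suitability curve, and the shading rule are illustrative assumptions. Succession is never coded as a sequence of stages; instead, mortality, dispersal from neighboring cells, climate-limited establishment, and competition for light interact, and changes in composition emerge from those processes. Warming enters only through regeneration.

```python
import math
import random
from collections import Counter

# Hypothetical species traits (illustrative values only, not a real parameterization).
SPECIES = {
    "pioneer": {"optimum_temp": 8.0, "shade_tolerance": 1, "longevity": 80},
    "mid_seral": {"optimum_temp": 11.0, "shade_tolerance": 3, "longevity": 200},
    "late_seral": {"optimum_temp": 7.0, "shade_tolerance": 5, "longevity": 400},
}
NICHE_WIDTH = 3.0  # hypothetical climatic niche breadth (deg C)
STEP_YEARS = 10    # length of one time step (years)


def establishment_prob(species, temp):
    """Regeneration: Gaussian climate suitability in [0, 1]."""
    opt = SPECIES[species]["optimum_temp"]
    return math.exp(-((temp - opt) ** 2) / (2 * NICHE_WIDTH ** 2))


def seed_sources(grid, r, c):
    """Dispersal: species occupying the 8 neighboring cells (no edge wrapping)."""
    n = len(grid)
    found = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if (dr or dc) and 0 <= rr < n and 0 <= cc < n and grid[rr][cc] is not None:
                found.append(grid[rr][cc])
    return found


def step(grid, temp, rng):
    """One synchronous update; succession is not coded directly but emerges."""
    new = [row[:] for row in grid]
    for r, row in enumerate(grid):
        for c, occupant in enumerate(row):
            # Mortality: constant per-step hazard, inversely related to longevity.
            if occupant is not None and rng.random() >= STEP_YEARS / SPECIES[occupant]["longevity"]:
                continue  # the occupant survives this step
            sources = seed_sources(grid, r, c)
            # Regeneration: each locally dispersed species establishes with a
            # climate-dependent probability (sorted so runs are reproducible).
            established = [sp for sp in sorted(set(sources))
                           if rng.random() < establishment_prob(sp, temp)]
            if not established:
                new[r][c] = None
                continue
            # Competition for light: a closed neighborhood favors shade-tolerant
            # species; an open one favors shade-intolerant species.
            shaded = len(sources) >= 5
            fit = {sp: SPECIES[sp]["shade_tolerance"] if shaded
                   else 6 - SPECIES[sp]["shade_tolerance"] for sp in established}
            best = max(fit.values())
            new[r][c] = rng.choice([sp for sp in established if fit[sp] == best])
    return new


def simulate(n=20, steps=30, start_temp=7.0, warming_per_step=0.2, seed=1):
    """Run the toy model under a linear warming trajectory; return species cell counts."""
    rng = random.Random(seed)
    names = sorted(SPECIES)
    grid = [[rng.choice(names) for _ in range(n)] for _ in range(n)]
    temp = start_temp
    for _ in range(steps):
        grid = step(grid, temp, rng)
        temp += warming_per_step
    return Counter(sp for row in grid for sp in row if sp is not None)
```

Comparing `simulate()` with `simulate(warming_per_step=0.0)` shows how the same processes can yield a different composition when only the climate driver changes. A realistic model would also need cohort ages, biomass, explicit light, and many more processes.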

Yet despite our best intentions, no forest landscape model is built entirely from processes: we do not have the knowledge or computing power to simulate any ecosystem based only on “first principles” (Gustafson 2013). All models incorporate statistical relationships (e.g., between photosynthetic rate and leaf nitrogen), but they do so at a scale that allows novel and emergent behaviors within our forecasts. The challenge is to select the best overall compromise between present-day accuracy (as demonstrated via validation, see below) and the flexibility to accommodate climate effects.

Further challenging our model formulations are process interactions (e.g., how forest fire and insect outbreaks interact spatially and temporally) and how climate change will transform them (Buma and Wessman 2011; Keane et al. 2015). Process interactions are particularly sensitive to the disparity of scales at which different processes operate. If we capture only one or two scales of process interactions, whether fine-scale interactions (e.g., between neighboring trees), meso-scale processes (disturbance regimes), or very broad-scale climate effects (shifting biomes), we may miss critical process interactions. For example, focusing only on broad-scale biome shifts (migration) or fine-scale neighborhood interactions neglects changes in disturbance severity that may ultimately determine species composition. Likewise, when focusing on meso-scale and larger processes, it is important to capture fine-scale heterogeneity (e.g., “climate refugia” or “stepping stones”; Hannah et al. 2014) to estimate the ability of rare species to colonize locally.

Finally, we need to focus more model development resources on human adaptation to climate change (Millar et al. 2007). Humans are the dominant disturbance across nearly all landscapes (Masek et al. 2011; Crowther et al. 2015), and humans are unlikely to be passive observers of change. Much effort to date has focused on ‘ecological forecasting’ (Clark et al. 2001), leaving out human responses to changing climates and landscapes. As an example, I often simulate forest management actions (e.g., Scheller et al. 2018), but these actions either respond only obliquely to climate change (e.g., they are informed by shifting species distributions) or their response to climate change is assumed (defined within scenarios); the response does not represent emergent or novel changes in human behavior. This approach does not capture dynamic human response to a changing climate. Human response to climate-induced change may happen individually (e.g., a landowner planting climate-adapted tree species) or may be mediated by organizations. Depending on the local context, human adaptation can be represented by agent-based models that reflect the heterogeneity of individual responses (Spies et al. 2017). More broadly, human adaptation should be considered when formulating any process. For example, fire modeling should represent intensified suppression efforts, a logical management response to increased risk, as a potential adaptation to climate change (Scheller et al. in prep.).

Model construction

Model construction is the process of implementing conceptual models: turning ideas into executables. Every conceptual model has innumerable possible implementations, dependent upon programming language, design (e.g., object-oriented programming), conventions, and individual style. Twenty years ago, computer code was often a jealously guarded resource. Today, open-source standards and tools encourage broader sharing and interoperability. Nevertheless, we must continually guard against unnecessary and counter-productive model ‘balkanization.’ For example, all forest models require spatial data as inputs, and shared libraries (e.g., the Geospatial Data Abstraction Library) now help manage spatial data. Such libraries should be extended to include spatial interactions (how individual cells or pixels share information with their neighbors or neighborhood). If models are built on common libraries or platforms, components could more readily be exchanged, and ecologists could focus more on the science and less on the implementation (i.e., coding and architecture). Although model platforms have been developed, e.g., SELES (Fall and Fall 2001), they are often encapsulated within a higher-level programming language that limits process representation. In contrast, program libraries written in a lower-level computer language (e.g., C or C++) can be operating-system agnostic and easily wrapped for use with many other languages. Shared libraries also allow more frequent cross-scale model synthesis and comparison, which helps researchers identify areas of agreement and highlight model strengths and weaknesses.
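As an illustration of the kind of spatial-interaction primitive such a shared library might expose, the sketch below defines a generic neighborhood iterator and a focal operation over a raster stored as nested lists. The function names and signatures are hypothetical and are not part of GDAL or SELES; a production library would more likely be written in C or C++ and operate on large arrays, as the paragraph suggests.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Grid = List[List[float]]


def neighborhood(row: int, col: int, nrows: int, ncols: int,
                 radius: int = 1, shape: str = "moore") -> Iterator[Tuple[int, int]]:
    """Yield in-bounds neighbor coordinates of (row, col), excluding the focal cell.
    shape="moore" uses a square window; "von_neumann" a diamond (Manhattan distance)."""
    if shape not in ("moore", "von_neumann"):
        raise ValueError(f"unknown neighborhood shape: {shape}")
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr == 0 and dc == 0:
                continue
            if shape == "von_neumann" and abs(dr) + abs(dc) > radius:
                continue
            r, c = row + dr, col + dc
            if 0 <= r < nrows and 0 <= c < ncols:
                yield r, c


def focal(grid: Grid, reducer: Callable[[Sequence[float]], float],
          radius: int = 1, shape: str = "moore") -> Grid:
    """Apply `reducer` to each cell's neighbor values; returns a new grid."""
    nrows, ncols = len(grid), len(grid[0])
    out: Grid = []
    for r in range(nrows):
        out_row = []
        for c in range(ncols):
            values = [grid[rr][cc] for rr, cc in neighborhood(r, c, nrows, ncols, radius, shape)]
            out_row.append(reducer(values))
        out.append(out_row)
    return out
```

For example, `focal(seed_map, sum)` would count seed-producing neighbors for every cell, and the same `neighborhood` iterator could drive dispersal, fire spread, or insect movement, which is the kind of reuse a common library would enable.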

We also need increased communication across model scales and groups. As model complexity has grown over the decades, the enterprise has become increasingly specialized with distinct communities forming around scales or assumptions or platforms and technologies. Not only does this ultimately lead to duplication of effort, it compounds our inability to communicate with managers and train students. We need to seek out more opportunities to collaborate across scales (e.g., working from global to regional to landscape scales) and to train our students to think at multiple scales.

Parameterization

Parameterization is the process of estimating parameters for all the processes and statistical relationships within a model, including current conditions. Parameterization is one of the greatest challenges for modeling generally and for climate change forecasting in particular.

First, we lack consistent data about the future. Many emissions scenarios and 10+ global circulation models project climate change, and countless other models project interrelated processes, such as land use change, N deposition, and management. Climate change will also produce novel events for which projections do not exist, such as insect outbreaks, invasive species introductions, and human actions. Uncertainty pervades all these model inputs. Even commonly understood inputs, such as the shade tolerance of a tree species, contain uncertainty due to genetic variation and phenotypic plasticity.

Parameterization can be improved via collaboration with experimental ecologists and long-term research sites (Kretchun et al. 2016). Estimating initial conditions—for example, the demographics and spatial distribution of tree species or the distribution of soils across a landscape—has improved considerably (Zald et al. 2014; Duveneck et al. 2015), and this higher initial accuracy improves long-term projections.

We need to reduce parameter uncertainty across all scales. Until recently, landscape ecologists reduced parameter uncertainty by emphasizing the ‘focal scale’: the scale for which a hypothesis or problem was formulated. But climate change challenges the concept that a single focal scale will be sufficient. As an example, early successional processes are complex and highly sensitive to initial conditions and therefore contain large uncertainty (Scheller and Swanson 2015). Consequently, we cannot ignore critical fine-scale processes: seed rain density, competition for light, available soil water, nutrients, and seedling herbivory. Including finer-scale processes won’t require that we accurately count the number of stomates per leaf (the proverbial hobgoblin of forest modeling) but rather that our models are sensitive to emerging data and questions. Achieving accuracy across a wide range of scales (sensitive to local influences, applicable to broad landscapes) will require sophisticated computational approaches to estimate local conditions across broad areas (e.g., Seidl et al. 2012).

An increased capacity to assimilate data is therefore required. Data assimilation is an ever-expanding challenge and opportunity (Luo et al. 2011). In numerous domains, more and more data are becoming widely and freely available, with sufficient metadata and in standardized formats. In particular, spatial data availability has grown tremendously, with more convenient access (e.g., Google Earth Engine). Substantial investments are nonetheless necessary. Often, initial funding is provided for model formulation and construction, but securing adequate funding to maintain and upgrade existing models to assimilate new data is a challenge that grows as model complexity and data needs increase. Given the current state of funding, we need a cultural shift towards open-source and exchangeable tools for data assimilation, parallel to the changing culture of model construction.

Model validation

The flip side of parameterization is validation: How well do model projections compare to independent, empirical data? If we are focused on longer-term emergent behaviors (the most interesting), we must wait (or build a time machine) to discover whether our model behaves in a reasonable fashion. Model forecasts or projections of the effects of climate change cannot be fully validated (Brown and Kulasiri 1996; Gardner and Urban 2003; Araujo et al. 2005). Back-casting (e.g., Ray and Pijanowski 2010) is often suggested as a solution, but presents its own problems (e.g., lack of sufficient climate data or data about the initial conditions).

Our inability to validate forecasts could be regarded as a total failure of the modeling enterprise. Why should anyone trust un-validated results? But validation is only one dimension of building confidence in forecasts. Perhaps it is the “gold standard” of evidence, much as double-blind, randomized trials are to clinical medicine. However, as we know from medicine, the gold standard is not always an option (in their case, due to ethical concerns). Instead of hand-wringing over validation, I advocate redefining the basis for discerning the quality of model results. Rather than strict validation, we need to emphasize the role of confidence building when evaluating models. We all know that all models are wrong (and some are useful) (Box 1976). But how do we provide a more useful narrative to help non-modelers discern the quality of model results?

I suggest a multi-pronged approach to building model confidence: (1) Validation of model components where possible: Were the model components compared against empirical data? Space-for-time substitution is often a necessary step towards component validation. (2) Has the model undergone rigorous sensitivity testing? Sensitivity testing provides critical information about which parameters have the largest influence on model results. Why invest in improving unimportant parameters? (3) Model transparency: Is model code open for public review? Is the code well documented, both internally and via accessible documentation? (4) Is the model robust and verified? Modern software engineering practices are critical for ensuring reliability (Scheller et al. 2010).
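Point (2) can be illustrated with a minimal one-at-a-time (local) sensitivity analysis. The `toy_biomass` function and its three parameters are hypothetical stand-ins for a full model run. Each parameter is perturbed by ±10% around a baseline while the others are held fixed, and the resulting elasticity (relative change in output per relative change in input) is used to rank parameters. One-at-a-time screening ignores parameter interactions, and global approaches such as variance-based methods are usually preferable for complex models, so treat this only as a sketch of the idea.

```python
from typing import Callable, Dict, List

Params = Dict[str, float]

# Hypothetical baseline parameter values (illustrative only).
BASELINE: Params = {"anpp": 500.0, "mortality": 0.02, "fire_freq": 0.01}


def toy_biomass(p: Params) -> float:
    """Hypothetical stand-in for a model run: equilibrium biomass as
    productivity divided by the total loss rate."""
    return p["anpp"] / (p["mortality"] + p["fire_freq"])


def oat_sensitivity(model: Callable[[Params], float], base: Params,
                    rel_change: float = 0.1) -> Dict[str, float]:
    """Central-difference elasticity of model output to each parameter,
    perturbing one parameter at a time by +/- rel_change."""
    y0 = model(base)
    elasticity = {}
    for name, value in base.items():
        up = {**base, name: value * (1 + rel_change)}
        down = {**base, name: value * (1 - rel_change)}
        elasticity[name] = (model(up) - model(down)) / y0 / (2 * rel_change)
    return elasticity


def rank(elasticity: Dict[str, float]) -> List[str]:
    """Parameters ordered from most to least influential (by absolute elasticity)."""
    return sorted(elasticity, key=lambda k: abs(elasticity[k]), reverse=True)
```

For this toy model, `rank(oat_sensitivity(toy_biomass, BASELINE))` places productivity first and fire frequency last, suggesting where parameterization effort would pay off most.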

I intentionally exclude the often applied ‘reasonable behavior’ (also known as the ‘face validity’ or ‘passes the sniff test’) whereby model performance is assessed based on whether the model generally behaves ‘as expected’ based on criteria informed by the education or experience of the modeler or the forest manager. Under a changing climate, however, the unexpected or surprising results may be more accurate and the validity of personal experience is expected to decline. Together these criteria can help users and policy makers place a model along the continuous spectrum from fully validated (not possible given climate change) to completely speculative.

Parameterization and ‘confidence building’ represent the bulk of the work ahead of us. Creating new models is relatively easy by comparison. Climate change makes our work ever more difficult, and ever more exciting!

Model application and communication

Specific challenges are inherent to applying, interpreting, and communicating results when forecasting forest change under climate change. The most difficult component of model application, in my experience, is managing expectations. Managers often engage in the modeling process, sometimes under duress or obligation, without a clear understanding of the goals and tradeoffs. They often overestimate the predictive capacity of models and underestimate the large uncertainty introduced by climate change.

A false divide separates “believers” and “opponents” regarding projections based on simulation models (Aber 1997). Modeling is not the province of a few isolated computer enthusiasts. Rather, modeling (in the sense of abstraction and simplification) is inherent to the scientific process. Nevertheless, more education is needed before people become indoctrinated into a particular viewpoint towards models (either frivolous distractions or miraculous soothsayers). Fortunately, in my experience, models are rapidly becoming accepted as critical tools in the management and policy-making toolbox. Any lingering mistrust is our fault for poorly communicating model purpose (see above).

A central challenge is clearly communicating the uncertainty of climate change projections. We already ask managers to absorb enormous amounts of complex information, and dense graphics and statistics squander audience goodwill. Graphs copied from our publications are often presented, but these depictions of uncertainty are often not accessible to the public and policymakers. Spatial uncertainty is underrepresented, and local land managers subsequently over-interpret the results for areas they know well.

We can avoid model misuse (‘do no harm’) by clearly communicating the purpose for each model and application. Researchers should guide the intended users as to how the results should be used and do so early and beyond the peer-reviewed literature, which managers rarely find time to read. We must clearly convey the purpose and limitations of each model and each model application: Does the model emphasize near-term landscape change for decision support? Should model results be used in conjunction with scenarios? Or is the model most useful for clarifying how processes will change and interact with a changing climate?

Models produce many terabytes of data annually without a clear way for managers and policymakers to access and use them on an ongoing basis. Addressing this problem requires improved communication, better access to output data (e.g., cloud access), and ongoing and sincere engagement (taking the time to know stakeholders and their needs).

My experience suggests that the following approaches improve communication: (1) Animated data, pairing time series with map data; time series alone can be too abstract and animated maps too pixelated, but the combination of time series and a map with a coordinated color scheme appeals to a broad audience; (2) Mapping uncertainty by combining a ‘sample’ map from a single replicate with a map showing relative uncertainty among scenarios, which reveals hidden information (e.g., which locations are most resilient to climate change) and emphasizes the stochastic underpinnings of simulation models; (3) Time series with colored percentile envelopes, for example, a light blue envelope spanning the 5th to 95th percentiles; (4) Virtual reality, which captures the public’s imagination of potential futures; (5) Gamification (turning models into simple, playable games), which can communicate conceptual models. Regardless of approach, continual investment in communication is required.
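Items (2) and (3) can be sketched with the Python standard library. The function names are hypothetical, and using the coefficient of variation across scenarios as the ‘relative uncertainty’ map is one reasonable choice among several, not necessarily the one used in the work described here. `percentile_envelope` returns the 5th percentile, median, and 95th percentile of replicate time series at each time step, which is what a shaded envelope would display.

```python
import statistics
from typing import List, Sequence, Tuple


def percentile_envelope(
    replicates: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float], List[float]]:
    """replicates[i][t] is the value of replicate i at time step t (at least 2 replicates).
    Returns per-time-step (5th percentile, median, 95th percentile)."""
    lower, median, upper = [], [], []
    for values in zip(*replicates):  # all replicate values at one time step
        # n=20 gives cut points at 5%, 10%, ..., 95%; "inclusive" interpolates linearly.
        cuts = statistics.quantiles(values, n=20, method="inclusive")
        lower.append(cuts[0])
        median.append(statistics.median(values))
        upper.append(cuts[-1])
    return lower, median, upper


def relative_uncertainty(scenario_maps: Sequence[Sequence[Sequence[float]]]) -> List[List[float]]:
    """scenario_maps[s][r][c] is the value of cell (r, c) under scenario s.
    Returns the per-cell coefficient of variation across scenarios (0 where the mean is 0)."""
    rows, cols = len(scenario_maps[0]), len(scenario_maps[0][0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            vals = [m[r][c] for m in scenario_maps]
            mean = statistics.fmean(vals)
            out_row.append(statistics.pstdev(vals) / mean if mean != 0 else 0.0)
        out.append(out_row)
    return out
```

The three lists could be passed to a plotting routine (for example, matplotlib's `fill_between` for the envelope, with the median drawn on top), and the per-cell output of `relative_uncertainty` could be mapped beside a single-replicate ‘sample’ map.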

The road forward

Landscape ecology and landscape managers need better models of forest change under climate change. How do we produce the next generation of forest simulation models? What are the potential roadblocks? And how do we ensure that the journey was worth the effort?

To stretch the road metaphor to the breaking point, I assume no single road forward. No one wants an all-encompassing forest landscape model that purports to answer every question, to test every hypothesis, and to operate at every scale. Science doesn’t work that way. Science is competitive and diverse and dynamic. Today’s little-known research project becomes tomorrow’s standard approach, which becomes the future’s outdated relic. Failure is an option and may be the best option for maintaining the requisite diversity of thinking and actors.

Forest simulation models are necessary tools in policy and decision support, particularly in relation to the effects of climate change. Though the uncertainties remain numerous and large, current human and management actions will determine how many systems respond to climate change. Forest landscape models can play a critical role in evaluating adaptive strategies. Those engaged in forest modeling must therefore push harder to remove the barriers that often separate landscape forecasts from policy.

In conclusion, I am optimistic that we can meet the objectives discussed above. Many strides have been taken and progress continues on all fronts. Creative solutions are required and ever-evolving. Landscape ecology has provided the platform for developing such creative solutions and should continue to do so.

References

Aber JD (1997) Why don’t we believe the models? Bull Ecol Soc Am 78(3):232–233

Aber JD, Federer CA (1992) A generalized, lumped-parameter model of photosynthesis, evapotranspiration and net primary production in temperate and boreal forest ecosystems. Oecologia 92:463–474

Araujo MB, Pearson RG, Thuiller W, Erhard M (2005) Validation of species-climate impact models under climate change. Glob Change Biol 11:1504–1513

Baker WL (1989) A review of models of landscape change. Landscape Ecol 2:111–133

Box GE (1976) Science and statistics. J Am Stat Assoc 71(356):791–799

Brown TN, Kulasiri D (1996) Validating models of complex, stochastic, biological systems. Ecol Model 86(2–3):129–134

Buma B, Wessman CA (2011) Disturbance interactions can impact resilience mechanisms of forests. Ecosphere 2(5):1–13

Clark JS, Carpenter SR, Barber M, Collins S, Dobson A, Foley JA, Lodge DM, Pascual M, Pielke R, Pizer W, Pringle C (2001) Ecological forecasts: an emerging imperative. Science 293(5530):657–660

Crowther TW, Glick HB, Covey KR, Bettigole C, Maynard DS, Thomas SM, Smith JR, Hintler G, Duguid MC, Amatulli G, Tuanmu MN (2015) Mapping tree density at a global scale. Nature 525(7568):201

Duveneck MJ, Thompson JR, Wilson BT (2015) An imputed forest composition map for New England screened by species range boundaries. For Ecol Manag 347:107–115

Evans MR (2012) Modelling ecological systems in a changing world. Philos Trans R Soc B 367:181–190

Fall A, Fall J (2001) A domain-specific language for models of landscape dynamics. Ecol Model 141(1–3):1–18

Gardner RH, Urban DL (2003) Model validation and testing: past lessons, present concerns, future prospects. In: Canham CD, Cole JJ, Lauenroth WK (eds) Models in ecosystem science. Princeton University Press, Princeton, pp 184–203

Gustafson EJ (2013) When relationships estimated in the past cannot be used to predict the future: using mechanistic models to predict landscape ecological dynamics in a changing world. Landscape Ecol 28:1429–1437

Hannah L, Flint L, Syphard AD, Moritz MA, Buckley LB, McCullough IM (2014) Fine-grain modeling of species’ response to climate change: holdouts, stepping-stones, and microrefugia. Trends Ecol Evol 29:390–397

Higgins SI, Clark JS, Nathan R, Hovestadt T, Schurr F, Fragoso JMV, Aguiar MR, Ribbens E, Lavorel S (2003) Forecasting plant migration rates: managing uncertainty for risk assessment. J Ecol 91:341–347

Keane RE, McKenzie D, Falk DA, Smithwick EA, Miller C, Kellogg LKB (2015) Representing climate, disturbance, and vegetation interactions in landscape models. Ecol Model 309:33–47

Kretchun AM, Loudermilk EL, Scheller RM, Hurteau MD, Belmecheri S (2016) Climate and bark beetle effects on forest productivity—linking dendroecology with forest landscape modeling. Can J For Res 46(8):1026–1034

Levins R (1966) The strategy of model building in population biology. Am Sci 54(4):421–431

Luo Y, Ogle K, Tucker C, Fei S, Gao C, LaDeau S, Clark JS, Schimel DS (2011) Ecological forecasting and data assimilation in a data-rich era. Ecol Appl 21(5):1429–1442

Masek JG, Cohen WB, Leckie D, Wulder MA, Vargas R, de Jong B, Healey S, Law B, Birdsey R, Houghton RA, Mildrexler D (2011) Recent rates of forest harvest and conversion in North America. J Geophys Res 116(G4):1–22

Millar CI, Stephenson NL, Stephens SL (2007) Climate change and forests of the future: managing in the face of uncertainty. Ecol Appl 17(8):2145–2151

Nitschke CR, Innes JL (2008) A tree and climate assessment tool for modelling ecosystem response to climate change. Ecol Model 210:263–277

Obeysekera J, Barnes J, Nungesser M (2015) Climate sensitivity runs and regional hydrologic modeling for predicting the response of the greater Florida Everglades ecosystem to climate change. Environ Manag 55:749–762

Overpeck JT, Rind D, Goldberg R (1990) Climate-induced changes in forest disturbance and vegetation. Nature 343:51–53

Puettmann K, Messier C, Coates KD (2013) In: Puettmann K, Messier C (eds), Managing forests as complex adaptive systems. Routledge, London, pp 3–16

Ray DK, Pijanowski BC (2010) A backcast land use change model to generate past land use maps: application and validation at the Muskegon River watershed of Michigan, USA. J Land Use Sci 5(1):1–29

Rosenzweig C, Iglesias A, Yang XB, Epstein PR, Chivian E (2001) Climate change and extreme weather events; implications for food production, plant diseases, and pests. Glob Change Hum Health 2:90–104

Rothermal RC (1972) A mathematical model for predicting fire spread in wildland fuels. USDA Forest Service Research Paper INT-115. Intermountain Forest and Range Experiment Station. Ogden, Utah, USA

Scheller RM, Mladenoff DJ (2007) An ecological classification of forest landscape simulation models: tools and strategies for understanding broad-scale forested ecosystems. Landscape Ecol 22:491–505

Scheller RM, Swanson ME (2015) Simulating forest recovery following disturbances: vegetation dynamics and biogeochemistry. In: Perera AH, Sturtevant BR, Buse LJ (eds) Simulation modeling of forest landscape disturbances. Springer International Publishing Switzerland, Cham

Scheller RM, Kretchun AM, Hawbaker TJ, Henne P (In preparation) Social-Climate Related Pyrogenic Processes and their Landscape Effects (SCRPPLE): a landscape model of variable social-ecological fire regimes

Scheller RM, Kretchun AM, Loudermilk EL, Hurteau MD, Weisberg PJ, Skinner C (2018) Interactions among fuel management, species composition, bark beetles, and climate change and the potential effects on forests of the Lake Tahoe Basin. Ecosystems 21:643–656

Scheller RM, Sturtevant BR, Gustafson EJ, Mladenoff DJ, Ward BC (2010) Increasing the reliability of ecological models using modern software engineering techniques. Front Ecol Environ 8(5):253–260

Seidl R, Rammer W, Scheller RM, Spies TA (2012) An individual-based process model to simulate landscape-scale forest ecosystem dynamics. Ecol Model 231:87–100

Spies TA, White E, Ager A, Kline JD, Bolte JP, Platt EK, Olsen KA, Pabst RJ, Barros AMG, Bailey JD, Charnley S, Morzillo AT, Koch J, Steen-Adams MM, Singleton PH, Sulzman J, Schwartz C, Csuti B (2017) Using an agent-based model to examine forest management outcomes in a fire-prone landscape in Oregon, USA. Ecol Soc 22(1):25

Williams JW, Jackson ST, Kutzbach JE (2007) Projected distributions of novel and disappearing climates by 2100 AD. Proc Natl Acad Sci 104(14):5738–5742

Xu C, Gertner GZ, Scheller RM (2012) Pathways for forest landscape response to global climatic change: competition or colonization? Clim Change 110:53–83

Zald HS, Ohmann JL, Roberts HM, Gregory MJ, Henderson EB, McGaughey RJ, Braaten J (2014) Influence of lidar, Landsat imagery, disturbance history, plot location accuracy, and plot size on accuracy of imputation maps of forest composition and structure. Remote Sens Environ 143:26–38


Cite this article

Scheller, R.M. The challenges of forest modeling given climate change. Landscape Ecol 33, 1481–1488 (2018). https://doi.org/10.1007/s10980-018-0689-x
