Abstract

Researchers and natural resource managers need predictions of how multiple global changes (e.g., climate change, rising levels of air pollutants, exotic invasions) will affect landscape composition and ecosystem function. Ecological predictive models used for this purpose are constructed using either a mechanistic (process-based) or a phenomenological (empirical) approach, or a combination of the two. Given the accelerating pace of global changes, it is becoming increasingly difficult to trust future projections made by phenomenological models estimated under past conditions. Using forest landscape models as an example, I review current modeling approaches and propose principles for developing the next generation of landscape models. First, modelers should increase the use of mechanistic components based on appropriately scaled “first principles” even though such an approach is not without cost and limitations. Second, the interaction of processes within a model should be designed to minimize a priori constraints on process interactions and mimic how interactions play out in real life. Third, when a model is expected to make accurate projections of future system states it must include all of the major ecological processes that structure the system. A completely mechanistic approach to the molecular level is not tractable or desirable at landscape scales. I submit that the best solution is to blend mechanistic and phenomenological approaches in a way that maximizes the use of mechanisms where novel driver conditions are expected while keeping the model tractable. There may be other ways. I challenge landscape ecosystem modelers to seek new ways to make their models more robust to the multiple global changes occurring today.

Introduction

Researchers and natural resource managers need to be able to predict how multiple global changes such as climate change, rising levels of air pollutants and enhanced migrations (invasions) will affect landscape composition and ecosystem function. There is a vast body of literature documenting individual studies that have added to our understanding of relationships between environmental conditions and ecological response. Other papers have synthesized these results into a corpus of theory and systems knowledge that provides a sound basis for assessments of future ecosystem and landscape functioning. However, to make predictions about future landscape dynamics under specific scenarios of climate or management, some type of formal model is usually required because most ecosystems have such a complex web of interacting environmental drivers and ecological processes that they exceed the capacity of a mental model.

Since the invention of the digital computer a large number of ecological predictive models have been developed for a similarly large number of purposes (Mladenoff and Baker 1999). Many of these have recently been called upon to make predictions about the impact of climate and other global changes on ecosystem properties and function across space and time. Because these global changes are expected to produce environmental conditions that have not been empirically studied at any time in recorded history, it is prudent to ask how robust the predictions of these ecological models might be to such markedly novel conditions. The purpose of this essay is to raise this question and explore options that may improve the current situation. My own expertise is with forest landscape models (FLMs), and this class of ecological models is currently particularly suspect in projecting forest dynamics under global change. Similar models operating at finer and coarser spatial and temporal scales (e.g., “gap” models and Dynamic Global Vegetation Models (DGVM), respectively) have design characteristics that avoid some of the weaknesses seen in FLMs. Although my exploration will focus on FLMs, I believe the principles I discuss apply widely to ecological and landscape models regardless of scale.

FLMs are a class of predictive stochastic simulation models that model forest generative (establishment, development, aging) and degenerative (disturbance, senescence) processes over broad spatial and temporal scales. Their particular strength is in their ability to explicitly model spatial processes such as seed dispersal and disturbance spread, and to account for interactions in both time and space, which are important determinants of future landscape composition and spatial pattern. They simulate distinct ecological processes, defined as a sequence of events within a sub-system domain having causal drivers and producing effects on the system (i.e., possibly resulting in a change in some system property). Processes can be represented in a model either mechanistically or phenomenologically, as discussed below. These models have a distinct and interesting genealogy that has been reviewed by Mladenoff and Baker (1999) and He (2008). They are used to study how abiotic environmental factors, disturbances and management activities interact to affect forest dynamics in terms of tree species and age composition, spatial pattern and other ecosystem attributes (e.g., wildlife habitat, tree biomass).

FLMs have been designed using one of three fundamental approaches (Suffling and Perera 2004). (1) Mechanistic (sometimes called process-based) models take a reductionist approach in which the mechanisms by which causes produce effects within a process are explicitly modeled. Model parameters typically have direct ecological meaning and can be measured in the real-world. Thus, a system is represented as a set of mechanistic processes that each describe cause and effect relationships between system variables. (2) Phenomenological (sometimes called empirical or statistical) models take a more holistic approach where the causes of a process produce effects (phenomena) according to how the system has typically behaved in the past. That is, the effect of the process is predicted using various types of surrogates for the mechanism, thus mimicking the effect of the mechanism on the system. Model parameters are often statistical equation coefficients or probabilities. For example, forest growth may be represented by statistical relationships between a readily measured environmental attribute (site index) and system response (growth) instead of simulating the mechanisms (e.g., tree physiology) that determine the system response. Other processes are modeled stochastically with the necessary probabilities typically estimated using empirical data. Still other processes may be handled by way of assumptions, and these can be based on past behavior or on theoretical expectations of future behavior. (3) In reality, most FLMs (and most ecosystem models) are a hybrid of these two approaches. Primarily phenomenological models usually have some mechanisms that are simulated explicitly (e.g., fire spread), and primarily mechanistic models usually avoid simulating particularly difficult or time-consuming processes (e.g., insect pest population outbreaks) using a simpler phenomenological approach.

Mechanistic FLMs are rarely favored because they tend to be complex, difficult to run and parameter-hungry compared to phenomenological models. Phenomenological models avoid the computational overhead of simulating a process by using surrogates for the mechanism, usually based on past system behavior. Even the most mechanistic FLMs contain a number of phenomenological components to keep them tractable. However, given the accelerating pace of multiple global changes, it is becoming increasingly difficult to trust projections of the future made by phenomenological models estimated under the conditions in the past (Cuddington et al. 2013). Here, the old adage is true: past performance is not necessarily indicative of future results. For example, will successional pathways (sequence, timing and likelihood) remain the same as the climate changes? Will community assemblages change? How will CO2-fertilization, increased temperatures, physiological changes in water use efficiency of trees and changes in cloudiness and solar radiation flux interact to affect tree species growth rates and species competition? How will disturbance regimes (rotation intervals, event size, severity and frequency) change? Will the effect of increased stressors (e.g., drought, ozone pollution) be linear or non-linear and/or synergistic? Will the interactions among ecological processes change? Will exotic invaders change the interactions among the native species in an ecosystem? There is really no way to confidently answer these questions by looking at how systems behaved in the past. Consequently, although there are many sound reasons for modeling forest ecosystem dynamics using a phenomenological approach, the realities of global change and the critical need to evaluate management options to respond to those changes require a more robust approach.
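The extrapolation problem can be illustrated with a toy calculation. The sketch below is not drawn from any FLM; it assumes a hypothetical "true" growth response that peaks at an optimum temperature (the function `mechanistic_growth` and its parameters `t_opt` and `width` are invented for illustration) and fits a straight line to that response over a historical temperature range only. Within the calibration range the fitted line tracks the response reasonably well; beyond it, the two diverge.

```python
import math

def mechanistic_growth(temp_c, t_opt=18.0, width=8.0):
    """Hypothetical response: relative growth peaks at t_opt and declines on either side."""
    return math.exp(-((temp_c - t_opt) / width) ** 2)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x

# "Historical" conditions: temperatures of 5-15 degrees C, all below the optimum
past_temps = [5.0 + 0.5 * i for i in range(21)]
slope, intercept = fit_line(past_temps, [mechanistic_growth(t) for t in past_temps])

def empirical_growth(temp_c):
    """Phenomenological surrogate calibrated on past conditions only."""
    return slope * temp_c + intercept

for temp in (10.0, 25.0):  # inside vs. outside the calibration range
    print(temp, round(mechanistic_growth(temp), 3), round(empirical_growth(temp), 3))
```

The linear surrogate keeps rising past the optimum because nothing in the calibration data told it otherwise, which is the sense in which relationships estimated in the past may fail under novel conditions.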

What might a more robust approach look like? (1) Ideally, it should explicitly link all aspects of system behavior to variation in the fundamental drivers (e.g., temperature, moisture, light, CO2 concentration, limiting factors such as pollutants and invaders) that are changing. Such links can be direct (simulation of processes based on “first principles” of physiology and biophysics) or indirect (simplification of a process or a defensible extrapolation of empirical relationships). Obviously, direct links are more robust. It is probably not feasible to expect every aspect of modeled system behavior to be linked explicitly to fundamental drivers, but the more these links can be made, the more robust the predictions will be under novel conditions. It may be possible to develop these links empirically where the driver variables have been studied across a range that nearly includes the values expected in the future, but given the magnitude of many expected global changes, this seems unlikely. (2) Interactions among ecological processes should rarely be specified based on expectations derived from the past. Because the nature of interactions is usually a big unknown under any conditions, models should be designed so that processes can interact based on system drivers and system state, and that the system outcome is an emergent property of mechanistic interactions. (3) All the ecological processes necessary to ensure that model projections are realistic and reliable should be included (Kimmins and Blanco 2011). Many FLMs were developed to answer focused research questions. Thus, specific processes of interest were included so that variability in their drivers and behavior could be studied and all the other processes were assumed to be held constant and were often not included in the model. 
This is valid for research purposes, but can be problematic when such models are applied to make projections of actual future forest conditions (dynamics) to inform management decisions. For example, a FLM run for Alberta, Canada, in the year 2000 that included neither mountain pine beetle as a disturbance nor the link to temperature as a limiting factor of beetle populations would have badly misprojected forest composition even just a few decades into the future, given that the beetle recently invaded the east side of the Rockies because of warmer winters (Cullingham et al. 2011). Similarly, a FLM that does not include windthrow as a disturbance cannot account for a major factor structuring forests in the upper Midwest US (Schulte and Mladenoff 2005), New England US (Boose et al. 2001) or Siberian Russia (Gustafson et al. 2010).

Currently, no FLM achieves the ideals enumerated above. (1) Most FLMs have at least some mechanistic components, but the state-of-the-art does not yet solidly link the fundamental drivers of climate, CO2 concentration, etc. with tree physiology, life history attributes and mechanisms of disturbance and succession throughout their implementation. Most FLMs are being updated to include environmental drivers that are changing, although most such modifications are thus far crude and do not take the plunge into the “first principles” mechanistic approach. Drivers such as temperature and precipitation are being added, but others such as relative humidity, PAR and CO2 and ozone concentrations are lagging. Simulation of biological invaders that are competitors rather than disturbance agents is very rare. Climate and atmospheric scientists are now able to forecast future dynamics of many of these drivers (IPCC 2007), which allows a mechanistic landscape model to explicitly link forecasts of the drivers to various biological and physical mechanisms to make projections of future forest dynamics. Nevertheless, the modeled processes that respond to those drivers are not yet adequately robust. (2) Most FLMs currently simulate forest dynamics as an emergent property of interacting independently modeled processes acting on various ecosystem variables (e.g., vegetation type, fuel class, habitat type). However, the degree to which the processes can produce novel system states and behavior varies considerably. Model data structure and process design must have sufficient degrees of freedom to allow all plausible future system states to occur. (3) The specific processes (mostly disturbances) that various FLMs can model also vary widely, ranging from one (HARVEST, Gustafson and Crow 1996) to six (LANDIS-II, Scheller and Mladenoff 2004). The processes currently included in many FLMs include succession, fire (wild and prescribed), disease and vegetation management activities. 
Other processes available in some FLMs include insect outbreaks, windthrow and drought. Potentially important processes still in need of development include ungulate herbivory, beaver activity, exotic earthworms, competitive invaders and severe weather events (e.g., early thaw or late frost) (Keane et al. in review).

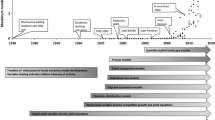

History of forest landscape modeling

Forest landscape dynamics modeling evolved in concert with the development of the field of landscape ecology and computer technology (Scheller and Mladenoff 2007). The earliest forest dynamics models were tree and stand growth models used for silviculture research (see examples in Fries (1974)). Regional forest change models (e.g., Shugart et al. 1973) and plot-level “gap” models such as JABOWA (Botkin et al. 1972) and FOREST (Ek and Monserud 1974) were developed in the 1970s to answer more ecological research questions. In the 1980s, FOREST-BGC (Running and Coughlan 1988), a mechanistic plot-level model based on tree physiology was combined with a gap model (ZELIG, Urban et al. 1991) to better model growth in the gap model (HYBRID, Friend et al. (1993)). These developments set the stage for the development of DGVMs which couple vegetation models with global circulation models at coarse scales (Shugart and Woodward 2011). Concurrently, a number of stand-level models were developed for forestry applications, most notably strategic planning and growth and yield models such as FORPLAN (Johnson 1992) and FVS (Crookston and Dixon 2005). By the 1990s, a convergence of events provided the impetus to develop landscape-level forest dynamics models. First, the emerging field of landscape ecology (Risser and Iverson 2013) combined forces with a new ecosystem management paradigm (Grumbine 1994) to create a huge demand for projections of future forest landscape dynamics for research and management purposes. It became accepted throughout academia and within land management agencies that a landscape perspective was essential for sound ecosystem and forest management (Turner 2005). Second, exponential advances in the capability and accessibility of computers made landscape modeling feasible. 
Third, scientific advances in ecological theory (e.g., disturbances, spatial ecology) and computational algorithms (e.g., pattern recognition, spatial processing, object-oriented programming) provided the conceptual basis to rapidly advance the sophistication of landscape models.

Modern FLMs were developed for various purposes and their complexity and modeling approach follows the purpose. They all include some level of spatial interaction, but vary in the dynamism of the species assemblages (communities) that can occur and the specific ecosystem processes that can be simulated (Scheller and Mladenoff 2007). Many of the simpler models were developed for heuristic study of specific disturbances to help develop management options (e.g., HARVEST (Gustafson and Crow 1996), LANDSUM (Keane et al. 2006), BFOLDS (Perera et al. 2004)). Other models that integrate many ecological and physical processes have been used to understand ecosystem dynamics in the face of interacting natural and anthropogenic perturbations (e.g., FireBGCv2 (Keane et al. 2011), SIMPPLLE (Chew et al. 2004), LANDIS (Mladenoff 2004), RMLANDS (McGarigal and Romme 2012)). As FLMs have become more integral to the management planning process, some FLMs are continually being expanded to include an ever-increasing variety of ecological processes and disturbances to increase the accuracy and specificity of the projections.

In recent years, FLMs have been applied to help answer questions about the potential effects of completely novel environmental conditions such as climate change, air pollution (elevated CO2 and ozone, nitrogen deposition), exotic invasions and land use change. These questions require parameter inputs that in some cases are well outside the range of values for which the models were designed, a fact that is especially problematic for phenomenological models. This fact is readily acknowledged in most such applications of empirically-based model components, and adjustments of various kinds are made in an attempt to compensate. For example, Keane et al. (2008) used LANDSUM to study climate change effects on fire-prone landscapes in Montana (USA) by modifying input maps as a function of climatic drivers and modifying fire regimes as an ad hoc proportion of the historical fire regime. Successional transition parameters for the future are unknown, but were not modified from current values. In another Montana example, Cushman et al. (2011) simply increased the probability of fires and insect outbreaks by 10 % to simulate the effects of climate change on wildlife habitat using RMLANDS. Again, successional state transition probabilities were not changed. Gustafson et al. (2010) simulated the effects of climate change on the composition of Siberian forests using LANDIS-II by (1) adjusting species growth rates according to results of PnET-II (Aber et al. 1995) model runs forced by the future climate projections of a GCM, (2) modifying the temperature and precipitation values fed into the fire extension by the difference between current climate and that projected by the GCM, and (3) by lengthening fire seasons by 10 %. Clearly, the adjustments in each of these examples were ad hoc and crude. 
They did allow researchers to make some heuristic advances while waiting for better modeling solutions, but the results are not robust enough for making specific management decisions that will play out in the globally-changing future.

Next generation landscape models

How should we proceed as we develop the next generation of landscape models, including FLMs? A number of proposals have been put forth, of which I mention but two. Cushman et al. (2007) laid out a thoughtful research agenda that integrates gradient modeling (to empirically establish relationships between biophysical gradients and forest response), ecosystem models and FLMs. The gradient modeling part of this approach seeks to empirically get very close to the mechanisms of forest response, but nevertheless is not quite mechanistic. Kimmins and Blanco (2011) advocate for a multi-scale hybrid modeling approach combining tree-level, stand-level and landscape-level models. Rather than make my own proposal, let me rather propose some principles that I believe will increase the robustness of landscape models in the face of global changes. First, I believe that landscape modelers should increasingly adopt a more mechanistic approach wherever one or more drivers of landscape dynamics are affected by some aspect of global change. This should include modifying existing mechanistic components to take a more “first principles” approach and migrating phenomenological components to a more mechanistic approach where it is feasible. I do not go so far as to say that the phenomenological approach should be abandoned altogether. Landscape models are designed around a number of tradeoffs (e.g., extent v. resolution, complexity v. parsimony, mechanistic v. phenomenological) that are necessary to keep them tractable. For some processes a phenomenological approach may remain viable and even desirable because it reduces parameter burden and uncertainty. However, for those where the relationships of the past may not hold in the future, a more mechanistic approach is needed. Furthermore, model developers should strive to simulate processes at the most fundamental level that is reasonable, while avoiding the temptation to add unnecessary detail just because it is feasible. 
For example, simulating tree growth as a function of soil water availability and potential evapotranspiration would be more robust to novel future conditions than simulating growth as a function of temperature and precipitation because the latter is a (perhaps weak) surrogate for the former. Thus, hybrid models will continue to be the norm, but the percentage of mechanistic components should be increased. FLM modelers in particular may be able to borrow from the approaches used in gap models and DGVMs. This would serve to increase the robustness of landscape models in the face of multiple global changes that have no historical analog (Korzukhin et al. 1996).
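To make the surrogate argument concrete, the following is a minimal sketch of a one-layer "bucket" water balance, assuming monthly precipitation and potential evapotranspiration (PET) are supplied as inputs. The function name `growth_modifier`, the climate values and the two soil capacities are all hypothetical. Two sites receive identical climate but differ in soil water-holding capacity, so a model driven by temperature and precipitation alone could not distinguish them, whereas the water balance does.

```python
def growth_modifier(precip_mm, pet_mm, capacity_mm):
    """Run a one-layer bucket water balance and return mean AET/PET (0-1) as a growth modifier."""
    soil = capacity_mm  # assume the soil starts at field capacity
    ratios = []
    for precip, pet in zip(precip_mm, pet_mm):
        soil = min(capacity_mm, soil + precip)  # recharge; water above capacity is lost
        aet = min(pet, soil)                    # actual evapotranspiration is supply-limited
        soil -= aet
        ratios.append(aet / pet if pet > 0 else 1.0)
    return sum(ratios) / len(ratios)

# Identical (illustrative) growing-season climate on both sites
precip = [20, 10, 5, 40]    # mm per month
pet = [60, 90, 100, 70]     # mm per month

sandy = growth_modifier(precip, pet, capacity_mm=50)    # low water-holding capacity
loamy = growth_modifier(precip, pet, capacity_mm=200)   # high water-holding capacity
print(round(sandy, 2), round(loamy, 2))
```

The design choice here is that climate enters only through water supply and demand, so any future combination of precipitation and evaporative demand is handled by the same accounting rather than by a relationship fitted to past combinations.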

Secondly, I suggest that the interaction of processes within a model should be carefully designed to minimize a priori constraints on process interactions within model runs. Interactions among causal factors can have important effects on system outcomes. It is critical that these interactions reflect reality. I believe that the most effective way to ensure this is to allow each ecosystem process to independently operate on the fundamental “currency” of ecosystems (vegetation) at the lowest hierarchical level possible. If each process is driven solely (as much as possible) by the fundamental system drivers and by conditions of the system state, then interactions will emerge in terms of their combined effect on the vegetation, which mimics how interactions play out in real life. For example, if a succession process simulates how species establish, grow and compete for growing space as a function of temperature, precipitation and CO2 concentration, and rising temperatures result in outbreaks of an insect pest that kills only slower-growing conifers, then modeled rates of carbon storage may increase because relatively fast growing species become dominant. So, even though this interaction is not specified, it plays out as an emergent property of the independent processes acting on the common “currency” of vegetation. It should be noted that interactions between ecosystem states and the drivers themselves (e.g., vegetation and climate) may also be important, but such interactions are very difficult to simulate in a FLM (Keane et al. in review).
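The idea of interactions emerging from independent processes can be sketched in a few lines. The example below is a deliberately simple toy, not the design of any existing FLM: the species, growth rates, outbreak threshold and mortality fraction are hypothetical. A succession process and an insect process each read only the drivers and the vegetation state, and neither refers to the other.

```python
def succession(biomass, growth_rate, capacity):
    """Each species grows in proportion to the unused share of the site's growing space."""
    free = max(0.0, 1.0 - sum(biomass.values()) / capacity)
    return {sp: b + growth_rate[sp] * b * free for sp, b in biomass.items()}

def insect_outbreak(biomass, temp_anomaly_c, hosts, threshold_c=2.0, mortality=0.5):
    """Warming above a threshold triggers an outbreak that kills part of the host biomass."""
    if temp_anomaly_c <= threshold_c:
        return dict(biomass)
    return {sp: b * (1.0 - mortality) if sp in hosts else b for sp, b in biomass.items()}

def run(temp_anomaly_c, years=50):
    """Apply both processes each year to the shared vegetation state."""
    biomass = {"conifer": 10.0, "hardwood": 10.0}
    rates = {"conifer": 0.05, "hardwood": 0.15}  # slow- vs. fast-growing species
    for _ in range(years):
        biomass = succession(biomass, rates, capacity=200.0)
        biomass = insect_outbreak(biomass, temp_anomaly_c, hosts={"conifer"})
    return biomass

cool = run(temp_anomaly_c=0.0)
warm = run(temp_anomaly_c=3.0)
print({sp: round(b, 1) for sp, b in cool.items()})
print({sp: round(b, 1) for sp, b in warm.items()})
```

Nowhere does the code state that warming favors hardwoods. That outcome arises only because the insect process frees growing space that the succession process then allocates to the faster-growing species.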

Thirdly, I submit that any model that is expected to make accurate projections of future system states and dynamics must include all of the critical ecological processes that structure the system. It is not enough to account for most of those processes when a missing process may in fact significantly alter the projected future. On the negative side, this will require significant additional work for many landscape models. On the positive side however, any new component development can be designed using a mechanistic approach, which is often easier than modifying code designed using a phenomenological approach.

Having said all this, I must acknowledge that there is a fly in the ointment. A truly robust mechanistic approach is not possible at landscape scales because ultimate reductionism to the molecular level is intractable and undesirable. Furthermore, the premise that we might actually be able to correctly model multiple complex processes mechanistically and have their combined behavior reliably reflect a future reality without being able to test that assertion is arguably audacious. Therefore, compromises must be made. I submit that the best solution is to blend mechanistic and phenomenological approaches in a way that maximizes the use of mechanisms (especially where novel driver conditions are expected) while achieving modeling objectives and keeping the model tractable. Hierarchy theory (Urban et al. 1987) can be applied to help determine the appropriate level of mechanistic detail for a model and its process components (Cuddington et al. 2013). For a given scale, it is necessary to consider larger scales to understand the context and smaller scales to understand mechanisms (Allen and Hoekstra 1992). Modelers must ask themselves a number of questions. What is the most appropriate level of resolution and detail for representing each ecological process? Which system “currency” best reflects the real-world outcome of the processes and allows interactions to occur in an unfettered way? Are there adequate data to support a mechanistic approach for an ecosystem or process? At what level of complexity does the model become intractable or the uncertainty too high? There are doubtless a number of very different ways that models can be constructed or linked to address the principles and problems raised here.

Let me illustrate my thinking with an example of how my colleagues and I are wrestling with these issues as we seek to apply our FLM (LANDIS-II) to make projections of future forest dynamics. The LANDIS-II Biomass succession extension (Scheller and Mladenoff 2004) takes a mechanistic approach to simulate succession (and species biomass accumulation) by using species life history attributes (e.g., probability of establishment, maximum growth rate, shade tolerance, longevity, dispersal distance, etc.) to simulate competition for growing space within each site (grid cell) on a landscape. Species assemblages are highly dynamic and non-deterministic in space and time, being determined probabilistically by the relative establishment and growth rates of species and by the various disturbances that can be simulated. However, the extension was not designed to easily accommodate changing values for some parameters that are projected to change in the future. For example, the maximum growth rate parameter does not vary directly in response to climate and atmospheric (e.g., CO2 concentration) drivers, but can only be modified on an ad hoc basis at user-specified time steps. The establishment probability of species cannot be varied through time at all. Although this extension is one of the most mechanistic of all FLM succession simulators, it only approximates first principles of growth and competition. A modified approach that better incorporates first principles is under development by our group (De Bruijn et al. in prep). Algorithms from the PnET-II ecophysiology model (Aber et al. 1995) have been modified and embedded within the biomass extension to simulate the competition of species cohorts for light and soil water. 
The extension estimates water balance and LAI of canopy layers on each site as a function of temperature, precipitation, CO2 (and ozone) concentrations (among other factors) and simulates the growth of each cohort as a competition for the available light and water. Establishment probability of species is also calculated at each time step as a function of climate drivers. The extension can be fed annual climate variables through time from downscaled GCM projections. This approach causes establishment, growth, competition (and therefore succession) to respond directly to the changes in the fundamental drivers through simulated time and should provide a more robust projection of forest dynamics under changing future conditions. This comes at a cost of slightly longer run times and several more parameters, but the stronger link between changing drivers and projected system response should make those costs reasonable.
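As a rough illustration of how competition for light among canopy layers can be represented, the sketch below attenuates incoming light through successive layers with the Beer-Lambert law, a form commonly used in ecophysiology models. It is an assumption here that this simplified form resembles what the extension does; the function `light_by_layer`, the extinction coefficient `k` and the LAI values are hypothetical.

```python
import math

def light_by_layer(par_top, lai_by_layer, k=0.5):
    """Return light reaching the top of each canopy layer, ordered tallest to shortest.

    Light is attenuated by the cumulative leaf area index (LAI) of all layers above,
    following Beer-Lambert: I = I0 * exp(-k * LAI_above).
    """
    light, lai_above = [], 0.0
    for lai in lai_by_layer:
        light.append(par_top * math.exp(-k * lai_above))
        lai_above += lai
    return light

# Three cohorts occupying successively lower canopy layers (illustrative LAI values)
layers = light_by_layer(par_top=1000.0, lai_by_layer=[2.0, 1.5, 1.0])
print([round(value, 1) for value in layers])
```

Because each cohort's light supply depends on the leaf area above it, any driver that changes the growth of an overstory cohort (temperature, CO2, water) automatically changes the light available to the cohorts beneath it.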

Other modelers are similarly adding mechanistic components to FLMs to improve their ability to address questions of climate change. For example, Kimmins and Blanco (2011) formally linked models that operate at different scales to address forest management questions. Keane et al. (2011) merged the ecophysiology model BIOME-BGC (Running and Hunt 1993) with FIRESUM (Keane et al. 1989) to create the FireBGCv2 model. Their objective was to describe potential fire dynamics in the western US under future climates and land management strategies to provide critical information to help fire managers mitigate potential adverse effects. They rightly point out that the mechanistic approach is not without significant difficulties. Although it does provide a potentially robust method to make projections under novel conditions, landscape models that combine many mechanistic components are extremely difficult to validate. Furthermore, model complexity may increase dramatically and the combined model and parameter uncertainty may become quite high (Kimmins et al. 2008). Finding ways to manage complexity and uncertainty in more mechanistic models is not a trivial matter and considerable research and creativity will be required. Integration of several types of models operating at different scales (e.g., Cushman et al. 2007; Kimmins and Blanco 2011) is being explored. Bayesian hierarchical models (Berliner 2003; Parslow et al. 2013) can explicitly model three sources of uncertainty (initial conditions, process and parameter uncertainty), and this approach may help us find a robust balance between empirical and mechanistic components in our landscape models. Pareto optimality analysis (Kennedy and Ford 2011) has been proposed as a way to assess deficiencies and uncertainty in the structure of complex process-based models, but such applications are thus far rare.
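One generic way to write down the three sources of uncertainty mentioned above is as a state-space factorization. The notation is an assumption introduced here for illustration and is not taken from the cited papers: $x_t$ is the (unobserved) system state at time $t$, $y_t$ the observations, and $\theta$ the model parameters.

```latex
p(x_0, x_{1:T}, \theta \mid y_{1:T}) \;\propto\;
\underbrace{\prod_{t=1}^{T} p(y_t \mid x_t, \theta)}_{\text{data model}}
\;\underbrace{\prod_{t=1}^{T} p(x_t \mid x_{t-1}, \theta)}_{\text{process uncertainty}}
\;\underbrace{p(x_0)}_{\text{initial conditions}}
\;\underbrace{p(\theta)}_{\text{parameter uncertainty}}
```

In such a formulation the process term $p(x_t \mid x_{t-1}, \theta)$ is where a mechanistic or phenomenological component would sit, so the same framework could in principle accommodate either.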

Conclusion

Phenomenological landscape models are limited in their ability to address the novel conditions of the future because they rely on relationships estimated in the past to predict ecosystem dynamics in the changing future. A mechanistic approach based on “first principles” has potential to overcome some of those limitations, although such an approach is not without cost and other limitations (Bugmann et al. 2000). I challenge landscape ecosystem modelers to seek new ways to make their models more robust to the multiple global changes occurring today. I have argued for an increasing use of mechanistic model components based on hierarchically scaled “first principles” in landscape models. There may be other ways. But this problem needs to be addressed because landscape models that can robustly predict future ecosystem dynamics are urgently needed by land managers and policy-makers. It may be prudent to view the shift toward more robust landscape models as a long road, but we need to start the journey now.

References

Aber JD, Ollinger SV, Federer CA, Reich PB, Goulden ML, Kicklighter DW, Melillo JM, Lathrop RG (1995) Predicting the effects of climate change on water yield and forest production in the northeastern US. Clim Res 5:207–222

Allen TFH, Hoekstra TW (1992) Toward a unified ecology. Columbia University, New York

Berliner LM (2003) Physical-statistical modeling in geophysics. J Geophys Res. doi:10.1029/2002JD002865

Boose ER, Chamberlin KE, Foster DR (2001) Landscape and regional impacts of hurricanes in New England. Ecol Monogr 71:27–48

Botkin DB, Janak JF, Wallis JR (1972) Some ecological consequences of a computer model of forest growth. J Ecol 60:849–873

Bugmann H, Lindner M, Lasch P, Flechsig M, Ebert B, Cramer W (2000) Scaling issues in forest succession modeling. Clim Change 44:265–289

Chew JD, Stalling C, Moeller K (2004) Integrating knowledge for simulating vegetation change at landscape scales. W J Appl For 19:102–108

Crookston NL, Dixon GE (2005) The forest vegetation simulator: a review of its structure, content, and applications. Comp Electron Agric 49:60–80

Cuddington K, Fortin M-J, Gerber LR, Hastings A, Liebhold A, O’Connor M, Ray C (2013) Process-based models are required to manage ecological systems in a changing world. Ecosphere 4:20 http://dx.doi.org/10.1890/ES12-00178.1

Cullingham CI, Cooke JEK, Dang S, Davis CS, Cooke BJ, Coltman DW (2011) Mountain pine beetle host-range expansion threatens the boreal forest. Mol Ecol 20:2157–2171. doi:10.1111/j.1365-294X.2011.05086.x

Cushman SA, McKenzie D, Peterson DL, Littell J, McKelvey KS (2007) Research agenda for integrated landscape modeling. USDA Forest Service Gen. Tech. Rep. RMRS-194. Rocky Mountain Research Station, Fort Collins

Cushman S, Tzeidle A, Wasserman N, McGarigal K (2011) Modeling landscape fire and wildlife habitat. In: McKenzie D, Miller C, Falk DA (eds) The landscape ecology of fire. Ecological Studies 213. Springer, New York, pp 223–245. doi:10.1007/978-94-007-0301-8_9

De Bruijn AMG, Gustafson EJ, Sturtevant B, Jacobs D (in prep) Merging PnET and LANDIS-II to model succession: mechanistic simulation of competition for water and light to project landscape forest dynamics. Ecol Modelling

Ek AR, Monserud RA (1974) FOREST: computer model for the growth and reproduction simulation for mixed species forest stands. Research Report A2635, College of Agricultural and Life Sciences, University of Wisconsin, Madison

Friend AD, Shugart HH, Running SW (1993) A physiology-based model of forest dynamics. Ecology 74:792–797

Fries J (ed) (1974) Growth models for tree and stand simulation. Research Notes 30. Royal College of Forestry, Stockholm, p 397

Grumbine RE (1994) What is Ecosystem Management? Cons Biol 8:27–38

Gustafson EJ, Crow TR (1996) Simulating the effects of alternative forest management strategies on landscape structure. J Environ Manage 46:77–94

Gustafson EJ, Shvidenko AZ, Sturtevant BR, Scheller RM (2010) Predicting global change effects on forest biomass and composition in south-central Siberia. Ecol Appl 20:700–715

He HS (2008) Forest landscape models: definitions, characterization, and classification. For Ecol Manage 254:484–498

IPCC (2007) Climate change 2007: the physical science basis. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor Miller MHL (eds) Contribution of working group I to the fourth assessment report of the intergovernmental panel on climate change. Cambridge University, Cambridge

Johnson KN (1992) Consideration of watersheds in long-term forest planning models: the case of FORPLAN and its use on the national forests. In: Naiman RJ (ed) Watershed management: balancing sustainability and environmental change. Springer, New York, pp 347–360

Keane RE, Arno SF, Brown JK (1989) FIRESUM—an ecological process model for fire succession in western conifer forests. USDA Forest Service Gen. Tech. Rep. INT-266. Intermountain Research Station, Ogden

Keane RE, Holsinger LM, Pratt SD (2006) Simulating historical landscape dynamics using the landscape fire succession model LANDSUM version 4.0. USDA Forest Service General Tech Rep RMRS-171CD. Rocky Mountain Research Station, Fort Collins

Keane RE, Holsinger LM, Parsons RA, Gray K (2008) Climate change effects on historical range and variability of two large landscapes in western Montana, USA. For Ecol Manage 254:375–389

Keane RE, Loehman RA, Holsinger LM (2011) The FireBGCv2 landscape fire and succession model: a research simulation platform for exploring fire and vegetation dynamics. USDA Forest Service Gen. Tech. Rep. RMRS-255. Rocky Mountain Research Station, Fort Collins

Keane RE, Miller C, Smithwick E, McKenzie D, Falk D, Kellogg L (in review) Representing climate, disturbance, and vegetation interactions in landscape simulation models. Ecol Modelling

Kennedy MC, Ford ED (2011) Using multicriteria analysis of simulation models to understand complex biological systems. Bioscience 61:994–1004

Kimmins JP, Blanco JA (2011) Issues facing forest management in Canada, and predictive ecosystem management tools for assessing possible futures. In: Li C, Lafortezza R, Chen J (eds) Landscape ecology in forest management and conservation. Higher Education Press/Springer, Beijing/Berlin, pp 46–72

Kimmins JP, Blanco JA, Seely B, Welham C, Scoullar K (2008) Complexity in modeling forest ecosystems: how much is enough? For Ecol Manage 256:1646–1658

Korzukhin MD, Ter-Mikaelian MT, Wagner RG (1996) Process versus empirical models: which approach for forest ecosystem management? Can J For Res 26:879–887

McGarigal K, Romme WH (2012) Modeling historical range of variation at a range of scales: example application. In: Wiens J, Regan C, Hayward G, Safford H (eds) Historical environmental variation in conservation and natural resource management. Wiley, New York, pp 128–145

Mladenoff DJ (2004) LANDIS and forest landscape models. Ecol Modelling 180:7–19

Mladenoff DJ, Baker WL (1999) Development of forest and landscape modeling approaches. In: Mladenoff DJ, Baker WL (eds) Spatial modeling of forest landscape change: approaches and applications. Cambridge University, Cambridge UK, pp 1–13

Parslow J, Cressie N, Campbell EP, Jones E, Murray L (2013) Bayesian learning and predictability in a stochastic nonlinear dynamical model. Ecol Appl 23:679–698

Perera AH, Yemshanov D, Schnekenburger F, Baldwin DJB, Boychuk D, Weaver K (2004) Spatial simulation of broad-scale fire regimes as a tool for emulating natural forest landscape disturbance. In: Perera AH, Buse LJ, Weber MG (eds) Emulating natural forest landscape disturbances: concepts and applications. Columbia University, New York, pp 112–122

Risser PG, Iverson LR (2013) 30 years later—landscape ecology: directions and approaches. Landscape Ecol 28:367–369

Running SW, Coughlan JC (1988) A general model of forest ecosystem processes for regional applications I. Hydrologic balance, canopy gas exchange and primary production processes. Ecol Modelling 42:125–154

Running SW, Hunt ER Jr (1993) Generalization of a forest ecosystem process model for other biomes, BIOME-BGC, and an application for global-scale models. In: Ehleringer JR, Field CB, Roy J (eds) Scaling physiological processes: leaf to globe. Academic Press, San Diego, pp 141–157

Scheller RM, Mladenoff DJ (2004) A forest growth and biomass module for a landscape simulation model, LANDIS: design, validation, and application. Ecol Modelling 180:211–229

Scheller RM, Mladenoff DJ (2007) An ecological classification of forest landscape simulation models: tools and strategies for understanding broad-scale forested ecosystems. Landscape Ecol 22:491–505

Schulte LA, Mladenoff DJ (2005) Severe wind and fire regimes in northern forests; historical variability at the regional scale. Ecology 86:431–445

Shugart HH, Woodward FI (2011) Global change and the terrestrial biosphere: achievements and challenges. Wiley–Blackwell, Oxford UK 242 p

Shugart HH Jr, Crow TR, Hett JM (1973) Forest succession models: a rationale and methodology for modeling forest succession over large regions. For Sci 19:203–212

Suffling R, Perera AH (2004) Characterizing natural forest disturbance regimes. In: Perera AH, Buse LJ, Weber MG (eds) Emulating natural forest landscape disturbances: concepts and applications. Columbia University, New York, pp 43–54

Turner MG (2005) Landscape ecology in North America: past, present, and future. Ecology 86:1967–1974

Urban DL, O’Neill RV, Shugart HH Jr (1987) Landscape ecology. Bioscience 37:119–127

Urban DL, Bonan GB, Smith TM, Shugart HH (1991) Spatial applications of gap models. For Ecol Manage 42:95–110

Acknowledgments

I thank Brian Sturtevant, Robert Scheller, David Mladenoff, Robert Keane, Kevin McGarigal, Ajith Perera and three anonymous reviewers for insightful comments and perspectives that greatly helped to clarify my thinking and presentation.

Cite this article

Gustafson, E.J. When relationships estimated in the past cannot be used to predict the future: using mechanistic models to predict landscape ecological dynamics in a changing world. Landscape Ecol 28, 1429–1437 (2013). https://doi.org/10.1007/s10980-013-9927-4

DOI: https://doi.org/10.1007/s10980-013-9927-4