Abstract

We show that critical multi-type Galton–Watson trees, when conditioned to be large, converge locally in distribution to an infinite tree which is analogous to Kesten’s infinite monotype Galton–Watson tree. This is proven when we condition on the number of vertices of one fixed type, and with an extra technical assumption if we count at least two types. We then apply these results to study local limits of random planar maps, showing that large critical Boltzmann-distributed random maps converge in distribution to an infinite map.


1 Introduction

A planar map is a proper embedding of a finite connected planar graph in the sphere, taken up to orientation-preserving homeomorphisms. These objects were first studied from a combinatorial point of view in the works of Tutte in the 1960s (see notably [32]) and have since been of use in different domains of mathematics, such as algebraic geometry (see, e.g., [18]) and theoretical physics (as in [4]). There has been great progress in their probabilistic study ever since the work of Schaeffer [30], which has among other things led to finding the scaling limit of many large random maps (we mention [26] and [19]).

Our subject of interest here is the local convergence of large random maps, which means that we are not interested in scaling limits but in the combinatorial structure of a map around a chosen root. Such problems were first studied by Angel and Schramm [5] and Krikun [16], who showed that the distributions of uniform triangulations and quadrangulations with n vertices converge weakly as n goes to infinity. Each limit is the distribution of an infinite random map, respectively known as the uniform infinite planar triangulation (UIPT) and the uniform infinite planar quadrangulation (UIPQ). Of particular interest to us is the paper [10] where the convergence to the UIPQ is shown by a method involving the well-known Cori–Vauquelin–Schaeffer bijection [30].

We will generalize this to a large family of random maps called the class of Boltzmann-distributed random maps. Let \({{\mathbf {q}}}=(q_n)_{n\in \mathbb {N}}\) be a sequence of nonnegative numbers. We assign to every finite planar map a weight which is equal to the product of the weights of its faces, the weight of a face being \(q_d\) where d is the number of edges adjacent to said face, counted with multiplicity. If the sum of all the weights of all the maps is finite, then one can normalize this into a probability distribution.

The use of the so-called Bouttier–Di Francesco–Guitter bijection (see [8], or Sect. 5.3) allows us to obtain the convergence to infinite maps for a fairly large class of weight sequences \({\mathbf {q}}\). For \({\mathbf {q}}\) in this class, let \((M_n,E_n)\) be a \({\mathbf {q}}\)-Boltzmann rooted map conditioned to have n vertices (or n edges or n faces). Our main result, Theorem 6.1, states that this sequence converges in distribution to a random map \((M_{\infty },E_{\infty })\), which we call the infinite \({\mathbf {q}}\)-Boltzmann map. For combinatorial reasons, we have to restrict n to a sublattice of \(\mathbb {Z}_+\).

The classes of weight sequences for which this is true are the class of critical sequences when we condition by the number of vertices, and regular critical when we condition by the number of edges or faces (both are defined in Sect. 5.2). These classes contain all sequences with finite support (up to multiplicative constants). Taking \(q_n={\mathbbm {1}}_{\{n=p\}}\) with \(p\geqslant 3\) gives us the case of the uniform p-angulation, making our results an extension of what was known about the UIPT and UIPQ.

Local limits of Boltzmann random maps have notably been studied recently in [7]. A key difference with our work here is the fact that the maps are supposed to be bipartite in [7] (the weight sequence \({\mathbf {q}}\) is supported on the even integers). In this context, conditioning maps by their number of edges ends up being more natural than in our work, and it is sufficient to only assume criticality and not regular criticality.

The proof of convergence to an infinite map hinges on a similar result for critical multi-type Galton–Watson trees and forests (Theorem 3.1). This theorem itself generalizes the well-known fact that critical monotype Galton–Watson trees, when conditioned to be large, converge to an infinite tree formed by a unique infinite spine to which many finite trees are grafted. This infinite tree was first indirectly mentioned in [15], Lemma 1.14, and many details about the convergence are given in [2] and [13]. One of its properties is that one can obtain its distribution from the distribution of the finite tree by a size-biasing process, as explained in [20].

In the multi-type case, as with maps, we have two different kinds of conditionings. If the tree is simply critical, then we must condition it by the number of vertices of one type only, while if it is regular critical (criticality and regular criticality being defined in Sect. 2.1), we can condition it by its “size,” for a general notion of size where we count all the vertices, giving some integer weight to each vertex depending on its type. The distribution of the infinite limiting tree can once again be described by a biasing process from the original tree, as explained in Proposition 3.1, something which was anticipated in [17].

A fairly important issue in Theorem 3.1 is the problem of periodicity: as with maps, a multi-type Galton–Watson tree cannot have an arbitrary number of vertices. To be precise, the size of the tree is always in \(\alpha +d{\mathbb {Z}}_+\), where d and \(\alpha \) are integers which depend on the offspring distribution and, for \(\alpha \) only, on the type of the root. Particular care must thus be taken when counting the vertices of forests or specific subtrees.

We end this introduction by mentioning two papers which deal with similar limits and have appeared since the start of this work. The limit of the multi-type Galton–Watson process associated to the tree is studied in [28], while the authors of [3] are also interested in the local limit of the tree. The difference is that they focus on the aperiodic case and that they condition on the vector of population sizes of each type, and not on a linear function of it. It is, however, shown in [3] that, when we condition on only one type, our result can be deduced from theirs.

The paper is split into two halves: we start by working on trees and later on apply the results to maps. To be precise, after recalling facts about multi-type Galton–Watson trees in Sect. 2, we state in Sect. 3 the convergence of large critical multi-type Galton–Watson forests to their infinite counterpart, the proof of which is done in Sect. 4. Section 5 then states the basic background on planar maps, and we state and prove Theorem 6.1, our main theorem of convergence of maps, in Sect. 6. The final section is then dedicated to an application, namely showing that the infinite Boltzmann map is almost surely a recurrent graph.

2 Background on Multi-type Galton–Watson Trees

2.1 Basic Definitions

Multi-type Plane Trees We recall the standard formalism for family trees, first introduced by Neveu [27]. We denote by \(\mathbb {N}\) the set of strictly positive integers, and \(\mathbb {Z}_+\) the set of nonnegative integers. Let

$$\begin{aligned} {\mathcal {U}}=\bigcup _{n=0}^{\infty }\mathbb {N}^n \end{aligned}$$

be the set of finite words on \(\mathbb {N}\), also known as the Ulam–Harris tree. Elements of \({\mathcal {U}}\) are written as sequences \(u=u^1u^2\ldots u^k\), and we call \(|u|=k\) the height of u. We also let \(u^-=u^1u^2\ldots u^{k-1}\) be the father of u when \(k>0\). In the case of the empty word \(\emptyset \), we let \(|\emptyset |=0\) and we do not give it a father. If \(u=u^1\ldots u^k\) and \(v=v^1\ldots v^l\) are two words, we define their concatenation \(uv=u^1\ldots u^k v^1\ldots v^l.\)

A plane tree is a subset \({\mathbf {t}}\) of \({\mathcal {U}}\) which satisfies the following conditions:

- \(\emptyset \in {\mathbf {t}}\),

- \(u \in {\mathbf {t}}{\setminus }\{\emptyset \} \Rightarrow u^-\in {\mathbf {t}}\),

- \(\forall u\in {\mathbf {t}}, \exists k_u({\mathbf {t}})\in \mathbb {Z}_+, \forall j\in \mathbb {N}, uj\in {\mathbf {t}} \Leftrightarrow j \leqslant k_u({\mathbf {t}})\).

Given a tree \({\mathbf {t}}\) and an integer \(n\in \mathbb {Z}_+\), we let \({\mathbf {t}}_n=\{u\in {\mathbf {t}},|u|= n\}\) and \({\mathbf {t}}_{\leqslant n}=\{u\in {\mathbf {t}},|u|\leqslant n\}\). We call height of \({\mathbf {t}}\) the supremum \(ht({\mathbf {t}})\) of the heights of all its elements. If \(u\in {\mathbf {t}}\), we let \({\mathbf {t}}_u=\{v\in {\mathcal {U}},uv\in {\mathbf {t}}\}\) be the subtree of \({\mathbf {t}}\) rooted at u.

Note that the finiteness of \(k_u({\mathbf {t}})\) for any vertex u implies that all the trees which we consider are locally finite: a vertex can only have a finite number of neighbors. We do, however, allow infinite trees.
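As a purely illustrative aside, the three conditions defining a plane tree can be checked mechanically. The following Python sketch assumes a finite tree encoded as a set of tuples of positive integers, with the empty tuple standing for \(\emptyset \); the function names `is_plane_tree` and `num_children` are hypothetical and not part of the formalism above.

```python
def is_plane_tree(t):
    """Check the three plane-tree conditions for a finite set of words.

    Words are tuples of positive integers; () plays the role of the empty word.
    """
    if () not in t:
        return False  # the root must belong to the tree
    for u in t:
        if not u:
            continue
        if u[-1] < 1:
            return False  # letters must be strictly positive integers
        if u[:-1] not in t:
            return False  # the father u^- must belong to the tree
        # children are numbered 1, ..., k_u(t) without gaps:
        # if uj is present with j > 1, then u(j-1) must be present too
        if u[-1] > 1 and u[:-1] + (u[-1] - 1,) not in t:
            return False
    return True


def num_children(t, u):
    """Return k_u(t), the number of children of u in the plane tree t."""
    k = 0
    while u + (k + 1,) in t:
        k += 1
    return k
```

For instance, the set containing the words (), (1,), (2,) and (1, 1) passes the check, while the set containing only () and (2,) does not, since the child 2 of the root is present without the child 1.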

Let now \(K\in \mathbb {N}\) be an integer. We call \([K]=\{1,2,\ldots ,K\}\) the set of types. A K-type tree is then a pair \(({\mathbf {t}},{\mathbf {e}})\) where \({\mathbf {t}}\) is a plane tree and \({\mathbf {e}}\) is a function: \({\mathbf {t}}\rightarrow [K]\), which gives a type \({\mathbf {e}}(u)\) to every vertex \(u\in {\mathbf {t}}\). For a vertex \(u\in {\mathbf {t}}\), we also let \({\mathbf {w}}_{{\mathbf {t}}}(u)=\big ({\mathbf {e}}(u1),\ldots ,{\mathbf {e}}(uk_u({\mathbf {t}}))\big )\) be the list of types of the ordered offspring of u. Note of course that the knowledge of \({\mathbf {e}}(\emptyset )\) and of all the \({\mathbf {w}}_{{\mathbf {t}}}(u)\), \(u\in {\mathbf {t}}\) gives us the complete type function \({\mathbf {e}}\).

We let

$$\begin{aligned} \mathcal {W}_K=\bigcup _{n=0}^{\infty }[K]^n \end{aligned}$$

be the set of finite type-lists. Given such a list \({\mathbf {w}}\in \mathcal {W}_K\) and a type \(i\in [K]\), we let \(p_i({\mathbf {w}})=\#\{j,w_j=i\}\) and \(p({\mathbf {w}})=(p_i({\mathbf {w}}))_{i\in [K]}\). This defines a natural projection from \(\mathcal {W}_K\) onto \((\mathbb {Z}_+)^{K}\). We also let \(|{\mathbf {w}}|=\sum _i p_i({\mathbf {w}})\) be the length of \({\mathbf {w}}\). Elements of \(\mathcal {W}_K\) should be seen as orderings of types, such that the type i appears \(p_i({\mathbf {w}})\) times in the order \({\mathbf {w}}\).

Offspring Distributions We call ordered offspring distribution any sequence \(\mathbf {\zeta }=(\zeta ^{(i)})_{i\in [K]}\) where, for all \(i\in [K]\), \(\zeta ^{(i)}\) is a probability distribution on \(\mathcal {W}_K\). Letting for all i \(\mu ^{(i)}=p_*\zeta ^{(i)}\) be the image measure of \(\zeta ^{(i)}\) on \((\mathbb {Z}_+)^K\) by p, we then call \(\mu =(\mu ^{(i)})_{i\in [K]}\) the associated unordered offspring distribution.

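We will always assume the condition

$$\begin{aligned} \exists i\in [K],\quad \mu ^{(i)}\Big (\Big \{{\mathbf {z}}\in (\mathbb {Z}_+)^K,\ \sum _{j=1}^K z_j\ne 1\Big \}\Big )>0 \end{aligned}$$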

to avoid degenerate cases which lead to infinite linear trees.

Uniform Orderings Let us give details about a particular case of ordered offspring distribution. For \({\mathbf {n}}=(n_i)_{i\in [K]}\in (\mathbb {Z}_+)^K\), we call uniform ordering of \({\mathbf {n}}\) any uniformly distributed random variable on the set of words \({\mathbf {w}}\in \mathcal {W}_K\) satisfying \(p({\mathbf {w}})={\mathbf {n}}\). Such a random variable can be obtained by taking the word \((1,1,\ldots ,1,2,\ldots ,2,3,\ldots ,K,\ldots ,K)\) (where each i is repeated \(n_i\) times) and applying a uniform permutation to it. Now let \(\mathbf {\mu }=(\mu ^{(i)})_{i\in [K]}\) be a family of distributions on \((\mathbb {Z}_+)^K\), we call uniform ordering of \(\mathbf {\mu }\) the ordered offspring distribution \(\mathbf {\zeta }=(\zeta ^{(i)})_{i\in [K]}\) where, for each i, \(\zeta ^{(i)}\) is the distribution of a uniform ordering of a random variable with distribution \(\mu ^{(i)}\).
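The construction of a uniform ordering by shuffling can be sketched in a few lines of Python. This is only an illustration under our own conventions: the vector \({\mathbf {n}}\) is passed as a tuple whose entry of index i-1 is \(n_i\), and the names `uniform_ordering` and `p` are hypothetical helpers. Shuffling the sorted word uniformly gives a uniform law on the distinct words, since every word with the prescribed counts arises from the same number of permutations.

```python
import random


def uniform_ordering(n, rng=random):
    """Return a uniformly random word w with p(w) = n.

    n is a tuple (n_1, ..., n_K); type i is repeated n_i times.
    """
    # the word (1, ..., 1, 2, ..., 2, ..., K, ..., K)
    word = [i for i, count in enumerate(n, start=1) for _ in range(count)]
    rng.shuffle(word)  # apply a uniform permutation
    return tuple(word)


def p(w, K):
    """Projection of a type-list w onto (Z_+)^K: count each type."""
    return tuple(sum(1 for x in w if x == i) for i in range(1, K + 1))
```

Whatever the random outcome, the projection of the sampled word is the input vector, so that `p(uniform_ordering((2, 0, 3)), 3)` equals `(2, 0, 3)`.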

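Galton–Watson Distributions We can now define the distribution of a K-type Galton–Watson tree rooted at a vertex of type \(i\in [K]\) and with ordered offspring distribution \(\mathbf {\zeta }\), which we call \(\mathbb {P}^{(i)}_{\mathbf {\zeta }}\), by

$$\begin{aligned} \mathbb {P}^{(i)}_{\mathbf {\zeta }}({\mathbf {t}},{\mathbf {e}})={\mathbbm {1}}_{\{{\mathbf {e}}(\emptyset )=i\}}\prod _{u\in {\mathbf {t}}}\zeta ^{({\mathbf {e}}(u))}\big ({\mathbf {w}}_{{\mathbf {t}}}(u)\big ) \end{aligned}$$(2.1)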

for any finite tree \(({\mathbf {t}},{\mathbf {e}})\). This formula only defines a subprobability measure in general; however, in the cases which interest us (namely critical offspring distributions, see the next section) we will indeed have a probability distribution. In practice, we are not interested in this formula as much as in the branching property, which also characterizes these distributions: the types of the children of the root of a tree \(({\mathbf {T,E}})\) with law \(\mathbb {P}^{(i)}_{\mathbf {\zeta }}\) are determined by a random variable with law \(\zeta ^{(i)}\) and, conditionally on the offspring of the root being equal to a word \({\mathbf {w}}\), the subtrees rooted at points j with \(j\in [|{\mathbf {w}}|]\) are independent, the jth one having distribution \(\mathbb {P}^{(w_j)}_{\mathbf {\zeta }}\).
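The branching property translates directly into a sampling procedure. The sketch below is an illustration only, under assumptions of ours: a tree is stored as a dictionary mapping each word (a tuple) to its type, an ordered offspring distribution is a dictionary mapping each type to a list of (word, probability) pairs, and the two-type distribution `EXAMPLE_ZETA` is a hypothetical example. A cap on the number of vertices is added because the size of a critical tree has infinite expectation.

```python
import random
from collections import deque

# Hypothetical 2-type example: a vertex has no child or two children of the
# other type, each with probability 1/2.
EXAMPLE_ZETA = {
    1: [((), 0.5), ((2, 2), 0.5)],
    2: [((), 0.5), ((1, 1), 0.5)],
}


def sample_gw_tree(zeta, root_type, rng, max_vertices=10_000):
    """Sample a multi-type Galton-Watson tree as a dict word -> type.

    Returns None if the tree grows beyond max_vertices (a safeguard of
    this sketch, not a feature of the model).
    """
    tree = {(): root_type}
    queue = deque([()])
    while queue:
        u = queue.popleft()
        words, probs = zip(*zeta[tree[u]])
        # the ordered list of types of the children of u has law zeta^(e(u))
        w = rng.choices(words, weights=probs)[0]
        for j, child_type in enumerate(w, start=1):
            tree[u + (j,)] = child_type
            queue.append(u + (j,))
        if len(tree) > max_vertices:
            return None
    return tree
```

Processing the vertices through a queue rather than recursively avoids deep recursion on tall trees; the order in which vertices are visited does not affect the law of the result, since the offspring of distinct vertices are drawn independently.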

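Criticality Let \(M=(m_{i,j})_{i,j\in [K]}\) be the \(K\times K\) matrix defined by

$$\begin{aligned} m_{i,j}=\sum _{{\mathbf {z}}\in (\mathbb {Z}_+)^K}\mu ^{(i)}({\mathbf {z}})z_j. \end{aligned}$$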

We assume that M is irreducible, which means that, for all i and j in [K], there exists some power p such that the (i, j)th entry of \(M^p\) is nonzero. In this case, we know by the Perron–Frobenius theorem that the spectral radius \(\rho \) of M is in fact an eigenvalue of M. We say that \(\zeta \) (or \(\mu \), or M) is subcritical if \(\rho <1\) and critical if \(\rho =1\), which both in particular imply that Eq. (2.1) does define a probability distribution and that Galton–Watson trees with ordered offspring distribution \(\zeta \) are almost surely finite. We will always assume criticality in the rest of the paper. The Perron–Frobenius theorem also tells us that, up to multiplicative constants, the left and right eigenvectors of M for \(\rho \) are unique. We call them \({\mathbf {a}}=(a_1,\ldots ,a_K)\) and \({\mathbf {b}}=(b_1,\ldots ,b_K)\) and normalize them such that \(\sum _i a_i= \sum _i a_ib_i=1\), in which case their components are all strictly positive.
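To make the objects of this paragraph concrete, the following Python sketch computes the mean matrix of a small offspring distribution and approximates \(\rho \), \({\mathbf {a}}\) and \({\mathbf {b}}\). It is a sketch under stated assumptions rather than a reference implementation: the distribution `ZETA_ASYM` is a hypothetical example, and we run power iteration on \((M+I)/2\) instead of M, a choice of ours which keeps the same eigenvectors while avoiding the oscillations that occur when M is irreducible but periodic.

```python
# Hypothetical 2-type example: type 1 has two children of type 2 with
# probability 1/4 (none otherwise); type 2 always has two children of type 1.
ZETA_ASYM = {
    1: [((), 0.75), ((2, 2), 0.25)],
    2: [((1, 1), 1.0)],
}


def mean_matrix(zeta, K):
    """m[i-1][j-1] = expected number of type-j children of a type-i vertex."""
    M = [[0.0] * K for _ in range(K)]
    for i in range(1, K + 1):
        for word, prob in zeta[i]:
            for child_type in word:
                M[i - 1][child_type - 1] += prob
    return M


def perron(M, iters=2000):
    """Approximate (rho, a, b) for an irreducible nonnegative matrix M.

    a and b are left and right eigenvectors for rho, normalized so that
    sum(a) = 1 and sum(a_i * b_i) = 1.
    """
    K = len(M)
    A = [[(M[i][j] + (i == j)) / 2 for j in range(K)] for i in range(K)]
    a = [1.0] * K
    b = [1.0] * K
    for _ in range(iters):
        b = [sum(A[i][j] * b[j] for j in range(K)) for i in range(K)]
        s = sum(b)
        b = [x / s for x in b]
        a = [sum(a[i] * A[i][j] for i in range(K)) for j in range(K)]
        s = sum(a)
        a = [x / s for x in a]
    Mb = [sum(M[i][j] * b[j] for j in range(K)) for i in range(K)]
    rho = sum(Mb) / sum(b)  # M b = rho b, so the ratio of sums is rho
    scale = sum(x * y for x, y in zip(a, b))
    b = [x / scale for x in b]
    return rho, a, b
```

On the example above the mean matrix has entries \(m_{1,2}=1/2\) and \(m_{2,1}=2\), so that \(\rho =1\) and the distribution is critical, with \({\mathbf {a}}=(2/3,1/3)\) and \({\mathbf {b}}=(3/4,3/2)\).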

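The fact that \({\mathbf {b}}\) is a right eigenvector of M translates as

$$\begin{aligned} \forall i\in [K],\quad \sum _{{\mathbf {z}}\in (\mathbb {Z}_+)^K}\mu ^{(i)}({\mathbf {z}})\,{\mathbf {z}}\cdot {\mathbf {b}}=b_i, \end{aligned}$$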

where \(\cdot \) is the usual dot product. One can deduce from this the existence of a martingale naturally associated with the Galton–Watson tree. Let \(({\mathbf {T}},{\mathbf {E}})\) have distribution \(\mathbb {P}^{(i)}_{\mathbf {\zeta }}\) for some \(i\in [K]\) and, for all \(n\in \mathbb {Z}_+\) and \(j\in [K]\), let \(Z^{(j)}_n\) be the number of vertices of \({\mathbf {T}}\) which have height n and type j, and set \({\mathbf {Z}}_n=(Z^{(j)}_n)_{j\in [K]}\). Define then, for \(n\in \mathbb {Z}_{+}\),

$$\begin{aligned} X_n={\mathbf {Z}}_n\cdot {\mathbf {b}}=\sum _{j\in [K]}b_jZ^{(j)}_n. \end{aligned}$$

The process \((X_n)_{n\in \mathbb {Z}_+}\) is then a martingale.

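Finally, we say that \(\zeta \) (or \(\mu \)) is regular critical if, in addition to being critical, \(\zeta \) has small exponential moments in the following sense:

$$\begin{aligned} \exists z>1,\ \forall i\in [K],\quad \sum _{{\mathbf {w}}\in \mathcal {W}_K}\zeta ^{(i)}({\mathbf {w}})z^{|{\mathbf {w}}|}<\infty . \end{aligned}$$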

Spatial Trees Later on in this paper we will be looking at spatial K-type trees, that is trees coupled with labels on their vertices. We define a K-type spatial tree to be a triple \(({\mathbf {t}},{\mathbf {e}},{\mathbf {l}})\) where \(({\mathbf {t}},{\mathbf {e}})\) is a K-type tree and \({\mathbf {l}}\) is any real-valued function on \({\mathbf {t}}\). Note that, given \({\mathbf {t}}\), \({\mathbf {e}}\) and \({\mathbf {l}}(\emptyset )\), the rest of \({\mathbf {l}}\) is completely determined by the differences \({\mathbf {l}}(u)-{\mathbf {l}}(u^-)\) for \(u\in {\mathbf {t}}{\setminus }\{\emptyset \}\). This is why we let, for \(u\in {\mathbf {t}}\), \({\mathbf {y}}_u=\Big ({\mathbf {l}}(u1)-{\mathbf {l}}(u),{\mathbf {l}} (u2)-{\mathbf {l}}(u),\ldots ,{\mathbf {l}}\big (uk_u ({\mathbf {t}})\big )-{\mathbf {l}}(u)\Big )\in {\mathbb {R}}^{|{\mathbf {w}}_{{\mathbf {t}}}(u)|}\) be the list of ordered label displacements of the offspring of u.

Consider, for all types \(i\in [K]\) and words \({\mathbf {w}}\in \mathcal {W}_K\), a probability distribution \(\nu ^{(i)}_{{\mathbf {w}}}\) on \({\mathbb {R}}^{|{\mathbf {w}}|}\), as well as a number \(\varepsilon \). We let \(\mathbb {P}^{(i,\varepsilon )}_{\mathbf {\zeta },{\mathbf {\nu }}}\) be the distribution of a triple \(({\mathbf {T,E,L}})\) where \(({\mathbf {T,E}})\) is a K-type tree with distribution \(\mathbb {P}^{(i)}_{\mathbf {\zeta }}\), the root \(\emptyset \) has label \(\varepsilon \) and the label displacements \(\big ({\mathbf {L}}(u1)-{\mathbf {L}}(u), {\mathbf {L}}(u2)-{\mathbf {L}}(u),\ldots , {\mathbf {L}}(uk_u( {\mathbf {T}}))-{\mathbf {L}}(u)\big )\) (with \(u\in {\mathbf {T}}\)) are all independent, each one having distribution \(\nu ^{\big ({\mathbf {E}}(u)\big )}_{{\mathbf {w}}_{{\mathbf {T}}}(u)}\) conditionally on \({\mathbf {E}}(u)\) and \({\mathbf {w}}_{\mathbf {T}}(u)\).

Forests We will not only look at trees but also at multi-type (and, when needed, labelled) forests, a forest being defined as an ordered finite collection of trees: elements of the form \(({\mathbf {f,e,l}}) =\big (({\mathbf {t}}^1,{\mathbf {e}}^1,{\mathbf {l}}^1),\ldots , ({\mathbf {t}}^p,{\mathbf {e}}^p,{\mathbf {l}}^p)\big )\).

A Galton–Watson random forest will be a forest where the trees are mutually independent, and each one has a Galton–Watson distribution with the same ordered offspring distribution (and label increment distribution, in the labelled case). We can thus let, for \({\mathbf {w}}\in \mathcal {W}_K\), \(\mathbb {P}^{({\mathbf {w}})}_{\mathbf {\zeta }}\) be the distribution of \(({\mathbf {T}}^i,{\mathbf {E}}^i)_{i\in [|{\mathbf {w}}|]}\) where the \(({\mathbf {T}}^i,{\mathbf {E}}^i)\) are independent, and each \(({\mathbf {T}}^i,{\mathbf {E}}^i)\) has distribution \(\mathbb {P}^{(w_i)}_{\mathbf {\zeta }}\) and, given also a list of initial labels \(\varepsilon =(\varepsilon _1,\ldots ,\varepsilon _{|{\mathbf {w}}|})\), \(\mathbb {P}^{({\mathbf {w}}),(\varepsilon )}_{\mathbf {\zeta },\nu }\) be the distribution of \(({\mathbf {T}}^i,{\mathbf {E}}^i,{\mathbf {L}}^i)_{i\in [|{\mathbf {w}}|]}\) where the terms of the sequence are independent and, for a given i, \(({\mathbf {T}}^i,{\mathbf {E}}^i,\mathbf {L}^i)\) has distribution \(\mathbb {P}^{(w_i,\varepsilon _i)}_{\zeta ,\nu }.\)

All previous notation will be adapted to forests; for example, the height of a forest \({\mathbf {f}}\) is the maximum of the heights of its elements, \({\mathbf {f}}_{\leqslant n}\) is the forest where each tree has been cut at height n, and so on.

Remarks Concerning Notation For readability, we will throughout the paper use the canonical variable \(({\mathbf {T}},{\mathbf {E}})\), which is simply the identity function of the space of K-type trees, as well as \(({\mathbf {T}},{\mathbf {E}},{\mathbf {L}})\), \((\mathbf {F},{\mathbf {E}})\) and \(({\mathbf {F}},{\mathbf {E}},{\mathbf {L}})\) when looking at labelled trees or forests. Thus, we will, for instance, write \(\mathbb {P}^{(i)}_{\zeta }\big (({\mathbf {T}},{\mathbf {E}})=({\mathbf {t}},{\mathbf {e}})\big )\) instead of \(\mathbb {P}^{(i)}_{\zeta }({\mathbf {t}},{\mathbf {e}})\), for a given type i and a given K-type tree \(({\mathbf {t}},{\mathbf {e}})\).

Moreover, since we will never change the types and labels of vertices of a tree, we will often drop \({\mathbf {e}}\) and \({\mathbf {l}}\) from the notation, once again for readability, and, in the same vein, since we only consider one offspring distribution at a time, we also often drop \(\zeta \) from the \(\mathbb {P}_{\zeta }\) notation.

Local Convergence of Multi-type Trees and Forests Take a sequence of K-type forests \(({\mathbf {f}}^{(n)},{\mathbf {e}}^{(n)})_{n\in \mathbb {N}}\). We say that this sequence converges locally to a K-type forest \(({\mathbf {f}},{\mathbf {e}})\) if, for all \(k\in \mathbb {N}\), and \(n\in \mathbb {N}\) large enough (depending on k), we have \(({\mathbf {f}}^{(n)}_{\leqslant k},{\mathbf {e}}^{(n)}_{\leqslant k})=({\mathbf {f}}_{\leqslant k},{\mathbf {e}}_{\leqslant k})\). This convergence can be metrized: we can, for example, set, for two K-type forests \(({\mathbf {f}},{\mathbf {e}})\) and \(({\mathbf {f}}',{\mathbf {e}}')\), \(d\big (({\mathbf {f}},{\mathbf {e}}),({\mathbf {f}}',{\mathbf {e}}')\big )=\frac{1}{1+p}\) where p is the supremum of all integers k such that \(({\mathbf {f}}_{\leqslant k},{\mathbf {e}}_{\leqslant k})=({\mathbf {f}}'_{\leqslant k},{\mathbf {e}}'_{\leqslant k})\).
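The metric above is easy to compute on finite examples. The following Python sketch does so for single trees rather than forests, a simplification of ours, with a tree stored as a dictionary mapping words (tuples) to types; the names `truncate` and `local_distance` are hypothetical. When the two roots already have different types, no k satisfies the equality, and the sketch returns 1, which amounts to the assumed convention \(p=0\) in that case.

```python
def truncate(tree, k):
    """Restriction of a typed tree (dict word -> type) to heights <= k."""
    return {u: typ for u, typ in tree.items() if len(u) <= k}


def local_distance(t1, t2):
    """Distance 1 / (1 + p), p being the largest k with equal truncations."""
    if t1 == t2:
        return 0.0  # p is infinite
    if truncate(t1, 0) != truncate(t2, 0):
        return 1.0  # assumed convention when the roots have different types
    k = 0
    # terminates for distinct finite trees: they differ at some finite height
    while truncate(t1, k + 1) == truncate(t2, k + 1):
        k += 1
    return 1.0 / (1 + k)
```

For example, a tree whose root of type 1 has a single child of type 2, and the same tree with one grandchild added, agree up to height 1 and differ at height 2, so their distance is 1/2.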

Convergence in distribution of random forests for this metric is simply characterized: if \(({\mathbf {F}}^{(n)},{\mathbf {E}}^{(n)})_{n\in \mathbb {N}}\) is a sequence of random K-type forests, it converges in distribution to a certain random forest \(({\mathbf {F}},{\mathbf {E}})\) if and only if, for all \(k\in \mathbb {N}\) and finite K-type forests \(({\mathbf {f}},{\mathbf {e}})\), the quantity \(\mathbb {P}\big (({\mathbf {F}}^{(n)}_{\leqslant k},{\mathbf {E}}^{(n)}_{\leqslant k})=({\mathbf {f}},{\mathbf {e}})\big )\) converges to \(\mathbb {P}\big (({\mathbf {F}}_{\leqslant k},{\mathbf {E}}_{\leqslant k})=({\mathbf {f}},{\mathbf {e}})\big ).\)

All these definitions can directly be adapted to the case of spatial forests: when asking for equality between the forests below height k, we also ask equality of the labels below this height.

2.2 Cutting a Tree at the First Generation of Fixed Type

In this section, we fix a reference type \(j\in [K]\). We are interested in the first generation of type j, that is, in a K-type tree \({\mathbf {t}}\), the set of vertices of \({\mathbf {t}}\) with type j which have no ancestors of type j, except maybe for the root. We then call \(C_j({\mathbf {t}})\) the tree formed by all the vertices which lie below or on the first generation of type j, including all vertices which lie on branches with no individuals of this type. If \({\mathbf {T}}\) has distribution \(\mathbb {P}^{(i)}_{\zeta }\) for some type i, we let \(\mathbb {P}^{(i)}_{\text {cut}_j}\) be the distribution of \(C_j({\mathbf {T}})\) and let \(\mu _{i,j}\) be the distribution of the number of leaves of \(C_j({\mathbf {T}})\) which have type j (that is, the size of the first generation of type j in \(\mathbf {T}\)). Finally, if \(\mathbf {T}\) has distribution \(\mathbb {P}^{(j)}\), we let \(\xi _{i,j}\) be the distribution of the number of vertices of type i in \(C_j({\mathbf {T}})\) (excluding the root, so that when \(i=j\) we end up with \(\xi _{j,j}=\mu _{j,j}\)).
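The cutting operation can be illustrated with a short Python sketch, again assuming (our convention, not the paper's) that a typed tree is a dictionary mapping words to types. A vertex is kept in \(C_j({\mathbf {t}})\) exactly when none of its strict ancestors other than the root has type j; the function names are hypothetical.

```python
def cut_at_first_generation(tree, j):
    """Return C_j(t): vertices with no strict non-root ancestor of type j."""
    kept = {}
    for u, typ in tree.items():
        # strict non-root ancestors of u are the prefixes u[:m], 0 < m < len(u)
        if all(tree[u[:m]] != j for m in range(1, len(u))):
            kept[u] = typ
    return kept


def first_generation(tree, j):
    """Vertices of type j in C_j(t), root excluded: first generation of type j."""
    cut = cut_at_first_generation(tree, j)
    return sorted(u for u, typ in cut.items() if u and typ == j)
```

On a sample from \(\mathbb {P}^{(i)}_{\zeta }\), the length of the list returned by `first_generation` has distribution \(\mu _{i,j}\).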

The following proposition gives a few properties of the moments of the \(\mu _{i,j}\) and \(\xi _{i,j}\). Most of them are already proven in [25].

Proposition 2.1

Let i and j be two different types.

- (i) $$\begin{aligned} \sum _{k=0}^{\infty } k\mu _{i,j}(k)=\frac{b_i}{b_j}. \end{aligned}$$

- (ii) $$\begin{aligned} \sum _{k=0}^{\infty } k\xi _{i,j}(k)=\frac{a_i}{a_j}. \end{aligned}$$

- (iii) Assume that \(\zeta \) has finite second moments. Then $$\begin{aligned} \text {Var}(\mu _{i,i})=\frac{\sigma ^2}{a_ib_i^2}, \end{aligned}$$where the number \(\sigma > 0\) is defined by \(\sigma ^2=\sum _{i,j,k} a_ib_jb_kQ^{(i)}_{j,k}\), with \(Q^{(i)}_{j,j}=\sum _{{\mathbf {z}}\in (\mathbb {Z}_+)^K} \mu ^{(i)}({\mathbf {z}}) z_j(z_j-1)\) and \(Q^{(i)}_{j,k}=\sum _{{\mathbf {z}}\in (\mathbb {Z}_+)^K} \mu ^{(i)}({\mathbf {z}}) z_jz_k\) for \(j\ne k\).

- (iv) Assume that \(\zeta \) is regular critical. Then \(\mu _{i,i}\) and \(\xi _{i,j}\) also have some finite exponential moments: $$\begin{aligned} \exists z>1, \sum _{n\in \mathbb {Z}_+} \mu _{i,i}(n) z^n<\infty \text { and } \sum _{n\in \mathbb {Z}_+} \xi _{i,j}(n)z^n<\infty . \end{aligned}$$

Proof

We start with point (i). We fix \(j\in [K]\) and, for all \(i\in [K]\), let \(c_i=\sum _{k=0}^{\infty } k\mu _{i,j}(k)\). The proof that \(c_i=\frac{b_i}{b_j}\) for all i is done in two steps: first show that \(c_j=1\) and then that the vector \({\mathbf {c}}=(c_i)_{i\in [K]}\) is a right eigenvector of M for the eigenvalue 1.

The fact that \(c_j=1\) is proven in [25], Proposition 4, (i). It is obtained by removing the types different from j one by one, and noticing that criticality is conserved at every step until we are left with a critical monotype Galton–Watson tree.

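To prove that \({\mathbf {c}}\) is a right eigenvector of M, consider a type \(i\in [K]\) and apply the branching property at height 1 in a tree with distribution \(\mathbb {P}^{(i)}_{\zeta }\). Each child of the root with type j contributes exactly one vertex to the first generation of type j, while each child with type \(l\ne j\) contributes \(c_l\) vertices on average. Since \(c_j=1\), we get

$$\begin{aligned} c_i=m_{i,j}+\sum _{l\ne j}m_{i,l}c_l=\sum _{l=1}^K m_{i,l}c_l. \end{aligned}$$

Since \(\sum _{l=1}^K m_{i,l}c_l\) is the ith component of \(M{\mathbf {c}}\), the vector \({\mathbf {c}}\) is a right eigenvector of M for the eigenvalue 1. By the uniqueness statement of the Perron–Frobenius theorem, it is proportional to \({\mathbf {b}}\), and the normalization \(c_j=1\) gives \(c_i=\frac{b_i}{b_j}\), which completes the proof.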

Point (ii) was also proven in [25], as part of the proof of Proposition 4, (ii). Similarly, points (iii) and (iv) feature in [25], Proposition 4. \(\square \)

2.3 Size of a Tree and Periodicity

As said earlier, we plan on conditioning trees on being large. To this end, we need to define a notion of “size” of a tree. One natural notion of size would be the total number of vertices of the tree. Another one, which, as will be shown later, is easier to work with combinatorially, would be to count only the number of vertices of one fixed type. We propose a fairly general notion of size which contains the above two examples: let \(\gamma =(\gamma _1,\ldots ,\gamma _K)\) be a vector of nonnegative integers, one of them at least being nonzero. We then let, for a K-type tree \({\mathbf {(t,e)}}\),

$$\begin{aligned} |{\mathbf {t}}|_{\gamma }=\sum _{i\in [K]}\gamma _i\,\#_i({\mathbf {t}}), \end{aligned}$$

where \(\#_i({\mathbf {t}})\) denotes the number of vertices of \({\mathbf {t}}\) with type \(i\in [K]\).
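As a small illustration, the \(\gamma \)-size is a weighted vertex count. The Python sketch below assumes, as a convention of ours, that a typed tree is a dictionary mapping words to types and that \(\gamma \) is a tuple indexed from type 1; the name `gamma_size` is hypothetical.

```python
def gamma_size(tree, gamma):
    """Weighted size of a typed tree: each vertex of type i counts gamma[i-1]."""
    return sum(gamma[typ - 1] for typ in tree.values())
```

Taking \(\gamma =(1,\ldots ,1)\) recovers the total number of vertices, while taking \(\gamma _i={\mathbbm {1}}_{i=1}\) counts the vertices of type 1 only.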

One consequence of criticality is that, while a Galton–Watson tree with ordered offspring distribution \(\zeta \) is almost surely finite, the expected value of its size is infinite.

Lemma 2.1

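For all \(i\in [K]\), we have

$$\begin{aligned} {\mathbb {E}}^{(i)}\big [|{\mathbf {T}}|_{\gamma }\big ]=\infty . \end{aligned}$$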

Proof

We just need to check that, for all \(j\in [K]\), we have \({\mathbb {E}}^{(i)}\big [\#_j{\mathbf {T}}\big ]=\infty .\) Let us generalize the previous section by calling, recursively, for \(k>1\), the kth generation of type j of a tree the set of its vertices of type j whose closest ancestor of type j is in the \((k-1)\)th generation of type j. By Proposition 2.1, point (i), the number of vertices on each of those generations has expected value \(\frac{b_i}{b_j}>0\), and thus, their sum has infinite expected value. \(\square \)

As it happens, when \({\mathbf {(T,E)}}\) is a Galton–Watson tree, \(|{\mathbf {T}}|_{\gamma }\) cannot take arbitrary integer values. For example, in a classical monotype tree, if an individual can only have an even number of children, then the total number of vertices in the tree has to be odd. Here is a precise statement for the general case.

Proposition 2.2

There exists an integer \(d\in \mathbb {N}\) and integers \(\alpha _i\) in \(\{0,1,\ldots ,d-1\}\) for all types \(i\in [K]\) such that, for \(n\in \mathbb {Z}_+\):

- if \(\mathbb {P}^{(i)}(|{\mathbf {T}}|_{\gamma }=n)>0\), then \(n\equiv \alpha _i \pmod d\).

- if \(n\equiv \alpha _i \pmod d\) and n is large enough, then \(\mathbb {P}^{(i)}(|\mathbf {T}|_{\gamma }=n)>0\).

Remark 1

This immediately extends to forests: if \({\mathbf {w}}\in \mathcal {W}_K\), let \(\alpha _{{\mathbf {w}}}\in \{0,\ldots ,d-1\}\) be such that \(\alpha _{{\mathbf {w}}}\equiv \sum _{k=1}^{|{\mathbf {w}}|} \alpha _{w_k} \pmod d\). Then the size of a forest with distribution \(\mathbb {P}^{({\mathbf {w}})}_{\zeta }\) is a.s. of the form \(\alpha _{{\mathbf {w}}}+dn\) with \(n\in \mathbb {Z}_+\) and, if n is large enough, the forest has size \(\alpha _{{\mathbf {w}}}+dn\) with nonzero probability.

The proof of Proposition 2.2 requires the following lemma, which is a variant of the well-known “Frobenius coin problem.”

Lemma 2.2

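Let \(n_1,\ldots ,n_p\) be p nonnegative integers and let \(d=\gcd (n_1,\ldots ,n_p)\). There exist integers \(N_2,\ldots ,N_p\) such that the set

$$\begin{aligned} \Big \{\sum _{i=1}^p k_in_i,\ k_1\in \mathbb {Z}_+,\ k_i\in \{0,\ldots ,N_i\} \text { for } i\in \{2,\ldots ,p\}\Big \} \end{aligned}$$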

contains all large enough multiples of d.

The values of \(N_2,\ldots ,N_p\) are of no importance for us in this paper. All we need to know is that, when adding multiples of \(n_1,\ldots ,n_p\), if we allow all multiples of \(n_1\), then we only need a finite amount of multiples of the others.

Proof

A straightforward induction shows that, if we can prove Lemma 2.2 in the case \(p=2\), then we can generalize it to all p. We will thus restrict ourselves to the case where \(p=2\), and can in fact further simplify the problem by dividing \(n_1\) and \(n_2\) by their gcd, making them coprime. In this case, \(N_2\) and “large enough” can easily be made explicit: we will show that every integer greater than or equal to \(n_1n_2\) can be written as \(k_1n_1+k_2n_2\) with \(k_1\in \mathbb {Z}_+\) and \(k_2\in \{0,\ldots ,n_1-1\}\).

Let \(n\geqslant n_1n_2\). Since \(n_1\) and \(n_2\) are coprime, \(n_2\) is invertible modulo \(n_1\), and we know that there exists \(k_2\) in \(\{0,\ldots ,n_1-1\}\) such that \(k_2n_2 \equiv n \pmod {n_1}\). Therefore, there exists \(k_1\in \mathbb {Z}\) such that \(n-k_2n_2=k_1n_1\), and since \(n\geqslant n_1n_2\) and \(k_2 \leqslant n_1-1\), we have \(n-k_2n_2\geqslant n_2>0\) and thus \(k_1\geqslant 0\). \(\square \)
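The argument of this proof is constructive, and can be checked numerically. The following Python sketch follows it step by step for coprime positive integers \(n_1\) and \(n_2\); the function name `represent` is hypothetical, and the search for \(k_2\) is done by brute force rather than by computing a modular inverse, which is a simplification of ours.

```python
from math import gcd


def represent(n, n1, n2):
    """Write n = k1 * n1 + k2 * n2 with k1 >= 0 and 0 <= k2 <= n1 - 1.

    Assumes n1, n2 are coprime positive integers and n >= n1 * n2.
    """
    assert n1 >= 1 and n2 >= 1 and gcd(n1, n2) == 1 and n >= n1 * n2
    # k2 is the unique residue in {0, ..., n1 - 1} with k2 * n2 = n (mod n1)
    k2 = next(k for k in range(n1) if (n - k * n2) % n1 == 0)
    k1 = (n - k2 * n2) // n1  # nonnegative since n - k2 * n2 >= n2 > 0
    return k1, k2
```

The bound \(n_1n_2\) is what the proof provides and is not claimed to be optimal.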

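Throughout the following proof, we use for \(i\in [K]\) the notation \(\mathbb {T}^{(i)}_{\zeta }\) for the set of trees which can be obtained with positive probability starting with a root of type i:

$$\begin{aligned} \mathbb {T}^{(i)}_{\zeta }=\Big \{({\mathbf {t}},{\mathbf {e}}),\ \mathbb {P}^{(i)}_{\zeta }\big (({\mathbf {T}},{\mathbf {E}})=({\mathbf {t}},{\mathbf {e}})\big )>0\Big \}. \end{aligned}$$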

Proof of Proposition 2.2

Let \(j\in [K]\) be a type such that \(\zeta ^{(j)}(\emptyset )>0\), i.e., an individual of type j can die without having any children. We start by proving the existence of d and \(\alpha _j\) by focusing on what happens when we jump from one generation of type j to the next. Let

$$\begin{aligned} G=\big \{|{\mathbf {t}}|_{\gamma },\ {\mathbf {t}}\in \mathbb {T}^{(j)}_{\zeta }\big \}. \end{aligned}$$

Let us also introduce some notation: if \(A_1,\ldots ,A_p\) are subsets of \(\mathbb {Z}\), then we let \(A_1+\ldots +A_p\) be their sumset, that is the set of integers which can be obtained as sums \(\sum _{i=1}^p a_i\) with \(a_i\in A_i\) for all i. We also let \(G^{+p}\) be the p-fold iterated sumset of G:

$$\begin{aligned} G^{+p}=\underbrace{G+\cdots +G}_{p \text { times}}. \end{aligned}$$

The set G can be obtained inductively by cutting \(\mathbf {T}\) at its first generation of type j, and then grafting new trees at each vertex of this generation. To be precise, take a tree \({\mathbf {t}}\in \mathbb {T}^{(j)}_{\zeta }\). Let \(a_{{\mathbf {t}}}\) be the sum of all the \(\gamma \)-weights of all the vertices which do not have type j in the cut tree \(C_j({\mathbf {t}})\), and let \(p_{{\mathbf {t}}}\) be the number of vertices in the first generation of type j of \({\mathbf {t}}\). We then have

$$\begin{aligned} G=\bigcup _{{\mathbf {t}}\in \mathbb {T}^{(j)}_{\zeta }}\big (\gamma _j+a_{{\mathbf {t}}}+G^{+p_{{\mathbf {t}}}}\big ). \end{aligned}$$

Note of course that there is much redundancy in this union, since \(a_{{\mathbf {t}}}\) and \(p_{{\mathbf {t}}}\) only depend on \(C_j({\mathbf {t}})\) (\({\mathbf {t}}\) up to its first generation of type j). Next, we do some reindexing to remove the overlap, and at the same time separate the union into three classes:

- the tree with only one vertex (of type j) is isolated in its own class.

- the second class contains the trees with no vertices of type j except for the root.

- the third class contains all the other possible trees cut at their first generation of type j.

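We thus obtain

$$\begin{aligned} G=\{\gamma _j\}\cup \bigcup _{x\in X}\{\gamma _j+a_x\}\cup \bigcup _{y\in Y}\big (\gamma _j+b_y+G^{+p_y}\big ), \end{aligned}$$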

where X and Y are two abstract sets which we need not worry about, \(a_x\in \mathbb {N}\) for all \(x\in X\) and \((b_y,p_y)\in \mathbb {Z}_+\times \mathbb {N}\) for \(y\in Y\). Note that Y is non-empty (by criticality or irreducibility).

It then follows that

From this, let

and let \(\alpha _j\in \{0,\ldots ,d-1\}\) be the remainder of \(\gamma _j\) mod d. Then it is immediate that \(G\subset \alpha _j+d\mathbb {Z}_+\), and Lemma 2.2 ensures that G contains all large enough members of \(\alpha _j+d\mathbb {Z}_+\) (the condition \(\sum _{x\in X}k_x \leqslant \sum _{y\in Y}k_yp_y\) being weaker than the condition of Lemma 2.2, we in fact only need all the \(k_x\) and \(k_y\) to be in a finite set, except for any one specific \(k_y\)).

Now, let i be a different type from j. We first want to show that, if \({\mathbf {t}}\) and \({\mathbf {t'}}\) are both in \(\mathbb {T}^{(i)}_{\zeta }\), then \(|{\mathbf {t}}|_{\gamma }\equiv |{\mathbf {t'}}|_{\gamma } \pmod d\). To this end, consider a tree \({\mathbf {t}}^0\) in \(\mathbb {T}^{(j)}_{\zeta }\) which contains at least one vertex u of type i (such a tree exists by virtue of irreducibility). Now let \({\mathbf {t}}^1\) and \({\mathbf {t}}^2\) be \({\mathbf {t}}^0\) except that we replace the subtree rooted at u by, respectively, \({\mathbf {t}}\) and \({\mathbf {t'}}\). Both \({\mathbf {t}}^1\) and \({\mathbf {t}}^2\) also belong to \(\mathbb {T}^{(j)}_{\zeta }\), which implies that \(|{\mathbf {t}}^1|_{\gamma }\equiv |{\mathbf {t}}^2|_{\gamma }\equiv \alpha _j \pmod d\), which itself implies \(|{\mathbf {t}}|_{\gamma }\equiv |{\mathbf {t'}}|_{\gamma } \pmod d\). This shows the existence of \(\alpha _i\).

Finally, we want to show that, if n is large enough, then \(\mathbb {P}^{(i)} \big (|{\mathbf {T}}|_{\gamma }=\alpha _i+dn\big )>0\). Take any tree \({\mathbf {(t,e)}}\in \mathbb {T}^{(i)}_{\zeta }\) which contains at least one vertex u of type j. Let m and p be integers such that \(|{\mathbf {t}}|_{\gamma }=\alpha _i+dm\) and \(|{\mathbf {t}}_u|_{\gamma }=\alpha _j+dp\), where \({\mathbf {t}}_u\) is the subtree rooted at u. We know that, if n is large enough, there exists \({\mathbf {t'}}\) in \(\mathbb {T}^{(j)}_{\zeta }\) such that \(|{\mathbf {t'}}|_{\gamma }=\alpha _j+d(n+p-m)\). Replacing \({\mathbf {t}}_u\) by \({\mathbf {t'}}\) in \({\mathbf {t}}\) then yields a tree with size \(\alpha _i+dn\) which itself is in \(\mathbb {T}^{(i)}_{\zeta }\), thus ending our proof. \(\square \)
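
For the reader's convenience, the size of the tree obtained after the replacement can be checked directly. The computation below is a short sketch which only uses the additivity of \(|\cdot |_{\gamma }\) over vertices (an assumption on our part about the definition of the size) and the three identities stated in the proof.

```latex
\[
\begin{aligned}
|\mathbf{t}|_{\gamma} - |\mathbf{t}_u|_{\gamma} + |\mathbf{t'}|_{\gamma}
  &= (\alpha_i + dm) - (\alpha_j + dp) + \big(\alpha_j + d(n+p-m)\big) \\
  &= \alpha_i + dn.
\end{aligned}
\]
```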

Proposition 2.2 can be refined in the case where we only count the number of vertices of one specific type. We leave the proof of the following corollary to the reader.

Corollary 2.1

Assume that \(\gamma _i={\mathbbm {1}}_{i=1}\). Then:

-

the period d is the gcd of the support of \(\mu _{1,1}\), and \(\alpha _1=1\).

-

for \(i\in \{2,\ldots ,K\}\) the measure \(\mu _{i,1}\) is supported on \(\alpha _i+d\mathbb {Z}_+\).

3 Infinite Multi-type Galton–Watson Trees and Forests

In this section we will consider unlabelled trees and forests with a critical ordered offspring distribution \(\mathbf {\zeta }\), and will omit mentioning \(\mathbf {\zeta }\) for readability purposes. We could in fact work with spatial trees; however, since the labellings are done conditionally on the tree and in independent fashion for each vertex, the reader can check that the proofs do not change at all if we add the labellings in.

Just as in the case of critical monotype Galton–Watson trees, multi-type trees have an infinite variant which is obtained through a size-biasing method which was first introduced in [17].

3.1 Existence of the Infinite Forest

Proposition 3.1

Let \({\mathbf {w}}\in \mathcal {W}\). There exists a unique probability measure \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\) on the space of infinite K-type forests such that, for any \(n\in \mathbb {Z}_{+}\) and for any finite K-type forest \({\mathbf {f}}\) with height n,

where the normalizing constant \(Z_{{\mathbf {w}}}\) is equal to \(\sum _{i=1}^{|{\mathbf {w}}|} b_{w_i}=p({\mathbf {w}})\cdot {\mathbf {b}}.\)

Proof

Our proof follows the structure of the one given in [20] for monotype trees. Let \(n\in \mathbb {Z}_{+}\). We first define a probability distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_n\) on the space of K-type forests with height exactly n paired with a point of height n. Let \(({\mathbf {f}},{\mathbf {e}})\) be such a forest and \(u\in {\mathbf {f}}_n\), and set

The martingale property of the process \((X_n)_{n\in \mathbb {Z}_{+}}\) defined by (2.2) under \(\mathbb {P}^{({\mathbf {w}})}\) ensures that these are indeed probability measures: the total mass of \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_n\) is \(\frac{1}{Z_{{\mathbf {w}}}} {\mathbb {E}}^{({\mathbf {w}})}_{\mathbf {\zeta }}[X_n] =\frac{p({\mathbf {w}})\cdot {\mathbf {b}}}{Z_{{\mathbf {w}}}}=1\).

We will check that these are compatible in the sense that, for \(n\in \mathbb {Z}_{+}\), if \(({\mathbf {F}},U)\) has distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_{n+1}\) then \(({\mathbf {F}}_{\leqslant n},U^-)\) has distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_n\). Fix therefore a K-type forest \({\mathbf {f}}\) of height n and a vertex u of \({\mathbf {f}}\) at height n. We have

Kolmogorov’s consistency theorem then allows us to define a distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_{\infty }\) on the set of forests where one of the trees has a distinguished infinite path. Forgetting the infinite path then gives us the distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\) which we were looking for. \(\square \)

For \(n\in \mathbb {Z}_+\), \({\mathbf {f}}\) a forest of height \(n+1\) and \(u\in {\mathbf {f}}_{n+1}\), we have

From this formula follows a simple description of these infinite forests.

Given a type \(i\in [K]\), a random tree with distribution \(\widehat{\mathbb {P}}^{(i)}\) can be described in the following way: it is made of a spine, that is an infinite ascending chain starting at the root, on which we have grafted independent trees with ordered offspring distribution \(\mathbf {\zeta }\). Elements of the spine have a different offspring distribution, called \(\widehat{\mathbf {\zeta }}\), which is a size-biased version of \(\mathbf {\zeta }\). It is defined by

with \(j\in [K]\) and \({\mathbf {x}}\in \mathcal {W}_K\). Given an element of the spine \(u\in {\mathcal {U}}\) and its offspring \({\mathbf {x}}\in \mathcal {W}_K\), the probability that the next element of the spine is uj for \(j\in [|{\mathbf {x}}|]\) is proportional to \(b_{x_j}\), and therefore equal to

$$\begin{aligned} \frac{b_{x_j}}{\sum _{l=1}^{|{\mathbf {x}}|}b_{x_l}}. \end{aligned}$$

To get a forest with distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\), first let J be a random variable taking values in \([|{\mathbf {w}}|]\) such that \(J=j\) with probability proportional to \(b_{w_j}\). Conditionally on J, let \(\mathbf {T}_J\) be a tree with distribution \(\widehat{\mathbb {P}}^{(w_J)}\), and let \({\mathbf {T}}_i\), for \(i\in [|{\mathbf {w}}|]\), \(i\ne J\), be a tree with distribution \(\mathbb {P}^{(w_i)}\), all these trees being mutually independent. Then the forest \(({\mathbf {T}}_i)_{i\in [|{\mathbf {w}}|]}\) has distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\).

Remark 2

Recall that a tree with law \(\mathbb {P}^{(i)}\) is finite for any \(i\in [K]\). Therefore, a forest with distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\) can only have one infinite path, and thus, we do not lose any information by going from \(\widehat{\mathbb {P}}^{({\mathbf {w}})}_{\infty }\) to \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\).

3.2 Convergence to the Infinite Forest

Recall from Sect. 2.3 the notations d and \(\alpha _{{\mathbf {w}}}\): the size of a forest with distribution \(\mathbb {P}^{({\mathbf {w}})}_{\zeta }\) is always of the form \(\alpha _{{\mathbf {w}}}+dn\).

Theorem 3.1

Assume one of the following:

-

\(\gamma _j={\mathbbm {1}}_{j=1}\) for \(j\in [K]\).

-

\(\zeta \) is regular critical.

As n tends to infinity, a forest \({\mathbf {F}}\) with distribution \(\mathbb {P}^{({\mathbf {w}})}\), conditioned on \(|{\mathbf {F}}|_{\gamma }=\alpha _{{\mathbf {w}}}+dn\), converges in distribution to a forest with distribution \(\widehat{\mathbb {P}}^{({\mathbf {w}})}\). In other words, given a forest \(({\mathbf {f}},{\mathbf {e}})\) of height k, we have

This theorem is split into two quite distinct parts. For the first part, we assume that the notion of size of a tree we take is simply the number of vertices of one fixed type, which we can take as 1 by symmetry. In this case, the theorem will be proved with purely combinatorial tools, notably ratio limit theorems for random walks. In the second part, we do not make any assumptions on \(\gamma \), and in exchange for that we have to restrict ourselves to the case where the offspring distribution has exponential moments. The result will then be proved with the help of techniques from analytic combinatorics.

4 Proof of Theorem 3.1

4.1 The Main Ingredient

Whether we count only one type of vertex or the offspring distribution is regular critical, the proof of Theorem 3.1 will rely on the following asymptotic equivalence, indexed by any word \({\mathbf {w}}\in \mathcal {W}_K\):

Equation \((H_{{\mathbf {w}}})\) means that, when we ask for a forest to have size of order dn with large n, exactly one of its tree components will have size of order dn, while the others will be comparatively microscopic.

Proof that Theorem 3.1 Follows from \((H_{{\mathbf {w}}})\) Take a K-type forest \({\mathbf {f}}\) with height \(k\in \mathbb {N}\), and let \({\mathbf {x}}\in \mathcal {W}_K\) be the word obtained by taking the types of the vertices of \({\mathbf {f}}\) with height k (the order of the elements of \({\mathbf {x}}\) actually has no influence). For n large enough, we have

where \(q=|{\mathbf {f}}_{\leqslant k-1}|_{\gamma }\). By the results of Sect. 2.3, if \(\mathbb {P}^{({\mathbf {w}})}\big ({\mathbf {F}}_{\leqslant k}={\mathbf {f}}\big )>0\) then \(\alpha _{{\mathbf {w}}}-q\) must be congruent to \(\alpha _{{\mathbf {x}}}\) modulo d, giving us

for some signed integer p. Now if we let n tend to infinity, using both \((H_{{\mathbf {w}}})\) and \((H_{{\mathbf {x}}})\), we obtain

which concludes the proof of Theorem 3.1, assuming \((H_{{\mathbf {w}}})\). \(\square \)

4.2 Proving \((H_{{\mathbf {w}}})\) When Counting Only One Type

We assume from now on that \(\gamma _j={\mathbbm {1}}_{j=1}\) for all \(j\in [K]\), and will therefore write \(\#_1 {\mathbf {T}}\) for \(|{\mathbf {T}}|_{\gamma }\). Recall from Sect. 2.3 in particular that d is the gcd of the support of \(\mu _{1,1}\) and that \(\alpha _1=1\).

Obtaining \((H_{{\mathbf {w}}})\) for every word \({\mathbf {w}}\) will be done in several small steps. We will first prove it for some fairly simple words and gradually enlarge the class of \({\mathbf {w}}\) for which it holds, until we have every element of \(\mathcal {W}_K\).

4.2.1 Ratio Limit Theorems for a Random Walk

Let \((S_n)_{n\in \mathbb {N}}\) be a random walk which starts at 0 and whose jumps are all greater than or equal to \(-1\), their distribution being given by \(\mathbb {P}(S_1=k)=\mu _{1,1}(k+1)\) for \(k\geqslant -1\).

Lemma 4.1

For all \(\alpha \in \{0,\ldots ,d-1\}\) we have

Proof

The first thing to notice is that the random walk \((\frac{S_{dn}}{d})_{n\in \mathbb {N}}\) is irreducible, recurrent and aperiodic on \(\mathbb {Z}\). First, it is indeed integer-valued because, by definition, for every n, \(S_{n+1}\equiv S_n-1\pmod d\), and thus, we stay in the same class modulo d if we take d steps at a time. Irreducibility comes from the fact that the steps of \((S_n)_{n\in \mathbb {N}}\) have a nonzero probability of being equal to \(-1\) because \(\mu _{1,1}(0)>0\), and thus, \((\frac{S_{dn}}{d})_{n\in \mathbb {N}}\) can have positive jumps or jumps equal to \(-1\). Since the jumps of \((S_n)_{n\in \mathbb {N}}\) are centered by Proposition 2.1, point (i), this makes \((\frac{S_{dn}}{d})_{n\in \mathbb {N}}\) an irreducible and centered random walk on \(\mathbb {Z}\), so that it is recurrent (see, e.g., Theorem 8.2 in [14]). Finally, aperiodicity is obtained from the fact that, if \(\mu _{1,1}(n)>0\), then \(\mathbb {P}(S_n=0)>0\) by jumping straight to \(n-1\) and going down to 0 one step at a time.
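
The congruence used above can be made explicit. The following lines are a sketch which assumes, as in Corollary 2.1, that d is the gcd of the support of \(\mu _{1,1}\), so that this support is contained in \(d\mathbb {Z}_+\).

```latex
\[
\begin{aligned}
S_{n+1} - S_n &= k - 1 \quad \text{with } \mu_{1,1}(k) > 0,
  \text{ hence } k \in d\mathbb{Z}_+, \\
S_{n+1} &\equiv S_n - 1 \pmod d, \\
S_{dn} &\equiv S_0 - dn \equiv 0 \pmod d.
\end{aligned}
\]
```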

As a consequence of this, we can apply Spitzer’s strong ratio theorem (see [31], p.49) to the random walk \((\frac{S_{dn}}{d})_{n\in \mathbb {N}}\). We obtain that, for any \(k\in \mathbb {Z}\),

This proves the second half of Lemma 4.1 and can also be used to prove the first half. Let \(\mu _{1,1}^{* \alpha }\) be the distribution of the sum of \(\alpha \) independent variables with distribution \(\mu _{1,1}\). For \(n\in \mathbb {N}\), we then have

Fatou’s lemma then gives us

A similar argument also shows that

and this ends the proof. \(\square \)

4.2.2 The Case Where \({\mathbf {w}}=(1,1,\ldots ,1)\)

Consider a tree \(\mathbf {T}\) with distribution \(\mathbb {P}^{(1)}\). Consider then the reduced tree \(\Pi ^{(1)}(\mathbf {T})\) where all the vertices with types different from 1 have been erased but ancestral lines are kept (such that the father of a vertex of \(\Pi ^{(1)}(\mathbf {T})\) is its closest ancestor of type 1 in \({\mathbf {T}}\)). This tree is precisely studied in [25], where it is shown that it is a monotype Galton–Watson tree, its offspring distribution naturally being \(\mu _{1,1}\). As a result, the well-known cyclic lemma (see [29], Sections 6.1 and 6.2) tells us that

where \((S_n)_{n\in \mathbb {N}}\) is the random walk defined in Sect. 4.2.1. One particular consequence of this is the fact that, thanks to Lemma 4.1, in order to prove \((H_{{\mathbf {w}}})\) for a certain word \({\mathbf {w}}\), we can restrict ourselves to proving the asymptotic equivalence for a single value of p, which we will take to be 0.

Consider now a word \({\mathbf {w}}=(1,1,\ldots ,1)\) of length k, where k is any integer. The cyclic lemma can be adapted to forests (see [29] again), and we have

Lemma 4.1 then implies \((H_{{\mathbf {w}}})\) in this case since \(Z_{{\mathbf {w}}}=kb_1\) and \(\alpha _{{\mathbf {w}}}=k\).

The cases where \({\mathbf {w}}\) contains types different from 1 will be much less simple, and we first start with an inequality.

4.2.3 A Lower Bound for General \({\mathbf {w}}\)

Let \({\mathbf {w}}\in \mathcal {W}_K\). In order to count the number of vertices of type 1 of a forest with distribution \(\mathbb {P}^{({\mathbf {w}})}\), we cut it at its first generation of type 1.

where q is the number of times 1 appears in \({\mathbf {w}}\) and 1 is repeated \(k_1+k_2+\cdots +k_{|{\mathbf {w}}|}\) times in \(\mathbb {P}^{(1,\ldots ,1)}\). By Corollary 2.1, whenever \(\mu _{{w_i},1}(k_i)>0\), we have \(\alpha _{w_i}\equiv k_i-{\mathbbm {1}}_{w_i=1} \pmod d\), and thus, the use of \((H_{(1,\ldots ,1)})\), combined with Fatou’s lemma, gives us the following lower bound:

We can then use point (i) of Proposition 2.1 to identify the right-hand side and obtain

To prove the reverse inequality for the limsup, we will try to fit a forest with distribution \(\mathbb {P}^{({\mathbf {w}})}\) “inside” a tree with distribution \(\mathbb {P}^{(1)}\). We first need some additional notions.

4.2.4 The Extension Relation

We describe here a tool which will be useful in the future. Let \(({\mathbf {t}},{\mathbf {e}})\) and \(({\mathbf {t'}},{\mathbf {e'}})\) be two K-type trees. We say that \({\mathbf {t}}'\) extends \({\mathbf {t}}\), which we write \({\mathbf {t}}'\vdash {\mathbf {t}}\) (omitting as usual the type functions for clarity) if \({\mathbf {t}}'\) can be obtained from \({\mathbf {t}}\) by grafting trees on the leaves of \({\mathbf {t}}\). More precisely, \({\mathbf {t}}'\vdash {\mathbf {t}}\) if:

-

\({\mathbf {t}}\subset {\mathbf {t}}'\).

-

\(\forall u\in {\mathbf {t}}\), \({\mathbf {e}}(u)=\mathbf {e'}(u)\).

-

\(\forall u\in {\mathbf {t}}'{\setminus }{\mathbf {t}},\exists v\in \partial {\mathbf {t}},w\in {\mathcal {U}}: \;u=vw\).

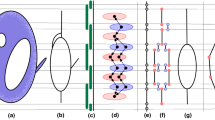

Here, \(\partial {\mathbf {t}}\) is the set of leaves of \({\mathbf {t}}\), that is the set of vertices v of \({\mathbf {t}}\) such that \(k_v({\mathbf {t}})=0\). See Fig. 1 for an example.
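
As a small illustration of the definition, consider the following hypothetical example (it is not the one of Fig. 1). Trees are written as sets of words in Ulam–Harris notation, and we assume that the type functions agree on common vertices, so that only the first and third conditions need to be checked.

```latex
\[
\begin{aligned}
\mathbf{t} &= \{\emptyset, 1, 2\}, \qquad \partial\mathbf{t} = \{1, 2\}, \\
\mathbf{t}' &= \{\emptyset, 1, 2, 11, 12\}: \quad
  \mathbf{t}'\setminus\mathbf{t} = \{11, 12\},\ 11 = 1\cdot 1,\ 12 = 1\cdot 2,
  \ \text{so } \mathbf{t}' \vdash \mathbf{t}, \\
\mathbf{t}'' &= \{\emptyset, 1, 2, 3\}: \quad
  3 \text{ is a child of } \emptyset \notin \partial\mathbf{t},
  \ \text{so } \mathbf{t}'' \not\vdash \mathbf{t}.
\end{aligned}
\]
```

In the second case the new vertices are all descendants of the leaf 1 of \({\mathbf {t}}\), whereas in the third case the new vertex 3 is attached to the root, which is not a leaf of \({\mathbf {t}}\).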

This is once again adaptable to forests: if \(({\mathbf {f}},{\mathbf {e}})\) and \((\mathbf {f'},\mathbf {e'})\) are two K-type forests, then we say that \({\mathbf {f}}'\vdash {\mathbf {f}}\) if they have the same number of tree components and each tree of \({\mathbf {f}}'\) extends the corresponding tree of \({\mathbf {f}}\).

The extension relation behaves well with Galton–Watson random forests. For example, the following is immediate from the branching property:

Lemma 4.2

If \((\mathbf {f,e})\) is a finite forest and \({\mathbf {w}}\) the list of types of the roots of its components, then

Moreover, we have a generalization of the branching property: conditionally on \({\mathbf {F}}\vdash {\mathbf {f}}\), \({\mathbf {F}}\) is obtained by appending independent trees at the leaves of \({\mathbf {f}}\), and for every such leaf v, the tree grafted at v has distribution \(\mathbb {P}^{({\mathbf {e}}(v))}\).

For infinite trees, we get a generalization of (3.1):

Lemma 4.3

If \(({\mathbf {f,e}})\) is a finite forest, let \({\mathbf {x}}\) be the word formed by the types of the leaves of \({\mathbf {f}}\) in lexicographical order. We have

Proof

Let n be the height of \({\mathbf {f}}\). Any forest of height n which extends \({\mathbf {f}}\) can be obtained by adding after each leaf u of \({\mathbf {f}}\) a tree with height smaller than \(n-|u|\). Let \(u_1,\ldots ,u_p\) be the leaves of \({\mathbf {f}}\), and \(e_1,\ldots ,e_p\) be their types. For all i, we append a tree \(({\mathbf {t}}^i,{\mathbf {e}}^i)\) to the leaf \(u_i\) and call the resulting forest \((\tilde{{\mathbf {f}}},\tilde{{\mathbf {e}}})\), implicitly a function of \({\mathbf {f}}\) and \({\mathbf {t}}^1,\ldots ,{\mathbf {t}}^p\). Thus, recalling the notation X for the martingale defined in Eq. (2.2),

\(\square \)

4.2.5 The Case Where There is a Tree \({\mathbf {t}}\) such that \(\mathbb {P}^{(1)}({\mathbf {T}}\vdash {\mathbf {t}})>0\) and \({\mathbf {w}}\) is the Word Formed by the Leaves of \({\mathbf {t}}\)

Let \(({\mathbf {t}},{\mathbf {e}})\) be a tree with root of type 1 such that \(\mathbb {P}^{(1)}({\mathbf {T}}\vdash {\mathbf {t}})>0\), and let \({\mathbf {w}}\) be the word formed by the types of the leaves of \({\mathbf {t}}\). We will prove \((H_{{\mathbf {w}}})\). We first need an intermediate lemma.

Lemma 4.4

There exists a countable family of trees \({\mathbf {t}}^{(2)},{\mathbf {t}}^{(3)},\ldots \) such that, for any K-type tree \({\mathbf {t}}'\) with root of type 1:

-

either \({\mathbf {t}}\vdash {\mathbf {t}}'\).

-

or \({\mathbf {t}}'\vdash {\mathbf {t}}\).

-

or there is a unique i such that \({\mathbf {t}}'\vdash {\mathbf {t}}^{(i)}\).

Proof

For all \(k\in \{2,3,\ldots ,ht({\mathbf {t}})\}\), take all the trees \({\mathbf {t'}}\) which have height k and which satisfy both \({\mathbf {t'}}_{\leqslant k-1}={\mathbf {t}}_{\leqslant k-1}\) and \({\mathbf {t'}}_k\ne {\mathbf {t}}_k.\) There are countably many such trees, and we can therefore call them \(({\mathbf {t}}^{(i)})_{i\geqslant 2}\) in any order. Now for any K-type tree \({\mathbf {t'}}\) with root of type 1, by considering the highest integer k such that \({\mathbf {t'}}_{\leqslant k-1}={\mathbf {t}}_{\leqslant k-1}\), we directly obtain that, if neither of \({\mathbf {t}}\) and \({\mathbf {t'}}\) extends the other, then \({\mathbf {t}}'\) extends one of the \({\mathbf {t}}^{(i)}\). \(\square \)

Now let \({\mathbf {t}}^{(1)}={\mathbf {t}}\), and, for all \(i\in \mathbb {N}\), let also \({\mathbf {w}}^{(i)}\) be the word formed by the types of the leaves of \({\mathbf {t}}^{(i)}\). Write

where \(q^{(i)}\) is the number of vertices of type 1 of \({\mathbf {t}}^{(i)}\) which are not leaves. Divide by \(\mathbb {P}^{(1)}(\#_1 {\mathbf {T}}=1+dn)\) on both sides of the equation to obtain

Note that

is equal to 0 for n large enough, since \({\mathbf {t}}\) is finite.

By the results of Sect. 2.3, we have \(1-q^{(i)}\equiv \alpha _{{\mathbf {w}}^{(i)}} \pmod d\) for all \(i\in \mathbb {N}\), and thus, using the lower bound (4.1), we have

for all \(i\in \mathbb {N}\). However, by Lemmas 4.3 and 4.4, we have

and thus, whenever \(\mathbb {P}^{(1)}({\mathbf {T}}\vdash {\mathbf {t}}^{(i)})\) is nonzero, we must have

which ends the proof of \((H_{{\mathbf {w}}})\).

4.2.6 Removing One Element from \({\mathbf {w}}\)

Lemma 4.5

Let \({\mathbf {w}}\in \mathcal {W}_K\) be such that \((H_{{\mathbf {w}}})\) holds. Let m be any integer in \([|{\mathbf {w}}|]\) and let \(\tilde{{\mathbf {w}}}\) be \({\mathbf {w}}\), except that we remove \(w_m\) from the list. Then \((H_{\tilde{{\mathbf {w}}}})\) also holds.

Proof

For \(n\in \mathbb {N}\), we split the event \(\{\#_1{\mathbf {F}}=\alpha _{{\mathbf {w}}}+dn\}\) according to the first and second generations of type 1 in the mth tree of the forest. By calling k the number of vertices in the first generation of type 1 issued from the mth tree, and then \(i_1,\ldots ,i_k\) the numbers of vertices in the first generation of type 1 of each corresponding subtree, we have

where \(\tilde{{\mathbf {w}}}^{i_1+\cdots +i_k}\) is the word \({\mathbf {w}}\) where \(w_m\) has been replaced by 1, repeated \(i_1+\cdots +i_k\) times. Note that the term of the sum where \(k=0\) is to be interpreted as \(\mathbb {P}^{(\tilde{{\mathbf {w}}})}(\#_1{\mathbf {F}}=\alpha _{{\mathbf {w}}}- {\mathbbm {1}}_{\{w_m=1\}}+dn)\).

We now use the same argument as in the end of the previous section: we first divide by \(\mathbb {P}^{({{\mathbf {w}}})}(\#_1{\mathbf {F}}=\alpha _{{\mathbf {w}}}+dn)\) to get

For each choice of k and \(i_1,\ldots ,i_k\), using lower bound (4.1) as well as \((H_{{\mathbf {w}}})\), we have

A repeated use of point (i) of Proposition 2.1 shows that these add up to 1, and thus, for k and \(i_1,\ldots ,i_k\) such that \(\mu _{w_m,1}(k)\prod _{r=1}^k\mu _{1,1}(i_r)\ne 0\), we do have

By irreducibility, one can find k such that \(\mu _{w_m,1}(k)\ne 0\), and by criticality one has \(\mu _{1,1}(0)\ne 0\), meaning that we can take \(i_1,\ldots ,i_k\) all equal to zero, and this ends the proof. \(\square \)

4.2.7 End of the Proof

By applying Lemma 4.5 repeatedly and using the fact that \((H_{{\mathbf {w}}})\) stays true if we permute the terms of \({\mathbf {w}}\), we obtain that, if \({\mathbf {w}}\) and \({\mathbf {w}}'\) are two words such that no type appears more often in \({\mathbf {w}}'\) than in \({\mathbf {w}}\), then \((H_{{\mathbf {w}}})\) implies \((H_{{\mathbf {w}}'})\). Thus, by Sect. 4.2.5, we now only need to show the following lemma.

Lemma 4.6

For all nonnegative integers \(n_1,\ldots ,n_K\), there exists a K-type tree \(({\mathbf {t}},{\mathbf {e}})\) which has more than \(n_i\) leaves of type i for all \(i\in [K]\), and such that \(\mathbb {P}^{(1)}({\mathbf {T}}\vdash {\mathbf {t}})>0\).

Proof

The first step is showing that, for p large enough, the pth generation of type 1 of \({\mathbf {T}}\) has positive probability of having more than \(n_1+\cdots +n_K\) vertices, where the pth generation of type 1 is the set of vertices of type 1 which have exactly p ancestors of type 1 including the root. This is immediate because the average of \(\mu _{1,1}\) is 1 and we are not in a degenerate tree, and thus, the size of each generation of type 1 has positive probability of being strictly larger than the previous generation.

Irreducibility then tells us that, after each vertex of the pth generation of type 1, there is a positive probability of finding a vertex of type i for any i. \(\square \)

4.3 Proving \((H_{{\mathbf {w}}})\) When \(\zeta \) is Regular Critical

We now take general \(\gamma \) and assume that \(\zeta \) is regular critical. Our aim here is to prove the following refinement of \((H_{{\mathbf {w}}})\): there exists a constant \(C>0\) such that, for all \({\mathbf {w}}\in \mathcal {W}_K\)

where \({\mathbf {a}}\) is the left eigenvector of the mean matrix M, and \(\sigma ^2\) was defined in Sect. 2.2. The actual values do not matter much; however, the important part is that the right-hand side is \(Z_{{\mathbf {w}}}\) divided by \(n^{3/2}\), times a constant. We will prove this by using analytic methods, notably the smooth implicit-function schema theorem (see notably [11], Section VII.4 and [22]).

4.3.1 Proving \((H'_{{\mathbf {w}}})\) for One-Letter Words

Let \(i\in [K]\) and, for appropriate \(z\in {\mathbb {C}}\), let

This power series has nonnegative coefficients, and, since \(\zeta \) is critical, its radius of convergence is 1. This is because \(\psi _i(1)=1\) (since \(\psi _i\) is the generating function of a probability distribution) and \(\psi '_i(1)=\infty \) (Lemma 2.1). We let \({\mathbb {D}}\) be the open unit disk. The periodicity structure of Sect. 2.3 lets us rewrite \(\psi _i\) in a more precise way: there exists another power series \(\phi _i\) such that

and all the coefficients of \(\phi _i\), except for finitely many, are strictly positive. Our aim is then to show that the coefficient of \(z^n\) in \(\phi _i\) behaves like \(n^{-3/2}\) as n tends to infinity.

Recall from Sect. 2.2 the distribution \(\mathbb {P}^{(i)}_{\text {cut}_i}\) of the Galton–Watson tree cut at its first generation of type i. Given such a cut tree \({\mathbf {t}}\), we call \(p_{{\mathbf {t}}}\) its number of leaves of type i. We obtain from the Galton–Watson construction the following equation:

This can be refined with the periodicity structure: we know from Proposition 2.2 that, if \(\mathbb {P}^{(i)}_{\text {cut}_i}({\mathbf {t}})>0\), then \(\alpha _i\equiv \gamma _i+\sum _{j\ne i}\gamma _j\#_{j}({\mathbf {t}})+p_{{\mathbf {t}}}\alpha _i\pmod d\). We let \(n_{{\mathbf {t}}}\in \mathbb {Z}_+\) be such that \(\gamma _i+\sum _{j\ne i}\gamma _j\#_{j}({\mathbf {t}})+p_{{\mathbf {t}}}\alpha _i=\alpha _i+n_{{\mathbf {t}}}d\), and then obtain

which reduces to

The function \(\phi _i\) thus solves

where, for appropriate z and w,

We will now apply the smooth implicit-function schema theorem, as stated in [11], Theorem VII.3. We first have to check several conditions on the double power series \(G(z,w)=\sum _{n,m}g_{m,n}z^mw^n\) with positive coefficients.

-

We show that G is analytic in a domain \(\{|z|<R, |w|<R\}\) with \(R>1\). Because of regular criticality and Proposition 2.1, the number of vertices lying before the first generation of type i and the number of vertices lying on it are both exponentially integrable variables (in the sense of the Appendix), and thus, their sum, which is the total number of vertices lying before or on the first generation of type i, is also exponentially integrable. Thus, there exists \(z>1\) such that \({\mathbb {E}}^{(i)}_{\text {cut}_i}\big [ z^{\#\mathbf {T}}\big ]<\infty \), and then bounding \(|\mathbf {T}|_{\gamma }\) by \(\gamma _{\max } \#\mathbf {T}\) (\(\gamma _{\max }\) being the highest value of \(\gamma _i,\) \(i\in [K]\)) and rewriting \(n_{{\mathbf {T}}}\) in terms of \(|\mathbf {T}|_{\gamma }\), we get \(R>1\) such that \(G(R,R)<\infty \).

-

Unlike the assumptions of [11], it is possible that \(g_{0,0}=0\) (e.g., if \(\gamma _i=0\) and an individual of type i can die without giving birth to any offspring), but this is just an unneeded normalization assumption. We do know, however, that \(g_{0,1}\ne 1\) and that \(g_{0,n}\ne 0\) for some \(n\geqslant 2\) since the measure \(\mu _{i,i}\) has expected value 1 and nonzero variance.

-

The pair \((r,s)=(1,1)\) lies inside the domain of analyticity of G and satisfies the so-called characteristic system

$$\begin{aligned} G(r,s)=s \quad \text {and}\quad \partial _{w}G(r,s)=1. \end{aligned}$$

Of course, in our setting, we are just saying that the coefficients of G sum up to 1 and that the average of \(\mu _{i,i}\) is 1, which we know from Proposition 2.1.

Knowing all of this and the fact that \(\phi _i\) is aperiodic (in the sense of [11], since only a finite number of its coefficients are 0), the smooth implicit-function schema gives us the following estimate for the coefficient of \(z^n\) in \(\phi _i\):

Proposition 2.1 gives us the wanted values for the partial derivatives:

and

and this ends our proof. \(\square \)

4.3.2 Moving on to General Words

The general case of \((H'_{{\mathbf {w}}})\) follows from the following lemma:

Lemma 4.7

Let \(a>1\) and let X and Y be two independent integer-valued random variables such that

Then we also have

Proof

We will separately show that

and

For \(n\in \mathbb {Z}_+\), let \(x_n=\mathbb {P}(X=n)\), \(y_n=\mathbb {P}(Y=n)\) and \(z_n=\mathbb {P}(X+Y=n)=\sum _{k=0}^nx_ky_{n-k}\). Cut the sum the following way:

For the lower bound, let \(\varepsilon >0\), and choose K large enough that \(\sum _{k=0}^K x_k\geqslant (1-\varepsilon )\), \(\sum _{k=0}^K y_k\geqslant (1-\varepsilon )\) and, for n larger than K, \(x_n\geqslant (1-\varepsilon )C_X n^{-a}\) and \(y_n\geqslant (1-\varepsilon )C_Y n^{-a}\). Now take \(n\geqslant 2K\). In the first sum, since \(n-k\leqslant n\), use \(y_{n-k}\geqslant (1-\varepsilon )C_Y n^{-a}\), and in the third, since \(k\leqslant n\), use \(x_k\geqslant (1-\varepsilon )C_X n^{-a}\) to obtain

Taking n to infinity, we get

and letting \(\varepsilon \) tend to 0 gives us the lower bound.
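
The lower bound can be summarized in one chain of inequalities. This is a sketch under assumptions which we state explicitly: X and Y take values in \(\mathbb {Z}_+\), the hypotheses of the lemma read \(x_n\sim C_Xn^{-a}\) and \(y_n\sim C_Yn^{-a}\) (as suggested by the constants \(C_X\) and \(C_Y\) used in the proof), and we take \(n>2K\) so that the ranges \(k\leqslant K\) and \(k\geqslant n-K\) do not overlap.

```latex
\[
\begin{aligned}
z_n &\geqslant \sum_{k=0}^{K} x_k\, y_{n-k} + \sum_{k=n-K}^{n} x_k\, y_{n-k} \\
    &\geqslant (1-\varepsilon) C_Y\, n^{-a} \sum_{k=0}^{K} x_k
      + (1-\varepsilon) C_X\, n^{-a} \sum_{j=0}^{K} y_j \\
    &\geqslant (1-\varepsilon)^2 (C_X + C_Y)\, n^{-a}.
\end{aligned}
\]
```

The second line uses that all indices \(n-k\) in the first sum and k in the second sum lie strictly between K and \(n+1\), so that the corresponding probabilities are at least \((1-\varepsilon )Cn^{-a}\) with the relevant constant C.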

The upper bound will require more work. Let \(0<\varepsilon <1/2\). We make the same cut as in Eq. (4.3), but with a varying K, equal to \(\lfloor \varepsilon n\rfloor .\) Take n large enough that, for \(k\geqslant \lfloor \varepsilon n\rfloor \), \(k^{a}x_k\leqslant (1+\varepsilon )C_X\) and \(k^{a}y_k\leqslant (1+\varepsilon )C_Y.\) Write in the first sum \(y_{n-k}\leqslant (1+\varepsilon )C_Y(n-\lfloor \varepsilon n\rfloor )^{-a}\) and in the third one \(x_k\leqslant (1+\varepsilon )C_X(n-\lfloor \varepsilon n\rfloor )^{-a}\), while for the middle one we use \(x_ky_{n-k}\leqslant (1+\varepsilon )^2C_XC_Y \lfloor \varepsilon n\rfloor ^{-2a}\). We then have

Since \(a>1,\) we have \(1-2a<-a,\) and thus, the last term is negligible compared to \(n^{-a}\). Hence, \(\limsup n^az_n\leqslant (1+\varepsilon )(1-\varepsilon )^{-a}(C_X+C_Y),\) and letting \(\varepsilon \) tend to 0 gives us the wanted bound. \(\square \)
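
The role of the assumption \(a>1\) in the upper bound can be seen in the following sketch. We assume, as suggested by the proof, that the hypotheses of the lemma read \(x_n\sim C_Xn^{-a}\) and \(y_n\sim C_Yn^{-a}\), we bound the number of terms of the middle sum by n, and we use \(n-\lfloor \varepsilon n\rfloor \geqslant (1-\varepsilon )n\).

```latex
\[
\begin{aligned}
n^{a} z_n &\leqslant (1+\varepsilon)(C_X + C_Y)(1-\varepsilon)^{-a}
  + (1+\varepsilon)^2 C_X C_Y\, n^{1+a} \lfloor \varepsilon n\rfloor^{-2a}, \\
n^{1+a} \lfloor \varepsilon n\rfloor^{-2a}
  &\sim \varepsilon^{-2a}\, n^{1-a} \xrightarrow[n\to\infty]{} 0
  \quad \text{since } a > 1.
\end{aligned}
\]
```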

The case \(a=3/2\) coupled with a simple induction then proves \((H'_{{\mathbf {w}}})\) for general \({\mathbf {w}}\in \mathcal {W}_K\).

5 Background on Random Planar Maps

5.1 Planar Maps

As stated in the Introduction, a planar map is a proper embedding m of a finite connected planar graph in the sphere, in the sense that edges do not intersect. These are taken up to orientation-preserving homeomorphisms of the sphere, thus making them combinatorial objects. We call faces of a map m the connected components of its complement in the sphere, and let \({\mathcal {F}}_m\) be their set. The degree of a face f, denoted by \(\deg (f)\), is the number of edges it is adjacent to, counting multiplicity: we count every edge as many times as we encounter it when circling around f. The numbers of vertices, edges and faces of a map are respectively denoted by \(\#V(m)\), \(\#E(m)\) and \(\#F(m)\). Finally, the graph distance on m is denoted by d.

We are going to look at maps which are both rooted and pointed. These are triplets (m, e, r), where m is a planar map, e is an oriented edge of m called the root edge, starting at a vertex \(e^-\) and pointing to a vertex \(e^+\), and r is a vertex of m. We call \(\mathcal {M}\) the set of all such maps and \(\mathcal {M}_n\) the set of such maps with n vertices for \(n\in \mathbb {N}\). A map (m, e, r) will be called positive (resp. null, negative) if \(d(r,e^+)=d(r,e^-)+1\) (resp. \(d(r,e^-)\), \(d(r,e^-)-1\)). We call \(\mathcal {M}^+\), \(\mathcal {M}^0\) and \(\mathcal {M}^-\) the corresponding sets of maps and, for \(n\in \mathbb {N}\), \(\mathcal {M}_n^+\), \(\mathcal {M}_n^0\) and \(\mathcal {M}_n^-\) the corresponding sets of maps which have n vertices. Since there is a trivial bijection between positive and negative maps, we will mostly restrict ourselves to \(\mathcal {M}^+\) and \(\mathcal {M}^0\). By convention, we add to \(\mathcal {M}^+\) the vertex map \(\dagger \), which consists of one vertex, no edges and one face.

5.2 Boltzmann Distributions

Let \({\mathbf {q}}=(q_n)_{n\in \mathbb {N}}\) be a sequence of nonnegative numbers such that there exists \(i\geqslant 3\) with \(q_i>0\). For any map m, let

Note that this quantity only depends on the map m, and not on any root e or point r. We say that the sequence \({\mathbf {q}}\) is admissible if the sum

is finite. When \({\mathbf {q}}\) is admissible, we can define the Boltzmann probability distribution \(B_{{\mathbf {q}}}\) by setting, for a pointed rooted map (m, e, r),

We also introduce the versions of \(B_{{\mathbf {q}}}\) conditioned to be positive or null: let \(Z_{{\mathbf {q}}}^+= \sum _{(m,e,r)\in \mathcal {M}^+} W_{{\mathbf {q}}}(m)\) and \(Z_{{\mathbf {q}}}^0= \sum _{(m,e,r)\in \mathcal {M}^0} W_{{\mathbf {q}}}(m)\) and, for any map (m, e, r), \(B^+_{{\mathbf {q}}}(m,e,r)=\frac{W_{{\mathbf {q}}}(m)}{Z_{{\mathbf {q}}}^+}\) if it is positive and \(B^0_{{\mathbf {q}}}(m,e,r)=\frac{W_{{\mathbf {q}}}(m)}{Z_{{\mathbf {q}}}^0}\) if it is null.

For nonnegative numbers x and y, let

and

It was shown in [24], Proposition 1, that \({\mathbf {q}}\) is admissible if and only if the system

has a solution with \(x>1\), such that the spectral radius of the matrix

is smaller than or equal to 1. The existence of such a solution implies its uniqueness, with \(x=Z_{{\mathbf {q}}}^+\) and \(y=\sqrt{Z_{{\mathbf {q}}}^0}\). We let \(Z^{\diamond }_{{\mathbf {q}}}=\sqrt{Z_{{\mathbf {q}}}^0}\).

We then say that \({\mathbf {q}}\) is critical if the spectral radius of the aforementioned matrix is exactly 1, and that it is regular critical if, moreover, for some \(\varepsilon >0\), we have \(f^{\bullet }(Z_{{\mathbf {q}}}^++\varepsilon ,Z^{\diamond }_{{\mathbf {q}}}+\varepsilon )<\infty \).

Random Non-pointed Maps We will also occasionally consider rooted maps (m, e) without any specified point r. If \({\mathbf {q}}\) is admissible, we let \(B^{\emptyset }_{{\mathbf {q}}}\) be the probability measure on the set of rooted maps such that, for a rooted map (m, e),

\[ B^{\emptyset }_{{\mathbf {q}}}(m,e)=\frac{W_{{\mathbf {q}}}(m)}{Z^{\emptyset }_{{\mathbf {q}}}}, \]

where \(Z^{\emptyset }_{{\mathbf {q}}}\) is an appropriate constant.

Note that, if a random rooted and pointed map (M, E, R) has distribution \(B_{{\mathbf {q}}}\), then the distribution of (M, E) (ignoring R) is not \(B^{\emptyset }_{{\mathbf {q}}}\), but \(B^{\emptyset }_{{\mathbf {q}}}\) biased by the number of vertices: if (m, e) is a rooted map with n vertices, then \(\sum _{r\in m} B_{{\mathbf {q}}}(m,e,r)\) is proportional to \(nB^{\emptyset }_{{\mathbf {q}}}(m,e)\). This is because there are exactly n ways of pointing (m, e), and they all lead to different rooted and pointed maps.

5.3 The Bouttier–Di Francesco–Guitter Bijection

A bijection between rooted and pointed maps and a certain class of 4-type labelled trees called mobiles was presented in [8]. Let us quickly recall the facts here, with a few variations to make the bijection better adapted to our study.

5.3.1 Mobiles

A finite spatial 4-type tree \(({\mathbf {t}},{\mathbf {e}},{\mathbf {l}})\) is called a mobile if the types satisfy the following conditions:

- The root has type 1 or 2,
- The children of a vertex of type 1 all have type 3,
- A non-root vertex of type 2 has exactly one child, which has type 4; if the root \(\emptyset \) has type 2, then it has exactly two children, both of type 4,
- Vertices of types 3 and 4 can only have children of types 1 and 2,

and the labels satisfy the following conditions:

- Vertices of types 1 and 3 have integer labels, and vertices of types 2 and 4 have labels in \(\mathbb {Z}+\frac{1}{2}\),
- The root has label 0 if it is of type 1, and \(\frac{1}{2}\) if it is of type 2,
- Vertices of type 3 or 4 have the same label as their father,
- If \(u\in {\mathbf {t}}\) has type 3 or 4, let by convention \(u0=u\underline{k_u({\mathbf {t}})+1}=u^-\). Then, for all \(i\in \{0,\ldots ,k_u({\mathbf {t}})\}\), \({\mathbf {l}}\big (u\underline{i+1}\big )-{\mathbf {l}}(ui)\geqslant -\frac{1}{2} ({\mathbbm {1}}_{\{{\mathbf {e}}(ui)=1\}} + {\mathbbm {1}}_{\{{\mathbf {e}}(u\underline{i+1})=1\}})\).

The notation \(u\underline{i+1}\) means that we are looking at \(i+1\) as a letter, the word \(u\underline{i+1}\) being the concatenation of u and \(i+1\).
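The last labelling condition can be checked mechanically around a single vertex of type 3 or 4. The following Python sketch is only an illustration under assumed conventions: the father and the children are passed as hypothetical `(type, label)` pairs, with the children listed in planar order, and the function name `labels_allowed` is not from the paper. The father is placed at both ends of the cycle, matching the convention \(u0=u\underline{k_u({\mathbf {t}})+1}=u^-\).

```python
from fractions import Fraction


def labels_allowed(father, children):
    """Check the label rule around a vertex u of type 3 or 4.

    `father` and each element of `children` is a (type, label) pair;
    `children` are in planar order. The father plays the role of both
    u0 and u(k+1), as in the convention u0 = u(k+1) = u^-.
    """
    cycle = [father] + list(children) + [father]
    half = Fraction(1, 2)
    for (e_a, l_a), (e_b, l_b) in zip(cycle, cycle[1:]):
        # Allowed decrease: 1/2 for each endpoint of type 1.
        bound = -half * ((e_a == 1) + (e_b == 1))
        if Fraction(l_b) - Fraction(l_a) < bound:
            return False
    return True


# A type-3 vertex whose father has type 1 and label 0.
print(labels_allowed((1, 0), [(1, 1), (1, 0)]))            # decreases by at most 1
print(labels_allowed((1, 0), [(1, 2), (1, 0)]))            # drops by 2 between children
print(labels_allowed((1, 0), [(2, Fraction(1, 2))]))       # one flag child
```

Between two type 1 vertices the label may decrease by at most 1, between a type 1 and a type 2 vertex by at most \(\frac{1}{2}\), and between two type 2 vertices it cannot decrease at all.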

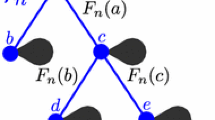

Traditionally, vertices of type 1 are represented as white circles \(\bigcirc \), vertices of type 2 are “flags” \(\diamond \) while the other two types are dots \(\bullet \). Notice also that we do not need to mention the labels of vertices with types 3 and 4 since the label of such a vertex is the same as that of its father. We let \({\mathbb {T}}_M\) be the set of finite mobiles, \({\mathbb {T}}_M^+\) be the set of finite mobiles such that \({\mathbf {e}}(\emptyset )=1\) and \({\mathbb {T}}_M^0\) be the set of finite mobiles such that \({\mathbf {e}}(\emptyset )=2\). See Fig. 2 for an illustration of the labelling rule, and Fig. 3 for an example of a mobile.

5.3.2 The Bijection

Let \(({\mathbf {t}},{\mathbf {e}},{\mathbf {l}})\) be a mobile and let us describe how to transform it into a map (an illustration is given in Fig. 4). Let \(v_1,v_2,\ldots ,v_p\) be, in order, the vertices of type 1 or 2 of \({\mathbf {t}}\) appearing in the standard contour process and \(e_1,e_2,\ldots ,e_p\) and \(l_1,l_2,\ldots ,l_p\) be the corresponding types and labels. We refer to \(v_1,\ldots ,v_p\) as the corners of the tree because a vertex will be visited a number of times equal to the number of angular sectors around it delimited by the tree. Draw \({\mathbf {t}}\) in the plane and add an extra type 1 vertex r outside of \({\mathbf {t}}\), giving it label \(\underset{{\mathbf {e}}(u)=1}{\min }{\mathbf {l}}(u)-1\). Now, for every \(i\in [p]\), define the successor of the ith corner as the next corner of type 1 with label \(l_i-1\) if \(e_i=1\) and \(l_i-\frac{1}{2}\) if \(e_i=2\). If there is no such vertex, then let its successor be r. In both cases, draw an arc between \(v_i\) and the successor. This construction can be done without having any of the arcs intersect. Now erase all the original edges of the tree, as well as vertices of types 3 and 4. Erase as well all the vertices of type 2, merging the corresponding pairs of arcs. We are left with a planar map, with a distinguished vertex r. The root edge depends on the type of the root of the tree: if \({\mathbf {e}}(\emptyset )=1\) then we let the root edge be the first arc which was drawn (have it point to \(\emptyset \) for a positive map, and away from \(\emptyset \) for a negative map). If \({\mathbf {e}}(\emptyset )=2\) then we let the root edge be the result of the merging of the two edges adjacent to \(\emptyset \), pointing to the successor of the first corner encountered in the contour process.
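The successor rule can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper's construction in full: the corners are given as a hypothetical list of `(type, label)` pairs in contour order, the search for "the next corner" is assumed to be cyclic along the contour, and `None` stands for the extra vertex r. The toy corner sequence below is chosen only to exercise the rule and is not claimed to come from an actual mobile.

```python
from fractions import Fraction


def successors(corners):
    """Return, for each corner, the index of its successor (None means r).

    `corners` lists the (type, label) pairs of the type 1 or 2 corners
    in contour order. The contour is read cyclically (an assumption).
    """
    p = len(corners)
    result = []
    for i, (e_i, l_i) in enumerate(corners):
        # Target label: l_i - 1 for type 1, l_i - 1/2 for type 2.
        target = Fraction(l_i) - (1 if e_i == 1 else Fraction(1, 2))
        succ = None
        for step in range(1, p):
            j = (i + step) % p
            e_j, l_j = corners[j]
            if e_j == 1 and Fraction(l_j) == target:
                succ = j
                break
        result.append(succ)
    return result


toy = [(1, 0), (1, 1), (2, Fraction(1, 2)), (1, 0), (1, 1)]
print(successors(toy))  # [None, 3, 3, None, 0]
```

Corners carrying the minimal type 1 label have no successor among the corners, so they are linked to r, whose label is one less than that minimum.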

This construction gives us two bijections: one between \({\mathbb {T}}_M^+\) and \({\mathcal {M}}^+\) and one between \({\mathbb {T}}_M^0\) and \({\mathcal {M}}^0\), both of which we call \(\Psi \).

Fig. 4 Having added a vertex with label \(-2\) to the mobile of Fig. 3, we transform it into a map

It was shown in [24] that the BDFG bijection serves as a link between Galton–Watson mobiles and Boltzmann maps.

Proposition 5.1

Consider an admissible weight sequence \({\mathbf {q}}\) and define an unordered 4-type offspring distribution \(\mathbf {\mu }\) by

Let then \(\mathbf {\zeta }\) be the ordered offspring distribution which is the uniform ordering of \(\mu \), as explained in Sect. 2.1. This offspring distribution is irreducible, and it is critical (resp. regular critical) if the weight sequence \({\mathbf {q}}\) is critical (resp. regular critical), while it is subcritical if \({\mathbf {q}}\) is admissible but not critical. Define also, for every ordered offspring type-list \({\mathbf {w}}\), \(\nu ^{(i)}_{{\mathbf {w}}}\) as the uniform measure on the set \(D^{(i)}_{{\mathbf {w}}}\) of displacements allowed in a mobile, which is precisely \(D^{(i)}_{{\mathbf {w}}} = \{0\}^{|{\mathbf {w}}|}\) if \(i=1\) or \(i=2\) and

if \(i=3\) or \(i=4\), in which case we set by convention \(w_0=w_{|{\mathbf {w}}|+1}=i-2\) and \(y_0=y_{|{\mathbf {w}}|+1}=0\).

Then:

- If \(({\mathbf {T,E,L}})\) has distribution \(\mathbb {P}^{(1),(0)}_{\mathbf {\zeta },\nu }\), then the random map \(\Psi ({\mathbf {T,E,L}})\) has distribution \(B^+_{{\mathbf {q}}}\).
- If \(({\mathbf {F,E,L}})\) is a forest with distribution \(\mathbb {P}^{(2,2),(\frac{1}{2},\frac{1}{2})}_{\mathbf {\zeta },\nu }\), consider the mobile formed by merging both tree components at their roots. The image of this mobile by \(\Psi \) has distribution \(B_{{\mathbf {q}}}^0\).

Remark 3

The operation of merging two trees at their roots can be formalized in the following way. Consider two trees \(({\mathbf {t}}_1,{\mathbf {e}}_1)\) and \(({\mathbf {t}}_2,{\mathbf {e}}_2)\) which are such that, in both trees, the root has type 2 and has a unique child, with type 4. For \(u\in {\mathbf {t}}_2{\setminus }\{\emptyset \}\), we can write \(u=1u^2\ldots u^k\). Let then \(u'=2u^2\ldots u^k\), and let \({\mathbf {t}}_2'=\left\{ u',\; u\in {\mathbf {t}}_2{\setminus }\{\emptyset \}\right\} \). We can now define \({\mathbf {t}}={\mathbf {t}}_1\cup {\mathbf {t}}_2'\), which is easily checked to be a tree. Types can then simply be assigned by setting, for \(u\in {\mathbf {t}}_1\), \({\mathbf {e}}(u)={\mathbf {e}}_1(u)\) and, for \(u\in {\mathbf {t}}_2{\setminus }\{\emptyset \}\), \({\mathbf {e}}(u')={\mathbf {e}}_2(u)\).

This operation is of course continuous for the local convergence topology since, for any \(k\in \mathbb {Z}_+\), the kth generation of \({\mathbf {t}}\) is completely determined by the kth generations of \({\mathbf {t}}_1\) and \({\mathbf {t}}_2\).

Remark 4

If the weight sequence \({\mathbf {q}}\) is such that \(q_{2n+1}=0\) for all \(n\in \mathbb {Z}_+\), then a \({\mathbf {q}}\)-Boltzmann map is a.s. bipartite, which implies that there will be no vertices of type 2 or 4 in the corresponding tree, in which case we consider the mobile as a tree with two types, and it stays irreducible. Moreover, we then have \(Z^{\diamond }_{{\mathbf {q}}}=0\).

Remark 5

The numbers of vertices, edges and faces of the map can be read off the tree:

- \(\#V\big (\Psi ({\mathbf {T,E,L}})\big )=1 + \#_1{\mathbf {T}}.\)
- \(\#E\big (\Psi ({\mathbf {T,E,L}})\big )=|{\mathbf {T}}|_{\gamma }-1\) with \(\gamma =(1,0,1,1).\)
- \(\#F\big (\Psi ({\mathbf {T,E,L}})\big ) =|{\mathbf {T}}|_{\gamma }\) with \(\gamma =(0,0,1,1).\)
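The three counts of Remark 5 are consistent with Euler's formula, which can be checked on any vector of type counts. The short Python sketch below takes a hypothetical tuple `(n1, n2, n3, n4)` giving the number of vertices of each type in the tree; the function name `map_counts` is an assumption made for illustration.

```python
def map_counts(type_counts):
    """Vertices, edges and faces of the map, read from the tree.

    `type_counts` = (n1, n2, n3, n4) is the number of vertices of each
    type; the weights (1,0,1,1) and (0,0,1,1) are those of Remark 5.
    """
    n1, n2, n3, n4 = type_counts
    vertices = 1 + n1
    edges = n1 + n3 + n4 - 1
    faces = n3 + n4
    return vertices, edges, faces


v, e, f = map_counts((3, 1, 2, 1))
print(v, e, f)      # 4 5 3
print(v - e + f)    # 2, as required by Euler's formula
```

The identity \(\#V-\#E+\#F=2\) holds for every input, since the type counts cancel.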

5.4 Infinite Maps and Local Convergence

If (m, e) is a rooted map and \(k\in \mathbb {N}\), we let \(B_{m,e}(k)\) be the map formed by all vertices whose graph distance to \(e^+\) is less than or equal to k, together with all edges connecting such vertices, except those whose two endpoints are both at distance exactly k from \(e^+\). The map \(B_{m,e}(k)\) is still rooted at the same oriented edge e. For two rooted maps (m, e) and \((m',e')\), let \(d\big ((m,e),(m',e')\big )=\frac{1}{1+p}\) where p is the supremum of all integers k such that \(B_{m,e}(k)\) is equivalent to \(B_{m',e'}(k)\). This defines a metric on the set of rooted maps. We then call \(\overline{\mathcal {M}}\) the completion of this set. Elements of \(\overline{\mathcal {M}}\) which are not finite maps are called infinite maps, which we mostly consider as sequences of compatible finite maps: \((m,e)=(m_i,e_i)_{i\in \mathbb {N}}\) with \((m_i,e_i)=B_{m_{i+1},e_{i+1}}(i)\) for all i. Note in particular that infinite maps are not pointed.
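To make the definition of the ball \(B_{m,e}(k)\) concrete, here is a minimal Python sketch which extracts its vertices and edges. It is a simplification under stated assumptions: the map is given only as a hypothetical list of edges (multiple edges and loops are allowed), the planar embedding is ignored, and the function name `ball` is not from the paper. Deciding whether two balls are equivalent as maps, which is what the metric d requires, is not attempted here.

```python
from collections import deque


def ball(edges, e_plus, k):
    """Vertices and edges of the ball of radius k around e_plus.

    `edges` is a list of (u, v) pairs. An edge is kept when both
    endpoints are within distance k, unless both are at distance
    exactly k.
    """
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    # Breadth-first search from e_plus.
    dist = {e_plus: 0}
    queue = deque([e_plus])
    while queue:
        u = queue.popleft()
        for v in adj.get(u, []):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    vertices = {v for v, d in dist.items() if d <= k}
    kept = [
        (u, v)
        for u, v in edges
        if u in vertices and v in vertices
        and not (dist[u] == k and dist[v] == k)
    ]
    return vertices, kept


triangle = [(0, 1), (1, 2), (0, 2)]
print(ball(triangle, 0, 1))  # edge (1, 2) is dropped: both ends at distance 1
print(ball(triangle, 0, 2))  # the whole triangle
```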

As with trees and forests, convergence in distribution is simply characterized: if \((M_n,E_n)_{n\in \mathbb {N}}\) is a sequence of random rooted maps, one can check that it converges in distribution to a certain random map (M, E) if and only if, for all finite deterministic maps \((m',e')\) and all \(k\in \mathbb {N}\), \(\mathbb {P}\big (B_{(M_n,E_n)}(k)=(m',e')\big )\) converges to \(\mathbb {P}\big (B_{(M,E)}(k)=(m',e')\big ).\)

6 Convergence to Infinite Boltzmann Maps

We now take a critical weight sequence \({\mathbf {q}}\), and take \(\mu \), \(\mathbf {\zeta }\) and \(\nu \) as defined in Proposition 5.1. Since, in the BDFG bijection, the numbers of vertices, edges and faces of the map correspond to the numbers of vertices of certain types in the tree, we expect Theorem 3.1 to tell us that Boltzmann maps with a large number of vertices converge locally. This section is dedicated to establishing that this is indeed the case.

We first define three different periodicity factors \(d_V\), \(d_E\) and \(d_F\) corresponding to vertices, edges and faces

Let also \(\alpha _V=2\) and \(\alpha _E=\alpha _F=0\).

Theorem 6.1

Let \(I\in \{V,E,F\}\). If \(I=E\) or \(I=F\), we also assume that \({\mathbf {q}}\) is regular critical. For appropriate \(n\in \mathbb {N}\), let \((M_n,E_n,R_n)\) be a variable with distribution \(B_{{\mathbf {q}}}\), conditioned on \(\#I(M)=n\). We then have

\[ (M_{\alpha _I+d_In},E_{\alpha _I+d_In}) \underset{n\rightarrow \infty }{\longrightarrow } (M_{\infty },E_{\infty }) \]

in distribution for the local convergence, where \((M_{\infty },E_{\infty })\) is an infinite rooted map which we call the infinite \({\mathbf {q}}\)-Boltzmann map.

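If \(I=E\) or \(I=F\), assuming \({\mathbf {q}}\) is regular critical, consider now \((M_n,E_n)\) with distribution \(B^{\emptyset }_{{\mathbf {q}}}\) conditioned on \(\#I(M)=n\). We then also have

\[ (M_{\alpha _I+d_In},E_{\alpha _I+d_In}) \underset{n\rightarrow \infty }{\longrightarrow } (M_{\infty },E_{\infty }) \]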

with the same limiting map \((M_{\infty },E_{\infty })\).

The choice of the subsequence \((\alpha _I+d_In)_{n\in \mathbb {Z}_+}\) is explained by the fact that, just as with trees, the number of vertices/edges/faces of a map with distribution \(B_{{\mathbf {q}}}\) can only be of the form \(\alpha _I+d_In\) for integer n, and this has nonzero probability for n large enough. This will be explained in Sect. 6.3.1.

The infinite map \((M_{\infty },E_{\infty })\) is moreover planar, in the sense that it is possible to embed it in the plane in such a way that bounded subsets of the plane only encounter a finite number of edges, see Lemma 6.3.

6.1 Two Applications

6.1.1 The Example of Uniform p-Angulations

Here we take an integer \(p\geqslant 3\) and consider maps which only have faces of degree p, which we call p-angulations. Euler's formula shows that the numbers of vertices and edges of such a map are determined by its number of faces. Let m be a finite p-angulation. Since each edge is adjacent to two faces, we have \(p\#F(m)=2\#E(m)\). Euler's formula, on the other hand, states that \(\#V(m)-\#E(m)+\#F(m)=2\). Combining the two shows that

\[ \#E(m)=\frac{p}{2}\#F(m)\quad \text{and}\quad \#V(m)=2+\frac{p-2}{2}\#F(m). \]

Note that these relations imply that there is no real difference between pointed and non-pointed maps when looking at p-angulations, since a uniform pointed map with a fixed number of faces can be obtained by taking a uniform non-pointed map and then choosing the distinguished point uniformly among its vertices.
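The two relations \(p\#F(m)=2\#E(m)\) and \(\#V(m)-\#E(m)+\#F(m)=2\) can be turned into a small computation. The Python sketch below (the function name `p_angulation_counts` is hypothetical) recovers the numbers of vertices and edges of a p-angulation from its number of faces, and is checked against the tetrahedron, the cube and the dodecahedron.

```python
def p_angulation_counts(p, faces):
    """Return (vertices, edges) of a p-angulation with `faces` faces.

    Uses p * F = 2 * E together with Euler's formula V - E + F = 2.
    """
    if p < 3 or faces < 1:
        raise ValueError("need p >= 3 and at least one face")
    if (p * faces) % 2 != 0:
        raise ValueError("p * faces must be even")
    edges = p * faces // 2
    vertices = 2 + edges - faces
    return vertices, edges


print(p_angulation_counts(3, 4))   # tetrahedron: (4, 6)
print(p_angulation_counts(4, 6))   # cube: (8, 12)
print(p_angulation_counts(5, 12))  # dodecahedron: (20, 30)
```

In particular, when p is odd the number of faces must be even, which is one reason why the discussion depends on the parity of p.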

At this point, we must split the discussion according to the parity of p.

Uniform Infinite 2p-angulation Let \(p\geqslant 2\). It has been shown in [21] that the weight sequence \({\mathbf {q}}\) defined by