Abstract

We study the local convergence of critical Galton–Watson trees and Lévy trees under various conditionings. Assuming a very general monotonicity property on the measurable functions of critical random trees, we show that random trees conditioned to have large function values always converge locally to immortal trees. We also derive a very general ratio limit property for measurable functions of critical random trees satisfying the monotonicity property. Finally we study the local convergence of critical continuous-state branching processes, and prove a similar result.

1 Introduction

The local convergence of conditioned Galton–Watson trees (GW trees) has been studied for a long time, dating back to Kesten [14] at least, see Lemma 1.14 therein. Over the years, several different conditionings have been studied, in particular, the conditioning of large height, the conditioning of large total progeny, and the conditioning of large number of leaves. Recently, Abraham and Delmas [1, 2] provided a convenient framework to study the local convergence of conditioned GW trees, and then they used this framework to prove essentially all previous results on the local convergence of conditioned GW trees and also some new ones. Later, in [11], we studied the local convergence of GW trees under a new conditioning, that is, the conditioning of large maximal outdegree. An interesting phenomenon is that under any of the conditionings considered in those papers, a conditioned critical GW tree always converges locally to a certain size-biased tree with an infinite spine, which we call an immortal tree in this paper. Naturally, one would want to ask: Is it true that conditioned critical GW trees always converge locally to immortal trees, under any “reasonable” conditioning? Is it possible to prove such a general result?

The answer turns out to be a partial yes. More specifically, we need to distinguish two different formulations of local convergence. We call one formulation the tail versions of local convergence, and the other the density versions. For example, let us consider the classical conditioning of large height: If we condition GW trees to have height greater than a large value, then we are considering the tail versions; if we condition GW trees to have height equal to a large value, then the density versions. For the tail versions, if we assume a very general monotonicity property on the functions of GW trees, then we can prove that critical GW trees conditioned on large function values always converge locally to immortal trees. For the density versions, such a general result seems to be unattainable. Nevertheless, we may impose a more restrictive additivity property on the functions of GW trees and argue with several specific conditionings in mind, to get the density versions under any of the classical conditionings. At this point, let us mention that there are some more recent works on local convergence of random trees, which cannot be readily fitted into the framework of the present paper, see [3] for the local convergence of GW trees conditioned on the n-th generation to be of size \(a_n\), and [20] for the local convergence of GW trees rerooted at a random vertex, under the conditioning of large total progeny. See also [4, 19] for the local convergence of conditioned multi-type GW trees, which is closely related to the local convergence of random planar maps, about which [6] is a seminal work.

Now we review our results on the local convergence of critical GW trees. In Theorem 2.1, we prove our general result on the tail versions of local convergence of conditioned critical GW trees. Although this result shows that critical GW trees always converge locally to immortal trees under essentially “any” conditioning, we only apply it to the conditioning of large width in Corollary 2.2, which is one of our main motivations of this paper. Next we study the corresponding density versions in Theorem 2.3, where we require a more restrictive additivity property on the functions of GW trees. Then we apply Theorem 2.3 to four specific conditionings, which are the conditioning of large maximal outdegree, the conditioning of large height, the conditioning of large width, and the conditioning of large number of nodes with outdegree in a given set. Finally we take the argument in the proof of Theorem 2.1 further to derive in Theorem 2.7 a very general ratio limit property for functions of GW trees satisfying the monotonicity property. In particular, we give in Proposition 2.8 two ratio limit results for the width of GW trees.

We have to admit that several results in this paper on the local convergence of conditioned critical GW trees are already known from [1, 11]. Note that a unified framework for the local convergence of conditioned critical GW trees has been proposed and used in [1] and also used in [11] later. The reason that we revisit all these results here is that we have a different approach. Compared to the approach in [1], we feel that our approach has some advantages: First it seems that our approach is somewhat more direct and intuitive; second it seems that our approach is more natural for the proofs of our general results Theorems 2.1 and 2.7; finally our approach can also be used for the local convergence of conditioned critical Lévy trees, which is also one of our main motivations of this paper. Recall that Lévy trees are certain scaling limits of GW trees, see Aldous [5] for his seminal work on Brownian trees, and Duquesne and Le Gall [9] for their seminal work on Lévy trees. Although technically Lévy trees are more involved than GW trees, we are able to get essentially all the corresponding results for Lévy trees.

Now let us review our results on the local convergence of conditioned critical Lévy trees. Here we only consider the tail versions of local convergence. Recall that Duquesne [8] proved the tail versions of local convergence of critical or subcritical Lévy trees to continuum immortal trees, under the conditioning of large height. We prove in Theorem 4.1 that critical Lévy trees conditioned on large function values always converge locally to continuum immortal trees, as long as the measurable function of Lévy trees satisfies a very general monotonicity property. We apply this general result to three specific conditionings, which are the conditioning of large width, the conditioning of large total mass, and the conditioning of large maximal degree. Next by taking the argument in the proof of Theorem 4.1 further, we derive in Theorem 4.4 a very general ratio limit property for measurable functions of Lévy trees satisfying the monotonicity property. Finally by adapting the proofs of Theorems 4.1 and 4.4, we prove in Corollary 4.6 that conditioned critical continuous-state branching processes (CB processes) always converge locally to certain continuous-state branching processes with immigration (CBI processes), again only assuming a very general monotonicity property on the measurable functions of CB processes.

Our approach in this paper depends crucially on the criticality of random trees, so it cannot be used directly for the local convergence of subcritical random trees (however, see Corollary 2.6). Recall that, relying on the framework and approach of [2], it was proved in [11] that under the conditioning of large maximal outdegree, the local limit of a subcritical GW tree is a condensation tree, which is different from an immortal tree but closely related to it. Naturally, we expect certain continuum condensation trees to be the correct local limits of subcritical Lévy trees under the conditioning of large maximal degree; however, the desired proof seems to be much more involved, and we do not have it at present.

This paper is organized as follows: In Sect. 2, we study both the tail versions and the density versions of local convergence of conditioned critical GW trees. In Sect. 3, we review several basic topics of Lévy trees. Finally Sect. 4 is devoted to the local convergence of conditioned critical Lévy trees and CB processes.

2 Local Convergence of Critical GW Trees

In this section, first we review several basic topics of GW trees. Then we study the local convergence of conditioned critical GW trees, assuming a very general monotonicity property for the tail versions and a more restricted additivity property for the density versions. Finally we derive a very general ratio limit property for functions of GW trees satisfying the monotonicity property.

2.1 Preliminaries of GW Trees

This section is extracted from [1]. For more details refer to Sect. 2 of [1]. Denote by \(\mathbb {Z}_+ = \{0,1,2,\ldots \}\) the set of nonnegative integers and by \(\mathbb {N}= \{1,2,\ldots \}\) the set of positive integers. Use

\[{\mathcal {U}}=\bigcup _{n\ge 0}\mathbb {N}^n\]

to denote the set of finite sequences of positive integers with the convention \(\mathbb {N}^0=\{\emptyset \}\). For \(n \ge 1\) and \(u = (u_1,\ldots ,u_n) \in \mathbb {N}^n\), let \(|u| = n\) be the height of u and \(|\emptyset | = 0\) the height of \(\emptyset \). If u and v are two sequences of \({\mathcal {U}}\), denote by uv the concatenation of the two sequences, with the convention that \(uv = u\) if \(v = \emptyset \) and \(uv = v\) if \(u = \emptyset \). The set of ancestors of u is the set

\[A_u=\{v\in {\mathcal {U}}: \text{there exists } w\in {\mathcal {U}},\ w\ne \emptyset ,\ \text{such that } u=vw\}.\]

A tree \(\mathbf {t}\) is a subset of \({{\mathcal {U}}}\) that satisfies:

- \(\emptyset \in \mathbf {t}\).
- If \(u\in \mathbf {t}\), then \(A_u\subset \mathbf {t}\).
- For every \(u\in \mathbf {t}\), there exists \(k_u(\mathbf {t}) \in \mathbb {Z}_+\) such that, for every \(i\in \mathbb {N}\), \(ui \in \mathbf {t}\) if and only if \(1 \le i \le k_u(\mathbf {t})\).

The node \(\emptyset \) is called the root of \(\mathbf {t}\). The integer \(k_u(\mathbf {t})\) represents the number of offspring of the node u in the tree \(\mathbf {t}\), and we call it the outdegree of the node u in the tree \(\mathbf {t}\). The maximal outdegree \(M(\mathbf {t})\) of a tree \(\mathbf {t}\) is defined by

\[M(\mathbf {t})=\sup \{k_u(\mathbf {t}): u\in \mathbf {t}\}.\]

The height \(H(\mathbf {t})\) of a tree \(\mathbf {t}\) is defined by

\[H(\mathbf {t})=\sup \{|u|: u\in \mathbf {t}\}.\]

Denote by \(\mathbb {T}\) the set of trees, by \(\mathbb {T}_0\) the subset of finite trees, and by \(\mathbb {T}^{(h)}\) the subset of trees with height at most h,

\[\mathbb {T}^{(h)}=\{\mathbf {t}\in \mathbb {T}: H(\mathbf {t})\le h\}.\]

Finally a forest of k finite trees is just a sequence of k finite trees.
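As a concrete illustration of these definitions, the following minimal Python sketch encodes a finite tree as a set of tuples of positive integers, with the empty tuple playing the role of the root \(\emptyset \). The function names are ours and purely illustrative; the maximal outdegree and the height are taken to be the suprema of \(k_u(\mathbf {t})\) and \(|u|\) over \(u\in \mathbf {t}\), which is the usual convention and is an assumption here.

```python
from typing import Set, Tuple

Node = Tuple[int, ...]  # a finite sequence of positive integers; () is the root
Tree = Set[Node]


def outdegree(t: Tree, u: Node) -> int:
    """k_u(t): the number of consecutive children u1, u2, ... present in t."""
    k = 0
    while u + (k + 1,) in t:
        k += 1
    return k


def is_tree(t: Tree) -> bool:
    """Check the three defining conditions on a finite set of nodes."""
    if () not in t:
        return False
    for u in t:
        # Every ancestor (strict prefix) of u must belong to t.
        if any(u[:j] not in t for j in range(len(u))):
            return False
        # Children of u must be labelled 1..k_u(t) without gaps.
        k = outdegree(t, u)
        if any(len(v) == len(u) + 1 and v[:-1] == u and v[-1] > k for v in t):
            return False
    return True


def max_outdegree(t: Tree) -> int:
    """M(t), assumed to be the largest outdegree over all nodes."""
    return max(outdegree(t, u) for u in t)


def height(t: Tree) -> int:
    """H(t), assumed to be the largest node height |u|."""
    return max(len(u) for u in t)


# Hypothetical example: the root has two children, the second has three.
example = {(), (1,), (2,), (2, 1), (2, 2), (2, 3)}
```

Under this encoding a forest of k finite trees is simply a list of k such sets.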

For any \(\mathbf {t}\in \mathbb {T}\) and \(h \in \mathbb {Z}_+\), write \(Y_h(\mathbf {t})\) for the total number of nodes of the tree \(\mathbf {t}\) at height h. Also write \(\mathbf {t}_{(h)}=(\mathbf {t}_{(h),i},1\le i\le Y_h(\mathbf {t}))\) for the collection of all subtrees above height h. For \(h \in \mathbb {Z}_+\), the restriction function \(r_h\) from \(\mathbb {T}\) to \(\mathbb {T}\) is defined by

\[r_h(\mathbf {t})=\{u\in \mathbf {t}: |u|\le h\}.\]

We endow the set \(\mathbb {T}\) with the ultra-metric distance

Then a sequence \((\mathbf {t}_n, n\in \mathbb {N})\) of trees converges to a tree \(\mathbf {t}\) with respect to the distance d if and only if for every \(h \in \mathbb {N}\), we have \(r_h(\mathbf {t}_n)=r_h(\mathbf {t})\) for all sufficiently large n.

Let \((T_n, n \in \mathbb {N})\) and T be \(\mathbb {T}\)-valued random variables (with respect to the Borel \(\sigma \)-algebra on \(\mathbb {T}\)). Denote by dist(T) the distribution of the random variable T, and write

\[\text{dist}(T_n)\rightarrow \text{dist}(T)\]

for the convergence in distribution of the sequence \((T_n, n \in \mathbb {N})\) to T. It can be proved that the sequence \((T_n, n \in \mathbb {N})\) converges in distribution to T if and only if for any \(h \in \mathbb {N}\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\),

\[\lim _{n\rightarrow \infty }{\mathbf {P}}[r_h(T_n)=\mathbf {t}]={\mathbf {P}}[r_h(T)=\mathbf {t}]. \tag{1}\]

Let \(p=(p_0,p_1,p_2,\ldots )\) be a probability distribution on the set of nonnegative integers. We exclude the trivial case of \(p=(0,1,0,\ldots )\). Denote by \(\mu \) the expectation of p and assume that \(0<\mu <\infty \). A \(\mathbb {T}\)-valued random variable \(\tau =\tau (p)\) is a Galton–Watson tree (GW tree) with the offspring distribution p if the distribution of \(k_\emptyset (\tau )\) is p and for \(n\in \mathbb {N}\), conditionally on \(\{k_\emptyset (\tau )=n\}\), the subtrees \((\tau _{(1),1}, \tau _{(1),2},\ldots , \tau _{(1),n})\) are independent and distributed as the original tree \(\tau \). From this definition, we can obtain the branching property of GW trees, which says that under the conditional probability \({\mathbf {P}}[\cdot | Y_h(\tau )= n]\) and conditionally on \(r_h(\tau )\), the subtrees \((\tau _{(h),1}, \tau _{(h),2},\ldots , \tau _{(h),n})\) are independent and distributed as the original tree \(\tau \). The GW tree is called critical (resp. subcritical, supercritical) if \(\mu = 1\) (resp. \(\mu < 1\), \(\mu >1\)). In the critical and subcritical case, we have that a.s. \(\tau \) belongs to \(\mathbb {T}_0\).
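The recursive definition of a GW tree translates directly into a breadth-first sampler. The sketch below is a minimal illustration and not part of the original argument: it assumes an offspring distribution with finite support given as a list `p`, and the names `sample_gw_tree`, `generation_sizes`, `restrict` and the cap `max_nodes` are hypothetical. The function `restrict` assumes the usual definition of \(r_h\) as the set of nodes of height at most h.

```python
import random
from collections import Counter


def sample_gw_tree(p, rng, max_nodes=10_000):
    """Sample a GW tree with offspring distribution p = [p_0, p_1, ...].

    Returns a set of tuples, or None when the tree grows beyond max_nodes
    (the cap is an artificial safeguard, not part of the model).
    """
    tree = {()}
    frontier = [()]
    ks = range(len(p))
    while frontier:
        nxt = []
        for u in frontier:
            k = rng.choices(ks, weights=p)[0]  # independent offspring number
            for i in range(1, k + 1):
                v = u + (i,)
                tree.add(v)
                nxt.append(v)
        if len(tree) > max_nodes:
            return None
        frontier = nxt
    return tree


def generation_sizes(t):
    """Return [Y_0(t), Y_1(t), ..., Y_H(t)] for a finite tree t."""
    counts = Counter(len(u) for u in t)
    return [counts[h] for h in range(max(counts) + 1)]


def restrict(t, h):
    """r_h(t): keep only the nodes of height at most h (assumed definition)."""
    return {u for u in t if len(u) <= h}
```

For a critical offspring distribution such as `[0.5, 0.0, 0.5]`, repeated calls return finite trees almost surely, although their sizes are heavy-tailed, which is why the cap is there.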

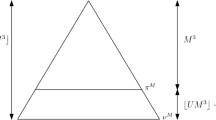

Immortal trees can be defined for critical or subcritical offspring distributions. We recall the following informal but intuitive description from Sect. 1 of [2], which is adapted from Sect. 5 of [13]. Let p be a critical or subcritical offspring distribution. Let \(\tau ^*(p)\) denote the random tree which is specified by:

- i) There are two types of nodes: normal and special.
- ii) The root is special.
- iii) Normal nodes have offspring distribution p.
- iv) Special nodes have offspring distribution the size-biased distribution \({\hat{p}}\) on \(\mathbb {Z}_+\) defined by \({\hat{p}}_k =kp_k/\mu \) for \(k \in \mathbb {Z}_+\).
- v) The offspring of all the nodes are independent of each other.
- vi) All the children of a normal node are normal.
- vii) When a special node gets several children, one of them is selected uniformly at random and is special while the others are normal.
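The description i) to vii) can be turned into a sampler for the immortal tree restricted to a finite height. The following Python sketch is only an illustration under the assumption that p has finite support and is given as a list; the function names are hypothetical. It returns the nodes of height at most h together with the special node at height h, that is, the tip of the truncated spine.

```python
import random


def size_biased(p):
    """Return the size-biased distribution with entries k * p_k / mu."""
    mu = sum(k * pk for k, pk in enumerate(p))
    return [k * pk / mu for k, pk in enumerate(p)]


def sample_immortal_restricted(p, h, rng):
    """Sample the immortal tree up to height h as (nodes, special node at height h)."""
    p_hat = size_biased(p)
    ks = range(len(p))
    tree = {()}
    special = ()  # ii) the root is special
    normal = []   # normal nodes of the current generation
    for _ in range(h):
        nxt = []
        # iv) the special node reproduces according to the size-biased law,
        # so it always has at least one child.
        k = rng.choices(ks, weights=p_hat)[0]
        s = rng.randint(1, k)  # vii) uniformly chosen special child
        for i in range(1, k + 1):
            v = special + (i,)
            tree.add(v)
            if i != s:
                nxt.append(v)
        new_special = special + (s,)
        # iii) and vi) normal nodes use p and have only normal children.
        for u in normal:
            k = rng.choices(ks, weights=p)[0]
            for i in range(1, k + 1):
                v = u + (i,)
                tree.add(v)
                nxt.append(v)
        special, normal = new_special, nxt
    return tree, special
```

Because the special node always has a child, every generation up to height h is nonempty, which is the finite-height shadow of the infinite spine.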

Notice that a.s. \(\tau ^*(p)\) has a unique infinite spine. We call it an immortal tree. By the definitions of GW trees and immortal trees, it can be shown that for any \(h \in \mathbb {Z}_+\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\),

\[{\mathbf {P}}[r_h(\tau ^*)=\mathbf {t}]=\mu ^{-h}\,Y_h(\mathbf {t})\,{\mathbf {P}}[r_h(\tau )=\mathbf {t}]. \tag{2}\]

2.2 The Tail Versions of Local Convergence

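Let A be a nonnegative integer-valued function defined on \(\mathbb {T}_0\). Recall that for any \(\mathbf {t}\in \mathbb {T}_0\), we write \((\mathbf {t}_{(h),i},1\le i\le Y_h(\mathbf {t}))\) for the collection of all subtrees above height h. We introduce the following monotonicity property of A:

\[A(\mathbf {t})\ge A(\mathbf {t}_{(h),i})\quad \text{for any } \mathbf {t}\in \mathbb {T}_0,\ h\in \mathbb {N},\ \text{and } 1\le i\le Y_h(\mathbf {t}). \tag{3}\]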

The meaning of this monotonicity property (3) should be clear: For any \(h\in \mathbb {N}\), the value of A on the whole tree is not less than that on any subtree above height h.

Define \(v_n={\mathbf {P}}[A (\tau )>n]\in [0,1]\) and \({\mathbf {P}}_n[\cdot ]={\mathbf {P}}[\cdot |A (\tau )>n]\) when \(v_n>0\). The following theorem asserts that if the monotonicity property (3) holds for A, then under the conditional probability \({\mathbf {P}}_n\), the GW tree \(\tau (p)\) converges locally to the immortal tree \(\tau ^*(p)\). We write \(\tau ^*=\tau ^*(p)\) if the offspring distribution p is clear from the context.

Theorem 2.1

Assume that p is critical and \(v_n>0\) for all n. If A satisfies the monotonicity property (3), then as \(n\rightarrow \infty \),

\[\text{dist}(\tau \,|\,A(\tau )>n)\rightarrow \text{dist}(\tau ^*).\]

Proof

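By (1), we only need to prove that for any \(h \in \mathbb {N}\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\),

\[\lim _{n\rightarrow \infty }{\mathbf {P}}_n[r_h(\tau )=\mathbf {t}]={\mathbf {P}}[r_h(\tau ^*)=\mathbf {t}].\]

Recall from (2) that when \(\mu =1\), for any \(h \in \mathbb {N}\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\),

\[{\mathbf {P}}[r_h(\tau ^*)=\mathbf {t}]=Y_h(\mathbf {t})\,{\mathbf {P}}[r_h(\tau )=\mathbf {t}].\]

So it suffices to show that for any \(h \in \mathbb {N}\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\),

\[\lim _{n\rightarrow \infty }{\mathbf {P}}_n[r_h(\tau )=\mathbf {t}]=Y_h(\mathbf {t})\,{\mathbf {P}}[r_h(\tau )=\mathbf {t}]. \tag{4}\]

To prove (4), first recall that if the value of A on a subtree above height h is greater than n, then the value of A on the whole tree is greater than n, by the monotonicity property (3). Then recall from the branching property in Sect. 2.1 that under \({\mathbf {P}}\) and conditional on \(r_h(\tau )\), the probability that the value of A is greater than n on at least one subtree above height h is

\[1-(1-v_n)^{Y_h(\tau )}.\]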

So the monotonicity property and the branching property imply that

Thus we get

where the second inequality follows from Fatou’s lemma. Note that \(v_n\rightarrow 0\) as \(n\rightarrow \infty \).
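The asymptotic behind this step can be checked numerically. Assuming, as the branching property suggests, that the probability of A exceeding n on at least one of k independent subtrees equals \(1-(1-v_n)^k\), this quantity divided by \(v_n\) tends to k as \(v_n\rightarrow 0\), which is where the factor \(Y_h(\mathbf {t})\) in the limit comes from. The sketch below is illustrative only and the function name is hypothetical.

```python
def prob_at_least_one(v: float, k: int) -> float:
    """Probability that at least one of k independent events of probability v occurs."""
    return 1.0 - (1.0 - v) ** k


if __name__ == "__main__":
    k = 5
    for v in (1e-1, 1e-2, 1e-3, 1e-4):
        # The ratio approaches k from below as v decreases.
        print(v, prob_at_least_one(v, k) / v)
```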

Finally note that

since \({\mathbf {E}}[Y_h(\tau )]=1\) by (2). This implies (4), since by Fatou’s lemma for sums we get

Note that it is easy to think of a conditioning under which the local limits of conditioned critical GW trees are not immortal trees, such as the conditioning of large minimal outdegree, where the minimal outdegree of a tree is defined to be the minimum of positive outdegrees of all nodes in the tree. It should be clear that the minimal outdegree does not satisfy the monotonicity property (3).

Although Theorem 2.1 holds for any A satisfying the monotonicity property (3), one of our original motivations for this result is the local convergence under the conditioning of large width. So right now we will only apply Theorem 2.1 to this specific conditioning. Here the width \(W(\mathbf {t})\) of a tree \(\mathbf {t}\) is defined to be \(\sup _{h\in \mathbb {Z}_+} Y_h(\mathbf {t})\). Note that \({\mathbf {P}}[W(\tau )>n]>0\) for any n if and only if \(p_0+p_1<1\). Recall that we exclude the trivial case of \(p=(0,1,0,\ldots )\). Then Theorem 2.1 immediately gives the local convergence of critical GW trees to immortal trees, under the conditioning of large width.
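To make the monotonicity property concrete, the following Python sketch checks it on a single finite tree, encoded as a set of tuples with the empty tuple as root. It is a minimal illustration under our own conventions: the helper names are hypothetical, and the minimal outdegree of a tree without any positive outdegree is set to 0, a choice the text does not specify. On the example tree the width passes the check while the minimal outdegree fails it.

```python
from collections import Counter


def subtrees_above(t, h):
    """Return the subtrees rooted at the nodes of height h, each re-rooted at ()."""
    roots = sorted(u for u in t if len(u) == h)
    return [{v[h:] for v in t if v[:h] == u} for u in roots]


def width(t):
    """W(t): the largest generation size."""
    return max(Counter(len(u) for u in t).values())


def min_positive_outdegree(t):
    """Minimum of the positive outdegrees; 0 if there is none (our convention)."""
    children = Counter(u[:-1] for u in t if u)  # parent -> number of children
    return min(children.values(), default=0)


def is_monotone_on(A, t):
    """Check A(t) >= A(s) for every subtree s of t above every height h >= 1."""
    top = max(len(u) for u in t)
    return all(A(t) >= A(s)
               for h in range(1, top + 1)
               for s in subtrees_above(t, h))


# Hypothetical example: the root has one child, which has three children.
t = {(), (1,), (1, 1), (1, 2), (1, 3)}
```

Here `is_monotone_on(width, t)` holds, whereas `is_monotone_on(min_positive_outdegree, t)` fails because the subtree above height 1 has minimal outdegree 3 while the whole tree has minimal outdegree 1.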

Corollary 2.2

Assume that p is critical. Then as \(n\rightarrow \infty \),

2.3 The Density Versions of Local Convergence

The density versions automatically imply the corresponding tail versions, since the tail versions can be written as sums of the corresponding density versions. More precisely, we have

To get the density versions, we have to impose a more restrictive additivity property on the function A, which is similar in spirit to the additivity property (3.1) of [1].

Let A be a nonnegative integer-valued function defined on the space of finite forests. Recall that \(\mathbf {t}_{(h)}\) is the sub-forest of the tree \(\mathbf {t}\) above height h and \(r_h(\mathbf {t})\) is the subtree of the tree \(\mathbf {t}\) below height h. We introduce the following additivity property of A: There exists a nonnegative integer-valued function B on \(\mathbb {T}_0\), such that for any fixed \(h\in \mathbb {N}\) and \(\mathbf {s}\in \mathbb {T}^{(h)}\), there exists \(n'\in \mathbb {N}\) (\(n'\) may depend on h and \(\mathbf {s}\)) such that for any \(\mathbf {t}\in \mathbb {T}_0\) with \(r_h(\mathbf {t})=\mathbf {s}\) and \(A(\mathbf {t})>n'\), we have

Define \(v_{(n)}={\mathbf {P}}[A (\tau )=n]\in [0,1]\) and \({\mathbf {P}}_{(n)}[\cdot ]={\mathbf {P}}[\cdot |A (\tau )=n]\) when \(v_{(n)}>0\). Let \(\tau ^{(k)} = (\tau _1,\ldots ,\tau _k)\) be the forest of k i.i.d. GW trees with offspring distribution p. Write \(v_{(n)}(k)={\mathbf {P}}[A (\tau ^{(k)})=n]\). The following theorem asserts that if the additivity property (5) holds for A and some additional ratio limit properties hold for \(v_{(n)}\) and \(v_{(n)}(k)\), then under the conditional probability \({\mathbf {P}}_{(n)}\), the GW tree \(\tau (p)\) also converges locally to the immortal tree \(\tau ^*(p)\).

Theorem 2.3

Assume that \(v_n>0\) for all n, the additivity property (5) holds for A,

and one of the following two conditions holds:

-

I.

\(\mu =1\), and \(\liminf _{n\rightarrow \infty }v_{(n-B(\mathbf {t}))}/v_{(n)}\ge 1\) for any \(\mathbf {t}\in \mathbb {T}_0\).

-

II.

\(0<\mu \le 1\), \(B(\mathbf {t})=H(\mathbf {t})\) for \(\mathbf {t}\in \mathbb {T}_0\), and \(\liminf _{n\rightarrow \infty }v_{(n-1)}/v_{(n)}\ge 1/\mu \).

Then as \(n\rightarrow \infty \),

Note that all limits are understood along the infinite sub-sequence \(\{n: v_{(n)} > 0\}\).

Proof

For Case I, by the additivity property (5) and the branching property, we see that for any \(h\in \mathbb {N}\) and \(\mathbf {t}\in \mathbb {T}^{(h)}\), when n is large enough,

Then by our assumptions and Fatou’s lemma, we get

From the first paragraph and the last paragraph of the proof of Theorem 2.1, we see that the above inequality is enough to imply the local convergence for Case I.

The proof of Case II is similar. We first argue that

Since \(\mu ^{-h}{\mathbf {E}}[Y_h(\tau )]=1\), clearly the above inequality is also enough to imply the local convergence for Case II. \(\square \)

Next we will apply Theorem 2.3 to four specific conditionings, which are the conditioning of large height, the conditioning of large maximal outdegree, the conditioning of large width, and the conditioning of large number of nodes with outdegree in a given set. First we show in the following lemma that the condition (6) in Theorem 2.3 holds when a certain maximum property holds for the function A. This result will be applied to the maximal outdegree and the height.

Lemma 2.4

Assume that \(A(\mathbf {t}_1,\ldots ,\mathbf {t}_k)=\max _{1\le i\le k} A(\mathbf {t}_i)\) for any forest \((\mathbf {t}_1,\ldots ,\mathbf {t}_k)\), and \(v_n>0\) for all n. Then for any \(k\in \mathbb {N}\),

where the limit is understood along the infinite sub-sequence \(\{n: v_{(n)} > 0\}\).

Proof

Just notice that

Then since \(v_n\rightarrow 0\) as \(n\rightarrow \infty \),

\(\square \)

For the forest \(\mathbf {t}^{(k)}=(\mathbf {t}_1,\mathbf {t}_2,\ldots ,\mathbf {t}_k)\), again we write \(Y_h(\mathbf {t}^{(k)})\) for the total number of nodes in the forest \(\mathbf {t}^{(k)}\) at height h, that is, \(Y_h(\mathbf {t}^{(k)})=\sum _{1\le i\le k}Y_h(\mathbf {t}_i)\). Then define

Lemma 2.5

Assume that \(A=W\), and the critical or subcritical p has bounded support with \(p_0+p_1<1\). Then for any \(k\in \mathbb {N}\),

where the limit is understood along the infinite sub-sequence \(\{n: v_{(n)} > 0\}\).

Proof

Assume that \(k\ge 2\). Let \(N=\sup \{n: p_n>0\}<\infty \) be the supremum of the support of p. Use \(\lfloor r \rfloor \) to denote the largest integer less than or equal to r. We argue that if \(W(\tau _1)=n\) and \(H(\tau _i)<\lfloor \log _N (n/k)\rfloor \) for \(2\le i \le k\), then the width of \(\tau ^{(k)}\) strictly below generation \(\lfloor \log _N (n/k)\rfloor \) is less than \(kN^{\lfloor \log _N (n/k)\rfloor } \le n\), that is,

which implies that \(W(\tau _1)=n\) is achieved after generation \(\lfloor \log _N (n/k)\rfloor \) and \(W(\tau ^{(k)})=W(\tau _1)=n\). Using this observation, we see that

\(\square \)

We turn to the conditioning of large number of nodes with outdegree in a given set, which is the main topic of [1, 2]. For any \({\mathcal {A}}\subset \mathbb {Z}_+\), denote by \(L_{{\mathcal {A}}}(\mathbf {t})\) the total number of nodes in the tree \(\mathbf {t}\) with outdegree in \({\mathcal {A}}\). For example, \(L_{\mathbb {Z}_+}(\mathbf {t})\) is just the total progeny of the tree \(\mathbf {t}\), and \(L_{\{0\}}(\mathbf {t})\) is just the total number of leaves of the tree \(\mathbf {t}\).
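The quantity \(L_{{\mathcal {A}}}(\mathbf {t})\) is easy to compute for a finite tree. In the Python sketch below, a tree is a set of tuples with the empty tuple as root, and the set \({\mathcal {A}}\) is passed as a predicate on outdegrees so that infinite sets such as \(\mathbb {Z}_+\) can be handled; this encoding and the function names are our own illustrative choices.

```python
from collections import Counter


def outdegrees(t):
    """Map every node of t to its outdegree."""
    children = Counter(u[:-1] for u in t if u)  # parent -> number of children
    return {u: children.get(u, 0) for u in t}


def count_outdegree_in(t, in_a):
    """L_A(t): number of nodes whose outdegree k satisfies in_a(k)."""
    return sum(1 for k in outdegrees(t).values() if in_a(k))


# Hypothetical example tree with four nodes, two of which are leaves.
t = {(), (1,), (2,), (2, 1)}
total_progeny = count_outdegree_in(t, lambda k: True)   # A = Z_+
leaves = count_outdegree_in(t, lambda k: k == 0)        # A = {0}
```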

Now we show that when combined with several results from [1] (which are not directly related to the local convergence), our Theorem 2.3 can also be used to prove all the known density versions of local convergence of critical GW trees from [1, 11]. For \({\mathcal {A}}\subset \mathbb {Z}_+\), define \(p({\mathcal {A}})=\sum _{k\in {\mathcal {A}}}p_k\). Also recall that for a finite tree \(\mathbf {t}\), we use \(M(\mathbf {t})\) to denote the maximal outdegree, \(W(\mathbf {t})\) the width, and \(H(\mathbf {t})\) the height.

Corollary 2.6

If the offspring distribution p is critical with unbounded support, then as \(n\rightarrow \infty \),

where the limit is understood along the infinite sub-sequence \(\{n\in \mathbb {N}: p_n>0\}\). If p is critical with bounded support, then as \(n\rightarrow \infty \),

where the limit is understood along the infinite sub-sequence \(\{n\in \mathbb {N}: {\mathbf {P}}(W(\tau )=n)>0\}\). If p is critical or subcritical, then as \(n\rightarrow \infty \),

For the critical offspring distribution p, take any \({\mathcal {A}}\subset \mathbb {Z}_+\) with \(p({\mathcal {A}})>0\), then as \(n\rightarrow \infty \),

where the limit is understood along the infinite sub-sequence \(\{n\in \mathbb {N}:{\mathbf {P}}(L_{{\mathcal {A}}}(\tau )=n)>0\}\).

Proof

For the conditioning of large maximal outdegree, clearly we may let \(A(\mathbf {t})=M(\mathbf {t})\), then let \(A(\mathbf {t}^{(k)})=\max _{1\le i\le k} M(\mathbf {t}_i)\) for any forest \(\mathbf {t}^{(k)}=(\mathbf {t}_1,\ldots ,\mathbf {t}_k)\) and \(B\equiv 0\). Now the local convergence follows from Case I in Theorem 2.3, Lemma 2.4, and the simple fact that for any \(n>0\), \({\mathbf {P}}[M(\tau )=n]>0\) if and only if \(p_n>0\).

For the conditioning of large width, we let \(A(\mathbf {t})=W(\mathbf {t})=\sup _h Y_h(\mathbf {t})\), \(A(\mathbf {t}^{(k)})=\sup _h Y_h(\mathbf {t}^{(k)})\) for any forest \(\mathbf {t}^{(k)}=(\mathbf {t}_1,\ldots ,\mathbf {t}_k)\), and \(B\equiv 0\). Now the local convergence follows from Case I in Theorem 2.3, Lemma 2.5, and the simple fact that \({\mathbf {P}}[W(\tau )>n]>0\) for any n if and only if \(p_0+p_1<1\).

For the conditioning of large height, we let \(A(\mathbf {t})=H(\mathbf {t})\), \(A(\mathbf {t}^{(k)})=\max _{1\le i\le k} H(\mathbf {t}_i)\) for any forest \(\mathbf {t}^{(k)}=(\mathbf {t}_1,\ldots ,\mathbf {t}_k)\) and \(B(\mathbf {t})=H(\mathbf {t})\). Now the local convergence follows from (4.5) of [1], Case II in Theorem 2.3, Lemma 2.4, and the trivial fact that \({\mathbf {P}}[H(\tau )=n]>0\) for any \(n\in \mathbb {N}\).

For the conditioning of large number of nodes with outdegree in a given set \({\mathcal {A}}\subset \mathbb {Z}_+\), we only give an outline of our proof and leave the details to the reader. It is easy to see that when \(p({\mathcal {A}})>0\), the set \(\{n\in \mathbb {N}:{\mathbf {P}}(L_{{\mathcal {A}}}(\tau )=n)>0\}\) is infinite. Let \(A(\mathbf {t})=L_{{\mathcal {A}}}(\mathbf {t})\), then let \(A(\mathbf {t}^{(k)})=\sum _{1\le i\le k} L_{{\mathcal {A}}}(\mathbf {t}_i)\) for any forest \(\mathbf {t}^{(k)}=(\mathbf {t}_1,\ldots ,\mathbf {t}_k)\) and \(B(\mathbf {t})=L_{{\mathcal {A}}}(\mathbf {t})-Y_{H(\mathbf {t})}(\mathbf {t}){\mathbf {1}}\{0\in {\mathcal {A}}\}\). First consider the case of \({\mathcal {A}}=\mathbb {Z}_+\). Then the local convergence follows from Theorem 2.3, the Dwass formula, and a strong ratio theorem for random walks. See, e.g., (4.6) in [1] for the Dwass formula and (4.8) and (4.10) in [1] for the strong ratio theorem. These two results combined imply the condition (6) and the condition I in our Theorem 2.3. Finally by Sect. 5.1 of [1], we know that the case of any general \({\mathcal {A}}\subset \mathbb {Z}_+\) can be reduced to the case of \({\mathcal {A}}=\mathbb {Z}_+\) in the sense that \(L_{{\mathcal {A}}}\) of any critical GW tree equals \(L_{\mathbb {Z}_+}\) of a corresponding critical GW tree. So for any \({\mathcal {A}}\subset \mathbb {Z}_+\), the condition (6) and the condition I in our Theorem 2.3 hold since they hold for \(\mathbb {Z}_+\). \(\square \)

2.4 A General Ratio Limit Property

Let A be a nonnegative integer-valued function defined on the space of finite forests. For a finite forest \(\mathbf{f}\), we also write \(\mathbf{f}_{(h)}\) for the sub-forest of \(\mathbf{f}\) above height h. We introduce the following monotonicity property of A:

Write \({\mathbf {P}}[A>n]\) for \({\mathbf {P}}[A (\tau )>n]\) and \({\mathbf {P}}^{(k)}[A>n]\) for \({\mathbf {P}}[A (\tau ^{(k)})>n]\). The following theorem asserts that if the monotonicity property (7) holds for A, then \({\mathbf {P}}^{(k)}[A>n]\) and \(k{\mathbf {P}}[A>n]\) are asymptotically equivalent as \(n\rightarrow \infty \).
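The asymptotic equivalence of \({\mathbf {P}}^{(k)}[A>n]\) and \(k{\mathbf {P}}[A>n]\) can be observed exactly in one simple case. The Python sketch below takes A to be the height, extended to forests as the maximum over the trees, which we assume fits the monotonicity framework. It uses the standard fact that \({\mathbf {P}}[H(\tau )\le n]\) is obtained by iterating the offspring generating function starting from \(p_0\), so that \({\mathbf {P}}^{(k)}[H>n]=1-{\mathbf {P}}[H(\tau )\le n]^k\) by independence. The function names are hypothetical.

```python
def height_cdf(p, n):
    """Return P[H(tau) <= n] by iterating the offspring generating function."""
    def f(s):
        return sum(pk * s ** k for k, pk in enumerate(p))

    F = p[0]  # P[H(tau) <= 0] = p_0
    for _ in range(n):
        F = f(F)
    return F


def forest_tail_ratio(p, k, n):
    """Return P^(k)[H > n] / P[H > n] for a forest of k i.i.d. GW trees."""
    F = height_cdf(p, n)
    return (1.0 - F ** k) / (1.0 - F)


if __name__ == "__main__":
    p = [0.5, 0.0, 0.5]  # critical binary offspring distribution
    for n in (10, 100, 1000):
        print(n, forest_tail_ratio(p, 3, n))  # approaches k = 3
```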

Theorem 2.7

Assume that p is critical, \({\mathbf {P}}[A>n]>0\) for every \(n\in \mathbb {N}\), and A satisfies the monotonicity property (7). Then for any \(k\in \mathbb {N}\),

Assume additionally that for some \(h\in \mathbb {N}\), \(\mathbf {s}\in \mathbb {T}^{(h)}\) with \({\mathbf {P}}[r_h(\tau )=\mathbf {s}]>0\), there exists some \(n'\in \mathbb {N}\) such that for any \(\mathbf {t}\in \mathbb {T}_0\) with \(r_h(\mathbf {t})=\mathbf {s}\) and \(A(\mathbf {t})>n'\), we get \(A(\mathbf {t})-A(\mathbf {t}_{(h)})= r'>0\). Then for any \(k\in \mathbb {N}\) and \(r\in \mathbb {N}\),

Proof

First as in the proof of Theorem 2.1, for any \(k\in \mathbb {N}\),

Next we argue that for any \(k\in \mathbb {N}\), if there exists some \(h\in \mathbb {N}\) with \({\mathbf {P}}[Y_h(\tau )=k]>0\), then

To prove this, pick \(\mathbf {t}\) such that \({\mathbf {P}}[r_h(\tau )=\mathbf {t}]>0\) and \(Y_h(\mathbf {t})=k\). As in the proof of Theorem 2.1, by the monotonicity property (7) and the branching property we get

Then again as in the proof of Theorem 2.1, we can derive from the inequalities above that

which implies (9).

Finally assume that for some \(k\in \mathbb {N}\),

By the facts that \({\mathbf {E}}[Y_h(\tau )]=1\) and a.s. \(\lim _{h\rightarrow \infty }Y_h(\tau )=0\), we can pick some \(k'\in \mathbb {N}\) such that \(k'>k\) and there exists some \(h\in \mathbb {N}\) with \({\mathbf {P}}[Y_h(\tau )=k']>0\). So (9) holds for \(k'\). However as in (8), by considering the first k trees as a whole and the next \(k'-k\) ones separately we also have

which implies that

a contradiction to (9) for \(k'\).

For the second statement, again by the argument in the proof of Theorem 2.1 and the assumptions, we have for \(k=Y_h(\mathbf {s})\) and \(r'\) in the assumptions,

noticing that in the middle term we use \({\mathbf {P}}^{(k)}[A>n-r']\) instead of \({\mathbf {P}}^{(k)}[A>n]\). This implies that for \(k=Y_h(\mathbf {s})\) and \(r'\),

Then by the first statement we have for \(r'\),

which implies that for any \(r\in \mathbb {N}\),

\(\square \)

For the probability \({\mathbf {P}}[A=n]\), it is not possible to obtain a result as general as Theorem 2.7. However for some specific functions, it is possible to improve the inequality (6) in Theorem 2.3 to an equality. Recall Theorem 1 of [18] and Theorem 1 of [7]. The following proposition might be regarded as a generalization of those two results.

Proposition 2.8

Assume that p is critical. Then for any \(k\in \mathbb {N}\),

Assume additionally that p has bounded support. Then for any \(k\in \mathbb {N}\),

where the limit is understood along the infinite sub-sequence \(\{n: {\mathbf {P}}[W=n] > 0\}\).

Proof

The first statement is immediate from Theorem 2.7.

For the second statement, first recall Lemma 2.5 and the fact that W satisfies the additivity property (5) with \(B\equiv 0\). As in the proof of Theorem 2.7, we have for any \(k\in \mathbb {N}\), if there exists some \(h\in \mathbb {N}\) with \({\mathbf {P}}[Y_h(\tau )=k]>0\), then

Now assume that for some \(k\in \mathbb {N}\),

As in the proof of Theorem 2.7, there exists some \(k'\in \mathbb {N}\) such that \(k'>k\) and (10) holds for \(k'\). However as in the proofs of Lemma 2.5 and Theorem 2.7, by considering the first k trees as a whole and the next \(k'-k\) ones separately we also have

which implies that

a contradiction to (10) for \(k'\). This completes the proof. \(\square \)

We mention that when the offspring distribution has unbounded support, it seems to be much more difficult to study the quantity \({\mathbf {P}}^{(k)}[W=n]\), compared to the quantity \({\mathbf {P}}^{(k)}[W>n]\).

3 Preliminaries of Lévy Trees

This section is mainly extracted from [8]. For more details refer to Sects. 1.2, 3.1, and 3.3 of [8].

3.1 Branching Mechanisms of Lévy Trees

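We consider a Lévy tree with the branching mechanism

\[\Phi (\lambda )=\alpha \lambda +\beta \lambda ^2+\int _{(0,\infty )}\pi (d\theta )\,(e^{-\lambda \theta }-1+\lambda \theta ),\quad \lambda \ge 0, \tag{11}\]

where \(\alpha \ge 0\), \(\beta \ge 0\), and the Lévy measure \(\pi \) is a \(\sigma \)-finite measure on \((0,\infty )\) satisfying \(\int _{(0,\infty )}\pi (d\theta )(\theta \wedge \theta ^2)<\infty \). When we talk about height processes of Lévy trees (see Sect. 3.3), we always assume the condition

\[\int _1^\infty \frac{d\lambda }{\Phi (\lambda )}<\infty , \tag{12}\]

which implies that

\[\beta >0\quad \text{or}\quad \int _{(0,1)}\theta \,\pi (d\theta )=\infty . \tag{13}\]

We then consider a spectrally positive Lévy process \(X=(X_t,t\ge 0)\) with the Laplace exponent \(-\Phi \), that is, for \(\lambda \ge 0\),

\[{\mathbf {E}}[\exp (-\lambda X_t)]=\exp (t\Phi (\lambda )).\]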

We also consider a bivariate subordinator \((U,V)=((U_t,V_t),t\ge 0)\), that is, a \([0,\infty )\times [0,\infty )\)-valued Lévy process such that \((U_0,V_0)=(0,0)\) (see, e.g., page 162 of [15]). Define \(\Phi (p,q)\) by

Then the distribution of (U, V) is characterized by the Laplace exponent \(\Phi (p,q)\): For \(p,q\ge 0\),

3.2 The Excursion Representation of CB Processes

We also consider a continuous-state branching process (CB process) \(Y=(Y_t,t\ge 0)\) with the branching mechanism \(\Phi \) given by (11). The branching mechanism and the corresponding CB process and Lévy tree are called subcritical if \(\alpha >0\) and critical if \(\alpha =0\). Let \({\mathbf {P}}_x[Y\in \cdot ]\) be the distribution of Y started from x, and \({\mathbf {E}}_x\) the corresponding expectation. It is well known that the distribution of Y can be specified by \(\Phi \) as follows: For \(\lambda \ge 0\),

\[{\mathbf {E}}_x\left[ \exp (-\lambda Y_t)\right] =\exp (-xv_t(\lambda )),\]

where \(v_t(\lambda )\) is the unique nonnegative solution of

\[v_t(\lambda )+\int _0^t\Phi (v_s(\lambda ))\,ds=\lambda .\]

The excursion representation of CB processes is crucial in this paper. Given a CB process Y with the branching mechanism \(\Phi \), we can define an excursion measure N and reconstruct Y from its excursions. Let \({\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\) be the standard Skorohod space. Let \({\mathbb {D}}_0([0,\infty ),{\mathbb {R}}_+)\) be the subspace of \({\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\) consisting of all paths that start from 0 and stop upon hitting 0. Under the condition (13), we can define a \(\sigma \)-finite measure N on \({\mathbb {D}}_0([0,\infty ),{\mathbb {R}}_+)\) such that:

1. \(N(\{{\mathbf {0}}\})=0\), where \({\mathbf {0}}\) denotes the trivial path in \({\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\), that is, \({\mathbf {0}}_t=0\) for any \(t\ge 0\).

2. Let Z be a Poisson random measure on \({\mathbb {D}}_0([0,\infty ),{\mathbb {R}}_+)\) with intensity xN. Define the process \(e=(e_t,t\ge 0)\) by \(e_0= x\) and

\[e_t=\int _{{\mathbb {D}}_0([0,\infty ),{\mathbb {R}}_+)}\omega _t\,Z(d\omega ),\quad t>0.\]

Then e is a CB process with the branching mechanism \(\Phi \).
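To make the Laplace functional \(v_t(\lambda )\) of this subsection concrete, the following minimal sketch treats the quadratic critical case \(\Phi (\lambda )=\beta \lambda ^2\) (so \(\alpha =0\) and \(\pi =0\)). It assumes the standard characterization \(\partial _t v_t(\lambda )=-\Phi (v_t(\lambda ))\), \(v_0(\lambda )=\lambda \), whose solution in this case is \(v_t(\lambda )=\lambda /(1+\beta \lambda t)\). The function names `phi`, `v_numeric`, and `v_closed_form` are hypothetical and chosen only for this illustration.

```python
def phi(lam: float, beta: float = 1.0) -> float:
    """Quadratic critical branching mechanism Phi(lambda) = beta * lambda^2 (assumed example)."""
    return beta * lam * lam


def v_numeric(t: float, lam: float, beta: float = 1.0, steps: int = 10_000) -> float:
    """Integrate dv/ds = -Phi(v), v(0) = lam, up to time t with the classical RK4 scheme."""
    h = t / steps
    v = lam
    for _ in range(steps):
        k1 = -phi(v, beta)
        k2 = -phi(v + 0.5 * h * k1, beta)
        k3 = -phi(v + 0.5 * h * k2, beta)
        k4 = -phi(v + h * k3, beta)
        v += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return v


def v_closed_form(t: float, lam: float, beta: float = 1.0) -> float:
    """Closed-form solution lam / (1 + beta * lam * t) of the same equation."""
    return lam / (1.0 + beta * lam * t)


if __name__ == "__main__":
    # Compare the numerical and closed-form values for one choice of (t, lambda).
    print(v_numeric(2.0, 3.0), v_closed_form(2.0, 3.0))
```

In this example \(v_t(\lambda )\) behaves like \(\lambda \) for small \(\lambda \), which reflects the criticality \({\mathbf {E}}_x[Y_t]=x\).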

3.3 Height Processes and the Branching Property of Lévy Trees

The height process \(H=(H_t,t\ge 0)\) is introduced by Le Gall and Le Jan [16] and further developed by Duquesne and Le Gall [10], to code the complete genealogy of Lévy trees. It is obtained as a functional of the spectrally positive Lévy process X with the Laplace exponent \(-\Phi \). Intuitively, for every \(t \ge 0\), \(H_t\) “measures” in a local time sense the size of the set \(\{s \le t: X_s=\inf _{r\in [s,t]}X_r\}\). It is known that the condition (12) holds if and only if the height process H has a continuous modification. From now on, we only consider this modification, still denoted by H. For any \(h\ge 0\), the local time \(L^h=(L^h_t,t\ge 0)\) of H at height h can be defined, which is continuous and increasing. Intuitively, the measure induced by \(L^h\) is distributed “uniformly” on all “particles” of the Lévy trees at height h. Note that all processes introduced so far are defined under the underlying probability \({\mathbf {P}}\), so that all these processes correspond to a Poisson collection of Lévy trees.

In order to talk about a single Lévy tree, a certain excursion measure \({\mathbf {N}}\) needs to be introduced. Recall the spectrally positive Lévy process \(X=(X_t,t\ge 0)\) with the Laplace exponent \(-\Phi \), and its infimum process \(I=(I_t,t\ge 0)\) defined by \(I_t=\inf _{s\le t}X_s\). When the condition (13) holds, the point 0 is regular and instantaneous for the strong Markov process \(X-I\). We denote by \({\mathbf {N}}\) the corresponding excursion measure, and by \(\zeta \) the duration of the excursion. We also denote by X the canonical process under \({\mathbf {N}}\). Note that normally we need to specify the normalization of \({\mathbf {N}}\), but for our purposes in this paper this normalization always cancels out.

In general, H is not Markovian under \({\mathbf {P}}\), but \(H_t\) only depends on the values of \(X-I\), on the excursion interval of \(X-I\) away from 0 that straddles t. Also it can be checked that a.s. for all t, \(H_t>0\) if and only if \(X_t-I_t>0\). So under \({\mathbf {N}}\) we may define H as a functional of X (recall that X is the canonical process under \({\mathbf {N}}\)). Consequently we may also define the local time \(L^h=(L^h_t,0\le t\le \zeta )\) of H at any height \(h > 0\), under the excursion measure \({\mathbf {N}}\). Note that it is then standard to define the Lévy tree with the branching mechanism \(\Phi \) as a random metric space \({\mathcal {T}}(\Phi )\) from the height process H.

The branching property of Lévy trees is crucial for us in this paper. For any \(h>0\), define the conditional probability \({\mathbf {N}}_{(h)}\) as the distribution of the canonical process X conditioned on having height greater than h, that is,

\[{\mathbf {N}}_{(h)}[\cdot ]={\mathbf {N}}\left[ \,\cdot \,\Big |\,\sup _{0\le s\le \zeta }H_s>h\right] .\]

Then intuitively the branching property says that under \({\mathbf {N}}_{(h)}\) and conditional on all information below height h, all the subtrees above height h are distributed as i.i.d. copies of the complete Lévy tree under \({\mathbf {N}}\), and the roots of all these subtrees are distributed as a Poisson random measure whose intensity is the measure induced by \(L^h=(L^h_t,0\le t\le \zeta )\). Define \(L^0_\zeta =0\). Then the process \(L_\zeta =(L^h_\zeta ,h\ge 0)\) has an rcll modification (still denoted by \(L_\zeta \)) and it is well known that

Consequently, from the excursion representation of CB processes, we see that under \({\mathbf {N}}_{(h)}\) and conditional on all information below height h, the real-valued process \(\left( L^{h+h'}_\zeta ,h'\in [0,\infty )\right) \) is distributed as a CB process with initial value \(L^h_\zeta \). For a rigorous presentation of this branching property, refer to Theorem 4.2 of [9] and Proposition 3.1 of [8]. Note that Theorem 4.2 of [9] is formulated in the real tree framework, while Proposition 3.1 of [8] is formulated in the height process framework. Although the former is more intuitive, we will mainly rely on the latter, since in this paper we use the height process framework.

3.4 Continuum Immortal Trees

Recall (14) and (15). Let H be the height process associated with the Lévy process X with the Laplace exponent \(-\Phi \), and let \((X',H')\) be a copy of (X, H). Let \(I=(I_t,t\ge 0)\) and \(I'=(I'_t,t\ge 0)\) be the infimum processes of X and \(X'\), respectively. Let (U, V) be a bivariate subordinator with the Laplace exponent \(\Phi (p,q)\). Let \(U^{-1}=(U^{-1}_t,t\ge 0)\) and \(V^{-1}=(V^{-1}_t,t\ge 0)\) be the right-continuous inverses of U and V, respectively. Assume that (X, H), \((X',H')\), and (U, V) are independent. We define \(\overleftarrow{H}\) and \(\overrightarrow{H}\) by

The processes \(\overleftarrow{H}\) and \(\overrightarrow{H}\) are called, respectively, the left and right height processes of the continuum immortal tree with the branching mechanism \(\Phi \). Then one can proceed to define the continuum immortal tree as a random metric space \({\mathcal {T}}^*(\Phi )\) from the height processes \(\overleftarrow{H}\) and \(\overrightarrow{H}\). For details refer to page 103 of [8].

For \(\omega =(\omega _t,t\ge 0)\in {\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\), define \(\zeta (\omega )=\inf \{t>0:\omega _t=0\}\) with the convention that \(\inf \emptyset =\infty \). Denote by \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\) the subspace of all excursions in \({\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\), that is, \(\omega \in {\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\) if and only if \(\omega \in {\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\), \(\zeta (\omega )\in (0,\infty )\), \(\omega _t>0\) when \(t\in (0,\zeta )\), and \(\omega _t=0\) otherwise. For \(\omega =(\omega _t,t\ge 0)\in {\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\) and \(h>0\), define \(r_h(\omega )=((r_h(\omega ))_s,s\ge 0)\in {\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\) by \((r_h(\omega ))_s=\omega _{s_h}\), where

\[s_h=\inf \left\{ t\ge 0:\int _0^t da\,{\mathbf {1}}\{\omega _a\le h\}>s\right\} \]

when \(\int _0^{\infty } da{\mathbf {1}}\{\omega _a\le h\}> s\), and \(\omega _{s_h}=h\) when \(\int _0^{\infty } da{\mathbf {1}}\{\omega _a\le h\}\le s\).

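Introduce the last times under level h for the left and the right height processes:

\[\overleftarrow{\sigma }_h=\sup \{t\ge 0:\overleftarrow{H}_t\le h\}\quad \text {and}\quad \overrightarrow{\sigma }_h=\sup \{t\ge 0:\overrightarrow{H}_t\le h\}.\]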

It is easy to see that \(\overleftarrow{\sigma }_h<\infty \) and \(\overrightarrow{\sigma }_h<\infty \) a.s. Define \(\overleftrightarrow {H}^{h}=(\overleftrightarrow {H}^{h}_t, t\ge 0)\) by \(\overleftrightarrow {H}^{h}_t=\overleftarrow{H}_t\) when \(t\in [0,\overleftarrow{\sigma }_h]\), \(\overleftrightarrow {H}^{h}_t=\overrightarrow{H}_{(\overleftarrow{\sigma }_h+\overrightarrow{\sigma }_h-t)}\) when \(t\in [\overleftarrow{\sigma }_h,\overleftarrow{\sigma }_h+\overrightarrow{\sigma }_h]\), and \(\overleftrightarrow {H}^{h}_t=0\) when \(t>\overleftarrow{\sigma }_h+\overrightarrow{\sigma }_h\). To lighten the notation, we write \(r_h\left( \overleftrightarrow {H}\right) \) for \(r_h\left( \overleftrightarrow {H}^h\right) \). Now let us recall Lemma 3.2 of [8], which relates the distribution of a Lévy tree to that of the corresponding continuum immortal tree.

Lemma 3.1

For any nonnegative measurable functions F and G on \({\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\), and any \(h>0\),

For any nonnegative measurable function F on \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\) and any \(h>0\),

Proof

The first statement is from Lemma 3.2 of [8]. Then we have

Recall that we have constructed \(r_h\left( \overleftrightarrow {H}\right) \) from \(r_h\left( \overleftarrow{H}_{\cdot \wedge \overleftarrow{\sigma }_h}\right) \) and \(r_h\left( \overrightarrow{H}_{\cdot \wedge \overrightarrow{\sigma }_h}\right) \). By Theorem 4.2(i) of [9], \({\mathbf {N}}\)-a.e., for \(d L^h_{\cdot }\)-a.e. s, we can construct \(r_h(H)\) from \(r_h(H_{\cdot \wedge s})\) and \(r_h\left( H_{(\zeta -\cdot )\wedge (\zeta -s)}\right) \) in exactly the same way. So the second statement follows from the above formula. \(\square \)

Note that taking \(F=G\equiv 1\) in Lemma 3.1 gives

4 Local Convergence of Critical Lévy Trees and CB Processes

In this section, first we study the local convergence of conditioned critical Lévy trees. Then we derive a very general ratio limit property for certain measurable functions of critical Lévy trees. Finally we treat the local convergence of conditioned critical CB processes. Recall that when we talk about height processes of Lévy trees, we always assume the condition (12).

4.1 Local Convergence of Critical Lévy Trees

Let A be a nonnegative measurable function defined on the excursion space \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\). For an excursion \(\omega \in {\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\), write \(\omega _{(h)}=(\omega _{(h),i},i\in {\mathcal {I}}_{(h)})\) for the collection of all sub-excursions above height h. We introduce the following monotonicity property of A:

\[A(\omega )\ge \sup _{i\in {\mathcal {I}}_{(h)}}A(\omega _{(h),i})\quad \text {for any } h>0. \tag{17}\]

Suppose that \(\omega \in {\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\) codes a real tree, then the monotonicity property (17) says that for any \(h>0\) the value of A on the whole tree is not less than the value of A on any subtree above height h.

Define \(v_r={\mathbf {N}}[A (H)>r]\in [0,\infty ]\) and \({\mathbf {N}}_r[\cdot ]={\mathbf {N}}[\cdot |A (H)>r]\) when \(v_r\in (0,\infty )\). The following theorem asserts that if the monotonicity property (17) holds for A, then under the conditional probability \({\mathbf {N}}_r\), the Lévy tree \({\mathcal {T}}(\Phi )\) converges locally to the continuum immortal tree \({\mathcal {T}}^*(\Phi )\), as \(r\rightarrow \infty \). More specifically, we see that combined with Lemma 2.3 of [9], the following theorem says that for any \(h>0\), under the conditional probability \({\mathbf {N}}_r\), the subtree of the Lévy tree \({\mathcal {T}}(\Phi )\) below height h converges as \(r\rightarrow \infty \) to the subtree of the continuum immortal tree \({\mathcal {T}}^*(\Phi )\) below height h, with respect to the (pointed) Gromov–Hausdorff distance on the space of all equivalence classes of rooted compact real trees.

Theorem 4.1

Assume that \(\Phi \) is critical and \(v_r\in (0,\infty )\) for large enough r. If the function A satisfies the monotonicity property (17), then for any \(h>0\), as \(r\rightarrow \infty \),

weakly in \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}})\). Also as \(r\rightarrow \infty \),

weakly in \({\mathbb {C}}([0,\infty ),{\mathbb {R}}^2)\).

Proof

For the first statement, it suffices to prove the following convergence for any bounded measurable function F on \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\),

Clearly we may assume that \(0\le F\le 1\). Then by Lemma 3.1, it suffices to show that

To prove (19), first recall that if the value of A on a subtree above height h is greater than r, then the value of A on the whole tree is greater than r, by the monotonicity property (17). Then recall the branching property from Sect. 3.3 (more precisely, we use Proposition 3.1 of [8]) and note that under \({\mathbf {N}}_{(h)}\) and conditional on all information below height h, the number of subtrees above height h having the value of A greater than r is distributed as a Poisson random variable with parameter

So the probability that the value of A on at least one subtree above height h is greater than r is

So the monotonicity property and the branching property imply that

which further implies that

Thus we get

where the second inequality follows from Fatou’s lemma.

Now recall that \(0\le F\le 1\). Then we apply the above inequality to \(1-F\) to get

which implies that

since \({\mathbf {N}}[L^h_\zeta ]=1\) by (16). Thus we have proved (19).
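For orientation, the chain of estimates used above to prove (19) can be summarized as follows. This is only a sketch reconstructed from the surrounding text. It assumes that (19) reads \(\lim _{r\rightarrow \infty }{\mathbf {N}}_r[F(r_h(H))]={\mathbf {N}}[L^h_\zeta F(r_h(H))]\), that the Poisson parameter in the branching property is \(v_rL^h_\zeta \), and that \(v_r\rightarrow 0\) as \(r\rightarrow \infty \) (which holds when A is finite-valued). The monotonicity property and the branching property give

\[{\mathbf {N}}_r\left[ F(r_h(H))\right] \ge \frac{1}{v_r}{\mathbf {N}}\left[ F(r_h(H))\left( 1-e^{-v_rL^h_\zeta }\right) \right] ,\]

and since \((1-e^{-va})/v\rightarrow a\) as \(v\rightarrow 0\), Fatou's lemma yields

\[\liminf _{r\rightarrow \infty }{\mathbf {N}}_r\left[ F(r_h(H))\right] \ge {\mathbf {N}}\left[ L^h_\zeta F(r_h(H))\right] .\]

Applying the same bound to \(1-F\) and using \({\mathbf {N}}[L^h_\zeta ]=1\) gives the matching upper bound

\[\limsup _{r\rightarrow \infty }{\mathbf {N}}_r\left[ F(r_h(H))\right] \le 1-{\mathbf {N}}\left[ L^h_\zeta \left( 1-F(r_h(H))\right) \right] ={\mathbf {N}}\left[ L^h_\zeta F(r_h(H))\right] .\]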

For the second statement we introduce \(\tau _h(\omega )=\inf \{s\ge 0: \omega (s)=h\}\) for \(\omega \in {\mathbb {C}}([0,\infty ),{\mathbb {R}}_+)\). To simplify notations we set

To get the second statement, we only have to prove the following convergence for any bounded measurable function F on \({\mathbb {C}}([0,\infty ),{\mathbb {R}}^2)\),

since it implies that for any \(t > 0\),

Finally notice that (18) implies (20). \(\square \)

Next we will apply Theorem 4.1 to three specific conditionings: the conditioning of large width, the conditioning of large total mass, and the conditioning of large maximal degree. We first introduce the conditioning of large width. Under \({\mathbf {N}}\), define the width of the Lévy tree H by \(W(H)=\sup _{h\ge 0}L^h_\zeta \). The conditioning of large width is \({\mathbf {N}}[\cdot |W(H)>r]\), which is well defined when \({\mathbf {N}}[W (H)>r]\in (0,\infty )\). Next we consider the conditioning of large total mass. Under \({\mathbf {N}}\), define the total mass of the Lévy tree H by \(\sigma (H)=\int _0^\infty L^h_\zeta dh\). The conditioning of large total mass is \({\mathbf {N}}[\cdot |\sigma (H)>r]\), which is well defined when \({\mathbf {N}}[\sigma (H)>r]\in (0,\infty )\).

Finally we introduce the conditioning of large maximal degree. Recall from Sect. 3.3 that \({\mathbf {N}}\) is the excursion measure of the strong Markov process \(X-I\) at zero. Also recall that we write X for the canonical process under \({\mathbf {N}}\), which is rcll. Moreover, recall from Theorem 4.6 of [9] that Lévy trees have two types of nodes (i.e., branching points), binary nodes (i.e., vertices of degree 3) and infinite nodes (i.e., vertices of infinite degree). Infinite nodes correspond to the jumps of the canonical process X under \({\mathbf {N}}\), and the sizes of these jumps correspond to the masses of those infinite nodes. We call the mass of a node its degree. Then define the maximal degree of the Lévy tree H by \(M(H)=\sup _{0\le s\le \zeta }\Delta X_s\). Note that under \({\mathbf {N}}\) we can write \(\sup _{0\le s\le \zeta }\Delta X_s\) as a measurable function of H, since jumps of X correspond to jumps of the process \((L^h_\zeta ,h\ge 0)\), which is a measurable functional of H. The conditioning of large maximal degree is \({\mathbf {N}}[\cdot |M(H)>r]\), which is well defined when \({\mathbf {N}}[M (H)>r]\in (0,\infty )\).

Since the monotonicity property (17) is trivial to check, we then only have to check that \(v_r\in (0,\infty )\) for large enough r. In the following lemma, we only assume (13). Note that to define W, \(\sigma \), and M, we only need the real-valued process \((L^h_\zeta ,h\ge 0)\), which when (13) holds can be defined by the excursion representation of CB processes, so the introduction of the height process H and its local times is not needed. Also note that the measurable functions W, \(\sigma \), or M can be similarly defined for CB processes. We write \({\mathbf {P}}_x\) for probabilities of CB processes with initial value x.

Lemma 4.2

Assume (13). Then for any \(x>0\), if \(\alpha \ge 0\), then \({\mathbf {P}}_x[W>r]>0\) and \(N[W>r]\in (0,\infty )\) for any \(r\in (0,\infty )\), and \({\mathbf {P}}_x[\sigma>r]>0\) and \(N[\sigma >r]\in (0,\infty )\) for any \(r\in (0,\infty )\). Again for any \(x>0\), if \(\alpha \ge 0\) and the Lévy measure \(\pi \) has unbounded support, then \({\mathbf {P}}_x[M>r]>0\) and \(N[M>r]\in (0,\infty )\) for any \(r\in (0,\infty )\).

Proof

For the width, first we argue that for any \(x\in (0,\infty )\) and \(r\in (0,\infty )\),

When \(\alpha \ge 0\), for the CB process Y we may define \(Y_\infty =0\) and regard Y as a supermartingale over the time interval \([0,\infty ]\). Then by optional sampling, it is easy to get the above inequality. Now by the excursion representation of CB processes and the above inequality, we get

which implies that \(N[W>r]<\infty \) for any \(r>0\). Next by Corollary 12.9 of [15] and the fact that scale functions are strictly increasing, we see that \({\mathbf {P}}_x[W>r]>0\) for any \(x>0\) and \(r>0\). Finally by the Markov property of the excursions under N, for any \(r>0\) and \(h>0\) we have

since \(N[\omega _h>0]>0\) for any \(h>0\).
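The argument for the width can be sketched in formulas as follows. This is a reconstruction under stated assumptions, not a verbatim restoration: we assume that the inequality obtained by optional sampling is the maximal inequality \({\mathbf {P}}_x[W>r]\le x/r\) for the nonnegative supermartingale Y. In the excursion representation, if some excursion has width greater than r, then so does the CB process, hence

\[1-e^{-xN[W>r]}\le {\mathbf {P}}_x[W>r]\le \frac{x}{r},\]

and dividing by x and letting \(x\rightarrow 0\) gives

\[N[W>r]\le \frac{1}{r}<\infty .\]

For the lower bound, the Markov property of the excursions at time h gives

\[N[W>r]\ge N\left[ {\mathbf {1}}\{\omega _h>0\}\,{\mathbf {P}}_{\omega _h}[W>r]\right] >0.\]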

For the total mass, denote by \(\Phi ^{-1}\) the inverse function of \(\Phi \), and recall that for \(\lambda >0\), \(N[1-\exp (-\lambda \sigma )]=\Phi ^{-1}(\lambda )\) (see, e.g., the beginning of Sect. 3.2.2 in [10]), which implies that \(N[\sigma >r]<\infty \) for any \(r>0\). Also, clearly \(N[\sigma>r]>0\) for some \(r>0\), so by the excursion representation of CB processes we see that \({\mathbf {P}}_x[\sigma>r]>0\) for any \(r>0\). Finally by the Markov property of the excursions under N, for any \(r>0\) and \(h>0\), we have

Finally the statement on the maximal degree follows from Corollary 4.2 of [12], the excursion representation of CB processes, and the simple observation that different excursions do not jump at the same time, \({\mathbf {P}}_x\) a.s. \(\square \)
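As a simple illustration of the statement on the total mass, consider the quadratic case \(\Phi (\lambda )=\lambda ^2\). This example is an added illustration and is not part of the proof. It uses only the identity \(N[1-\exp (-\lambda \sigma )]=\Phi ^{-1}(\lambda )\) recalled above. Here \(\Phi ^{-1}(\lambda )=\sqrt{\lambda }\), and the measure on \((0,\infty )\) with this Laplace exponent is

\[N[\sigma \in ds]=\frac{ds}{2\sqrt{\pi }\,s^{3/2}},\qquad \text {since}\quad \int _0^\infty \left( 1-e^{-\lambda s}\right) \frac{ds}{2\sqrt{\pi }\,s^{3/2}}=\sqrt{\lambda },\]

so that

\[N[\sigma >r]=\int _r^\infty \frac{ds}{2\sqrt{\pi }\,s^{3/2}}=\frac{1}{\sqrt{\pi r}}\in (0,\infty )\quad \text {for any } r>0.\]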

Now Theorem 4.1 and Lemma 4.2 immediately imply the local convergence of critical Lévy trees, under any of the three conditionings we introduced above.

Corollary 4.3

Assume that \(\Phi \) is critical and \(h>0\). Then for \(A=W\) and \(A=\sigma \), respectively, as \(r\rightarrow \infty \),

weakly in \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}})\). The above convergence also holds for \(A=M\) under the additional assumption that the Lévy measure \(\pi \) has unbounded support.

4.2 A General Ratio Limit Property

Denote by \({\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\) the product space of countably infinitely many copies of \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\). Let A be a nonnegative measurable function defined on \({\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\), which is invariant under permutation. For any \(\omega ,\omega '\in {\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\), we write \(\omega '\le \omega \) if \(\omega =(\omega _1,\omega _2,\ldots )\), \(\omega '=(\omega '_1,\omega '_2,\ldots )\), and \(\omega '_n \le \omega _n\) for any \(n\ge 1\). For any \(\omega \in {\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\), we also write \(\omega _{(h)}\in {\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\) for the collection of sub-excursions of \(\omega \) above height h. We introduce the following monotonicity property of A:

Let \(N^{(x)}\) be a Poisson random measure on \({\mathbb {C}}_0([0,\infty ),{\mathbb {R}}_+)\) with intensity \(x{\mathbf {N}}[H\in \cdot ]\). Write \(\omega ^{(x)}=(\omega ^{(x),i},i\in {\mathcal {I}}^{(x)})\) for the collection of all excursions in \(N^{(x)}\). Also write \({\mathbf {N}}[A>r]\) for \({\mathbf {N}}[A(H)>r]\), and \({\mathbf {P}}^{(x)}[A>r]\) for \({\mathbf {P}}[A(\omega ^{(x)})>r]\).

Theorem 4.4

Assume that \(\Phi \) is critical, \({\mathbf {N}}[A>r]\in (0,\infty )\) for large enough r, and A satisfies the monotonicity property (21). Then for any \(x>0\),

Proof

First as in the proof of Theorem 4.1, for any \(x>0\),

Also note that as in the proof of Theorem 4.1, for any Borel subset B of \({\mathbb {R}}_+\),

Now recall a basic property of Poisson random measures, which asserts that for \(0<x<y\), if we add a Poisson random measure with intensity \(x{\mathbf {N}}\) to another independent Poisson random measure with intensity \((y-x){\mathbf {N}}\), we get a Poisson random measure with intensity \(y{\mathbf {N}}\). So

which implies that

Suppose that we can pick some \(x'>0\), such that for some \(\delta >0\),

Recall from Lemma 4.2 that \(W=\sup _{h>0}L^h_\zeta \) has unbounded support, so we can pick some \(h>0\) and \(x''>x'\), such that for any \(\varepsilon >0\),

Fix a strictly decreasing sequence \((\varepsilon _n)_{n \ge 1}\) such that \(\varepsilon _1<x''-x'\) and \(\lim _{n\rightarrow \infty }\varepsilon _n=0\). For any \(n \ge 1\), we know from (23) that

Then pick an increasing sequence \((r_k)_{k \ge 1}\) (may depend on n) such that \(\lim _{k\rightarrow \infty }r_k=\infty \) and

Clearly for any \(y>x''-\varepsilon _n\),

So we get

Since trivially

by (22) we can derive that

thus we get \(x''-\varepsilon _n+\delta /2\le x''+\varepsilon _n\) by (24), that is, \(\delta \le 4\varepsilon _n\) for any \(n\ge 1\), a contradiction to \(\delta >0\). This completes the proof. \(\square \)
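The superposition property of Poisson random measures used in the proof above can be checked numerically at the level of counts. The following minimal sketch assumes a fixed measurable set B with finite mass \(m={\mathbf {N}}(B)\), so that the number of atoms in B is Poisson with mean \(xm\), \((y-x)m\), or \(ym\), respectively. The function names are hypothetical and the numerical values are arbitrary.

```python
import math


def poisson_pmf(k: int, mean: float) -> float:
    """Probability that a Poisson(mean) random variable equals k."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


def superposed_pmf(k: int, mean1: float, mean2: float) -> float:
    """Probability that the sum of independent Poisson(mean1) and Poisson(mean2) equals k."""
    return sum(poisson_pmf(j, mean1) * poisson_pmf(k - j, mean2) for j in range(k + 1))


def prob_at_least_one(mean: float) -> float:
    """Probability that a Poisson(mean) count is positive, i.e. 1 - exp(-mean)."""
    return -math.expm1(-mean)


if __name__ == "__main__":
    x, y, m = 0.7, 2.0, 1.3  # arbitrary illustrative values with 0 < x < y
    for k in range(5):
        # The two columns agree up to floating-point error.
        print(k, superposed_pmf(k, x * m, (y - x) * m), poisson_pmf(k, y * m))
```

The function `prob_at_least_one` corresponds to the probability \(1-e^{-x{\mathbf {N}}(B)}\) that at least one excursion of the Poisson collection falls in B.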

In the next subsection, more specifically, in Proposition 4.5, we will apply Theorem 4.4 to both the width and the total mass of Lévy forests. Here we only mention that we do not need to apply Theorem 4.4 to the maximal degree of Lévy forests, since that case is trivial. Assume that for any \(\omega ^\infty =(\omega _1,\omega _2,\ldots )\in {\mathbb {C}}_0^\infty ([0,\infty ),{\mathbb {R}}_+)\), the measurable function A has the property that

\[A(\omega ^\infty )=\sup _{n\ge 1}A(\omega _n). \tag{25}\]

Then clearly

which implies that

The maximal degree and the height of Lévy forests are two examples of measurable functions satisfying (25). The situation is similar for the maximal jump and the height of CB processes: in the excursion representation of CB processes, different excursions of any CB process do not jump at the same time.
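The trivial case discussed above can be made explicit. The following is a sketch under the assumption that property (25) states that the value of A on a collection of excursions equals the supremum of its values on the individual excursions, and that \({\mathbf {N}}[A>r]\rightarrow 0\) as \(r\rightarrow \infty \). Under this assumption, \(A(\omega ^{(x)})>r\) if and only if the Poisson random measure \(N^{(x)}\) has at least one atom in \(\{A>r\}\), so

\[{\mathbf {P}}^{(x)}[A>r]=1-\exp \left( -x{\mathbf {N}}[A>r]\right) ,\]

and therefore

\[\lim _{r\rightarrow \infty }\frac{{\mathbf {P}}^{(x)}[A>r]}{{\mathbf {N}}[A>r]}=\lim _{r\rightarrow \infty }\frac{1-\exp \left( -x{\mathbf {N}}[A>r]\right) }{{\mathbf {N}}[A>r]}=x.\]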

4.3 Local Convergence of Critical CB Processes

In this subsection, we first discuss the corresponding result of Theorem 4.4 in the setting of CB processes and then use it to treat the local convergence of critical CB processes.

Let A be a nonnegative measurable function defined on \({\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\), the standard Skorohod space. For any \(\omega \in {\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\), we write \(\omega _{(h)}\in {\mathbb {D}}([0,\infty ),{\mathbb {R}}_+)\) for the sub-path of \(\omega \) after time h. We introduce the following monotonicity property of A:

\[A(\omega )\ge A(\omega _{(h)})\quad \text {for any } h>0. \tag{26}\]

Let \(Y=(Y_t,t\ge 0)\) be a CB process with the branching mechanism \(\Phi \) and write \(({\mathcal {F}}_t,t\ge 0)\) for the filtration induced by Y. Recall from Sect. 3.2 that we use \({\mathbf {P}}_x[Y\in \cdot ]\) to denote the distribution of Y with \(Y_0=x\) and N the excursion measure.

The following result is just the corresponding version of Theorem 4.4 in the setting of CB processes, plus two applications. The first application is about the width of CB processes and the scale functions of Lévy processes. For the definition of the scale function \(W^{(0)}=(W^{(0)}(r),r\ge 0)\), see, e.g., Sect. 8.2 of [15]. Note that we use W to denote the width and \(W^{(0)}\) to denote the scale function. The second application is about the total mass of CB processes. In the first application, it might be interesting to note that the convergence of the scale function \(W^{(0)}\) below also has an intuitive meaning for the first passage times of Lévy processes, see (8.11) in [15].

Proposition 4.5

Assume that \(\Phi \) is critical and satisfies (13), \(N[A>r]\in (0,\infty )\) for large enough r, and A satisfies the monotonicity property (26). Then for any \(x>0\),

where we write \({\mathbf {P}}_x[A>r]\) for \({\mathbf {P}}_x[A(Y)>r]\). In particular, for any \(x>0\),

Expressed in terms of the scale function \(W^{(0)}=(W^{(0)}(r),r\ge 0)\), the above convergence means that for any \(x>0\),

Also for any \(x>0\),

Proof

For the first statement, note that Theorem 4.4 is about height processes of Lévy trees, so we have to assume the condition (12) to get continuous height processes. However the proof there does not rely on any specific property of height processes. In fact, if we are interested only in the real-valued process \((L^h_\zeta ,h\ge 0)\), not in the height process and its local times, then as long as the branching mechanism satisfies the weaker condition (13), we can make the same proof work by using the excursion representation of CB processes. The first statement now follows from the fact that

The other statements follow immediately from the first statement, Lemma 4.2 in the present paper, and Corollary 12.9 of [15]. \(\square \)
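As a concrete check of the linear dependence on the initial value, consider the quadratic case \(\Phi (\lambda )=\lambda ^2\), that is, \(\alpha =0\), \(\beta =1\), and \(\pi =0\). This worked example is an added illustration. It assumes the standard identity \(\int _0^\infty e^{-\lambda r}W^{(0)}(r)\,dr=1/\Phi (\lambda )\) for the scale function, and that Corollary 12.9 of [15] gives \({\mathbf {P}}_x[\sup _{s\ge 0}Y_s\le r]=W^{(0)}(r-x)/W^{(0)}(r)\) for \(0<x\le r\). Then

\[W^{(0)}(r)=r,\qquad {\mathbf {P}}_x[W>r]=1-\frac{W^{(0)}(r-x)}{W^{(0)}(r)}=\frac{x}{r},\qquad 0<x<r,\]

so that

\[\frac{{\mathbf {P}}_x[W>r]}{{\mathbf {P}}_y[W>r]}=\frac{x}{y}\quad \text {for all } r>\max (x,y).\]

In this case the tail probability is exactly linear in the initial value for every finite r, which is consistent with the ratio limit in Proposition 4.5. The value \(x/r\) also agrees with the probability that the continuous martingale Y started from x reaches r before being absorbed at 0.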

The following result asserts that if the monotonicity property (26) holds for A, then under the conditional probability \({\mathbf {P}}_x[\cdot |A (Y)>r]\), the CB process Y converges locally to a certain CB process with immigration (CBI process), such that the branching mechanism of this CBI process is still \(\Phi \) and the immigration mechanism is \(\Phi '\), the derivative of \(\Phi \), see Remark 4.7.

Corollary 4.6

Assume that \(\Phi \) is critical and satisfies (13), \({\mathbf {P}}_x[A (Y)>r]>0\) for any \(x,r>0\), and A satisfies the monotonicity property (26). Then for any \(h \ge 0\) and any bounded \({\mathcal {F}}_h\)-measurable random variable F,

Proof

We consider only \(F \ge 0\). The monotonicity property (26) of A and the Markov property of the CB process Y imply that

The first statement of Proposition 4.5 and Fatou’s lemma imply that

Recall that \({\mathbf {E}}_x[{Y_h}]=x\). As in the proof of Theorem 4.1, the above two inequalities are enough to imply that

\(\square \)
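The two inequalities in the proof of Corollary 4.6 can be sketched as follows. This is a reconstruction from the surrounding text, assuming that the limit in Corollary 4.6 is \({\mathbf {E}}_x[FY_h]/x\) (as suggested by Remark 4.7) and that \(N[A>r]\in (0,\infty )\) for large enough r, so that the first statement of Proposition 4.5 applies. For \(0\le F\le c\), the monotonicity property (26) and the Markov property at time h give

\[{\mathbf {E}}_x\left[ F\,\big |\,A(Y)>r\right] \ge \frac{{\mathbf {E}}_x\left[ F\,{\mathbf {P}}_{Y_h}[A>r]\right] }{{\mathbf {P}}_x[A>r]}={\mathbf {E}}_x\left[ F\,\frac{{\mathbf {P}}_{Y_h}[A>r]}{N[A>r]}\right] \cdot \frac{N[A>r]}{{\mathbf {P}}_x[A>r]},\]

and then Proposition 4.5 and Fatou's lemma give

\[\liminf _{r\rightarrow \infty }{\mathbf {E}}_x\left[ F\,\big |\,A(Y)>r\right] \ge \frac{{\mathbf {E}}_x[FY_h]}{x}.\]

Applying the same bound to \(c-F\) and using \({\mathbf {E}}_x[Y_h]=x\) gives

\[\limsup _{r\rightarrow \infty }{\mathbf {E}}_x\left[ F\,\big |\,A(Y)>r\right] \le c-\frac{{\mathbf {E}}_x[(c-F)Y_h]}{x}=\frac{{\mathbf {E}}_x[FY_h]}{x}.\]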

Remark 4.7

Define a new probability \({\mathbf {P}}^*_x\) by

\[{\mathbf {E}}^*_x[F]=\frac{{\mathbf {E}}_x[FY_h]}{x}\]

for any \(h\ge 0\) and any bounded \({\mathcal {F}}_h\)-measurable random variable F. It is well known that \({\mathbf {P}}^*_x[Y\in \cdot ]\) is the distribution of a CBI process \(Y^*\) with the branching mechanism \(\Phi \) and the immigration mechanism \(\Phi '\), where \(\Phi '\) is the derivative of \(\Phi \). See, e.g., Sect. 3.3 of [17] for some details on CBI processes. Then Corollary 4.6 implies that for any \(h \ge 0\), \((Y_t,t\in [0,h])\) under the conditioning of \(\{A(Y)>r\}\) converges weakly to \((Y^*_t,t\in [0,h])\) as \(r\rightarrow \infty \). In this case, we say that under the conditioning of \(\{A(Y)>r\}\) the critical CB process Y converges locally to the CBI process \(Y^*\).

Now Corollary 4.6 and Lemma 4.2 imply immediately that the critical CB process Y converges locally to \(Y^*\), under any of the three conditionings introduced in Sect. 4.1.

References

1. Abraham, R., Delmas, J.F.: Local limits of conditioned Galton–Watson trees: the infinite spine case. Electron. J. Probab. 19, 19 (2014)

2. Abraham, R., Delmas, J.F.: Local limits of conditioned Galton–Watson trees II: the condensation case. Electron. J. Probab. 19, 29 (2014)

3. Abraham, R., Delmas, J.F.: Asymptotic properties of expansive Galton–Watson trees. Electron. J. Probab. 24, 15 (2019)

4. Abraham, R., Delmas, J.F., Guo, H.: Critical multi-type Galton–Watson trees conditioned to be large. J. Theor. Probab. 31, 757–788 (2018)

5. Aldous, D.: The continuum random tree III. Ann. Probab. 21, 248–289 (1993)

6. Benjamini, I., Schramm, O.: Recurrence of distributional limits of finite planar graphs. Electron. J. Probab. 6, 23 (2001)

7. Borovkov, K.A., Vatutin, V.A.: On distribution tails and expectations of maxima in critical branching processes. J. Appl. Probab. 33(3), 614–622 (1996)

8. Duquesne, T.: Continuum random trees and branching processes with immigration. Stoch. Process. Appl. 119, 99–129 (2008)

9. Duquesne, T., Le Gall, J.-F.: Probabilistic and fractal aspects of Lévy trees. Probab. Theory Relat. Fields 131, 553–603 (2005)

10. Duquesne, T., Le Gall, J.-F.: Random trees, Lévy processes and spatial branching processes. Astérisque 281 (2005)

11. He, X.: Conditioning Galton–Watson trees on large maximal outdegree. J. Theor. Probab. 30, 842–851 (2017)

12. He, X., Li, Z.: Distributions of jumps in a continuous-state branching process with immigration. J. Appl. Probab. 53, 1166–1177 (2016)

13. Janson, S.: Simply generated trees, conditioned Galton–Watson trees, random allocations and condensation. Probab. Surv. 9, 103–252 (2012)

14. Kesten, H.: Subdiffusive behavior of random walk on a random cluster. Ann. Inst. H. Poincaré Probab. Statist. 22, 425–487 (1986)

15. Kyprianou, A.E.: Fluctuations of Lévy Processes with Applications: Introductory Lectures, 2nd edn. Springer, New York (2014)

16. Le Gall, J.F., Le Jan, Y.: Branching processes in Lévy processes: the exploration process. Ann. Probab. 26, 213–252 (1998)

17. Li, Z.: Measure-Valued Branching Markov Processes. Springer, Heidelberg (2011)

18. Lindvall, T.: On the maximum of a branching process. Scand. J. Statist. Theory Appl. 3, 209–214 (1976)

19. Stephenson, R.: Local convergence of large critical multi-type Galton–Watson trees and applications to random maps. J. Theor. Probab. 31, 159–205 (2018)

20. Stufler, B.: Local limits of large Galton–Watson trees rerooted at a random vertex. Ann. Inst. H. Poincaré Probab. Statist. 55, 155–183 (2019)

Acknowledgements

I am very grateful to an anonymous referee, especially for pointing out a gap in the original proof of Theorem 4.4, and also for providing many useful suggestions which helped me to improve the presentation of this paper.


Supported by the Fundamental Research Funds for the Central Universities (WK0010000063).


He, X. Local Convergence of Critical Random Trees and Continuous-State Branching Processes. J Theor Probab 35, 685–713 (2022). https://doi.org/10.1007/s10959-021-01074-9


DOI: https://doi.org/10.1007/s10959-021-01074-9