Abstract

In this paper, we establish strong duality between affinely adjustable two-stage robust linear programs and their dual semidefinite programs under a general uncertainty set, that covers most of the commonly used uncertainty sets of robust optimization. This is achieved by first deriving a new version of Farkas’ lemma for a parametric linear inequality system with affinely adjustable variables. Our strong duality theorem not only shows that the primal and dual program values are equal, but also allows one to find the value of a two-stage robust linear program by solving a semidefinite linear program. In the case of an ellipsoidal uncertainty set, it yields a corresponding strong duality result with a second-order cone program as its dual. To illustrate the efficacy of our results, we show how optimal storage cost of an adjustable two-stage lot-sizing problem under a ball uncertainty set can be found by solving its dual semidefinite program, using a commonly available software.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Duality theory of linear programming and optimality principles of many classes of nonlinear optimization problems are often grounded in Farkas’ lemma or its generalizations. In 1901, Farkas [1] initially established a dual characterization for a system of linear inequalities, which was then used to derive necessary optimality principles for nonlinear programming problems, and these principles, known as Kuhn–Tucker conditions, were published in the early 1950s by Kuhn and Tucker [2]. Importantly, Farkas’ lemma provides a numerically verifiable dual condition for non-negativity of a linear function over a system of linear inequalities as the dual condition can be verified by solving a linear program. We refer the interested reader to [3,4,5,6,7,8] for the generalizations of Farkas’ lemma and their applications to linear and nonlinear optimization problems.

In this paper, by establishing a new generalization of Farkas’ lemma, we present strong duality theorems for affinely adjustable two-stage robust linear programs with a general uncertainty set. Affinely adjustable two-stage robust linear programs appear in dynamic decision-making models in the face of evolving uncertainty (see Sect. 3 of this paper and also [9, 10]). Adjustable robust optimization is a powerful methodology, which was pioneered by Ben-Tal and his co-workers (see, e.g., [11]) for treating multi-stage robust optimization problems. It offers less conservative decisions than the classical (deterministic) robust optimization [9] as some of the decisions can be adjusted at later stages.

However, even two-stage robust optimization problems with adjustable variables are in general computationally intractable. Indeed, the second-stage adjustable decision is a mapping and so it is generally very difficult to establish numerically verifiable solvability characterizations or approximations of the system with a mapping unless the system is restricted to some special classes of mappings. A great deal of recent research on two-stage adjustable robust linear programming assumes that the second-stage adjustable decision satisfies an affine decision rule, which restricts the second-stage mapping to depend affinely on the uncertain parameters (see, e.g., [9, 11,12,13,14,15,16] and the references therein).

Our main contributions in this paper include the following:

-

(i)

By introducing the notion of a parameterized affine rule, we first establish a new affinely adjustable two-stage version of Farkas’ lemma (see Theorem 2.1). This two-stage Farkas’ lemma provides a numerically verifiable dual condition for non-negativity of a linear function over a two-stage system of linear homogeneous inequalities with affinely adjustable variables, where the dual condition can be verified by solving a semidefinite linear program. We give a direct and self-contained proof for deriving the generalized Farkas’ lemma using the Hahn–Banach hyperplane separation theorem together with a variable transformation.

-

(ii)

Employing the adjustable Farkas’ lemma, we then establish strong duality between an affinely adjustable two-stage robust linear program and its dual semidefinite program. In the case of an ellipsoidal uncertainty set, we derive a strong duality for a two-stage robust linear program with its dual second-order cone program. These strong duality theorems not only show that the primal and dual program values are equal, but also allow one to find the optimal value of a two-stage robust linear program by solving a conic linear program such as a semidefinite linear program or a second-order cone program.

Although we employ a traditional approach by making use of the Hahn–Banach hyperplane separation theorem to establish the new version of Farkas’ lemma, our use of a variable transformation permits us to obtain numerically verifiable dual conditions and consequently formulate a dual conic linear program for a two-stage robust linear program. We utilize a general closed cone regularity condition, which guarantees that strong duality holds between the primal and dual pairs, and hence obtain an exact conic linear program for the two-stage robust program.

The regularity condition is guaranteed by a Slater-type strict inequality condition. As an illustration, we obtain a semidefinite linear program as an exact dual problem for an adjustable two-stage lot-sizing problem on a network under a simple strict inequality condition and show how optimal storage cost of an adjustable two-stage lot-sizing problem under a ball uncertainty set can be found by solving its dual semidefinite program, using a commonly available software package.

The outline of the paper is as follows. Section 2 presents generalizations of Farkas’ lemma for systems of parametric linear inequalities involving adjustable variables. Section 3 establishes strong duality between two-stage robust linear programs and their dual conic linear programs and provides exact conic linear programs for these two-stage programs under a regularity condition. Section 4 describes an application of our duality results to find the optimal storage cost of an adjustable two-stage lot-sizing problem with uncertain demand by solving its dual semidefinite program. Numerical examples are also given to illustrate how a two-stage program and a two-stage lot-sizing problem can be solved, using a MATLAB software package. The last section summarizes the main results and provides perspectives.

2 Adjustable Farkas’ Lemma

Let us first start by fixing some notations and definitions. The notation \({\mathbb {R}}^n\) signifies the Euclidean space whose norm is denoted by \(\Vert \cdot \Vert \) for each \(n\in {\mathbb {N}}\). The inner product in \({\mathbb {R}}^n\) is defined by \(\langle x,y\rangle :=x^\top y\) for all \(x, y\in {\mathbb {R}}^n.\) The origin of any space is denoted by 0 but we may use \(0_n\) for the origin of \({\mathbb {R}}^n\) in situations, where some confusion might be possible. For a non-empty set \(\varOmega \subset {\mathbb {R}}^n,\) \(\mathrm{conv\,}\varOmega \) denotes the convex hull of \(\varOmega \) and \(\mathrm{cl\,}\varOmega \) stands for the closure of \(\varOmega \), while \(\mathrm{coneco\,}\varOmega :={\mathbb {R}}_+\mathrm{conv\,}\varOmega \) stands for the convex conical hull of \(\varOmega \cup \{0_n\}\), where \({\mathbb {R}}_+:=[0,+\infty [\subset {\mathbb {R}}.\) As usual, the symbol \(I_n\) stands for the identity \((n\times n)\) matrix. A symmetric \((n\times n)\) matrix A is said to be positive semidefinite, denoted by \(A\succeq 0\), whenever \(x^\top Ax\ge 0\) for all \(x\in {\mathbb {R}}^n.\) If \(x^\top Ax> 0\) for all \(x\in {\mathbb {R}}^n\setminus \{0_n\}\), then A is called positive definite, denoted by \(A\succ 0\).

A general parametric (semi-infinite) linear homogeneous system with adjustable variables reads as follows:

where \(a_j^r\in {\mathbb {R}}^n, r=0,1,\ldots ,s, j=1,\ldots ,q\) and \(\theta _j:=(\theta ^1_j,\ldots ,\theta _j^m)\in {\mathbb {R}}^m, j=1,\ldots ,q\) are fixed and the set U is assumed to be a spectrahedron (see, e.g., [17]) given by

with the symmetric square matrices \(A_r, r=0,1,\ldots ,s\). In what follows, we assume that U is non-empty and bounded.

Note that, in (1), the adjustable variable \(x(\cdot )\) is a mapping \(x:U\rightarrow {\mathbb {R}}^m\), and so it is generally hard to obtain numerically verifiable dual solvability characterizations of the system with a mapping \(x(\cdot )\) unless the system is restricted to some special classes of mappings. We now introduce a parameterized affine rule, where the mapping \(x(\cdot )\) satisfies the rule:

Here, \(y\in {\mathbb {R}}^m\) and \(W\in {\mathbb {R}}^{m\times s}\) are non-adjustable variables and \(\rho \in [0,1]\) is a scale parameter. If \(\rho \in ]0,1[\) then, by considering \({{\widetilde{y}}}:=\rho y\) and \({{\widetilde{W}}}:=(1-\rho )W\), the second-stage decision rule in (3) becomes the standard affine rule \(x(u):={{\widetilde{y}}}+{{\widetilde{W}}}u\) (cf. [11, Page 356]) (see also, [10, Equation (8)]), where \({{\widetilde{y}}}\in {\mathbb {R}}^m\) and \({{\widetilde{W}}}\in {\mathbb {R}}^{m\times s}\) are non-adjustable variables. Other special cases of commonly used affine rules and their corresponding results are given later in this section.

We now consider a parametric linear homogeneous inequality system with affinely adjustable variables as follows:

where U is the parameter set given as in (2). In what follows, we use \(W_r, r=1,\ldots ,s\) as the columns of the matrix W.

Let \(a_j:{\mathbb {R}}^s\rightarrow {\mathbb {R}}^n, j=1,\ldots ,q,\) be given by

with \(a_j^r\in {\mathbb {R}}^n, r=0,1,\ldots ,s, j=1,\ldots ,q\) fixed as above and denote

Theorem 2.1

(Adjustable Farkas’ Lemma) Let \(\wp \in {\mathbb {R}}^n, \upsilon \in {\mathbb {R}}^m, \sigma _r\in {\mathbb {R}}^m, r=1,\ldots ,s\) and assume that the adjustable linear homogeneous inequality system (RS) has a solution; i.e.,

Let \(W_r, r=1,\ldots ,s\) be the columns of the matrix W. Suppose that the cone C in (5) is closed. Then, the following statements are equivalent:

(a) The following implication holds:

(b) There exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

To prove this theorem, we need the following technical lemma.

Lemma 2.1

Consider the cone C given as in (5). Then, for each \(v^*\in C\), there exist \(\alpha _j\ge 0, j=1,\ldots ,q\) and \( u^j:=(u^j_1,\ldots ,u^j_s)\in U, j=1,\ldots ,q\) such that

where the affine maps \(a_j, j=1,\ldots ,q\) are given as in (4) and the convex set U is defined as in (2).

Proof

Let \(v^*\in C.\) By the definition of C and the Carathéodory’s theorem, we find \(\alpha _{ji}\ge 0, j=1,\ldots ,q\) and \( u^{ji}:=(u^{ji}_1,\ldots ,u^{ji}_s)\in U, j=1,\ldots ,q, i=1,\ldots ,n+m+ms+1\) such that

For each \(j\in \{1,\ldots ,q\},\) let \(\alpha _{j}:=\sum \limits _{i=1}^{n+m+ms+1}\alpha _{ji}\ge 0.\) If \(\alpha _j=0,\) then we take \(u^j:=(u^{j1}_1,\ldots ,u^{j1}_s)\in U.\) If \(\alpha _j>0\), then we put \(u^j:=\frac{1}{\alpha _j}\sum \limits _{i=1}^{n+m+ms+1}\alpha _{ji}u^{ji}\in U,\) where the latest relation holds due to the convexity of U. As \(a_j\) is an affine map, it holds that \(\alpha _ja_j(u^j)=\sum \limits _{i=1}^{n+m+ms+1}\alpha _{ji}a_j(u^{ji}).\) Now, we get by (6) that

which completes the proof of the lemma. \(\square \)

Proof of Theorem 2.1 [(a) \(\Longrightarrow \) (b)] Assume that (a) holds. We first show that

where C is given by (5).

(Applying the separation theorem) Suppose by contradiction that \(-(\wp ,\upsilon ,\sigma _1,\ldots ,\sigma _s)\notin C.\) As C is closed and convex, we can invoke the strong separation theorem (see, e.g., [18, Theorem 2.2]) to find \((x^*,y^*,z^*_1,\ldots ,z^*_s)\in {\mathbb {R}}^{n+m+ms}\setminus \{0_{n+m+ms}\}\) such that

Note by \(0_{n+m+ms}\in C\) that

We assert further that

Otherwise, there exists \(c_0\in C\) such that \(\langle (x^*,y^*,z^*_1,\ldots ,z^*_s),c_0\rangle > 0.\) This, together with the fact that \(\lambda c_0\in C\) for all \(\lambda >0\), implies that

This clearly contradicts (8), and hence, assertion (10) is valid. This, together with the fact that \( \big (a_j(u), \rho \theta _j, (1-\rho )u_1\theta _j,\ldots , (1-\rho )u_s\theta _j\big )\in C, j=1,\ldots ,q \) for all \(u\in U\), implies that

By \(X\ne \emptyset ,\) we find \(({{\overline{x}}},{{\overline{y}}},{{\overline{W}}})\in {\mathbb {R}}^{n+m+m\times s}\) such that \(a_j(u)^\top {{\overline{x}}}+\rho \theta _j^\top \overline{y}+(1-\rho )\sum \limits _{r=1}^su_r\theta _j^\top {{\overline{W}}}_r\le 0, j=1,\ldots ,q\) for all \(u\in U\), where \({{\overline{W}}}_r, r=1,\ldots ,s\), are the columns of the matrix \({{\overline{W}}}\). This, together with (11), entails that

where \(x^\lambda :={{\overline{x}}}+\lambda x^*, y^\lambda :={{\overline{y}}}+\lambda y^*, W_r^\lambda :={{\overline{W}}}_r+\lambda z^*_r, r=1,\ldots , s\) for \(\lambda >0.\) In other words, by denoting \(W^\lambda , \lambda >0,\) the matrix with its columns as \(W_r^\lambda , r=1,\ldots ,s\), we assert by (12) that \(x^\lambda \in {\mathbb {R}}^n, y^\lambda \in {\mathbb {R}}^m, W^\lambda \in {\mathbb {R}}^{m\times s} \) and \( a_j(u)^\top x^\lambda + \theta _{j}^\top x(u) \le 0, x(u)=\rho y^\lambda +(1-\rho )W^\lambda u, j=1,\ldots ,q \) for all \(u\in U\). On the one hand, as (a) holds, we arrive at

Now, by (9), we obtain that

as \(\lambda \rightarrow +\infty .\) This clearly contradicts (13), and consequently, (7) holds.

(Employing a variable transformation) By (7) and Lemma 2.1, we find \(\alpha _j\ge 0, j=1,\ldots ,q\) and \( u^j:=(u^j_1,\ldots ,u^j_s)\in U, j=1,\ldots ,q\) such that

Letting \(\lambda ^0_j:=\alpha _j\ge 0, \lambda _j^r:=\alpha _ju^j_r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots , s\), we obtain that

The relation \(u^j\in U\) means that \(A_0+\sum \limits _{r=1}^su^j_rA_r\succeq 0\) for \(j=1,\ldots ,q.\) We show that

Let \(j\in \{1,\ldots ,q\}\) be arbitrary. If \(\lambda _j^0= 0\), then \(\lambda _j^r=0\) for all \(r=1,\ldots ,s,\) and hence, (14) holds trivially. If \(\lambda _j^0\ne 0,\) then

showing (14) holds, too. So, we arrive at the conclusion that (b) holds.

[(b) \(\Longrightarrow \) (a)] Assume that (b) holds. Then, there exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

(Employing an inverse variable transformation) Given \(j\in \{1,\ldots ,q\}\), we consider the following matrix inequality

We claim by (17) and the boundedness of U that if \(\lambda _j^0=0\), then \(\lambda _j^r=0\) for all \(r=1,\ldots ,s.\) Suppose, on the contrary, that \(\lambda _j^0=0\) but there exists \(r_0\in \{1,\ldots ,s\}\) with \(\lambda _j^{r_0}\ne 0.\) In this case, (17) becomes \(\sum \limits ^{s}_{r=1}\lambda _j^rA_r\succeq 0\). Let \({{\overline{u}}}:= ({{\overline{u}}}_1,\ldots ,{{\overline{u}}}_s)\in U.\) It follows by definition that \(A_0+\sum \limits _{r=1}^s{{\overline{u}}}_rA_r\succeq 0\) and

This guarantees that \(\overline{u}+\gamma (\lambda _j^1,\ldots ,\lambda _j^s)\in U\) for all \(\gamma >0\), which contradicts the fact that U is a bounded set. So, our claim must be true.

By \(U\ne \emptyset ,\) for each \(j\in \{1,\ldots ,q\}\), we can take \({{\widehat{u}}}^j:=({{\widehat{u}}}^j_1,\ldots ,{{\widehat{u}}}^j_s)\in U\) and define \(\widetilde{u}^j:=({{\widetilde{u}}}^j_1,\ldots ,{{\widetilde{u}}}^j_s)\), where

Then, it can be checked that

which shows that \(A_0+\sum \limits _{r=1}^s{{\widetilde{u}}}^j_rA_r\succeq 0\), and so, \(\widetilde{u}^j\in U.\) Then, we have

where we note that if \(\lambda _j^0=0\), then \(\lambda _j^r=0\) for all \(r=1,\ldots ,s, j=1,\ldots , q\) as shown above.

To prove (a), let \((x,y,W)\in {\mathbb {R}}^{n+m+m\times s}\) be such that \(a_j(u)^\top x+ \theta _{j}^\top x(u) \le 0, j=1,\ldots ,q\) for all \(u\in U\), where \(x(u)=\rho y+(1-\rho )Wu \). It means that

where \(W_r, r=1,\ldots ,s\) are the columns of the matrix W. Observe by (15), (16), (18) and (19) that

where the last inequality holds due to (20) and the fact that \({{\widetilde{u}}}^{j}\in U, j=1,\ldots ,q.\) So, (a) holds. The proof of the theorem is complete. \(\square \)

Let us now examine some particular cases of the adjustable linear homogeneous inequality system (RS). The first particular case is \(x(u):=\rho (x_1,\ldots ,x_m)+(1-\rho )Wu\) with \(1\le m<n\); i.e., the adjustable variable \(x(\cdot )\) depends on some components of the non-adjustable (first-stage) variable \(x:=(x_1,\ldots ,x_m,\ldots ,x_n).\)

Corollary 2.1

(Partially adjustable Farkas’ lemma) Let \(1\le m<n\) and denote \(x:=(\xi ,\zeta ),\) where \(\xi :=(x_1,\ldots ,x_m)\) and \(\zeta :=(x_{m+1},\ldots ,x_n)\) are partitions of \(x:=(x_1,\ldots ,x_m,\ldots ,x_n)\). Let \((\wp _1,\wp _2)\in {\mathbb {R}}^m\times {\mathbb {R}}^{n-m}, \sigma _r\in {\mathbb {R}}^m, r=1,\ldots , s\), and assume that the adjustable linear homogeneous inequality system (RS) with \(x(u):=\rho \xi +(1-\rho )Wu\) has a solution; i.e.,

Suppose that the following cone

is closed, where \(a_j(u)=(a_j^1(u),\ldots ,a_j^n(u))\) for \(u\in U.\) We also partition \(a^r_j \) as \(a^r_j:=(a^r_{j1},a^r_{j2})\) with \(a^r_{j1}\in {\mathbb {R}}^m\) and \(a^r_{j2}\in {\mathbb {R}}^{n-m}\) for \( r=0,1,\ldots ,s, j=1,\ldots , q. \) Then, the following statements are equivalent:

(i) The following implication holds:

where \(W_r, r=1,\ldots ,s\) are the columns of the matrix W.

(ii) There exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

Proof

Let \({\widetilde{x}}:=(\xi ,\zeta ,W_1,\ldots ,W_s),\) \({\widetilde{a}}_j^0:=(a^0_{j1}+\rho \theta _j, a^0_{j2}, 0_{m},\ldots ,0_m), {\widetilde{a}}_j^r:=(a^r_{j1},a^r_{j2},\varTheta ^r_j), r=1,\ldots ,s, j=1,\ldots , q, \) where \(\varTheta ^r_j:=(0_m,\ldots ,(1-\rho )\theta _j,\ldots ,0_m)\in {\mathbb {R}}^{m\times s}\) with \(\theta _j\) standing at the rth position, and define affine maps \({\widetilde{a}}_j:{\mathbb {R}}^{s}\rightarrow {\mathbb {R}}^{n+m\times s}, j=1,\ldots ,q\) as

In what follows, we also use the notation \({\widetilde{a}}_j(u):=({\widetilde{a}}_j^1(u),\ldots ,{\widetilde{a}}_j^{n+m\times s}(u))\) for \(u\in U\) and \(j=1,\dots , q.\) Set \({\widetilde{\theta }}_{j}:=0_m, j=1,\ldots ,q\), and observe that \(X_2\ne \emptyset \), if and only if \({\widetilde{X}}\ne \emptyset \), where

Assume (i) holds. Then, the following implication holds:

where \( {\widetilde{\wp }}:=(\wp _1,\wp _2,\sigma _1,\ldots ,\sigma _s).\) Moreover, it can be verified that the closedness of \(C_2\) guarantees the closedness of the following cone \({\widetilde{C}}\), where

Applying the adjustable Farkas’ lemma given in Theorem 2.1, we find \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

which shows that

This means that (ii) is valid. In this way, we can employ Theorem 2.1 to show that the inverse implication also holds and so, the proof is complete. \(\square \)

Remark 2.1

For the case of \(x(u):=\rho x+(1-\rho )Wu\) (i.e., the adjustable variable depends on the entire components of the non-adjustable variable), we can employ the adjustable Farkas’ lemma in Theorem 2.1 to derive the following version of the Farkas’ lemma.

Let \(\wp \in {\mathbb {R}}^n, \sigma _r\in {\mathbb {R}}^n, i=1,\ldots , s\), and assume that the adjustable linear homogeneous inequality system (RS) with \(m=n\) and \(x(u):=\rho x+(1-\rho )Wu\) has a solution; i.e.,

Suppose that the following cone \( C_1\) is closed, where

Then, the following statements are equivalent:

-

(i)

The following implication holds:

$$\begin{aligned} \big [&x\in {\mathbb {R}}^n, W\in {\mathbb {R}}^{n\times s}, \; a_j(u)^\top x+ \theta _{j}^\top x(u) \le 0, \; j=1,\ldots ,q,\\&x(u)=\rho x+(1-\rho )Wu ,\; \forall u\in U \big ]\Longrightarrow \wp ^\top x+ \sum _{r=1}^s\sigma _r^\top W_r \ge 0, \end{aligned}$$where \(W_r, r=1,\ldots ,s\) are the columns of the matrix W.

-

(ii)

There exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

$$\begin{aligned}&\wp +\sum \limits _{j=1}^q(\lambda _j^0a^0_j+\lambda _j^0\rho \theta _j+\sum ^{s}_{r=1}\lambda ^r_ja^r_j)=0, \; \sigma _r+\sum ^{q}_{j=1}\lambda _j^r(1-\rho )\theta _j=0, r=1,\ldots ,s,\\&\lambda _j^0A_0+\sum _{r=1}^s\lambda ^r_jA_r\succeq 0, \; j=1,\ldots ,q. \end{aligned}$$

In the second particular case, where \(\theta _j:=0_m, j=1,\ldots ,q\) (i.e., there are no adjustable variables in the system (RS)), the adjustable Farkas’ lemma reduces to the semi-infinite Farkas’ lemma (see, e.g., [19]) for a linear homogeneous inequality system.

Corollary 2.2

(Semi-infinite Farkas’ Lemma) Let \(\wp \in {\mathbb {R}}^n\), and assume that the adjustable linear homogeneous inequality system (RS) with \(\theta _j:=0_m, j=1,\ldots ,q\) has a solution; i.e.,

Suppose that the following cone \({{\widetilde{C}}}\) is closed, where

Then, the following statements are equivalent:

-

(i)

The following implication holds:

$$\begin{aligned} \big [ x\in {\mathbb {R}}^n, \; a_j(u)^\top x \le 0, \; j=1,\ldots ,q,\; \forall u\in U \big ]\Longrightarrow \wp ^\top x \ge 0. \end{aligned}$$ -

(ii)

There exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

$$\begin{aligned}&\wp +\sum \limits _{j=1}^q(\lambda _j^0a^0_j+\sum ^{s}_{r=1}\lambda ^r_ja^r_j)=0, \; \lambda _j^0A_0+\sum _{r=1}^s\lambda ^r_jA_r\succeq 0, \; j=1,\ldots ,q. \end{aligned}$$

Proof

Observe first that \({{\widetilde{X}}}\ne \emptyset \), if and only if \(X\ne \emptyset \), where

with \(\theta _j:=0_m, j=1,\ldots ,q.\)

Assume (i) holds. Then, by letting \(\upsilon :=\sigma _r:=0_m, r=1,\ldots ,s\), we get from (i) the following implication

which lands in the form (a) of Theorem 2.1. In this setting, it can be verified that the closedness of \({{\widetilde{C}}}\) implies the closedness of C in (5) as

Applying now Theorem 2.1, we assert that (24) is equivalent to saying that there exist \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q, r=1,\ldots ,s\) such that

Note that conditions (25) and (26) are exactly (ii) because \(\upsilon =\sigma _r=0_m, r=1,\ldots , s\) and \(\theta _j:=0_m, j=1,\ldots ,q.\) In this way, we can employ Theorem 2.1 to show that the inverse implication also holds, and so the proof is complete. \(\square \)

We close this section with a remark that, in a special case where the parameter set U in (2) is a singleton, the adjustable Farkas’ lemma reduces to a classical form of the Farkas’ lemma for a linear homogeneous inequality system.

3 Exact SDPs for Two-Stage Robust Linear Programs

In this section, we apply the adjustable Farkas’ lemma obtained in Theorem 2.1 to derive strong duality relations for a two-stage robust linear optimization problem, where the dual problem is a conic linear program and so, the optimal value of the underlying two-stage robust linear optimization problem can be found by solving this conic linear program.

A linear program in the face of constraint data uncertainty can be captured by the program

where \(c\in {\mathbb {R}}^n\) is fixed, \(u\in U\), the uncertainty set

is a non-empty bounded spectrahedron defined as in (2), and \(a_j:{\mathbb {R}}^s\rightarrow {\mathbb {R}}^n, b_j:{\mathbb {R}}^s\rightarrow {\mathbb {R}}, j=1,\ldots ,q\) are affine mappings given, respectively, by

where \(a_j^i\in {\mathbb {R}}^n, b_j^i\in {\mathbb {R}}, i=0,1,\ldots ,s, j=1,\ldots ,q\) are fixed.

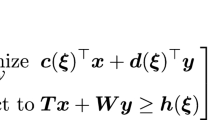

We consider an adjustable uncertain two-stage linear program of (27) as

where \(\theta _j:=(\theta ^1_j,\ldots ,\theta _j^m)\in {\mathbb {R}}^m, j=1,\ldots ,q\) are fixed, \(x:=(x_1,\ldots ,x_n)\) is the first-stage (here-and-now) decision vector and \(x(\cdot )\) is the second-stage (wait-and-see) adjustable decision vector given by

with the non-adjustable \(y\in {\mathbb {R}}^m\), \(W\in {\mathbb {R}}^{m\times s}\) and \(\rho \in [0,1]\) as in (3). The first-stage decisions \(x_1,\ldots ,x_n\) are made before the uncertain parameters \(u_1, \ldots ,u_s\) are realized, whereas the second-stage adjustable decisions can be made after some of the uncertain parameters have revealed their values.

The adjustable robust two-stage counterpart of (UP) reads as follows:

We propose a semidefinite programming (SDP) dual problem for (RP) as

The following theorem describes a strong duality relation between the adjustable robust two-stage linear problem (RP) and its semidefinite programming dual problem (DP).

Theorem 3.1

(Strong duality with SDP duals) Let the feasible set of problem (RP) be non-empty and let the following cone \(C_P\) be closed, where

Then, we have

Proof

(Verifying weak duality) Let us first show that

This holds trivially if the feasible set of problem (DP) is empty. Now, take any \(\lambda _j^0\ge 0, \lambda _j^i\in {\mathbb {R}}, , j=1,\ldots ,q, i=1,\ldots ,s \) such that

Consider any \(j\in \{1,\ldots ,q\}\). By the compactness of U, as shown in the proof of Theorem 2.1, we conclude by (31) that if \(\lambda _j^0=0\), then \(\lambda _j^i=0\) for all \(i=1,\ldots ,s.\) Then, take \({{\widehat{u}}}^j:=({{\widehat{u}}}^j_1,\ldots ,{{\widehat{u}}}^j_s)\in U\) and define \({{\widetilde{u}}}^j:=({{\widetilde{u}}}^j_1,\ldots ,{{\widetilde{u}}}^j_s)\) with

It shows that \({{\widetilde{u}}}^j\in U.\) Then, we have

where we note that if \(\lambda _j^0=0\), then \(\lambda _j^i=0\) for all \(i=1,\ldots ,s, j=1,\ldots , q\) as said above.

Now, let \((x,y,W)\in {\mathbb {R}}^{n+m+m\times s}\) be a feasible point of problem (RP);

i.e., \(a_j(u)^\top x+\theta _{j}^\top \big (\rho y+(1-\rho )Wu\big ) \le b_j(u), j=1,\ldots ,q\) for all \(u\in U\). Denote by \(W_i, i=1,\ldots ,s\) the columns of the matrix W. We get by (30), (32) and (33) that

where we should remind that \({{\widetilde{u}}}^j\in U\) for \(j=1,\ldots ,q.\) It entails that \(c^\top x\ge -\sum \limits _{j=1}^q(\lambda ^0_jb^0_j+\sum \limits _{i=1}^s\lambda ^i_jb^i_j)\), and so (29) is valid.

(Verifying strong duality) To prove the converse inequality of (29), we may assume \(\overline{\mu }:=\inf {(RP)}\in {\mathbb {R}}.\) Then, it holds that

We now verify that

To see this, assume on the contrary that there exist \(({{\widehat{x}}},{{\widehat{y}}},\widehat{W})\in {\mathbb {R}}^{n+m+m\times s}\) and \({{\widehat{t}}}\le 0\) such that

where \({{\widehat{W}}}_i, i=1,\ldots ,s\) are the columns of the matrix \({{\widehat{W}}}.\) Let us consider the following possibilities:

Case 1 (\({{\widehat{t}}}=0\)): We get by (36) and (37) that

Let \(({{\overline{x}}},{{\overline{y}}},{{\overline{W}}})\in {\mathbb {R}}^{n+m+m\times s}\) be a feasible point of problem (RP);

i.e., \(a_j(u)^\top {{\overline{x}}}+\rho \theta _j^\top \overline{y}+(1-\rho )\sum \limits _{r=1}^su_r\theta _j^\top {{\overline{W}}}_r\le b_j(u), j=1,\ldots ,q\) for all \(u\in U\), where \({{\overline{W}}}_r, r=1,\ldots ,s\) are the columns of the matrix \({{\overline{W}}}\). It entails that

where \(x^\lambda :=\overline{x}+\lambda {{\widehat{x}}}, y^\lambda :={{\overline{y}}}+\lambda {{\widehat{y}}}, W_i^\lambda :={{\overline{W}}}_i+\lambda {{\widehat{W}}}_i, i=1,\ldots , s\) for \(\lambda >0.\) In other words, by denoting \(W^\lambda , \lambda >0,\) the matrix with its columns as \(W_i^\lambda , i=1,\ldots ,s\) we assert by (38) that \(x^\lambda \in {\mathbb {R}}^n, y^\lambda \in {\mathbb {R}}^m, W^\lambda \in {\mathbb {R}}^{m\times s} \) and \( a_j(u)^\top x^\lambda + \theta _{j}^\top x(u) \le b_j(u), x(u)=\rho y^\lambda +(1-\rho )W^\lambda u, j=1,\ldots ,q \) for all \(u\in U\). Then, by (34), we arrive at the assertion that \(c^\top x^\lambda \ge {{\overline{\mu }}}\) for all \(\lambda >0\). This assertion contradicts the fact that \(c^\top {{\widehat{x}}}<0\) and thus

Case 2 (\({{\widehat{t}}}<0\)): We get by (36) that

Similarly, by (34), we obtain that \(c^\top \left( \frac{{{\widehat{x}}}}{-{{\widehat{t}}}}\right) \ge {{\overline{\mu }}} \), which contradicts to the inequality in (37). Consequently, (35) holds.

(Homogenization of (35)) Let \({\widetilde{x}}:=(x,t), {\widetilde{a}}_j^r:=(a^r_j,b^r_j), r=0,1,\ldots ,s, j=1,\ldots , q, {\widetilde{a}}_{q+1}^0:=(0_n,1), \) \({\widetilde{a}}_{q+1}^r:=(0_n,0), r=1,\ldots , s\) and \(\theta _{q+1}:=0_m\), and define affine maps \({\widetilde{a}}_j:{\mathbb {R}}^{s}\rightarrow {\mathbb {R}}^{n+1}, j=1,\ldots ,q+1\) as

In what follows, we also use the notation \({\widetilde{a}}_j(u):=({\widetilde{a}}_j^1(u),\ldots ,{\widetilde{a}}_j^{n+1}(u))\) for \(u\in U\) and \(j=1,\dots , q+1.\) From (35), we obtain the following implication:

where \( {\widetilde{c}}:=(c,{{\overline{\mu }}}).\) Moreover, it can be verified that the closedness of \(C_P\) guarantees the closedness of the following cone \({\widetilde{C}}\), where

Applying the adjustable Farkas’ lemma given in Theorem 2.1, we find \(\lambda _j^0\ge 0, \lambda _j^r\in {\mathbb {R}}, j=1,\ldots ,q+1, r=1,\ldots ,s\) such that

which shows that

The last equality entails that \({{\overline{\mu }}}\le -\sum \limits _{j=1}^q(\lambda _j^0b^0_j+\sum \limits ^{s}_{i=1}\lambda ^i_jb^i_j)\). So, \({{\overline{\mu }}}\le \sup {(DP)}\), which, together with (29), guarantees that \(\inf {(RP)}= \sup {(DP)}\) and the dual problem (DP) attains its solutions. The proof of the theorem is complete. \(\square \)

Remark 3.1

For the case of \(x(u):=\rho (x_1,\ldots ,x_m)+(1-\rho )Wu\) with \(1\le m<n\) (i.e., the second-stage decision variable \(x(\cdot )\) depends on some components of the first-stage decision variable \(x:=(x_1,\ldots ,x_m,\ldots ,x_n)\)), we can employ the Farkas’ lemma in Corollary 2.1 instead of Theorem 2.1 to derive a strong duality relation between the adjustable robust two-stage linear problem (RP) and its corresponding dual semidefinite programming problem.

Let us consider a particular case of the adjustable robust two-stage linear problem (RP), where the uncertainty set U in (2) is replaced by the following ellipsoid

with a symmetric \(({s}\times {s})\) matrix \(M\succ 0\). Let B be an \(({s}\times {s})\) matrix such that \(M=B^\top B\).

In this case, we obtain strong duality with a second-order cone programming (SOCP) dual for the adjustable robust two-stage linear problem (RP) under ellipsoidal uncertainty.

Corollary 3.1

(Strong duality with SOCP duals) Consider the problem (RP) with U in (2) replaced by E in (40), and let the feasible set of problem (RP) be non-empty. Assume the following cone \(C_P\) be closed, where

Then, we have

Proof

Let \(A_i, i=0,1,\ldots ,s\) be \((s+1)\times (s+1)\) matrices given by

where \(e_i\in {\mathbb {R}}^{s}\) is a vector whose ith component is one and all others are zero. Then, the ellipsoid E in (40) lands in the form of the spectrahedron U in (2). Therefore, we invoke Theorem 3.1 to assert that

where \(A_i, i=0,1,\ldots ,s\) are given as in (41). In this case, we can verify that

So, the proof is complete. \(\square \)

As an application of Theorem 3.1, we derive, in the following corollary, a characterization of solution in terms of linear matrix inequalities for the adjustable robust two-stage linear problem (RP).

Corollary 3.2

(Characterization of solution for (RP)) Let the optimal solution set of problem (RP) be non-empty and let the following cone \(C_P\) be closed, where

Let \(({{\overline{x}}},\overline{y},{{\overline{W}}})\in {\mathbb {R}}^{n+m+m\times s}\) be a feasible point of problem (RP). Then, \(({{\overline{x}}},{{\overline{y}}},{{\overline{W}}})\) is an optimal solution of problem (RP) if and only if there exist \(\lambda _j^0\ge 0, \lambda _j^i\in {\mathbb {R}}, j=1,\ldots ,q, i=1,\ldots ,s \), such that

Proof

[\(\Longrightarrow \)] Let \(({{\overline{x}}},{{\overline{y}}},{{\overline{W}}})\in {\mathbb {R}}^{n+m+m\times s}\) be an optimal solution of problem (RP). Since \(C_P\) is closed, we assert by Theorem 3.1 that

This shows that there exist \(\lambda _j^0\ge 0, \lambda _j^i\in {\mathbb {R}}, j=1,\ldots ,q, i=1,\ldots ,s \) such that

and so, we obtain (42).

[\(\Longleftarrow \)] Assume that there exist \(\lambda _j^0\ge 0, \lambda _j^i\in {\mathbb {R}}, , j=1,\ldots ,q, i=1,\ldots ,s \) such that (42) holds. Under the closedness of \(C_P,\) we invoke Theorem 3.1 again to conclude that

Now, let \(({{\widehat{x}}},{{\widehat{y}}},\widehat{W})\in {\mathbb {R}}^{n+m+m\times s}\) be an arbitrary feasible point of problem (RP). Taking (42) and (43) into account, we see that

So, \(({{\overline{x}}},\overline{y},{{\overline{W}}})\) is an optimal solution of problem (RP), which finishes the proof. \(\square \)

We now provide some sufficient criteria, which guarantee that the cone \(C_P\) in Theorem 3.1 is closed.

Proposition 3.1

(Sufficiency for the closedness of \({\varvec{C}_P}\)) Assume that one of the following conditions holds:

- (a) :

-

The uncertainty set U of problem (RP) is a polytope;

- (b) :

-

The Slater condition holds for the problem (RP); i.e., there is \(({{\widehat{x}}},{{\widehat{y}}},\widehat{W})\in {\mathbb {R}}^{n+m+m\times s}\) such that

$$\begin{aligned} a_j(u)^\top {{\widehat{x}}}+ \theta _{j}^\top x(u)< b_j(u), \; x(u)=\rho \widehat{y}+(1-\rho ){{\widehat{W}}}u,\; j=1,\ldots ,q,\;\forall u\in U. \end{aligned}$$(44)

Then, the following cone \(C_P\) is closed, where

Proof

For each \(j\in \{1,\ldots ,q\},\) let us define an affine map \(H_j:{\mathbb {R}}^s\rightarrow {\mathbb {R}}^n\times {\mathbb {R}}\times {\mathbb {R}}^m\times {\mathbb {R}}^{ms}\) as

Then, \(H_j(U):=\bigcup \limits _{u \in U}H_j(u)\), and so it follows that

(a) When U is a polytope, the set \(H_j(U)\) is also a polytope for each \(j\in \{1,\ldots ,q\}\) (see, e.g., [20, Proposition 1.29]), and so \(H_j(U)\) is the convex hull of some finite set (see, e.g., [20, Theorem 1.26]). It means that for each \(j\in \{1,\ldots ,q\},\) there exist \(k_j\in {\mathbb {N}}\) and \(u^i\in U, i=1,\ldots , k_j\) such that

\(H_j(U)=\mathrm{conv}\big \{\big (a_j(u^i),b_j(u^i), \rho \theta _j, (1-\rho )u^i_1\theta _j, \ldots , (1-\rho )u^i_s\theta _j\big ) \,:\, i=1,\ldots ,k_j\big \}.\) It follows that

is a polytope. So, \(C_P=\mathrm{coneco}\left[ \mathrm{conv}\left( \bigcup \limits _{j=1}^{q}H_j(U)\right) \right] \) is a polyhedral cone and hence is closed (see, e.g., [18, Proposition 3.9]).

(b) As U is compact and \(H_j\) is affine (and thus continuous) for \(j\in \{1,\ldots ,q\}\), the set \(H_j(U)\) is a compact set. This entails that \(\left( \bigcup \limits _{j=1}^{q}H_j(U)\right) \) is also a compact set. Moreover, \(H_j(U)\) is a convex set for each \(j\in \{1,\ldots ,q\}\) due to the convexity of U (see, e.g., [18, Proposition 1.23]). Assume now that the Slater condition (44) holds. We assert that

Assume on the contrary that there exist \( u^j\in U, {{\overline{\mu }}}_j\ge 0, j=1,\ldots ,q\) with \(\sum \limits _{j=1}^q{{\overline{\mu }}}_j=1\) such that

Denote by \({{\widehat{W}}}_r, r=1,\ldots ,s\), the columns of the matrix \({{\widehat{W}}}\). From (44), we obtain that

which clearly contradicts (46), and so (45) holds. Invoking [7, Proposition 1.4.7], we conclude that \(C_P\) is closed. \(\square \)

We close this section with a simple example that shows how we can employ the strong duality obtained in Theorem 3.1 to verify the optimal value of an adjustable robust two-stage linear problem by solving its corresponding SDP dual problem.

Example 3.1

Consider an adjustable uncertain two-stage linear problem of the form:

where \(\theta :=(1,1,1)\), \(x:=(x_1,x_2,x_3)\in {\mathbb {R}}^3\) is the first-stage decision variable and \(x(\cdot )\) is the second-stage decision variable, which is an adjustable variable that is depending on uncertain \(u:=(u_1,u_2,u_3)\in U.\) Here, we consider a linear decision rule \(x(\cdot )\) given by

where \(y\in {\mathbb {R}}^3\) and \(W\in {\mathbb {R}}^{3\times 3}\) are non-adjustable variables, \(\rho \in ]0,1[\) and the uncertainty U is an elliptope (see, e.g., [21, Pages 255–256]) given by

To treat this problem, let us consider a robust counterpart of (EU) as follows:

Note that the uncertainty set U in (47) is a spectrahedron and is depicted in Fig. 5.8 of [21, Page 232], and so the problem (ER) can be expressed in terms of problem (RP), where \(c:=(-1,-2,-3), \theta _1:=\theta _4:=(1,1,1),\) \( \theta _2:=\theta _3:=0_3\), the spectrahedron U is described by

and the affine mappings \(a_j:{\mathbb {R}}^3\rightarrow {\mathbb {R}}^3, b_j:{\mathbb {R}}^3\rightarrow {\mathbb {R}}, j=1,2,3,4\) are defined by \(a^0_1:=(0,0,0), a^1_1:=(1,0,0), \) \(a^2_1:=(0,1,0), a^3_1:=(0,0,1), a^0_2:=(1,2,0), a^0_3:=(0,0,1), a^0_4:=(-3,-1,-1), a^i_j:=(0,0,0)\in {\mathbb {R}}^3, i=1,2,3,\) \( j=2,3,4, b^0_1:=8, b^0_j:=1, j=2,3, b^0_4:=0, b^i_j:=0\in {\mathbb {R}}, i=1,2,3, j=1,2,3,4.\)

By direct calculation, we see that the problem (ER) has optimal solutions (for instance, \(({{\overline{x}}},{{\overline{y}}}, {{\overline{W}}})\), where \({{\overline{x}}}:=(1,0,1), {{\overline{y}}}:=0_3\) and \( {{\overline{W}}}:=0_{3\times 3}\), is an optimal solution of problem (ER)) with the optimal value \(\min {(ER)}=-4.\) Let us now verify the optimal value of problem (ER). Since the Slater condition holds for the problem (ER), the corresponding cone \(C_P\) is closed as shown by Proposition 3.1. So, we assert by Theorem 3.1 that the exact SDP duality between (ER) and (ED) holds, where (ED) is the following SDP dual problem:

which amounts to the following one

Using the MATLAB toolbox CVX (see, e.g., [22, 23]), we solve the problem (ES) and the solver returns the optimal value as \(-4.\) It shows that the exact SDP duality holds for the problem (ER), that is, \(\max {(ES)}=-4=\min {(ER)}\).

4 Adjustable Two-Stage Lot-Sizing Problems

In this section, we describe how the optimal storage cost of an adjustable two-stage lot-sizing problem with uncertain demand can be found by solving a corresponding semidefinite program in our duality scheme.

A lot-sizing on a network problem is to determine the stock allocation \(x_i\), for \(i\in \{1,\ldots ,n\}\) stores prior to knowing the realization of the demand u at each location, where the demand u is uncertain and is assumed to reside in an uncertainty set \(U\subset {\mathbb {R}}^n.\) After we observe the realization of the demand u, we can transport stock \(y_{ij}, i=1,\ldots ,n,j=1,\ldots ,n\) from store i to store j at unit cost \(\tau _{ij}\) in order to meet all demand. The goal is to minimize the worst case storage costs with unit costs \(c_i, i=1,\ldots ,n\) and the cost arising from shifting the products from one store to another. This model can be rewritten as an adjustable two-stage linear program (see, e.g., [16, 24]).

We consider an adjustable uncertain two-stage lot-sizing on a network problem of the form:

where \(c:=(c_1,\ldots ,c_n), c_i\in {\mathbb {R}}, i=1,\ldots ,n \) are the storage unit costs and \(M_i>0, i=1,\ldots ,n\) are the capacities of the stores, \(\tau _{ij}\ge 0, \tau _{ii}= 0, i=1,\ldots ,n,j=1,\ldots ,n\) are transport unit costs, u is uncertain and the uncertainty set \(U:=\big \{u:=(u_1,\ldots ,u_n)\in {\mathbb {R}}^n \,:\, A_0+\sum _{r=1}^nu_rA_r\succeq 0\big \}\) is defined as in (2) with \(s:=n.\)

The adjustable robust two-stage lot-sizing on a network counterpart of (UL) reads as follows:

For a vector \(y\in {\mathbb {R}}^{n^2}\), we can label it as \(y:=(y_{11},\ldots ,y_{1n},y_{21},\ldots ,y_{2n},\ldots ,y_{n1},\ldots ,y_{nn}):=\sum _{i=1}^n\sum _{j=1}^ny_{ij}e_{ij},\) where \(e_{ij}\) is a vector in \({\mathbb {R}}^{n^2}\) whose ijth element is one and the others are zero for \(i,j\in \{1,\ldots ,n\}.\) In this vein, we may write \(y:=(y_{ij}) \in {\mathbb {R}}^{n^2}\) for short. We also use the notation \(e_k\) to denote the unit vector \(e_k\in {\mathbb {R}}^n, k\in \{1,\ldots ,n\}\), whose kth element is one and the others are zero. Let us denote \({{\widetilde{c}}}:=(c,1), {{\widetilde{x}}}:=(x,t)\in {\mathbb {R}}^n\times {\mathbb {R}}, \)

\( a^0_{ij}:=a^r_{ij}:=0_{n+1}, b^0_{ij}:=b^r_{ij}:=0, \theta _{ij}:=-e_{ij}, r=1,\ldots , s, i=1,\ldots ,n,j=1,\ldots ,n\) and set

Then, the problem (RL) can be written as

where \( a_{ij}(u):=a^{0}_{ij}+\sum \limits ^{n}_{r=1}u_ra_{ij}^r,\; b_{ij}(u):= b^0_{ij}+\sum \limits ^{n}_{r=1}u_rb_{ij}^r, a_{k}(u):=a^{0}_{k}+\sum \limits ^{n}_{r=1}u_ra_{k}^r,\; b_{k}(u):= b^0_{k}+\sum \limits ^{n}_{r=1}u_rb_{k}^r\) for \( u:=(u_1,\ldots , u_n)\in {\mathbb {R}}^n.\)

The problem (AL) lands in the form of (RP) with \(\rho :=1\), and therefore, we have a corresponding SDP dual problem for (RL) as

which amounts to the following one:

The following result provides a strong duality relation between the adjustable robust two-stage lot-sizing problem (RL) and its semidefinite programming dual problem (DL).

Corollary 4.1

(Strong SDP duality for (RL)) Assume that there exist \({{\widehat{x}}}:=({{\widehat{x}}}_1,\ldots ,{{\widehat{x}}}_n)\in {\mathbb {R}}^n\) and \( {{\widehat{y}}}:=({{\widehat{y}}}_{ij})\in {\mathbb {R}}^{n^2}\) such that \( {{\widehat{y}}}_{ij}\ge 0, i=1,\ldots ,n, j=1,\ldots ,n, 0\le {{\widehat{x}}}_i\le M_i, i=1,\ldots ,n, \) \( u_i-{{\widehat{x}}}_i< \sum _{j=1}^n{{\widehat{y}}}_{ji}-\sum _{j=1}^n{{\widehat{y}}}_{ij}, i=1,\ldots ,n,\) for all \(u:=(u_1,\ldots ,u_n)\in U\). Then, we have

Proof

As mentioned above, it holds that

and the problem (AL) is in the form of (RP) with \(\rho :=1\). Under our assumption, we see that the feasible set of problem (AL) is non-empty and the following cone \(C_0\) is closed (cf. the proof of Proposition 3.1), where

The closedness of \(C_0\) implies the closedness of \(C_L\), where

Invoking now Theorem 3.1 with \(\rho :=1\), we arrive at \( \inf {(AL)}= \max {(DL)}.\) This, together with (49), proves that (48) is valid. \(\square \)

Now, let us apply Corollary 4.1 to find the optimal storage cost of the problem (RL) by solving its corresponding SDP dual problem (DL) in the case, where the uncertainty set is \(U:=\{(u_1,\dots ,u_n)\in {\mathbb {R}}^n\,:\, u_1^2+\cdots +u^2_n\le 1\}.\) For the purpose of simplicity, we choose the capacities of the stores \(M_i:=100, i=1,\ldots ,n\), the unit storage costs \(c_i=\mathrm{C}>0, i=1,\ldots ,n\) and the transport unit costs \(\tau _{ij}:=i+j\) if \(i\ne j\) and \(\tau _{ij}:=0\) if \(i=j\) for \( i=1,\ldots ,n, j=1,\ldots ,n.\)

More explicitly, we solve the SDP dual problem (DL) for \(n=2,3,4\) by using the MATLAB toolbox CVX (see, e.g., [22, 23]). The size of the SDP often depends on the matrices \(A_r, r=1,\ldots ,n\) of the uncertainty sets. For instance, when n=4, our procedure involves 29 \((5\times 5)\) matrices. The numerical tests are conducted on a computer with a 3.60GHz Intel(R) Core(TM) i7-4790 and 16.0GB RAM, equipped with MATLAB R2017b. The following table of test results shows the worst-case total storage cost and the corresponding time in seconds for the problem (RL) (Table 1).

In passing, we note that in the case of a polytope uncertainty set U, the problem (RL) and its reformulation (DL) reduce to linear programs, respectively. We refer the interested reader to [24] for a dual formulation test and to [16] for a Fourier–Motzkin elimination test on lot-sizing problems.

5 Conclusion and Future Work

In this paper, we have shown how strong duality between an affinely adjustable two-stage robust linear program with a spectrahedron uncertainty set and its dual conic linear program can be obtained by establishing, for the first time, a new version of Farkas’ lemma for a parametric linear inequality system with affinely adjustable variables. We have also established that in the case of an ellipsoidal uncertainty set, our strong duality yields a second-order cone program as its exact dual with the same optimal value of its robust linear program. We have evaluated the efficacy of our main duality theorem by applying it to find the optimal storage cost of an adjustable two-stage lot-sizing problem under a ball uncertainty set via its dual semidefinite program.

Recently, exact conic programming relaxations and duals have been given for various classes of (static) single-stage robust SOS-convex polynomial programs under suitable uncertainty sets [25,26,27]. This allowed robust solutions of special classes of uncertain convex programs to be found by solving related conic programs. The approach, presented in this paper, may be extended to study affinely adjustable robust two-stage SOS-convex polynomial programs by way of examining suitable dual conic programs and it will be treated in a forthcoming study.

References

Farkas, J.: Theorie der einfachen Ungleichungen. J. fur die reine und angewandte Mathematik 124, 1–27 (1901)

Kuhn, H.W., Tucker, A.W.: Nonlinear programming. In: Proceedings of the Second Berkeley Symposium on Mathematical Statistics and probability, pp. 481–492. University of California Press, Berkeley, California (1951)

Dinh, N., Goberna, M.A., López, M.A., Mo, T.H.: From the Farkas lemma to the Hahn-Banach theorem. SIAM J. Optim. 24, 678–701 (2014)

Dinh, N., Goberna, M.A., López, M.A., Mo, T.H.: Robust optimization revisited via robust vector Farkas lemmas. Optimization 66, 939–963 (2017)

Goberna, M.A., López, M.A.: Linear Semi-Infinite Optimization. Wiley, Chichester (1998)

Dinh, N., Jeyakumar, V.: Farkas’ lemma: Three decades of generalizations for mathematical optimization. TOP 22, 1–22 (2014)

Hiriart-Urruty, J.-B., Lemarechal, C.: Convex Analysis and Minimization Algorithms. I Fundamentals. Springer, Berlin (1993)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

A. Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization, Princeton Ser. Appl. Math., Princeton University Press, Princeton (2009)

Yanikoglu, I., Gorissen, B.L., den Hertog, D.: A survey of adjustable robust optimization. Eur. J. Oper. Res. 277, 799–813 (2019)

Ben-Tal, A., Goryashko, A., Guslitzer, E., Nemirovski, A.: Adjustable robust solutions of uncertain linear programs. Math. Program. 99, 351–376 (2004)

Chen, A., Zhang, Y.: Uncertain linear programs: extended affinely adjustable robust counterparts. Oper. Res. 57(6), 1469–1482 (2009)

Delage, E., Iancu, D.A.: Robust multistage decision making. INFORMS Tutorials Oper. Res. Chap. 2, 20–46 (2015)

Kuhn, D., Wiesemann, W., Georghiou, A.: Primal and dual linear decision rules in stochastic and robust optimization. Math. Program. 130, 177–209 (2011)

Hadjiyiannis, M.J., Goulart, P.J., Kuhn, D.: A scenario approach for estimating the suboptimality of linear decision rules in two-stage robust optimization. 50th IEEE Conference on Decision and Control and European Control Conference (CDC-ECC) Orlando, FL, USA, December 12–15 (2011)

Zhen, J., den Hertog, D., Sim, M.: Adjustable robust optimization via Fourier-Motzkin elimination. Oper. Res. 66, 1086–1100 (2018)

Ramana, M., Goldman, A.J.: Some geometric results in semidefinite programming. J. Global Optim. 7, 33–50 (1995)

Mordukhovich, B.S., Nam, M.N.: An Easy Path to Convex Analysis and Applications, Synthesis Lectures on Mathematics and Statistics, 14. Morgan & Claypool Publishers, Williston (2014)

Chuong, T.D., Jeyakumar, V.: A generalized Farkas lemma with a numerical certificate and linear semi-infinite programs with SDP duals. Linear Algebra Appl. 515, 38–52 (2017)

Bruns, W., Gubeladze, J.: Polytopes, Rings, and K-Theory. Springer, Dordrecht (2009)

Blekherman, G., Parrilo, P.A., Thomas, R.: Semidefinite Optimization and Convex Algebraic Geometry. World Publications, Philadelphia (2012)

Grant, M., Boyd, S.: Graph implementations for nonsmooth convex programs. In: Blondel, V., Boyd, S., Kimura, H. (eds.) Recent Advances in Learning and Control, Lecture Notes in Control and Information Sciences, pp. 95–110. Springer, Berlin (2008)

Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx, March (2014)

Bertsimas, D., de Ruiter, F.J.C.T.: Duality in two-stage adaptive linear optimization: faster computation and stronger bounds. Inf. J. Comput. 28, 500–511 (2016)

Chuong, T.D., Jeyakumar, V.: Tight SDP relaxations for a class of robust SOS-convex polynomial programs without the slater condition. J. Convex Anal. 25(4), 1159–1182 (2018)

Jeyakumar, V., Li, G., Perez, J.V.: Robust SOS-convex polynomial optimization problems: exact SDP relaxations. Optim. Lett. 9, 1–18 (2015)

Jeyakumar, V., Perez, J.V.: Dual semidefinite programs without duality gaps for a class of convex minimax programs. J. Optim. Theory Appl. 162, 735–753 (2014)

Acknowledgements

The authors would like to thank the associate editor and reviewer for valuable comments. Research was supported by a research grant from Australian Research Council.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Marcin Studniarski.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Chuong, T.D., Jeyakumar, V. Generalized Farkas Lemma with Adjustable Variables and Two-Stage Robust Linear Programs. J Optim Theory Appl 187, 488–519 (2020). https://doi.org/10.1007/s10957-020-01753-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-020-01753-3

Keywords

- Generalized Farkas’ lemma

- Semi-infinite linear system

- Adjustable robust linear programming

- Strong duality

- Conic programming