Abstract

In this paper, we present two Douglas–Rachford inspired iteration schemes which can be applied directly to N-set convex feasibility problems in Hilbert space. Our main results are weak convergence of the methods to a point whose nearest point projections onto each of the N sets coincide. For affine subspaces, convergence is in norm. Initial results from numerical experiments, comparing our methods to the classical (product-space) Douglas–Rachford scheme, are promising.

1 Introduction

Given N closed and convex sets with nonempty intersection, the N-set convex feasibility problem asks for a point contained in the intersection of the N sets. Many optimization and reconstruction problems can be cast in this framework, either directly or as a suitable relaxation if a desired bound on the quality of the solution is known a priori.

A common approach to solving N-set convex feasibility problems is the use of projection algorithms. These iterative methods assume that the projections onto each of the individual sets are relatively simple to compute. Some well known projection methods include von Neumann’s alternating projection method [1–8], the Douglas–Rachford method [9–11] and Dykstra’s method [12–14]. Of course, there are many variants. For a review, we refer the reader to any of [15–20].

On certain classes of problems, various projection methods coincide with each other, and with other known techniques. For example, if the sets are closed affine subspaces, alternating projections = Dykstra’s method [13]. If the sets are hyperplanes, alternating projections = Dykstra’s method = Kaczmarz’s method [17]. If the sets are half-spaces, alternating projections = the method of Agmon, Motzkin and Schoenberg (MAMS), and Dykstra’s method = Hildreth’s method [19, Chap. 4]. Applied to the phase retrieval problem, alternating projections = error reduction, Dykstra’s method = Fienup’s BIO, and Douglas–Rachford = Fienup’s HIO [21].

Continued interest in the Douglas–Rachford iteration is in part due to its excellent, if still largely mysterious, performance on various problems involving one or more non-convex sets, for example, phase retrieval problems arising in the context of image reconstruction [21, 22]. The method has also been successfully applied to NP-complete combinatorial problems including Boolean satisfiability [23, 24] and Sudoku [23, 25]. In contrast, von Neumann’s alternating projection method applied to such problems often fails to converge satisfactorily. For progress on the behaviour of non-convex alternating projections, we refer the reader to [26–29].

Recently, Borwein and Sims [30] provided limited theoretical justification for non-convex Douglas–Rachford iterations, proving local convergence for a prototypical Euclidean case involving a sphere and an affine subspace. For the two-dimensional case of a circle and a line, Borwein and Aragón [31] were able to give an explicit region of convergence. Even more recently, a local version of firm nonexpansivity has been utilized by Hesse and Luke [28] to obtain local convergence of the Douglas–Rachford method in limited non-convex settings. Their results do not directly overlap with the work of Aragón, Borwein and Sims (for details see [28, Example 43]).

Most projection algorithms can be extended in various natural ways to the N-set convex feasibility problem without significant modification. An exception is the Douglas–Rachford method, for which only the theory of 2-set feasibility problems has so far been successfully investigated. For applications involving N>2 sets, an equivalent 2-set feasibility problem can, however, be posed in a product space. We shall revisit this later in our paper.

The aim of this paper is to introduce and study the cyclic Douglas–Rachford and averaged Douglas–Rachford iteration schemes. Both can be applied directly to the N-set convex feasibility problem without recourse to a product space formulation.

The paper is organized as follows: In Sect. 2, we give definitions and preliminaries. In Sect. 3, we introduce the cyclic and averaged Douglas–Rachford iteration schemes, proving in each case weak convergence to a point whose projections onto each of the constraint sets coincide. In Sect. 4, we consider the important special case when the constraint sets are affine. In Sect. 5, the new cyclic Douglas–Rachford scheme is compared, numerically, to the classical (product-space) Douglas–Rachford scheme on feasibility problems having ball or sphere constraints. Initial numerical results for the cyclic Douglas–Rachford scheme are quite positive.

2 Preliminaries

Throughout this paper, \(\mathcal{H}\) is a real Hilbert space with inner product 〈⋅,⋅〉 and induced norm ∥⋅∥. We use \(\stackrel {w.}{\rightharpoonup }\) to denote weak convergence.

We consider the N-set convex feasibility problem:

$$\begin{aligned} \text{Find }x\in\bigcap_{i=1}^{N}C_i\neq\emptyset\quad\text{where }C_i\subseteq\mathcal{H}\text{ are closed and convex}. \end{aligned}\tag{1}$$

Given a set \(S\subseteq\mathcal{H}\) and point \(x\in\mathcal{H}\), the best approximation to x from S is a point p∈S such that

$$\begin{aligned} \|p-x\|=d(x,S):=\inf_{s\in S}\|x-s\|. \end{aligned}$$

If for every \(x\in\mathcal{H}\) there exists such a p, then S is said to be proximal. Additionally, if p is always unique then S is said to be Chebyshev. In the latter case, the projection onto S is the operator \(P_{S}:\mathcal{H}\to S\) which maps x to its unique nearest point in S and we write \(P_S(x)=p\). The reflection about S is the operator \(R_{S}:\mathcal{H}\to\mathcal{H}\) defined by \(R_S:=2P_S-I\) where I denotes the identity operator which maps any \(x\in\mathcal{H}\) to itself.

Fact 2.1

Let \(C\subseteq\mathcal{H}\) be non-empty closed and convex. Then:

1. C is Chebyshev.

2. (Characterization of projections)

$$\begin{aligned} P_C(x)=p\quad \iff\quad p\in C\quad\textit{and}\quad\langle x-p,c-p\rangle\leq 0 \quad\textit{for all }c\in C. \end{aligned}$$

3. (Characterization of reflections)

$$\begin{aligned} &R_C(x)=r \quad\iff\quad \frac{1}{2}(r+x)\in C\quad\textit{and}\quad\langle x-r,c-r\rangle\leq\frac{1}{2}\|x-r\|^2\\ &\quad \textit{for all }c\in C. \end{aligned}$$

4. (Translation formula) For \(y\in\mathcal{H}\), \(P_{y+C}(x)=y+P_C(x-y)\).

5. (Dilation formula) For \(0\neq\lambda\in\mathbb{R}\), \(P_{\lambda C}(x)=\lambda P_C(x/\lambda)\).

6. If C is a subspace then \(P_C\) is linear.

7. If C is an affine subspace then \(P_C\) is affine.

Proof

See, for example, [32, Theorem 3.14, Proposition 3.17, Corollary 3.20], [19, Theorem 2.8, Exercise 5.2(i), Theorem 3.1, Exercise 5.10] or [18, Theorems 2.1.3 and 2.1.6]. Note that items 2 and 3 are equivalent, as can be seen by substituting \(r=2p-x\). □

Given \(A,B\subseteq\mathcal{H}\) we define the 2-set Douglas–Rachford operator \(T_{A,B}:\mathcal{H}\to\mathcal{H}\) by

$$\begin{aligned} T_{A,B}:=\frac{I+R_BR_A}{2}. \end{aligned}$$

Note that \(T_{A,B}\) and \(T_{B,A}\) are typically distinct, while for an affine set A we have \(T_{A,A}=I\).
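As an illustration only, the operator \(T_{A,B}=(I+R_BR_A)/2\) can be sketched in plain Python for two hypothetical sets in \(\mathbb{R}^2\): the closed unit ball A and the line \(B=\{x:x_1=0.5\}\). The helper names (`proj_ball`, `proj_line`, `reflect`, `douglas_rachford`), the sets and the starting point are assumptions made for this sketch and are not taken from the paper.

```python
import math

def proj_ball(x, center, radius):
    """Nearest point to x in the closed ball {z : ||z - center|| <= radius}."""
    d = [xi - ci for xi, ci in zip(x, center)]
    n = math.sqrt(sum(t * t for t in d))
    if n <= radius:
        return list(x)
    return [ci + radius * t / n for ci, t in zip(center, d)]

def proj_line(x, a, b):
    """Nearest point to x in the hyperplane {z : <a, z> = b}, with a != 0."""
    s = (sum(ai * xi for ai, xi in zip(a, x)) - b) / sum(ai * ai for ai in a)
    return [xi - s * ai for xi, ai in zip(x, a)]

def reflect(proj, x):
    """Reflection R = 2P - I built from a projection function P."""
    return [2 * p - xi for p, xi in zip(proj(x), x)]

def douglas_rachford(proj_a, proj_b, x):
    """One application of T_{A,B} x = (x + R_B R_A x) / 2."""
    r = reflect(proj_b, reflect(proj_a, x))
    return [(xi + ri) / 2 for xi, ri in zip(x, r)]

# Hypothetical example sets: A = unit ball, B = the line x_1 = 0.5
P_A = lambda x: proj_ball(x, [0.0, 0.0], 1.0)
P_B = lambda x: proj_line(x, [1.0, 0.0], 0.5)

x = [3.0, 2.0]
for _ in range(200):
    x = douglas_rachford(P_A, P_B, x)
shadow = P_A(x)  # the point whose membership in A ∩ B is of interest
```

For convex sets with nonempty intersection, the projection `shadow` of the limit onto A is the quantity of interest; other sets can be tried by supplying their projection functions.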

The basic Douglas–Rachford algorithm originates in [9] and convergence was proven as part of [10].

Theorem 2.1

(Douglas–Rachford [9], Lions–Mercier [10])

Let \(A,B\subseteq\mathcal{H}\) be closed and convex with nonempty intersection. For any \(x_{0}\in\mathcal{H}\), the sequence \(T_{A,B}^{n}x_{0}\) converges weakly to a point x such that \(P_Ax\in A\cap B\).

Theorem 2.1 gives an iterative algorithm for solving 2-set convex feasibility problems. For applications involving N>2 sets, an equivalent 2-set formulation is posed in the product space \(\mathcal{H}^{N}\). This is discussed in detail in Remark 3.4.

Let \(T:\mathcal{H}\to\mathcal{H}\). We recall that T is asymptotically regular if \(T^{n}x-T^{n+1}x\to 0\), in norm, for all \(x\in\mathcal{H}\). We denote the set of fixed points of T by \(\operatorname {Fix}T=\{x:Tx=x\}\). Let \(D\subseteq\mathcal{H}\) and \(T:D\to\mathcal{H}\). We say T is nonexpansive if

$$\begin{aligned} \|Tx-Ty\|\leq\|x-y\|\quad\text{for all }x,y\in D \end{aligned}$$

(i.e. 1-Lipschitz). We say T is firmly nonexpansive if

$$\begin{aligned} \|Tx-Ty\|^2+\|(I-T)x-(I-T)y\|^2\leq\|x-y\|^2\quad\text{for all }x,y\in D. \end{aligned}$$

It immediately follows that every firmly nonexpansive mapping is nonexpansive.

Fact 2.2

Let \(A,B\subseteq\mathcal{H}\) be closed and convex. Then P A is firmly nonexpansive, R A is nonexpansive and T A,B is firmly nonexpansive.

Proof

See, for example, [32, Proposition 4.8, Corollary 4.10, Remark 4.24], or [18, Theorem 2.2.4, Corollary 4.3.6]. □

The class of nonexpansive mappings is closed under convex combinations, compositions, etc. The class of firmly nonexpansive mappings is, however, not so well behaved. For example, even the composition of two projections onto subspaces need not be firmly nonexpansive (see [5, Example 4.2.5]).

A sufficient condition for firmly nonexpansive operators to be asymptotically regular is the following.

Lemma 2.1

Let \(T:\mathcal{H}\to\mathcal{H}\) be firmly nonexpansive with \(\operatorname {Fix}T\neq\emptyset\). Then T is asymptotically regular.

Proof

See, for example, [33, Corollary 1], [18, Lemma 4.3.5] or [34, Corollary 1.1, Proposition 2.1]. □

The composition of firmly nonexpansive operators is always nonexpansive. However, nonexpansive operators need not be asymptotically regular. For example, reflection with respect to a singleton, clearly is not; nor are most rotations. The following is a sufficient condition for asymptotic regularity.

Lemma 2.2

Let \(T_{i}:\mathcal{H}\to\mathcal{H}\) be firmly nonexpansive, for each i, and define \(T:=T_{r}\cdots T_{2}T_{1}\). If \(\operatorname {Fix}T\neq\emptyset\) then T is asymptotically regular.

Proof

See, for example, [32, Theorem 5.22]. □

Remark 2.1

Recently Bauschke, Martín-Márquez, Moffat and Wang [35, Theorem 4.6] showed that any composition of firmly nonexpansive, asymptotically regular operators is also asymptotically regular, even when \(\operatorname {Fix}T=\emptyset\).

The following lemma characterizes fixed points of certain compositions of firmly nonexpansive operators.

Lemma 2.3

Let \(T_{i}:\mathcal{H}\to\mathcal{H}\) be firmly nonexpansive, for each i, and define \(T:=T_{r}\cdots T_{2}T_{1}\). If \(\bigcap_{i=1}^{r}\operatorname {Fix}T_{i}\neq\emptyset\) then \(\operatorname {Fix}T=\bigcap_{i=1}^{r}\operatorname {Fix}T_{i}\).

Proof

See, for example, [32, Corollary 4.37] or [34, Proposition 2.1, Lemma 2.1]. □

There are many ways to prove Theorem 2.1. One is to use the following well-known theorem of Opial [36].

Theorem 2.2

(Opial)

Let \(T:\mathcal{H}\to\mathcal{H}\) be nonexpansive, asymptotically regular, and \(\operatorname {Fix}T\neq\emptyset\). Then for any \(x_{0}\in\mathcal{H}\), \(T^{n}x_{0}\) converges weakly to an element of \(\operatorname {Fix}T\).

Proof

See also, for example, [36] or [32, Theorem 5.13]. □

In addition, when T is linear, the limit can be identified and convergence is in norm.

Theorem 2.3

Let \(T:\mathcal{H}\to\mathcal{H}\) be linear, nonexpansive and asymptotically regular. Then for any \(x_{0}\in\mathcal{H}\), in norm,

$$\begin{aligned} \lim_{n\to\infty}T^{n}x_0=P_{\operatorname{Fix}T}x_0. \end{aligned}$$

Proof

See, for example, [32, Proposition 5.27]. □

Remark 2.2

A version of Theorem 2.3 was used by Halperin [2] to show that von Neumann’s alternating projection, applied to finitely many closed subspaces, converges in norm to the projection on the intersection of the subspaces (see Notes).

Summarizing we have the following:

Corollary 2.1

Let \(T_{i}:\mathcal{H}\to\mathcal{H}\) be firmly nonexpansive, for each i, with \(\bigcap_{i=1}^{r}\operatorname {Fix}T_{i}\neq\emptyset\) and define \(T:=T_{r}\cdots T_{2}T_{1}\). Then for any \(x_{0}\in\mathcal{H}\), \(T^{n}x_{0}\) converges weakly to an element of \(\operatorname {Fix}T=\bigcap_{i=1}^{r}\operatorname {Fix}T_{i}\). Moreover, if T is linear, then \(T^{n}x_{0}\) converges, in norm, to \(P_{\operatorname {Fix}T}x_{0}\).

Proof

Since T is the composition of nonexpansive operators, T is nonexpansive. By Lemma 2.3, \(\operatorname {Fix}T\neq\emptyset\). By Lemma 2.2, T is asymptotically regular. The result now follows by Theorems 2.2 and 2.3. □

We note that the verification of many results in this section can be significantly simplified for the special cases we require.

3 Cyclic Douglas–Rachford Iterations

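We are now ready to introduce our first new projection algorithm, the cyclic Douglas–Rachford iteration scheme. Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) and define \(T_{[C_{1}\,C_{2}\,\dots\,C_{N}]}:\mathcal{H}\to\mathcal{H}\) by

$$\begin{aligned} T_{[C_1\,C_2\,\dots\,C_N]}:=T_{C_N,C_1}T_{C_{N-1},C_N}\cdots T_{C_2,C_3}T_{C_1,C_2}. \end{aligned}$$

Given \(x_{0}\in\mathcal{H}\), the cyclic Douglas–Rachford method iterates by repeatedly setting

$$\begin{aligned} x_{n+1}=T_{[C_1\,C_2\,\dots\,C_N]}x_n. \end{aligned}$$

Where there is no ambiguity, indices are taken modulo N, and we abbreviate \(T_{C_i,C_j}\) by \(T_{i,j}\) and \(T_{[C_1\,C_2\,\dots\,C_N]}\) by \(T_{[1\,2\,\dots\,N]}\).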
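A minimal sketch of one cyclic sweep, assuming the composition \(T_{N,1}\cdots T_{2,3}T_{1,2}\) with \(T_{i,j}=(I+R_{C_j}R_{C_i})/2\) and indices taken modulo N. The three sets in \(\mathbb{R}^2\) (two balls and a line), the function names and the starting point are hypothetical choices made for illustration.

```python
import math

def proj_ball(center, radius):
    """Return the projection operator onto the closed ball B(center, radius)."""
    def P(x):
        d = [xi - ci for xi, ci in zip(x, center)]
        n = math.sqrt(sum(t * t for t in d))
        if n <= radius:
            return list(x)
        return [ci + radius * t / n for ci, t in zip(center, d)]
    return P

def proj_line(a, b):
    """Return the projection operator onto the hyperplane {z : <a, z> = b}."""
    aa = sum(t * t for t in a)
    def P(x):
        s = (sum(ai * xi for ai, xi in zip(a, x)) - b) / aa
        return [xi - s * ai for xi, ai in zip(x, a)]
    return P

def dr(PA, PB, x):
    """2-set operator T_{A,B} x = (x + R_B R_A x) / 2."""
    ra = [2 * p - xi for p, xi in zip(PA(x), x)]
    rb = [2 * p - ri for p, ri in zip(PB(ra), ra)]
    return [(xi + bi) / 2 for xi, bi in zip(x, rb)]

def cyclic_dr(projs, x):
    """One sweep: apply T_{1,2}, then T_{2,3}, ..., finally T_{N,1}."""
    N = len(projs)
    for i in range(N):
        x = dr(projs[i], projs[(i + 1) % N], x)
    return x

# Hypothetical constraint sets with a common point, e.g. (0.5, 0.5)
sets = [proj_ball([0.0, 0.0], 1.0),
        proj_line([1.0, 0.0], 0.5),
        proj_ball([0.5, 1.0], 1.0)]

x = [3.0, 2.0]
for _ in range(100):
    x = cyclic_dr(sets, x)
projections = [P(x) for P in sets]  # these should (nearly) coincide
```

Only the list `sets` needs to change to try other constraint sets; each entry is simply a function returning the nearest point.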

Remark 3.1

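In the two set case, the cyclic Douglas–Rachford operator becomes

$$\begin{aligned} T_{[C_1\,C_2]}=T_{C_2,C_1}T_{C_1,C_2}= \biggl(\frac{I+R_{C_1}R_{C_2}}{2} \biggr) \biggl(\frac{I+R_{C_2}R_{C_1}}{2} \biggr). \end{aligned}$$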

That is, it does not coincide with the classic Douglas–Rachford scheme.

Recall the following characterization of fixed points of the Douglas–Rachford operator.

Lemma 3.1

Let \(A,B\subseteq\mathcal{H}\) be closed and convex with nonempty intersection. Then

$$\begin{aligned} P_A\operatorname{Fix}T_{A,B}=A\cap B. \end{aligned}$$

Proof

See, for example, [21, Fact A1] or [11, Corollary 3.9]. □

We are now ready to present our main result regarding convergence of the cyclic Douglas–Rachford scheme.

Theorem 3.1

(Cyclic Douglas–Rachford)

Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed and convex sets with a nonempty intersection. For any \(x_{0}\in\mathcal{H}\), the sequence \(T^{n}_{[1\,2\,\dots\,N]}x_{0}\) converges weakly to a point x such that \(P_{C_{i}}x = P_{C_{j}}x\), for all indices i,j. Moreover, \(P_{C_{j}}x\in \bigcap_{i=1}^{N}C_{i}\), for each index j.

Proof

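By Fact 2.2, \(T_{i,i+1}\) is firmly nonexpansive, for each i. Further,

$$\begin{aligned} \bigcap_{i=1}^{N}\operatorname{Fix}T_{i,i+1}\supseteq\bigcap_{i=1}^{N}C_i\neq\emptyset. \end{aligned}$$

By Corollary 2.1, \(T^{n}_{[1\,2\,\dots\,N]}x_{0}\) converges weakly to a point \(x\in \operatorname {Fix}T_{[1\,2\,\dots\,N]}=\bigcap_{i=1}^{N}\operatorname {Fix}T_{i,i+1}\). By Lemma 3.1, \(P_{C_{i}}x\in C_{i+1}\), for each i. Now we compute

$$\begin{aligned} \frac{1}{2}\sum_{i=1}^{N}\|P_{C_i}x-P_{C_{i-1}}x\|^2 &=\sum_{i=1}^{N}\langle x,P_{C_{i-1}}x-P_{C_i}x\rangle+\sum_{i=1}^{N}\|P_{C_i}x\|^2-\sum_{i=1}^{N}\langle P_{C_i}x,P_{C_{i-1}}x\rangle\\ &=\sum_{i=1}^{N}\langle x-P_{C_i}x,P_{C_{i-1}}x-P_{C_i}x\rangle\leq 0. \end{aligned}$$

Here the first sum on the right-hand side of the first line telescopes to zero (indices are taken modulo N), and the final inequality follows from the characterization of projections (Fact 2.1) since \(P_{C_{i-1}}x\in C_i\).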

Thus, \(P_{C_{i}}x=P_{C_{i-1}}x\), for each i; and we are done. □

Again by invoking Opial’s theorem, a more general version of Theorem 3.1 can be abstracted.

Theorem 3.2

Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed and convex sets with nonempty intersection, let \(T_{j}:\mathcal{H}\to\mathcal{H}\), for each j, and define \(T:=T_{M}\cdots T_{2}T_{1}\). Suppose the following three properties hold.

1. \(T=T_{M}\cdots T_{2}T_{1}\) is nonexpansive and asymptotically regular,

2. \(\operatorname {Fix}T=\bigcap_{j=1}^{M}\operatorname {Fix}T_{j}\neq\emptyset\),

3. \(P_{C_{j}}\operatorname {Fix}T_{j}\subseteq C_{j+1}\), for each j.

Then, for any \(x_{0}\in\mathcal{H}\), the sequence \(T^{n}x_{0}\) converges weakly to a point x such that \(P_{C_{i}}x=P_{C_{j}}x\) for all i,j. Moreover, \(P_{C_{j}}x\in\bigcap_{i=1}^{N}C_{i}\), for each j.

Proof

By Theorem 2.2, \(T^{n}x_{0}\) converges weakly to a point \(x\in \operatorname {Fix}T\). The remainder of the proof is the same as that of Theorem 3.1. □

Remark 3.2

We give a sample of examples of operators which satisfy the three conditions of Theorem 3.2.

1. \(T_{[A_{1}\,A_{2}\,\dots\,A_{M}]}\) where \(A_j\in\{C_1,C_2,\dots,C_N\}\), and is such that each \(C_i\) appears in the sequence \(A_1,A_2,\dots,A_M\) at least once.

2. T is any composition of \(P_{C_{1}},P_{C_{2}},\dots,P_{C_{N}}\), such that each projection appears in said composition at least once. In particular, setting \(T=P_{C_{N}}\cdots P_{C_{2}}P_{C_{1}}\) we recover Bregman’s seminal result [3].

3. \(T_j=(I+\mathbf{P}_j)/2\) where \(\mathbf{P}_j\) is any composition of \(P_{C_{1}},P_{C_{2}},\dots,P_{C_{N}}\) such that, for each i, there exists a j such that \(\mathbf{P}_{j}=P_{C_{i}}Q_{j}\) for some composition of projections \(Q_j\). A special case is

$$\begin{aligned} T= \biggl(\frac{I+P_{C_1}P_{C_N}}{2} \biggr)\cdots \biggl(\frac{I+P_{C_3}P_{C_2}}{2} \biggr) \biggl(\frac{I+P_{C_2}P_{C_1}}{2} \biggr). \end{aligned}$$

4. The operators obtained from \(T_1,T_2,\dots,T_M\) satisfying the conditions of Theorem 3.2 by replacing \(T_j\) with the relaxation \(\alpha_jI+(1-\alpha_j)T_j\) where \(\alpha_j\in{]0,1/2]}\), for each j. Note the relaxations are firmly nonexpansive [32, Remark 4.27].

Of course, there are many other applicable variants. For instance, Krasnoselski–Mann iterations (see [32, Theorem 5.14] and [38]).

We now investigate the cyclic Douglas–Rachford iteration in the special-but-common case where the initial point lies in one of the target sets; most especially the first target set.

Corollary 3.1

Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed and convex sets with a nonempty intersection. If \(y\in C_i\) then \(T_{i,i+1}y=P_{C_{i+1}}y\). In particular, if \(x_0\in C_1\), the cyclic Douglas–Rachford trajectory coincides with that of von Neumann’s alternating projection method.

Proof

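For any \(y\in\mathcal{H}\), \(T_{i,i+1}y=P_{C_{i+1}}y \iff R_{C_{i+1}}y=R_{C_{i+1}}R_{C_{i}}y\). If \(y\in C_i\) then \(R_{C_{i}}y=y\). In particular, if \(x_0\in C_1\) then

$$\begin{aligned} T_{[1\,2\,\dots\,N]}x_0=T_{N,1}\cdots T_{2,3}T_{1,2}x_0=P_{C_1}P_{C_N}\cdots P_{C_3}P_{C_2}x_0\in C_1, \end{aligned}$$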

and the result follows. □
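The coincidence with alternating projections when the starting point lies in \(C_1\) can be checked numerically. The following is a sketch under assumed data: three hypothetical sets in \(\mathbb{R}^2\) and a starting point chosen inside the first set; `max_gap` records the largest coordinate difference between the two trajectories over five sweeps.

```python
import math

def proj_ball(center, radius):
    """Return the projection operator onto the closed ball B(center, radius)."""
    def P(x):
        d = [xi - ci for xi, ci in zip(x, center)]
        n = math.sqrt(sum(t * t for t in d))
        if n <= radius:
            return list(x)
        return [ci + radius * t / n for ci, t in zip(center, d)]
    return P

def proj_line(a, b):
    """Return the projection operator onto the hyperplane {z : <a, z> = b}."""
    aa = sum(t * t for t in a)
    def P(x):
        s = (sum(ai * xi for ai, xi in zip(a, x)) - b) / aa
        return [xi - s * ai for xi, ai in zip(x, a)]
    return P

def dr(PA, PB, x):
    """T_{A,B} x = (x + R_B R_A x) / 2."""
    ra = [2 * p - xi for p, xi in zip(PA(x), x)]
    rb = [2 * p - ri for p, ri in zip(PB(ra), ra)]
    return [(xi + bi) / 2 for xi, bi in zip(x, rb)]

# Hypothetical sets C_1, C_2, C_3 (two balls and a line)
sets = [proj_ball([0.0, 0.0], 1.0),
        proj_line([1.0, 0.0], 0.5),
        proj_ball([0.5, 1.0], 1.0)]

x_cdr = [-0.6, 0.3]   # starting point inside C_1 (the unit ball)
x_map = [-0.6, 0.3]   # same start for alternating projections
max_gap = 0.0
for _ in range(5):
    for i in range(3):                          # cyclic Douglas-Rachford sweep
        x_cdr = dr(sets[i], sets[(i + 1) % 3], x_cdr)
    x_map = sets[0](sets[2](sets[1](x_map)))    # P_{C_1} P_{C_3} P_{C_2}
    max_gap = max(max_gap, max(abs(u - v) for u, v in zip(x_cdr, x_map)))
```

Up to floating-point rounding, `max_gap` should be zero; moving the starting point outside the first set generally breaks the agreement.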

Remark 3.3

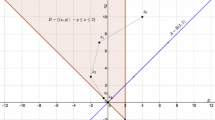

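If \(x_{0}\not\in C_{1}\), then the cyclic Douglas–Rachford trajectory need not coincide with von Neumann’s alternating projection method. We give an example involving two closed subspaces with codimension 1 (see Fig. 1). Define

$$\begin{aligned} C_i:=\{x\in\mathcal{H}:\langle a_i,x\rangle=0\}\quad\text{for }i=1,2, \end{aligned}$$

where \(a_{1},a_{2}\in\mathcal{H}\) such that \(\langle a_1,a_2\rangle\neq 0\). By scaling if necessary, we may assume that \(\|a_1\|=\|a_2\|=1\). Then one has,

$$\begin{aligned} P_{C_i}x=x-\langle a_i,x\rangle a_i,\qquad R_{C_i}x=x-2\langle a_i,x\rangle a_i, \end{aligned}$$

and

$$\begin{aligned} T_{1,2}x=x+2\langle a_1,a_2\rangle\langle a_1,x\rangle a_2-\bigl(\langle a_1,x\rangle a_1+\langle a_2,x\rangle a_2\bigr). \end{aligned}$$

Similarly,

$$\begin{aligned} T_{2,1}x=x+2\langle a_1,a_2\rangle\langle a_2,x\rangle a_1-\bigl(\langle a_1,x\rangle a_1+\langle a_2,x\rangle a_2\bigr). \end{aligned}$$

By Remark 4.1,

$$\begin{aligned} T_{[1\,2]}x=\frac{T_{1,2}x+T_{2,1}x}{2}=x+\langle a_1,a_2\rangle\bigl(\langle a_1,x\rangle a_2+\langle a_2,x\rangle a_1\bigr)-\bigl(\langle a_1,x\rangle a_1+\langle a_2,x\rangle a_2\bigr). \end{aligned}$$

Hence, \(\langle a_1,T_{[1\,2]}x\rangle=\langle a_1,a_2\rangle^{2}\langle a_1,x\rangle\). Similarly, \(\langle a_2,T_{[1\,2]}x\rangle=\langle a_1,a_2\rangle^{2}\langle a_2,x\rangle\).

Thus, if \(\langle a_i,x\rangle\neq 0\), for each i, then \(\langle a_i,T_{[1\,2]}x\rangle\neq 0\), for each i. In particular, if \({x_{0}\not\in C_{1}\cup C_{2}}\), then none of the cyclic Douglas–Rachford iterates lie in \(C_1\) or \(C_2\).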

A second example, involving a ball and an affine subspace is illustrated in Fig. 2.
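The identity \(\langle a_i,T_{[1\,2]}x\rangle=\langle a_1,a_2\rangle^{2}\langle a_i,x\rangle\) for the two-hyperplane example of Remark 3.3 can be verified numerically. The sketch below assumes \(T_{[1\,2]}=T_{2,1}T_{1,2}\) with \(T_{i,j}=(I+R_jR_i)/2\); the unit vectors `a1`, `a2` and the point `x` in \(\mathbb{R}^3\) are arbitrary illustrative choices.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def reflect(a, x):
    """Reflection about the hyperplane {z : <a, z> = 0} for a unit vector a."""
    s = dot(a, x)
    return [xi - 2 * s * ai for xi, ai in zip(x, a)]

def dr(a_first, a_second, x):
    """T_{i,j} x = (x + R_j R_i x) / 2 for hyperplanes with unit normals a_i, a_j."""
    r = reflect(a_second, reflect(a_first, x))
    return [(xi + ri) / 2 for xi, ri in zip(x, r)]

a1 = [1.0, 0.0, 0.0]
a2 = [0.6, 0.8, 0.0]          # unit vector with <a1, a2> = 0.6
x = [2.0, -1.0, 3.0]          # <a1, x> = 2 and <a2, x> = 0.4, so x is in neither set

Tx = dr(a2, a1, dr(a1, a2, x))            # T_{[1 2]} x = T_{2,1} T_{1,2} x
lhs1, rhs1 = dot(a1, Tx), dot(a1, a2) ** 2 * dot(a1, x)   # both approximately 0.72
lhs2, rhs2 = dot(a2, Tx), dot(a1, a2) ** 2 * dot(a2, x)   # both approximately 0.144
```

Since both inner products stay nonzero after the sweep, the iterate remains outside both hyperplanes, in line with the remark.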

Remark 3.4

(A product version)

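We now consider the classical product formulation of (1). Define two subsets of \(\mathcal{H}^{N}\):

$$\begin{aligned} C:=\prod_{i=1}^{N}C_i,\qquad D:=\bigl\{(x,x,\dots,x)\in\mathcal{H}^{N}:x\in\mathcal{H}\bigr\}, \end{aligned}\tag{3}$$

which are both closed and convex (in fact, D is a subspace). Consider the 2-set convex feasibility problem

$$\begin{aligned} \text{Find }\mathbf{x}\in C\cap D\subseteq\mathcal{H}^{N}. \end{aligned}\tag{4}$$

Then (1) is equivalent to (4) in the sense that

$$\begin{aligned} x\in\bigcap_{i=1}^{N}C_i\quad\iff\quad(x,x,\dots,x)\in C\cap D. \end{aligned}$$

Further the projections, and hence reflections, are easily computed since, for \(\mathbf{x}=(x_1,x_2,\dots,x_N)\),

$$\begin{aligned} P_C\mathbf{x}=\bigl(P_{C_1}x_1,P_{C_2}x_2,\dots,P_{C_N}x_N\bigr),\qquad P_D\mathbf{x}= \Biggl(\frac{1}{N}\sum_{i=1}^{N}x_i,\dots,\frac{1}{N}\sum_{i=1}^{N}x_i \Biggr). \end{aligned}$$

Let \(\mathbf{x}_0\in D\) and define \(\mathbf{x}_n:=T_{[D\,C]}\mathbf{x}_{n-1}\). Writing \(\mathbf{x}_n=(x_n,x_n,\dots,x_n)\), Corollary 3.1 yields

$$\begin{aligned} \mathbf{x}_{n+1}=P_DP_C\mathbf{x}_n= \Biggl(\frac{1}{N}\sum_{i=1}^{N}P_{C_i}x_n,\dots,\frac{1}{N}\sum_{i=1}^{N}P_{C_i}x_n \Biggr). \end{aligned}$$

That is, if, as is reasonable, we start in D, the cyclic Douglas–Rachford method coincides with averaged projections.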

In general, the iteration is based on

If \(\mathbf{x}=(x_1,x_2,\dots,x_N)\), then the ith coordinate of (5) can be expressed as

where

which is a considerably more complex formula.
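To make the product-space construction concrete, the sketch below takes \(\mathcal{H}=\mathbb{R}\) with three hypothetical intervals as constraint sets, so that a point of \(\mathcal{H}^3\) is a list of three floats. It assumes the usual product-space projections (coordinatewise projection for C, coordinate averaging for the diagonal D) and checks that one sweep of \(T_{[D\,C]}=T_{C,D}T_{D,C}\) started on the diagonal agrees with averaged projections. All names are illustrative.

```python
def proj_C(intervals, X):
    """P_C: project coordinate i of X onto the interval C_i = [lo_i, hi_i]."""
    return [min(max(x, lo), hi) for x, (lo, hi) in zip(X, intervals)]

def proj_D(X):
    """P_D: replace every coordinate by the average of the coordinates."""
    m = sum(X) / len(X)
    return [m] * len(X)

def dr(PA, PB, X):
    """T_{A,B} X = (X + R_B R_A X) / 2 in the product space."""
    ra = [2 * p - x for p, x in zip(PA(X), X)]
    rb = [2 * p - r for p, r in zip(PB(ra), ra)]
    return [(x + b) / 2 for x, b in zip(X, rb)]

intervals = [(0.0, 2.0), (1.0, 3.0), (1.5, 4.0)]   # hypothetical C_1, C_2, C_3 in R
PC = lambda X: proj_C(intervals, X)

X0 = [5.0, 5.0, 5.0]                      # a point of the diagonal D
X1 = dr(PC, proj_D, dr(proj_D, PC, X0))   # T_{[D C]} X0 = T_{C,D} T_{D,C} X0
averaged = proj_D(PC(X0))                 # averaged projections: P_D P_C X0
```

Here the coordinatewise projections of 5 are 2, 3 and 4, whose average is 3, so both `X1` and `averaged` equal the diagonal point with coordinate 3.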

Let \(A,B\subseteq\mathcal{H}\). Recall that points (x,y)∈A×B form a best approximation pair relative to (A,B) if

$$\begin{aligned} \|x-y\|=d(A,B):=\inf\bigl\{\|a-b\|:a\in A,\ b\in B\bigr\}. \end{aligned}$$

Remark 3.5

(a) Consider \(C_{1}=B_{\mathcal{H}}:=\{x\in\mathcal{H}:\|x\|\leq 1\}\) and \(C_2=\{y\}\), for some \(y\in\mathcal{H}\). Then

$$\begin{aligned} T_{[1\,2]}x=x-P_{C_1}x+P_{C_1}\bigl(y-x+P_{C_1}x\bigr), \end{aligned}$$

where \(P_{C_{1}}z=z\) if \(z\in C_1\), and \(z/\|z\|\) otherwise. Now,

$$\begin{aligned} x\in\operatorname{Fix}T_{[1\,2]}\quad\iff\quad P_{C_1}x=P_{C_1}\bigl(y-x+P_{C_1}x\bigr). \end{aligned}\tag{6}$$

Thus,

- If \(x\in C_1\) then \(x=P_{C_{1}}y\).

- If \(y-x+P_{C_{1}}x\in C_{1}\) then x=y.

- Else, \(\|x\|>1\) and \(\|y-x+P_{C_1}x\|>1\). By (6),

$$\begin{aligned} x=\lambda y\quad\text{where } \lambda = \biggl(\frac{\|x\|}{\|y-x+P_{C_1}x\|+\|x\|-1} \biggr) \in]0,1[. \end{aligned}$$

Moreover, since \(1<\|x\|=\lambda\|y\|\), we obtain \(\lambda\in{]1/\|y\|,1[}\).

In each case, \(P_{C_{1}}x=P_{C_{1}}y\) and \(P_{C_{2}}x=y\). Therefore, \((P_{C_{1}}x,P_{C_{2}}x)\) is a best approximation pair relative to \((C_1,C_2)\) (see Fig. 3). In particular, if \(C_1\cap C_2\neq\emptyset\), then \(P_{C_{1}}y=y\) and, by Theorem 3.1, the cyclic Douglas–Rachford scheme weakly converges to y, the unique element of \(C_1\cap C_2\).

When C 1∩C 2=∅, Theorem 3.1 cannot be invoked to guarantee convergence. However, the above analysis provides the information that

(b) Suppose instead, \(C_{1}=S_{\mathcal{H}}:=\{x\in\mathcal{H}:\|x\|=1\}\). A similar analysis can be performed. If y≠0 and \(x\in \operatorname {Fix}T_{[1\,2]}\) are such that \(x,y-x+P_{C_{1}}x\neq 0\), then

- If \(x\in C_1\) then \(x=P_{C_{1}}y\).

- If \(y-x+P_{C_{1}}x\in C_{1}\) then x=y.

- Else, x=λy where

$$\begin{aligned} \lambda = \biggl(\frac{\|x\|}{\|y-x+P_{C_1}x\|+\|x\|-1} \biggr) \geq \biggl(\frac{\|x\|}{\|y-x\|+\|P_{C_1}x\|+\|x\|-1} \biggr)>0. \end{aligned}$$

Again, \((P_{C_{1}}x,P_{C_{2}}x)\) is a best approximation pair relative to (C 1,C 2).

Experiments with interactive Cinderella dynamic geometry applets suggest similar behaviour of the cyclic Douglas–Rachford method applied to many other problems for which \(C_1\cap C_2=\emptyset\). For example, see Fig. 4. This suggests the following conjecture.

An interactive Cinderella applet showing the cyclic Douglas–Rachford method applied to the case of a non-intersecting ball and a line. The method appears convergent to a point whose projections onto the constraint sets form a best approximation pair. Each green dot represents a cyclic Douglas–Rachford iteration (Color figure online)

Conjecture 3.1

Let \(C_{1},C_{2}\subseteq\mathcal{H}\) be closed and convex with \(C_1\cap C_2=\emptyset\). Suppose that a best approximation pair relative to \((C_1,C_2)\) exists. Then the two-set cyclic Douglas–Rachford scheme converges weakly to a point x such that \((P_{C_{1}}x,P_{C_{2}}x)\) is a best approximation pair relative to the sets \((C_1,C_2)\).

Remark 3.6

If there exists an integer n such that either \(T_{[1\,2]}^{n}x_{0}\in C_{1}\) or \(T_{1,2}T_{[1\,2]}^{n}x_{0}\in C_{2}\), by Corollary 3.1, the cyclic Douglas–Rachford scheme coincides with von Neumann’s alternating projection method. In this case, Conjecture 3.1 holds by [39, Theorem 2]. In this connection, we also refer the reader to [4, 14].

It is not hard to think of non-convex settings in which Conjecture 3.1 is false. For example, in \(\mathbb{R}\), let \(C_1=[0,1]\) and \(C_{2}=\{0,\frac{11}{10}\}\). If \(x_0=1\) then \(T_{[1\,2]}x_0=x_0\), but

$$\begin{aligned} \bigl(P_{C_1}x_0,P_{C_2}x_0\bigr)= \biggl(1,\frac{11}{10} \biggr), \end{aligned}$$

which is not a best approximation pair relative to \((C_1,C_2)\).
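This one-dimensional example is easy to reproduce. The sketch below assumes \(T_{[1\,2]}=T_{2,1}T_{1,2}\) with \(T_{i,j}=(I+R_{C_j}R_{C_i})/2\), and takes the projection onto the two-point set \(C_2\) to be the nearer of its two points; the function names are illustrative.

```python
def P1(x):
    """Projection onto C_1 = [0, 1]."""
    return min(max(x, 0.0), 1.0)

def P2(x):
    """A nearest point of C_2 = {0, 11/10} (the nearer of the two points)."""
    return min((0.0, 1.1), key=lambda c: abs(x - c))

def dr(PA, PB, x):
    """T_{A,B} x = (x + R_B R_A x) / 2 on the real line."""
    ra = 2 * PA(x) - x
    rb = 2 * PB(ra) - ra
    return (x + rb) / 2

x0 = 1.0
Tx0 = dr(P2, P1, dr(P1, P2, x0))   # T_{[1 2]} x0; x0 should be a fixed point
pair = (P1(x0), P2(x0))            # the pair of projections at the fixed point
gap = abs(pair[0] - pair[1])       # distance between the two projections
```

The two sets share the point 0, so the distance between them is zero, whereas the pair obtained at the fixed point is separated by about 0.1.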

We now present an averaged version of our cyclic Douglas–Rachford iteration.

Theorem 3.3

(Averaged Douglas–Rachford)

Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed and convex sets with a nonempty intersection. For any \(x_{0}\in\mathcal{H}\), the sequence defined by

$$\begin{aligned} x_{n+1}:=\frac{1}{N} \Biggl(\sum_{i=1}^{N}T_{i,i+1} \Biggr)x_n \end{aligned}$$

converges weakly to a point x such that \(P_{C_{i}}x = P_{C_{j}}x\) for all indices i,j. Moreover, \({P_{C_{j}}x\in \bigcap_{i=1}^{N}C_{i}}\), for each index j.

Proof

Consider \(C,D\subseteq\mathcal{H}^{N}\) as in (3) and define \(T:=P_{D}(\prod_{i=1}^{N}T_{i,i+1})\). By Fact 2.2, \(P_D\) is firmly nonexpansive. By Fact 2.2, \(T_{i,i+1}\) is firmly nonexpansive in \(\mathcal{H}\), for each i, hence \(\prod_{i=1}^{N}T_{i,i+1}\) is firmly nonexpansive in \(\mathcal{H}^{N}\). Further, \(\operatorname {Fix}(\prod_{i=1}^{N}T_{i,i+1})\cap \operatorname {Fix}P_{D}\supseteq C\cap D\neq\emptyset\). By Corollary 2.1, for any \(\mathbf{x}_0\in\mathcal{H}^{N}\), the sequence \(\mathbf{x}_n:=T^{n}\mathbf{x}_0\) converges weakly to a point \(\mathbf{x}\in \operatorname {Fix}T\).

Let \(\mathbf{x}_{0}=(x_{0},x_{0},\dots,x_{0})\in\mathcal{H}^{N}\). Since \(T\mathbf{x}_n\in D\), for each n, we write \(\mathbf{x}_n=(x_n,x_n,\dots,x_n)\) for some \(x_{n}\in\mathcal{H}\). Then

$$\begin{aligned} [\mathbf{x}_{n+1}]_i=[T\mathbf{x}_n]_i=\frac{1}{N}\sum_{j=1}^{N}T_{j,j+1}x_n, \end{aligned}$$

independent of i. Similarly, since \(\mathbf{x}\in \operatorname {Fix}P_{D}=D\), we write \(\mathbf{x}=(x,x,\dots,x)\in\mathcal{H}^{N}\) for some \(x\in\mathcal{H}\). Since \(\mathbf{x}\in \operatorname {Fix}(\prod_{i=1}^{N}T_{i,i+1})\), \(x\in \operatorname {Fix}T_{i,i+1}\), for each i, and hence \(P_{C_{i}}x\in C_{i+1}\). The same computation as in Theorem 3.1 now completes the proof. □

Since each 2-set Douglas–Rachford iteration can be computed independently, the averaged iteration is easily parallelizable.
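A minimal sketch of the averaged iteration, assuming the update \(x_{n+1}=\frac{1}{N}\sum_{i=1}^{N}T_{i,i+1}x_n\) with indices taken modulo N. For a test case whose limit is known in advance, the constraint sets are three hypothetical lines in \(\mathbb{R}^2\) meeting only at the point (1, 2); the names and data are assumptions for illustration.

```python
def proj_line(a, b):
    """Return the projection operator onto the line {z in R^2 : <a, z> = b}."""
    aa = sum(t * t for t in a)
    def P(x):
        s = (sum(ai * xi for ai, xi in zip(a, x)) - b) / aa
        return [xi - s * ai for xi, ai in zip(x, a)]
    return P

def dr(PA, PB, x):
    """T_{A,B} x = (x + R_B R_A x) / 2."""
    ra = [2 * p - xi for p, xi in zip(PA(x), x)]
    rb = [2 * p - ri for p, ri in zip(PB(ra), ra)]
    return [(xi + bi) / 2 for xi, bi in zip(x, rb)]

def averaged_dr(projs, x):
    """x -> (1/N) * sum_i T_{i,i+1} x; the N terms are independent of each other."""
    N = len(projs)
    images = [dr(projs[i], projs[(i + 1) % N], x) for i in range(N)]
    return [sum(col) / N for col in zip(*images)]

# Hypothetical data: the lines x_1 = 1, x_2 = 2 and x_1 + x_2 = 3 meet only at (1, 2)
lines = [proj_line([1.0, 0.0], 1.0),
         proj_line([0.0, 1.0], 2.0),
         proj_line([1.0, 1.0], 3.0)]

x = [-4.0, 7.0]
for _ in range(100):
    x = averaged_dr(lines, x)
```

The list comprehension in `averaged_dr` is the part that could be distributed across workers, since each 2-set operator is evaluated at the same point.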

4 Affine Constraints

In this section, we observe that the conclusions of Theorems 3.1 and 3.3 can be strengthened when the constraints are affine.

Lemma 4.1

(Translation formula)

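Let \(C_{1}',C_{2}',\dots,C_{N}'\subseteq\mathcal{H}\) be closed and convex sets with a nonempty intersection. For fixed \(y\in\mathcal{H}\), define \(C_{i}:=y+C_{i}'\), for each i. Then

$$\begin{aligned} T_{C_i,C_{i+1}}x=y+T_{C_i',C_{i+1}'}(x-y), \end{aligned}$$

and

$$\begin{aligned} T_{[C_1\,C_2\,\dots\,C_N]}x=y+T_{[C_1'\,C_2'\,\dots\,C_N']}(x-y). \end{aligned}$$

Proof

By the translation formula for projections (Fact 2.1), we have

$$\begin{aligned} R_{C_i}x=2P_{C_i}x-x=y+R_{C_i'}(x-y). \end{aligned}$$

The first result follows since

$$\begin{aligned} T_{C_i,C_{i+1}}x &=\frac{x+R_{C_{i+1}}R_{C_i}x}{2} =\frac{x+R_{C_{i+1}}\bigl(y+R_{C_i'}(x-y)\bigr)}{2}\\ &=\frac{x+y+R_{C_{i+1}'}R_{C_i'}(x-y)}{2} =y+T_{C_i',C_{i+1}'}(x-y). \end{aligned}$$

Iterating gives

$$\begin{aligned} T_{C_{i+1},C_{i+2}}T_{C_i,C_{i+1}}x =T_{C_{i+1},C_{i+2}}\bigl(y+T_{C_i',C_{i+1}'}(x-y)\bigr) =y+T_{C_{i+1}',C_{i+2}'}T_{C_i',C_{i+1}'}(x-y), \end{aligned}$$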

from which the second result follows. □

Theorem 4.1

(Norm convergence)

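Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed affine subspaces with a nonempty intersection. Then, for any \(x_{0}\in\mathcal{H}\), the sequence

$$\begin{aligned} T^{n}_{[C_1\,C_2\,\dots\,C_N]}x_0,\quad n=0,1,2,\dots, \end{aligned}$$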

is norm convergent.

Proof

Let \(c\in\bigcap_{i=1}^{N} C_{i}\). Since the \(C_i\) are affine we write \(C_{i}=c+C_{i}'\), where \(C_{i}'\) is a closed subspace. Since \(T_{C_{i}',C_{i+1}'}\) is linear, for each i, so is \(T_{[C_{1}'\,C_{2}'\,\dots\,C_{N}']}\). By Fact 2.2, for each i, \(T_{C_{i}',C_{i+1}'}\) is firmly nonexpansive. Further, \(\bigcap_{i=1}^{N}\operatorname {Fix}T_{C_{i}',C_{i+1}'}\supseteq\bigcap_{i=1}^{N}C_{i}'\neq\emptyset\). By Lemma 4.1 and Corollary 2.1,

$$\begin{aligned} T^{n}_{[C_1\,C_2\,\dots\,C_N]}x_0=c+T^{n}_{[C_1'\,C_2'\,\dots\,C_N']}(x_0-c)\to c+P_{\operatorname{Fix}T_{[C_1'\,C_2'\,\dots\,C_N']}}(x_0-c)\quad\text{in norm}. \end{aligned}$$

This completes the proof. □

Remark 4.1

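For closed affine A, \(R_A\) is affine (a consequence of Fact 2.1) and \(R_{A}^{2}=I\). Thus, for the case of two affine subspaces,

$$\begin{aligned} T_{[A\,B]}=T_{B,A}T_{A,B}=\frac{2I+R_BR_A+R_AR_B}{4}=\frac{T_{A,B}+T_{B,A}}{2}. \end{aligned}$$

That is, the cyclic Douglas–Rachford and averaged Douglas–Rachford methods coincide. For N>2 closed affine subspaces, the two methods do not always coincide. For instance, when N=3, writing \(R_i\) for \(R_{C_i}\),

$$\begin{aligned} T_{2,3}T_{1,2}=\frac{I+R_2R_1+R_3R_2+R_3R_1}{4}, \end{aligned}$$

hence,

$$\begin{aligned} T_{[1\,2\,3]}=T_{3,1}T_{2,3}T_{1,2}=\frac{2I+R_2R_1+R_3R_2+R_3R_1+R_1R_3+R_1R_2+R_1R_3R_2R_1}{8}. \end{aligned}$$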

This includes a term which is the composition of four reflection operators whereas the averaged iteration can be expressed as a linear combination of terms which are the composition of at most two reflection operators.

Theorem 4.2

(Averaged norm convergence)

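Let \(C_{1},C_{2},\dots,C_{N}\subseteq\mathcal{H}\) be closed affine subspaces with a nonempty intersection. Then, for any \(x_0\in\mathcal{H}\), in norm

$$\begin{aligned} \lim_{n\to\infty} \Biggl(\frac{1}{N}\sum_{i=1}^{N}T_{i,i+1} \Biggr)^{n}x_0=P_{\operatorname{Fix}T_{[C_1\,C_2\,\dots\,C_N]}}x_0. \end{aligned}$$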

Proof

Let \(C,D\subseteq\mathcal{H}^{N}\) be as in (3). Let \(c\in\bigcap_{i=1}^{N}C_{i}\) and define \(\mathbf{c}=(c,c,\dots,c)\in\mathcal{H}^{N}\). Since the \(C_i\) are affine we may write \(C_{i}=c+C_{i}'\), where \(C_{i}'\) is a closed subspace, and hence \(C=\mathbf{c}+C'\) where \(C'=\prod_{i=1}^{N}C_{i}'\).

For convenience, let Q denote \(\prod_{i=1}^{N}T_{C_{i}',C_{i+1}'}\) and let \(T=P_DQ\). Since C′ and D are subspaces, T is linear. By Fact 2.2, \(T_{C_{i}',C_{i+1}'}\) is firmly nonexpansive, hence so is Q. Further, \(\operatorname {Fix}T\supseteq \operatorname {Fix}Q\cap \operatorname {Fix}P_{D} \supseteq \operatorname {Fix}Q\cap D\neq\emptyset\) since \(\bigcap_{i=1}^{N}C_{i}'\neq\emptyset\).

As a consequence of Lemma 4.1, we have the translation formula

As in the proof of Theorem 4.1, the translation formula, together with Corollary 2.1, shows \(T^{n}\mathbf{x}_0\to P_{\operatorname{Fix}T}\mathbf{x}_0=:\mathbf{z}\) where \(\mathbf{x}_{0}=(x_{0},x_{0},\dots,x_{0})\in\mathcal{H}^{N}\). As \(\mathbf{x}_n\in D\), we may write \(\mathbf{x}_n=(x_n,x_n,\dots,x_n)\) for some \(x_{n} \in {\mathcal{H}}\). Similarly, we write \(\mathbf{z}=(z,z,\dots,z)\). Then

Hence, \(z=P_{\bigcap_{i=1}^{N}\operatorname {Fix}T_{i,i+1}}x_{0}\). By Lemma 2.3, \(\operatorname {Fix}T_{[C_{1}\,C_{2}\,\dots\,C_{N}]}=\bigcap_{i=1}^{N}\operatorname {Fix}T_{i,i+1}\), and the proof is complete. □

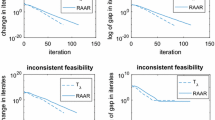

5 Numerical Experiments

In this section, we present the results of computational experiments comparing the cyclic Douglas–Rachford and (product-space) Douglas–Rachford schemes—as serial algorithms. These are not intended to be a complete computational study, but simply a first demonstration of viability of the method. From that vantage-point, our initial results are promising.

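Two classes of feasibility problems were considered, the first convex and the second non-convex:

$$\begin{aligned} &\text{(P1)}\quad\text{Find }x\in\bigcap_{i=1}^{N}C_i\subseteq\mathbb{R}^{n}\text{ where }C_i=x_i+r_iB_{\mathcal{H}}:=\{y:\|x_i-y\|\leq r_i\},\\ &\text{(P2)}\quad\text{Find }x\in\bigcap_{i=1}^{N}C_i\subseteq\mathbb{R}^{n}\text{ where }C_i=x_i+r_iS_{\mathcal{H}}:=\{y:\|x_i-y\|=r_i\}. \end{aligned}$$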

Here \(B_{\mathcal{H}}\) (resp., \(S_{\mathcal{H}}\)) denotes the closed unit ball (resp., unit sphere).

To ensure all problem instances were feasible, constraint sets were randomly generated using the following criteria:

- Ball constraints: Randomly choose \(x_i\in[-5,5]^{n}\) and \(r_i\in[\|x_i\|,\|x_i\|+0.1]\).

- Sphere constraints: Randomly choose \(x_i\in[-5,5]^{n}\) and set \(r_i=\|x_i\|\).

In each case, by design, the non-empty intersection contains the origin. We consider both over- and under-constrained instances.

Note, if \(C_i\) is a sphere constraint then \(P_{C_{i}}(x_{i})=C_{i}\) (i.e. nearest points are not unique), and \(P_{C_{i}}\) is a set-valued mapping. In this situation, a random nearest point was chosen from \(C_i\). In every other case, \(P_{C_{i}}\) is single valued.

For the comparison, the classical Douglas–Rachford scheme was applied to the equivalent feasibility problem (4), which is formulated in the product space \((\mathbb{R}^{n})^{N}\).

Computations were performed using Python 2.6.6 on an Intel Xeon E5440 at 2.83 GHz (single threaded) running 64-bit Red Hat Enterprise Linux 6.4. The following conditions were used:

- Choose a random \(x_0\in[-10,10]^{n}\). Initialize the cyclic Douglas–Rachford scheme with \(x_0\), and the product-space Douglas–Rachford scheme with \((x_{0},x_{0},\dots,x_{0})\in (\mathbb{R}^{n})^{N}\).

- Iterate by setting

$$\begin{aligned} x_{n+1}=Tx_n\quad\text{where }T=T_{[1\,2\,\dots\,N]}\text{ or }T_{C,D}. \end{aligned}$$

  An iteration limit of 1000 was enforced.

- Stopping criterion:

$$\begin{aligned} \|x_n-x_{n+1}\|<\epsilon \quad\text{where } \epsilon=10^{-3}\text{ or }10^{-6}. \end{aligned}$$

- After termination, the quality of the solution was measured by

$$\begin{aligned} \text{error} =\sum_{i=2}^N \|P_{C_1}x-P_{C_{i}}x\|^2. \end{aligned}$$
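The experimental protocol for the ball-constrained problem can be sketched as follows. This is not the authors' code: it is a small pure-Python reconstruction of the stated steps (random ball constraints containing the origin, cyclic sweeps, the stopping rule and the error measure), with a hypothetical problem size, seed and function names.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(t * t for t in v))

def make_balls(n, N, rng):
    """Centres in [-5, 5]^n and radii in [||c||, ||c|| + 0.1], so 0 is feasible."""
    balls = []
    for _ in range(N):
        c = [rng.uniform(-5.0, 5.0) for _ in range(n)]
        balls.append((c, norm(c) + rng.uniform(0.0, 0.1)))
    return balls

def proj_ball(ball, x):
    c, r = ball
    d = [xi - ci for xi, ci in zip(x, c)]
    nd = norm(d)
    if nd <= r:
        return list(x)
    return [ci + r * di / nd for ci, di in zip(c, d)]

def dr(A, B, x):
    """T_{A,B} x = (x + R_B R_A x) / 2 for two ball constraints A and B."""
    ra = [2 * p - xi for p, xi in zip(proj_ball(A, x), x)]
    rb = [2 * p - ri for p, ri in zip(proj_ball(B, ra), ra)]
    return [(xi + bi) / 2 for xi, bi in zip(x, rb)]

def cyclic_dr_solve(balls, x, eps=1e-6, max_iter=1000):
    """Iterate cyclic sweeps until ||x_n - x_{n+1}|| < eps or the limit is hit."""
    N = len(balls)
    for k in range(1, max_iter + 1):
        y = x
        for i in range(N):
            y = dr(balls[i], balls[(i + 1) % N], y)
        if norm([a - b for a, b in zip(x, y)]) < eps:
            return y, k
        x = y
    return x, max_iter

def error(balls, x):
    """Sum over i >= 2 of ||P_{C_1} x - P_{C_i} x||^2."""
    p1 = proj_ball(balls[0], x)
    return sum(norm([a - b for a, b in zip(p1, proj_ball(C, x))]) ** 2
               for C in balls[1:])

rng = random.Random(0)                       # fixed seed, for repeatability only
balls = make_balls(n=10, N=5, rng=rng)
x0 = [rng.uniform(-10.0, 10.0) for _ in range(10)]
x, iters = cyclic_dr_solve(balls, x0)
err = error(balls, x)
```

The returned `iters` and `err` correspond to the iteration count and error columns one would tabulate; timings would need a separate measurement and are not attempted here.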

Results are tabulated in Tables 1, 2, 3, and 4. A “0” error (without decimal place) represents zero within the accuracy of the numpy.float64 data type. Illustrations of low dimensional examples are shown in Figs. 5, 6 and 7.

We make some comments on the results.

- The cyclic Douglas–Rachford method easily solves both problems.

  Solutions for 1000 dimensional instances, with varying numbers of constraints, could be obtained in under half-a-second, with worst case errors in the order of \(10^{-13}\). Many instances of (P1) were solved without error. Instances involving fewer constraints required a greater number of iterations before termination. This can be explained by noting that each application of \(T_{[1\,2\,\dots\,N]}\) applies a 2-set Douglas–Rachford operator N times, and hence iterations for instances with a greater number of constraints are more computationally expensive.

- When the number of constraints was small, relative to the dimension of the problem, the Douglas–Rachford method was able to solve (P1) in a comparable time to the cyclic Douglas–Rachford method.

  For larger numbers of constraints the method required significantly more time. This is a consequence of working in the product space, and would be ameliorated in a parallel implementation.

- Applied to (P2), the original Douglas–Rachford method encountered difficulties.

  While it was able to solve (P2) reliably when \(\epsilon=10^{-3}\), when \(\epsilon=10^{-6}\) the method failed to terminate in every instance. However, in these cases the final iterate still yielded a point having a satisfactory error. The number of iterations and time required for the Douglas–Rachford method were significantly higher compared to the cyclic Douglas–Rachford method. As with (P1), the difference was most noticeable for problems with greater numbers of constraints.

- Both methods performed better on (P1) compared to (P2).

  This might well be predicted. For in (P1), all constraint sets are convex, hence convergence is guaranteed by Theorems 3.1 and 2.1, respectively. However, in (P2), the constraints are non-convex, thus neither theorem can be invoked. Our results suggest the cyclic Douglas–Rachford scheme as a heuristic.

- We note that there are some difficulties in using the number of iterations as a comparison between two methods.

  Each cyclic Douglas–Rachford iteration requires the computation of 2N reflections, and each Douglas–Rachford iteration (N+1). Even taking this into account, performance of the cyclic Douglas–Rachford method was superior to the original Douglas–Rachford method on both (P1) and (P2). However, in light of the “no free lunch” theorems of Wolpert and Macready [40], we are heedful about asserting dominance of our method.

Remark 5.1

Applied to combinatorial feasibility problems, experimental evidence suggests that unlike the Douglas–Rachford scheme, the cyclic Douglas–Rachford scheme fails to converge satisfactorily. For details, see [41].

6 Conclusions

Two new projection algorithms, the cyclic Douglas–Rachford and averaged Douglas–Rachford iteration schemes, were introduced and studied. Applied to N-set convex feasibility problems in Hilbert space, both weakly converge to a point whose projections onto each of the N sets coincide. This behaviour is analogous to that of the classical Douglas–Rachford scheme. For inconsistent 2-set problems, it is conjectured that the two set cyclic Douglas–Rachford scheme yields best approximation pairs. This is known to be true in a number of non-trivial cases, as outlined in Sect. 3. Numerical experiments suggest that the cyclic Douglas–Rachford scheme outperforms the classical Douglas–Rachford scheme, which suffers as a result of the product formulation. An advantage of our schemes is that they can be used in the original space, without recourse to this formulation. It would be interesting to numerically compare the two schemes on a wider range of feasibility problems.

HTML versions of the interactive Cinderella applets are available at:

Notes

Kakutani had earlier proven weak convergence for finitely many subspaces [37]. Von Neumann’s original two-set proof does not seem to generalize.

References

von Neumann, J.: Functional Operators, vol. II: The Geometry of Orthogonal Spaces. Annals of Mathematics Studies, vol. 22. Princeton University Press, Princeton (1950)

Halperin, I.: The product of projection operators. Acta Sci. Math. (Szeged) 23, 96–99 (1962)

Bregman, L.: The method of successive projection for finding a common point of convex sets. J. Sov. Math. 6, 688–692 (1965)

Bauschke, H., Borwein, J.: On the convergence of von Neumann’s alternating projection algorithm for two sets. Set-Valued Anal. 1(2), 185–212 (1993)

Bauschke, H., Borwein, J., Lewis, A.: The method of cyclic projections for closed convex sets in Hilbert space. Contemp. Math. 204, 1–38 (1997)

Kopecká, E., Reich, S.: A note on the von Neumann alternating projections algorithm. J. Nonlinear Convex Anal. 5(3), 379–386 (2004)

Kopecká, E., Reich, S.: Another note on the von Neumann alternating projections algorithm. J. Nonlinear Convex Anal. 11, 455–460 (2010)

Pustylnik, E., Reich, S., Zaslavski, A.: Convergence of non-periodic infinite products of orthogonal projections and nonexpansive operators in Hilbert space. J. Approx. Theory 164(5), 611–624 (2012)

Douglas, J., Rachford, H.: On the numerical solution of heat conduction problems in two and three space variables. Trans. Am. Math. Soc. 82(2), 421–439 (1956)

Lions, P., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979)

Bauschke, H., Combettes, P., Luke, D.: Finding best approximation pairs relative to two closed convex sets in Hilbert spaces. J. Approx. Theory 127(2), 178–192 (2004)

Dykstra, R.: An algorithm for restricted least squares regression. J. Am. Stat. Assoc. 78(384), 837–842 (1983)

Boyle, J., Dykstra, R.: A method for finding projections onto the intersection of convex sets in Hilbert spaces. In: Advances in Order Restricted Statistical Inference. Lecture Notes in Statistics, vol. 37, pp. 28–47. Springer, Berlin (1986)

Bauschke, H., Borwein, J.: Dykstra’s alternating projection algorithm for two sets. J. Approx. Theory 79(3), 418–443 (1994)

Bauschke, H.: Projection algorithms: results and open problems. Stud. Comput. Math. 8, 11–22 (2001)

Bauschke, H., Borwein, J.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38(3), 367–426 (1996)

Deutsch, F.: The method of alternating orthogonal projections. In: Approximation Theory, Spline Functions and Applications, pp. 105–121. Kluwer Academic, Dordrecht (1992)

Tam, M.: The method of alternating projections. http://docserver.carma.newcastle.edu.au/id/eprint/1463. Honours thesis, Univ. of Newcastle (2012)

Escalante, R., Raydan, M.: Alternating Projection Methods. Fundamentals of Algorithms. Society for Industrial and Applied Mathematics, Philadelphia (2011)

Borwein, J.: Maximum entropy and feasibility methods for convex and nonconvex inverse problems. Optimization 61(1), 1–33 (2012)

Bauschke, H., Combettes, P., Luke, D.: Phase retrieval, error reduction algorithm, and Fienup variants: a view from convex optimization. J. Opt. Soc. Am. A 19(7), 1334–1345 (2002)

Bauschke, H., Combettes, P., Luke, D.: Hybrid projection–reflection method for phase retrieval. J. Opt. Soc. Am. A 20(6), 1025–1034 (2003)

Elser, V., Rankenburg, I., Thibault, P.: Searching with iterated maps. Proc. Natl. Acad. Sci. 104(2), 418–423 (2007)

Gravel, S., Elser, V.: Divide and concur: a general approach to constraint satisfaction. Phys. Rev. E 78(3), 036706 (2008)

Schaad, J.: Modeling the 8-queens problem and sudoku using an algorithm based on projections onto nonconvex sets. Master’s thesis, Univ. of British Columbia (2010)

Lewis, A., Luke, D., Malick, J.: Local linear convergence for alternating and averaged nonconvex projections. Found. Comput. Math. 9(4), 485–513 (2009)

Bauschke, H., Luke, D., Phan, H., Wang, X.: Restricted normal cones and the method of alternating projections. Set-Valued Var. Anal. To appear (2013). http://arxiv.org/pdf/1205.0318v1

Hesse, R., Luke, D.: Nonconvex notions of regularity and convergence of fundamental algorithms for feasibility problems. Preprint (2012). http://arxiv.org/pdf/1212.3349

Drusvyatskiy, D., Ioffe, A., Lewis, A.: Alternating projections: a new approach. In preparation

Borwein, J., Sims, B.: The Douglas–Rachford algorithm in the absence of convexity. In: Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 93–109 (2011)

Aragón Artacho, F., Borwein, J.: Global convergence of a non-convex Douglas–Rachford iteration. J. Glob. Optim. (2012). doi:10.1007/s10898-012-9958-4

Bauschke, H., Combettes, P.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics. Springer, New York (2011)

Reich, S., Shafrir, I.: The asymptotic behavior of firmly nonexpansive mappings. Proc. Am. Math. Soc. 101(2), 246–250 (1987)

Bruck, R., Reich, S.: Nonexpansive projections and resolvents of accretive operators in Banach space. Houst. J. Math. 4 (1977)

Bauschke, H., Martín-Márquez, V., Moffat, S., Wang, X.: Compositions and convex combinations of asymptotically regular firmly nonexpansive mappings are also asymptotically regular. Fixed Point Theory Appl. 2012(53), 1–11 (2012)

Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 73(4), 591–597 (1967)

Netyanun, A., Solmon, D.: Iterated products of projections in Hilbert space. Am. Math. Mon. 113(7), 644–648 (2006)

Borwein, J., Reich, S., Shafrir, I.: Krasnoselski–Mann iterations in normed spaces. Can. Math. Bull. 35(1), 21–28 (1992)

Cheney, W., Goldstein, A.: Proximity maps for convex sets. Proc. Am. Math. Soc. 10(3), 448–450 (1959)

Wolpert, D., Macready, W.: No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997)

Aragón Artacho, F., Borwein, J., Tam, M.: Recent results on Douglas–Rachford methods for combinatorial optimization problems. Preprint (2013). arXiv:1305.2657v1

Acknowledgements

The authors wish to acknowledge Francisco J. Aragón Artacho, Brailey Sims, Simeon Reich, and the two anonymous referees for their helpful comments and suggestions.

Jonathan M. Borwein’s research is supported in part by the Australian Research Council.

Matthew K. Tam’s research is supported in part by an Australian Postgraduate Award.

Cite this article

Borwein, J.M., Tam, M.K. A Cyclic Douglas–Rachford Iteration Scheme. J Optim Theory Appl 160, 1–29 (2014). https://doi.org/10.1007/s10957-013-0381-x

DOI: https://doi.org/10.1007/s10957-013-0381-x