Abstract

The Douglas–Rachford algorithm is a classical and very successful method for solving optimization and feasibility problems. In this paper, we provide novel conditions sufficient for finite convergence in the context of convex feasibility problems. Our analysis builds upon, and considerably extends, pioneering work by Spingarn. Specifically, we obtain finite convergence in the presence of Slater’s condition in the affine-polyhedral and in a hyperplanar-epigraphical case. Various examples illustrate our results. Numerical experiments demonstrate the competitiveness of the Douglas–Rachford algorithm for solving linear equations with a positivity constraint when compared to the method of alternating projections and the method of reflection–projection.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Throughout this paper, we assume that

i.e., a finite-dimensional real Hilbert space with inner product \(\left\langle {\cdot },{\cdot } \right\rangle \) and induced norm \(\Vert \cdot \Vert \), and

Consider the convex feasibility problem

and assume that it is possible to evaluate the projectors (nearest point mappings) \(P_A\) and \(P_B\) corresponding to A and B, respectively. We denote the corresponding reflectors by \(R_A := 2P_A-{\text {Id}}\) and \(R_B := 2P_B-{\text {Id}}\), respectively. Projection methods combine the projectors and reflectors in a suitable way to generate a sequence converging to a solution of (3)—we refer the reader to [2, 8], and [9] and the references therein for further information.

One celebrated algorithm for solving (3) is the so-called Douglas–Rachford algorithm (DRA) [11]. The adaption of this algorithm to optimization and feasibility is actually due to Lions and Mercier was laid out beautifully in their landmark paper [16] (see also [12]). The DRA is based on the Douglas–Rachford splitting operator,

which is used to generate a sequence \((z_n)_{n\in {\mathbb {N}}}\) with starting point \(z_0\in X\) via

Then the “governing sequence” \((z_n)_{n\in {\mathbb {N}}}\) converges to a point \(z\in {\text {Fix }}T\), and, more importantly, the “shadow sequence” \((P_Az_n)_{n\in {\mathbb {N}}}\) converges to \(P_Az\) which is a solution of (3).

An important question concerns the speed of convergence of the sequence \((P_Az_n)_{n\in {\mathbb {N}}}\). Linear convergence was more clearly understood recently, see [14, 21], and [6].

The aim of this paper is to provide verifiable conditions sufficient for finite convergence.

Our two main results reveal that Slater’s condition, i.e.,

plays a key role and guarantees finite convergence when (MR1) A is an affine subspace and B is a polyhedron (Theorem 3.7); or when (MR2) A is a certain hyperplane and B is an epigraph (Theorem 5.4). Examples illustrate that these results are applicable in situations where previously known conditions sufficient for finite convergence fail. When specialized to a product space setting, we derive a finite-convergence result due to Spingarn [26] for his method of partial inverses [25]. Indeed, the proof of Theorem 3.7 follows his pioneering work, but, at the same time, we simplify his proofs and strengthen the conclusions. These sharpenings allow us to obtain finite-convergence results for solving linear equations with a positivity constraint. Numerical experiments support the competitiveness of the DRA for solving (3).

It would be interesting to derive additional conditions guaranteeing finite convergence, not only in the present finite-dimensional setting but also in a more general infinite-dimensional setting. Moreover, how these results generalize (if at all) from convex feasibility to finding the zero of the sum of two maximally monotone operators is currently quite unclear.

1.1 Organization of the paper

The paper is organized as follows. In Sect. 2, we present several auxiliary results which make the eventual proofs of the main results more structured and transparent. Section 3 contains the first main result (MR1). Applications using the product space set up, a comparison with Spingarn’s work, and numerical experiments are provided in Sect. 4. The final Sect. 5 concerns the second main result (MR2).

1.2 Notation

The notation employed is standard and follows largely [2]. The real numbers are \(\mathbb {R}\), and the nonnegative integers are \(\mathbb {N}\). Further, \(\mathbb {R}_+:= \left\{ {x \in \mathbb {R}}~\big |~{x \ge 0}\right\} \) and \(\mathbb {R}_{++}:= \left\{ {x \in \mathbb {R}}~\big |~{x >0}\right\} \). Let C be a subset of X. Then the closure of C is \(\overline{C}\), the interior of C is \({\text {int}}\,C\), the boundary of C is \({\text {bdry }}C\), and the smallest affine and linear subspaces containing C are, respectively, \({\text {aff }}C\) and \({{\text {span}}}\,C\). The relative interior of C, \({\text {ri}}\,C\), is the interior of C relative to \({\text {aff }}C\). The orthogonal complement of C is \(C^\perp :=\left\{ {y \in X}~\big |~{(\forall x\in C)\; \left\langle {x},{y} \right\rangle =0}\right\} \), and the dual cone of C is \(C^\oplus :=\left\{ {y \in X}~\big |~{(\forall x \in C)\; \left\langle {x},{y} \right\rangle \ge 0}\right\} \). The normal cone operator of C is denoted by \(N_C\), i.e., \(N_C(x) =\left\{ {y \in X}~\big |~{(\forall c \in C)\; \left\langle {y},{c-x} \right\rangle \le 0}\right\} \) if \(x \in C\), and \(N_C(x) =\varnothing \) otherwise. If \(x \in X\) and \(\rho \in \mathbb {R}_{++}\), then \({\text {ball}}({x};{\rho }) :=\left\{ {y \in X}~\big |~{\Vert x -y\Vert \le \rho }\right\} \) is the closed ball centered at x with radius \(\rho \).

2 Auxiliary results

In this section, we collect several auxiliary results that will be useful in the sequel.

2.1 Convex sets

Lemma 2.1

Let C be a nonempty closed convex subset of X, let \(x \in X\), and let \(y \in C\). Then \(x -P_Cx \in N_C(y)\) \(\Leftrightarrow \) \(\left\langle {x -P_Cx},{y -P_Cx} \right\rangle =0\).

Proof

Because \(y\in C\) and \(x-P_Cx\in N_C(P_Cx)\), we have \(\left\langle {x-P_Cx},{y-P_Cx} \right\rangle \le 0\). “\(\Rightarrow \)”: From \(x-P_Cx\in N_C(y)\) and \(P_Cx \in C\), we have \(\left\langle {x-P_Cx},{P_Cx-y} \right\rangle \le 0\). Thus \(\left\langle {x-P_Cx},{P_Cx-y} \right\rangle =0\). “\(\Leftarrow \)”: We have \((\forall c\in C)\) \(\left\langle {x-P_Cx},{c-y} \right\rangle = \left\langle {x-P_Cx},{c-P_Cx} \right\rangle +\left\langle {x-P_Cx},{P_Cx-y} \right\rangle = \left\langle {x-P_Cx},{c-P_Cx} \right\rangle \le 0\). Hence \(x-P_Cx\in N_C(y)\). \(\square \)

Lemma 2.2

Let C be a nonempty convex subset of X. Then \({\text {int}}\,C \ne \varnothing \) \(\Leftrightarrow \) \(0 \in {\text {int}}(C-C)\).

Proof

“\(\Rightarrow \)”: Clear. ”\(\Leftarrow \)”: By [22, Theorem 6.2], \({\text {ri}}\,C \ne 0\). After translating the set if necessary, we assume that \(0 \in {\text {ri}}\,C\). Then \(0 \in C\), and so \(Y :={\text {aff }}C ={{\text {span}}}\,C\). Since \(0 \in {\text {int}}(C -C)\subseteq {\text {int}}({{\text {span}}}\,C)={\text {int}}\,Y\), this gives \({\text {int}}\,Y \ne \varnothing \) and thus \(Y =X\). In turn, \({\text {int}}\,C ={\text {ri}}\,C \ne \varnothing \). \(\square \)

2.2 Cones

Lemma 2.3

Let K be a nonempty convex cone in X. Then there exists \(v \in {\text {ri}}\,K \cap {\text {ri}}\,K^\oplus \) such that

Proof

By [26, Lemma 2], there exists \(v \in {\text {ri}}\,K \cap {\text {ri}}\,K^\oplus \). Then \(v \in {\text {ri}}\,K^\oplus ={\text {ri}}\,\overline{K}^\oplus \). Noting that \(\overline{K}\) is a closed convex cone and using [26, Lemma 3], we complete the proof. \(\square \)

Lemma 2.4

Let \((z_n)_{n\in {\mathbb {N}}}\) be a sequence in X, and let \(f :X \rightarrow \mathbb {R}\) be linear. Assume that \(z_n \rightarrow z \in X\), and that

Then there exist \(n_0 \in \mathbb {N}{\backslash } \{0\}\) and \((\mu _1, \ldots , \mu _{n_0}) \in \mathbb {R}_+^{n_0}\) such that \(\sum _{k=1}^{n_0}\mu _k=1\) and

Proof

Introducing

we get

Let K be the convex cone generated by \(\{y_n\}_{n \in \mathbb {N}{\backslash } \{0\}}\). We see that

Therefore,

Setting

we immediately have \(w_n \rightarrow 0\), and so

From (8) we get

Moreover, \(f(w_n) \rightarrow f(0) =0\), hence

Together with (13) and (15), this gives

By Lemma 2.3, there exists \(v \in {\text {ri}}\,K\cap {\text {ri}}\,K^\oplus \) such that

Then we must have \(v \ne 0\). Since \(v \in K\), after scaling if necessary, there exist \(n_0\in \mathbb {N}{\backslash }\{0\}\) and \((\mu _1,\ldots ,\mu _{n_0})\in \mathbb {R}_+^{n_0}\) such that

This combined with (19) implies

and so (9) holds. \(\square \)

Lemma 2.5

Let K be a nonempty pointedFootnote 1 convex cone in X. Then the following hold:

-

(i)

Let \(m\in \mathbb {N}{\backslash }\{0\}\) and let \((x_1,\ldots ,x_m)\in K^m\). Then \(x_1 +\cdots +x_m =0\) \(\Leftrightarrow \) \(x_1 =\cdots =x_m =0\).

-

(ii)

If K is closed and \(L:X\rightarrow X\) is linear such that

$$\begin{aligned} \ker L\cap K =\{0\}, \end{aligned}$$(22)then L(K) is a nonempty pointed closed convex cone.

Proof

-

(i)

Assume that \(x_1 +\cdots +x_m =0\). Then since K is a convex cone,

$$\begin{aligned} -x_1 =x_2 +\cdots +x_m \in K, \end{aligned}$$(23)and so \(x_1 \in K\cap (-K)\). Since K is pointed, we get \(x_1 =0\). Continuing in this fashion, we eventually conclude that \(x_1 =\cdots =x_m =0\). The converse is trivial.

-

(ii)

Since K is a closed convex cone, so is \(M :=L(K)\) due to assumption (22) and [7, Proposition 3.4]. Now let \(z \in M\cap (-M)\). Then \(z =L(r) =-L(s)\) for some points r, s in K. Thus \(L(r +s) =L(r) +L(s) =0\), which gives \(r +s \in \ker L\), and so \(r +s \in \ker L\cap K\). By again (22), \(r +s =0\), and now (i) implies \(r =s =0\). Therefore, \(z =0\), and M is pointed.

\(\square \)

Lemma 2.6

Let \((a_n)_{n\in {\mathbb {N}}}\) be a sequence in X such that \(a_n \rightarrow a \in X\), and K be a pointed closed convex cone of X. Assume that

Then

Proof

Since K is a closed convex cone and \(a_n \rightarrow a\), it follows from (24) that

and so \(a_{p+1} -a \in K\). Since \(a_p =a\), (24) gives \(a -a_{p+1} \in K\). Noting that K is pointed, this implies \(a_{p+1} -a \in K\cap (-K) \subseteq \{0\}\), and hence \(a_{p+1} =a\). Repeating this argument, we get the conclusion. \(\square \)

2.3 Locally polyhedral sets

Definition 2.7

(Local polyhedrality) Let C be a subset of X. We say that C is polyhedral at \(c\in C\) if there exist a polyhedralFootnote 2 set D and \(\varepsilon \in \mathbb {R}_{++}\) such that \(C\cap {\text {ball}}({c};{\varepsilon }) =D\cap {\text {ball}}({c};{\varepsilon })\).

It is clear from the definition that every polyhedron is polyhedral at each of its points and that every subset C of X is polyhedral at each point in \({\text {int}}\,C\).

Lemma 2.8

Let C be a subset of X, and assume that C is polyhedral at \(c \in C\). Then there exist \(\varepsilon \in \mathbb {R}_{++}\), a finite set I, \((d_i)_{i\in I}\in (X{\backslash }\{0\})^I\), \((\delta _i)_{i\in I}\in \mathbb {R}^I\) such that

\((\forall i\in I)\) \(\left\langle {d_i},{c} \right\rangle =\delta _i\), and

Proof

Combine Lemma 2.12 with [24, Theorem 6.46]. \(\square \)

Lemma 2.9

Let C be a nonempty closed convex subset of X that is polyhedral at \(c \in C\). Then there exists \(\varepsilon \in \mathbb {R}_{++}\) such that

Proof

We adopt the notation of the conclusion of Lemma 2.8. Let \(x\in X\) such that \(y := P_Cx\in {\text {ball}}({c};{\varepsilon })\). Then \(x-y\in N_C(y)\) and Lemma 2.8 guarantees the existence of \((\lambda _i)_{i\in I(y)}\in \mathbb {R}_+^{I(y)}\) such that

Then

and so \(\left\langle {x-P_Cx},{c-P_Cx} \right\rangle = 0\). Furthermore, by Lemma 2.1, \(x -P_Cx \in N_C(c)\). \(\square \)

2.4 Two convex sets

Proposition 2.10

Let A and B be closed convex subsets of X such that \(A\cap B\ne \varnothing \). Then the following hold:

-

(i)

\((\forall a\in A)(\forall b\in B)\quad N_{A-B}(a -b) =N_A(a)\cap \big (-N_B(b)\big )\).

-

(ii)

\(0 \in {\text {int}}(A -B) \quad \Leftrightarrow \quad N_{A-B}(0) =\{0\}\).

-

(iii)

\({\text {int}}\,B \ne \varnothing \quad \Leftrightarrow \quad (\forall x \in B)\; N_B(x) \cap (-N_B(x)) =\{0\}\).

Proof

(i) Noting that \(N_{-B}(-b) =-N_B(b)\) by definition, the conclusion follows from [20, Proposition 2.11(ii)] or from a direct computation. (ii) Clear from [2, Corollary 6.44]. (iii) Let \(x \in B\). By (i), \(N_B(x) \cap (-N_B(x)) =N_{B-B}(0)\). Now Lemma 2.2 and (ii) complete the proof. \(\square \)

Corollary 2.11

Let A be a linear subspace of X, and let B be a nonempty closed convex subset of X such that \(A\cap B\ne \varnothing \). Then the following hold:

-

(i)

\(0 \in {\text {int}}(A -B) \quad \Leftrightarrow \quad \left[ (\forall x \in A\cap B)\; A^\perp \cap N_B(x) =\{0\} \right] \).

-

(ii)

\(A\cap {\text {int}}\,B \ne \varnothing \quad \Leftrightarrow \quad \big [ 0 \in {\text {int}}(A -B) \quad \text {and}\quad {\text {int}}\,B \ne \varnothing \big ]\).

Proof

-

(i)

Let \(x \in A\cap B\). Since A is a linear subspace, Proposition 2.10(i) yields

$$\begin{aligned} A^\perp \cap N_B(x) =-(N_A(x)\cap (-N_B(x))) =-N_{A-B}(0). \end{aligned}$$(32)Now apply Proposition 2.10(ii).

-

(ii)

If \(0 \in {\text {int}}(A -B)\) and \({\text {int}}\,B \ne \varnothing \), then \(0 \in {\text {ri}}(A -B)\) and \({\text {ri}}\,B={\text {int}}\,B\). Since A is a linear subspace, we have \({\text {ri}}\,A =A\), and using [22, Corollary 6.6.2], we get

$$\begin{aligned} 0 \in {\text {ri}}(A -B) ={\text {ri}}\,A -{\text {ri}}\,B = A -{\text {int}}\,B, \end{aligned}$$(33)which implies \(A\cap {\text {int}}\,B \ne \varnothing \). The converse is obvious.

\(\square \)

Lemma 2.12

Let A and B be closed convex subsets of X, and let \(c\in A\cap B\) and \(\varepsilon \in \mathbb {R}_{++}\) be such that \(A\cap {\text {ball}}({c};{\varepsilon })=B\cap {\text {ball}}({c};{\varepsilon })\). Then \(N_A(c)=N_B(c)\).

Proof

Let \(u\in X\). Working with the directional derivative and using [2, Proposition 17.17(i)], we have \(u\in N_A(c) = \partial \iota _A(c)\) \(\Leftrightarrow \) \((\forall h\in X)\) \(\left\langle {u},{h} \right\rangle \le \iota _A'(c;h)\) \(\Leftrightarrow \) \((\forall h\in X)\) \(\left\langle {u},{h} \right\rangle \le \iota _B'(c;h)\) \(\Leftrightarrow \) \(u\in N_B(c) = \partial \iota _B(c)\). \(\square \)

2.5 Monotone operators

Lemma 2.13

Let \(L:X\times X\rightarrow X\times X:(x,y)\mapsto (L_{11}x+L_{12}y,L_{21}x+L_{22}y)\), where each \(L_{ij}:X\rightarrow X\) is linear. Assume that \(L_{11}^*L_{22} +L_{21}^*L_{12} ={\text {Id}}\) and that \(L_{11}^*L_{21}\) and \(L_{22}^*L_{12}\) are skew.Footnote 3 Then the following hold:

-

(i)

If \((x,y)\in X\times X\) and \((u,v)=L(x,y)\), then \(\left\langle {u},{v} \right\rangle =\left\langle {x},{y} \right\rangle \).

-

(ii)

Let \(M:X\rightrightarrows X\) be a monotone operator, and define \(M_L:X\rightrightarrows X\) via \({\text {gra }}M_L = L({\text {gra }}M)\). Then for all ordered pairs (x, y) and \((x',y')\) in \({\text {gra }}M\) and \((u,v)=L(x,y)\), \((u',v')=L(x',y')\), we have \(\left\langle {u-v},{u'-v'} \right\rangle = \left\langle {x-x'},{y-y'} \right\rangle \); consequently, \(M_L\) is monotone.

Proof

-

(i)

The assumptions indeed imply

$$\begin{aligned} \left\langle {u},{v} \right\rangle&=\left\langle {L_{11}x +L_{12}y},{L_{21}x +L_{22}y} \right\rangle \end{aligned}$$(34a)$$\begin{aligned}&=\left\langle {x},{(L_{11}^*L_{22} +L_{21}^*L_{12})y} \right\rangle +\left\langle {x},{L_{11}^*L_{21}x} \right\rangle +\left\langle {y},{L_{22}^*L_{12}y} \right\rangle =\left\langle {x},{y} \right\rangle . \end{aligned}$$(34b) -

(ii)

Since \((x-x',y-y') = (x,y)-(x',y')\) and L is linear, the result follows from (i).

\(\square \)

Corollary 2.14

Let A be a linear subspace of X, and let (x, y) and \((x',y')\) be in \(X\times X\). Then the following hold:

-

(i)

\(\left\langle {P_Ay -P_{A^\perp }x},{P_Ax -P_{A^\perp }y} \right\rangle =\left\langle {y},{x} \right\rangle \)

-

(ii)

\(\left\langle {(P_Ay -P_{A^\perp }x)-(P_Ay' -P_{A^\perp }x')},{(P_Ax -P_{A^\perp }y)-(P_Ax' -P_{A^\perp }y')} \right\rangle =\left\langle {y-y'},{x-x'} \right\rangle \).

Proof

Set \(L_{11}=L_{22}=P_A\) and \(L_{12}=L_{21}=-P_{A^\perp }\) in Lemma 2.13. \(\square \)

Remark 2.15

Spingarn’s original partial inverse [25] arises from Lemma 2.13 by setting \(L_{11}=L_{22}=P_A\) and \(L_{12}=L_{21}=P_{A^\perp }\) while in Corollary 2.14 we used \(L_{11}=L_{22}=P_A\) and \(L_{12}=L_{21}=-P_{A^\perp }\). Other choices are possible: e.g., if \(X=\mathbb {R}^2\) and R denotes the clockwise rotator by \(\pi /4\), then a valid choice for Lemma 2.13 is \(L_{11}=L_{22}=\tfrac{1}{2}R\) and \(L_{12}=L_{21}=\tfrac{1}{2}R^*\).

2.6 Finite-convergence conditions for the proximal point algorithm

It is known (see, e.g., [12, Theorem 6]) that the DRA is a special case of the exact proximal point algorithm (with constant parameter 1). The latter generates, for a given maximally monotone operator \(M:X\rightrightarrows X\) with resolvent \(T := J_M = ({\text {Id}}+M)^{-1}\), a sequence by

in order to solve the problem

A classical sufficient condition dates back to Rockafellar (see [23, Theorem 3]) who proved finite convergence when

It is instructive to view this condition from the resolvent side:

Note that since \({\text {Fix }}T\) is convex, this implies that

is a singleton, which severely limits the applicability of this condition.

Later, Luque (see [17, Theorem 3.2]) proved finite convergence under the more general condition

On the resolvent side, his condition turns into

However, when \(M^{-1}0={\text {Fix }}T\ne \varnothing \), it is well known that \(z_n-Tz_n\rightarrow 0\); thus, the finite-convergence condition is essentially a tautology.

When illustrating our main results, we shall provide examples where both (37) and (40) fail while our results are applicable (see Remark 3.8 and Example 5.5 below).

2.7 Douglas–Rachford operator

For future use, we record some results on the DRA that are easily checked. Recall that, for two nonempty closed convex subsets A and B, the DRA operator is

The following result, the proof of which we omit since it is a direct verification, records properties in the presence of affinity/linearity.

Proposition 2.16

Let A be an affine subspace of X and let B be a nonempty closed convex subset of X. Then the following hold:

-

(i)

\(P_A\) is an affine operator and

$$\begin{aligned} P_AR_A =P_A, \quad P_AT =P_AP_BR_A \quad \text {and}\quad T =(P_A +P_B -{\text {Id}})R_A. \end{aligned}$$(43) -

(ii)

If A is a linear subspace, then \(P_A\) is a symmetric linear operator and

$$\begin{aligned} T = \big (P_AP_B -P_{A^\perp }({\text {Id}}-P_B)\big )R_A. \end{aligned}$$(44)

The next result will be used in Sect. 4.1 below to clarify the connection between the DRA and Spingarn’s method.

Lemma 2.17

Let A be a linear subspace of X, let B be a nonempty closed convex subset of X, let \((a,a^\perp )\in A\times A^\perp \), and set \((a_+,a_+^\perp ) := (P_AP_B(a+a^\perp ), P_{A^\perp }({\text {Id}}-P_B)(a+a^\perp ))\in A\times A^\perp \). Then \(T(a-a^\perp ) = a_+-a_+^\perp \).

Proof

Clearly, \(a_+\in A\) and \(a_+^\perp \in A^\perp \). Since \(R_A(a-a^\perp ) = (P_A-P_{A^\perp })(a-a^\perp ) = a+ a^\perp \), the conclusion follows from (44). \(\square \)

3 The affine-polyhedral case with Slater’s condition

In this section, we are able to state and prove finite convergence of the DRA in the case where A is an affine subspace and B is a polyhedral set such that Slater’s condition, \(A\cap {\text {int}}\,B\ne \varnothing \), is satisfied. We start by recalling our standing assumptions. We assume that

and that

The DRA is based on the operator

Given a starting point \(z_0 \in X\), the DRA sequence \((z_n)_{n\in {\mathbb {N}}}\) is generated by

We also set

We now state the basic convergence result for the DRA.

Fact 3.1

(Convergence of DRA) The DRA sequences (48) satisfy

Proof

Combine [3, Corollary 3.9 and Theorem 3.13]. \(\square \)

Fact 3.1 can be strengthened when a constraint qualification is satisfied.

Lemma 3.2

Suppose that \(0 \in {\text {int}}(A -B)\). Then there exists a point \(c\in A\cap B\) such that the following hold for the DRA sequences (48):

-

(i)

\({\text {ri}}(A)\cap {\text {ri}}(B)\ne \varnothing \) and hence \((z_n)_{n\in {\mathbb {N}}}\), \((a_n)_{n\in {\mathbb {N}}}\) and \((r_n)_{n\in {\mathbb {N}}}\) converge linearly to c.

-

(ii)

If \(c \in {\text {int}}\,B\), then the convergence of \((z_n)_{n\in {\mathbb {N}}}\), \((a_n)_{n\in {\mathbb {N}}}\) and \((r_n)_{n\in {\mathbb {N}}}\) to c is finite.

Proof

-

(i)

From \(0 \in {\text {int}}(A -B)\), we have \(N_{A-B}(0) =\{0\}\) due to Proposition 2.10. This gives \({\text {Fix }}T =A\cap B\), and Fact 3.1 implies \(z_n \rightarrow c \in A\cap B\) and \(a_n \rightarrow P_Ac =c\). Then \(r_n =2a_n -z_n \rightarrow c\). Using again \(0 \in {\text {int}}(A-B)\), [22, Corollary 6.6.2] yields \(0 \in {\text {ri}}(A -B) ={\text {ri}}\,A -{\text {ri}}\,B\), and thus \({\text {ri}}\,A\cap {\text {ri}}\,B \ne \varnothing \). Now the linear convergence follows from [21, Theorem 4.14] or from [6, Theorem 8.5(i)].

-

(ii)

Since \(r_n \rightarrow c\) and \(a_n \rightarrow c\) by (i), there exists \(n \in \mathbb {N}\) such that \(r_n \in B\) and \(a_n \in B\). Then \(P_Br_n =r_n\) and

$$\begin{aligned} z_{n+1} =z_n -a_n +P_Br_n =z_n -a_n +r_n =a_n \in A\cap B = {\text {Fix }}T. \end{aligned}$$(50)Hence \(a_n=z_{n+1}=z_{n+2}=\cdots \) and we are done.

\(\square \)

Lemma 3.3

Suppose that A is a linear subspace. Then the DRA sequences (48) satisfy

and

Proof

(51): Clear from (i). (52): Use (51) and the linearity of \(P_A\). \(\square \)

Lemma 3.4

Suppose that A is a linear subspace and that for the DRA sequences (48) there exists \(p \in \mathbb {N}\) such that \(a_p =a_{p+1} =c \in A\cap B\), and that there is a subset N of X such that \(r_p -P_Br_p \in N\) and \(A^\perp \cap N =\{0\}\). Then \((\forall n\ge p+1)\) \(z_{n}=c\).

Proof

Since \(a_p -a_{p+1} =0\), (52) implies \(r_p -P_Br_p \in A^\perp \cap N=\{0\}\). Thus \(P_Br_p =r_p\) and therefore \(z_{p+1} =z_p - a_p + r_p =a_p =c \in A\cap B \subseteq {\text {Fix }}T\). \(\square \)

Lemma 3.5

Suppose that A is a linear subspace and let \(a \in A\). Then the DRA sequence (48a) satisfies

and

Proof

Let \(n\in \mathbb {N}\). Using (51), we find that

Next, (44) yields

Moreover, \(r_n -P_Br_n \in N_B(P_Br_n) =N_{B-a}(P_Br_n -a)\) and \(N_{B-a}\) is a monotone operator. The result thus follows from Corollary 2.14. \(\square \)

Lemma 3.6

Suppose that A is a linear subspace and that the DRA sequences (48) satisfy

Then there is no linear functional \(f :X \rightarrow \mathbb {R}\) such that

Proof

Suppose to the contrary that there exists a linear function \(f :X \rightarrow \mathbb {R}\) satisfying (57). Now set

On the one hand, Lemma 2.4 yields \(n_0 \in \mathbb {N}{\backslash } \{0\}\) and \((\mu _1, \ldots , \mu _{n_0}) \in \mathbb {R}_+^{n_0}\) such that

On the other hand, Lemma 3.5 and (56) yield

consequently, with the help of [2, Lemma 2.13(i)],

Comparing (59) with (61), we arrive at the desired contradiction. \(\square \)

We are now ready for our first main result concerning the finite convergence of the DRA.

Theorem 3.7

(Finite convergence of DRA in the affine-polyhedral case) Suppose that A is an affine subspace, that B is polyhedral at every point in \(A\cap {\text {bdry }}B\), and that Slater’s condition

holds. Then the DRA sequences (48) converge in finitely many steps to a point in \(A\cap B\).

Proof

After translating the sets if necessary, we can and do assume that A is a linear subspace of X. By Corollary 2.11(ii), (62) yields

Lemma 3.2(i) thus implies that \((z_n)_{n\in {\mathbb {N}}}\), \((a_n)_{n\in {\mathbb {N}}}\) and \((r_n)_{n\in {\mathbb {N}}}\) converge linearly to a point \(c \in A\cap B\). Since \(P_B\) is (firmly) nonexpansive, it also follows that \((P_Br_n)_{n\in {\mathbb {N}}}\) converges linearly to c. Since B is clearly polyhedral at every point in \({\text {int}}\,B \) it follows from the hypothesis that B is polyhedral at c. Lemma 2.9 guarantees the existence of \(n_0 \in \mathbb {N}\) such that

and

Because of \({\text {int}}\,B \ne \varnothing \) and \(c\in B\), Proposition 2.10(iii) yields \(N_B(c)\cap (-N_B(c)) =\{0\}\). Hence \(N_B(c)\) is a nonempty pointed closed convex cone. Using again \(0 \in {\text {int}}(A -B)\), Corollary 2.11(i) gives

In view of Lemma 2.5(ii),

Combining (52) and (65), we obtain

Since \(a_n \rightarrow c\) and K is a closed convex cone, we have

We now consider two cases.

Case 1: \((\exists \,p\ge n_0)\) \(a_p=c\).

Using (67) and (68), we deduce from Lemma 2.6 that \(a_p =a_{p+1} =c\). Now (65), (66), and Lemma 3.4 yield \((\forall n\ge p+1)\) \(z_n=c\) as required.

Case 2: \((\forall n\ge n_0)\) \(a_n\ne c\).

By (69), \((\forall n\ge n_0)\) \(a_n -c \in K{\backslash }\{0\}\). Since K is pointed [see (67)], Lemma 2.3 yields \(v \in {\text {ri}}\,K\cap {\text {ri}}\,K^\oplus \subseteq K\) such that

Recalling (67), we get \(u\in N_B(c)\) such that \(v=P_Au\). Clearly, \((\forall n \ge n_0)\) \(\left\langle {u},{a_n -c} \right\rangle = \left\langle {u},{P_A(a_n -c)} \right\rangle = \left\langle {P_Au},{a_n -c} \right\rangle = \left\langle {v},{a_n -c} \right\rangle \). It follows from (70) that

Since \(u \in N_B(c)\) we also have

Now define a linear functional on X by

In view of (71) with (72), we obtain \((\forall n\ge n_0)\) \(f(z_n -z_{n+1}) =f(a_n -P_Br_n) =f(a_n -c) -f(P_Br_n -c) =\left\langle {u},{a_n -c} \right\rangle -\left\langle {u},{P_Br_n -c} \right\rangle >0\). Therefore,

However, this and (56) together contradict Lemma 3.6 (applied to \((z_n)_{n \ge n_0}\)). We deduce that Case 2 never occurs which completes the proof of the theorem. \(\square \)

Remark 3.8

Some comments on Theorem 3.7 are in order.

-

(i)

Suppose we replace the Slater’s condition “\(A\cap {\text {int}}\,B\ne \varnothing \)” by “\(A\cap {\text {ri}}\,B \ne \varnothing \)”. Then one still obtains linear convergence (see [21, Theorem 4.14] or [6, Theorem 8.5(i)]); however, finite convergence fails in general: indeed, B can chosen to be an affine subspace (see [1, Section 5]).

-

(ii)

Under the assumptions of Theorem 3.7, Rockafellar’s condition (37) is only applicable when both \(A=\{\bar{a}\}\) and \(\bar{a}\in {\text {int}}\,B\) hold. There are many examples where this condition is violated yet our condition is applicable (see, e.g., the scenario in the following item).

-

(iii)

Suppose that \(X =\mathbb {R}^2\), that \(A =\mathbb {R}\times \{0\}\) and that \(B ={\text {epi }}f\), where \(f:\mathbb {R}\rightarrow \mathbb {R}:x\mapsto |x|-1\). It is clear that B is polyhedral and that \(A\cap {\text {int}}\,B\ne \varnothing \). Define \((\forall \varepsilon \in \mathbb {R}_{++})\) \(z_\varepsilon := (1 +\varepsilon , \varepsilon )\). Then

$$\begin{aligned} (\forall \varepsilon \in \mathbb {R}_{++})\quad Tz_{\varepsilon } = (1,\varepsilon )\notin {\text {Fix }}T = [-1,1]\times \{0\} \;\;\text {yet}\;\; \Vert z_\varepsilon -Tz_{\varepsilon }\Vert =\varepsilon . \end{aligned}$$(75)We conclude that (the resolvent reformulation of) Luque’s condition (41) fails.

4 Applications

4.1 Product space setup and Spingarn’s method

Let us now consider a feasibility problem with possibly more than two sets, say

where

This problem is reduced to a two-set problem as follows. In the product Hilbert space \({\mathbf {X}}:=X^M\), with the inner product defined by \(((x_1, \ldots , x_M), (y_1, \ldots , y_M)) \mapsto \sum _{j=1}^M \left\langle {x_j},{y_j} \right\rangle \), we set

Because of

the M-set problem (76), which is formulated in X, is equivalent to the two-set problem

which is posed in \({\mathbf {X}}\). By, e.g., [2, Proposition 25.4(iii) and Proposition 28.3], the projections of \(\mathbf {x}=(x_1, \ldots , x_M) \in {\mathbf {X}}\) onto \({\mathbf {A}}\) and \({\mathbf {B}}\) are respectively given by

This opens the door of applying the DRA in \({\mathbf {X}}\): indeed, set

fix a starting point \(\mathbf {z}_0 \in {\mathbf {X}}\), and generate the DRA sequence \((\mathbf {z}_n)_{n\in {\mathbb {N}}}\) via

We now obtain the following result as a consequence of Theorem 3.7.

Corollary 4.1

Suppose that \(C_1,\ldots ,C_M\) are polyhedral such that \({\text {int}}\,C\ne \varnothing \). Then the DRA sequence defined by (83) converges finitely to \(\mathbf {z}=(z, \ldots , z) \in {\mathbf {A}}\cap {\mathbf {B}}\) with \(z \in C =\bigcap _{j=1}^M C_j\).

Proof

Since \({\text {int}}\,C \ne \varnothing \), there exists \(c \in C\) and \(\varepsilon \in \mathbb {R}_{++}\) such that \((\forall j\in \{1,\ldots ,M\})\) \({\text {ball}}({c};{\varepsilon }) \subseteq C_{j}\). Then \((c, \ldots , c) \in {\mathbf {A}}\cap {\text {int}}\,{\mathbf {B}}\), and so \({\mathbf {A}}\cap {\text {int}}\,{\mathbf {B}}\ne \varnothing \). Since \({\mathbf {B}}=C_1\times \cdots \times C_M\) is a polyhedral subset of \({\mathbf {X}}\), the conclusion now follows from Theorem 3.7. \(\square \)

Problem (76) was already considered by Spingarn [26] for the case where all sets \(C_1, \ldots , C_M\) are halfspaces. He cast the resulting problem into the form

and suggested solving it by a version of his method of partial inverses [25], which generates a sequence \((\mathbf {a}_n,\mathbf {b}_n)_{n\in {\mathbb {N}}}\) via

It was pointed out in [15, Section 1], [12, Section 5], [19, Appendix] and [10, Remark 2.4] that this scheme is closely related to the DRA. (In fact, Lemma 2.17 above makes it completely clear why (85) is equivalent to applying the DRA to \({\mathbf {A}}\) and \({\mathbf {B}}\), with starting point \((\mathbf {a}_0-\mathbf {b}_0)\).) However, Spingarn’s proof of finite convergence in [26] requires

and he chooses a linear functional f based on the “diagonal” structure of \({\mathbf {A}}\)—unfortunately, his proof does not work for problem (3). Our proof in the previous section at the same time simplifies and strengthens his proof technique to allow us to deal with polyhedral sets rather than just halfspaces. While every polyhedron is an intersection of halfspaces, the problems are theoretically equivalent—in practice, however, there can be huge savings as the requirement to work in Spingarn’s setup might lead to much larger instances of the product space \({\mathbf {X}}\)! It also liberates us from being forced to work in the product space. Our extension is also intrinsically more flexible as the following example illustrates.

Example 4.2

Suppose that \(X =\mathbb {R}^2\), that \(A =\left\{ {(x, x)}~\big |~{x \in \mathbb {R}}\right\} \) is diagonal, and that \(B =\left\{ {(x, y)\in \mathbb {R}^2}~\big |~{-y \le x \le 2}\right\} \). Clearly, B is polyhedral and \(A\cap {\text {int}}\,B\ne \varnothing \). Moreover, B is also not the Cartesian product of two polyhedral subsets of \(\mathbb {R}\), i.e., of two intervals. Therefore, the proof of finite convergence of the DRA in [26] no longer applies. However, A and B satisfy all assumptions of Theorem 3.7, and thus the DRA finds a point in \(A\cap B\) after a finite number of steps (regardless of the location of starting point). See Fig. 1 for an illustration, created with GeoGebra [13].

4.2 Solving linear equalities with a strict positivity constraint

In this subsection, we assume that

and that

where \(L\in \mathbb {R}^{M\times N}\) and \(a\in \mathbb {R}^M\). Note that the set \(A\cap B\) is polyhedral yet \(A\cap B\) has empty interior (unless \(A=X\) which is a case of little interest). Thus, Spingarn’s finite-convergence result is never applicable. However, Theorem 3.7 guarantees finite convergence of the DRA provided that Slater’s condition

holds. Using [5, Lemma 4.1], we obtain

where \(L^\dagger \) denotes the Moore-Penrose inverse of L. We also have (see, e.g., [2, Example 6.28])

where \(\xi ^+ = \max \{\xi ,0\}\) for every \(\xi \in \mathbb {R}\). This implies \(R_A:X\rightarrow X:x\mapsto x-2L^\dagger (Lx-a)\) and \(R_B:X\rightarrow X:x =(\xi _1, \ldots , \xi _N) \mapsto (|\xi _1|,\ldots ,|\xi _N|)\). We will compare three algorithms, all of which generate a governing sequence with starting point \(z_0\in X\) via

The DRA uses, of course,

The second method is the classical method of alternating projections (MAP) where

while the third method of reflection–projection (MRP) employs

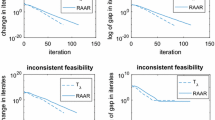

We now illustrate the performance of these three algorithms numerically. For the remainder of this section, we assume that that \(X =\mathbb {R}^2\), that \(A =\left\{ {(x, y) \in \mathbb {R}^2}~\big |~{x +5y =6}\right\} \), and that \(B =\mathbb {R}_+^2\). Then A and B satisfy (89), and the sequence (93) generated by the DRA thus converges finitely to a point in \(A\cap B\) regardless of the starting point. See Fig. 2 for an illustration, created with GeoGebra [13]. Note that the shadow sequence \((P_Az_n)_{n\in {\mathbb {N}}}\) for the DRA finds a point in \(A\cap B\) even before a fixed point is reached. For each starting point \(z_0 \in [-100, 100]^2 \subseteq \mathbb {R}^2\), we perform the DRA until \(z_{n+1} =z_n\), and run the MAP and the MRP until \(d_B(z_n) =\max \{d_A(z_n), d_B(z_n)\} <\varepsilon \), where we set the tolerance \(\varepsilon =10^{-4}\). Figure 3 compares the number of iterations needed to stop each algorithm. Note that even though we put the DRA at an “unfair disadvantage” (it must find a true fixed point while the MAP and the MRP will stop with \(\varepsilon \)-feasible solutions), it does extremely well. In Fig. 4, we level the playing field and compare the distance from \(P_Az_n\) (for the DRA) or from \(z_n\) (for the MAP and the MRP) to B, where \(n \in \{5, 10\}\).

Now we look at the process of reaching a solution for each algorithm. For the DRA, we monitor the shadow sequence \((P_Az_n)_{n\in {\mathbb {N}}}\) and for the MAP and the MRP, we monitor \((z_n)_{n\in {\mathbb {N}}}\), which is actually the same as \((P_Az_n)_{n\in {\mathbb {N}}}\). Because all three monitored sequences lie in A, we are concerned about the distance to B. Our stopping criterion is therefore

for all three algorithms. From top to bottom in Fig. 5, we check how many iterates are required to get to tolerance \(\varepsilon =10^{-m}\), where \(m \in \{2, 4\}\), respectively. Computations were performed with MATLAB R2013b [18]. These experiments illustrate the superior convergence behaviour of the DRA compared to the MAP and the MRP.

5 The hyperplanar-epigraphical case with Slater’s condition

In this section we assume that

We will work in \(X\times \mathbb {R}\), where we set

and

Then

and the projection onto B is described in the following result.

Lemma 5.1

Let \((x, \rho ) \in (X\times \mathbb {R}){\backslash } B\). Then there exists \(p\in X\) such that \(P_B(x, \rho ) =(p, f(p))\),

and

Moreover, the following hold:

-

(i)

If u is a minimizer of f, then \(\left\langle {u -p},{x -p} \right\rangle \le 0\).

-

(ii)

If x is a minimizer of f, then \(p =x\).

-

(iii)

If x is not a minimizer of f, then \(f(p) <f(x)\).

Proof

According to [2, Proposition 28.28], there exists \((p,p^*)\in {\text {gra }}\partial f\) such that \(P_B(x, \rho ) =(p, f(p))\) and \(x = p +(f(p) -\rho )p^*\). Next, [2, Propositions 9.18] implies that \(\rho <f(p)\) and (100). The subgradient inequality gives

Hence \(f(p) \le f(x)\), and this completes the proof of (99),

-

(i)

It follows from (100) that \(\left\langle {u -p},{x -p} \right\rangle \le (f(u) -f(p))(f(p) -\rho )\). Since \(f(p) -\rho >0\) and \(f(u) \le f(p)\), we have \(\left\langle {u -p},{x -p} \right\rangle \le 0\).

-

(ii)

Apply (i) with \(u =x\).

-

(iii)

By (99), \(\rho < f(p) \le f(x)\). We show the contrapositive and thus assume that \(f(p) =f(x)\). Since \(f(p) -\rho >0\), (101) yields \(p^* =0\), and so \(0 \in \partial f(p)\). It follows that p is a minimizer of f, and therefore x also minimizes f.

\(\square \)

Remark 5.2

When \(X =\mathbb {R}\) and u is a minimizer of f, then \((u -p)(x -p) \le 0\) by Lemma 5.1(i). Therefore, p lies between x and u (see also [4, Corollary 4.2]).

Define the DRA operator by

It will be convenient to abbreviate

and to analyze the effect of performing one DRA step in the following result.

Corollary 5.3

(One DRA step) Let \(z =(x, \rho ) \in X\times \mathbb {R}\), and set \(z_+ :=(x_+, \rho _+) =T(x, \rho )\). Then the following hold:

-

(i)

Suppose that \(z \in B'\). Then \(z_+ =(x, 0) \in A\). Moreover, either (\(f(x)\le 0\) and \(z_+ \in A\cap B\)) or (\(f(x) > 0\) and \(z_+ \not \in B\cup B'\)).

-

(ii)

Suppose that \(z \not \in B'\). Then there exists \(x_+^* \in \partial f(x_+)\) such that

$$\begin{aligned} x_+ =x -\rho _+x_+^*,\; f(x_+) \le f(x), \;\text {and}\; \rho _+ =\rho +f(x_+) >0. \end{aligned}$$(104)Moreover, either (\(\rho \ge 0\) and \(z_+ \in B\)) or (\(\rho <0\), \(z_+ \not \in B\cup B'\) and \(Tz_+ \in B\)).

-

(iii)

Suppose that \(z \in B\cap B'\). Then \(z_+ \in A\cap B\).

-

(iv)

Suppose that \(z \in B{\backslash } B'\). Then \(z_+ \in B\).

Proof

-

(i)

We have \(P_Az =(x, 0)\) and \(R_Az =(x, -\rho ) \in B\). Thus \(P_BR_Az =P_B(x, -\rho ) =(x, -\rho )\), which gives

$$\begin{aligned} z_+ =({\text {Id}}-P_A +P_BR_A)z =(x, \rho ) -(x, 0) +(x, -\rho ) =(x, 0) \in A. \end{aligned}$$(105)If \(f(x) \le 0\), then \(z_+ =(x, 0) \in B\), and hence \(z_+ \in A\cap B\). Otherwise, \(f(x) >0\) which implies \(-f(x) <0\) and further \(z_+ =(x, 0) \not \in B\cup B'\).

-

(ii)

We have \(R_Az =(x, -\rho ) \not \in B\), and by (99), \(P_B(x, -\rho ) =(p, f(p))\), where

$$\begin{aligned} p =x -(\rho +f(p))p^* \text { for some } p^* \in \partial f(p), \quad \text {and}\quad -\rho <f(p) \le f(x). \end{aligned}$$(106)We obtain

$$\begin{aligned} z_+ =(x_+, \rho _+) =({\text {Id}}-P_A +P_BR_A)z =(x, \rho ) -(x, 0) +(p, f(p)) =(p, \rho +f(p)), \end{aligned}$$(107)which gives \(x_+ =p\) and \(\rho _+ =\rho +f(p) >0\), so (104) holds. If \(\rho \ge 0\), then \(\rho _+ =\rho +f(x_+) \ge f(x_+)\), and thus \(z_+ \in B\). Otherwise, \(\rho <0\), so \(\rho _+ <f(x_+)\) and also \(f(x_+) >-\rho >0\). Hence \(\rho _+ >0 >-f(x_+)\), which implies \(z_+ \not \in B\cup B'\), and then \(Tz_+ \in B\) because \(\rho _+ >0\) and the previous case applies.

-

(iii)

We have \(f(x) \le \rho \le -f(x)\), and so \(f(x) \le 0\). Now apply (i).

-

(iv)

We have \(\rho \ge f(x)\) and \(\rho >-f(x)\). Then \(\rho \ge |f(x)| \ge 0\), and (ii) gives \(z_+ \in B\).

\(\square \)

Theorem 5.4

(Finite convergence of DRA in the hyperplanar-epigraphical case) Suppose that \(\inf _X f <0\), and, given a starting point \(z_0 =(x_0, \rho _0) \in X\times \mathbb {R}\), generate the DRA sequence \((z_n)_{n\in {\mathbb {N}}}\) by

Then \((z_n)_{n\in {\mathbb {N}}}\) converges finitely to a point \(z \in A\cap B\).

Proof

In view of Corollary 5.3(i)&(iii), we can and do assume that \(z_0 \in B{\backslash } B'\), where \(B'\) was defined in (103). It follows then from Corollary 5.3(iii)&(iv), that \((z_n)_{n\in {\mathbb {N}}}\) lies in B.

Case 1: \((\exists n \in \mathbb {N})\) \(z_{n}\in B\cap B'\).

By Corollary 5.3(iii), \(z_{n+1}\in A\cap B\) and we are done.

Case 2: \((\forall {n\in {\mathbb {N}}})\) \(z_n \in B{\backslash } B'\).

By Corollary 5.3(ii),

Next, it follows from [22, Lemma 7.3] that \({\text {int}}\,B =\left\{ {(x, \rho ) \in X\times \mathbb {R}}~\big |~{f(x)<\rho }\right\} \). Because \(\inf _X f <0\), we obtain \(A\cap {\text {int}}\,B \ne \varnothing \), which, due to Lemma 3.2(i) yields \(z_n \rightarrow z =(x, \rho ) \in A\cap B\). Since \(z \in A\), we must have \(\rho =0\). If \((\forall {n\in {\mathbb {N}}})\) \(f(x_{n+1}) \ge 0\), then, by (109), \(0<\rho _1\le \rho _2\le \cdots \le \rho _n\rightarrow \rho = 0\) which is absurd. Therefore,

In view of (109), we see that \((\forall n \ge n_0+1)\) \(\rho _n \le \rho _{n_0} +(n -n_0)f(x_{n_0+1})\). Since \(f(x_{n_0+1}) <0\), there exists \(n_1 \in \mathbb {N}\), \(n_1 \ge n_0 +1\) such that

Noting that \(f(x_{n_1}) \le f(x_{n_0+1})\), we then obtain

Hence \(z_{n_1} \in B'\), which contradicts the assumption of Case 2. Therefore, Case 2 never occurs and the proof is complete. \(\square \)

We conclude by illustrating that finite convergence may be deduced from Theorem 5.4 but not necessarily from the finite-convergence conditions of Sect. 2.6.

Example 5.5

Suppose that \(X =\mathbb {R}\), that \(A =\mathbb {R}\times \{0\}\), and that \(B ={\text {epi }}f\), where \(f :\mathbb {R}\rightarrow \mathbb {R}:x\mapsto x^2 -1\). Let \((\forall \varepsilon \in \mathbb {R}_{++})\) \(z_\varepsilon = (1 +\varepsilon , -\varepsilon )\). Then \((\forall \varepsilon \in \mathbb {R}_{++})\) \(Tz_\varepsilon \notin {\text {Fix }}T =[-1,1]\times \{0\}\), and \(z_\varepsilon -Tz_\varepsilon \rightarrow 0\) as \(\varepsilon \rightarrow 0^+\). Consequently, Luque’s condition (41) fails.

Proof

Let \(\varepsilon \in \mathbb {R}_{++}\). Then \(-f(1 +\varepsilon ) =-2\varepsilon -\varepsilon ^2 <-\varepsilon \), and so \(z_\varepsilon =(1 +\varepsilon , -\varepsilon ) \notin B'\). By Corollary 5.3(ii), there exists \(x_\varepsilon \in \mathbb {R}\) such that

The last inequality shows that \(Tz_\varepsilon \notin {\text {Fix }}T =[-1,1]\times \{0\}\). It follows from the expression of \(x_\varepsilon \) that

Note that \((x_\varepsilon ,f(x_\varepsilon ))+ (0,-\varepsilon ) = Tz_\varepsilon = z_\varepsilon -P_Az_\varepsilon + P_BR_Az_\varepsilon = (1+\varepsilon ,-\varepsilon )-(1+\varepsilon ,0)+P_B(1+\varepsilon ,\varepsilon ) = P_B(1+\varepsilon ,\varepsilon )+(0,-\varepsilon )\) and hence \((x_\varepsilon , f(x_\varepsilon )) =P_B(1 +\varepsilon , \varepsilon )\). Remark 5.2 gives \(0 \le x_\varepsilon \le 1 +\varepsilon \). If \(0 \le x_\varepsilon \le 1\), then (114) yields \(0 \le 2x_\varepsilon -(1 +2\varepsilon )x_\varepsilon -1 -\varepsilon =(x_\varepsilon -1) -2\varepsilon x_\varepsilon -\varepsilon <0\), which is absurd. Thus \(1 <x_\varepsilon \le 1 +\varepsilon \). Now as \(\varepsilon \rightarrow 0^+\), we have \(x_\varepsilon \rightarrow 1\), \(f(x_\varepsilon ) \rightarrow f(1) =0\), and at the same time, \(z_\varepsilon -Tz_\varepsilon =(1 +\varepsilon -x_\varepsilon , -f(x_\varepsilon )) \rightarrow 0\). \(\square \)

Notes

Recall that a cone K is pointed if \(K\cap (-K)\subseteq \{0\}\).

Recall that a set is polyhedral if it is a finite intersection of halfspaces.

Recall that \(S:X\rightarrow X\) is skew if \(S^*=-S\).

References

Bauschke, H.H., Bello Cruz, J.Y., Nghia, T.T.A., Phan, H.M., Wang, X.: The rate of linear convergence of the Douglas–Rachford algorithm for subspaces is the cosine of the Friedrichs angle. J. Approx. Theory 185, 63–79 (2014)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, Berlin (2011)

Bauschke, H.H., Combettes, P.L., Luke, D.R.: Finding best approximation pairs relative to two closed convex sets in Hilbert spaces. J. Approx. Theory 127, 178–192 (2004)

Bauschke, H.H., Dao, M.N., Noll, D., Phan, H.M.: Proximal point algorithm, Douglas–Rachford algorithm and alternating projections: a case study. J. Convex Anal. 23 (2016)

Bauschke, H.H., Kruk, S.G.: Reflection–projection method for convex feasibility problems with an obtuse cone. J. Optim. Theory Appl. 120, 503–531 (2004)

Bauschke, H.H., Noll, D., Phan, H.M.: Linear and strong convergence of algorithms involving averaged nonexpansive operators. J. Math. Anal. Appl. 421, 1–20 (2015)

Borwein, J.M., Moors, W.B.: Stability of closedness of convex cones under linear mappings. J. Convex Anal. 16, 699–705 (2009)

Cegielski, A.: Iterative Methods for Fixed Point Problems in Hilbert Spaces. Springer, Berlin (2012)

Censor, Y., Zenios, S.A.: Parallel Optimization. Oxford University Press, Oxford (1997)

Combettes, P.L.: Iterative construction of the resolvent of a sum of maximal monotone operators. J. Convex Anal. 16, 727–748 (2009)

Douglas, J., Rachford, H.H.: On the numerical solution of heat conduction problems in two and three space variables. Trans. AMS 82, 421–439 (1956)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293–318 (1992)

GeoGebra software. http://www.geogebra.org

Hesse, R., Luke, D.R.: Nonconvex notions of regularity and convergence of fundamental algorithms for feasibility problems. SIAM J. Optim. 23, 2397–2419 (2013)

Lawrence, J., Spingarn, J.E.: On fixed points of nonexpansive piecewise isometric mappings. Proc. Lond. Math. Soc. Third Ser. 55, 605–624 (1987)

Lions, P.-L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16, 964–979 (1979)

Luque, F.J.: Asymptotic convergence analysis of the proximal point algorithm. SIAM J. Control Optim. 22, 277–293 (1984)

MATLAB software. http://www.mathworks.com/products/matlab/

Mahey, P., Oualibouch, S., Tao, P.D.: Proximal decomposition on the graph of a maximal monotone operator. SIAM J. Optim. 5, 454–466 (1995)

Mordukhovich, B.S., Nam, N.M.: An easy path to convex analysis and applications. Morgan & Claypool Publishers, San Rafael (2014)

Phan, H.M.: Linear convergence of the Douglas–Rachford method for two closed sets. Optimization (2015). doi:10.1080/02331934.2015.1051532

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

Spingarn, J.E.: Partial inverse of a monotone operator. Appl. Math. Optim. 10, 247–265 (1983)

Spingarn, J.E.: A primal–dual projection method for solving systems of linear inequalities. Linear Algebra Appl. 65, 45–62 (1985)

Acknowledgments

The authors thank an anonymous referee for careful reading and constructive comments. H.H.B. was partially supported by the Natural Sciences and Engineering Research Council of Canada and by the Canada Research Chair Program. M.N.D. was partially supported by an NSERC accelerator grant of H.H.B.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bauschke, H.H., Dao, M.N., Noll, D. et al. On Slater’s condition and finite convergence of the Douglas–Rachford algorithm for solving convex feasibility problems in Euclidean spaces. J Glob Optim 65, 329–349 (2016). https://doi.org/10.1007/s10898-015-0373-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-015-0373-5

Keywords

- Alternating projections

- Convex feasibility problem

- Convex set

- Douglas–Rachford algorithm

- Epigraph

- Finite convergence

- Method of reflection–projection

- Monotone operator

- Partial inverse

- Polyhedral set

- Projector

- Slater’s condition