Abstract

Making activities and environments have been shown to foster the development of computational thinking (CT) skills for students in science, technology, engineering, and math (STEM) subject areas. To properly cultivate CT skills and the related dispositions, educators must understand students’ needs and build awareness of how CT informs a deeper understanding of the academic content area. “Assessing Computational Thinking in Maker Activities” (ACTMA) is a design-based research study that developed a curricular unit around physics, making, and CT. The project in this paper studied how instructors could use formative assessment to uncover students’ prior knowledge and improve their use of CT. This study aims to provide a qualitative analysis of one lesson in the unit implementation of an informal makerspace environment that strived to be culturally responsive. The study examined “moments of notice,” or instances where formative assessment could guide students’ understanding of CT. We found elements in the establishment of a classroom culture that can generate a continual use of informal formative assessment between instructors and students. This culture includes using materials in conjunction with the promotion of CT concepts and dispositions, focusing on drawing for understanding, the practice of debugging, and fluidity of roles in the learning space.

Makerspaces

The recent rally around the concept of computational thinking (CT) is an opportunity to be more intentional about the integration of science, technology, engineering, and math (STEM). As Martin (2018) stated, CT is the “connecting tissue” between computer science and disciplinary knowledge. Computation has become so intertwined with the work of STEM (Henderson et al. 2007) that it is nearly impossible to do research or solve practical problems in any scientific or engineering discipline without the ability to think computationally. Seeing CT as an integral part of the integration of STEM, the Next Generation Science Standards (NGSS) has placed it as one of its key practices (“Using mathematics and computational thinking”). Students need to develop CT skills as part of the NGSS focus on deeper understanding of key scientific concepts and their relevance to students’ lives (NGSS Lead States, 2013). It is imperative that all students are given the opportunity to develop CT skills.

As CT is the process that students need to understand to fully participate in STEM, it is important to develop activities to help students carry out this process. A number of studies introduced students to CT mostly through programming skills (e.g., Tarkan et al. 2010; Touretzky et al. 2013; Kazimoglu et al. 2012). However, programming is only one part of CT. Some researchers have turned to the maker movement for a more integrated approach to develop CT skills and dispositions (e.g., Basawapatna et al. 2010; Denner et al. 2012; Wolz et al. 2011; Brennan and Resnick 2012; Brady et al. 2016). Making involves developing an idea into a tangible artifact. Makerspaces, where making happens in community, are built upon the dispositions that enhance CT, such as perseverance, tolerance for ambiguity, communication, and collaboration (International Society for Technology in Education and Computer Science Teachers Association 2011). Because makerspaces have roots in the hackerspace culture, computing and technology play a key role in problem solving and product development (Cavalcanti 2013). The iterative CT process of Use-Modify-Create (Lee et al. 2011) mirrors the kind of hacking that one would find in makerspaces. Making also has the potential to give students agency over their own learning and develops their understanding of concepts through new materials, tools, and methods (Herold 2016).

Makerspaces have the potential to provide a context of CT in STEM that fits into Bransford, Brown, and Cocking’s description of an effective learning environment (1999), an interconnected system of knowledge-centered, learner-centered, community-centered, and assessment-centered perspectives. Knowledge-centered environments help students become knowledgeable by understanding a domain so well that they can transfer their skills to varied contexts (Bruner 1981; Collins et al. 1988). Makerspaces can be knowledge-centered, in which students “practice” as active learners as if they were professionals in a field working directly with materials. Making supports youth in learning STEM content and practices, situating their learning for them to feel they are part of a community of practice even as novices (Lave and Wenger 1991) and developing their identities as people in STEM. This engagement results in sustained practices in STEM and CT, as students enthusiastically return to explore ways to better build their projects. The maker’s iterative process can help students progressively make meaning of certain concepts, as well as being a type of scaffolding in the gradual development of skills and practices (Kolodner et al. 2003).

Makerspaces can be learner-centered, i.e., culturally responsive to young people and their identities in STEM. Researchers showed that employing the iterative cycle of a design process, often used in makerspaces, can help elicit prior knowledge as well as misconceptions (Kolodner et al. 2003). Blikstein (2013) noted how, especially in low-income schools, many students had experience with hands-on projects because they had family or friends that had manual labor jobs, but that this experience was disconnected from their school life. Valuing prior knowledge and funds of knowledge (Moll et al. 1992) can help students redefine their communities and their own identities (Chávez and Soep 2005; Esteban-Guitart and Moll 2014), especially in the areas of STEM, making them realize what they are capable of (Fields and King 2014). By funds of knowledge, we are referring to the historical accumulation of abilities, information, assets, and ways of interacting that cultures build (Vélez-Ibáñez and Greenberg 1992).

Makerspaces are generally community-centered and attached to a growing and strong culture, which can help students feel as though they belong to an academic community (Farrington et al. 2012), and can make feedback useful and non-threatening. Maintaining this kind of community is central to the maker movement (Litts 2015). Whether a student is in a room of other students working on related projects, or in a makerspace with experts, or working alone in their room but interacting with making groups online, they are operating within a community. What is most important in makerspaces is neither the space nor the equipment, but how they foster learning and agency by encouraging inquiry through collaboration. Makers are identified as having a culture, and the keys to the advancement of this culture are learning and working with others (Tierney 2015).

Formative Assessments in Makerspace

Assessments are needed to measure learning outcomes. The introduction of assessments into a making environment, however, can be somewhat controversial. Making is built on the constructionist ideas of learning through making (Harel and Papert 1991), and any type of assessment that occurs while a student is making and working towards her own end is an interruption (Martinez and Stager 2013). Consequently, structured activities and lessons conflict with the go-at-your-own-pace ethos of the makerspace. However, assessment is necessary to assist the student in determining what they know (learner-centered), what they need to know (knowledge-centered), and how they can share what they have learned (community-centered), all of which are objectives that align with constructionism. Makerspaces can be assessment-centered if informal, observational formative feedback is part of the culture, is provided throughout the process, and assists in the progress of the project, providing students with opportunities to revise their thinking and understanding. The goal is for students to gain metacognitive abilities like CT for better understanding beyond mere fact memorization (Bransford et al. 1999).

Formative assessments guide and advance learning as it is happening, as opposed to summative assessments, which are useful in determining what students have learned for use beyond the classroom (National Research Council 2014). Cowie and Bell (1999) define formative assessment as “the process used by teachers and students to recognize and respond to students learning in order to enhance that learning, during the learning.” Formative assessments can be classified into formal and informal practices (e.g., Furtak and Ruiz-Primo 2007; Shavelson et al. 2008). Formal formative assessment involves providing evidence about students’ learning by gathering data, taking time to analyze and interpret the data, and then planning actions based on the analysis. Examples of formal formative assessments are the worksheets or short writing assignments a teacher may ask students to do in class. Informal formative assessment is evidence of learning generated during daily activities, where the teacher elicits information from the student, reacts in the moment to that information, and then uses that information immediately to shape the lesson. They are conversational and embedded in the classroom discussion.

If effectively implemented, formative assessments that are closely aligned and integrated with instruction yield results that can help guide teaching decisions. For students to develop CT skills, or any strategic processing skills, they need to be aware of when to use the skills and how to use them in situ. Instructors can provide feedback to students about how they can improve their own learning, and students adjust accordingly (Black and Wiliam 2009). In this way, formative assessment can improve content learning as well as metacognitive understanding. Implemented effectively, formative assessment guides students in understanding learning objectives, and what strategies they need to learn the content to meet that objective (Heritage 2010). As such, formative assessment sets the stage for the development of metacognitive skills (Nicol and Macfarlane-Dick 2006; Hudesman et al. 2013). Assessing what metacognitive understanding and performance students bring to the classroom provides a foundation for new knowledge (Winne 1995). Such strategies can be practiced in context for students to understand their importance and power (Schoenfeld 2016). By engaging in formative assessment to highlight the CT skills being used in the moment, teachers can encourage students to reflect on the usefulness of those strategies and how they work with their individual learning path to improve their CT skills.

Research Question

To effectively incorporate CT into a K–12 curriculum, it is necessary to provide teachers with guidance for how to assess it (Grover and Pea 2013). As reported by Tang et al. (2018), most CT-related research has assessed the development of CT skills in computing-related subjects like programming, in formal classroom contexts, and with assessments designed for summative purposes. This study examines the use of formative assessment of CT in a physics/engineering unit that integrated making activities. In particular, we examined the implementation of an activity from the unit and how formative assessment was used with one group of students in a summer program. In this activity, students were asked to build and diagram electric circuits and exchange those diagrams with other groups, who then had to recreate the circuit.

In this paper, we share the observations of the implementation of the activity in an electricity unit that integrated CT designed for high school students studying or interested in physics and engineering. Our goal was to identify points at which informal formative assessment could elicit CT or help understand whether CT was happening. Our inquiry was guided by the research question “What kinds of informal formative assessment approaches can facilitate student understanding of CT as applied to physics/engineering-based making?”

Method

Case study

This article presents a single qualitative case study investigating the implementation of an activity in an electricity unit that integrated CT designed for high school students studying or interested in physics and engineering. The method was undertaken to acquire in-depth understanding of interactions between students and an instructor in a makerspace environment (Creswell 2013). We were interested in “moments of notice” where informal formative assessment of CT took place, or could potentially take place. This is an instrumental case study, or the study of a case to provide insight into a particular issue (Stake 1995). The issue, in this instance, is the informal formative assessment of CT skills in a makerspace environment.

Setting

The observed case took place at the main branch of the library located in a large Midwestern city in the summer of 2016. The library is accessible by many lines of public transportation, allowing students from different parts of the city to convene there. This library also has a large makerspace and is a hub for training informal educators as makerspace facilitators for the other branches in the city.

Professional Development for Instructors

The instructors of this study participated in professional development that focused on physics concepts, CT, and cultural responsiveness in learning environments. The professional development lasted about 12 h and 13 library staff participated. Although most participants of the professional development led makerspaces, the library staff had varied experiences with physics. They were given a refresher course on electricity, circuits, and magnets. The professional development focused on process-based CT concepts as described by Csizmadia et al. (2015) (decomposition, pattern recognition, abstraction, algorithm design, evaluation) and the dispositions proposed by ISTE & CSTA (2011) (e.g., confidence in dealing with complexity, persistence in working with difficult problems, ability to communicate and work with others to achieve a common goal or solution). The professional development focused on these two definitions of CT because of their clarity, accessibility, and overlap with the work instructors are often already doing. Library staff completed activities that helped them understand the concepts in terms of CT, using examples that they would find relevant to their lives (e.g., For decomposition, “How would you plan a party? What are the different problems that make up this one event?”; For pattern recognition, paying attention to repeated actions they take every morning to get to school or work, “What is your daily routine when you go to school/work?”; For algorithm design, following a recipe, “How do you cook your favorite food? Can you describe the procedure so that others can replicate it?”). The focus on relevance was also part of the professional development on cultural responsiveness in learning environments. 
Participants discussed cultural responsiveness in terms of flexibility in collaborative and individual learning spaces that encourage play and failure, integrating the students’ lives and culture, connecting students to resources within and outside of the classroom, and using inclusive teaching techniques. These inclusive teaching techniques include incorporating diverse learning styles, holding high expectations, continually assessing student understanding, and scaffolding learning based on that assessment (Ladson-Billings 1995; Sleeter 2012).

After the professional development, three library staff members were able to attend the summer academy for our study and they acted as mentors for students, assisting the lead instructor of the summer academy.

Curriculum Unit: Physics and Engineering in Everyday Life

Our study was conducted in a 9-day summer academy held in the public library. The arc of the unit of the summer academy was intended to enhance the integration between the physics content and CT. The unit began with basic circuitry, including series and parallel circuits, then moved onto e-textiles, Makey Makeys, and then culminated with Arduinos. Aside from activities to reinforce the understanding of content through making, the unit included formal formative assessment activities (worksheets and notebook reflections), as well as team building activities to encourage community development through content review. The goal of the unit is to expose students to CT problem-solving skills as they progress from completing simpler electricity prompts (that do not use computers) to more complex and open-ended projects using the Makey Makey and Arduino—tools that demonstrate what is possible with CT in combining their physics learning in the hardware and computing learning in the software. Each activity is meant to spiral the learning back to previous concepts, but with more depth at each point. In other words, as Bruner (1960) suggested, subjects were taught at levels of gradually increasing difficulty, spiraling to more and more complex ideas.

The activity this study focused on took place one third of the way through the unit, on the third day of the summer program. By that point, students had already learned how to make a basic circuit, how to build series and parallel circuits, and how to diagram a circuit. Nineteen students, ranging in age from 13 to 18, participated in the program, and they were seated at five different tables, four or five to a table. An experienced library staff member led the summer academy, and he also participated in the development of the maker activities. Each of the five tables had a mentor, who assisted the students. The mentors all participated in the professional development described above.

Each day before this study, students took part in team building activities to warm up for the day. Students were also continually encouraged to use their notebook to record their ideas and what they learned, as well as draw the simple, series, and parallel circuits they had built. A mentor was seated at each table, and there was a lead instructor that directed the activity. The lead instructor placed on all of the tables a collection of alligator clips and components (light bulbs, LEDs, buzzers, switches, and buttons), as well as batteries. He asked the students to work in groups to create a circuit that included at least one instance of a parallel circuit and used more than two components. The instructor advised the students that they could make any kind of circuit they liked, as long as it met the requirements. Students were asked to draw their circuits in their notebooks.

After 25 min of building the circuit, the lead instructor gave the students 5 min to draw out the schematic of a circuit they built on a large post-it note. They then passed the schematic they drew to the next group. At that point, the students had 10 min to recreate the circuit from the schematic they were given. All of the groups traveled together from table to table to compare the recreated circuit to the original. Students from the original group tested the recreated circuits to determine whether they accurately reproduced the original circuit. At the end of each group interaction, a short discussion took place. If students made a mistake when drawing on the large post-it, they were instructed to use masking tape to correct the mistake, instead of starting all over, so they could reflect and discuss what they had drawn incorrectly.

For the purpose of this study, one table was selected and explored in more depth and detail. The research was focused on a table of four students who identified as female and the mentor that worked with them. Three of the students identified as Latinx/Hispanic and the other as Asian. The mentor identified as Latinx/Hispanic and male. One student would occasionally speak to the mentor in Spanish, but he consistently responded in English. This table was selected because the mentor had a more interactive approach than the other table mentors, one that let the students solve problems more on their own. In addition, this mentor had working experience with electronics in makerspaces; therefore, he was more familiar with the content than other table mentors.

Data Collection

We collected multiple sources of data including student engineering notebooks, field observations, photographs, and video and audio recordings of the events surrounding the activities to flesh out a rich description of the learning experience (Creswell 2013). Student notebooks were photocopied. The first author, who attended the whole process of activity development and professional development, sat with the group, closely observing the interactions, writing descriptive notes, and taking pictures. The team recorded audio and video of the table.

Data Analysis

Specifically, the team focused on coding and analyzing “moments of notice,” meaningful points in the activities where students have an opportunity to demonstrate CT skills based on formal and informal formative assessment. We classified key CT constructs that were applied in the activities. These constructs included the concepts of Csizmadia et al. (2015) and the dispositions proposed by ISTE & CSTA (2011). We also coded for the conditions and practices that reflected cultural responsiveness (Ladson-Billings 1995; Sleeter 2012).

The researchers discussed initial observations in person during debriefings, immediately after the activities took place, and then in phone meetings after more in-depth analysis. To improve descriptive validity, we used a triangulation of the observation notes, video, and audio. Triangulation is a process providing cross-validation of qualitative data, which allows researchers to compare information and to determine findings and confirmation of data (Ma and Norwich 2007). We used the observation notes as the primary source, with video and audio to double check the accuracy and provide exact transcripts.

To reduce the effects of researcher biases on the interpretations of the findings, the first and second authors independently reviewed and coded the observation notes. They then looked across these cases for patterns that seemed to emerge and created memos. They specifically focused on moments of formal and informal formative assessment, as well as classroom conditions and practices. Through this process, the team developed broad themes on the implementation of informal formative assessment in making environments.

Findings

After the assigned mentor suggested the students start with a simple circuit and build onto it, the students decided on incorporating a single pole double throw switch (SPDT) into their circuit. SPDTs have three terminals: one common pin and two pins which alternate their connection to the common pin. By exploring with the mentor to understand how the switch worked, the students created a circuit that lit an LED or an incandescent bulb (depending on how the switch was thrown). They drew the iterations of their circuits in their notebooks and then on the large post-it for another group to recreate. The other group had difficulty connecting the power correctly on the circuit in their recreation, but the original creators of the circuit helped them correct the problem. When it came time to recreate another group’s circuit (a parallel circuit with two different switches to control an LED or an incandescent bulb), the students did so with much less input from the mentor than when they were creating their initial circuit.
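The switching behavior the students discovered can be sketched in a few lines of Python. This is an illustrative model only, not part of the study's materials: the names `Throw`, `WIRING`, and `lit_component` are hypothetical, and the assignment of the LED to one outer pin and the bulb to the other is an assumption made for the example.

```python
from enum import Enum
from typing import Optional


class Throw(Enum):
    """Position of the SPDT lever: which outer pin the common pin touches."""
    A = "A"
    B = "B"


# Hypothetical wiring, chosen for illustration: pin A feeds the LED,
# pin B feeds the incandescent bulb.
WIRING = {Throw.A: "LED", Throw.B: "incandescent bulb"}


def lit_component(throw: Throw, power_connected: bool = True) -> Optional[str]:
    """Return the component that receives current, or None if nothing is lit."""
    if not power_connected:
        return None
    return WIRING[throw]


if __name__ == "__main__":
    for position in Throw:
        print(position.name, "->", lit_component(position))
```

The model makes the key property of the switch explicit: the common pin is connected to exactly one of the two outer pins at a time, so only one of the two components can be lit.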

The activity we observed highlighted how the Bransford et al. (1999) perspectives on learning environments can structure informal formative assessment of CT. We observed four key approaches to informal formative assessment for CT: (a) using materials in conjunction with the promotion of CT concepts and dispositions, (b) focusing on drawing for understanding, (c) the practice of debugging, and (d) fluidity of roles.

Using Materials in Conjunction with the Promotion of CT Concepts and Dispositions

The “moments of notice” for informal formative assessment were most apparent when the mentor used CT language while interacting with materials in the making of the circuit. Once they were ready to build onto their simple circuit, the mentor asked the students what all of their “variables” were, prompting them to physically categorize their power source, switches, buttons, lights, and buzzers into groups on the table. Additionally, by asking what “end result” the students wanted, he made them consider what they wanted their output to be as they were determining their input.

Mentor: If that’s your first circuit, how can you make it a little more complicated? How can you change it? What if you use one of the other emitters, how would that work? What different … You have a power source, you have the wires, right? You also have these, ah … controllers here. What controllers do you have? You have this and this and this. Is that it? And you have a couple of those. So these are your two choices. You only 1 type of battery. What kind of end results do you have? For like?

Student 1: You mean … like right now the light is pretty dim.

Mentor: So right now, you have a bulb, what else can you be using instead of a bulb as well… get all of your variables.

Student 3: This

Mentor: An LED? What else do you have?

Student 3: Where’s the positive?

Mentor: Positive is the right side

Student 3: So then you connect the positive here.

Mentor: That’s right. Do you have anything else besides that? So you have the switches for controlling, you wire for connecting, you can only have one type of battery.

Student 1 starts taking apart simple circuit and adding LED. It doesn’t light up.

Student 2: You need more battery for that light.

Student 3 and Student 4 take apart the circuit they were building to give Student 1 and Student 2 more batteries.

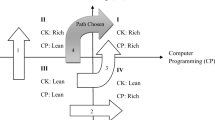

Although the students were struggling with understanding how the SPDT worked, they continued playing with it as the mentor encouraged them to try different connections (Fig. 1). He asked the students where they saw “patterns” to determine how the connections should be made, as they connected the different contacts with power and current switching. Eventually, they understood how the switch worked by recognizing the patterns of whether the LED or light bulb was lit depending on how they made the connections and how the switch was thrown. The mentor also asked the students to “trace” the circuit, gesturing along the circuit to each of the different components, in order to understand the current’s path. He did not do it here, but he could have used the term “debugging” to describe their process.

Mentor: No, see right now you’re just going in a loop. See? It comes out here and just goes right back around to here [gestures along the circuit].

Student 1: Yeah, but now right there, no ...

Mentor: No, you’re right. At this moment here. Now, can you get that one to light up? Oh, I see why. Do you guys see why? Trace the … So follow this power right here [gestures along circuit] to see where it goes. Oh you guys moved it back. Okay, I’m reverting it back to where you guys had it a moment ago. So this is a positive and it goes out. Someone follow this line, so you understand [Student 1 gestures along the circuit]. Now, look at the switch. You saw this earlier. So can you try to guess what happened earlier. So earlier when you had it like this, you could turn on that light. And when you put it over here, you can do it again. But when you put it over here, they wouldn’t light.

Student 1: The polarity?

Mentor: It’s not about polarity, actually. I’m going to put it back to how you had it a moment ago and see if you guys can break it down again. Remember this one here is the one with the power.

Student 2: What if we do this?

Mentor: Close, but now you have the exact same thing happening as earlier. Hm. It’s actually not working now. I think that it’s just that the contact is weak. Is this an LED? It looks a little burned out. Are you sure your polarity is correct?

Student 1: Negative … put it the other way around

Student 3: [Gestures]

Mentor: Are they touching each other?

[it works]

Student 1: Ah!

The mentor also used the interaction with the materials as a way to incorporate language around CT dispositions (ISTE & CSTA, 2011). As the students iterated on their circuit, he continually asked how they could complicate it further, expressing high expectations and pushing them to be comfortable with complicating their circuit, while assessing whether they knew what they could do next. He also asked them, “How do you guys feel?” as they were in the process of recreating the circuit from the diagram given to them, allowing space for them to express frustration or confusion. In order to promote effective communication skills and solidify their understanding, he encouraged the students to explain how the push button or SPDT worked to each other and to other mentors, using gestures as well as words. Using language to challenge, connect emotionally, and elicit communication created an overlap of an assessment-centered environment with that of a learner-centered one by paying careful attention and being sensitive to the attitudes and beliefs learners bring to the educational setting, as well as setting high expectations that are scaffolded along the way (Bransford et al. 1999).

Focusing on Drawing

The drawings served as a focal point of assessment, moving the mentor and the students between formal and informal formative assessment. Because the students were asked to draw their circuits in the previous activities, it became part of their practice and part of the community norms. They drew every iteration of the circuit as they developed it without being prompted, showing their drawings to each other and to the mentor. They had varying success in conforming to the common schematic format. One student initially drew the circuit wires as they were configured in reality, but she adjusted her later drawings by observing what her peers were doing and by receiving guidance from the mentor (Fig. 2). The mentor checked the drawings to ensure comprehension and to discuss how to draw an SPDT (Fig. 3). Importantly, the abstracted drawing of the SPDT itself clarified how the switch worked for the students. By encouraging an externalization of their thinking through drawing, a learner- and knowledge-centered environment is promoted concurrently with an assessment-centered one, clarifying the level of student skill for the mentor and developing a deeper understanding of the physics and CT content for the student.

Student 2: Could we just use the switch symbol then?

Mentor: I think you should use this symbol (showing the SPDT symbol they Googled on a phone) maybe and draw the 2 paths. It would be a learning for the whole group if they didn’t do what you did here.

Student 2: El otro?

Mentor: Yeah, it’s single pull as he was saying and double throw

Researcher: Wait, why single?

Mentor 1: There is only one power source. Two ends.

Student 2: [Inaudible] es bueno, right?

Mentor: … So there are switches with three positions. There is one on the left to start this one, there is one in the middle to turn one off like this this one doesn’t actually lock there and one on the right to turn the other one off. There are 3 position switches. This is not one of them.

Student 2: Both end for two switches

Mentor: You would have a variety of methods for doing that. If you got more complicated, you could do that so that it lights up one or the other, that would involve two more of these switches where one switch controlled whether it was powered by two switches or one switch, later down the line.

Student 2: Can I see the picture?

Mentor: Yeah. [shows phone].

[girls busily draw circuit]

Mentor: This thing? (points to Student 2 drawing) You draw it as one circuit being closed or one being open.

Student 4: Like this?

Mentor: Yeah, either this one or this one. They’re both the same.

Student 2: Like this? [showing her drawing]

Mentor: Yeah, that’s right.

The drawings also provided a point of discussion about abstraction: Did the students have to draw both batteries? They agreed that they would draw the batteries for the purpose of this activity, but the mentor reminded them that it would not be necessary to do so in professional practice because just the voltage could be indicated.

In another instance, in order to prepare the students for whatever schematic they would have to recreate, the mentor discussed with them how LEDs, buzzers, light bulbs, etc., could all be abstracted to being resistors, and that they might encounter drawings that use only the resistor symbols for a component. The mentor was not explicit about the benefits of abstraction in computing, but with more intention, an instructor could use this activity to address the elimination of unnecessary details to communicate what is important to the computer and facilitate communication, in order to scale to more complex operations (National Research Council 2004). These ideas situate the learning in a real-world context, making it relevant for a knowledge-centered lens.
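The abstraction the mentor described, treating an LED, a buzzer, or a light bulb as simply a resistor and indicating only the voltage rather than each battery, can be illustrated with a short Python sketch. It is not part of the study's materials: the component names, resistance values, and the 3 V supply are assumptions chosen for illustration, and a real LED does not behave as a linear resistor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A circuit part reduced to the one property the schematic needs."""
    label: str               # what the part is in the physical circuit
    resistance_ohms: float   # assumed, illustrative value


def series_current(voltage: float, parts: list[Component]) -> float:
    """Ohm's law for a single series loop: I = V / (R1 + R2 + ...)."""
    total = sum(p.resistance_ohms for p in parts)
    if total <= 0:
        raise ValueError("total resistance must be positive")
    return voltage / total


# Two physically different loops that reduce to the same abstract schematic.
loop_a = [Component("LED", 100.0), Component("buzzer", 50.0)]
loop_b = [Component("light bulb", 120.0), Component("resistor", 30.0)]

# Only the supply voltage is passed in, not a description of each battery.
print(series_current(3.0, loop_a))  # 0.02 (amperes)
print(series_current(3.0, loop_b))  # 0.02 (amperes)
```

Once the labels are set aside, the two loops are indistinguishable to the calculation, which is the sense in which the unnecessary detail has been eliminated.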

The Practice of Debugging

The process of debugging also served as a rich source for informal formative assessment of CT. As an approach to CT, Csizmadia et al. (2015) describe debugging as a “systematic application of analysis and evaluation using skills such as testing, tracing, and logical thinking to predict and verify outcomes” (p. 9). While the students worked out how the SPDT functioned, the mentor coached them to “break down” the circuit and then asked separate questions as they went through the possibilities, targeting polarity, the flow of the current, or whether an LED had burned out. The instance served as an operationalization of the concept of decomposition. As they recreated the circuit from the diagram they were given, they broke down the circuit on their own and conducted step-by-step analyses when a bulb was not working. Because it was a group project, debugging served as a generator for assessment, as they discussed problems amongst themselves.

Mentor: There you go. Again, your problem is with the switch. Disconnect everything and rearrange it.

[Student 1 tries connecting a wire on a different end of the SPDT.]

Mentor: Pull the switch.

[both lights go off]

Mentor: Now, what I was trying to say earlier -- take everything off of it. And look at it again from the beginning. Now, it’s the switch we’re having trouble with, so look at the switch, study the switch. Do you see a pattern? The power is coming from here. Where should this one be connected to?

Student 1: This one because then this one controls each circuit, right?

Mentor: That’s right.

Student 1: So then this connects to this and then each circuit could be … or not.

Mentor: Try it. You haven’t rocked the switch, yet. This one hasn’t lit up.

[Students smile as different bulbs are lit depending on how the switch is pulled.]
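The decomposition the mentor coached, checking the power connection at the switch, the polarity, and each LED separately, can be sketched in Python as independent tests on a toy model of the circuit. This is an illustrative sketch only: the `Circuit` fields and the `lit_leds` and `diagnose` functions are hypothetical names introduced here, not tools used in the study.

```python
from dataclasses import dataclass


@dataclass
class Circuit:
    """Toy model of one power source, one SPDT switch, and two LEDs (A and B)."""
    power_on_common: bool = True   # power lead wired to the switch's common contact
    polarity_ok: bool = True       # LEDs are oriented the right way round
    led_a_working: bool = True     # LED A is not burned out
    led_b_working: bool = True     # LED B is not burned out


def lit_leds(circuit: Circuit, throw: str) -> set[str]:
    """Return which LEDs light for a given switch position ('A' or 'B')."""
    if throw not in ("A", "B"):
        raise ValueError("throw must be 'A' or 'B'")
    if not (circuit.power_on_common and circuit.polarity_ok):
        return set()
    working = circuit.led_a_working if throw == "A" else circuit.led_b_working
    return {throw} if working else set()


def diagnose(circuit: Circuit) -> list[str]:
    """Break the fault search into one independent check per possible cause."""
    problems = []
    if not circuit.power_on_common:
        problems.append("power is not connected to the common contact")
    if not circuit.polarity_ok:
        problems.append("polarity is reversed")
    if not circuit.led_a_working:
        problems.append("LED A is burned out")
    if not circuit.led_b_working:
        problems.append("LED B is burned out")
    return problems


# A circuit with the fault the students eventually found: power on the wrong contact.
miswired = Circuit(power_on_common=False)
print(lit_leds(miswired, "A"), diagnose(miswired))

# After rewiring, a different LED lights for each switch position.
fixed = Circuit()
print(lit_leds(fixed, "A"), lit_leds(fixed, "B"))
```

Each check in `diagnose` isolates one cause, mirroring the separate questions the mentor asked rather than a single "why doesn't it work?" question.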

Fluidity of Roles

Interestingly, the formative assessment process was not uni-directional, i.e., assessment did not only take the shape of the mentor assessing the student. Students conducted forms of self-assessment, comparing their drawings with those of their peers when they were unsure, and then adjusting them accordingly. They also assessed each other within the group, clarifying positions on the appropriate direction to arrange the batteries or how to correctly draw an LED. When analyzing the other group’s recreation of the circuit they had drawn, the students caught an error and explained to that group how they had missed a connection to the power contact on the SPDT switch.

Lead instructor: Which group was this?

Student 1: Mine.

Lead instructor: How close was this to your original?

Student 1 and Student 2: They’re missing a wire. One wire.

Student 1: They’re missing the wire that gives it the power.

Student 5 (from the group that recreated it): You all gonna change it now?

[Mentor and students laugh]

[Student 1 changes the circuit, adding the wire that goes from the switch to the battery.]

Lead instructor: Aaaahhh. Ok. So if you look at the diagram, that’s where the switch is there, and there are two leads going out. You were very close, though.

Students eventually began assessing the mentor’s understanding, asking him whether it would not be easier to move the switch to a different place on the circuit when they were recreating the other group’s circuit.

Student 1: We need another button and another ...

Student 2: LED.

Student 1: wouldn’t it be easier if we did that ...

Mentor: It’s on that … you want to follow what’s on there. Oh yeah, exactly … mm … is it there?

Student 1: It’s on the positive.

Student 2: Yeah.

Mentor: It’s on both of them, yeah.

They would do this sometimes in Spanish, allowing them to take communicative control by framing the conversation in their own language. In other words, there was a culture in which the idea of formative assessment as a process to enhance learning was pervasive and existed in all types of relationships within the space, without any attention to a supposed hierarchy. It served as a type of tool that anyone could use to further understanding, not unlike a ruler or a calculator that was passed around a classroom, in a very community-centered way.

Discussion

We observed a making activity and found four key approaches to informal formative assessment in a making environment that held promise for developing CT skills and dispositions: (a) using materials in conjunction with the promotion of CT concepts and dispositions, (b) focusing on drawing for understanding, (c) the practice of debugging, and (d) fluidity of roles. These approaches are centered around the knowledge, the learner, and the community, allowing for broad integration in effective making learning environments.

Maker culture has much of its foundation in the ideas of constructionism (Harel and Papert 1991), which uses artifacts as “objects to think with,” representations to externalize knowledge. These artifacts serve as anchors for shared understanding when learners congregate around them, build with them, and then converse about them. Embedding the language and ideas of CT in the interactions around materials allows for a more explicit understanding of how to use CT.

Appropriate vocabulary is not indicative of the depth of understanding (Bell et al. 2001). However, by incorporating computing language, ideas, and dispositions that were applicable in the making context, the mentor was introducing students to the academic community of computing (Lave and Wenger 1991) and trying to develop a sense of understanding CT in conjunction with the hands-on work, maintaining a knowledge-centered approach to the assessment (Greeno 1991).

Working directly with materials in maker environments serves as a point of student engagement and maintains the culturally responsive practice of incorporating diverse learning styles. Abstracting the concepts through the act of drawing engages students in these ways as well, and may also reduce cognitive load (Sweller 1988) and clarify thinking. Research has shown that students perform better on near transfer problems when learning using abstracted diagrams of circuits (Moreno et al. 2009, 2011; Johnson et al. 2014). The importance of drawing for understanding has been demonstrated in computing as well: experienced programmers frequently “doodle” when provided a new piece of code, drawing diagrams and making annotations in order to determine how the code functions (Lister et al. 2004). More generally, studies of both experts and novices demonstrate that representing problems visually facilitates thinking and problem-solving performance (Brenner et al. 1997; Collins and Ferguson 1993; Rittle-Johnson et al. 2001; Zhang 1997). By selecting tasks and assessments that help expose misconceptions, instructors in a knowledge- and learner-centered environment can better understand where their students are coming from, and either help them build upon those ideas or restructure them (Bell and Purdy 1985; Bransford et al. 1999). Implementing drawing as part of an environment can provide students with a tool to facilitate their problem-solving process, make their CT visible, provide a focal point for formative assessment, and connect their learning with real-world practices.

The process of debugging as a group allowed students to work through their ideas of how electricity works and to articulate that understanding, while demonstrating the CT concept of decomposition (Csizmadia et al. 2015) and the CT dispositions of persistence in working with difficult problems and tolerance for ambiguity (ISTE & CSTA, 2011). Fields et al. (2016) noticed similar dynamics in their research on student pairs debugging e-textile artifacts that were intentionally faulty. Their research found that the pairing of students required them to justify what they thought the problem-solving process should be, making their thinking explicit in their interactions. In addition, they saw that debugging engaged students in cycles of observation, hypothesis generation and testing, and evaluation, a process also found by Sullivan (2008) when observing students debugging robotics projects. These cycles dovetail with the ESRU cycle of informal formative assessment, in which the teacher elicits a response, the student responds, the teacher recognizes the student’s response, and the teacher uses that response during instruction (Furtak and Ruiz-Primo 2007). In other words, the process of debugging allowed for self-, peer-, and mentor-led formative assessment, as the mentor “debugged” student thinking: eliciting from the students what their understanding was as they struggled to get the switch to work properly, evaluating their position, and redirecting his coaching based on their thinking.

Along these lines, Bell et al. (2001) take the idea of dispersed sources of formative assessment and describe it as potentially a form of distributed cognition, in which knowledge is socially constructed “through collaborative efforts toward shared objectives or by dialogues and challenges brought about by differences in persons’ perspectives” (Pea 1993, p. 48). Under a combined community- and assessment-centered approach, the entire community learns more effectively when students learn to assess their own work, as well as the work of their peers, because such assessment is vitally important to metacognition (Pintrich 2002). Allowing formative assessment to be generated from a variety of sources also develops more of a sense of community and less of a hierarchy, a necessity for building a culturally responsive environment.

These informal formative assessment approaches show promise for developing CT skills and dispositions, but the insights are preliminary. Our study was limited in the number and diversity of subjects and in the length and variety of observation. More formal research is needed to better understand these patterns and how to support implementation of these informal formative assessment approaches in the classroom, specifically: (a) how differences in professional development structure and dosage impact the use of CT language while making; (b) whether establishing consistent informal formative assessments of drawing encourages students to use drawing in more diverse contexts when using CT; (c) what classroom structures encourage a culture of debugging; (d) what impact consistent team building or other activities have on promoting fluidity of roles in the classroom; and (e) what kind of impact more formal training in cultural responsiveness would have on informal formative assessment. Additionally, more thorough study is necessary to understand whether these informal formative assessment approaches allowed students to grasp CT problem-solving skills and dispositions in non-computing activities and to use them effectively when similar computing-based opportunities were presented. Further detailed and more expansive research is needed to validate the findings.

References

Basawapatna, A. R., Koh, K. H., & Repenning, A. (2010). Using scalable game design to teach computer science from middle school to graduate school. Proceedings of the fifteenth annual conference on Innovation and technology in computer science education - ITiCSE 10. https://doi.org/10.1145/1822090.1822154

Bell, A. W., & Purdy, D. (1985). Diagnostic teaching: Some problems of directionality. Nottingham: Shell Centre for Mathematical Education, University of Nottingham.

Bell, B., Bell, N., & Cowie, B. (2001). Formative assessment and science education (Vol. 12). Berlin: Springer Science & Business Media.

Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21, 5–31. https://doi.org/10.1007/s11092-008-9068-5.

Blikstein, P. (2013). Digital fabrication and ‘making’ in education: The democratization of invention. In J. Walter-Herrmann & C. Büching (Eds.), FabLabs: Of Machines, Makers and Inventors (pp. 1–21). Bielefeld: Transcript Publishers.

Brady, C., Orton, K., Weintrop, D., Anton, G., Rodriguez, S., & Wilensky, U. (2016). All Roads Lead to Computing: Making, Participatory Simulations, and Social Computing as Pathways to Computer Science. IEEE Transactions on Education, 60, 1.

Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (1999). How people learn: Brain, mind, experience, and school. Washington, DC: National Academy Press.

Brennan, K. & Resnick, M. (2012). New frameworks for studying and assessing the development of computational thinking. Retrieved August 12, 2016, from http://web.media.mit.edu/~kbrennan/files/Brennan_Resnick_AERA2012_CT.pdf.

Brenner, M. E., Mayer, R. E., Moseley, B., Brar, T., Duran, R., Reed, B. S., & Webb, D. (1997). Learning by understanding: The role of multiple representations in learning algebra. American Educational Research Journal, 34(4), 663–689. https://doi.org/10.2307/1163353.

Bruner, J. S. (1960). The Process of education. Cambridge: Harvard University Press.

Bruner, J. S. (1981). The social context of language acquisition. Language & Communication.

Cavalcanti, G. (2013). Is it a Hackerspace, Makerspace, TechShop, or FabLab? Make Magazine (May 22, 2013). Available from: http://makerzine.com/2013/05/22/the-difference-between-hackerpaces-makerspaces-techshops-and-fablabls/.

Chávez, V., & Soep, E. (2005). Youth radio and the pedagogy of collegiality. Harvard Educational Review, 75(4), 409–434.

Collins, A., & Ferguson, W. (1993). Epistemic forms and epistemic games: Structures and strategies to guide inquiry. Educational Psychologist, 28, 25–42. https://doi.org/10.1207/s15326985ep2801_3.

Collins, A., Brown, J. S., & Newman, S. E. (1988). Cognitive apprenticeship: Teaching the crafts of reading, writing, and mathematics. Knowing, learning, and instruction: Essays in honor of Robert Glaser, 18, 32–42.

Cowie, B., & Bell, B. (1999). A model of formative assessment in science education. Assessment in Education: Principles, Policy & Practice, 6(1), 101–116.

Creswell, J. W. (2013). Qualitative inquiry and research design: Choosing among five approaches (3rd ed.). Thousand Oaks: Sage.

Csizmadia, A., Curzon, P., Dorling, M., Humphreys, S., Ng, T., Selby, C., & Woolard, J. (2015). Computational thinking - A guide for teachers. Retrieved from http://community.computingatschoo.org.uk.resources/2324.

Denner, J., Werner, L., & Ortiz, E. (2012). Computer games created by middle school girls: Can they be used to measure understanding of computer science concepts? Computers & Education, 58(1), 240–249.

Esteban-Guitart, M., & Moll, L. C. (2014). Funds of identity: A new concept based on the funds of knowledge approach. Culture & Psychology, 20(1), 31–48.

Farrington, C. A., Roderick, M., Allensworth, E., Nagaoka, J., Keyes, T. S., Johnson, D. W., & Beechum, N. O. (2012). Teaching adolescents to become learners. The role of non-cognitive factors in shaping school performance: A critical literature review. Chicago: University of Chicago Consortium of Chicago School Research.

Fields, D. A., & King, W. L. (2014). “So, I think I'm a programmer now.” Developing connected learning for adults in a university craft technologies course. In J. L. Polman et al. (Eds.), Learning and Becoming in Practice: The International Conference of the Learning Sciences (ICLS) 2014 (Vol. 1, pp. 927–936). Boulder: International Society of the Learning Sciences.

Fields, D. A., Searle, K. A., & Kafai, Y. B. (2016). Deconstruction kits for learning: Students’ collaborative debugging of electronic textile designs. In Proceedings of the 6th Annual Conference on Creativity and Fabrication in Education (pp. 82–85). New York: ACM.

Furtak, E. M., & Ruiz-Primo, M. A. (2007). Studying the effectiveness of four types of formative assessment prompts in providing information about students’ understanding in writing and discussions. Paper presented at the American Educational Research Association Annual Meeting, Chicago, IL.

Greeno, J. G. (1991). Number sense as situated knowing in a conceptual domain. Journal for research in mathematics education.

Grover, S., & Pea, R. (2013). Computational thinking in K–12: A review of the state of the field. Educational researcher, 42(1), 38–43.

Harel, I. E., & Papert, S. E. (1991). Constructionism. Norwood: Ablex Publishing.

Henderson, P. B., Cortina, T. J., & Wing, J. M. (2007). Computational thinking. ACM SIGCSE Bulletin, 39(1), 195–196.

Heritage, M. (2010). Formative assessment and next-generation assessment systems: Are we losing an opportunity? Paper prepared for the Council of Chief State School Officers. Los Angeles: University of California, National Center for Research on Evaluation, Standards, and Student Testing. Retrieved from http://www.ccsso.org/Documents/2010/Formative_Assessment_Next_Generation_2010.pdf.

Herold, B. (2016). The maker movement in K-12 education: A guide to emerging research. Education Week. Retrieved from http://blogs.edweek.org/edweek/DigitalEducation/2016/04/maker_movement_in_k-12_education_research.html.

Hudesman, J., Crosby, S., Flugman, B., Issac, S., Everson, H., & Clay, D. B. (2013). Using formative assessment and metacognition to improve student achievement. Journal of Developmental Education, 37(1), 2.

International Society for Technology in Education & Computer Science Teachers Association. (2011). Operational Definition of Computational Thinking for K-12 Education. Retrieved from http://csta.acm.org/Curriculum/sub/CurrFiles/CompThinkingFlyer.pdf.

Johnson, A. M., Butcher, K. R., Ozogul, G., & Reisslein, M. (2014). Introductory circuit analysis learning from abstract and contextualized circuit representations: Effects of diagram labels. IEEE Transactions on Education, 57(3), 160–168.

Kazimoglu, C., Kiernan, M., Bacon, L., & Mackinnon, L. (2012). A serious game for developing computational thinking and learning introductory computer programming. Procedia-Social and Behavioral Sciences, 47, 1991–1999.

Kolodner, J. L., Camp, P. J., Crismond, D., Fasse, B., Gray, J., Holbrook, J., et al. (2003). Problem-based learning meets case-based reasoning in the middle-school science classroom: Putting Learning by Design™ into practice. Journal of the Learning Sciences, 12(4), 495–547.

Ladson-Billings, G. (1995). Toward a theory of culturally relevant pedagogy. American Educational Research Journal, 32(3), 465–491. Retrieved from http://aer.sagepub.com/content/32/3/465.full.pdf.

Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Cambridge: Cambridge University Press.

Lee, I., Martin, F., Denner, J., Coulter, B., Allan, W., Erickson, J., Malyn-Smith, J., & Werner, L. (2011). Computational thinking for youth in practice. ACM Inroads, 2(1), 32–37.

Lister, R., Adams, E. S., Fitzgerald, S., Fone, W., Hamer, J., Lindholm, M., McCartney, R., Moström, J. E., Sanders, K., Seppälä, O., & Simon, B. (2004). A multi-national study of reading and tracing skills in novice programmers. ACM SIGCSE Bulletin, 36(4), 119–150.

Litts, B. (2015). Making learning: Makerspaces as learning environments. Retrieved from http://www.informalscience.org/sites/default/files/Litts_2015_Dissertation_Published.pdf.

Ma, A., & Norwich, B. (2007). Triangulation and Theoretical Understanding. International Journal of Social Research Methodology, 10(3), 211–226. https://doi.org/10.1080/13645570701541878.

Martin, F. (2018). Rethinking Computational Thinking. Retrieved April 13, 2018, from http://advocate.csteachers.org/2018/02/17/rethinking-computational-thinking/.

Martinez, S. L., & Stager, G. (2013). Invent to learn. Torrance: Constructing modern knowledge press.

Moll, L. C., Amanti, C., Neff, D., & Gonzalez, N. (1992). Funds of knowledge for teaching: Using a qualitative approach to connect homes and classrooms. Theory into practice, 31(2), 132–141.

Moreno, R., Reisslein, M., & Ozogul, G. (2009). Pre-college electrical engineering instruction: Do abstract or contextualized representations promote better learning? In Frontiers in Education Conference, 2009. FIE'09. 39th IEEE (pp. 1–6). IEEE.

Moreno, R., Ozogul, G., & Reisslein, M. (2011). Teaching with concrete and abstract visual representations: Effects on students' problem solving, problem representations, and learning perceptions. Journal of Educational Psychology, 103(1), 32.

National Research Council. (2004). Computer Science: Reflections on the Field, Reflections from the Field. Washington, D.C.: National Academies Press.

National Research Council. (2014). STEM integration in K-12 education: Status, prospects, and an agenda for research. Washington, D.C.: National Academies Press.

Next Generation Science Standards Lead States (NGSS). (2013). Next generation science standards: for states, by states. Washington, DC: The National Academies Press.

Nicol, D., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31, 199–218.

Pea, R. D. (1993). Practices of distributed intelligence and designs for education. Distributed cognitions: Psychological and educational considerations, 11, 47–87.

Pintrich, P. R. (2002). The role of metacognitive knowledge in learning, teaching, and assessing. Theory into practice, 41(4), 219–225.

Rittle-Johnson, B., Siegler, S., & Alibali, M. (2001). Developing conceptual understanding and procedural skill in mathematics: An iterative process. Journal of Educational Psychology, 93, 346–362. https://doi.org/10.1037/0022-0663.93.2.346.

Schoenfeld, A. H. (2016). Learning to think mathematically: Problem solving, metacognition, and sense making in mathematics (Reprint). Journal of Education, 196(2), 1–38.

Shavelson, R. J., Yin, Y., Furtak, E. M., Ruiz-Primo, M. A., & Ayala, C. C. (2008). On the role and impact of formative assessment on science inquiry teaching and learning. In J. Coffey, R. Douglas, & C. Stearns (Eds.), Assessing science learning: Perspectives from research and practice (pp. 21–36). Arlington: National Science Teachers Association Press.

Sleeter, C. E. (2012). Confronting the marginalization of culturally responsive pedagogy. Urban Education, 47(3), 562–584.

Stake, R. (1995). The art of case study research. Thousand Oaks: Sage.

Sullivan, F. R. (2008). Robotics and science literacy: Thinking skills, science process skills and systems understanding. Journal of Research in Science Teaching, 45(3), 373–394.

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12, 257–285.

Tang, X., Yin, Y., Lin, Q., & Hadad, R. (2018). Assessing computational thinking: A systematic review of the literature. Poster presented at the 2018 annual meeting of the American Educational Research Association (AERA), New York, NY.

Tarkan, S., Sazawal, V., Druin, A., Golub, E., Bonsignore, E. M., Walsh, G., & Atrash, Z. (2010, April). Toque: Designing a cooking-based programming language for and with children. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2417–2426). New York: ACM.

Tierney, J. (2015). The Dilemmas of maker culture. Thinking through the consequences of the proliferation of powerful tools and technologies. The Atlantic. Retrieved from https://www.theatlantic.com/technology/archive/2015/04/the-dilemmas-of-maker-culture/390891/.

Touretzky, D. S., Marghitu, D., Ludi, S., Bernstein, D., & Ni, L. (2013, March). Accelerating K-12 computational thinking using scaffolding, staging, and abstraction. In Proceeding of the 44th ACM technical symposium on Computer science education (pp. 609–614). New York: ACM.

Vélez-Ibáñez, C., & Greenberg, J. (1992). Formation and transformation of funds of knowledge among U.S.-Mexican Households. Anthropology & Education Quarterly, 23(4), 313–335.

Winne, P. H. (1995). Inherent details in self-regulated learning. Educational Psychologist, 30, 173–188.

Wolz, U., Pearson, K., Pulimood, M., Stone, M., & Switzer, M. (2011). Computational thinking and expository writing in the middle school: A novel approach to broadening participation in computing. ACM Transactions on Computing Education (TOCE), 11(2), 1–22.

Zhang, J. (1997). The nature of external representations in problem solving. Cognitive Science, 21(2), 179–217.

Acknowledgments

We would like to thank the students and mentors who participated in the study for the time and effort they dedicated.

Funding

Research on this project was developed with support from the National Science Foundation (1543124). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Research Involving Human Participants and/or Animals

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Hadad, R., Thomas, K., Kachovska, M. et al. Practicing Formative Assessment for Computational Thinking in Making Environments. J Sci Educ Technol 29, 162–173 (2020). https://doi.org/10.1007/s10956-019-09796-6

DOI: https://doi.org/10.1007/s10956-019-09796-6