Abstract

We consider Kac’s 1D N-particle system coupled to an ideal thermostat at a fixed temperature, introduced by Bonetto, Loss, and Vaidyanathan in 2014. We obtain a propagation of chaos result for this system, with an explicit and uniform-in-time rate of order \(N^{-1/3}\) in terms of the 2-Wasserstein metric squared. We also show well-posedness and equilibration for the limit kinetic equation in the space of probability measures. The proofs use a coupling argument previously introduced by Cortez and Fontbona in 2016.



1 Introduction and Main Result

1.1 Thermostated Kac Particle System

We are interested in Kac’s 1D particle system, subjected to interactions against particles taken from an ideal external thermostat, as studied for instance in [4, 22]. It can be described as follows: consider N particles characterized by their one-dimensional velocities, subjected to two types of random interactions:

-

Kac collisions: at rate \(\lambda N\), randomly select two particles in the system and update their velocities \(v,v_*\in \mathbb {R}\) according to the rule

$$\begin{aligned} \left( \begin{array}{c} v \\ v_* \end{array} \right) \mapsto \left( \begin{array}{c} v' \\ v_*' \end{array} \right) {:}{=} \left( \begin{array}{c} v\cos \theta - v_*\sin \theta \\ v\sin \theta + v_*\cos \theta \end{array} \right) , \end{aligned}\tag{1}$$

where \(\theta \in [0,2\pi )\) is selected uniformly at random. This rule preserves the energy: \(v^2 + v_*^2 = (v')^2 + (v_*')^2\).

-

Thermostat interactions: at rate \(\mu N\), randomly select a particle in the system and update its velocity \(v\in \mathbb {R}\) according to the rule

$$\begin{aligned} v \mapsto v\cos \theta - w\sin \theta , \end{aligned}\tag{2}$$

where \(\theta \) is again selected uniformly at random on \([0,2\pi )\), and w is sampled with the Gaussian density \(\gamma (w) = (2\pi T)^{-1/2} e^{-w^2/(2T)}\). This can be seen as an interaction against a particle taken from an ideal thermostat, that is, from an infinite reservoir at thermal equilibrium with temperature \(T>0\).
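The two update rules can be written as plain functions. The following minimal Python sketch (the function names are illustrative, not from the paper) also checks the energy identity stated after (1); note that the thermostat rule (2) does not conserve \(v^2\) in general, since the reservoir velocity w is discarded.

```python
import math

def kac_collision(v, v_star, theta):
    """Kac rule (1): rotate the velocity pair (v, v_star) by the angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    return v * c - v_star * s, v * s + v_star * c

def thermostat_update(v, theta, w):
    """Thermostat rule (2): w is a reservoir velocity; only v's new value is kept."""
    return v * math.cos(theta) - w * math.sin(theta)

# Energy check for (1): the rotation preserves v^2 + v_*^2 up to rounding.
v, v_star = 1.3, -0.7
new_v, new_v_star = kac_collision(v, v_star, theta=0.9)
print(v**2 + v_star**2, new_v**2 + new_v_star**2)
```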

Here \(\lambda>0, \mu >0\) are given fixed constants representing the rate of Kac and thermostat collisions, respectively. The initial velocities of the N particles are chosen according to some prescribed symmetric distribution \(f_0^N\) on \(\mathbb {R}^N\), and all previous random choices are made independently. These rules unambiguously specify the law of the particle system as an \(\mathbb {R}^N\)-valued pure-jump continuous-time Markov process, whose state at time \(t\ge 0\) is denoted \(\mathbf {V}_t = (V_t^1,\ldots ,V_t^N)\), and we also write \(f_t^N = \text {Law}(\mathbf {V}_t)\) for its symmetric distribution. For simplicity, in our notation we omit the dependence on N in the particle system \(\mathbf {V}_t\).
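Since these rules specify the law of \(\mathbf {V}_t\) completely, a path can be simulated with a standard event-driven (Gillespie-type) loop: events arrive at total rate \((\lambda +\mu )N\), and each event is a Kac collision with probability \(\lambda /(\lambda +\mu )\), and a thermostat interaction otherwise. The following is a minimal sketch using only Python's standard `random` module. The function name and its arguments are hypothetical, and this is not the SDE construction used in the proofs below. Drawing an ordered pair with `rng.sample` is harmless: swapping the two particles amounts to replacing \(\theta \) by \(-\theta \), which is again uniform.

```python
import math
import random

def simulate_thermostated_kac(v0, lam, mu, T, t_max, seed=None):
    """Simulate one path of the thermostated Kac N-particle system up to t_max.

    Kac collisions occur at total rate lam*N and thermostat interactions at
    total rate mu*N, so events form a Poisson process of rate (lam + mu)*N.
    Returns the velocity vector at time t_max.
    """
    rng = random.Random(seed)
    v = list(v0)
    n = len(v)
    total_rate = (lam + mu) * n
    t = rng.expovariate(total_rate)
    while t <= t_max:
        theta = rng.uniform(0.0, 2.0 * math.pi)
        c, s = math.cos(theta), math.sin(theta)
        if rng.random() < lam / (lam + mu):
            # Kac collision between two distinct particles chosen uniformly.
            i, j = rng.sample(range(n), 2)
            vi, vj = v[i], v[j]
            v[i], v[j] = vi * c - vj * s, vi * s + vj * c
        else:
            # Thermostat interaction with a reservoir velocity w ~ N(0, T).
            i = rng.randrange(n)
            w = rng.gauss(0.0, math.sqrt(T))
            v[i] = v[i] * c - w * s
        t += rng.expovariate(total_rate)
    return v
```

With `mu=0` the total energy \(\sum _i (V_t^i)^2\) should be conserved up to rounding, while with `mu>0` the average energy should approach T for large times.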

Kac’s original model [17], corresponding to the case \(\mu =0\), represents the evolution of a large number of indistinguishable particles that exchange energies via random collisions in a one-dimensional caricature of a gas, as a simplification of the more realistic spatially homogeneous Boltzmann equation. The form of the collision rule (1) implies that the average energy \(\frac{1}{N}\sum _i (V_t^i)^2\) is preserved a.s., and one typically assumes that the initial average energy is a.s. equal to 1. Thus, \(f_t^N\) is supported on the sphere \(S^N = \{\mathbf {v}\in \mathbb {R}^N : \sum _i (v^i)^2 = N\}\) for all \(t\ge 0\), and the dynamics has \(\sigma ^N\), the uniform measure on \(S^N\), as the unique stationary distribution. Kac worked with initial conditions \(f_0^N\) having a density in \(L^2(S^N,\sigma ^N)\), for which we now know that \(f_t^N\) equilibrates exponentially fast in the \(L^2\) norm, with rates uniform in N, see [5, 16]. However, the \(L^2\) norm is a crude upper bound for the \(L^1\) norm; moreover, the \(L^2\) norm of typical initial distributions \(f_0^N\) with a near-product structure (specifically, chaotic sequences, see below) grows exponentially with N, which means that one has to wait a time proportional to N in order for the \(L^2\) bound to start providing evidence of convergence.

Thus, one looks for alternative ways to quantify equilibration, such as convergence in relative entropy. The relative entropy of near-product measures grows linearly (and not exponentially) with N, which is a crucial advantage over the \(L^2\) norm. The usual approach is to control the entropy production, in order to obtain an exponential rate of equilibration in relative entropy. Unfortunately, there exist sequences of initial distributions for which the entropy production degenerates as \(N\rightarrow \infty \), as shown in [12]. It is worth noting, however, that the sequence constructed in [12] is physically unlikely, in the sense that \(f_0^N\) gives half the total energy of the system to a small fraction of the particles. This raises the question of whether there is a smaller, but still rich, class of initial conditions for which one can have good control on the entropy production. We refer the reader to [7] for more details about Kac’s model and equilibration in relative entropy.

Picking up the challenge of choosing good (physical) initial conditions, and in order to avoid the badly behaved initial distributions for which entropy production degenerates, Bonetto et al. [4] introduced the model (1)-(2), called the thermostated Kac particle system, to describe a system in which all but a few particles are at equilibrium. This thermostated particle system no longer preserves the energy, so \(f_t^N\) is supported on the whole space \(\mathbb {R}^N\), and the equilibrium distribution is the N-dimensional Gaussian with density \(\gamma ^{\otimes N}(\mathbf {v}) = \prod _i \gamma (v^i)\). In this case, the system approaches equilibrium in relative entropy exponentially fast, with rates uniform in the number of particles, see [4, Theorem 3]. Later, in [3], the use of the ideal thermostat (2) was justified by approximating it with a finite but large reservoir of particles at equilibrium in a quantitative way.

1.2 Propagation of Chaos

Besides the long-time behaviour of the particle system, one can also study convergence of \(f_t^N\) as \(N\rightarrow \infty \). Notice however that this is not an easy task, because even if we consider particles whose velocities are independent at \(t=0\), the collisions amongst them will destroy this independence for later times. Nevertheless, for the thermostated Kac system, one expects the correlations between particles to become weaker as N grows. The following concept formalizes this idea of asymptotic independence:

Definition 1

(chaos) For each \(N\in \mathbb {N}\), let \(f^N\) be a symmetric probability measure on \(\mathbb {R}^N\). The collection \((f^N)_{N\in \mathbb {N}}\) is said to be chaotic with respect to some given probability measure f on \(\mathbb {R}\) (or f-chaotic) if, for all \(k\in \mathbb {N}\), the marginal distribution of \(f^N\) on the first k variables converges in distribution, as \(N\rightarrow \infty \), to the tensor product measure \(f^{\otimes k}\). That is: for every \(k\in \mathbb {N}\) and every bounded and continuous function \(\phi :\mathbb {R}^k \rightarrow \mathbb {R}\), it holds

$$\lim _{N\rightarrow \infty } \int _{\mathbb {R}^N} \phi (v^1,\ldots ,v^k)\, f^N(d\mathbf {v}) = \int _{\mathbb {R}^k} \phi (v^1,\ldots ,v^k)\, f^{\otimes k}(dv^1,\ldots ,dv^k).$$

For Kac’s model, that is, when \(\mu =0\), we know that if the sequence \(( f_0^N )_{N\in \mathbb {N}}\) is chaotic to some probability measure \(f_0\) on \(\mathbb {R}\), then for all \(t\ge 0\) the sequence \(( f_t^N )_{N\in \mathbb {N}}\) will also be chaotic to some \(f_t\); this property is known as propagation of chaos. The limit \(f_t\) is the solution to the so-called Boltzmann–Kac equation, which, with \((v',v_*')\) given by (1), reads

$$\partial _t f_t(v) = 2\lambda \int _\mathbb {R}\int _0^{2\pi } \big[ f_t(v')f_t(v_*') - f_t(v)f_t(v_*) \big] \frac{d\theta }{2\pi }\, dv_* \tag{3}$$

in the case where \(f_0\), and thus every \(f_t\), has a density. This was first shown by Kac [17] in the special case where \(f_t^N\) has a density in \(L^2(S^N,\sigma ^N)\). The solution to (3) also preserves the initial energy, i.e., \(\int v^2 f_t(dv) = \int v^2 f_0(dv) = 1\) for all \(t\ge 0\). It is straightforward to verify that the Gaussian density with energy 1 is a stationary distribution of the equation, and it is known that the solution converges to it, see for instance [15, 17].

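When we introduce the thermostat to Kac’s original model, propagation of chaos still holds, as shown in [4, Theorem 5], and, with \((v',v_*')\) as in (1) and \((v',w')\) obtained by applying the same rotation to \((v,w)\), the limit density satisfies

$$\partial _t f_t(v) = 2\lambda \int _\mathbb {R}\int _0^{2\pi } \big[ f_t(v')f_t(v_*') - f_t(v)f_t(v_*) \big] \frac{d\theta }{2\pi }\, dv_* + \mu \int _\mathbb {R}\int _0^{2\pi } \big[ f_t(v')\gamma (w') - f_t(v)\gamma (w) \big] \frac{d\theta }{2\pi }\, dw, \tag{4}$$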

which we refer to as the thermostated Boltzmann–Kac equation, or simply the kinetic equation. As with the particle system, the solution to (4) does not preserve the initial energy, and its equilibrium distribution is \(\gamma \), the Gaussian density with energy T. When the initial condition \(f_0\) has a density with finite relative entropy, it follows from [4, Proposition 15] that there is exponential convergence to equilibrium in relative entropy. In Definition 3 we will provide a notion of weak solution for (4), which will allow us to work with probability measures instead of densities. Using this notion, we give an existence and uniqueness result in Theorem 5.

We mention that, starting from Kac’s work [17], propagation of chaos has been studied for other related kinetic models, most notably the spatially homogeneous Boltzmann equation, see for instance [11, 14, 18, 19] and the references therein. Propagation of chaos has also been studied for similar models involving thermostats; for example, the authors in [2, 6] consider the Gaussian isokinetic thermostat, which is used to keep the total energy of the system fixed.

1.3 Main Result

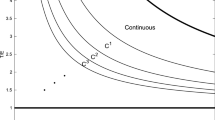

Chaoticity, and thus propagation of chaos, can be made quantitative. For Kac’s model this was done in [9] using Wasserstein distances (defined below), with explicit convergence rates in N that are uniform in time. Similar quantitative results for the spatially homogeneous Boltzmann equation can be found for instance in [11, 18].

The goal of the present article is to strengthen the propagation of chaos result for the thermostated Kac model in [4], by making it quantitative in N with rates that are uniform in time. To quantify chaos we will use the following metric: given f, g probability measures on \(\mathbb {R}^k\), their 2-Wasserstein distance is given by

$$W_2(f,g) = \left( \inf \mathbb {E}\Big[ \frac{1}{k} \sum _{i=1}^k (X^i - Y^i)^2 \Big] \right)^{1/2},$$

where the infimum is taken over all pairs of random vectors \({\mathbf {X}} = (X^1,\ldots ,X^k)\) and \({\mathbf {Y}} = (Y^1,\ldots ,Y^k)\) such that \(\text {Law}({\mathbf {X}}) = f\) and \(\text {Law}({\mathbf {Y}}) = g\). This defines a distance in the space of probability measures with finite second moment. The infimum is always achieved by some \(({\mathbf {X}},{\mathbf {Y}})\), and such a pair is called an optimal coupling; see [23] for details.
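For measures on \(\mathbb {R}\) (the case \(k=1\)), a standard one-dimensional fact is that the monotone (quantile) coupling is optimal for the quadratic cost. For two empirical measures with the same number of atoms this reduces to pairing the sorted samples. A minimal sketch, with a hypothetical helper name:

```python
def w2_squared_empirical_1d(xs, ys):
    """Squared 2-Wasserstein distance between two empirical measures on R.

    Both samples must have the same size n; each atom has mass 1/n. In 1D the
    sorted (monotone) pairing is an optimal coupling for the quadratic cost.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    xs_sorted, ys_sorted = sorted(xs), sorted(ys)
    return sum((x - y) ** 2 for x, y in zip(xs_sorted, ys_sorted)) / len(xs)
```

For instance, `w2_squared_empirical_1d([0.0, 1.0], [3.0, 4.0])` pairs 0 with 3 and 1 with 4, giving 9.0.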

We will use the following characterization of chaoticity, see for instance [19]: a sequence \((f^N)_{N\in \mathbb {N}}\) is f-chaotic if and only if, for random vectors \({\mathbf {X}}\) on \(\mathbb {R}^N\) with \(\text {Law}({\mathbf {X}}) = f^N\), the sequence of random empirical measures

$$\bar{\mathbf {X}} = \frac{1}{N} \sum _{i=1}^N \delta _{X^i}$$

converges in law to the constant probability measure f. We can now state our main result.

Theorem 2

(uniform propagation of chaos) Assume that \(\int |v|^r f_0(dv) < \infty \) for some \(r>4\). Let \((\mathbf {V}_t)_{t\ge 0}\) be the thermostated Kac N-particle system described by (1)-(2), and let \((f_t)_{t\ge 0}\) be the unique weak solution of (4). Then there exists a constant C depending only on \(\lambda \), \(\mu \), T, r, and \(\int |v|^r f_0(dv)\), such that for all \(t\ge 0\) we have:

We remark that in this result \((f_0^N)_{N\in \mathbb {N}}\) can be any family of symmetric initial distributions; thus, Theorem 2 provides a uniform-in-time propagation of chaos rate of order \(N^{-1/3}\) whenever \(W_2^2(f_0^N,f_0^{\otimes N})\) converges to 0 at the same rate or faster. For instance, one can simply take \(f_0^N = f_0^{\otimes N}\), so the first term in (5) vanishes; another common choice for \(f_0^N\) is the one described in [7], where the authors construct a chaotic sequence by conditioning \(f_0^{\otimes N}\) to the Kac sphere \(S^N\); quantitative rates of chaoticity in \(W_2\) for this kind of construction can be found in [8].

The rate \(N^{-1/3}\) is not so far from the optimal rate \(N^{-1/2}\), valid for the convergence of the empirical measure of an N-tuple of i.i.d. variables towards their common law, with the same metric as in (5); see [13, Theorem 1]. We remark that if one only assumes \(\int |v|^r f_0(dv) < \infty \) for some \(2<r<4\), we can still deduce (5), but with a slower chaos rate of order \(N^{-\eta (r)}\) for some \(0<\eta (r)<1/3\). We also note that the value \(\mu /2\), corresponding to the rate of decay of the initial condition term in (5) (see also the contraction estimates given in Lemmas 7 and 9 below), coincides with the spectral gap of the generator of the particle system, and with the bound on the entropy production obtained in [4]; see [22] for the optimality of this bound.

The proof of Theorem 2 is based on a coupling argument developed in [10] and later used in [9] to prove uniform propagation of chaos for Kac’s original model. This argument makes use of a probabilistic object called the Boltzmann process, which is a stochastic process \((Z_t)_{t\ge 0}\) satisfying \(\text {Law}(Z_t) = f_t\) for all \(t\ge 0\). More specifically, we will construct our particle system \(\mathbf {V}_t = (V_t^1,\ldots ,V_t^N)\) using a Poisson point measure, and couple it with a collection \(\mathbf {Z}_t = (Z_t^1,\ldots ,Z_t^N)\) of Boltzmann processes, in such a way that the two remain close in expectation. Some adaptations are required in order to use this technique. For instance, we will need to introduce additional Poisson point measures to represent thermostat interactions in the particle system. Also, whereas Kac’s original model is known to have useful properties like well-posedness, propagation of moments, and convergence to equilibrium (which the argument of [9, 10] requires), for the thermostated Kac model of the present paper we will need to prove these properties, or adapt previously known results. For instance, see Theorem 5 for well-posedness, Lemma 8 for propagation of moments, and Lemmas 7 and 9 for convergence to equilibrium in the \(W_2\)-metric.

The structure of the article is as follows. In Sect. 2 we provide a notion of weak solution for the thermostated Boltzmann–Kac equation (4), valid for collections \((f_t)_{t\ge 0}\) of probability measures, and we then prove a well-posedness result for this notion. In Sect. 3 we specify the coupling construction mentioned above and we prove Theorem 2. Along the way, we will use this construction to prove some interesting results, such as the equilibration in \(W_2\) for the particle system in Lemma 7, and an analogous result for the kinetic equation in Lemma 9. Some final comments are given in Sect. 4.

2 Well-Posedness for the Kinetic Equation

In this section, we define a notion of weak solution to (4), and prove its well-posedness. We will not require each \(f_t\) to have a density; instead, it will be an element of the space \(\mathcal {M}\) of bounded non-negative Borel measures on \(\mathbb {R}\) metrized by total variation \(\Vert \cdot \Vert \). We will see that, if \(f_0\) is a probability measure, then \(f_t\) will also be a probability measure for all \(t>0\). Similarly, if \(f_0\) has a density, so will \(f_t\).

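For convenience, let us introduce the mapping \(B:\mathcal {M}\times \mathcal {M}\rightarrow \mathcal {M}\), given by

$$\int _\mathbb {R}\phi (v)\, B[\nu _1,\nu _2](dv) = \int _0^{2\pi } \int _\mathbb {R}\int _\mathbb {R}\phi (v\cos \theta - w\sin \theta )\, \nu _1(dv)\, \nu _2(dw)\, \frac{d\theta }{2\pi }$$

for all bounded and continuous functions \(\phi \). Notice that when \(\nu _1\) and \(\nu _2\) have densities \(g_1\) and \(g_2\) with respect to the Lebesgue measure, then \(B[\nu _1, \nu _2]\), also denoted by \(B[g_1,g_2]\), satisfies

$$B[g_1,g_2](v) = \int _0^{2\pi } \int _\mathbb {R}g_1(v\cos \theta + w\sin \theta )\, g_2(-v\sin \theta + w\cos \theta )\, dw\, \frac{d\theta }{2\pi }.$$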

We note that (4) is equivalent to

$$\partial _t f_t = 2\lambda B[f_t,f_t] + \mu B[f_t,\gamma ] - (2\lambda + \mu ) f_t. \tag{6}$$

This motivates the following notion of weak solution:

Definition 3

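A function \(f \in C([0,\infty ), \mathcal {M})\) is a weak solution to (4) with initial condition \(f_0\) if, for all \(t\ge 0\), we have

$$f_t = f_0 + \int _0^t \big( 2\lambda B[f_s,f_s] + \mu B[f_s,\gamma ] - (2\lambda + \mu ) f_s \big)\, ds. \tag{7}$$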

We summarize some of the useful properties of the mapping B in the following lemma, which we state without proof.

Lemma 4

-

(i)

Monotonicity: If \(\nu _1, \nu _2, \pi _1\), and \(\pi _2\) in \(\mathcal {M}\) are such that

$$\begin{aligned} \nu _1(A) \ge \pi _1(A) \text { and } \nu _2(A) \ge \pi _2(A) \quad \forall A \text { measurable}, \end{aligned}$$

then

$$\begin{aligned} B[\nu _1, \nu _2](A) \ge B[\pi _1, \pi _2](A) \quad \forall A \text { measurable}. \end{aligned}$$

-

(ii)

Norm: for all \(\nu _1, \nu _2 \in \mathcal {M}\), it holds

$$\begin{aligned} \Vert B[\nu _1, \nu _2] \Vert = \Vert \nu _1 \Vert \Vert \nu _2 \Vert . \end{aligned}$$

If \(\nu _1\) and \(\nu _2\) are bounded, signed, Borel measures, then

$$\begin{aligned} \Vert B[\nu _1, \nu _2] \Vert \le \Vert \nu _1 \Vert \Vert \nu _2 \Vert . \end{aligned}$$

-

(iii)

Second moments and arbitrary moments: If \(\nu _1\) and \(\nu _2\) in \(\mathcal {M}\) have finite second moments \(e_1\) and \(e_2\) respectively, then

$$\begin{aligned} \int _\mathbb {R}x^2 B[\nu _1, \nu _2] (dx) = \frac{ e_1 + e_2 }{2}. \end{aligned}$$

If \(\nu _1\) and \(\nu _2\) have finite \(r^{\text {th}}\) moments \(n_r\) and \(m_r\) for some \(r > 0\), then

$$\begin{aligned} \int _\mathbb {R}\vert x\vert ^r B[\nu _1, \nu _2](dx) \le 2^{\max \{\frac{r}{2},1\}} \frac{n_r + m_r}{2} \int _0^{2\pi } | \cos \theta |^r \frac{d\theta }{2\pi }. \end{aligned}$$
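The second-moment identity in Lemma 4 (iii) can be checked by Monte Carlo for probability measures. The sketch assumes that \(B[\nu _1,\nu _2]\) is the law of \(V\cos \theta - W\sin \theta \) with \(V\sim \nu _1\), \(W\sim \nu _2\), \(\theta \) uniform on \([0,2\pi )\), all independent, which matches the thermostat rule (2). The helper name and the test measures are illustrative choices.

```python
import math
import random

def sample_B(sample_nu1, sample_nu2, n, rng):
    """Draw n samples from B[nu1, nu2] for probability measures nu1, nu2.

    Assumption: B[nu1, nu2] is the law of V*cos(theta) - W*sin(theta), with
    V ~ nu1, W ~ nu2 and theta ~ Uniform[0, 2*pi) all independent.
    """
    samples = []
    for _ in range(n):
        theta = rng.uniform(0.0, 2.0 * math.pi)
        v, w = sample_nu1(rng), sample_nu2(rng)
        samples.append(v * math.cos(theta) - w * math.sin(theta))
    return samples

rng = random.Random(0)
# nu1: uniform on {-3, 3} (second moment 9); nu2: standard Gaussian (second moment 1).
xs = sample_B(lambda r: r.choice([-3.0, 3.0]), lambda r: r.gauss(0.0, 1.0), 100_000, rng)
second_moment = sum(x * x for x in xs) / len(xs)
print(second_moment)  # should be close to (9 + 1) / 2 = 5, as in Lemma 4 (iii)
```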

We are now ready to state and prove our well-posedness result:

Theorem 5

(well-posedness) For every probability measure \(f_0 \in \mathcal {M}\), there is a unique solution f to (7). \(f_t\) is a probability measure for every t. If \(f_0\) has a density or a finite \(r^{\text {th}}\) moment for some \(r\ge 2\), then so does \(f_t\) for all t.

Proof

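We will use the following equivalent form of (6):

$$f_t = e^{-(2\lambda +\mu )t} f_0 + \int _0^t e^{-(2\lambda +\mu )(t-s)} \big( 2\lambda B[f_s,f_s] + \mu B[f_s,\gamma ] \big)\, ds.$$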

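We use the iterative construction in [20]. Let \(f_0\) be a Borel probability measure on \(\mathbb {R}\). Define the sub-probability measures \(( u^n_t)_{n=0}^\infty \) inductively by

$$u^0_t = e^{-(2\lambda +\mu )t} f_0, \qquad u^{n+1}_t = e^{-(2\lambda +\mu )t} f_0 + \int _0^t e^{-(2\lambda +\mu )(t-s)} \big( 2\lambda B[u^n_s,u^n_s] + \mu B[u^n_s,\gamma ] \big)\, ds. \tag{8}$$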

Using Lemma 4 we see that \(u^n_t\) is continuous in t for each n, that \(u^n_t(\mathbb {R}) \le 1\), and that \((u^n_t)_n\) is increasing in n. Hence, for each t, \((u^n_t)_n\) converges to some element \(u_t\) in \(\mathcal {M}\) and \(u_t(\mathbb {R}) \le 1\). Note that \(u_t-u_t^n\) is a non-negative measure for each t, thus we have convergence in total variation, since

This, together with Lemma 4, implies that

and

Thus we can take the infinite n limit in (8) and establish that \(u_t\) solves (7). Being an increasing limit of continuous functions, \(u: [0,\infty ) \rightarrow \mathcal {M}\) is lower semi-continuous, and thus measurable. Since \(u_t(\mathbb {R}) \le 1\), \(\forall t\), u belongs to \(L^\infty ( [0,\infty ), \mathcal {M})\). To show that \(u_t\) is continuous (in t) we note that it equals

and the integrand above is in the Bochner space \(L^1([0,\tau ], \mathcal {M})\) for all \(\tau \). This makes \(u_t\) continuous. A consequence of this continuity is that \(h(t)= u_t(\mathbb {R})\) is differentiable and, by Lemma 4, satisfies the differential equation

$$h'(t) = 2\lambda h(t)^2 + \mu h(t) - (2\lambda + \mu ) h(t) = 2\lambda h(t)\big( h(t) - 1 \big).$$

Since \(h(0)=1\) and the constant function 1 solves this equation, uniqueness of solutions to this ODE gives \(h(t) \equiv 1\). Hence, \(u_t\) is a probability measure for all t. To show the uniqueness of \(u_t\), let \(g_t \in C([0,\infty ), \mathcal {M})\) satisfy (7). On the one hand, \(g_t\ge u^0_t\) by definition, and thus, by induction and Lemma 4, \(g_t \ge u^n_t\) for a.e. t and all n. By the monotone convergence theorem, we have

for every measurable set A. On the other hand, using Lemma 4, for each t we obtain

which shows that \(g_t\) is continuous in t, and just like \(u_t\), must be a probability measure for all t. Thus, \(\Vert g_t - u_t \Vert = g_t(\mathbb {R})- u_t(\mathbb {R}) = 1 - 1 =0\).

To prove the last statement of the theorem, we note that if \(f_0 \in L^1(\mathbb {R})\), then \(u_t^n \in L^1(\mathbb {R})\) for all n, and we use the completeness of \(L^1\) under the total variation norm. If \(f_0\) has a finite \(r^{\text {th}}\) moment for some \(r>0\), then by Lemma 4 and induction, we see that, for each t, \((\int _\mathbb {R}u_t^n(dv) \vert v\vert ^r)_n\) is finite, monotone increasing, and bounded above by the solution R(t) to the following integral equation:

Here \(C_r = 2^{\max \{\frac{r}{2},1\}}\int _0^{2\pi } \vert \cos \theta \vert ^r \frac{d\theta }{2\pi }\) is as in Lemma 4. R(t) is finite due to Gronwall’s inequality. The monotone convergence theorem implies that R(t) controls the \(r^{\text {th}}\) moment of \(f_t\). \(\square \)
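The mass argument in this proof can be illustrated numerically. Assuming the iteration has the Duhamel form \(u^0_t = e^{-ct}f_0\), \(u^{n+1}_t = e^{-ct}f_0 + \int _0^t e^{-c(t-s)}(2\lambda B[u^n_s,u^n_s] + \mu B[u^n_s,\gamma ])\,ds\) with \(c = 2\lambda +\mu \) (an assumption here), Lemma 4 (ii) shows that the masses \(m_n(t) = u^n_t(\mathbb {R})\) obey the scalar recursion below. The sketch discretizes it with the trapezoid rule and is meant only to show that the masses increase in n towards 1. The helper name is hypothetical.

```python
import math

def iterate_total_masses(lam, mu, t_max, n_iter, n_steps=1000):
    """Total masses m_n(t_max) of the (assumed) Duhamel iteration.

    m_0(t) = exp(-c t) and
    m_{n+1}(t) = exp(-c t) + int_0^t exp(-c (t - s)) (2 lam m_n(s)^2 + mu m_n(s)) ds,
    with c = 2 lam + mu, discretized on a uniform grid with the trapezoid rule.
    Returns [m_0(t_max), m_1(t_max), ..., m_{n_iter}(t_max)].
    """
    c = 2.0 * lam + mu
    h = t_max / n_steps
    decay = math.exp(-c * h)
    grid = [k * h for k in range(n_steps + 1)]
    m = [math.exp(-c * t) for t in grid]
    finals = [m[-1]]
    for _ in range(n_iter):
        g = [2.0 * lam * x * x + mu * x for x in m]
        integral = 0.0
        new_m = [1.0]  # at t = 0 the integral vanishes and exp(0) = 1
        for k in range(n_steps):
            # Trapezoid rule on [t_k, t_{k+1}] for s -> exp(-c (t_{k+1} - s)) g(s).
            integral = decay * integral + 0.5 * h * (decay * g[k] + g[k + 1])
            new_m.append(math.exp(-c * grid[k + 1]) + integral)
        m = new_m
        finals.append(m[-1])
    return finals
```

For example, `iterate_total_masses(1.0, 1.0, 1.0, 30)` starts at \(e^{-3}\) and increases monotonically to a value within discretization error of 1, mirroring \(u^n_t(\mathbb {R}) \le 1\) and \(u_t(\mathbb {R}) = 1\).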

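It is straightforward to verify that, if \(\int _\mathbb {R}v^2 f_0(dv)<\infty \), then we have

$$\int _\mathbb {R}v^2 f_t(dv) = T + \Big( \int _\mathbb {R}v^2 f_0(dv) - T \Big) e^{-\mu t/2}, \qquad t\ge 0. \tag{9}$$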

Remark 6

The uniqueness of the solution to (6) holds in the larger space \(L^2_\text {loc}([0, \infty ), \mathcal {M})\), provided we identify functions \(f_t\) that agree for almost every t.
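Assuming (4) can be written in the measure-valued form \(\partial _t f_t = 2\lambda B[f_t,f_t] + \mu B[f_t,\gamma ] - (2\lambda +\mu )f_t\), testing formally against \(v^2\) and using Lemma 4 (iii) together with \(\int v^2\gamma (dv) = T\) gives a closed linear ODE for \(E(t) = \int v^2 f_t(dv)\): the Kac part contributes \(2\lambda (E - E) = 0\) and the thermostat part \(\mu ((E+T)/2 - E)\). The sketch compares a forward-Euler integration of this ODE with the closed form \(E(t) = T + (E(0)-T)e^{-\mu t/2}\). It is a numerical illustration only, and the helper names are hypothetical.

```python
import math

def second_moment_closed_form(e0, mu, T, t):
    """E(t) = T + (E(0) - T) * exp(-mu t / 2)."""
    return T + (e0 - T) * math.exp(-0.5 * mu * t)

def second_moment_euler(e0, lam, mu, T, t, n_steps=100_000):
    """Forward-Euler integration of dE/dt = 2 lam (E - E) + mu ((E + T)/2 - E)."""
    h = t / n_steps
    e = e0
    for _ in range(n_steps):
        kac_term = 2.0 * lam * (e - e)  # B[f, f] has second moment (E + E)/2 = E
        thermostat_term = mu * ((e + T) / 2.0 - e)  # B[f, gamma] has second moment (E + T)/2
        e += h * (kac_term + thermostat_term)
    return e
```

The Kac term vanishes identically, reflecting energy conservation in Kac collisions; only the thermostat drives \(E(t)\) towards T at rate \(\mu /2\).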

3 Coupling Construction

3.1 Particle System

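We provide an explicit construction of the particle system using an SDE, following [10]. To this end, for fixed \(N\in \mathbb {N}\), let \(\mathcal {R}(dt,d\theta ,d\xi ,d\zeta )\) be a Poisson point measure on \([0,\infty )\times [0,2\pi )\times [0,N)^2\) with intensity

$$\lambda N\, dt\, \frac{d\theta }{2\pi }\, \frac{\mathbf {1}_{\{\mathbf {i}(\xi )\ne \mathbf {i}(\zeta )\}}\, d\xi \, d\zeta }{N(N-1)},$$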

where \(\mathbf {i}\) is the function that associates to a variable \(\xi \in [0,N)\) the discrete index \(\mathbf {i}(\xi ) = \lfloor \xi \rfloor +1 \in \{1,\ldots ,N\}\). In words: at rate \(N\lambda \), the measure \(\mathcal {R}\) selects collision times \(t\ge 0\), and for each such time, it independently samples a parameter \(\theta \) uniformly at random on \([0,2\pi )\), and a pair \((\xi ,\zeta ) \in [0,N)^2\) such that \(\mathbf {i}(\xi ) \ne \mathbf {i}(\zeta )\), also uniformly. The pair \((\mathbf {i}(\xi ),\mathbf {i}(\zeta ))\) provides the indices of the particles involved in Kac-type collisions. The fact that we use continuous variables \(\xi ,\zeta \in [0,N)\), instead of discrete indices in \(\{1,\ldots ,N\}\), will be crucial to define our coupling with a collection of Boltzmann processes.
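The verbal description of \(\mathcal {R}\) translates directly into a sampler for its atoms on a finite time window. In this sketch, \((\xi ,\zeta )\) is drawn uniformly on \(\{\mathbf {i}(\xi )\ne \mathbf {i}(\zeta )\}\) by rejection from the uniform law on \([0,N)^2\). The function names are hypothetical.

```python
import math
import random

def particle_index(xi):
    """The map i(xi) = floor(xi) + 1, sending [0, N) to {1, ..., N}."""
    return math.floor(xi) + 1

def sample_kac_atoms(n_particles, lam, t_max, rng):
    """Sample the atoms (t, theta, xi, zeta) of R on [0, t_max].

    Times arrive at rate lam * N, theta is uniform on [0, 2*pi), and (xi, zeta)
    is uniform on {(xi, zeta) in [0, N)^2 : i(xi) != i(zeta)}, drawn by rejection.
    """
    if n_particles < 2:
        raise ValueError("Kac collisions need at least two particles")
    atoms = []
    t = rng.expovariate(lam * n_particles)
    while t <= t_max:
        theta = rng.uniform(0.0, 2.0 * math.pi)
        while True:
            # random() lies in [0, 1), so xi and zeta lie in [0, N).
            xi = n_particles * rng.random()
            zeta = n_particles * rng.random()
            if particle_index(xi) != particle_index(zeta):
                break
        atoms.append((t, theta, xi, zeta))
        t += rng.expovariate(lam * n_particles)
    return atoms
```

Keeping the continuous labels \(\xi ,\zeta \), rather than only the indices \(\mathbf {i}(\xi ),\mathbf {i}(\zeta )\), is what later allows the fractional part of \(\xi \) to be reused in the coupling with Boltzmann processes.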

Let \(\mathcal {Q}_1(dt, d\theta , dw),\ldots ,\mathcal {Q}_N(dt, d\theta , dw)\) be a collection of independent Poisson point measures on \([0,\infty )\times [0,2\pi ) \times \mathbb {R}\), also independent of \(\mathcal {R}\), each having intensity \(\mu dt \frac{d\theta }{2\pi } \gamma (dw)\). Finally, let \(\mathbf {V}_0 = (V_0^1,\ldots ,V_0^N)\) be an exchangeable collection of random variables with \(\text {Law}(\mathbf {V}_0) = f_0^N\), independent of everything else.

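The particle system \(\mathbf {V}_t = (V_t^1,\ldots ,V_t^N)\) is defined as the unique jump-by-jump solution of the SDE

$$d\mathbf {V}_t = \int _{[0,2\pi )\times [0,N)^2} \mathbf {a}_{\mathbf {i}(\xi )\mathbf {i}(\zeta )}(\mathbf {V}_{t^-},\theta )\, \mathcal {R}(dt,d\theta ,d\xi ,d\zeta ) + \sum _{i=1}^N \int _{[0,2\pi )\times \mathbb {R}} \mathbf {b}_i(\mathbf {V}_{t^-},\theta ,w)\, \mathcal {Q}_i(dt,d\theta ,dw) \tag{10}$$

that starts at \(\mathbf {V}_0\). Here, for \(\mathbf {v}\in \mathbb {R}^N\), the vectors \({\mathbf {a}}_{ij}(\mathbf {v},\theta )\in \mathbb {R}^N\) and \({\mathbf {b}}_i(\mathbf {v},\theta ,w)\in \mathbb {R}^N\) are defined as

$$\mathbf {a}_{ij}(\mathbf {v},\theta ) = \big( v^i\cos \theta - v^j\sin \theta - v^i \big) \mathbf {e}_i + \big( v^i\sin \theta + v^j\cos \theta - v^j \big) \mathbf {e}_j, \qquad \mathbf {b}_i(\mathbf {v},\theta ,w) = \big( v^i\cos \theta - w\sin \theta - v^i \big) \mathbf {e}_i,$$

where \(\mathbf {e}_1,\ldots ,\mathbf {e}_N\) is the canonical basis of \(\mathbb {R}^N\).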

For any \(i=1,\ldots ,N\), from (10) it follows that particle \(V_t^i\) satisfies the SDE

where \(\mathcal {P}_i\) is defined as

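and where we use \(-d\theta \) to transform \(\sin \theta \) into \(-\sin \theta \). Clearly, \(\mathcal {P}_i\) is a Poisson point measure on \([0,\infty )\times [0,2\pi )\times [0,N)\) with intensity

$$\frac{2\lambda }{N-1}\, dt\, \frac{d\theta }{2\pi }\, \mathbf {1}_{\{\mathbf {i}(\xi )\ne i\}}\, d\xi .$$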

As mentioned earlier, \(f_t^N = \text {Law}(\mathbf {V}_t)\) converges exponentially fast to the Gaussian density \(\gamma ^{\otimes N}\) in relative entropy, as shown in [4, Theorem 3]. Similarly, the following result provides equilibration in \(W_2\), which does not require \(f_t^N\) to have a density:

Lemma 7

(contraction and equilibration for the particle system) Let \(f_t^N\) and \(\tilde{f}_t^N\) be the laws of the thermostated Kac N-particle systems starting from (possibly different) symmetric initial distributions \(f_0^N\) and \(\tilde{f}_0^N\), respectively. Then

$$W_2^2(f_t^N,\tilde{f}_t^N) \le e^{-\mu t/2}\, W_2^2(f_0^N,\tilde{f}_0^N) \qquad \text {for all } t\ge 0.$$

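Consequently, taking \(\tilde{f}_0^N\) as the stationary distribution \(\gamma ^{\otimes N}\) gives

$$W_2^2(f_t^N,\gamma ^{\otimes N}) \le e^{-\mu t/2}\, W_2^2(f_0^N,\gamma ^{\otimes N}) \qquad \text {for all } t\ge 0.$$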

Proof

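Let \((\mathbf {V}_t)_{t\ge 0}\) and \(({\tilde{\mathbf {V}}}_t)_{t\ge 0}\) be the solutions to the SDE (10) with respect to the same Poisson point measures \(\mathcal {R}\), \(\mathcal {Q}_1,\ldots ,\mathcal {Q}_N\), but starting from initial conditions \((\mathbf {V}_0,{\tilde{\mathbf {V}}}_0)\) which we take as an optimal coupling between \(f_0^N\) and \(\tilde{f}_0^N\). Let \(h(t) {:}{=} \mathbb {E}[(V_t^1 - \tilde{V}_t^1)^2]\); then \(W_2^2(f_t^N,\tilde{f}_t^N) \le \mathbb {E}[\frac{1}{N} \sum _i (V_t^i - \tilde{V}_t^i)^2 ] = h(t)\) by exchangeability, with equality at \(t=0\). Thus, it suffices to study h(t). Since both \(V_t^1\) and \(\tilde{V}_t^1\) satisfy (11) with \(i=1\), when computing the increments of \((V_t^1-\tilde{V}_t^1)^2\) the terms \(w \sin \theta \) cancel, thus obtaining

$$\begin{aligned} h'(t) &= \frac{2\lambda }{N-1} \sum _{j\ne 1} \int _0^{2\pi } \mathbb {E}\Big[ \big( (V_t^1-\tilde{V}_t^1)\cos \theta - (V_t^j-\tilde{V}_t^j)\sin \theta \big)^2 - (V_t^1-\tilde{V}_t^1)^2 \Big] \frac{d\theta }{2\pi } + \mu \int _0^{2\pi } \mathbb {E}\Big[ (V_t^1-\tilde{V}_t^1)^2 (\cos ^2\theta - 1) \Big] \frac{d\theta }{2\pi } \\ &= \frac{\lambda }{N-1} \sum _{j\ne 1} \Big( \mathbb {E}\big[(V_t^j-\tilde{V}_t^j)^2\big] - h(t) \Big) - \frac{\mu }{2} h(t), \end{aligned}\tag{12}$$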

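where we used that \(\int _0^{2\pi } \cos ^2\theta \frac{d\theta }{2\pi } = \frac{1}{2} = \int _0^{2\pi } \sin ^2\theta \frac{d\theta }{2\pi }\) and \(\int _0^{2\pi } \cos \theta \sin \theta \frac{d\theta }{2\pi } = 0\). Notice that, by exchangeability,

$$\mathbb {E}\big[(V_t^j-\tilde{V}_t^j)^2\big] = \mathbb {E}\big[(V_t^1-\tilde{V}_t^1)^2\big] = h(t) \qquad \text {for all } j,$$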

thus the first term in (12) vanishes, which then gives \(h'(t) = -\frac{\mu }{2} h(t)\). The desired bound follows. \(\square \)
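The mechanism behind this proof can be checked event by event. When both systems share all randomness, a shared Kac collision rotates the difference vector \(\mathbf {V}_t - {\tilde{\mathbf {V}}}_t\) on two coordinates (so \(\sum _i (V_t^i-\tilde{V}_t^i)^2\) is unchanged), while a shared thermostat interaction multiplies one coordinate of the difference by \(\cos \theta \) (so the sum can only decrease). The sketch below, with a hypothetical helper name, applies one shared event at a time using Python's `random` module.

```python
import math
import random

def coupled_step(v, v_tilde, rng, lam, mu, T):
    """Apply one event to two systems with shared randomness (in place).

    Both systems use the same event type, particle indices, angle theta and
    thermostat velocity w, as in the synchronous coupling of Lemma 7.
    """
    n = len(v)
    theta = rng.uniform(0.0, 2.0 * math.pi)
    c, s = math.cos(theta), math.sin(theta)
    if rng.random() < lam / (lam + mu):
        i, j = rng.sample(range(n), 2)
        for x in (v, v_tilde):
            xi, xj = x[i], x[j]
            x[i], x[j] = xi * c - xj * s, xi * s + xj * c
    else:
        i = rng.randrange(n)
        w = rng.gauss(0.0, math.sqrt(T))
        for x in (v, v_tilde):
            x[i] = x[i] * c - w * s

def squared_distance(v, v_tilde):
    return sum((a - b) ** 2 for a, b in zip(v, v_tilde))
```

Averaging over \(\theta \), each thermostat event removes half of the affected squared difference, which is the origin of the rate \(\mu /2\).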

3.2 Coupling with Boltzmann Processes

For a given probability measure \(f_0\), let \((f_t)_{t\ge 0}\) be the unique weak solution of (4) given by Theorem 5. We will now construct a stochastic process \((Z_t)_{t\ge 0}\), called the Boltzmann process, such that \(\text {Law}(Z_t) = f_t\) for all \(t\ge 0\). This process is the probabilistic counterpart of (4), and it represents the trajectory of a single particle immersed in the infinite population. It was first introduced by Tanaka [21] in the context of the Boltzmann equation for Maxwell molecules.

Consider a Poisson point measure \(\mathcal {P}(dt,d\theta ,dz)\) on \([0,\infty ) \times [0,2\pi ) \times \mathbb {R}\) with intensity \(2\lambda dt \frac{d\theta }{2\pi } f_t(dz)\), and an independent Poisson point measure \(\mathcal {Q}(dt,d\theta ,dw)\) on \([0,\infty ) \times [0,2\pi ) \times \mathbb {R}\) with intensity \(\mu dt \frac{d\theta }{2\pi } \gamma (dw)\). Consider also a random variable \(Z_0\) with law \(f_0\), independent of \(\mathcal {P}\) and \(\mathcal {Q}\). The process \(Z_t\) is defined as the unique solution, starting from \(Z_0\), to the stochastic differential equation

Strong existence and uniqueness of solutions for this SDE are straightforward, since the rates of \(\mathcal {P}\) and \(\mathcal {Q}\) are finite on bounded time intervals. To show that \(\text {Law}(Z_t) = f_t\), the argument is classical: one first shows that \(\ell _t {:}{=} \text {Law}(Z_t)\) solves

$$\partial _t \ell _t = 2\lambda B[\ell _t, f_t] + \mu B[\ell _t,\gamma ] - (2\lambda +\mu )\ell _t, \qquad \ell _0 = f_0,$$

which is a linearized version of (6). This equation has a unique solution in the space \(C([0, \infty ), \mathcal {M})\) because the mapping \(\nu \mapsto B[\nu ,f_t]\) is non-expanding in total variation for all t. Since \(f_t\) is a solution of this linearized version, we must have that \(\ell _t = f_t\).
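A simple case in which the Boltzmann process can be simulated exactly is \(f_0 = \gamma \): since \(\gamma \) is the equilibrium of (4), \(f_t = \gamma \) for all t, so the partners drawn from \(\mathcal {P}\) and the reservoir velocities drawn from \(\mathcal {Q}\) are both Gaussian with variance T. The sketch assumes that both kinds of jumps in (13) have the form \(Z \mapsto Z\cos \theta - z\sin \theta \) (with z replaced by w for the thermostat), as in (2); under this assumption the two Poisson measures can be merged into one of rate \(2\lambda +\mu \). The function name is hypothetical.

```python
import math
import random

def boltzmann_process_at_equilibrium(lam, mu, T, t_max, rng):
    """Return Z_{t_max} for the Boltzmann process started from f_0 = gamma.

    Assumption: at atoms of P (rate 2*lam) and of Q (rate mu) the process jumps
    to Z*cos(theta) - z*sin(theta), where z ~ f_t = gamma or w ~ gamma.
    Both jump types then have the same law and are merged into one stream.
    """
    sd = math.sqrt(T)
    z = rng.gauss(0.0, sd)  # Z_0 ~ f_0 = gamma
    total_rate = 2.0 * lam + mu
    t = rng.expovariate(total_rate)
    while t <= t_max:
        theta = rng.uniform(0.0, 2.0 * math.pi)
        partner = rng.gauss(0.0, sd)  # a realization of f_t = gamma
        z = z * math.cos(theta) - partner * math.sin(theta)
        t += rng.expovariate(total_rate)
    return z
```

Because a rotation of two independent centered Gaussians with equal variance is again such a pair, each jump preserves the law \(\gamma \), which is consistent with \(\text {Law}(Z_t) = f_t = \gamma \).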

Since \(\text {Law}(Z_t) = f_t\), we can thus use the Boltzmann process as a tool to prove properties of the solution of the thermostated Boltzmann–Kac equation (4). For instance, we have the following lemma, which will be needed later to prove our uniform-in-time propagation of chaos result.

Lemma 8

(propagation of moments) Let \((f_t)_{t\ge 0}\) be the weak solution to (4). Let \(r\ge 2\), and assume that \(\int _\mathbb {R}\vert v \vert ^r f_0(dv)< \infty \). Then \(\sup _{t \ge 0} \int _\mathbb {R}\vert v\vert ^r f_t(dv)<\infty \).

Proof

The case \(r=2\) follows from (9), so we assume \(r>2\). Let \((Z_t)_{t\ge 0}\) be the Boltzmann process, i.e., the solution to (13). Let \(h(t) = \mathbb {E}\vert Z_t \vert ^r = \int _\mathbb {R}\vert v\vert ^r f_t(dv)\). We know from Theorem 5 that \(h(t)<\infty \) for all t. Then h(t) satisfies

Note that \(\mathbb {E}|Z_t|^{r-1} \le h(t)^{1-1/r}\) and \(\mathbb {E}|Z_t| \le \max \{T, \int _\mathbb {R}v^2 f_0(dv)\}^{1/2}\), thanks to (9) and Jensen’s inequality. Using the inequality \((a+b)^r \le a^r + b^r + 2^{r-1} (a b^{r-1} +a^{r-1} b)\), valid for \(a,b \ge 0\) and \(r\ge 2\), we thus obtain

where

and \(C_2, C_3>0\) are constants depending on \(\lambda \), \(\mu \), r, T, \(\int _\mathbb {R}v^2 f_0(dv)\), and some moments of \(\gamma \) of order at most r. The statement follows from (14). \(\square \)
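The elementary inequality used in this proof can be sanity-checked numerically on a grid. This is not a proof, only a quick check under the assumption \(r\ge 2\) (for \(1<r<2\) it fails for small \(a/b\), since near \(a=0\) it requires \(2^{r-1}\ge r\)). The helper name is hypothetical.

```python
def inequality_holds(a, b, r, tol=1e-12):
    """Check (a+b)^r <= a^r + b^r + 2^(r-1) (a b^(r-1) + a^(r-1) b) for a, b >= 0."""
    lhs = (a + b) ** r
    rhs = a ** r + b ** r + 2.0 ** (r - 1.0) * (a * b ** (r - 1.0) + a ** (r - 1.0) * b)
    # Small relative and absolute slack absorbs floating-point rounding.
    return lhs <= rhs * (1.0 + tol) + tol

grid = (0.0, 0.01, 0.1, 0.5, 1.0, 2.0, 10.0)
checked = all(
    inequality_holds(a, b, r)
    for r in (2.0, 2.5, 3.0, 4.0, 4.5, 6.0)
    for a in grid
    for b in grid
)
print(checked)
```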

The Boltzmann process (13) is particularly useful in coupling arguments, as the next result shows. It provides contraction for the thermostated Boltzmann–Kac equation in \(W_2\)-distance:

Lemma 9

(contraction and equilibration for the thermostated Boltzmann–Kac equation) Let \(f_t\), \(\tilde{f}_t\) be the weak solutions to (4) starting from some possibly different probability measures \(f_0\), \(\tilde{f}_0\). Then

$$W_2^2(f_t,\tilde{f}_t) \le e^{-\mu t/2}\, W_2^2(f_0,\tilde{f}_0) \qquad \text {for all } t\ge 0.$$

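Consequently, taking \(f_0 = \gamma \) (so that \(f_t = \gamma \) for all t) gives

$$W_2^2(\gamma ,\tilde{f}_t) \le e^{-\mu t/2}\, W_2^2(\gamma ,\tilde{f}_0) \qquad \text {for all } t\ge 0.$$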

Proof

For all \(t\ge 0\), let \(\Pi _t\) be an optimal coupling between \(f_t\) and \(\tilde{f}_t\), that is, \(\Pi _t\) is a probability measure on \(\mathbb {R}\times \mathbb {R}\) such that \(\int (z-\tilde{z})^2 \Pi _t(dz,d\tilde{z}) = W_2^2(f_t,\tilde{f}_t)\). Let \({\mathcal {S}}(dt,d\theta ,dz,d\tilde{z})\) be a Poisson point measure on \([0,\infty )\times [0,2\pi )\times \mathbb {R}\times \mathbb {R}\) with intensity \(2\lambda dt \frac{d\theta }{2\pi }\Pi _t(dz,d\tilde{z})\), and define \(\mathcal {P}(dt,d\theta ,dz) = {\mathcal {S}}(dt,d\theta ,dz,\mathbb {R})\) and \({\tilde{\mathcal {P}}}(dt,d\theta ,d\tilde{z}) = {\mathcal {S}}(dt,d\theta ,\mathbb {R},d\tilde{z})\). In words, \(\mathcal {P}\) and \({\tilde{\mathcal {P}}}\) are Poisson point measures, with intensities \(2\lambda dt \frac{d\theta }{2\pi } f_t(dz)\) and \(2\lambda dt \frac{d\theta }{2\pi } \tilde{f}_t(d\tilde{z})\) respectively, which have the same atoms in the t and \(\theta \) variables, and with optimally-coupled realizations of \(f_t\) and \(\tilde{f}_t\) on the z and \(\tilde{z}\) variables. Also, let \(\mathcal {Q}(dt,d\theta ,dw)\) be a Poisson point measure with intensity \(\mu dt \frac{d\theta }{2\pi } \gamma (dw)\) that is independent of \({\mathcal {S}}\), and set \({\tilde{\mathcal {Q}}} = \mathcal {Q}\). Finally, let \((Z_0, \tilde{Z}_0)\) be a realization of \(\Pi _0\), independent of everything else; in particular we have \(\mathbb {E}[(Z_0-\tilde{Z}_0)^2] = W_2^2(f_0,\tilde{f}_0)\).

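Let \(Z_t\) and \(\tilde{Z}_t\) be the solutions to the SDE (13) with respect to \((\mathcal {P},\mathcal {Q})\) and \(({\tilde{\mathcal {P}}},{\tilde{\mathcal {Q}}})\), respectively, thus \(\text {Law}(Z_t) = f_t\) and \(\text {Law}(\tilde{Z}_t) = \tilde{f}_t\). Consequently, we have \(W_2^2(f_t,\tilde{f}_t) \le \mathbb {E}[(Z_t - \tilde{Z}_t)^2] =: h(t)\). Using Itô calculus, and noting that the thermostat terms \(w\sin \theta \) cancel in \(Z_t - \tilde{Z}_t\), we have:

$$\begin{aligned} h'(t) &= 2\lambda \int _0^{2\pi } \int _{\mathbb {R}^2} \mathbb {E}\Big[ \big( (Z_t-\tilde{Z}_t)\cos \theta - (z-\tilde{z})\sin \theta \big)^2 - (Z_t-\tilde{Z}_t)^2 \Big]\, \Pi _t(dz,d\tilde{z})\, \frac{d\theta }{2\pi } + \mu \int _0^{2\pi } \mathbb {E}\Big[ (Z_t-\tilde{Z}_t)^2 (\cos ^2\theta - 1) \Big] \frac{d\theta }{2\pi } \\ &= \lambda \int _{\mathbb {R}^2} \big[ (z-\tilde{z})^2 - h(t) \big]\, \Pi _t(dz,d\tilde{z}) - \frac{\mu }{2} h(t), \end{aligned}$$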

where in the last step the cross term vanished because \(\int _0^{2\pi } \cos \theta \sin \theta d\theta = 0\). Since \(\int (z-\tilde{z})^2 \Pi _t(dz,d\tilde{z}) = W_2^2(f_t,\tilde{f}_t) \le h(t)\), the integral in the last line is bounded above by 0. We thus obtain \(h'(t) \le - \frac{\mu }{2} h(t)\), which yields the result. \(\square \)

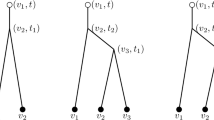

We now specify the coupling construction that will allow us to prove our main result. We closely follow [10], see also [9]. The key idea is to define a system \(\mathbf {Z}_t = (Z_t^1,\ldots ,Z_t^N)\) of Boltzmann processes such that, for each \(i=1,\ldots ,N\), the process \(Z_t^i\) mimics as closely as possible the dynamics of particle \(V_t^i\). Comparing (11) and (13), we see that a way of achieving this is to define \(Z_t^i\) as the solution of (11), but replacing \(V_{t^{-}}^{\mathbf {i}(\xi )}\), which is a \(\xi \)-realization of the (random) empirical measure \(\frac{1}{N-1} \sum _{j\ne i} \delta _{V_{t^{-}}^j}\), with a \(\xi \)-realization of \(f_t\). Moreover, we will do this in an optimal way.
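In one dimension, one way to realize this optimal replacement is monotone rearrangement. This is an assumption on our part and not necessarily the construction of [10, Lemma 3]. Rank the partner \(z^{\mathbf {i}(\xi )}\) among the other \(N-1\) particles, use the fractional part of \(\xi \) to spread a uniform variable over that rank's cell of \([0,1)\), and apply the quantile function of \(f_t\). The resulting pair is comonotone, hence optimal for the quadratic cost. For illustration, the sketch takes \(f_t\) Gaussian via Python's `statistics.NormalDist`; the helper `optimal_partner` is hypothetical.

```python
import math
from statistics import NormalDist

def optimal_partner(z, i, xi, quantile):
    """Hypothetical 1D construction of F_t^i(z, xi) by monotone rearrangement.

    z        : list of the N values z^1, ..., z^N (particle labels are 1-based)
    xi       : a point of [0, N) outside [i-1, i); non-integer, so u is in (0, 1)
    quantile : quantile function (inverse CDF) of the target law f_t
    """
    n = len(z)
    j = math.floor(xi) + 1
    if j == i:
        raise ValueError("xi must lie outside [i-1, i)")
    # Rank the other particles by value (ties broken by label).
    others = sorted(range(1, n + 1), key=lambda k: (z[k - 1], k))
    others.remove(i)
    rank = others.index(j)  # 0-based rank of z^j among the other N-1 particles
    u = (rank + (xi - math.floor(xi))) / (n - 1)
    return quantile(u)
```

Larger partners \(z^{\mathbf {i}(\xi )}\) are sent to larger values of \(F\), which is exactly the monotonicity that makes the coupling optimal in one dimension.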

Specifically: we define \(Z_t^i\) as the unique jump-by-jump solution to

where we have used the same Poisson point measures \(\mathcal {P}_i\) and \(\mathcal {Q}_i\) as in (11). Here, \(F^i\) is a measurable function \([0,\infty ) \times \mathbb {R}^N \times [0,N) \ni (t,\mathbf {z},\xi ) \mapsto F_t^i(\mathbf {z},\xi ) \in \mathbb {R}\) with the following property: for any \(t\ge 0\), \(\mathbf {z}\in \mathbb {R}^N\), and any random variable U uniformly distributed on the set \([0,N) \backslash [i-1,i)\), the pair \((z^{\mathbf {i}(U)}, F_t^i(\mathbf {z}, U))\) is an optimal coupling between the empirical measure \({\bar{\mathbf {z}}}^i {:}{=}\frac{1}{N-1} \sum _{j\ne i} \delta _{z^j}\) and \(f_t\). In other words,

$$\begin{aligned} \int _{[0,N)\backslash [i-1,i)} \left( z^{\mathbf {i}(\xi )} - F_t^i(\mathbf {z},\xi )\right) ^2 \frac{d\xi }{N-1} = W_2^2({\bar{\mathbf {z}}}^i, f_t). \end{aligned}$$(16)

(The values of \(F_t^i(\mathbf {z},\xi )\) for \(\xi \in [i-1,i)\) are irrelevant.) We refer the reader to [10, Lemma 3] for a proof of the existence of such a function. The same result also ensures that \(F_t^i\) satisfies the following: for any exchangeable random vector \({\mathbf {X}}\) in \(\mathbb {R}^N\) and any measurable function \(\phi \), one has for \(j\ne i\)

We take an initial condition \(\mathbf {Z}_0 = (Z_0^1,\ldots ,Z_0^N)\) with distribution \(f_0^{\otimes N}\) and optimally coupled to \(\mathbf {V}_0\), thus

by exchangeability. We have thus defined a collection \(\mathbf {Z}_t = (Z_t^1,\ldots ,Z_t^N)\), where each \(Z_t^i\) is a Boltzmann process by construction; in particular, \(\text {Law}(Z_t^i) = f_t\). However, \(Z_t^i\) and \(Z_t^j\) jump simultaneously whenever \(V_t^i\) and \(V_t^j\) undergo a Kac collision, so \(Z_t^i\) and \(Z_t^j\) are not independent. For this construction to be useful, one needs to prove that these Boltzmann processes become asymptotically independent as \(N\rightarrow \infty \), as is done in [9, 10]. This is the content of the following lemma, which moreover provides explicit rates in N, uniformly in time:

Lemma 10

(decoupling of Boltzmann processes) There exists a constant \(C<\infty \) depending only on \(\lambda \), \(\mu \), T, and \(\int v^2 f_0(dv)\), such that for all fixed \(k\in \mathbb {N}\) we have for all \(t\ge 0\):

Proof

The argument is the same as in [10, Lemma 6] and [9, Lemma 3], so we only provide the main steps of the proof here. The idea is again to use a coupling argument: for fixed \(k \le N\), we will define k independent Boltzmann processes \(\tilde{Z}_t^1,\ldots ,\tilde{Z}_t^k\) that remain close to \(Z_t^1,\ldots ,Z_t^k\) in expectation. To achieve this, each \(\tilde{Z}_t^i\) will use the same randomness that defines \(Z_t^i\) (i.e., the SDE (15)), except when \(Z_t^i\) has a simultaneous jump with \(Z_t^j\) for some \(j\in \{1,\ldots ,k\}\), in which case either \(\tilde{Z}_t^i\) or \(\tilde{Z}_t^j\) will not jump. To compensate for the missing jumps, we will use an additional independent source of randomness to define new jumps. Since the Kac partner \(\mathbf {i}(\xi )\) of \(Z_t^i\) is uniformly distributed among the other \(N-1\) indices, this occurs, in expectation, only for a proportion \((k-1)/(N-1) \le k/N\) of the jumps of the collection \(Z_t^1,\ldots ,Z_t^k\); this is what gives the desired estimate.

To this end, let \({\tilde{\mathcal {R}}}\) be an independent copy of the Poisson point measure \(\mathcal {R}\) introduced at the beginning of Sect. 3.1, and for \(i=1,\ldots ,k\), define

which is a Poisson point measure with intensity \(2\lambda dt d\theta d\xi \mathbf {1}_{\{\mathbf {i}(\xi )\ne i\}}/ [2\pi (N-1)]\), just as \(\mathcal {P}_i\). Note that the Poisson measures \({\tilde{\mathcal {P}}}_1,\ldots ,{\tilde{\mathcal {P}}}_k\) are independent by construction. Mimicking (15), we define \(\tilde{Z}_t^i\) as the solution, starting from \(\tilde{Z}_0^i = Z_0^i\), to the SDE

It is clear that \(\tilde{Z}_t^1,\ldots ,\tilde{Z}_t^k\) is an exchangeable collection of Boltzmann processes. Moreover, using the independence of \({\tilde{\mathcal {P}}}_1,\ldots ,{\tilde{\mathcal {P}}}_k\) and the fact that \(F_t^i({\mathbf {z}},\xi )\) has distribution \(f_t\) for any \(\mathbf {z}\in \mathbb {R}^N\) and any \(\xi \) uniformly distributed on \([0,N)\backslash [i-1,i)\), one can prove that the processes \(\tilde{Z}_t^1,\ldots ,\tilde{Z}_t^k\) are independent. For a full proof of this fact in a very similar setting, we refer the reader to [10, Lemma 6].

Call \(h(t) {:}{=} \mathbb {E}[(Z_t^1 - \tilde{Z}_t^1)^2]\). By exchangeability, we have

thus it suffices to obtain the desired estimate for h(t). From (15) and (19), using Itô calculus, we obtain:

where \(\Delta _1\) corresponds to the increment of \((Z_t^1 - \tilde{Z}_t^1)^2\) when \(Z_t^1\) and \(\tilde{Z}_t^1\) have a simultaneous Kac-type jump, \(\Delta _2\) is the increment when only \(Z_t^1\) jumps, \(\Delta _3\) is the increment when only \(\tilde{Z}_t^1\) jumps, and \(\Delta _4\) is the increment when there is a thermostat interaction.

Using the indicator \(\mathbf {1}_{[0,k)}(\xi )\), the fact that \(\Delta _2\) and \(\Delta _3\) involve only second-order products of \(f_t\)-distributed variables, property (17), and the bound \(\int v^2 f_t(dv) \le \max \left\{ \int v^2 f_0(dv) , T \right\} \) implied by (9), we deduce that the second and third terms in (20) are bounded above by \(\frac{Ck}{N}\). On the other hand, since the term \(F_t^i({\mathbf {Z}}_{t^{-}},\xi )\) appears in both (15) and (19), it cancels out in \(\Delta _1\); more specifically, we have

Similarly, one easily checks that \(\Delta _4 = -(1-\cos ^2\theta ) (Z_{t^{-}}^1-\tilde{Z}_{t^{-}}^1)^2\), so, since \(\frac{1}{2\pi }\int _0^{2\pi } \sin ^2\theta \, d\theta = \frac{1}{2}\), the last term in (20) is equal to \(-\frac{\mu }{2} h(t)\). Thus, simply discarding the term \(\Delta _1 \mathbf {1}_{[k,N)}(\xi ) \le 0\) in the first line of (20), we deduce that

Thus \(h'(t) + (\lambda + \frac{\mu }{2}) h(t) \le \frac{Ck}{N}\). Since \(h(0) = 0\), the desired bound follows from the last inequality by multiplying by \(e^{(\lambda + \frac{\mu }{2})t}\) and integrating. \(\square \)
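The integration step can be written out as a standard integrating-factor computation. Here \(c>0\) denotes the coefficient of \(h(t)\) on the left-hand side of the last inequality (\(c = \lambda + \frac{\mu }{2}\) above), and we use \(h(0)=0\):

$$\begin{aligned} \frac{d}{dt}\left( e^{ct} h(t)\right) = e^{ct}\left( h'(t) + c\, h(t)\right) \le \frac{Ck}{N} e^{ct} \quad \Longrightarrow \quad h(t) \le e^{-ct} h(0) + \frac{Ck}{cN}\left( 1 - e^{-ct}\right) \le \frac{Ck}{cN}. \end{aligned}$$

The right-hand side does not depend on \(t\), which is what makes the estimate uniform in time.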

We now want to obtain an estimate for the decoupling property of the system of Boltzmann processes in terms of \(\mathbb {E}[W_2^2({\bar{\mathbf {Z}}}_t, f_t)]\); this is the content of Lemma 11 below. To this end, we will need to recall two results.
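Both results recalled below concern the expected squared \(W_2\) distance between an empirical measure and a fixed law. On the real line, \(W_2^2(\mu ,\nu ) = \int _0^1 (F_\mu ^{-1}(u) - F_\nu ^{-1}(u))^2\,du\), where \(F^{-1}\) denotes a quantile function, so this quantity is easy to estimate numerically. The following Monte Carlo sketch (hypothetical helper names) estimates the expected squared distance between the empirical measure of \(k\) i.i.d. samples and \(\nu \), taking \(\nu \) standard Gaussian purely for illustration. The general \(k^{-1/2}\) rate recalled below is only an upper bound for laws with a finite moment of order \(r>4\); for this particular \(\nu \) the observed decay may well be faster.

```python
import random
from statistics import NormalDist


def w2_squared_vs_gaussian(sample, grid_quantiles):
    """Approximate W_2^2(empirical measure of `sample`, N(0,1)) on the real line.

    In 1D, W_2^2 is the integral over u in (0,1) of the squared difference of the
    two quantile functions; we use a midpoint rule with len(grid_quantiles) cells.
    """
    xs = sorted(sample)
    k, m = len(xs), len(grid_quantiles)
    total = 0.0
    for idx, q in enumerate(grid_quantiles):
        u = (idx + 0.5) / m             # midpoint of the idx-th cell
        x = xs[min(int(u * k), k - 1)]  # empirical quantile at level u
        total += (x - q) ** 2
    return total / m


def estimate_eps(k, n_rep=200, m=2000, seed=0):
    """Monte Carlo estimate of eps_k(nu) for the illustrative choice nu = N(0,1)."""
    rng = random.Random(seed)
    nd = NormalDist()
    grid = [nd.inv_cdf((idx + 0.5) / m) for idx in range(m)]  # Gaussian quantiles
    reps = [w2_squared_vs_gaussian([rng.gauss(0.0, 1.0) for _ in range(k)], grid)
            for _ in range(n_rep)]
    return sum(reps) / n_rep


for k in (10, 40, 160):
    print(k, estimate_eps(k))
```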

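For a probability measure \(\nu \) on \(\mathbb {R}\) and for any \(k\in \mathbb {N}\), we let \(\varepsilon _k(\nu )\) be given by

$$\begin{aligned} \varepsilon _k(\nu ) {:}{=} \mathbb {E}\left[ W_2^2\left( \frac{1}{k}\sum _{j=1}^k \delta _{X_j}, \nu \right) \right] , \end{aligned}$$
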
where \({\mathbf {X}} = (X_1, \dots , X_k)\) is a collection of i.i.d. variables with law \(\nu \). The first result, see [13, Theorem 1], provides rates of convergence for \(\varepsilon _k(\nu )\): if \(\nu \) has a finite \(r^{\text {th}}\) moment for some \(r>4\), then there is a constant \(C_r\) that depends only on r such that

The second result, which is a special case of [10, Lemma 7], states that if \({\mathbf {X}}\) is any exchangeable random vector on \(\mathbb {R}^N\) and \(\nu \) is any probability measure on \(\mathbb {R}\), then there is a constant C depending only on the second moments of \(X^1\) and \(\nu \) such that for any \(k \le N\) we have:

We are now ready to state and prove:

Lemma 11

Assume that \(\int _\mathbb {R}f_0(dv) \vert v \vert ^r < \infty \) for some \(r>4\). Then there is a constant C depending only on \(\lambda \), \(\mu \), T, r, and \(\int _\mathbb {R}f_0(dv) \vert v\vert ^r\), such that for all \(t\ge 0\) we have

Moreover, this bound also holds if we replace \(\bar{{\mathbf {Z}}}_t\) by \(\bar{{\mathbf {Z}}}_t^i = \frac{1}{N-1} \sum _{j\ne i} \delta _{Z_t^j}\).

Proof

For \(k\le N\), (22) applied to \(\nu =f_t\) and \({\mathbf {X}} = {\mathbf {Z}}_t\) gives:

where in the last step we used Lemma 10. The finite initial \(r^{\text {th}}\) moment hypothesis, together with Lemma 8, implies that

Thus, from (21), we obtain \(\varepsilon _k(f_t) \le C/k^{1/2}\) for all \(t\ge 0\) (since \(r>4\)). Taking \(k \sim N^{2/3}\), which makes \(k^{-1/2}\) and \(k/N\) both of order \(N^{-1/3}\), gives the result. The estimate for \(\bar{{\mathbf {Z}}}_t^i\) is deduced similarly, taking \({\mathbf {X}} = (Z_t^j)_{j\ne i}\) in (22). \(\square \)
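The choice \(k \sim N^{2/3}\) balances two error terms. Assuming the bound obtained from (22) and Lemma 10 has the shape \(c_1 k^{-1/2} + c_2 k/N\) (the display itself is not reproduced here), the continuous minimizer is \(k^* = (c_1 N/(2c_2))^{2/3}\), and for \(c_1 = c_2 = 1\) the minimal value is \(3\cdot 2^{-2/3} N^{-1/3}\). The short sketch below, with hypothetical helper names, checks this by brute force over integer \(k\).

```python
def bound(k, n, c1=1.0, c2=1.0):
    """Hypothetical shape of the bound in Lemma 11: c1 * k^(-1/2) + c2 * k / n."""
    return c1 * k ** -0.5 + c2 * k / n


def best_k(n, c1=1.0, c2=1.0):
    """Brute-force minimizer of bound(k, n) over the admissible integers 1 <= k <= n."""
    return min(range(1, n + 1), key=lambda k: bound(k, n, c1, c2))


for n in (10**3, 10**5):
    k = best_k(n)
    # k should be close to (n / 2)^(2/3), and bound * n^(1/3) close to 3 * 2^(-2/3).
    print(n, k, (n / 2) ** (2 / 3), bound(k, n) * n ** (1 / 3))
```

Because the bound is convex in \(k\), the integer minimizer is within one unit of \(k^*\), so the \(N^{-1/3}\) rate is not affected by rounding.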

We now prove Theorem 2.

Proof

Call \(h(t) = \mathbb {E}[(V_t^1-Z_t^1)^2]\). Using Lemma 11 and exchangeability, we obtain

Thus, it suffices to prove that \(h(t) \le 2 e^{-\frac{\mu }{2} t} h(0) + C N^{-1/3}\), because \(h(0) = W_2^2(f_0^N, f_0^{\otimes N})\) thanks to (18).

We thus study the evolution of h(t). We have

Here \(S_t^K\) corresponds to the Kac interactions coming from the \(\mathcal {P}_i\) terms in (11) and (15), and \(S_t^T\) corresponds to the thermostat interactions coming from the \(\mathcal {Q}_i\) terms. For brevity, we write \(V_t^\mathbf {i}= V_t^{\mathbf {i}(\xi )}\), \(Z_t^\mathbf {i}= Z_t^{\mathbf {i}(\xi )}\), and \(F_t^1 = F_t^1({\mathbf {Z}}_{t^{-}},\xi )\). We now study \(S_t^K\) and \(S_t^T\) in turn. For the Kac term \(S_t^K\), we recall that the intensity of \(\mathcal {P}_1(dt,d\theta ,d\xi )\) is \(\frac{2 \lambda dt d\theta d\xi \mathbf {1}_{\{\mathbf {i}(\xi )\ne 1\}}}{2\pi (N-1)}\). Thus from (11) and (15), using Itô calculus, we obtain:

where in the second equality the cross term vanished since \(\int _0^{2\pi } \cos \theta \sin \theta d\theta = 0\). We now control the positive term in (23) by adding and subtracting \(Z_t^\mathbf {i}\) inside the square. Set \(a(t) {:}{=} \mathbb {E}\int _1^N (Z_t^\mathbf {i}- F_t^1)^2 \frac{d\xi }{N-1}\), so that \(a(t) = \mathbb {E}[W_2^2(\bar{{\mathbf {Z}}}_t^1, f_t)]\) thanks to (16). Also note that \(\mathbb {E}\int _1^N (V_t^\mathbf {i}- Z_t^\mathbf {i})^2 \frac{d\xi }{N-1} = \frac{1}{N-1}\sum _{j=2}^N \mathbb {E}(V_t^j- Z_t^j)^2\), which equals h(t) by exchangeability. Therefore, we have

where we have used the Cauchy–Schwarz inequality. Plugging this into (23) gives

Next, for the thermostat term \(S_t^T\), we recall that the intensity of \(\mathcal {Q}_1(dt,d\theta , dw)\) is \(\mu dt \frac{d\theta }{2\pi }\gamma (dw)\). Thus, again from (11) and (15), we have for \(S_t^T\):

Combining the bounds for \(S_t^K\) and \(S_t^T\), we see that

By Lemma 11, \(a(t) \le C/N^{1/3}\) for all \(t\ge 0\). Thus, the Theorem follows from (24) by a Gronwall-type inequality (see for example [1, Lemma 4.1.8]). \(\square \)
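One way to carry out this Gronwall-type step is sketched below. We assume that (24) has the form \(h'(t) \le -\frac{\mu }{2} h(t) + \lambda a(t) + 2\lambda \sqrt{a(t) h(t)}\), which is what averaging the Kac increment over \(\theta \), applying the Cauchy–Schwarz bound above, and adding the thermostat contribution \(-\frac{\mu }{2}h(t)\) appears to give; the display (24) itself is not reproduced here. Write \(A {:}{=} C N^{-1/3} \ge \sup _{t\ge 0} a(t)\), set \(g(t) {:}{=} e^{\frac{\mu }{2}t} h(t)\), and recall the elementary square-root Gronwall inequality: if \(u\ge 0\) satisfies \(u(s)^2 \le c_0^2 + 2\int _0^s \beta (r) u(r)\,dr\) on \([0,t]\) with \(\beta \ge 0\), then \(u(t) \le c_0 + \int _0^t \beta (r)\,dr\). For fixed \(t\) and \(s\in [0,t]\),

$$\begin{aligned} g'(s)&\le \lambda A e^{\frac{\mu }{2}s} + 2\lambda \sqrt{A}\, e^{\frac{\mu }{4}s}\sqrt{g(s)},\\ g(s)&\le h(0) + \frac{2\lambda A}{\mu } e^{\frac{\mu }{2}t} + 2\int _0^s \lambda \sqrt{A}\, e^{\frac{\mu }{4}r}\sqrt{g(r)}\,dr,\\ \sqrt{g(t)}&\le \sqrt{h(0) + \frac{2\lambda A}{\mu } e^{\frac{\mu }{2}t}} + \frac{4\lambda \sqrt{A}}{\mu } e^{\frac{\mu }{4}t}. \end{aligned}$$

Squaring with \((x+y)^2 \le 2x^2 + 2y^2\) and multiplying by \(e^{-\frac{\mu }{2}t}\) gives \(h(t) \le 2 e^{-\frac{\mu }{2}t} h(0) + \left( \frac{4\lambda }{\mu } + \frac{32\lambda ^2}{\mu ^2}\right) A\), which is of the form \(2 e^{-\frac{\mu }{2} t} h(0) + C N^{-1/3}\) required at the beginning of the proof.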

4 Conclusion

In this work we showed that the thermostated Kac N-particle system propagates chaos uniformly in time, at a polynomial rate of order \(N^{-1/3}\) in terms of the 2-Wasserstein metric squared, improving the propagation of chaos result in [4]. This illustrates that the coupling method in [10] can be adapted to include thermostats. We also used coupling arguments to deduce equilibration estimates for both the particle system and the kinetic equation.

We plan to extend this coupling method to a Kac-type model in which, in addition to the particle collisions (1) and the thermostat interactions (2), the system has an energy-restoring mechanism that brings the total energy of the system back to its initial value after each interaction with the thermostat. This will be the subject of future research.

References

Ambrosio, L., Gigli, N., Savaré, G.: Gradient Flows in Metric Spaces and in the Space of Probability Measures, 2nd edn. Lectures in Mathematics ETH Zürich. Birkhäuser, Basel (2008)

Bonetto, F., Carlen, E.A., Esposito, R., Lebowitz, J.L., Marra, R.: Propagation of chaos for a thermostated kinetic model. J. Stat. Phys. 154(1–2), 265–285 (2014)

Bonetto, F., Loss, M., Vaidyanathan, R.: The Kac model coupled to a thermostat. J. Stat. Phys. 156(4), 647–667 (2014)

Bonetto, F., Loss, M., Tossounian, H., Vaidyanathan, R.: Uniform approximation of a Maxwellian thermostat by finite reservoirs. Commun. Math. Phys. 351(1), 311–339 (2017)

Carlen, E., Carvalho, M.C., Loss, M.: Many-body aspects of approach to equilibrium. In: Journées “Équations aux Dérivées Partielles” (La Chapelle sur Erdre, 2000), pp. Exp. No. XI, 12. University of Nantes, Nantes (2000)

Carlen, E.A., Carvalho, M.C., Le Roux, J., Loss, M., Villani, C.: Entropy and chaos in the Kac model. Kinet. Relat. Models 3(1), 85–122 (2010)

Carlen, E., Mustafa, D., Wennberg, B.: Propagation of chaos for the thermostatted Kac master equation. J. Stat. Phys. 158(6), 1341–1378 (2015)

Carrapatoso, K.: Quantitative and qualitative Kac’s chaos on the Boltzmann’s sphere. Ann. Inst. Henri Poincaré Probab. Stat. 51(3), 993–1039 (2015)

Cortez, R.: Uniform propagation of chaos for Kac’s 1D particle system. J. Stat. Phys. 165(6), 1102–1113 (2016)

Cortez, R., Fontbona, J.: Quantitative propagation of chaos for generalized Kac particle systems. Ann. Appl. Probab. 26(2), 892–916 (2016)

Cortez, R., Fontbona, J.: Quantitative uniform propagation of chaos for Maxwell molecules. Commun. Math. Phys. 357(3), 913–941 (2018)

Einav, A.: On Villani’s conjecture concerning entropy production for the Kac master equation. Kinet. Relat. Models 4(2), 479–497 (2011)

Fournier, N., Guillin, A.: On the rate of convergence in Wasserstein distance of the empirical measure. Probab. Theory Relat. Fields 162(3–4), 707–738 (2015)

Graham, C., Méléard, S.: Stochastic particle approximations for generalized Boltzmann models and convergence estimates. Ann. Probab. 25(1), 115–132 (1997)

Hauray, M.: Uniform contractivity in Wasserstein metric for the original 1D Kac’s model. J. Stat. Phys. 162(6), 1566–1570 (2016)

Janvresse, E.: Spectral gap for Kac’s model of Boltzmann equation. Ann. Probab. 29(1), 288–304 (2001)

Kac, M.: Foundations of kinetic theory. In: Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, 1954–1955, vol. III, pp. 171–197. University of California Press, Berkeley and Los Angeles (1956)

Mischler, S., Mouhot, C.: Kac’s program in kinetic theory. Invent. Math. 193(1), 1–147 (2013)

Sznitman, A.-S.: Topics in propagation of chaos. In: École d’Été de Probabilités de Saint-Flour XIX—1989, volume 1464 of Lecture Notes in Mathematics. pp. 165–251. Springer, Berlin (1991)

Tanaka, S.: An extension of Wild’s sum for solving certain non-linear equation of measures. Proc. Jpn. Acad. 44, 884–889 (1968)

Tanaka, H.: On the uniqueness of Markov process associated with the Boltzmann equation of Maxwellian molecules. In: Proceedings of the International Symposium on Stochastic Differential Equations (Res. Inst. Math. Sci., Kyoto University, Kyoto, 1976), pp. 409–425. Wiley, New York (1978)

Tossounian, H., Vaidyanathan, R.: Partially thermostated Kac model. J. Math. Phys. 56(8), 083301 (2015)

Villani, C.: Optimal Transport, Old and New. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 338. Springer, Berlin (2009)

Acknowledgements

We would like to thank Federico Bonetto and Joaquin Fontbona for fruitful discussions during our work.


Additional information

Communicated by Eric A. Carlen.


R. Cortez: Supported by Iniciación Fondecyt Grant 11181082 and by Programa Iniciativa Científica Milenio through Nucleus Millenium Stochastic Models of Complex and Disordered Systems.

H. Tossounian: Supported by Programa Iniciativa Científica Milenio through Nucleus Millenium Stochastic Models of Complex and Disordered Systems.


Cite this article

Cortez, R., Tossounian, H. Uniform Propagation of Chaos for the Thermostated Kac Model. J Stat Phys 183, 28 (2021). https://doi.org/10.1007/s10955-021-02763-9
