Abstract

We consider a system of N point particles moving on a d-dimensional torus \(\mathbb{T}^{d}\). Each particle is subject to a uniform field E and random speed-conserving collisions \(\mathbf{v}_{i}\to\mathbf{v}_{i}'\) with \(|\mathbf{v}_{i}|=|\mathbf{v}_{i}'|\). This model is a variant of the Drude-Lorentz model of electrical conduction (Ashcroft and Mermin in Solid state physics. Brooks Cole, Pacific Grove, 1983). In order to avoid heating by the external field, the particles also interact with a Gaussian thermostat which keeps the total kinetic energy of the system constant. The thermostat induces a mean-field type of interaction between the particles. Here we prove that, starting from a product measure, in the limit N→∞, the one-particle velocity distribution f(q,v,t) satisfies a self-consistent Vlasov-Boltzmann equation for all finite times t. This is a consequence of “propagation of chaos”, which we also prove for this model.


1 Introduction

The derivation of autonomous kinetic equations describing the time evolution of the one-particle phase-space distribution function f(q,v,t) in a macroscopic system of N interacting particles, N≫1, is a central problem in non-equilibrium statistical mechanics. Examples of such equations are the Boltzmann (BE), Vlasov (VE) and Vlasov-Boltzmann (VBE) equations [14]. All of these equations have a quadratic nonlinearity, and their derivation from the N-particle microscopic dynamics requires proving (or assuming) that the two-particle distribution function \(f^{(2)}(q,q',v,v',t)\) factorizes (in an appropriate sense) as a product f(q,v,t)f(q′,v′,t) for times 0<t<T when N→∞, given that the N-particle distribution has a factorized form at t=0. This property of the N-particle dynamics, which goes under the name of “propagation of chaos” (POC), was first proved by Mark Kac for a spatially homogeneous stochastic model leading to a BE [10]. In his model, one picks a pair of particles at random and changes their velocities (v,w)↦(v′,w′) as if they had undergone an energy-conserving collision. Kac’s proof makes essential use of a boundedness property of the generator of the master equation (Kolmogorov forward equation) corresponding to the Kac process. This boundedness property holds for a Maxwellian collision model, in which the collision rate is independent of the speeds of the particles. It fails for hard-sphere collisions, for which the rate depends on single-particle speeds, and these can become arbitrarily large for large N even when the energy per particle is of order one.

Propagation of chaos for the Kac model with hard-sphere collisions was studied by McKean and his student Grünbaum [9]. The first complete proof was given by Sznitman [17] in 1984. These authors used a more probabilistic methodology and avoided direct analytic estimates on the master equation. This method has been extended to more general collisions and to higher dimensions by many authors; for the latest results, which are quantitative, see [13]. This method was also used by Lanford in deriving the BE, for short times T, for a model of hard spheres evolving under Newtonian dynamics in the Boltzmann-Grad limit; see [11] and the book and articles by H. Spohn [15, 16].

The derivation of the VE for a weakly interacting Hamiltonian system was first carried out by Braun and Hepp [6]. They considered a system of N particles in which the force on each particle is smooth and is a sum of contributions from O(N) particles, each of order O(1/N); see also Lanford [11]. In the limit N→∞ there is then an essentially deterministic smooth force on a given particle, and the evolution of the one-particle distribution is described by the VE.

There are no rigorous derivations of the VBE in which, in addition to the smooth collective force, there are also discontinuous collisions. In fact, even the appropriate scaling limit in which the VBE could be derived is not obvious: the collision part is obtained in the Boltzmann-Grad limit, which means low density, while the Vlasov force is obtained in the mean-field limit, and the two regimes could be incompatible. In [2] it is shown that this is not the case, by proposing a scaling limit in which the VBE is derived formally from the BBGKY hierarchy.

In this paper we go some way towards deriving such a VBE. The system we study is a variation of the Drude-Lorentz model of electrical conduction. In this model, the electrons are described as point particles moving in a constant external electric field E and undergoing energy conserving collisions with much heavier ions. In order to avoid the gain of energy by the electrons from the field, we use a Gaussian thermostat that fixes the total kinetic energy of the N-particle system.

A model in which one particle moves on a two dimensional torus with fixed disc scatterers subject to a field E and a Gaussian thermostat was originally introduced by Moran and Hoover [12]. Exact results for this system were derived in [7]. More information, both analytic and numerical, on this system for N=1 can be found in [4, 5]. There are no rigorous results for the deterministic case when N>1, which was first studied in [3], and further studied in [5].

To obtain a many-particle model that is amenable to analysis, it is natural to replace the deterministic collisions with the fixed disc scatterer by stochastic collisions. This too was done in [3] which introduced a variant of the model in which the deterministic collisions are replaced by “virtual collisions” in which each particle undergoes changes in the direction of its velocity, as in a collision with a disc scatterer, but now with the redirection of the velocity being random, and the “collision times” arriving in a Poisson stream, with a rate proportional to the particle speed. This variant is amenable to analysis for N>1, as shown in [3]. In particular, [3] presented a heuristic derivation of a BE for this many-particle system. To make this derivation rigorous, one must prove POC for the model, which is the aim of the present paper.

In this class of models the collisions involve only one particle at a time and are not the source of any correlation. The particles interact only through the Gaussian thermostat. Dealing with this is naturally quite different from dealing with the effects of binary collisions. On a technical level, the novelty of the present paper is to provide means to deal with the correlating effects of the Gaussian thermostat. To keep the focus on this issue, we make one more simplifying assumption on the collisions: in the model we treat here, the virtual collision times arrive in a Poisson stream that is independent of particle speeds. In other words, our virtual collisions are “Maxwellian”. However, one still cannot use Kac’s strategy for proving POC in this case, since the terms in the generator of the process representing the Gaussian thermostat involve derivatives and are unbounded. Because the virtual collisions are not responsible for any correlation between the particles, the assumption of Maxwellian collisions is made for technical reasons only. In a following paper we plan to build on our present results and treat hard-sphere virtual collisions as well. Interesting generalizations of the Gaussian thermostat problem that involve binary collisions have been studied by Wennberg and his students [18].

We now turn to a careful specification of the model we consider.

1.1 The Gaussian Thermostatted Particle System

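The microscopic configuration of our system is given by \(X=(\mathbf{q}_{1},\ldots,\mathbf{q}_{N},\mathbf{v}_{1},\ldots,\mathbf{v}_{N})=(\mathbf{Q},\mathbf{V})\), with \(\mathbf{q}_{i}\in\mathbb{T}^{d}\), \(\mathbf{v}_{i}\in {\mathord {\mathbb{R}}}^{d}\). The time evolution of the system between collisions is given by

$$ \frac{d\mathbf{q}_{i}}{dt}=\mathbf{v}_{i},\qquad \frac{d\mathbf{v}_{i}}{dt}=\mathbf{E}-\frac{\mathbf{E}\cdot\mathbf{j}(\mathbf{V})}{u(\mathbf{V})}\,\mathbf{v}_{i},\qquad i=1,\ldots,N, \tag{1.1} $$

where

$$ \mathbf{j}(\mathbf{V})=\frac{1}{N}\sum_{i=1}^{N}\mathbf{v}_{i},\qquad u(\mathbf{V})=\frac{1}{N}\sum_{i=1}^{N}|\mathbf{v}_{i}|^{2} . $$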

The term \(\frac{\mathbf {E}\cdot \mathbf {j}}{u}\mathbf{v}_{i}\) in (1.1) represents the Gaussian thermostat; it ensures that the kinetic energy per particle u(V) is constant in time. Each particle also undergoes collisions with “virtual” scatterers at random times, according to a Poisson process with unit intensity that is independent of its velocity and position. A collision changes the direction but not the magnitude of the particle velocity. When a “virtual” collision takes place with incoming velocity v, a unit vector \(\hat{\mathbf{n}}\) is randomly selected according to a distribution \(k(\hat{\mathbf{v}}\cdot\hat{\mathbf{n}})\), where \(\hat{\mathbf{v}}=\mathbf{v}/|\mathbf{v}|\), and the velocity of the particle is changed from v to v′, where

$$ \mathbf{v}'=\mathbf{v}-2(\mathbf{v}\cdot\hat{\mathbf{n}})\,\hat{\mathbf{n}} . $$

For d=1 this means that v′=−v.
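The collision rule is a reflection of v in the hyperplane orthogonal to \(\hat{\mathbf{n}}\). The following minimal Python sketch assumes the rule in the form \(\mathbf{v}'=\mathbf{v}-2(\mathbf{v}\cdot\hat{\mathbf{n}})\hat{\mathbf{n}}\) and uses plain lists as vectors; the helper names `dot` and `collide` are ours. It checks the identities used below, \(|\mathbf{v}'|=|\mathbf{v}|\) and \(\hat{\mathbf{v}}\cdot\hat{\mathbf{v}}'=1-2(\hat{\mathbf{v}}\cdot\hat{\mathbf{n}})^{2}\), as well as the d=1 case.

```python
import math

def dot(a, b):
    """Euclidean inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def collide(v, n):
    """Virtual collision: reflect v in the hyperplane orthogonal to the
    unit vector n, v' = v - 2 (v . n) n.  Only the direction of v changes."""
    c = 2.0 * dot(v, n)
    return [vi - c * ni for vi, ni in zip(v, n)]

# d = 3 example: n makes a 60 degree angle with v
v = [2.0, 0.0, 0.0]
n = [math.cos(math.pi / 3), math.sin(math.pi / 3), 0.0]
vp = collide(v, n)
speed_change = math.sqrt(dot(vp, vp)) - math.sqrt(dot(v, v))
cos_angle = dot(v, vp) / dot(v, v)          # v_hat . v_hat', since |v'| = |v|
predicted = 1.0 - 2.0 * (dot(v, n) / math.sqrt(dot(v, v))) ** 2
```

In d=1 the only unit vectors are ±1, and both give `collide([3.0], [1.0]) == collide([3.0], [-1.0]) == [-3.0]`, which is why k may be taken even.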

Remark 1.1

Observe that since \(\hat{\mathbf{n}}\) and \(-\hat{\mathbf{n}}\) both yield the same outgoing velocity, we may freely suppose that k is an even function on [−1,1]. Since \(\hat{\mathbf{v}}\cdot\hat{\mathbf{v}}' = 1 -2(\hat{\mathbf{v}}\cdot\hat{\mathbf{n}})^{2}\), the two collisions v→v′ and w→w′ are equally likely if

$$ \hat{\mathbf{v}}\cdot\hat{\mathbf{v}}'=\hat{\mathbf{w}}\cdot\hat{\mathbf{w}}' . $$

Moreover, for any two vectors v and v′ satisfying |v|=|v′| there is a collision that takes v into v′: Simply take \(\hat{\mathbf{n}}= (\mathbf{v} - \mathbf{v}')/(|\mathbf{v} - \mathbf{v}'|)\).
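The construction of \(\hat{\mathbf{n}}\) in the last sentence can be checked directly. This sketch repeats the reflection helper (again assuming the rule \(\mathbf{v}'=\mathbf{v}-2(\mathbf{v}\cdot\hat{\mathbf{n}})\hat{\mathbf{n}}\)) so that it is self-contained; `collision_vector` is a hypothetical helper name.

```python
import math

def dot(a, b):
    """Euclidean inner product."""
    return sum(x * y for x, y in zip(a, b))

def collide(v, n):
    """Reflection v' = v - 2 (v . n) n (assumed form of the collision rule)."""
    c = 2.0 * dot(v, n)
    return [vi - c * ni for vi, ni in zip(v, n)]

def collision_vector(v, vp):
    """Unit vector n = (v - vp)/|v - vp| of Remark 1.1, so that collide(v, n) == vp.

    Requires |v| == |vp|.  The case v == vp (where any n orthogonal to v
    works) is not handled in this sketch."""
    diff = [a - b for a, b in zip(v, vp)]
    norm = math.sqrt(dot(diff, diff))
    if norm == 0.0:
        raise ValueError("v and vp coincide")
    return [x / norm for x in diff]

v, vp = [1.0, 2.0, 2.0], [3.0, 0.0, 0.0]     # both have speed 3
n = collision_vector(v, vp)
out = collide(v, n)
```

The check works because \(|\mathbf{v}-\mathbf{v}'|^{2}=2|\mathbf{v}|^{2}-2\mathbf{v}\cdot\mathbf{v}'\) when \(|\mathbf{v}|=|\mathbf{v}'|\), so \(\mathbf{v}\cdot\hat{\mathbf{n}}=|\mathbf{v}-\mathbf{v}'|/2\) and the reflection subtracts exactly \(\mathbf{v}-\mathbf{v}'\).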

In the absence of an electric field, E=0, each particle would move independently, keeping its kinetic energy \(\mathbf{v}_{i}^{2}\) constant, while the collisions would cause the velocity \(\mathbf{v}_{i}\) to become uniformly distributed on the (d−1)-dimensional sphere of radius \(|\mathbf{v}_{i}|\). The force \(-(\mathbf{E}\cdot\mathbf{j})\mathbf{v}_{i}/u\) exerted by the Gaussian thermostat on the i-th particle induces, through j, a mean-field type of interaction between the particles: when a particle speeds up (slows down) due to the interaction with the field, some other particles have to slow down (speed up).
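The conservation of u under the thermostatted flow can be seen numerically. This sketch assumes the velocity equation of (1.1) in the form \(d\mathbf{v}_{i}/dt=\mathbf{E}-(\mathbf{E}\cdot\mathbf{j}/u)\mathbf{v}_{i}\) with \(\mathbf{j}=\frac1N\sum_i\mathbf{v}_{i}\) and \(u=\frac1N\sum_i|\mathbf{v}_{i}|^{2}\); the classical Runge-Kutta integrator and the sample data are our choices, not the paper's. The exact flow conserves u, and RK4 conserves it up to discretization error.

```python
def force(V, E):
    """Right-hand side of dv_i/dt in (1.1): E - (E.j/u) v_i, where
    j = (1/N) sum_i v_i and u = (1/N) sum_i |v_i|^2."""
    N, d = len(V), len(E)
    j = [sum(v[k] for v in V) / N for k in range(d)]
    u = sum(sum(x * x for x in v) for v in V) / N
    a = sum(E[k] * j[k] for k in range(d)) / u
    return [[E[k] - a * v[k] for k in range(d)] for v in V]

def shifted(V, K, s):
    """V + s*K, particle by particle."""
    return [[x + s * y for x, y in zip(v, k)] for v, k in zip(V, K)]

def rk4_step(V, E, h):
    """One classical Runge-Kutta step of size h for the thermostatted flow."""
    k1 = force(V, E)
    k2 = force(shifted(V, k1, h / 2), E)
    k3 = force(shifted(V, k2, h / 2), E)
    k4 = force(shifted(V, k3, h), E)
    return [[x + h / 6 * (p + 2 * q + 2 * r + s) for x, p, q, r, s in zip(v, a, b, c, e)]
            for v, a, b, c, e in zip(V, k1, k2, k3, k4)]

def energy(V):
    """Kinetic energy per particle u(V)."""
    return sum(sum(x * x for x in v) for v in V) / len(V)

V = [[1.0, 0.0], [0.0, -1.5], [0.5, 0.5]]     # N = 3 particles in d = 2
E = [1.0, 0.0]
u0 = energy(V)
for _ in range(1000):                          # integrate up to t = 10 with h = 0.01
    V = rk4_step(V, E, 0.01)
drift = energy(V) - u0
```

A full simulation of the A-system would interleave such steps with the virtual collisions, which rotate single velocities and therefore leave u unchanged.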

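The probability distribution W(Q,V,t) for the positions and velocities of the particles satisfies the master equation

$$ \frac{\partial W}{\partial t}+\sum_{i=1}^{N}\mathbf{v}_{i}\cdot\nabla_{\mathbf{q}_{i}}W+\sum_{i=1}^{N}\nabla_{\mathbf{v}_{i}}\cdot\Big[\Big(\mathbf{E}-\frac{\mathbf{E}\cdot\mathbf{j}(\mathbf{V})}{u(\mathbf{V})}\,\mathbf{v}_{i}\Big)W\Big]=\sum_{j=1}^{N}\int k(\hat{\mathbf{v}}_{j}\cdot\hat{\mathbf{n}})\big[W(\mathbf{Q},\mathbf{V}_{j}',t)-W(\mathbf{Q},\mathbf{V},t)\big]\,d\hat{\mathbf{n}}, \tag{1.3} $$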

where \(\mathbf{V}_{j}'=(\mathbf{v}_{1},\ldots,\mathbf{v}_{j-1},\mathbf{v}'_{j},\mathbf{v}_{j+1},\ldots,\mathbf{v}_{N})\) and the integration is over the angles \(\hat{\mathbf{n}}\) such that \(\mathbf{v}_{j}'\to\mathbf{v}_{j}\). Equation (1.3) is to be solved subject to an initial condition \(W(\mathbf{Q},\mathbf{V},0)=W_{0}(\mathbf{Q},\mathbf{V})\). It is easy to see that the projection of W(Q,V,t) onto any energy surface u(V)=e evolves autonomously, and for E≠0 this evolution satisfies the Doeblin condition [8]. Hence, for any finite N, the stochastic collisions combined with the thermostatted force drive W(Q,V,t) to a unique stationary state on each energy surface as t→∞; in fact, this approach is exponential for any finite N. In this paper, however, we focus instead on the time evolution of the one-particle marginal distribution f(q,v,t) for fixed t as N→∞.

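Now it seems reasonable to believe that in this limit one can use the law of large numbers to replace j(V) in (1.3) by its expectation value with respect to W. When this is true, starting from a product measure

$$ W_{0}(\mathbf{Q},\mathbf{V})=\prod_{i=1}^{N}f_{0}(\mathbf{q}_{i},\mathbf{v}_{i}), \tag{1.5} $$

the system will stay in a product measure

$$ W(\mathbf{Q},\mathbf{V},t)=\prod_{i=1}^{N}f(\mathbf{q}_{i},\mathbf{v}_{i},t), $$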

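and f(q,v,t) will satisfy an autonomous nonlinear Vlasov-Boltzmann equation (VBE)

$$ \frac{\partial f}{\partial t}+\mathbf{v}\cdot\nabla_{\mathbf{q}}f+\nabla_{\mathbf{v}}\cdot\Big[\Big(\mathbf{E}-\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}(t)}{\tilde{u}}\,\mathbf{v}\Big)f\Big]=\int k(\hat{\mathbf{v}}\cdot\hat{\mathbf{n}})\big[f(\mathbf{q},\mathbf{v}',t)-f(\mathbf{q},\mathbf{v},t)\big]\,d\hat{\mathbf{n}}, \tag{1.6} $$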

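where \(\tilde{\mathbf {j}}(t)\) and \(\tilde{u}\) are given by

$$ \tilde{\mathbf{j}}(t)=\int\mathbf{v}\,f(\mathbf{q},\mathbf{v},t)\,d\mathbf{q}\,d\mathbf{v},\qquad \tilde{u}(t)=\int|\mathbf{v}|^{2}f(\mathbf{q},\mathbf{v},t)\,d\mathbf{q}\,d\mathbf{v} . \tag{1.7} $$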

It is easy to check that \(d\tilde{u}/dt=0\) and so \(\tilde{u}(t)=\tilde{u}(0)\) independent of t.
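For the reader's convenience, here is a sketch of this computation. It assumes that (1.6) is the transport equation with force \(\mathbf{E}-(\mathbf{E}\cdot\tilde{\mathbf{j}}(t)/\tilde{u})\mathbf{v}\) and a collision term that does not change |v|. The streaming term \(\mathbf{v}\cdot\nabla_{\mathbf{q}}f\) integrates to zero over the torus, the collision term integrates to zero against \(|\mathbf{v}|^{2}\) because collisions preserve speed, and an integration by parts in v handles the force term:

$$ \frac{d\tilde{u}}{dt}=-\int|\mathbf{v}|^{2}\,\nabla_{\mathbf{v}}\cdot\Big[\Big(\mathbf{E}-\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}}{\tilde{u}}\,\mathbf{v}\Big)f\Big]\,d\mathbf{q}\,d\mathbf{v}=\int2\mathbf{v}\cdot\Big(\mathbf{E}-\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}}{\tilde{u}}\,\mathbf{v}\Big)f\,d\mathbf{q}\,d\mathbf{v}=2\,\mathbf{E}\cdot\tilde{\mathbf{j}}-2\,\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}}{\tilde{u}}\,\tilde{u}=0 . $$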

An important feature of the VBE (1.6) is that the current \(\tilde{\mathbf {j}}(t)\) given by (1.7) satisfies an autonomous equation:

Lemma 1.2

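Let f(q,v,t) be a solution of the VBE (1.6), and let the corresponding current \(\tilde{\mathbf {j}}(t)\) be defined by (1.7). Then \(\tilde{\mathbf {j}}(t)\) satisfies the equation

$$ \frac{d\tilde{\mathbf{j}}}{dt}=\mathbf{E}-\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}}{\tilde{u}}\,\tilde{\mathbf{j}}-\rho_{k}\,\tilde{\mathbf{j}}, \tag{1.8} $$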

where, for d≥2,

in which \(|S^{d-2}|\) denotes the area of \(S^{d-2}\) in \({\mathord {\mathbb{R}}}^{d-1}\). In particular, for d=2, \(S^{0}=\{-1,1\}\) and \(|S^{0}|=2\). For d=1, we have \(\rho_{k}=1\).

Proof

We treat the case d≥2. By the definition of \(\tilde{\mathbf {j}}(t)\) it suffices to show that

Since \(d\hat{\mathbf{n}}d\mathbf{v} = d\hat{\mathbf{n}}d\mathbf{v}'\), the left hand side of (1.10) equals

Note that

To evaluate this last integral, introduce coordinates in which v′=(0,…,0,|v′|), and parametrize \(S^{d-1}\) by \(\hat{\mathbf{n}}= (\boldsymbol{\omega}\sin\theta,\cos\theta)\), where \(\boldsymbol{\omega}\in S^{d-2}\) and θ∈[0,π]. Then \(d\hat{\mathbf{n}}= \sin^{d-2}\theta\, d\boldsymbol{\omega}\,d\theta \), and since \(\int_{S^{d-2}}\boldsymbol{\omega}\,d\boldsymbol{\omega} = 0\),

Combining calculations yields (1.10), and completes the proof. □

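The initial value problem for the current equation (1.8) is readily solved. Write \(\tilde{\mathbf {j}}= \tilde{\mathbf {j}}_{\Vert}+ \tilde{\mathbf {j}}_{\perp}\), where \(\tilde{\mathbf {j}}_{\Vert}= |\mathbf {E}|^{-2}(\tilde{\mathbf {j}}\cdot \mathbf {E})\mathbf {E}\) is the component of \(\tilde{\mathbf {j}}\) parallel to E. Then, defining \(y(t) := |\mathbf {E}|^{-1}(\tilde{\mathbf {j}}\cdot \mathbf {E})\), equation (1.8) splits into

$$ \frac{dy}{dt}=|\mathbf{E}|-\frac{|\mathbf{E}|}{\tilde{u}}\,y^{2}-\rho_{k}\,y,\qquad \frac{d\tilde{\mathbf{j}}_{\perp}}{dt}=-\Big(\frac{|\mathbf{E}|\,y}{\tilde{u}}+\rho_{k}\Big)\tilde{\mathbf{j}}_{\perp} . $$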

The equation for \(\tilde{\mathbf {j}}_{\perp}\) is easily solved once the equation for y is solved. Setting z=|E|y and writing the equation for z in the form z′=v(z), so that \(v(z) = |\mathbf {E}|^{2} - z^{2}/\tilde{u} - \rho_{k} z\), we see that v(z)=0 at

$$ z_{\pm}=\frac{-\rho_{k}\tilde{u}\pm\sqrt{\rho_{k}^{2}\tilde{u}^{2}+4|\mathbf{E}|^{2}\tilde{u}}}{2}, $$

and hence the stationary values of y are \(y_{\pm}=z_{\pm}/|\mathbf{E}|\).

Since \(\rho_{k}>0\), \(y_{-}< -\sqrt{\tilde{u}}\), while by the definitions of \(\tilde{\mathbf {j}}\) and y and the Schwarz inequality, \(y^{2}(0)\le\tilde{u}\). Hence the equation for y has a unique solution, and \(\lim_{t\to\infty}y(t)=y_{+}\). The equation can be solved by separating variables; we shall not need the explicit form here, though we use the uniqueness below.
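A quick numerical check of this picture in the rescaled variable z=|E|y, treating \(\rho_{k}\) as a given positive number (its value depends on k as in Lemma 1.2). The RK4 integration and the sample values of |E|, \(\tilde{u}\) and \(\rho_{k}\) are illustrative assumptions; the function names are ours.

```python
import math

def stationary_points(E, u, rho):
    """Zeros z_- < z_+ of v(z) = E^2 - z^2/u - rho*z (E = |E|, u = u~)."""
    disc = math.sqrt((rho * u) ** 2 + 4.0 * E * E * u)
    return (-rho * u - disc) / 2.0, (-rho * u + disc) / 2.0

def solve_z(z0, E, u, rho, t, steps=10000):
    """Integrate z' = E^2 - z^2/u - rho*z on [0, t] with classical RK4."""
    def v(z):
        return E * E - z * z / u - rho * z
    h = t / steps
    z = z0
    for _ in range(steps):
        k1 = v(z)
        k2 = v(z + h / 2 * k1)
        k3 = v(z + h / 2 * k2)
        k4 = v(z + h * k3)
        z += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return z

E, u, rho = 1.5, 2.0, 0.7                 # illustrative values, rho > 0
z_minus, z_plus = stationary_points(E, u, rho)
z0 = -0.99 * E * math.sqrt(u)             # |y(0)| < sqrt(u~) means |z(0)| < E sqrt(u~)
z_end = solve_z(z0, E, u, rho, 40.0)
```

Any admissible initial value lies strictly above \(z_{-}<-|\mathbf{E}|\sqrt{\tilde{u}}\), where v>0, so the solution is driven monotonically to \(z_{+}\), in agreement with the text.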

The fact that \(\tilde{\mathbf {j}}\) solves an autonomous equation that has a unique solution allows us to prove existence and uniqueness for the spatially homogeneous VBE (1.6); see the Appendix.

In the large N limit, the mean current of the particle model will satisfy this same equation as a consequence of POC: It follows from (1.3) that

where

Propagation of chaos then shows that, as expected, in the limit N→∞,

Our main result is contained in the following theorem. For its proof we will need to control the fourth moment of f(q,v,t). We thus set

We also remind the reader that a function φ on \(\mathbb{R}^{d}\) is 1-Lipschitz if for any \(\mathbf{x},\mathbf{y}\in\mathbb{R}^{d}\) we have |φ(x)−φ(y)|≤|x−y|.

Theorem 1.3

Let \(f_{0}(\mathbf{q},\mathbf{v})\) be a probability density on \(\mathbb{T}^{d}\times{\mathord {\mathbb{R}}}^{d}\) with

Let W(Q,V,t) be the solution of (1.3) with initial data \(W_{0}(\mathbf{Q},\mathbf{V})\) given by (1.5). Then for all 1-Lipschitz functions φ on \(({\mathord {\mathbb{R}}}^{d}\times\mathbb{T}^{d} )^{2}\) and all t>0,

where f(q,v,t) is the solution of (1.6) with initial data f 0(q,v).

Because the interaction between the particles does not depend at all on their locations, the main work in proving this theorem goes into estimates for treating the spatially homogeneous case. Once the spatially homogeneous version is proved, it will be easy to treat the general case.

We now begin the proof, starting with the introduction of an auxiliary process that propagates independence.

2 The B Process

By integrating (1.3) on the position variables Q we see that the marginal of W on the velocities

satisfies the autonomous equation

This implies that, if the initial data (1.5) is built from an \(f_{0}(\mathbf{v})\) that does not depend on q, the solution of (1.3) remains independent of Q and its velocity marginal satisfies (2.1). We will thus ignore the positions and study propagation of chaos for (2.1). Theorem 1.3 will be an easy corollary of the analysis in this section, as we show at the end of Sect. 4.

We start the system with an initial state

Given \(f_{0}(\mathbf{v})\), we can solve the spatially homogeneous version of (1.6) and find f(v,t); see the Appendix.

To compare the solution of the Boltzmann equation to the solution of the master equation (2.1) we introduce a new stochastic process. Consider a system of N particles with the following dynamics between collisions

where \(\tilde{\mathbf {j}}(t)\) is obtained from the solution of (1.8). The particles also undergo “virtual” collisions with the same distribution described after (1.1). It is easy to see that the master equation associated to this process is

where we have assumed that \(\widetilde{W}(\mathbf{V},0)\) is independent of Q. Clearly, under this dynamics each particle evolves independently so that if the initial condition for this system is of the form \(\widetilde{W}_{0}(\mathbf{V})\) of (2.2) then \(\widetilde{W}(\mathbf{V},t)\) will also have that form,

where f(v,t) solves the spatially homogeneous BE (1.6).

We shall call the system described by the dynamics (2.3) the “Boltzmann” or B-system and the original system described by the dynamics (1.1) the A-system. We will prove that, for fixed t and N→∞ every trajectory of the A-system with the dynamics (1.1) can be made close (in an appropriate sense) to that of the B-system with dynamics (2.3). It will then follow that, starting the B-system and A-system with the same product measure, the A-system pair correlation function will be close to that of the B-system, and since the B-dynamics propagates independence, the latter will be a product. In this way we shall see that (1.6) is satisfied.

2.1 Comparison of the A and B Processes

To compare the paths of the two processes, we first observe that a collision is specified by its time t, the index i of the particle that collides, and the unit vector \(\hat{\mathbf{n}}\) that specifies the post-collisional velocity. We call \(\omega=\{(t_{k},i_{k},\hat{\mathbf{n}}_{k})\}_{k=1}^{n}\), with \(s<t_{1}<t_{2}<\cdots<t_{n}<t\), a collision history in (s,t) with n collisions.
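A collision history ω can be sampled directly from this description. In the sketch below each particle carries an independent unit-rate Poisson clock, so the superposed clock has rate N and the colliding particle is uniform among the N; the kernel k is taken to be constant (\(\hat{\mathbf{n}}\) uniform on the sphere), a hypothetical choice made only for illustration. The function names are ours.

```python
import math
import random

def uniform_unit_vector(d, rng):
    """Uniform random point on the sphere S^{d-1} (normalized Gaussian vector)."""
    while True:
        g = [rng.gauss(0.0, 1.0) for _ in range(d)]
        r = math.sqrt(sum(x * x for x in g))
        if r > 0.0:
            return [x / r for x in g]

def sample_history(N, d, s, t, rng):
    """Sample omega = [(t_k, i_k, n_k), ...] on (s, t), ordered in time.

    Each particle has its own unit-rate Poisson clock, independent of its
    velocity, so the superposed clock has rate N and the colliding particle
    is uniform among the N.  n_k is uniform on S^{d-1}, i.e. k is taken
    constant (a hypothetical choice; the model allows any even k)."""
    history = []
    time = s
    while True:
        time += rng.expovariate(N)          # waiting time ~ Exp(rate N)
        if time >= t:
            return history
        history.append((time, rng.randrange(N), uniform_unit_vector(d, rng)))

rng = random.Random(0)
omega = sample_history(10, 3, 0.0, 5.0, rng)   # N = 10 particles, d = 3, on (0, 5)
```

On average such a history contains N(t−s) collisions, here 50.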

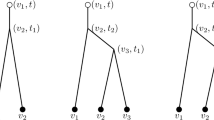

Let \(\varPsi_{t-s}(\mathbf{V}_{s})\) be the flow generated by the autonomous dynamics (1.1) and \(\varPhi_{s,t}(\mathbf{V}_{s})\) the flow generated by the non-autonomous dynamics (2.3), both starting at \(\mathbf{V}_{s}\in {\mathord {\mathbb{R}}}^{dN}\) at time s. Given a collision history ω in (s,t) with n collisions, the A-process starting from \(\mathbf{V}_{s}\) at time s has the path

while the B-process has the path

where \(R_{n}\), respectively \(\widetilde{R}_{n}\), is the update of the velocity \(\mathbf{v}_{i_{n}}\) of the \(i_{n}\)-th particle generated by the n-th collision in the A (resp. B) dynamics. To simplify notation, here and in what follows we write \(\mathbf{V}_{s}=\mathbf{V}(s,\mathbf{V},\omega)\) and \(\widetilde{\mathbf{V}}_{s}=\widetilde{\mathbf{V}}(s,\mathbf{V},\omega)\) for the A and B processes.

We want to compare the trajectories of the two processes. We first observe that, given two incoming velocities v and w and a collision vector \(\hat{\mathbf{n}}\) for v we can always find a corresponding collision vector \(\hat{\mathbf{m}}\) for w such that:

- The probability of selecting \(\hat{\mathbf{n}}\) for an incoming velocity v is equal to the probability of selecting \(\hat{\mathbf{m}}\) for an incoming velocity w. By Remark 1.1, this is equivalent to having

  $$ \hat{\mathbf{v}}\cdot\hat{\mathbf{v}}'=\hat{\mathbf{w}}\cdot\hat{\mathbf{w}}' . \tag{2.8} $$

- The distance between the two outgoing velocities is equal to the distance between the incoming velocities, i.e. |w′−v′|=|w−v|. Since |v|=|v′| and |w|=|w′|, this is equivalent to

  $$ \hat{\mathbf{v}}\cdot\hat{\mathbf{w}}=\hat{\mathbf{v}}'\cdot\hat{\mathbf{w}}' . \tag{2.9} $$

Our claim amounts to:

Proposition 2.1

Given any three unit vectors \(\hat {\mathbf{v}}\), \(\hat {\mathbf{v}}'\) and \(\hat{\mathbf{w}}\), we can find a fourth unit vector \(\hat{\mathbf{w}}'\) such that both (2.8) and (2.9) are satisfied.

Proof

For d=1, a solution is given by \(\hat{\mathbf{w}}' =(\hat{\mathbf{v}}\cdot \hat{\mathbf{v}}')\hat{\mathbf{w}}\), i.e. \(\hat{\mathbf{w}}'=-\hat{\mathbf{w}}\). For d=2, let R be any planar rotation such that \(\hat {\mathbf{v}}' = R\hat {\mathbf{v}}\), and define \(\hat{\mathbf{w}}' = R\hat{\mathbf{w}}\). Then (2.8) and (2.9) reduce to \(\hat {\mathbf{v}}\cdot R \hat {\mathbf{v}} = \hat{\mathbf{w}}\cdot R \hat{\mathbf{w}}\) and \(\hat {\mathbf{v}}\cdot \hat{\mathbf{w}}= R\hat {\mathbf{v}}\cdot R\hat{\mathbf{w}}\), so that \(\hat{\mathbf{w}}' = R\hat{\mathbf{w}}\) is a solution.

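For d≥3, we reduce to the planar case as follows. Let P be the orthogonal projection onto the plane spanned by \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\). Since \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\) lie in this plane, \(\hat{\mathbf{w}}'\) is a solution of (2.8) and (2.9) if and only if

$$ P\hat{\mathbf{v}}\cdot\hat{\mathbf{v}}'=\hat{\mathbf{w}}\cdot P\hat{\mathbf{w}}' \qquad\text{and}\qquad P\hat{\mathbf{v}}\cdot\hat{\mathbf{w}}=\hat{\mathbf{v}}'\cdot P\hat{\mathbf{w}}' . \tag{2.10} $$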

Let us seek a solution with \(|P\hat{\mathbf{w}}'| = |P\hat{\mathbf{v}}|\). If \(|P\hat{\mathbf{v}}| =0\), we simply choose \(\hat{\mathbf{w}}'\) so that \(P\hat{\mathbf{w}}' =0\). Otherwise, dividing both equations in (2.10) by \(|P\hat{\mathbf{w}}'| = |P\hat{\mathbf{v}}|\) reduces the problem to the planar case: if R is a rotation in the plane spanned by \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\) such that \(R\big(P\hat{\mathbf{v}}/|P\hat{\mathbf{v}}|\big) = \hat{\mathbf{v}}'\), we define \(P\hat{\mathbf{w}}' = |P\hat {\mathbf{v}}|R\hat{\mathbf{w}}\), and finally

$$ \hat{\mathbf{w}}'=P\hat{\mathbf{w}}'+\sqrt{1-|P\hat{\mathbf{v}}|^{2}}\;\hat{\mathbf{z}}, $$

where \(\hat{\mathbf{z}}\) is any unit vector orthogonal to \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\). □
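The construction in this proof can be turned into a short routine. The sketch below (function names hypothetical) builds an orthonormal basis of the plane spanned by \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\), rotates inside that plane, and adds the component along a unit vector \(\hat{\mathbf{z}}\) orthogonal to it. It assumes d≥2 and that \(\hat{\mathbf{v}}'\) and \(\hat{\mathbf{w}}\) are not parallel; `residuals` reports how well (2.8), (2.9) and \(|\hat{\mathbf{w}}'|=1\) hold.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def axpy(alpha, x, y):
    """Return alpha*x + y."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]

def normalize(x):
    r = math.sqrt(dot(x, x))
    return [xi / r for xi in x]

def matching_w_prime(v, vp, w, tol=1e-12):
    """Unit vector wp with v.vp == w.wp (2.8) and v.w == vp.wp (2.9),
    following the projection argument of Proposition 2.1."""
    d = len(v)
    e1 = list(vp)                                   # orthonormal basis (e1, e2)
    w_perp = axpy(-dot(w, e1), e1, w)               # of the plane spanned by vp, w
    if dot(w_perp, w_perp) < tol:
        raise ValueError("vp and w are parallel; not handled in this sketch")
    e2 = normalize(w_perp)
    a1, a2 = dot(v, e1), dot(v, e2)                 # plane coordinates of P v
    r = math.hypot(a1, a2)                          # |P v|
    w1, w2 = dot(w, e1), dot(w, e2)                 # plane coordinates of w
    p1 = p2 = 0.0
    if r > tol:
        theta = -math.atan2(a2, a1)                 # rotation R with R(Pv/|Pv|) = vp
        c, s = math.cos(theta), math.sin(theta)
        p1, p2 = r * (c * w1 - s * w2), r * (s * w1 + c * w2)   # P wp = |Pv| R w
    wp = axpy(p2, e2, [p1 * x for x in e1])
    h = math.sqrt(max(0.0, 1.0 - r * r))
    if h > tol:
        # unit z orthogonal to the plane: Gram-Schmidt on standard basis vectors
        for k in range(d):
            z = [1.0 if i == k else 0.0 for i in range(d)]
            z = axpy(-dot(z, e2), e2, axpy(-dot(z, e1), e1, z))
            if dot(z, z) > 1e-6:
                wp = axpy(h, normalize(z), wp)
                break
    return wp

def residuals(v, vp, w):
    """Largest violation of (2.8), (2.9) and |wp| = 1 for the constructed wp."""
    wp = matching_w_prime(v, vp, w)
    return max(abs(dot(v, vp) - dot(w, wp)),
               abs(dot(v, w) - dot(vp, wp)),
               abs(dot(wp, wp) - 1.0))
```

Given speeds |v| and |w|, the matching outgoing velocity for the B-particle is then \(|\mathbf{w}|\hat{\mathbf{w}}'\), and by (2.9) its distance to \(|\mathbf{v}|\hat{\mathbf{v}}'\) equals |w−v|.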

From Proposition 2.1 it follows that to every collision history ω for the A process we can associate a collision history p(ω) for the B process such that for every collision time t k we have that

where \(\mathbf{V}(t_{k}^{-},\mathbf{V}_{0},\omega)\), resp. \(\mathbf{V}(t_{k}^{+},\mathbf{V}_{0},\omega)\), is the path of the A-process just before, resp. just after, the k-th collision, and similarly for the B-process, and given a vector \(\mathbf{V}\in {\mathord {\mathbb{R}}}^{dN}\) we set

This means that we can see the A and B processes as taking place on the same probability sample space defined by the initial distribution and the collision histories ω. We will call \(\mathbb{P}\) the probability measure on this space.

The result of this comparison is summarized in the following:

Theorem 2.2

(Pathwise Comparison of the Two Processes)

Let W 0(V) satisfy (2.2) with f 0(v) satisfying (1.12). Then for all ϵ>0,

where E=|E| and Δ>0 is defined in (3.5) below.

3 Proof of Theorem 2.2

To prove this theorem we need to find an expression for \(\mathbf{V}(t,\mathbf{V}_{0},\omega)-\widetilde{\mathbf{V}}(t,\mathbf{V}_{0},\omega)\). First observe that between collisions we have

Differentiating the above expression with respect to s, and calling \(D\varPsi_{t}\) the differential of the flow \(\varPsi_{t}\), i.e.

we get

If the two flows start from different initial conditions, we get

where \(\sigma \mathbf{V}+(1-\sigma)\widetilde{\mathbf{V}}\), σ∈[0,1], parametrizes the segment from V to \(\widetilde{\mathbf{V}}\).

The main idea of the proof is to iterate (3.2) collision after collision, using the fact that the distance between the A and B trajectories is preserved by the collisions.

To control (3.2) we need first to show that

can be bounded uniformly in N. We obtain such an estimate in Sect. 3.3, but it depends on \(u(\mathbf{V})^{-\frac{1}{2}}\). Since the B-process does not preserve the total energy of the particles, we will need to show that \(u(\widetilde{\mathbf{V}}_{s})^{\frac{1}{2}}\) is bounded away from 0 with probability close to 1, uniformly in N. This is the content of the technical lemma in Sect. 3.1.

Having bounded the differential of Ψ t we will need to show that

is small uniformly in N. This is the content of Sect. 3.2, and it is based on the Law of Large Numbers.

Thus the distance between the corresponding paths of the two processes at time t can be estimated in terms of the norm (3.3) of the differential of \(\varPsi_{t}\), the integral (3.4), and the distance just after the last collision at \(t^{+}_{n}\). Using (2.11), we can reduce the problem to an estimate of the distance before the last collision. Finally, we obtain a full estimate by iterating this procedure over all the collisions.

3.1 A Technical Lemma

While the propagation of independence is an important advantage of the master equation (2.4), one disadvantage is that, while the A-evolution (1.1) conserves the energy u(V), the B-evolution does not: it conserves it only on average.

In what follows we will need a bound on \(\sup_{0 \leq s \leq t} (u(\widetilde{\mathbf{V}}(s)) )^{-1/2}\) showing that the event that this quantity is large has a small probability.

Lemma 3.1

Let \(\widetilde{\mathbf{V}}_{t}\) be the B process with initial data given by (2.2) with f 0(v) satisfying (1.12). Define

with \(\tilde{u}\) given by (1.12) and for any given t>0 let m(t) denote the least integer m such that mΔ≥t. Then

Proof

Observe that the collisions do not affect u. Thus \(u(\widetilde{\mathbf{V}}(t))\) is path-wise differentiable, and

By the Schwarz inequality, \(|\mathbf {j}(\widetilde{\mathbf{V}})| \leq (u(\widetilde{\mathbf{V}}) )^{1/2}\) and \(|\tilde{\mathbf {j}}(t)| \leq \sqrt{\tilde{u}}\). Therefore,

The differential equation

is solved by

Let \(t_{1}\) denote the time at which \(x(t_{1})=2x_{0}\):

Let us choose \(x_{0} = \sqrt{2/\tilde{u}}\). Then

Note that the length of the interval \([t_{0},t_{1}]\) is independent of \(t_{0}\).

It now follows from (3.7) and what we have proven about the differential equation (3.8) that if \(1/\sqrt{u(\widetilde{\mathbf{V}}_{t_{0}})}\leq \sqrt{2/\tilde{u}}\), then \(1/\sqrt{u(\widetilde{\mathbf{V}}_{t})} \leq 2\sqrt{2/\tilde{u}}\) for all t in the interval \([t_{0},t_{1}]\). Then, provided that

we have

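Moreover, for any given \(t_{0}\), there is only a small (for large N) probability that \(1/\sqrt{u(\widetilde{\mathbf{V}}_{t_{0}})} \geq \sqrt{2/\tilde{u}}\). Indeed, since \(\mathbb{E} [ u(\widetilde{\mathbf{V}}_{t_{0}}) -\tilde{u} ] ^{2}= a(t_{0})/N\), Chebyshev's inequality gives

$$ \mathbb{P}\Big(1/\sqrt{u(\widetilde{\mathbf{V}}_{t_{0}})}\geq\sqrt{2/\tilde{u}}\Big)=\mathbb{P}\big(u(\widetilde{\mathbf{V}}_{t_{0}})\leq\tilde{u}/2\big)\leq\mathbb{P}\big(|u(\widetilde{\mathbf{V}}_{t_{0}})-\tilde{u}|\geq\tilde{u}/2\big)\leq\frac{4a(t_{0})}{N\tilde{u}^{2}} . $$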

It follows that

Combining this estimate with (3.10) and (3.11) from the following Lemma leads to (3.6). □
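One way to make the differential inequality in this proof explicit is sketched below. It assumes the B-dynamics (2.3) reads \(d\tilde{\mathbf{v}}_{i}/dt=\mathbf{E}-(\mathbf{E}\cdot\tilde{\mathbf{j}}(t)/\tilde{u})\,\tilde{\mathbf{v}}_{i}\), that is, (1.1) with \(\mathbf{j}/u\) replaced by \(\tilde{\mathbf{j}}(t)/\tilde{u}\); the constants are those of this sketch and need not coincide with those in (3.5)-(3.8). Writing \(u=u(\widetilde{\mathbf{V}}_{t})\) and \(E=|\mathbf{E}|\),

$$ \frac{du}{dt}=\frac{2}{N}\sum_{i=1}^{N}\tilde{\mathbf{v}}_{i}\cdot\Big(\mathbf{E}-\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}(t)}{\tilde{u}}\,\tilde{\mathbf{v}}_{i}\Big)=2\,\mathbf{E}\cdot\mathbf{j}(\widetilde{\mathbf{V}}_{t})-2\,\frac{\mathbf{E}\cdot\tilde{\mathbf{j}}(t)}{\tilde{u}}\,u,\qquad\text{so}\qquad\Big|\frac{du}{dt}\Big|\leq2E\,u^{1/2}+\frac{2E}{\sqrt{\tilde{u}}}\,u . $$

For \(x=u^{-1/2}\) this gives \(dx/dt=-\tfrac12u^{-3/2}\,du/dt\leq ax^{2}+bx\) with a=E and \(b=E/\sqrt{\tilde{u}}\). The comparison equation \(x'=ax^{2}+bx\) is of Bernoulli type (1/x solves the linear equation \((1/x)'=-a-b/x\)), and a solution started at \(x_{0}\) reaches \(2x_{0}\) after the time

$$ \tau=\frac{1}{b}\,\ln\frac{2(b+ax_{0})}{b+2ax_{0}}, $$

which does not depend on the starting time; for \(x_{0}=\sqrt{2/\tilde{u}}\) it equals \((\sqrt{\tilde{u}}/E)\ln\big(2(1+\sqrt{2})/(1+2\sqrt{2})\big)\).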

Lemma 3.2

Let \(f_{0}(\mathbf{v})\) be a probability density on \({\mathord {\mathbb{R}}}^{d}\) satisfying (1.12). Then for all t>0,

Proof

Using (1.6), we compute

By the Schwarz inequality, \(|\tilde{\mathbf {j}}(t)| \leq \sqrt{\tilde{u}}\) and

so that

□

3.2 Estimate on (3.4)

The reason the quantity in (3.4) will be small for large N with high probability is that the B process propagates independence, so that \(\widetilde{\mathbf{V}}_{s}\) has independent components. Thus, the Law of Large Numbers says that with high probability, \(\mathbf {j}(\widetilde{\mathbf{V}}_{s})\) is very close to \(\tilde{\mathbf {j}}(s)\) and thus \(\mathbf {F}(\widetilde{\mathbf{V}}_{s}) \) will be very close to \(\widetilde{\mathbf {F}}(\widetilde{\mathbf{V}}_{s})\). The following proposition makes this precise.

Proposition 3.3

(Closeness of the Two Forces for Large N)

Let f 0(v) be a probability density on \({\mathord {\mathbb{R}}}^{d}\) satisfying (1.12). Then,

where \(\mathbb{E}\) is the expectation with respect to \(\mathbb{P}\).

Proof

Clearly

so that, by the Schwarz inequality and the triangle inequality, we get

Taking the expectation and using the Schwarz inequality again

We recall that for the B-process, the N-particle distribution at time t is \(\widetilde{W}(\mathbf{V},t)=\prod_{j=1}^{N} f(\mathbf{v}_{j},t)\). Thus the expectation of \(\mathbf {j}(\widetilde{\mathbf{V}}) - \tilde{\mathbf {j}}= \frac{1}{N}\sum_{j=1}^{N} (\tilde{\mathbf{v}}_{j,s} - \tilde{\mathbf {j}}(s))\) is given by

Likewise,

where the last inequality comes from Lemma 3.2. Altogether, we have

□
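For independent velocities with common law f, the identity behind the first step is \(\mathbb{E}|\mathbf{j}(\widetilde{\mathbf{V}})-\tilde{\mathbf{j}}|^{2}=(\tilde{u}-|\tilde{\mathbf{j}}|^{2})/N\). This Monte Carlo sketch checks the 1/N scaling, with f taken, for illustration only, to be a Gaussian with mean m and identity covariance in d=2, so that \(\tilde{\mathbf{j}}=m\) and \(\tilde{u}-|\tilde{\mathbf{j}}|^{2}=d\). The function name is ours.

```python
import random

def mean_sq_current_error(N, m, trials, rng):
    """Monte Carlo estimate of E|j(V) - j~|^2 when the N velocities are i.i.d.
    Gaussian with mean m and identity covariance (so j~ = m)."""
    d = len(m)
    total = 0.0
    for _ in range(trials):
        j = [0.0] * d
        for _ in range(N):
            for k in range(d):
                j[k] += (m[k] + rng.gauss(0.0, 1.0)) / N
        total += sum((j[k] - m[k]) ** 2 for k in range(d))
    return total / trials

rng = random.Random(0)
N, m = 50, [0.8, -0.3]
estimate = mean_sq_current_error(N, m, 2000, rng)
prediction = len(m) / N        # (u~ - |j~|^2) / N with u~ = |m|^2 + d
```

The same computation with \(|\tilde{\mathbf{v}}|^{2}\) in place of \(\tilde{\mathbf{v}}\) is where a fourth moment of f, as controlled by Lemma 3.2, enters.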

3.3 The Lyapunov Exponent

The next proposition gives an estimate on \(\Vert D\varPsi_{t}(\widetilde{\mathbf{V}})\Vert \), defined in (3.3), in terms of u(V).

Proposition 3.4

Given \(\mathbf{V}\in \mathbb{R}^{Nd}\) we have:

where

Proof

Clearly,

with initial condition \(D\varPsi_{0}(\mathbf{V})= \operatorname{Id}\). Given a vector \(\mathbf{W}\in {\mathord {\mathbb{R}}}^{Nd}\) we get

We thus get

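where \(\Vert D\mathbf{F}(\varPsi_{t}(\mathbf{V}))\Vert=\sup_{\Vert\mathbf{W}\Vert=1}|(D\mathbf{F}(\varPsi_{t}(\mathbf{V}))\mathbf{W}\cdot\mathbf{W})|\) with \(\Vert \mathbf{W}\Vert =\sqrt{(\mathbf{W}\cdot \mathbf{W})}\). We have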

so that

Clearly we have

Finally, from \(|\mathbf {j}(\mathbf{V})|\leq \sqrt{u(\mathbf{V})}\) and the above estimates we get

Since the A-dynamics preserves u(V), this differential inequality may be integrated to obtain the stated bound. □
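For small N one can look at \(\Vert D\varPsi_{t}\Vert\) numerically. The sketch below assumes the velocity field of (1.1) in the form \(\mathbf{E}-(\mathbf{E}\cdot\mathbf{j}/u)\mathbf{v}_{i}\), approximates \(\varPsi_{t}\) with RK4, differentiates it by central finite differences, and computes the operator norm by power iteration; all of these numerical choices and the function names are ours. The Euclidean operator norm computed here is unchanged if the norm on \({\mathord {\mathbb{R}}}^{Nd}\) is rescaled by a constant factor.

```python
import math

def field(x, E, N):
    """Velocity field of (1.1) on a flattened V = (v_1, ..., v_N) in R^{Nd}."""
    d = len(E)
    j = [sum(x[i * d + k] for i in range(N)) / N for k in range(d)]
    u = sum(xi * xi for xi in x) / N
    a = sum(E[k] * j[k] for k in range(d)) / u
    return [E[i % d] - a * x[i] for i in range(N * d)]

def flow(x, E, N, t, steps=400):
    """Approximate Psi_t(x) with classical RK4 (no collisions)."""
    h = t / steps
    for _ in range(steps):
        k1 = field(x, E, N)
        k2 = field([a + h / 2 * b for a, b in zip(x, k1)], E, N)
        k3 = field([a + h / 2 * b for a, b in zip(x, k2)], E, N)
        k4 = field([a + h * b for a, b in zip(x, k3)], E, N)
        x = [a + h / 6 * (p + 2 * q + 2 * r + s)
             for a, p, q, r, s in zip(x, k1, k2, k3, k4)]
    return x

def jacobian(x, E, N, t, eps=1e-6):
    """Central finite-difference D Psi_t(x); J[r][c] = d(Psi_t)_r / dx_c."""
    n = len(x)
    cols = []
    for c in range(n):
        xp, xm = list(x), list(x)
        xp[c] += eps
        xm[c] -= eps
        fp, fm = flow(xp, E, N, t), flow(xm, E, N, t)
        cols.append([(a - b) / (2 * eps) for a, b in zip(fp, fm)])
    return [[cols[c][r] for c in range(n)] for r in range(n)]

def operator_norm(J, iters=200):
    """Largest singular value of the square matrix J (power iteration on J^T J)."""
    n = len(J)
    w = [1.0] * n
    for _ in range(iters):
        Jw = [sum(J[r][c] * w[c] for c in range(n)) for r in range(n)]
        w = [sum(J[r][c] * Jw[r] for r in range(n)) for c in range(n)]
        norm_w = math.sqrt(sum(a * a for a in w))
        w = [a / norm_w for a in w]
    Jw = [sum(J[r][c] * w[c] for c in range(n)) for r in range(n)]
    return math.sqrt(sum(a * a for a in Jw))

V0 = [1.0, 0.0, 0.0, -1.5, 0.5, 0.5]                          # N = 3, d = 2, flattened
norm_free = operator_norm(jacobian(V0, [0.0, 0.0], 3, 2.0))    # E = 0: Psi_t = Id
norm_field = operator_norm(jacobian(V0, [1.0, 0.0], 3, 2.0))   # E = (1, 0)
```

For E=0 the flow is the identity and the computed norm is 1, which serves as a sanity check; for E≠0 the value can be compared with the bound of Proposition 3.4.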

3.4 Proof of Theorem 2.2

We can now conclude the proof of Theorem 2.2. Given a set \(T=\{t_{1},\ldots,t_{n}\}\) of n collision times \(0<t_{1}<\cdots<t_{n}<t\), let \(\varOmega_{T}\) be the event that there are exactly n collisions in (0,t) and that they take place at the times \(t_{k}\), k=1,…,n. We now estimate the growth of \(\Vert \mathbf{V}(t,\mathbf{V}_{0},\omega)-\widetilde{\mathbf{V}}(t,\mathbf{V}_{0},\omega)\Vert _{N}\) along each sample path ω. We will “peel off” the collisions, one at a time.

First we get

where \(\mathbf{V}(t_{n}^{-} ,\mathbf{V}_{0},\omega)\) denotes the configuration just before the n-th collision, and likewise for \(\widetilde{\mathbf{V}}\), and \(R_{n}\) and \(\widetilde{R}_{n}\) are the corresponding updates of the velocities at the n-th collision. We deal with the last term first. Since there are no collisions between \(t_{n}\) and t, and since the configurations coincide at \(t_{n}\), Proposition 3.4 gives

Now connect \(R_{n}(\mathbf{V}(t_{n}^{-},\mathbf{V}_{0},\omega))\) and \(\widetilde{R}_{n}(\widetilde{\mathbf{V}}(t_{n}^{-},\mathbf{V}_{0},\omega))\) by a straight line segment. Again by Proposition 3.4, we get

where \(\lambda_{n}\) is the maximum of \(4/\sqrt{u(\mathbf{V})}\) along the line segment joining \(R_{n}(\mathbf{V}(t_{n}^{-},\mathbf{V}_{0},\omega))\) and \(\widetilde{R}_{n}(\widetilde{\mathbf{V}}(t_{n}^{-},\mathbf{V}_{0},\omega))\), and where we have used the distance-preserving property of \(R_{n}\) and \(\widetilde{R}_{n}\). Altogether, we have

Applying the same procedure to estimate \(\Vert \mathbf{V}(t_{n}^{-} ,\mathbf{V}_{0},\omega)- \widetilde{\mathbf{V}}(t_{n}^{-} ,\mathbf{V}_{0},\omega)\Vert _{N}\) in terms of \(\Vert \mathbf{V}(t_{n-1}^{-} ,\mathbf{V}_{0},\omega)- \widetilde{\mathbf{V}}(t_{n-1}^{-} ,\mathbf{V}_{0},\omega)\Vert _{N}\), and so forth, we obtain the estimate

Our next task is to control \(\max_{1\le m\le n}\lambda_{m}\). Let \({\mathord {\mathcal{A}}}\) be the event that

Since

we get

Therefore, for any \(0<\delta < \sqrt{\widetilde{u}/\sqrt{32}}\), if \(u(\widetilde{\mathbf{V}}) > \widetilde{u}/8\) and \(\widetilde{\mathbf{V}}\) is in the ball centered at V with radius δ in the ∥⋅∥ N norm, we have

We can now define

and note that \(\delta_{0}=0\). The above estimates show that

Let \(m_{\star}\) be the least m such that \(\lambda_{m}>K\), if such an m exists with \(t_{m}<t\). We shall now show that this cannot happen in \({\mathord {\mathcal{A}}}\cap {\mathord {\mathcal{B}}}\), where \({\mathord {\mathcal{B}}}\) is the event

Rewriting the estimate (3.15) for m ⋆ we get

so that, if \(t_{m_{\star}} < t\), we have, by (3.16), \(\lambda_{m_{\star}} < K\). Thus no such \(m_{\star}\) exists.

Let now \({\mathord {\mathcal{B}}}'\) be the event

with ϵ sufficiently small, depending on t and \(\widetilde{u}\), so that \({\mathord {\mathcal{B}}}'\subset {\mathord {\mathcal{B}}}\). On \({\mathord {\mathcal{A}}}\cap {\mathord {\mathcal{B}}}\) we have that

Moreover we have

Finally, Theorem 2.2 follows from Lemma 3.1 and Proposition 3.3, observing that \(\mathbb{P}(({\mathord {\mathcal{A}}}\cap {\mathord {\mathcal{B}}}')^{c})\leq \mathbb{P}({\mathord {\mathcal{A}}}^{c})+\mathbb{P}({\mathord {\mathcal{B}}}'^{c})\).

4 Propagation of Chaos

For each fixed N and t>0, we introduce the two empirical distributions

where \(\delta_{\mathbf{v}_{j}}=\delta(\mathbf{v}-\mathbf{v}_{j})\). By the Law of Large Numbers, we have almost surely

in distribution. We now use this to complete the proof of Theorem 1.3 in the spatially homogeneous case.

By the permutation symmetry that follows from (1.5),

and moreover,

Next, for every fixed \({\bf x}\),

Therefore,

Choosing \(\epsilon=N^{-1/4}\), we see that

where \(\psi({\bf x})\) is defined by

Since φ is 1-Lipschitz on \({\mathord {\mathbb{R}}}^{2d}\), \(\psi({\bf x})\) is 1-Lipschitz on \({\mathord {\mathbb{R}}}^{d}\). That is, for all \(\mathbf{v},\mathbf{w}\in {\mathord {\mathbb{R}}}^{d}\),

Moreover, \(\Vert\psi\Vert_{\infty}\leq\Vert\varphi\Vert_{\infty}\).

Then, arguing just as above,

and

Therefore,

Again choosing \(\epsilon=N^{-1/4}\), we see that

Altogether, we now have

But by the Law of Large Numbers,

This shows how our estimates give us propagation of chaos in the spatially homogeneous case. To prove Theorem 1.3, we need only explain how to use these same estimates to treat the spatial dependence.
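The Law of Large Numbers step used here can be illustrated with a toy case: for an i.i.d. sample from a density f, the average of a 1-Lipschitz φ over pairs of distinct sample points approaches \(\iint\varphi\,f\otimes f\). In this sketch f is taken, purely for illustration, to be the uniform density on [0,1] in d=1, and φ(v,w)=|v−w|, for which the double integral equals 1/3. The function name is ours.

```python
import random

def pair_average(sample, phi):
    """Average of phi(v_i, v_j) over ordered pairs i != j of the sample."""
    N = len(sample)
    total = 0.0
    for i in range(N):
        for j in range(i + 1, N):
            total += phi(sample[i], sample[j]) + phi(sample[j], sample[i])
    return total / (N * (N - 1))

rng = random.Random(0)
sample = [rng.random() for _ in range(1000)]      # i.i.d. uniform on [0, 1], d = 1
estimate = pair_average(sample, lambda v, w: abs(v - w))
exact = 1.0 / 3.0     # double integral of |v - w| over the unit square
```

The fluctuations of this pair average are of order \(N^{-1/2}\), the same rate that appears in the estimates above.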

Proof of Theorem 1.3

It remains to take into account the spatial dependence. Note that

It suffices to show that for each ϵ>0,

goes to zero as N goes to infinity, since in this case we may repeat the argument we have just made, but with test functions φ on phase space.

If we knew that \(\mathbf{V}(s,\mathbf{V}_{0},\omega)\) is close to \(\widetilde{\mathbf{V}}(s,\mathbf{V}_{0},\omega)\) for all s along most paths, we could conclude that \(\mathbf{Q}(t,\mathbf{Q}_{0},\mathbf{V}_{0},\omega)\) is close to \(\widetilde{\mathbf{Q}}(t,\mathbf{Q}_{0},\mathbf{V}_{0},\omega)\). However, in Theorem 2.2 we exclude a set of small probability that could depend on t.

Hence one has to prove the phase space version of Theorem 2.2, which is easily done: The vector fields to be treated in the phase space analog of Proposition 3.3 are now \((\widetilde{\mathbf{V}},\mathbf {F})\) and \((\widetilde{\mathbf{V}},\widetilde{\mathbf {F}})\). But the position components are the same, and cancel identically, so the phase space analog of Proposition 3.3 can be proved in exactly the same way, only with a more elaborate notation.

Next, we may obtain an estimate for the Lyapunov exponent of the phase space flow from the one we obtained in Proposition 3.4. To see this, note that since the phase space flow \(\Xi_{t}\) is given by

the Jacobian of \(\Xi_{t}\) has the form

The crucial point here is that we have control on \(\|D\Psi_{s}\|\) uniformly in s for all but a negligible set of paths. Thus we obtain control on \(\|D\Xi_{t}\|\) uniformly in t, except on a negligible set of paths.
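To make this step explicit, assume as a sketch that the phase space flow has the form \(\Xi_{t}(\mathbf{Q}_{0},\mathbf{V}_{0})=\bigl(\mathbf{Q}_{0}+\int_{0}^{t}\Psi_{s}(\mathbf{V}_{0})\,ds,\ \Psi_{t}(\mathbf{V}_{0})\bigr)\), where the velocity flow \(\Psi_{s}\) does not depend on positions (this form is our assumption, suggested by the free motion \(\dot{\mathbf{q}}_{i}=\mathbf{v}_{i}\)). The Jacobian is then block upper triangular, and splitting it into its block-diagonal and off-diagonal parts gives, in operator norm,

\[
D\Xi_{t}(\mathbf{Q}_{0},\mathbf{V}_{0})=\begin{pmatrix} I & \int_{0}^{t} D\Psi_{s}(\mathbf{V}_{0})\,ds\\ 0 & D\Psi_{t}(\mathbf{V}_{0})\end{pmatrix},
\qquad
\|D\Xi_{t}\|\leq \max\bigl(1,\|D\Psi_{t}\|\bigr)+t\sup_{0\leq s\leq t}\|D\Psi_{s}\| .
\]

Under this assumption, a bound on \(\|D\Psi_{s}\|\) that is uniform in s yields a bound on \(\|D\Xi_{t}\|\) that grows at most linearly in t.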

From here, the proof of Theorem 2.2 may be adapted to the phase space setting using these two estimates exactly as before, with only a more elaborate notation. □

Remark 4.1

In Theorem 1.3, we have proved that if the initial distribution is a product distribution, then at later times t we have a chaotic distribution. This is somewhat less than propagation of chaos, though it is all that is needed to validate our Boltzmann equation.

One can, however, adapt the proof to work with a chaotic sequence of initial distributions. We have used the product nature of the initial distribution to invoke the law of large numbers in two places: once in the proof of Proposition 3.3, to bound \(\Vert \widetilde{\mathbf {F}}- \mathbf {F}\Vert \), and again in the argument leading to (4.1). However, the estimates we made required only pairwise independence of the velocities. Since this holds approximately for large N for a chaotic sequence of distributions, one can, by accounting for the small error term, carry out the same proof assuming only a chaotic sequence of initial distributions.
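The observation that only pairwise independence was needed reflects the second-moment (Chebyshev) form of the Law of Large Numbers: for mean-zero, unit-variance variables that are merely pairwise uncorrelated, the variance of the sample mean is still exactly 1/n. The Python sketch below checks this by exact enumeration on a classical example of our own choosing (parities of subsets of independent fair bits), which is pairwise independent but not mutually independent. It is an illustration only, not the paper's estimate.

```python
import itertools
from fractions import Fraction


def parity_family(k):
    """Enumerate the 2**k equally likely outcomes of k fair bits.

    For each outcome, return the values of X_S = (-1)**(sum of bits in S)
    for every nonempty subset S of {0, ..., k-1}.  These 2**k - 1 variables
    are pairwise independent but not mutually independent.
    """
    subsets = [s for r in range(1, k + 1)
               for s in itertools.combinations(range(k), r)]
    outcomes = [[(-1) ** sum(bits[i] for i in s) for s in subsets]
                for bits in itertools.product((0, 1), repeat=k)]
    return subsets, outcomes


def variance_of_mean(outcomes):
    """Exact variance of the sample mean (every X_S has mean 0)."""
    n = len(outcomes[0])
    return sum(Fraction(sum(x), n) ** 2 for x in outcomes) / len(outcomes)


def pairwise_uncorrelated(outcomes):
    """Check E[X_S X_T] = 0 for every pair S != T."""
    n = len(outcomes[0])
    return all(sum(x[i] * x[j] for x in outcomes) == 0
               for i in range(n) for j in range(i + 1, n))


subsets, outcomes = parity_family(3)
n = len(subsets)
print(pairwise_uncorrelated(outcomes))              # True
print(variance_of_mean(outcomes), Fraction(1, n))   # 1/7 1/7
# Not mutually independent: X_{0} * X_{1} * X_{0,1} == 1 on every outcome.
a, b, c = subsets.index((0,)), subsets.index((1,)), subsets.index((0, 1))
print(all(x[a] * x[b] * x[c] == 1 for x in outcomes))  # True
```

Chebyshev's inequality then gives the same \(O(1/(n\epsilon^{2}))\) tail bound for the sample mean as in the fully independent case.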

References

Ashcroft, N.W., Mermin, N.D.: Solid State Physics. Brooks Cole, Pacific Grove (1983)

Bastea, S., Esposito, R., Lebowitz, J.L., Marra, R.: Binary fluids with long range segregating interaction I: Derivation of kinetic and hydrodynamic equations. J. Stat. Phys. 101, 1087–1136 (2000)

Bonetto, F., Daems, D., Lebowitz, J.L., Ricci, V.: Properties of stationary nonequilibrium states in the thermostatted periodic Lorentz gas: the multiparticle system. Phys. Rev. E 65, 051204 (2002)

Bonetto, F., Chernov, N., Korepanov, A., Lebowitz, J.L.: Spatial structure of stationary nonequilibrium states in the thermostated periodic Lorentz gas. J. Stat. Phys. 146, 1221–1243 (2012)

Bonetto, F., Chernov, N., Korepanov, A., Lebowitz, J.L.: Nonequilibrium stationary state of a current-carrying thermostatted system. Europhys. Lett. 102, 15001 (2013)

Braun, W., Hepp, K.: The Vlasov dynamics and its fluctuations in the 1/N limit of interacting classical particles. Commun. Math. Phys. 56, 101–113 (1977)

Chernov, N., Eyink, G.L., Lebowitz, J.L., Sinai, Ya.G.: Steady state electric conductivity in the periodic Lorentz gas. Commun. Math. Phys. 154, 569–601 (1993)

Doob, J.L.: Stochastic Processes. Wiley, New York (1953)

Grunbaum, A.: Propagation of chaos for the Boltzmann equation. Arch. Ration. Mech. Anal. 42, 323–345 (1971)

Kac, M.: Foundations of kinetic theory. In: Neyman, J. (ed.) Proc. 3rd Berkeley Symp. Math. Stat. Prob., vol. 3, pp. 171–197. Univ. of California, Berkeley (1956)

Lanford, O.: Time evolution of large classical systems. In: Moser, J. (ed.) Dynamical Systems, pp. 1–97 (1975)

Moran, B., Hoover, W.: Diffusion in the periodic Lorentz billiard. J. Stat. Phys. 48, 709–726 (1987)

Mouhot, C., Mischler, S.: Kac program in kinetic theory. Invent. Math. 193, 1–147 (2013)

Neunzert, H.: An introduction to the nonlinear Boltzmann-Vlasov equation. In: Cercignani, C. (ed.) Lecture Notes in Mathematics, vol. 1048, pp. 80–100 (1984)

Spohn, H.: Kinetic equations from Hamiltonian dynamics: Markovian limits. Rev. Mod. Phys. 52, 569 (1980)

Spohn, H.: Large Scale Dynamics of Interacting Particles. Springer, Berlin (1991)

Sznitman, A.: Équations de type Boltzmann, spatialement homogènes. Z. Wahrscheinlichkeitstheor. Verw. Geb. 66, 559–592 (1984)

Wennberg, B., Wondmagegne, Y.: Stationary states for the Kac equation with a Gaussian thermostat. Nonlinearity 17, 633–648 (2004)

Acknowledgements

F. Bonetto, R. Esposito and R. Marra gratefully acknowledge the hospitality of Rutgers University, and E. A. Carlen and J. L. Lebowitz gratefully acknowledge the hospitality of Università di Roma Tor Vergata.


Additional information

Dedicated to Herbert Spohn in friendship and appreciation.

Work of E.A. Carlen is partially supported by U.S. National Science Foundation grant DMS 1201354.

Work of J.L. Lebowitz is partially supported by U.S. National Science Foundation grant PHY 0965859.

Work of R. Marra is partially supported by MIUR and GNFM-INdAM.

Appendix: Properties of the Boltzmann Equation


In this appendix, we prove existence and uniqueness for our Boltzmann equation. As in the proof of Theorem 1.3, we do this first in the spatially homogeneous case, where the notation is less cumbersome, and then explain how to extend the argument to phase space.

As we have indicated, the key is the fact that the current satisfies an autonomous equation. Let \(\tilde{\mathbf {j}}_{0}\) be computed from \(f_{0}(\mathbf{v})\), the initial data for (1.6). Then solve this autonomous equation for \(\tilde{\mathbf {j}}(t)\). Substituting this expression for \(\tilde{\mathbf {j}}\) in (1.6), we obtain the equation

where \(\mathcal{C}\) denotes the collision operator on the right side of (1.6), and where \({\bf Y}(\mathbf{v},t)\) is the vector field

which is linear, and hence globally Lipschitz. Let \(\Phi_{t}(\mathbf{v})\) denote the flow corresponding to this vector field, so that \(\mathbf{v}(t):=\Phi_{t}(\mathbf{v})\) is the unique solution to \(\dot {\mathbf{v}}(t) = {\bf Y}(\mathbf{v}(t),t)\) with \(\mathbf{v}(0)=\mathbf{v}\). Calling g(v,t) the pull-back of f(v,t) under the flow \(\Phi_{t}\) (equivalently, its push-forward under \(\Phi_{t}^{-1}\)), that is \(g(\mathbf{v},t)=\det(D\Phi_{t}(\mathbf{v}))\,f(\Phi_{t}(\mathbf{v}),t)\), where \(D\Phi_{t}\) is the Jacobian of \(\Phi_{t}\), we deduce that f(v,t) satisfies (A.1) if and only if g(v,t) satisfies

where

Under our hypotheses, \(\widetilde {\mathcal{C}}_{t}\) is bounded for each t and depends continuously on t; hence existence and uniqueness of solutions of (A.3) are straightforward to prove. It is also straightforward to prove that the current generated by the solution f(v,t) satisfies the differential equation and initial condition of Lemma 1.2, and hence equals \(\tilde{\mathbf {j}}\).
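For a bounded generator depending continuously on t, existence and uniqueness follow from the Picard iteration (Picard–Lindelöf) argument. The Python sketch below illustrates this in a finite-dimensional toy setting only: a hypothetical 3×3 matrix K, whose columns sum to zero so that total mass is conserved as it is for a collision operator, stands in for \(\widetilde {\mathcal{C}}_{t}\) through \(C(t)=c(t)K\) with a hypothetical continuous weight c(t). It is not a discretization of (A.3). Because the matrices C(s) commute, the exact solution \(\exp\bigl((\int_{0}^{t}c)K\bigr)g_{0}\) is available for comparison.

```python
import numpy as np

# Hypothetical bounded "collision" matrix: symmetric, columns sum to zero,
# so the total mass sum(g) is conserved by the evolution.
K = np.array([[-2.0, 1.0, 1.0],
              [1.0, -2.0, 1.0],
              [1.0, 1.0, -2.0]])


def c(t):
    """Hypothetical continuous time dependence of C(t) = c(t) K."""
    return 1.0 + 0.5 * np.cos(t)


def picard(g0, T, n_grid=4001, iterations=30):
    """Iterate g_{k+1}(t) = g0 + int_0^t C(s) g_k(s) ds on a uniform grid."""
    ts = np.linspace(0.0, T, n_grid)
    h = ts[1] - ts[0]
    g = np.tile(g0, (n_grid, 1))              # g_0(t) = g0 for all t
    for _ in range(iterations):
        rhs = c(ts)[:, None] * (g @ K.T)      # row m holds C(t_m) g_k(t_m)
        integral = np.zeros_like(g)
        # cumulative trapezoid rule for the time integral
        integral[1:] = np.cumsum(0.5 * h * (rhs[1:] + rhs[:-1]), axis=0)
        g = g0 + integral
    return ts, g


def exact(g0, t):
    """Since the C(s) commute, g(t) = exp((t + 0.5 sin t) K) g0."""
    w, U = np.linalg.eigh(K)
    return U @ (np.exp(w * (t + 0.5 * np.sin(t))) * (U.T @ g0))


g0 = np.array([1.0, 0.0, 0.0])
ts, g = picard(g0, T=1.0)
print(g[-1], exact(g0, 1.0))
print("mass:", g[-1].sum())
```

The Picard error after k iterations is bounded by \((\sup_{s}\|C(s)\|\,T)^{k}/k!\), which is why boundedness of the generator makes the argument straightforward.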

To obtain the same result in the spatially inhomogeneous case, we replace the vector field \({\bf Y}\) in (A.2) by

and let \(\Phi_{t}\) be the phase space flow it generates. Again, the vector field is linear and hence globally Lipschitz, which guarantees the existence, uniqueness and smoothness of the flow. Now the same argument may be repeated.
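The factor \(\det(D\Phi_{t})\) in the change of variables defining g can be computed from Liouville's formula, \(\det D\Phi_{t}(\mathbf{v})=\exp\bigl(\int_{0}^{t}\nabla\cdot{\bf Y}\,ds\bigr)\), which for an affine vector field does not depend on v. The Python sketch below checks this numerically under a deliberately hypothetical choice \({\bf Y}(\mathbf{v},t)=\mathbf{E}-\alpha(t)\mathbf{v}\) in d=3, where \(\alpha(t)\) is a made-up coefficient standing in for whatever time dependence enters through \(\tilde{\mathbf {j}}(t)\); then \(\nabla\cdot{\bf Y}=-d\,\alpha(t)\). The flow is integrated with classical RK4 and its Jacobian is estimated by central differences.

```python
import numpy as np

d = 3
E = np.array([1.0, 0.0, 0.0])       # hypothetical uniform field


def alpha(t):
    """Hypothetical time-dependent friction coefficient (illustration only)."""
    return 0.3 + 0.2 * np.sin(t)


def Y(v, t):
    # affine velocity field: constant drive minus time-dependent friction
    return E - alpha(t) * v


def flow(v0, T, steps=2000):
    """Approximate Phi_T(v0) with the classical fourth-order Runge-Kutta method."""
    v = np.asarray(v0, dtype=float)
    h = T / steps
    t = 0.0
    for _ in range(steps):
        k1 = Y(v, t)
        k2 = Y(v + 0.5 * h * k1, t + 0.5 * h)
        k3 = Y(v + 0.5 * h * k2, t + 0.5 * h)
        k4 = Y(v + h * k3, t + h)
        v = v + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return v


def jacobian_det(v0, T, eps=1e-6):
    """det(D Phi_T(v0)) via central finite differences."""
    J = np.empty((d, d))
    for i in range(d):
        e = np.zeros(d)
        e[i] = eps
        J[:, i] = (flow(v0 + e, T) - flow(v0 - e, T)) / (2 * eps)
    return np.linalg.det(J)


T = 2.0
v0 = np.array([0.5, -1.0, 2.0])
numeric = jacobian_det(v0, T)
# Liouville: exp(int_0^T div Y ds) with div Y = -d * alpha(s)
liouville = np.exp(-d * (0.3 * T + 0.2 * (1.0 - np.cos(T))))
print(numeric, liouville)
```

In this toy case the determinant is the same for every starting point v, so the Jacobian factor in g is a function of t alone.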


Cite this article

Bonetto, F., Carlen, E.A., Esposito, R. et al. Propagation of Chaos for a Thermostated Kinetic Model. J Stat Phys 154, 265–285 (2014). https://doi.org/10.1007/s10955-013-0861-2
