Abstract

In this article, an inhomogeneous Erdős–Rényi random graph on \(\{1,\ldots , N\}\) is considered, where an edge is placed between vertices i and j with probability \(\varepsilon _N f(i/N,j/N)\), for \(i\le j\), the choice being made independently for each pair. The integral operator \(I_f\) associated with the bounded function f is assumed to be symmetric, non-negative definite, and of finite rank k. We study the edge of the spectrum of the adjacency matrix of such an inhomogeneous Erdős–Rényi random graph under the assumption that \(N\varepsilon _N\rightarrow \infty \) sufficiently fast. Although the bulk of the spectrum of the adjacency matrix, scaled by \(\sqrt{N\varepsilon _N}\), is compactly supported, the kth largest eigenvalue goes to infinity. It turns out that the largest eigenvalue after appropriate scaling and centering converges to a Gaussian law, if the largest eigenvalue of \(I_f\) has multiplicity 1. If \(I_f\) has k distinct non-zero eigenvalues, then the joint distribution of the k largest eigenvalues converge jointly to a multivariate Gaussian law. The first order behaviour of the eigenvectors is derived as a byproduct of the above results. The results complement the homogeneous case derived by [18].

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given a graph on N vertices, say, \(\{1,\ldots , N\}\), let \(A_N\) denote the adjacency matrix of the graph, whose (i, j)th entry is 1 if there is an edge between vertices i and j and 0 otherwise. Important statistics of the graph are the eigenvalues and eigenvectors of \(A_N\) which encode crucial information about the graph. The present article considers the generalization of the most studied random graph, namely the Erdős–Rényi random graph (ERRG). It is a graph on N vertices where an edge is present independently with probability \(\varepsilon _N\). The adjacency matrix of the ERRG is a symmetric matrix with diagonal entries zero, and the entries above the diagonal are independent and identically distributed Bernoulli random variables with parameter \(\varepsilon _N\). We consider an inhomogeneous extension of the ERRG where the presence of an edge between vertices i and j is given by a Bernoulli random variable with parameter \(p_{i,j}\) and these \(\{p_{i,j}:\, 1\le i< j\le N\}\) need not be same. When \(p_{i,j}\) are same for all vertices i and j it shall be referred as (homogeneous) ERRG.

The mathematical foundations of inhomogeneous ERRG where the connection probabilities \(p_{i,j}\) come from a discretization of a symmetric, non-negative function f on \([0,1]^2\) was initiated in [9]. The said article considered edge probabilities given by

In that case the average degree is bounded and the phase transition picture on the largest cluster size was studied in the same article (see also [8, 32] for results on inhomogeneous ERRG). The present article considers a similar set-up where the average degree is unbounded and studies the properties of eigenvalues of the adjacency matrix. The connection probabilities are given by

with the assumption that

Let \(\lambda _1(A_N)\ge \cdots \ge \lambda _N(A_N)\) be the eigenvalues of \(A_N\). It was shown in [13] (see also [35] for a graphon approach) that the empirical distribution of the centered adjacency matrix converges, after scaling with \(\sqrt{N\varepsilon _N}\), to a compactly supported measure \(\mu _f\). When \(f\equiv 1\), the limiting law \(\mu _f\) turns out to be the semicircle law. Note that \(f\equiv 1\) corresponds to the (homogeneous) ERRG (see [16, 31] also for the homogeneous case). Quantitative estimates on the largest eigenvalue of the homogeneous case (when \(N\varepsilon _N\gg (\log N)^4\)) were studied in [20, 34] and it follows from their work that the smallest and second largest eigenvalue converge to the edge of the support of semicircular law. The results were improved recently in [7] and the condition on sparsity can be extended to the case \(N\varepsilon _N\gg \log N\) (which is also the connectivity threshold). It was shown that inhomogeneous ERRG also has a similar behaviour. The largest eigenvalue of inhomogeneous ERRG when \(N\varepsilon _N\ll \log N\) was treated in [6]. Under the assumption that \(N^{\xi }\ll N\varepsilon _N\) for some \(\xi \in (2/3, 1]\), it was proved in [17, Theorem 2.7] that the second largest eigenvalue of the (homogeneous) ERRG after appropriate centering and scaling converges in distribution to the Tracy–Widom law. The results were recently improved in [27]. The properties of the largest eigenvector in the homogeneous case was studied in [1, 17, 22, 27, 31].

The scaling limit of the maximum eigenvalue of inhomogenous ERRG also turns out to be interesting. The fluctuations of the maximum eigenvalue in the homogeneous case were studied in [18]. It was proved that

The above result was shown under the assumption that

for some \(\xi >8\), which is a stronger assumption than (1.1).

It is well known that in the classical case of a (standard) Wigner matrix, the largest eigenvalue converges to the Tracy–Widom law. We note that there is a different scaling between the edge and bulk of the spectrum in ERRG. As pointed out before, the bulk is of the order \((N\varepsilon _N)^{1/2}\) and the order of the largest eigenvalue is \(N\varepsilon _N\). Letting

where \(\mathrm{E}(A_N)\) is the entrywise expectation of \(A_N\), it is easy to see that

where \(\mathbf{1}\) is the \(N\times 1\) vector with each entry 1. Since the empirical spectral distribution of \((N\varepsilon _N)^{-1/2}W_N\) converges to semi-circle law, the largest eigenvalue of the same converges to 2 almost surely. As \(\mathrm{E}[A_N]\) is a rank-one matrix, it turns out that the largest eigenvalue of \(A_N\) scales like \(N\varepsilon _N\), which is different from the bulk scaling.

The above behaviour can be treated as a special case of the perturbation of a Wigner matrix. When \(W_N\) is a symmetric random matrix with independent and identically distributed entries with mean zero and finite variance \(\sigma ^2\) and the deformation is of the form

the largest eigenvalue is well-studied. Motivated by the study of adjacency matrix of homogeneous ERRG, [20] studied the above deformation with \(P_N= m N^{-1/2} \mathbf{1}\mathbf{1}^\prime \), \(m\ne 0\). They showed that

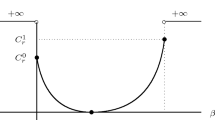

Since the bulk of \(N^{-1/2} M_N\) lies within \([-2\sigma , \, 2\sigma ]\), the largest eigenvalue is detached from the bulk. In general, when the largest eigenvalue of the perturbation has the same order as that of the maximum eigenvalue of \(W_N\), the problem is more challenging. One of the seminal results in this direction was obtained in [3]. They exhibited a phase transition in the behaviour of the largest eigenvalue for complex Wishart matrix, which is now referred to as the BBP (Baik–Ben Arous–Péché) phase transition. It is roughly as follows. Suppose \(P_N\) is a deterministic matrix of rank k with non-trivial eigenvalues \(\theta _1\ge \theta _2\dots \ge \theta _k>0\). If \(\theta _i\le \sigma \), then \(\lambda _i(M_N)\rightarrow 2\sigma \) almost surely, and if \(\theta _i> \sigma \) then

See [2, 19] for further extensions. It is clear that when \(\theta _i>\sigma \) the corresponding eigenvalue lies outside the bulk of the spectrum. The phase transition is also present at the level of fluctuations around \(2\sigma \) or \(\theta _i+\sigma ^2/\theta _i\). It is known that under some moment conditions on the entries of \(W_N\) (see [11, 24, 25]), when \(\theta _i\le \sigma \), the fluctuations are governed by the Tracy–Widom law, and when \(\theta _i>\sigma \), the limiting distribution is given by the eigenvalues of a random matrix of order k. This limiting random matrix depends on the eigenvectors of \(P_N\) and also on the entries of \(W_N\). The non-universal nature was pointed out in [11]. For example, when \(W_N\) is a Gaussian orthogonal ensemble and \(P_N=\theta _1 \mathbf{1}\mathbf{1}^{\prime }\) then the limit is Gaussian and if the entries of \(W_N\) are not from a Gaussian distribution, then the limit is a convolution of Gaussian and the distribution from which the entries of \(W_N\) are sampled. One can find further details in [3,4,5, 11, 12, 24, 25] and the survey by [29]. The case when the rank k depends on N was considered in [10, 23, 26]. Various applications of these results on outliers can be found in the literature, for example, [14, 15, 30].

The adjacency matrix of the inhomogeneous ERRG does not fall directly into purview of the above results, since \(W_N\), as in (1.3), is a symmetric matrix, with independent entries above the diagonal, but the entries have a variance profile, which also depends on the size of the graph. The inhomogeneity does not allow the use of local laws suitable for semicircle law in an obvious way. The present article aims at extending the results obtained in [18] for the case that f is a constant to the case that f is a non-negative, symmetric, bounded, Riemann integrable function on \([0,1]^2\) which induces an integral operator of finite rank k, under the assumption that (1.2) holds. The case \(k\ge 2\) turns out to be substantially difficult than the case \(k=1\) for the following reason. If \(k=1\), that is,

for some \(N\times 1\) deterministic column vector \(u_N\), then with high probability it holds that

where \(\lambda \) is the largest eigenvalue of \(A_N\). The above equation facilitates the asymptotic study of \(\lambda \). However, when \(k\ge 2\), the above equation takes a complicated form. The observation which provides a way out of this is that \(\lambda \) is also an eigenvalue of a \(k\times k\) matrix with high probability; the same is recorded in Lemma 5.2 of Sect. 5. Besides, working with the eigenvalues of a \(k\times k\) matrix needs more linear algebraic work when \(k\ge 2\). For example, the proof of Lemma 5.8, which is one of the major steps in the proof of a main result, becomes a tautology when \(k=1\).

The following results are obtained in the current paper. If the largest eigenvalue of the integral operator has multiplicity 1, then the largest eigenvalue of the adjacency matrix has a Gaussian fluctuation. More generally, it is shown that the eigenvalues which correspond to isolated eigenvalues, which will be defined later, of the induced integral operator jointly converge to a multivariate Gaussian law. Under the assumption that the function f is Lipschitz continuous, the leading order term in the expansion of the expected value of the isolated eigenvalues is obtained. Furthermore, under an additional assumption, the inner product of the eigenvector with the discretized eigenfunction of the integral operator corresponding to the other eigenvalues is shown to have a Gaussian fluctuation. Some important examples of such f include the rank-one case, and the stochastic block models. It remains an open question to see if the \((k+1)\)th eigenvalue follows a Tracy–Widom type scaling.

The mathematical set-up and the main results of the paper are stated in Sect. 2. Theorem 2.3 shows that of the k largest eigenvalues, the isolated ones, centred by their mean and appropriately scaled, converge to a multivariate normal distribution. Theorem 2.4 studies the first and second order of the expectation of the top k isolated eigenvalues. Theorems 2.5 and 2.6 study the behaviour of the eigenvectors corresponding to the top k isolated eigenvalues. Section 3 contains the special case when f is rank one and the example of stochastic block models. A few preparatory estimates are noted in Sect. 4, which are used later in the proofs of the main results, given in Sect. 5. The estimates in Sect. 4 are proved in Appendix.

2 The Set-Up And The Results

Let \(f:[0,1]\times [0,1]\rightarrow [0,\infty )\) be a function which is symmetric, bounded, and Riemann integrable, that is,

and the set of discontinuities of f in \([0,1]\times [0,1]\) has Lebesgue measure zero.

The integral operator \(I_f\) with kernel f is defined from \(L^2[0,1]\) to itself by

Besides the above, we assume that \(I_f\) is a non-negative definite operator and the range of \(I_f\) has a finite dimension.

Under the above assumptions \(I_f\) turns out to be a compact self-adjoint operator, and from the spectral theory one obtains \(\theta _1\ge \theta _2\ge \cdots \ge \theta _k>0\) as the non-zero eigenvalues of \(I_f\) (where k is the dimension of the range of \(I_f\)), and eigenfunctions \(r_i\) corresponding to \(\theta _i\). Therefore, \(\{r_1,\ldots ,r_k\}\) is an orthonormal set in \(L^2[0,1]\), and by assumption, each \(r_i\) is Riemann integrable (see Lemma 6.1 in Appendix). Also, for any \(g\in L^2[0,1]\) one has

Note that this gives

Since g is an arbitrary function in \(L^2[0,1]\) this immediately gives

Since the functions on both sides of the above equation are Riemann integrable, the corresponding Riemann sums are approximately equal, and hence there is no loss of generality in assuming that the above equality holds for every x and y.

That is, we now assume that

where \(\theta _1\ge \cdots \ge \theta _k>0\) and \(\{r_1,\ldots ,r_k\}\) is an orthonormal set in \(L^2[0,1]\). The assumptions on \(r_1,\ldots ,r_k\) are listed below for easy reference.

Assumption F1. The functions \(r_1,\ldots ,r_k\) from [0, 1] to \({{\mathbb {R}}}\) are bounded and Riemann integrable.

Assumption F2. For each \(i=1,\ldots ,k\), \(r_i\) is Lipschitz, that is,

for some fixed \(K_i<\infty \). This is clearly stronger than Assumption F1, and will be needed in a few results. A consequence of this assumption is that there exists K such that

Let \((\varepsilon _N:N\ge 1)\) be a real sequence satisfying

The following will be the bare minimum assumption for all the results.

Assumption E1. For some \(\xi >8\), fixed once and for all,

that is, (1.2) holds. Furthermore,

for some \(\varepsilon _\infty \ge 0\). It’s worth emphasizing that we do not assume that \(\varepsilon _N\) necessarily goes to zero, although that may be the case.

For one result, we shall have to make a stronger assumption on \((\varepsilon _N)\) which is the following.

Assumption E2. As \(N\rightarrow \infty \),

For \(N\ge 1\), let \({{\mathbb {G}}}_N\) be an inhomogeneous Erdős–Rényi graph where an edge is placed between vertices i and j with probability \(\varepsilon _N f(i/N,j/N)\), for \(i\le j\), the choice being made independently for each pair in \(\{(i,j):1\le i\le j\le N\}\). Note that we allow self-loops. Let \(A_N\) be the adjacency matrix of \({{\mathbb {G}}}_N\). In other words, \(A_N\) is an \(N\times N\) symmetric matrix, where \(\{A_N(i,j):1\le i\le j\le N\}\) is a collection of independent random variable, and

A few more notations are needed for stating the main results. For a moment, set \(\theta _0=\infty \) and \(\theta _{k+1}=-\infty \), and define the set of indices i for which \(\theta _i\) is isolated as follows:

For an \(N\times N\) real symmetric matrix M, let \(\lambda _1(M)\ge \cdots \ge \lambda _N(M)\) denote its eigenvalues, as mentioned in Sect. 1. Finally, after the following definition, the main results will be stated.

Definition

A sequence of events \(E_N\) occurs with high probability, abbreviated as w.h.p., if

for some \(\eta >1\). For random variables \(Y_N,Z_N\),

means there exists a deterministic finite constant C such that

and

means that for all \(\delta >0\),

We shall say

to mean that

and

to mean that for all \(\delta >0\),

The reader may note that if \(Z_N\ne 0\) a.s., then “\(Y_N=O_{p}(Z_N)\)” and “\(Y_N=o_{p}(Z_N)\)” are equivalent to “\((Z_N^{-1}Y_N:N\ge 1)\) is stochastically tight” and “\(Z_N^{-1}Y_N{\mathop {\longrightarrow }\limits ^{P}}0\)”, respectively. Besides, “\(Y_N=O_{hp}(Z_N)\)” is a much stronger statement than “\(Y_N=O_p(Z_N)\)”, and so is “\(Y_N=o_{hp}(Z_N)\)” than “\(Y_N=o_{p}(Z_N)\)”.

In the rest of the paper, the subscript ‘N’ is dropped from notations like \(A_N\), \(W_N\), \(\varepsilon _N\) etc. and the ones that will be introduced. The first result is about the first order behaviour of \(\lambda _i(A)\).

Theorem 2.1

Under Assumptions E1 and F1, for every \(1\le i\le k\),

An immediate consequence of the above is that for all \(1\le i\le k\), \(\lambda _i(A)\) is non-zero w.h.p. and hence dividing by the same is allowed, as done in the next result. Define

The second main result studies the asymptotic behaviour of \(\lambda _i(A)\), for \(i\in {\mathcal {I}}\), after appropriate centering and scaling.

Theorem 2.2

Under Assumptions E1 and F1, for every \(i\in {\mathcal {I}}\), as \(N\rightarrow \infty \),

where W is as defined in (1.3).

The next result is the corollary of the previous two.

Theorem 2.3

Under Assumptions E1 and F1, if \({\mathcal {I}}\) is a non-empty set, then as \(N\rightarrow \infty \),

where the right hand side is a multivariate normal random vector in \({{\mathbb {R}}}^{|{{\mathcal {I}}}|}\), with mean zero and

for all \(i,j\in {\mathcal {I}}\).

For \(i,j\in {\mathcal {I}}\), that W is a symmetric matrix whose upper triangular entries are independent and zero mean implies that as \(N\rightarrow \infty \),

With the help of the above, it may be checked that the Lindeberg–Lévy central limit theorem implies that as \(N\rightarrow \infty \),

where the right hand side is a zero mean Gaussian vector with covariance given by (2.8). Therefore, Theorem 2.3 would follow from Theorems 2.1 and 2.2 .

Remark 2.1

If \(f>0\) a.e. on \([0,1]\times [0,1]\), then the Krein–Rutman theorem (see Lemma 6.2) implies that \(1\in {\mathcal {I}}\), and that \(r_1>0\) a.e. Thus, in this case, if \(\varepsilon _\infty =0\), then

Remark 2.2

For a fixed \(\theta >1\), define

In this case, the integral operator associated with f has exactly two non-zero eigenvalues, which are \(\theta /2\) and 1/2, with corresponding normalized eigenfunctions \(r_1(x)=\sqrt{2}\mathbf{1}(x<1/2)\) and \(r_2(x)=\sqrt{2}\mathbf{1}(x\ge 1/2)\), respectively. Let \((\varepsilon _N)\) satisfy Assumption E1 and suppose that \(\varepsilon _\infty =0\). Theorem 2.3 implies that as \(N\rightarrow \infty \),

where the right hand side has a bivariate normal distribution with mean zero. Furthermore, since \(r_1r_2\) is identically zero, it follows that \(G_1\) and \(G_2\) are uncorrelated and hence independent.

Remark 2.3

That the claim of Theorem 2.3 may not hold if \(i\notin \mathcal I\) is evident from the following example. As in Remark 2.2, suppose that Assumption E1 holds and that \(\varepsilon _\infty =0\). Let

In this case, the integral operator associated with f has exactly one non-zero eigenvalue, which is 1/2, and that has multiplicity 2, with eigenfunctions \(r_1\) and \(r_2\) as in Remark 2.2. In other words, f doesn’t have any simple eigenvalue.

Theorem 2.3 itself implies that there exists \(\beta _N\in {{\mathbb {R}}}\) such that

where \(G_1\) and \(G_2\) are independent from normal with mean 0. Furthermore,

That is, \(G_1\) and \(G_2\) are i.i.d. from N(0, 2). Hence, there doesn’t exist a centering and a scaling by which \(\lambda _1(A)\) converges weakly to a non-degenerate normal distribution.

The next main result of the paper studies asymptotics of \(\mathrm{E}(\lambda _i(A))\) for \(i\in {\mathcal {I}}\).

Theorem 2.4

Under Assumptions E1 and F2, it holds for all \(i\in {\mathcal {I}}\),

where B is a \(k\times k\) symmetric deterministic matrix, depending on N, defined by

and \(e_j\) and W are as defined in (2.6) and (1.3), respectively.

The next result studies the asymptotic behaviour of the normalized eigenvector corresponding to \(\lambda _i(A)\), again for isolated vertices i. It is shown that the same is asymptotically aligned with \(e_i\), and hence it is asymptotically orthogonal to \(e_j\). Upper bounds on rates of convergence are obtained.

Theorem 2.5

As in Theorem 2.4, let Assumptions E1 and F2 hold. Then, for a fixed \(i\in {\mathcal {I}}\),

If v is the eigenvector, with \(L^2\)-norm 1, of A corresponding to \(\lambda _i(A)\), then

that is, \(N\varepsilon (1-e_i^\prime v)\) is stochastically tight. When \(k\ge 2\), it holds that

The last main result of this paper studies finer fluctuations of (2.13) under an additional condition.

Theorem 2.6

Let \(k\ge 2\), \(i\in {\mathcal {I}}\) and Assumptions E2 and F2 hold. If v is as in Theorem 2.5, then, for all \(j\in \{1,\ldots ,k\}\setminus \{i\}\),

Remark 2.4

An immediate consequence of Theorem 2.6 is that under Assumption E2., there exists a deterministic sequence \((z_N:N\ge 1)\) given by

such that as \(N\rightarrow \infty \),

converges weakly to a normal distribution with mean zero, for all \(i\in {\mathcal {I}}\) and \(j\in \{1,\ldots ,k\}\setminus \{i\}\). Furthermore, the convergence holds jointly for all i and j satisfying the above. This, along with (2.7), implies that the collection

converges weakly, as \(N\rightarrow \infty \), to

which is a zero mean Gaussian vector in \({{\mathbb {R}}}^{k|{{\mathcal {I}}}|}\). The covariance matrix of \((G_i)\) is as in (2.8), and \(\mathrm{Cov}(G_{ij},G_{i^\prime \,j^\prime })\) and \(\mathrm{Cov}(G_{ij},G_{i^\prime })\) are not hard to calculate by proceeding as in (2.9).

3 Examples and Special Cases

3.1 The Rank One Case

Let us consider the special case of \(k=1\), that is,

for some \(\theta >0\), and a bounded Riemann integrable \(r:[0,1]\rightarrow [0,\infty )\) satisfying

In this case, Theorem 2.3 implies that

as \(N\rightarrow \infty \), where

with

If r is Lipschitz and \(\varepsilon _\infty =0\), then the claim of Theorem 2.4 boils down to

where

Lipschitz continuity of r implies that

and hence (3.1) becomes

Clearly,

In conjunction with (3.2) this yields

3.2 Stochastic Block Model

Another important example is the stochastic block model, defined as follows. Suppose that

where p is a \(k\times k\) symmetric positive definite matrix, and \(B_1,\ldots ,B_k\) are disjoint Borel subsets of [0, 1] whose boundaries are sets of measure zero, that is, their indicators are Riemann integrable. We show below how to compute the eigenvalues and eigenfunctions of \(I_f\), the integral operator associated with f.

Let \(\beta _i\) denote the Lebesgue measure of \(B_i\), which we assume without loss of generality to be strictly positive. Rewrite

where

and

Thus, \(\{s_1,\ldots ,s_k\}\) is an orthonormal set in \(L^2[0,1]\). Let

be a spectral decomposition of \({\tilde{p}}\), where U is a \(k\times k\) orthogonal matrix, and

for some \(\theta _1\ge \cdots \ge \theta _k>0\).

Define functions \(r_1,\ldots ,r_k\) by

It is easy to see that \(r_1,\ldots ,r_k\) are orthonormal in \(L^2[0,1]\), and for \(0\le x,y\le 1,\)

Thus, \(\theta _1,\ldots ,\theta _k\) are the eigenvalues of \(I_f\), and \(r_1,\ldots ,r_k\) are the corresponding eigenfunctions.

4 Estimates

In this section, we’ll record a few estimates that will subsequently be used in the proof. Since their proofs are routine, they are being postponed to Appendix. Let W be as defined in (1.3). Throughout this section, Assumptions E1 and F1 will be in force.

Lemma 4.1

There exist constants \(C_1\), \(C_2 > 0\) such that

where \(M=\sup _{0\le x,y\le 1}f(x,y)\). Consequently,

The notations \(e_1\) and \(e_2\) introduced in the next lemma and used in the subsequent lemmas should not be confused with \(e_j\) defined in (2.6). Continuing to suppress ‘N’ in the subscript, let

where [x] is the largest integer less than or equal to x.

Lemma 4.2

There exists \(0<C_1<\infty \) such that if \(e_1\) and \(e_2\) are \(N\times 1\) vectors with each entry in \([-1/\sqrt{N},1/\sqrt{N}]\), then

Lemma 4.3

There exists \(\eta _1>1\) such that for \(e_1,e_2\) as in Lemma 4.2, it holds that

where \(\xi \) is as in (1.2). In addition,

Lemma 4.4

If \(e_1,e_2\) are as in Lemma 4.2, then

and

5 Proof of the Main Results

This section is devoted to the proof of the main results. The section is split into several subsections, each containing the proof of one main result, for the ease of reading. Unless mentioned otherwise, Assumptions E1 and F1. are made.

5.1 Proof of Theorem 2.1

We start with showing that Theorem 2.1 is a corollary of Lemma 4.1. At this point, it should be clarified that throughout this section, \(e_j\) will always be as defined in (2.6).

Proof of Theorem 2.1

For a fixed \(i\in \{1,\ldots ,k\}\), it follows that

by Lemma 4.1. In order to complete the proof, it suffices to show that

which however follows from the observation that (2.2) implies that

This completes the proof. \(\square \)

5.2 Proof of Theorem 2.2

Proceeding towards the proof of Theorem 2.2, let us fix \(i\in {\mathcal {I}}\), once and for all, denote

and let V be a \(k\times k\) real symmetric matrix, depending on N which is suppressed in the notation, defined by

for all \(1\le j,l\le k\). It should be noted that if \(\Vert W\Vert <\mu \), then \(I-W/\mu \) is invertible.

The proof of Theorem 2.2, which is the main content of this paper, involves several steps, and hence it is imperative to sketch the outline of the proof for the reader’s convenience, which is done below.

-

(1)

The first major step of the proof is to show that w.h.p., \(\mu \) exactly equals \(\lambda _i(V)\). This is done in Lemma 5.2. When \(i=k=1\), the matrix V is a scalar and hence in that case the equation boils down to \(\mu =V\) which is a consequence of the resolvent equation. For higher values of k, the Gershgorin circle theorem is employed for the desired claim. Therefore, this step is a novelty of the given proof.

-

(2)

The next step in the proof is to write \(\mu \) as the solution of an equation of the form

$$\begin{aligned} \mu =\lambda _i\left( \sum _{n=0}^L\mu ^{-n}Y_n\right) +Error, \end{aligned}$$for suitable matrices \(Y_1,Y_2,\ldots \). This is done in Lemma 5.4.

-

(3)

The third step is to replace \(Y_n\) by \(\mathrm{E}(Y_n)\) in the equation obtained in the above step, for \(n\ge 2\). This is done in Lemma 5.5.

-

(4)

Arguably the most important step in the proof is to obtain an equation of the form

$$\begin{aligned} \mu ={\bar{\mu }}+\mu ^{-1}\zeta +Error, \end{aligned}$$for some deterministic \({\bar{\mu }}\) depending on N and random \(\zeta \). Once again, this is achieved from the previous step with the help of the Gershgorin circle theorem and other linear algebraic tools. This is done in Lemma 5.8.

-

(5)

The final step of the proof is to show that \({\bar{\mu }}\) of the above step can be replaced by \(\mathrm{E}(\mu )\).

Now let us proceed towards executing the above steps for proving Theorem 2.2. As the zeroth step, we show that \(V/N\varepsilon \) converges to \(\mathrm{Diag}(\theta _1,\ldots ,\theta _k)\), that is, the \(k\times k\) diagonal matrix with diagonal entries \(\theta _1,\ldots ,\theta _k\), w.h.p.

Lemma 5.1

As \(N\rightarrow \infty \),

Proof

For fixed \(1\le j,l\le k\), writing

we get that

Since

and

by Lemma 4.1 and Theorem 2.1, the proof follows. \(\square \)

The next step, which is one of the main steps in the proof of Theorem 2.2, shows that the ith eigenvalues of A and V are exactly equal w.h.p.

Lemma 5.2

With high probability,

The proof of the above lemma is based on the following fact which is a direct consequence of the Gershgorin circle theorem; see Theorem 1.6, pg 8 of [33].

Fact 5.1

Suppose that U is an \(n\times n\) real symmetric matrix. Define

If for some \(1\le m\le n\) it holds that

and

then

Remark 5.1

The assumptions (5.3) and (5.4) of Fact 5.1 mean that the Gershgorin disk containing the mth largest eigenvalue is disjoint from any other Gershgorin disk.

Proof of Lemma 5.2

The first step is to show that

To that end, fix \(N\ge 1\) and a sample point for which \(\Vert W\Vert <\mu \). The following calculations are done for that fixed sample point.

Let v be an eigenvector of A, with norm 1, corresponding to \(\lambda _i(A)\). That is,

by (5.1). Since \(\mu >\Vert W\Vert \), \(\mu I-W\) is invertible, and hence

Fixing \(j\in \{1,\ldots ,k\}\) and premultiplying the above by \(\sqrt{\theta _j}\mu e_j^\prime \) yields

As the above holds for all \(1\le j\le k\), this means that if

then

Recalling that in the above calculations a sample point is fixed such that \(\Vert W\Vert <\mu \), what we have shown, in other words, is that a vector u satisfying the above exists w.h.p.

In order to complete the proof of (5.5), it suffices to show that u is a non-null vector w.h.p. To that end, premultiply (5.6) by \(v^\prime \) to obtain that

Dividing both sides by \(N\varepsilon \) and using Lemma 4.1 implies that

Thus, u is a non-null vector w.h.p. From this and (5.9), (5.5) follows.

Lemma 5.1 shows that for all \(l\in \{1,\ldots ,i-1\}\),

as \(N\rightarrow \infty \). Since \(i\in {\mathcal {I}}\), \(\theta _i-\theta _l<0\), and hence

A similar calculation shows that for \(l\in \{i+1,\ldots ,k\}\),

In view of (5.5) and Fact 5.1, the proof would follow once it can be shown that for all \(l\in \{1,\ldots ,k\}\setminus \{i\}\),

This follows, once again, by dividing both sides by \(N\varepsilon \) and using Theorem 2.1 and Lemma 5.1. This completes the proof. \(\square \)

The next step is to write

which is possible because \(\Vert W\Vert <\mu \). Denote

which should not be confused with \(Z_{ij}\) defined in (2.14), and for \(n\ge 0\), let \(Y_n\) be a \(k\times k\) matrix with

The following bounds will be used several times.

Lemma 5.3

It holds that

and

Proof

Lemma 4.4 implies that

Hence,

the equality in the second line using the fact that \(Z_{j,l,1}\) has mean 0. This proves the first claim. The second claim follows from (4.3) of Lemma 4.3. \(\square \)

The next step is to truncate the infinite sum in (5.10) to level L, where \(L=[\log N]\) as defined before.

Lemma 5.4

It holds that

Proof

From the definition of V, it is immediate that for \(1\le j,l\le k\),

and hence

For the sake of notational simplicity, let us suppress \(\mathbf{1}(\Vert W\Vert <\mu )\). Therefore, with the implicit understanding that the sum is set as zero if \(\Vert W\Vert \ge \mu \), for the proof it suffices to check that

To that end, Theorem 2.1 and Lemma 4.1 imply that

In order to prove (5.11), it suffices to show that as \(N\rightarrow \infty \),

To that end, recall (1.2) to argue that

and

By (5.13), it follows that

the last line using (5.14). Therefore, (5.12) follows, which ensures (5.11), which in turn completes the proof. \(\square \)

In the next step, \(Y_n\) is replaced by its expectation for \(n\ge 2\).

Lemma 5.5

It holds that

Proof

In view of Theorem 2.1 and Lemma 5.4, all that has to be checked is

For that, invoke Lemma 4.3 to claim that

where \(\xi \) is as in (1.2).

Our next claim is that there exists \(C_2>0\) such that for N large,

To see this, suppose that the event on the left hand side holds. Then, for fixed \(1\le j,l\le k\), and large N,

Thus, (5.17) holds for some \(C_2>0\).

Combining (5.16) and (5.17), it follows that

This, with the help of (1.2), establishes (5.15) from which the proof follows. \(\square \)

The goal of the next two lemmas is replacing \(\mu \) by a deterministic quantity in

Lemma 5.6

For N large, the deterministic equation

has a solution \({\tilde{\mu }}\) such that

Proof

Define a function

by

Our first claim is that for any fixed \(x>0\),

To that end, observe that since \(\mathrm{E}(Y_1)=0\),

Recalling that

it follows by (5.2) that

Lemma 4.2 implies that

uniformly for \(2\le n\le L\), and hence there exists \(0<C_3<\infty \) with

Therefore,

as \(N\rightarrow \infty \). With the help of (5.21), this implies that

and hence (5.20) follows. It follows that for a fixed \(0<\delta <\theta _i\).

and thus, for large N,

Similarly, again for large N,

Hence, for N large, (5.18) has a solution \({\tilde{\mu }}\) in \([(N\varepsilon )(\theta _i-\delta ),(N\varepsilon )(\theta _i+\delta )]\), which trivially satisfies (5.19). Hence the proof. \(\square \)

Lemma 5.7

If \({\tilde{\mu }}\) is as in Lemma 5.6, then

Proof

Thus,

Equations (5.19) and (5.22) imply that

This completes the proof with the help of (5.23). \(\square \)

The next lemma is arguably the most important step in the proof of Theorem 2.2, the other major step being Lemma 5.2.

Lemma 5.8

There exists a deterministic \({\bar{\mu }}\), which depends on N, such that

Proof

Define a \(k\times k\) deterministic matrix

which, as usual, depends on N. Lemma 5.7 and (5.24) imply that

By Lemma 5.5 it follows that

Let

and

Clearly,

Thus, the proof would follow with the aid of (5.25) if it can be shown that

If \(k=1\), then \(i=1\) and hence \(H=M=0\). Thus, the above is a tautology in that case. Therefore, assume without loss of generality that \(k\ge 2\).

Proceeding towards proving (5.26) when \(k\ge 2\), set

and

The main idea in the proof of (5.26) is to observe that the eigenvector of \(U_1\) corresponding to \(\lambda _i(U_1)\) is same as that of M corresponding to \(\lambda _i(M)\), and likewise for \(U_2\) and X. Hence, the first step is to use this to get a bound on the differences between the eigenvectors in terms of \(\Vert U_1-U_2\Vert \).

An important observation that will be used later is that

The second claim of Lemma 5.3 implies that the right hand side above is \(o_{hp}(1)\). The same implies that for \(m=1,2\) and \(1\le j,l\le k\),

In other words, as \(N\rightarrow \infty \), \(U_1\) and \(U_2\) converge to \(\mathrm{Diag}(\theta _1-\theta _i,\ldots ,\theta _k-\theta _i)\) w.h.p. Therefore,

Let \({\tilde{U}}_m\), for \(m=1,2,\) be the \((k-1)\times (k-1)\) matrix (recall that \(k\ge 2\)) obtained by deleting the ith row and the ith column of \(U_m\), and let \({\tilde{u}}_m\) be the \((k-1)\times 1\) vector obtained from the ith column of \(U_m\) by deleting its ith entry. It is worth recording, for possible future use, that

which follows from (5.30), and that

follows from (5.29).

Equations (5.30) and (5.31) imply that \({\tilde{U}}_m-\lambda _i(U_m)I_{k-1}\) converges w.h.p. to

Since \(i\in {\mathcal {I}}\), the above matrix is invertible. Fix \(\delta >0\) such that every matrix in the closed \(\delta \)-neighborhood \(B_\delta \), in the sense of operator norm, of the above matrix is invertible. Let

Then, \(C_4<\infty \). Besides, there exists \(C_5<\infty \) satisfying

Fix \(N\ge 1\) and a sample point such that \(\tilde{U}_m-\lambda _i(U_m)I_{k-1}\) belongs to \(B_\delta \). Then, it is invertible. Define a \((k-1)\times 1\) vector

and a \(k\times 1\) vector

It is immediate that

Our next claim is that

This claim is equivalent to

Let \({\bar{U}}_m\) be the \((k-1)\times k\) matrix obtained by deleting the ith row of \(U_m-\lambda _i(U_m)I_k\). Since the latter matrix is singular, and \({\tilde{U}}_m-\lambda _i(U_m)I_{k-1}\) is invertible, it follows that the ith row of \(U_m-\lambda _i(U_m)I_k\) lies in the row space of \({\bar{U}}_m\). In other words, the row spaces of \(U_m-\lambda _i(U_m)I_k\) and \({\bar{U}}_m\) are the same, and so do their null spaces. Thus, (5.38) is equivalent to

To see the above, observe that the ith column of \({\bar{U}}_m\) is \({\tilde{u}}_m\), and hence we can partition

where \({\bar{U}}_{m1}\) and \({\bar{U}}_{m2}\) are of order \((k-1)\times (i-1)\) and \((k-1)\times (k-i)\), respectively. Furthermore,

Therefore,

Hence, (5.38) follows, which proves (5.37).

Next, we note

\(C_4\) and \(C_5\) being as in (5.34) and (5.35), respectively. Recalling that the above calculation was done on an event of high probability, what we have proven, with the help of (5.29) and (5.33), is that

Furthermore, (5.32) and (5.36) imply that

Finally, noting that

and that

it follows that

Recalling (5.27) and (5.28), (5.26) follows, which completes the proof in conjunction with (5.25). \(\square \)

Now, we are in a position to prove Theorem 2.2.

Proof of Theorem 2.2

Recalling that

it suffices to show that

Lemma 5.8 implies that

a consequence of which, combined with Lemma 5.3, is that

Thus,

Lemma 5.3 implying the second line, the third line following from (5.40) and the fact that

which is also a consequence of the former lemma, being used for the last line. Using Lemma 5.8 once again, we get that

Let

Clearly,

and (5.44) implies that for \(\delta >0\) there exists \(\eta >1\) with

Lemma 5.3 implies that

Next, (5.41) and that \(|\mu |\le N^2\) a.s. imply that

Thus,

and hence

This, in view of (5.44), implies that

the second line following from (5.43). This establishes (5.39) with the help of (5.42), and hence the proof. \(\square \)

5.3 Proof of Theorem 2.3

Theorems 2.1 and 2.2 establish Theorem 2.3 with the help of (2.10).

5.4 Proof of Theorem 2.4

Now we shall proceed toward proving Theorem 2.4. For the rest of this section, that is, this subsection and the subsequent two, Assumption F2. holds. In other words, \(r_1,\ldots ,r_k\) are assumed to be Lipschitz continuous and hence so is f.

The following lemma essentially proves Theorem 2.4.

Lemma 5.9

Under Assumptions E1 and F2.,

Proof

Lemma 5.5 implies that

Equation (5.43) implies that

From (5.22), it follows that

and hence

Lemma 4.4, in particular (4.5) therein, implies that

and hence

This, in conjunction with (5.45), implies that

An immediate consequence of the above and (5.22) is that

Applying Fact 5.1 as in the proof of Lemma 5.2, it can be shown that

Since \(r_i\) and \(r_j\) are Lipschitz functions, it holds that

Hence, it follows that

and similarly,

Combining these findings with (5.48) yields that

Equations (5.47) and (5.49) together imply that

Therefore,

This in conjunction with (5.46) completes the proof. \(\square \)

Theorem 2.4 is a simple corollary of the above lemma, as shown below.

Proof of Theorem 2.4

A consequence of Theorem 2.2 is that

The claim of Lemma 5.9 is equivalent to

The proof follows by adding the two equations, and noting that B is a deterministic matrix. \(\square \)

5.5 Proof of Theorem 2.5

Next we proceed towards the proof of Theorem 2.5, for which the following lemma will be useful.

Lemma 5.10

Under Assumptions E1 and F2, as \(N\rightarrow \infty \),

Proof

For a fixed \(n=1,2,\) expand

The proof can be completed by proceeding along similar lines as in the proof of Lemma 5.9. \(\square \)

Now we are in a position to prove Theorem 2.5.

Proof of Theorem 2.5

Theorem 2.1 implies that (2.11) holds for any \(i\in {\mathcal {I}}\). Fix such an i, denote

and let v be the eigenvector of A, having norm 1, corresponding to \(\mu \), which is uniquely defined with probability close to 1.

Fix \(k\ge 2\), and \(j\in \{1,\ldots ,k\}\setminus \{i\}\). Premultiplying (5.7) by \(e_j^\prime \) yields that

Therefore,

Lemma 5.10 implies that as \(N\rightarrow \infty \),

Therefore,

the last line being another consequence of Lemma 5.10. Thus, (2.13) holds.

Using (5.7) once again, we get that

that is,

Using Lemma 5.10 once again, it follows that

Thus, (2.12) would follow once it’s shown that

and that for all \((l,m)\in \{1,\ldots ,k\}^2\setminus \{(i,i)\}\),

Equation (5.53) is a trivial consequence of (5.50). For (5.54), assuming without loss of generality that \(l\ne i\), (2.13) implies that

the last line following from Lemma 5.10. Thus, (5.54) follows, which in conjunction with (5.53) establishes (2.12). This completes the proof. \(\square \)

5.6 Proof of Theorem 2.6

Finally, Theorem 2.6 is proved below, based on Assumptions E2. and F2.

Proof of Theorem 2.6

Fix \(i\in {\mathcal {I}}\). Recall (5.8) and (5.9), and let u be as defined in the former. Let \({\tilde{u}}\) be the column vector obtained by deleting the ith entry of u, \({\tilde{V}}_i\) be the column vector obtained by deleting the ith entry of the ith column of V, and \({\tilde{V}}\) be the \((k-1)\times (k-1)\) matrix obtained by deleting the ith row and ith column of V. Then, (5.9) implies that

Lemma 5.1 implies that

and hence \(I_{k-1}-\mu ^{-1}{\tilde{V}}\) is non-singular w.h.p. Thus, (5.55) implies that

The next step is to show that

To see this, use the fact that f is Lipschitz to write for a fixed \(1\le j,l\le k\),

the last line following from the fact that

which is a consequence of (2.5). This along with (5.50) implies that

Combining this with (5.58) yields that

Thus, (5.57) follows, an immediate consequence of which is that

where

Next, fix \(j\in \{1,\ldots ,k\}\setminus \{i\}\). By similar arguments as above, it follows that

using (5.59) once again, because

and

by (4.5). Thus,

the second line following from Lemma 4.3, and the last line from (5.59), (5.60) and Lemma 4.2. In particular,

The above in conjunction with (5.61) implies that

In light of (5.56), the above means that

the last line following from (2.12) and (5.59). Using (5.60) once again yields that

This completes the proof. \(\square \)

Change history

01 April 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10955-024-03258-z

References

Alt, J., Ducatez, R., Knowles, A.: Delocalization transition for critical Erdős–Rényi graphs. ArXiv:2005.14180 (2020)

Baik, J., Silverstein, J.W.: Eigenvalues of large sample covariance matrices of spiked population models. J. Multivar. Anal. 97(6), 1382–1408 (2006). https://doi.org/10.1016/j.jmva.2005.08.003

Baik, J., Ben Arous, G., Péché, S.: Phase transition of the largest eigenvalue for nonnull complex sample covariance matrices. Ann. Probab. 33(5), 1643–1697 (2005). https://doi.org/10.1214/009117905000000233

Benaych-Georges, F., Nadakuditi, R.R.: The eigenvalues and eigenvectors of finite, low rank perturbations of large random matrices. Adv. Math. 227(1), 494–521 (2011). https://doi.org/10.1016/j.aim.2011.02.007

Benaych-Georges, F., Guionnet, A., Maida, M.: Fluctuations of the extreme eigenvalues of finite rank deformations of random matrices. Electron. J. Probab. 16, 1621–1662 (2011)

Benaych-Georges, F., Bordenave, C., Knowles, A.: Largest eigenvalues of sparse inhomogeneous Erdős–Rényi graphs. Ann. Probab. 47(3), 1653–1676 (2019)

Benaych-Georges, F., Bordenave, C., Knowles, A.: Spectral radii of sparse random matrices. Ann. Inst. H. Poincaré Probab. Statist. 56(3), 2141–2161 (2020). https://doi.org/10.1214/19-AIHP1033

Bhamidi, S., Van Der Hofstad, R., van Leeuwaarden, J., et al.: Scaling limits for critical inhomogeneous random graphs with finite third moments. Electron. J. Probab. 15, 1682–1702 (2010)

Bollobás, B., Janson, S., Riordan, O.: The phase transition in inhomogeneous random graphs. Random Struct. Algorithms 31(1), 3–122 (2007)

Capitaine, M., Péché, S.: Fluctuations at the edges of the spectrum of the full rank deformed GUE. Probab. Theory Relat. Fields 165(1–2), 117–161 (2016). https://doi.org/10.1007/s00440-015-0628-6

Capitaine, M., Donati-Martin, C., Féral, D., et al.: The largest eigenvalues of finite rank deformation of large Wigner matrices: convergence and nonuniversality of the fluctuations. Ann. Probab. 37(1), 1–47 (2009)

Capitaine, M., Donati-Martin, C., Féral, D.: Central limit theorems for eigenvalues of deformations of Wigner matrices. Ann. Probab. Stat. 48(1), 107–133 (2012)

Chakrabarty, A., Hazra, R.S., den Hollander, F., Sfragara, M.: Spectra of adjacency and Laplacian matrices of inhomogeneous Erdős–Rényi random graphs. To appear in Random Matrices: Theory and Applications (2019). https://doi.org/10.1142/S201032632150009X

Chapon, F., Couillet, R., Hachem, W., Mestre, X.: The outliers among the singular values of large rectangular random matrices with additive fixed rank deformation. Markov Process. Relat. Fields 20(2), 183–228 (2014)

Couillet, R., Hachem, W.: Fluctuations of spiked random matrix models and failure diagnosis in sensor networks. IEEE Trans. Inform. Theory 59(1), 509–525 (2013). https://doi.org/10.1109/TIT.2012.2218572

Ding, X., Jiang, T., et al.: Spectral distributions of adjacency and Laplacian matrices of random graphs. Ann. Appl. Probab. 20(6), 2086–2117 (2010)

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Spectral statistics of Erdős–Rényi graphs II: eigenvalue spacing and the extreme eigenvalues. Commun. Math. Phys. 314(3), 587–640 (2012). https://doi.org/10.1007/s00220-012-1527-7

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Spectral statistics of Erdős–Rényi graphs I: local semicircle law. Ann. Probab. 41(3B), 2279–2375 (2013). https://doi.org/10.1214/11-AOP734

Féral, D., Péché, S.: The largest eigenvalue of rank one deformation of large Wigner matrices. Commun. Math. Phys. 272(1), 185–228 (2007). https://doi.org/10.1007/s00220-007-0209-3

Füredi, Z., Komlós, J.: The eigenvalues of random symmetric matrices. Combinatorica 1(3), 233–241 (1981). https://doi.org/10.1007/BF02579329

Hoeffding, W.: Probability inequalities for sums of bounded random variables. J. Am. Stat. Assoc. 58(301), 13–30 (1963)

Huang, J., Landon, B., Yau, H.-T.: Transition from Tracy–Widom to Gaussian fluctuations of extremal eigenvalues of sparse Erdős—Rényi graphs. Ann. Probab. 48(2), 916–962 (2020). https://doi.org/10.1214/19-AOP1378

Johansson, K.: From Gumbel to Tracy–Widom. Probab. Theory Relat. Fields 138(1–2), 75–112 (2007). https://doi.org/10.1007/s00440-006-0012-7

Knowles, A., Yin, J.: The isotropic semicircle law and deformation of Wigner matrices. Commun. Pure Appl. Math. 66(11), 1663–1750 (2013). https://doi.org/10.1002/cpa.21450

Knowles, A., Yin, J.: The outliers of a deformed Wigner matrix. Ann. Probab. 42(5), 1980–2031 (2014). https://doi.org/10.1214/13-AOP855

Lee, J.O., Schnelli, K.: Extremal eigenvalues and eigenvectors of deformed Wigner matrices. Probab. Theory Relat. Fields 164(1–2), 165–241 (2016). https://doi.org/10.1007/s00440-014-0610-8

Lee, J.O., Schnelli, K.: Local law and Tracy–Widom limit for sparse random matrices. Probab. Theory Relat. Fields 171(1–2), 543–616 (2018)

Ninio, F.: A simple proof of the Perron–Frobenius theorem for positive symmetric matrices. J. Phys. A Math. Gen. 9(8), 1281 (1976)

Péché, S.: Deformed ensembles of random matrices. In: Proceedings of the International Congress of Mathematicians—Seoul 2014, vol. III. pp. 1159–1174. Kyung Moon SA, Seoul (2014).

Tiomoko Ali, H., Couillet, R.: Improved spectral community detection in large heterogeneous networks. J. Mach. Learn. Res. 18, Paper No. 225, 49 (2017)

Tran, L.V., Vu, V.H., Wang, K.: Sparse random graphs: eigenvalues and eigenvectors. Random Struct. Algorithms 42(1), 110–134 (2013). https://doi.org/10.1002/rsa.20406

van der Hofstad, R.: Critical behavior in inhomogeneous random graphs. Random Struct. Algorithms 42(4), 480–508 (2013)

Varga, R.S.: Geršgorin and His Circles. Springer Series in Computational Mathematics, vol. 36. Springer, Berlin (2004). https://doi.org/10.1007/978-3-642-17798-9

Vu, V.H.: Spectral norm of random matrices. Combinatorica 27(6), 721–736 (2007). https://doi.org/10.1007/s00493-007-2190-z

Zhu, Y.: Graphon approach to limiting spectral distributions of Wigner-type matrices. arXiv.1806.11246 (2018)

Acknowledgements

The authors thank an anonymous referee for insightful comments that helped improve the paper significantly. RSH thanks Kavita Ramanan for a fruitful discussion. The research of AC and RSH was supported by the MATRICS grant of SERB. SC thanks Matteo Sfragara for helpful discussions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Abhishek Dhar.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Lemma 6.1

The eigenfunctions \(\{r_i:1\le i\le k\}\) of the operator \(I_f\) are Riemann integrable.

Proof

Let \(D_f\subset [0,1]\times [0,1]\) be the set of discontinuity points f. Since f is Riemann integrable, the Lebesgue measure of \(D_f\) is 0. Let

If \(\lambda \) is the one dimensional Lebesgue measure, then Fubini’s theorem implies that

has full measure. Fix an \(x\in E\) and consider \(x_n\rightarrow x\) and observe that

Fix \(1\le i\le k\) and let \(\theta _i\) be the eigenvalue with corresponding eigenfunction \(r_i\), that is,

Using f is bounded and \(r\in L^2[0,1]\), dominated convergence theorem implies

and hence r is continuous at x. So the discontinuity points of \(r_i\) form a subset of \(E^c\) which has Lebesgue measure 0. Further, (6.1) shows that \(r_i\) is bounded and hence Riemann integrability follows. \(\square \)

The following result is a version of the Perron–Frobenius theorem in the infinite dimensional setting (also known as the Krein–Rutman theorem). Since our integral operator is positive, self-adjoint and finite dimensional so the proof in this setting is much simpler and can be derived following the work of [28]. In what follows, we use for \(f, g\in L^2[0,1]\), the inner product

Lemma 6.2

Suppose \(f>0\) a.e. on \([0,1]\times [0,1]\). Then largest eigenvalue \(\theta _1\) of \(T_f\) is positive and the corresponding eigenfunction \(r_1\) can be chosen such that \(r_1(x)>0\) for almost every \(x\in [0,1]\). Further, \(\theta _1>\theta _2\).

Proof

First observe that

where \(u_1(x)=|r_1|(x)\) and the last inequality follows from the Rayleigh-Ritz formulation of the largest eigenvalue. Hence note that the string of inequalities is actually an equality, that is,

Breaking \(r_1= r_1^{+}-r_1^{-}\) implies either \(r_1^+=0\) or \(r_1^{-}=0\) almost everywhere. Without loss of generality assume that \(r_1\ge 0\) almost everywhere. Using

Note that if \(r_1(x)\) is zero for some x then due to the positivity assumption on f, \(r_1(y)=0\) for almost every \(y\in [0,1]\) which is a contradiction. Hence we have that \(r_1(x)>0\) almost every \(x\in [0, 1]\).

For the final claim, without loss of generality assume that \(\int _0^1 r_1(x)\, dx\ge 0\). If \(\theta _1=\theta _2\), then the previous argument would give us \(r_2(x)>0\) and this will contradict the orthogonality of \(r_1\) and \(r_2\). \(\square \)

Lemmas 4.1–4.4 are proved in the rest of this section. Therefore, the notations used here should refer to those in Sect. 4 and should not be confused with those in Sect. 5. For example, \(e_1\) and \(e_2\) are as in Lemma 4.2.

Proof of Lemma 4.1

Note that for any even integer k

Using \(\mathrm{E}(W(i,j)^2)\le \varepsilon M\) and condition (1.2) it is immediate that conditions of Theorem 1.4 of [34] are satisfied. We shall use the following estimate from the proof of that result. It follows from [34, Sect. 4]

where \(K_1\) is some positive constant and there exists a constant \(a>0\) such that k can be chosen as

Using (6.2), (6.3) and \((1-x)^k \le e^{-kx}\) for k, \(x > 0\),

Now plugging in the value of k in the bound (6.4) and using

we have

for some constant \(C_2>0\) and N large enough. This proves (4.1) and hence the lemma.

\(\square \)

Proof of Lemma 4.2

Let A be the event where Lemma 4.1 holds, that is, \(\Vert W\Vert \le C \sqrt{N\varepsilon }\) for some constant C. Since the entries of \(e_1\) and \(e_2\) are in \([-1/\sqrt{N}, 1/\sqrt{N}]\) so \(\Vert e_i\Vert \le 1\) for \(i=1,2\). Hence on the high probability event it holds that

We show that the above expectation on the low probability event \(A^c\) is negligible. For that first observe

for some constant \(0<C^\prime <\infty \). Thus using Lemma 4.1 one has

Since \(n\le \log N\) and \(\xi >8\) the result follows. \(\square \)

Proof of Lemma 4.3

The proof is similar to the proof of Lemma 6.5 of [18]. The exponent in the exponential decay is crucial, so the proof is briefly sketched. Observe that

To use the independence, one can split the matrix W as \(W^{\prime } + W^{{\prime \prime }}\) where the upper triangular matrix \(W^{\prime }\) has entries \(W^{\prime }(i,j) = W(i,j) \mathbf{1} (i\leqslant j)\) and the lower triangular matrix \(W^{{\prime \prime }}\) with entries \(W^{{\prime \prime }}(i,j) = W(i,j) \mathbf{1} (i > j)\). Therefore the above quantity under the sum breaks into \(2^n\) terms each having similar properties. Denote one such term as

Using the fact that each entry of \(e_1\) and \(e_2\) are bounded by \(1/\sqrt{N}\), it follows by imitating the proof of Lemma 6.5 of [18] that

where p is an even integer and C is a positive constant, independent of n and p. Rest of the \(2^n-1\) terms arising in (6.5) have the same bound and hence

Choose \(\eta \in (1,\, \xi /4)\) and consider

(with N large enough to make p an even integer) to get

Note that \(n\le L\), ensures that \(p>1\). Since the bound is uniform over all \(2\le n\le L\), the first bound (4.2) follows.

For (4.3) one can use Hoeffding’s inequality [21, Theorem 2] as follows.

Define

Since A(k, l) are Bernoulli random variables, so one has \(\{{\widetilde{A}}(k,l): 1\le k\le l\le N\}\) are independent random variables taking values in \([-1/N, 1/N]\) and hence by Hoeffding’s inequality we have, for any \(\delta >0\),

Dealing with the case \(k>l\) similarly, the desired bound on\(e_1^\prime We_2\) follows. \(\square \)

Proof of Lemma 4.4

Follows by a simple moment calculation. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chakrabarty, A., Chakraborty, S. & Hazra, R.S. Eigenvalues Outside the Bulk of Inhomogeneous Erdős–Rényi Random Graphs. J Stat Phys 181, 1746–1780 (2020). https://doi.org/10.1007/s10955-020-02644-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10955-020-02644-7

Keywords

- Adjacency matrices

- Inhomogeneous Erdős–Rényi random graph

- Largest eigenvalue

- Scaling limit

- Stochastic block model