Abstract

A strongly orthogonal decomposition of a tensor is a rank one tensor decomposition with the two component vectors in each mode of any two rank one tensors are either colinear or orthogonal. A strongly orthogonal decomposition with few number of rank one tensors is favorable in applications, which can be represented by a matrix-tensor multiplication with orthogonal factor matrices and a sparse tensor; and such a decomposition with the minimum number of rank one tensors is a strongly orthogonal rank decomposition. Any tensor has a strongly orthogonal rank decomposition. In this article, computing a strongly orthogonal rank decomposition is equivalently reformulated as solving an optimization problem. Different from the ill-posedness of the usual optimization reformulation for the tensor rank decomposition problem, the optimization reformulation of the strongly orthogonal rank decomposition of a tensor is well-posed. Each feasible solution of the optimization problem gives a strongly orthogonal decomposition of the tensor; and a global optimizer gives a strongly orthogonal rank decomposition, which is however difficult to compute. An inexact augmented Lagrangian method is proposed to solve the optimization problem. The augmented Lagrangian subproblem is solved by a proximal alternating minimization method, with the advantage that each subproblem has a closed formula solution and the factor matrices are kept orthogonal during the iteration. Thus, the algorithm always can return a feasible solution and thus a strongly orthogonal decomposition for any given tensor. Global convergence of this algorithm to a critical point is established without any further assumption. Extensive numerical experiments are conducted, and show that the proposed algorithm is quite promising in both efficiency and accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tensors (a.k.a. hypermatrices) are natural generalizations of vectors and matrices, serving as much more apparent and convenient tools to encode, analyze, as well as represent data and information in diverse disciplines of applied science. As one of the two sides of a coin, opposite to their capability over matrices, computations with tensors are subject to the curse of dimensionality [29, 32]. A vector can be represented as an array indexed by one subscript, a matrix by two, whereas a higher order tensor by several. Usually, we refer to order as the number of subscripts needed to express a tensor, each subscript represents one mode, whereas the range of each subscript is the dimension in that mode. As is well-known, almost all computations of tensors have complexity exponential with respect to their orders and dimensions [29]. This brings a heavy obstacle to warrant tensors in many real applications. This tough situation dramatically improves if the tensor has a rank one decomposition with few rank one components, since in which case the computational complexity becomes linear with respect to either the order, the dimensions, or the rank of this tensor. Therefore, there is a natural invitation to the study of tensor rank one decompositions. As a result, the focus of research on tensors has been putting on both theoretical and computational properties of tensor rank one decompositions. This article falls into this main stream as well, and will be devoted to a particular rank one decomposition of tensors—the strongly orthogonal (rank) decomposition.

The conception of strongly orthogonal rank and the corresponding decomposition were proposed in the thesis of Franc in 1992 [17]. It is a natural generalization of SVD for matrices from a restricted perspective (cf. Sect. 2.4). In [33], “free orthogonal rank” and “free rank decomposition” were employed instead of “strong orthogonal rank” and “strong orthogonal rank decomposition” respectively by Leibovici and Sabatier. We will follow the terminologies of [17, 27]. The number of rank one tensors in a strongly orthogonal decomposition is referred as the length of this decomposition.

In the following, we want outline three aspects that motivate this work. The first one is the wide range applications of strongly orthogonal (rank) decompositions of tensors, please refer to [3, 12, 13, 27, 29, 30, 33] and references therein. Particularly, in statistical modelings for learning latent variables [3] and blind source separations [12, 13], orthogonality is a reasonable requirement. Unlike the second order case in which a complete orthogonality (a.k.a. diagonalization) can be assumed (guaranteed by SVD of matrices, cf. Sect. 2.4), complete orthogonality for higher order tensors is impossible in general [27, 32, 41]. Therefore, strong orthogonality becomes an appropriate tradeoff between orthogonality and diagonality—it preserves the orthogonality and seeks the most sparsity on the representation. As a result, strongly orthogonal decompositions as well as general orthogonal decompositions have being adopted in diverse applications [6, 10, 13, 14, 24, 28, 29, 36].

The second one is the appealing theoretical and computational benefits by considering strong orthogonality over the general rank one decompositions. The most general tensor rank one decomposition is decomposing a tensor with rank one components without further requirements on the structures of these rank one tensors. The smallest number of rank one tensors in such a decomposition is the rank of that tensor. It is well-known that determining the rank, computing a rank decomposition of a tensor is very difficulty [32]. From the computational complexity perspective, they are respectively NP-complete and NP-hard problems [15, 19]. Given the freedom on the structures of the decomposed rank one tensors, the tensor rank one decomposition and approximation problems suffer from many numerical difficulties as well, such as the component matrices of the iteration become unbounded, etc, please see [15, 29] and references therein. However, whenever strong orthogonality is imposed on the decomposed rank one tensors, both the decomposition and the approximation become well-possed (cf. Sect. 2.3). Thus, from the computational point of view, the strongly orthogonal decomposition is a good candidate for tensor decomposition.

The strongly orthogonal (rank) decomposition has a geometric meaning as well. One widely admitted rule of science is simplification. A tensor (hypermatrix) is actually the coordinate representation of an element in a certain tensor space under a chosen coordinate system. A representation with simple coordinates should be the goal in many tasks. SVD for matrices and principal component analysis are both ruled by this principle, and it can represent a matrix with the simplest coordinates (i.e., a diagonal matrix). With the matrix-tensor multiplication, it is easy to see that a strongly orthogonal rank decomposition of a tensor also falls into this principle (cf. Proposition 2.1). Thus, geometrically, computing a strongly orthogonal rank decomposition is equivalent to finding a coordinate system such that the representation of this tensor is the simplest in the sense of having the fewest nonzero coordinates. This concise mathematical interpretation will provide insights on investigations of strongly orthogonal rank decompositions.

The last but not the least is a direct motivation for this article—computing out a strongly orthogonal (rank) decomposition for a given tensor. There lacks a systematic study on this issue in the literature, since the works [17, 27, 33]. To compute a strongly orthogonal decomposition for a given tensor, in [27], a greedy tensor decomposition is proposed. In each step of this algorithm, a best rank one approximation problem subject to orthogonality constraints must be solved. As observed already by Kolda [27], solving such a sequence of optimization problems is a very challenging task. Moreover, it is pointed out that the difficulty with this approach is in enforcing the constraints [27, Page 252]. A purpose of this article is providing a numerical method enforcing the orthogonality constraints during the iteration and computing a strongly orthogonal decomposition with the rank one tensors all at once.

At present, there has no computational complexity of computing a strongly orthogonal rank decomposition for a given tensor [27,28,29]. However, it is suspected to be NP-hard, in viewing of the NP-hardness of some related problems [11, 20, 34]. Thus, it would be difficult and impossible (if this problem is indeed NP-hard and P\(\ne \)NP) to compute out a strongly orthogonal rank decomposition of a general tensor in polynomial time. Therefore, the primary purpose of this article is presenting a heuristic approach for computing a strongly orthogonal decomposition of a given tensor with length as short as possible and a numerical method to realize it. Hopefully, this heuristic approach could give a strongly orthogonal rank decomposition in several cases as well.

The main contributions of this article are

- (1)

With \(l_0\)-norm and matrix-tensor multiplication, the strongly orthogonal rank decomposition is reformulated as an optimization problem over the matrix manifolds (cf. (9)) the first time (Proposition 2.1). This should serve as a standard tool and accumulate a step towards a systematic way for analyzing strongly orthogonal rank decompositions.

- (2)

A heuristic optimization problem (cf. (12)) is proposed to approximate the hard problem (9) which has a discontinuous objective function. An inexact augmented Lagrangian method is proposed to solve (12), i.e., Algorithm 3.1. With a careful design, all the subproblems have closed formula solutions, all the iterations fulfill the orthogonality constraints, and thus this algorithm always give a strongly orthogonal decomposition (Algorithm 4.1). Global convergence to a critical point of (12) is established without any further hypothesis (Proposition 3.3). Extensive numerical experiments show that this approach is quite promising (Sect. 5), and in several cases strongly orthogonal rank decompositions can be found.

The equivalent reformulation (9) actually reflects the difficulty in the sense that the task is minimizing a discontinuous (letting alone differentiable) function over a system of a large number of highly nonlinear equations and a manifold constraint. Note that even the optimization of a differentiable function over a smooth manifold is a hard computational problem in general [2]. Therefore, there is no guarantee that an algorithm can always return a global optimizer for (9). Actually, advances on manifold optimization are more focused on smooth functions for finding critical points, see [2, 25] and references therein; a call for study on nonsmooth manifold optimization is given by Absil and Hosseini very recently [1]. This gives a motivation for the particularly designed inexact augmented Lagrangian method to problem (12).

With the discontinuous objective function in (9), a common strategy is replacing it with a heuristic continuous surrogate [1, 16]. The \(l_1\)-norm is applied in this article (cf. (12)). It is a reasonable choice, which can be viewed as a penalty approach (cf. Sect. 2.5). Although (12) belongs to the class of optimization problems with continuous objective function over a compact feasible set, there is no theoretical guarantee for a numerical optimization method on finding their global optimizers for such problems [2, 7], since the constraints are highly nonlinear and nonconvex. Usually, a wisely designed optimization method could converge to a critical point [2, 7].

The \(l_1\)-norm is a nonsmooth function. The nonsmoothness of (12) and the particular structure that the variables of the manifold constraints are not related directly to the objective function (cf. (12)) motivate us to a particularly designed algorithm. A general thought is employing the penalty technique. The augmented Lagrangian method is a modification of the penalty method in a wise way to avoid the penalty parameter being forced to going infinite. Thus, the augmented Lagrangian method has more stable numerical performance over the penalty method [7]. The general picture in this article is applying the augmented Lagrangian method to (12) and keeping the orthogonality of the iterations with a careful design. On the other hand, the “inexact version” is studied as (i) subproblems cannot always be guaranteed to be solved exactly and (ii) the subproblem can be solved only inexactly within appropriate required precision to improve the overall efficiency.

The rest paper is organized as follows. For the convenience of reading, several technical details are put in Appendixes. Preliminaries on matrix-tensor multiplication (Sect. 2.1), the orthogonal group and related optimization properties (Sect. 2.2), strongly orthogonal decompositions of a tensor (Sect. 2.3), some optimization techniques (Sect. 2.6) will be given in Sect. 2. In Sect. 2.5, the problem of computing a strongly orthogonal rank decomposition of a tensor is equivalently reformulated as a nonlinear optimization problem with orthogonality constraints and \(l_0\)-norm objective function. Replacing the \(l_0\)-norm by the \(l_1\)-norm surrogate, we get a heuristic of the problem, i.e., (12). In Sect. 3, an inexact augmented Lagrangian method (ALM) is proposed to solve this optimization problem. Critical points of the augmented Lagrangian function (Sect. 3.1), KKT points of the problem (12) (Sect. 3.2) are investigated. In order to study the KKT points, a nonsmooth version of the standard Lagrange multiplier theory is reviewed in Appendix B, since the \(l_1\)-norm is a nonsmooth function. Global convergence of the algorithm is established without any further hypothesis in Sect. 3.3, whose proof is put in Appendix C. The augmented Lagrangian subproblem is discussed in Sect. 4. Section 4.1 presents more details on implementing the ALM algorithm. The augmented Lagrangian subproblem is solved by a proximal alternating minimization (PAM) method, whose global convergence is also established without any further hypothesis in Sect. 4.2 and the proof is put in Appendix D. To this end, the convergence theory for a general PAM method is reviewed in Appendix A. Extensive numerical experiments are reported in Sect. 5. Sect. 5.1 is for concrete examples taken from literatures, Sect. 5.2 is for completely orthogonal decomposable tensors, in which a kind of condition numbers for tensors are discussed, Sect. 5.3 is for random examples, whereas Sect. 5.4 draws some conclusions for the numerical computations. Some final conclusions are given in Sect. 6.

2 Preliminaries

In this section, we will review some basic notions on tensors and preliminaries on strongly orthogonal (rank) decompositions of a tensor, as well as those of optimization theory which will be conducted in this article.

Given a positive integer \(k\ge 2\), and positive integers \(n_1,\dots ,n_k\), the tensor space consisting of real tensors of dimension \(n_1\times \dots \times n_k\) is denoted as \(\mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\). In this Euclidean space, inner product and the induced norm can be defined. The Hilbert–Schmidt inner product of two given tensors \(\mathcal {A}, \mathcal {B}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) is defined as

The Hilbert–Schmidt norm \(\Vert \mathcal {A}\Vert \) is then defined as

2.1 Matrix-tensor multiplication

Given a tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) and k matrices \(B^{(i)}\in \mathbb {R}^{n_i\times n_i}\) for \(i\in \{1,\dots ,k\}\), the matrix-tensor multiplication\((B^{(1)},\dots ,B^{(k)})\cdot \mathcal {A}\) results in a tensor in \(\mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), defined entry-wisely as

for all \(i_t\in \{1,\dots ,n_t\}\) and \(t\in \{1,\dots ,k\}\).

Let \(n^*:=\prod _{i=1}^kn_i\). The mode-jmatrix flattening of \(\mathcal {A}\) is a matrix \(A^{(j)}\in \mathbb {R}^{n_j\times \frac{n^*}{n_j}}\) defined entry-wisely as

with

We will denote by \(\mathcal {A}^{({\text {f}},i)}=A^{(i)}\) the mode-i matrix flattening of \(\mathcal {A}\). Let I be the identity matrix of appropriate size. It follows that

for all \(i\in \{1,\dots ,k\}\).

It follows from the Hilbert–Schmidt inner product that

for any pair \(\mathcal {A},\mathcal {B}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) and any \(i\in \{1,\dots ,k\}\).

Let \(\mathbb {O}(n)\subset \mathbb {R}^{n\times n}\) be the group of \(n\times n\) orthogonal matrices. Whenever \(B^{(i)}\in \mathbb {O}(n_i)\) for each \(i\in \{1,\dots ,k\}\), it is a direct calculation to see that

2.2 Orthogonal group and its normal cone

For any \(A\in \mathbb {O}(n)\), the Fréchet normal cone to \(\mathbb {O}(n)\) at A is defined as

Usually, we set \(\hat{N}_{\mathbb {O}(n)}(A)=\emptyset \) whenever \(A\notin \mathbb {O}(n)\). The (limiting) normal cone to \(\mathbb {O}(n)\) at \(A\in \mathbb {O}(n)\) is denoted by \(N_{\mathbb {O}(n)}(A)\) and is defined as

It is easily seen from the definition that the normal cone \(N_{\mathbb {O}(n)}(A)\) is always closed. The indicator function \(\delta _{\mathbb {O}(n)}\) of \(\mathbb {O}(n)\) is defined as

Given a function \(f : \mathbb {R}^n\rightarrow \mathbb {R}\cup \{\infty \}\), the regular subdifferential of f at \(\mathbf {x}\in \mathbb {R}^n\) is defined as

and the (limiting) subdifferential of f at \(\mathbf {x}\) is defined as

We refer to [40] for more details on variational analysis concepts. An important fact about normal cone \(N_{\mathbb {O}(n)}(A)\) and the subdifferential of the indicator function \(\delta _{\mathbb {O}(n)}\) of \(\mathbb {O}(n)\) at A is (cf. [40])

Note that the group \(\mathbb {O}(n)\) of orthogonal matrices of size \(n\times n\) is a smooth manifold of dimension \(\frac{n(n-1)}{2}\). It follows from [40, Chapter 6.C] that

where \({\text {S}}^{n\times n}\subset \mathbb {R}^{n\times n}\) is the subspace of \(n\times n\) symmetric matrices.

Given a matrix \(B\in \mathbb {R}^{n\times n}\), the projection of B onto the normal cone of \(\mathbb {O}(n)\) at A is

Therefore,

since

and

is an invertible matrix. The invertibility follows from the fact that \(\mathbf {x}^\mathsf {T}(I-\frac{1}{2}AA^\mathsf {T})\mathbf {x}=\frac{1}{2}\Vert \mathbf {x}\Vert ^2\).

2.3 Strongly orthogonal decomposition

Two rank one tensors

with unit component vectors \(\mathbf {u}^{(i)}\)’s and \(\mathbf {v}^{(i)}\)’s are strongly orthogonal, denoted as \(\mathcal {U}\perp _{\text {s}} \mathcal {V}\), if \(\mathcal {U}\perp \mathcal {V}\), i.e.,

and

Given a tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), a strongly orthogonal decomposition means a rank one decomposition of \(\mathcal {A}\)

such that the set of rank one tensors \(\{\mathbf {u}^{(1)}_i\otimes \dots \otimes \mathbf {u}^{(k)}_i\}_{i=1}^r\) is a set of mutually strongly orthogonal rank one tensors. For each given tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), there is a natural strongly orthogonal decomposition as

where \(\{\mathbf {e}^{(s)}_1,\dots ,\mathbf {e}^{(s)}_{n_s}\}\) is the standard basis of \(\mathbb {R}^{n_s}\) for all \(s\in \{1,\dots ,k\}\). It is immediate to see that this can be done for any given orthogonal basis of \(\mathbb {R}^{n_s}\) for all \(s\in \{1,\dots ,k\}\).

A strongly orthogonal decomposition of \(\mathcal {A}\) with the minimum r is called a strongly orthogonal rank decomposition of \(\mathcal {A}\) and the corresponding r is the strongly orthogonal rank of \(\mathcal {A}\), denoted as \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\) [27]. It is called free orthogonal rank by Leibovici and Sabatier [33]. Some properties on strongly orthogonal rank of a tensor are investigated in [22], including an upper bound of the strongly orthogonal ranks and expected strongly orthogonal rank for a given tensor space.

2.4 An SVD perspective of strongly orthogonal rank decomposition

Given a matrix \(A\in \mathbb {R}^{n_1\times n_2}\), the classical singular value decomposition (SVD) of A reads that there exist orthogonal matrices \(U\in \mathbb {O}(n_1)\), \(V\in \mathbb {O}(n_2)\) and a diagonal matrix \(\Lambda \in \mathbb {R}^{n_1\times n_2}\) such that (cf. [21])

The diagonality of the matrix \(\Lambda \) ensures that we can take a nonnegative diagonal of \(\Lambda \), and define these diagonals as singular values of the matrix A. Arrange these singular values in nonincreasing order along the diagonals. Suppose without loss of generality that \(n_1\le n_2\), and the columns of U and V are denoted as \(\mathbf {u}_i\)’s and \(\mathbf {v}_j\)’s. Then the SVD (6) can be expanded as

where \(r={\text {rank}}(A)\) is the rank of the matrix A. Obviously, (7) is a strongly orthogonal rank decomposition of A. The importance of SVD for matrices and its fundamental influence are widely known [18].

The beautiful nature of the matrix case makes many essential features of orthogonal decompositions simple and vague to picture their higher order counterparts, i.e., tensors (a.k.a. hypermatrices). There exist various attempts to generalize this fundamental singular value decomposition of a matrix to tensors. As the philosophy suggests, each generalization has its own advantages with the sacrifice of losing some attracting properties of their matrix counterpart [14].

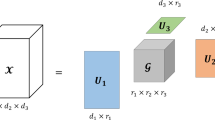

Perhaps the most important fact that people unwilling to see is the missing of diagonalization for a tensor. It is a commonly admitted fact that for a given tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) there cannot always exist a set of orthogonal matrices \(U_i\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and a diagonal tensor \(\Lambda \in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) (i.e., the only possible nonzero entries of \(\Lambda \) are \(\lambda _{i_1\dots i_k}\) with \(i_1=\dots =i_k=i\) for \(i\in \{1,\dots ,\min \{n_1,\dots , n_k\}\}\)) such that (cf. [32])

Therefore, searching a decomposition of the form (8) keeping the orthogonality of \(U_i\)’s and allowing possible a non-diagonal tensor \(\Lambda \) should be the main task in decomposing a tensor and a reasonable alternative orthogonal decomposition scheme for a tensor. Compared with the other decompositions of a tensor, the decomposition (8) has the advantage of interpolation with orthogonal coordinates change, parallelling to the discussion on geometry of vector spaces in the matrix case by Jordan [26].

2.5 Optimization reformulation for the strongly orthogonal rank decomposition

Given a tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), we consider the following optimization problem

where \(\Vert \mathcal {B}\Vert _0\) is the zero norm of \(\mathcal {B}\), i.e., counting the number of nonzero entries of \(\mathcal {B}\).

Proposition 2.1

(Optimization Reformulation) For any given tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), the minimization problem (9) has an optimizer \((\mathcal {B},U^{(1)},\dots ,U^{(k)})\) with optimal value being \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\), and a strongly orthogonal rank decomposition of \(\mathcal {A}\) is given by

where \(\mathbf {u}^{(s)}_i\) is the i-th row of \(U^{(s)}\) for all \(i\in \{1,\dots ,n_s\}\) and \(s\in \{1,\dots ,k\}\).

Proof

We first show that the optimization problem (9) always has an optimizer. Note that each \(\mathbb {O}(n_i)\) is a compact set. By the matrix-tensor multiplication, we have that

Thus, the feasible set of (9) is compact. Since the zero norm is lower semi-continuous, we conclude that the minimum in (9) is always attainable by an optimizer \((\mathcal {B},U^{(1)},\dots ,U^{(k)})\).

In the following, we assume that \((\mathcal {B},U^{(1)},\dots ,U^{(k)})\) is such an optimizer. It follows from the matrix-tensor multiplication that

where \(\mathbf {u}^{(s)}_i\) is the i-th row of \(U^{(s)}\) for all \(i\in \{1,\dots ,n_s\}\) and \(s\in \{1,\dots ,k\}\). Thus, each optimizer \((\mathcal {B},U^{(1)},\dots ,U^{(k)})\) of (9) gives a strongly orthogonal decomposition of \(\mathcal {A}\), and the number of strongly orthogonal rank one tensors in this decomposition is exactly the number of nonzero entries of \(\mathcal {B}\), i.e., \(\Vert \mathcal {B}\Vert _0\). Consequently, we must have \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\le \Vert \mathcal {B}\Vert _0\) and hence the optimal value of (9) is lower bounded by \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\).

In the following, we complete the proof by constructing a feasible solution of (9) from a strongly orthogonal rank decomposition of \(\mathcal {A}\). Suppose that (5) gives such a decomposition, i.e.,

By the definition of strong orthogonality, we have that each pair of the vectors \(\mathbf {u}^{(s)}_1,\dots ,\mathbf {u}^{(s)}_r\) consists of either orthogonal or equal or two opposite vectors, for all \(s\in \{1,\dots ,k\}\). Thus, without loss of generality (changing \(\lambda _i\) to \(-\lambda _i\) if necessary), we can assume that the set \(\{\mathbf {u}^{(s)}_1,\dots ,\mathbf {u}^{(s)}_r\}\) forms an orthnormal set of vectors for all \(s\in \{1,\dots ,k\}\). Let \(p_s\) be the cardinality of the set \(\{\mathbf {u}^{(s)}_1,\dots ,\mathbf {u}^{(s)}_r\}\) for all \(s\in \{1,\dots ,k\}\). Note that \(p_s\le \min \{r,n_s\}\) and strict inequality can happen for all \(s\in \{1,\dots ,k\}\). Let \(U^{(s)}\in \mathbb {O}(n_s)\) with the first \(p_s\) columns being these of \(\{\mathbf {u}^{(s)}_1,\dots ,\mathbf {u}^{(s)}_r\}\) for all \(s\in \{1,\dots ,k\}\), and tensor \(\mathcal {B}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) with entries

Immediately, we see that \(\Vert \mathcal {B}\Vert _0=r\) and

Therefore, the above constructed \((\mathcal {B}, U^{(1)},\dots ,U^{(k)})\) is a feasible solution to (9) with objective function value \(\Vert \mathcal {B}\Vert _0=r={\text {rank}}_{{\text {SO}}}(\mathcal {A})\). As a result, the optimal value of (9) is upper bounded by \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\).

Hence, an optimizer \((\mathcal {B}, U^{(1)},\dots ,U^{(k)})\) of (9) exists with \(\Vert \mathcal {B}\Vert _0={\text {rank}}_{{\text {SO}}}(\mathcal {A})\) and a strongly orthogonal rank decomposition of \(\mathcal {A}\) is given as (10) with this optimizer. The proof is then complete. \(\square \)

With Proposition 2.1, computing a strongly orthogonal rank decomposition of a given tensor \(\mathcal {A}\) can be done, if one can solve the optimization problem (9). Moreover, optimizers of (9) will give such decompositions. Therefore, we will concentrate on solving the problem (9) in the rest article.

The constraint is nonlinear in \(U^{(i)}\)’s and linear in \(\mathcal {B}\). However, the objective function is not even continuous. In order to resolve this, we would like to use a continuous sparsity measure \(\Vert \mathcal {B}\Vert _1\) (i.e., the absolute sum of all the entries of \(\mathcal {B}\)) as a surrogate for \(\Vert \mathcal {B}\Vert _0\).

The heuristic of employing \(l_1\)-norm \(\Vert \mathcal {B}\Vert _1\) for \(l_0\)-norm \(\Vert \mathcal {B}\Vert _0\) is quite popular and useful in compressive sensing as well as in convex mathematical optimization models [16]. Theoretical justification for this convex relaxation has been established in the literature. For our problem (9), (12) is still nonconvex, and hence it is hard to give a theoretical guarantee on exactness of the relaxation. However, this heuristic is quite reasonable: If \(\mathcal {B}\) is an optimizer of (9) such that \(b_{i_1\dots i_k}=0\) for \((i_1,\dots ,i_k)\in S\) with a subset \(S\subseteq \{1,\dots ,n_1\}\times \dots \times \{1,\dots ,n_k\}\), then the constraint \(b_{i_1\dots i_k}=0\) for \((i_1,\dots ,i_k)\in S\) can be added into (9). The objective function value is then constant and irrelevant to the optimization, and hence it can be removed. On the other hand, \(|b_{i_1\dots i_k}|\) is a nonsmooth penalty for the constraint \(b_{i_1\dots i_k}=0\). By adding \(\sum _{(i_1,\dots ,i_k)\in S}|b_{i_1\dots i_k}|\) into the objective function, we get a penalized problem with equal penalty weights. \(\Vert \mathcal {B}\Vert _1-\sum _{(i_1,\dots ,i_k)\in S}|b_{i_1\dots i_k}|\) can be added into the objective function as a regularizer for selecting an optimizer with the minimum absolute sum from the set of optimizers with \(b_{i_1\dots i_k}=0\) for \((i_1,\dots ,i_k)\in S\).

2.6 Optimization techniques

In this section, we give some basic optimization techniques that will be used in the sequel.

For a given \(\alpha \in \mathbb {R}\), \(\text {sign}(\alpha )\) is the sign of \(\alpha \), defined as

Given a vector \(\tilde{\mathbf {x}}\in \mathbb {R}^n\) and parameter \(\gamma >0\), the optimizer of the following optimization problem

is given by

It is known as the soft-thresholding, and \({\text {T}}_{\alpha }\) is the soft-thresholding operator for a given \(\alpha >0\).

Given a matrix \(A\in \mathbb {R}^{n\times n}\), an optimizer of the following optimization problem

is given by \(X^*=UV^\mathsf {T}\) [18], where \(U,V\in \mathbb {O}(n)\) are taken from the full singular value decomposition of A, i.e., \(U\Sigma V^\mathsf {T}=A\) for some nonnegative diagonal matrix \(\Sigma \in \mathbb {R}^{n\times n}\).

3 Augmented Lagrangian method

In this section, we apply the classical augmented Lagrangian method (ALM) for solving problem (12).

A standard reformulation of (12) by putting the orthogonality constraints into the objective function is

With Lagrangian multiplier \(\mathcal {X}\) and penalty parameter \(\rho \), the augmented Lagrangian function of problem (13) is (cf. [7])

For given matrices \(U^{(i)}_s\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and \(s=1,2,\dots \), let

For convenience, we define

Note that minimization problem (13) is an equality constrained nonlinear optimization problem with nonsmooth objective function. The classical inexact augmented Lagrangian method for solving (13) is

Algorithm 3.1

inexact ALM

Several problems should be addressed for Algorithm 3.1: (i) Step 1 is well-defined, i.e., there exists a solution for (15), (16) and (17); and (ii) efficient computation of such a solution as \(\epsilon _s\downarrow 0\). For a succinct representation, these algorithmic implementations will be discussed later. Convergence properties will be established first, assuming Algorithm 3.1 is well-defined.

To that end, critical points of the augmented Lagrangian function and KKT points of the original optimization problem (12) will be discussed.

3.1 Critical points

In the following, we study the critical points of the augmented Lagrangian. For a given multiplier \(\mathcal {X}\), we can split \(L_{\rho }(\mathbb {U},\mathcal {B};\mathcal {X})\) as

where

and

with

With the natural structure of the variables, we can partition the subdifferentials or gradients of the functions involved so far accordingly. The subdifferentials and gradients are kept aligned as the variables without vectorizing, e.g., the partial derivatives of \(Q(\mathbb {U},\mathcal {B})\) are collected in the same tensor format as \(\mathcal {B}\) and the block matrix structure of \(\mathbb {U}\). This notation can be easily understood from the linear operator perspective of subdifferentials and gradients. In particular,

and

where

Likewise,

We also have that

for all \(i_j\in \{1,\dots ,n_j\}\) and \(j\in \{1,\dots ,k\}\).

Given a lower semicontinuous function f, a critical point of f is a point \(\mathbf {x}\) such that \(0\in \partial f(\mathbf {x})\).

Proposition 3.2

(Critical Points) With notation as above, a point \((\mathbb {U},\mathcal {B})\in (\mathbb {R}^{n_1\times n_1}\times \dots \times \mathbb {R}^{n_k\times n_k})\times (\mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k})\) is a critical point of the minimization problem

if and only if

and

Proof

By the structure of the augmented Lagrangian (cf. (20)), we have that

The rest then follows from (4). \(\square \)

3.2 KKT points

In this section, we study KKT points of the optimization problem (12). A general theory on Lagrange multiplier rule for optimization problems with nonsmooth objective functions is presented in Appendix B, for more details, we refer to [40].

In the following, we first rewrite (12) in the form (43) asFootnote 1

where \(\mathcal {O}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) is the zero tensor. Note that all requirements in Appendix B for the objective and constraint functions and abstract set X are satisfied. In the following, we will show that the constraint qualification (44) is satisfied at each feasible point of (23).

Note that in this case

and

Let matrices \(V^{(i)}\in \mathbb {R}^{n_i\times \frac{n^*}{n_i}}\) be defined as (21) for all \(i\in \{1,\dots ,k\}\). Let \(\mathcal {X}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\). With a direct calculation, the system (44) for problem (23) becomes

which implies directly \(\mathcal {X}=\mathcal {O}\) from the last relation. Therefore, the constraint qualification (44) is satisfied at each feasible, and hence local minimum, solution of (23).

Next, we present the KKT system of (23) in an explicit form. With (24) and the fact that each \(N_{\mathbb {O}(n_i)}(U^{(i)})\) is a linear space (cf. Sect. 2.2), it is easy to see that a feasible point \((\mathbb {U},\mathcal {B})=(U^{(1)},\dots ,U^{(k)},\mathcal {B})\) is a KKT point of (23) (as well as (12)) if and only if the following system is fulfilled

3.3 Global convergence

Suppose that \(\{(\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s)\}\) is a sequence generated by Algorithm 3.1. We will show first that the sequence \(\{(\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s)\}\) is bounded. Then, suppose that \((\mathbb {U}_*,\mathcal {B}_*,\mathcal {X}_*)\) is one of its limit points. We will next show that \((\mathbb {U}_*,\mathcal {B}_*,\mathcal {X}_*)\) is a KKT point of (12), i.e., the system (25) is satisfied.

Proposition 3.3

Let \(\{(\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s)\}\) be the iteration sequence generated by Algorithm 3.1. Then it is a bounded sequence, every limit point \((\mathbb {U}_*,\mathcal {B}_*,\mathcal {X}_*)\) of this sequence satisfies the feasibility of problem (12), i.e.,

and \((\mathbb {U}_*,\mathcal {B}_*)\) is a KKT point of problem (12).

The proof of Proposition 3.3 is given in Appendix C. Proposition 3.3 gives the global convergence result for Algorithm 3.1. In the following Sect. 4, we will address the well-definiteness and computation issues.

4 Augmented Lagrangian subproblem

In this section, we apply a proximal alternating minimization (PAM) method to solve the augmented Lagrangian subproblem (15), (16) and (17). For the sake of notational simplicity, we will omit the outer iteration indices of Algorithm 3.1 and present the algorithm for the problem

for given multiplier \(\mathcal {X}\) and penalty parameter \(\rho \). The initial guess for this problem is denoted as \((\mathbb {U}_0,\mathcal {B}_0)\), which can be taken as the previous outer iteration of Algorithm 3.1.

4.1 Proximal alternating minimization algorithm

The algorithm is a regularized proximal multi-block nonlinear Gauss–Seidel method: starting from \(s=1\), iteratively solve the following problems

in which \(c^{(j)}_s\ge 0\) for all \(j\in \{0,\dots ,k\}\) and \(s=1,2,\dots \) are proximal parameters chosen by the user. There are \(k+1\) subproblems in (26), whereas the last k subproblems are of the same structure. A good news is that all the subproblems have optimal solutions in closed formulae. In the sequel, we will derive these closed formulae.

The first subproblem is equivalent to

whose solution is analytic and obtained by the soft-thresholding (cf. Sect. 2.6)

In the next, we derive optimal solutions for the rest subproblems. Let \(j\in \{1,\dots ,k\}\), and

Then the subproblem for computing \(U^{(j)}_s\) is

Note that

Likewise,

With these facts and \(U^{(j)}\in \mathbb {O}(n_j)\), the subproblem (27) is equivalent to

which in turn can be solved by singular value decomposition (SVD) or polar decomposition [18] (cf. Sect. 2.6).

We are now in the position to present the proximal alternating minimization algorithm for the augmented Lagrangian subproblem.

Algorithm 4.1

PAM

4.2 Convergence analysis

In this section, we will establish the global convergence of Algorithm 4.1. To that end, optimality conditions of problem (26) will be derived first.

A direct calculation shows that the optimality conditions for the subproblem (26) are the following system:

With the fact that the normal cones of the orthogonal groups are linear subspaces, the above system can be simplified as

Let

where for \(j\in \{1,\dots ,k\}\)

Compared with the critical points of the augmented Lagrangian function (cf. Sect. 3.1), we have that

We will show that Algorithm 4.1 converges. It is based on a general result established in [4, Theorem 6.2]. We summarized the convergence theory for this general PAM in Appendix A.

Proposition 4.2

For any given tensors \(\mathcal {A}, \mathcal {X}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\), and parameters \(\rho >0\), \(\epsilon >0\), \(0<\underline{c}<\overline{c}\), we have that the sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) produced by Algorithm 4.1 converges and

for the sequence \(\{\Theta _s\}\) generated by (28), (29) and (30). Then Algorithm 4.1 is finite termination.

The proof of Proposition 4.2 is given in Appendix D.

5 Numerical experiments

In this section, we test Algorithm 3.1 for several classes of tensors. All the tests were conducted on a Dell PC with RAM 4GB and 3.2GHz CPU in 64bt Windows operation system. All codes were written in MatLab with Tensor ToolBox by Bader and Kolda [5]. Some default parameters were chosen as \(\gamma =1.05\), \(\tau =0.8\), and

with \(\hat{\epsilon }_{s-1}:=\Vert \Theta _{s-1}\Vert \le \epsilon _{s-1}\) for \(s\ge 2\). The initial guess \(\mathcal {X}_1=\mathcal {O}\), and \(\mathcal {B}_1=((U^{(1)}_1)^\mathsf {T},\dots ,(U^{(k)}_1)^\mathsf {T})\cdot \mathcal {A}\) with each \(U^{(i)}_1\in \mathbb {O}(n_i)\) randomly chosen for all \(i\in \{1,\dots ,k\}\) (unless otherwise stated). The other parameters are given concretely. The maximum outer iteration number (i.e., the maximum iteration number allowed for Algorithm 3.1) is 1000. The termination rule is

representing the relative feasibility and optimality residuals. Unless otherwise stated, the inner iteration Algorithm 4.1 is terminated whenever either the optimality condition (17) is satisfied or the number of iterations exceeds 1000. If the number of the outer iteration exceeds 1000 and (32) is not satisfied, then the algorithm fails for this test; otherwise the problem is solved successfully.

As problem (12) is nonlinear and nonconvex, the solution found heavily depends on the initial point. Thus, each instance is tested 10 times (unless otherwise stated) with randomly generated initializations. In the tables, the column \(\mathbf sucp \) indicates the probability of successful computations in the 10 simulations; \(\mathbf max(len) \) is the maximum length of the strongly orthogonal decomposition computed among the simulations, and \(\mathbf min(len) \) for the minimum; prob is the probability the algorithm computes out a strongly orthogonal decomposition with length \(\mathbf min(len) \). All the other columns are the mean of the successfully solved simulations: len is the length of the strongly orthogonal decomposition, \(\mathbf out-it \) and \(\mathbf inner-it \) are respectively the numbers of the outer and inner iterations, \(\mathbf cpu \) is the cpu time consumed, \(\mathbf in (10^{-9})\) and \(\mathbf op (10^{-9})\) are respectively the final infeasibility and optimality residuals in the magnitude \(10^{-9}\).

5.1 Examples

In the following, we test several classes of examples from known literatures.

Example 5.1

This example is taken from Kolda [28, Section 3]. Let \(\mathbf {a},\hat{\mathbf {a}}\in \mathbb {R}^n\) be two unit vectors and orthogonal to each other, and \(\sigma _1>\sigma _2>0\). The tensor \(\mathcal {A}\in \mathbb {R}^{n}\otimes \mathbb {R}^{n}\otimes \mathbb {R}^n\) is given by

where \(\mathbf {b} = \frac{1}{\sqrt{2}}(\mathbf {a}+\hat{\mathbf {a}})\). The rank of \(\mathcal {A}\) is two, and (33) is a rank decomposition, while it is not a strongly orthogonal decomposition. Obviously, \({\text {rank}}_{{\text {SO}}}(\mathcal {A})\le 5\), by expanding \(\mathbf {b}\) into \(\mathbf {a}\) and \(\hat{\mathbf {a}}\).

We tested sampled examples with the dimensions n varying from 2 to 8. Usually, we get a strongly orthogonal decomposition with length 5; sometimes we get 4. The starting penalty parameter is 10 and the maximum inner iteration number is \((n-1)*100\). For each case, 10 simulations were generated with random \([\mathbf {a},\hat{\mathbf {a}}]\in {\text {St}}(n,2)\), and random \(\sigma _2<\sigma _1\in (0,1)\). The results are collected in Table 1.

Example 5.2

This example is taken from Ishteva et al. [24, Section 4.1]. Let \(\mathbf {a},\mathbf {b},\mathbf {c}\in \mathbb {R}^n\) be three unit vectors and orthogonal to each other. The tensor \(\mathcal {A}\in \mathbb {R}^{n}\otimes \mathbb {R}^{n}\otimes \mathbb {R}^n\) is given by

Obviously, this already gives a strongly orthogonal rank decomposition of \(\mathcal {A}\).

For each case, 10 simulations were generated with random \([\mathbf {a},\mathbf {b},\mathbf {c}]\in {\text {St}}(n,3)\). The penalty parameter is chosen as 10. The computational results are listed in Table 2. All simulations were solved by the algorithm successfully. Thus the column sucp is omitted. It is easily seen from Table 2 that in most cases the algorithm can find out a strongly orthogonal rank decomposition.

Example 5.3

This example is taken from Kolda [27, Example 3.3]. Let \(\mathbf {a},\mathbf {b}\in \mathbb {R}^n\) be two unit vectors and orthogonal to each other, and \(\sigma _1>\sigma _2>\sigma _3>0\). The tensor \(\mathcal {A}\in \mathbb {R}^{n}\otimes \mathbb {R}^{n}\otimes \mathbb {R}^n\) is given by

The definition of \(\mathcal {A}\) gives a strongly orthogonal rank decomposition already. The parameters are chosen the same as Example 5.2. The initialization for the orthogonal matrices is by the factor matrices of the higher order singular value decomposition (HOSVD) of the underlying tensor [14]. Computational results are shown in Table 3.

Example 5.4

This example is taken from Kolda [27, Example 3.6]. Let \(\mathcal {U}_1,\mathcal {U}_2\in \mathbb {R}^{n_1}\otimes \mathbb {R}^{n_2}\otimes \mathbb {R}^{n_3}\) be two unit rank one tensors that are not orthogonal to each other, and \(\sigma _1\ge \sigma _2\). The tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \mathbb {R}^{n_2}\otimes \mathbb {R}^{n_3}\) is given by

The penalty parameter is chosen as 10. The computational results are given in Table 4.

Example 5.5

This example is taken from Kolda [27, Example 5.1]. Let \(\mathbf {a},\mathbf {b},\mathbf {c},\mathbf {d} \in \mathbb {R}^n\) be four unit vectors and orthogonal to each other,

and

The tensor \(\mathcal {A}\in \mathbb {R}^{n}\otimes \mathbb {R}^{n}\otimes \mathbb {R}^n\) is given by

The definition of \(\mathcal {A}\) gives a strongly orthogonal rank decomposition already. The penalty parameter is chosen as 10. The computational results are given in Table 5.

Example 5.6

This example is taken from Nie [37, Example 5.6]. The tensor \(\mathcal {A}\in \otimes ^5\mathbb {R}^3\) is given by

This tensor is a hard one, since the entries of the tensor varies from \(\frac{38}{15}\) to \(-\frac{369268}{5}=-7.38\times 10^{4}\). The computed orthogonal decomposition has rank 213, slightly smaller than the upper bound \(3^5-15=228\) (cf. [22]). We take the penalty parameter 10, and run 100 simulations. Each simulation finds a decomposition with length 213. The average outer iteration number is 83.15, the inner iteration number is 5435.59, the cpu time is 78.771, the infeasibility is \(1.7597\times 10^{-11}\), and the optimality residual is \(3.6463\times 10^{-9}\).

Example 5.7

This example is taken from Nie [37, Example 5.8]. The tensor \(\mathcal {A}\in {\text {S}}^3(\mathbb {R}^n)\), the subspace of symmetric tensors in \(\otimes ^3\mathbb {R}^n\), is given by

The penalty parameter is chosen as 10. The computational results are given in Table 6.

Example 5.8

This example is taken from Nie[37, Example 5.9]. The tensor \(\mathcal {A}\in {\text {S}}^4(\mathbb {R}^n)\) is given by

This example is also not easy to solve, since the entries of the tensor vary with large magnitudes. The penalty parameter is chosen as 10. The computational results are given in Table 7.

Example 5.9

This example is taken from Nie [37, Example 5.10]. The tensor \(\mathcal {A}\in {\text {S}}^4(\mathbb {R}^n)\) is given by

The penalty parameter is chosen as 10. The computational results are given in Table 8.

Example 5.10

The last concrete example is taken from Batselier et al. [6, Example 6]. The tensor \(\mathcal {A}\in \otimes ^3\mathbb {R}^n\) is given by

Similar reason as that in Example 5.8, this class of tensors is hard. The penalty parameter is 100. The computational results are given in Table 9.

5.2 Completely orthogonally decomposable tensors

We would like to separate a section for the class of completely orthogonally decomposable tensors (CODT), as it is of particular interest [3, 23]. For this class of tensors, we can consider an analogue condition number for tensors.

A tensor \(\mathcal {A}\in \mathbb {R}^{n_1}\otimes \dots \otimes \mathbb {R}^{n_k}\) is called completely orthogonally decomposable (cf. [27, 38, 41]) if there exist orthogonal matrices

and numbers \(\lambda _d\in \mathbb {R}_+\) for \(d=1,\dots ,D_0:=\min \{n_1,\dots ,n_k\}\) such that

Note that some of the \(\lambda _d\)’s can be zeros. By eliminating the zeros, we can further assume that a nonzero completely orthogonally decomposable tensor takes the form

with \(D \le D_0:=\min \{n_1,\dots ,n_k\}\) and \(\lambda _d>0\), \(d=1,\ldots ,D\). It is easy to see that D is then the strongly orthogonal rank of \(\mathcal {A}\).

Unlike the matrix case (i.e., \(k=2\)), the rank one decomposition of a completely orthogonally decomposable tensor is always unique [41], regardless of the possibility of equal \(\lambda _i\)’s. It can be derived from Kruskal’s uniqueness theorem [31] as well, in which the prerequisity is the trivial inequality \(k-1\le (k-2)D\) when \(D\ge 2\) and the case \(D=1\) is obvious. Therefore, in this case, problem (13) has an optimizer with a diagonal tensor \(\mathcal {B}\).

For this class of tensors, we implement two tests for stability of the algorithm. The first test is on tensors with respectively small, medium, and large strongly orthogonal ranks. All the tensors are third order, and the dimensions are listed in Table 10 case by case. In this table, \({\text {rk}}\) indicates the rank of the generated tensor. In each case, 10 simulations were generated with the factor orthogonal matrices being the orthogonalization of the columns of randomly generated matrices. The penalty parameter for tensors with dimensions 30, 50, 80, and 100 are chosen respectively as 10, 20, 30, and 40.

Denote by \(\lambda _{\max }:=\max \{\lambda _d\mid 1\le d\le D\}\), and \(\lambda _{\min }:=\min \{\lambda _d\mid 1\le d\le D\}\). Then

will serve as the role of condition number in the tensor case. The other test is on third order tensors of strongly orthogonal rank 3 and dimension 30 with different levels of condition numbers, which are indicated as cond in Table 11. Computational tests are given in Table 11 for the performance. The penalties for these nine cases are respectively ranging from 20 to 100 with equi-gap 10.

5.3 Random examples

In this section, we test random examples to see the performance of Algorithm 3.1.

We generate two sets of random examples, the first one is generated with each entry being drawn randomly in \([-1,1]\). The second one is generated as the sum of rk rank one tensors, with each component vector in the rank one tensor being drawn componentwisely in \([-1,1]\). In this set, we also generate symmetric tensors. The penalty parameter is chosen as 100 for all simulations, and the results are listed in Tables 12, 13 and 14. In Table 14, sym indicates the symmetry of the tensors; it is symmetric when it takes value 1, and nonsymmetric otherwise.

5.4 Conclusions

We see that problem (13) is highly nonlinear, especially when the tensor size is large, thus solutions of Algorithm 3.1 depend on the initializations heavily. While, for most cases, the convergence is fast with high accuracy. These can be seen from Tables 1, 2, 10, 11, 12, 13 and 14. We can also see from these computations that when the strongly orthogonal ranks are small relative to the tensor sizes or the tensor sizes are small, the computational performance is very well, which can be seen from Tables 3, 4, 5, 6 and 11. On the other hand, when the tensor components have a large deviation in magnitude or the strongly orthogonal ranks are large, the performance is reduced, which can be seen from Tables 7, 8, 9, 10, 12 and 13.

We want to emphasize Table 11, from which we can see that the condition number defined as (35) plays a key role in the performance of the algorithm. We think this is an intrinsic property of the underlying tensor, which will domonstrate the efficiency of most computations. Since the rank one decomposition for CODT is unique, and then the condition number as (35) for CODTs is well-defined. More sophisticated definition and investigation for general tensors should be carried out in the future. Also, theoretical justification of the dependence of the efficiency of an algorithm on the condition number should be investigated in the next.

6 Conclusions

In this article, computing the strongly orthogonal rank decomposition of a given tensor is formulated as solving an optimization problem with the help of matrix-tensor multiplication. This optimization problem has discrete-valued objective function (the \(l_0\)-norm of the tensor) subject to a system of nonlinear equality constraints and a set of orthogonal constraints. As the number of components of a tensor increases exponentially with respect to the tensor size, the number of nonlinear equality constraints becomes huge for tensors with large sizes. For example, it is one million for a sixth order ten dimensional tensor. As we can image, this class of problem is very difficult to solve in general, partly due to (i) the huge number of equality constraints, (ii) the orthogonality constraints, and (iii) the discrete-valued objective function.

Nevertheless, we propose to replace the objective function by a widely used surrogate–the \(l_1\)-norm of the tensor. Then, we apply an inexact augmented Lagrangian multiplier method to solve the resulting optimization problem. During the iterations, the orthogonality requirements are always guaranteed. Thus, the algorithm will always return a strongly orthogonal decomposition whatever the termination situations were met. Moreover, the augmented Lagrangian subproblem is solved by a proximal alternating minimization method with the benefit being that each subproblem has a closed formula solution. This is one key ingredient to keep the orthogonality constraints. Global convergence of the ALM algorithm is established without any further assumptions. Surprisingly, though as simple this algorithm as it sounds, the performance of the proposed algorithm is quite well. Extensive numerical computations were conducted with quite sounding efficiency as well as high accuracy. Note that in Table 14, the number of nonlinear equality constraints is 3,200,000 for the case \((n,k)=(20,5)\).

It follows from the computations that several issues need further investigation. The first is the exactness of the \(l_1\)-norm surrogate with respect to the original \(l_0\)-norm. It can be seen from the computations that quite often, \(l_1\)-norm can realize the strongly orthogonal rank decomposition. Thus, theoretical justifications should be established. The second would be a more efficient method to deal with tensors with larger strongly orthogonal ranks, which are the hard ones in the present computations. Another is that various other surrogates for the \(l_0\)-norm should be studied.

Notes

We can reformulate (23) as an optimization problem with a smooth objective function by packing \(\Vert \mathcal {B}\Vert _1\) into the constraints as well. Then, optimality conditions can be derived as [39]. While, it seems that it is not a wise choice here to destroy the smooth nature of the constraints and introduce a heavy task on computing the normal cone of a feasible set whose constraints involve nonsmooth functions.

References

Absil, P.-A., Hosseini, S.: A collection of nonsmooth Riemannian optimization problems. In: Hosseini, S., Mordukhovich, B., Uschmajew, A. (eds.) Nonsmooth Optimization and Its Applications, International Series of Numerical Mathematics, vol. 170, pp. 1–15. Birkhäuser, Cham (2019)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Anandkumar, A., Ge, R., Hsu, D., Kakade, S.M., Telgarsky, M.: Tensor decompositions for learning latent variable models. J. Mach. Learn. Res. 15, 2773–2832 (2014)

Attouch, H., Bolte, J., Svaiter, B.F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss–Seidel methods. Math. Program. 137, 91–129 (2013)

Bader, B.W., Kolda, T.G.: MATLAB Tensor Toolbox Version 2.6, February 2015. http://www.sandia.gov/~tgkolda/TensorToolbox/

Batselier, K., Liu, H., Wong, N.: A constructive algorithm for decomposing a tensor into a finite sum of orthonormal rank-1 terms. SIAM J. Matrix Anal. Appl. 36, 1315–1337 (2015)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Athena Scientific, Belmont (1982)

Bochnak, J., Coste, M., Roy, M.-F.: Real Algebraic Geometry. Ergebnisse der Mathematik und ihrer Grenzgebiete, vol. 36. Springer, Berlin (1998)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146, 459–494 (2014)

Chen, J., Saad, Y.: On the tensor SVD and the optimal low rank orthogonal approximation of tensors. SIAM J. Matrix Anal. Appl. 30, 1709–1734 (2009)

Chen, Y., Ye, Y., Wang, M.: Approximation hardness for a class of sparse optimization problems. J. Mach. Learn. Res. 20, 1–27 (2019)

Comon, P.: MA identification using fourth order cumulants. Signal Process. 26, 381–388 (1992)

Comon, P.: Independent component analysis, a new concept? Signal Process. 36, 287–314 (1994)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21, 1253–1278 (2000)

De Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30, 1084–1127 (2008)

Donoho, D.L.: For most large underdetermined systems of linear equations the minimal \(1\)-norm solution is also the sparsest solution. Commun. Pure Appl. Math. 59, 797–829 (2006)

Franc, A.: Etude Algébrique des Multitableaux: Apports de l’Algébre Tensorielle, Thèse de Doctorat, Spécialité Statistiques. Univ. de Montpellier II, Montpellier (1992)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Håstad, J.: Tensor rank is NP-complete. J. Algorithms 11, 644–654 (1990)

Hillar, C.J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM 60(6), 1–39 (2013)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, New York (1985)

Hu, S.: Bounds on strongly orthogonal ranks of tensors, revised manuscript (2019)

Hu, S., Li, G.: Convergence rate analysis for the higher order power method in best rank one approximations of tensors. Numer. Math. 140, 993–1031 (2018)

Ishteva, M., Absil, P.-A., Van Dooren, P.: Jacobi algorithm for the best low multilinear rank approximation of symmetric tensors. SIAM J. Matrix Anal. Appl. 34, 651–672 (2013)

Jiang, B., Dai, Y.H.: A framework of constraint preserving update schemes for optimization on Stiefel manifold. Math. Program. 153, 535–575 (2015)

Jordan, C.: Essai sur la géométrie à n dimensions. Bull. Soc. Math. 3, 103–174 (1875)

Kolda, T.G.: Orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 23, 243–255 (2001)

Kolda, T.G.: A counterexample to the possibility of an extension of the Eckart–Young low-rank approximation theorem for the orthogonal rank tensor decomposition. SIAM J. Matrix Anal. Appl. 24, 762–767 (2003)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Kroonenberg, P.M., De Leeuw, J.: Principal component analysis of three-mode data by means of alternating least squares algorithms. Psychometrika 45, 69–97 (1980)

Kruskal, J.B.: Three-way array: rank and uniqueness of trilinear decompositions, with application to arithmetic complexity and statistics. Linear Algebra Appl. 18, 95–138 (1977)

Landsberg, J.M.: Tensors: Geometry and Applications, Graduate Studies in Mathematics, vol. 128. AMS, Providence (2012)

Leibovici, D., Sabatier, R.: A singular value decomposition of a \(k\)-way array for principal component analysis of multiway data, PTA-k. Linear Algebra Appl. 269, 307–329 (1998)

Liu, Y.F., Dai, Y.H., Luo, Z.Q.: On the complexity of leakage interference minimization for interference alignment. In: 2011 IEEE 12th International Workshop on Signal Processing Advances in Wireless Communications, pp. 471–475 (2011)

Mangasarian, O.L., Fromovitz, S.: The Fritz–John necessary optimality conditions in the presence of equality and inequality constraints. J. Math. Ana. Appl. 7, 34–47 (1967)

Martin, C.D.M., Van Loan, C.F.: A Jacobi-type method for computing orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 30, 1219–1232 (2008)

Nie, J.: Generating polynomials and symmetric tensor decompositions. Found. Comput. Math. 17, 423–465 (2017)

Robeva, E.: Orthogonal decomposition of symmetric tensors. SIAM J. Matrix Anal. Appl. 37, 86–102 (2016)

Rockafellar, R.T.: Lagrange multipliers and optimality. SIAM Rev. 35, 183–238 (1993)

Rockafellar, R.T., Wets, R.: Variational Analysis. Grundlehren der Mathematischen Wissenschaften, vol. 317. Springer, Berlin (1998)

Zhang, T., Golub, G.H.: Rank-one approximation to high order tensors. SIAM J. Matrix Anal. Appl. 23, 534–550 (2001)

Acknowledgements

This work is partially supported by National Science Foundation of China (Grant No. 11771328). The author is very grateful for the annoynous referees for their helpful suggestions and comments in revising this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. Convergence theorem for PAM

Let \(f : \mathbb {R}^{n_1}\times \dots \times \mathbb {R}^{n_k}\rightarrow \mathbb {R}\cup \{+\infty \}\) be a function of the following structure

where Q is a \(C^1\) (continuously differentiable) function with locally Lipschitz continuous gradient, and \(g_i : \mathbb {R}^{n_i}\rightarrow \mathbb {R}\cup \{+\infty \}\) is a proper lower semicontinuous function for each \(i\in \{1,\dots ,k\}\).

We introduce the following algorithmic scheme to solve the optimization problem

Since f is proper and lower semicontinuous, \(\mathbf {x}\) is an optimizer of this minimization problem only if it is a critical point of f, i.e., \(0\in \partial f(\mathbf {x})\).

Algorithm A.1

general PAM

Step 1 can be implemented through several methods. In particular, (36), (37) and (38) are fulfilled if for all \(j\in \{1,\dots ,k\}\), \(\mathbf {x}^s_j\) is taken as a minimizer of the optimization problem

We now state the global convergence of Algorithm A.1 for a wide class of objective functions [4, Theorem 6.2].

Theorem A.2

(Proximal Alternating Minimization) Let f be a Kurdyka–Łojasiewicz function and bounded from below. Let \(\{\mathbf {x}^s\}\) be a sequence produced by Algorithm A.1. If \(\{\mathbf {x}^s\}\) is bounded, then it converges to a critical point of f.

Appendix B. Nonsmooth Lagrange multiplier

The following materials can be found in [40, Chapter 10].

Let \(X\subseteq \mathbb {R}^n\) be nonempty and closed, \(f_0 : \mathbb {R}^n\rightarrow \mathbb {R}\) be locally Lipschitz continuous, \(F : \mathbb {R}^n\rightarrow \mathbb {R}^m\) with \(F:=(f_1,\dots ,f_m)\) and each \(f_i\) locally Lipschitz continuous, and \(\theta : \mathbb {R}^m\rightarrow \mathbb {R}\cup \{\pm \infty \}\) be proper, lower semicontinuous, convex with effective domain D.

Consider the following optimization problem

If \(\overline{\mathbf {x}}\) is a local optimal solution to (40) such that the following constraint qualification being satisfied

then there exists a vector \(\overline{\mathbf {y}}\) such that

A vector \(\overline{\mathbf {y}}\) satisfying (42) is called a Lagrange multiplier, and the pair \((\overline{\mathbf {x}},\overline{\mathbf {y}})\) satisfying (42) is a Karush–Kuhn–Tucker pair with \(\overline{\mathbf {x}}\) a KKT point. Let \(M(\overline{\mathbf {x}})\) be the set of Lagrange multipliers for a KKT point \(\overline{\mathbf {x}}\). Under the constraint qualification (41), the set \(M(\overline{\mathbf {x}})\) is compact.

A particular case is \(\theta =\delta _{\{\mathbf {0}\}}\), the indicator function of the set \(\{\mathbf {0}\}\subset \mathbb {R}^m\). Then problem (40) reduces to

If each \(f_i\) is continuously differentiable for \(i\in \{1,\dots ,m\}\), then the constraint qualification is

It is the basic constraint qualification discussed in [39], an extension of the Mangasarian–Fromovitz constraint qualification [35].

The optimality condition (42) becomes

or in a more familiar form as

Appendix C. Proof of Proposition 3.3

Proof

It follows from (16) that \(U^{(i)}_s\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and \(s=1,2,\dots \) and hence the sequence \(\{\mathbb {U}_s\}\) is bounded.

Let \(\Xi _s\in \partial L_{\rho _s}(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X}_s)\) be such that \(\Vert \Xi _s\Vert \le \epsilon _s\) which is guaranteed by (17). Thus,

for some \(\mathcal {W}_s\in \partial \Vert \mathcal {B}_s\Vert _1\), and \(B^{(i)}_s\in N_{\mathbb {O}(n_i)}(U^{(i)}_s)\) for all \(i\in \{1,\dots ,k\}\).

It follows from the last row in (45) and (17) that

By the fact that \(\mathcal {W}_s\) is uniformly bounded (cf. (22)), and \(\epsilon _s\rightarrow 0\), we conclude that \(\rho _s\big (\mathcal {B}_s-(U_s^{(1)},\dots ,U_s^{(k)})\cdot \mathcal {A}-\frac{1}{\rho _s}\mathcal {X}_s\big )\) is bounded. Therefore, the sequence \(\{\mathcal {X}_{s+1}\}\) is bounded by the multiplier update rule (18).

Since \(\mathcal {W}_s\) and \(\mathcal {X}_s\) are both bounded, it follows from (46) that \(\rho _s\big (\mathcal {B}_s-(U_s^{(1)},\dots ,U_s^{(k)})\cdot \mathcal {A}\big )\) is bounded. As \(\{\rho _s\}\) is a nondecreasing sequence of positive numbers and \(\{\mathbb {U}_s\}\) is bounded, we must have that the sequence \(\{\mathcal {B}_s\}\) is bounded.

In a conclusion, the sequence \(\{\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s\}\) is bounded.

For the feasibility, note that \(\mathbb {U}_*\) satisfies the orthogonality by (16). The rest proof is divided into two parts, according to the boundedness of the sequence \(\{\rho _s\}\).

Part I. Suppose first that the penalty sequence \(\{\rho _s\}\) is bounded. By the penalty parameter update rule (19), it follows that \(\rho _s\) stabilizes after some \(s_0\), i.e., \(\rho _s=\rho _{s_0}\) for all \(s\ge s_0\). Thus,

The feasibility result then follows from a standard continuity argument.

Part II. In the following, we assume that \(\rho _s\rightarrow \infty \) as \(s\rightarrow \infty \).

Likewise, it follows from the last row in (45) and (17) that

By the fact that \(\mathcal {W}_s\) and \(\mathcal {X}_s\) are both bounded, \(\rho _s\rightarrow \infty \), and \(\epsilon _s\rightarrow 0\), we have that

Thus, by continuity, we have that \((\mathbb {U}_*,\mathcal {B}_*)\) is a feasible point.

In the following, we show that \((\mathbb {U}_*,\mathcal {B}_*)\) is a KKT point. Let

It follows from the above analysis that \(\{\mathcal {M}_s\}\) is bounded. By the multiplier update rule (18), the system (45) can be rewritten as

The boundedness of \(\{\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s,\mathcal {M}_s\}\) and \(\{\Xi _s\}\) implies the boundedness of each \(\{B_s^{(i)}\}\) for all \(i\in \{1,\dots ,k\}\) as well. We assume without loss of generality that

for an infinite index set \(\mathcal {K}\subseteq \{1,2,\dots \}\), and in where

Taking limitations on both sides of (49) within \(\mathcal {K}\), we have then

where \(V^{(i)}_*\) is defined as (21) with \(U^{(i)}\)’s being replaced by \(U^{(i)}_*\)’s. By the closedness of subdifferentials, we have

and

Since each \(N_{\mathbb {O}(n_i)}(U^{(i)}_*)\) is a linear subspace, we have shown that \((\mathbb {U}_*,\mathcal {B}_*)\) is a KKT point of (12) with Lagrange multiplier \(\mathcal {X}_*+\mathcal {M}_*\) (cf. (25)). The proof is complete. \(\square \)

Appendix D. Proof of Proposition 4.2

Proof

It is known that for all \(i\in \{1,\dots ,k\}\) each orthogonal group \(\mathbb {O}(n_i)\) is an algebraic set, defined by a system of polynomial equations. Therefore, \(\mathbb {O}(n_i)\) is a semi-algebraic set and its indicator function is semi-algebraic [8]. The \(l_1\)-norm \(\Vert \cdot \Vert _1\) is also semi-algebraic. Also known is that each semi-algebraic function is a Kurdyka–Łojasiewicz function (cf. [9, Appendix]). Thus, as a summation of the \(l_1\)-norm, the indicator functions of the orthogonal groups, and polynomials, the augmented Lagrangian function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is a Kurdyka–Łojasiewicz function.

If the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) generated by Algorithm 4.1 is bounded, and the function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is bounded from below, then the sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) converges by Theorem A.2.

For any given \(\mathcal {X}\), it follows immediately from (14) that the function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is bounded from below, since

In the language of Appendix A, the variable \(\mathcal {B}\) refers to the \(j=0\)-th block variable, and \(U^{(j)}\) the j-th block for \(j\in \{1,\dots ,k\}\). Then,

and the function Q is defined naturally to comprise \(L_\rho \) in (50).

We first show that (38) is satisfied. By (26), we know that (38) is satisfied by \(\alpha _j=\overline{c}\) for all \(j\in \{0,1,\dots ,k\}\).

It follows from (26), (28) and (29) that

Summing up these inequalities, we have

Therefore, the sequence \(\{L_{\rho }(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X})\}\) monotonically decreases to a finite limit.

On the other hand, since each component matrix of \(\mathbb {U}_s\) is an orthogonal matrix, the sequence \(\{\mathbb {U}_s\}\) is bounded. Suppose that the sequence \(\{\mathcal {B}_s\}\) is unbounded. Then, it follows from (50) that the sequence \(\{L_{\rho }(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X})\}\) should diverge to infinity, which is an immediate contradiction. Thus, the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) must be bounded, and hence converges by Theorem A.2.

In the following, we show that \(\Vert \Theta _s\Vert \rightarrow 0\) as \(s\rightarrow \infty \). First of all, we derive an upper bound estimate for \(\Vert V^{(j)}_s-\tilde{V}^{(j)}_s\Vert \) as

where the second inequality follows from the fact that \(U^{(i)}_t\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and \(t=1,2,\dots \), and the third from a standard induction.

Likewise, we have

Thus, we have

Since the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) converges, we conclude that \(\Vert \Theta _s\Vert \rightarrow 0\) as \(s\rightarrow \infty \). As \(\epsilon >0\) is a given parameter, Algorithm 4.1 terminates after finitely many iterations. \(\square \)

Rights and permissions

About this article

Cite this article

Hu, S. An inexact augmented Lagrangian method for computing strongly orthogonal decompositions of tensors. Comput Optim Appl 75, 701–737 (2020). https://doi.org/10.1007/s10589-019-00128-3

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-019-00128-3