Abstract

The objective of the present work is to introduce a computational approach employing the Chebyshev Tau method for approximating the solutions of constant coefficients systems of multi-order fractional differential equations. For this purpose, a series representation of the exact solutions in a neighborhood of the origin is obtained to monitor their smoothness properties. We prove that some derivatives of the exact solutions of the underlying problem often suffer from a discontinuity at the origin. To fix this drawback and design a high-order approach, a regularization procedure is developed. In addition, to avoid high computational costs, a suitable strategy is implemented such that the approximate solutions are obtained by solving triangular algebraic systems. Complexity and convergence analyses of the proposed scheme are provided. Various practical test problems are presented to exhibit the capability of the given approach.

1 Introduction

For nearly three centuries, the theory of fractional calculus has been regarded by mathematicians as a branch of pure mathematics. However, many researchers have recently found that non-integer derivatives and integrals are more useful than integer ones for modeling phenomena with hereditary and memory properties [3, 17, 31, 43], and in this regard various numerical methods have been introduced to approximate the solutions of the arising fractional order functional equations [22,23,24,25, 34,35,36,37,38,39]. Many physical problems involve a number of interacting components, so their mathematical modeling naturally leads to systems of differential equations. In this connection, systems of fractional differential equations (FDEs) have recently been used to describe various phenomena in physics and engineering such as pollution levels in a lake [7, 29], hepatitis B disease in medicine [9], fractional-order financial system [11], population dynamics [14, 15], fractional order Bloch system [26], electrical circuits [28], fractional-order love triangle system [32], nuclear magnetic resonance (NMR) [33, 50], fractional-order Volta’s system [42], magnetic resonance imaging (MRI) [44], fractional-order Lorenz system [49] and fractional-order Chua’s system [51].

Owing to the wide use of systems of FDEs, researchers have sought analytic and numerical methods to solve them. Since it is very difficult or practically impossible to obtain exact solutions for most systems of FDEs, it is important to provide suitable approximate methods for solving them. In particular, owing to the wide applications of constant coefficients systems of FDEs, researchers have recently adopted various numerical techniques to approximate their solutions, such as the homotopy perturbation method [1, 4], Chebyshev Tau method [2], fractional order Laguerre and Jacobi Tau methods [5, 6], Legendre wavelets method [12], Adomian decomposition method [13, 40], differential transform method [19], spectral collocation method [29, 30], variational iteration method (VIM) [40, 46] and Bernoulli wavelets method [47].

However, in some of the aforementioned studies, the effect of possible discontinuities in the derivatives of the solution has not been taken into account, and the basis functions are selected from infinitely smooth functions. Most importantly, the available studies often provide numerical methods for systems of single-order FDEs, and there are few articles devoted to a comprehensive numerical analysis of systems of multi-order FDEs. In this regard, the main objective of this paper is to fill this gap by providing a reliable and high-order numerical technique, based on the Chebyshev Tau method, for approximating the solutions of the following constant coefficients system of multi-order FDEs

where \(\lceil . \rceil \) is the ceiling function, \(a_{ji}\) are given constants, \(p_j(x)\) are continuous functions on \(\Lambda \) and \(y_j(x)\) are the unknowns. Here \(D^{\alpha _{j}}_{C}\) is the Caputo-type fractional derivative of order \(\alpha _{j}\) defined by [10, 17, 31, 43]

$$\begin{aligned} D^{\alpha _{j}}_{C}y(x)=J^{\lceil \alpha _j \rceil -\alpha _{j}}y^{(\lceil \alpha _j \rceil )}(x), \end{aligned}$$

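where \(J^{\lceil \alpha _j \rceil -\alpha _{j}}\) is the Riemann–Liouville fractional integral operator of order \(\lceil \alpha _j \rceil -\alpha _{j}\), defined by

$$\begin{aligned} J^{\lceil \alpha _j \rceil -\alpha _{j}}y(x)=\frac{1}{\Gamma (\lceil \alpha _j \rceil -\alpha _{j})}\int _{0}^{x}(x-t)^{\lceil \alpha _j \rceil -\alpha _{j}-1}y(t)\,dt, \end{aligned}$$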

and \(\Gamma (.)\) denotes the Gamma function. It can be seen that for \(\alpha , \beta \ge 0\) the following relations hold

under validity of some requirements for the function y(x) [10, 17, 31, 43].
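As a small illustration of relations of this kind, the following sketch uses the standard power rule for the Riemann–Liouville integral, \(J^{\alpha }x^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\alpha +\beta +1)}x^{\alpha +\beta }\), and checks the semigroup property \(J^{\alpha }J^{\beta }=J^{\alpha +\beta }\) on a monomial. The function name `rl_integral_monomial` is hypothetical, and the power rule is quoted from the general theory of fractional calculus; it is an assumption that it coincides with the relations the text refers to.

```python
import math


def rl_integral_monomial(alpha, coeff, power):
    """Apply J^alpha to coeff * x**power using the standard power rule.

    Returns the pair (new_coeff, new_power) describing the resulting monomial.
    """
    factor = math.gamma(power + 1.0) / math.gamma(power + alpha + 1.0)
    return coeff * factor, power + alpha


# Semigroup check on x**2: J^a (J^b x^2) should equal J^(a+b) x^2.
a, b = 0.5, 1.0 / 3.0
c_inner, p_inner = rl_integral_monomial(b, 1.0, 2.0)
c_two_step, p_two_step = rl_integral_monomial(a, c_inner, p_inner)
c_one_step, p_one_step = rl_integral_monomial(a + b, 1.0, 2.0)

# Integer-order sanity check: J^1 x = x**2 / 2.
c_int, p_int = rl_integral_monomial(1.0, 1.0, 1.0)
```

The same Gamma-function ratio is what appears in the coefficients \(\xi _j\) of the series argument in Sect. 2.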

Although the classical implementation of spectral methods provides a useful tool for producing high-order approximations of smooth solutions of functional equations, it has some disadvantages, including the need to solve complex and ill-conditioned algebraic systems as well as a significant loss of accuracy for problems with non-smooth solutions. In this paper, in order to avoid these drawbacks, the numerical approach is designed such that not only is the expected high accuracy recovered for non-smooth problems by applying a regularization technique, but the approximate solutions are also computed by solving well-conditioned triangular systems.

The remainder of this paper is organized as follows. In the next section, we first present a result on the existence and uniqueness of the solutions of (1). Then a smoothness theorem is given, which derives a series representation for the solutions of (1) and shows that some derivatives of the exact solutions often suffer from a discontinuity at the origin. To fix this difficulty, a regularization strategy is applied. In Sect. 3, to examine the effect of this regularization process on providing high-order approximations, the Chebyshev Tau approach is developed to approximate the solutions of (1) which satisfy the assumptions of the existence, uniqueness and smoothness theorems. The unique solvability and complexity analysis of the numerical solution are also justified by solving some triangular algebraic systems. In Sect. 4, we provide a detailed convergence analysis for the proposed scheme in the uniform norm. In Sect. 5, the efficiency and applicability of the proposed method are examined through several illustrative examples. The final section contains our concluding remarks.

2 Existence, Uniqueness and Smoothness Results

In this section we investigate existence, uniqueness and smoothness properties of the solutions of (1). First, the existence and uniqueness theorem is given as follows.

Theorem 1

Assume that the functions \(\{p_{j}(x)\}_{j=1}^n\) are continuous on \(\Lambda \). Then the system of Eq. (1) has a unique continuous solution on \(\Lambda \).

Proof

This is a straightforward consequence of Theorem 8.11 of [17] and Theorem 2.3 of [16]. \(\square \)

From the well-known existence and uniqueness theorems of FDEs, we expect some derivatives of the exact solutions of (1) to have a discontinuity at the origin, depending on the fractional derivative order, even for smooth input functions [17]. Therefore, to develop high-order approximate approaches, it is essential to recognize the smoothness properties of the solutions of (1) under certain assumptions on the given functions \(p_{j}(x)\). In this regard, Diethelm et al. [16] recently investigated the degree of smoothness and the asymptotic behavior of the solutions of homogeneous constant coefficients multi-order FDEs when the fractional derivative orders lie in the interval (0,1). In the following theorem we derive the same properties for constant coefficients systems of multi-order FDEs in the general form (1) by exploring a series representation of the solutions in a neighborhood of the origin.

Theorem 2

Let \(\{\alpha _{j}=\eta _{j}/\gamma _{j}\}_{j=1}^n\), such that the integers \(\eta _{j}\ge 1\) and \(\gamma _{j}\ge 2\) are co-prime and the given continuous functions \(p_j(x)\) can be written as \(p_j(x)=\bar{p}_j(x^{1/\gamma _1}, x^{1/\gamma _2}, \ldots , x^{1/\gamma _n})\) with analytic functions \(\bar{p}_j\) in the neighborhood of \((\underbrace{0,0,\ldots ,0}_{n})\). Then the series representation of the solution \(y_j(x)\) of the Eq. (1) in a neighborhood of the origin is given by

where \(\phi _j(x)=\sum \nolimits _{i=0}^{\lceil \alpha _j \rceil -1}{\frac{y_{j,0}^{(i)}}{i!}x^i}\) and \(\bar{y}_{j,\nu _{1},\nu _{2},\ldots ,\nu _{n}}\) are known coefficients.

Proof

Consider the functions

satisfying the initial condition of Eq. (1). On the other hand, since the functions \(\bar{p}_j\) are analytic, the functions \(p_j\) can be written as

where \(\{\tilde{p}_{j,\nu _{1},\nu _{2},\ldots ,\nu _{n}}\}_{j=1}^n\) are known coefficients. In the sequel, we show that the coefficients \(\bar{y}_{j,\nu _{1},\nu _{2},\ldots ,\nu _{n}}\) can be calculated in such a way that the representation (3) converges and solves Eq. (1). Clearly, Eq. (1) is equivalent to the following system of second kind Volterra integral equations

Therefore, assuming uniform convergence and substituting the relations (3) and (4) into (5), the coefficients \(\bar{y}_{j,\nu _{1},\nu _{2},\ldots ,\nu _{n}}\) satisfy the following equality

Using (2) the above equality can be written as

where \(\xi _{j}=\frac{\Gamma {(\sum \nolimits _{k=1}^{n}{\frac{\nu _{k}}{\gamma _k}+1})}}{\Gamma {(\sum \nolimits _{k=1}^{n}{\frac{\nu _{k}}{\gamma _k}+\alpha _{j}+1})}}\). Replacing \(\nu _j\) by \(\nu _j-\eta _j\) in both series on the right-hand side of (6), we obtain

in which \(\tilde{\xi }_{j}=\frac{\Gamma {(\sum \nolimits _{k=1}^{n}{\frac{\nu _{k}}{\gamma _k}-\alpha _{j}+1})}}{\Gamma {(\sum \nolimits _{k=1}^{n}{\frac{\nu _{k}}{\gamma _k}+1})}}\). Now, we obtain the unknown coefficients \(\bar{y}_{j,\nu _{1},\nu _{2},\ldots ,\nu _{n}}\) by comparing the coefficients of \(x^{\frac{\nu _{1}}{\gamma _1}} x^{\frac{\nu _{2}}{\gamma _2}}\cdots x^{\frac{\nu _{n}}{\gamma _n}}\) on both sides of (7). The results of this comparison depend on \(\nu _{j}\). Clearly for \(\{\nu _{j}<\eta _j\}_{j=1}^n\), we have

For \(\{\nu _{j}\ge \eta _j\}_{j=1}^n\) and \(\nu _{k} \ge 0\), \(k \ne j\), we obtain

and thereby the coefficients \(\bar{y}_{j,\nu _{1}, \nu _{2},\ldots , \nu _{n}}\) with \(\nu _{1}+\nu _{2}+\cdots +\nu _{n}=l\), \(l \ge \eta _j\) can be calculated from (8), such that this calculation requires the knowledge of \(\bar{y}_{j,\nu _{1}, \nu _{2},\ldots , \nu _{n}}\) with \(\nu _{1}+\nu _{2}+\cdots +\nu _{n}\le l-1\). Therefore, we should first evaluate all the coefficients with \(\nu _{1}+\nu _{2}+\cdots +\nu _{n}=\eta _j\), then with \(\nu _{1}+\nu _{2}+\cdots +\nu _{n}=\eta _{j}+1\), etc. This means that the series representation (3) solves (1).

Now, it remains to prove that this series is uniformly and absolutely convergent in a neighborhood of the origin. For this purpose, we apply a suitable modification of the well-known Lindelöf’s theorem [17, 27]. Consider the following system of second kind Volterra integral equations

where \(\tilde{\phi }_j(x)=\sum \nolimits _{k=0}^{\lceil \alpha _j \rceil -1}{\frac{x^k}{k!}|y_{j,0}^{(k)}|}\). Evidently the right-hand side of the above equation is a majorant of the right-hand side of the main Eq. (5) and the formal solution \(\{Y_j(x)\}_{j=1}^n\) can be calculated exactly as the previous step such that all of its coefficients are positive. Now we show that the series expansion of \(Y_j(x)\) is absolutely convergent for each \(x \in [0,\kappa _j]\), with some \(\kappa _j>0\) which is defined in the sequel. To this end, it is sufficient to show that the finite partial sum of \(Y_j(x)\) is uniformly bounded over \([0,\kappa _j]\). Let

be the finite partial sum of \(Y_j(x)\) for \(j=1, 2,\ldots , n\). The following inequality evidently holds

in view of the recursive calculation of the coefficients. More precisely, if we expand the right-hand side of the above inequality, all coefficients \(\bar{Y}_{j,\nu _{1},\ldots ,\nu _{n}}\) with \(\sum \nolimits _{l=1}^{n}{\frac{\nu _{l}}{\gamma _l}}\le (K+1) \left( \sum \nolimits _{l=1}^{n}{\frac{1}{\gamma _l}}\right) \) are eliminated from both sides, while some additional positive terms of higher order remain on the right-hand side. Considering

we define

Now we intend to show that \(|S_{j,K}(x)| \le 2 D_1^{(j)}\) for \(1 \le j \le n\) and \(x \in [0, \kappa _j]\). This is done by induction on K. For \(K=0\), from the definition of \(D_1^{(j)}\) we have

For the induction step from K to \(K+1\), we can write

which establishes the uniform boundedness of \(S_{j,K+1}(x)\) over \([0, \kappa _j]\). Owing to the positivity of all its coefficients, it is also monotone. Therefore the series expansion of \(Y_j\) is absolutely convergent over \([0,\kappa _j]\) and uniformly convergent on the compact subsets of \([0,\kappa _j)\) due to the power series structure of \(Y_j(x)\). Finally, Lindelöf’s theorem indicates that the series expansion of \(y_j(x)\) is also absolutely and uniformly convergent on the compact subsets of \([0,\kappa _j)\). Therefore, the interchange of integration and summation is justified. \(\square \)

From Theorem 2, we can conclude that the \(\lceil \alpha _j \rceil \)th derivative of \(y_{j}(x)\) often has a discontinuity at the origin. This difficulty degrades the accuracy when classical spectral methods are implemented to approximate the exact solutions. To overcome this weakness, we apply the coordinate transformation

$$\begin{aligned} x=v^{\gamma }, \end{aligned}\tag{9}$$

where \(\gamma \) is the least common multiple of \(\gamma _j\), and convert Eq. (5) into the following system of equations

where \(\hat{\phi }_j(v)=\phi _j(v^\gamma )\), \(\hat{p}_j(v)=p_j(v^\gamma )\) and

Here \(\hat{y}_j(v)\) is the infinitely smooth exact solution of (10) and given by

for \(\frac{\gamma }{\gamma _j}=b_j \in \mathbb {N},~j=1, 2,\ldots , n\). Consequently, variable transformation (9) regularizes the solutions and provides the possibility of obtaining the familiar exponential accuracy by implementing the classical spectral methods. To monitor the effect of this regularization process on producing high-order approximations for (1), we assume that the assumptions of Theorem 2 hold in the sequel.
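The effect of the transformation on the exponents can be illustrated with a short sketch. It assumes the substitution \(x=v^{\gamma }\) implied by \(\hat{\phi }_j(v)=\phi _j(v^\gamma )\), and computes \(\gamma \) and the integers \(b_j=\gamma /\gamma _j\) for given rational orders \(\alpha _j=\eta _j/\gamma _j\). The function names are hypothetical and the sample orders are chosen only for illustration.

```python
import math
from fractions import Fraction


def regularization_exponent(alphas):
    """Return gamma = lcm(gamma_1, ..., gamma_n) and the list b_j = gamma / gamma_j.

    Each alpha is a Fraction eta_j / gamma_j; Fraction keeps it in lowest terms,
    so eta_j and gamma_j are automatically co-prime. Requires Python 3.9+ (math.lcm).
    """
    denominators = [Fraction(a).denominator for a in alphas]
    gamma = math.lcm(*denominators)
    return gamma, [gamma // d for d in denominators]


def transformed_power(nu, gamma_j, gamma):
    """Exponent of v obtained from x**(nu / gamma_j) after substituting x = v**gamma."""
    exponent = Fraction(nu, gamma_j) * gamma
    if exponent.denominator != 1:
        raise ValueError("gamma must be a multiple of gamma_j")
    return int(exponent)


# Illustrative orders alpha_1 = 1/2 and alpha_2 = 2/3 (not taken from the paper).
gamma, b = regularization_exponent([Fraction(1, 2), Fraction(2, 3)])
power_1 = transformed_power(1, 2, gamma)   # x**(1/2) becomes v**3
power_2 = transformed_power(5, 3, gamma)   # x**(5/3) becomes v**10
```

Every fractional power \(x^{\nu /\gamma _j}\) in the series representation thus becomes an integer power of v, which is why the transformed solutions are smooth.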

3 Numerical Approach

In this section, we introduce an efficient formulation of the Chebyshev Tau approach for approximating the solutions of the transformed Eq. (10). For this purpose, we consider the Chebyshev Tau solutions of (10) as follows

for \(j=1,2,\ldots , n\), where \(\underline{\mathcal {T}}=[\mathcal {T}_0(v), \mathcal {T}_1(v),\ldots , \mathcal {T}_N(v),\ldots ]^{T}\) is the vector of shifted Chebyshev polynomial basis with degree \((\mathcal {T}_i(v))\le i\) for \(i \ge 0\) on \(\Lambda \). Furthermore, \(\mathcal {T}\) is a lower triangular invertible matrix and \(\underline{V}=[1, v, v^{2},\ldots , v^{N},\ldots ]^T\). Substituting (12) into (10) and assuming

for \(j=1,2,\ldots , n\), we can write

Therefore, it suffices to compute \(\{\hat{J}^{\alpha _j}\underline{V}\}_{j=1}^n\). For this purpose using the relation (2) we have

with

Inserting (14) into (13) yields

which can be rewritten as

where \(\mathcal {A}_{ij}^{\mathcal {T}}=\mathcal {T}\mathcal {A}_{ij}\mathcal {T}^{-1}\), \(\mathcal {B}_j^{\mathcal {T}}=\mathcal {T}\mathcal {B}_{j}\mathcal {T}^{-1}\) and

where I is an identity matrix.
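To make the structure of these matrices more concrete, the following sketch builds the action of a transformed integral operator on the monomial basis \(\underline{V}\). It assumes that \(\hat{J}^{\alpha _j}\) has the kernel \(\frac{\gamma }{\Gamma (\alpha _j)}w^{\gamma -1}(v^{\gamma }-w^{\gamma })^{\alpha _{j}-1}\) used in Sect. 4, for which the substitution \(s=w^{\gamma }\) and the standard power rule give \(\hat{J}^{\alpha _j}v^{k}=\frac{\Gamma (k/\gamma +1)}{\Gamma (k/\gamma +\alpha _j+1)}v^{k+\gamma \alpha _j}\). The function name and the truncation to order \(N+1\) are illustrative choices, not the authors' implementation.

```python
import math
from fractions import Fraction


def monomial_integration_matrix(alpha, gamma, N):
    """Truncated matrix B with (J_hat^alpha v**k) = sum_m B[k][m] * v**m for m <= N.

    alpha is a Fraction and gamma an integer such that gamma * alpha is an integer,
    so each monomial v**k is mapped to a multiple of v**(k + gamma * alpha).
    """
    alpha = Fraction(alpha)
    shift = alpha * gamma
    if shift.denominator != 1:
        raise ValueError("gamma * alpha must be an integer")
    shift = int(shift)
    B = [[0.0] * (N + 1) for _ in range(N + 1)]
    for k in range(N + 1 - shift):
        B[k][k + shift] = (math.gamma(k / gamma + 1.0)
                           / math.gamma(k / gamma + float(alpha) + 1.0))
    return B


# Illustrative case alpha = 1/2, gamma = 2: a single superdiagonal at offset 1.
B = monomial_integration_matrix(Fraction(1, 2), 2, 3)
```

The resulting matrix is strictly upper triangular with a single nonzero diagonal, which is consistent with the triangular structure exploited in the solvability analysis below.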

Projecting (15) onto the space \(\langle \mathcal {T}_0(v), \mathcal {T}_1(v),\ldots , \mathcal {T}_N(v)\rangle \) and using the orthogonality of \(\{\mathcal {T}_i(v)\}_{i=0}^N\), the unknown coefficients satisfy the following block algebraic system of order n

where the index N on top of the matrices and vectors denotes the principal sub-matrices and sub-vectors of order \(N+1\), respectively, and \(\underline{c}_i^{N}=[ c_{i0},c_{i1},\ldots ,c_{iN}]\) is the unknown vector, which can be obtained by solving the \(n(N+1)\times n(N+1)\) algebraic system (17).

3.1 Numerical Solvability and Complexity Analysis

In this subsection the numerical solvability as well as the complexity analysis of the resulting system (17) are studied. In this respect, multiplying both sides of (17) by \(\mathcal {T}^{N}\) and assuming

the following algebraic system of order \(n(N+1)\)

with

and

can be obtained. Applying block LU-decomposition for the matrix \(\Phi \) we derive

with the following block matrices of order \(N+1\)

From (16), it is obvious that \(\mathcal {A}_{ij}^N\) is an invertible bi-diagonal upper triangular matrix with the diagonal entries one for \(i=j\) and is a single diagonal upper triangular matrix with diagonal entries zero for \(i \ne j\). Therefore, from (21) it can be concluded that the following matrices

are upper triangular matrices with diagonal entries zero and the matrices \(\{U_{i,i}\}_{i=1}^n\) are upper triangular matrices with diagonal entries one. This property is used in the following remark to justify the unique solvability of the resulting system (19).

Remark 3

From (20) we obtain

which concludes the invertibility of the coefficient matrix \(\Phi \) and thereby the linear algebraic system (19) is uniquely solvable.

Although the above remark indicates that the system (19) has a unique solution, solving this system directly can lead to less accurate approximations, owing to the high computational cost for large-scale systems or high degrees of approximation. In order to avoid this difficulty, instead of solving (19) directly, we solve the triangular block systems \(\underline{W}U=\underline{F}\) and \(\underline{C}L=\underline{W}\) separately with \(\underline{W}=[ \underline{w}_1,\underline{w}_2,\ldots ,\underline{w}_n]\). Due to the structure of the block matrix U as well as the non-singularity of the upper triangular matrix \(U_{j,j}\), the unknowns \(\{\underline{w}_j\}_{j=1}^n\) are obtained by solving the following n upper triangular algebraic systems of order \(N+1\)

and consequently, regarding the structure of block matrix L, the main unknowns \(\{\underline{c}_{i}^{\prime ^{N}}\}_{i=1}^n\) are computed by the following recurrence relation:

In fact, the main advantage of this approach is that it avoids solving the \((n(N+1))\times (n(N+1))\) system (19) directly; instead, the unknowns are calculated by solving n non-singular upper triangular systems of order \(N+1\) and a recursive relation. Finally, after obtaining \(\{\underline{c}_{i}^{N}\}_{i=1}^n\) by solving the lower triangular system (18), the Chebyshev Tau solutions (12) of the transformed system (10) can be calculated. Since the solutions of the main problem (1) and the transformed problem (10) are related by \(\{\hat{y}_j(v)=y_j(v^\gamma )\}_{j=1}^n\), the approximate solutions \(y_{j,N}(x)\) of the main problem (1) are given by

$$\begin{aligned} y_{j,N}(x)=\hat{y}_{j,N}(x^{1/\gamma }),\quad j=1,2,\ldots ,n. \end{aligned}$$
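The basic building block of this strategy, a triangular solve in the row-vector convention \(\underline{w}\,U=\underline{f}\) used above, can be sketched as follows. This is a generic substitution routine under the assumption that U is a dense upper triangular matrix with nonzero diagonal; the function name `solve_row_upper` is hypothetical, and the block recurrence of the paper is not reproduced here.

```python
def solve_row_upper(U, f):
    """Solve w U = f for the row vector w, with U upper triangular.

    Column j of the product only involves w[0..j], so the entries of w
    are found one after another in O(n**2) operations.
    """
    n = len(f)
    w = [0.0] * n
    for j in range(n):
        known = sum(w[i] * U[i][j] for i in range(j))
        w[j] = (f[j] - known) / U[j][j]
    return w


# Small check with a unit upper triangular matrix, as for the blocks U_{j,j}.
U = [[1.0, 2.0, 3.0],
     [0.0, 1.0, 4.0],
     [0.0, 0.0, 1.0]]
f = [1.0, 4.0, 14.0]
w = solve_row_upper(U, f)
```

Each such solve costs on the order of \((N+1)^2\) operations, whereas a dense elimination on the full system would scale cubically with \(n(N+1)\).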

4 Convergence Analysis

The purpose of this section is to analyze convergence properties of the proposed method and provide suitable error bounds for the approximate solutions in uniform norm. For this purpose, some of the required preliminaries are given and then the convergence theorem is proved.

Definition 4

The space \(C^m(\Lambda )\) for \(m \ge 0\) is the set of all m-times continuously differentiable functions on \(\Lambda \). For \(m=0\), the space \((C(\Lambda ), \Vert .\Vert _\infty )\) is the set of all continuous functions on \(\Lambda \) with the uniform norm \(\Vert f\Vert _\infty =\max \nolimits _{v \in \Lambda }|f(v)|\).

The Chebyshev-weighted \(L^2\)-space with respect to the shifted Chebyshev weight function \(\xi (v)=\frac{1}{\sqrt{v (1-v)}}\) is defined by

$$\begin{aligned}L_{\xi }^{2}(\Lambda )=\lbrace f:\Lambda \rightarrow \mathbb {R}, \Vert f\Vert _{\xi }<\infty \rbrace ,\end{aligned}$$

equipped with the norm

$$\begin{aligned} \Vert f \Vert _{\xi }^{2}=(f,f)_{\xi }=\int _{\Lambda }f^{2}(v)\xi (v)dv, \end{aligned}$$

where \((.,.)_{\xi }\) is the Chebyshev-weighted inner product formula.

The Chebyshev-weighted Sobolev space of order \(m \ge 0\) is defined by

$$\begin{aligned} H_{\xi }^m(\Lambda )=\{f:\Lambda \rightarrow \mathbb {R},~~\Vert f\Vert _{\xi ,m}<\infty \},\end{aligned}$$

equipped with the following norm and semi-norm

$$\begin{aligned} \Vert f\Vert _{\xi ,m}^2=\sum \limits _{k=0}^{m}{\Vert f^{(k)}\Vert _{\xi }^2},\quad |f|_{\xi ,m}=\Vert f^{(m)}\Vert _{\xi }. \end{aligned}$$

The \(L_{\xi }^{2}\)-orthogonal Chebyshev projection \(\pi _{N}:L_{\xi }^{2}(\Lambda )\rightarrow \mathbb {P}_N\) for the function \(f \in L_{\xi }^{2}(\Lambda )\) is defined by

$$\begin{aligned} (f-\pi _{N}f,\varphi )_{\xi }=0,\quad \forall \varphi \in \mathbb {P}_N, \end{aligned}$$

where \(\mathbb {P}_N\) is the space of all algebraic polynomials with degree at most N.
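The weighted inner product above can be evaluated numerically with Gauss–Chebyshev quadrature, as in the following sketch. It assumes \(\Lambda =[0,1]\), the interval implied by the weight \(\xi (v)\), and uses the standard representation \(\mathcal {T}_i(v)=\cos (i\arccos (2v-1))\) of the shifted Chebyshev polynomials; the function names are hypothetical.

```python
import math


def shifted_chebyshev(i, v):
    """Shifted Chebyshev polynomial of degree i on [0, 1]."""
    return math.cos(i * math.acos(2.0 * v - 1.0))


def weighted_inner_product(f, g, M=64):
    """Approximate (f, g)_xi on [0, 1] with weight 1 / sqrt(v (1 - v)).

    Uses the M-point Gauss-Chebyshev rule mapped from [-1, 1] to [0, 1];
    it is exact when f * g is a polynomial of degree at most 2M - 1.
    """
    total = 0.0
    for k in range(1, M + 1):
        t = math.cos((2 * k - 1) * math.pi / (2 * M))
        v = 0.5 * (t + 1.0)
        total += f(v) * g(v)
    return math.pi / M * total


ip_23 = weighted_inner_product(lambda v: shifted_chebyshev(2, v),
                               lambda v: shifted_chebyshev(3, v))
ip_00 = weighted_inner_product(lambda v: shifted_chebyshev(0, v),
                               lambda v: shifted_chebyshev(0, v))
ip_33 = weighted_inner_product(lambda v: shifted_chebyshev(3, v),
                               lambda v: shifted_chebyshev(3, v))
```

Distinct polynomials give a vanishing inner product, while the squared norms are \(\pi \) for degree zero and \(\pi /2\) otherwise; this orthogonality is what the Tau projection relies on.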

In the following lemma we present the truncation error \(\pi _N f-f\) in the uniform norm.

Lemma 5

[48] For any \(f \in H_{\xi }^\mu (\Lambda )\) with \(\mu \ge 1\), we have

where \(e_{\pi _N}f=f- \pi _{N} f\) is the truncation error and C is a positive constant independent of N.

In our analysis we will refer to the following Gronwall’s inequality:

Lemma 6

[18] (Gronwall’s inequality) Suppose that f is a non-negative and locally integrable function satisfying the following inequality

where \(b(v) \ge 0\). Then, there exists a constant c dependent on q such that

Now we are ready to present the fundamental result of this section, which provides suitable error bounds of the approximate solutions in the uniform norm.

Theorem 7

Assume that \(\{\hat{y}_{j,N}(v)\}_{j=1}^n\), given by (12) are the Chebyshev Tau solutions of the transformed Eq. (10). If \(\hat{J}^{\alpha _j}\hat{y}_{i}\in C^{\mu _{ji}+1}(\Lambda ),~~\hat{J}^{\alpha _j}\hat{p}_{j}\in C^{\rho _{j}+1}(\Lambda )\) and \(\hat{p}_j \in H_\xi ^{\epsilon _j}(\Lambda )\) for \(\mu _{ji}, \rho _{j}, \epsilon _j \ge 1\) and \(i,j=1, 2,\ldots , n\), then for sufficiently large values of N we have

for \(j=1,2,\ldots , n\), where \(\hat{e}_{j,N}(v)=\hat{y}_{j}(v)-\hat{y}_{j,N}(v)\) are the error functions and C is a generic positive constant independent of N.

Proof

Implementing the presented approach in the previous section for (10) leads to the following operator equation

for \(j=1,2,\ldots , n\). Subtracting (10) from (23) yields

since \(e_{\pi _N}(\hat{\phi }_j(v))=0\) for sufficiently large values of N. By some simple calculations, Eq. (24) can be rewritten as follows

and equivalently we have

where \(k_{ji}(v,w)=\dfrac{a_{ji}\gamma }{\Gamma (\alpha _j)}w^{\gamma -1}(v^{\gamma }-w^{\gamma })^{\alpha _{j}-1}\), and

Defining the vectors

the Eq. (25) is converted to the following matrix formulation

where

is a continuous function on \(\{(v,w):~ 0 \le w \le v \le 1\}\). From (27) we can write

where \(\Psi =\max \nolimits _{0 \le w \le v \le 1}{\vert K(v,w)\vert }< \infty \). Applying Gronwall’s inequality (i.e., Lemma 6) in (28) indicates

and thereby the relation (26) concludes

Using the inequality \(\Vert \hat{J}^{\alpha _j}f\Vert _\infty \le C \Vert f\Vert _\infty \) (see [31]), the inequality (29) can be rewritten as

Applying Lemma 5, we deduce

Under the assumption \(\hat{J}^{\alpha _j}\hat{y}_{i}\in C^{\mu _{ji}+1}(\Lambda )\) and using the first order Taylor formula, the inequality (31) implies

Also, from Lemma 5, we can conclude

and again, proceeding in the same way as in (31)–(32), we derive

in view of (33), and the assumption \(\hat{J}^{\alpha _j}\hat{p}_{j}\in C^{\rho _{j}+1}(\Lambda )\) for \(j=1, 2,\ldots ,n\). Inserting the inequalities (32)–(34) into (30) yields

in which

Evidently, the inequality (35) can be written in the following vector-matrix form

where

and M is a matrix of order n with the following entries

Therefore, for large values of N, the matrix M tends to the identity matrix and consequently the inequality (36) gives

which is the desired result. \(\square \)

5 Illustrative Examples

In this section, some test problems are solved using the proposed method to confirm its efficiency and applicability. All calculations were performed using Mathematica v11.2 on an Intel(R) Core(TM) i5-4210U CPU @ 2.40 GHz. When the exact solution is available, the errors are calculated by

and when the exact solution is not available, the errors are estimated by

where \(y_{j,2N}(x)\) and \(y_{j,N}(x)\) are approximations of the exact solution \(y_{j}(x)\), and N is the degree of approximation.

Example 1

Consider the following problem

with zero initial conditions and the following forcing functions

where \(_{\theta }F_{\tau }\big (\{a_1,\ldots a_\theta \};\{b_1,\ldots ,b_{\tau }\};z\big )\) is the generalized hypergeometric function.

The exact solutions are given by

with the following asymptotic behaviors near the origin

which agree with the results obtained in Theorem 2.

Applying the variable transformation (9) to this problem with \(\gamma =2\), the transformed Eq. (10) becomes

with the following infinitely smooth exact solutions

The transformed Eq. (37) is solved numerically via the proposed scheme, and the numerical errors together with the CPU time (s) are reported in Table 1 for different values of N. The reported results confirm that the proposed smoothing process removes the existing discontinuity in the derivatives of the exact solutions and produces reliable approximate solutions, especially for large values of N, in a very short CPU time.

Example 2

[41] Consider the following problem

where the exact solutions are given by

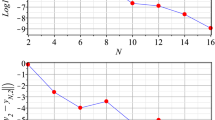

where \(E_{\delta }(x)\) is the one-parameter Mittag-Leffler function [31]. Clearly, the exact solutions are non-smooth at the origin with the asymptotic behavior \(O(\sqrt{x})\). This problem is solved using the proposed approach, and the obtained results are reported in Table 2 and Fig. 1. To compute the numerical errors, 100 terms of the Mittag-Leffler series are used. The presented numerical results indicate the good performance of the proposed scheme in approximating the solutions of (38), especially for large values of N, in a very short CPU time. Furthermore, Fig. 1 confirms the exponential-like rate of convergence predicted by Theorem 7, since the semi-log plot of the errors varies linearly with N.

Fig. 1 Semi-log representation of the numerical errors of Example 2 versus N
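For reference, a truncated evaluation of the one-parameter Mittag-Leffler function from its standard series \(E_{\delta }(z)=\sum _{k\ge 0} z^{k}/\Gamma (\delta k+1)\) can be sketched as follows. The default of 100 terms mirrors the truncation mentioned in the text; the function name and the use of `math.lgamma` to avoid overflow are illustrative choices rather than the authors' Mathematica code.

```python
import math


def mittag_leffler(delta, z, terms=100):
    """Truncated series for E_delta(z) = sum_k z**k / Gamma(delta * k + 1).

    Each term is formed in log space so that large Gamma values do not overflow.
    Intended for moderate |z|; for large negative z the alternating series
    suffers from cancellation.
    """
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(terms):
        magnitude = math.exp(k * log_abs_z - math.lgamma(delta * k + 1.0))
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * magnitude
    return total


value_exp = mittag_leffler(1.0, 1.0)     # E_1(z) = exp(z)
value_cos = mittag_leffler(2.0, -1.0)    # E_2(-x**2) = cos(x)
value_half = mittag_leffler(0.5, 1.0)    # E_{1/2}(z) = exp(z**2) * erfc(-z)
```

The three sample values can be compared with the known closed forms noted in the comments, which gives a quick check that the truncation is adequate for arguments of moderate size.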

Example 3

[20] Consider the following system of FDEs

where the exact solutions are

We have solved this problem via the proposed scheme for the values \(\alpha =\frac{1}{4}, \frac{1}{2}, \frac{2}{3}\) and obtained the exact solutions for degrees of approximation \(N \ge 5\). On the other hand, this problem was treated in Ref. [20] by applying a hybrid numerical method. In that method, after dividing the integration domain \(\Lambda \) into m subintervals, the approximate solutions were taken as a linear combination of non-polynomial functions in a neighborhood of the origin and as polynomials in the rest of the domain. The results presented in Ref. [20] for various values of \(\alpha \) and m are listed in Table 3. These results confirm that our method provides more accurate approximations than the scheme of [20].

5.1 Application

The following three examples are intended to illustrate the applicability of the proposed scheme in approximating the solutions of some real life and practical problems.

First we consider the well-known multi-term Bagley-Torvik equation, which has wide applications in engineering. This equation appears in modelling the movement of a thin, rigid plate in a viscous Newtonian fluid, where the plate is attached to a fixed point via a spring with a given spring constant [3]. Another application of this equation arises in studying the performance of a micro-electro-mechanical system (MEMS) instrument that is used to measure the viscosity of fluids encountered during oil well exploration [21].

Example 4

Consider the following Bagley-Torvik equation

in which the constants A, B, C and the function g(x) are known.

Here we set \(A=C=1\), \(B=\beta \sqrt{\pi }\), \(g(x)=0\), \(y(0)=1\) and \(y'(0)=0\) which is considered in [21] to study the performance of the MEMS system. In this case, the exact solution is given by

From [17], it can be seen that the main problem (39) is equivalent to the following constant coefficients system of multi-order FDEs

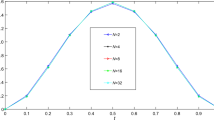

with \(y_1(x)=y(x)\). We solve (40) using the presented method and consider \(y_{N}(x)=y_{1,N}(x)\) as the approximate solution of the Bagley-Torvik equation (39). The obtained results are given in Table 4 and Fig. 2, which demonstrate the effectiveness and applicability of the proposed scheme.

Fig. 2 Semi-log representation of the numerical errors of Example 4 versus N with \(\beta =\frac{1}{5}\)

As the second practical example, we consider the following system of multi-order FDEs, which arises from modelling the linear electrical circuit shown in Fig. 3. This circuit consists of resistors, inductors, capacitors and voltage sources with known capacitances \(C_{j}\), inductances \(L_{j}\), voltages on the capacitances \(V_{C_{j}}\), source voltages \(V_{E_{j}}\), currents \(i_j\) for \(j=1,2\) and resistances \(R_{l}\) for \(l=1,2,3\).

Example 5

[28] Consider the following problem

with \(\alpha _{j}=\frac{j}{5}\) for \(1 \le j \le 4\). Here we set the parameters \(C_{1}=3\), \(C_{2}=2\), \(L_{1}=5\), \(L_{2}=7\), \(R_{1}=R_{3}=5/3\), \(R_{2}=11/6\), \(V_{E_{1}}=3\), \(V_{E_{2}}=6\) and \(d_1=d_2=d_3=d_4=0\).

This problem is evaluated by the proposed approach, and the results are reported in Table 5 and Fig. 4.

The numerical results show that the estimated errors decrease as the degree of approximation N increases. Moreover, the decay of the errors for large values of N in a very short CPU time indicates the reliability of the proposed approach in approximating the solutions of this problem.

Fig. 4 Semi-log representation of the numerical errors of Example 5 versus N

The next practical example is a fractional model of the Bloch equation which is used to study the spin dynamics and magnetization relaxation, in the simple case of a single spin particle at resonance in a static magnetic field.

Example 6

[33] Consider the following time fractional Bloch equations (TFBE)

where \(1/T_1^{\prime }=\tau _1^{1-\alpha }/T_1\), \(1/T_2^{\prime }=\tau _2^{1-\alpha }/T_2\) and \(\omega _0^{\prime }=\omega _0/\tau _2^{\alpha -1}\) are parameters with the unit of \((\text {sec})^{-\alpha }\). Here \(M_x(t)\), \(M_y(t)\) and \(M_z(t)\) represent the system magnetization (x, y, and z components), \(T_1\) is the spin-lattice relaxation time, \(T_2\) is the spin-spin relaxation time, \(M_0\) is the equilibrium magnetization, \(c_1\), \(c_2\) and \(c_3\) are given constants, \(\omega _0\) is the resonant frequency given by the Larmor relationship \(\omega _0=\sigma B_{0}\), where \(B_{0}\) is the static magnetic field (z-component) and \(\sigma /2\pi \) is the gyromagnetic ratio (42.57 MHz/Tesla for water protons).

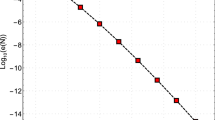

We set the parameters \(\alpha =1/6\), \(T_1^{\prime }=1\), \(T_2^{\prime }=3/2\), \(M_0=2\), \(c_1=0\), \(c_2=2\), \(c_3=0\) and \(\omega _0=4\pi /15 \), and solve the problem via the proposed approach. The numerical results are presented in Table 6 and Fig. 5, which confirm the efficiency and reliability of the proposed scheme. Indeed, Fig. 5 indicates that the familiar spectral accuracy is achieved, because the logarithm of the errors behaves almost linearly with respect to N. Furthermore, the reported errors and CPU times, especially for large values of N, confirm that our implementation prevents the growth of rounding errors and their degrading effect on the accuracy of the method.

Fig. 5 Semi-log representation of the numerical errors of Example 6 versus N
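The linear behavior on a semi-log scale can be quantified by fitting a straight line to \(\log _{10}\) of the errors against N, as in the sketch below. The data used here are synthetic and purely hypothetical (errors of the form \(10^{-N/2}\)); they are not the values of Table 6, and the function name `semilog_slope` is an illustrative choice.

```python
import math


def semilog_slope(degrees, errors):
    """Least-squares slope of log10(error) against the degree N.

    A roughly constant negative slope indicates exponential-like decay
    of the form error ~ C * 10**(slope * N).
    """
    logs = [math.log10(e) for e in errors]
    n = len(degrees)
    mean_deg = sum(degrees) / n
    mean_log = sum(logs) / n
    num = sum((d - mean_deg) * (l - mean_log) for d, l in zip(degrees, logs))
    den = sum((d - mean_deg) ** 2 for d in degrees)
    return num / den


# Synthetic, hypothetical data: errors decaying exactly like 10**(-N / 2).
degrees = [4, 8, 12, 16]
errors = [10.0 ** (-0.5 * N) for N in degrees]
slope = semilog_slope(degrees, errors)
```

For measured errors, a slope that stays approximately constant until the errors reach the level of rounding is the usual numerical signature of spectral accuracy.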

6 Conclusion

In this paper, an efficient formulation of the Chebyshev Tau method for approximating the solutions of constant coefficients systems of multi-order FDEs was developed and analyzed. To monitor the smoothness properties of the exact solutions, series representations of the solutions near the origin were obtained, which indicate that some derivatives of the exact solutions may suffer from a discontinuity at the origin, depending on the fractional derivative orders. To fix this weakness and make the Chebyshev Tau method applicable for obtaining high-order approximations, a regularization strategy was applied. The convergence of the presented scheme was also analyzed, and the effectiveness and reliability of the proposed approach were confirmed using some illustrative examples.

References

Abdulaziz, O., Hashim, I., Momani, S.: Solving systems of fractional differential equations by homotopy-perturbation method. Phys. Lett. A 372(4), 451–459 (2008)

Atabakzadeh, M.H., Akrami, M.H., Erjaee, G.H.: Chebyshev operational matrix method for solving multi-order fractional ordinary differential equations. Appl. Math. Model. 37(20–21), 8903–8911 (2013)

Bagley, R.L., Torvik, P.J.: On the fractional calculus model of viscoelastic behavior. J. Rheol. 30(1), 133–155 (1986)

Bataineh, A.S., Alomari, A.K., Noorani, M.S.M., Hashim, I., Nazar, R.: Series solutions of systems of nonlinear fractional differential equations. Acta Appl. Math. 105(2), 189–198 (2009)

Bhrawy, A., Alhamed, Y., Baleanu, D., Al-Zahrani, A.: New spectral techniques for systems of fractional differential equations using fractional-order generalized Laguerre orthogonal functions. Fract. Calc. Appl. Anal. 17(4), 1137–1157 (2014)

Bhrawy, A.H., Zaky, M.A.: Shifted fractional-order Jacobi orthogonal functions: application to a system of fractional differential equations. Appl. Math. Model. 40(2), 832–845 (2016)

Biazar, J., Farrokhi, L., Islam, M.R.: Modeling the pollution of a system of lakes. Appl. Math. Comput. 178(2), 423–430 (2006)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods. Fundamentals in Single Domains. Springer, Berlin (2006)

Cardoso, L.C., Dos Santos, F.L.P., Camargo, R.F.: Analysis of fractional-order models for hepatitis B. Comput. Appl. Math. 37(4), 4570–4586 (2018)

Changpin, L., Zeng, F.: Numerical Methods for Fractional Calculus. Chapman and Hall/CRC, Boca Raton (2015)

Chen, W.C.: Nonlinear dynamics and chaos in a fractional-order financial system. Chaos Solitons Fractals 36(5), 1305–1314 (2008)

Chen, Y., Ke, X., Wei, Y.: Numerical algorithm to solve system of nonlinear fractional differential equations based on wavelets method and the error analysis. Appl. Math. Comput. 251, 475–488 (2015)

Daftardar-Gejji, V., Jafari, H.: Adomian decomposition: a tool for solving a system of fractional differential equations. J. Math. Anal. Appl. 301(2), 508–518 (2005)

Demirci, E., Unal, A., Özalp, N.: A fractional order SEIR model with density dependent death rate. J. Math. Stat. 40(2), 287–295 (2011)

Demirci, E., Ozalp, N.: A method for solving differential equations of fractional order. J. Comput. Appl. Math. 236(11), 2754–2762 (2012)

Diethelm, K., Siegmund, S., Tuan, H.T.: Asymptotic behavior of solutions of linear multi-order fractional differential systems. Fract. Calc. Appl. Anal. 20(5), 1165–1195 (2017)

Diethelm, K.: The Analysis of Fractional Differential Equations. Springer, Berlin (2010)

Dragomir, S.S.: Some Gronwall Type Inequalities and Applications. Nova Science Publishers, New York (2003)

Ertürk, V.S., Momani, S.: Solving systems of fractional differential equations using differential transform method. J. Comput. Appl. Math. 215(1), 142–151 (2008)

Ferrás, L.L., Ford, N.J., Morgado, M.L., Rebelo, M.: A hybrid numerical scheme for fractional-order systems. In: International Conference on Innovation, Engineering and Entrepreneurship, Vol. 505, pp. 735–742. Springer, Cham (2018)

Fitt, A.D., Goodwin, A.R.H., Ronaldson, K.A., Wakeham, W.A.: A fractional differential equation for a MEMS viscometer used in the oil industry. J. Comput. Appl. Math. 229(2), 373–381 (2009)

Ghanbari, F., Ghanbari, K., Mokhtary, P.: High-order Legendre collocation method for fractional order linear semi explicit differential algebraic equations. Electron. Trans. Numer. Anal. 48, 387–409 (2018)

Ghanbari, F., Ghanbari, K., Mokhtary, P.: Generalized Jacobi Galerkin method for nonlinear fractional differential algebraic equations. Comput. Appl. Math. 37, 5456–5475 (2018)

Ghanbari, F., Mokhtary, P., Ghanbari, K.: On the numerical solution of a class of linear fractional integro-differential algebraic equations with weakly singular kernels. Appl. Numer. Math. 144, 1–20 (2019)

Ghanbari, F., Mokhtary, P., Ghanbari, K.: Numerical solution of a class of fractional order integro-differential algebraic equations using Müntz–Jacobi Tau method. J. Comput. Appl. Math. 362, 172–184 (2019)

Hamri, N.E., Houmor, T.: Chaotic dynamics of the fractional order nonlinear Bloch system. Electron. J. Theor. Phys. 8(25), 233–244 (2011)

Hille, E.: Lectures on Ordinary Differential Equations. Addison-Wesley, Reading (1969)

Kaczorek, T.: Positive linear systems consisting of n subsystems with different fractional orders. IEEE Trans. Circuits Syst. I. Regul. Pap. 58(6), 1203–1210 (2011)

Khader, M.M., El Danaf, T.S., Hendy, A.S.: A computational matrix method for solving systems of high order fractional differential equations. Appl. Math. Model. 37(6), 4035–4050 (2013)

Khader, M.M., Sweilam, N.H., Mahdy, A.M.S.: Two computational algorithms for the numerical solution for system of fractional differential equations. Arab J. Math. Sci. 21(1), 39–52 (2015)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Liu, W., Chen, K.: Chaotic behavior in a new fractional-order love triangle system with competition. J. Appl. Anal. Comput. 5(1), 103–113 (2015)

Magin, R., Feng, X., Baleanu, D.: Solving the fractional order Bloch equation. Concepts Magn. Reson. 34(1), 16–23 (2009)

Mokhtary, P.: Discrete Galerkin method for fractional integro-differential equations. Acta Math. Sci. Ser. B Engl. Ed. 36(2), 560–578 (2016)

Mokhtary, P.: Numerical analysis of an operational Jacobi Tau method for fractional weakly singular integro-differential equations. Appl. Numer. Math. 121, 52–67 (2017)

Mokhtary, P.: Numerical treatment of a well-posed Chebyshev Tau method for Bagley-Torvik equation with high-order of accuracy. Numer. Algorithms 72, 875–891 (2016)

Mokhtary, P., Ghoreishi, F.: Convergence analysis of spectral Tau method for fractional Riccati differential equations. Bull. Iran. Math. Soc. 40(5), 1275–1290 (2014)

Mokhtary, P.: Reconstruction of exponentially rate of convergence to Legendre collocation solution of a class of fractional integro-differential equations. J. Comput. Appl. Math. 279, 145–158 (2015)

Mokhtary, P., Ghoreishi, F.: Convergence analysis of the operational Tau method for Abel-type Volterra integral equations. Electron. Trans. Numer. Anal. 41, 289–305 (2014)

Momani, S., Odibat, Z.: Numerical approach to differential equations of fractional order. J. Comput. Appl. Math. 207(1), 96–110 (2007)

Odibat, Z.M.: Analytic study on linear systems of fractional differential equations. Comput. Math. Appl. 59(3), 1171–1183 (2010)

Petráš, I.: Chaos in the fractional-order Volta’s system: modeling and simulation. Nonlinear Dyn. 57(1–2), 157–170 (2009)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Qin, S., Liu, F., Turner, I., Vegh, V., Yu, Q., Yang, Q.: Multi-term time-fractional Bloch equations and application in magnetic resonance imaging. J. Comput. Appl. Math. 319, 308–319 (2017)

Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, New York (2011)

Sweilam, N.H., Khader, M.M., Al-Bar, R.F.: Numerical studies for a multi-order fractional differential equation. Phys. Lett. A 371(1–2), 26–33 (2007)

Wang, J., Xu, T.Z., Wei, Y.Q., Xie, J.Q.: Numerical solutions for systems of fractional order differential equations with Bernoulli wavelets. Int. J. Comput. Math. 1–20 (2018)

Xie, Z., Li, X., Tang, T.: Convergence analysis of spectral Galerkin methods for Volterra type integral equations. J. Sci. Comput. 53(2), 414–434 (2012)

Yu, Y., Li, H.X., Wang, S., Yu, J.: Dynamic analysis of a fractional-order Lorenz chaotic system. Chaos Solitons Fractals 42(2), 1181–1189 (2008)

Yu, Q., Liu, F., Turner, I., Burrage, K.: Numerical simulation of the fractional Bloch equations. J. Comput. Appl. Math. 255, 635–651 (2014)

Zhu, H., Zhou, S., Zhang, J.: Chaos and synchronization of the fractional-order Chua’s system. Chaos Solitons Fractals 39(4), 1595–1603 (2009)

Cite this article

Faghih, A., Mokhtary, P. An Efficient Formulation of Chebyshev Tau Method for Constant Coefficients Systems of Multi-order FDEs. J Sci Comput 82, 6 (2020). https://doi.org/10.1007/s10915-019-01104-z

DOI: https://doi.org/10.1007/s10915-019-01104-z