Abstract

In this paper, we introduce a class of variational models for the restoration of ultrasound images corrupted by noise. The proposed models involve the convex or nonconvex total generalized variation regularization. The total generalized variation regularization ameliorates the staircasing artifacts that appear in the restored images of total variation based models. Incorporating total generalized variation regularization with nonconvexity helps preserve edges in the restored images. To realize the proposed convex model, we adopt the alternating direction method of multipliers, and the iteratively reweighted \(\ell _1\) algorithm is employed to handle the nonconvex model. These methods result in fast and efficient optimization algorithms for solving our models. Numerical experiments demonstrate that the proposed models are superior to other state-of-the-art models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In various image systems, images are often corrupted by noise during the image acquisition process. Therefore, image denoising is an important and elemental problem in image processing. It aims to remove noise in the observed images and find the best possible restored image corresponding to the unknown clean image. In this article, we consider the denoising problem for ultrasound images. The removal of additive Gaussian noise has been well-studied and there are some methods that produce remarkable denoising results. However, ultrasound images involve more complicated noise such as multiplicative noise [1, 42, 43].

Let \(\varOmega \) be a bounded open domain with a compact Lipschitz boundary. Experimental measurements in [28] show that an observed ultrasound image \(f:\varOmega \rightarrow \mathbb {R}\) can be modeled using the following form:

where \(u:\varOmega \rightarrow \mathbb {R}\) is a true image and n is a Gaussian random variable with zero mean and a standard deviation \(\sigma \), i.e. \(n \sim \mathcal {N}(0,\sigma )\).

To reconstruct u from the noisy data f, various filtering-based methods, such as Lee filter [26], Kuan filter [25], and PDE-based methods have been proposed. In particular, from the degradation model (1), Krissian et al. [24] derived the following convex data-fidelity term

In a variational framework, a data-fidelity function is usually derived from the maximum a posteriori (MAP) estimation based on the degradation model and a probability density function of noise. In this case, the degradation model in (1) has a singular form. Hence, finding a proper data-fidelity function according to the MAP estimation is very complicated. In fact, the data-fidelity term in (2) is not derived from the MAP estimation. This term was obtained by adapting the work of Rudin et al. [39] dealing with the Gaussian distribution.

Several variational models involving the term (2) have been proposed for solving the inverse problem in (1). First, Jin et al. [21] proposed the following total variation (TV) regularization based model:

where \(\lambda >0\) is a tuning parameter, and TV [39] is defined as

where the vector measure Du represents the directional or weak gradient of u, and \(\Vert \cdot \Vert _\infty \) is the essential supremum norm. This regularization has been widely used in image processing owing to its edge-preserving property. The authors in [21] proved the existence and uniqueness of the solution of model (3), and it was shown that the solution belongs to \([\min (f),\max (f)]\) when \(f > 0\). From now on, we call the model in (3) the “TV model”. More recently, the well-balanced speckle noise reduction (WBSN) model, associated with an edge detection technique, was proposed in [3]:

where g is a smooth non-increasing function, defined as \(g(x) = \frac{1}{1+k|(G_\sigma *u) |(x)}\) with a Gaussian function \(G_\sigma \) and a constant value \(k>0\). To minimize the model (4) with a fixed g, the authors in [3] obtained a numerical solution by solving the Euler–Lagrangian equation for u and then updating g from its definition.

Despite the benefits of TV regularization, TV-based methods cause staircasing artifacts in the restored images. There have been many efforts to improve the performance of TV using higher-order regularization. In [12, 29], the second-order TV regularization was proposed to reduce the staircasing effects. Furthermore, several hybrid regularization methods combining TV with a higher-order regularizer have been considered. The first one is a convex combination of TV and second-order TV [27, 37], which takes advantages of both first- and second-order derivatives. In an earlier work, the infimal convolution TV (ICTV) regularizer [10, 40] was developed, which takes the infimal convolution of TV and second-order TV. Recently, as a generalization of ICTV, the total generalized variation (TGV) regularizer [6] was proposed. The TGV regularization results in restored images with sufficiently denoised smooth regions and well-preserved edges. In this work, we employ the TGV regularization for the removal of noise in the ultrasound images.

In early works, numerical algorithms [9, 39] that directly solve the Euler–Lagrangian equation using the finite difference method were used to find a minimizer of an energy functional. Recently, because of the development of the operating splitting technique [20], many unconstrained minimization problems in image processing can be converted into linearly constrained problems with simple objective functions. This also leads to numerous iterative algorithms [11, 46, 47] for solving linearly constrained minimization problems, which commonly have some subproblems that can be solved alternately. In particular, the alternating direction method of multipliers (ADMM) [15, 20] is one of the most well-known convex optimization algorithms. The ADMM is equivalent to the Douglas-Rachford splitting algorithm [14], and it has been applied to efficiently solve various image processing problems [18, 19, 41]. We adopt the ADMM here to solve our convex minimization problem.

Since the seminal work of Geman and Geman [17], many studies [4, 16, 32, 38] have demonstrated that nonconvex regularization performs better than convex regularization for image restoration by preserving edges. Specifically, Krishnan et al. [23] demonstrated the superiority of the nonconvex \(\ell _q\)-norm of gradient for \( 0.5< q < 0.8\) over its \(\ell _1\) norm in an image denoising problem. Moreover, several studies [22, 36] have utilized a nonconvex hybrid higher-order regularizer and shown its superiority over nonconvex TV regularizers. Recently, Ochs et al. [34, 35] introduced a nonconvex TGV for some applications in computer vision. We also utilize a nonconvex TGV for the ultrasound image denoising problem. On the other hand, many efficient algorithms for solving nonconvex minimization problems have been developed [2, 30, 31, 33, 44]. Recently, the iteratively convex majorization-minimization methods for solving nonsmooth nonconvex optimization problems have been proposed in [34, 35] with convergence analysis. These methods are the generalized versions of the reweighted \(\ell _1\) algorithm in [8]. In this work, we exploit the algorithm in [34] for solving our nonconvex model.

In this article, we introduce two TGV based minimization models for the restoration of ultrasound images polluted by noise. We make use of the convex or nonconvex TGV as a regularization term in our minimization problems. The TGV regularization allows the smooth transition regions in ultrasound images to be restored naturally. In addtion, the nonconvex TGV preserves the edges of the restored images well. We also present a convergence analysis for the convex TGV model and efficient optimization algorithms for solving the proposed convex or nonconvex models.

The rest of our paper is organized as follows. In Sect. 2, we review the TGV regularization and describe the ADMM for the general convex minimization problem with linear constrains. We also present the iteratively reweighted \(\ell _1\) algorithm for the linearly constrained nonconvex minimization problem. In Sect. 3, we introduce our minimization models with the convex TGV or nonconvex TGV regularization for ultrasound image denoising. Specifically, Sect. 3.1 presents the convergence analysis for our convex TGV model and an optimization algorithm for solving the model. Section 3.2 describes the nonconvex TGV-based model and its optimization algorithm. In Sect. 4, we provide some numerical experiments with comparisons to existing denoising models. Lastly, in Sect. 5, we summarize this work and discuss future work.

2 Background

2.1 Total Generalized Variation

The total generalized variation (TGV) was proposed by Bredies et al. [6], as a generalization of the infimal convolution of TV and second-order TV regularizers [10], as follows:

where \(Sym^k(\mathbb {R}^d)\) is the space of symmetric k-tensors on \(\mathbb {R}^d\) as

and the \(\mathcal {C}^k_c(\varOmega ,Sym^k(\mathbb {R}^d))\) represents the space of compactly supported symmetric tensor fields. Here, \(\alpha = (\alpha _0,\alpha _1,\ldots ,\alpha _{k-1})\) is a fixed positive vector. When \(k=1\) and \(\alpha _0=1\), the definition of TGV is identical to that of TV, which implies that TGV is a generalization of TV.

According to [6], the \(TGV^k_\alpha \) can be represented as a k-fold infimal convolution by employing the Fenchel–Rockafellar duality formula, which is given by

where \(u_0=u\), \(u_k = 0\), \(u_j\in C_c^{k-j}(\varOmega ,Sym^j(\mathbb {R}^d))\) for all \(j=1,\ldots ,k\), and \(\mathcal {E}\) is the distributional symmetrized derivative, i.e., \(\mathcal {E}(u_{j-1})=\frac{\nabla u_{j-1}+(\nabla u_{j-1})^T}{2}\); for instance, when \(k=2\), \(TGV^2_\alpha \) involves the \(l_1\)-norm of \(\mathcal {E}(u_0)- u_1=\nabla u -u_1\) and \(\mathcal {E}(u_1)\) with appropriate weights. In this case, the minimization in (6) can be interpreted as an optimal balancing between the first- and second-order derivatives of u in terms of sparse penalization (via the Radon norm). Therefore, from this representation (6), one can say that \(TGV^k_\alpha (u)\) automatically balances the first- and higher-order derivatives (up to the order k), rather than using any fixed combination. As a result, it reduces the staircasing effects of the TV functional.

In this work, we particularly utilize the second order TGV, obtained by letting \(k=2\) in (5). The followings describe several properties of the second order TGV, denoted by \(TGV_\alpha ^2\) [5, 7]. First, \(TGV_\alpha ^2:L^p(\varOmega ) \rightarrow \mathbb {R}\cup \{\infty \}\) is a proper, convex, and lower semicontinuous function for \(1\le p< \infty \). Moreover, there exist some positive constants c and C that, for any \(u\in L^1(\varOmega )\), satisfy

Lastly, \(TGV_\alpha ^2\) satisfies the Poincaré inequality, i.e., there exists a positive constant \(C'\) such that for any \(u\in L^1(\varOmega )\),

where P is a linear projection to the space of affine functions. Using these properties, we will prove the existence and uniqueness of the solutions of our proposed model in the next section.

2.2 Alternating Direction Method of Multipliers

This section reviews a convex optimization algorithm called the alternating direction method of multipliers (ADMM).

Let us consider the following linearly constrained minimization problem:

where \(H : \mathbb {R}^n \rightarrow \mathbb {R}\cup \{\infty \}\) and \(G : \mathbb {R}^m \rightarrow \mathbb {R}\cup \{\infty \}\) are convex, proper, and lower semicontinuous functions, \(A \in \mathbb {R}^{k\times n}, B\in \mathbb {R}^{k\times m}\), and \(b\in \mathbb {R}^k\).

The augmented Lagrangian function for problem (8) is given by

where \(\lambda \) is a Lagrange multiplier vector or dual variable and \(\mu >0\) is a penalty parameter.

The augmented Lagrangian method minimizes \(\mathcal {L}_{\mathcal {A}}\) over \(\mathbf {x}\) and \(\mathbf {y}\) jointly for fixed \(\lambda \) and then updates \(\lambda \) for fixed \(\mathbf {x}\) and \(\mathbf {y}\). Since the augmented Lagrangian function has a coupled penalty term with respect to (\(\mathbf {x}\), \(\mathbf {y}\)), it is hard to solve the subproblem over (\(\mathbf {x}\), \(\mathbf {y}\)) in many cases. To overcome this drawback, the ADMM alternately solves the subproblem, by minimizing the augmented Lagrangian function \(\mathcal {L}_{\mathcal {A}}\) over one variable (\(\mathbf {x}\) or \(\mathbf {y}\)) with the other variable fixed and performs only one outer iteration, which yields

where \(\nu \in (0,\frac{1+\sqrt{5}}{2})\) is a parameter. The convergence of the ADMM was proved in [13] as follows:

Theorem 1

Assume that there exists a saddle point \((\mathbf {x}_*,\mathbf {y}_*,\mathbf {\lambda }_*)\) of problem (8) and that A and B have full column rank or H and G are coercive. Then, for any \(\nu \in (0,\frac{1+\sqrt{5}}{2})\), the tuple (\(\mathbf {x}_k,\mathbf {y}_k,\mathbf {\lambda }_k\)) generated by the ADMM (9) satisfies

The ADMM is adopted to solve our convex minimization problem.

2.3 Iterative Reweighted \(\ell _1\) Algorithm

In this subsection, we recall the iteratively reweighted \(\ell _1\) algorithm (IRLA) [34], which was proposed for solving nonconvex optimization problems.

For a convex function f, a vector g is called a subgradient at a point \(\mathbf {x}\) if for any vector \(\mathbf {y}\), \(f(\mathbf {y})-f(\mathbf {x}) - g^T(\mathbf {y} - \mathbf {x})\ge 0\) is satisfied. The set of all subgradients at \(\mathbf {x}\) is called the subdifferential at \(\mathbf {x}\) and is denoted by \(\partial f(\mathbf {x})\). If \(\tilde{f}\) is a concave function, then \(-\tilde{f}\) is a convex function. An element of \(-\partial (-\tilde{f})\) is called a limiting-supergradient of \(\tilde{f}\).

Let us consider the following nonconvex minimization optimization problem with linear constraints:

where \(E_1\) is a proper, lower semicontinuous, and convex function, \(E_2\) is a concave and coordinatewise nondecreasing function, and \(|\cdot |\) is coordinatewise. When the IRLA is applied to problem (10), it leads to the following iterative algorithm,

where \(\tilde{\mathbf {x}}_k\) is a limiting-supergradient of \(E_2(\cdot )\) at \(|\mathbf {x}_k|\). If \(E_2\) is a differentiable function, then the limiting-supergradient of \(E_2\) is given by \(\nabla E_2\), from the property of the subdifferential.

The convergence of IRLA was proved in [34]:

Theorem 2

Let \((\mathbf {x}_{k+1})\) be the sequence generated by the IRAL algorithm (11), and assume that \(E_1(\mathbf {x}) + E_2(|\mathbf {x}|) \rightarrow \infty \) as \(\Vert \mathbf {x}\Vert \rightarrow \infty \), and \(A\mathbf {x}=b\). Then, \((\mathbf {x}_{k+1})\) is bounded and has at least one accumulation point.

In the following section, we apply the IRLA to solve our proposed nonconvex model.

3 Proposed Models and Algorithms

In this section, we present the convex or nonconvex TGV regularization based models for ultrasound image denoising. We also present efficient optimization algorithms to solve our proposed models by utilizing the ADMM and the IRLA.

3.1 Convex TGV Model

First, we propose the following minimization model, which includes the the second-order TGV regularizer and the data fidelity term in (2):

where \(S(\varOmega ) = \{u \in L^2(\varOmega ):u>0\}\) and \(\gamma \) is a regularization parameter that controls the balance between the TGV regularization and data fidelity terms. From the definition of \(TGV^2_\alpha (u)\) in (6), the TGV model (12) can be reformulated as follows

where \(\alpha _1,\,\alpha _0>0\) are parameters that balance the first- and second-order regularization terms. It is straightforward that the model (12) is convex, due to the convexity of the data-fidelity and TGV terms, as shown in [24] and [7], respectively.

The following theorem exhibits the existence and uniqueness of the solutions for the proposed model in (12).

Theorem 3

The proposed variational model in (12) admits a unique solution.

Proof

The proof of this theorem is given in the appendix, which is based on the properties of the second-order TGV. \(\square \)

3.1.1 ADMM for Solving Our Model (12)

In this subsection, we introduce an optimization algorithm for solving our convex TGV model in (12). From its equivalent model in (13), we can derive the discrete \(\text {TGV}^2_\alpha \) functional as

where the operators \(\nabla u\) and \(\mathcal {E}(v)\) are given by

With this discrete TGV formulation, the discretized version of our proposed model in (13) is as follows:

Here, we assume that \(f_{i,j} \ne 0\) for all \((i,j)\in \varOmega \) (see Remark 1 for further explanation). Furthermore, the set \(S(\varOmega )\) is not a closed set. Hence, we consider the closed set \(\bar{S}(\varOmega )=\{u\in L^2(\varOmega ) : u \ge 0\}\) instead of \(S(\varOmega )\) for the numerical algorithms. Since the term \(\frac{(f-u)^2}{u}\) blows up at \(u=0\), the close set \(\bar{S}(\varOmega )\) is a simple relaxation of \(S(\varOmega )\). Therefore, we solve the following minimization problem instead of the original problem in (14):

We now introduce three auxiliary variables: \(z,d = (d_1,d_2)^T\), and \(w = (w_1,w_2,w_3)^T\). Using these auxiliary variables, the unconstrained model in (15) can be rewritten as the following constrained form,

Letting \(D(z) = \left\langle \frac{(f-z)^2}{z},\mathbf {1} \right\rangle \) and setting

the constrained problem in (16) can be rewritten in the form in (8), so we can use the ADMM to solve it. The augmented Lagrangian function for problem (16) is given by

The ADMM can then applied to problem (16) as follows,

The variables z, d, and w in the augmented Lagrangian function are independent of each other. Hence, we can solve the subproblem for \((z_{k+1}, d_{k+1}, w_{k+1})\) in the ADMM separately. We can then obtain the ADMM for model (16) as follows,

From Theorem 1, we can derive the following convergence result.

Theorem 4

For any \(\nu \in (0,\frac{1+\sqrt{5}}{2})\), the tuple \((z_k,d_k,w_k,u_k,v_k,\lambda ^1_k,\lambda ^2_k,\lambda ^3_k)\) generated by the ADMM (19) converges to a saddle point \((z_*,d_*,w_*,u_*,v_*,\) \(\lambda ^1_*,\lambda ^2_*,\lambda ^3_*)\) of problem (16).

Proof

Since D(z) is not convex when \(z < 0\), we can rewrite the constrained model in (16) as the following equivalent formula

where \(\tilde{D}(z)\) is the extended value function of \(\frac{(f-z)^2}{z}\), which is defined as

It is trivial to show that \(\tilde{D}(z)\) is convex, proper, and lower semi-continuous.

Moreover, the z-subproblem in the ADMM (the third line in (19)) is equivalent to

Hence, the tuple generated by the ADMM (19) is exactly the same as the tuple generated by the ADMM when it is applied to problem (20). Since A and B have full column rank, the assertion of this theorem follows from Theorem 1. \(\square \)

Solving the subproblems in (19). We now solve the subproblems in the ADMM (19). First, the subproblems for \(d_{k+1}\) and \(w_{k+1}\) in (19) have closed form solutions obtained using the shrink operator as follows,

where the shrink operator \(\text {shrink}(s,t)\) is defined as

To solve the z-subproblem, we take the partial derivative of the energy with respect to z, which leads to the following normal equation:

Thus, the exact solution of z-subproblem can be obtained as a positive solution of the following cubic equation:

The following Lemma shows that this cubic equation (21) has one real positive solution.

Lemma 1

The cubic equation (21) has only one positive solution.

Proof

Let \((t_1,t_2,t_3)\) be the solution of the cubic equation in (21). It follows from (21) that

Let \(\alpha =t_2 + t_3\) and \(\beta = t_2 \cdot t_3\). It is trivial to find that a cubic equation has at least one real solution. Thus, we let \(t_1\) be a real solution of (21) without loss of generality. It is easy to show that \(t_i \ne 0\) for all \(i=1,2,3\).

Case (1) Assume that \(t_1\) is negative. Since \(\frac{\gamma f^2}{\mu } > 0\), \(t_2\cdot t_3 < 0\). Using this inequality and (22), we have \(t_1(t_2+t_3) > 0\) and thus \(t_2+t_3 < 0\) is satisfied. Thus, we obtain

Because \((t_2,t_3)\) are the solutions of \(t^2 - \alpha t + \beta = 0\), they satisfy the following equations without loss of generality:

From (23), \(t_2\) and \(t_3\) are real numbers, \(t_3\) is negative, and \(t_2\) is positive.

Case (2) Assume that \(t_1\) is positive. It is enough to show that \(t_2\) and \(t_3\) are either complex numbers or negative. Because \(\frac{\gamma f^2}{\mu } > 0\), then \(t_2\cdot t_3 > 0\). Similar to the case 1, we have \(t_2 + t_3 < 0\), which leads to

Without loss of generality, \((t_2,t_3)\) can be represented as

If \(\alpha ^2 - 4\beta <0\), then \((t_2,t_3)\) are complex numbers. If \(\alpha ^2 - 4\beta \ge 0\), it follows from (24) that \(\alpha \pm \sqrt{\alpha ^2 - 4 \beta }\) are negative. Thus, in this case, \((t_2,t_3)\) are negative. \(\square \)

In practice, we compute the roots of the cubic Eq. (21), using the cubic formula that is the closed-form solution for a cubic equation. Then we choose the positive one from the three solutions.

Lastly, the subproblem for \((u_{k+1},v_{k+1})\) in (19) is a least square problem,

with \(b = (z_{k+1}-\lambda _k^1/\mu , d_{k+1} - \lambda _{k}^2/\mu , w_{k+1} -\lambda _{k}^3/\mu )^T\). Hence, the solution \(\mathbf {y}_{k+1}\) of the above least square problem is the solution of \(B^TB \mathbf {y}=-B^Tb\). The matrix \(B^T B\) is given by

The block matrices in \(B^T B\) can be diagonalized by the Fourier transform under the periodic boundary condition. Thus, solutions \((u_{k+1},v_{k+1})\) in our algorithm can be found explicitly and easily using the Fourier transform and the block matrix inversion formula.

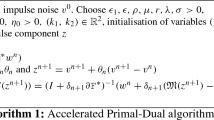

The ADMM for solving model (12) is summarized in Algorithm 1.

Remark 1

If \(f_{i,j}=0\), the equality \(\sqrt{u}_{i,j} = -n_{i,j}\) has to be satisfied by the degraded model in (1). In this case, the intensity value of the original image u at (i, j) must be the square of the Gaussian noise value \(n_{i,j}^2\) and moreover, \(n_{i,j}\) must have a negative value. However, this phenomenon happens with a very low probability. Hence, we can assume that \(f_{i,j} \ne 0\) for all \((i,j)\in \varOmega \) in practice.

3.2 Nonconvex TGV Model

In many prior methods, the nonconvex TV-based models minimize the support of the image gradient, which leads to piecewise-constant restored images. They also yield staircase artifacts near smooth transition regions. Therefore, a nonconvex hybrid TV regularizer [36] has been recently proposed, which is a nonconvex version of a convex combination of the first- and second-order TV. This efficiently deals with the discontinuous and smooth regions, producing visually remarkable solutions.

Here, we utilize a nocnonvex version of \(TGV^2_\alpha \), since \(TGV^2_\alpha \) automatically balances the first- and second-order derivatives, rather than using any fixed combination. Thus, we introduce a nonconvex version of our TGV model in (12), which employs a nonconvex TGV regularization (NTGV), as follows:

where \(\phi _i\) is a nonconvex log function, such as \(\phi _i(s)= \frac{1}{\rho _i}\log (1+\rho _i s)\) with parameter \(\rho _i > 0\). The parameter \(\rho _1\) and \(\rho _2\) control the nonconvexity of the first- and second-order regularization terms. Here, we adopt the nonconvex log function among some possible nonconvex functions. It is hard to solve the minimization problem involving the nonconvex \(\ell _q\)-norm regularizer because finding a limiting-supergradient of \(\Vert \cdot \Vert _q\) at zero is difficult. Moreover, according to the work in [45], the log function is suitable for the reconstruction of piecewise-smooth images, while the fractional function is more proper for the reconstruction of piecewise-constant images. Real ultrasound images are mostly piecewise-smooth, thus, we utilize the log function as our nonconvex function \(\phi _i\). This log type of NTGV was introduced in [34, 35], and the only difference with ours is that we adopt different values for the parameters \(\rho _1\) and \(\rho _2\).

The proposed nonconvex model (25) takes advantages of both higher-order regularization and nonconvex regularization for image denoising. That is, it helps sufficiently denoise smooth regions without staircasing effects while preserving edges and details. Comparing with the convex model (12), the NTGV model (25) is expected to be more suitable for denosing images having much structures and strong edges or in the presence of high level of noise, whereas it is expected to provide similar denoising results with the TGV model for images with weak edges or few discontinuous transitions. On the other hand, the TGV model has more computational efficiency since the NTGV model solves the TGV model in its inner loops, which is explained as follows.

We now present an optimization algorithm to solve the NTGV model in (25), by using the IRLA. First, we consider the discretized version of model (25):

Using the variable splitting technique, we can reformulate the unconstrained model in (26) into the following constrained problem as

By letting

the constrained model in (27) can be reformulated as the form in (10).

Hence, the IRLA can be applied to problem (27), which is given by

The IRLA (28) leads to another convex minimization problem in its inner iteration. It has the same form as the convex TGV model in (16) except for the weighted functions \(\tilde{d}_k\) and \(\tilde{w}_k\). Hence, the subproblem in (28) can be solved by the ADMM. That is, by changing the setting in (17) using \(H(\mathbf {x})= \alpha _1\langle \tilde{d}_k, |d|\rangle + \alpha _0 \langle \tilde{w}_k, |w|\rangle +\gamma D(z)\), we can apply the ADMM to the subproblem in (28), which yields

The subproblems for \(d_{k+1}\) and \(w_{k+1}\) in (29) can be computed explicitly with the shrink operator as follows:

The other solutions can be obtained as described in Sect. 3.1.

Overall, the optimization algorithm for solving our NTGV model in (25) is summarized in Algorithm 2.

Lastly, we can obtain a partial convergence of the IRLA in (28) for solving the constrained problem in (27). In other words, it is trivial to show that the the objective function in problem (27) is coercive in \(z\in S(\varOmega )\). Therefore, from Theorem 2, we can obtain a partial convergence of the IRLA in (28) when it is applied to problem (27): The sequence \((u_k,v_k,z_k,d_k,w_k)\) generated by (28) is bounded, and it has at least one accumulation point. That is, there exists a converging subsequence to an accumulation point. However, the nonconvex log function in (27) is not a sum of a convex function; thus, we cannot even assure the local convergence of the algorithm in (28) (see [34] for more details).

4 Numerical Experiments

In this section, we present numerical results for our proposed models and compare them with existing variational models such as the TV model [21] and the WBSN model [3]. We test using both natural images and real ultrasound images. All experiments were implemented using MATLAB R2015b on a desktop PC with an Intel CPU at 3.50 GHz, 16 GB RAM, and a 64-bit Window 10 operating system.

In [21], the authors solved the TV model by using the gradient-descent method. However, this model can be easily implemented by the split-Bregman method, similar to our approach. For fair comparison with our models, we use the split-Bregman method to solve the TV model. The stopping criterion for our TGV/NTGV model and the TV model is given by

where M is the maximum iteration number; \(M=500\) for our TGV model and the TV model, \(M=100\) for our NTGV model. Besides, the maximum iteration number of the ADMM for the inner subproblem in the NTGV model is fixed as \(M_{\text {in}}=10\). The WBSN model is solved by the gradient-descent based method, so the maximum iteration number M is set as 5000.

Recall that the data fidelity term in (2) is not derived from the MAP estimation based on the degradation model. These result in denoised images with shifted intensity values. That is, variational models using (2) as the data fidelity term are likely to produce a big gap between the mean of a restored image and that of an original clean image. To reduce this gap, we add the bias correction step in the final restored image. Since \(\text {mean}(n)=0\), it follows from the degradation model in (1) that

From this approximation and the constraint \(u > 0\), the rescaled image \(\tilde{u}\) is obtained from the denoised image u as follows:

We then regard the image \(\tilde{u}\) as the final restored image for all models.

The selection of parameters is as follows. First, we select \(\alpha _0\) and \(\alpha _1\) to satisfy \(\alpha _0 + \alpha _1 = 1\). We tune the parameter \(\alpha _1\), which controls the balance between first- and second-order terms. For real ultrasound images, \(\alpha _1\) is fixed as 0.4 or 0.6. The parameter \(\gamma \) is selected depending on the noise level \(\sigma \); if the noise level \(\sigma \) is large, a small value of \(\gamma \) is more proper, and vice versa. Hence, we mainly tune the parameters \(\alpha _1\) and \(\gamma \) to achieve the best restored images. The parameter \(\mu \) in the ADMM affects on the speed of the algorithm; we set \(\mu = 0.01\) for our TGV and the TV models, and \(\mu = 0.05\) for our NTGV model. The values for \(\rho _1\) and \(\rho _2\) in the NTGV model are selected in the ranges [0.001, 0.005] and [0.05, 0.2], respectively, for natural images. Since real ultrasound images in general have blurry edges, we tune the parameter \(\rho _1\) in the range [0.01, 0.05].

4.1 Denoising Results of Synthetic and Natural Images

In the experiments, all clean images have intensity values in the range [0, 255]. In this numerical test, we used two synthetic images and six natural images, as shown in Fig. 1. The test images were corrupted using (1) as the degradation model, with \(\sigma = 2\), 4, and 6.

In order to measure the quality of the restored images, we compute the peak signal-to-noise ratio (PSNR) value and the structure similarity (SSIM) index, which are defined as follows:

where \(u_* \in \mathbb {R}^{m\times n}\) is the clean image, \(\bar{u}\in \mathbb {R}^{m\times n}\) is the restored image, \(\mu _u\) is the average of u, \(\sigma _u\) is the standard deviation of u, and \(c_1\) and \(c_2\) are some constants for stability. For all methods, we determined the tuning parameters that obtained the best restored images visually as well as using the PSNR and SSIM values.

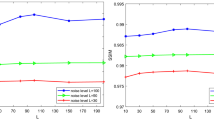

Before discussing denoising results, we first compare the ADMM and the primal-dual algorithm [11] that is used for solving the TGV-based denoising model in [6]. We apply both algorithms to our TGV model (12), and the same regularization parameters \((\alpha _1,\gamma )\) and stopping criterion in (30) are used. Table 1 and Fig. 2 present the denoising results of the “child” image in the presence of noise with \(\sigma =2,4,6\). In Table 1, we present the computing time, final energy functional values, and total iteration numbers, and Fig. 2 shows the plots of the energy functional in (12) via iteration number k. It can be seen that the ADMM reaches the stopping criterion much faster and provides lower final energy functional values than the PD algorithm, which implies the ADMM finds more accurate solutions within shorter time. These show the efficiency of the ADMM for solving our TGV model, compared with the PD algorithm.

In Figs. 3, 4, 5, 6, 7, and 8, we display the original images, noisy images, and restored images of all models, tested on synthetic and natural images. Figure 3 presents the denosing results when the noise level \(\sigma =2\) and 4, respectively, tested on piecewise smooth synthetic images. Figures 4, 5, 6, 7, and 8 exhibit the results when \(\sigma =2\), 4, 6, respectively, tested on natural images.

Throughout the examples, we can observe that our proposed models alleviate the staircasing effects often found in piecewise smooth transition areas in the restored images by the TV and WBSN models. Hence, the restored images of our models look more like natural images. For instance, in Figs. 4 and 5, the restored images of the TV and WBSN models have staircasing artifacts around the chin of the child and woman, while our TGV models conserve the piecewise smooth transition regions very well. This demonstrates the superiority of the TGV-based regularizers over the first-order derivative based regularizers for denoising piecewise smooth images. In addition, the WBSN and NTGV models commonly recover the denoised images with well preserved edges. However, because the WBSN uses the Tikhonov regularization \(\int _\varOmega |\nabla u|^2\,\,dx\), it smoothes out small features or weak edges in the restored images. Specifically, in Fig. 6, the castle’s reflection in the lake is barely visible because of the oversmoothing. In contrast, the images recovered by our NTGV model include the small details.

Comparing our two models, we can see that the NTGV model provides sharper edges and more preserved fine features than the TGV model, particularly in the presence of strong edges or high level of noise, as seen in Figs. 3, 6, 7, and 8. This shows the merit of the nonconvex regularization. On the other hand, in Figs. 4 and 5, the restored images of our models are visually similar. The NTGV model produces slightly better preserved edges and textures than the TGV model, which can be seen in the regions of the textural patterns in child, and the eyes and the woolen hat in woman. Hence, we can presume that both models produce similar denoising results for the images having smooth regions with weak edges.

Lastly, in Table 2, we present the PSNR values and SSIM values of all models for noise levels \(\sigma = 2\), 4, and 6. We also observe from Table 2 that our models obtain restored images that are superior to those obtained by the others. Specifically, the NTGV model outperforms the others when \(\sigma = 6\). In terms of the computing time in Table 3, the TV model is the fastest for all cases. In contrast, the WBSN is the slowest because it is solved using the gradient descent method. The NTGV requires much more time than the TGV model because of the additional outer iterations for the IRLA. Thus, the TGV model has an advantage with respect to computing time, while the NTGV model recovers the best quality images.

4.2 Denoising Results of Real Ultrasound Images

In Figs. 9, 10, 11, and 12, we present the denoising results of all models, tested on several real ultrasound images. The TV and the WBSN models give rise to staircasing artifacts, leading to a more blocky, less authentic denoised image. But our models avoid these artifacts and achieve more natural homogenous regions than both state-of-the-arts models. We can also see that the restored images of our models have smooth interiors and clear boundaries, while the others create somewhat rough boundaries. In other words, our proposed models perform better than the other ones.

Moreover, the WBSN and NTGV models find clear corners and features, thanks to their edge-preserving techniques in common. However, the restored images of the WBSN model look somewhat like cartoon images, while the NTGV model produces clearer images with preserved edges. Specifically, the slim vessel in the upper denoised image of our NTGV model in Fig. 10 is sleek, with no bumps. Although our two models result in visually similar recovered images, the NTGV model provides slightly clearer slim vessel and edges than the TGV model in an enlarged view. Therefore, these examples also qualitatively verify the superior performance of our models for real ultrasound image denoising.

Lastly, as discussed in Sect. 3.2, we can observe from the numerical results that the NTGV model (25) performs better than the TGV model (12) when denoising images having much structures or strong edges, such as “castle”, “pepper” images, or images with high level of noise. On the other hand, the NTGV model provides similar denoising results with the TGV model for images with weak edges, such as “child”, “woman” images or real ultrasound images. Moreover, the TGV model has a much benefit of computing time, as shown in Table 3. To sum up, these numerical experiments validate the effectiveness of the TGV regularization and superiority of the nonconvex regularization.

5 Conclusion

In this paper, we proposed two TGV-based minimization models for denoising ultrasound images. Our models consist of a convex data-fidelity term and the convex or nonconvex TGV regularization terms. The proposed models eliminate the staircasing artifacts commonly appeared in the results of TV-based models. Moreover, the nonconvex TGV model is able to produce better preserved edges, textures, and fine features in the restored images. We proved the existence and uniqueness of the minimizers for the convex TGV model. We also provided efficient iterative algorithms by adopting the ADMM to handle the complicated data fidelity term and nonsmooth convex regularization term, and the IRLA to deal with the nonconvex regularization term. Compared with state-of-the-art methods, the proposed models perform effectively by smoothing homogeneous regions while preserving edges, and thus they provide better denoising results for both natural images and real ultrasound images. Real ultrasound images are usually blurry as well as noisy. Thus, blind deconvolution along with denoising for ultrasound images is a possible target for future work.

References

Aubert, G., Aujol, J.F.: A variational approach to removing multiplicative noise. SIAM J. Appl. Math. 68(4), 925–946 (2008)

Aubert, G., Kornprobst, P.: Mathematical Problems in Image Processing: Partial Differential Equations and the Calculus of Variations, vol. 147. Springer, Berlin (2006)

Barcelos, C.A., Vieira, L.E.: Ultrasound speckle noise reduction via an adaptive edge-controlled variational method. In: 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pp. 145–151 (2014)

Besag, J.: Digital image processing: towards Bayesian image analysis. J. Appl. Stat. 16(3), 395–407 (1989)

Bredies, K., Holler, M.: A TGV regularized wavelet based zooming model. Scale Space Var. Methods Comput. Vis. 7893, 149–160 (2013)

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3(3), 492–526 (2010)

Bredies, K., Kunisch, K., Valkonen, T.: Properties of \(L^1\) -\(TGV^2\): The one-dimensional case. J. Math. Anal. Appl. 389(1), 438–454 (2013)

Candés, E.J., Wakin, M.B., Boyd, S.P.: Enhancing sparsity by reweighted \(\ell _1\) minimization. J. Fourier Anal. Appl. 14, 877–905 (2008)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20, 89–97 (2004)

Chambolle, A., Lions, P.: Image recovery via total variation minimization and related problems. Numer. Math. 76(2), 167–188 (1997)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chan, T., Marquina, A., Mulet, P.: High-order total variation-based image restoration. SIAM J. Sci. Comput. 22(2), 503–516 (2000)

Deng, W., Yin, W.: On the global and linear convergence of the generalized alternating direction method of multipliers. J. Sci. Comput. 66, 889–916 (2016)

Douglas, J., Rachford, H.H.: On the numerical solution of heat conduction problems in two and three space variables. Trans. Am. Math. Soc. 82(2), 421–439 (1956)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293–318 (1992)

Geman, D., Yang, C.: Nonlinear image recovery with half-quadratic regularization. IEEE Trans. Image Process. 4(7), 932–946 (1995)

Geman, S., Geman, D.: Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. PAMI 6(6), 721–741 (1984)

Getreuer, P.: Total variation inpainting using split Bregman. Image Process. On Line 2, 147–157 (2012)

Goldstein, T., Bresson, X., Osher, S.: Geometric applications of the split Bregman method: segmentation and surface reconstruction. J. Sci. Comput. 45, 272–293 (2010)

Goldstein, T., Osher, S.: The split Bregman method for L1-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

Jin, Z., Yang, X.: A variational model to remove the multiplicative noise in ultrasound images. J. Math. Imaging Vis. 39, 62–74 (2011)

Kang, M., Jung, M., Kang, M.: Nonconvex higher-order regularization based Rician noise removal with spatially adaptive parameters. J. Vis. Commun. Image R. 32, 180–193 (2015)

Krishnan, D., Fergus, R.: Fast image deconvolution using hyper-Laplacian priors. In: Proceedings of the Advances in Neural Information Processing Systems, pp. 1033–1041 (2009)

Krissian, K., Kikinis, R., Westin, C., Vosburgh, K.: Speckle constrained filtering of ultrasound images. IEEE Comput. Vis. Pattern Recogn. 2, 547–552 (2005)

Kuan, D.T., Sawchuk, A.A., Strand, T.C., Chavel, P.: Adaptive restoration of images with speckle. IEEE Trans. Acoust. Speech Signal Process. ASSP–35, 373–383 (1987)

Lee, J.S.: Speckle suppression and anaylsis for synthetic aperture radar. Opt. Eng. 25(5), 636–643 (1999)

Li, F., Shen, C., Fan, J., Shen, C.: Image restoration combining a total variational filter and a fourth-order filter. J. Vis. Commun. Image R. 18(4), 322–330 (2007)

Loupas, T., McDicken, W.N., Allan, P.L.: An adaptive weighted median filter for speckle suppression in medical ultrasonic images. IEEE Trans. Circuits Syst. 36(1), 129–135 (1989)

Lysaker, M., Lundervold, A., Tai, X.C.: Noise removal using fourth-order partial differential equation with application to medical magnetic resonance images in space and time. IEEE Trans. Image Process. 12(12), 1579–1590 (2003)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course, vol. 87. Kluwer, Boston (2004)

Nikolova, M., Ng, M.K., Tam, C.P.: Fast nonconvex nonsmooth minimization methods for image restoration and reconstruction. IEEE Trans. Image Process. 19(12), 3073–3088 (2010)

Nikolova, M., Ng, M.K., Zhang, S., Ching, W.: Efficient reconstruction of piecewise constant images using nonsmooth nonconvex minimization. SIAM J. Imaging Sci. 1(1), 2–25 (2008)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2006)

Ochs, P., Dosovitskiy, A., Brox, T., Pock, T.: An iterated \(\ell _1\) algorithm for non-smooth non-convex optimization in computer vision. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2013)

Ochs, P., Dosovitskiy, A., Brox, T., Pock, T.: On iteratively reweighted algorithm for nonsmooth nonconvex optimization in computer vision. SIAM J. Imaging Sci. 8(1), 331–372 (2015)

Oh, S., Woo, H., Yun, S., Kang, M.: Non-convex hybrid total variation for image denoising. J. Vis. Commun. Image R. 24(3), 332–344 (2013)

Papafitsoros, K., Schönlieb, C.B.: A combined first and second order variational approach for image restoration. J. Math. Imaging Vis. 48, 308–338 (2014)

Robini, M., Lachal, A., Magnin, I.: A stochastic continuation approach to piecewise constant reconstruction. IEEE Trans. Image Process. 16(10), 2576–2589 (2007)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1), 259–268 (1992)

Setzer, S., Steidl, G.: Variational methods with higher-order derivatives in image processing. In: Approximation Theory XII: San Antonio, pp. 360–386 (2008)

Setzer, S., Steidl, G., Teuber, T.: Deblurring Poissonian images by split Bregman techniques. J. Vis. Commun. Image R. 21(3), 193–199 (2010)

Shi, J., Osher, S.: A nonlinear inverse scale space method for a convex multiplicative noise model. SIAM J. Imaging Sci. 1(3), 294–321 (2008)

Steidl, G., Teuber, T.: Removing multiplicative noise by Douglas-Rachford splitting methods. J. Math. Imaging Vis. 36(2), 168–184 (2010)

Teboul, S., Blanc-Feraud, L., Aubert, G., Barlaud, M.: Variational approach for edge-preserving regularization using coupled PDEs. IEEE Trans. Image Process. 7(3), 387–397 (1998)

Vese, L., Chan, T.: Redced non-convex functional approximations for image restoration and segmentation. UCLA CAM Report 97-56 (1997)

Woo, H., Yun, S.: Proximal linearized alternating direction method for multiplicative denoising. SIAM J. Sci. Comput. 35(2), B336–B358 (2013)

Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell _1\) problems in compressive sensing. SIAM J. Sci. Comput 33(1), 250–278 (2011)

Acknowledgements

Myeongmin Kang was supported by the NRF (2016R1C1B1009808). Myungjoo Kang was supported by the NRF (2014R1A2A1A10050531, 2015R1A5A1009350), MOTIE (10048720) and IITP-MSIP (B0717-16-0107). Miyoun Jung was supported by the Hankuk University of Foreign Studies Research Fund and NRF (2013R1A1A3010416).

Author information

Authors and Affiliations

Corresponding author

Appendix: The Proof of Theorem 3

Appendix: The Proof of Theorem 3

Proof

For any fixed \(x\in \varOmega ,\) we can deduce from the inequality of arithmetic-geometric mean that for \(u > 0\),

It follows from the above inequality that

i.e., E(u) is bounded below. Hence, we can choose a minimizing sequence \(\{u^n\}\in L^2(\varOmega )\) for problem (12), and the sequence \(\{TGV^2_\alpha (u^n)\}\) is also bounded.

In addition, from the Poincar\(\acute{e}\) inequality of \(TGV^2_\alpha \), we can obtain that

where \(P : L^2(\varOmega ) \rightarrow \ker (TGV_\alpha ^2)\) is a linear projection. It follows that \(u^n - Pu^n\) is bounded in \(L^2(\varOmega )\) for each n.

We can also easily deduce that for \(u > 0\),

where c is a positive constant that is independent of n. Since \(\{u^n\}\) is a minimizing sequence of problem (12), \(\Vert Pu^n\Vert _2\) is bounded from the above inequalities.

Then, from the following inequalities,

we can conclude that \(\{u^n\}\) is bounded in \(L^2(\varOmega )\). Therefore, there exist a subsequence \(\{u^{n_k}\}\) and \(u^*\in L^2(\varOmega )\) such that the subsequence converges weakly to \(u^*\). Since \(u^n > 0\) and \(E(u^*)\) must be bounded, \(u^* > 0\) and \(u^*\in S(\varOmega )\).

Because \(TGV^2_\alpha \) is lower semicontinuous, E(u) is also lower semicontinuous. By applying Fatou’s Lemma, we have

which implies that \(u^*\) is a solution of problem (12).

If we let \(p(t) = \frac{(f(x) -t)^2}{t}\) for any fixed \(x\in \varOmega \), then its second derivative is given by \(p''(t) = \frac{2f(x)^2}{t^3}\) and thus \(p''(t) > 0\) for any \(t > 0\). Hence, p is a strictly convex function when \(t > 0\). Therefore, problem (12) has a unique minimizer. \(\square \)

Rights and permissions

About this article

Cite this article

Kang, M., Kang, M. & Jung, M. Total Generalized Variation Based Denoising Models for Ultrasound Images. J Sci Comput 72, 172–197 (2017). https://doi.org/10.1007/s10915-017-0357-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-017-0357-3