Abstract

In this paper we study a variational problem in the space of functions of bounded Hessian. Our model constitutes a straightforward higher-order extension of the well known ROF functional (total variation minimisation) to which we add a non-smooth second order regulariser. It combines convex functions of the total variation and the total variation of the first derivatives. In what follows, we prove existence and uniqueness of minimisers of the combined model and present the numerical solution of the corresponding discretised problem by employing the split Bregman method. The paper is furnished with applications of our model to image denoising, deblurring as well as image inpainting. The obtained numerical results are compared with results obtained from total generalised variation (TGV), infimal convolution and Euler’s elastica, three other state of the art higher-order models. The numerical discussion confirms that the proposed higher-order model competes with models of its kind in avoiding the creation of undesirable artifacts and blocky-like structures in the reconstructed images—a known disadvantage of the ROF model—while being simple and efficiently numerically solvable.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the following general framework of a combined first and second order non-smooth regularisation procedure. For a given datum u 0∈L s(Ω), \(\varOmega\subset\mathbb{R}^{2}\), s=1,2, we compute a regularised reconstruction u as a minimiser of a combined first and second order functional H(u). More precisely, we are interested in solving

for s∈{1,2}, non-negative regularisation parameters α,β, convex functions \(f:\mathbb{R}^{2}\rightarrow\mathbb{R}^{+}\), \(g:\mathbb{R}^{4}\rightarrow\mathbb{R}^{+}\) with at most linear growth at infinity, and a suitable linear operator T, see Sect. 3 for details. The appropriate space for this minimisation is the space of functions of bounded Hessian BH(Ω) which consists of all functions u∈W 1,1(Ω) such that ∇u is a function of bounded variation. The idea of this combination of first and second order dynamics is to regularise with a fairly large weight α in the first order term—preserving the jumps as good as possible—and using a not too large weight β for the second order term such that artifacts (staircasing) created by the first order regulariser are eliminated without introducing any serious blur in the reconstructed image. We will show that for image denoising, deblurring as well as inpainting the model (1.1) offers solutions whose quality (accessed by an image quality measure) is not far off from the ones produced by some of the currently best higher-order reconstruction methods in the field, e.g., the recently proposed total generalised variation (TGV) model [14]. Moreover, the computational effort needed for its numerical solution is not much more than the one needed for solving the standard ROF model [61]. For comparison the numerical solution for TGV regularisation is in general about ten times slower than this, see Table 1 at the end of the paper.

In this paper we prove existence and uniqueness of (1.1) for the classical setting of the problem in the space W 2,1(Ω) by means of relaxation. The generality of this result includes both the classical variational formulation in W 2,1, e.g. for the Huber regularised version of the total variation, as well as the non-smooth norm minimisation setting in BH(Ω), which constitutes the relaxed version of (1.1). In the numerical part of the paper a discretised version of (1.1) is minimised by means of an operator splitting technique for the cases f(x)=|x| and g(x)=|x|, and its application to image denoising, deblurring and inpainting is discussed.

The rest of the introduction is structured as follows. In Sect. 1.1 we phrase the general inverse problem for image reconstruction, which leads us to non-smooth norm minimisation, e.g. total variation minimisation, and eventually to the introduction of higher-order regularisers within this class. This section is followed by a presentation of state of the art higher-order methods in imaging in Sect. 1.2 and a discussion of some models from this group which are closely related to (1.1) in Sect. 1.3.

1.1 Context

A general inverse problem in imaging reads as follows. Observing or measuring data \(u_{0}\in\mathcal{H}\) in a suitable Hilbert space of real functions defined on a domain Ω, we seek for the original or reconstructed image u that fulfils the model

where T is a linear operator in \(\mathcal{H}\), i.e., \(T\in\mathcal{L}(\mathcal{H})\), and n=n(u 0) denotes a possible noise component which—depending on the noise statistics—might depend on the data u 0. The operator T is the forward operator of this problem. Examples are blurring operators (in which case Tu denotes the convolution of u with a blurring kernel), \(T=\mathcal{F}\) the Fourier transform or \(T=\mathcal{P}_{n}\) a projection operator onto a subspace of \(\mathcal{H}\) (i.e., only a few samples of u are given).

To reconstruct u one has to invert the operator T. This is not always possible since in many applications a problem can be ill-posed and further complicated by interferences like noise. In this case a common procedure in inverse problems is to add a-priori information to the model, which in general is given by a certain regularity assumption on the image u. Hence, instead of solving (1.2) one computes u as a minimiser of

defined in a suitable Banach space \(\mathcal{H}_{\psi}\). Here, ψ models the regularity assumption on u with a certain regularity parameter α>0 and is called the regulariser of the problem, and Φ is a distance function in \(\mathcal{H}\) that enforces (1.2). The latter depends on the statistics of the data u 0, which can be either estimated or are known from the physics behind the acquisition of u 0. For u 0 corrupted by normally distributed additive noise, this distance function is the squared L 2 norm of u 0−Tu. For the choice of the regulariser ψ, squared Hilbert space norms have a long tradition in inverse problems. The most prominent example is H 1 regularisation

see also [68, 75]. For T=Id, the gradient flow of the corresponding Euler-Lagrange equation of (1.3) reads u t =αΔu−u+u 0. The result of such a regularisation technique is a linearly, i.e., isotropically, smoothed image u, for which the smoothing strength does not depend on u 0. Hence, while eliminating the disruptive noise in the given data u 0 also prominent structures like edges in the reconstructed image are blurred. This observation gave way to a new class of non-smooth norm regularisers, which aim to eliminate noise and smooth the image in homogeneous areas, while preserving the relevant structures such as object boundaries and edges. More precisely, instead of (1.3) one considers the following functional over the space W 1,1(Ω):

where f is a function from \(\mathbb{R}^{2}\) to \(\mathbb{R}^{+}\) with at most linear growth, see [70]. As stated in (1.4) the minimisation of \(\mathcal{J}\) over W 1,1(Ω) is not well-posed in general. For this reason relaxation procedures are applied, which embed the optimisation for \(\mathcal{J}\) into the optimisation for its lower semicontinuous envelope within the larger space of functions of bounded variation, see Sect. 2. The most famous example in image processing is f(x)=|x|, which for T=Id results in the so-called ROF model [61]. In this case the relaxed formulation of (1.4) is the total variation denoising model, where \(\|\nabla u\| _{L^{1}(\varOmega)}\) is replaced by the total variation |Du|(Ω) and \(\mathcal{J}\) is minimised over the space of functions of bounded variation. Other examples for f are regularised versions of the total variation like \(f(x)=\sqrt{x^{2}+\epsilon^{2}}\) for a positive ϵ≪1 [1, 41], the Huber-regulariser and alike [22, 28, 47, 54]. The consideration of such regularised versions of |∇u| is sometimes of advantage in applications where perfect edges are traded against a certain smoothness in homogeneous parts of the image, [58]. Moreover such regularisations become necessary for the numerical solution of (1.4) by means of time-stepping [70] or multigrid-methods [43, 69, 71] for instance.

As these and many more contributions in the image processing community have proven, this new non-smooth regularisation procedure indeed results in a nonlinear smoothing of the image, smoothing more in homogeneous areas of the image domain and preserving characteristic structures such as edges. In particular, the total variation regulariser is tuned towards the preservation of edges and performs very well if the reconstructed image is piecewise constant. The drawback of such a regularisation procedure becomes apparent as soon as one considers images or signals (in 1D) which do not only consist of flat regions and jumps, but also possess slanted regions, i.e., piecewise linear parts. The artifact introduced by total variation regularisation in this case is called staircasing. Roughly this means that the total variation regularisation of a noisy linear function u 0 in one dimension is a staircase u, whose L 2 norm is close to u 0, see Fig. 1. In two dimensions this effect results in blocky-like images, see Fig. 2. In one dimension this effect has been rigorously studied in [31].

One way to reduce this staircasing effect is in fact to “round off” the total variation term by using regularised versions defined by functions f as indicated above, e.g., Huber regularisation [58]. However, such a procedure can only reduce these artifacts to a certain extent. For instance, the Huber-type regularisation will eliminate the staircasing effect only in areas with small gradient. Another way of improving total variation minimisation is the introduction of higher-order derivatives in the regulariser as in (1.1). Especially in recent years, higher-order versions of non-smooth image enhancing methods have been considered.

1.2 Related Work

Already in the pioneering paper of Chambolle and Lions [22] the authors propose a higher-order method by means of an inf-convolution of two convex regularisers. Here, a noisy image is decomposed into three parts u 0=u 1+u 2+n by solving

where ∇2 u 2 is the distributional Hessian of u 2. Then, u 1 is the piecewise constant part of u 0, u 2 the piecewise smooth part and n the noise (or texture). Along these lines a modified infimal-convolution approach has been recently proposed in the discrete setting in [65, 66]. Another attempt to combine first and second order regularisation originates from Chan, Marquina, and Mulet [26], who consider total variation minimisation together with weighted versions of the Laplacian. More precisely, they consider a regularising term of the form

where f must be a function with certain growth conditions at infinity in order to allow jumps. The well-posedness of the latter in one space dimension has been rigorously analysed by Dal Maso, Fonseca, Leoni and Morini [31] via the method of relaxation.

The idea of bounded Hessian regularisers was also considered by Lysaker et al. [52, 53], Chan et al. [27], Scherzer et al. [48, 62], Lai at al. [50] and Bergounioux and Piffet [8]. In these works the considered model has the general form

In Lefkimmiatis et al. [51], the spectral norm of the Hessian matrix is considered. Further, in [60] minimisers of functionals which are regularised by the total variation of the (l−1)st derivative, i.e.,

are studied. Another interesting higher-order total variation model is proposed by Bredies et al. [14]. The considered regulariser is called total generalised variation (TGV) and is of the form

where \(\mathrm{Sym}^{k}(\mathbb{R}^{d})\) denotes the space of symmetric tensors of order k with arguments in \(\mathbb{R}^{d}\), and α l are fixed positive parameters. Its formulation for the solution of general inverse problems was given in [13, 16].

Properties of higher-order regularisers in the discrete setting in terms of diffusion filters are further studied in [34]. Therein, the authors consider the Euler-Lagrange equations corresponding to minimisers of functionals of the general type

for different non-quadratic penaliser functions f. Moreover, Bertozzi and Greer [10] have rigorously studied the fourth-order evolution equation which arises as a gradient flow of ∫G(Δu), where G is a nondecreasing function of quadratic growth in a neighbourhood of 0 and at most linear growth at infinity. Solutions of this model are called low curvature image simplifiers and are given by

when G(s)=sarctan(s)−1/2log(s 2+1).

Higher-order inpainting methods in general perform much better than first order methods—like total variation inpainting—because of the additional directional information used for the interpolation process. Euler’s elastica is a popular higher-order variational method [25, 67]. There, the regularising term reads:

i.e., is a combination of the total variation and the curvature of the level lines of u (a nonlinear second order regularising term). Other examples of higher-order inpainting are the Cahn-Hilliard inpainting [9], TV-H−1 inpainting [17, 63] and Hessian-based surface restoration [50].

1.3 Relation of our Model to TGV, Infimal Convolution Regularisation, Higher-Order Diffusion Filters and Euler’s Elastica

In this section we want to analyse the connection of our combined first and second order approach (1.1) with infimal convolution (1.5) [22, 65, 66], the total generalised variation regulariser of order two (1.6) [14] and with higher-order diffusion filters [34]. Moreover, in the case of inpainting, we discuss the connection of our model to Euler’s elastica [25, 67].

In the case of inf-convolution (1.5) the regularised image u=u 1+u 2 consists of a function u 1∈BV(Ω) and a function u 2∈BH(Ω) which are balanced against each other by positive parameters α,β. Differently, a minimiser u of (1.1) is in BH(Ω) as a whole and as such is more regular than the infimal convolution minimiser which is a function in BV(Ω). Hence, infimal convolution reproduces edges in an image as perfect jumps while in our combined first and second order total variation approach edges are lines where the image function has a large but finite gradient everywhere. We believe that our approach (1.1) can be made equivalent to infimal convolution if combined with the correct choice of adaptive regularisation, e.g. [35, 42]. More precisely, we replace the two constant parameters α and β by spatially varying functions α(x),β(x) and minimise for u

Then, we can choose α and β according to (1.5), i.e., α=0 where u=u 2, β=0 where u=u 1, and α/β correctly balancing u 1 and u 2 in the rest of Ω. However, let us emphasise once more that this is not our intention here.

The relation of (1.1) to the regularisation approach with total generalised variation [14] of order 2 can be understood through its equivalence with the modified infimal convolution approach [66] in the discrete setting. The total generalised variation of order 2 is defined for a positive multi-index \(\mathbf{\alpha}=(\alpha_{0},\alpha_{1})\) as

where \(\mathrm{Sym}^{2}(\mathbb{R}^{d})\) is the space of symmetric tensors of order 2 with arguments in \(\mathbb{R}^{d}\). An alternative definition of \(\mathrm{TGV}_{\alpha}^{2}\) was proven in [16]

where BD(Ω) is the space of functions of bounded deformation, \(\mathcal{E}w\) is the distributional symmetrised gradient of w and |⋅|(Ω) denotes the total variation measure evaluated on Ω.

The relation to higher-order diffusion filters as analysed in [34] becomes apparent when considering the Euler-Lagrange equation of (1.1) in the case T=Id and f, g having the form f(x)=h(|x|), g(x)=h(|x|), where h is convex and has at most linear growth. Namely, with appropriate boundary conditions we obtain the following Euler-Lagrange equation

This simplifies for the case \(h(x)=\sqrt{|x|^{2}+\epsilon^{2}}\) to a regularised first-second order total variation reaction-diffusion equation that reads

The consideration of the corresponding evolution equations for (1.8) in the presence of different choices of penalising functions h promises to give rise to additional properties of this regularisation technique and is a matter of future research.

As far as inpainting is concerned, we examine here the connection of our method to Euler’s elastica. Depending on how each of the terms in the Euler’s elastica regulariser are weighted, the interpolation process is performed differently. If a larger weight is put on the total variation the interpolation results into an image with sharp edges, which however can get disconnected if the scale of the gap is larger than the scale of the object whose edges should be propagated into it. This behaviour is a validation of the so-called “good continuation principle” defined by the Gestaltist school [49] and not desirable in image inpainting. Putting a larger weight on the curvature term however resolves this issue and gives satisfying results with respect to the continuation principle. The right combination (balance) of these two terms seems to result into a good tradeoff between “sharpness” and “continuation” of edges. However, one disadvantage of the Euler’s elastica inpainting model for analytic and numerical issues is that it is a non-convex minimisation problem. In particular, numerical algorithms are in general not guaranteed to converge to a global minimiser, only local minimisation can be achieved. The minimisation of functional (1.1) can be seen as a convex simplification of the Euler’s elastica idea, where we have replaced the non-convex curvature by the convex total variation of the first derivative of u.

For more discussion and comparison of higher-order regularisers we recommend Chaps. 4.1.5–4.1.7 and 6.4 in [5].

Outline of the Paper

In Sect. 2 we give a brief introduction to Radon measures, convex functions of measures and functions of bounded variation. In Sect. 3 we introduce the variational problem (1.1) and the space BH(Ω) that this functional is naturally defined in. We define two topologies on BH(Ω) and we identify the lower semicontinuous envelope of (1.1) with respect to these topologies. Finally, we prove the well-posedness—existence, uniqueness, stability—of the minimisation of the relaxed functional using standard techniques from calculus of variations and Bregman distances. Section 4 deals with two special versions of (1.1), the anisotropic version and the case with the L 1 norm in the fidelity term. In Sect. 5 we introduce the corresponding discretised problem and we propose the split Bregman method for its numerical implementation in the case f(x)=|x|, g(x)=|x|. In Sects. 6, 7 and 8 we present some numerical examples of our method in image denoising, deblurring and inpainting respectively. Finally, in Sect. 9 we discuss how our approach compares with other higher-order methods like infimal convolution (denoising, deblurring), total generalised variation (denoising, deblurring) and Euler’s elastica (inpainting).

2 Preliminaries

In this section, we introduce some basic notions that we are going to use. A reader familiar with Radon measures, BV functions and relaxed functionals can quickly go through Sects. 2.1, 2.3 and 2.4 respectively. Section 2.2 familiarises the reader with convex functions of measures, a perhaps less known subject.

Remarks on Standard Notation

As usual, we denote with \(\mathcal{L}^{n}\) the Lebesgue measure. Different notions are denoted by |⋅|: When it is applied on vectors or matrices it denotes the Euclidean norm (vector) or the Frobenius norm (matrices). When it is applied on measures it denotes the total variation measure while when it is applied on Borel subsets of \(\mathbb{R}^{n}\) it denotes the Lebesgue measure of that subset. Finally, |⋅|1 denotes the ℓ 1 norm in \(\mathbb{R}^{n}\) and (⋅,⋅) denotes the standard Euclidean inner product.

2.1 Finite Radon Measures

All our notation and definitions are consistent with [4]. From now on, Ω denotes an open set in \(\mathbb{R}^{n}\). We define the space \([\mathcal{M}(\varOmega)]^{m}\) to be the space of \(\mathbb {R}^{m}\)-valued finite Radon measures. The total variation measure of \(\mu\in[\mathcal{M}(\varOmega)]^{m}\) is denoted by |μ|. We say that a sequence \((\mu_{k})_{k\in\mathbb{N}}\) in \([\mathcal{M}(\varOmega)]^{m}\) converges weakly∗ to a measure \(\mu\in[\mathcal{M}(\varOmega )]^{m}\) and we write μ k ⇀μ if lim k→∞∫ Ω u dμ k =∫ Ω u dμ for all u∈C 0(Ω), the completion of C c (Ω) endowed with the supremum norm. Thus \((\mu_{k})_{k\in\mathbb{N}}\) converges weakly∗ to μ if it converges weakly∗ component-wise. We will often consider the Lebesgue decomposition of a \(\mathbb{R}^{m}\)-valued finite Radon measure μ with respect to a σ-finite positive Borel measure ν:

where μ a is the absolutely continuous part of μ with respect to ν, μ s is the singular part and (μ/ν) denotes the density function of μ with respect to ν (Radon-Nikodým derivative). Again this is nothing else than the usual Lebesgue decomposition regarded component-wise. Recall also that any \(\mu\in [\mathcal{M}(\varOmega)]^{m}\) is absolutely continuous with respect to its total variation measure |μ| and thus we obtain the polar decomposition of μ

2.2 Convex Functions of Measures

Let g be a continuous function from \(\mathbb{R}^{m}\) to \(\mathbb{R}\) which is positively homogeneous of degree 1, i.e., for every \(x\in\mathbb{R}^{m}\)

Given a measure \(\mu\in[\mathcal{M}(\varOmega)]^{m}\), we define the \(\mathbb{R}\)-valued measure g(μ) as follows:

It can be proved that if g is a convex function then g(⋅) is a convex function in \([\mathcal{M}(\varOmega)]^{m}\) and if ν is any positive measure such that μ is absolutely continuous with respect to ν then

We refer the reader to Proposition A.1 in the Appendix for a proof of the above statement. Suppose now that g is not necessarily positively homogeneous but it is a continuous function from \(\mathbb{R}^{m}\to\mathbb{R}\) which is convex and has at most linear growth at infinity, i.e., there exists a positive constant K such that

In that case the recession function g ∞ of g is well defined everywhere, where

It can be proved that g ∞ is a convex function and positively homogeneous of degree 1. Given a measure \(\mu\in[\mathcal{M}(\varOmega )]^{m}\) we consider the Lebesgue decomposition with respect to Lebesgue measure \(\mathcal{L}^{n}\), \(\mu=(\mu/\mathcal{L}^{n})\mathcal{L}^{n}+\mu^{s}\) and we define the \(\mathbb{R}\)-valued measure g(μ) as follows:

We refer the reader to Theorem A.2 in the Appendix for a result regarding lower semicontinuity of convex functions of measures with respect to the weak∗ convergence.

2.3 The Space [BV(Ω)]m

We recall that a function u∈L 1(Ω) is said to be a function of bounded variation or else u∈BV(Ω) if its distributional derivative can be represented by a \(\mathbb{R}^{n}\)-valued finite Radon measure, which is denoted by Du. This means that

for some \(\mathbb{R}^{n}\)-valued finite Radon measure Du=(D 1 u,…,D n u). The absolutely continuous part of Du with respect to Lebesgue measure \(\mathcal{L}^{n}\) is denoted by ∇u. It is immediate that W 1,1(Ω)⊆ BV(Ω) since if u∈W 1,1(Ω) then \(Du=\nabla u \mathcal{L}^{n}\). Consistently, we say that a function u=(u 1,…,u m)∈[L 1(Ω)]m belongs to [BV(Ω)]m if

In that case Du is an m×n matrix-valued measure. A function u belongs to [BV(Ω)]m if and only if its variation in Ω, V(u,Ω) is finite, where,

Moreover if u∈[BV(Ω)]m then |Du|(Ω)=V(u,Ω) and if u∈[W 1,1(Ω)]m, then |Du|(Ω)=∫ Ω |∇u|dx, where \(|\nabla u|= (\sum_{a=1}^{m}\sum_{i=1}^{n} (\partial_{i}u^{a})^{2} )^{1/2}\). The space [BV(Ω)]m endowed with the norm ∥u∥BV(Ω):=∫ Ω |u|dx+|Du|(Ω) is a Banach space. It can be shown that if Du=0, then u is equal to a constant a.e. in any connected component of Ω.

Suppose that \((u_{k})_{k\in\mathbb{N}}\), u belong to [BV(Ω)]m. We say that the sequence \((u_{k})_{k\in\mathbb{N}}\) converges to u weakly ∗ in [BV(Ω)]m if it converges to u in [L 1(Ω)]m and the sequence of measures \((Du_{k})_{k\in\mathbb{N}}\) converges weakly∗ to the measure Du. It is known that \((u_{k})_{k\in\mathbb{N}}\) converges to u weakly∗ in [BV(Ω)]m if and only if \((u_{k})_{k\in \mathbb{N}}\) is bounded in [BV(Ω)]m and converges to u in [L 1(Ω)]m. The usefulness of the introduction of the weak∗ convergence is revealed in the following compactness result: Suppose that the sequence \((u_{k})_{k\in\mathbb{N}}\) is bounded in [BV(Ω)]m, where Ω is a bounded open set of \(\mathbb{R}^{n}\) with Lipschitz boundary. Then there exists a subsequence \((u_{k_{\ell}})_{\ell\in \mathbb{N}}\) and a function u∈[BV(Ω)]m such that \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to u weakly∗ in [BV(Ω)]m.

We say that the sequence \((u_{k})_{k\in\mathbb{N}}\) converges to u strictly in [BV(Ω)]m if it converges to u in [L 1(Ω)]m and \((|Du_{k}|(\varOmega))_{k\in\mathbb{N}}\) converges to |Du|(Ω). It is immediate that strict convergence implies weak∗ convergence.

Suppose that Ω is bounded with Lipschitz boundary. Define 1∗=n/(n−1) when n>1 and 1∗=∞ when n=1. Then \(\mathrm{BV}(\varOmega)\subseteq L^{1^{\ast}}(\varOmega)\) with continuous embedding. Moreover if Ω is connected then the following inequality holds (Poincaré-Wirtinger):

where the constant C depends only on Ω and u Ω denotes the mean value of u in Ω:

We refer the reader to [4] for a detailed description of the above as well as for an introduction to weak continuity and differentiability notions in BV(Ω) and the decomposition of the distributional derivative of a function u∈BV(Ω).

2.4 Relaxed Functionals

Suppose that X is a set endowed with some topology τ and let \(F:X\to\mathbb {R}\cup\{+\infty\}\). The relaxed functional or otherwise called the lower semicontinuous envelope of F with respect to the topology τ is a functional \(\overline {F}:X\to\mathbb{R}\cup\{+\infty\}\) defined as follows for every x∈X:

It is easy to check that \(\overline{F}\) is the greatest τ lower semicontinuous functional which is smaller or equal than F. It can be also checked that

where \(\mathcal{N}(x)\) denotes the open neighbourhoods of x. Moreover, if X is a first countable topological space, then \(\overline {F}(x)\) is characterised by the two following properties:

-

(i)

For every sequence \((x_{k})_{k\in\mathbb{N}}\) converging to x, we have

$$\overline{F}(x)\le\liminf_{k\to\infty}F(x_{k}). $$ -

(ii)

There exists a sequence \((x_{k})_{k\in\mathbb{N}}\) converging to x such that

$$\overline{F}(x)\ge\limsup_{k\to\infty}F(x_{k}). $$

An interesting property of the relaxed functional is that if it has a minimum point then the value of \(\overline{F}\) at that point will be equal with the infimum of F, i.e.,

For more information on relaxed functionals see [12] and [30].

3 The Variational Formulation

In the current section we specify our definition of the functional (1.1) that we want to minimise. We start by defining the minimisation problem in the space W 2,1(Ω) as this is the space in which our analysis subsumes various choices for the regularisers f and g. As this space is not reflexive, and thus existence of minimisers cannot be guaranteed, we extend the definition to a larger space. We introduce this larger space BH(Ω) as the subspace of all u∈W 1,1(Ω) such that ∇u∈[BV(Ω)]m. We define the weak∗ and the strict topology of BH(Ω) and we identify the lower semicontinuous envelope (relaxed functional) of the extended functional with respect to these topologies. We prove existence of minimisers of the relaxed functional, uniqueness under some assumptions as well as stability.

In the following Ω denotes as usual a bounded, connected, open subset of \(\mathbb{R}^{2}\) with Lipschitz boundary, T denotes a bounded linear operator from L 2(Ω) to L 2(Ω), u 0∈L 2(Ω) and α, β are non-negative constants. Further we suppose that \(f:\mathbb{R}^{2}\to\mathbb{R}^{+}\), \(g:\mathbb{R}^{4}\to\mathbb{R}^{+}\) are convex functions with at most linear growth at infinity, i.e., there exist positive constants K 1 and K 2 such that

Moreover, we assume that both f and g are minimised in 0 and they satisfy a coercivity condition:

where K 3 and K 4 are strictly positive. We want to minimise the following functional:

The natural space for the functional H to be defined in, is W 2,1(Ω). Since this space is not reflexive a solution of the minimisation problem by the direct method of calculus of variations does not work. Rather, existence of a minimiser of (3.5) can be shown via relaxation that is: We extend the functional H into a larger space which has some useful compactness properties with respect to some topology and we identify the relaxed functional with respect to the same topology. This space is BH(Ω).

3.1 The Space BH(Ω)

The space BH(Ω) (often denoted with BV2(Ω)) is the space of functions of bounded Hessian. It was introduced by Demengel in [32] and consists of all functions u∈W 1,1(Ω) whose distributional Hessian can be represented by an \(\mathbb{R}^{2}\times\mathbb {R}^{2}\)-valued finite Radon measure. In other words:

We set D 2 u:=D(∇u). Again it is immediate that W 2,1(Ω)⊆BH(Ω). BH(Ω) is a Banach space equipped with the norm ∥u∥BH(Ω)=∥u∥BV(Ω)+|D 2 u|(Ω). If Ω has a Lipschitz boundary and it is connected then it can be shown that there exist positive constants C 1 and C 2 such that

Moreover, the embedding from BH(Ω) into W 1,1(Ω) is compact, see [32]. We denote the approximate differential of ∇u with ∇2 u, see [4] for a definition.

Analogously with BV(Ω) we define the following notions of convergence in BH(Ω):

Definition 3.1

(Weak∗ Convergence in BH(Ω))

Let \((u_{k})_{k{\in}\mathbb{N}}\), u belong to BH(Ω). We say that \((u_{k})_{k\in\mathbb{N}}\) converges to u weakly∗ in BH(Ω) if

and

or in other words

It is not hard to check that a basis for that topology consists of the following sets:

where v∈BH(Ω), F is finite, ϵ>0 and ϕ i ∈C 0(Ω). This topology is also metrizable, hence first countable. We do not imply here that BH(Ω) is the dual space of a Banach space but we name this convergence weak ∗ to show the correspondence with the weak∗ convergence in BV(Ω). We have also the corresponding compactness result:

Theorem 3.2

(Compactness in BH(Ω))

Suppose that the sequence \((u_{k})_{k\in\mathbb{N}}\) is bounded in BH(Ω). Then there exists a subsequence \((u_{k_{\ell}})_{\ell\in \mathbb{N}}\) and a function u∈BH(Ω) such that \((u_{k_{\ell }})_{\ell\in\mathbb{N}}\) converges to u weakly ∗ in BH(Ω).

Proof

From the compact embedding of BH(Ω) into W 1,1(Ω) and the fact that the sequence \((\nabla u_{k})_{k\in\mathbb{N}}\) is bounded in [BV(Ω)]2 we have that there exists a subsequence \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\), a function u∈W 1,1(Ω) and a function v∈[BV(Ω)]2 such that \((u_{k_{\ell}})_{\ell \in\mathbb{N}}\) converges to u in W 1,1(Ω) and \((\nabla u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to v weakly∗ in [BV(Ω)]2, as ℓ goes to infinity. Then, ∇u=v, u∈BH(Ω) and \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to u weakly∗ in BH(Ω). □

Definition 3.3

(Strict Convergence in BH)

Let \((u_{k})_{k\in\mathbb{N}}\) and u belong to BH(Ω). We say that \((u_{k})_{k\in\mathbb{N}}\) converges to u strictly in BH(Ω) if

and

It is easily checked that the function

is a metric and induces the strict convergence in BH(Ω). The following Lemma can be used to compare these two topologies.

Lemma 3.4

Suppose that \((u_{k})_{k\in\mathbb{N}}\), u ∗ belong to BH(Ω) and \((u_{k})_{k\in\mathbb{N}}\) converges to u ∗ strictly in BH(Ω). Then

Proof

We recall from (3.6) that there exist positive constants C 1 and C 2 such that

Since the sequence \((u_{k})_{k\in\mathbb{N}}\) is strictly convergent in BH(Ω), the sequences \((\|u_{k}\|_{L^{1}(\varOmega)})_{k\in\mathbb {N}}\) and \((|D^{2}u_{k}|(\varOmega))_{k\in\mathbb{N}}\) are bounded. Hence, there exists a positive constant C such that

which implies that the sequence \((u_{k})_{k\in\mathbb{N}}\) is bounded in BH(Ω). From the compact embedding of BH(Ω) into W 1,1(Ω) we get that there exists a subsequence \((u_{k_{\ell }})_{\ell\in\mathbb{N}}\) and a function v∈W 1,1(Ω) such that \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to v in W 1,1(Ω). In particular \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to v in L 1(Ω) so v=u ∗ and thus \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to u ∗ in W 1,1(Ω). Since every subsequence of \((u_{k})_{k\in\mathbb {N}}\) is bounded in BH(Ω) we can repeat the same argument and deduce that for every subsequence of \((u_{k})_{k\in\mathbb{N}}\) there exists a further subsequence which converges to u ∗ in W 1,1(Ω). This proves that the initial sequence \((u_{k})_{k\in \mathbb{N}}\) converges to u ∗ in W 1,1(Ω). □

Corollary 3.5

Strict convergence implies weak ∗ convergence in BH(Ω).

3.2 Relaxation of the Second Order Functional

We now extend the functional H in (3.5) to BH(Ω) in the following way:

As we have discussed above, the weak∗ topology in BH(Ω) provides a good compactness property which is inherited from the weak∗ topology in [BV(Ω)]n. However, the functional H ex is not sequentially lower semicontinuous with respect to the strict topology in BH(Ω) and hence it is neither with respect to the weak∗ topology in BH(Ω). Indeed, we can find a function u∈BH(Ω)∖W 2,1(Ω), see [32] for such an example. Hence, from the definition of H ex we have H ex (u)=∞. However, according to Theorem A.3 we can find a sequence \((u_{k})_{k\in\mathbb{N}}\) in W 2,1(Ω) which converges strictly to u. It follows that the sequences \((\|u_{k}\|_{L^{1}(\varOmega)})_{k\in\mathbb{N}}\), \((|D^{2}u_{k}|(\varOmega))_{k\in\mathbb{N}}\) as well as \((\|\nabla u_{k}\| _{L^{1}(\varOmega)})_{k\in\mathbb{N}}\) are bounded. Moreover the sequence \((u_{k})_{k\in\mathbb{N}}\) is bounded in L 2(Ω). Since T is a bounded linear operator and from the fact that f and g have at most linear growth at infinity we deduce that the sequence \((H_{ex}(u_{k}))_{k\in\mathbb{N}}\) is bounded as well. Hence we get

which proves that H ex is not lower semicontinuous with respect to the strict topology in BH(Ω). Thus, we have to identify its lower semicontinuous envelope with respect to the strict convergence. We define the following functional in BH(Ω) :

where ∇2 u, the approximate differential of ∇u, is also the density of D 2 u with respect to the Lebesgue measure, see [4]. It is immediate to see that if u∈W 2,1(Ω) then \(\overline {H}_{ex}(u)=H_{ex}(u)\). Thus is general, \(\overline{H}_{ex}\) is smaller than H ex .

Theorem 3.6

The functional \(\overline{H_{ex}}\) is lower semicontinuous with respect to the strict topology in BH(Ω).

Proof

It is not hard to check that since f is convex and has at most linear growth then it is Lipschitz, say with constant L>0. Let u and \((u_{k})_{k\in\mathbb{N}}\) be functions in BH(Ω) and let \((u_{k})_{k\in\mathbb{N}}\) converge to u strictly in BH(Ω) and thus also weakly∗ in BH(Ω). We have to show that

From the definition of the weak∗ convergence in BH(Ω) we have that \((u_{k})_{k\in\mathbb{N}}\) converges to u in W 1,1(Ω). From the Sobolev inequality, see [40],

we have that \((u_{k})_{k\in\mathbb{N}}\) converges to u in L 2(Ω). Since T:L 2(Ω)→L 2(Ω) is continuous then the map \(u\mapsto\frac{1}{2}\int_{\varOmega}(u_{0}-Tu)^{2}dx\) is continuous and hence we have that

Moreover since \(\|\nabla u_{k}-\nabla u\|_{[L^{1}(\varOmega)]^{2}}\) converges to 0 as k→∞, we have from the Lipschitz property

i.e., we have

Finally we have that D 2 u k →D 2 u weakly∗. We can apply Theorem A.2 for \(\mu_{k}=\mu=\mathcal{L}^{2}\), ν=D 2 u, ν k =D 2 u k and get that

From (3.8), (3.9) and (3.10) we get that

□

Theorem 3.7

The functional \(\overline{H_{ex}}\) is the lower semicontinuous envelope of H ex with respect to the strict convergence in BH(Ω).

Proof

Suppose that \((u_{k})_{k\in\mathbb{N}}\) converges to u strictly in BH(Ω). From the lower semicontinuity of \(\overline{H_{ex}}\) we have that \(\overline{H_{ex}}(u)\le\liminf_{k\to\infty}\overline {H_{ex}}(u_{k})\) and since \(\overline{H_{ex}}\le H_{ex}\) we get that \(\overline{H_{ex}}(u)\le\liminf_{k\to\infty}H_{ex}(u_{k})\). For the other direction Theorem A.3 tells us that given u∈BH(Ω) there exist a sequence \((u_{k})_{k\in\mathbb{N}}\subseteq C^{\infty }(\varOmega)\cap W^{2,1}(\varOmega)\) such that \((u_{k})_{k\in\mathbb{N}}\) converges to u strictly in BH(Ω) and

We have also that \((u_{k})_{k\in\mathbb{N}}\) converges to u in W 1,1(Ω) which implies that

as the proof of Theorem 3.6 shows. From (3.11) and (3.12) we get that

□

Observe that \(\overline{H_{ex}}\) is also the lower semicontinuous envelope of H ex with respect to the weak∗ convergence in BH(Ω).

Let us note here that in fact the relaxation result of Theorem 3.7 follows from a more general relaxation result in [3]. There, the authors solely assume g to be quasi-convex. However, since we consider convex functions the proof we give in this paper is simpler and more accessible to the non-specialist reader.

The proof of the following minimisation theorem follows the proof of the corresponding theorem in [70] for the analogue first order functional. Here we denote with \(\mathcal{X}_{\varOmega}\) the characteristic function of Ω, i.e., \(\mathcal{X}_{\varOmega}(x)=1\), for all x∈Ω and 0 otherwise.

Theorem 3.8

Assuming \(T(\mathcal{X}_{\varOmega})\ne0\), α>0, β>0 then the minimisation problem

has a solution u∈BH(Ω).

Proof

Let \((u_{k})_{k\in\mathbb{N}}\) be a minimising sequence for (3.13) and let C>0 be an upper bound for \((\overline {H_{ex}}(u_{k}))_{k\in\mathbb{N}}\). We have that

for every \(k\in\mathbb{N}\). From the coercivity assumptions (3.3)–(3.4) and from (3.14) we have

for a possibly different constant C. We show that the sequence \((u_{k})_{k\in\mathbb{N}}\) is bounded in L 2(Ω), following essentially [70]. By the Poincaré-Wirtinger inequality there exists a positive constant C 1 such that for every \(k\in\mathbb{N}\)

Thus it suffices to bound |∫ Ω u k dx| uniformly in k. We have for every \(k\in\mathbb{N}\)

It follows that

and thus

since \(T(\mathcal{X}_{\varOmega})\ne0\).

Since the sequence is bounded in L 2(Ω) and Ω is bounded, we have that the sequence is bounded in L 1(Ω) and moreover it is bounded in BH(Ω). From Theorem 3.2 we obtain the existence of a subsequence \((u_{k_{\ell}})_{\ell\in\mathbb {N}}\) and u∈BH(Ω) such that \((u_{k_{\ell}})_{\ell\in\mathbb {N}}\) converges to u weakly∗ in BH(Ω). Since the functional \(\overline{H_{ex}}\) is lower semicontinuous with respect to this convergence we have:

which implies that

□

Let us note here that in the above proof we needed α>0, in order to get an a priori bound in the L 1 norm of the gradient (for β=0 see [70]). However, the proof goes through if α=0 and T is injective. If T is not injective and α=0 it is not straightforward how to get existence. The proof of the following theorem also follows the proof of the corresponding theorem for the first order analogue in [70].

Proposition 3.9

If, in addition to \(T(\mathcal{X}_{\varOmega})\ne0\), T is injective or if f is strictly convex, then the solution of the minimisation problem (3.13) is unique.

Proof

Using the Proposition A.1 in the Appendix we can check that the functional \(\overline{H_{ex}}\) is convex. Let u 1, u 2 be two minimisers. If T(u 1)≠T(u 2) then from the strict convexity of the first term of \(\overline{H_{ex}}\) we have

which is a contradiction. Hence T(u 1)=T(u 2) and if T is injective we have u 1=u 2. If T is not injective but f is strictly convex then we must have ∇u 1=∇u 2 otherwise we get the same contradiction as before. In that case, since Ω is connected, there exists a constant c such that \(u_{1}=u_{2}+c\mathcal{X}_{\varOmega}\) and since \(T(\mathcal{X}_{\varOmega })\ne0\), we get c=0. □

3.3 Stability

To complete the well-posedness picture for (1.1) it remains to analyse the stability of the method. More precisely, we want to know which effect deviations in the data u 0 have on a corresponding minimiser of (1.1). Ideally the deviation in the minimisers for different input data should be bounded by the deviation in the data. Let R be the regularising functional in (1.1), i.e.,

It has been demonstrated by many authors [7, 18, 19, 59] that Bregman distances related to the regularisation functional R are natural error measures for variational regularisation methods with R convex. In particular Pöschl [59] has derived estimates for variational regularisation methods with powers of metrics, which apply to the functional we consider here. However, for demonstration issues and to make constants in the estimates more explicit let us state and prove the result for a special case of (1.1) here.

We consider functional (1.1) for the case s=2. For what we are going to do we assume that one of the regularisers is differentiable. Without loss of generality, we assume that f(s) is differentiable in s. The analogous analysis can be done if g(s) is differentiable or even under weaker, continuity conditions on f and g, see [15]. Let \(\tilde{u}\) be the original image and \(\tilde{u}_{0}\) the exact datum (without noise), i.e. \(\tilde{u}_{0}\) is a solution of \(T\tilde{u}=\tilde{u}_{0}\). We assume that the noisy datum u 0 deviates from the exact datum by \(\|\tilde{u}_{0}-u_{0}\|_{L^{2}}\leq \delta\) for a small δ>0. For the original image \(\tilde{u}\) we assume that the following condition, called source condition, holds

where D(T ∗) denotes the domain of the operator T ∗ and ∂R is the subdifferential of R. Moreover, since f is differentiable and both f and g are convex, the subdifferential of R can be written as the sum of subdifferentials of the two regularisers, i.e.,

see [38, Proposition 5.6., p. 26]. We define the symmetric Bregman distance for the regularising functional R as

Theorem 3.10

Let \(\tilde{u}\) be the original image with source condition (SC) satisfying \(T\tilde{u} = \tilde{u}_{0}\). Let u 0∈L 2(Ω) be the noisy datum with \(\|\tilde{u}_{0}-u_{0}\|_{L^{2}}\leq\delta\). Then a minimiser u of (1.1) fulfils

where the source element q is decomposed in \(q=\alpha q_{\nabla}+ \beta q_{\nabla^{2}}\). Moreover, let u 1 and u 2 be minimisers of (1.1) with data u 0,1 and u 0,2 respectively. Then the following estimate is true

Proof

The optimality condition for (1.1) for s=2 and differentiable f reads

Adding ξ from (SC) and \(T^{*}\tilde{u}_{0}\) we get

Then we use that \(\xi=\alpha\xi_{\nabla}+ \beta\xi_{\nabla^{2}}\) and take the duality product with \(u-\tilde{u}\), which gives

By Young’s inequality and the standard inequality \(\frac{1}{2} (a+b)^{2} \leq a^{2} + b^{2}\) for \(a,b\in\mathbb{R}\), we eventually get

Similarly, we can derive the stability estimate by subtracting the optimality conditions for (1.1) in u 1 and u 2 with datum u 0,1 and u 0,2, respectively and applying Young’s inequality again, we get (3.16). □

Remark 3.11

In the case α=β we obtain the classical form of Bregman error estimates, that is

4 Special Cases and Extensions

In this section we introduce two more versions of the functional \(\overline{H_{ex}}\), the anisotropic version and the version where the L 1 norm appears in the fidelity term.

4.1 The Anisotropic Version

We introduce the anisotropic version of the functional H in (3.5). Note that when f(x)=|x|, g(x)=|x| then the relaxed functional \(\overline{H_{ex}}\) is given by

Its anisotropic analogue is defined for f(x)=|x|1 and g(x)=|x|1. In that case, the relaxed functional is given by

where D i , i=1,2 denotes the distributional derivative with respect to x and y respectively. Since the functional F is obtained for the above choice of f and g, the following theorem holds as a special case of Theorem 3.8:

Theorem 4.1

Assuming \(T(\mathcal{X}_{\varOmega})\ne0\), the minimisation problem

has a solution. If T is injective then the solution is unique.

4.2 L 1 Fidelity Term

We consider here the case with the L 1 norm in the fidelity term, i.e.,

where for simplicity we consider the case f(x)=|x|, g(x)=|x|. As it has been shown in [55] and also studied in [24] and [36], the L 1 norm in the fidelity term leads to efficient restorations of images that have been corrupted by impulse noise.

Theorem 4.2

Assuming \(T(\mathcal{X}_{\varOmega})\ne0\), the minimisation problem

has a solution.

Proof

The proof is another application of the direct method of calculus of variation. Similarly with the proof of Theorem 3.8 we show that any minimising sequence is bounded in L 1(Ω). Hence it is bounded in BH(Ω). Thus we can extract a weakly∗ convergent subsequence in BH(Ω). Trivially, the functional is lower semicontinuous with respect to that convergence. □

Note that in this case the uniqueness of the minimiser cannot be guaranteed since the functional G is not strictly convex anymore, even in the case where T=Id. The versions with general f and g of Theorem 3.8 can be easily extended to the cases discussed in Sects. 4.1 and 4.2.

5 The Numerical Implementation

In this section we work with the discretised version of the functional (3.5) and we discuss its numerical realisation by the so-called split Bregman technique [46]. We start by defining the discrete versions of L 1 and L 2 norms in Sect. 5.1. In Sect. 5.2 we proceed with an introduction to the Bregman iteration which is used to solve constrained optimisation problems, an idea originated in [56]. In [46] the Bregman iteration and an operator splitting technique (split Bregman) is used in order to solve the total variation minimisation problem. In the latter paper it was also proved that the iterates of the Bregman iteration converge to the solution of the constrained problem assuming that the iterates satisfy the constraint in a finite number of iterations. Here, we give a more general convergence result where we do not use that assumption, see Theorem 5.1. Finally in Sect. 5.3 we describe how our problem is solved with the Bregman iteration, using the splitting procedure mentioned above.

5.1 The Discretised Setting

In this section we study the discretisation and minimisation of the functional (3.5). In our numerical examples we consider f(x)=|x|, g(x)=|x| and the data fidelity term is measured in the L 2 norm, i.e.,

In order to discretise (5.1) we specify the corresponding discrete operators and norms that appear in the continuous functional. We denote the discretised version of (5.1) with J. In the discrete setting, u is an element of \(\mathbb{R}^{n\times m}\) and T is a bounded linear operator from \(\mathbb{R}^{n\times m}\) to \(\mathbb{R}^{n\times m}\). For f(x)=|x| and g(x)=|x|, the discrete functional J is given by

where for every \(u\in\mathbb{R}^{n\times m}\), \(v=(v_{1},v_{2})\in (\mathbb{R}^{n\times m} )^{2}\) and \(w=(w_{1},w_{2},w_{3},w_{4})\in (\mathbb{R}^{n\times m} )^{4}\) we define

For the formulation of the discrete gradient and Hessian operators we use periodic boundary conditions and we follow [76]. We also refer the reader to [57] where the form of the discrete differential operators is described in detail. We define ∇ and \(\operatorname{div}\) consistently with the continuous setting as adjoint operators and the same is done for the Hessian ∇2 and its adjoint \(\operatorname{div}^{2}\).

In particular the first and second order divergence operators, div and \(\operatorname{div}^{2}\), have the properties:

where the “⋅” denotes the Euclidean inner product, if we consider u, v and w as large vectors formed successively by the columns of the corresponding matrices.

5.2 Constrained Optimisation and the Bregman Iteration

We now introduce the Bregman and the split Bregman iteration as a numerical method for the solution of (5.2). We would like to recall some basic aspects of the general theory of Bregman iteration before finishing this discussion with the convergence result in Theorem 5.1.

Suppose we have to solve the following constrained minimisation problem:

where the function E is convex and A is a linear map from \(\mathbb {R}^{d}\) to \(\mathbb{R}^{\ell}\). We transform the constrained minimisation problem (5.3) into an unconstrained one, introducing a parameter λ:

where in order to satisfy the constraint Au=b we have to let λ increase to infinity. Instead of doing that we perform the Bregman iteration as it was proposed in [56] and [77]:

Bregman Iteration

In [56], assuming that (5.5) has a unique solution, the authors derive the following facts for the iterates u k :

and

The following theorem was proved in [46] in the case where the iterates of Bregman iteration satisfy the constraint in a finite number of iterations. In other words, it was proved that if for some iterate \(u_{k_{0}}\) we have \(Au_{k_{0}}=b\) then \(u_{k_{0}}\) is a solution to the original constrained problem (5.3). In the following theorem we give a more general proof where we do not use that assumption, something that makes it a genuine contribution to the convergence theory of Bregman iteration.

Theorem 5.1

Suppose that the constrained minimisation problem (5.3) has a unique solution u ∗. Moreover, suppose that the convex functional E is coercive and (5.5) has a unique solution for every k. Then the sequence of the iterates \((u_{k})_{k\in\mathbb {N}}\) of Bregman iteration converges to u ∗.

Proof

We have that the statements (5.7)–(5.10) hold. Moreover, we have for every k,

From (5.10) and the coercivity of E we get that the sequence \((\|u_{k}\|_{2})_{k\in\mathbb{N}}\) is bounded, say by a constant C>0. Thus, it suffices to show that every accumulation point of \((u_{k})_{k\in\mathbb{N}}\) is equal to u ∗. Since Au ∗=b, for every increasing sequence of naturals \((k_{\ell})_{\ell\in \mathbb{N}}\), we have that

Now because of the fact that

we get that

Suppose now that \((u_{k_{\ell}})_{\ell\in\mathbb{N}}\) converges to some \(\tilde{u}\) as ℓ goes to infinity. Then taking into account (5.7), \(\tilde{u}\) also satisfies

Taking limits in (5.11) and using Kronecker’s lemma, see Lemma A.4, we have that the limit in the right hand side of (5.11) is E(u ∗). Thus, we have

and since u ∗ is the solution of the constrained minimisation problem and (5.12) holds, we have \(\tilde{u}=u^{\ast}\). Since every accumulation point of the bounded sequence \((u_{k})_{k\in \mathbb{N}}\) is equal to u ∗, we conclude that the whole sequence converges to u ∗. □

For more information about the use of Bregman iteration in L 1 regularised problems we refer the reader to [46, 56, 77].

5.3 Numerical Solution of Our Minimisation Problem

In this section, we explain how the Bregman iteration (5.5)–(5.6) together with an operator splitting technique can be used to implement numerically the minimisation of functional (5.2). The idea originates from [46] where such a procedure is applied to total variation minimisation and is given the name split Bregman algorithm. This iterative technique is equivalent to certain instances of combinations of the augmented Lagrangian method with classical operator splitting such as Douglas-Rachford, see [64]. We also refer the reader to the paper of Benning, Brune, Burger and Müller [6], for applications of Bregman methods to higher-order regularisation models for image reconstruction.

Exemplarily, we present the resulting algorithm for the minimisation of J in (5.2), i.e., for f(x)=|x|, g(x)=|x| and L 2 data fidelity term. Recall that we want to solve the following unconstrained minimisation problem:

The derivation of the split Bregman algorithm for solving (5.13) starts with the first observation that the above minimisation problem is equivalent to the following constrained minimisation problem:

It is clear that since the gradient and the Hessian are linear operations, the minimisation problem (5.14) can be reformulated into the more general problem:

where \(E:\mathbb{R}^{d}\to\mathbb{R^{+}}\) is convex, A is a d×d matrix and b is a vector of length d=7 nm.

It is easy to see that the iterative scheme of the type (5.5)–(5.6) that corresponds to the constraint minimisation problem (5.14) is:

where \(b_{1}^{k+1}=(b_{1,1}^{k+1},b_{1,2}^{k+1})\in(\mathbb{R}^{n\times m})^{2}\) and \(b_{2}^{k+1}=(b_{2,11}^{k+1}, b_{2,22}^{k+1},b_{2,12}^{k+1},b_{2,21}^{k+1})\in (\mathbb{R}^{n\times m})^{4}\).

Remark 5.2

Notice that at least in the case where T is injective (denoising, deblurring), the minimisation problem (5.16) has a unique solution. Moreover in that case, the functional E is coercive and the constrained minimisation problem (5.14) has a unique solution. Thus, Theorem 5.1 holds.

Our next concern is the efficient numerical solution of the minimisation problem (5.16). We follow [46] and iteratively minimise with respect to u, v and w alternatingly:

Split Bregman for TV-TV2-L2

The above alternating minimisation scheme, make up the split Bregman iteration that is proposed in [46] to solve the total variation minimisation problem as well as problems related to compressed sensing. For convergence properties of the split Bregman iteration and also other splitting techniques we refer the reader to [29, 39, 64]. In [76] and [77] it is noted that the Bregman iteration coincides with the augmented Lagrangian method. Minimising alternatingly with respect to the variables in the augmented Lagrangian method results to the alternating direction method of multipliers (ADMM), see [44]. Thus split Bregman is equivalent to ADMM. In [37] and [44] it is shown that ADMM is equivalent to the Douglas-Rachford splitting algorithm and thus convergence is guaranteed. We refer the reader to [64] for an interesting study in this subject.

We now discuss how we solve each of the minimisation problems (5.19)–(5.21). The problem (5.19) is quadratic and it is solved through its optimality condition. This condition reads:

where T ∗ denotes the adjoint of the discrete operator T. Since all the operators that appear in (5.24) are linear, this condition leads to a linear system of equations with nm unknowns. In [46], one iteration of the Gauss-Seidel method is used to approximate the solution of the corresponding optimality condition of (5.24). However, numerical experiments have shown that in the higher-order case it is preferable and more robust to solve this problem exactly. Since we impose periodic boundary conditions for the discrete differential operators, this can be done efficiently using fast Fourier transform, see [57, 76].

The solutions of the minimisation problems (5.20) and (5.21) can be obtained exactly through a generalised shrinkage method as it was done in [46] and [72]. It is a simple computation to check that if \(a\in\mathbb{R}^{n}\) then the solution to the problem

can be obtained through the following formula:

Each of the objective functionals in (5.20) and (5.21) can be written as a sum of functionals of the same type in (5.25) where n=2,4 respectively. Thus the solution to the problems (5.20) and (5.21) can be computed as follows:

for i=1,…,n and j=1,…,m.

Let us note that the algorithm (5.19)–(5.23) can be easily generalised to colour images, again see [57, 76].

We have performed numerical experiments for image denoising, deblurring and inpainting using the algorithm (5.19)–(5.23). In all of our numerical examples the range of image values is [0,1] (zero for black and one for white).

Notice that different values of λ can result in different speeds of convergence. Also, one can consider having two parameters λ 1 and λ 2 for the first and the second order term respectively. We can easily check that the Bregman iteration converges in this case as well. Even though it is not obvious a priori how to choose λ 1 and λ 2 in order to have fast convergence, this choice only has to be done once and a potential user does not have to worry about them. Empirically we have found that λ 1 and λ 2 have to be a few orders of magnitude larger than α and β respectively. We have found that a good empirical rule is to choose λ 1=10α and λ 2=10β or even λ 1=100α and λ 2=100β . In [11] there is an interesting discussion about that matter. See also [57], where it is shown with numerical experiments for the case of inpainting, how this choice of λ’s results in different speed of convergence and different behaviour of the intermediate iterates.

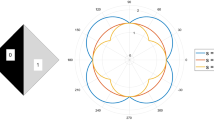

6 Applications in Denoising

In this section we discuss the application of the TV-TV2 approach (5.2) to image denoising, where the operator T equals the identity. We have performed experiments to images that have been corrupted with Gaussian noise, thus the L 2 norm in the fidelity term is the most suitable. We compare our method with infimal convolution [22] solved also with a split Bregman scheme and the total generalised variation [14] solved with the primal-dual method of Chambolle and Pock [23] as it is described in [13]. We present examples of (5.2) for α=0, β≠0 and α≠0, β=0. Note that for β=0, our model corresponds to the classical Rudin-Osher-Fatemi (ROF) denoising model, while for α=0 it corresponds to the pure TV2 restoration [8]. Our basic synthetic test image is shown in Fig. 3.

Our main assessment for the quality of the reconstruction is the structural similarity index SSIM [73, 74]. The reason for that choice is that in contrast to traditional quality measures like the peak signal-to-noise ratio PSNR, the SSIM index also assesses the conservation of the structural information of the reconstructed image. Note that a perfect reconstruction has SSIM value equal to 1. A justification for the choice of SSIM as a good fidelity measure instead of the traditional PSNR can be seen in Fig. 4. The second and the third image are denoising results with the first order method (β=0, Gaussian noise, Variance = 0.5). The middle picture is the one with the highest SSIM value (0.6595) while the SSIM value of the right picture is significantly lower (0.4955). This assessment comes into an agreement with the human point of view since, even though this is subjective, one would consider the middle picture as a better reconstruction. On the other hand the middle picture has slightly smaller PSNR value (14.02) than the right one (14.63), which was the highest PSNR value. Similar results are obtained for β≠0.

Justification for the usage of SSIM index. The initial image, seen on Fig. 3, is contaminated with Gaussian noise of variance 0.5 (left). We provide the best SSIM valued (middle) (SSIM=0.6595, PSNR=14.02) and the best PSNR valued (right) (SSIM=0.4955, PSNR=14.63) reconstructions among reconstructed images with the first order method (β=0). The better SSIM assessment of the first image agrees more with the human perception

As a stopping criterium for our algorithm we use a predefined number of iterations. In most examples this number is 300. We observe that after 80–100 iterations the relative residual of the iterates is of the order of 10−3 or lower (see also Table 1) and hence no noticeable change in the iterates is observed after that.

In the following we shall examine whether the introduction of the higher-order term (β≠0) in the denoising procedure, produces results of higher quality.

The noise has been produced with MATLAB’s built in function imnoise.

Figure 5 depicts one denoising example, where the original image is corrupted with Gaussian noise of variance 0.005. For better visualisation, we include the middle row slices of all the reconstructions in Fig. 6. The highest SSIM value for TV denoising is achieved for α=0.12 (SSIM=0.8979) while the highest one for TV-TV2 is achieved for α=0.06, β=0.03 (SSIM=0.9081). This is slightly better than infimal convolution (SSIM=0.9053). Note, however, that this optimal combination of α and β in terms of SSIM does not always correspond to best visual result. In general, the latter corresponds to a slightly bigger β than the one chosen by SSIM, see Fig. 5(h). Still, for proof of concept, we prefer to stick with an objective quality measure and SSIM, in our opinion, is the most reliable choice for that matter. In the image of Fig. 5(h) the staircasing effect has almost disappeared and the image is still pleasant to the human eye despite being slightly more blurry. This slight blur, which is the price that the method pays for the removal of the staircasing effect, can be easily and efficiently removed in post-processing using simple sharpening filters, e.g. in GIMP. We did that in Fig. 5(i), also adjusting the contrast, achieving a very good result both visually and SSIM-wise (0.9463).

Corresponding middle row slices of images in Fig. 5

The highest SSIM value is achieved by TGV (0.9249), delivering a reconstruction of very good quality. However, TGV converges slowly to the true solution. In order to check that, we compute the ground truth solution (denoted by GT) for the parameters of the TGV problem that correspond to Fig. 5(d), by taking a large amount of iterations (2000). We check the GPU time that is needed for the iterates to have a relative residual

and we do the same for the TV-TV2 example of Fig. 5(f). For TGV, it takes 1297 iterations (primal-dual method [23]) and 36.25 seconds while for TV-TV2 it takes 86 split Bregman iterations and 4.05 seconds, see Table 1. That makes our method more suitable for cases where fast but not necessarily optimal results are needed, e.g. video processing.

In order to examine the quality of the reconstructions that are produced from each method as the number of iteration increases we have plotted in Fig. 7 the evolution of SSIM values. In the horizontal axis, instead of the number of iterations we put the absolute CPU time calculated by the product of the number of iterations times the CPU time per iteration as it is seen in Table 1. We observe that, for TGV, the SSIM value increases gradually with time, while for the methods solved with split Bregman the image quality peaks very quickly and then remains almost constant except for TV where the staircasing appears in the later iterations.

Evolution of the SSIM index with absolute CPU time for the examples of Fig. 5. For TV denoising the SSIM value peaks after 0.17 seconds (0.9130) and the after it drops sharply when the staircasing appears, see corresponding comments on [46]. For TV-TV2 the peak appears after 1.08 seconds (0.9103) and remains essentially constant. The TGV iteration starts to outperform the methods after 1.89 seconds. This shows the potential of split Bregman to produce visually satisfactory results before convergence has occurred, in contrast with the primal-dual method (Color figure online)

Next we check how the SSIM and PSNR values of the restored images behave as a function of the weighting parameters α and β. In Fig. 8 we plot the results for α=0,0.02,0.04,…,0.3 and β=0,0.005,0.01,…,0.1. The plots suggest that both quality measures behave in a continuous way and that they have a global maximum. However, PSNR tends to rate higher those images that have been processed with a small value of β or even with β=0 which is not the case for SSIM. An explanation for that is that higher values of β result to a further loss of contrast, see Fig. 9, something that is penalised by the PSNR. The SSIM index penalises the loss of contrast as well but it also penalises the creation of the staircasing effect. This is another indication for the suitability of SSIM over PNSR. Note also, that the contrast can be recovered easily in a post-processing stage while it is not an easy task to reduce the staircasing effect using conventional processing tools.

Plot of the SSIM and PSNR values of the restored image as functions of α and β. For display convenience all the values under 0.85 (SSIM) and 26 (PSNR) were coloured with dark blue. The dotted cells corresponds to the highest SSIM (0.9081) and PSNR (32.39) value that were achieved for α=0.06, β=0.03 and α=0.06, β=0.005 respectively. Note that the first column in both plots corresponds to TV denoising, (β=0). The original image was corrupted with Gaussian noise of variance 0.005 (Color figure online)

Left: Middle row slices of reconstructed images with α=0.12, β=0 (blue colour) and α=0.12. β=0.06 (red colour). Slices of the original image are plotted with black colour. Right: Detail of the first plot. Even though the higher-order method eliminates the staircasing effect, it also results to further slight loss of contrast (Color figure online)

Finally, in Fig. 10 we perform denoising in an natural image which has been corrupted with Gaussian noise also of variance 0.005. The staircasing of TV denoising (SSIM=0.8168) is obvious in Figs. 10(c) and (i). The overall best performance of the TV-TV2 method (SSIM=0.8319) is achieved by choosing α=β=0.017, Fig. 10(d). However, one can get satisfactory results by choosing α=β=0.023 (SSIM=0.8185), eliminating further the staircasing without blurring the image too much, compare for example the details in the Figs. 10(j) and (k).

7 Applications in Deblurring

In our deblurring implementation T denotes a circular convolution with a discrete approximation of a Gaussian kernel (σ=2, size: 11×11 pixels). The blurred image is also corrupted by additive Gaussian noise of variance 10−4. Let us note here that the optimality condition (5.24) can be solved very fast using fast Fourier transforms. Deblurring results are shown in Fig. 11 and the corresponding middle row slices in Fig. 12. As in the denoising case the introduction of the second order term with a small weight β decreases noticeably the staircasing effect, compare Figs. (11)(c) and (f). Moreover, we can achieve better visual results if we increase further the value of β without blurring the image significantly, Fig. (11)(g). Infimal convolution does not give a satisfactory result here, Fig. 11(e). TGV gives again the best qualitative result, Fig. (11)(d), but the computation takes about 10 minutes. Even though the time comparison is not completely fair here (the implementation described in [13] does not use FFT) it takes a few thousands iterations for TGV to deblur the image satisfactorily, in comparison with a few hundreds for our TV-TV2 method.

Corresponding middle row slices of images in Fig. 11

In Fig. 13 we discuss the performance of the TV-TV2 method for deblurring a natural image. The best result for the TV-TV2 method (SSIM=0.8361) is achieved with α=0.0005 and β=0.0001, Fig. 13(d). As in the case of denoising, one can increase the value of β slightly, eliminating further the staircasing effect, Figs. 13(e) and (k). The additional blur which is a result of the larger β can be controlled using a sharpening filter, Figs. 13(f) and (l).

8 Applications in Inpainting

Finally, we present examples for the application of our TV-TV2 approach to image inpainting. There, the goal is to reconstruct an image inside a missing part using information from the intact part. The missing part is a domain D⊆Ω, known as the inpainting domain. In this case the operator T applied to an image u gives

where as before \(\mathcal{X}_{\varOmega\setminus D}\) is the characteristic function of Ω∖D, the intact part of the image domain. Let us note here that in cases of a small number of inpainting domains and a completely noise free and trustable image outside of the missing part, it is preferable to solve the inpainting problem only inside the holes, see for example [21]. However, here we would like to keep the method flexible, such that it includes the case where there is noise in the known regions as well.

In order to take advantage of the FFT for the optimality condition (5.24) a different splitting technique to (5.14) is required:

such that \(u=\tilde{u}\), \(v=\nabla\tilde{u}\), \(w= \nabla^{2}\tilde {u}\). We refer the reader to [57] for the details.

In Fig. 14 we compare our method with harmonic and Euler’s elastica inpainting, see [25, 67]. In the case of harmonic inpainting the regulariser is the square of the L 2 norm of the gradient ∫ Ω |∇u|2 dx. In the case of Euler’s elastica the regulariser is

The minimisation of the Euler’s elastica energy corresponds to the minimisation of the length and curvature of the level lines of the image. Thus, this method is able to connect large gaps in the inpainting domain, see Fig. 14(d). However, the term (8.2) is non-convex and thus difficult to be minimised. In order to implement Euler’s elastica inpainting we used the augmented Lagrangian method, proposed in [67]. There, the leading computational cost per iteration is due to the solution of one linear PDE and a system of linear PDEs (solved with FFT as well), in comparison to our approach which consists of one linear PDE only. Hence, in Table 1, we do not give absolute computational times as that would not be fair even more so because we did not optimise the Euler’s elastica algorithm with respect to the involved parameters. Moreover, it should be emphasised that the solution of TV-TV2 inpainting amounts to solve a convex problem while Euler’s elastica inpainting is a non-convex model.

In Fig. 14 we see that, in contrast to harmonic and TV inpainting, TV2 inpainting is able to connect big gaps with the price of a blur, which can be controlled using a shock filter [2], see Fig. 14(g). Notice that one has to choose α small or even 0, in order to make the TV2 term dominant, compare Figs. 14(e) and (f).

We also observe that in the TV2 case, the ability to connect large gaps depends on the size and geometry of the inpainting domain, see Fig. 15 and also see [57] for more examples. Deriving sufficient conditions on the size and geometry of the inpainting domain for this connectivity to happen is a matter of future research.

Finally, in Fig. 16 we compare TV, TV2 and Euler’s elastica, for its application to removing text (of large font) from a natural image. TV inpainting, Fig. 16(b) gives unsatisfactory results, by producing piecewise constant results inside the inpainting domain, while the TV2 and Euler’s elastica results are comparable and seem to be visually closer to the true solution than the TV inpainted image.

9 Comparison with Other Higher-Order Methods

In the case of denoising and deblurring we compared our method with TGV, which we consider a state of the art method in the field of higher-order image reconstruction in the variational context. Indeed, in both image reconstruction tasks, TGV gives better qualitative results, in terms of the SSIM index. However the computational time that was needed to obtain the TGV result solved with the primal-dual method is significantly more than the one that is needed to compute the TV-TV2 method using split Bregman, see Table 1. We also show that with simple and fast post-processing techniques we can obtain results comparable with TGV. For these reasons, we think that the TV-TV2 approach is in particular interesting for applications in which the speed of computation matters. Regarding the comparison with inf-convolution, our method is slightly faster and results in better reconstructions in deblurring while in denoising the results are comparable. As far as inpainting is concerned, we compared our method (essentially the pure TV2 approach) with the Euler’s elastica, a higher-order variational method which is capable of giving very good results, by connecting large gaps in the inpainting domain. However, the regulariser there is non convex, something that could make the minimisation process produce a local minimiser instead of a global one. In TV2 the regulariser—regarded as a convex simplification of the Euler’s elastica idea—it has the ability to connect large gaps and the slight blur that is produced can be reduced by using a shock filter see for example [2, 45] and Fig. 14(g). Moreover as we pointed out in the previous sections, our approach is computationally less expensive compared to TGV for image denoising and deblurring and Euler elastica for image inpainting.

10 Conclusion

We formulate a second order variational problem in the space of functions of bounded Hessian in the context of convex functions of measures. We prove existence and uniqueness of minimisers using a relaxation technique as well as stability. We propose the use of the split Bregman method for the numerical solution of the analogue discretised problem. The application of the split Bregman method to our model is quite robust and is converging after a few iterations. We perform numerical experiments by denoising images that have been corrupted by Gaussian noise, deblurring images that have been convoluted with Gaussian kernels, as well as in image inpainting.

In the case of denoising and deblurring, the introduction of the second order term leads to a significant reduction of the staircasing effect resulting in piecewise smooth images rather than piecewise constant images when using the ROF model. The superiority of an approach that combines first and second order regularisation rather than first order regularisation only, is confirmed quantitatively by the SSIM index. In the case of inpainting the higher-order method is able to connect edges along large gaps, a task that TV-inpainting is incapable of solving.

In summary, our approach is a simple and convex higher-order extension of total variation regularisation that improves the latter by reducing the staircasing effect in image denoising and deblurring, and by more faithfully respecting the good continuation principle in image inpainting. It can compete with other higher-order methods of its kind by giving almost comparable qualitative results while computing them in a fraction of time.

As fas as future work is concerned, a (not necessarily rigorous) rule for selecting the parameters α and β would be useful. Investigating the links with inf-convolution and spatially dependent regularisation on our model is also of interest. Moreover, the relation between the continuum and the discretised model could be investigated through Γ-convergence arguments, see [30] and [12]. Finally the characterisation of subgradients of this approach and the analysis of solutions of the corresponding PDE flows for different choices of functions f and g promises to give more insight into the qualitative properties of this regularisation procedure. The characterisation of subgradients will also give more insight to properties of exact solutions of the minimisation of (1.1) concerning the avoidance of the staircasing effect.

References

Acar, R., Vogel, C.R.: Analysis of bounded variation penalty methods for ill-posed problems. In: Inverse Problems, pp. 1217–1229 (1994)

Alvarez, L., Mazorra, L.: Signal and image restoration using shock filters and anisotropic diffusion. SIAM J. Numer. Anal. 31(2), 590–605 (1994)

Amar, M., Cicco, V.: Relaxation of quasi-convex integrals of arbitrary order. Proc. R. Soc. Edinb., Sect. A, Math. 124(05), 927–946 (1994)

Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford University Press, Oxford (2000)

Benning, M.: Singular Regularization of Inverse Problems (2011)

Benning, M., Brune, C., Burger, M., Müller, J.: Higher-order TV methods—Enhancement via Bregman iteration. J. Sci. Comput. 54(2–3), 269–310 (2013)

Benning, M., Burger, M.: Error estimates for general fidelities. Electron. Trans. Numer. Anal. 38(44–68), 77 (2011)

Bergounioux, M., Piffet, L.: A second-order model for image denoising. Set-Valued Var. Anal. 18(3–4), 277–306 (2010)

Bertozzi, A., Esedoglu, S., Gillette, A.: Analysis of a two-scale Cahn-Hilliard model for binary image inpainting. Multiscale Model. Simul. 6(3), 913–936 (2008)

Bertozzi, A.L., Greer, J.B.: Low-curvature image simplifiers: global regularity of smooth solutions and Laplacian limiting schemes. Commun. Pure Appl. Math. 57(6), 764–790 (2004)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Braides, A.: Γ-Convergence for Beginners. Oxford Lecture Series in Mathematics and Its Applications. (2002)

Bredies, K.: Recovering piecewise smooth multichannel images by minimization of convex functionals with total generalized variation penalty. Preprint (2012). http://math.uni-graz.at/mobis/publications/SFB-Report-2012-006.pdf

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3, 1–42 (2009)

Bredies, K., Lorenz, D.: Mathematische Bildverarbeitung: Einführung in Grundlagen und moderne Theorie. Vieweg+Teubner, Wiesbaden (2011)

Bredies, K., Valkonen, T.: Inverse problems with second-order total generalized variation constraints. In: Proceedings of SampTA 2011—9th International Conference on Sampling Theory and Applications, Singapore (2011)

Burger, M., He, L., Schönlieb, C.: Cahn-Hilliard inpainting and a generalization for gray value images. SIAM J. Imaging Sci. 2(4), 1129–1167 (2009)

Burger, M., Osher, S.: Convergence rates of convex variational regularization. Inverse Probl. 20, 1411 (2004)

Burger, M., Resmerita, E., He, L.: Error estimation for Bregman iterations and inverse scale space methods in image restoration. Computing 81(2), 109–135 (2007)

Buttazzo, G., Freddi, L.: Functionals defined on measures and applications to non equi-uniformly elliptic problems. Ann. Mat. Pura Appl. 159(1), 133–149 (1991)

Cai, J., Chan, R., Shen, Z.: A framelet-based image inpainting algorithm. Appl. Comput. Harmon. Anal. 24(2), 131–149 (2008)

Chambolle, A., Lions, P.: Image recovery via total variation minimization and related problems. Numer. Math. 76, 167–188 (1997)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chan, T., Esedoglu, S.: Aspects of total variation regularized L 1 function approximation. SIAM J. Appl. Math. 65(5), 1817–1837 (2005)

Chan, T., Kang, S., Shen, J.: Euler’s elastica and curvature-based inpainting. SIAM J. Appl. Math. 63(2), 564–592 (2002)

Chan, T., Marquina, A., Mulet, P.: High-order total variation-based image restoration. SIAM J. Sci. Comput. 22(2), 503–516 (2001)

Chan, T.F., Esedoglu, S., Park, F.: Image decomposition combining staircase reduction and texture extraction. J. Vis. Commun. Image Represent. 18(6), 464–486 (2007)