Abstract

We employ a Kansa-radial basis function (RBF) method for the numerical solution of elliptic boundary value problems in annular domains. This discretization leads, with an appropriate selection of collocation points and for any choice of RBF, to linear systems in which the matrices possess block circulant structures. These linear systems can be solved efficiently using matrix decomposition algorithms and fast Fourier transforms. A suitable value for the shape parameter in the various RBFs used is found using the leave-one-out cross validation algorithm. In particular, we consider problems governed by the Poisson equation, the inhomogeneous biharmonic equation and the inhomogeneous Cauchy–Navier equations of elasticity. In addition to its simplicity, the proposed method can both achieve high accuracy and solve large-scale problems. The feasibility of the proposed techniques is illustrated by several numerical examples.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

During the past decade, radial basis function (RBF) collocation methods have attracted great attention in scientific computing and have been widely applied to solve a large class of problems in science and engineering. Among the various types of RBF collocation methods, the Kansa method [15], proposed in early 1990s is the most known. One of the main attractions of this method is its simplicity, since in it neither a boundary nor a domain discretization are required. This feature is especially useful for solving high dimensional problems in complex geometries. Furthermore, we have the freedom of choosing an RBF as the basis function which does not necessary satisfy the governing equation. Hence, the Kansa method is especially attractive for solving nonhomogeneous equations. In general, the multiquadric (MQ) function is the most commonly used RBF in the implementation of the Kansa method. Using the MQ, an exponential convergence rate has been observed and thus high accuracy beyond the reach of the traditional numerical methods such as finite element and finite difference methods can be achieved. Despite all the advantages mentioned above, the Kansa method has a number of unfavorable features. It is known that the determination of the optimal shape parameter of various RBFs is still a challenge. Currently, there are a variety of techniques [5, 11, 16, 26, 32] available for the determination of a suitable shape parameter. In this paper, we will use the so called leave-one-out cross validation (LOOCV) proposed by Rippa [32] to find a sub-optimal shape parameter. Another potential problem of the RBF collocation methods is the ill-conditioning of the resultant matrix. On the one hand, when the number of interpolation points is increased, the accuracy improves, while, on the other hand, the condition number becomes larger. This phenomenon is referred to as the principle of uncertainty by Schaback [33, 34]. Eventually, when the number of interpolation points becomes too large, the condition number becomes enormous and the solution breaks down. When using globally supported RBFs such as the MQ, the resultant matrix of the Kansa method is dense and ill-conditioned. Not only do we have a stability problem, but also the computational cost becomes very high. The Kansa method is thus not suitable for the solution of large-scale problems which require the use of a large number of interpolation points. In recent years, the localized Kansa method [27] has been developed for handling large-scale problems. In this approach, a local influence domain is established for each interpolation point and only a small number of neighbouring points is used to approximate the solution. The resultant matrix is thus sparse allowing for the use of a large number of collocation points. However, the accuracy of the localized Kansa method is rather low because the local influence domain only contains a small number of interpolation points.

In this paper, we couple the Kansa method and a matrix decomposition technique for solving problems using a large number of interpolation points. Since global RBFs are used as basis functions, we can also achieve high accuracy in numerical results. To be more specific, we consider the discretization of elliptic boundary value problems in annular domains using the Kansa method. For any choice of RBF, such appropriate discretizations lead to linear systems in which the coefficient matrices possess block circulant structures and which are solved efficiently using matrix decomposition algorithms (MDAs). An MDA [1] is a direct method which reduces the solution of an algebraic problem to the solution of a set of independent systems of lower dimension with, often, the use of fast Fourier Transforms (FFTs). MDAs lead to considerable savings in computational cost and storage. This decomposition technique not only allows us to handle large-scale matrices but also makes it possible to implement the LOOCV technique to find the sub-optimal shape parameter of the RBFs used. It should be noted that the LOOCV technique is not suitable when the size of the matrix is too large. Such MDAs have been used in the past in various applications of the method of fundamental solutions (MFS) to boundary value problems in geometries possessing radial symmetry, see e.g., [17, 18]. A similar MDA was also applied for the approximation of functions and their derivatives using RBFs in circular domains in [21], see also, [13]. Some preliminary results for the Dirichlet Poisson problem have recently been presented in [20].

The paper is organized as follows. In Sect. 2 we present the Kansa method and the proposed MDA for both the Dirichlet and the mixed Dirichlet–Neumann Poisson problem. The Kansa-RBF discretization for the first and second biharmonic problems is given in Sect. 3. The corresponding discretization and MDA for the Dirichlet and the mixed Dirichlet–Neumann boundary value problems for the more challenging Cauchy–Navier equations of elasticity is described in Sect. 4. In Sect. 5 we present the results of three numerical experiments. In the first example various RBFs are tested and the normalized MQ turns out to be the most effective one. As a result, in the other examples, we focus on using the normalized MQ. We also show that the LOOCV technique is very effective for finding a good shape parameter of the RBFs. All three examples show that we can solve large-scale problems with high accuracy. Finally, in Sect. 6 some conclusions and ideas for future work are outlined.

2 The Poisson Equation

2.1 The Problem

We first consider the Poisson equation

subject to the Dirichlet boundary conditions

in the annulus

The boundary \(\partial \Omega = \partial \Omega _1\cup \partial \Omega _2\), \(\partial \Omega _1\cap \partial \Omega _2=\emptyset \) where \(\partial \Omega _1=\left\{ {\varvec{x} } \in {\mathbb {R}}^2: |{\varvec{x} }|={\varrho }_1 \right\} \) and \(\partial \Omega _2=\left\{ {\varvec{x} } \in {\mathbb {R}}^2: |{\varvec{x} }|={\varrho }_2 \right\} \).

2.2 Kansa’s Method

In Kansa’s method [15] we approximate the solution \(u\) of boundary value problem (2.1) by a linear combination of RBFs [3, 7]

The RBFs \(\phi _\mathsf{n}(x,y), \mathsf{n}=1, \ldots , \mathsf{N}\) can be expressed in the form

Thus each RBF \(\phi _\mathsf{n}\) is associated with a point \((x_\mathsf{n}, y_\mathsf{n})\). These points \(\left\{ (x_\mathsf{n},y_\mathsf{n})\right\} _{\mathsf{n}=1}^{\mathsf{N}}\) are usually referred to as centers.

The coefficients \(\left\{ a_{\mathsf{n}}\right\} _{\mathsf{n}=1}^{\mathsf{N}}\) in Eq. (2.3) are determined from the collocation equations

where \({\mathsf{M}}_{\mathrm{int}}+{\mathsf{M}}_{\mathrm{bry}} = {\mathsf{M}}\) and the points \(\left\{ (x_\mathsf{m},y_\mathsf{m})\right\} _{\mathsf{m}=1}^{\mathsf{M}} \in \bar{\Omega }\) are the collocation points. Note that, in general, the collocation points are not the same as the centers and \({\mathsf{M}}\ge {\mathsf{N}}\).

In this work, however, we shall assume that \({\mathsf{M}} = {\mathsf{N}}\) and that the centres are the same as the collocation points. In particular, we define the \(M\) angles

and the \(N\) radii

The collocation points \(\left\{ (x_{mn}, y_{mn})\right\} _{m=1,n=1}^{M,N}\) are defined as follows:

In (2.8) the parameters \(\left\{ \alpha _n\right\} _{n=1}^N \in [-1/2, 1/2]\) correspond to rotations of the collocation points and may be used to produce more uniform distributions. Typical distributions of collocation points without rotation (\(\alpha _n=0, n=1,\ldots ,n\)) and with rotation are given in Fig. 1. In the current application of Kansa’s method, we take

where the \({\mathsf{M}}=MN\) coefficients \(\left\{ ({a}_{mn})\right\} _{m=1,n=1}^{M,N}\) are unknown. These coefficients are determined by collocating the differential equation (2.1a) and the boundary conditions (2.1b)–(2.1c) in the following way:

yielding a total of \({\mathsf{M}}=MN\) equations.

By vectorizing the arrays of unknown coefficients and collocation points from

Equation (2.10) yield a linear system of the form

The \(M\times M\) submatrices \(A_{n_1,n_2}, \; n_1,n_2=1, \ldots , N\) are defined as follows:

while the \(M\times 1\) vectors \({\varvec{a} }_n, {\varvec{b} }_n, n=1, \ldots , N\) are defined as

2.2.1 Neumann Boundary Conditions

Suppose that instead of boundary condition (2.1b) we had the Neumann boundary condition

where \(\mathbf{n}(x,y)=(\mathrm{n}_x, \mathrm{n}_y)\) denotes the outward normal vector to the boundary at the point \((x,y)\). The corresponding submatrices \(\left( A_{1,n}\right) , \, n=1, \ldots , N\), in (2.13b) are now defined by

As proved in the “Appendix” (Lemma 1) each of the \(M\times M\) submatrices \(A_{n_1,n_2}, \; n_1, n_2=1, \ldots , N\), in the coefficient matrix in (2.12) is circulant [6]. Hence matrix \(A\) in system (2.12) is block circulant.

2.3 Matrix Decomposition Algorithm

First, we define the unitary \(M \times M\) Fourier matrix

If \(I_N\) is the \(N \times N\) identity matrix, pre–multiplication of system (2.12) by \(I_N \otimes U_M\) yields

where

and

From the properties of circulant matrices [6], each of the \(M \times M\) matrices \(D_{n_1,n_2}, \; n_1, n_2 = 1, \cdots , N\), is diagonal. If, in particular

we have, for \(n_1, n_2 = 1, \cdots , N\),

Since the matrix \(\tilde{A}\) consists of \(N^2\) blocks of order \(M\), each of which is diagonal, the solution of system (2.17) can be decomposed into solving the \(M\) independent systems of order \(N\)

where

and

Having obtained the vectors \({\varvec{x} }_m, \; m=1, \cdots , M\), we can recover the vectors \(\tilde{{\varvec{a} }}_n, \; n=1, \cdots , N\) and, subsequently, the vector \({\varvec{a} }\) from (2.19), i.e.

In conclusion, the MDA can be summarized as follows:

In Steps 1, 2 and 4 FFTs are used while the most expensive part of the algorithm is the solution of \(M\) linear systems, each of order \(N\). The FFTs are carried out using the MATLAB\(^{\copyright }\) [35] commands fft and ifft. In addition to the savings in computational cost, considerable savings in storage are achieved since only one row of the circulant matrices involved needs to be stored.

3 The Biharmonic Equation

3.1 The Problem

We next consider the biharmonic equation

subject to the boundary conditions

where \(\Omega \) is the annulus (2.2). This problem is known as the first biharmonic problem.

3.2 Kansa’s Method

We approximate the solution \(u\) of boundary value problem (3.1) by (2.9).

The coefficients are determined by collocating the differential equation (3.1a) and the boundary conditions (3.1b)–(3.1c) in the following way:

yielding a total of \(MN\) equations.

By vectorizing the arrays of unknown coefficients and collocation points via (2.11), Eq. (3.2) yield a system of the form (2.12). In this case, the \(M\times M\) submatrices \(A_{n_1,n_2}, \; n_1,n_2=1, \ldots , N\) are defined as follows:

while the \(M\times 1\) vectors \({\varvec{a} }_n, {\varvec{b} }_n, n=1, \ldots , N\) are defined as

3.2.1 Second Biharmonic Problem

Suppose that instead of boundary conditions (3.1b)–(3.1c) we had the boundary conditions

This problem is known as the second biharmonic problem.

The corresponding submatrices \(\left( A_{2,n}\right) , \, \left( A_{N-1,n}\right) , \, n=1, \ldots , N\), in (3.3) are now defined by

As shown in the “Appendix” (Lemma 2), as in the case of the Poisson equation in Sect. 2.2, each of the \(M\times M\) submatrices \(A_{n_1,n_2}, \; n_1, n_2=1, \ldots , N\), is circulant and hence the matrix \(A\) is block circulant. The resulting system can therefore be solved efficiently using the MDA described in Sect. 2.3.

4 The Cauchy–Navier Equations of Elasticity

4.1 The Problem

We finally consider the Cauchy–Navier system for the displacements \((u_1, u_2)\) in the form (see, e.g. [12])

subject to the Dirichlet boundary conditions

where \(\Omega \) in the annulus (2.2). In (4.1a) the constant \(\nu \in [0, 1/2)\) is Poisson’s ratio and \(\mu >0\) is the shear modulus.

4.2 Kansa’s Method

We approximate the solution \((u_1, u_2)\) of boundary value problem (3.1) by \((u_{MN}^{(1)}, u_{MN}^{(2)})\) where

and the \(2MN\) coefficients \(\left\{ ({a}_{mn}^{(\ell )})\right\} _{m=1,n=1}^{M,N}, \; \ell =1,2,\) are unknown.

The unknown coefficients are determined by collocating the differential equations (4.1a) and the boundary conditions (4.1b)–(4.1c) in the following way:

yielding a total of \(2MN\) equations.

By vectorizing the arrays of unknown coefficients and collocation points as in (2.11), Eq. (4.3) yield a \(2 M N \times 2 M N\) system of the form (2.12). The \(2M\times 2M\) submatrices \(A_{n_1,n_2}, \; n_1,n_2=1, \ldots , N\) are now defined as follows: (note that we are now defining the matrix and vector elements in (2.12) as \(2\times 2\) and \(2\times 1\) arrays, respectively)

while the \(2M\times 1\) vectors \({\varvec{a} }_n, {\varvec{b} }_n, n=1, \ldots , N\) are defined as

4.2.1 Neumann Boundary Conditions

Suppose that instead of the Dirichlet boundary conditions (4.1b) we had the Neumann boundary conditions

where \((t_1, t_2)\) are the tractions defined by [12]

In this case, we have, instead of (4.4b), that the submatrices \(\left( A_{1,n}\right) _{m_1,m_2}, m_1,m_2=1,\ldots ,M, \; n=1, \ldots , N,\) are defined by

with all the quantities in (4.6) evaluated at the boundary point \((x_{m_1,1},y_{m_1,1})\).

In contrast to the Poisson and biharmonic cases, matrix \(A\) in (2.12) does not possess a block circulant structure. However, as described in the context of the MFS, in e.g., [23–25] a block circulant structure can be obtained by means of a simple transformation.

4.3 Matrix Decomposition Algorithm

We introduce the \(2M \times 2M\) matrix

where

Since clearly \(R_{{\vartheta }_k}^2=I_2\) then \(R^2=I_{2N}\).

We premultiply the \(2MN\times 2MN\) system (2.12) (\(A{\varvec{a} } = {\varvec{b} }\)) by the \(2MN\times 2MN\) matrix \(I_N \otimes R\) to get

where

The \(2MN\times 2MN\) matrix \(\tilde{A}\) can be written as

where each of the \(2M\times 2M\) submatrices \(\tilde{A}_{m,\ell }=RA_{m,\ell }R\).

Moreover, keeping in mind that the elements \(\left( \tilde{A}_{n_1,n_2}\right) _{m_1,m_2}=\left( \left( \tilde{A}_{n_1,n_2}\right) _{m_1,m_2}\right) _{i,j=1}^2\) are \(2 \times 2\) arrays, we have

Further, as proved in the “Appendix” (Lemma 3), each submatrix \(\tilde{A}_{n_1,n_2}, n_1, n_2=1, \ldots , N\), has a block \(2 \times 2\) block circulant structure. The \(2MN\times 1\) vectors \(\tilde{{\varvec{a} }}, \tilde{{\varvec{b} }}\) are written as

where the \(2M \times 1\) subvectors \(\tilde{{\varvec{a} }}_n, \tilde{{\varvec{b} }}_n, n=1,\ldots N\), are defined by \(\tilde{{\varvec{a} }}_n =R {\varvec{a} }_n, \tilde{{\varvec{b} }}_n =R {\varvec{b} }_n\) and the \(2 \times 1\) subvectors \(((\tilde{{\varvec{a} }}_n)_m)_{i=1}^2, \; ((\tilde{{\varvec{b} }}_n)_m)_{i=1}^2,\; m=1,\ldots , M\), are defined by

We next rewrite system (4.8) in the form

where the \(MN \times MN\) matrices \(B_{ij}, i,j =1,2,\) are expressed in the form

Each \(M\times M\) submatrix \(\tilde{B}_{n_1,n_2}^{ij}, i,j =1,2, \; n_1,n_2=1, \dots , N,\) is circulant and defined from

Also, the \(MN\times 1\) vectors \({\varvec{c} }_i, {\varvec{d} }_i, i=1,2,\) are defined from

where

We premultiply system (4.11) by the matrix \(I_2 \otimes I_N \otimes U_M\) to get

or

where

where for \(i=1,2\)

The matrices \(\tilde{B}_{ij}, i,j =1,2\) are given from

and since each of the matrices \({B}_{ij}, i,j =1,2\) is block circulant, from (2.18) it follows that

where each \(M \times M\) matrix \(D_{n_1,n_2}^{ij}, n_1, n_2=1, \ldots , N,\) is diagonal.

More specifically, if

we have, for \(n_1, n_2 = 1, \cdots , N\),

Since each matrix \(\tilde{B}_{ij}, i,j =1,2,\) consists of \(N^2\) blocks of order \(M\) each of which is diagonal, the solution of system (4.15) can be decomposed into solving the \(M\) systems of order \(2N\)

where

and

From the vectors \({\varvec{x} }_i^m, i=1,2; m=1, \ldots , M\) we can obtain the vectors \(p_1, p_2\) and the vectors \(c_1, c_2\), and subsequently the vector \(\tilde{{\varvec{a} }}\), before finally obtaining the vector \({\varvec{a} }\).

The MDA, in this case, can be summarized as follows:

In Steps 3, 4 and 6 FFTs are used while the most expensive part of the algorithm is the solution of \(M\) linear systems, each of order \(2N\). Again, substantial savings in storage are obtained as only the first line of the circulant matrices involved needs to be constructed and stored.

5 Numerical Examples

In all numerical examples considered, we took collocation points described by \(\alpha _{n}=(-1)^{n}/4, n=1, \ldots , N\) (cf. (2.8)). The inner and outer radii of the annular domain \(\Omega \) are \({\varrho }_1 = 0.3, {\varrho }_2 = 1\), respectively. We calculated the maximum relative error \(E\) over \(\mathcal {M}\mathcal {N}\) test points in \(\overline{\Omega }\) defined by

Unless otherwise stated, we choose \(\mathcal {N}=25, \mathcal {M}=50\) so that the test points are different than the collocation points. The maximum relative error \(E\) is defined as

The proper selection of an RBF is crucial in obtaining good accuracy. In the first example, we test various commonly used RBFs and then choose the best one to perform the tests for the remaining examples. The determination of a suitable shape parameter remains a major issue in the many applications of RBFs involving such parameters. As we have mentioned earlier, numerous techniques have been proposed for the selection of an appropriate shape parameter. In this work, we apply the so-called LOOCV (leave-one-out cross validation) algorithm proposed by Rippa [32] for the identification of a suitable shape parameter. The MATLAB\(^{\copyright }\) codes for LOOCV can be found in [9]. The MATLAB\(^{\copyright }\) function fminbnd is used to find the minimum of a function of one variable within a fixed interval. Since fminbnd is a local minimizer, an initial guess of the lower and upper bounds denoted by min and max needs to be provided so that the search takes place in the interval [min, max]. One of the attractive features of using LOOCV is that we do not need to know the exact solution of the given problem for the selection of a (sub-optimal) shape parameter. Typically, in the problems considered, there are \(M\) systems of equations of order \(N\) to be solved, see, e.g., (2.21). It is clearly not cost-effective to apply the LOOCV to each of the \(M\) systems and determine the sub-optimal shape parameter for each. We therefore only apply the LOOCV to a randomly chosen system among the \(M\) systems. This adds \(O(N^3)\) computational complexity to the proposed technique. The sub-optimal shape parameter thus obtained is then used for all the other systems. This technique, as shown by the results, appears to be working well.

All numerical computations were carried out on a MATLAB\(^{\copyright }\) 2010a platform in OS Windows 7 (32 bit) with Intel Core(TM) i5 2.4 GHz CPU and 4 GB memory.

We have considered the following numerical examples.

5.1 Example 1

We consider the Poisson equation (2.1a) with a Neumann boundary condition prescribed on \(\partial \Omega _1\) and a Dirichlet boundary condition prescribed on \(\partial \Omega _2\). The boundary conditions correspond to the exact solution which is given by \(u=\hbox {e}^{x+y}\).

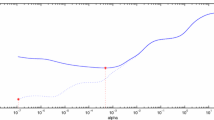

We first perform some tests with the normalized multiquadric (MQ) basis functions

where \(c\) is the shape parameter. In Fig. 2, we present the maximum relative error in \(u\) versus the shape parameter \(c\) for the case when \(M=128, N=64\) using the normalized MQ basis functions. From this figure, we can observe that the optimal shape parameter is \(c = 5.208\) and the corresponding error is \(2.940(-8)\). The results in Table 1 are obtained using the LOOCV algorithm for various search intervals [min, max]. When comparing the results in Fig. 2 and Table 1, we can see that the (sub-optimal) shape parameter results obtained for various search intervals are very stable and satisfactorily close to the optimal shape parameter. In terms of accuracy, the errors obtained using the LOOCV algorithm are comparable to the ones obtained using the optimal shape parameter. Moreover, in order to obtain the optimal shape parameter in Fig. 2, one needs to know the exact solution. In contrast, to obtain the sub-optimal shape parameter using the LOOCV algorithm in Table 1, it is not necessary to know the exact solution a priori. The results obtained for the Dirichlet problem (2.1) are very similar.

In Table 2, we present the results obtained using various numbers of collocation points \(M = N\). It can be seen that the sub-optimal shape parameter becomes larger when the density of the collocation points increases.

In Fig. 3 we present the error convergence plots for \((M,N) = (16,8), (32,16), (64,32)\) and \((128, 64)\) for varying \(c\). From these plots we observe that as the number of degrees of freedom increases, the accuracy improves.

We also compared the performance of the proposed MDA Kansa-RBF versus the full Kansa-RBF solution in which the full system (2.12) is solved. In Table 3, we present the errors \(E\), CPU times and condition numbers for the full Kansa-RBF and the corresponding quantities using the proposed MDA Kansa-RBF for various numbers of degrees of freedom, using LOOCV. The most time consuming part in both approaches is the search of a suitable shape parameter using LOOCV, in which systems are solved repeatedly. This makes the advantage of the MDA Kansa-RBF even more pronounced as, for example, the solution of the full Kansa system for \(M=N=50\) once requires 2.82 s while with LOOCV the method requires 55.68 s. Clearly, the use of LOOCV with the MDA Kansa approach does not slow the process as considerably. Note that for \(M=N=100\) and 150 the size of the full Kansa-RBF matrices becomes prohibitive. From the values of the condition numbers in the two approaches we may infer that the Principle of Uncertainty [32,33] is more pronounced for the full Kansa-RBF than the MDA Kansa-RBF. The sub-optimal shape parameters are not given in Table 3 since the focus is on the CPU time and accuracy. Similar results were observed for the other examples considered.

Next, we examine and compare the performance of different RBFs using the proposed MDA. The Matérn RBF [30, 31]

where \(n\in \mathbb {Z}, K_n\) is the modified Bessel function of second kind with order \(n\) and \(c\) is the shape parameter, is known as a highly effective basis function. In [31], the Kansa method using the Matérn RBF was applied to solve Poisson’s equation. For large-scale problems, domain decomposition was applied to decompose the domain into smaller domains so that the Kansa method can be applied. In this work, instead of domain decomposition, we use matrix decomposition to handle large-scale problems. For (2.1a) with Dirichlet boundary conditions, the profiles of the maximum relative error versus the shape parameter for the Matérn RBF of orders \(n=4, 5, 6,\) and 7, with \(M=128, N=64\), are shown in Fig. 4. As can be observed, as the order increases so does the value of the optimal shape parameter. Furthermore, the stability of the curve deteriorates as the order becomes higher due to an increase in the higher condition number. As shown in Table 4, when using the Matérn of order 6, the results for various search intervals are compatible with the results in Table 1. Since the value of the optimal shape parameter increases with the order of the Matérn RBF, we use different search intervals for different orders. In Table 5, we present the sub-optimal shape parameters and the corresponding errors for various orders of the Matérn RBF. The accuracy obtained using the Matérn RBF of various orders and the LOOCV algorithm is very close to the results obtained using the actual optimal shape parameters as shown in Fig. 4. Even though the results are highly accurate, the drawback of using the Matérn RBF is the high cost of computation due to the presence of the special function \(K_n(cr)\).

The Gaussian RBF

is another popular and widely used RBF. As shown in Fig. 5, we see a very different profile of the shape parameter versus the maximum relative error, for problem (2.1). The errors are fluctuating between \(10^{-4}\) and \(10^{-6}\). Despite this irregularity, we obtain excellent results using the LOOCV algorithm, as shown in Table 6. We note that all the local minima in Fig. 5 have similar accuracy. The MATLAB\(^{\copyright }\) function fminbnd is capable of finding the local minima and, hence, as we can see in Table 6, for different search intervals we may obtain quite different sub-optimal shape parameters and yet the obtained accuracy is very satisfactory. In conclusion, however, it is preferable to use one of the previous two RBFs which are more predictable in finding a suitable shape parameter.

Finally, we tested the performance of the inverse multiquadric (IMQ) RBF

for the same example. The results obtained were very similar to those obtained using the normalized MQ RBF but the best accuracy recorder in this case was slightly worse at around \(10^{-7}\).

Among all the RBFs tested in this example, we conclude that the normalized MQ is the best in terms of efficiency, stability, and accuracy. As a result, we will only consider the normalized MQ as an RBF for the next two examples.

Remark 1

We also considered a distribution of the collocation points which renders their concentration near the boundaries denser (see, e.g., [10]). In particular, instead the of the \(N\) uniformly distributed radii defined by (2.7) we used the \(N\) Chebyshev–Gauss–Lobatto points on the interval \([{\varrho }_1,{\varrho }_2]\), defined by

while the angles are still defined by (2.6) and the collocation points by (2.8). Extensive experimentation revealed no significant difference in the accuracy of the results with the uniform distribution and the Chebyshev–Gauss–Lobatto point distribution. Moreover, it was observed that there was no significant difference between the size of the errors near the boundaries and the interior of the domain.

5.2 Example 2

We next consider the first biharmonic boundary value problem (3.1) corresponding to the exact solution \(u = \sin (\pi x) \cos (\pi y/2)\). In Table 7 we present the sub-optimal shape parameters and their corresponding errors using the LOOCV algorithm with various search intervals for the case \(M=128, N=64\). As in Example 1, the results we obtained are very stable irrespective to the initial search interval. Without prior knowledge of the exact solution, we can find a good shape parameter and obtain accuracy which is fairly close to the optimal accuracy, as shown in Fig. 6.

In Table 8 we present the sub-optimal shape parameters and errors in \(u\) for various values of \(M = N\) using the LOOCV algorithm for the case of Dirichlet and Neumann boundary conditions. The initial search internal is set to [0, 20] and obtain stable and accurate results. We also run a similar test for the corresponding second biharmonic boundary value problem (3.1a), (3.4) and (3.5) in which the initial search interval was set to [0, 10]. The results are shown in Table 9.

5.3 Example 3

We finally consider a mixed boundary value problem for the Cauchy–Navier equations of elasticity given by (4.1), (4.5) and (4.1c) and corresponding to the exact solution \(u_{1}=\mathrm{e}^{x+y}\), \(u_{2}=\sin (x+y)\). We take Poisson’s ratio and the shear modulus to be \(\nu =0.3\) and \(\mu =1\), respectively. In Fig. 7 we present the errors in \(u_{1}\) and \(u_{2}\) for various numbers of degrees of freedom versus the shape parameter \(c\) when the tractions are prescribed on \(\partial \Omega _{1}\) (cf. Sect. 4.2.1). In this case, the optimal shape parameter is \(c=5.216\) and the corresponding errors in \(u_{1}\) and \(u_2\) are \(3.530(-8)\) and \(1.059(-7)\), respectively. Note that the errors \(E_1\) and \(E_2\) in \(u_{1}\) and \(u_2\) are defined using (5.2).

In Table 10 we present results obtained when applying the LOOCV algorithm to find the sub-optimal shape parameter and the corresponding errors in \(u_1\) and \(u_2\). Overall, accurate results are obtained for various initial search intervals. If we choose the interval properly, the accuracy can be very close to the optimal one as shown in Fig. 7. In Table 11 we present results obtained using various \(M=N\) for a search interval of [0,20]. We also considered the corresponding Dirichlet boundary value problem (4.1) and in Table 12 we present results obtained using various \(M=N\) for a search interval of [0,20] which are very similar to those in Table 11. Moreover, these results are consistent with the corresponding results obtained in Examples 1 and 2.

6 Conclusions

We have applied a Kansa-RBF method for the solution of elliptic boundary value problems in annular domains. With an appropriate choice of collocation points, the discretization of such problems governed by the Poisson, inhomogeneous biharmonic or the Cauchy–Navier equations of elasticity leads to linear systems in which the coefficient matrices are either block circulant or can be easily transformed into block circulant matrices. Thus these systems may be solved efficiently using MDAs leading to substantial savings in computational cost and storage. In addition, a major advantage of the proposed technique, other than its simplicity, is that it is applicable for any RBF and, as shown in our numerical tests, it is applicable to large-scale problems achieving high accuracy. Moreover, the use of the LOOCV algorithm enables us to select a good shape parameter which is critical to ensure appropriate numerical accuracy. We would like to indicate that the proposed method can be extended to solving a large class of partial differential equations without difficulty. The algorithm proposed in this paper can be easily applied to other RBF collocation methods [4, 8, 29]. In the spirit of reproducible research and for the convenience of interested readers the MATLAB\(^{\copyright }\) code for Example 1, as described by Algorithm 1 in Sect. 2.3, maybe accessed at [14].

Possible areas of future research include

-

The application of the method using compactly supported RBF, see, e.g., [2].

-

The application of the method using a localized RBF collocation, see, e.g., [28].

-

The extension of the current method for the solution of three-dimensional axisymmetric elliptic boundary value problems, see, e.g., [22].

-

The extension of the current method to problems in which the type of boundary conditions alternates on the circular segments of the boundary, see, e.g., [19].

References

Bialecki, B., Fairweather, G., Karageorghis, A.: Matrix decomposition algorithms for elliptic boundary value problems: a survey. Numer. Algorithms 56, 253–295 (2011)

Buhmann, M.D.: A new class of radial basis functions with compact support. Math. Comput. 70, 307–318 (2001)

Buhmann, M.D.: Radial Basis Functions: Theory and Implementations, Cambridge Monographs on Applied and Computational Mathematics, vol. 12. Cambridge University Press, Cambridge (2003)

Chen, C.S., Fan, C.M., Wen, P.H.: The method of particular solutions for solving certain partial differential equations. Numer. Methods Partial Differ. Equ. 28, 506–522 (2012)

Cheng, A.H.-D.: Multiquadric and its shape parameter—a numerical investigation of error estimate, condition number, and round-off error by arbitrary precision computation. Eng. Anal. Bound. Elem. 36, 220–239 (2012)

Davis, P.J.: Circulant Matrices, 2nd edn. AMS Chelsea Publishing, Providence (1994)

Fasshauer, G.E.: Meshfree Approximation Methods with MATLAB, Interdisciplinary Mathematical Sciences, vol. 6. World Scientific Publishing Co., Pte. Ltd., Hackensack, NJ (2007)

Fasshauer, G.E.: Solving partial differential equations by collocation with radial basis functions. In: Le Mehaute, A., Rabut, C., Schumaker, L.L. (eds.) Surface Fitting and Multiresolution Methods, pp. 131–138. Vanderbilt University Press, Nashville, TN (1997)

Fasshauer, G.E., Zhang, J.G.: On choosing optimal shape parameters for RBF approximation. Numer. Algorithms 45, 345–368 (2007)

Fornberg, B., Larsson, E., Flyer, N.: Stable computations with Gaussian radial basis functions. SIAM J. Sci. Comput. 33(2), 869–892 (2011)

Fornberg, B., Wright, G.: Stable computation of multiquadric interpolants for all values of the shape parameter. Comput. Math. Appl. 48(5–6), 853–867 (2004)

Hartmann, F.: Elastostatics. In: Brebbia, C.A. (ed.) Progress in Boundary Element Methods, vol. 1, pp. 84–167. Pentech Press, London (1981)

Heryudono, A.R.H., Driscoll, T.A.: Radial basis function interpolation on irregular domain through conformal transplantation. J. Sci. Comput. 44, 286–300 (2010)

Kansa, E.J.: Multiquadrics–a scattered data approximation scheme with applications to computational fluid-dynamics. II. Solutions to parabolic, hyperbolic and elliptic partial differential equations. Comput. Math. Appl. 19(8–9), 147–161 (1990)

Kansa, E.J., Hon, Y.C.: Circumventing the ill-conditioning problem with multiquadric radial basis functions: applications to elliptic partial differential equations. Comput. Math. Appl. 39(7–8), 123–137 (2000)

Karageorghis, A.: Efficient Kansa-type MFS algorithm for elliptic problems. Numer. Algorithms 54, 261–278 (2010)

Karageorghis, A.: Efficient MFS algorithms for inhomogeneous polyharmonic problems. J. Sci. Comput. 46, 519–541 (2011)

Karageorghis, A.: The method of fundamental solutions for elliptic problems in circular domains with mixed boundary conditions. Numer. Algorithms 68, 185–211 (2015)

Karageorghis, A., Chen, C.S.: A Kansa-RBF method for Poisson problems in annular domains. In: Brebbia, C.A., Cheng, A.H.-D. (eds.) Boundary Elements and Other Mesh Reduction Methods XXXVII, pp. 77–83. WIT Press, Southampton (2014)

Karageorghis, A., Chen, C.S., Smyrlis, Y.-S.: A matrix decomposition RBF algorithm: approximation of functions and their derivatives. Appl. Numer. Math. 57, 304–319 (2007)

Karageorghis, A., Chen, C.S., Smyrlis, Y.-S.: Matrix decomposition RBF algorithm for solving 3D elliptic problems. Eng. Anal. Bound. Elem. 33(12), 1368–1373 (2009)

Karageorghis, A., Marin, L.: Efficient MFS algorithms for problems in thermoelasticity. J. Sci. Comput. 56, 96–121 (2013)

Karageorghis, A., Smyrlis, Y.-S.: Matrix decomposition MFS algorithms for elasticity and thermo-elasticity problems in axisymmetric domains. J. Comput. Appl. Math. 206, 774–795 (2007)

Karageorghis, A., Smyrlis, Y.-S., Tsangaris, T.: A matrix decomposition MFS algorithm for certain linear elasticity problems. Numer. Algorithms 43, 123–149 (2006)

Larsson, E., Fornberg, B.: A numerical study of some radial basis function based solution methods for elliptic PDEs. Comput. Math. Appl. 46, 891–902 (2003)

Lee, C.K., Liu, X., Fan, S.C.: Local multiquadric approximation for solving boundary value problems. Comput. Mech. 30, 396–409 (2003)

Li, M., Chen, W., Chen, C.S.: The localized RBFs collocation methods for solving high dimensional PDEs. Eng. Anal. Bound. Elem. 37, 1300–1304 (2013)

Mai-Duy, N., Tran-Cong, T.: Indirect RBFN method with thin plate splines for numerical solution of differential equations. CMES Comput. Model. Eng. Sci. 4, 85–102 (2003)

Matérn, B.: Spatial Variation. Lecture Notes in Statistics, vol. 36, 2nd edn. Springer, Berlin (1986)

Mouat, C.T.: Fast Algorithms and Preconditioning Techniques for Fitting Radial Basis Functions, Ph.D. thesis, Mathematics Department, University of Canterbury, New Zealand (2001)

Rippa, S.: An algorithm for selecting a good value for the parameter \(c\) in radial basis function interpolation. Adv. Comput. Math. 11, 193–210 (1999)

Schaback, R.: Error estimates and condition numbers for radial basis function interpolation. Adv. Comput. Math. 3, 251–264 (1995)

Schaback, R.: Multivariate interpolation and approximation by translates of a basis function. In: Chiu, C.K., Schumaker, L.L. (eds.) Approximation Theory VIII, vol. 1, pp. 491–514. World Scientific Publishing Co., Inc, Singapore (1995)

The MathWorks Inc, 3 Apple Hill Dr., Natick, MA, Matlab

Acknowledgments

The authors wish to thank the referees for their helpful comments and suggestions which resulted in an improved manuscript.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

We consider the RBFs \(\phi _{mn}, \, m=1, \ldots , M, n=1, \ldots , N\). These satisfy, see, e.g., [7, Appendix D],

It can be easily seen that

and

Also,

and

In the sequel, we shall be using the notation (cf. (2.8))

We shall also need the normal derivatives on the boundary \(\partial \Omega _1\) which are given by

We next state and prove the following lemmata:

Lemma 1

For any radial function, each submatrix \(\left( {A}_{n_1,n_2}\right) \) in (2.12) possesses a circulant structure.

Proof

We first consider the submatrices \(\left( {A}_{n_1,n_2}\right) , \; n_1=2, \ldots , N-1, \; n_2=1, \ldots , N,\) which are defined by (2.13a). From (6.6), \(\Delta \phi _{m_2,n_2}\) is an RBF, i.e. it is a function of \(r_{m_2,n_2}\) (cf. (6.1)). Moreover,

shows that for fixed \(n_1, n_2\), the quantity \(r_{m_2,n_2}(x_{m_1,n_1},y_{m_1,n_1})\) only depends on \(({\vartheta }_{m_1}-{\vartheta }_{m_2})\), i.e. \(({m_1}-{m_2})\) and therefore the submatrices are circulant.

The proof that the submatrices \(\left( {A}_{n_1,n_2}\right) , \; n_1=1, N, \; n_2=1, \ldots , N\), defined by (2.13b)–(2.13c), are circulant in the case of Dirichlet boundary conditions is identical since their elements involve the RBFs \(\phi _{m_2,n_2}\), which only depend on \(r_{m_2,n_2}\).

In the case of a Neumann boundary condition on \(\partial \Omega _1\), however, we have that the submatrices \(\left( {A}_{1,n}\right) , \; n=1, \ldots , N\), are defined by (2.15), or

and from (6.2) and (6.9) we obtain

Ignoring the radial part, we examine

which, again, only depends on \((m_2-m_1)\). Hence, this part is also radial and the corresponding submatrices are circulant. \(\square \)

Following identical arguments we also arrive at the corresponding result for the biharmonic case.

Lemma 2

For any radial function, each submatrix \(\left( {A}_{n_1,n_2}\right) \) in (2.12) corresponding to collocation scheme (3.2), for both the first and the second biharmonic problem, possesses a circulant structure.

Finally, we consider the corresponding result for the Cauchy–Navier equations of elasticity.

Lemma 3

For any radial function, each submatrix \(\left( \tilde{A}_{n_1,n_2}\right) \) in (4.9) possesses a \(2 \times 2\) block circulant structure.

Proof

In order to prove the lemma we need to show that, see, e.g. [23],

provided \(m_1+m, m_2+m \le M\). In case \(m_1+m > M\), \(m_1+m\) is replaced by \(m_1+m-M\) and in case \(m_2+m > M\), \(m_2+m\) is replaced by \(m_2+m-M\).

Since \(R_{{\vartheta }_k}^2=I_2\) proving (6.11) is equivalent to proving

However,

and

Hence proving (6.12) is equivalent to proving that

From (4.4a), for \(m_1,m_2=1,\ldots ,M, \; n_1=2, \ldots , N-1, \; n_2=1, \ldots , N\), we can write

We first consider the first term in (6.14), namely \(\mu \Delta \phi _{m_2,n_2}(x_{m_1,n_1},y_{m_1,n_1}) I_2\).

We clearly have that

since the Laplacian of \(\phi _{m_2,n_2}(x,y)\) is radial from (6.6) and

Therefore, the first term in (6.14) satisfies (6.13).

For the second term in (6.14), from (6.3)–(6.5) and ignoring the radial parts we only need show that

By performing the multiplications on the left hand side of (6.16) we obtain that it is equal to

Moreover, it can be easily shown that

and

from which (6.16) follows. Hence, the second term in (6.14) also satisfies (6.13).

We next need to prove that

Since from (4.4b) we can write

from (6.15), following a similar argument to the one used for the first term of (6.14), (6.17) follows.

Finally, we show that if instead of the displacements \(u_1, u_2\) we have that the tractions \(t_1, t_2\) are prescribed on, say, the boundary \(\partial \Omega _1\), cf. Sect. 4.2.1, then

is still true.

We write

where \(T_{ij}, i,j=1,2,\) are the appropriate quantities in (4.6).

We can easily show that

We shall first show that the first term in (4.6) is radial. Using the notation of (6.8) with \(n_1=1\), we have from (6.2) that

and ignoring the radial factor, we have, using (6.10) that

which only depends on \((m_2-m_1)\), hence it is radial.

We next consider the second term in (4.6). Dropping the multiplying constants and the obviously radial parts, and with the appropriate notation, we consider the quantities

By using (6.9), we first consider the term

We next consider

Similarly, we have that

Finally,

Therefore (6.18) is satisfied and the proof is complete. \(\square \)

Rights and permissions

About this article

Cite this article

Liu, XY., Karageorghis, A. & Chen, C.S. A Kansa-Radial Basis Function Method for Elliptic Boundary Value Problems in Annular Domains. J Sci Comput 65, 1240–1269 (2015). https://doi.org/10.1007/s10915-015-0009-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-015-0009-4

Keywords

- Radial basis functions

- Poisson equation

- Biharmonic equation

- Cauchy–Navier equations of elasticity

- Fast Fourier transforms

- Kansa method