Abstract

Adolescent problem gambling prevalence rates are reportedly five times higher than in the adult population. Several school-based gambling education programs have been developed in an attempt to reduce problem gambling among adolescents; however, few have been empirically evaluated. The aim of this review was to report the outcome of studies empirically evaluating gambling education programs across international jurisdictions. A systematic review was conducted following the guidelines outlined in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Statement, searching five academic databases: PubMed, Scopus, Medline, PsycINFO, and ERIC. A total of 20 papers and 19 studies were included after screening and exclusion criteria were applied. All studies reported intervention effects on cognitive outcomes such as knowledge, perceptions, and beliefs. Only nine of the studies attempted to measure intervention effects on behavioural outcomes, and only five of those reported significant changes in gambling behaviour. Among these five, methodological inadequacies were common, including brief follow-up periods, lack of control comparison in post hoc analyses, and inconsistencies and misclassifications in the measurement of gambling behaviour, including problem gambling. Based on this review, recommendations are offered for the future development and evaluation of school-based gambling education programs relating to both methodological and content design and delivery considerations.

Introduction

Prevalence

Despite age restrictions, the literature reports most Australians gamble prior to age 15 (Delfabbro et al. 2005, 2009a, b; Purdie et al. 2011; Splevins et al. 2010). While the majority gamble recreationally, studies have reported the prevalence of problem gambling among adolescent subpopulations is three to ten times that of adults (Derevensky and Gupta 2000; Gupta et al. 2013; Purdie et al. 2011; Splevins et al. 2010; Welte et al. 2008). Additionally, adolescents are more likely to gamble on the Internet (Olason et al. 2011), which may place them at risk for more severe harms compared to those who gamble on land-based forms (Griffiths and Barnes 2008).

Such high rates have generated substantial interest in developing and implementing preventive measures among children and adolescent populations. While some are administered outside of schools (e.g., in youth centres, community initiatives, juvenile justice system), the majority of preventive educational programs have been carried out in primary and high school settings, either incorporated into education curricula, or offered as stand-alone workshops. Despite the effort and expenditure directed toward their delivery, few programs have been assessed and evaluated.

A recent review of gambling education programs criticised the lack of long-term follow-ups and behavioural measures in program evaluations (Ladouceur et al. 2013). These authors concluded that, at best, current programs are effective at reducing misconceptions and increasing knowledge about gambling in the short term, but their longitudinal impact on gambling-related harms and the incidence of gambling disorders remains unknown (Ladouceur et al. 2013). The current review adhered to the stringent systematic search protocols recommended in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al. 2009). It adds seven studies to those reviewed by Ladouceur et al. (2013) and provides an updated resource for the gambling education sector.

Universal Prevention Versus Targeted Intervention

Ladouceur and colleagues argued that gambling education programs generally adopt one of two approaches: universal or targeted (Ladouceur et al. 2013). Universal prevention programs are aimed at youth, regardless of risk or gambling status. In contrast, targeted programs are aimed specifically at at-risk or problem gamblers. The presumed benefit of the latter is that such interventions can be directed and specifically tailored to those needing them most. The disadvantage is that a proportion of unidentified problem gamblers may not be offered appropriate support. Tailored programs more closely represent treatment options for at-risk groups, whereas universal programs can be seen as genuine primary prevention initiatives. This review focuses solely on primary prevention programs, and so targeted approaches are not discussed.

Although evidence suggests gambling from an early age is associated with more severe gambling problems (Jiménez-Murcia et al. 2010), longitudinal studies have reported that adolescent problem gambling does not predict such behaviours in adulthood (Delfabbro et al. 2009, 2014; Slutske et al. 2003). Despite inconsistent findings that risk factors in adolescents and children predict adult gambling problems, the available evidence indicates that exposure to multiple factors and experiences in the formative stages of adolescent development can shape subsequent attitudes, cognitions and behaviours in adulthood (Sroufe et al. 2010). Although the mechanism of impact remains unclear, there is a basis for arguments favouring the implementation of early intervention preventative educational programs in schools.

Irrespective of which approach is adopted, there are general guidelines for program development to increase potential effectiveness. Nation et al. (2003) reviewed prevention strategies in substance use, sexual health, school failure, and delinquency. These authors identified nine characteristics of effective interventions: (1) comprehensive coverage of material; (2) inclusion of varied teaching methods; (3) provision of sufficient dosage; (4) theoretical justification; (5) establishment of positive relationships; (6) appropriate timing; (7) socio-cultural relevance; (8) inclusion of outcome evaluations; and (9) well-trained staff (Nation et al. 2003). It is argued that although community-based initiatives and treatment centres have the ability to deliver gambling education to youth, schools appear to have the necessary resources and capacity to meet the above requirements.

The aim of this systematic review was to evaluate existing school-based gambling education programs and offer recommendations for improving both research methodology and program effectiveness.

Current Review

This systematic review follows the checklist and flow diagram outlined in the PRISMA Statement (Moher et al. 2009). This review located and critically assessed studies evaluating school-based gambling education programs among youth. Studies were sought that sampled children and adolescents attending primary or high school.

Methodology

Initial Search

The original search was conducted on 20 January 2016. Five databases were searched: PubMed, Scopus, Medline, PsycINFO, and ERIC. The search terms included: gambling, adolescent, teen, child, youth, student, program, intervention, awareness, prevention, school, evaluation, education, and curriculum, as well as all derivatives of the words. No date filter was applied, as it was important to maximise the search for all possible evidence pertaining to gambling education programs.

Selection Criteria

Inclusion criteria: The research had to:

a) Empirically evaluate a gambling education program; and
b) Evaluate a program that was administered/implemented in a school setting; and
c) Involve some form of quantitative analysis of pre-post intervention scores; and
d) Report on primary data; and
e) Sample youth attending primary or high school.

Exclusion criteria: Studies were excluded if they:

a) Were not available as full text; or
b) Could not be obtained in English; or
c) Were reviews, or conceptual or opinion pieces reporting no original data; or
d) Reported on programs or interventions that were:
   (a) Carried out in a therapeutic setting; or
   (b) A media campaign or public policy; or
   (c) A public announcement; or
   (d) A stand-alone website; or
e) Only reported on qualitative data; or
f) Sampled participants attending colleges or universities.

Grey literature, including government reports, industry-commissioned documents, unpublished theses, and conference proceedings, was included in the review to reduce the risk of publication bias.

Results

Study Selection

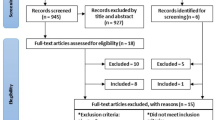

The original search yielded over 6000 references; however only 69 were retained for review (see Fig. 1). Retained studies were included if they appeared relevant based on their title and abstract. Two independent reviewers assessed all these 69 articles and applied the specific inclusion and exclusion criteria. A total of 54 papers were subsequently excluded as not meeting inclusion criteria, leaving 15 included articles. Following this, a snowball method was used to search the references contained in included articles to locate any further studies. Seven additional papers were located, five of which met inclusion criteria, resulting in a total sample of 20 papers (Fig. 1). Inter-rata reliability between the two reviewers was high, with initial agreement on 95.65 % of papers, κ = 0.905 (95 % CI 0.800–1.00). It should be noted that while 20 papers were included, only 19 studies were reviewed as two papers reported data from the same study. Information for the 19 reviewed studies is summarised in Table 1.

Consort diagram adapted from (Zorzela et al. 2016)
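The inter-rater agreement statistics reported for the screening stage can be illustrated with a minimal sketch of percent agreement and Cohen's kappa for two reviewers making include/exclude decisions. The four cell counts below are hypothetical and were chosen only so that 66 of 69 decisions agree (which corresponds to 95.65 % agreement); the review does not report the underlying table, so the resulting kappa is not expected to match the published value.

```python
def cohens_kappa(both_include, only_a, only_b, both_exclude):
    """Return (observed agreement, Cohen's kappa) for a 2x2 decision table."""
    n = both_include + only_a + only_b + both_exclude
    observed = (both_include + both_exclude) / n
    # Marginal proportion of "include" decisions for each reviewer.
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    # Agreement expected by chance if the reviewers decided independently.
    expected = a_include * b_include + (1 - a_include) * (1 - b_include)
    return observed, (observed - expected) / (1 - expected)


# Hypothetical cell counts (NOT taken from the review): 66 of 69 agree.
observed, kappa = cohens_kappa(both_include=15, only_a=2, only_b=1, both_exclude=51)
print(f"agreement = {observed:.2%}, kappa = {kappa:.3f}")
```

Kappa is lower than raw agreement because it discounts the agreement two reviewers would reach by chance given how often each of them chooses "include".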

Study Characteristics

All programs reviewed were carried out in a school setting. The majority of studies were cluster randomised controlled trials, and grouped students either by class or school. Participants were aged between 10 and 18 years old, and analysis samples ranged from 75 to 8455. Nine of the 19 programs provided one intervention session (Ferland et al. 2002; Ladouceur et al. 2003, 2004, 2005; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Taylor and Hillyard 2009; Turner et al. 2008; Walther et al. 2013), two programs provided two to three sessions (Donati et al. 2014; Ferland et al. 2005), and eight of the programs provided more than three sessions (Canale et al. 2016; Gaboury and Ladouceur 1993; Lupu and Lupu 2013; Todirita and Lupu 2013; Turner et al. 2008; Williams 2002; Davis 2003; Williams et al. 2004, 2010). Individual sessions lasted between 20 and 120 min, total program time ranged from 20 to 500 min (M = 194.71, SD = 3.08; based on the 17 studies that reported session duration), and programs were delivered over one to ten sessions (M = 3.53, SD = 3.08). All studies measured cognitive outcomes such as knowledge, perceptions, or beliefs, but only nine measured behavioural outcomes (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2005; Gaboury and Ladouceur 1993; Turner et al. 2008; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010). Study characteristics are described in Table 1. Measures of effect size (Cohen’s d) are presented where possible; values of 0.2–0.3 represent a small effect, 0.5 a medium effect, and ≥0.8 a large effect (Cohen 1992).
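As an illustration of how the effect sizes referred to above are typically obtained, the following sketch computes Cohen's d from two group summaries using the pooled standard deviation and attaches a verbal label. The group means, standard deviations, and sample sizes are hypothetical. Treating the benchmarks as lower bounds of bands (so that, for example, 0.4 is labelled small) is an assumption made here for simplicity, since the benchmarks themselves leave intermediate values unlabelled.

```python
from math import sqrt


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Standardised mean difference using the pooled standard deviation."""
    pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / sqrt(pooled_var)


def label_effect(d):
    """Map |d| onto Cohen's benchmarks, read as band thresholds (assumption)."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Hypothetical post-test knowledge scores: intervention vs control.
d = cohens_d(mean_1=7.5, sd_1=2.0, n_1=100, mean_2=6.5, sd_2=2.0, n_2=100)
print(round(d, 2), label_effect(d))
```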

Quality Assessment of Selected Papers

Studies were assessed for quality using the Quality Assessment Tool for Quantitative Studies (National Collaborating Centre for Methods and Tools 2008). Each study was assigned a rating of weak, moderate, or strong on measures of selection bias, study design, confounding variables, blinding, data collection, and withdrawals and dropouts, and was given an overall global rating. Validity and reliability properties for this measure meet acceptable standards. Content validity was assessed using an iterative process with an expert panel. Test–retest reliability was calculated twice by two reviewers and was good for both (Cohen’s Kappa = 0.74 and 0.61, respectively) (Thomas et al. 2004). Results of quality assessment by component can be seen in Table 2.

General Limitations of the Adolescent Gambling Education Literature

Design

The lack of a behavioural outcome measure was the most common methodological weakness found in ten out of 19 studies. These studies limited their outcome measures to cognitive changes, primarily in the short-term (Ferland et al. 2002; Ladouceur et al. 2003, 2004, 2005; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Taylor and Hillyard 2009; Todirita and Lupu 2013; Turner et al. 2008). Two studies measured problem gambling at baseline (Taylor and Hillyard 2009; Turner et al. 2008), but failed to include this in post-test assessment as a primary outcome measure.

Moreover, only four out of the 19 studies assessed follow-up outcomes at the six-month post-intervention interval, or beyond (Donati et al. 2014; Ferland et al. 2005; Gaboury and Ladouceur 1993; Lupu and Lupu 2013). Impressively, Lupu and Lupu (2013) assessed intervention effects over three, six, nine, and 12 months; however, they did not take the opportunity to evaluate gambling behaviour at any of these time points. Seven studies had no follow-up assessment at all (Ferland et al. 2002; Ladouceur et al. 2003, 2004; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Taylor and Hillyard 2009; Todirita and Lupu 2013). Although the absence of follow-up assessment is less problematic when the aim is to measure cognitive change, post-test-only assessment provides no indication of the permanence of such cognitive changes, or of whether they translate into any behavioural changes over time.

Notably, most studies used a cluster randomised control approach, randomly allocating schools (Ferland et al. 2005; Ladouceur et al. 2003, 2004; Turner et al. 2008; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010) or classes (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2002; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Lupu and Lupu 2013; Todirita and Lupu 2013) as opposed to individual students. This is particularly important when administering and evaluating interventions among youth because adolescents’ attitudes toward gambling are strongly influenced by the opinions and behaviours of their peers (Hanss et al. 2014). Moreover, when interventions are delivered to an entire grade cohort, adolescents are likely to be of the same age, ensuring long-term studies are especially sensitive to crucial changes in development (Slutske 2007).

However, class allocation can confound the observed effects. This is often because students from control classes are likely to have peers in intervention classes with whom they share thoughts, ideas, and newly acquired knowledge. No study provided information on integrity checks, so it is unclear whether the same intervention was applied consistently across groups. Only three studies varied from a randomised control design: Ladouceur et al. (2003) and Turner et al. (2008) did not randomly allocate participants to their controlled study, and Taylor and Hillyard (2009) did not use a control in their pre-post design.

Measurement Instruments

Of the seven studies that measured gambling problems at baseline, the majority reported reasonably high levels of problematic gambling among youth (see Table 1) (Canale et al. 2016; Gaboury and Ladouceur 1993; Taylor and Hillyard 2009; Turner et al. 2008; Williams 2002; Davis 2003; Williams et al. 2004, 2010). However, the distribution of problematic gambling did not appear commensurate with real-world effects. Among those who did gamble, relatively small amounts of money were wagered (Canale et al. 2016; Williams 2002; Williams et al. 2010). Although there was no breakdown of the amount of money spent by problem gamblers, the level of harm experienced by those categorised as ‘problem gamblers’ remains questionable. Furthermore, low baseline amounts of money wagered were reported on average. This makes it difficult to detect and interpret reductions in average expenditure over time. For example, Canale et al. (2016) reported that, on average, gambling expenditure was less than 10 Euros per month. Similarly, Williams (2002) reported a median loss of just $10 over 3 months, and only 4 % of Williams et al.’s (2010) sample reported losing more than $51 in the past month on gambling.

Additionally, of the five studies that administered the DSM-IV-J/MR-J (Fisher 1992, 2000) or SOGS-RA (Winters et al. 1993), three did not take into consideration the 12-month timeframe of these measures, which are not appropriate for re-test intervals of 6 months or less (Turner et al. 2008; Williams et al. 2004, 2010). With a lapse of <6 months, one would not expect to see changes in such problem gambling measures from baseline to follow-up. Notably, Donati et al. (2014) and Canale et al. (2016) modified the SOGS-RA to reflect the brevity of their follow-up (6 months and 1 month, respectively). However, given that both measures assess gambling problems over a 12-month timeframe, it is questionable whether the instruments are sensitive enough to detect significant differences between baseline and follow-up scores over shorter periods, even when their timeframe is modified.

There were also issues with the classification of participants’ gambling status. In addition to the straightforward numerical scoring of the SOGS-RA, known as the narrow criteria, the SOGS-RA is commonly used in conjunction with gambling frequency to produce an overall level of gambling ‘severity’, referred to as the broad criteria (Winters et al. 1993, 1995). Donati et al. (2014) applied broad criteria to their sample in order to categorise them into two groups: non-problem gamblers, and at-risk and problem gamblers (ARPGs). However, in this study, ARPGs included adolescents who gambled less than weekly and obtained a SOGS-RA score of one or more, whereas the original scale criteria require a SOGS-RA score of at least two, not one, resulting in a large overrepresentation of ARPGs.

Similarly, both Donati et al. (2014) and Canale et al. (2016) misclassified non-gamblers as non-problem or non-frequent gamblers, respectively. While it is unclear how many participants were misclassified in Canale et al.’s study, nearly one-quarter (23.18 %) of the non-problem gambling group in Donati et al.’s study were in fact non-gamblers. Consequently, one must cautiously interpret any results that indicate differences between gambling groups in these studies as such groups are not truly representative of recreational gamblers.

The range of challenges and confounds related to the evaluation of programs includes reliance on self-reported expenditure data. There are two issues. The first pertains to the way in which questions are phrased, as gamblers tend to differ in the way they calculate their expenditure (Blaszczynski et al. 1997, 2006, 2008; Wood and Williams 2007). The second relates to the fidelity of responses. Adolescents (and adults) tend to overestimate wins and underestimate losses (Braverman et al. 2014; Wood and Williams 2007). For example, the data reported by Davis (2003) and Williams et al. (2010) suggested students won more often than they lost. Given the significant house edge inherent in commercial gambling, it is doubtful that student responses were valid and reliable.

Of the nine studies that evaluated gambling behaviour, only four explicitly operationalised gambling behaviour as involving the wagering of money (Ferland et al. 2005; Walther et al. 2013; Williams 2002; Williams et al. 2010). Without such a definition, adolescents may be inclined to positively report ‘betting’ on various activities, such as games of skill, sport, and cards, without ever having risked any actual money (e.g. “I bet you can’t make this shot”). Further, it is possible that adolescents, having such low disposable income (if any), wagered items of value such as food, clothing, or jewellery, rather than money. None of the studies asked if youth were gambling items of value instead of money.

Statistical Analyses and Interpretation of Results

There were concerns over the method of analyses and interpretation of results regarding statistically significant intervention effects. Williams (2002) reported reductions in gambling frequency and expenditure in their intervention group; however, this occurred within the intervention group only from baseline to follow-up, and not relative to the control group. Similarly, Williams et al. (2010) reported significantly fewer self-reported problem gamblers in the intervention and booster groups compared to the control group at follow-up assessment. However, there was no change in self-reported problem gambling within any of the groups over time; thus, such a between-groups comparison is an unreliable marker of true intervention effects. The lack of a statistically significant interaction may be due to the small numbers of problem gamblers in each group (between 7 and 35) leading to low statistical power. Indeed, the standard and booster intervention groups showed a 77 and 50 % reduction in self-reported problem gamblers from baseline to follow-up, respectively, while the control group saw a 150 % increase in self-reported problem gambling.
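The distinction drawn above between a within-group change, a between-groups difference at follow-up, and a change relative to control can be made concrete with a small sketch. It computes a percentage change and a simple difference-in-differences of problem gambling rates. All numbers are hypothetical and are not taken from any reviewed study, and the sketch only describes the contrast; it does not test it for statistical significance.

```python
def percent_change(baseline, follow_up):
    """Percentage change from baseline to follow-up (negative = reduction)."""
    return 100.0 * (follow_up - baseline) / baseline


def difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Change in the intervention group minus change in the control group."""
    return (treat_post - treat_pre) - (ctrl_post - ctrl_pre)


# Hypothetical counts of problem gamblers: small counts give large percentages.
print(percent_change(20, 10))   # a halving
print(percent_change(10, 25))   # a 150 % increase

# Hypothetical rates. Case A: the intervention group improves relative to control.
case_a = difference_in_differences(0.06, 0.04, 0.05, 0.05)
# Case B: the groups differ at follow-up (0.04 vs 0.06) but neither changed,
# so the follow-up difference cannot be attributed to the intervention.
case_b = difference_in_differences(0.04, 0.04, 0.06, 0.06)
print(round(case_a, 3), round(case_b, 3))
```

Case B mirrors the concern raised about between-groups comparisons at follow-up when no group changed over time, and the percentage examples show why large relative changes can arise from very small numbers of problem gamblers.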

Williams et al.’s (2004) reporting of results was inconsistent with the interpretation of those results in their discussion. The results section of the paper reports an increase in gamblers in the intervention group; however, this is interpreted as a reduction in the discussion section. Such contradictory claims confound interpretation of the intervention’s effectiveness.

Donati et al. (2014) did not compare follow-up data to baseline data when determining the long-term efficacy of their intervention. The authors argued that because there were significant improvements in the intervention group from baseline (Time 1) to post-test (Time 2), and no significant deterioration between post-test (Time 2) and follow-up (Time 3), that this indicates permanence of the intervention’s effects. However, without verifying that follow-up (Time 3) scores were significantly different from baseline (Time 1) scores, such conclusions are questionable. Indeed, subsequent non-significant deteriorations were observed between post-test and follow-up in this intervention group. Further, while the authors reported a significant decrease in the number of gamblers and problem gamblers from baseline to follow-up in the intervention group, there was no statistical comparison to the control group. Without taking into consideration any between-group effects, it is difficult to detect if this decrease was truly due to the intervention in question.

Program Effects

Knowledge, Misperceptions, and Attitudes

Drawing conclusions about program effectiveness is difficult given challenges that are not easily controlled in research design. Nonetheless, the main indicator of program effectiveness is long-term behavioural change. However, most studies did not measure the effects of interventions in terms of behavioural indicators capable of identifying reduced problem gambling among adolescents.

Overall, the effectiveness of a program is generally suggested by observed measurement changes in cognitive variables. Programs were effective in reducing common misconceptions and fallacies about gambling, increasing knowledge of gambling forms and odds, highlighting differences between chance and skill, and creating more negative attitudes toward gambling.

Some studies also demonstrated improvements in more specific skills such as coping, awareness and self-monitoring, attitudes toward and dialogue about peer and familial gambling, problem solving and decision-making. However, from these results it is not possible to determine if such cognitive improvements prevent the development of future gambling problems. Additionally, any improvements, if present, may deteriorate in the long term (Donati et al. 2014; Ferland et al. 2005; Lupu and Lupu 2013). Given only one study measured outcomes at 12 months, it is difficult to determine if such deterioration effects are unique to these programs or if they are likely to be observed in all preventive efforts.

Gambling Behaviour

Behavioural outcomes were less clear. Presumably, the justification for including cognitive measures in program evaluations is that such changes in cognition are expected to produce, or at least highly correlate with, changes in behavioural outcomes. Thus, one would assume that if an intervention were effective in producing cognitive improvements, it would also be effective in producing behavioural improvements. Although four studies that measured behavioural outcomes observed improvements in knowledge, attitudes, and cognitive errors (Ferland et al. 2005; Gaboury and Ladouceur 1993; Turner et al. 2008; Williams 2002; Davis 2003), they did not detect consequent behavioural changes. It is possible that significant improvements on measures such as the Random Events Knowledge Test (Turner and Liu 1999), and measures of gambling knowledge may be due to rehearsal effects, rather than genuine cognitive development. Additionally, cognitive changes observed at post-test have been shown to decrease over time, further suggesting immediate improvements may be a result of recency effects (Donati et al. 2014; Ferland et al. 2005; Lupu and Lupu 2013). It is also possible that the structural constraints pre-empted the observation of behaviour change within a short study period.

Theoretical conceptualisations for mechanisms of change were also unclear. Canale et al. (2016) attributed much of the success of their intervention to personalised feedback. However, both control and intervention groups were administered personalised feedback, while the intervention group also completed additional online training modules. Thus, it is more appropriate to attribute any success to the online modules, which tended to focus more on randomness, fallacies, and negative mathematical expectation. Indeed, as described by the authors, personalised feedback may have had a detrimental effect on students who gambled regularly, as those in the control condition reported significantly more unrealistic attitudes at the follow-up compared to their baseline assessment. Similarly, the Romanian studies (Lupu and Lupu 2013; Todirita and Lupu 2013) compared Rational Emotive Education (REE) combined with the Amazing Chateau software developed by the International Centre for Youth Gambling Problems and High-Risk Behaviours (ICYGPHRB 2004). The software combined with REE was more effective than REE alone and a control. However, it is not known what component of this combination is effective, i.e. if the software alone is more effective than REE and a control, thus rendering the REE an unnecessary component.

Measures of problem gambling were primarily used as proxies for harm. Five studies used problem gambling measures (DSM-IV-MR-J, SOGS-RA) as their primary outcome variable (Canale et al. 2016; Donati et al. 2014; Turner et al. 2008; Williams et al. 2004, 2010); however, many used other behavioural variables to measure intervention outcomes, such as frequency, duration, and expenditure or bet size (Canale et al. 2016; Ferland et al. 2005; Gaboury and Ladouceur 1993; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010). Given gambling expenditure is generally low among adolescents, and abstinence is not necessarily an adequate or realistic outcome, such measures by themselves may not be appropriate indicators of efficacy. Thus, it is important that measures of gambling-related harm are used as markers of efficacy in harm reduction and prevention programs.

Program Content and Delivery

Content

All programs targeted known cognitive aspects of problem gambling, including gambling fallacies and misconceptions. Thirteen programs attempted to teach students about the unprofitability of gambling (house edge, odds) (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2002; Gaboury and Ladouceur 1993; Ladouceur et al. 2004; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Lupu and Lupu 2013; Todirita and Lupu 2013; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010), and 11 covered components on randomness in gambling (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2002, 2005; Ladouceur et al. 2003, 2004; Lavoie and Ladouceur 2004; Lupu and Lupu 2013; Todirita and Lupu 2013; Turner et al. 2008a, b). Raising awareness of the signs, symptoms, and consequences of problem gambling was also commonly found (11 out of 19) (Canale et al. 2016; Ferland et al. 2005; Gaboury and Ladouceur 1993; Ladouceur et al. 2005; Lemaire et al. 2004; Taylor and Hillyard 2009; Turner et al. 2008; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010); however, more specific skills such as coping, problem-solving and decision-making were less common (6 out of 19) (Ferland et al. 2005; Gaboury and Ladouceur 1993; Turner et al. 2008; Williams 2002; Davis 2003; Williams et al. 2004, 2010).

Most studies did not provide a rationale for developing the intervention program, or used programs already developed by third parties. Williams (2002) and Williams et al. (2010) explicitly stated that their program development followed a comprehensive and systematic process that was informed by a thorough review of the educational literature. This was to ensure that their content would be engaging and relevant to youth.

Dosage

Programs varied considerably in dosage and total exposure (20–500 min per program). Generally, studies that evaluated behavioural outcomes tended to implement more comprehensive programs and evaluate them over a longer period of time than those that did not measure behavioural outcomes. Of the ten studies that did not measure behavioural outcomes, only three programs were delivered over more than one session, or integrated into the school curriculum (Lupu and Lupu 2013; Todirita and Lupu 2013; Turner et al. 2008). On the other hand, seven out of the nine studies that measured behavioural outcomes involved programs that lasted more than one session (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2005; Gaboury and Ladouceur 1993; Williams 2002; Davis 2003; Williams et al. 2004, 2010).

More comprehensive programs, and those with booster sessions, tended to perform better than their brief counterparts on cognitive and behavioural measures (Ferland et al. 2002; Williams et al. 2010, respectively). Brief interventions on their own may not be sufficient to produce lasting changes, and larger dosages might assist youth to fully understand complex concepts such as randomness and negative expectation. However, the absence of long-term follow-up precludes assessment of a dose-response relationship between the duration of programs and their outcomes and longevity of effects.

Delivery Mode

Most programs comprised a combination of multi-media tools (videos, online modules) and classroom discussions and activities. Only three programs did not involve some form of multi-media (Ladouceur et al. 2003; Turner et al. 2008; Walther et al. 2013), and only five were solely multi-media programs (no teacher intervention) (Canale et al. 2016; Ladouceur et al. 2004; Lemaire et al. 2004; Lupu and Lupu 2013; Todirita and Lupu 2013). Video-based and online programs provide an appropriate alternative to teacher-based education programs. Internet-based interventions for gambling are cost-effective, convenient, and especially suited to empirical evaluation (Gainsbury and Blaszczynski 2011). Moreover, and in line with Nation et al.’s (2003) recommendations, they are relevant and engaging for youth (Monaghan and Wood 2010).

Almost all programs were delivered to class cohorts (Canale et al. 2016; Donati et al. 2014; Ferland et al. 2002; Gaboury and Ladouceur 1993; Ladouceur et al. 2003, 2004; Lavoie and Ladouceur 2004; Lemaire et al. 2004; Lupu and Lupu 2013; Todirita and Lupu 2013) or school cohorts (Ferland et al. 2005; Turner et al. 2008; Walther et al. 2013; Williams 2002; Davis 2003; Williams et al. 2004, 2010). School-wide distribution is preferable, with two primary advantages: (1) student peer groups are targeted simultaneously, and (2) control groups are distinct from intervention groups. Where allocation is carried out by class, control and intervention participants are likely to engage and share information, confounding true control conditions.

Only one study assessed the impact of educators on outcome variables, finding that exercises delivered by a gambling specialist were more effective in reducing erroneous perceptions than those delivered by a teacher (Ladouceur et al. 2003). Perhaps counter-intuitively, this suggests teachers may not be the most appropriate people to deliver such programs.

Discussion

Methodological Considerations

One of the difficulties in measuring behavioural change in adolescent gambling is that relatively small numbers of youth gamble at problematic levels, and therefore, large sample sizes are needed to detect small but significant reductions in gambling problems. Additionally, many programs are not designed to promote abstinence, so large reductions in gambling frequency are not necessarily anticipated.
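The sample-size point can be illustrated with a standard two-proportion power calculation. The sketch below uses the usual normal-approximation formula for comparing two independent proportions; the prevalence figures are hypothetical values chosen for illustration and are not taken from any reviewed study. It also assumes individual randomisation, so allocation by class or school (clustering) would push the required numbers higher.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p_control, p_intervention, alpha=0.05, power=0.80):
    """Approximate participants needed per arm to detect a difference
    between two proportions (two-sided test, normal approximation)."""
    if p_control == p_intervention:
        raise ValueError("The two proportions must differ.")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = z.inv_cdf(power)           # quantile corresponding to desired power
    p_bar = (p_control + p_intervention) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(
            p_control * (1 - p_control)
            + p_intervention * (1 - p_intervention)
        )
    ) ** 2
    return ceil(numerator / (p_control - p_intervention) ** 2)


# Hypothetical scenario A: a rare outcome (problem gambling) halved from 4% to 2%.
n_rare = n_per_group(0.04, 0.02)

# Hypothetical scenario B: a common outcome (any gambling) halved from 40% to 20%.
n_common = n_per_group(0.40, 0.20)

print(f"Rare outcome:   {n_rare} per group")
print(f"Common outcome: {n_common} per group")
```

Under these assumed figures, halving a rare outcome requires more than a thousand participants per arm, whereas the same relative reduction in a common outcome requires fewer than a hundred. This is the practical reason why small school samples struggle to show behavioural effects on problem gambling.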

Importantly, many of the studies demonstrated that changes in knowledge, beliefs and attitudes do not necessarily translate into changes in behaviour (e.g. Ferland et al. 2005; Gaboury and Ladouceur 1993; Turner et al. 2008). This is likely the result of two factors: inaccurate measurement of problematic gambling in adolescence, and/or a lack of theoretical conceptualisation in program design. First, it is important that studies do not use cognitive measures as proxies for harm, as these may represent mechanisms for problematic behaviour (process) but are not conceptually the same as the negative consequences themselves (harm). For example, the fallacy that machines run in cycles is a mechanism by which gamblers may be persuaded to spend beyond their affordable means (process), but it is not the consequent harm (money lost). As previously mentioned, adolescent measures of problem gambling have come under considerable criticism (Derevensky et al. 2003; Jacques and Ladouceur 2003; Stinchfield 2010), and, as in the adult gambling literature, there is a suggested need to move away from diagnostic criteria of gambling pathology and toward measures of gambling-related harm (Blaszczynski et al. 2008; Currie et al. 2009; Langham et al. 2016; Neal et al. 2005). Second, even with improvements in measurement instruments, a program that is designed from sound theory increases the likelihood of observing behavioural change. In the absence of a theoretical conceptualisation regarding mechanisms for change, designing preventive interventions proceeds by trial and error.

Confidence in observed program effects increases with longer follow-up periods. It is preferable for studies to evaluate behavioural outcomes over a period of 6 months or more, as there appears to be evidence of deteriorating effects over time (Donati et al. 2014; Ferland et al. 2005; Lupu and Lupu 2013). Observed changes at brief follow-up intervals do not necessarily indicate lasting positive effects on future gambling behaviour. Additionally, problem gambling measures (and measures of harm) should be matched to the follow-up period. The SOGS-RA and DSM-IV were developed as measures of gambling problems over the previous 12 months; as such, it is not adequate to simply adjust the timeframe of these measures to suit shorter assessment periods.

Program Content and Delivery

Second to the methodological issues faced in the evaluation of gambling education programs, specific attention must be paid to their content and mode of delivery. In practice, it would be more economical for existing teachers to adapt and deliver programs to their students via some form of program manual or teaching kit. However, Ladouceur et al. (2003) demonstrated that gambling initiatives delivered by gambling specialists were significantly more effective at reducing cognitive errors among students than those delivered by their teachers. Nevertheless, it does not seem feasible for schools to enlist gambling psychologists to deliver education programs, especially those that span multiple sessions. Online programs or modules may provide a promising compromise. Canale et al. (2016) demonstrated some efficacy in reducing gambling problems among high school students using a web-based intervention, despite its methodological flaws, and the Amazing Chateau computer program and Lucky video produced encouraging cognitive improvements (Ladouceur et al. 2004; Lupu and Lupu 2013; Todirita and Lupu 2013). The benefits are many: web-based interventions are cost-effective, consistent, unbiased, and socio-culturally relevant to youth (Gainsbury and Blaszczynski 2011; Monaghan and Wood 2010).

To date, many of the programs implemented in schools and reviewed in this paper focus on raising awareness of problem gambling, its signs, symptoms and consequences, available treatment services, fallacies and cognitive errors, and superficial explanations of terms such as probability, odds, and house edge. Few programs emphasised learning complex mathematical concepts such as randomness and expected value. Only four of the nine studies that evaluated behavioural outcomes sought to teach students about randomness. There may be hesitation toward including complex mathematical concepts in gambling education programs so as not to overwhelm students. Nevertheless, these concepts are crucial to understanding the unprofitability and unpredictability of commercial gambling products. Promoting a negative view of gambling and its associated consequences is not sufficient to prevent gambling problems.
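As an illustration of what teaching expected value might involve, the sketch below works through a single-number bet in European roulette (37 pockets, a winning bet paying 35 to 1). The example is ours and is not drawn from any of the reviewed programs; the function name `expected_value` is hypothetical.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Return the expected net return per unit staked.

    `outcomes` is a list of (probability, net_payoff) pairs whose
    probabilities must sum to exactly 1.
    """
    outcomes = list(outcomes)
    if sum(p for p, _ in outcomes) != 1:
        raise ValueError("Probabilities must sum to 1.")
    return sum(p * payoff for p, payoff in outcomes)


# Single-number bet, European roulette: win 35 units with probability 1/37,
# lose the 1-unit stake with probability 36/37.
single_number_bet = [
    (Fraction(1, 37), 35),
    (Fraction(36, 37), -1),
]

ev = expected_value(single_number_bet)
house_edge = -ev

print(f"Expected value per unit staked: {ev} (about {float(ev):.3f})")
print(f"House edge: {float(house_edge):.1%}")
```

The expected value is negative (minus 1/37 of each unit staked), so although any individual spin is unpredictable, losses become increasingly likely the longer play continues. This links the two concepts the paragraph above identifies: randomness in the short term and unprofitability in the long term.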

Limitations

The current systematic review was limited foremost by the absence of a meta-analysis. Due to the variation in outcome measures, samples, and analyses, it was not feasible to calculate comparable measures of effect size. Two studies were excluded despite meeting all other eligibility criteria as they were not available in English, and no funding was available for a translator. This may have limited the representativeness of the reported findings. Further, there was a genuine risk of publication bias in the reviewed studies. Given the large number of programs currently available in schools, it is likely that others have been evaluated and not published due to non-significant findings. That said, this review followed a rigorous search procedure in an attempt to mitigate such biases, and every effort was made to include all possible relevant studies. Given the broad scope of this review, we were able to provide recommendations for the design and evaluation of future programs, based on the available evidence.

Recommendations

To prevent gambling problems, programs should be implemented universally, as early as possible (age 10 onward) to prevent misconceptions from developing. It is logical that programs orient their efforts toward preventing gambling problems from occurring, rather than preventing gambling, or treating adolescents identified as ‘problem gamblers’. Programs should focus primarily on teaching mathematical principles that account for the long-term unprofitability experienced by users, such as expected value. Where possible, programs that are staggered over several sessions will be better suited to the needs of complex content. It is important programs are relevant to youth in terms of delivery and content; that is, multi-media platforms are preferable, and examples within the program should help to connect new knowledge with existing knowledge and familiar experiences (most adolescents have not gambled inside a casino, but might be familiar with footy tipping). Evaluations should measure reductions in harm, not frequency or expenditure (as these are typically very low, and unreliable), and conduct follow-up assessments into adulthood (or time of legal age).

Conclusion

Given the prevalence of gambling among adolescents, few gambling education programs for adolescents have been evaluated. No doubt the number of programs currently implemented in schools far exceeds those reviewed in this paper. There is a discord between current practice and evidence-based practice. The efficacy of the reviewed programs remains unclear due to notable methodological flaws including measurement issues, small numbers of problem gamblers, and brief follow-up assessments. Further, improvements could be made to the content and design of programs so that they have a greater likelihood of producing behavioural outcomes. Strong theoretical conceptualisation in designing programs is essential to boost intervention effects and meet the objective of reducing or preventing gambling problems among adolescents.

References

Blaszczynski, A., Dumlao, V., & Lange, M. (1997). How much do you spend gambling? Ambiguities in survey questionnaire items. Journal of Gambling Studies, 13(3), 237–252. doi:10.1023/A:1024931316358.

Blaszczynski, A., Ladouceur, R., Goulet, A., & Savard, C. (2006). How much do you spend gambling? Ambiguities in questionnaire items assessing expenditure. International Gambling Studies, 6(2), 123–128. doi:10.1080/14459790600927738.

Blaszczynski, A., Ladouceur, R., Goulet, A., & Savard, C. (2008a). Differences in monthly versus daily evaluations of money spent on gambling and calculation strategies. Journal of Gambling Issues, 21, 98–105. doi:10.4309/jgi.2008.21.9.

Blaszczynski, A., Ladouceur, R., & Moodie, C. (2008b). The Sydney Laval universities gambling screen: Preliminary data. Addiction Research & Theory, 16(4), 401–411. doi:10.1080/16066350701699031.

Braverman, J., Tom, M., & Shaffer, H. (2014). Accuracy of self-reported versus actual online gambling wins and losses. Psychological Assessment, 26(3), 865–877. doi:10.1037/a0036428.

Canale, N., Vieno, A., Griffiths, M. D., Marino, C., Chieco, F., Disperati, F., et al. (2016). The efficacy of a web-based gambling intervention program for high school students: A preliminary randomized study. Computers in Human Behavior, 55, 946–954. doi:10.1016/j.chb.2015.10.012.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112(1), 155–159. doi:10.1037/0033-2909.112.1.155.

Currie, S. R., Miller, N., Hodgins, D. C., & Wang, J. (2009). Defining a threshold of harm from gambling for population health surveillance research. International Gambling Studies, 9(1), 19–38. doi:10.1080/14459790802652209.

Davis, R. M. (2003). Prevention of problem gambling: A school-based intervention (M.Sc.). University of Calgary, Canada. Retrieved from http://search.proquest.com.ezproxy1.library.usyd.edu.au/docview/305339641/abstract/1ADA6FA2BAB34CD8PQ/1.

Delfabbro, P., King, D., & Griffiths, M. D. (2014). From adolescent to adult gambling: An analysis of longitudinal gambling patterns in South Australia. Journal of Gambling Studies, 30(3), 547–563. doi:10.1007/s10899-013-9384-7.

Delfabbro, P., Lahn, J., & Grabosky, P. (2005). Further evidence concerning the prevalence of adolescent gambling and problem gambling in Australia: A study of the ACT. International Gambling Studies, 5(2), 209–228. doi:10.1080/14459790500303469.

Delfabbro, P., Lambos, C., King, D., & Puglies, S. (2009a). Knowledge and beliefs about gambling in Australian secondary school students and their implications for education strategies. Journal of Gambling Studies, 25(4), 523–539. doi:10.1007/s10899-009-9141-0.

Delfabbro, P., Winefield, A. H., & Anderson, S. (2009b). Once a gambler—always a gambler? A longitudinal analysis of gambling patterns in young people making the transition from adolescence to adulthood. International Gambling Studies, 9(2), 151–163. doi:10.1080/14459790902755001.

Derevensky, J. L., & Gupta, R. (2000). Prevalence estimates of adolescent gambling: A comparison of the SOGS-RA, DSM-IV-J, and the GA 20 Questions. Journal of Gambling Studies, 16(2), 227–251. doi:10.1023/A:1009485031719.

Derevensky, J. L., Gupta, R., & Winters, K. (2003). Prevalence rates of youth gambling problems: Are the current rates inflated? Journal of Gambling Studies, 19(4), 405–425. doi:10.1023/A:1026379910094.

Donati, M. A., Primi, C., & Chiesi, F. (2014). Prevention of problematic gambling behavior among adolescents: Testing the efficacy of an integrative intervention. Journal of Gambling Studies, 30(4), 803–818. doi:10.1007/s10899-013-9398-1.

Ferland, F., Ladouceur, R., & Vitaro, F. (2002). Prevention of problem gambling: Modifying misconceptions and increasing knowledge. Journal of Gambling Studies, 18(1), 19–29. doi:10.1023/A:1014528128578.

Ferland, F., Ladouceur, R., & Vitaro, F. (2005). Efficacité d’un programme de prévention des habitudes de jeu chez les jeunes: résultats de l’évaluation pilote. L’Encéphale, 31(4), 427–436. doi:10.1016/S0013-7006(05)82404-2.

Fisher, S. (1992). Measuring pathological gambling in children: The case of fruit machines in the U.K. Journal of Gambling Studies, 8(3), 263–285. doi:10.1007/BF01014653.

Fisher, S. (2000). Developing the DSM-IV criteria to identify adolescent problem gambling in non-clinical populations. Journal of Gambling Studies, 16(2), 253–273. doi:10.1023/A:1009437115789.

Gaboury, A., & Ladouceur, R. (1993). Evaluation of a prevention program for pathological gambling among adolescents. The Journal of Primary Prevention, 14(1), 21–28. doi:10.1007/BF01324653.

Gainsbury, S., & Blaszczynski, A. (2011). Online self-guided interventions for the treatment of problem gambling. International Gambling Studies, 11(3), 289–308. doi:10.1080/14459795.2011.617764.

Griffiths, M., & Barnes, A. (2008). Internet gambling: An online empirical study among student gamblers. International Journal of Mental Health and Addiction, 6(2), 194–204. doi:10.1007/s11469-007-9083-7.

Gupta, R., Nower, L., Derevensky, J. L., Blaszczynski, A., Faregh, N., & Temcheff, C. (2013). Problem gambling in adolescents: An examination of the pathways model. Journal of Gambling Studies, 29(3), 575–588. doi:10.1007/s10899-012-9322-0.

Hanss, D., Mentzoni, R. A., Delfabbro, P., Myrseth, H., & Pallesen, S. (2014). Attitudes toward gambling among adolescents. International Gambling Studies, 14(3), 505–519. doi:10.1080/14459795.2014.969754.

International Centre for Youth Gambling Problems and High-Risk Behaviours. (2004). The amazing chateau [CD-ROM]. Montreal, Quebec: McGill University.

Jacques, C., & Ladouceur, R. (2003). DSM-IV-J criteria: A scoring error that may be modifying the estimates of pathological gambling among youths. Journal of Gambling Studies, 19(4), 427–431. doi:10.1023/A:1026332026933.

Jiménez-Murcia, S., Álvarez-Moya, E. M., Stinchfield, R., Fernández-Aranda, F., Granero, R., Aymamí, N., et al. (2010). Age of onset in pathological gambling: Clinical, therapeutic and personality correlates. Journal of Gambling Studies, 26(2), 235–248. doi:10.1007/s10899-009-9175-3.

Ladouceur, R., Ferland, F., & Fournier, P. (2003). Correction of erroneous perceptions among primary school students regarding the notions of chance and randomness in gambling. American Journal of Health Education, 34(5), 272–277.

Ladouceur, R., Ferland, F., Poulin, C., Vitaro, F., & Wiebe, J. (2005a). Concordance between the SOGS-RA and the DSM-IV criteria for pathological gambling among youth. Psychology of Addictive Behaviors, 19(3), 271–276. doi:10.1037/0893-164X.19.3.271.

Ladouceur, R., Ferland, F., & Vitaro, F. (2004). Prevention of problem gambling: Modifying misconceptions and increasing knowledge among Canadian youths. Journal of Primary Prevention, 25(3), 329–335. doi:10.1023/B:JOPP.0000048024.37066.32.

Ladouceur, R., Ferland, F., Vitaro, F., & Pelletier, O. (2005b). Modifying youths’ perception toward pathological gamblers. Addictive Behaviors, 30(2), 351–354. doi:10.1016/j.addbeh.2004.05.002.

Ladouceur, R., Goulet, A., & Vitaro, F. (2013). Prevention programmes for youth gambling: a review of the empirical evidence. International Gambling Studies, 13(2), 141–159. doi:10.1080/14459795.2012.740496.

Langham, E., Thorne, H., Browne, M., Donaldson, P., Rose, J., & Rockloff, M. (2016). Understanding gambling related harm: A proposed definition, conceptual framework, and taxonomy of harms. BMC Public Health, 16. http://search.proquest.com.ezproxy1.library.usyd.edu.au/docview/1774001169/abstract/5C01E93F73C440FEPQ/1.

Lavoie, M., & Ladouceur, R. (2004). Prevention of gambling among youth: Increasing knowledge and modifying attitudes toward gambling. Journal of Gambling Issues. doi:10.4309/jgi.2004.10.7.

Lemaire, J., de Lima, S., & Patton, D. (2004). It’s your lucky day: Program evaluation (pp. 1–61). Addictions Foundation of Manitoba.

Lupu, I. R., & Lupu, V. (2013). Gambling prevention program for teenagers. Journal of Cognitive and Behavioral Psychotherapies, 13(2a), 575–584.

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), e1000097. doi:10.1371/journal.pmed.1000097.

Monaghan, S., & Wood, R. T. A. (2010). Internet-based interventions for youth dealing with gambling problems. International Journal of Adolescent Medicine and Health, 22(1), 113–128.

Nation, M., Crusto, C., Wandersman, A., Kumpfer, K. L., Seybolt, D., Morrissey-Kane, E., et al. (2003). What works in prevention: Principles of effective prevention programs. American Psychologist, 58(6–7), 449–456. doi:10.1037/0003-066X.58.6-7.449.

National Collaborating Centre for Methods and Tools. (2008). Quality assessment tool for quantitative studies. Hamilton, ON: McMaster University. (Updated 13 April, 2010). http://www.nccmt.ca/resources/search/14.

Neal, P., Delfabbro, P. H., & O’Neill, M. (2005). Problem gambling and harm: working towards a national definition. Melbourne, Australia: National Ministerial Council on Gambling, South Australian Centre for Economic Studies, & the Department of Psychology, University of Adelaide. https://www.adelaide.edu.au/saces/gambling/publications/ProblemGamblingAndHarmTowardNationalDefinition.pdf.

Olason, D. T., Kristjansdottir, E., Einarsdottir, H., Haraldsson, H., Bjarnason, G., & Derevensky, J. L. (2011). Internet gambling and problem gambling among 13 to 18 year old adolescents in Iceland. International Journal of Mental Health and Addiction, 9(3), 257–263. doi:10.1007/s11469-010-9280-7.

Purdie, N., Matters, G., Hillman, K., Ozolins, C., & Millwood, P. (2011). Gambling and young people in Australia. Report prepared for Gambling Research Australia. http://www.gamblingresearch.org.au/home/research/gra+research+reports/gambling+and+young+people+in+australia+%282011%29.

Slutske, W. S. (2007). Longitudinal studies of gambling behaviour. In G. Smith, D. C. Hodgins, & R. J. Williams (Eds.), Research and measurement issues in gambling studies. Oxford: Academic Press: Elsevier.

Slutske, W. S., Jackson, K. M., & Sher, K. J. (2003). The natural history of problem gambling from age 18 to 29. Journal of Abnormal Psychology, 112(2), 263–274. doi:10.1037/0021-843X.112.2.263.

Splevins, K., Mireskandari, S., Clayton, K., & Blaszczynski, A. (2010). Prevalence of adolescent problem gambling, related harms and help-seeking behaviours among an Australian population. Journal of Gambling Studies, 26(2), 189–204. doi:10.1007/s10899-009-9169-1.

Sroufe, L. A., Coffino, B., & Carlson, E. A. (2010). Conceptualizing the role of early experience: Lessons from the Minnesota longitudinal study. Developmental Review, 30(1), 36–51. doi:10.1016/j.dr.2009.12.002.

Stinchfield, R. (2010). A critical review of adolescent problem gambling assessment instruments. International Journal of Adolescent Medicine and Health, 22(1), 77–93. http://www.ncbi.nlm.nih.gov/pubmed/20491419.

Taylor, L. M., & Hillyard, P. (2009). Gambling awareness for youth: An analysis of the “Don’t Gamble Away our Future™” program. International Journal of Mental Health and Addiction, 7(1), 250–261. doi:10.1007/s11469-008-9184-y.

Thomas, B. H., Ciliska, D., Dobbins, M., & Micucci, S. (2004). A process for systematically reviewing the literature: Providing the research evidence for public health nursing interventions. Worldviews on Evidence-Based Nursing, 1(3), 176–184. doi:10.1111/j.1524-475X.2004.04006.x.

Todirita, I. R., & Lupu, V. (2013). Gambling prevention program among children. Journal of Gambling Studies, 29(1), 161–169.

Turner, N., & Liu, E. (1999). The naive human concept of random events. Presented at the 1999 Conference of the American Psychological Association, Boston, MA.

Turner, N., Macdonald, J., Bartoshuk, M., & Zangeneh, M. (2008a). The evaluation of a 1-h prevention program for problem gambling. International Journal of Mental Health and Addiction, 6(2), 238–243. doi:10.1007/s11469-007-9121-5.

Turner, N., Macdonald, J., & Somerset, M. (2008b). Life skills, mathematical reasoning and critical thinking: A curriculum for the prevention of problem gambling. Journal of Gambling Studies, 24(3), 367–380. doi:10.1007/s10899-007-9085-1.

Walther, B., Hanewinkel, R., & Morgenstern, M. (2013). Short-term effects of a school-based program on gambling prevention in adolescents. Journal of Adolescent Health, 52(5), 599–605. doi:10.1016/j.jadohealth.2012.11.009.

Welte, J. W., Barnes, G. M., Tidwell, M.-C. O., & Hoffman, J. H. (2008). The prevalence of problem gambling among U.S. adolescents and young adults: Results from a national survey. Journal of Gambling Studies, 24(2), 119–133. doi:10.1007/s10899-007-9086-0.

Williams, R. J. (2002). Prevention of problem gambling: A school-based intervention. Alberta: Canada: Alberta Gaming Research Institute. https://www.uleth.ca/dspace/handle/10133/370.

Williams, R. J., Connolly, D., Wood, R., Currie, S., & Davis, M. (2004). Program findings that inform curriculum development for the prevention of problem gambling. Gambling Research: Journal of the National Association for Gambling Studies (Australia), 16(1), 47–69. https://www.uleth.ca/dspace/handle/10133/372.

Williams, R. J., Wood, R. T., & Currie, S. R. (2010). Stacked Deck: An effective, school-based program for the prevention of problem gambling. The Journal of Primary Prevention, 31(3), 109–125. doi:10.1007/s10935-010-0212-x.

Winters, K. C., Stinchfield, R. D., & Fulkerson, J. (1993). Toward the development of an adolescent gambling problem severity scale. Journal of Gambling Studies, 9(1), 63–84. doi:10.1007/BF01019925.

Winters, K. C., Stinchfield, R. D., & Kim, L. G. (1995). Monitoring adolescent gambling in Minnesota. Journal of Gambling Studies, 11(2), 165–183. doi:10.1007/BF02107113.

Wood, R. T., & Williams, R. J. (2007). “How much money do you spend on gambling?” The comparative validity of question wordings used to assess gambling expenditure. International Journal of Social Research Methodology, 10(1), 63–77. doi:10.1080/13645570701211209.

Zorzela, L., Loke, Y. K., Ioannidis, J. P., Golder, S., Santaguida, P., Altman, D. G., et al. (2016). PRISMA harms checklist: Improving harms reporting in systematic reviews. BMJ, 352, i157. doi:10.1136/bmj.i157.

Acknowledgments

The authors would also like to thank Melanie Hartmann and Lanhowe Chen for their assistance with inter-rater reliability checks for inclusion of studies and quality assessment measures, respectively.

Funding

The authors would like to acknowledge DOOLEYS Lidcombe for their financial support to conduct a preliminary scoping report on gambling education programs for adolescents.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Keen, B., Blaszczynski, A. & Anjoul, F. Systematic Review of Empirically Evaluated School-Based Gambling Education Programs. J Gambl Stud 33, 301–325 (2017). https://doi.org/10.1007/s10899-016-9641-7

DOI: https://doi.org/10.1007/s10899-016-9641-7