Abstract

In real life applications, optimization problems with more than one objective function are often of interest. Next to handling multiple objective functions, another challenge is to deal with uncertainties concerning the realization of the decision variables. One approach to handle these uncertainties is to consider the objectives as set-valued functions. Hence, the image of one decision variable is a whole set, which includes all possible outcomes of this decision variable. We choose a robust approach and thus these sets have to be compared using the so-called upper-type less order relation. We propose a numerical method to calculate a covering of the set of optimal solutions of such an uncertain multiobjective optimization problem. We use a branch-and-bound approach and lower and upper bound sets for being able to compare the arising sets. The calculation of these lower and upper bound sets uses techniques known from global optimization, as convex underestimators, as well as techniques used in convex multiobjective optimization as outer approximation techniques. We also give first numerical results for this algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In multiobjective optimization one considers optimization problems with more than one objective function. This is already a challenge. Dealing with multiobjective optimization for real life problems can lead to an additional difficulty. Often, the calculated optimal solutions cannot be used precisely, because they can only be realized within a certain accuracy. This is for instance the case, when a magnet system for a measurement technique for electrically conducting fluids should be constructed, see [10]. There, the optimal direction of the magnetization of each magnet and the optimal magnetization have to be determined such that the so-called Lorentz force is maximized and the weight of the system is minimized. In practice, an (optimally) chosen magnetic direction cannot be realized in any arbitrary accuracy, as magnets can only be produced within some tolerances. Therefore, decision uncertainty has to be taken into account. Another example is the growing media mixing problem for a plant nursery, see [10, 21, 22]. There, a mixture of peat and compost for the growing media has to be determined, which can also not be mixed exactly by workers.

Also, in case of such uncertainties in the realization of variables, the actual realized solutions should lead to near-optimal values. This kind of uncertainty in optimization is called decision uncertainty, which should be distinguished from parameter uncertainty, because the inaccuracies are caused by the decision variables, [10]. Parameter uncertainty was considered in several works, for example in [3] in the single-objective case, or in [11, 12, 24] for the multiobjective case.

Decision uncertainty for single-objective optimization problems has been handled with minmax robustness under different names like ‘robust optimization with implementation error’, e.g. in [4], or robust regularization, e.g. in [25]. In multiobjective optimization there are also different approaches to treat uncertainties, for example sensitivity analysis, [2], or evaluating the mean or integral of each objective over the set of possible values of a solution, [7], or adding a robustness measure as a new objective function, [33]. We want to follow the so-called worst case robustness approach as it was done in [10], see also [20].

In the worst case approach one considers all possible outcomes, which leads to sets which have to be compared. In the single-objective case these sets are just intervals, which can be compared much easier numerically. In case of a multiobjective optimization problem with m objectives we have to compare subsets of \(\mathbb {R}^m\). This means, we have to solve a specific set-valued optimization problem with a certain set-order relation to handle the uncertainties.

In set-valued optimization different possibilities to compare sets are discussed in the literature. In case of the worst case approach we have to use the upper-type less order relation, see, for instance, [17, 23]. When comparing whole sets one also speaks of the set approach in set-valued optimization. So far, there exists only a limited number of numerical algorithms to solve such set optimization problems. For unconstrained set-valued optimization and a similar order relation, Löhne and Schrage introduced an algorithm in [27], which is applicable for linear problems only. Jahn presented some derivative-free algorithms, see [15], to find one single solution of the whole set of optimal solutions in case the sets which have to be compared are convex. Köbis and Köbis extended the method from [15] to the nonconvex case, i.e., when the sets are nonconvex sets, see [19]. However, all methods aim at finding one single minimal solution only. In [16] for the first time a method for nonconvex sets is presented, which uses discretization to compare sets and which can find many minimal solutions, but still not all. The procedure was parallelized and implemented on a CPU and GPU. As set-valued optimization problems have in general infinitely many optimal solutions, a representation of the whole set of optimal solutions is of interest.

In [10], see also [21, 22], for some specific multiobjective decision uncertain optimization problems solution approaches or characterizations of the optimal solution set have been provided. But they are all for specific cases only, as for linear or for monotone objective functions.

In this paper, we suggest a numerical approach for smooth multiobjective optimization problems with decision uncertainty with the worst case approach under quite general assumptions. Solving such problems is the same as solving a specific set-valued optimization problem, where the image sets are nonconvex. Thus, our approach also gives new ideas for developing algorithms for solving set optimization problems with the set approach, even if nonconvex sets have to be compared thereby. The proposed algorithm determines a covering of the set of optimal solutions, i.e., a subset of the feasible set which contains all optimal solutions.

Thereby, we use the branch-and-bound concept to partition the feasible set and to quickly detect regions of the feasible set which do not contain optimal solutions. We have to assume that the feasible set is a subset of a box which is defined by convex constraints, and that the set of uncertainties is convex and compact as well, to be able to solve the arising subproblems numerically. However, we do not need any assumptions on the convexity of the objective functions. As we are using techniques from global optimization, these functions are allowed to be nonconvex.

The paper is structured as follows: In Sect. 2 the preliminaries for multiobjective optimization and decision uncertainty can be found. Additionally, the relations to set optimization are shown. The main ideas for our algorithm are explained in Sect. 3. In particular, we mention the concept of convex underestimators and concave overestimators. Both concepts are essential to find lower and upper bound sets. The description how those bound sets can be determined can be found in Sects. 3.2 and 3.3. The whole branch-and-bound algorithm as well as some numerical results are presented in Sect. 4. We end with a conclusion and an outlook in Sect. 5.

2 Multiobjective optimization with decision uncertainty

We start with an introduction to multiobjective optimization. Let a nonempty feasible set \(\varOmega \subseteq \mathbb {R}^n\) and twice continuously differentiable functions \(f_j:\varOmega \rightarrow \mathbb {R},\ j=1,\ldots ,m\) be given. The multiobjective optimization problem is stated as

Without any uncertainties, one says that a point \(x^*\in \varOmega \) is efficient for (P), if there is no \(x\in \varOmega \) with \(f(x)\not =f(x^*)\) and \(f(x)\le f(x^*)\). Here, we write \(a\le b\) for two vectors \(a,b\in \mathbb {R}^m\) if \(a_j\le b_j\) holds for all \(j=1,\ldots ,m\). If \(x^*\) is efficient for (P), we call \(y^*:=f(x^*)\) to be a nondominated point of (P).

Next, we introduce the relevant notions from multiobjective optimization with decision uncertainty, before we shortly point out the relations to the more general class of set optimization problems.

2.1 Decision uncertainty

As described in the introduction, it is possible that the realization of the decision variable x is associated with uncertainties. These uncertainties will be modeled by a convex and compact set \(Z\subseteq \mathbb {R}^n\) with \(0\in Z\). Hence, instead of x it might happen that \(x+z\) for some \(z\in Z\) is realized due to the uncertainties. We follow the notation as introduced in [10].

In view of robustness, only feasible points \(x\in \varOmega \) which are feasible for any uncertainty \(z\in Z\) are of interest. This reduces the feasible set to the set of decision robust feasible points defined by

It is a well known challenge in robust optimization to calculate S from \(\varOmega \) and Z, also in the single-objective case. In this work, we assume that S is known and nonempty and we only concentrate on those challenges which are due to the multiple objectives.

Moreover, we assume that S is convex and that there exists a box X which contains S. A set \(X\subseteq \mathbb {R}^n\) is called a box (or hyper rectangle) if \(X=\{x\in \mathbb {R}^n\mid {\underline{x}}_i\le x_i\le {\overline{x}}_i ,\ i=1,\ldots ,n\}\) with two points \({\underline{x}}, {\overline{x}}\in \mathbb {R}^n\) with \({\underline{x}} \le {\overline{x}}\). The set of all n-dimensional real boxes will be denoted by \(\mathbb {I}\mathbb {R}^n\). These requirements are due to the fact that we will have to solve single-objective subproblems with a convex objective function over the set S. For being able to solve those problems globally and efficiently, we need the assumption of convexity of S. As our algorithm works with a subdivision in the pre-image space, we also need the structure of the box.

For defining decision robust efficient solutions, we have to take all possible realizations of each x into account. The set of all these realizations for one x is given by \(\{x+z\mid z\in Z\}\). As we are interested in the values of the objective functions, we have to compare the sets

which defines a set-valued map \(f_Z:S\rightrightarrows \mathbb {R}^m\).

In case of linear functions \(f_j\) we have \(f_Z(x)=\{f(x)\}+\{f(z)\in \mathbb {R}^m \mid z\in Z\}\). This simplifies the problem significantly, see [10, Theorem 23] or [9]. If the functions \(f_j\) and the set Z are convex, the sets \(f_Z(x)\) for \(x\in S\) do not have to be convex, as the following example shows:

Example 1

Let \(f_1,f_2:\mathbb {R}^2\rightarrow \mathbb {R}\) with \(f_1(x)=x_1, f_2(x)=x_1^2+x_2^2\) and \(Z=[-0.1,0.1]\times [0,0.1]\) be given. Then for \(x=0\), the set

contains the points \(y^1=(-0.1,0.02)\) and \(y^2=(0.1,0.02)\), but not the point \(0.5y^1+0.5y^2=(0,0.02)\). This is because \(f(0+z)=(0,0.02)\) only holds for \(z=(0,-\sqrt{0.02})\) or \(z=(0,\sqrt{0.02})\). But in both cases it is \(z\notin Z\). Hence, the set \(f_Z(0)\) is not convex.

Due to [10], we introduce the following optimality concept for the problem (P) with respect to decision uncertainty given by the set Z. It is motivated by the definition of optimality for single-objective decision uncertain optimization as well as by the definition of optimality in parameter uncertain multiobjective optimization.

Definition 1

[10] A point \(x^*\in S\) is called a decision robust strictly efficient solution of (P) with respect to Z if there is no \(x\in S{\setminus }\{x^*\}\) with the property

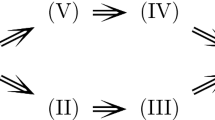

Example 2, first case

Example 2, second case

We illustrate this definition with the following example:

Example 2

Assume \(S=\{x^1,x^2\}\), and Z as well as \(f:S\rightarrow \mathbb {R}^2\) are in such a way that the sets \(f_Z(x^1)\) and \(f_Z(x^2)\) look as in Figs. 1 and 2, respectively. Then for the situation in Fig. 1, only \(x^1\) is a decision robust strictly efficient solution w.r.t. Z, while for Fig. 2 both points, i.e., \(x^1\) and \(x^2\), are decision robust strictly efficient solutions.

Note that in the case of \(Z=\{0\}\), i.e., the case of no uncertainty, there can be efficient solutions of (P) that are not decision robust strictly efficient solutions of (P) w.r.t. Z. This is due to the fact that decision robust strict efficiency is a generalization of strict efficiency for multiobjective problems. In contrast to efficiency, strict efficiency of a point \(x^*\) does not allow the existence of another point x with \(f(x)=f(x^*)\). For more details and a more detailed example we refer to [10, Sect. 2].

For applying Definition 1 in an algorithm, one has to be able to verify numerically whether it holds \(A\subseteq B-\mathbb {R}^m_+\) for two sets \(A,B\subseteq \mathbb {R}^m\). In case of polyhedral sets this can be done by using a finite number of support functionals, see [15]. In case of arbitrary closed convex sets one might need an infinite number of such linear functionals. Additionally, one has to solve a minimizing problem for each of these functionals and for each of the sets to decide whether the subset condition holds. This is based on the equivalence

which was formulated in a more general setting in [14, Theorem 2.1].

2.2 Relation to set optimization

In set optimization one studies set-valued optimization problems. Solving the following set optimization problem is closely related to determining decision robust strictly efficient solutions.

There are different approaches to define optimal solutions for problems like (SOP). For an introduction to set optimization, see the book [18]. In case of the set approach, see [17], one uses order relations to compare the sets which are the images of the objective function. As we are minimizing in our multiobjective optimization problem, the ’maximal elements’ of a set are in some sense the worst elements, which fits to our worst case approach. Comparing the ’maximal elements’ of a set corresponds to the upper-less order relation, which we introduce next.

Definition 2

[17] Let \(A,B\subseteq \mathbb {R}^m\) be two nonempty sets. The upper-type (u-type) less order relation \(\preccurlyeq _u\) is defined by:

For nonempty sets \(A,B\subseteq \mathbb {R}^m\) we have

Moreover, the order relation is reflexive and transitive, but it is in general not anti-symmetric. However, it holds

Now we can state the definition of an optimal solution of a set-valued optimization problem as in (SOP). For this, we use a definition which was formulated in [30]:

Definition 3

Let a nonempty set \(X\subseteq \mathbb {R}^n\) and a set-valued map \(H:X\rightrightarrows \mathbb {R}^m\) be given, where \(H(x)\ne \emptyset \) holds for all \(x\in X\). A point \(x^*\in X\) is called a strictly optimal solution of the set optimization problem

w.r.t. \(\preccurlyeq _u\) if there exists no \(x\in X{\setminus }\{x^*\}\) with \(H(x)\preccurlyeq _u H(x^*)\).

In our case, we have the special set-valued map described by \(f_Z:S\rightrightarrows \mathbb {R}^m\) with \(f_Z(x)=\{f(x+z)\mid z\in Z\}\). Obviously, a point \(x^*\in S\) is a decision robust strictly efficient solution of (P) w.r.t. Z if and only if \(x^*\in S\) is a strictly optimal solution of the set optimization problem (SOP). Hence, we present in this paper an algorithm to calculate a covering of the set of strictly optimal solutions of a specific set optimization problem. The proposed techniques can also be used to develop algorithms for more general set optimization problems. We will briefly discuss this in Sect. 5.

A basic technique of our algorithm will be a branch-and-bound approach. Lower and upper bounds will be important for the bounding step. The next definition clarifies these terms.

Definition 4

Let \(A\subseteq \mathbb {R}^m\) be a nonempty set.

A set \(U\subseteq \mathbb {R}^m\) is called an upper bound set/upper bound for A if \(A\preccurlyeq _u U\).

A set \(L\subseteq \mathbb {R}^m\) is called a lower bound set/lower bound for A if \(L\preccurlyeq _u A\).

3 Algorithmic approach

Our algorithm uses the concept of a branch-and-bound method. The branching will be in the pre-image space \(\mathbb {R}^n\). We have assumed that there is a box X which contains the convex feasible set S. This is, for instance, the case when S is given by convex inequality constraints and by lower and upper bounds for each variable. We start with the box X and partition it along the longest edge into two subboxes, see, for instance [29], for a more detailed description of such a partitioning. On each subbox \(X^*\), we test whether a sufficient criteria is satisfied which guarantees that \(X^*\cap S\) does not contain any decision robust strictly efficient solution of (P) w.r.t. Z. If such a criterion is satisfied, we do not consider this subbox and the feasible points in \(X^*\cap S\) anymore. Otherwise, we partition the box until all boxes are either discarded or smaller than a predefined value.

For such a branch-and-bound scheme, a good criteria for discarding a box is essential. These criteria are in general based on lower bounds obtained on the subboxes and on upper bounds obtained within the procedure. This is a widely used approach in single-objective global (i.e., nonconvex) optimization. There, the upper bounds are function values of feasible solutions. The lower bounds are bounds for all possible values of the objective over a subbox which are determined by interval arithmetic or by other underestimation methods. Hence, just scalars have to be compared. In our setting, already a ‘function value’ \(f_Z(x)\) for some feasible point x is a whole set. As these lower and upper bounds have to be compared frequently within such an algorithm, we will present a way to avoid to compare sets as \(f_Z(x)\) for some x directly. We will present replacements (i.e., sufficient conditions) using sets which have a very simple structure. To distinguish between upper and lower bound sets in our article, the corresponding variables x, z and subboxes or subsets of X and Z are indicated with \({\tilde{\cdot }}\) or \(\cdot ^*\), respectively. This means that an upper bound set of \(f_Z(\tilde{x})\) is computed with respect to a fixed point \({\tilde{x}}\in {\tilde{X}}\) and a lower bound set is determined for all sets \(f_Z(x^*)=\{f(x^*+z)\mid z\in Z\}\) with \(x^*\in X^*\). Note that determining a lower bound L for all sets \(f_Z(x^*)\) with \(x^*\in X^*\) (i.e., \(L\preccurlyeq _u f_Z(x^*)\) for all \(x^*\in X^*\)) is not equivalent to simply determining a lower bound for \(f_Z(X^*):=\bigcup _{x^*\in X^*} \{f(x^*+z)\mid z\in Z\}\) (i.e., with \(L\preccurlyeq _u f_Z(X^*)\)).

As the objective functions \(f_i\), and also the sets \(f_Z(x)\), are not necessarily convex, we will use the concept of convex relaxations for being able to formulate such replacements and a numerically tractable sufficient condition finally.

3.1 Convex underestimators and concave overestimators

As shown in Example 1, the sets \(f_Z(x)\) for \(x\in S\) may be nonconvex, even in case of convex functions \(f_i:S\rightarrow \mathbb {R}\). For that reason, we will make use of the concept of convex underestimators and concave overestimators, respectively, depending on whether we aim at lower or at upper bounds. Let \(X\subseteq \mathbb {R}^n\) be a box and \(h:X\rightarrow \mathbb {R}\) a function. Then a convex underestimator of h on X is a function \({\underline{h}}:X\rightarrow \mathbb {R}\) which is convex and with \({\underline{h}}(x)\le h(x)\) for all \(x\in X\). A simple way to construct such convex underestimators is explained next and is known in the literature as \(\alpha \)BB method.

Let the box be defined by \(X=[{\underline{x}}, {\overline{x}}]\in \mathbb {I}\mathbb {R}^n\). Then we obtain a convex underestimator of a smooth function h by

where \(\alpha _h\ge \max \{0, -\min _{x\in X} \lambda _{\min , h}(x)\}.\) Here, \(\lambda _{\min ,h}(x)\) denotes the smallest eigenvalue of the Hessian \(H_h(x)\) of h in x [28]. A lower bound for \(\lambda _{\min ,h}(x)\) over X can easily be calculated with the help of interval arithmetic, see [28]. In our algorithm, we use the Matlab toolbox Intlab [31] for these calculations. See also [32] for improved lower bounds. There are also other possibilities for the calculation of convex underestimators. For example, in [1] special convex underestimators for bilinear, trilinear, fractional, fractional trilinear or univariate concave functions were defined. Here, we restrict ourselves to the above proposed convex underestimator. The theoretical results remain true if the above underestimators are replaced by tighter ones. Another important benefit is stated in the following remark, where \(\omega (X)\) denotes the box width of X, i.e., \(\omega (X):=\Vert {\overline{x}}-{\underline{x}}\Vert _2\).

Remark 1

[28, Property 4] For all \(\alpha _h\ge 0\) the maximal pointwise difference between h and \({\underline{h}}\) is \(\frac{\alpha _h}{2}\omega (X)^2\), i.e., \(\max \limits _{x\in X} |h(x)-{\underline{h}}(x)|=\frac{\alpha _h}{2}\omega (X)^2\).

In nonconvex optimization one uses the minimum value of \({\underline{h}}\) over \(X\cap S\) as a lower bound for the values of h on \(X\cap S\). If the set S is convex, the minimum value can be calculated by standard techniques from convex optimization. We use convex underestimators to be able to calculate the elements of sets \(L\subseteq \mathbb {R}^m\) numerically such that

holds for all \(x\in X^*\cap S\) for some subbox \(X^*\) of X.

Also concave overestimators of a function h on X are of interest, i.e., concave functions \({\overline{h}}:X\rightarrow \mathbb {R}\) with \({\overline{h}}(x)\ge h(x)\) for all \(x\in X\). If we calculate a convex underestimator of the function \(-h\) as described above, i.e.,

where \(\alpha _{-h}\ge \max \{0, -\min _{x\in X} \lambda _{\min , -h}(x)\}\), the function \({\overline{h}}:=-(\underline{- h})\) is such a concave overestimator of h on X. We use such concave overestimators to be able to calculate sets \(U\subseteq \mathbb {R}^m\) numerically such that

holds for some given \({\tilde{x}}\in S\). The advantage is that while it might be numerically difficult to compare the lower bound L of all sets \(f_Z(x)\) for all \(x\in X^*\cap S\) with \(f_Z({\tilde{x}})\), it might be much easier to compare L with U. Note that we have

When we use the set order relation as defined in Definition 2 we can write this equivalently as

The implication holds as \(\preccurlyeq _u\) is a transitive set order relation. Thus, in case \({\tilde{x}}\in S{\setminus } X^*\), we can discard the subbox \(X^*\) as it does not contain any decision robust strictly efficient solution of (P) w.r.t. Z.

3.2 Upper bound sets

First, we describe how to calculate a simple set U with \(f_Z({\tilde{x}})\preccurlyeq _u U\), where \({\tilde{x}}\) is a fixed feasible point of a given box \({\tilde{X}}\). To begin with, we explain how to construct such a set U which is a singleton. Then we describe how this upper bound can be improved by using outer approximations as known from convex multiobjective optimization.

For deriving a singleton upper bound for a set, we use the so-called anti-ideal point. For the set \(f_Z({\tilde{x}})=\{f({\tilde{x}}+z)\mid z\in Z\}\), this is the point \({\overline{a}}\) defined by

Hence, the anti-ideal point can easily be determined if \(f_j\) is a concave function for \(j=1,\ldots ,m\), as Z is assumed to be a convex and compact set and the functions \(f_j\) are twice continuously differentiable. One can apply any solution method from single-objective constrained optimization, for instance, an algorithm which uses sequential quadratic programming (SQP).

However, if \(f_j\) is not concave, such a local solution method as SQP might only deliver a locally maximal solution and not a globally maximal one. In that case we use the concave overestimators which were introduced in Sect. 3.1. The result is summarized in the next lemma. This lemma needs a box \({\hat{Z}}\) with \(Z\subseteq {\hat{Z}}\), but recall that Z was assumed to be a compact convex set and thus such a set \({\hat{Z}}\) can easily be calculated. The reason for the assumption of a box is that we can determine concave overestimators only over boxes, as explained in Sect. 3.1. With Remark 1 it follows that a small box \({\hat{Z}}\) leads to a tighter concave overestimator. Therefore, \({\hat{Z}}\) should be chosen as small as possible.

Lemma 1

Let \({\hat{Z}}\in \mathbb {I}\mathbb {R}^n\) be a box with \(Z\subseteq {\hat{Z}}\), and let \({\tilde{x}}\in S\) be given. Let \(\overline{f_j}\) be the concave overestimator of \(f_j\) on the box \(\{{\tilde{x}}\}+{\hat{Z}}\) for all \(j=1,\ldots , m\) as defined in Sect. 3.1. The singleton set U with

is an upper bound set for \(f_Z({\tilde{x}})\), i.e., \(f_Z({\tilde{x}})\preccurlyeq _u U\).

Proof

For the proof of this lemma, we show \(f_Z({\tilde{x}})\subseteq U-\mathbb {R}^m_+\). Therefore, let \(w\in f_Z({\tilde{x}})\) be arbitrary, i.e., there is a \(z\in Z\) with \(w=f({\tilde{x}}+z)\). As \(\overline{f_j}\) is a concave overestimator of \(f_j\) on \(\{{\tilde{x}}\}+{\hat{Z}}\) and \(z\in Z\subseteq {\tilde{Z}}\), we obtain for every \(j=1,\ldots ,m\):

Therefore, \(w\in U-\mathbb {R}^m_+\) holds.\(\square \)

The optimization problems in (2) have a convex and compact feasible set and twice continuously differentiable concave objective functions, which are maximized. Thus, they can be solved for instance with an SQP method. Lemma 1 uses that it holds for the set

that \(f_Z({\tilde{x}})\subseteq \overline{f_{Z}}({\tilde{x}})-\mathbb {R}^m_+\) and that \(\overline{f_{Z}}({\tilde{x}})-\mathbb {R}^m_+\) is a convex set. Thus, the anti-ideal point of \(\overline{f_{Z}}({\tilde{x}})\) can be calculated by known local methods for single-objective optimization. Figure 3 shows the idea on how to obtain the upper bound set U.

The set \(f_Z({\tilde{x}})\) and its singleton upper bound set U according to Lemma 1

This rough upper bound can be improved by using outer approximation techniques from convex multiobjective optimization. These can be applied as the set \(\overline{f_{Z}}({\tilde{x}})-\mathbb {R}^m_+\) is a convex set. The algorithm which we are using is called Benson’s outer approximation algorithm. It was introduced for linear multiobjective optimization in [5], and extended to the convex case in [8] and in [26]. The idea of Benson’s outer approximation algorithm is to construct supporting hyperplanes of the set \(\overline{f_Z}({\tilde{x}})-\mathbb {R}^m_+\). One step depends on one fixed point \({\bar{p}}\), which can, for instance, be the anti-ideal point. Within Benson’s outer approximation algorithm one solves single-objective convex optimization problems of the following type:

With \(e\in \mathbb {R}^m\) we denote the m-dimensional all-one vector \((1,\ldots ,1)^T\in \mathbb {R}^m\).

Let \(({\tilde{z}},{\tilde{t}})\) be an optimal solution of (\(P_{{\tilde{x}},{\bar{p}}}\)) and let \({\tilde{\lambda }}\ge 0\) be a Lagrange multiplier to the constraint \({\bar{p}}-te\le \overline{f}({\tilde{x}}+z)\). Then

describes a supporting hyperplane of \(\overline{f_Z}({\tilde{x}})\). If no Lagrange multiplier is available, a single-objective linear optimization problem can be solved to calculate a normal vector \({\tilde{\lambda }}\) of the supporting hyperplane, see [8].

Note that already the anti-ideal point of \(\overline{f_{Z}}({\tilde{x}})\) gives m supporting hyperplanes of \(\overline{f_{Z}}({\tilde{x}})\) by

for every \(j=1,\ldots ,m\). Several such supporting hyperplanes can be constructed to various points \({\bar{p}}\) with the help of (\(P_{{\tilde{x}},{\bar{p}}}\)). In our numerical experiments we limited ourselves to the hyperplane which we obtain for \({\bar{p}}\) as the anti-ideal point of \(\overline{f_{Z}}({\tilde{x}})\) and to those which we obtain directly from the anti-ideal point of \(\overline{f_{Z}}({\tilde{x}})\).

The construction of such supporting hyperplanes is explained in detail in [8, 26]. This technique is also used for solving nonconvex multiobjective optimization problems in [29]. In that paper one can also find more details on the construction of improved bounds by using Benson’s outer approximation technique. Adding more hyperplanes to get a better outer approximation is possible, but then even more single-objective convex optimization problems have to be solved. Moreover, the calculation of the intersection of these hyperplanes gets more challenging. Also steering the calculation of the hyperplanes within the algorithm by an adaptive choice of the points \({\bar{p}}\) in (\(P_{{\tilde{x}},{\bar{p}}}\)) is an interesting approach for further improvements of the proposed algorithmic approach. Such an approach was, for instance, followed in [29] for nonconvex multiobjective optimization problems.

The next lemma gives a summary of the construction of our upper bound set, which is also illustrated in Fig. 4.

Lemma 2

Let \({\hat{Z}}\in \mathbb {I}\mathbb {R}^n\) be a box with \( Z\subseteq {\hat{Z}}\) and let \({\tilde{x}}\in S\) be given. Let \(\overline{f_j}\) be the concave overestimator of \(f_j\) on the box \(\{{\tilde{x}}\}+{\hat{Z}}\) for all \(j=1,\ldots , m\) as defined in Sect. 3.1. Furthermore, let \({\bar{p}}\in \mathbb {R}^m\) be the anti-ideal point of the concave overestimators \({\overline{f}}=(\overline{f_1},\ldots , \overline{f_m})^T\) on \(\{{\tilde{x}}\}+Z\), see Lemma 1. Moreover, let \(({\tilde{z}},{\tilde{t}})\) be a minimal solution of (\(P_{{\tilde{x}},{\bar{p}}}\)) with Lagrange multiplier \({\tilde{\lambda }}\ge 0\) to the constraint \({\bar{p}}-te\le \overline{f}({\tilde{x}}+z)\). Then the set U with

is an upper bound set for \(f_Z({\tilde{x}})\), i.e., \(f_Z({\tilde{x}})\preccurlyeq _u U\).

Proof

We denote the hyperplane to which \({\tilde{\lambda }}\ge 0\) is the normal vector by

As \(({\tilde{z}}, {\tilde{t}})\) is feasible for (\(P_{{\tilde{x}},{\bar{p}}}\)) and by the definition of \({\bar{p}}\), we get for each \(j=1,\ldots , m\):

As a consequence, we have \({\bar{p}}-{\tilde{t}}e\in U\). In particular, \({\bar{p}}-{\tilde{t}}e\in U^*\) holds and thus \({\bar{p}}\in U^*+\mathbb {R}^m_+.\)

Next, we show that \(f_Z({\tilde{x}})\subseteq U^*-\mathbb {R}^m_+\). This follows from [26] as (\(P_{{\tilde{x}},{\bar{p}}}\)) is the same optimization problem which is solved to obtain a supporting hyperplane of \(-\overline{f_Z}({\tilde{x}})+\mathbb {R}^m_+\) with the ideal point \(-{\bar{p}}\) (see Sect. 3.3 for more details on the ideal point). There, \(U^*\) is by construction a supporting hyperplane of \(-\overline{f_Z}({\tilde{x}})+\mathbb {R}^m_+\), i.e., \({\tilde{\lambda }}^T(-{\bar{p}}+{\tilde{t}}e)\le {\tilde{\lambda }}^Ty\) holds for all \(y\in -\overline{f_Z}({\tilde{x}})+\mathbb {R}^m_+\). By multiplying with \(-1\), this is equivalent to \({\tilde{\lambda }}^T({\bar{p}}-{\tilde{t}}e)\ge {\tilde{\lambda }}^Ty\) for all \(y\in \overline{f_Z}({\tilde{x}})-\mathbb {R}^m_+\). Hence, \(U^*\) is a supporting hyperplane of \(\overline{f_Z}({\tilde{x}})-\mathbb {R}^m_+\). As \(\overline{f_j}\) is a concave overestimator of \(f_j\) on the box \(\{{\tilde{x}}\}+{\hat{Z}}\) for all \(j=1,\ldots , m\), it follows:

Now, let \(w\in f_Z({\tilde{x}})\) be arbitrarily chosen. Then

and \({\bar{p}}\in U^*+\mathbb {R}^m_+=\{y\in \mathbb {R}^m\mid {\tilde{\lambda }}^Ty\ge {\tilde{\lambda }}^T({\bar{p}}-{\tilde{t}}e)\}\) hold. Thus, there exists some \(\mu \in [0,1]\) with \(y:=\mu {\bar{p}}+(1-\mu ) w\in U^*\). By Lemma 1 we have \(w\le {\bar{p}}\). Therefore,

and \(w\le y\) with \(y\in U^*\cap (\{{\bar{p}}\}-\mathbb {R}^m_+)=U.\) Hence, we derive \(f_Z({\tilde{x}})\subseteq U-\mathbb {R}^m_+\).\(\square \)

The set \(f_Z({\tilde{x}})\) and its upper bound set U according to Lemma 2

3.3 Lower bound sets

For a subbox \(X^*\) of X we denote the feasible set of \(X^*\) by

For applying the implication (1), we propose a method to determine a set L with a simple structure, i.e., with

“Simple structure” means that it should be easily computable and comparable with the obtained upper bounds. Similar to the anti-ideal point from Lemma 1, we can use here the concept of the ideal point of a set. While one could use the ideal point of the set

already the ideal point of any set

for any \(z^*\in Z\) delivers a lower bound:

Lemma 3

Let \(z^*\in Z\), \(X^*\) be a subbox of X, and let \(a\in \mathbb {R}^m\) be defined by

Then the set \(L:=\{a\}\) is a lower bound set of \(f_Z(x)\) for all \(x\in S^*\).

Proof

Let \(x\in S^*\) be arbitrarily chosen. We have to show that \(a\in f_Z(x)-\mathbb {R}^m_+\). As \(a_j\le f_j(x+z^*)\) for all \(j=1,\ldots ,m\), we have that \(a\in \{f(x+z^*)\}-\mathbb {R}^m_+\subseteq f_Z(x)-\mathbb {R}^m_+\).\(\square \)

In case of convex functions \(f_j\), \(j=1,\ldots ,m\), the single-objective optimization problems in (4) can be solved by any local solution method as an SQP method. Otherwise, convex underestimators, see Sect. 3.1, can be used. Let \(\underline{f_j}\) be the convex underestimator of \(f_j\) on the box \(X^*\) for all \(j=1,\ldots ,m\). Define \(\underline{f}:=(\underline{f_1},\ldots , \underline{f_m})^T\negthickspace .\) We can choose one or several points \(z^*\in Z\) and determine the ideal point of the set

for each chosen \(z^*\). As each ideal point gives a lower bound set of the sets \(f_Z(x)\) for all \(x\in S^*\), also the set of all ideal points to the various points \(z^*\) is a lower bound set of \(f_Z(x)\) for all \(x\in S^*\):

Lemma 4

Let \(X^*\) be a subbox of X, and let \(\underline{f_j}\) be the convex underestimator of \(f_j\) on \(X^*\) for all \(j=1,\ldots ,m\) as defined in Sect. 3.1. Let \(Z^*=\{z^1,\ldots , z^p\}\subseteq Z\), and for \(k=1,\ldots ,p\) determine the ideal point \({\underline{a}}^k\) of \({\underline{f}}(S^*+z^k)\), i.e., calculate

Then it holds:

i.e., L is a lower bound set for all sets \(f_Z(x)\) with \(x\in S^*\).

Proof

We have to show that for any \(k\in \{ 1,\ldots ,p\}\) and for all \(x^*\in S^*\) it holds that \(a^k\in f_Z(x^*)-\mathbb {R}^m_+\). Thus, let \(k\in \{ 1,\ldots ,p\}\) and \(x^*\in S^*\) be arbitrarily chosen. As

holds for all \(j=1,\ldots ,m\), we have that \({\underline{a}}^k\in \{f(x^*+z^k)\}-\mathbb {R}^m_+\subseteq f_Z(x^*)-\mathbb {R}^m_+\).\(\square \)

A visualization of this lemma can be found in Fig. 5. The set \(f_Z(S^*)\) is defined by \(\{f_Z(x+z)\mid x\in S^*, z\in Z\}\) and includes all images of all possible outcomes \(x^*+z\) with \(x^*\in S^*\) and \(z\in Z\). By fixing a \(z^k\in Z\) we only obtain a subset \(\{f(x+z^k)\mid x\in S^*\}=:A^k\) of \(f_Z(S^*)\) for every \(k=1,\ldots , p\). As \(A^k+\mathbb {R}^m_+\) is in general nonconvex, a convex underestimator and its ideal point \({\underline{a}}^k\) are computed. The lower bound set L consists of all ideal points \(a^k,\ k=1,\ldots ,p\).

For the algorithm, a large p, i.e., many elements \(\{z^1,\ldots ,z^p\}\), improves the lower bound set. On the other hand, a large p implies that we have to solve a large number of convex single-objective optimization problems on each subbox to determine the ideal points \({\underline{a}}^k\), \(k=1,\ldots ,p\). We explore this issue in Sect. 4.2.

The set \(f_Z(S^*)\), the sets \(A^k=\{f(x+z^k)\mid x\in S^*\},\ k=1,2,3\) and the lower bound set \(L=\{{\underline{a}}^1, {\underline{a}}^2, {\underline{a}}^3\}\) according to Lemma 4

3.4 Discarding test

The main idea of our discarding test is the implication given in (1) together with the way in which we construct the sets L and U. We summarize our discarding test in the next theorem:

Theorem 1

Let \(X^*\) be a subbox of X, \({\tilde{x}}\in S\) with \({\tilde{x}}\not \in X^*\), and let the set U be defined as in Lemma 2 and the set L as in Lemma 4. If \(U\preccurlyeq _u L\), the subbox \(X^*\) can be discarded, i.e., \(X^*\cap S\) does not contain any decision robust strictly efficient solution of (P) w.r.t. Z.

Proof

With U as in Lemma 2 and with L as in Lemma 4 we obtain

i.e., no \(x\in S^*\) can be a strictly optimal solution of the set optimization problem \(\min _{x\in S}f_Z(x)\). Therefore, no \(x\in S\) can be decision robust strictly efficient for (P) w.r.t. Z. \(\square \)

With our choice for the construction of the sets L and U we aim at two goals. Firstly, we want to be able to easily calculate them. This is achieved since the arising convex single-objective optimization problems can easily be solved, as any locally optimal solution is already a globally optimal solution. Secondly, we want the sets L and U to have a simple structure such that they can easily be compared w.r.t. the upper-type less order relation. If L and U are finite sets, this can be done with a pairwise comparison. In case of \(m=2\) and U is not finite, i.e., not just the anti-ideal point, then U is just a line segment and we can easily check whether \(U\preccurlyeq _u L\) holds. For \(m\ge 3\) and non-finite U such comparisons get already more complicated, as U is then a subset of a hyperplane. This comparison becomes even more difficult if several points \({\bar{p}}\) are used in (\(P_{{\tilde{x}},{\bar{p}}}\)) to construct improved outer approximations, as it was done in [29]. In that case the concept of local upper bounds is helpful which is used and explained in detail in [29]. As this is an implementation issue and does not add insights to the main idea of the discarding test above, we limit ourselves here to cases which can easily be implemented.

4 The algorithm and numerical results

In this section, we derive our algorithm based on the proposed discarding test in Theorem 1. We also illustrate and discuss the algorithm on several test instances.

4.1 The branch-and-bound algorithm

As already mentioned, the base of our algorithm is a branch-and-bound approach in which we partition the box X into subboxes. Then we try to discard subboxes which do not contain decision robust strictly efficient solutions.

We are working with three lists. The list \({\mathscr {L}}_W\) is the working list which collects those boxes which are still of interest. The upper bound sets for some feasible points \({\tilde{x}}\in S\) are collected in the list \({\mathscr {L}}_U\). The list \({\mathscr {L}}_S\) collects those boxes which deliver a first cover of the set of decision robust strictly efficient solutions. In the second while-loop from line 26 we check again if boxes from \({\mathscr {L}}_S\) can now be discarded, because the set of upper bound sets \({\mathscr {L}}_U\) changes during the main while-loop (lines 8–25). The final solution list is denoted by \({\mathscr {L}}_{S, \text{ final }}\).

For obtaining lower and upper bound sets, single-objective optimization problems are solved. In lines 4 and 17 the upper bound set U of a set \(f_Z(x)\) is computed. If the set U consists of the anti-ideal point only, m optimization problems are solved. If the set U is determined by outer approximations, we need \(m+1\) optimization problems. For the lower bound set in line 12, the algorithm solves \(mp=m|Z^*|\) additional optimization problems.

For computing upper bound sets, at least one feasible point \({\tilde{x}}\in S^*\) of a currently considered box \(X^*\) is required. One possibility is to use the midpoints of the boxes, i.e., \({\tilde{x}}:=\text{ mid }(X^*)\) as far as \({\tilde{x}}\in S\). Another possibility to obtain feasible points is the following: in line 12, see also Lemma 4, for each \(z\in Z^*\) and for each objective function \(f_j\) an optimization problem is solved with feasible set \(S^*\). The minimal solutions can thus be used as feasible points for computing upper bound sets. In addition, we obtain the information whether \(S^*\) is empty.

To get only one upper bound set per considered box and to make numerical experiments comparable, we use the minimal solution of the first (underestimated) objective function without uncertainties, i.e., with \(z^*=0\).

After adding a new upper bound set to \({\mathscr {L}}_U\), an update procedure is applied in line 18: If there is a set \(U'\in {\mathscr {L}}_U\) with \(U'\preccurlyeq _u U\) for some \(U\in {\mathscr {L}}_U\), all those dominated U are removed from \({\mathscr {L}}_U\). Moreover, \(U'\) is not stored in \({\mathscr {L}}_U\) if \(U\preccurlyeq _u U'\) holds for one \(U\in {\mathscr {L}}_U\). This reduces the amount of comparisons for checking the conditions in lines 15 and 28.

We stop the algorithm when all boxes are either discarded or put into the solution list, i.e., if the working list is empty. We put a box \(X^*\) to the solution list if its box width is small enough, i.e., for given \(\delta >0\) it holds: \(\omega (X^*)< \delta \).

Theorem 2

Let \({\mathscr {L}}_{S,\text{ final }}\) be the output of the algorithm on page 16, and \({\mathscr {L}}_S\) be the solution list after line 25. Moreover, let \(X_E\) be the set of decision robust strictly efficient solutions of (P) w.r.t. Z. Then the following holds:

- (i)

\(X_E\subseteq \bigcup \limits _{X\in {\mathscr {L}}_{S,\text{ final }}}X\subseteq \bigcup \limits _{X\in {\mathscr {L}}_S} X\)

- (ii)

For every box \(X^*\in {\mathscr {L}}_{S,\text{ final }}\) it holds: \(\omega (X^*)<\delta \)

Moreover, the algorithm terminates after finitely many subdivisions in line 10.

Proof

Property (i) holds because of Theorem 1. In line 19 only boxes \(X^*\) with \(\omega (X^*)<\delta \) are stored in the solution list \({\mathscr {L}}_S\). The final elimination in the second while-loop of the algorithm just discards some boxes from \({\mathscr {L}}_S\), but the box width of the boxes does not change. This proves (ii).

The boxes are bisected perpendicularly to a direction of maximum width. Boxes are either discarded or partitioned until the subboxes have a width smaller than \(\delta \). Hence, even if no box is discarded, all subboxes have a width smaller than \(\delta \) after a finite number of subdivisions. \(\square \)

We would like to mention that our algorithm does not guarantee to find anything like almost decision robust strictly efficient solutions or that with decreasing \(\delta \) the covering of \(X_E\) gets arbitrarily close. For being able to show something like that, we would need lower and upper bound sets which get closer to the sets which are compared for smaller boxes. With Remark 1 it follows that for smaller boxes \(X^*\) the lower bounds for \(f(S^*+z^*)\) for one \(z^*\in Z^*\) are indeed tighter than for larger boxes. For an upper bound set U one has to calculate concave overestimators \(\overline{f_j}\) for each \(f_j\) on the box \(\{{\tilde{x}}\}+{\hat{Z}}\). However, the distance between \(\overline{f_j}\) and \(f_j\) does not decrease, when the boxes \(X^*\) become smaller, as it only depends on the size of \({\hat{Z}}\) (and the non-concavity of \(f_j\)). The boxes in the set \({\mathscr {L}}_{S,\text{ final }}\) might contain infeasible points. However, the distance from any point in any box in \({\mathscr {L}}_{S,\text{ final }}\) to the feasible set is bounded from above by \(\delta \), because in every box in \({\mathscr {L}}_{S,\text{ final }}\) there exists at least one feasible point. Otherwise, it would have been discarded.

4.2 Numerical results

The proposed algorithm has been implemented in Matlab R2018a and uses the toolbox Intlab [31] for interval arithmetic. All experiments have been done on a computer with Intel(R) Core(TM) i5-7400T CPU and 16 Gbytes RAM on operating system Windows 10 Enterprise.

As just recently the notion of decision robust strictly efficient solutions has been introduced in multiobjective optimization, there are no test instances from the literature so far. Here, we have introduced some test instances where it is possible to calculate the decision robust strictly efficient solution sets also analytically. This allows to verify our results. We will also discuss the impact of various parameters of the algorithm as, for instance, the choice and the number p of elements of the set \(Z^*\) which are used to calculate the lower bounds in Lemma 4.

For all instances we used \(\delta =0.05\). Additionally, we also used \(\delta =0.01\) for the last two test instances to explore the influence of \(\delta \). In Tables 1, 2 and 3 the number of subdivisions in line 10, the time, the number of boxes in the final solution list, and the total number of solved single-objective optimization problems are given for each test instance. The last number is split into the number of solved problems to obtain the upper bounds U and the number of solved problems to get the lower bounds L. Also, visualizations of the partitions of the initial box X after the execution of the algorithm are presented. The light gray boxes are the discarded boxes. All boxes from the final solution list \({\mathscr {L}}_{S, \text{ final }}\) are dark gray. In case of convex constraints, boxes can be discarded because of infeasibility. These boxes are white in the figures.

Moreover, we have compared the impact of using upper bounds based on the anti-ideal point only, as in Lemma 1, and based on an improved outer approximations, as defined in Lemma 2.

Test instance 1

This convex test function is inspired by [6]:

The set of decision robust strictly efficient solutions is a line segment:

The sets \(Z^0\) to \(Z^3\) are chosen as

The results of the algorithm are shown in Fig. 6 and Table 1.

Partition of the feasible set of Test instance 1 after the algorithm

In Table 1 and Fig. 6, it can be seen that choosing a larger set \(Z^*\) and computing the lower bound set U by an improved outer approximation leads to better results. However, the improvement from the case \(Z^*=Z^1\) to \(Z^*=Z^2\) or \(Z^*=Z^3\) is not as significant as the one from \(Z^*=Z^0\) to \(Z^*=Z^1\). The best choice for \(Z^*\) depends on how tight the covering of the set of decision robust strictly efficient solutions should be. Note that the point \({\bar{x}}=0\) is not a decision robust strictly efficient solution while \({\bar{x}}\) is an efficient solution of the corresponding multiobjective optimization problem without uncertainties.

Test instance 2

This test instance consists of a nonconvex objective function and a circular decision uncertainty set Z:

A box \({\hat{Z}}\) which contains Z is \({\hat{Z}}=[-0.1,0.1]\times [-0.1,0.1]\).

The sets \(Z^0\) and \(Z^1\) are chosen as

For the results of the algorithm see Fig. 7 and Table 2.

Partition of the feasible set of Test instance 2 after the algorithm

In Fig. 7 it can be seen that choosing a smaller \(\delta \) leads to a tightening of the covering of the decision robust strictly efficient solutions. However, all quantities increase because more boxes have to be bisected until the termination rule is fulfilled.

Test instance 3

The set of the decision robust strictly efficient solutions is

The sets \(Z^0\) to \(Z^3\) are chosen as

Note that \(Z^1\) consists of the vertices of Z (and 0) while \(Z^2\) contains some interior points of Z only. The results of the algorithm are shown in Fig. 8 and Table 3.

Partition of the feasible set of Test instance 3 after the algorithm

The results for this test instance, see Table 3, show that the upper bound sets which are obtained from the improved outer approximations do not lead to any improvements. The number of subdivisions and boxes in the solution list \({\mathscr {L}}_{S, \text{ final }}\) are the same. We suppose that this is caused by the convex objective functions, which are symmetric and do not depend on the same variables. The computational time is even higher if the upper bound sets are obtained by outer approximations, because more optimization problems have to be solved.

It can be seen that choosing a set \(Z^*\) with multiple points improves the results. If the vertices of Z are included in \(Z^*\), i.e., in case of \(Z^1\) and \(Z^3\), we obtain the smallest amount of subdivisions and boxes in the solution list. The reason for this is the symmetry of the convex objective functions again. Therefore, for this example, the best choice for \(Z^*\) is \(Z^1\), and it is sufficient to use the anti-ideal point of the concave overestimators only. Another thing is that the covering of the decision robust strictly efficient solution \((-0.1,-0.1)^T\) is cross shaped and lies more symmetrically around the real decision robust solution set in case of \(Z^*\ne \{0\}\).

The influence of \(\delta \) to the covering of the decision robust strictly efficient points is not that noticeable. This clarifies that with decreasing \(\delta \) we cannot expect to obtain an arbitrary tight covering of the set \(X_E\), as remarked below Theorem 2.

In all additional numerical experiments similar results have been observed. To summarize, choosing a set \(Z^*\) with more than one element is a better choice than \(Z^*=\{0\}\). On the other hand, the cardinality of \(Z^*\) does not have to be very large. For simple (i.e., convex, concave, symmetric, independent,...) objective functions the set with some characteristic points at the boundary of Z, e.g. the vertices of \({\hat{Z}}\) and 0, leads to the overall best results. In general, using outer approximations to obtain upper bound sets improves the results.

5 Conclusions, outlook, and applications to set optimization

In this work, we proposed a branch-and-bound based algorithm for multiobjective optimization problems with decision uncertainty. In case of nonlinear objective functions we need to work with convex underestimators or concave overestimators, or even with both, to make the subproblems numerically tractable. The computational experiments showed that the algorithm is indeed able to discard areas which do not contain any decision robust strictly efficient solution. Nevertheless, the remaining parts of the feasible set can still be large and then other (local) algorithms could be applied afterwards for more exact solutions. Moreover, the results can be improved, i.e., the covering can be tightened by improving the outer approximation used in Lemma 2 with techniques from [8] and [26].

We have assumed that the set Z is convex. An adaption also to nonconvex sets is possible. One can replace the set Z within the optimization problems in Sect. 3.2 by the convex hull of Z, or by a box which contains Z. If Z is a finite (and small) set, the problems can also be solved directly by enumeration.

The proposed techniques have been developed for a set optimization problem with a very specific structure and with the upper-type less order relation. It is also of interest, and we believe it is possible, to adapt the methods for other set order relations and more general set-valued optimization problems. For example, the new methods can easily be adapted for the lower-type less order relation \(\preccurlyeq _l\) (\(A\preccurlyeq _l B\Leftrightarrow B\subseteq A+\mathbb {R}^m_+\)). This is also related to an optimistic approach to uncertain multiobjective optimization, see Section 3.2 in [13].

To handle other and more general set-valued optimization problems we have to ensure a certain structure of the set-valued objective function, i.e., convex underestimators or concave overestimators should be computable. This is the case for a set-valued map given by \(f_Z(x):=\{f(x,z)\mid z\in Z\}\), where \(f:\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^m\). A special case of this is, for instance, \(f_{Z}(x):=\{f(x+g(z))\mid z\in Z\}\), where \(g:\mathbb {R}^n\rightarrow \mathbb {R}^n\) is a twice continuously differentiable function.

References

Adjiman, C., Dallwig, S., Floudas, C., Neumaier, A.: A global optimization method, \(\alpha \)BB, for general twice-differentiable constrained NLPs: I-theoretical advances. Comput. Chem. Eng. 22(9), 1137–1158 (1998)

Barrico, C., Antunes, C.: Robustness analysis in multi-objective optimization using a degree of robustness concept. In: IEEE Congress on Evolutionary Computation. CEC 2006, pp. 1887–1892. IEEE Computer Society (2006)

Ben-Tal, A., Ghaoui, L.E., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Ben-Tal, A., Nemirovski, A.: Robust optimization—methodology and applications. Math. Program. 92(3), 453–480 (2002)

Benson, H.: An outer approximation algorithm for generating all efficient extreme points in the outcome set of a multiple objective linear programming problem. J. Glob. Optim. 13(1), 1–24 (1998)

Binh, T., Korn, U.: MOBES: A multiobjective evolution strategy for constrained optimization problems. In: Proceedings of the 3rd International Conference on Genetic Algorithms, pp. 176–182 (1999)

Deb, K., Gupta, H.: Introducing robustness in multi-objective optimization. Evol. Comput. 14(4), 463–494 (2006)

Ehrgott, M., Shao, L., Schöbel, A.: An approximation algorithm for convex multi-objective programming problems. J. Glob. Optim. 50(3), 397–416 (2011)

Eichfelder, G., Gerlach, T.: On classes of set optimization problems which are reducible to vector optimization problems and its impact on numerical test instances. In: Khan, A., Köbis, E., Tammer, C. (eds.) Variational Analysis and Set Optimization, Chap. 10, pp. 265–290. CRC Press, Boca Raton (2019)

Eichfelder, G., Krüger, C., Schöbel, A.: Decision uncertainty in multiobjective optimization. J. Glob. Optim. 69(2), 485–510 (2017)

Fliege, J., Werner, R.: Robust multiobjective optimization & applications in portfolio optimization. Eur. J. Oper. Res. 234(2), 422–433 (2014). https://doi.org/10.1016/j.ejor.2013.10.028

Goberna, M.A., Jeyakumar, V., Li, G., Vicente-Pérez, J.: Robust solutions of multiobjective linear semi-infinite programs under constraint data uncertainty. SIAM J. Optim. 24(3), 1402–1419 (2014)

Ide, J., Köbis, E., Kuroiwa, D., Schöbel, A., Tammer, C.: The relationship between multi-objective robustness concepts and set-valued optimization. Fixed Point Theory Appl. 2014(1), 83 (2014)

Jahn, J.: Vectorization in set optimization. J. Optim. Theory Appl. 167(3), 783–795 (2013)

Jahn, J.: A derivative-free descent method in set optimization. Comput. Optim. Appl. 60(2), 393–411 (2015)

Jahn, J.: A derivative-free rooted tree method in nonconvex set optimization. Pure Appl. Funct. Anal. 3, 603–623 (2018)

Jahn, J., Ha, T.: New order relations in set optimization. J. Optim. Theory Appl. 148, 209–236 (2011)

Khan, A., Tammer, C., Zălinescu, C.: Set-Valued Optimization. Springer, Berlin (2015)

Köbis, E., Köbis, M.: Treatment of set order relations by means of a nonlinear scalarization functional: a full characterization. Optimization 65(10), 1805–1827 (2016)

Krüger, C.: On minmax robustness for multiobjective optimization with decision or parameter uncertainty. Ph.D. thesis, Georg-August Universität Göttingen (2018)

Krüger, C.: Peat and pots: analysis of robust solutions for a biobjective problem in agriculture. Preprint-Reihe, Institut für Numerische und Angewandte Mathematik, Georg-August Universität Göttingen (2018)

Krüger, C., Castellani, F., Geldermann, J., Schöbel, A.: Peat and pots: an application of robust multiobjective optimization to a mixing problem in agriculture. Comput. Electron. Agric. 154, 265–275 (2018)

Kuroiwa, D.: The natural criteria in set-valued optimization. RIMS Kokyuroku 1031, 85–90 (1998)

Kuroiwa, D., Lee, G.M.: On robust multiobjective optimization. Vietnam J. Math. 40(2&3), 305–317 (2012)

Lewis, A., Pang, C.: Lipschitz behavior of the robust regularization. SIAM J. Control Optim. 48(5), 3080–3105 (2009)

Löhne, A., Rudloff, B., Ulus, F.: Primal and dual approximation algorithms for convex vector optimization problems. J. Glob. Optim. 60(4), 713–736 (2014)

Löhne, A., Schrage, C.: An algorithm to solve polyhedral convex set optimization problems. Optimization 62(1), 131–141 (2013)

Maranas, C.D., Floudas, C.A.: Global minimum potential energy conformations of small molecules. J. Glob. Optim. 4(2), 135–170 (1994)

Niebling, J., Eichfelder, G.: A branch-and-bound-based algorithm for nonconvex multiobjective optimization. SIAM J. Optim. 29(1), 794–821 (2019)

Rodríguez-Marín, L., Sama, M.: \((\Lambda, C)\)-contingent derivatives of set-valued maps. J. Math. Anal. Appl. 335(2), 974–989 (2007)

Rump, S.: INTLAB—INTerval LABoratory. In: Csendes, T. (ed.) Developments in Reliable Computing, pp. 77–104. Kluwer Academic Publishers, Dordrecht (1999)

Schulze Darup, M., Mönnigmann, M.: Improved automatic computation of Hessian matrix spectral bounds. SIAM J. Sci. Comput. 38(4), A2068–A2090 (2016)

Zhou-Kangas, Y., Miettinen, K., Sindhya, K.: Solving multiobjective optimization problems with decision uncertainty: an interactive approach. J. Bus. Econ. 89(1), 25–51 (2019). https://doi.org/10.1007/s11573-018-0900-1

Acknowledgements

The second author thanks the Carl-Zeiss-Stiftung and the DFG-founded Research Training Group 1567 “Lorentz Force Velocimetry and Lorentz Force Eddy Current Testing” for financial support. The work of the third author is funded by the Deutsche Forschungsgemeinschaft under Grant No. EI 821/4. Additionally, we thank the two anonymous referees for their helpful remarks and comments, which helped us to improve this article.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Eichfelder, G., Niebling, J. & Rocktäschel, S. An algorithmic approach to multiobjective optimization with decision uncertainty. J Glob Optim 77, 3–25 (2020). https://doi.org/10.1007/s10898-019-00815-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-019-00815-9