Abstract

Detection of hypovolemia prior to overt hemodynamic decompensation remains an elusive goal in the treatment of critically injured patients in both civilian and combat settings. Monitoring of heart rate variability has been advocated as a potential means to monitor the rapid changes in the physiological state of hemorrhaging patients, with the most popular methods involving calculation of the R–R interval signal’s power spectral density (PSD) or use of fractal dimensions (FD). However, the latter method poses technical challenges, while the former is best suited to stationary signals rather than the non-stationary R–R interval. Both approaches are also limited by high inter- and intra-individual variability, a serious issue when applying these indices to the clinical setting. We propose an approach which applies the discrete wavelet transform (DWT) to the R–R interval signal to extract information at both 500 and 125 Hz sampling rates. The utility of machine learning models based on these features was tested in assessing electrocardiogram signals from volunteers subjected to lower body negative pressure induced central hypovolemia as a surrogate of hemorrhage. These machine learning models based on DWT features were compared against those based on the traditional PSD and FD at both sampling rates, and their performance was evaluated using leave-one-subject-out cross-validation. Results demonstrate that the proposed DWT-based model outperforms the individual PSD and FD methods, as well as the combination of these two traditional methods, in detecting the degree of hypovolemia at sampling rates of both 500 Hz (p value <0.0001) and 125 Hz (p value <0.0001). These findings indicate the potential of the proposed DWT approach in monitoring the physiological changes caused by hemorrhage. The speed and relatively low computational cost of deriving these features may make the approach particularly suited for implementation in portable devices for remote monitoring.

1 Introduction

Hemorrhage resulting from major trauma remains the most prevalent cause of potentially preventable death in both civilian and combat-related trauma [1, 2]. In the latter setting, approximately 20 % of casualties die prior to reaching a treatment facility, and 50 % of these deaths are due to blood loss [3, 4]. Survival depends on a number of factors; among the most crucial are the types of treatment received and how quickly they are provided. It is therefore vital that trauma victims with undiagnosed hemorrhage be rapidly assessed and that accurate triage decisions be made. However, evaluating a hypovolemic patient’s condition can be challenging, particularly in a high-paced environment such as a combat setting. Standard vital signs such as arterial blood pressure, heart rate and arterial hemoglobin oxygen saturation can remain stable until the onset of cardiovascular decompensation [5]. Consequently, any advance warning of changes in blood volume status is important for early and successful intervention [5]. Ideally, such an advance warning system needs to provide predictions about the physiological status of individual patients. This requirement calls for the design of novel combinations of signal processing and machine learning methods to process physiological signals and to learn how to map the characteristics of these signals into early warnings for each patient.

One potentially useful source of such information is the heart rate variability (HRV) time series, i.e., the variation in the beat-to-beat intervals of the heart. Over the past few decades, multiple investigators have emphasized the connection between HRV and cardiovascular mortality [6, 7], and there has recently been intense interest in the use of HRV analysis as a means to detect the severity of both hemorrhage and general trauma in civilian casualties [8–12]. Models of sepsis and other critical illnesses have demonstrated that as a patient’s state worsens, HRV is either decreased or lost entirely [13]. High frequency components in the ECG signal contain information on parasympathetic nervous system activity, while low frequency components contain information on both parasympathetic and sympathetic activity [7, 14]. A patient’s condition can therefore potentially be evaluated by analyzing the changes in the balance between these two frequency domains. Several studies have explored this approach for hemorrhage [5, 15–17], some of which were conducted using lower body negative pressure (LBNP) as an experimental model of simulating hemorrhage in humans [18–21]. The previous studies have shown that while the aggregate group mean values of the conventional measures based on the HRV are well correlated with stroke volume changes during central hypovolemia, such metrics are not reliable measures for tracking individual reductions in central volume during LBNP [17]. Hence, the conventional HRV metrics, such as simple statistical means as well as power spectral density (PSD) [14, 22] and fractal dimension (FD) [23] based measures may not be useful for hemorrhage detection and quantification in individual patients. The potential disadvantages of PSD and FD resulting in a need for a new approach are briefly discussed below.

Extracting features based on the PSD [7] requires use of the Fourier transform (FT), which has two major disadvantages when applied to R–R interval signals. First, the FT assumes that the input signal is stationary, when in fact the R–R interval signal is clearly non-stationary, particularly as the physiological status of the patient deteriorates. As a result, the FT ignores the very changes, i.e. the non-stationary variations, that would allow deterioration in an individual’s physiological state to be detected. The second disadvantage is that while the FT is able to extract frequency information from a signal via its examination of the signal’s amplitude, the timing information of the events is lost. This information may be crucial in monitoring the changes in the condition of hypovolemic patients.

Fractal dimension (FD), on the other hand, is a non-linear dynamical technique, which defines a large family of techniques actively explored as methods of analyzing ECG and HRV in tracking changes in volume status [24]. This technique is based on the concept of fractal theory and has been applied in many areas, including medicine and biology [25]. Fractal properties have been found suitable for characterizing HRV in several disease states. For example, Acharya et al. [26] were able to identify patients with heart disease by the decreasing fractal complexity of the ECG. Another study indicates that measures of FD may be more effective in assessing HRV than the approach using PSD by showing that the FD of the high frequency band (0.2–0.5 Hz) of the heart rate time series is highly correlated with the sympathetic activities [27]. Furthermore, instead of presenting only the local information as in the PSD, FD analysis focuses on the self-similarity of events repeated at different scales, therefore providing a multi-resolution description of the signal that captures variations in HRV occurring over periods of relatively arbitrary length. However, this performance comes at a high computational cost and also requires a large number of samples to achieve sufficient reliability [28, 29]. This means that in order for the FD to provide reliable information, one needs a sufficiently long window of ECG (or HRV) measurements to capture the targeted variations. In addition, some important patterns associated with variations in HRV may not be captured by the FD features, as FD does not allow direct capture of particular “expected” patterns or variations associated with certain states (e.g. severe hemorrhage). This is because the FD is always calculated according to a fixed methodology that disregards the nature of the signal being processed. Additionally, new evidence suggests that FD metrics, similar to metrics from PSD analyses, display high inter- and intra-individual variability during progressive reductions in central blood volume, which may limit their usefulness for application to individual patients [17].

The use of the Discrete Wavelet Transform (DWT) as reported in this paper is an attempt to overcome the above potential limitations of the traditional methods (PSD and FD). DWT has proven promising in applications suited to time-frequency analysis [30–32] and therefore rivals PSD in frequency analysis. It is also capable of conducting advanced multi-resolution analysis of signals, and as such may extract information often extracted by FD. More importantly, unlike FD, DWT does not require very long windows of signal acquisition, or high sampling rates, in order to provide reliable results. Moreover, in contrast to the FD, the DWT can be customized to the particular problem or signal being analyzed, as discussed later.

DWT was developed as an alternative to the Short Time Fourier Transform (STFT) as a method that could capture not only the frequency content of a signal, but also the times at which particular frequencies occurred. This ability to preserve both time and frequency resolution has led to widespread use of DWT in many practical applications in biology and medicine [33]. It is particularly well suited to local analysis of fast time-varying and non-stationary signals such as the R–R interval signal. Stiles et al. [34] advocate that applying the wavelet transform to ECG signals can assist in detecting clinically significant features that may be missed by traditional HRV analysis methods. The wavelet transform has been applied to HRV analysis in the past for tasks such as the study of ischemic heart disease, assessment of the nervous system’s response to epidural analgesia, and diagnosis of sleep apnea syndrome [35–37]. However, it has not previously been applied to the prediction and assessment of hemorrhage, a particularly time-sensitive application requiring a fine level of sensitivity to fluctuations in patient state. DWT seems to be not only more accurate in extracting and representing the physiological changes due to volumetric variations, but also able to approximately represent the information extracted by both PSD and FD. Consequently, in this study we hypothesized that DWT features extracted from HRV can be used to accurately detect the severity of hypovolemia. We tested DWT against a combination of PSD and FD methods in order to investigate the potential of the proposed approach over these traditional methods in determining the severity of central hypovolemia. Specifically, the performance of the two traditional approaches (PSD and FD), both individually and combined, was compared with that of the proposed DWT-based method using leave-one-subject-out cross-validation. Additionally, this analysis was performed at both 500 and 125 Hz sampling rates to determine whether a lower sampling rate affects the accuracy of prediction.

2 Methodology

2.1 Study design, dataset and experimental procedure

This was a retrospective study using a database of ECG signals collected from subjects undergoing LBNP, housed at the U.S. Army Institute of Surgical Research (USAISR) in San Antonio, TX. Subsequent analysis of the data took place at both the USAISR and Virginia Commonwealth University (VCU) in Richmond, VA. The protocol described in this report was approved by the Institutional Review Boards of both institutions. The database consisted of eighty-seven subjects, all of whom were healthy volunteers recruited by the USAISR and none of whom had undergone any special conditioning prior to the study. Due to the potential effects on cardiovascular function, subjects were asked to refrain from alcohol, exercise, and stimulants such as caffeine and other non-prescription drugs for the 24 h prior to testing.

Central hypovolemia was simulated in the subjects by application of increasing negative pressure to the lower body, performed using a LBNP chamber as shown in Fig. 1. Each subject was positioned such that the lower half of their body was inside the chamber. The LBNP protocol began with an initial 5-min rest period, used to obtain baseline recordings. The subject was then exposed to four successive levels of decompression (−15, −30, −45 and −60 mmHg), each maintained for 5 min. This was followed by further decreases of −10 mmHg applied every 5 min until the subject either completed 5 min at −100 mmHg or reached a state of cardiovascular collapse. The latter stage was defined as the subject experiencing any one of the following: (a) a drop of >15 mmHg in systolic blood pressure (SBP); (b) a decrease of >15 bpm in heart rate; (c) SBP measured as <80 mmHg; or (d) pre-syncopal symptoms (such as sweating, nausea or dizziness). No subject completed the −100 mmHg LBNP level.
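The decompression schedule described above can be written out explicitly. The following is a minimal sketch that simply enumerates the stages; numbering the baseline as stage 0 is an assumption made here for illustration, and the function name is hypothetical.

```python
STAGE_DURATION_MIN = 5  # every stage, including baseline, lasts 5 min


def lbnp_schedule():
    """Return the chamber pressure (mmHg) for each protocol stage, baseline first."""
    baseline = [0]
    initial_steps = [-15, -30, -45, -60]
    # After -60 mmHg the pressure falls a further 10 mmHg per stage, down to -100 mmHg.
    further_steps = list(range(-70, -101, -10))
    return baseline + initial_steps + further_steps


schedule = lbnp_schedule()
for stage, pressure in enumerate(schedule):
    start = stage * STAGE_DURATION_MIN
    print(f"stage {stage}: {pressure:>5} mmHg, minutes {start}-{start + STAGE_DURATION_MIN}")
```

In practice a subject's recording ends at the stage where cardiovascular collapse occurs, so only a prefix of this schedule is observed for each subject.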

Subjects were monitored using a standard four-lead ECG, which was recorded to a computer equipped with commercial hardware and software (WINDAQ, Dataq Instruments, Akron, OH). The ECG signals were originally recorded and analyzed at a sampling rate of 500 Hz. The signal was subsequently downsampled to 125 Hz using Matlab (2010a, MathWorks) for additional analysis. A summary of the dataset is presented in Table 1, which shows the stage at which subjects “collapsed” as defined above.

In order to compare the predictive performance of the model based on the DWT features with that of the PSD and FD features in differentiating and identifying hypovolemia severity, LBNP stages were mapped to the severity of hypovolemia in individual subjects. For this task, we used two different approaches: while it is sometimes important only to separate severe from non-severe cases of volume loss, in other cases it is important to know more about the extent of volume loss, i.e. whether it is mild, moderate, or severe. As a result, the LBNP stages were first grouped into three classes: mild, moderate and severe, as defined in Table 2. Since subjects do not uniformly collapse at the same LBNP stage, we considered the stage of collapse and the stage before it as severe (if at least 4 stages were used), the first 2 stages as mild (if 5 stages were used) and all other stages in between as moderate.

We also used the approach of mapping LBNP stages into two classes (i.e. severe vs. non-severe) for the two-class-classification scenario. As shown in Table 2, the baseline and the first stage were always considered non-severe, and the collapse stage and the stage before it were always considered severe. For subjects collapsing at LBNP stages 7 or higher, the middle LBNP stages were mapped to the closest severity group. The severe/non-severe states can also be used in medical monitors to assist clinical personnel as a decision support indicator.

These two classification scenarios not only more closely match the clinical labels and reality of blood volume loss but also allow for a more individualized approach in detection which is commensurate with symptoms of hypovolemia.
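The two labelling schemes can be illustrated with a short sketch. It is only one possible reading of the prose rules above, not a reproduction of Table 2: it assumes that the baseline counts as the first stage, that the collapse stage is the last recorded stage, and that a middle stage equally close to both groups is labelled non-severe. The function names are hypothetical.

```python
def three_class_labels(n_stages):
    """Label stages 0..n_stages-1 (baseline first, collapse stage last)."""
    if n_stages < 5:
        # Shorter recordings are governed by Table 2, which this sketch does not cover.
        raise ValueError("sketch only covers recordings with at least 5 stages")
    labels = ["moderate"] * n_stages
    labels[0] = labels[1] = "mild"        # first two stages
    labels[-1] = labels[-2] = "severe"    # collapse stage and the one before it
    return labels


def two_class_labels(n_stages):
    """Map each stage to 'severe' or 'non-severe' by proximity to the fixed groups."""
    if n_stages < 4:
        raise ValueError("sketch only covers recordings with at least 4 stages")
    last = n_stages - 1
    labels = []
    for stage in range(n_stages):
        dist_non_severe = max(0, stage - 1)          # distance to stages {0, 1}
        dist_severe = max(0, (last - 1) - stage)     # distance to the last two stages
        # Assumption: ties are resolved in favour of "non-severe".
        labels.append("severe" if dist_severe < dist_non_severe else "non-severe")
    return labels


print(three_class_labels(6))
print(two_class_labels(7))
```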

2.2 Computational methods

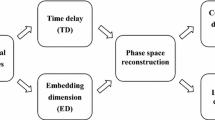

Figure 2 presents an overview of the ECG analysis approach used in this study.

2.2.1 ECG segmentation

The raw ECG signal was segmented into sub-signals, each covering one stage as defined in Table 1. Although the signal for each completed stage was 5 min in length, this study considered only the steady-state portion when extracting HRV features, i.e., the last 4 min. In earlier work, we chose to use only the last 3 min of the 5 min period within each LBNP stage (ref). In this study, however, on the assumption that almost all subjects achieve steady state within the first minute of each LBNP stage, we decided to use the last 4 min of each stage.

2.2.2 Pre-processing and QRS detection

Prior to any analysis, the ECG signal was filtered to remove motion artifacts, baseline drift, and interference from the 60 Hz power-line interface. This was performed using a band-pass filter with cut-off frequencies of 1 and 55 Hz. The next step was to detect the QRS complex in order to identify the R waves. For this, non-overlapping sliding windows (20 s in length) were used to detect R waves. A detailed description of the QRS detection process is provided in “Appendix 1”. Once each R-wave was identified, the RR signal was formed as follows:
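Under the standard definition, each value of the RR series is the time between consecutive R waves, \( RR_{i} = t_{i + 1} - t_{i} \), where \( t_{i} \) is the time of the i-th detected R wave (the symbol \( t_{i} \) is introduced here for illustration). The following is a minimal sketch of that computation, assuming R waves are available as sample indices; the function name and example values are hypothetical.

```python
def rr_intervals(r_peak_samples, fs_hz):
    """Return successive R-R intervals in seconds from R-peak sample indices."""
    if fs_hz <= 0:
        raise ValueError("sampling rate must be positive")
    peaks = sorted(r_peak_samples)
    # Each interval is the gap between one R peak and the next, converted to seconds.
    return [(later - earlier) / fs_hz for earlier, later in zip(peaks, peaks[1:])]


# Hypothetical R-peak positions (in samples) from a 500 Hz recording.
peaks_500hz = [100, 520, 930, 1350]
print(rr_intervals(peaks_500hz, fs_hz=500))
```

Because R-peak positions are quantized to whole samples, the timing resolution of each interval is one sample period: 2 ms at 500 Hz and 8 ms at 125 Hz.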

2.2.3 Feature extraction

After the RR signal (the \( RR_{i} \) series) was generated, feature extraction was performed via DWT, PSD, and FD methods. This was done using transformation analysis as described below.

The RR signal was treated as input to the DWT, PSD and FD transformations. DWT decomposes a signal into different scales by calculating its correlation with a set of scaled and shifted versions of the chosen wavelet basis function, a process implemented by passing the signal through multiple levels of high-pass and low-pass filters [30, 31]. This study used the db4 basis function, belonging to the Daubechies family, with four levels of decomposition. This choice was based on preliminary tests. Several mother wavelets were tested to see which performed best for this study, including Haar, DB4, DB16 and DB32 from the Daubechies family of wavelets. For each of these, varying numbers of decomposition levels were also tried, such as 4, 8 and 16 levels. Increasing the number of decomposition levels did not provide any further information and only added to the computational complexity [38, 39]. Hence the mother wavelet DB4 with 4 levels of decomposition was chosen, since it provided the most relevant information for feature extraction. For fractal feature extraction, the FD was estimated using Higuchi’s method. This requires less computational time and memory than other approaches and provides reasonably reliable measures even with relatively few samples. However, it makes the essential assumption that the analyzed signal is stationary [40]. A detailed explanation of how both FD and PSD were used in RR analysis is provided in “Appendix 2”.
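The filter-bank view of the decomposition described above can be sketched in a few lines. This is an illustrative sketch rather than the implementation used in the study: it assumes that “DB4” denotes the 8-tap Daubechies filter with four vanishing moments (the convention used by Matlab and PyWavelets), uses periodic signal extension for simplicity, and therefore requires the input length to be divisible by 16 for four levels. The function names are hypothetical.

```python
import math

# db4 scaling (low-pass) filter, 8 taps, as commonly tabulated.
DB4_LO = [
    0.23037781330885523, 0.7148465705525415, 0.6308807679295904,
    -0.02798376941698385, -0.18703481171888114, 0.030841381835986965,
    0.032883011666982945, -0.010597401784997278,
]
# Quadrature-mirror high-pass filter derived from the low-pass taps.
DB4_HI = [(-1) ** n * DB4_LO[len(DB4_LO) - 1 - n] for n in range(len(DB4_LO))]


def dwt_step(signal):
    """One decomposition level: filter, then keep every second output sample."""
    n = len(signal)
    if n == 0 or n % 2:
        raise ValueError("signal length must be even and non-zero")
    approx, detail = [], []
    for k in range(n // 2):
        # Periodic extension: indices wrap around the end of the signal.
        window = [signal[(2 * k + j) % n] for j in range(len(DB4_LO))]
        approx.append(sum(h * x for h, x in zip(DB4_LO, window)))
        detail.append(sum(g * x for g, x in zip(DB4_HI, window)))
    return approx, detail


def wavedec(signal, levels=4):
    """Return {'D1': ..., 'D4': ..., 'A': ...} for a multi-level decomposition."""
    bands, approx = {}, list(signal)
    for level in range(1, levels + 1):
        approx, detail = dwt_step(approx)
        bands[f"D{level}"] = detail
    bands["A"] = approx
    return bands


# Hypothetical RR-like series (seconds) of length 64.
rr = [0.8 + 0.05 * math.sin(0.3 * i) for i in range(64)]
bands = wavedec(rr, levels=4)
print({name: len(coeffs) for name, coeffs in bands.items()})
```

Each level halves the number of coefficients, so the four detail bands and the final approximation together contain as many values as the input. A production pipeline would more likely rely on an established wavelet library, which also handles arbitrary signal lengths through padding.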

The final step of the feature extraction process was calculation of statistical and algebraic measures, as follows:

a. Standard deviation of the approximation and detail coefficients at each DWT decomposition level, i.e. \( \sigma_{A} ,\sigma_{D1} ,\sigma_{D2} ,\sigma_{D3} ,\sigma_{D4} \), where \( A \) represents the approximation level and \( D_{i} \) the detail at level \( i \).

b. Mean of the approximation and detail coefficients at each DWT decomposition level, i.e. \( \mu_{A} ,\mu_{D1} ,\mu_{D2} ,\mu_{D3} ,\mu_{D4} \).

c. Median of the approximation and detail coefficients at each level, i.e. \( {\text{med}}_{A} ,{\text{med}}_{D1} ,{\text{med}}_{D2} ,{\text{med}}_{D3} ,{\text{med}}_{D4} \).

d. Using the twenty highest approximation and detail coefficients for each level sorted by size, the median coefficient and the coefficients immediately preceding and following it, i.e. \( A_{M} ,A_{M - 1} ,A_{M + 1} ,D1_{M} ,D1_{M - 1} ,D1_{M + 1} \), etc.

e. Energy of the approximation and detail coefficients at each level, i.e. \( \varepsilon_{A} ,\varepsilon_{D1} ,\varepsilon_{D2} ,\varepsilon_{D3} ,\varepsilon_{D4} \).

f. PSD-based features: HF, LF, very low frequency (VLF), normalized LF (\( {\text{LF}}_{\text{N}} \)), normalized HF (\( {\text{HF}}_{\text{N}} \)), LF-HF ratio and HF-LF ratio, as described in “Appendix 2”.

g. The Higuchi FD, calculated using the two parameters as specified in “Appendix 2”.

In summary, for feature extraction after DWT decomposition, four features are extracted from the detail coefficients at each of the four levels of decomposition, along with four features from the final-level approximation coefficients.
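The statistical measures listed in items a to e can be computed per sub-band as in the sketch below. Several details are assumptions, since they are not specified in the text: the standard deviation is taken as the population form, energy as the sum of squared coefficients, “highest” as largest signed value, and the median of the twenty retained coefficients as the element at index 10 of the sorted list. The function names are hypothetical.

```python
import statistics


def band_features(coeffs):
    """Summary statistics for the coefficients of one sub-band (A or D1..D4)."""
    return {
        "std": statistics.pstdev(coeffs),          # assumption: population form
        "mean": statistics.mean(coeffs),
        "median": statistics.median(coeffs),
        "energy": sum(c * c for c in coeffs),      # assumption: sum of squares
    }


def median_neighbours(coeffs, top_n=20):
    """Median of the top_n largest coefficients, plus its two neighbours."""
    top = sorted(sorted(coeffs, reverse=True)[:top_n])
    if len(top) < 3:
        raise ValueError("need at least three coefficients")
    mid = len(top) // 2                            # assumption: upper middle element
    return {"M-1": top[mid - 1], "M": top[mid], "M+1": top[mid + 1]}


# Hypothetical level-1 detail coefficients.
d1 = [0.02, -0.01, 0.03, 0.00, -0.02, 0.01, 0.04, -0.03]
print(band_features(d1))
print(median_neighbours(d1))
```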

2.2.4 Machine learning

The features extracted in the previous step (either the DWT features or the PSD and/or FD features) were fed into a machine learning method to estimate the severity of blood loss in both two-class (severe and non-severe) and three-class (mild, moderate, and severe) classification tasks. While a variety of machine learning methods such as neural networks [41] and radial basis functions [42] have been tested for this purpose, the support vector machine (SVM) [43], which is commonly considered one of the most powerful machine learning methods for medical applications [44], was used here. Linear classifiers typically resulted in accuracies 3–5 % below those of SVM in almost all cases.

The same process and classifier, i.e. leave-one-subject-out and SVM, were used for training and testing of machine learning models using PSD and FD features. LibSVM was the SVM implementation used for this study. In each case of tenfold cross validation, a grid search over the parameters sigma and C was performed, and the best-performing parameters were then used for the study. The features used for PSD/FD were 7 PSD-based features and 2 FD features, i.e. HF, LF, VLF, normalized LF (\( {\text{LF}}_{\text{N}} \)), normalized HF (\( {\text{HF}}_{\text{N}} \)), LF-HF ratio and HF-LF ratio, as well as two Higuchi FDs calculated based on window sizes of 8 and 15 samples (see “Appendices 1 and 2” for details). The SVM models were trained with the PSD features only, the FD features only, and the PSD/FD features combined (to include the capabilities of both PSD and FD features). As such, the tests allowed a direct comparison between our DWT model and the combined capabilities of PSD and FD.
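For readers unfamiliar with Higuchi’s method, the sketch below shows the standard form of the estimator: curve lengths are computed at increasing sample strides, and the FD is the slope of log length against log inverse stride. The parameter `k_max` is introduced here for illustration; whether the 8- and 15-sample window sizes mentioned above correspond to it is an assumption. The function name is hypothetical.

```python
import math


def higuchi_fd(x, k_max):
    """Estimate the Higuchi fractal dimension of a non-constant series x."""
    n = len(x)
    if k_max < 2 or n <= 2 * k_max:
        raise ValueError("need k_max >= 2 and a series longer than 2 * k_max")
    log_inv_k, log_len = [], []
    for k in range(1, k_max + 1):
        lengths = []
        for m in range(k):                         # one sub-series per start offset
            steps = (n - 1 - m) // k
            dist = sum(abs(x[m + i * k] - x[m + (i - 1) * k])
                       for i in range(1, steps + 1))
            # Normalise for the number of steps, then scale by the stride.
            lengths.append(dist * (n - 1) / (steps * k) / k)
        log_inv_k.append(math.log(1.0 / k))
        log_len.append(math.log(sum(lengths) / k))  # fails for a constant series
    # Least-squares slope of log(length) against log(1/k).
    mean_x = sum(log_inv_k) / k_max
    mean_y = sum(log_len) / k_max
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_inv_k, log_len))
    den = sum((a - mean_x) ** 2 for a in log_inv_k)
    return num / den


# Hypothetical RR-like series; two estimates mirror the two FD features.
rr = [0.8 + 0.05 * math.sin(0.3 * i) + 0.01 * ((i * 7) % 5) for i in range(240)]
print(higuchi_fd(rr, k_max=8), higuchi_fd(rr, k_max=15))
```

A smooth curve such as a straight line yields a value of 1, and increasingly irregular series yield values approaching 2.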

3 Results

A total of 32 DWT features, as defined above, were extracted. Since the dataset contains only 87 subjects, reducing the size of the feature set can help remove redundancy and increase overall accuracy. For this step, three options were considered:

1. Manually select only those DWT features that are conceptually known to be independent or uncorrelated. However, this is not a very practical approach: when features from certain levels are excluded, it is difficult to verify that the selected features are truly uncorrelated.

2. Calculate all possible features and use a dimension reduction method such as principal component analysis (PCA) to eliminate redundancy and thereby reduce the number of features. Although this is a popular choice for many signal processing applications, the resulting features typically have no physiological meaning, as each is a linear combination of features extracted from DWT coefficients. Physicians and physiologists might prefer a more intuitive interpretation of the features used in decision making.

3. Calculate all possible features and use a constructive (or destructive) method to create a subset of all features whose members are least correlated. Constructive and destructive logistic regression are examples of methods in this category. The advantage of these methods is that, unlike PCA, in which the resulting features are combinations of the original features, all surviving features in the final feature set belong to the original feature set. This means that all physical, physiological and signal processing concepts still apply to the surviving features.

The third option is the most relevant to the objective of this study and was therefore used to reduce the feature space for the DWT feature set. Using ANOVA, we tested the statistical significance of these 32 DWT-based features across the three classes (mild, moderate, and severe) as well as the two classes (severe and non-severe). We found that all but five of these 32 DWT features are statistically significant (p value <0.05) for the tests described above. The PSD method, on the other hand, produced only seven features that were statistically significant. Similarly, assuming two different window sizes for calculating FD, the FD method generates only 2 features. Since the DWT method has many features, multiple combinations of the statistically significant DWT features were tested against the traditional methods. As mentioned earlier, the extracted features for each of the methods were also tested to investigate the impact of lowering the signal sampling rate (down to 125 Hz) on the quality and usefulness of the approach in assessing volume loss.
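The ANOVA screening of a single feature can be illustrated as follows. This is a minimal sketch of the one-way F statistic only, assuming the feature values are grouped by severity class; it does not compute the p value, which requires the F distribution (available, for example, through `scipy.stats.f_oneway`). The function name and example values are hypothetical.

```python
def anova_f(groups):
    """One-way ANOVA F statistic for one feature, given its values per class."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups and n_total > k")
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of class means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual values around their own class mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Hypothetical values of one feature in the mild, moderate and severe classes.
mild, moderate, severe = [1, 2, 3], [2, 3, 4], [5, 6, 7]
print(anova_f([mild, moderate, severe]))
```

A large F value indicates that the class means differ by more than the within-class scatter would explain, which is the sense in which a feature is considered discriminative here.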

Before applying machine learning to the extracted features on the two feature groups (DWT vs. PSD and/or FD), we investigated group and individual variations of the resulting features.

3.1 Group and individual variations

Figures 3a–j present the group responses from baseline until stage four, since the majority of subjects reached stage 4 (79 out of 87). The DWT, PSD, and FD features were extracted from the ECG sampled at 500 Hz as well as 125 Hz; all DWT features, PSD HF and both FD features at 125 Hz demonstrated a visually similar pattern to those obtained at 500 Hz. Some of the feature patterns for 500 Hz are presented here (e.g., four features: the median DWT detail coefficient at levels 1 and 4, as well as the HF and LF features of PSD).

Fig. 3 Group and individual variations from baseline to LBNP stage 4 for 500 Hz. Each group plot is presented as Mean ± SE. a DWT Level 1 median: Group, b DWT Level 1 median: Individual, c DWT Level 4 median: Group, d DWT Level 4 median: Individual, e PSD HF: Group, f PSD HF: Individual, g PSD LF: Group, h PSD LF: Individual, i FD: Group, j FD: Individual, k Plot of Heart Rate Variability for 5 subjects shown from stages 0 to 4

Figures 3a–j show the values of the DWT, HF and FD features in each stage, with the standard errors as error bars. The figures demonstrate the differentiation among the LBNP stages at the group mean level (Fig. 3a, c, e, g, i). Visual inspection of the individual trajectories indicates a more consistent pattern for the DWT features than for the PSD and FD features (Fig. 3b, d, f, h, j). It can also be seen that the performance of the FD measure is comparable with that of the DWT features.

Figure 3k shows the computed HRV during each stage of the LBNP (only stages 0–4 shown) for 5 subjects, as an example to visualize the information provided purely by HRV. From Fig. 3k it is evident that raw HRV alone is not very useful, since there is considerable overlap between the different stages/classes. There is very little visual difference in the first few stages of the LBNP. Only in the severe stages do more evident changes in the HRV become visible.

3.2 Classification results

The final test explored the ability of our DWT based method for detecting the severity of hypovolemia, and compared its results with those of PSD, FD and PSD/FD based models. This was done using leave-one-subject-out cross validation, in which during each test the data for all but one subject were used for training of an SVM model and the data for the left-out subject were used for testing. These tests were then repeated while rotating the left-out subject.
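The subject-wise rotation described above can be sketched as follows. The splitting logic is the point of the example; the classifier is a nearest-centroid stand-in chosen so that the sketch has no dependencies, and it is not the SVM used in the study. All function names and example data are hypothetical.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def nearest_centroid(train_x, train_y, test_x):
    """Stand-in classifier (NOT the SVM used in the study)."""
    centroids = {}
    for label in set(train_y):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def closest(x):
        return min(centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return [closest(x) for x in test_x]


def loso_accuracy(features, labels, subject_ids, fit_predict=nearest_centroid):
    """Per-subject accuracy; each subject's stages are never seen in training."""
    per_subject = {}
    for held_out, train, test in leave_one_subject_out(subject_ids):
        predicted = fit_predict([features[i] for i in train],
                                [labels[i] for i in train],
                                [features[i] for i in test])
        hits = sum(p == labels[i] for p, i in zip(predicted, test))
        per_subject[held_out] = hits / len(test)
    return per_subject


# Hypothetical data: one feature per stage, two stages per subject.
features = [[0.0], [1.0], [0.1], [0.9], [0.2], [1.1]]
labels = ["non-severe", "severe"] * 3
subjects = ["s1", "s1", "s2", "s2", "s3", "s3"]
print(loso_accuracy(features, labels, subjects))
```

Splitting by subject rather than by sample matters because stages from the same person are correlated; mixing them across training and test sets would overstate accuracy.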

For classification with PSD, FD, and PSD/FD, the same PSD and FD features were used. For our DWT-based model, the following DWT feature subset was used:

- the standard deviation of the level 4 detail and approximation coefficients,

- the mean of the level 4 detail and approximation coefficients,

- the median of the level 3 detail and approximation coefficients,

- the coefficients immediately preceding the medians of the level 2 detail and approximation coefficients,

- the energy of the level 2 detail coefficients.

Table 3 presents the results of the two-class (severe, non-severe) and three-class (mild, moderate, severe) classification scenarios with leave-one-subject-out cross-validation at 500 and 125 Hz sampling rates. It compares the classification accuracy of the proposed DWT method in both scenarios with that of classification using PSD only, FD only, and combined PSD/FD features (using the same machine learning method, i.e. SVM). As can be seen, the DWT features show higher accuracy than the other feature sets.

Therefore, a non-parametric statistical test, i.e. the sign rank test, was performed using SAS software (www.sas.com) to explore the statistical significance of these differences.

Specifically, the statistical test was performed on the difference between the average leave-one-subject-out classification accuracy obtained with the DWT features and that obtained with other features, e.g. the difference between the average classification accuracy obtained with the DWT features and that of the combined PSD/FD features. These tests were conducted to determine whether the resulting differences in the average accuracies are statistically significant. Table 4 presents the results of these statistical tests.
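As an illustration of this kind of paired comparison, the sketch below implements a Wilcoxon signed-rank test, assuming that this is what “sign rank test” refers to and that the paired observations are per-subject accuracies of two methods. It uses the large-sample normal approximation without tie or continuity corrections, so it is not a reproduction of the SAS procedure. The function name and example values are hypothetical.

```python
import math


def signed_rank_test(a, b):
    """Return (W+, two-sided p) for paired samples a and b (normal approximation)."""
    diffs = [x - y for x, y in zip(a, b) if x != y]   # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1                # tied values share a rank
        for t in range(i, j + 1):
            ranks[order[t]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return w_plus, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical per-subject accuracies (%) for two feature sets.
dwt_acc = [90, 85, 88, 92, 87, 91, 89, 93, 86, 94]
psd_fd_acc = [80, 84, 83, 85, 86, 82, 81, 88, 79, 90]
print(signed_rank_test(dwt_acc, psd_fd_acc))
```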

Table 4 suggests that the accuracy differences observed in Table 3 between the proposed DWT features and other methods are statistically significant, even when the proposed method was compared with classification using combined PSD/FD features (at both 500 and 125 Hz).

In order to further test the reliability of the proposed method against the other methodologies, the area under the curve (AUC) of receiver operating characteristic (ROC) in detecting the severity of volume status (i.e. severe/non-severe) was calculated for classification with the proposed DWT features, as well as classification with combined PSD/FD features, PSD only features, and FD only features. The values of AUC for the DWT method as well as the traditional methods are provided in Table 5.
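The AUC has a simple rank interpretation that can be computed directly: it is the probability that a randomly chosen severe sample receives a higher classifier score than a randomly chosen non-severe one. The following is a minimal sketch of that definition, assuming labels coded as 1 (severe) and 0 (non-severe); the function name and example scores are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one sample of each class")
    # A correctly ordered pair counts 1, a tie counts 0.5, a reversed pair 0.
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores for four samples.
print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two classes.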

While Table 5 shows that the AUC was higher at both sampling rates for the proposed DWT-based method, as in the case of classification accuracy, the main question was whether the observed difference between the AUC of the DWT method and that of the traditional methods was statistically significant. To investigate this, we performed sign rank tests among the AUCs obtained by the proposed DWT method and the traditional methods (i.e. PSD/FD, PSD only, and FD only). The results are shown in Table 6. As can be seen in Table 6, the differences between the AUC of the DWT method and those of the other methods are statistically significant at both 500 and 125 Hz sampling rates.

4 Discussion

Predictive models generated by applying SVM to a subset of features extracted via DWT, as shown in Tables 3, 4 and 5, performed better than the traditional PSD- and FD-based methods of detecting the degree of hypovolemia using HRV. This may be due to DWT’s intrinsic capacity for handling non-stationary signals and registering not only the frequency of events but also their location in time. The features extracted from DWT analysis seem to have a much higher association with early physiological shock as created in this LBNP model, compared to FD and PSD alone. This may be associated with the fact that the concepts of “level” and “shift” in DWT are much richer than “frequency” in the PSD. Moreover, due to DWT’s ability to combine the domains of time and frequency, it can analyze the HRV signal, and in turn the physiological changes, at multiple resolutions. DWT is therefore well suited to analysis of RR series, particularly when studying hypovolemic patients whose conditions may be physiologically compensated for in the early stages, but can quickly deteriorate. In addition, unlike FT-based methods such as PSD, which are limited to sinusoidal basis functions, DWT allows the use of an unlimited number of basis functions to decompose signals. This may be useful in applications such as RR signal analysis where the RR signal displays different characteristics, since a suitable basis function can be chosen to match the signal shape. By preserving the locality of RR changes, our approach allows the extraction of additional information from the beat-to-beat intervals in the heart rate signal which may be missed by traditional methods. DWT’s ability to capture these fine and rapid variations may prove particularly useful in earlier detection of hemorrhage, and thus lead to more successful treatment.

To address the possibility of overfitting, we performed a cross-validation experiment on 87 examples (subjects) using only 9 features; this low ratio of features to examples substantially reduces the risk of overfitting. Note in particular that the cross-validation is conducted over subjects, i.e., models are tested on subjects whose data were not included in training at all. If overfitting had occurred, the testing accuracy would be low for at least some of the subjects excluded from the training set. Furthermore, the choice of cross-validation method is a complex and scenario-specific issue. For the dataset used in this study, ‘leave-one-subject-out’ cross-validation seemed the most practical way to explore whether overfitting exists and, as shown above, the model presented in this paper did not exhibit any sign of overfitting.
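A minimal sketch of subject-wise splitting is given below. To stay self-contained it swaps in a nearest-centroid classifier for the SVM used in the study, and the features, labels, and subject identifiers are invented; all function names are hypothetical.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def fit_centroids(features, labels):
    """Nearest-centroid stand-in for the SVM: one mean vector per class."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Return the label whose centroid is closest to x (squared Euclidean)."""
    return min(
        centroids,
        key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])),
    )


def loso_accuracy(features, labels, subject_ids):
    """Per-subject test accuracy under leave-one-subject-out validation."""
    scores = {}
    for subject, train, test in leave_one_subject_out(subject_ids):
        model = fit_centroids(
            [features[i] for i in train], [labels[i] for i in train]
        )
        hits = sum(predict(model, features[i]) == labels[i] for i in test)
        scores[subject] = hits / len(test)
    return scores


# Synthetic example: three subjects, two segments each, two made-up features.
features = [[0.1, 1.0], [0.9, 0.2], [0.2, 1.1], [1.0, 0.1], [0.0, 0.9], [0.8, 0.3]]
labels = ["mild", "severe", "mild", "severe", "mild", "severe"]
subjects = ["s1", "s1", "s2", "s2", "s3", "s3"]
print(loso_accuracy(features, labels, subjects))
```

Reporting the score per held-out subject, rather than only the mean, makes it easy to spot the pattern described above, in which an overfitted model performs poorly on some unseen subjects.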

Based on the results of this study, the following observations can be made. First, DWT is able to extract underlying information from RR signals which can be used to distinguish more accurately the presence and severity of a progressive reduction in central blood volume, similar to that occurring in bleeding patients but simulated here by LBNP. Both DWT decomposition and PSD capture the high frequency (HF) and low frequency (LF) components of RR intervals, which respond to parasympathetic and sympathetic activity, respectively [45]. However, when the classification performance of the models based on the DWT features was compared with that of the models based on the PSD and FD features, the DWT-based models showed higher accuracies. Specifically, the leave-one-subject-out cross-validation results for DWT, PSD, and FD demonstrated that the DWT approach gives better classification accuracy in both the two-class and three-class scenarios. Second, although the traditional PSD and FD methods showed utility in this application, the DWT approach demonstrated better performance (in most cases p values <0.0001). Although degraded, performance was also better using DWT than PSD and FD when the lower sampling rate (i.e., 125 Hz) was applied to the ECG signal. This has important implications for its reduction to clinical practice.

There are several limitations to this study. These include the fact that LBNP is limited in its ability to model severe hemorrhagic and traumatic shock. Tissue injury is not a part of the model, and for safety reasons subjects cannot be taken to a more severe level of central hypovolemia. That being said, the fact that HRV-related changes in the ECG can be detected and evaluated indicates that the approach may serve as more of an early-warning detection method. Additionally, performance cannot be fully evaluated due to the lack of balanced data. It is possible that the amount of data for some stages (e.g., collapse at stages 2, 3, 7, and 8) was not enough to generate a reliable predictive model compared with other stages (e.g., 5 and 6) for which there was an ample data set. This problem, however, equally affected both models.

The ability to detect physiological changes via analysis of a single low-level signal such as ECG is appealing, provided the information contained within the signal can be easily extracted, processed and tied to the condition of interest. However, it is not known whether any single signal offers sufficient accuracy for diagnosis and monitoring of individual patients. Based on our preliminary studies [38, 46], it is likely that analysis of multiple signals will provide more powerful predictive capabilities. We are therefore currently examining the use of DWT methods in extracting useful information from other easily available physiological signals (e.g., blood pressure, skin temperature, etc.), and subsequently applying machine learning techniques to create condition detection algorithms for integration into computer-assisted decision making tools [47].

The ultimate goal of computer-aided diagnosis based on analysis of physiological signals is that the process be suitably sensitive to the changes in individuals and specific to the patient currently under consideration. As shown in Fig. 3, DWT responses seem to be fairly uniform across individual subjects. In contrast, the PSD and FD responses appear more inconsistent. While not quantified herein, these results are in agreement with a recent finding that PSD and FD values may not be highly correlated with changes in stroke volume during LBNP [17]. Since DWT showed promising results in distinguishing volume status levels (as shown in Tables 3, 4 and 5), it may have greater potential for incorporation into portable monitors for rapid detection of hemodynamic changes associated with hypovolemia. With the eventual objective of a system designed for prehospital and battlefield use, some of the features from the FD and PSD measures might also be incorporated into the final feature set if they prove relevant, informative, and computationally feasible. However, within the scope of this paper, features extracted through DWT analysis have been given more emphasis.

Nonetheless, the innate suitability of each individual signal in detection of a given condition must be thoroughly investigated, along with its ability to be practically implemented in a realistic environment in terms of sampling and processing requirements. In the field of computer-aided diagnosis, rapid feedback is often crucial, particularly in cases of trauma and hemorrhage. Consequently, careful consideration of which signals to use and the application of fast and accurate methods such as DWT for analysis is necessary for a decision support system to be of true practical use. Our approach to HRV analysis is designed to be simple, accurate, and easy to implement, making it particularly suited for use in portable monitoring devices in combat settings, remote civilian triage, and any other situation where a full medical facility is not readily available.

There are several suggested criteria for development and evaluation of new methods and markers of cardiovascular risk as detailed by Hlatky et al. [48], which emphasize the assessment of the clinical value of new markers on their effect on patient management and outcome. As we move forward with examining our approach to HRV monitoring with DWT in actual trauma and other critically ill subjects, adaptation of these evaluation criteria should be considered including adding other markers.

5 Conclusion

This study examined an approach to RR series analysis based on extracting features via the DWT. Specifically, the utility of DWT features in detecting hypovolemia severity was evaluated, using SVM and a dataset based on the LBNP model of simulated blood-loss. Results suggest that the proposed wavelet-based features may be able to detect changes associated with progressive hypovolemia, while remaining simple and computationally inexpensive. The novelty of this study lies in its development of a practical and accurate framework for detection of hypovolemia, and in its use of DWT as a vital tool in this application. As such, it has potential as a practical approach to detecting the extent of hypovolemia, such as in portable monitoring devices for remote triage. This may be best achieved by a simple portable monitoring device which can offer instant feedback on subject condition by offering higher accuracy and rapid results at a relatively low computational cost.

References

Kelly JF, Ritenour AE, McLaughlin DF, Bagg KA, Apodaca AN, Mallak CT, Pearse L, Lawnick MM, Champion HR, Wade CE, Holcomb JB. Injury severity and causes of death from Operation Iraqi Freedom and Operation Enduring Freedom: 2003–2004 versus 2006. J Trauma. 2008;64:S21–6.

Teixeira PG, Inaba K, Hadjizacharia P, Brown C, Salim A, Rhee P, Browder T, Noguchi TT, Demetriades D. Preventable or potentially preventable mortality at a mature trauma center. J Trauma. 2007;63:1338–46.

Alam HB, Burris D, DaCorta JA. Hemorrhage control in the battlefield: role of new hemostatic agents. Mil Med. 2005;170(1):63–9.

Weil MH, Becker L, Budinger T, Kern K, Nichol G, Shechter I, Traystman R, Wiedemann H, Wise R, Weisfeldt M, Sopko G. Post resuscitative and initial utility in life saving efforts (pulse): a workshop executive summary. Resuscitation. 2001;50(1):23–5.

Convertino VA, Ryan KL, Rickards CA, Salinas J, McManus JG, Cooke WH, Holcomb JB. Physiological and medical monitoring for en route care of combat casualties. J Trauma. 2008;64:S342–53.

Schwartz PJ, Priori SG. Sympathetic nervous system and cardiac arrhythmias. In: Zipes DP, Jalife J, editors. Cardiac electrophysiology. Philadelphia: WB Saunders Company; 1990.

Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. Heart rate variability: standards of measurement, physiological interpretation and clinical use. Circulation. 1996;93:1043–65.

Cooke WH, Salinas J, Convertino VA, Ludwig DA, Hinds D, Duke JH, Moore FA, Holcomb JB. Heart rate variability and its association with mortality in prehospital trauma patients. J Trauma. 2006;60:363–70.

Cooke WH, Salinas J, McManus JG, Ryan KL, Rickards CA, Holcomb JB, Convertino VA. Heart period variability in trauma patients may predict mortality and allow remote triage. Aviat Space Environ Med. 2006;77:1107–12.

Rapenne T, Moreau D, Lenfant F, Vernet M, Boggio V, Cottin Y, Freysz M. Could heart rate variability predict outcome in patients with severe head injury? A pilot study. J Neurosurg Anesthesiol. 2001;13:260–8.

Batchinsky AI, Cancio LC, Salinas J, Kuusela T, Cooke WH, Wang JJ, Boehme M, Convertino VA, Holcomb JB. Prehospital loss of R-to-R interval complexity is associated with mortality in trauma patients. J Trauma. 2007;63:512–8.

Cancio LC, Batchinsky AI, Salinas J, Kuusela T, Convertino VA, Wade CE, Holcomb JB. Heart-rate complexity for prediction of prehospital lifesaving interventions in trauma patients. J Trauma. 2008;65:813–9.

Gang Y, Malik M. Heart rate variability in critical care medicine. Curr Opin Crit Care. 2002;8:371–5.

Akselrod S, Gordon D, Ubel FA, Shannon DC, Berger AC, Cohen RJ. Power spectrum analysis of heart rate fluctuation: a quantitative probe of beat-to-beat cardiovascular control. Science. 1981;213:220–2.

Cooke WH, Convertino VA. Heart rate variability and spontaneous baroreflex sequences: implications for autonomic monitoring during hemorrhage. J Trauma. 2005;58:798–805.

Cooke WH, Rickards CA, Ryan KL, Convertino VA. Autonomic compensation to simulated hemorrhage monitored with heart period variability. Crit Care Med. 2008;36:1892–9.

Ryan KL, Rickards CA, Ludwig DA, Convertino VA. Tracking central hypovolemia with ECG in humans: cautions for the use of heart period variability in patient monitoring. Shock. 2010;33(6):583–9.

Cooke WH, Ryan KL, Convertino VA. Lower body negative pressure as a model to study progression to acute hemorrhagic shock in humans. J Appl Physiol. 2004;96:1249–61.

Bennett T. Cardiovascular responses to central hypovolaemia in man: physiology and pathophysiology. Physiologist. 1987;30:S143–6.

Murray RH, Thompson LJ, Bowers JA, Albright CD. Hemodynamic effects of graded hypovolemia and vasodepressor syncope induced by lower body negative pressure. Am Heart J. 1968;76:799–811.

van Hoeyweghen R, Hanson J, Stewart MJ, Dethune L, Davies I, Little RA, Horan MA, Kirkman E. Cardiovascular response to graded lower body negative pressure in young and elderly man. Exp Physiol. 2001;86(03):427–35.

Pomeranz B, Macaulay RJ, Caudill MA, Kutz I, Adam D, Gordon D, Kilborn KM, Barger AC, Shannon DC, Cohen RJ. Assessment of autonomic function in humans by heart rate spectral analysis. Am J Physiol. 1985;248:H151–3.

Esteller R, Vachtsevanos G, Echauz J, Litt B. A comparison of waveform fractal dimension algorithms. IEEE Trans Circuits Syst I. 2001;48(2):177–83.

Batchinsky AI, Cooke WH, Kuusela TA, Jordan BS, Wang JJ, Cancio LC. Sympathetic nerve activity and heart rate variability during severe hemorrhagic shock in sheep. Auton Neurosci. 2007;136(1–2):43–51.

Acharya UR, Joseph KP, Kannathal N, Lim CM, Suri JS. Heart rate variability: a review. Med Biol Eng Comput. 2006;44:1031–51.

Acharya UR, Subbanna BP, Kannathal N, Rao A, Lim CM. Analysis of cardiac health using fractal dimension and wavelet transformation. ITBM-RBM. 2005;26(2):133–9.

Yeragani VK, Srinivasan K, Vempati S, Pohl R, Balon R. Fractal dimension of heart rate time series, an effective measure of autonomic function. J Appl Physiol. 1993;75(6):2429–38.

Carlin M. Measuring the complexity of non-fractal shapes by a fractal method. Pattern Recogn Lett. 2000;21(11):1013–7.

Accardo A, Affinito M, Carrozzi M, Bouquet F. Use of the fractal dimension for the analysis of electroencephalographic time series. Biol Cybern. 1997;77:339–50.

Meyer Y. Wavelets: algorithms and applications. SIAM; 1993, translated and revised by R. D. Ryan.

Unser M, Aldroubi A. A review of wavelets in biomedical applications. Proc IEEE. 1996;84(4):626–38.

Addison PS. Wavelet transforms and the ECG: a review. Physiol Meas. 2005;26:R155–99.

Aldroubi A, Unser M, editors. Wavelets in medicine and biology. Boca Raton: CRC Press; 1996.

Stiles MK, Clifton D, Grubb NR, Watson JN, Addison PS. Wavelet-based analysis of heart-rate-dependent ECG features. Ann Noninvasive Electrocardiol. 2004;9:316–22.

Burri H, Chevalier P, Arzi M, Rubel P, Kirkorian G, Touboul P. Wavelet transform for analysis of heart rate variability preceding ventricular arrhythmias in patients with ischemic heart disease. Int J Cardiol. 2006;109(1):101–7.

Hilton MF, Bates RA, Godfrey KR, Chappell MJ, Cayton RM. Evaluation of frequency and time-frequency spectral analysis of heart rate variability as a diagnostic marker of the sleep apnoea syndrome. Med Biol Eng Comput. 1999;37:760–9.

Tan BH, Shimizu H, Hiromoto K, Furukawa Y, Ohyanagi M, Iwasaki T. Wavelet transform analysis of heart rate variability to assess the autonomic changes associated with spontaneous coronary spasm of variant angina. J Electrocardiol. 2003;36:117–24.

Ji SY, Chen W, Ward K, Ryan K, Rickards C, Convertino V, Najarian K. Wavelet based analysis of physiological signals for prediction of severity of hemorrhagic shock. In Proceedings of IEEE international conference on complex medical engineering (CME); 2009. p. 1–6.

Ji SY. Computer-aided trauma decision making using machine learning and signal processing. PhD dissertation, VCU digital archives, 2008.

Gomez C, Mediavilla A, Hornero R, Abasolo D, Fernandez A. Use of the Higuchi’s fractal dimension for the analysis of MEG recordings from Alzheimer’s disease patients. Med Eng Phys. 2009;31:306–13.

Najarian K. Fixed-distribution PAC learning theory for neural FIR models. J Intell Inform Syst. 2005;25(30):275–91.

Najarian K. Learning-based complexity evaluation of radial basis function networks. Neural Process Lett. 2002;16(2):137–50.

Suykens JAK, Vandewalle JPL. Least squares support vector machine classifiers. Neural Process Lett. 1999;9(3):293–300.

Candelieri A, Conforti D. A hyper-solution framework for SVM classification: application for predicting destabilizations in chronic heart failure patients. Open Med Inform J. 2010;4:136–40.

Ducla-Soares JL, Santos-Bento M, Laranjo S, Andrade A, Ducla-Soares E, Boto JP, Silva-Carvalho L, Rocha IJL. Wavelet analysis of autonomic outflow of normal subjects on head-up tilt, cold pressor test, Valsalva manoeuvre and deep breathing. Exp Physiol. 2007;92(4):677–86.

Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Proceedings of international joint conference on AI; 1995. p. 1137–1145.

Ji SY, Bsoul AR, Ward K, Ryan K, Rickards C, Convertino V, Najarian K. Incorporating physiological signals to blood loss prediction based on discrete wavelet transformation. Circulation. 2009;120:1483.

Hlatky MA, Greenland P, Arnett DK, Ballantyne CM, Criqui MH, Elkind MS, Go AS, Harrell FE, Hong Y Jr, Howard BV, Howard VJ, Hsue PY, Kramer CM, McConnell JP, Normand SL, O’Donnell CJ, Smith SC Jr, Wilson PW. Criteria for evaluation of novel markers of cardiovascular risk: a scientific statement from the American Heart Association. Circulation. 2009;119:2408–16.

Pan J, Tompkins WJ. A real-time QRS detection algorithm. IEEE Trans Biomed Eng. 1985;32:230–6.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. IIS0758410 and by the U.S. Army Medical Research and Materiel Command Combat Casualty Care Research Program (Grant: 05-0033-02). The opinions expressed herein are the personal opinions of the authors and are not to be construed as representing those of the Department of Defense, the Department of the Army, or the United States Army.

Appendices

Appendix 1

This section explains the process of QRS detection performed prior to feature extraction. Construction of the RR signal using the RR intervals in the ECG signal first requires that the R-waves be identified, which is done via QRS detection. The most common technique is the Pan-Tompkins algorithm [49], which detects QRS complexes based on analysis of their slope, amplitude and width. This method consists of four stages: band-pass filtering, differentiation, squaring, and windowing. The first stage applies the same filter as described in Sect. 2.2. The signal is then passed through a differentiator which suppresses the low-frequency P and T wave components and emphasizes the steep slopes of the QRS complex. The QRS complex also displays high amplitude, which is emphasized by applying a squaring operator. The next stage uses a moving average window to smooth the signal and reduce noise. The final step is to select a threshold that detects the QRS peaks in the waveform.

This study applies a modified version of the Pan-Tompkins algorithm which incorporates an additional histogram analysis step after averaging is performed, to identify any unusual values which may cause errors in the QRS detection process. It also employs an adaptive threshold T to detect the QRS peaks, which offers more flexibility in dealing with individuals than a fixed threshold. This is calculated as \( T = \mu(S) + \alpha \max(S) \), where S is the filtered ECG stage segment currently being analyzed and α is an empirically chosen weight. This study found α = 0.4 to be a suitable value.
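The stages described above can be sketched as follows. This is a simplified illustration under several assumptions, not the modified algorithm used in the study: band-pass filtering and the histogram step are taken to happen elsewhere, the threshold is applied to the smoothed signal (one reading of S), and the window length, refractory period, and function name `detect_qrs` are hypothetical choices.

```python
def detect_qrs(ecg, fs, alpha=0.4, window_s=0.15, refractory_s=0.2):
    """Return sample indices of candidate QRS peaks in a pre-filtered ECG segment.

    Indices refer to the smoothed signal, so they lag the true R wave slightly.
    window_s and refractory_s are illustrative values, not taken from the text.
    """
    # Differentiation: a first difference emphasises the steep QRS slopes.
    diff = [ecg[i] - ecg[i - 1] for i in range(1, len(ecg))]
    # Squaring: makes every sample positive and amplifies large slopes.
    squared = [d * d for d in diff]
    # Moving-window averaging over roughly window_s seconds.
    width = max(1, int(window_s * fs))
    smooth = []
    running = 0.0
    for i, value in enumerate(squared):
        running += value
        if i >= width:
            running -= squared[i - width]
        smooth.append(running / min(i + 1, width))
    # Adaptive threshold: T = mean(S) + alpha * max(S).
    threshold = sum(smooth) / len(smooth) + alpha * max(smooth)

    peaks = []
    refractory = int(refractory_s * fs)
    i = 0
    while i < len(smooth):
        if smooth[i] > threshold:
            # Take the maximum of the contiguous run above the threshold.
            j = i
            while j + 1 < len(smooth) and smooth[j + 1] > threshold:
                j += 1
            best = max(range(i, j + 1), key=lambda t: smooth[t])
            if not peaks or best - peaks[-1] > refractory:
                peaks.append(best)
            i = j + 1
        else:
            i += 1
    return peaks


# Synthetic 5 s trace at 125 Hz with one triangular "R wave" per second.
fs = 125
ecg = [0.0] * (5 * fs)
for centre in (100, 225, 350, 475):
    ecg[centre - 1], ecg[centre], ecg[centre + 1] = 0.5, 1.0, 0.5

peaks = detect_qrs(ecg, fs)
rr_seconds = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
print(peaks, rr_seconds)
```

Because every detected peak carries the same small delay from the trailing averaging window, the RR intervals computed from successive peaks are unaffected by it.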

In calculating the RR intervals, any single interval is compared to those previously detected using a sliding window. This process is described as follows. Let \( I_{i} \) be the estimate of the RR interval at sample i, n the total number of RR intervals detected so far, and \( \omega_{0} \), \( \omega_{1} \), and \( \omega_{2} \) be boundaries on the acceptable range of variation of the interval, chosen based on previous interval values. In order to find the new RR interval l, the following rules are followed:

The boundaries are set as \( \omega_{0} = 0.89m,\,\omega_{1} = 1.29m \) and \( \omega_{2} = 2m \), where m is the median value of the previous eight RR intervals.
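As a small worked example, the three boundaries can be computed from a running list of RR intervals as shown below. The sketch covers only the computation of \( \omega_{0} \), \( \omega_{1} \), and \( \omega_{2} \); how a candidate interval is then accepted or corrected against them is left out. The function name `rr_bounds` and the interval values are hypothetical.

```python
from statistics import median


def rr_bounds(previous_rr):
    """Return (w0, w1, w2) from the median m of the last eight RR intervals."""
    m = median(previous_rr[-8:])
    return 0.89 * m, 1.29 * m, 2.0 * m


# Invented RR intervals in seconds; only the last eight are used.
recent = [0.80, 0.82, 0.79, 0.81, 0.80, 0.83, 0.78, 0.80, 0.81]
w0, w1, w2 = rr_bounds(recent)
print(f"w0={w0:.3f} s, w1={w1:.3f} s, w2={w2:.3f} s")
```

Using the median rather than the mean keeps a single missed or spurious beat in the window from shifting the bounds.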

Appendix 2

This section briefly explains the two other HRV analysis methods used in this study. Power spectral density (PSD) describes the distribution of the power in a signal with frequency [22]. In other words, it shows at which frequencies variations are strong and at which frequencies variations are weak. The measures of interest in this study are the powers of the high frequency (HF; 0.15–0.4 Hz), low frequency (LF; 0.04–0.15 Hz) and very low frequency (VLF; 0.003–0.04 Hz) bands, the normalized powers of the LF and HF bands, and the ratios of LF to HF and HF to LF. These are calculated by integrating the spectrum for each band. Normalization is performed by calculating

where TAP is the total average power of the RR interval [7].
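The band-power measures can be sketched as follows. The example assumes an RR series that has already been resampled onto an even 4 Hz grid and uses a plain periodogram computed by a direct DFT, both of which are illustrative choices. The normalization shown, band power divided by (TAP − VLF), follows a common convention and is an assumption here; the study's own expression may differ. All function names are hypothetical.

```python
import cmath
import math

BANDS = {"VLF": (0.003, 0.04), "LF": (0.04, 0.15), "HF": (0.15, 0.4)}


def periodogram(x, fs):
    """One-sided periodogram of a mean-removed, evenly sampled series.

    Returns (freqs, psd); summing psd * (fs / n) over a band gives its power.
    """
    n = len(x)
    mean = sum(x) / n
    centred = [v - mean for v in x]
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        coeff = sum(
            v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(centred)
        )
        # Interior bins are doubled to account for the negative frequencies.
        scale = 1.0 if k == 0 or (n % 2 == 0 and k == n // 2) else 2.0
        freqs.append(k * fs / n)
        psd.append(scale * abs(coeff) ** 2 / (fs * n))
    return freqs, psd


def _ratio(a, b):
    """Safe division: returns NaN when the denominator is not positive."""
    return a / b if b > 0 else math.nan


def band_powers(x, fs):
    """Integrate the spectrum over each HRV band and derive the ratios."""
    freqs, psd = periodogram(x, fs)
    df = fs / len(x)
    power = {
        name: sum(p for f, p in zip(freqs, psd) if lo <= f < hi) * df
        for name, (lo, hi) in BANDS.items()
    }
    tap = sum(psd) * df  # total average power of the series
    usable = tap - power["VLF"]  # assumed normalization denominator
    power["LFnu"] = _ratio(power["LF"], usable)
    power["HFnu"] = _ratio(power["HF"], usable)
    power["LF/HF"] = _ratio(power["LF"], power["HF"])
    power["HF/LF"] = _ratio(power["HF"], power["LF"])
    return power


# Synthetic RR series: 0.8 s mean with one LF and one HF oscillation.
fs_rr = 4.0
rr = [
    0.8
    + 0.04 * math.sin(2 * math.pi * 0.09375 * t / fs_rr)
    + 0.02 * math.sin(2 * math.pi * 0.25 * t / fs_rr)
    for t in range(256)
]
for name, value in band_powers(rr, fs_rr).items():
    print(f"{name}: {value:.6f}")
```

In practice an averaged estimator such as Welch's method is usually preferred over the raw periodogram because it has lower variance.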

Fractal analysis monitors data via fractals: sets of points that can be divided into subsets which each resemble the whole. Calculating the FD of a signal quantifies both this self-similarity and the signal’s complexity. FD is commonly used in analyzing biosignals such as electrocardiogram (ECG) and electroencephalogram (EEG) to differentiate physiological states. This study applies Higuchi’s algorithm to calculate the FD of the ECG segments recorded over each LBNP stage. Consider a signal \( X_{i} = x_{1} ,x_{2} , \ldots ,x_{N} \), consisting of N samples. This is first divided into smaller epochs, by constructing k new time series \( x_{m}^{k} \) such that

where \( m = 1, \ldots ,k \) indicates the initial time value and k is the time interval between points. For each \( x_{m}^{k} \), the average length \( L_{m} (k) \) is calculated as

where \( a = \frac{N - m}{k} \). The average length \( L(k) \) for each delay k is calculated as the mean of the k lengths \( L_{m} (k) \) for \( m = 1,2, \ldots ,k \). This is repeated for each of the k time series. The Higuchi FD is estimated as the slope of the least-squares best fit line to the curve of \( \ln [L(k)] \) versus \( \ln \left( \frac{1}{k} \right) \) for \( k = 1, \ldots ,k_{\max } \) [23]. Calculation accuracy depends on the epoch length; this study tested lengths of 8 and 15.
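The procedure can be sketched as follows. The sketch assumes the normalization from Higuchi's original formulation, \( L_{m}(k) = \frac{1}{k}\left[ \sum_{i=1}^{a} \left| x(m + ik) - x(m + (i-1)k) \right| \right] \frac{N-1}{ak} \), with a taken as the integer part of \( (N - m)/k \); the expressions used in this study may differ in detail. The function name `higuchi_fd` and the choice of \( k_{\max} \) in the example are hypothetical.

```python
import math
import random


def higuchi_fd(x, k_max):
    """Estimate the Higuchi fractal dimension of a non-constant sequence x."""
    n = len(x)
    if k_max < 2 or n < k_max + 1:
        raise ValueError("need k_max >= 2 and more than k_max samples")
    log_inv_k, log_len = [], []
    for k in range(1, k_max + 1):
        lengths = []
        for m in range(1, k + 1):  # m is the 1-based starting sample
            a = (n - m) // k  # number of increments in the series x_m^k
            dist = sum(
                abs(x[m - 1 + i * k] - x[m - 1 + (i - 1) * k])
                for i in range(1, a + 1)
            )
            lengths.append(dist * (n - 1) / (a * k) / k)
        mean_len = sum(lengths) / len(lengths)
        if mean_len <= 0:
            raise ValueError("signal is constant; FD is undefined")
        log_inv_k.append(math.log(1.0 / k))
        log_len.append(math.log(mean_len))
    # Least-squares slope of ln L(k) against ln(1/k).
    mx = sum(log_inv_k) / len(log_inv_k)
    my = sum(log_len) / len(log_len)
    num = sum((u - mx) * (v - my) for u, v in zip(log_inv_k, log_len))
    den = sum((u - mx) ** 2 for u in log_inv_k)
    return num / den


# A straight line is as smooth as a curve can be, while white noise is rough.
line = [float(i) for i in range(500)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(500)]
print(f"line:  {higuchi_fd(line, 8):.3f}")
print(f"noise: {higuchi_fd(noise, 8):.3f}")
```

Values close to 1 indicate a smooth, regular curve and values close to 2 an irregular, noise-like one, which is what allows the FD to separate physiological states.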

Cite this article

Ji, SY., Belle, A., Ward, K.R. et al. Heart rate variability analysis during central hypovolemia using wavelet transformation. J Clin Monit Comput 27, 289–302 (2013). https://doi.org/10.1007/s10877-013-9434-9

DOI: https://doi.org/10.1007/s10877-013-9434-9