Abstract

Recent research efforts (Schmidt et al. in The preparation gap: teacher education for middle school mathematics in six countries, MSU Center for Research in Mathematics and Science Education, 2007) demonstrate that teacher development programs in high-performing countries offer experiences that are designed to develop both mathematical knowledge and pedagogical knowledge. However, identifying the nature of the mathematical knowledge and the pedagogical content knowledge (PCK) required for effective teaching remains elusive (Ball et al. in J Teacher Educ 59:389–407, 2008). Building on the initial conceptual framework of Magnusson et al. (Examining pedagogical content knowledge, Kluwer, Dordrecht, pp 95–132, 1999), we examined the PCK development for two beginning middle and secondary mathematics teachers in an alternative certification program. The PCK development of these two individuals varied due to their focus on developing particular aspects of their PCK, with one individual focusing on assessment and student understanding, and the other individual focusing on curricular knowledge. Our findings indicate that these individuals privileged particular aspects of their knowledge, leading to differences in their PCK development. This study provides insight into the specific aspects of PCK that developed through the course of actual instructional practice, providing a lens for future research in this area.

How beginning mathematics teacher knowledge develops

Despite widespread recognition that teacher knowledge impacts mathematics teachers' instruction and student learning, our understanding of teacher knowledge continues to emerge. We know that growth in student achievement varies among teachers who have similar backgrounds and classroom contexts because of differences in their content knowledge, preparation, use of routines, and content coverage (Rowan et al. 2002). However, debate continues about whether knowledge beyond content knowledge is critical for the effective teaching of mathematics. Recent research efforts (Schmidt et al. 2007) demonstrate that teacher development programs in high-performing countries offer experiences that are designed to develop both mathematical knowledge and pedagogical knowledge. Despite these findings, identifying the nature of the mathematical knowledge and the pedagogical knowledge required for effective teaching remains elusive (Ball et al. 2008). In addition, research by Hill et al. (2005) has revealed that specialized mathematical knowledge for teaching (MKT) directly impacts elementary student achievement. Yet, despite its importance to teaching and learning, we need further insight into how mathematics teacher knowledge develops.

Considerable research has demonstrated that the knowledge United States (US) beginning mathematics teachers bring to the classroom is insufficient for them to teach mathematics effectively (cf. Ponte and Chapman 2006). Various policy documents provide evidence that policymakers are aware that a different type of knowledge is necessary for effective mathematics teaching. For example, the U.S. National Council for Accreditation of Teacher Education (NCATE) Standards for Middle School Mathematics Teachers (2003) state that beginning teachers should “use representations of mathematical ideas to support and deepen students’ mathematical understanding” (p. 2). Further, NCATE Standard 8 states that beginning mathematics teachers should “possess a deep understanding of how students learn mathematics and the pedagogical knowledge specific to mathematics teaching and learning” (p. 3). Such knowledge does not emerge through experiences that prospective teachers have as students in K-12 mathematics classrooms. Instead, such knowledge must be gained through teacher preparation programs, professional development, and classroom experiences.

The purpose of the work presented in this manuscript is to characterize the development of pedagogical content knowledge (PCK) for two purposefully selected beginning mathematics teachers. As such, the research question that guided this study was: What PCK develops over 2 years for beginning mathematics teachers? This study provides insight into the complexity of knowledge development for mathematics teaching as we characterize the differences in knowledge of mathematics teaching that developed for two beginning teachers in the same teacher preparation cohort.

Theoretical underpinnings

In the following sections, we delineate the knowledge required for mathematics teaching by providing background on the knowledge referred to as PCK and share our definition and conceptual framework for PCK. In addition, we situate our study in prior research on the development of PCK and share concerns that have arisen about the use of PCK as a construct.

Pedagogical content knowledge

Shulman (1986) conceptualized the knowledge of content-area specialists that is necessary for effective teaching. Shulman called this knowledge PCK and described it as a “special amalgam of content and pedagogy that is uniquely the province of teachers, their own special form of professional understanding” (p. 227). Researchers in mathematics education have also delineated PCK components that extend Shulman’s (1986, 1987) original conceptions. Marks (1990) clarified PCK for mathematics teachers by identifying four components: knowledge of student understanding, knowledge of subject matter for instructional purposes, knowledge of media for instruction, and knowledge of instructional processes. An et al. (2004) utilized a PCK framework that included three components: knowledge of content, knowledge of curriculum, and knowledge of teaching. In the past decade, Deborah Ball and her colleagues (Ball and Bass 2003; Hill et al. 2004, 2008) have, through investigations focused on elementary teachers’ knowledge, developed a construct called MKT. As presented in Hill et al. (2008), MKT is composed of two major categories: subject matter knowledge and PCK. Subject matter knowledge contains common content knowledge (CCK), specialized content knowledge (SCK), and knowledge at the mathematical horizon. In this model, the authors characterized PCK as containing three components: knowledge of content and students (KCS), knowledge of content and teaching (KCT), and knowledge of curriculum.

In comparing the Hill et al. (2008) model of PCK (represented in the right-hand half of the model) with the model we use in our work (represented in Fig. 1), we can correlate, respectively, KCS with knowledge of student understandings within mathematics (e.g., knowledge that students have difficulty developing meaning for mathematical notation), KCT with knowledge of instructional strategies for mathematics (e.g., knowledge of particular mathematical representations that can build student understanding), and knowledge of curriculum with knowledge of curriculum for mathematics (e.g., knowledge of how mathematical ideas develop across a unit or across grade levels). We adapted the framework that Magnusson et al. (1999) initially developed for science teaching, because it provides more insight into aspects of PCK, including knowledge of assessment (e.g., knowledge of what mathematical ideas are important to assess), that are not included in the Hill et al. model.

A challenge for researchers as we attempt to identify PCK involves delineating the relationship between knowledge and beliefs. We applied the definition of knowledge used by Philipp (2007) that knowledge consists of “beliefs held with certainty or justified true belief. What is knowledge for one person may be belief for another, depending upon whether one holds the conception as beyond question” (p. 259). Thus, we view teacher knowledge as beliefs that are justified in the mind of the individual teacher. When we discuss aspects of PCK, we recognize that we simultaneously address both knowledge and beliefs that teachers have about teaching and learning mathematics.

Shulman argued that PCK includes the most useful forms of representation, the most powerful analogies, illustrations, examples, explanations, demonstrations, pedagogical techniques, and knowledge of what makes concepts easy or difficult to learn. However, Shulman’s initial conceptualization of PCK can lead to confounding the type of knowledge with the quality of knowledge, intimating that there is no such thing as weak PCK. This is analogous to Star’s (2005) argument about how we view “conceptual knowledge” as connected knowledge that is rich in relationships. However, Star notes that conceptual knowledge could contain superficial connections. In a similar way, we view PCK as knowledge for mathematics teaching that may or may not involve strong connections to the actual teaching and learning of mathematics. For example, Marks (1990) noted that in his study the mathematical PCK of elementary teachers included knowledge that elementary students could easily represent and interpret fractions as regions or as sets. In contrast, research (e.g., Hiebert et al. 1991) has shown that elementary students have difficulty interpreting various models as fractions. However, we do view the knowledge for teachers in the Marks study as PCK for these teachers, despite the fact that researchers may view this knowledge as lacking a deep understanding of the teaching and learning of fractions.

Development of PCK

Despite the importance of PCK and a growing understanding that it impacts student learning, little has been done to examine its development. Magnusson et al. (1999) hypothesized that teachers may develop different PCK based on the influence of their subject matter knowledge, pedagogical knowledge, or knowledge of the school context. For example, a teacher with relatively strong pedagogical knowledge may develop different PCK than a teacher in a similar setting whose strength lies with subject matter knowledge. However, little empirical work has been conducted to test this hypothesis.

In one of the few studies on PCK development, Kinach (2002) examined the PCK of pre-service secondary teachers related to adding and subtracting integers over the course of a semester. Kinach found that changes occurred in what the pre-service teachers viewed as a valid mathematical explanation. She applied an instructional model that emphasized the importance of explaining, applying, justifying, comparing, contrasting, generalizing, and contextualizing the mathematics that pre-service teachers often feel they already know. However, it is unclear to us how the knowledge described by Kinach differs from the knowledge of mathematics that we would expect for anyone with a strong mathematical understanding. In other words, it is unclear whether Kinach addresses PCK or simply a deeper understanding of mathematics, as we would expect secondary students to explain, justify, compare, and generalize their understanding of addition and subtraction of integers.

In another empirical study of PCK development, Horn (2009) investigated teacher learning through communities of practice in two separate high school mathematics departments. Teachers who focused on streamlining the curriculum, without considering student learning, did not develop their PCK as quickly or robustly as teachers in another school who spent their energy working toward using high-quality instructional tasks. The teachers whose knowledge grew more quickly and robustly were willing to interrogate their instructional assumptions, whereas the teachers in the other school were less likely to challenge each other’s ideas.

As we can see from the work of Kinach (2002) and Horn (2009), theoretical challenges exist regarding the definition of PCK, though some research has demonstrated the impact that increased PCK can have on instruction. However, we still lack insights that clarify and characterize the development of PCK.

Empirical and theoretical concerns regarding PCK

Questions have been raised about the viability of PCK as a practical construct for examining teacher knowledge. However, recent research has demonstrated that such knowledge exists, though further clarification of the different aspects of this knowledge is needed. Marks (1990) conducted his initial work on mathematical PCK by interviewing eight middle school mathematics teachers (six experienced and two novice) about their perceptions of what was “easy” or “difficult” to learn and what common student errors occurred. He found that teachers often drew on many aspects of their knowledge of subject matter, student understanding of the subject matter, media for instruction, and instructional processes during the interviews. However, he was concerned about the ambiguity that arose in attempting to distinguish between PCK and mathematical content knowledge that would also be expected to exist for those outside the teaching profession. Stones (1992) concurred with Marks (1990) about the ambiguities inherent in the concept of PCK. Stones expressed further concern about the lack of meaning associated with PCK and noted that such a distinction for knowledge provided little practical assistance in examining teaching. The question of whether it is possible in practice to make a clear distinction between subject knowledge and PCK was also raised by McEwan and Bull (1991), who argued that Shulman’s distinction between content knowledge and PCK is unfounded. They stated that all mathematical knowledge has pedagogical underpinnings.

Confusion also occurs among researchers about the difference between deep mathematical understanding and PCK. Studies such as Kinach (2002) and Even and Tirosh (1995) use PCK to mean gaining a deeper understanding of the mathematics that teachers use in practice. For example, they focus on recognizing valid mathematical explanations for integer operations (Kinach) and recognizing whether (−8)^(1/3) = −2 (examples that Hill et al. 2008 refer to as specialized subject matter knowledge rather than PCK). However, other researchers have identified particular aspects of MKT that only teachers develop. For example, the recognition of specific examples when comparing decimals (e.g., 0.345, 14.6, and 2.3) that lead to common errors with elementary students is knowledge that mathematics teachers possess, but mathematicians and others with considerable mathematical knowledge do not (Hill et al. 2004). Similarly, Hill et al. (2008) identified PCK related to common mathematical student errors (e.g., that the slope of a linear function varies proportionally with the angle between the line and the x-axis). Though PCK for mathematics teaching has been further illuminated through recent research, further clarification regarding the nature of this knowledge is needed.
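
The slope error mentioned above can be made concrete with a short worked calculation. This is only an illustrative sketch: the angles are assumed values chosen for convenience and do not come from Hill et al. (2008). A line that makes an angle θ with the positive x-axis has slope m = tan θ, so the slope is not proportional to the angle:

```latex
m = \tan\theta, \qquad
\tan 30^\circ = \frac{1}{\sqrt{3}} \approx 0.577, \qquad
\tan 60^\circ = \sqrt{3} \approx 1.732, \qquad
\frac{\tan 60^\circ}{\tan 30^\circ} = 3
```

Doubling the angle from 30° to 60° triples the slope rather than doubling it, which is why treating slope as proportional to the angle is an error.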

Clearly, perspectives differ on what PCK is. In addition, few studies have been undertaken to examine the development of PCK. We adopted the Magnusson et al. (1999) framework to examine the development of PCK in two individuals as they began their teaching careers.

Methods

The study reported in this manuscript draws on data from a larger study of mathematics and science teacher PCK. Our research team, under the auspices of a 5-year research project funded by the National Science Foundation (ESI-0553929), is engaged in empirically grounding the PCK of mathematics and science teachers by developing frameworks to characterize teacher knowledge. Situated in the context of an alternative certification program (ACP), we study knowledge development of beginning teachers over a 2- to 4-year timespan, seeking to understand what knowledge pre-service and beginning teachers bring to the program and how that knowledge develops over the course of the program and into their first years of teaching.

To address the research question for this manuscript, we analyzed data collected during a larger longitudinal research project in which we study the knowledge development of beginning mathematics teachers enrolled in an ACP. We further describe the ACP below.

Program context

The ACP is a 15-month program that prepares individuals with college degrees that include strong mathematics backgrounds to become teachers. These individuals completed 32 credit hours of coursework that included general education and three content-specific mathematics methods courses, leading to a master’s degree and teacher certification at the middle school level.

In the summer prior to their internship, participants completed two courses: (a) a general education course and (b) the first of three mathematics methods courses. The first course was a general learning theory and instruction course that included theories of classroom management. In this course, students learned about cognitive development and the strengths and limitations of various learning theories. The mathematics methods course emphasized the content and process standards and the principles from Principles and Standards of School Mathematics (NCTM 2000). The instructor provided an array of activities emphasizing different aspects of assessment practices in mathematics, including the use of student questioning as a means of informal assessment.

Two other mathematics-specific methods classes followed during the school year when participants worked as interns in a classroom. These courses continued previous themes such as attending to student mathematical reasoning and adjusting instructional strategies to deepen student mathematical understanding. These courses were designed to develop knowledge specific to particular mathematical content, such as student ways of reasoning in Algebra and the use of mathematical representations to help students understand geometric concepts.

During our analysis for the larger study, we recognized that the teachers in our study developed distinctly different aspects of PCK. In this study, we focus on two individuals, Keith and Lyle, who completed the program and achieved teacher certification at the middle-school level. We purposefully selected these participants due to the differences that emerged with the development of their PCK and due to the similarities in the grades that they taught and the curricular materials that they used. Keith and Lyle served as illustrative examples of these differences.

Keith completed his degree in broadcast journalism (though he took a finance position), and Lyle graduated in Biochemistry with a minor in mathematics. Keith completed the equivalent of a bachelor’s degree in mathematics while working for a bank. Lyle began a doctoral program in Biochemistry and became interested in the teaching aspect of his work, eventually being awarded a special teacher fellowship. However, he chose to pursue middle school mathematics certification.

Their first year in the ACP included 20 h per week working in a supportive middle school or junior high school (i.e., schools with students aged 11–14) with well-established mathematics teachers. They taught approximately three mathematics classes per day and completed coursework in the ACP.

Keith and Lyle’s mentor teachers allowed them to plan and implement instruction within the policies of their classrooms. Keith and Lyle interned for 32 weeks using curricular materials from the Connected Mathematics Project (CMP) (Lappan et al. 2006). The CMP text is designed in an “investigative” style in which problems are posed to students; they work on the problems individually or in small groups and share their mathematical reasoning. Keith interned in 8th and 9th grade classrooms, Lyle in 6th and 7th grade classrooms. After completing their certification program in the summer between Year 1 and Year 2, Keith and Lyle took teaching positions at middle schools, teaching grade 7 (students aged 12–13), where the textbook series was CMP. Keith taught for 36 weeks (i.e., the entire academic year) at a school where CMP had been used for several years; Lyle also taught for 36 weeks at a middle school that had recently adopted CMP. Table 1 summarizes the background and experiences for Lyle and Keith.

Data collection

We used an entry and exit instructional planning task (Van Der Valk and Broekman 1999) and stimulated recall interviews (Pirie 1996; Schempp 1995) related to 4 days of mathematics instruction per year during the participants’ mentored teaching placement and during their first year of teaching.

Instructional planning task and interview

As participants entered and exited the ACP, they completed the task shown in Table 2.

Following the completion of this task, we asked questions specific to the four PCK categories in order to document participant knowledge of students’ understandings, instructional strategies, assessment, and curriculum. The interviews were audio recorded and transcribed verbatim for later analysis.

Stimulated recall interviews during observation cycles

After the participants completed 8 weeks of coursework during their first summer, they began a 32-week, 20-h per week mentored internship at the middle school level. They taught approximately three mathematics classes per day and completed coursework in the ACP. During both the fall and spring semesters of this field-based, mentored internship, we collected data during a 2-day observation cycle for each participant. Each observation cycle began with the participants selecting a specific mathematics class they wanted us to observe them teach. They prepared two consecutive mathematics lessons for the periods we scheduled to observe. Prior to conducting the observations, we conducted a pre-observation interview where the participants discussed the mathematics lessons they would teach as well as the knowledge they drew on when designing these two lessons. In addition, we asked questions to gather data about their mathematics teaching orientations as well as their knowledge of learners, instructional strategies, assessment, and curriculum.

We then observed, videotaped, and took field notes for the two consecutive lessons taught to the same class. Following each lesson, we conducted a stimulated recall interview (Pirie 1996; Schempp 1995) in which we probed participant knowledge via playback of critical parts of the lesson. We selected video excerpts of the lesson that focused on particular aspects of participant knowledge. For example, we asked about the use of representations by showing video of representations that students developed and shared during the lesson, and we asked about the statements students made or questions that students asked during small group or whole-class discussions to inquire into what the participants were learning about student learning and about assessment practices. In addition, during each observation cycle, we conducted a 1-h interview with Keith and Lyle’s mentor teachers to gather insights into the PCK that they focused on during the internship. In particular, we questioned the mentors about what Keith and Lyle were learning about student understanding, instructional strategies, assessment, and curriculum. During the observation cycles, we took the role of the empathetic observer, seeking to understand the PCK and the context in which the PCK developed, but not providing feedback or suggestions related to their PCK.

Data analysis

For this manuscript, we coded the participant interviews, mentor interviews, and lesson plans using four categories in the Magnusson et al. (1999) PCK model: (a) knowledge of students’ understanding, (b) knowledge of instructional strategies, (c) knowledge of assessment, and (d) knowledge of curriculum. We identified segments of the interviews and plans that focused on one of the four categories and coded the entire episode. Table 3 provides a list of the PCK components and codes that we developed. Two members of the research team were assigned to independently code each participant’s data using NVivo, a qualitative data analysis program. For this study, we independently coded all data for the two participants for each of the PCK components. We then shared and discussed our coding during group research meetings until we reached consensus on the coding for the PCK components. We used data from the interviews of the mentors to triangulate the data from Keith and Lyle’s interviews. To characterize the PCK development for Keith and Lyle, we developed rich individual profiles describing the PCK development for these two participants. As we developed these profiles, we considered the similarities and differences in their PCK development.

Results

In the following sections, we present our results to answer the question: What PCK develops over 2 years for two beginning mathematics teachers? First, we present results from the first and second year for Keith; then we present the results for Lyle.

In the following section, we characterize their PCK using our PCK framework (as represented in Fig. 1). We characterize Keith and Lyle’s knowledge based on their personal focus in their teaching. We demonstrate how the differences in their foci led to differences in the knowledge they developed. Furthermore, we alert the reader that we have not exhaustively described our participants’ knowledge in the separate PCK domains, but highlight the main conclusions to which our analysis led. Before illustrating Keith’s PCK development, we provide an overview of his development.

Overview of Keith’s knowledge development

Keith’s strong desire was to understand his students’ thinking. Thus, he primarily talked about his teaching through a dual focus on his PCK for assessment and PCK of student understanding. When we interviewed Keith, he spoke at length about the thinking of particular students, recounting it in detail after he taught a particular lesson (and following subsequent teachings of the same lesson). His focus on assessment and student understanding impacted his knowledge in the other PCK domains.

Keith’s knowledge of assessment

When asked how he would know whether students understood the mathematics, Keith initially referred to the assessment strategies of homework, quizzes, and tests. During our first observation cycle he said,

The main assessment for me is homework. (Their homework) gives a good view of what they are thinking and how sharp their understanding is of looking at a graph and explaining what is going on in relation to the x and y-axis and the different variables. I think that assignment is the biggest assessment. (Keith, Year 1, Fall, Pre-Observation Interview).

But it was clear that Keith also employed informal assessment strategies. When asked about other assessment strategies, he further explained that he would be “talking to the kids while they are working. I get a sense of what they think by talking to them.”

Later in Year 1, Keith provided evidence that he was developing knowledge of potential teacher responses due to his use of informal assessment strategies. When he noticed student difficulty, he adjusted instruction to support student learning. As an example, during the spring observations of his first year, Keith described how he altered the initial activity the second day based on the confusion that students had the previous day. In particular, while teaching the first lesson on developing student understanding of the distributive property, he noticed that students were not using the distributive property for the tasks that he designed. The next day, he attempted to connect ideas back to an earlier lesson when they used an area model to teach factoring. “I combined the two (lessons) and talked about the distributive property” (Keith, Year 1, Spring, Stimulated Recall Interview Day 2). On Day 2, Keith encouraged students to draw area models to represent algebraic expressions such as 4(x + 2).
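
As an illustration of the area model mentioned above, the expression 4(x + 2) can be read as the area of a rectangle with height 4 and width x + 2, split into two smaller rectangles. This is a sketch of the standard representation under that assumption, not a reproduction of what Keith or his students drew:

```latex
4(x + 2)
  = \underbrace{4 \cdot x}_{\text{area of the left rectangle}}
  + \underbrace{4 \cdot 2}_{\text{area of the right rectangle}}
  = 4x + 8
```

Reading the two partial areas off the drawing gives the distributive property directly, which is the connection Keith described making between the two lessons.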

In Keith’s second year, his assessment strategies focused on classroom interactions with students, and he recognized the assessment challenges related to what could be gleaned by assessing student homework, noting that for many students, “there is no way to tell how much they got from parents at home. Just because a student has the right answers to the homework does not mean he understands how to do it” (Keith, Stimulated Recall Interviews Day 1). He also recognized the challenges related to collecting and interpreting student assessment information. In a lesson on transformations, he said,

This (content) is difficult to assess in the classroom. So looking at what they’re doing, trying to move these figures around and make rotational and reflectional symmetry, it will be hard even if they have the right work on their paper. I think it’s hard to question them and make sure they understand it. That’s what I’m trying to think through when I’m lesson planning. I feel like the lesson plan is alright and it will work well, but when they’re working in groups, and I try to understand what they know, that will be difficult for me. (Keith, Year 2, Fall, Stimulated Recall Interview Day 2)

In our post-observation interviews, he lamented that he had difficulty gaining insight into how students approached the task.

Keith’s mentor noticed his focus on student thinking when using formal assessments and informal student questioning. Keith’s mentor teacher noted:

He was really good at judging (student understandings and misunderstandings). He would look at a quiz they took, and he could see right away, without a lot of analyzing, and see what they were doing. He does a lot of informal assessing. He keeps a lot of notes on separate kids, and gets a lot out of just talking to them. I would say that his knowledge of assessment is pretty good. (Keith’s Mentor, Year 1, Fall, Mentor Interview)

Keith’s desire to assess student understanding, combined with his realization of the difficulty in making these informal assessments, was an example of his assessment PCK. As can be seen in this section, Keith used his knowledge of assessment in concert with his knowledge of instructional strategies to assess student understanding of the content of one lesson and then adjust his instruction in the next lesson based on the information obtained in the first lesson (a classic example of the use of formative assessment to adjust instruction). As will become evident in the following sections, Keith’s knowledge of assessment impacted his knowledge of curriculum, instructional strategies, and learners.

Keith’s knowledge of student understanding

As noted above, Keith had a strong desire to understand the mathematical thinking of students. During Year 1 and Year 2, Keith recounted particular student strategies and described student written work many hours after the lesson concluded. He reflected on the thinking of his students and developed hypotheses about student difficulties and the specific strategies students may use to solve problems.

Initially, Keith’s mentor teacher noted that Keith’s expectations for students were not aligned with the reality of what students could and could not do, particularly in relation to performing mental mathematics. As noted by his mentor teacher:

He expected to walk in and start talking about these things and he couldn’t because they would have to get up and go get their calculators… He didn’t have a real good feeling. A lot of that comes with experience, but he just didn’t have a good feeling for what (the students) should and shouldn’t know. (Keith’s Mentor, Year 1, Fall, Mentor Interview)

However, in Keith’s first year, he hypothesized about student difficulty with mathematical and non-mathematical aspects of a particular lesson. Though prior to each lesson he anticipated few specific ways of student thinking, his attention to students’ ideas (through his informal assessments) during the lessons quickly led to greater knowledge of students’ difficulties and likely ways of thinking. For example, when asked where students might struggle in the Fall of Year 1, he anticipated that the poor reading comprehension of his students would lead to difficulties. He said, “So I think problems A through D will cause problems because of their reading comprehension. They have problems with a question that is not straightforward” (Keith, Year 1, Fall, Pre-Observation Interview). This indicates an emerging knowledge of students and student difficulties, though such knowledge is not related to student mathematics and represents general pedagogical knowledge of student understanding.

However, Keith also focused on his students’ understanding of specific mathematical strategies. For example, he anticipated various graphs students might develop in his first lesson in Year 1. This task (see Table 4 for the task and his instructional plan for the task) involved creating a graph to represent the number of dancers on the dance floor at a party where many students were dancing at first and, as the night wore on, fewer and fewer dancers remained on the dance floor. He said, “I think most of them will, on the y-axis, represent people on the dance floor or some kind of measure of people on the dance floor and make a graph that represents the relationship between the dancers and time” (Keith, Year 1, Fall, Pre-Observation Interview). While this quote suggests that Keith had a general sense of what strategies students might use for this situation, he later identified, in greater detail, what he hoped he would see. He said,

As they’re finished drawing I will explain where there are differences, if [someone has] a line that is kind of going slightly down have them tell what that represents and kind of have them briefly explain what each part of the graph means. And I will try and pick one that is linear and parts of it that seem to be straight lines and curved to give them a couple of different ideas of things to look at. Hopefully there will be two people I choose with fairly good examples that I can show the class. (Keith, Year 1, Fall, Pre-Observation Interview)

Keith anticipated general strategies for learners, but did not anticipate specific student difficulties that occurred when modeling the relationship between the number of people on the dance floor and time. For example, almost all students drew linear graphs with negative slopes, a response that Keith did not anticipate from the majority of the class.

However, after the first lesson in Year 1, it was clear that Keith had developed knowledge about student strategies and student misconceptions. He said,

I was expecting them to have a straight line for a little bit and then have it dip down to a constant rate for a while and then dip down sharper. But I did not see anyone do that specifically, I saw some people do parts of that, but no one had that whole graph that I just explained. (Keith, Year 1, Fall, Stimulated Recall Interview Day 1)

Throughout the first year, Keith demonstrated a general knowledge of students’ understanding and possible difficulties. This knowledge increased following each lesson due to his informal assessment strategies.

In his second year, Keith continued developing general and particular knowledge of students’ understanding. He was increasingly able to articulate areas of student difficulty and to anticipate student strategies that were likely to occur. For example, he knew that students tended to add numerators together and denominators together when asked to add two fractions. He said,

A lot of times when they are adding fractions they just add both numerators and both denominators. So, I think that will come up again (in today’s lesson). We have already talked about that in the past couple days because we have had some problems where we have had to look at like a piece of land where we had a fourth of the land and a sixteenth of the land and some students will try to make that into two-twentieths by just adding (the numerators and denominators) together. (Keith, Year 2, Spring, Pre-Observation Interview)

Keith also noted that students struggled with identifying lines of symmetry and rotational symmetry, and he recognized that these ideas were not easy for students to understand and apply. He said,

I think the line of symmetry is something that we would need to go over a lot more before that’s something that they really understood. I think certain kids it is kind of hard for them to describe, to use that kind of vocabulary when describing it. They probably didn’t really pick up on it, but I really didn’t expect everyone to pick up on lines of symmetry just because they’re just starting to understand you know, reflectional and rotational symmetry let alone where those lines would be. (Keith, Year 2, Fall, Stimulated Recall Interview Day 1)

In both of these examples, we see that Keith gained insight into student difficulties and anticipated how difficult mathematical ideas were to learn. Such knowledge appeared to be gained through the use of informal assessment strategies.

Keith’s knowledge of curriculum

Keith’s strength in assessment led to his substantial development of knowledge of students and their mathematical thinking. His knowledge and enactment of assessment also led to some limited development of his knowledge of curriculum. In our interviews, it was clear that his primary focus was not on developing knowledge of the curriculum. Initially, Keith exhibited limited knowledge of the scope and sequence of mathematical content (and this was confirmed by his mentor teacher).

However, in Keith’s second year of teaching, he expanded his curricular knowledge, developing a deeper sense of the scope and sequence through assessing what students already knew at the beginning of each unit. In the following excerpt, he responded to a question about what the students previously learned about definitions of shapes. In reference to the curriculum, he said,

I know that they’ve had exposure to some shapes, but I don’t think that there’s been a strong emphasis on the definitions. At the beginning of the unit we did some assignments, and some assessments of their knowledge of the different shapes. (Keith, Year 2, Fall, Pre-Observation Interview)

This excerpt illustrates how Keith’s curricular knowledge was limited as to what students studied in previous years but was developing through his assessments of students’ prior knowledge.

Keith’s knowledge of instructional strategies

Initially, Keith struggled with helping students who exhibited mathematical difficulties. He did not know what to say if they had a misconception or mistake, but his knowledge and enactment of assessment, through his development of knowledge of student understanding, allowed him to assess the effectiveness of his instructional strategies. His knowledge and efforts combined to provide experiences in which he developed his knowledge of instructional strategies. The following example illustrates how his informal assessments combined with his desire not to tell led to challenges with his instruction. These challenges were evident when teaching a lesson that involved students creating a story and then representing some quantitative aspect of the story in a graph.

Their graph and problem situation didn’t match up, so I was trying to explain the best I could why their graph wouldn’t match up. Then, I don’t know if I was giving too much information for their answer or not, but I was struggling on how to dance around it in saying if you are going 5 MPH the entire time, that would be a straight line. I didn’t really know how to address it other than saying, ‘it’s just a straight line’ (Keith, Year 1, Fall, Stimulated Recall Interview Day 2).

Keith noticed this discrepancy between students’ stories and their graphs, and he wanted to avoid telling, but he had limited knowledge of specific actions to use during instruction: he was unsure what questions to ask or statements to make to prompt or correct their thinking. He encouraged students to share their thinking, but he found that this did not dislodge incorrect thinking.

In his second year, Keith further developed his knowledge of student misconceptions through asking questions. In this area, it was evident that his knowledge of specific actions to use during instruction changed as he introduced counterexamples to address student misconceptions. For example, in a lesson where students developed a procedure for adding fractions, he noticed students adding the numerators and denominators. When asked how he addressed this error, he said,

(When I see students) adding numerator and denominator (when adding fractions) I ask, ‘Is one-half plus one-half the same as two-fourths?’ I have asked that to them before, but they still like to add the numerators and denominators. And I say, ‘Okay, so you do not add the numerators and denominators in this case because then it would come out to two-fourths so it does not work for that example?’ and they say ‘no.’ (Keith, Year 2, Spring, Stimulated Recall Interview Day 1)

Keith indicated that students continued to “add the numerators and denominators” despite various efforts to change their thinking. Keith’s assessment efforts led to increased knowledge of student understanding and misunderstanding that prompted him to reconsider what actions to take during instruction and impacted his knowledge of instructional strategies.

Summary of Keith’s PCK development

Over the 2 years of the study, Keith’s knowledge increased in all four components of the PCK framework, but the increase occurred in different ways and to different extents across the components. His knowledge and enactment of informal assessments facilitated his development of knowledge of student understanding and instructional strategies. He also developed PCK related to mathematics curriculum through his knowledge and enactment of assessment. He privileged student understanding and invested time specifically in that knowledge. He recognized that the enactment of informal assessments provided access to student understandings and misunderstandings.

Overview of Lyle’s knowledge development

Lyle concentrated his efforts on increasing his knowledge of the scope and sequence of the curriculum. In his first year, he taught in a classroom environment that lessened his need to apply particular classroom management strategies, but he struggled to engage students in mathematical discussions. In his second year, his knowledge of curriculum continued to increase, but his knowledge development appeared to be minimal for the other PCK components.

Lyle’s knowledge of curriculum

During Year 1, Lyle expressed interest in gaining further curricular knowledge. He sought understanding of the scope and sequence of mathematical topics within the textbook series. As part of his mentored teaching experience, he observed 6th grade (11- to 12-year-olds) CMP classes, and his mentor reported that he often asked how topics were addressed in prior years or units.

At the beginning of his internship, Lyle’s mentor mentioned Lyle’s desire to study the curricular materials that they used in the class.

He took that (studying the textbooks) on his own initiative. He took that on from Day 1. He requested an extra copy of the teacher’s manual. We got the new unit and the next unit. When we finish a unit, we send it back and request the next one that’s going to be after that, so he’s always got something. And he’s very good at taking that home and looking through what the lesson is and actually figuring out if there’s things he thinks they might not need as much or things he needs to interject he looks for those things too. (Lyle’s Mentor, Year 1, Fall, Mentor Interview)

Beyond becoming acquainted with the scope and sequence of the curriculum, he displayed growing knowledge of the structure of the content within the textbook series. For example, during the first observation, Lyle articulated a similar structure that he noticed in the textbooks. He said,

Today is the last unit in the book. It is designed to bring together all of the mathematical strategies students have been using in the previous investigations. I found in the previous two books that the last lessons are designed to do that. It seems to be a consistent aspect of the textbook. (Lyle, Year 1, Fall, Pre-Observation Interview)

In this excerpt, Lyle recognized structure in the content of the curriculum. He also knew how the content built up across years and across units, and he saw many connections to other areas of mathematics. For example, in the spring, in a unit on surface area and volume, he identified connections to calculus and linear relationships. Additionally, he recognized how surface area and volume were developed and how that development was similar for prisms and cylinders.

In his second year, Lyle continued to develop his PCK of curriculum. He aligned the state content standards with his lessons to ensure that he addressed what was expected. He demonstrated knowledge of the scope and sequence of topics across grade levels by identifying units where similar topics were addressed.

Lyle made choices in his teaching that were anchored or tied back to particular goals for instruction. In his plans for an introductory problem, he wrote: “Have a rectangular prism and ask similar questions to numbers 1-3 for homework the previous night. Monitor for students who struggle. If many do, go over #2 from the homework” (Lyle, Year 2, Spring, Lesson Plans). He explained in an interview his reason for this choice, “the reason I chose (homework item) number two, number two is not a basic rectangular prism. This gets them to think (further) about length, width, surface area, that kind of thing” (Lyle, Year 2, Spring, Stimulated Recall Interview Day 2).

Lyle interpreted student difficulties or struggles in terms of past, present, and future curricular goals. For example, in a unit on surface area and volume, he noticed students struggling with the use of units. In one interview, he explained that this was his school district’s first year using the textbook series, so he was unsure what sequences of mathematical topics had previously been introduced to students. He said:

And I don’t think that they’ve ever taught specific units and how units can actually be multiplied. That it’s not just a length, that it’s centimeters times centimeters gives centimeters squared. And centimeters times centimeters times centimeters you’ve got centimeters cubed. This is I think an initial step at doing that. But I don’t see the program addressing measurement at all, other than through cursory methods. Even the [state test] doesn’t require them to put units on the tests… At least they didn’t for a couple years, but science teachers are killing this. They tell us, “Do not let them write things without units.” Because in science, that is one of their standards. Now why there’s disconnect in math I don’t understand. (Lyle, Year 2, Spring, Stimulated Recall Interview Day 1)

Lyle developed knowledge of the curriculum in terms of scope and sequence as it was represented in the textbook he used. He also developed knowledge of state standards both in mathematics and in science. He used this knowledge to make instructional decisions and to explain student thinking.

Lyle’s knowledge of instructional strategies

Though Lyle focused on knowledge of curriculum, he felt he needed to gain more knowledge of instructional strategies. He knew the organization for a problem-based lesson that involved introducing an activity, allowing students to engage in the activity, and summarizing the mathematics content of the lesson, but he struggled to enact specific actions to engage the class in whole-class discussions. He lamented, “I like to have the kids communicate, which is why I keep complaining that I don’t have a talkative class. I can’t get the kids to engage” (Lyle, Year 1, Fall, Stimulated Recall Interview Day 1). For example, when students estimated the proportion of marked beans in a bag after drawing a sample of beans from a bag, Lyle attempted to draw student attention to the idea that small sample sizes can have large variation, but few students participated in the discussion.

I wanted students to notice what I told them afterward: that there was a lot of variation in small sample sizes. Not necessarily in those words, but I wanted them to see that taking the larger sample size gives you a better reference point for what the percentages were going to be (Lyle, Year 1, Fall, Stimulated Recall Interview Day 1).

Lyle called on a student to articulate this idea and when the student finished, he moved on. He was unsure whether other students recognized the importance of this idea. Lyle explained, “I knew that no one else would get it. And in the back of my mind I thought, ‘yes, that’s a great (explanation),’ but we couldn’t discuss it further” (Lyle, Stimulated Recall Interview Day 1). He wanted greater student engagement, but when he invited students to participate, he found them reluctant to do so.

Due to his difficulty promoting discussion, Lyle often dominated classroom discussions. He called on students to encourage discussion, but was unsure what specific questions to ask or how to ask them to further discussion. However, Lyle did ask some questions designed to push student thinking, as is noted by his mentor below.

And he’s really good about introducing (the lesson). He will let kids read the opening part of it, and he says I do that because you’re more apt to listen to yourselves, you know, one of your own classmates read than me. And then, but he’ll do that and then he’ll talk about it and kind of get it kicked in by asking them some questions: “What do you think is going to happen?” Or, “What do you think about this?” (Lyle’s Mentor, Year 1, Fall, Mentor Interview).

Despite the mentor’s positive view of Lyle’s questioning, we rarely observed Lyle asking specific questions that focused students on the specific mathematical ideas in the lesson. Instead, he tended to ask general questions such as: “What did you do first on this problem?” and “What do you think about (another student’s response)?”

In the Spring of Year 1, he spoke about his teaching, indicating confidence in presenting the content, but concern about his teaching. He said, “I have a lot to learn. I am nowhere near proficient in teaching” (Lyle, Stimulated Recall Interview Day 1). Lyle noted that he lacked knowledge of specific strategies to engage the whole class in meaningful discussions of mathematics. He reiterated that this was an ongoing challenge in Year 2.

In his second year, Lyle continued to organize instruction as he had in Year 1. He met with the other mathematics teachers on a weekly basis. From these meetings, he learned general strategies that he was unfamiliar with such as “stations” and “board races.” Lyle was disgruntled that he had not learned these strategies in his teacher preparation classes. He said,

The things that I find myself struggling with are not content methods but just general methods: behavior management, classroom management, classroom design, coming up with lesson plans, effective lesson planning, being able to plan for advanced students. That’s the hardest thing this year. I went at it the wrong way to start the year. I did not have an effective plan. I lost control of students at the start because I didn’t have a good plan, and I didn’t know how to start the year. I had a content plan but no classroom plan. (Lyle, Year 2, Spring, Pre-Observation Interview)

Lyle noted he had a “content plan, but no classroom plan.” He focused on the curriculum and how content was organized, but this did not translate into knowledge of instructional strategies that he deemed successful.

We note that most of Lyle’s concern and learning was about general pedagogical strategies. While he struggled to take specific actions that engaged students in classroom discussions, he expressed frustration at not having learned general pedagogical strategies.

Although Lyle was frustrated with his knowledge of instructional strategies and classroom management, he knew and developed strategies to help students with difficulties. When students experienced difficulty, he referred them to pages in their text, such as a previous investigation or to the glossary. He used this strategy because, “I didn’t want to tell them what the answer is” (Lyle, Year 2, Spring, Stimulated Recall Interview Day 1). In addition to referring students to sections of the text to review, he reminded students how they solved related problems, connecting student prior knowledge to current problems. For example, when students wrote equations to represent a purchase, he helped them interpret the situation by asking questions that referred them to patterns they previously studied. He said:

I ask them, “If you were being charged $1 for this, how much would the tax be? So how much would it be for $2? How much would it be for $10?” That is consistent with the textbook, having them think about writing an equation. So I think by saying that and getting them to think, “This is how we did it before, and it worked before.” That’s why I was approach[ing] it that way. (Lyle, Year 2, Spring, Stimulated Recall Interview Day 1)

Lyle’s knowledge of the content of the textbooks led to a specific action for assisting students as they solved problems by reminding them how they solved similar problems. Although Lyle was discouraged by his lack of knowledge of instructional strategies, he developed specific actions to confront student errors that drew on his knowledge of the curriculum.

Lyle’s knowledge of assessment

During Year 1, Lyle emphasized various assessment strategies. Though he taught from the CMP curriculum where student explorations were routine, he did not explicitly identify assessment strategies other than homework, quizzes, and tests. He stated that his observations during student explorations focused on classroom management. Knowledge of students’ understanding emerged from his efforts, but he did not view these interactions as assessment.

During the spring semester, he reaffirmed that homework was his primary assessment strategy. He was asked: “Where and when do you have planned to respond to how the kids did on this homework?” He replied,

I don’t at the moment. Now, that may come down to giving a quiz on Friday or having a real assessment or it might be going through them and pulling them aside during a lesson that they might be able to forego to say, ‘where are your troubles for this? Do I need to redirect some information to you?’ It might just be that they didn’t get a chance to do it or they just scribbled something down. I pass it back and give them discussion points after asking them how it’s going and showing them what I came up with. I might ask them if they have any differences and see where these differences are coming from. (Lyle, Year 1, Spring, Stimulated Recall Interview Day 1)

From this and other excerpts, Lyle demonstrated that his knowledge of assessment largely consisted of summative assessments for homework, quizzes, and tests. Although we have some evidence that Lyle tacitly used some other assessments—during student explorations—he did not identify what he learned through his interactions as an important type of assessment.

In his second year, Lyle’s knowledge of assessment strategies changed little. He continued to focus on homework, quizzes, and tests as his primary assessment strategies. When asked about specific areas where students were struggling, he hypothesized why students had difficulty by relating it to the curriculum. He developed responses based on his assessment results in his lesson plans to monitor students who were struggling by reviewing particular problems that were similar in the homework. In a stimulated recall interview during our fourth observation cycle, he said:

I had two groups go back to investigation 1.3 where they formalized (the formula for volume of a prism). I had one of the students read through it. And they were like, oh yeah it’s length times width times height. Yeah that’s right. Other students were having issues with understanding the difference between surface area and volume. I asked them to go back to the glossary, because I didn’t want to tell them what it is. (Lyle, Year 2, Spring, Stimulated Recall Interview Day 1)

When Lyle engaged with students, their misunderstandings were revealed. Though he did not identify this as assessment, his interaction with students led to changes in his knowledge of student understanding that resulted in him referring students to various appropriate places in the curriculum.

Lyle’s knowledge of student understanding

Lyle developed knowledge of various student characteristics. He identified “smart students who were lazy,” and students who unsystematically combined numbers to guess responses, but his knowledge of student understanding was typically general rather than tied to specific mathematical ideas. For example, he knew students seemed to rarely use variables to describe mathematical relationships. He said, “Based on the last book, they do not like variables. They do not like to use them or manipulate them” (Lyle, Year 1, Spring, Stimulated Recall Interview Day 1).

In addition, he hypothesized about the content in which students developed a shallow understanding. Regarding one student who was experiencing difficulty, he said,

She is a concrete thinker. In general, she is good with numbers and can figure it out if there is a definitive method. She did poorly in the [algebra] section. She had difficulty equating numbers and variables as equivalent. I think that she does not yet have the understanding that a variable is a number: that it can be used as a number; it can be multiplied, and it can be added to another variable. (Lyle, Year 1, Spring, Stimulated Recall Interview Day 1)

Lyle could tell when students were not understanding, but did not refer to specific mathematical misunderstandings.

Lyle shifted his view of student learning and how they come to understand mathematics during Year 1. When asked how his experiences with students changed his teaching he said, “I’m more realistic in what they understand after a single lesson. They don’t make connections from day to day. I thought they might” (Lyle, Year 1, Spring, Pre-Observation Interview). Lyle sensed the need to begin each day connecting to the previous day’s content because he determined that students were not making connections.

In his second year, Lyle continued to develop general knowledge of students’ understanding (not PCK) and their tendencies. This knowledge did not directly relate to specific mathematical ideas or procedures. For example, he identified qualities of students, such as “She leans heavily on her seatmates on what she’s doing” (Lyle, Year 2, Fall, Stimulated Recall Interview Day 1). He recognized other student characteristics that constrained teaching, such as students’ unwillingness to write explanations of their solutions.

As illustrated in Year 1, Lyle was aware of general struggles students had, but did not anticipate them. This led to further knowledge about student difficulties and what students find difficult to understand. For example, when students struggled with conceptualizing the cost of an orange in dollars per orange, he said, “I didn’t anticipate them having so much trouble. That was panic mode (for me) because I wanted them to get it. It was, ‘I have no plan for this, why aren’t they getting this? Let’s try to think of a different way’” (Lyle, Stimulated Recall Interview Day 2). His statement, “Why aren’t they getting this?” represents Lyle’s recognition of difficulties in student understanding. He identified that students were struggling, but was unsure how to address their difficulties. Lyle’s knowledge of student understanding was filtered through his knowledge of curriculum. His knowledge of student understanding was often connected to what students had been exposed to and should have learned within the curriculum.

Summary of Lyle’s PCK trajectory

Lyle demonstrated a growing knowledge of the curriculum, including the scope and sequence of the mathematics content. He saw connections among units and grade levels. Lyle recognized and used a typical inquiry-based structure for his lessons, yet struggled to initiate classroom discussions. He knew what mathematical content he wanted students to learn but was unsure what strategies to use to encourage students to develop further understanding. He pointed students back to various places in the curricular materials so that they could correct their misunderstandings. His knowledge of assessment and assessment strategies continued to be rather general, consisting of homework, quizzes, and tests. He identified general characteristics of his students and recognized general areas of struggle, and these he often connected to prior student experiences in the curriculum. He privileged knowledge of mathematics curriculum and invested time in collecting curricular materials and studying the tasks and the development of mathematical topics across and among instructional units.

Discussion and implications

The findings of this study demonstrate the differences that exist in the development of PCK for beginning mathematics teachers. In addition, this is one of a few studies that empirically situate PCK in actual classroom instruction, providing the mathematics education community with a new method for studying PCK. However, we acknowledge the challenges that previous researchers have identified in distinguishing aspects of PCK from the general pedagogical knowledge that is also developing for beginning teachers.

In this study, we clarify the PCK construct, differentiating it from Shulman’s (1987) initial conception. We view PCK as not the “most useful forms of representation, analogies,” etc., but as the subject-specific knowledge of curriculum, learners, assessment, and instructional strategies regardless of its quality. Through the coding scheme that was developed in this study, we further delineated the various components of PCK. In addition, we characterized PCK from the perspective of the individual teacher rather than determining PCK based on the quality of this knowledge. However, this study is also limited due to the narrow focus on a relatively small number of beginning teachers in a particular alternative certification program. Further investigation is needed to understand how the findings of this study can be extended to other teacher preparation programs, teacher professional development settings, or other grade bands.

Our findings about the PCK of beginning teachers are similar to the findings of Ponte and Chapman (2006), as Keith and Lyle recognized that they lacked the knowledge necessary to teach mathematics successfully. For example, Keith acknowledged that he did not have strategies for assisting students with errors, and Lyle noted that he lacked knowledge of how to effectively manage classroom discussions. Furthermore, these knowledge limitations persisted despite three targeted mathematics methods courses and a 32-week, 20-hour-per-week internship.

In addition, these findings demonstrate that PCK can develop in different ways for beginning teachers. What remains unclear is what factors led to differences in Keith’s and Lyle’s PCK development. Magnusson et al. (1999) hypothesized that subject matter knowledge, pedagogical knowledge, and knowledge of the school context may influence the development of PCK. We concur that each of these factors impacted the PCK development of Keith and Lyle, and recognize that it is difficult to determine which aspects may have had more or less impact on their PCK development. We hypothesize that another plausible explanation for the differences in the PCK of Lyle and Keith is their privileging of particular components of their knowledge. Such privileging connects to the impact of reflection-in-action and reflection-on-action on PCK development (Park and Oliver 2008). Lyle valued PCK for mathematics curriculum. Keith valued PCK of students’ understanding of mathematics. These values appeared to influence the type of PCK that developed over their first two years.

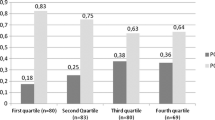

Despite the similarities in their coursework and internship, Lyle’s focus on developing curricular knowledge led to developing general pedagogical knowledge of instructional strategies (e.g., use of board races) and PCK for instructional strategies (e.g., providing examples of specific student mathematical errors). Lyle’s PCK of assessment and student understanding was less developed than his curricular PCK. We represent Lyle’s knowledge development in Fig. 2, where we see his PCK of mathematics curriculum influencing his PCK of instructional strategies, student understanding, and assessment, albeit in limited ways. Lyle’s development of different PCK components originated in and was filtered through his knowledge of curriculum for mathematics.

In contrast, Keith focused on developing his PCK of assessment and student understanding. As Keith increased his knowledge of assessment strategies (e.g., moving to asking questions that provided insight into student mathematical thinking), his PCK of student understanding developed in regard to the specific student mathematical difficulties. His PCK of assessment and student understanding led to further development of PCK of instructional strategies (e.g., identifying specific counterexamples to challenge student thinking) and, to a lesser extent, his PCK of curriculum. Figure 3 represents Keith’s development of PCK, where we see his development of PCK of assessment and student understanding influence each other and the other two PCK components.

Notwithstanding the similarities in their teacher preparation, it was evident that Keith and Lyle developed different knowledge across the four PCK components. Keith’s growth in assessment knowledge for mathematics appeared to deepen his knowledge of student understanding of mathematics, providing an entry point to knowledge of instructional strategies for mathematics and knowledge of mathematics curriculum. However, Lyle’s PCK was limited primarily to knowledge of curriculum for mathematics. His curricular knowledge impacted his PCK development across the other components, but his growth in other components primarily involved general pedagogical knowledge rather than knowledge specific to the teaching of mathematics.

The findings of our research document differences in knowledge growth for these beginning teachers; these findings also illuminate the importance of individualizing the professional development of teachers during their induction years, an argument made by Chval (2008). Mentors of beginning teachers who facilitate knowledge growth in various aspects of an individual’s PCK may better assist beginning teachers in their knowledge development. Based on his PCK growth in the first 2 years, we anticipate that Keith will continue to develop his PCK for teaching mathematics across the four components. We are unsure about the knowledge development of Lyle, who, in the 2 years in which he participated in this study, appeared stymied by his underdeveloped PCK of assessment, instructional strategies, and student understanding. Further longitudinal work with individuals like Keith and Lyle is needed to understand the long-term development of PCK in beginning teachers as well as teachers across the professional continuum.

The differences in the PCK that Keith and Lyle developed over the 2 years have implications for the ways in which we support beginning teachers’ knowledge growth. Researchers have argued (e.g., Philipp et al. 2007) that focusing teacher education activity around knowledge of student understanding can provide an entry point into teachers’ conceptions that promotes changes in beliefs about teaching mathematics. The findings of our study support this argument, but also point to the importance of supporting teachers in developing specific assessment strategies that can provide insight into students’ mathematical difficulties. Until Keith developed specific assessment strategies, his knowledge of student mathematical understanding was minimal. As his assessment strategies improved and he learned more about how his students understood the mathematics they were learning, his increased knowledge of student understanding impacted other aspects of his PCK. Providing opportunities for beginning teachers to unpack students’ understandings from specific student work or student interviews may allow beginning teachers to gain insight into student understanding that can impact other aspects of their PCK. Further research that builds on our findings and investigates how different components of PCK develop (and influence each other’s development) in beginning teachers could provide important implications for how we educate beginning mathematics teachers.

References

An, S., Kulm, G., & Wu, Z. (2004). The pedagogical content knowledge of middle school teachers in China and the U.S. Journal of Mathematics Teacher Education, 7, 145–172.

Ball, D. L., & Bass, H. (2003). Toward a practice-based theory of mathematical knowledge for teaching. In B. Davis & E. Simmt (Eds.), Proceedings of the 2002 annual meeting of the Canadian Mathematics Education Study Group (pp. 3–14). Edmonton, AB: CMESG/GCEDM.

Ball, D. L., Thames, M. H., & Phelps, G. (2008). Content knowledge for teaching: What makes it special? Journal of Teacher Education, 59, 389–407.

Chval, K. (2008). Determining and responding to teacher professional development needs. Paper commissioned by Horizon Research, Inc. Available at: http://pdmathsci.net/reports/pi_memos/alm.pdf.

Even, R., & Tirosh, D. (1995). Subject-matter knowledge and knowledge about students as sources of teacher presentations of the subject matter. Educational Studies in Mathematics, 29, 1–20.

Hiebert, J., Wearne, D., & Tabor, S. (1991). Fourth graders’ gradual construction of decimal fractions during instruction using different physical representations. The Elementary School Journal, 91, 321–341.

Hill, H. C., Ball, D. L., & Schilling, S. G. (2008). Unpacking pedagogical content knowledge: Conceptualizing and measuring teachers’ topic-specific knowledge of students. Journal for Research in Mathematics Education, 39, 372–400.

Hill, H. C., Rowan, B., & Ball, D. L. (2005). Effects of teachers’ mathematical knowledge for teaching on student achievement. American Educational Research Journal, 42, 371–406.

Hill, H. C., Schilling, S. G., & Ball, D. L. (2004). Developing measures of teachers’ mathematics knowledge for teaching. The Elementary School Journal, 105(1), 11–30.

Horn, I. S. (2009, September). The development of pedagogical content knowledge in collaborative high school teacher communities. Paper presented at the psychology in mathematics education annual meeting, Atlanta, GA.

Kinach, B. M. (2002). A cognitive strategy for developing pedagogical content knowledge in the secondary mathematics methods course: Toward a model of effective practice. Teaching and Teacher Education, 18, 51–71.

Lappan, G., Fey, J. T., Fitzgerald, W. M., Friel, S. N., & Phillips, E. D. (2006). Connected mathematics series (2nd ed.). Boston, MA: Pearson Prentice Hall.

Magnusson, S., Krajcik, J., & Borko, H. (1999). Nature, sources and development of pedagogical content knowledge for science teaching. In J. Gess-Newsome & N. G. Lederman (Eds.), Examining pedagogical content knowledge (pp. 95–132). Dordrecht, The Netherlands: Kluwer.

Marks, R. (1990). Pedagogical content knowledge: From a mathematical case to a modified conception. Journal of Teacher Education, 41, 3–11.

McEwan, H., & Bull, B. (1991). The pedagogic nature of subject matter knowledge. American Educational Research Journal, 28, 316–334.

National Council of Teachers of Mathematics. (2000). Principles and standards for school mathematics. Reston, VA: National Council of Teachers of Mathematics.

NCATE. (2003). Programs for initial preparation of mathematics teachers: Standards for middle level mathematics teachers. Retrieved from http://www.ncate.org/LinkClick.aspx?fileticket=2rnqh%2bt9zhE%3d&tabid=676.

Philipp, R. A. (2007). Mathematics teachers’ beliefs and affect. In F. Lester (Ed.), Second handbook of research on mathematics teaching and learning. Reston, VA: National Council of Teachers of Mathematics.

Philipp, R. A., Ambrose, R., Lamb, L. C., Sowder, J. T., Schappelle, B. P., Sowder, L., et al. (2007). Effects of early field experiences on the mathematical content knowledge and beliefs of prospective elementary school teachers: An experimental study. Journal for Research in Mathematics Education, 38, 438–476.

Pirie, S. E. B. (1996). Classroom video-recording: When, why and how does it offer a valuable data source for qualitative research? (report no. SE-059-195). In Annual meeting of the North American chapter of the international group for the psychology of mathematics education, Columbus, OH (ERIC/CSMEE Document Reproduction Service No. ED 401 128).

Ponte, J. P., & Chapman, O. (2006). Mathematics teachers’ knowledge and practices. In A. Gutierrez & P. Boero (Eds.), Handbook of research on the psychology of mathematics education: Past, present and future (pp. 461–494). Rotterdam: Sense.

Rowan, B., Correnti, R., & Miller, R. J. (2002). What large-scale survey research tells us about teacher effects on student achievement: Insights from the Prospects Study of elementary schools. Teachers College Record, 104(8), 1525–1567.

Schempp, P. G. (1995). Learning on the job: An analysis of the acquisition of a teacher’s knowledge. Journal of Research and Development in Education, 28(4), 237–244.

Schmidt, W. H., et al. (2007). The preparation gap: Teacher education for middle school mathematics in six countries. MSU Center for Research in Mathematics and Science Education. Retrieved on May 24, 2011 at http://usteds.msu.edu/MT21Report.pdf.

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14.

Shulman, L. S. (1987). Knowledge and teaching. Harvard Educational Review, 57(1), 1–22.

Star, J. R. (2005). Reconceptualizing procedural knowledge. Journal for Research in Mathematics Education, 36(5), 404–411.

Stones, E. (1992). Quality teaching: A sample of cases. London and New York: Routledge.

Van Der Valk, T., & Broekman, H. (1999). The lesson preparation method: A way of investigating pre-service teachers’ pedagogical content knowledge. European Journal of Teacher Education, 22(1), 11–22.

Acknowledgments

This manuscript is based upon work supported by the National Science Foundation under grant ESI-0553929. Any opinions, findings, conclusions, and recommendations in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

About this article

Cite this article

Lannin, J.K., Webb, M., Chval, K. et al. The development of beginning mathematics teacher pedagogical content knowledge. J Math Teacher Educ 16, 403–426 (2013). https://doi.org/10.1007/s10857-013-9244-5

DOI: https://doi.org/10.1007/s10857-013-9244-5