Abstract

Stacking fault energy (SFE) is an intrinsic material property whose value is crucial in determining different secondary deformation mechanisms in austenitic (face-centered cubic, fcc) steels. Considerable experimental and computational work suggests that the SFE itself is highly dependent—in a complex manner—on chemical composition and temperature. Over the past decades, there have been a large number of efforts focused on determining the composition dependence of SFE in austenitic steel alloys by means of experimental, theoretical or computational methods. Unfortunately, experimental methods suffer from the indirect nature of the methodologies used to estimate the value of SFE, while computational and/or theoretical approaches are either limited by the physics that they can incorporate into the predictions or have more practical limitations associated, for example, to the size of the systems that can be modeled or the assumptions that must be made. In this paper, we review the major experimental and computational approaches to determine SFE in austenitic steel alloys, and we discuss their limitations. We then demonstrate a data-driven machine learning technique to mine the literature of experimental SFE data in steels, while algorithms at the fore-front of machine learning have been used to visualize the SFE data and then construct a three-class classifier. The classifier is used then to predict likely secondary deformation mechanisms of untested compositions, while the classifier itself is presented as a valuable tool for the further development of austenitic steel alloys in which the specific secondary plastic deformation mechanisms are a feature to design for. The data as well as the entire analysis workflow are made available to the wider community through a public github repository.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Stacking fault energies

There have been many approaches to designing steels with superior mechanical properties, with one of the most successful approaches to date being the design of austenitic steels that exhibit, in addition to slip, secondary plastic deformation mechanisms, such as transformation-induced and twinning-induced plasticity (TRIP, TWIP) behavior, or both. Steels in which these secondary mechanisms can be activated tend to have high strain-hardening rates, large ductility and high strength [1]. These properties are attributed to the \(\gamma \rightarrow \epsilon \) stress-induced martensitic transformation and deformation twinning in the case of TRIP and TWIP-assisted steels, respectively. Theoretical and experimental work suggests that these so-called secondary deformation mechanisms in austenitic steels are primarily a function of their stacking fault energy (SFE). With decreasing SFE, the deformation mechanisms tend to change from (i) dislocation glide to (ii) dislocation glide + deformation twinning to (iii) dislocation glide + martensitic transformation. Many researchers have attempted to correlate different SFE regimes to different secondary deformation behavior [2, 3]. Hence, the ability to predict SFE for any given austenitic steel composition is crucial to steel alloy design since a certain desired mechanical deformation behavior is one of the most important design criteria when it comes to this very important class of structural materials. While there have been many theoretical approaches to predicting SFE—all of which are discussed at length in the paper—all of the current efforts have important limitations that preclude their use in the context of alloy design. In this paper, this is addressed through the use, as will be shown, of machine learning approaches.

Materials genome initiative

The materials genome initiative (MGI) calls for the acceleration of materials discovery at a fraction of current costs [4]. The MGI has paved the way for a paradigm shift in how we think of materials discovery and design—from experimental guesswork in a large, unbounded n-dimensional parameter space to one of targeted search among infinitely fewer possibilities derived from computational—or computationally assisted—exploration. The MGI emphasizes the adoption of new frameworks and techniques to accelerate materials discovery. One of the pillars and new paradigms of this initiative is Materials Informatics [5] and Materials Data Science [6]. Materials Data Science and Informatics encapsulates Machine Learning/Statistical Learning/Data Mining (or various other interchangeably used terminologies) which are being used in myriad disciplines ranging from artificial intelligence, social media analytics, decision making in enterprises to economics, bioinformatics, astronomy and other scientific fields. The basis of these learning algorithms is that given some information or data and an understanding of the meaning of, or information associated to, that data, an underlying pattern can be uncovered and ‘learnt’ which can be used to predict outcomes for unseen scenarios. These powerful algorithms have contributed to the further development of the above-discussed disciplines, specifically where the complexity of the problems rendered theoretical modeling based on domain knowledge incredibly challenging. Materials Informatics and Materials Data Science leverage these techniques on top of the experimental and physics-based computational data in materials sciences community to usher the field forward in a new era of accelerated materials discovery.

Our approach

This paper is a description of our research to accomplish a materials discovery task using techniques and algorithms in machine learning. The task at hand is to predict the stacking fault energy, SFE, of any given untested austenitic steel composition, thereby aiding alloy design of austenitic steels with a requisite mechanical deformation behavior. For this task, we collected, to the best of our knowledge, all data available in the literature on experimental determination of SFE for austenitic steels and then used machine learning algorithms to predict SFE. The paper is organized in the following sections: (i) discussion of existing theoretical SFE prediction approaches and their shortcomings (ii) extensive review of experimental methods for SFE determination (iii) description of our methodology and its uniqueness (iv) results and discussion.

Stacking fault energy determination in the literature

SFE for austenitic steels can either be predicted based on different theoretical modeling approaches or experimentally determined by casting the alloy compositions and characterizing them using different techniques. From an alloy design perspective, the ability to predict SFE for any given composition is crucial as SFE is a strong indicator of likely secondary plastic deformation mechanisms in these systems and hence theoretical approaches for its estimation are important as the latter can potentially contribute to the acceleration of the alloy design process. The experimental calculations aid the formulation of these theoretical models as they are true data against which models are evaluated.

Stacking fault energy prediction: theoretical methods

There have been predominantly three approaches to predicting stacking fault energy for any given composition in austenitic steels: (i) first principles/ab-initio electronic structure calculations, (ii) thermodynamic modeling and (iii) linear regression. While the first two are physics-based modeling paradigms, the latter is a purely statistical approach. The modeling techniques are discussed below with an added emphasis on their pitfalls and a plausible explanation for why there is currently no robust go-to equation in the literature that explains the composition-SFE relationship in austenitic steels.

First principles approaches to predicting SFE

Calculation of SFE in steel alloy systems by first principles, ab-initio electronic structure modeling based on quantum mechanical theory can be realized by more than one approach. However the choice of approach is eventually guided by the computational cost and accuracy of method at hand. Unfortunately, with current computational resources it is exceedingly difficult (if not impossible) to do first-principle calculations of SFE for multi-component steels with compositions corresponding to realistic systems. In fact, the most complex chemistry for which the SFE has been modeled and predicted using ab-initio approaches are quaternary steel alloys [7, 8], which, while complex, do not approach the richness in chemistry exhibited by most commonly used alloy systems.

Overall, there are predominantly two approaches in the literature for calculating SFE of Fe alloyed with other elements. One approach was first presented by Vitos et al. [7] who calculated the intrinsic SFE in ternary and quaternary Fe alloys by adopting a Axial Next Nearest Neighbor Interaction (ANNNI) model for ferrous alloys. Another approach is the widely known explicit calculation of the generalized stacking fault energy (GSFE) surface which claims to provide a complete description of the energy landscape is shared along specific slip directions on specific slip planes. Based on accuracy and computational cost, the approach from Vitos seems to be the most suitable, at least for alloy systems. A high-level description of both approaches is explained next.

ANNNI modeling The approach pioneered by Vitos is based on the application of the ANNNI model, which assumes that the energetics corresponding to different layer stacking in a given crystal system can be described essentially with a one-dimensional Ising-like model where different values of spins correspond to different layer identities or stacking sequences. This model was first used to describe the energetics of different SiC polytypes [9, 10]. Earlier work on SiC suggested that this one-dimensional representation of the configurational state of layered systems and their corresponding energetics was sufficiently adequate to explain the thermodynamics of such complex systems. Practical applications of the ANNNI model consist of the calculation of the energetics of structures that can be represented through different one-dimensional stacking sequences, followed by the fitting of a one-dimensional Ising model against the energies of known configuration-energy tuples and then using the Ising parameters for predicting arbitrary configurations/stackings.

In their paper [7], Vitos et al. formulate the formation energy of an intrinsic stacking fault in a fcc crystal as the excess free energy per unit area of a region in the crystal with ‘incorrect’ stacking, relative to the conventional \(\cdots \)ABCABC\(\cdots \) stacking of an fcc structure along the [111] direction:

\(F_{\text{SF}}\) is the free energy of the system with the stacking fault, \(F_0\) is the free energy of system without stacking fault and \(A_{2D}\) is the specific area of the stacking fault. With the ANNNI model, the stacking sequence along a specific direction—[111] in the case of fcc crystals—is represented by \(S_i\)’s, the same formulation as the Ising model but here they represent different layering/stacking sequences rather than spin configurations. The excess free energy of a stacking sequence is then formulated in terms of \(-\Sigma _i\Sigma _jJ_nS_iS_{i+n}\) where \(J_i\) is the interaction parameter for the ith nearest neighbor. Hence \(J_1\) is nearest neighbor, \(J_2\) is the next nearest neighbor and so on. Using this representation, the energy of intrinsic stacking faults can be expressed in terms of differences between periodic crystal structures such as fcc, hcp and dhcp, which in turn correspond to different stacking sequences, ABCABC, ABAB, ABCB, etc. Based on these expressions, we have:

Here the reason for approximate symbol is the fact that the expressions in terms of \(J_i\) are truncated and only the first three or four interactions are considered. Although these approximations have shown to be consistent and within the limits of experimental error, for higher accuracy, higher order terms need to be considered. The calculations were then done based on the EMTO-CPA approach also devised by the same authors. For a detailed description one can refer to their work [7].

Vitos and collaborators have modeled ternaries like Fe–Cr–Ni [7, 8, 11] systems as well as quaternaries like Fe–Cr–Ni–Mn [7, 8], Fe–Cr–Ni–Nb [7, 8] and Fe–Cr–Ni–Co [7, 8]. These results have been shown to be in good agreement with the experimental values when taking into account the inconsistencies and uncertainty of experiments. Figure 1 shows a relevant comparison between predictions and experimental data. The formulation also has an excellent description for the temperature variance of SFE which has been modeled indirectly in terms of magnetic contributions. The biggest contribution of the above approach has been to explain the nature of composition-SFE relationship, since Vitos and collaborators have found highly nonlinear relationships [7, 8, 11]. In fact, they have conclusively shown that the effect of an alloying element on SFE depends not only on the element but also on the host composition. Figure 2 demonstrates these nonlinear relationships. Thus the authors have suggested that it is impossible to derive universal SFE-composition relationships for multi-component steel alloys.

Adapted from Ref. [7] and reproduced with permission

Comparison of theoretical calculations of SFE by ANNNI model with experimental values.

Adapted from Ref. [8] and reproduced with permission

Nonlinear dependence of SFE on composition of Mn based on host composition of Fe–Cr–Ni.

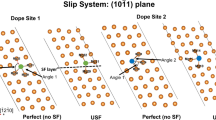

GSFE modeling Another common approach to the prediction of SFE is the widely used technique known as the generalized stacking fault energy calculation that explicitly calculates the energy landscape of a system as it is being sheared along specific slip plane-slip direction systems. In fcc systems, a single-layer of stacking fault can be generated by displacing the upper half of the crystal relative to the lower half along 〈112〉 direction on the {111} planes. The fault energy \(\gamma \) can be calculated as a function of this displacement, which will be a curve representing energy of different sheared configurations resulting from different values of displacement along specific crystallographic directions, such as 〈112〉. There are some important characteristic material properties which can be calculated based on the GSFE curve which are depicted in Fig. 3, namely the unstable fault energy, intrinsic fault energy and stable fault energy. The displacements which lead to the structures corresponding to these energies are 0.5\(|b_{\text{p}}|\), \(|b_{\text{p}}|\) and 2\(|b_{\text{p}}|\) respectively, where \(|b_{\text{p}}|= 1/6 \langle 112\rangle \) is the Burger’s vector of the partial dislocation producing the shear.

Adapted from Ref. [12] and reproduced with permission

a A representation of different shear displacements to calculate a GSFE curve. b A schematic of the GSFE curve with important quantities marked.

However the use of this approach has not yielded—in general—good quantitative predictions of SFE in Fe alloy systems. The calculations using this approach for Fe alloy system were first performed by Kibey et al. [12], who modeled the Fe–N binary and Fe–Mn–N ternary systems. The same approach has been adopted in the literature to model other alloying additions in iron, such as the Fe–Mn binary [13], Fe–C binary [14], Fe–Cr–Ni ternary [15], Fe–Mn–Al–C quaternary [16] and Fe-X binaries where X \(=\) transition metals [17]. As mentioned earlier, the important note about all these calculations is that they all significantly underestimate intrinsic SFE compared to similar experimental and theoretical (thermodynamic and other ab-initio approaches) data, even taking into account that these are 0 K calculations. Hence most of these GSFE calculations are restricted to explaining trends of SFE variation in particular alloy systems, rather than predicting SFE values that could be directly used in alloy design.

In summary ab-initio electronic structure modeling is a fundamentally robust approach to predicting SFE in steel alloys, specifically the ANNNI model has been shown to have agreement with experimental values. However due to computational cost and complexity, these calculations are exceedingly difficult (if not impossible) for multi-component alloys with many alloying additions. They, however, provide crucial insights about the nature of the composition-SFE relationship in the steel alloy system.

CALPHAD-based approaches to predicting SFE

There have been many investigations into the calculation of SFE using the so-called CALPHAD approach where the energy of the stacking fault is modeled as a martensitic embryo or as a hcp secondary phase with phase boundaries lining the austenite or fcc phase on either sides. The schematic of such a formulation is shown in Fig. 4. As shown, the SFE can then be written out in terms of extra energy needed to nucleate this stacking fault, which roughly corresponds to the Gibbs Free energy to nucleate the locally hcp stacking fault i.e. \(\Delta {G}_{\text{fcc} \rightarrow \text{hcp}}\), the strain energy due to the stacking fault as a second phase in the fcc matrix and partial dislocations bounding the stacking fault i.e. \(E_{m}^{\text{str}}\) and the interfacial energy on the hcp–fcc boundary i.e. \(\sigma \).

The Gibbs free energy—related to the contribution of the thermodynamic driving force—can be written out as a summation of ideal, excess, magnetic and other contributions. Different researchers have modeled various different Fe-based systems using slightly varying formulations. For example, the stacking fault modeled as an hcp second phase can lead to different strain energies based on the shape assumed for the stacking fault. Olson [18] and Cotes [19] have modeled the second phase as a spherical particle while Ferreira and Mullner [20] model it as flattened plate-like particle. These lead to different strain energy contributions although the energy value itself and the differences are low compared to SFE values. However the biggest discrepancy in thermodynamic approaches to the modeling of the composition dependence of SFE is in the assumed value of interfacial energy \(\sigma \) that is necessary to parameterize composition-dependent expressions for SFE. In all research papers to date, though, this is not physically estimated, although in fairness this quantity is extremely difficult to estimate through experimental means and perhaps even more difficult to calculate from first-principles. Hence, this quantity is generally used as a fitting parameter to make the thermodynamic calculation match experimental SFE calculations. This leads to large discrepancies as the value of \(\sigma \) in the literature varies from 4 to 20 mJ/m\(^2\) [2, 3, 18, 19, 21,22,23,24,25,26,27,28,29] which leads to even larger variations in SFE, thus highly depreciating the reliability of the thermodynamic modeling approach to SFE prediction. Figure 5 demonstrates such an example. In their paper, Akbari et al. [3] calculate SFE for Fe–Mn–C and other systems. They construct composition-SFE maps choosing different values of \(\sigma \) given in the literature since there is no way to determine this as discussed earlier. We consider 2 random compositions on this map and show how the choice of different interfacial energy values leads to a large range of possible SFE for a fixed composition.

Adapted from Ref. [3] and reproduced with permission

An example of variation in SFE due to large uncertainty in interfacial energy parameter.

Hence, the compositions corresponding to the blue point and green point on the composition-SFE map can have SFE values differing by 30 mJ/m\(^2\) depending on what the interfacial energy parameter is chosen, and this is an unreliable prediction for the purposes of alloy design. Many different papers show many other values of interfacial energy. Also, it has been discussed in the literature how this value itself is composition-dependent and hence estimating it for one composition based on experimental value and then using the same for all other compositions is not a robust approximation. This energy parameter than becomes essentially a fitting parameter that absorbs all unexplained physics in one’s model.

To summarize, thermodynamic approaches in theory are a good approach for multi-component steels. However the problems lie with databases and energy parameters. Modeling the Gibbs free energy of the fcc-to-hcp phase transition needs data for hcp state in the databases for Fe alloys which is lacking. The other significant problem is the value for interfacial energy parameters which are basically used as fitting parameters in the model leading to high uncertainties and inconsistencies among models in the literature. Sometimes the difference in interfacial energy parameters is so large that the difference in SFE more or less means an altogether different SFE regime for the same composition. Hence the thermodynamic modeling approach is not as robust as one would need for practical alloy design.

Linear regression approaches to predicting SFE

In addition to the theoretical/computational formulations for describing SFE-composition relationships, the statistical technique of linear regression has been applied on many small sets of experimental SFE data to describe a linear relationship between SFE and composition. Table 1 has a comprehensive list of such linear regression models in the literature, which have been formulated for different composition regimes in the austenitic steel system. Most of these equations have been modeled based on the experiments carried out in one research paper and hence the size of the dataset is typically very small. Except for a couple of papers which have either many experiments or took data from other papers, these equations are very “local” in the composition space.

There are two major problems with the linear regression approaches in the literature. First the amount of data used to create these regression models is small. In most cases the models have been built from the few experimental values in the paper, and hence cannot be extrapolated with any degree of certainty to compositions outside the range explored. More importantly, there has been ample discussion in the literature about the composition-SFE relationship being nonlinear as well as the significant interaction between amount of elements in alloy to affect the SFE. Hence there cannot be universal regression equations as the effect of one element on SFE depends on the values of other elements in the alloy system. When looking at all the above equations, it is evident that all of them have linear relationships in composition-SFE, which is not the true case. These equations are essentially modeled in sections of the whole composition-SFE space, keeping values of some elements constant. For example, the effect of Ni wt% on SFE is very different in all these equations and also SFE is linearly related to SFE in all cases. As such all these equations cannot be considered as universal composition-SFE relationships. In order to do robust statistical modeling, a much larger dataset is needed. Also the model should try to mimic the true relationship; hence, there is a need to consider nonlinear higher order and interaction terms.

Stacking fault energy measurement: experimental methods

Above we discussed the methods for the prediction of SFE for untested Fe alloys. However these predictions have to be matched against some (assumed, ground truth) value to gauge their accuracy. The true value of SFE can be inferred, albeit not measured, by experimental techniques and we discuss the two major techniques and methods that are used to experimentally estimate SFE in austenitic steels. The understanding of this is important, as for our modeling we eventually use all the experimental data available to do informatics and delving into the methodology of these techniques provides crucial insights into the uncertainty of measurements. The uncertainty will eventually prove to be a very important parameter in the selection of modeling techniques.

Transmission electron microscopy technique

The oldest technique used to indirectly estimate SFE is through transmission electron microscopy (TEM). Although this is sometimes referred to as a direct method, it is essentially indirect as it relates geometry of dislocation configurations and other crystal defects to the SFE of the material. Historically, many different geometries and related techniques have been used to calculate SFE using TEM. These are extended dislocation nodes, extrinsic–intrinsic stacking fault pairs [42], stacking fault tetrahedra [43], twinning frequency [44] and most recently partial dislocation separation. Out of these, the extended dislocation nodes and partial dislocation separation are the two most widely used techniques. The earliest work in this field was introduced by Whelan [45] who laid out the theoretical foundation for using extended dislocation nodes in steels to (indirectly) measure/estimate SFE and did the same on 304 stainless steel. Various theoretical refinements were suggested by a host of researchers to this original work, predominantly along geometric considerations [46,47,48,49,50]. Subsequently, many researchers have used this technique to calculate SFE over the years, a detailed list of which is available in Table 2. A more direct and more widely accepted technique, based on the measurements of partial dislocation separation, had also been used earlier but has found more relevance in recent years, mainly due to the availability of better instrumentation and imaging approaches. Many recent papers have used this technique to estimate the SFE in multi-component austenitic steels [36, 41, 51] and others, again the list of which can be looked up in Table 2.

Theoretically, when a perfect dislocation in a fcc lattice dissociates into two partial dislocations, a stacking fault is formed. The separation or dissociation width d between these partials is inversely related to the SFE \(\gamma \) of the fcc material. This can be calculated from anisotropic elasticity theory and the SFE \(\gamma \) is given by:

This is used to calculate for dislocations along [110] in the (111) glide plane. Here \(b_{\text{p}}\) is the partial Burger’s vector, \(\mu \) is the effective shear modulus in the (111) plane, \(\nu \) is the effective Poisson ratio, \(\alpha \) is the angle between Burger’s vector and dislocation line. The effective terms arise due to anisotropy in the crystal and can be calculated by:

where

Here the C’s are the elastic constants of the material which are measured or are approximated from alloys of similar compositions. Hence the partial dislocation separation d can be measured and used to calculate SFE. In practice d is measured multiple times along the dislocation line and the average is taken. Figure 6 shows the two commonly imaged geometries, extended dislocation nodes and partial dislocation separation from a certain composition of steel.

Adapted from Ref. [51] and reproduced with permission

Example geometries of dislocations used to measure SFE experimentally using TEM. a Extended dislocation nodes. b Partial dislocation separation.

Although the TEM technique is theoretically the most reliable experimental method as well as the most direct method of SFE measurement, there are various reasons why this method is not ideal and some are discussed here. For alloy compositions with high SFE, the partial dislocations are so close to each other that estimates of their separation distance can have significant uncertainties relevant to the absolute value of SFE. Moreover, one seldom finds partial dislocations parallel to each other, as in reality there are curvatures and kinks in the partial dislocation separation. Thus, the measurement of partial–partial distances becomes challenging and highly sensitive to outliers.

In the case of the extended dislocation node radii measurements, the nodes are rarely arranged in a manner which allow for proper radii measurement. Added to all this is the statistical scatter. Since with TEM imaging one can only image a few regions and a few geometries, there is always a question of sample representation by these few observations. Microstructural effects and interstitials also affect dislocation geometries, and hence another criticism is that the geometries are not at equilibrium. Given that SFE depends on temperature and TEM imaging leads to sample heating, there is also a possibility of measuring SFE at temperatures higher than intended. Lastly, most of the factors/constants used in the equations used in the estimation of SFE based on indirect measurements are not considered to be composition dependent, while in principle they should be—at the very least—weak functions of composition. Hence it can be seen that there are a large number of possible sources of uncertainty, some of which are quantifiable and others are not. Although some papers report the quantifiable experimental error, many do not. Thus all experimental values for SFE measurement need to be treated in a manner consistent with such large uncertainty around measurements.

X-ray and neutron diffraction technique

While indirect methods based on TEM imaging of dislocation configurations is a widely used and established method for the estimation of SFE, it is tedious and is mostly suitable for low SFE values, where (partial)dislocation pairs can be adequately resolved. This method, however, suffers from significant intrinsic uncertainties due to the fact that the (small) volume observed in a sample corresponding to a specific composition/temperature is very likely not representative of the entire sample. Consequently, other indirect methods have been suggested, although the seminal work from Reed and Schramm [52] represents the canonical work on the determination of SFE based on the analysis of XRD patterns. These authors established a relationship between SFE, stacking fault probability and RMS microstrain which made it possible to calculate SFE rather than just reporting stacking fault probability as was done earlier. Based on the above formulation, Schraam and Reed published the first work for calculation of SFE using X-ray diffraction in commercial austenitic steels [30] and Fe–Ni alloys [53]. The equation formulated by the authors is [52]:

where,

There are many quantities in the equation, including some that depend directly from the X-ray diffraction experiment while some are material constants which can be taken either from the available literature or separate experiments/calculations. A complete process chart for the calculation of SFE using XRD-based approaches in Eq. 3 is shown in Fig. 7. The section ahead is an elaborate literature review on the experimental determination and the literature for these quantities. After Schramm and Reed developed and demonstrated the methodology for X-ray diffraction, researchers adopted the same for neutron diffraction experiments.

Stacking fault probability Stacking faults occur on the (111) close-packed planes in the fcc crystal and cause a shift in position of diffraction lines. Stacking fault probability can be calculated using the angular displacements (shifts) of the of the diffraction peaks according to Warren’s method of peak shift analysis [54]. Comparison between annealed and deformed specimens is typically used to measure the peak shift and get corresponding \(\alpha \). As explained by Reed and Schramm [30], it is best to obtain the profile angular separation of (111) and (200) rather than absolute 2\(\theta \) positions to avoid diffractometer errors and achieve better sensitivity. Thus the widely used [33, 35, 55,56,57,58,59,60] equation to calculate stacking fault probability as given in [30]

This equation is derived for (111) and (200) reflections from the general equation described by Warren [54]

where \(\sum _b \pm L_0 /{h_0}^2\left( u+b\right) \) is a constant specific to each hkl reflection. For austenite 111, 200, 220, 311 and 222 reflections, the values are 1/4, −1/2, 1/4, −1/11 and −1/8, respectively [54]. Using the above general form some researchers have calculated \(\alpha \) using a different set of reflections [61] or taken averages of \(\alpha \) for different set of reflections [37, 62]. To do away with the need of testing a stacking fault free annealed sample for comparison, Talonen et al. [63] described a novel method which combined Warren’s treatment and Bragg’s law. By assuming no long range residual stresses in the powder sample, the peak positions are affected by lattice spacing and stacking faults which leads to the following equation with two unknown parameters (interplanar spacing \(\lambda \) and stacking fault probability \(\alpha \))

Hence Eq. 10 can be used to obtain as many independent linear equations corresponding to each representative hkl austenite reflection. These equations are subsequently solved using the linear regression method for \(\alpha \). Talonen et al. [63] and other researchers [64, 65] have described the use of the above method for calculation of stacking fault probability in deformed sample eliminating the need of comparison with an annealed sample.

RMS microstrain X-ray or Neutron Diffraction profile broadening of plastically deformed samples can be caused due to various factors, but primarily due to powder size and strain; hence, the profiles can be analyzed for evaluating these 2 components. Various different approaches and software packages exist for the calculation of RMS microstrain from the broadening of diffraction profiles. Schramm and Reed [30, 52, 53] in their seminal papers adopted the Warren–Averbach technique [66] in which the value of RMS microstrain \({\left\langle \epsilon _{L}^2 \right\rangle }_{hkl}\) can be expressed as

where \(A_L\left( h_1k_1l_1\right) \) and \(A_L\left( h_2k_2l_2\right) \) are the coefficients of the cosine term with first power in the Fourier series expression of diffraction profiles, L a length normal to reflecting planes, \(a_0\) is the lattice parameter. Typically \(\left( h_1k_1l_1\right) \) and \(\left( h_2k_2l_2\right) \) are (111) and (222) planes while as a matter of practice \({\left\langle \epsilon _{L}^2 \right\rangle }_{hkl}\) is averaged over 50 \(\AA \) in the [111] direction. Instrumental broadening can be eliminated by comparing the profiles for annealed and deformed samples for the same material. In addition to Schramm and Reed many other researchers [33, 35, 57] have used the Warren–Averbach method for calculating RMS microstrain. Another widely used [55, 58,59,60, 67] method is the Williamson–Hall plot [68] for XRD profiles. Citing probability of errors due to neglecting dislocation density and arrangement effects, some researchers have used a modified Williamson equation along with a modified Warren approach to calculate RMS microstrain [64, 65].

Hence from the above discussion it is seen how by analysis of diffraction patterns of deformed alloy compositions, SFE can be inferred, indirectly. However, as was the case in the TEM-based methodology, diffraction-based methods also suffer from many problems, particularly when considering the multiple corrections that must be made in order to make the estimation. Although the whole sample is being considered for analysis, this is an indirect method and many other physical and material interactions can influence the results derived from this methodology. There has been discussion regarding the robustness of the underlying theory of this method and the value of proportionality constant suggested by Schramm and Reed. The calculated RMS microstrain may have influences due to other sources of strain in the material, one source claimed as powder preparation of sample for diffraction analysis. The material constants plugged into the equation are mostly approximated from similar compositions and can be another source of error. Hence similar to TEM technique, data from the diffraction technique need to be accounted for large uncertainty.

Uncertainty in SFE determination

We have discussed in earlier sections the computational/theoretical and experimental approaches to determine the SFE in austenitic Fe-based alloys. In this section we revisit the sources of uncertainty in the predictions/determinations of this important quantity. Typically, in materials research, it is difficult to compare values from computational models to experimental observations due to missing physics in the computational models and experimental measurement error. However the task becomes dramatically difficult when the experimental observations themselves are very uncertain and inconsistent, not only due to measurement error but the inherent technique of observation or calculation as well.

SFE is an intrinsic material property depending on composition and temperature. Although there have been a very few papers claiming grain size and processing effects, the evidence is minimal or the effects are so small that they can be safely ignored [69]. Despite being a material property, there is no direct way to measure SFE. For example, the strength of a material can directly be determined by a tensile test. There can be uncertainty in the strength due to experimental error but not due to the method of measurement itself. However in the case of SFE, the value calculated is indirect. An observable quantity like geometry of dislocations or diffraction profile of the material is theoretically related to the SFE of the material. In this calculation there is then substitution of material constants, the observed property and other material parameters. Hence, there are multiple sources of uncertainty and inconsistency. In general there can be error due to observed quantity not matching the exact theoretical requirement to be used to calculate SFE, using averaged value of material properties for multiple compositions, and finally the robustness of the underlying theory itself relating an observed quantity to the SFE of material which can all be considered as the systemic uncertainty in SFE calculation while there can always be experimental error in the observed quantity due to sampling and measurement which can be called as the experimental uncertainty. This leads to a complex set of results for SFE of austenitic steels making it very difficult to use for comparisons and trends as well as matching against theoretical predictions. Different techniques lead to different calculations of SFE for similar composition. Any theoretical prediction model needs to tackle the problem of uncertainty in SFE data as it is difficult to choose the “correct” data.

Methodology

This work consisted of two parts: Collecting data from the literature on SFE calculations for steel compositions and using machine learning algorithms for visualizing, mining trends and predicting SFE.

Prediction problem setup and uniqueness of approach

The final objective of this work is to predict SFE for any given austenitic steel alloy based on just the chemical composition. A natural approach would be to build a model capable of providing a quantitative prediction for SFE as a function of arbitrary chemical compositions. All theoretical computational models essentially do the same, albeit only for a limited composition space—up to 4 or 5 different components. Linear regression techniques applied to SFE data allow for many components to be included in the models; however, problems with work in this direction until now have been discussed earlier. Given the high uncertainty in SFE determination, both systemic and experimental, we thought of an approach which would address practical needs of SFE determination and be more robust to underlying data uncertainties.

SFE value is of prime importance in alloy design. Since the SFE value of austenitic steels drives different deformation mechanisms, for designing a steel with certain grain structure and amenable to required deformation in process-use we need to know the SFE for unknown compositions. However, we certainly do not need the “exact” value or even precise values. There has been previous work in this direction by many researchers which have established that deformation mechanisms in austenitic steels are a function of SFE ‘regimes’, rather than specific values. What also helps is that this mapping from SFE regime to deformation mechanism is monotonic as well as one-to-one. Although the research establishes different values for these regimes, they are not too different and there is a common acceptance in the literature. Figure 8 shows different SFE regimes proposed in the literature. It is well accepted that an Austenitic steel with SFE value below 20 mJ/m\(^2\) deforms by martensitic transformation of TRIP-like behavior, with SFE value in between 20 and 45 mJ/m\(^2\) deforms primarily by deformation twinning leading to a TWIP-like behavior while SFE values above 45 mJ/m\(^2\) deforms majorly by slip. These regimes can be termed as Low, Medium and High, respectively.

Based on this knowledge, we can construct three “classes” of SFE ranges or three regimes and can map all our SFE numerical data to categorical data. The advantage of this approach is that it helps engulf the systemic and experimental uncertainty associated and now we can say that although the SFE calculation may be uncertain numerically, it most certainly belongs to a certain regime. This helps us circumvent the problem of choosing “correct” data from experiments. As discussed, the experimental data in SFE is highly conflicting, but by clustering the existing data in different SFE regimes/ranges we can leverage all the data available. Once we construct this dataset as such, our prediction problem is essentially a ‘classification’ task as it is known in machine learning and data mining fields. Given a composition we now want to classify whether the specific steel belongs to either the Low or Medium or High regime of SFE values. This is practically applicable to an alloy design task where compositions can be checked using this classifier and predicted how they deform. Another advantage is in the classification algorithm itself which will be discussed in the upcoming section.

Data capture and curation

A very extensive literature survey has been done to capture all the various experiments done on different steel compositions to build the best possible training dataset for the prediction algorithms. The authors believe that this is the most exhaustive dataset for experimental SFE calculations available to the community yet in a single source. The process of data collection was conceived in a manner so that not only the data but also all relevant metadata was captured. Essentially then, metadata regarding the experimental technique, process and conditions which can potentially inform more about the data itself were added into the database. These data descriptors can help making selections for the training data set, understand patterns on which experimental technique has larger uncertainties etc. and hence constitute very useful information.

The process of data collection is the first step but an even more important step is that of data curation. Data curation is the process which essentially enables reliable retrieval and querying of data for future research and reuse. To make our data collection efforts usable and available to the community, we decided to use Materials Data Curation System (MDCS) which is an undertaking by NIST to promote efforts in MGI. Hence we devised a schema for passing the captured data to a repository in a systematic manner such that the data are query-able as well as re-usable for the community at large.

Data We have discussed how SFE is a function of composition and temperature and these are the predictor variables or features which will help in predicting SFE. Thus we have captured composition, temperature and SFE. All possible elements that have been used in alloying in austenitic steel system have been considered. We have also discussed various experimental techniques for SFE calculation and underlying reasons on how these calculations can be uncertain. Hence capturing uncertainty in SFE data is crucial and we have reported the spread in SFE values wherever available.

MetaData In addition to the above-mentioned data, descriptors that add meaning and context to the data are equally important. These descriptors might not directly go into the calculations or algorithms for prediction, but are essential for establishing physical relations between features and indicators. As discussed earlier, there is considerable work in the literature that establishes SFE as a function of processing due to alloying effects. Similarly, there is available critique in the literature on the accuracy and applicability of different SFE measurement techniques as well as specific bodies of work. We can take all of these into account to make selections on the complete SFE dataset to choose subsets which we think are the best for a certain problem. However to be able to make these subsets, we need the descriptors of the data—which is essentially the metadata. For the experimental SFE dataset the metadata is broadly bibliography, experimental technique and processing. The bibliography maps observations to particular journal papers which enables us to subset certain observations based on the community’s confidence and critique of certain papers. Similarly experimental technique helps choose data only from certain techniques which might be established to be more accurate while processing helps identify differences in SFE measurements based on different process routes.

Training set

We have a dataset of SFE calculations on various compositions at different temperature conditions processed by different processing routes. This complete dataset has 500 odd points. Table 2 is a list of all the papers in the SFE literature from which experimental SFE data were recorded. This to the authors’ knowledge the most comprehensive SFE dataset to date and is exhaustive as far as experimental research in SFE of austenitic steels is concerned.

As evident from the table, a large number of papers have been mined to collect these data and build an exhaustive dataset. One merit of this work is the sheer amount of data collected for this task compared to earlier regression approaches in SFE prediction which had data from a maximum of 5 research papers, hence making the data mining task unreliable.

Machine learning algorithms

In this section we briefly discuss the machine learning (ML) algorithms used in this work on the SFE dataset. All ML algorithms work with an input dataset which has observations across various predictors or features. This dataset may or may not have a target value for observations and depending on that an ML algorithm can be classified as supervised or un-supervised. The objective and dataset at hand leads to selection of which ML algorithm one uses with their dataset. Different ML algorithms can be used at different points in a data mining workflow starting from (i) dimensionality reduction which can be useful in working with very high-dimensional data (large number of predictors) and hence capturing only the important ones to reduce dataset size or where dimensionality of data makes it impossible to completely visualize it in three dimensions and hence we reduce dimensions for the purpose of visualization (ii) prediction which depends on our target output type—nominal or categorical. Regression and classification algorithms are used to predict target output based off the predictors/features in the dataset. We use the open source ML Library Sci-Kit Learn [109] in this work.

Visualization

The SFE Dataset has 9 predictors—the weight percentage of different alloying elements. We cannot visualize the predictors and SFE value, a ten-dimensional data at once. Hence, we have to rely on dimensionality reduction algorithms to reduce the 10-d data to 2-d or 3-d such that minimal information is lost and we can search for insights after visualizing the data. While there is a wide range of approaches to dimensionality reduction, we focus our attention to three different approaches. It is important to note that these new dimensions in which we visualize our data might not have any physical meaning or significance but nevertheless help us visualize underlying trends and patterns in the data—if any which would be impossible to visualize in the higher dimensions. The three techniques we use are (i) Principal Component Analysis (ii) Multidimensional Scaling (iii) Locally Linear Embedding. The common idea in all dimensionality reduction algorithms is based on distance between data points in euclidean space which statistically can be thought of as covariance between points which is tried to be preserved when moving from the high-dimensional mapping to a lower-dimensional representation.

Classification

As explained earlier, our problem definition is the prediction of SFE regime or deformation mechanism given a composition of austenitic steel. Hence our target output are classes which are categorical. This problem is essentially a classification task which is a very standard problem in the field of machine learning. It has been discussed in the SFE literature that the compositional dependence in elements space is inherently nonlinear. That was one of the main drawbacks of linear regression approaches. Although regression can be used to model nonlinear higher order relationships between predictor variables and output class, classification algorithms by virtue of there design are robust to nonlinearities and are able to learn faster compared to regression approaches. The three algorithms we used are: (i) random forests (ii) support vector machines (iii) artificial neural networks. These three algorithms are known to be the best performing algorithms in the machine learning literature [110, 111].

Perfomance metrics

The performance of classification algorithms can be measured in different ways, the two most common techniques being using n-fold cross validation or a hold-out test set. In n-fold cross validation, the dataset is partitioned into n subsets and n models are trained with n−1 subsets while evaluating performance on the 1 subset not used to train the model. The final metric is then an average over the performance of these n models. In hold-out test set method, a small sample of the original dataset is randomly left out from the training. Hence the model is trained on a training set and is evaluated against the unseen hold-out test set. The n-fold cross validation is preferred when the dataset is not large as the complete dataset is used in training eventually while in the hold-out test set method a subset is lost which means the model is trained with less information, and information is crucial in small datasets. However Braga-Neto and Dougherty [112] have done extensive research on using n-fold cross validation on small datasets which are characteristic to the field of bioinformatics and concluded that there is large spread in the values of performance metric and one can over-estimate performance. Hence keeping in mind that our dataset is relatively small, we use hold-out test set methodology for evaluating performance for increasing robustness of our results, mindful of the fact that this might deteriorate performance since some information will be lost for training. In fact, we recommend the material informatics community to use the same methodology as more often than not, the size of dataset in our domain is small.

The metric most commonly used to evaluate performance for ML models is accuracy. It is a very simple metric which basically measures out of all predictions predicted by a model, how many predictions are correct. This accuracy is tested over the hold-out test set in our case. However Dougherty and researchers have researched how sampling of data points to form the training dataset affects accuracy [113]. When random sampling from population cannot be assured for the formation of training dataset, accuracy can be biased as per sampling. Since in material science experiments tend to be done based on a researcher’s interest in chemical space based on the literature or on intuition, sampling is almost never random. Hence, accuracy is not the ideal metric as the population distribution of classes being predicted might not be represented by the sampled training dataset. Based on this discussion, other metrics like false positive rate (FPR), recall or true positive rate (TPR) which are agnostic to population distribution of classes are more suitable. For an extensive discussion on different metrics that can be used for evaluating performance of ML models, one can refer to this excellent paper [114].

Results and discussion

Based on the informatics workflow discussed earlier, we delved into the SFE data to look for insights, patterns and then eventually build a classification model. The materials knowledge a priori known to us from the literature can be used to support the data analysis, empirical generalization about alloying behavior can be backed by data and vice versa the inconsistencies in the literature can also be pointed otherwise. In the Methodology section we explained how we have built a dataset with all experimental SFE data in the literature. The dataset has data to account for the variation of SFE with different alloying additions; however, for some alloying elements the data are very sparse. This makes it impossible to study trends and model SFE for such small sample set of alloying additions. Thus only a subset of the dataset where elements have more than 0.05 wt% alloying addition and then their is substantial number of data points have been chosen for the analysis. Additionally only data points for which Fe is the major alloying element have been retained. Similarly, only data for room temperature measurements have been retained as there are very less data points for specific non-room temperature measurements.

Another way to explore and analyze this data space would be to transform the input variables i.e. the compositions into some other variables leveraging materials knowledge. This would help explain the results more from the theoretical perspective and will also help reduce the dimensionality of the problem. Since we are looking at SFE of austenitic alloys, we can look at mean atomic radii of alloy compositions as well as valence electron concentration. Also since magnetic character has been shown to influence SFE, we can also choose magnetic entropy of alloys as a variable. Hence we can transform a 9-d composition space to this 3-d material character space. This was shown to have similar results as our analysis from prediction perspective. However this approach can be very useful in problems with very high dimensionality where materials knowledge can help us reduce complexity of input space.

Data exploration

Visualizing data leads to many simple yet powerful observations. Just plotting the data along various dimensions and exploring what you see is a very helpful tool in data analysis. There have been many empirical observations and propositions in SFE literature which material scientists use as rules of thumb in alloy design in absence of concrete composition-SFE relationship. These can be useful; however, the generalizations need to be done over a lot of observations. Since we have at our disposal an exhaustive dataset of SFE, we queried and explored the data for visual trends which can be generalized or validate existing ones. We looked at scatterplot matrices where we fixed x-axes as wt% of a particular element and varied other alloying elements on y-axes in different matrices of the scatterplot. Since these are 2-d plots, there is no way to represent SFE numerically with 2 axes taken for wt%. We represent this SFE value dimension by color coding points according to SFE regimes (pointed out in figure labels). Hence while looking at the plots, one should be eying for change in colors in regions which would correspond to certain compositional range leading to a particular SFE regime. We also visualize scatterplots with explicit SFE value versus wt% of certain element to see only the effect of that particular element. Here the color is a redundant value since SFE value is the y-axes, however it helps eyeballing fraction of data in a regime for a particular element. The following is the data exploration analysis with SFE dataset. The salient feature about all the plots is that there really are not standout patterns and in general the SFE data are very complex with many interplaying factors. Most scatterplot matrices have a mixture of SFE regimes all over the compositions and not much can be said about rules that can explain the data.

For example, in Fig. 9 we examine the variation of SFE with change in wt% of Cr. From Fig. 9a we can see no outstanding pattern of SFE regime based on interaction of Cr and any other elements. All regimes are mixed and based on wt% of Cr and another element, nothing can be predicted about the possible SFE regime. Examining Fig. 9b we can see the there is absolutely no relation between SFE and only wt% Cr. For a given Cr alloying, the SFE varies from very low values to very high, thus showing no apparent trend with wt%. We explore similarly effect of other alloying elements on SFE and see similar results. To summarize, by visual exploration some observations clearly stand out about the SFE dataset that can be related to existing discussion in the literature regarding SFE-composition relationships.

-

(i)

As seen in the plots, the pattern of points going from blue to green (representing increase in SFE) is not monotonic across any dimension and in fact highly mixed. Hence this clearly brings out the dependence of composition-SFE relationship of any element on other elements in the system verifying the first-principles calculations regarding nonlinear dependencies.

-

(ii)

These plots also clearly thwart all attempts to use linear regression models in the literature listed out earlier as clearly there is no linear relationship between any element and SFE. As emphasized above, there are strong interactions between elements’ effects on SFE.

-

(iii)

Not many rules of thumb, or generalizations could be processed which might aid in composition tinkering for alloy design. In fact given the nonlinearities, it would not be advisable to look for simple rules in composition-SFE relationships.

Dimensionality reduction and visualization

As shown above, visualization can be a simple yet powerful technique to look for patterns and know more about the dataset as well underlying relations. However in the data exploration tasks described above, we were only able to visualize part of the features of the data at once. Thus we were losing crucial insights about the true nature of SFE dependence on composition. If we could visualize all changing parameters at once and the dependence of SFE on these parameters, we would essentially know everything. However when the data are higher dimensional than what our viewing permits, complete data visualization becomes increasingly difficult. Here we have to take help of ML algorithms discussed earlier where the aim is to compress the nine-dimensional composition space to 2 or 3 dimensions with minimal loss of actual information. We tried the three algorithms listed in the methodology. However to bring out specific points, we discuss the results of PCA and LLE algorithms on the SFE dataset below.

Principal component analysis (PCA) As discussed earlier, PCA is a linear dimensionality reduction algorithm. We performed PCA on the SFE dataset and retained the first three components to visualize it in 3 dimensions. The algorithm compresses the nine-dimensional data to three-dimensional data with 80.5% retention of variance which can be intuitively imagined as the measure of information recovered in this compression. To aid in visualization, we take projections of this 3 components to visualize in 2 dimensions. In Fig. 10 we plot 1st PCA component against the 2nd and 1st against the 3rd. Hence one is the top view and another is the front view of the three-dimensional plot. Although there still are not any clear regions corresponding to a specific SFE class, we can use parts of information hidden in these plots to help in our data analysis and modeling.

After careful observation we can draw linear boundaries in the projections that have knowledge. For both plots one can see that for PC1 > 0.1 approximately, there are almost no observations which fall within the low SFE regime. Similarly, for PC \(<-\)0.2 approximately, there are almost no observations that fall in high SFE regime. This is a pattern, very simple rules that it in itself can act as a classification rule. If a new point falls in one of these regions of the plot after transformation, we can say with some certainty what SFE regime it cannot exhibit. However this is not complete information neither the degree of certainty can be quantitatively calculated. Nevertheless we can leverage this pattern to do other analysis of the data. For instance we can look at the very few points which do not follow our proposed pattern and examine the reasoning in the data.

Looking for low SFE or blue points in the region PC1 > 0.1, we find 3 instances labeled point 1–3 in Fig. 10. Point 1 is a data point from the author Ojima [36]. When we look at compositions very close to this value, we find that there are many observations that correspond to the medium SFE regime. This is evidence of an unreliable data point in the literature to which we can attribute low confidence or choose not to include while modeling the data. Point 2 is an interesting case. We checked the data for this observation and found out that we had erroneously entered the wrong SFE value while building the dataset from this paper. This point actually corresponds to a point which has an SFE value in the medium regime. Point 3 is an outlier from the general dataset. This has been taken from the author Kireeva [105]. In this paper very small changes in N content have been shown to cause very large changes in SFE. Thus this does not fit the proposed pattern, but the accuracy of this calculation cannot be commented on as there are no benchmark observations for comparison. Similarly points which do not follow the other proposed pattern have been labeled from 4 to 6 in Fig. 10 and can be explained along similar lines.

Locally linear embedding (LLE) Having used a linear algorithm, we try a nonlinear dimensionality reduction algorithm in the pursuit to find lower-dimensional representation which might inform us more about the underlying patterns in the SFE dataset. As mentioned earlier, locally linear embedding algorithm (LLE) tries to search for linear sections in the higher dimensional space and then cuts and pastes those sections in the two-dimensional space [115]. Figure 11 shows the application of LLE on SFE dataset. We can observe that in the LLE representation the complete SFE data lie on a shape very close to a V or a Y with a very small vertical base. The most interesting feature of this representation is that each of the arms of this Y has a correlation to the SFE regime. This is precisely what LLE is used for in pattern recognition. As discussed earlier, in a very ideal case different classes separate out on the LLE representation enabling classification from this representation. In the LLE representation of SFE data in Fig. 11, we can see the left arm (highlighted in yellow) has very less green points or high SFE compositions while the right arm (highlighted in orange) has almost negligible blue points or low SFE compositions. In the earlier 2 visualizations using PCA and MDS we were able to propose similar patterns. However there were no clear boundaries in those representations where we can assert that the pattern exists. LLE helps bring out the pattern explicitly with compositions along the two arms mapped to absence of a particular regime.

In this case we can also look at various points which do not follow the pattern and explain them in a similar fashion to what was done for the PCA analysis. We specifically look at the large cluster of points named C \(+\) N. All these points are observations from papers by Gavriljuk and Petrov [70, 100, 103, 107]. The common link among them is that these are observations to measure effect of C and N alloying in steels and the effect of C and N seems to be very significant. According to experiments, small additions lead to large changes. Hence although two compositions can be really close in the LLE embedding due to only a small difference in C or N alloying, they might have very different SFE regimes. Hence all observations measuring effects of interstitials like C and N will most certainly not follow mappings from dimensionality reduction algorithms as is the case here since their effect is markedly different from other predictors.

To summarize, we used various dimensionality reduction algorithms to view the complete SFE data in 2 or 3 dimensions by doing certain transformations. Important learnings from the task are:

-

(i)

We observe the classes are highly mixed in the composition space and there are no regions or cluster for single classes. Had there been any clusters forming or clear boundaries forming, boundaries could have been derived as to what compositions relates to what SFE class. This reinforces the underlying nonlinearity and complexity in the data and also draws merit to the use of classification algorithms to link composition and SFE.

-

(ii)

Nevertheless we could identify 2 patterns in the PCA and MDS representations, regions of absence of compositions with high and low SFE regime. This pattern was identified more concretely with well defined boundary in the LLE representation with the SFE data lying along a Y-like shape and each arm corresponding to non-existence of compositions with a certain regime.

-

(iii)

Exceptions to the above proposed pattern were examined. They were either unreliable observations in the literature or outliers. Unreliable observations were recommended to be modified which would help in increasing reliability of data models while outliers were explained as the effects of C and N alloying. Thus this is an excellent showcase of what visual representations can be leveraged for and at the same time their limitations.

Classification

With the complexity in composition-SFE relationship discussed extensively up to this point, we applied classification algorithms to uncover or “learn” this underlying relationship. Although these classification models are not as intuitively explainable as linear regression which means one cannot write down a final expression relating composition to SFE, it is the price that one has to pay in order to attain higher accuracy than any existing models. These algorithms can essentially be thus treated as black boxes which when given an input composition, will output the SFE regime or class that the composition belongs to.

Artificial neural network (ANN) Artificial neural networks (ANN) are very powerful because of their sheer computational complexity. Given enough parameters, neural networks can learn almost any function irrespective of how nonlinear it is. However this can act as a problem since neural networks can easily overfit to the training data leading, to poor generalization ability. Hence sometimes lower models are preferred at the cost of accuracy. ANNs can have many hidden layers and each layer can have many units. The number of layers and units are essentially hyper-parameters to the ANN model which decide the shape of the decision boundary in higher dimensions. The activation function in the units of the hidden layer can also be changed but we used a sigmoid function which has been demonstrated to have good performance. There are other parameters like learning rate and number of iterations for searching minima of cost function, but they typically do not affect performance of ANN on unseen data. However they are essential to training performance and minima search. Given the size of our data, we implemented only a 1 hidden layer ANN. The number of units in this hidden layer and number of iterations were the hyper-parameters which we searched for using grid search.

Random forests (RF) Random forests (RF) are one of the most popular ensemble machine learning algorithms. They are essentially a collection of many decision trees, but each decision tree in the ensemble is trained differently leading to the ‘randomness’. The performance of random forests has been attributed to two key parameters in the literature. First is the number of decision trees used to train the model which leads to many random samples getting selected from the training set since each tree is fit to a bootstrapped sample of the training set. Second is the number of predictors considered at every node to obtain the best split. These 2 hyper-parameters essentially control the performance of random forests.

Support vector machines (SVMs) Support vector machines (SVMs) are very rich classifiers because of the various types of kernels which can be used to fit the data. The most commonly used kernels which have been proven to give good results are linear, polynomial and radial basis function (rbf) or Gaussian. In addition to the kernel, there are two crucial hyper-parameters to select in SVMs, C and \(\gamma \). C is a parameter that acts as a regularizing coefficient balancing misclassifications and simplicity of decision surface. Hence it is the term which controls over-fitting in SVMs. \(\gamma \) is a parameter whose purpose is the same, though it is imagined as the degree of influence of one data point on another. The interplay between these 2 parameters hugely affects SVM performance. For choosing the best parameters and kernel function we did a grid search over combination of parameters and function. The functions we chose were the same as listed above and for the parameters we did a logarithmic sweep.

Figure 12 summarizes the performance of the above 3 algorithms on the hold-out test set. Each algorithm has an accuracy of about 85% on the validation set. The macro scores of precision and recall are very similar too. We have taken macro scores since all classes are equally important to us and hence no weighting has been provided to better performance over one of the classes. However as discussed earlier, for small datasets where random sampling cannot be assured, false positive rate is a good metric as it is agnostic to population distribution of classes. Moreover from an alloy design perspective, we need this rate to be low. Thus RF with lowest false positive rate is the model of choice. From the confusion matrices, our learning from data visualization are further emphasized. As can be seen, misclassifications are almost always among Low and Medium or High and Medium classes. There is at maximum 1 misclassification across all models between Low and High classes. Thus as evident in the LLE embedding, there is a relatively simple decision boundary separating the Low and High classes. However the boundary between the Medium class and other 2 classes is comparatively fuzzy which can be attributed to lack of data as well as uncertainty in SFE data itself.

Increasing confidence of predictions

Engineering design is always accompanied by a known factor of safety. Many times better performance is compromised for a more reliable and robust design. With the same principle and philosophy as motivation, we discuss the choice of classification algorithm. We have discussed how this model can be used for alloy design. In alloy design it is costlier to make misclassifications than not adding information. For example, if we want to design a steel that exhibits significant twinning and our classifier incorrectly tags a Low SFE composition as Medium in face of just minimal evidence for Low over Medium, this is an undesirable situation. However if the classifier outputs a tag which says that for this composition there is not enough evidence to assign it to Low or Medium, we can also check for other compositions. In the process we might lose some candidates, but if we can reduce the incorrect classifications this helps in alloy design.

This evidence is essentially class probability. Most classification algorithms output probabilities of the output being a certain class and then the class with maximum probability is assigned as the output class. This might mean the probabilities being very similar in absolute terms but still having an output class. Though this is not incorrect, specifically when there is large training data; in our case with a small dataset and sparse sampling in the composition space coupled with the cost of misclassification, maximum probability is not robust enough. We need overwhelming evidence of a composition’s mapping to a SFE regime. Hence we have to choose a certain threshold probability only above which the model assigns a class, the rest of the time it shows that the output is fuzzy. Since SVMs do not a have a straightforward way of calculating probabilities, we drop them as choice. Between ANN and RF, we chose RF due to its slightly better performance based on false positive rate.

It has been discussed as to how the Low–Medium classes are closely entwined and this is similar in the case of Medium–High classes. There are two reasons to this. Mathematically due to errors and uncertainty in experimental SFE calculations, for some compositions whose true value lies on the border—there is data with both classes in training set. Another reason is the lack of data as this is relatively a small dataset and hence interpolations are sometimes uncertain. There is a physical materials behavior-based reasoning as well. Although there are truly 3 deformation regimes in steels, there is a set of transitioning values between these regimes which has experimental evidence for both regimes. Thus there are two fuzzy zones, one between Low and Medium which we name as Fuzzy-LowMedium and one between Medium and High which we name as Fuzzy-MediumHigh. We do not assign a value to these fuzzy zones as they are unknown. Mathematically all we can say is that they extend out from the boundaries at 20 and 45 mJ/m\(^{2}\).

We decided we want to establish a threshold probability below which our classifier should not assign any class membership. The choice of this probability will be dictated by practical choices between reliability or safety and predictive ability. If we take too stringent a probability we will output a lot of fuzzy choices which is very safe but becomes less predictive as a lot of predictions will lie in the fuzzy zone. If we choose too low, we are basically at the base model. We tried different probabilities greater than 0.5 and below are the results for RF in Table 3. The reliability or safety in this case is defined as follows. A prediction is safe if it mathematically satisfies the criteria. For instance, a class output of Fuzzy-LowMedium is reliable and safe if the actual SFE is either Low or Medium. One has to realize the reliability is coming from increasing threshold probability which is the gold standard for confidence on an output.

One sees that the safety or reliability is increasing as the threshold probability is increasing (by virtue of increase in threshold probability) but so is the number of fuzzy outputs. The %fuzzy outputs take a huge jump after 0.6–0.66 which means the base classifier was classifying a lot of points within this range. However looking for more reliability comes at cost of %fuzzy outputs which if very high, decrease the decision making ability or predictive power of the model. Thus based on above statistics, we choose a threshold probability of 0.66 below which the classifier would tag only fuzzy classes. This is a good enough metric as it ensures the probability of chosen class is at least more than double the probability of any other class. Also at this probability we are classifying about 10% of the inputs fuzzy which we believe as a good price to pay for reliability.

Thus based on our above discussion, now our model outputs 5 classes: Low, Medium, High, Fuzzy-LowMedium and Fuzzy-HighMedium.

Validation

The accuracy of the classification algorithms were judged based on the hold-out test set from the SFE dataset. That is a perfect measure of a model’s performance in machine learning. Nevertheless we now wanted to randomly pick some austenitic steel alloys in the literature for which deformation experiments have been carried out and predict their possible deformation behavior from the classifier. This would be an ultimate litmus test for the model. Table 4 is a list of some randomly chosen alloys from the literature, their experimentally observed deformation and the model’s prediction.

As can be seen from the table, the model performs reasonably well. It was able to predict the correct deformation behavior for most compositions. For the compositions it predicted as belonging to a fuzzy class, the experimental behavior is one or both of the expected behaviors from that fuzzy class. Notice that for alloy 1, although experimentally the alloy shows both behavior, the classifier predicts Medium class. This is rooted in the fact that there is no clear boundary for the transition between these two behaviors. For many alloys which might exhibit this behavior, the experiments must have calculated their value in the Medium SFE class and hence our classifier will predict the same. Thus from a design perspective, the fact that the algorithm has not misclassified any composition is very crucial.

Conclusions